Abstract

Objective

To determine whether e/Tablets (wireless tablet computers used in community oncology clinics to collect review of systems information at point of care) are feasible, acceptable, and valid for collecting research-quality data in academic oncology.

Data/Setting

Primary/Duke Breast Cancer Clinic.

Design

Pilot study enrolling sample of 66 breast cancer patients.

Methods

Data were collected using paper- and e/Tablet-based surveys: Functional Assessment of Cancer Therapy General, Functional Assessment of Cancer Therapy-Breast, MD Anderson Symptom Inventory, Functional Assessment of Chronic Illness Therapy (FACIT), Self-Efficacy; and two questionnaires: feasibility, satisfaction.

Principal Findings

Patients supported e/Tablets as: easy to read (94 percent), easy to respond to (98 percent), comfortable weight (87 percent). Generally, electronic responses validly reflected responses provided by standard paper data collection on nearly all subscales tested.

Conclusions

e/Tablets offer a valid, feasible, acceptable method for collecting research-quality, patient-reported outcomes data in outpatient academic oncology.

Keywords: Patient-reported outcomes, data collection, quality of life, neoplasms

Patient-reported outcomes (PROs) have increasingly gained acceptance as important and valid measures of symptoms, experiences, and health-related quality of life (HRQOL), and thus as critical metrics for evaluating the value of health care services to patients (Ganz 1994; Cella et al. 1997; Coates, Porzsolt, and Osoba 1997; Dancey et al. 1997). Major policy-making entities that have emphasized the importance of incorporating PROs into cancer research and policy formation (Lipscomb, Gotay, and Snyder 2007) include the National Cancer Institute (2008), American Cancer Society (2007), U.S. Food and Drug Administration (Johnson, Williams, and Pazdur 2003; U.S. Department of Health and Human Services 2007), U.S. Centers for Medicare & Medicaid Services (2004), and National Institutes of Health (2007). These agencies' interest in PROs reflects a growing national recognition that traditional medical outcomes (i.e., survival, disease progression) do not fully capture the patient's experience of health, an acknowledgement that patients' self-perceived well-being is an important outcome, and a new definition of “value” of health care which includes improvement in subjective outcomes of importance to patients.

The two principal, if not only, methods of gathering PROs before the advent of electronic methodologies were the paper survey and the clinician interview, both labor- and time-intensive. For PROs to be routinely integrated into clinical practice, PRO data collection methods must be efficient; that is, they must be easy, rapid, convenient, inexpensive, reliable, and clinically feasible (Cella 1995). Newly introduced technologies have revolutionized the data collection methods available to researchers and offer new, more efficient tools for clinical management.

Studies in diverse populations have found that technology-based methods of PRO data capture are well received by patients, if not preferred over paper-based instruments (Skinner and Allen 1983; O'Connor, Hallam, and Hinchcliffe 1989; Drummond et al. 1995; Lewis et al. 1996; Yarnold et al. 1996; Velikova et al. 1999; Ruland et al. 2003). A recent study also found that regular, repeated PRO collection and feedback to physicians, in addition to facilitating physician–patient communication, have a positive impact on HRQOL and emotional functioning (Velikova et al. 2004). A recent meta-analysis of 65 studies directly assessed the equivalence of computer versus paper versions of PROs used in clinical trials and found that computer- and paper-administered PROs are equivalent data collection methodologies, with very small mean differences that were neither statistically nor clinically significant (Gwaltney, Shields, and Shiffman 2008). These findings apply to assessments moved directly from paper to electronic versions, and cannot be generalized to all forms of electronic administration of PROs (Gwaltney et al. 2008).

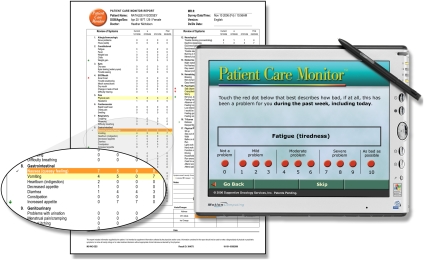

The e/Tablet technology utilized in the present study is a validated, widely implemented, HIPAA-compliant wireless tablet computer which patients use at the point of care to complete PRO surveys (Figure 1). The e/Tablet feeds PROs into a database system, which longitudinally warehouses data locally and/or across multiple sites. A network of >110 community oncology practices in the United States currently uses e/Tablets to administer a validated, medical review of systems (ROS) instrument, the Patient Care Monitor™ (PCM), which collects symptom, QOL, and performance status information (Fortner et al. 2003). Doctors from participating practices use these PROs to enhance clinical care; the ongoing use of e/Tablets by these practices suggests positive reception by community oncologists.

Figure 1.

e/Tablet and Patient Care Monitor Report

To date, the validity, acceptability, and clinical benefit of e/Tablet technology have not been investigated in the academic oncology setting. It cannot be assumed that an instrument validated in one clinical setting will perform equally well in another, nor that a system validated for one instrument (e.g., PCM) will prove similarly capable of delivering other instruments (e.g., research surveys). It is therefore important to study the validity and acceptability of e/Tablets in academic oncology to confirm previous findings in community settings and other patient populations.

METHODS

Objectives

The primary purpose of this pilot study was to determine whether e/Tablets, a PRO data-collection method successfully implemented in community oncology, can also be used in academic medicine to collect research-quality data while integrating clinical functions with research, thereby potentially improving efficiency and quality. Its principal objectives were:

(1) To examine the validity of e/Tablets as a method for administering research surveys in academic oncology, by establishing agreement between data collected (a) electronically using e/Tablets, and (b) by traditional paper-based instruments, when patients complete well-recognized, validated PRO surveys presented in these two formats; and

(2) To explore the feasibility and acceptability of using e/Tablets in the academic oncology setting.

Design and Setting

This pilot feasibility study enrolled 66 patients from the Duke Breast Cancer Clinic.

Participants

Eligible participants were: (1) adults diagnosed with breast cancer; (2) expecting at least four clinical visits over the ensuing 6 months; and (3) able to speak and read English.

Surveys

e/Tablets were programmed with a standard ROS survey, the PCM. The PCM asks female patients 86 questions (80 for men) reflecting symptoms plus psychological distress and performance status. The PCM has seven subscales: General Physical Symptoms, Treatment Side Effects, Distress, Despair, Impaired Performance, Impaired Ambulation, and QOL. PCM subscale scores are normalized into T scores.
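For readers unfamiliar with T scores, the conventional transformation rescales a raw score so that the reference population has a mean of 50 and a standard deviation of 10. The Python sketch below illustrates that general formula only; the function name is hypothetical and the normative mean and SD are made-up placeholders, not published PCM norms.

```python
def t_score(raw: float, norm_mean: float, norm_sd: float) -> float:
    """Convert a raw subscale score to a T score (reference mean 50, SD 10)."""
    if norm_sd <= 0:
        raise ValueError("norm_sd must be positive")
    return 50.0 + 10.0 * (raw - norm_mean) / norm_sd


# Placeholder norms for illustration only (not PCM values).
example = t_score(raw=18.0, norm_mean=12.0, norm_sd=6.0)
print(example)  # a raw score one SD above the reference mean
```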

e/Tablet software was modified to deliver multiple PRO surveys. Individual survey instruments are selected using a modular survey selection screen, and electronically concatenated into a single stream of questions that are presented to the patient with appropriate instructions corresponding to the individual instrument. The Functional Assessment of Cancer Therapy General scale (FACT-G) (Cella et al. 1993), Functional Assessment of Cancer Therapy-Breast scale (FACT-B; disease-specific subscale that combines with the FACT-G) (Brady et al. 1997), MD Anderson Symptom Inventory (MDASI) (Cleeland, Mendoza, and Wang 2000), Functional Assessment of Chronic Illness Therapy-Fatigue (FACIT-Fatigue; symptom-specific subscale that combines with the FACT-G) (Yellen et al. 1997), and Self-Efficacy (Lorig et al. 1989) scales were selected because they: (a) capture data of greatest importance to comprehensive, patient-centered, oncology care; and (b) represent well-recognized, frequently used, PRO instruments. Approval was obtained from the author of each survey. An eight-item feasibility and a 19-item satisfaction with care questionnaire were also programmed onto e/Tablets. Description of completion of electronic and paper instruments appears under “Procedures.”

Technology Requirements

A wireless frequency site survey was conducted in the Duke University Medical Center (Durham, NC) cancer clinics. Duke has a secure WEP-enabled WiFi Protected Access system, across which data travel wirelessly from e/Tablets to a fully dedicated local server. The vendor's software was reconciled with Duke's wireless system.

Database

An ANSI-compliant database, which uses Microsoft SQL Server (version 08.00.0760; Microsoft, Redmond, WA), warehouses the Duke e/Tablet data behind the Duke firewall. The database is populated by information entered into the e/Tablets by patients, and is backed up nightly. Data can be viewed or extracted using a Microsoft Access 2003 front-end (Microsoft, Redmond, WA), or extracted and downloaded for analysis using a standard statistical package (e.g., SAS; SAS, Cary, NC).

Procedures

Attending physicians or mid-level providers suggested the study to patients during clinic visits. The research nurse approached potential participants in the clinic waiting area, described the e/Tablets and study purpose, and obtained informed consent. A “Patient Flow Protocol” for handing out e/Tablets, identifying appropriate instruments for each participant at each timepoint, collecting e/Tablets, and getting reports to clinicians was approved by all senior clinical staff. A “Clinical Response Thresholds Protocol” was developed to standardize response to urgent symptom scores.

At each of four study visits within 6 months, participants first completed a paper version of one of four instruments (FACT-B, MDASI, FACIT-Fatigue, Self-Efficacy), and then completed electronic versions of the PCM, FACT-B, MDASI, FACIT-Fatigue, and Self-Efficacy surveys. For each patient, the visit at which each paper instrument was administered was randomly ordered. Participants completed an electronically administered demographics questionnaire at the first visit. An eight-item satisfaction with e/Tablet questionnaire and a 19-item satisfaction with care questionnaire were also completed via e/Tablet at each visit.
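The random ordering of paper instruments across visits can be pictured as a random permutation of the four instruments for each patient. The Python sketch below is one way to do this; the source does not describe the actual randomization mechanism, so the use of a simple shuffle, the function name, and the seed are all assumptions.

```python
import random

PAPER_INSTRUMENTS = ["FACT-B", "MDASI", "FACIT-Fatigue", "Self-Efficacy"]


def assign_paper_schedule(rng: random.Random) -> dict[int, str]:
    """Map each of the four study visits to one paper instrument, in random order."""
    order = PAPER_INSTRUMENTS.copy()
    rng.shuffle(order)
    return {visit: instrument for visit, instrument in enumerate(order, start=1)}


# The seed is arbitrary; in practice each patient would get an independent draw.
schedule = assign_paper_schedule(random.Random(2006))
for visit, instrument in schedule.items():
    print(f"Visit {visit}: paper {instrument}")
```

Every patient still completes each paper instrument exactly once; only the visit at which it appears varies.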

Analysis

Analyses included all study surveys and questionnaires completed between March 19, 2006 and October 31, 2006.

All analyses were conducted using SAS 9.1. Results were summarized using descriptive statistics. Cronbach's α's were calculated to ensure internal consistency of the subscales. Mean subscale scores were calculated using published algorithms, visually inspected, and compared with published norms. Paper and electronic mean subscale scores were compared using paired Student's t-tests and verified with Sign tests. A two-sided p<.05 constituted statistical significance.
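The study's analyses were run in SAS. For illustration only, the Python sketch below shows how two of the named statistics, Cronbach's α and the exact two-sided Sign test on paired differences, are conventionally computed. The function names and the small data set are hypothetical and do not come from the study.

```python
from math import comb
from statistics import variance


def cronbach_alpha(items: list[list[float]]) -> float:
    """Cronbach's alpha; items[i][j] is respondent j's score on item i."""
    k = len(items)
    totals = [sum(scores) for scores in zip(*items)]  # per-respondent total score
    item_var = sum(variance(item) for item in items)
    return k / (k - 1) * (1 - item_var / variance(totals))


def sign_test_p(diffs: list[float]) -> float:
    """Exact two-sided sign test p-value; zero differences are dropped."""
    pos = sum(d > 0 for d in diffs)
    neg = sum(d < 0 for d in diffs)
    n, k = pos + neg, min(pos, neg)
    if n == 0:
        return 1.0
    tail = sum(comb(n, i) for i in range(k + 1)) / 2 ** n
    return min(1.0, 2 * tail)


# Made-up scores: 3 items answered by 5 respondents.
demo_items = [[3, 4, 2, 4, 1], [3, 3, 2, 4, 0], [4, 4, 1, 3, 1]]
# Made-up paper-minus-electronic differences for 6 respondents.
demo_diffs = [1, -1, 0, 2, 1, 1]
print(f"alpha = {cronbach_alpha(demo_items):.2f}")
print(f"sign test p = {sign_test_p(demo_diffs):.3f}")
```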

The main hypothesis tested, and the one on which the sample size estimate was based, was that there would be no significant difference between subscale scores derived from data collected via paper and the same scores derived from data collected electronically via e/Tablets. If the true mean difference between paper and e/Tablet scores was greater than half the standard deviation of the mean difference, then e/Tablet data collection would not be considered as accurate as paper data collection. The statistical hypothesis tested was H0: μd=0 versus H1: μd≠0, where μd is the unknown mean difference between the subscale scores calculated from data collected via paper and via e/Tablets. The study's sample size goal of 60 patients gave the test for each subscale a type I error rate of 0.05 and a power of 0.968, without accounting for multiple testing.
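The stated power can be roughly checked with a normal approximation, assuming the 0.968 figure refers to detecting a true mean difference of half a standard deviation with 60 paired observations. The Python sketch below is an approximation rather than the authors' calculation; an exact computation based on the noncentral t distribution would give a slightly different value.

```python
from math import sqrt
from statistics import NormalDist


def paired_power_normal_approx(effect_size: float, n: int, alpha: float = 0.05) -> float:
    """Approximate two-sided power for a paired test of H0: mean difference = 0.

    effect_size is the true mean difference divided by the SD of the differences.
    """
    z = NormalDist()
    z_crit = z.inv_cdf(1 - alpha / 2)
    shift = effect_size * sqrt(n)
    # Probability of rejecting in the upper tail plus the (tiny) lower tail.
    return (1 - z.cdf(z_crit - shift)) + z.cdf(-z_crit - shift)


power = paired_power_normal_approx(effect_size=0.5, n=60)
print(f"approximate power: {power:.3f}")
```

Under these assumptions the approximation comes out at roughly 0.97, in line with the reported value.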

Funding and Ethical Approval

This study was funded through an Outcomes Research service agreement with Pfizer Inc. and approved by the Duke University Health System Institutional Review Board.

RESULTS

Participant Population

Of 73 referred and eligible patients, 66 provided informed consent. Baseline characteristics for participants (Table 1) were: non-Caucasian, 22 percent; ≥60 years of age, 38 percent; no college degree, 49 percent. Of the seven eligible women who declined to participate, three (43 percent) were non-Caucasian and mean age was 59 (SD 6; range 52–68).

Table 1.

Baseline Characteristics of the e/Tablet Study Population

| Characteristic | Frequency or Mean (SD) | % or Range |
|---|---|---|
| Total N | 66 | |
| Female gender | 66 | 100 |
| Age (years) | 55 (12) | 32–85 |
| Age <40 | 9 | 14 |
| Age 40–49 | 14 | 21 |
| Age 50–59 | 18 | 27 |
| Age 60–69 | 20 | 30 |
| Age ≥70 | 5 | 8 |
| Race/ethnicity | | |
| White/Caucasian | 51 | 77 |
| Black/African American | 13 | 20 |
| Chinese | 1 | 2 |
| Not stated | 1 | 2 |
| Education | | |
| Some high school/high school diploma | 9 | 14 |
| Some college/associate or technical degree | 23 | 35 |
| Bachelor degree | 16 | 24 |
| Some graduate school/graduate degree | 18 | 27 |
| Marital status | | |
| Married or permanent partner | 45 | 68 |
| Divorced or separated | 13 | 20 |
| Widowed | 5 | 8 |
| Not stated or missing | 3 | 5 |
| Metastatic breast cancer | 40 | 61 |
| Receiving infusional chemotherapy | 60 | 91 |

Feasibility and Acceptability of e/Tablets in Academic Oncology

An initial indication of e/Tablets' acceptability lies in the fact that 90 percent of screened, eligible patients enrolled in the study.

Feasibility and acceptability of e/Tablets were explored through analysis of participants' responses to an eight-item questionnaire (Table 2) delivered via e/Tablet at each of four study visits. Responses to this questionnaire have previously been presented (Abernethy et al. 2007). Highlights were that:

Table 2.

Logistical Feasibility with e/Tablets, Satisfaction, and Use of e/Tablets to Track Patient Concerns

| Question and Responses for the First Visit | Frequency | % |
|---|---|---|
| Total N | 64 | |
| Logistics of using the e/Tablets: | | |
| How easy was it to read the e/Tablet? | | |
| Very easy | 57 | 89 |
| Somewhat easy | 3 | 5 |
| Neither difficult nor easy | 2 | 3 |
| Somewhat difficult | 1 | 2 |
| Skipped question | 1 | 2 |
| How easy was it to use the e/Tablet computer to respond to the questions? | | |
| Very easy | 59 | 92 |
| Somewhat easy | 4 | 6 |
| Skipped question | 1 | 2 |
| How easy was it to navigate with the pen on the e/Tablet computer? | | |
| Very easy | 60 | 94 |
| Somewhat easy | 3 | 5 |
| Skipped question | 1 | 2 |
| Was the weight of the e/Tablet computer comfortable for your use? | | |
| Very comfortable | 49 | 77 |
| Somewhat comfortable | 8 | 13 |
| Neither comfortable nor uncomfortable | 1 | 2 |
| Somewhat uncomfortable | 4 | 6 |
| Very uncomfortable | 1 | 2 |
| Skipped question | 1 | 2 |
| In general, how satisfied were you with using the e/Tablet to report your symptoms? | | |
| Very satisfied | 22 | 34 |
| Satisfied | 26 | 41 |
| Neither satisfied or dissatisfied | 7 | 11 |
| Somewhat dissatisfied | 3 | 5 |
| Very dissatisfied | 4 | 6 |
| Skipped question | 2 | 3 |
| Would you recommend that other patients use the e/Tablet? | | |
| Yes | 54 | 84 |
| No | 0 | 0 |
| I don't know | 8 | 13 |
| Skipped question | 2 | 3 |
| Use of e/Tablets to track patient concerns | | |
| Did using the e/Tablet help you to remember when you were experiencing symptoms such as pain, fatigue, and depression? | | |
| Yes | 42 | 66 |
| No | 9 | 14 |
| I don't know | 6 | 9 |
| I haven't seen my doctor yet | 5 | 8 |
| Skipped question | 2 | 3 |
| Did the e/Tablet encourage you to discuss medical issues with your doctor that you might otherwise not have discussed? | | |
| Yes | 16 | 25 |
| No | 21 | 33 |
| I don't know | 13 | 20 |
| I haven't seen my doctor yet | 12 | 19 |
| Skipped question | 2 | 3 |

- Ninety-four percent of participants indicated that the e/Tablet was either “very easy” or “somewhat easy” to read.

- Ninety-eight percent of participants indicated that the e/Tablet was “easy to use.”

- Ninety-nine percent of participants reported that it was “easy to navigate with the pen.”

- Ninety percent of participants indicated that the weight of the e/Tablet was either “very comfortable” (77 percent) or “somewhat comfortable” (13 percent).

- At the first visit, 75 percent of participants indicated that they were satisfied with the e/Tablets for reporting symptoms; this proportion was higher at later visits (85, 88, 79 percent).

- At the first visit, 84 percent of participants indicated that they would recommend the e/Tablets to other patients; this proportion increased to 94 percent by the fourth visit.

- Seventy-four percent (adjusted for skipped and “I haven't seen my doctor yet” responses) of participants felt that the e/Tablet system helped them remember symptoms they had experienced.

- Thirty-two percent (adjusted for skipped and “I haven't seen my doctor yet” responses) reported that the e/Tablets encouraged them to discuss with their physician medical issues that they might have otherwise forgotten.
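The two adjusted percentages can be reproduced from the first-visit counts in Table 2 by removing skipped and “I haven't seen my doctor yet” responses from the denominator. The short Python sketch below shows that arithmetic; it assumes the adjustment was applied to first-visit data, and the function name is hypothetical.

```python
def adjusted_percent(yes: int, total: int, excluded: int) -> float:
    """Percentage of 'yes' among respondents left after removing excluded answers."""
    denominator = total - excluded
    if denominator <= 0:
        raise ValueError("no respondents left after exclusions")
    return 100.0 * yes / denominator


# First-visit counts from Table 2 (N = 64); excluded = skipped + not yet seen by doctor.
remembered = adjusted_percent(yes=42, total=64, excluded=2 + 5)
discussed = adjusted_percent(yes=16, total=64, excluded=2 + 12)
print(round(remembered), round(discussed))  # 74 32
```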

Validation of e/Tablets-Based PRO Surveys: Comparison of Paper and Electronic Data

To evaluate the utility of e/Tablets for research purposes, subscale scores were calculated for each of the surveys collected in both electronic and paper versions (FACT-G, FACT-B, MDASI, FACIT-Fatigue, Self-Efficacy). Cronbach's α coefficients were calculated to verify internal consistency (Table 3); all coefficients were acceptable and generally better than those previously published. Electronic scores were matched to paper scores for the timepoint at which the patient completed both the paper and the electronic version of the survey in question. Differences between electronic and paper scores were compared using raw scores and paired Student's t-tests; results were verified with the Sign test (Table 3). Paper and electronic versions of the FACT-B, MDASI, FACIT-Fatigue, and three of four FACT-G subscales were not significantly different. FACT-G Social Well Being and all Self-Efficacy subscales were significantly dissimilar.

Table 3.

Cronbach's α Coefficients and Mean Subscale Scores for Paper and Electronic Instruments

| Scale/Subscale | N* | Paper α (Raw) | Paper α (Standardized) | Electronic α (Raw) | Electronic α (Standardized) | Published α (Reference) | Paper Raw Mean (SD) | Electronic Raw Mean (SD) | Difference Mean (SD) | t-Test p-Value† |
|---|---|---|---|---|---|---|---|---|---|---|
| FACT-G Physical Well-Being | 58 | 0.89 | 0.89 | 0.90 | 0.90 | 0.82 (1); 0.81 (2) | 20.41 (6.27) | 20.49 (5.86) | 0.07 (1.99) | .7763 |
| FACT-G Social Well-Being | 56 | 0.84 | 0.86 | 0.87 | 0.89 | 0.69 (1); 0.69 (2) | 23.24 (5.25) | 22.27 (5.69) | −0.97 (2.53) | .0060 |
| FACT-G Emotional Well-Being | 58 | 0.78 | 0.79 | 0.83 | 0.83 | 0.74 (1); 0.69 (2) | 18.39 (4.40) | 18.66 (4.47) | 0.27 (2.23) | .3526‡ |
| FACT-G Functional Well-Being | 58 | 0.87 | 0.87 | 0.85 | 0.86 | 0.80 (1); 0.86 (2) | 17.98 (6.06) | 17.64 (5.95) | −0.34 (2.55) | .3112 |
| FACT-B Breast Cancer Supplemental Scale | 55 | 0.67 | 0.66 | 0.68 | 0.67 | 0.63 (2) | 24.98 (5.76) | 24.49 (5.76) | −0.49 (2.76) | .1955 |
| MDASI Symptom | 50 | 0.87 | 0.87 | 0.87 | 0.86 | 0.87 (3) | 1.96 (1.56) | 1.82 (1.50) | −0.14 (0.97) | .3291 |
| MDASI Interference | 50 | 0.91 | 0.91 | 0.94 | 0.94 | 0.95 (3) | 2.30 (2.09) | 2.22 (2.31) | −0.08 (0.96) | .5826 |
| FACIT-Fatigue | 59 | 0.97 | 0.97 | 0.97 | 0.97 | 0.93 (4) | 34.12 (13.29) | 34.47 (13.14) | 0.36 (3.40) | .4205 |
| Self-Efficacy Other | 51 | 0.88 | 0.88 | 0.92 | 0.92 | 0.87 (5) | 67.06 (21.67) | 62.00 (23.34) | −5.06 (9.59) | .0004§ |
| Self-Efficacy Pain | 51 | 0.93 | 0.93 | 0.95 | 0.95 | 0.76 (5) | 63.92 (21.53) | 57.62 (21.88) | −6.29 (10.16) | <.0001 |
| Self-Efficacy Functioning | 52 | 0.93 | 0.93 | 0.93 | 0.93 | 0.89 (5) | 69.87 (24.51) | 63.97 (25.10) | −5.90 (12.15) | .0010 |
| Self-Efficacy Overall | 51 | 0.96 | 0.96 | 0.97 | 0.98 | Not available | 65.91 (21.31) | 59.79 (22.26) | −6.11 (9.18) | <.0001 |

References in (#): (1) Cella et al. (1993); (2) Brady et al. (1997); (3) Cleeland et al. (2000); (4) Yellen et al. (1997); (5) RCMAR Measurement Tools (2007).

*N refers to the number of patients who have a paper and electronic version of the noted instrument completed at the same visit.

†Validated with Sign Test.

‡Signed Rank Test borderline significant p-value.

§Sign Test not significant p-value.
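As a cross-check on Table 3, the paired t statistic can be recovered from each row's reported difference mean, difference SD, and N, and the same quantities give the difference in SD units, which is the scale of the half-SD criterion described under “Analysis.” The Python sketch below does this for three rows; the function name is hypothetical, and p-values are not computed because the Python standard library has no t distribution.

```python
from math import sqrt


def paired_t_from_summary(mean_diff: float, sd_diff: float, n: int) -> tuple[float, float]:
    """Return (t statistic, standardized mean difference) for paired data."""
    t = mean_diff / (sd_diff / sqrt(n))
    return t, mean_diff / sd_diff


# (difference mean, difference SD, N) as reported in Table 3.
rows = {
    "FACT-G Social Well-Being": (-0.97, 2.53, 56),
    "Self-Efficacy Pain": (-6.29, 10.16, 51),
    "FACIT-Fatigue": (0.36, 3.40, 59),
}
for name, (m, sd, n) in rows.items():
    t, d = paired_t_from_summary(m, sd, n)
    print(f"{name}: t = {t:.2f}, standardized difference = {d:.2f}")
```

A standardized difference larger than 0.5 in absolute value corresponds to a mean difference of more than half the SD of the paired differences.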

DISCUSSION

This pilot study demonstrated that: (1) e/Tablets can collect data of comparable validity to data collected by well-recognized paper-based PRO surveys; and (2) e/Tablets are feasible and acceptable to patients in an academic oncology clinic. Data yielded by paper and electronic format of certain subscales—specifically, the FACT-G Social Well-Being subscale and all Self-Efficacy subscales—were not statistically equivalent and thus we cannot recommend interchangeability of these two data collection methods for these subscales. Potential reasons for paper versus electronic discrepancies on these subscales are explored elsewhere (Abernethy et al. 2007).

e/Tablets functionally integrate the collection of clinical data and research data. They thus may exert a positive process impact; future studies might explore whether e/Tablets (or similar technology-based systems) enhance practice efficiency, support improved patient/provider communication, more expediently identify patient needs, and promote patient-centered care. In community oncology, the e/Tablet system has reduced dictation times, resulted in more accurate charting and billing, facilitated Joint Commission-compliant patient education, and enabled real-time quality monitoring (B. F. Fortner, personal communication, August 2007). In academia, demonstration that e/Tablets can collect and longitudinally warehouse research-quality PROs, which can be efficiently matched with clinical trials, will be a strong motivator for their adoption. Linking clinical and research data with administrative data will cement their appeal to institutional leaders and administrators. Administrative data have been linked with e/Tablet data in community oncology; linkages at Duke are underway.

Patients today, through models such as consumer-directed health plans, are being asked to play an increasing role in medical decision making (Hibbard et al. 2004). Patients' effectiveness in this role hinges upon “patient activation,” i.e., patients' acquisition of the skills, knowledge, and motivation to participate in their own health care (von Korff et al. 1997). This pilot study suggests that e/Tablets may promote patient activation. Participants reported that e/Tablets helped them remember symptoms, and encouraged them to discuss medical issues with their doctor. At least one-third of participants felt that the e/Tablet enhanced the patient/physician dialogue. A study designed to evaluate e/Tablets' impact on patient activation more definitively might clarify the technology's ability to facilitate it. Current directions in software development, such as creation of Spanish and low-literacy versions, raise hopes that e/Tablets might help engage patients who are otherwise challenging to activate.

The data collection, integration, and storage capabilities of e/Tablets, and of similar validated technologies, could help advance initiatives designed to use PROs to enhance quality and value in health care. Nationally, the Patient-Reported Outcomes Measurement Information System (PROMIS), part of the NIH Roadmap Re-engineering Clinical Research Initiative, is developing sophisticated methods of PRO collection, coordination, warehousing, and analysis. Internationally, countries such as Australia (Jackson 2007), England (Walley 2007), and Canada (Hailey 2007) are using health technology assessment (HTA) to evaluate the effectiveness, costs, and broad impact of medical interventions; evaluations include patient-centered metrics such as quality-adjusted life years and health state.

e/Tablets represent a possible mechanism for gathering, storing, and making available for analysis the PRO data that will become part of large-scale health services research initiatives, and that will support the national effort to optimize efficiency and value in health care. Ultimately, the success of initiatives such as PROMIS and HTA may hinge on their ability to integrate data collected through many new technology-based systems into large-scale repositories that leverage the strengths of local clinical/research data collection and management systems in service of quality, integrity, efficiency, and value goals.

Several limitations of this e/Tablets study warrant noting. This was a pilot study conducted at a single institution; it enrolled a small convenience sample; participants comprised only women; all participants had the same disease.

Acknowledgments

The authors thank all of the study participants who generously donated their time and personal information in an effort to improve the care of others. We are also deeply indebted to the clinical teams in the Duke Breast Cancer Clinic, without whom this study would not have been possible.

Disclosures: This pilot study was funded through an Outcomes Research service agreement with Pfizer, Inc. Pfizer does not have access to the individual data collected through this study. Supportive Oncology Services (SOS), Inc., as vendor, provided the hardware (i.e., the e/Tablets), which was not funded through the Pfizer agreement. Duke University Medical Center provided the wireless system and associated technical support.

Roles of contributors on this project are as follows: Amy Abernethy served as Principal Investigator and was involved in all aspects of the project. James Herndon served as lead statistician and contributed significantly to the statistical design and analysis of data. Meenal Patwardhan, Heather Shaw, H. Kim Lyerly, and Kevin Weinfurt contributed expert guidance, review, and content input during the study itself, the analysis of data, and the final presentation of results. Jane Wheeler served as lead writer who helped to frame the analysis and discussion, and contributed significantly to the content of the final manuscript. Krista Rowe served as Clinical Research Manager who oversaw all research nurse activity in conduct of the study. Laura Criscione provided critical administrative and data support.

Dr. Abernethy has a nominal consulting arrangement with SOS, Inc., developed after this study was completed; this consulting arrangement was declared to and is monitored by the Duke Conflict of Interest Committee. PROMIS is an NIH Roadmap Initiative. Dr. Weinfurt is Principal Investigator of the Duke PROMIS primary research site with a cancer supplement grant (1 U01AR052186-01); Dr. Abernethy is a co-investigator on the Duke PROMIS cancer supplement grant. No other authors or contributors have conflicts of interest to report.

Disclaimers: None.

Supporting Information

Additional supporting information may be found in the online version of this article:

Author matrix.


REFERENCES

- Abernethy A P, Herndon J E, Day J D, Hood L, Wheeler J, Patwardhan M, Shaw H, Lyerly H K. E-Tablets to Collect Research-Quality Patient-Reported Data. Journal of Clinical Oncology. 2007;25:6609.

- American Cancer Society. 2015 Goals of the American Cancer Society. 2007 [accessed on August 27, 2007]. Available at http://www.cancer.org/docroot/COM/content/div_PA/COM_4_2X_Volunteer_11087.asp.

- Brady M J, Cella D F, Bonomi A E, Tulsky D S, Lloyd S R, Deasy S, Cobleigh M, Shiomoto G. Reliability and Validity of the Functional Assessment of Cancer Therapy-Breast Quality of Life Instruments. Journal of Clinical Oncology. 1997;15:974–86. doi: 10.1200/JCO.1997.15.3.974.

- Brucker P, Yost K, Cashy J, Webster K, Cella D. General Population and Cancer Patient Norms for the Functional Assessment of Cancer Therapy-General (FACT-G). Evaluation and the Health Professions. 2005;28:192–211. doi: 10.1177/0163278705275341.

- Cella D, Fairclough D L, Bonomi P B, Kim K, Johnson D. Quality of Life in Advanced Non-Small Cell Lung Cancer (NSCLC): Results from Eastern Cooperative Oncology Group (ECOG) Study E5592. Proceedings of the American Society for Clinical Oncology. 1997;16:2a. doi: 10.1200/JCO.2000.18.3.623. (abstract 4)

- Cella D F. Methods and Problems in Measuring Quality of Life. Support Care Cancer. 1995;3:11–22. doi: 10.1007/BF00343916.

- Cella D F, Tulsky D S, Gray G, Sarafian B, Linn E, Bonomi A, Silberman M, Yellen S B, Winicour P, Brannon J. The Functional Assessment of Cancer Therapy Scale: Development and Validation of the General Measure. Journal of Clinical Oncology. 1993;11:570–9. doi: 10.1200/JCO.1993.11.3.570.

- Centers for Medicare & Medicaid Services. Medicare Launches Efforts to Improve Care for Cancer Patients: New Coverage, Better Evidence, and New Support for Improving Quality of Care. 2004 [accessed on August 27, 2007]. Available at http://www.cms.hhs.gov/apps/media/press/release.asp?Counter=1244.

- Cleeland C S, Mendoza T R, Wang X S. Assessing Symptom Distress in Cancer Patients: The M.D. Anderson Symptom Inventory. Cancer. 2000;89:1634–46. doi: 10.1002/1097-0142(20001001)89:7<1634::aid-cncr29>3.0.co;2-v. [DOI] [PubMed] [Google Scholar]

- Coates A, Porzsolt F, Osoba D. Quality of Life in Oncology Practice: Prognostic Value of EORTC QLQ-C30 Scores in Patients with Advanced Malignancy. European Journal of Cancer. 1997;33:1025–30. doi: 10.1016/s0959-8049(97)00049-x. [DOI] [PubMed] [Google Scholar]

- Dancey J, Zee B, Osoba D, Whitehead M, Lu F, Kaizer L, Latreille J, Pater J L. Quality of Life Scores: An Independent Prognostic Variable in a General Population of Cancer Patients Receiving Chemotherapy. Quality of Life Research. 1997;6:151–8. doi: 10.1023/a:1026442201191. [DOI] [PubMed] [Google Scholar]

- Drummond H E, Ghosh S, Ferguson A, Brackenridge D, Tiplady B. Electronic Quality of Life Questionnaires: A Comparison of Pen-Based Electronic Questionnaires with Conventional Paper in a Gastrointestinal Study. Quality of Life Research. 1995;4:21–6. doi: 10.1007/BF00434379. [DOI] [PubMed] [Google Scholar]

- Fortner B, Okon T, Schwartzberg L, Tauer K, Houts A C. The Cancer Care Monitor: Psychometric Content Evaluation and Pilot Testing of a Computer Administered System for Symptom Screening and Quality of Life in Adult Cancer Patients. Journal of Pain and Symptom Management. 2003;26:1077–92. doi: 10.1016/j.jpainsymman.2003.04.003. [DOI] [PubMed] [Google Scholar]

- Ganz P A. Quality of Life and the Patient with Cancer. Individual and Policy Implications. Cancer. 1994;74(suppl 4):1445–52. doi: 10.1002/1097-0142(19940815)74:4+<1445::aid-cncr2820741608>3.0.co;2-#. [DOI] [PubMed] [Google Scholar]

- Gwaltney C J, Shields A L, Shiffman S. Equivalence of Electronic and Paper-and-Pencil Administration of Patient-Reported Outcome Measures: A Meta-Analytic Review. Value in Health. 2008;11(2):322–33. doi: 10.1111/j.1524-4733.2007.00231.x. [DOI] [PubMed] [Google Scholar]

- Hailey D M. Health Technology Assessment in Canada: Diversity and Evolution. Medical Journal of Australia. 2007;187:286–8. doi: 10.5694/j.1326-5377.2007.tb01245.x.

- Hibbard J H, Stockard J, Mahoney E R, Tusler M. Development of the Patient Activation Measure (PAM): Conceptualizing and Measuring Activation in Patients and Consumers. Health Services Research. 2004;39:1005–26. doi: 10.1111/j.1475-6773.2004.00269.x.

- Jackson T J. Health Technology in Australia: Challenges Ahead. Medical Journal of Australia. 2007;187:262–4. doi: 10.5694/j.1326-5377.2007.tb01238.x.

- Johnson J R, Williams G, Pazdur R. End Points and United States Food and Drug Administration Approval of Oncology Drugs. Journal of Clinical Oncology. 2003;21:1404–11. doi: 10.1200/JCO.2003.08.072.

- Lewis G, Sharp D, Bartholomew J, Pelosi A J. Computerized Assessment of Common Mental Disorders in Primary Care: Effect on Clinical Outcome. Family Practice. 1996;13:120–6. doi: 10.1093/fampra/13.2.120.

- Lipscomb J, Gotay C C, Snyder C F. Patient-Reported Outcomes in Cancer: A Review of Recent Research and Policy. CA: A Cancer Journal for Clinicians. 2007;57:278–300. doi: 10.3322/CA.57.5.278.

- Lorig K, Chastain R L, Ung E, Shoor S, Holman H R. Development and Evaluation of a Scale to Measure Perceived Self-Efficacy in People with Arthritis. Arthritis and Rheumatism. 1989;32:37–44. doi: 10.1002/anr.1780320107.

- National Cancer Institute. The Nation's Investment in Cancer Research: A Plan and Budget Proposal for Fiscal Year 2008. 2008 [accessed on February 11, 2008]. NIH Publication No. 06–6090. Available at http://plan2008.cancer.gov/strategicobjectives3.shtml.

- National Institutes of Health. Patient-Reported Outcomes Measurement Information System: Dynamic Tools to Measure Health Outcomes from the Patient Perspective. 2007 [accessed on August 27, 2007]. Available at http://www.nihPROMIS.org.

- O'Connor K P, Hallam R S, Hinchcliffe R. Evaluation of a Computer Interview System for Use with Neuro-Otology Patients. Clinical Otolaryngology. 1989;14:3–9. doi: 10.1111/j.1365-2273.1989.tb00329.x.

- Ruland C M, White T, Stevens M, Fanciullo G, Khilani S M. Effects of a Computerized System to Support Shared Decision Making in Symptom Management of Cancer Patients: Preliminary Results. Journal of the American Medical Informatics Association. 2003;10(6):573–9. doi: 10.1197/jamia.M1365.

- Skinner H A, Allen B A. Does the Computer Make a Difference? Computerized Versus Face-to-Face Versus Self-Report Assessment of Alcohol, Drug, and Tobacco Use. Journal of Consulting and Clinical Psychology. 1983;51:267–75. doi: 10.1037//0022-006x.51.2.267.

- U.S. Department of Health and Human Services. Food and Drug Administration, Center for Drug Evaluation and Research (CDER), Center for Biologics Evaluation and Research (CBER), Center for Devices and Radiological Health (CDRH). Guidance for Industry-Patient-Reported Outcome Measures: Use in Medical Product Development to Support Labeling Claims. 2007 [accessed on August 27, 2007]. Available at http://www.fda.gov/cber/gdlns/prolbl.pdf.

- Velikova G, Booth L, Smith A B, Brown P M, Lynch P, Brown J M, Selby P J. Measuring Quality of Life in Routine Oncology Practice Improves Communication and Patient Well-Being: A Randomized Controlled Trial. Journal of Clinical Oncology. 2004;22(4):714–24. doi: 10.1200/JCO.2004.06.078.

- Velikova G, Wright E P, Smith A B, Cull A, Gould A, Forman D, Perren T, Stead M, Brown J, Selby P J. Automated Collection of Quality-of-Life Data: A Comparison of Paper and Computer Touch-Screen Questionnaires. Journal of Clinical Oncology. 1999;17(3):998–1007. doi: 10.1200/JCO.1999.17.3.998.

- Von Korff M, Gruman J, Schaefer J, Curry S J, Wagner E H. Collaborative Management of Chronic Illness. Annals of Internal Medicine. 1997;127:1097–102. doi: 10.7326/0003-4819-127-12-199712150-00008.

- Walley T. Health Technology Assessment in England: Assessment and Appraisal. Medical Journal of Australia. 2007;187:283–5. doi: 10.5694/j.1326-5377.2007.tb01244.x.

- Yarnold P R, Stewart M J, Stille F C, Martin G J. Assessing Functional Status of Elderly Adults via Microcomputer. Perceptual and Motor Skills. 1996;82:689–90. doi: 10.2466/pms.1996.82.2.689.

- Yellen S B, Cella D F, Webster K, Blendowski C, Kaplan E. Measuring Fatigue and Other Anemia-Related Symptoms with the Functional Assessment of Cancer Therapy (FACT) Measurement System. Journal of Pain and Symptom Management. 1997;13:63–74. doi: 10.1016/s0885-3924(96)00274-6.

Supplementary Materials

Author matrix.