Abstract

Three experiments with rats examined reacquisition of an operant response after either extinction or a response-elimination procedure that included occasional reinforced responses during extinction. In each experiment, reacquisition was slower when response elimination had included occasional reinforced responses, although the effect was especially evident when responding was examined immediately following each response-reinforcer pairing during reacquisition (Experiments 2 and 3). An extinction procedure with added noncontingent reinforcers also slowed reacquisition (Experiment 3). The results are consistent with research in classical conditioning (Bouton, Woods, & Pineño, 2004) and suggest that rapid reacquisition after extinction is analogous to a renewal effect that occurs when reinforced responses signal a return to the conditioning context. Clinical implications are also discussed.

Keywords: extinction, partial reinforcement, reacquisition, operant conditioning, rats

In classical conditioning, reacquisition refers to the return of conditioned responding following extinction when the conditioned stimulus (CS) and unconditioned stimulus (US) are again paired (Bouton, 1993; Rescorla, 2001b). Reacquisition, or reconditioning, is often more rapid than initial conditioning (see Bouton, 2002). Rapid reacquisition has been found in the rabbit nictitating membrane response (e.g., Macrae & Kehoe, 1999; Napier, Macrae, & Kehoe, 1992) and rat appetitive conditioning paradigms (e.g., Ricker & Bouton, 1996).

The finding that reacquisition is rapid suggests that the original learning (e.g., a CS-US association) is saved, not destroyed, following extinction (e.g., Bouton, 1993; Kehoe & Macrae, 1997; Pavlov, 1927; Rescorla, 2001a; cf. Rescorla & Wagner, 1972). One explanation of rapid reacquisition is that the savings of what was learned during conditioning means that less new learning is necessary to cause a return of the conditioned response (e.g., Kehoe, 1988). However, reacquisition is not always rapid (e.g., Bouton, 1986; Hart, Bourne, & Schachtman, 1995). It can also be slower than initial conditioning, which poses a problem for accounts, such as that of Kehoe (1988), that attribute rapid reacquisition merely to savings of the CS-US association after extinction. Slow reacquisition depends on extensive extinction training (Bouton, 1986) and on the subject being in the context in which extinction occurred (Bouton & Swartzentruber, 1989). These findings suggest that slow reacquisition could be due to the context retrieving extinction learning (e.g., a CS-no US association; Bouton, 1993). In a corresponding way, rapid reacquisition could be due to the context retrieving what was learned in conditioning.

Ricker and Bouton (1996) found that reacquisition could be either rapid or slow in an appetitive conditioning paradigm in which a tone CS was paired with a food-pellet US. Based in part on this finding, they suggested that reacquisition is controlled by a “trial-signaling” mechanism. The trial-signaling view (Ricker & Bouton, 1996) is consistent with Capaldi’s (1967, 1994) sequential learning theory which suggests that previous trials can come to control responding on subsequent trials. Trial signaling can develop when an animal learns that a certain type of trial, specifically a reinforced or a nonreinforced trial, reliably signals the type of upcoming trial. During conditioning, the CS always signals the US. As a result, animals learn a CS-US association, and they might also learn that reinforced trials follow other reinforced trials. During extinction, in contrast, the CS no longer signals the US. Thus, animals learn a CS-no US association (e.g., Bouton, 1993), and they might also learn that nonreinforced trials follow other nonreinforced trials. Basically, the type of recent trial (reinforced or nonreinforced) becomes part of the context that can control responding to the CS (see Bouton, 1993). Consistent with this idea, Ricker and Bouton (1996) found greater conditioned responding after reinforced than after nonreinforced trials during reacquisition, presumably because the type of trial signaled conditioning or extinction, respectively.

The explanation of rapid reacquisition, according to the trial-signaling view, is that it is at least partly a renewal effect (e.g., Bouton & King, 1983) in which reinforced trials after extinction return the animal to the conditioning “trial context” (see Bouton, 1993, 2002). Trial signaling is more likely to develop and lead to rapid reacquisition in paradigms with many conditioning trials (rabbit nictitating membrane, e.g., Napier et al., 1992) than in paradigms with relatively few conditioning trials (fear conditioning, e.g., Bouton, 1986; taste aversion, e.g., Hart et al., 1995). Support for these ideas has been reported in appetitive conditioning with rats, in which rapid reacquisition occurred when there were many conditioning trials, but slow reacquisition occurred when there were only a few conditioning trials (Ricker & Bouton, 1996). In addition, using rabbit nictitating membrane and heart rate conditioning, Weidemann and Kehoe (2003) found that the rate of reacquisition was reduced when only a limited number of acquisition trials occurred before extended extinction.

One implication of the trial-signaling view is that response-elimination procedures that somehow weaken the link between reinforced trials and other reinforced trials should slow down rapid reacquisition. Bouton (2000) suggested that presenting occasional reinforced trials along with nonreinforced trials during extinction (i.e., a partial reinforcement schedule, “PRF”) might allow reinforced trials to become associated with extinction as well as conditioning. Reinforced trials should be less likely to cause a renewal of conditioned responding when reintroduced after extinction if they are part of the “trial context” of both conditioning and extinction. Specifically, if a reinforced trial does not necessarily signal another reinforced trial, then an animal should be slower to reacquire conditioned responding than if reinforced trials were associated only with conditioning. Although a PRF schedule would slow the complete loss of conditioned responding compared to extinction (e.g., Gibbs, Latham, & Gormezano, 1978), “lean” schedules that introduce only occasional reinforced trials should reduce responding from its original level and also slow rapid reacquisition.

Bouton, Woods, and Pineño (2004) found support for these predictions in a set of classical appetitive conditioning experiments with rats. Compared to a typical extinction procedure, presenting a small number of reinforced trials during the response-elimination phase (i.e., a PRF procedure) slowed the loss of conditioned responding to the CS, but most importantly, also slowed the rate of reacquisition when CS-US pairings were fully resumed. Reacquisition after extinction was rapid, whereas the PRF procedure slowed this rapid reacquisition (even though it was still faster than initial conditioning). This finding is inconsistent with several theoretical approaches. For example, according to the Pearce-Hall model of conditioning (Pearce & Hall, 1980), occasional reinforced trials should maintain surprise and attention to the CS, and compared with simple extinction should therefore increase (rather than decrease) the rate of reacquisition. In addition, the model of Kehoe (1988) predicts that occasional reinforced trials during response elimination should maintain a stronger CS-US association than extinction and therefore increase (rather than decrease) the rate of reacquisition. The effect of the PRF procedure may be uniquely consistent with the trial-signaling view (Bouton et al., 2004; see also Capaldi, 1994).

The finding that rapid reacquisition can be slowed by a PRF response-elimination procedure is both practically and theoretically significant. In particular, it may have important implications for the treatment of maladaptive behaviors, such as substance abuse, overeating, and gambling. For example, the finding suggests that an occasional lapse in behavior, such as smoking a cigarette occasionally while trying to quit, could be more effective than complete abstinence at preventing a full-blown relapse because it would help break the connection between smoking one cigarette and then “binging” on others (e.g., Bouton, 2000; Marlatt & Gordon, 1985; cf. Alessi, Badger, & Higgins, 2004; Heil, Alessi, Lussier, Badger, & Higgins, 2004, for support of abstinence). However, cigarette smoking, like drug self-administration, overeating, and gambling, involves an operant behavioral component in which a voluntary action is emitted to earn the reinforcer. In contrast, classical conditioning involves no voluntary action. Although operant conditioning involves similar associative learning (e.g., an association is learned between a response and reinforcer rather than a CS and a US; e.g., Adams & Dickinson, 1981; Colwill, 1994; Rescorla, 1987), it is clearly necessary to investigate the effects of presenting occasional reinforced responses during response elimination on reacquisition in an operant conditioning paradigm. Reinforced responses in operant conditioning are analogous to reinforced trials in classical conditioning.

The present experiments were thus designed to examine reacquisition of an operant, rather than a classical, conditioned response. Although rapid reacquisition of an operant response is assumed to occur when the response-reinforcer contingency is reintroduced after extinction (e.g., Bouton & Swartzentruber, 1991), information about reacquisition in operant conditioning appears to be sparse. Bullock and Smith (1953) reported rapid reacquisition of an operant lever-press response in rats when conditioning and extinction sessions were alternated on the same day. In the present experiments, rats earning occasional reinforcers for responding during a response-elimination phase were predicted to show slower reacquisition than rats earning no reinforcers in a traditional extinction procedure.

Experiment 1

Experiment 1 involved a 2 × 2 factorial design. After lever-press training for food reinforcement on a variable interval 30-s (VI-30 s) schedule, there was a response-elimination phase during which half the rats received no reinforcers for lever pressing and thus underwent extinction (“Ext”), whereas the other half occasionally received reinforcers for lever pressing on a lean PRF schedule (“Prf”). On a final test, the groups were compared on the rapidity with which the lever-press response was reacquired when a response-reinforcer contingency was reintroduced. Slower reacquisition was predicted after partial reinforcement than after extinction, because response elimination as well as acquisition contained some reinforced responses (cf. “trial context;” e.g., Ricker & Bouton, 1996).

Reacquisition was examined with two schedules of reinforcement, both of which were leaner than the VI-30 s schedule used in conditioning. The rats could again earn food pellets, but now for the first response after either every two (Ext2 and Prf2) or eight (Ext8 and Prf8) minutes on average. There were two reasons for using leaner schedules during reacquisition than acquisition. First, the longer intervals between reinforcers would allow reacquisition to be examined over a protracted period of time. Second, returning the rats to the original VI-30 s schedule might provide an especially potent contextual cue for conditioning that could potentially cause an ABA renewal effect; thus, by using a slightly different schedule on the test, we expected to minimize the likelihood of this.

Method

Subjects

Thirty-two female Wistar rats (Charles River, Quebec, Canada) ranging in age from 75–90 days and weighing 200–300 g (at the start of the experiment) were used. They were housed individually in suspended stainless steel cages in a colony room. The rats were food deprived one week prior to the start of the experiment and maintained at 80% of their free-feeding weights. They had free access to water in the home cages. The experiment was conducted on consecutive days during the light portion of a 16:8-hr light-dark cycle. There was one session per day.

Apparatus

All experimental sessions occurred in two sets of four Skinner boxes (Med Associates, Georgia, VT) which were located in separate rooms. The two sets contained different contextual cues but were not used for this purpose in the present experiments. Type of box was counterbalanced over groups, and each rat received all sessions in the same box. Each box measured 30.5 × 24.1 × 21 cm (l × w × h) and was housed in a sound-attenuation chamber. The front and back walls were brushed aluminum; the side walls and ceiling were clear acrylic plastic. The rats entered the box through a door in the right wall. A 5.1 × 5.1 cm recessed food cup was centered in the front wall and positioned 2.5 cm above the floor. A thin, flat 4.8 cm stainless steel operant lever protruded 1.9 cm from the front wall and was positioned 6.2 cm above the grid floor and located to the left of the food cup. In one set of four boxes, the floor consisted of stainless steel bars, 0.48 cm in diameter, spaced 1.6 cm center to center and mounted parallel to the front wall. In addition, both the ceiling and left wall of the box had three 2 cm black stripes, spaced 2.5 cm apart, and stretching diagonally between two corners. The back wall contained three 8.3 cm dark squares arranged diagonally. In the other set of boxes, the floor consisted of similar bars, except that the height of the bars was staggered such that the height of adjacent bars differed by 1.6 cm. The other visual cues were absent. In both sets of boxes, illumination was provided by two 7.5-W white incandescent bulbs mounted to the ceiling of the sound-attenuation chamber. Ventilation fans provided a background noise of approximately 60 dB. The reinforcer was one 45-mg food pellet (Research Diets, New Brunswick, NJ). Computer equipment located in a separate room controlled the apparatus and data collection.

Procedure

Shaping and conditioning

All rats received two 30-min sessions (one per day) of lever-press training (shaping). Food-pellet reinforcers were delivered for successive approximations of the lever-press response until the rat began pressing the lever independently and initially earning reinforcers on a continuous schedule of reinforcement. After emitting 30–40 lever presses, the rats were switched to a fixed-ratio, FR-7, schedule of reinforcement until five pellets were earned. The rats were then put on a VI-30 s schedule of reinforcement (interval range = 5–55 s, including all intervals that were multiples of 5 s) for the remainder of shaping. Unless otherwise stated, for any VI schedule, the computer randomly sampled each interval without replacement. If a rat was not pressing the lever reliably by the end of the second shaping session, then it received an extra session later the same day. Lever-press training was followed by five 30-min sessions of conditioning (one per day) on a VI-30 s schedule of reinforcement in order to increase the rate of lever pressing. Following conditioning, the rats were matched on their responding and assigned to Groups Ext2, Prf2, Ext8, and Prf8 (ns = 8).
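The sampling rule for the VI schedule can be illustrated with a short sketch. This is not the control program used in the experiments: the function names are hypothetical, and refilling and reshuffling the pool once every interval has been used is an assumption, because the text states only that each interval was sampled randomly without replacement.

```python
import random
from typing import List, Optional


def vi_interval_pool(min_s: int = 5, max_s: int = 55, step_s: int = 5) -> List[int]:
    """Return the pool of intervals (s); defaults match the VI-30 s range in the text."""
    return list(range(min_s, max_s + 1, step_s))


def sample_vi_intervals(pool: List[int], n: int,
                        rng: Optional[random.Random] = None) -> List[int]:
    """Draw n intervals without replacement.

    Assumption: when the pool is exhausted it is refilled and reshuffled.
    """
    rng = rng or random.Random()
    drawn: List[int] = []
    bag: List[int] = []
    while len(drawn) < n:
        if not bag:
            bag = list(pool)
            rng.shuffle(bag)
        drawn.append(bag.pop())
    return drawn


pool = vi_interval_pool()
print(sum(pool) / len(pool))  # mean of the pool, in seconds
```

Because the pool runs from 5 to 55 s in 5-s steps, its mean equals the nominal 30-s value, and sampling without replacement guarantees that each block of 11 intervals has exactly that mean.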

Response elimination

After conditioning, the rats received eight 60-min sessions (one per day) that were designed to eliminate lever pressing. Half the rats (Groups Ext2 and Ext8) received traditional extinction in which lever presses were no longer followed by food. The other rats (Groups Prf2 and Prf8) received a PRF procedure during which lever presses were occasionally paired with food on a series of VI schedules (each of which had a distribution of intervals that was proportional to those used for the VI-30 s schedule). In order to reduce responding during the PRF procedure, the number of reinforced responses was gradually reduced during each session by making the VI schedule leaner every 30 minutes. For the first four sessions of this phase, the schedule of reinforcement was made leaner in increments of four minutes: VI-4 to VI-8, VI-12 to VI-16, VI-20 to VI-24, and VI-28 to VI-32 min. There were two additional sessions of VI-28 to VI-32 min, and the last two sessions contained two segments of VI-32 min. To avoid simple extinction training in the PRF groups, the schedule of reinforcement was not made leaner than VI-32 min.
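The progression of PRF schedules can be laid out as a small table in code. The sketch below rests on two assumptions that go beyond the text: that each 60-min session consisted of two 30-min segments, and that "proportional" means each VI-30 s interval was multiplied by a constant factor. All names are hypothetical.

```python
# Interval pool for the VI-30 s schedule (5-55 s in 5-s steps), as given in the Method.
VI30_POOL_S = list(range(5, 60, 5))


def scaled_pool_s(mean_min):
    """Intervals (s) proportional to the VI-30 s pool, with the given mean in minutes.

    Assumption: proportional scaling is a constant multiplier on every interval.
    """
    factor = (mean_min * 60) / 30
    return [s * factor for s in VI30_POOL_S]


# Mean VI value (min) in the first and second 30-min segment of each session.
# Assumption: two segments per 60-min session.
PRF_SESSIONS_MIN = [
    (4, 8),    # session 1
    (12, 16),  # session 2
    (20, 24),  # session 3
    (28, 32),  # session 4
    (28, 32),  # session 5
    (28, 32),  # session 6
    (32, 32),  # session 7
    (32, 32),  # session 8
]

for number, (first, second) in enumerate(PRF_SESSIONS_MIN, start=1):
    print(f"Session {number}: VI-{first} min then VI-{second} min")
```

Under these assumptions, the leanest schedule (VI-32 min) would have intervals ranging from 320 to 3,520 s.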

Reacquisition

After the last response-elimination session, the rats received two 60-min reacquisition sessions. Groups Ext2 and Prf2 were tested on a VI-2 min reinforcement schedule (interval range = 20–220 s, including all intervals that were multiples of 20 s), whereas Groups Ext8 and Prf8 were tested on a VI-8 min schedule (interval range = 80–880 s, including all intervals that were multiples of 80 s). For the reacquisition test sessions, the computer controlled the sequence of intervals for the VI schedules, so that all rats experienced the same list. The list of intervals was repeated when necessary (VI-2 min: 120, 180, 40, 60, 140, 20, 200, 220, 80, 100, 160 s; VI-8 min: 480, 720, 160, 240, 560, 80, 800, 880, 320, 400, 640 s).
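The two fixed interval lists can be checked against their nominal VI values with a few lines of code. The sketch below is illustrative only, and the function names are hypothetical. The upper bound on reinforcers per session assumes that every reinforcer is collected the instant it becomes available and that the next interval starts at that moment, which real subjects would not achieve.

```python
from itertools import cycle, islice

# Interval lists (s) as reported for the reacquisition test sessions.
VI2_LIST_S = [120, 180, 40, 60, 140, 20, 200, 220, 80, 100, 160]
VI8_LIST_S = [480, 720, 160, 240, 560, 80, 800, 880, 320, 400, 640]


def mean(values):
    return sum(values) / len(values)


def scheduled_intervals(interval_list, n):
    """First n intervals, repeating the list when it is exhausted."""
    return list(islice(cycle(interval_list), n))


def max_reinforcers(interval_list, session_s=3600):
    """Upper bound on reinforcers in one session (assumes immediate collection)."""
    elapsed = 0
    count = 0
    for interval in cycle(interval_list):
        elapsed += interval
        if elapsed > session_s:
            return count
        count += 1


print(mean(VI2_LIST_S), mean(VI8_LIST_S))  # 120.0 480.0
print(max_reinforcers(VI8_LIST_S))         # 7
```

The means equal the nominal values of 2 and 8 min, and each VI-8 min interval is four times the corresponding VI-2 min interval, so the two test schedules share the same ordering of relatively short and long intervals.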

Data Analysis

The computer recorded the total number of lever presses for each rat during each session. These data were converted to a rate (mean number of lever presses per minute). Each rat’s data from the response-elimination and reacquisition sessions were expressed and analyzed in terms of the percentage of responding during the last conditioning session. Data were analyzed using mixed-design and one-way analyses of variance (ANOVAs). A rejection criterion of p < .05 was used for all statistical tests.
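The conversion from raw counts to a percentage of baseline can be written out as follows. This is a minimal sketch with hypothetical function names, and the counts in the usage example are invented for illustration rather than taken from the data.

```python
def rate_per_min(total_presses, session_min):
    """Mean number of lever presses per minute in a session."""
    return total_presses / session_min


def percent_of_baseline(rate, baseline_rate):
    """Express a response rate as a percentage of the last-conditioning-session rate."""
    if baseline_rate <= 0:
        raise ValueError("baseline rate must be positive")
    return 100 * rate / baseline_rate


# Hypothetical rat: 600 presses in the last 30-min conditioning session,
# 60 presses in a 60-min response-elimination session.
baseline = rate_per_min(600, 30)   # 20.0 presses per minute
test_rate = rate_per_min(60, 60)   # 1.0 press per minute
print(percent_of_baseline(test_rate, baseline))  # 5.0
```

Normalizing each rat to its own baseline puts animals with different conditioned response rates on a common scale, and converting to a rate first allows the 30-min conditioning sessions to be compared with the 60-min sessions that followed.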

Results

Conditioning

The mean number of lever presses per minute on the first conditioning session was 12.4, 11.9, 13.8, and 13.7 for Groups Ext2, Prf2, Ext8, and Prf8, respectively. All groups exhibited a significant increase in responding over the conditioning sessions, F(4, 112) = 47.61. There were no main effects or interactions, Fs < 1. Mean responding per minute on the last conditioning session was 23.9, 23.7, 23.6, and 23.5 for Groups Ext2, Prf2, Ext8, and Prf8, respectively.
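The degrees of freedom reported for the Session effect follow from the design (32 rats, 4 groups, 5 conditioning sessions). The sketch below shows the standard arithmetic for a mixed design with one within-subject factor. The function name is hypothetical, and the calculation assumes that no sphericity correction was applied, which the text does not state.

```python
def mixed_anova_df(n_subjects, n_groups, n_levels):
    """Degrees of freedom for a mixed design with one within-subject factor.

    n_groups is the total number of between-subjects cells (e.g., 2 x 2 = 4).
    Assumes uncorrected (sphericity-assumed) degrees of freedom.
    """
    between_error = n_subjects - n_groups
    within_effect = n_levels - 1
    within_error = between_error * within_effect
    return {
        "between_error": between_error,
        "within_effect": within_effect,
        "within_error": within_error,
    }


print(mixed_anova_df(32, 4, 5))
# {'between_error': 28, 'within_effect': 4, 'within_error': 112}
```

The within-subject values reproduce the reported F(4, 112).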

Response Elimination

Figure 1 shows data from the response-elimination and reacquisition sessions expressed as a percentage of responding during the last conditioning session. Responding diminished rapidly in Groups Ext2 and Ext8 during extinction, whereas responding diminished more slowly in Groups Prf2 and Prf8. These observations were confirmed by a 2 (Response-Elimination Treatment) × 2 (Test Schedule) × 8 (Session) ANOVA that revealed main effects of Response-Elimination Treatment, F(1, 28) = 54.94, Session, F(7, 196) = 283.67, and a Response-Elimination Treatment × Session interaction, F(7, 196) = 39.13. All other main effects and interactions failed to reach significance, Fs ≤ 1.60. Responding on the last response-elimination session was 0.7, 9.1, 0.9, and 9.1 percent of conditioned responding for Groups Ext2, Prf2, Ext8, and Prf8, respectively.

Figure 1.

Responses per minute expressed as a percentage of responding during the last conditioning session for data collected during the eight response-elimination (E) and two reacquisition (R) sessions for Groups Ext2, Prf2, Ext8, and Prf8 in Experiment 1.

Reacquisition

The right-hand portion of Figure 1 suggests that receiving occasional reinforced responses during the response-elimination phase (Groups Prf2 and Prf8) slowed the rate of reacquisition compared to extinction (Groups Ext2 and Ext8). This was true for both schedules of reinforcement used on the test. A 2 (Response-Elimination Treatment) × 2 (Test Schedule) × 3 (Session) ANOVA on data from the last response-elimination session (E8) and the two reacquisition sessions (R1 and R2) confirmed these observations. There was a significant effect of Session, F(2, 56) = 209.65, and more important, a Session × Response-Elimination Treatment crossover interaction, F(2, 56) = 10.50. The interaction indicates that the Prf groups were slower than the Ext groups to increase their response rates after response elimination. In addition, there was a main effect of Test Schedule, F(1, 28) = 26.10, and a Session × Test Schedule interaction, F(2, 56) = 26.04, indicating greater responding on the VI-2 min schedule than on VI-8 min. The main effect of Response-Elimination Treatment, the Response-Elimination Treatment × Test Schedule interaction, and the 3-way interaction failed to reach significance, Fs ≤ 1.76.

The fact that the Response-Elimination Treatment × Test Schedule × Session interaction was not significant suggests that the effect of the PRF procedure did not depend on the test schedule of reinforcement; however, other planned analyses revealed that the slowed reacquisition pattern was especially strong with the VI-8 min schedule. For the 8-min groups, a 2 (Response-Elimination Treatment) × 3 (Session) ANOVA on data from the last response-elimination session (E8) and the two reacquisition sessions (R1 and R2) confirmed a significant Session × Response-Elimination Treatment crossover interaction, F(2, 28) = 15.43. Simple effects tests indicated that Group Prf8 maintained a significantly higher level of responding than Group Ext8 on the last response-elimination session, F(1, 14) = 6.56, but significantly less responding on the two reacquisition sessions, Fs(1, 14) ≥ 4.84. In contrast, a similar analysis of the 2-min groups revealed no Session × Response-Elimination Treatment interaction, F(2, 28) = 2.18.

Discussion

Not surprisingly, the partial reinforcement procedure caused a slower loss of responding than did simple extinction (see also Bouton et al., 2004; Gibbs et al., 1978). However, it also produced slower reacquisition. This result is analogous to findings in classical conditioning (e.g., Bouton et al., 2004). Slower reacquisition after partial reinforcement was especially clear with the VI-8 min reinforcement schedule, although there was no Response-Elimination Treatment × Test Schedule interaction.

A plausible explanation of the results (see also Bouton et al., 2004; Capaldi, 1994) is that a PRF procedure can slow reacquisition because maintaining occasional reinforced responses during response elimination allows reinforced responses to be associated with both conditioning and extinction. As a result, renewal is less likely when reinforced responses are reintroduced during reacquisition. Rapid reacquisition, in contrast, is a renewal effect that occurs after extinction when animals are returned to a reinforced “trial context.”

Experiment 2

Experiment 2 was designed to replicate and extend the results of Experiment 1. The same groups were used, but data were now recorded during reacquisition in a way that allowed a finer-grained analysis of behavior. Responding during the first reacquisition session (R1) was recorded in real time so that response rates immediately after each response-reinforcer pairing could be examined. If the type of response (reinforced or nonreinforced) comes to signal the type of subsequent responses, then the effects of such sequential learning should be especially visible after each reinforcer is earned during reacquisition (see Capaldi, 1994; Ricker & Bouton, 1996). That is, after simple extinction, each reinforced response during reacquisition constitutes a return to the conditioning context and thus could cause an immediate renewal of responding. In contrast, after response elimination in the presence of occasional reinforced responses, a single reinforced response during reacquisition is less likely to signal upcoming reinforced responses and therefore should slow the rate of reacquisition.

Method

Subjects and Apparatus

The subjects were 32 female Wistar rats of the same age and stock as in Experiment 1. They were housed and maintained as in Experiment 1, and all experimental sessions occurred in the same two sets of Skinner boxes.

Procedure

All procedural details were the same as in Experiment 1 unless otherwise noted. As described previously, all rats received two sessions of lever-press training and five sessions of conditioning on a VI-30 s schedule. The rats were then matched on their conditioning performance and assigned to Groups Ext2, Prf2, Ext8, and Prf8 (ns = 8). The rats then received eight response-elimination sessions. Half the rats received extinction and the other half received occasional reinforced responses. After response elimination, the rats received two 60-min reacquisition sessions on either a VI-2 or a VI-8 min schedule of reinforcement. The distribution of VI intervals was the same as in Experiment 1.

Data analysis

Data collapsed over the entire test session were analyzed as in Experiment 1. In addition, the computer recorded responses and reinforcers in real time (rounded to the second) during the first reacquisition session. These data made it possible to examine the number of lever presses emitted during a window of time after each reinforcer was earned. Responding in the VI-2 min groups was analyzed during the 1-min period that followed each response-reinforcer pairing; responding in the VI-8 min groups was analyzed during the corresponding 4-min windows. These periods were chosen for the following reasons. First, a period as long as the mean VI value would be problematic because additional reinforcers could be earned within that window and create considerable overlap in the data. Second, if the periods were too short (e.g., 20 s), then any food-cup behavior elicited by presentation of the reinforcer could interfere with subsequent lever pressing. Windows of half the VI value were thus chosen and viewed as a compromise, even though another reinforcer could still be earned during some of these time windows (see the list of intervals in Experiment 1). As before, the response rates were analyzed as a percentage of responding during the last conditioning session. Mixed-design and one-way ANOVAs were applied.
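The post-reinforcer window analysis can be sketched as follows. This is an illustration under stated assumptions, not the analysis code used in the experiment: the function name and the sample timestamps are hypothetical, the reinforced response itself is excluded from its own window, and a window is counted in full even if another reinforcer falls inside it.

```python
from bisect import bisect_right


def post_reinforcer_percent(press_times_s, reinforcer_times_s, window_s,
                            baseline_rate_per_min, max_reinforcers=6):
    """Response rate in the window after each reinforcer, as percent of baseline.

    Assumption: presses are counted in the interval (t, t + window_s], so the
    reinforced press at time t is not counted in its own window.
    """
    presses = sorted(press_times_s)
    results = []
    for t in reinforcer_times_s[:max_reinforcers]:
        n = bisect_right(presses, t + window_s) - bisect_right(presses, t)
        rate = n / (window_s / 60)  # presses per minute within the window
        results.append(100 * rate / baseline_rate_per_min)
    return results


# Hypothetical timestamps (s) for one rat on the VI-2 min schedule (1-min windows).
presses = [10, 12, 30, 65, 80, 200]
reinforcers = [10, 200]
print(post_reinforcer_percent(presses, reinforcers, window_s=60,
                              baseline_rate_per_min=20))  # [15.0, 0.0]
```

For the VI-8 min groups, the same function would be called with `window_s=240`. Because timestamps were rounded to the second, a press falling in the same second as the reinforced response is also excluded under this counting rule.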

Results

Conditioning

The mean number of lever presses per minute on the first conditioning session was 13.9, 13.8, 12.6, and 12.1 for Groups Ext2, Prf2, Ext8, and Prf8, respectively. All groups exhibited a significant increase in responding over the conditioning sessions, F(4, 112) = 25.78. There were no main effects or interactions, Fs < 1. Mean responding per minute on the last conditioning session was 21.9, 21.4, 21.6, and 21.6 for Groups Ext2, Prf2, Ext8, and Prf8, respectively.

Response Elimination

Results of the response-elimination phase were essentially identical to those in Experiment 1 and therefore are not shown. Once again, responding diminished rapidly in Groups Ext2 and Ext8 during extinction, whereas responding diminished more slowly, and its loss was incomplete, in Groups Prf2 and Prf8. A 2 (Response-Elimination Treatment) × 2 (Test Schedule) × 8 (Session) ANOVA confirmed main effects of Response-Elimination Treatment, F(1, 28) = 53.98, Session, F(7, 196) = 242.69, and a Response-Elimination Treatment × Session interaction, F(7, 196) = 28.84. All other main effects and interactions failed to reach significance, Fs ≤ 1.36. Responding on the last response-elimination session was 0.6, 9.1, 1.1, and 5.6 percent of conditioned responding for Groups Ext2, Prf2, Ext8, and Prf8, respectively.

Reacquisition

Fine-grained analysis

Figure 2 shows data that were collected after the first six reinforcers earned during the first reacquisition session. The data are expressed as a percentage of responding during the last conditioning session. Seven reinforcers could be earned per session on the VI-8 min schedule. However, Group Ext8 earned its first reinforcer later in the session than Group Prf8 (at minutes 19.76 and 8.90, respectively), a pattern consistent with the fact that the PRF procedure maintained more responding during response elimination. Group Ext8 therefore earned fewer reinforcers than Group Prf8, and most rats in that group earned six or fewer. In order to have sufficient subjects and statistical power, analyses therefore included only six post-reinforcer periods of responding. One rat in Group Ext2 did not earn any reinforcers and was therefore excluded from the analyses.

Figure 2.

Responses per minute expressed as a percentage of responding during the last conditioning session for data collected before the first and after the first six response-reinforcer pairings during the first reacquisition session (R1) in Experiment 2. The upper panel shows responding in 4-min periods after each response-reinforcer pairing in the VI-8 min groups. The lower panel shows responding in 1-min periods after each response-reinforcer pairing in the VI-2 min groups.

The leftmost parts of the panels in Figure 2 show that before any reinforcers were earned during the session, the Prf groups were responding more than the corresponding Ext groups, a continuation of the pattern from the response-elimination phase. However, the PRF procedure thereafter slowed the rate of responding after each response-reinforcer pairing.

A 2 (Response-Elimination Treatment) × 2 (Test Schedule) × 7 (Period) ANOVA on the periods of responding before any reinforcers were earned and after the first six reinforcers revealed significant main effects of Response-Elimination Treatment, F(1, 23) = 6.26, Test Schedule, F(1, 23) = 11.45, and Period, F(6, 138) = 46.33. More important, there was a significant Response-Elimination Treatment × Period interaction, F(6, 138) = 2.66, which suggests that the PRF schedule during response elimination had reduced the level of responding during reacquisition. The Test Schedule × Period interaction was significant, F(6, 138) = 7.90. The Response-Elimination Treatment × Test Schedule interaction was marginally significant, F(1, 23) = 3.96, p = .059. The 3-way interaction was not significant, F(6, 138) = 1.42, suggesting that the effect of the PRF procedure did not depend on the test schedule of reinforcement.

Once again, however, planned analyses of the two groups tested with each reacquisition schedule suggest that the slowed reacquisition pattern was especially clear with the VI-8 min schedule (upper panel). A 2 (Response-Elimination Treatment) × 7 (Period) ANOVA on the periods of responding before any reinforcers were earned and after the first six reinforcers confirmed significant main effects of Response-Elimination Treatment, F(1, 10) = 11.28, and Period, F(6, 60) = 25.64, and a Response-Elimination Treatment × Period interaction, F(6, 60) = 7.83. Simple effects tests indicated that Group Prf8 responded less than Group Ext8 after reinforcers 2, 4, 5, and 6, Fs ≥ 6.53, but not after reinforcer 1, F(1, 14) ≤ 2.38. The difference after reinforcer 3 approached significance, F(1, 12) = 3.99, p = .069.

For the 2-min groups (lower panel), a 2 (Response-Elimination Treatment) × 7 (Period) ANOVA for the periods of responding before any reinforcers were earned and after the first six reinforcers confirmed a significant main effect of Period, F(6, 78) = 34.88, but no main effect of Response-Elimination Treatment or a Response-Elimination Treatment × Period interaction, Fs < 1. Group Prf2 responded less than Group Ext2 in the 1-min periods after each reinforcer, but the differences were not reliable in simple effects tests, Fs ≤ 2.01. Group Ext2 earned the first reinforcer later in the session than Group Prf2 (at minutes 8.48 and 2.29, respectively).

It is worth noting that the lack of effect within the VI-2 min groups appears to be a result of the reinforcement schedule rather than the shorter (1-min) post-reinforcer window that was examined. When the VI-8 min groups were compared on responding before any reinforcers were earned and during the 1-min period after each of the first six response-reinforcer pairings, there was a main effect of Response-Elimination Treatment, F(1, 11) = 6.84, and Period, F(6, 66) = 10.65, and a Response-Elimination Treatment × Period interaction, F(6, 66) = 2.63. Group Prf8 responded less than Group Ext8 after the response-reinforcer pairings.

Whole-session analysis

When averaged over reacquisition sessions, the response rates in this experiment suggest that occasional reinforced responses during response elimination (Groups Prf2 and Prf8) had little effect on the rate of reacquisition (cf. Experiment 1). Responding on the first reacquisition session was 57.0, 76.2, 19.4, and 23.5 percent of conditioned responding for Groups Ext2, Prf2, Ext8, and Prf8, respectively. On the second reacquisition session, responding for the groups was 84.1, 106.5, 44.0, and 35.8 percent. A 2 (Response-Elimination Treatment) × 2 (Test Schedule) × 3 (Session) ANOVA on data from the last response-elimination session and the two reacquisition sessions revealed a significant main effect of Session, F(2, 56) = 197.42. A main effect of Test Schedule, F(1, 28) = 42.16, and a Test Schedule × Session interaction, F(2, 56) = 39.21, were also significant, indicating greater responding on the VI-2 min versus the VI-8 min reinforcement schedule. However, the main effect of Response-Elimination Treatment, the Response-Elimination Treatment × Session interaction, as well as other remaining interactions, failed to reach significance, Fs ≤ 2.57. The success of the finer-grained analysis of the data suggests that the failure to find slower reacquisition after PRF was an artifact of aggregating data over the entire 60-min session.

Discussion

As demonstrated in Experiment 1, partial reinforcement again slowed the loss of responding during response elimination. In the present experiment, the finding of slower reacquisition after partial reinforcement was observed in the finer-grained analyses of responding after each response-reinforcer pairing. Specifically, the PRF procedure was effective at slowing responding in the period immediately following each reinforcer; this was true when reacquisition occurred with a VI-8 min schedule of reinforcement.

Although the Prf groups responded less than the Ext groups after each response-reinforcer pairing, the slowed reacquisition effect was not visible when data were aggregated over the entire session in this experiment. The potential reasons for this are interesting and instructive. Because lever pressing was very low after extinction, rats in the Ext groups did not earn the first reinforcer, and thus re-experience the response-reinforcer contingency, until relatively late in the reacquisition session. These rats therefore had less time remaining in the session to lever press after the first reinforcer was earned, which yielded a low overall rate of responding over 60 min. In contrast, because the Prf groups earned the first reinforcer earlier in the session, they had more time to accumulate lever-press responses, which inflated their overall rate of responding. The results of Experiment 1 suggest that rats can sometimes overcome this bias. But it is evident that calculating the rate of responding after the first response-reinforcer pairing provides a fairer and more accurate assessment of reacquisition, which by definition is the return of responding that occurs when the response-reinforcer contingency is reintroduced after response elimination.
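The aggregation bias described above can be illustrated with a little arithmetic. The sketch below is hypothetical: the function name and the pre- and post-reinforcer response rates are invented for illustration, and only the first-reinforcer times (8.48 and 2.29 min, reported for Groups Ext2 and Prf2) and the 60-min session length come from the text. It assumes one constant rate before the first reinforcer and another constant rate after it.

```python
def whole_session_rate(first_reinforcer_min, pre_rate, post_rate, session_min=60.0):
    """Mean responses/min over a session, assuming one constant rate before
    the first reinforcer and another constant rate after it (a simplification)."""
    pre_responses = pre_rate * first_reinforcer_min
    post_responses = post_rate * (session_min - first_reinforcer_min)
    return (pre_responses + post_responses) / session_min

# Hypothetical rates (responses/min); only the first-reinforcer times are from the text.
ext = whole_session_rate(first_reinforcer_min=8.48, pre_rate=0.2, post_rate=12.0)
prf = whole_session_rate(first_reinforcer_min=2.29, pre_rate=2.0, post_rate=11.0)

print(f"Ext: 12.0/min after the first reinforcer, {ext:.2f}/min over the session")
print(f"Prf: 11.0/min after the first reinforcer, {prf:.2f}/min over the session")
```

With these invented numbers, the Ext rat responds faster after its first reinforcer yet shows the lower whole-session mean, which is the pattern that a whole-session average could mask.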

Slower reacquisition after PRF compared with extinction is consistent with the trial-signaling view (Bouton et al., 2004; Ricker & Bouton, 1996) and suggests that reinforced responses after extinction can signal a return to the conditioning context and set the occasion for more responding (see also Capaldi, 1994). The fact that the effect of occasional reinforced responses was especially evident in the VI-8 min schedule suggests that the effect of partial reinforcement as a response-elimination procedure may be mainly on the animal’s reaction to reinforcers that are distributed relatively widely in time. As mentioned earlier, in this method richer reinforcement schedules in reacquisition may return the animal to a closer approximation of the original VI-30 s schedule context and thus produce a stronger renewal effect.

Experiment 3

Bouton et al. (2004) found that presenting the CS and US in an explicitly unpaired manner during response elimination was even more effective than partial reinforcement at slowing rapid reacquisition in classical appetitive conditioning with rats. This finding suggests that presenting the reinforcer alone, rather than response-reinforcer pairings, might also reduce the rate of reacquisition in an operant setting. Consistent with this possibility, Baker (1990) and Rescorla and Skucy (1969) have shown that noncontingent reinforcers during operant extinction can slow the loss of responding in extinction and also reduce the response-reinstating effects of noncontingent pellets delivered subsequently.

Experiment 3 therefore examined reacquisition after extinction, partial reinforcement, and a treatment that involved noncontingent reinforcers during response elimination. Each rat in the new group (Group Yoked) was linked to a rat in Group Prf so that it received food pellets at the same time, though noncontingently, during the response-elimination phase. Group Ext was expected to show the fastest reacquisition, whereas Groups Prf and Yoked were both expected to show a slower rate of responding when response-reinforcer pairings were reintroduced. The fine-grained analysis of responding in reacquisition was used again.

Method

Subjects and Apparatus

The subjects were 24 female Wistar rats of the same age and stock as in Experiment 1. They were housed and maintained as in Experiment 1, and all experimental sessions occurred in the same two sets of Skinner boxes.

Procedure

All procedural details were the same as in Experiments 1 and 2 unless otherwise specified. As described previously, all rats received two sessions of lever-press training and five sessions of conditioning on a VI-30 s schedule. The rats were then matched on their conditioning performance and assigned to Groups Ext, Prf, and Yoked (ns = 8). The rats then received eight response-elimination sessions during which Group Ext did not receive any reinforcers. Group Prf received the procedure given to the corresponding group in Experiment 2. Each rat in Group Yoked was linked to a rat in Group Prf and received pellets at the same point in time. Group Yoked thus received the same number and temporal distribution of reinforcers as Group Prf, but not contingent on behavior. After response elimination, the rats received two 60-min reacquisition sessions on a VI-8 min schedule of reinforcement.
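The yoking arrangement can be sketched as follows. This is an illustration only, not the authors' control software: the function names, the data layout, the 1-s window, and the example times are all hypothetical. It shows that a yoked rat's pellet times are copied from its Prf partner and do not depend on its own lever presses, and that a pellet can nonetheless happen to follow a press by chance.

```python
def yoked_pellet_times(prf_reinforcer_times):
    """Return the delivery schedule for a yoked rat: the same times at which
    its Prf partner earned pellets, delivered regardless of responding."""
    return list(prf_reinforcer_times)

def adventitious_pairings(pellet_times, press_times, window_s=1.0):
    """Count pellets that happened to arrive within window_s seconds after a
    lever press (the window is an arbitrary illustrative choice)."""
    return sum(
        any(0.0 <= pellet - press <= window_s for press in press_times)
        for pellet in pellet_times
    )

# Hypothetical session data, in seconds from session start.
prf_earned = [95.0, 410.0, 1230.0]     # pellets earned by the Prf partner
yoked_presses = [12.0, 409.6, 800.0]   # lever presses by the yoked rat

schedule = yoked_pellet_times(prf_earned)
print(schedule)
print(adventitious_pairings(schedule, yoked_presses))
```

In this made-up session the yoked rat receives all three pellets at its partner's times, and one of them (at 410 s) arrives 0.4 s after a press.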

Data analysis

Data from the first reacquisition session were analyzed according to the finer-grained analysis as in Experiment 2. Data collapsed over the entire test session were analyzed as in Experiments 1 and 2.

Results

Conditioning

The mean number of lever presses per minute on the first conditioning session was 11.5, 11.4, and 11.1 for Groups Ext, Prf, and Yoked, respectively. As in the previous experiments, all groups exhibited a significant increase in responding over the conditioning sessions, F(4, 84) = 33.02. There was no main effect of Response-Elimination Treatment and no interaction, Fs(2, 21) < 1. Mean responding per minute on the last conditioning session was 21.9, 22.1, and 22.6 for Groups Ext, Prf, and Yoked, respectively.

Response Elimination

Responding diminished rapidly in Group Ext during extinction, whereas responding diminished more slowly and incompletely in Group Prf (see Figure 3). The loss of responding in Group Yoked was faster than that in Group Prf but slightly slower than that in Group Ext, although the final level resembled that of Group Ext. A 3 (Response-Elimination Treatment) × 8 (Session) ANOVA confirmed main effects of Response-Elimination Treatment, F(2, 21) = 35.73, Session, F(7, 147) = 197.03, and a Response-Elimination Treatment × Session interaction, F(14, 147) = 6.15. Pairwise comparisons confirmed that the PRF procedure maintained significantly higher responding than in both the Ext and Yoked groups during each response-elimination session, Fs(1, 21) ≥ 9.73. The yoked procedure also initially maintained more responding than in Group Ext on session 1, F(1, 21) = 3.46, p = .077, and session 2, F(1, 21) = 7.89, but this difference dissipated by session 3, Fs(1, 21) ≤ 2.07. (Recall that pellets were delivered with decreasing frequency over sessions.) Responding on the last response-elimination session was 0.7, 8.8, and 0.9 percent of conditioned responding for Groups Ext, Prf, and Yoked, respectively.

Figure 3.

Responses per minute expressed as a percentage of responding during the last conditioning session for data collected during the eight response-elimination (E) sessions for Groups Ext, Prf, and Yoked in Experiment 3.

Reacquisition

Fine-grained analysis

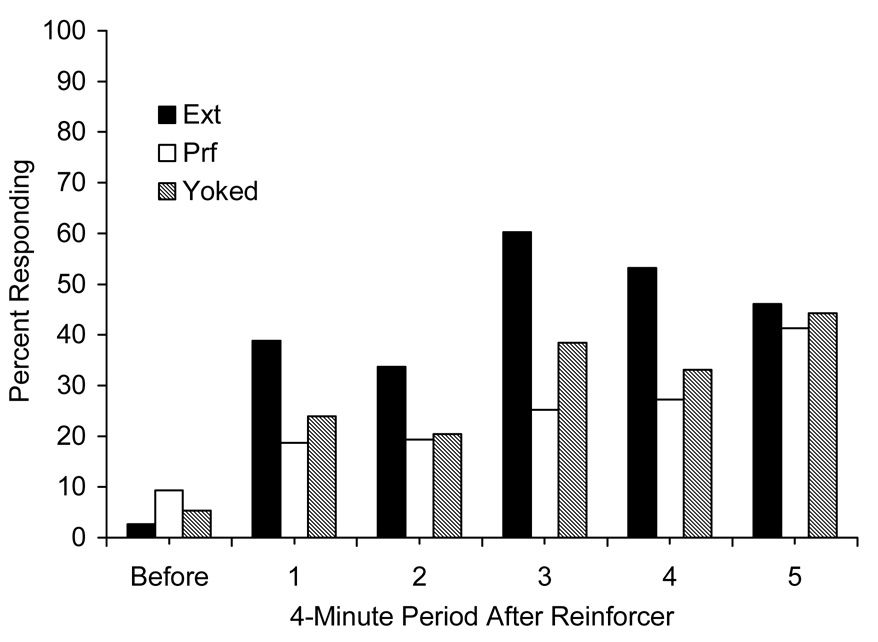

Figure 4 shows data that were collected before any reinforcer was earned and data in 4-min periods after the first five reinforcers were earned during the first reacquisition session. Analyses included only periods after the first five reinforcers due to a decreasing number of rats earning additional pellets. Two rats in Group Ext never earned a reinforcer during the session and therefore were excluded from this analysis. As in Experiment 2, the overall analysis of data (across six periods) included only rats that earned all the reinforcers analyzed, whereas the comparisons of groups on responding after each period (and the data shown in Figure 4) included all rats that earned each specific reinforcer.
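The fine-grained measure described above can be sketched in code. This is a minimal illustration under stated assumptions, not the authors' analysis script: the function name, arguments, and example data are hypothetical. It assumes each post-reinforcer window runs a fixed number of minutes, is not cut short by the next reinforcer or by the end of the session, and excludes the reinforced response itself, which is counted in the preceding period.

```python
def fine_grained_rates(press_times, reinforcer_times, baseline_rate,
                       window_min=4.0, n_reinforcers=5):
    """Return response rates as a percentage of baseline_rate for the period
    before the first reinforcer and for the window after each of the first
    n_reinforcers reinforcers. All times are minutes from session start."""
    def percent_of_baseline(start, end):
        # Count presses in (start, end]; assumes end > start.
        count = sum(start < t <= end for t in press_times)
        return 100.0 * (count / (end - start)) / baseline_rate

    earned = sorted(reinforcer_times)[:n_reinforcers]
    if not earned:
        return None  # rat never earned a reinforcer; excluded from the analysis
    periods = [percent_of_baseline(0.0, earned[0])]
    periods += [percent_of_baseline(t, t + window_min) for t in earned]
    return periods

# Hypothetical rat: two reinforcers earned, baseline of 20 responses/min.
presses = [5.0, 10.0, 10.5, 11.0, 12.0, 13.5, 20.0, 20.5, 21.0]
rates = fine_grained_rates(presses, reinforcer_times=[10.0, 20.0], baseline_rate=20.0)
print([round(r, 1) for r in rates])  # → [1.0, 5.0, 2.5]
```

A rat that earns fewer than five reinforcers simply contributes fewer periods, which mirrors the rule that each per-reinforcer comparison included every rat that earned that reinforcer.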

Figure 4.

Responses per minute expressed as a percentage of responding during the last conditioning session for data collected before the first and after the first five response-reinforcer pairings during the first reacquisition session (R1) for Groups Ext, Prf, and Yoked in Experiment 3.

The leftmost part of Figure 4 shows that Group Prf exhibited more responding than Groups Ext and Yoked early in the session before any reinforcers were earned, a continuation of the pattern from response elimination. Thereafter, however, both Groups Prf and Yoked responded less than Group Ext in the 4-min periods that followed the first four out of five reinforcers. Thus, the PRF and Yoked procedures slowed the rate of responding after each response-reinforcer pairing as compared with Group Ext, which showed the fastest reacquisition.

A 3 (Response-Elimination Treatment) × 6 (Period) ANOVA on the periods of responding before any reinforcers were earned and after the first five reinforcers on the first reacquisition session confirmed a significant main effect of Period, F(5, 80) = 21.07, and the Response-Elimination Treatment × Period interaction, F(10, 80) = 2.24. The main effect of Response-Elimination Treatment failed to reach significance, F(2, 16) = 1.23. Pairwise comparisons revealed that Group Prf responded significantly less than Group Ext after reinforcers 3 and 4, Fs(1, 17) ≥ 4.64, and marginally so after reinforcer 1, F(1, 19) = 2.83, p = .10. The difference after reinforcers 2 and 5 was not significant, Fs ≤ 2.09. Although Group Yoked responded numerically less than Group Ext after each reinforcer, the differences failed to reach significance, Fs ≤ 1.99. Group Yoked responded similarly to Group Prf after each reinforcer, Fs < 1, and these groups were therefore combined and compared with Group Ext in order to assess the effect of occasional reinforcers on the rate of reacquisition. Groups Prf and Yoked (combined) responded significantly less than Group Ext after reinforcer 4, F(1, 18) = 5.04, and marginally so after reinforcer 3, F(1, 18) = 4.06, p = .059. The differences among the groups were not reliable after reinforcer 1, F(1, 20) = 2.85, p = .10, reinforcer 2, F(1, 18) = 2.54, p = .12, and reinforcer 5, F(1, 17) < 1. These findings suggest that the presence of a reinforcer during response elimination can slow the rate of reacquisition, but in the present method there was little difference between reinforcers earned on a PRF schedule or delivered at the same rate noncontingently.
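The degrees of freedom reported above follow the usual bookkeeping for a design with one between-subjects factor and one within-subjects factor. The sketch below is a hypothetical helper written for illustration; the subject count of 19 is inferred from the reported F(2, 16) rather than stated in the text.

```python
def mixed_anova_df(n_subjects, n_groups, n_periods):
    """Degrees of freedom (effect, error) for a mixed ANOVA with one
    between-subjects factor and one within-subjects factor."""
    between = n_groups - 1
    between_error = n_subjects - n_groups
    within = n_periods - 1
    return {
        "group": (between, between_error),
        "period": (within, within * between_error),
        "group_x_period": (between * within, within * between_error),
    }

# Fine-grained analysis: 3 groups x 6 periods; 19 rats is inferred from F(2, 16).
print(mixed_anova_df(n_subjects=19, n_groups=3, n_periods=6))
# → {'group': (2, 16), 'period': (5, 80), 'group_x_period': (10, 80)}

# Response elimination: 3 groups x 8 sessions with all 24 rats.
print(mixed_anova_df(n_subjects=24, n_groups=3, n_periods=8))
# → {'group': (2, 21), 'period': (7, 147), 'group_x_period': (14, 147)}
```

Both outputs agree with the F ratios reported for the corresponding analyses, which is consistent with 19 rats having earned all five reinforcers.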

Whole-session analysis

As in Experiment 2, the response-elimination treatment did not affect the rate of reacquisition when the response rates were averaged over the entire 60-min session. Responding on the first reacquisition session was 19.6, 19.3, and 14.8 percent of conditioned responding for Groups Ext, Prf, and Yoked, respectively. On the second reacquisition session, responding for the groups was 31.5, 33.1, and 30.2 percent. A 3 (Response-Elimination Treatment) × 3 (Session) ANOVA on data from the last response-elimination session and the two reacquisition sessions revealed a significant main effect of Session, F(2, 42) = 79.78, but the effect of Response-Elimination Treatment and the interaction failed to reach significance, Fs < 1. As shown by the finer-grained analysis, the failure to find slower reacquisition after PRF and Yoked than after Ext was again likely an artifact of aggregating data over the entire session.

Discussion

Similar to Experiment 2, the slowed reacquisition effect was not visible when data were aggregated over 60 min, but the finer-grained analysis again revealed that the PRF response-elimination procedure slowed reacquisition. The fact that the effect of the yoked procedure did not differ reliably from that of the PRF procedure may appear to contrast with previous research in classical conditioning which shows that an unpaired procedure was actually more effective than a PRF procedure at slowing rapid reacquisition of a magazine-entry response (Bouton et al., 2004, Experiment 2). However, the yoked procedure in this experiment involved noncontingent reinforcers, whereas the earlier research involved an explicitly unpaired procedure (technically, a negative contingency between the CS and US). The current yoked procedure could have allowed some adventitious response-reinforcer pairings if the rat was lever pressing when the noncontingent pellet was delivered. In the end, it is clear that occasional contingent or noncontingent reinforcers during response elimination can reduce the amount of responding in reacquisition. This is perhaps especially noteworthy for the PRF procedure in the sense that it deliberately maintains a response-reinforcer relation throughout the response elimination phase.

General Discussion

These experiments indicate that maintaining occasional reinforced responses during response elimination can slow reacquisition of an operant lever-press response compared to a traditional extinction procedure. The findings are consistent with earlier results in classical conditioning (e.g., Bouton et al., 2004) and also with the trial-signaling view (Ricker & Bouton, 1996). In the operant setting, analogous to the classical conditioning one, reinforced responses or merely reinforcers become part of the conditioning context. That context then changes during extinction when responses are not reinforced. Rapid reacquisition of operant behavior may then occur because it is a renewal effect created when reinforced responses or the reinforcer alone after extinction signal a return to conditioning (e.g., Bouton, 1993, 2002; see also Capaldi, 1967, 1994). Rapid reacquisition reveals that extinction does not destroy the original learning. Instead, some part of it is saved and can return depending on the context (e.g., Bouton, 1993; Kehoe & Macrae, 1997; Pavlov, 1927; Rescorla, 2001a).

The results of Experiments 2 and 3 suggest that the partial reinforcement procedure may mainly serve to reduce the animal’s reaction to early response-reinforcer pairings. Although slowed reacquisition was evident when the data were aggregated over whole sessions in Experiment 1, the result was only observed in responding after each response-reinforcer pairing in Experiments 2 and 3. As noted earlier, this result may be a consequence of the fact that the Prf and Ext groups entered reacquisition with different response rates. Because of that difference, the Ext groups received their first response-reinforcer pairing relatively late in the session, giving them less opportunity to accumulate high numbers of responses after response-reinforcer pairings. In contrast, the Prf groups received the first response-reinforcer pairing relatively early. Since reacquisition is by definition the return of responding after the response-reinforcer contingency is reintroduced, examining response rates after the first response-reinforcer pairing is a more sensitive way to detect the slowed reacquisition effect.

It is worth noting that the impact of the PRF response-elimination treatment was especially clear with the VI-8 min reacquisition schedule. The results suggest that the PRF procedure may be most effective at slowing reacquisition when reinforcers are distributed sparsely in time. This could be because the reinforcement schedule itself generates contextual cues. In the present method, a richer schedule in the reacquisition phase could have created contextual cues more similar to conditioning and therefore promoted a more robust renewal effect.

Bouton et al. (2004) provided a useful description of why a PRF or unpaired response-elimination procedure might be effective at slowing the rate of reacquisition. By including reinforced trials or responses or the reinforcer alone during response elimination, the PRF and unpaired procedures allow for more generalization from extinction to reacquisition. Thus, for example, slower reacquisition after partial reinforcement is a kind of “partial reinforcement reacquisition effect” with an explanation similar to that provided by sequential theory (Capaldi, 1967, 1994) for the “partial reinforcement extinction effect” (PREE). The PREE is the well-known effect in which the loss of responding during extinction is slow following training on a PRF schedule. According to sequential theory, the loss of responding is slower after training with partial than with continuous reinforcement because reinforcement after nonreinforced as well as reinforced trials allows for more generalization from conditioning to extinction (see Bouton et al., 2004). In the case of the unpaired procedure, presenting the US unpaired with the CS during the response-elimination phase allows the reinforcer to be associated with both conditioning and extinction and should therefore also reduce relapse. An analogous idea applies to a response-elimination procedure that includes noncontingent reinforcers.

Bouton, Woods, Moody, Sunsay, and García-Gutiérrez (2006) have reviewed the effectiveness of several different treatments used in the laboratory at reducing different types of relapse. The evidence reveals that extinction is highly ineffective at preventing relapse; the original behavior can readily return. A more effective way to prevent or reduce relapse involves providing a contextual “bridge” between extinction and testing, probably because the similar cues allow for more generalization between the phases. For example, presenting contextual cues during extinction and again later during the test serves to remind the subject of extinction and thereby reduce relapse (e.g., Brooks & Bouton, 1993, 1994). Likewise, a PRF response-elimination procedure may also be promising because it provides a stimulus that bridges extinction and the context of relapse.

Maintaining occasional reinforcers during response elimination is analogous to clinical procedures, such as controlled-drinking procedures, that allow the individual to earn an occasional reinforcer during treatment (e.g., Marlatt & Gordon, 1985; Sobell & Sobell, 1973, 1995). Extinction, on the other hand, is analogous to abstinence during which no drinks (reinforcers) are permitted. Evidence suggests that controlled-drinking procedures can, under some circumstances, yield lower relapse rates than strict abstinence (see Marlatt & Witkiewitz, 2002; Sitharthan et al., 1997). One way to describe this effect is that the user is taught that a slip is not a precursor to a full binge (Marlatt & Gordon, 1985). In other words, the user is no longer reinforced again for responding after the first reinforced response. Similarly, even though the current results might seem paradoxical, they suggest that maintaining occasional reinforcers might be more effective than extinction at preventing rapid reacquisition (relapse) under some circumstances. In particular, the results suggest that a PRF response-elimination procedure may be especially successful at affecting the immediate impact of the first several reinforcers after treatment. The PRF or a yoked procedure may therefore prevent a lapse from spiraling into a full-blown relapse of reinforcer-seeking behavior. Overall, the present results further emphasize the value of a learning-theory analysis of the development, elimination, and relapse of potentially harmful behavior. Theories of associative learning can help uncover mechanisms that potentially underlie relapse, and in the process discover ways to combat it.

Acknowledgments

This research was supported by Grant R01 MH 64847 from the National Institute of Mental Health. Send correspondence to either author at the Department of Psychology, University of Vermont, Burlington, VT 05405.

References

- Adams CD, Dickinson A. Instrumental responding following reinforcer devaluation. Quarterly Journal of Experimental Psychology B: Comparative and Physiological Psychology. 1981;33:109–121.

- Alessi SM, Badger GJ, Higgins ST. An experimental examination of the initial weeks of abstinence in cigarette smokers. Experimental & Clinical Psychopharmacology. 2004;12:276–287. doi: 10.1037/1064-1297.12.4.276.

- Baker AG. Contextual conditioning during free-operant extinction: Unsignaled, signaled, and backward-signaled noncontingent food. Animal Learning & Behavior. 1990;18:59–70.

- Bouton ME. Slow reacquisition following extinction of conditioned suppression. Learning & Motivation. 1986;17:1–15.

- Bouton ME. Context, time, and memory retrieval in the interference paradigms of Pavlovian learning. Psychological Bulletin. 1993;114:80–99. doi: 10.1037/0033-2909.114.1.80.

- Bouton ME. A learning-theory perspective on lapse, relapse, and the maintenance of behavior change. Health Psychology. 2000;19:57–63. doi: 10.1037/0278-6133.19.suppl1.57.

- Bouton ME. Context, ambiguity, and unlearning: Sources of relapse after behavioral extinction. Biological Psychiatry. 2002;52:976–986. doi: 10.1016/s0006-3223(02)01546-9.

- Bouton ME, King DA. Contextual control of the extinction of conditioned fear: Tests for the associative value of the context. Journal of Experimental Psychology: Animal Behavior Processes. 1983;9:248–265.

- Bouton ME, Rosengard C, Achenbach GG, Peck CA, Brooks DC. Effects of contextual conditioning and unconditional stimulus presentation on performance in appetitive conditioning. Quarterly Journal of Experimental Psychology: Comparative & Physiological Psychology. 1993;46(B):63–95.

- Bouton ME, Swartzentruber D. Slow reacquisition following extinction: Context, encoding, and retrieval mechanisms. Journal of Experimental Psychology: Animal Behavior Processes. 1989;15:43–53.

- Bouton ME, Swartzentruber D. Sources of relapse after extinction in Pavlovian and instrumental learning. Clinical Psychology Review. 1991;11:123–140.

- Bouton ME, Woods AM, Moody EW, Sunsay C, García-Gutiérrez A. Counteracting the context-dependence of extinction: Relapse and some tests of possible methods of relapse prevention. In: Craske MG, Hermans D, Vansteenwegen D, editors. Fear and learning: From basic processes to clinical implications. Washington, DC: American Psychological Association; 2006.

- Bouton ME, Woods AM, Pineño O. Occasional reinforced trials during extinction can slow the rate of rapid reacquisition. Learning & Motivation. 2004;35:371–390. doi: 10.1016/j.lmot.2006.07.003.

- Brooks DC, Bouton ME. A retrieval cue for extinction attenuates spontaneous recovery. Journal of Experimental Psychology: Animal Behavior Processes. 1993;19:77–89. doi: 10.1037//0097-7403.19.1.77.

- Brooks DC, Bouton ME. A retrieval cue for extinction attenuates response recovery (renewal) caused by a return to the conditioning context. Journal of Experimental Psychology: Animal Behavior Processes. 1994;20:366–379. doi: 10.1037//0097-7403.19.1.77.

- Bullock DH, Smith WC. An effect of repeated conditioning-extinction upon operant strength. Journal of Experimental Psychology. 1953;46:349–352. doi: 10.1037/h0054544.

- Capaldi EJ. A sequential hypothesis of instrumental learning. In: Spence KW, Spence JT, editors. The psychology of learning and motivation. New York: Academic Press; 1967. pp. 67–156.

- Capaldi EJ. The sequential view: From rapidly fading stimulus traces to the organization of memory and the abstract concept of number. Psychonomic Bulletin & Review. 1994;1:156–181. doi: 10.3758/BF03200771.

- Colwill RM. Associative representations of instrumental contingencies. In: Medin DL, editor. The psychology of learning and motivation: Advances in research and theory. Vol. 31. San Diego: Academic Press; 1994. pp. 1–72.

- Gibbs CM, Latham SB, Gormezano I. Classical conditioning of the rabbit nictitating membrane response: Effects of reinforcement schedule on response maintenance and resistance to extinction. Animal Learning & Behavior. 1978;6:209–215. doi: 10.3758/bf03209603.

- Hart JA, Bourne MJ, Schachtman TR. Slow reacquisition of a conditioned taste aversion. Animal Learning & Behavior. 1995;23:297–303.

- Heil SH, Alessi SM, Lussier JP, Badger GJ, Higgins ST. An experimental test of the influence of prior cigarette smoking abstinence on future abstinence. Nicotine & Tobacco Research. 2004;6:471–479. doi: 10.1080/14622200410001696619.

- Kehoe EJ. A layered network model of associative learning: Learning to learn and configuration. Psychological Review. 1988;95:411–433. doi: 10.1037/0033-295x.95.4.411.

- Kehoe EJ, Macrae M. Savings in animal learning: Implications for relapse and maintenance after therapy. Behavior Therapy. 1997;28:141–155.

- Macrae M, Kehoe EJ. Savings after extinction in conditioning of the rabbit’s nictitating membrane response. Psychobiology. 1999;27:85–94.

- Marlatt GA, Gordon JR. Relapse prevention: Maintenance strategies in the treatment of addictive behaviors. New York: Guilford Press; 1985.

- Marlatt GA, Witkiewitz K. Harm reduction approaches to alcohol use: Health promotion, prevention, and treatment. Addictive Behaviors. 2002;27:867–886. doi: 10.1016/s0306-4603(02)00294-0.

- Napier RM, Macrae M, Kehoe EJ. Rapid reacquisition in conditioning of the rabbit’s nictitating membrane response. Journal of Experimental Psychology: Animal Behavior Processes. 1992;18:182–192. doi: 10.1037//0097-7403.18.2.182.

- Pavlov IP. Conditioned reflexes. London: Oxford University Press; 1927.

- Pearce JM, Hall G. A model for Pavlovian learning: Variations in the effectiveness of conditioned but not of unconditioned stimuli. Psychological Review. 1980;87:532–552.

- Rescorla RA. A Pavlovian analysis of goal-directed behavior. American Psychologist. 1987;42:119–129.

- Rescorla RA. Experimental extinction. In: Mowrer RR, Klein SB, editors. Handbook of contemporary learning theories. Mahwah, NJ: Erlbaum; 2001a. pp. 119–154.

- Rescorla RA. Retraining of extinguished Pavlovian stimuli. Journal of Experimental Psychology: Animal Behavior Processes. 2001b;27:115–124.

- Rescorla RA, Skucy JC. Effect of response-independent reinforcers during extinction. Journal of Comparative & Physiological Psychology. 1969;67:381–389.

- Rescorla RA, Wagner AR. A theory of Pavlovian conditioning: Variations in the effectiveness of reinforcement and nonreinforcement. In: Black AH, Prokasy WF, editors. Classical conditioning II: Current research and theory. New York: Appleton-Century-Crofts; 1972. pp. 64–99.

- Ricker ST, Bouton ME. Reacquisition following extinction in appetitive conditioning. Animal Learning & Behavior. 1996;24:423–436.

- Sitharthan T, Sitharthan G, Hough MJ, Kavanagh DJ. Cue exposure in moderation drinking: A comparison with cognitive-behavior therapy. Journal of Consulting & Clinical Psychology. 1997;65:878–882. doi: 10.1037//0022-006x.65.5.878.

- Sobell MB, Sobell LC. Individualized behavior therapy for alcoholics. Behavior Therapy. 1973;4:49–72.

- Sobell MB, Sobell LC. Controlled drinking after 25 years: How important was the great debate? Addiction. 1995;90:1149–1153.

- Weidemann G, Kehoe EJ. Savings in classical conditioning in the rabbit as a function of extended extinction. Learning & Behavior. 2003;31:49–68. doi: 10.3758/bf03195970.