Abstract

Objective To assess the educational effectiveness of a handheld computer clinical decision support tool for learning evidence based medicine, compared with a pocket card containing guidelines and with a control.

Design Randomised controlled trial.

Setting University of Hong Kong, 2001.

Participants 169 fourth year medical students.

Main outcome measures Factor and individual item scores from a validated questionnaire on five key self reported measures: personal application and current use of evidence based medicine; future use of evidence based medicine; use of evidence during and after clerking patients; frequency of discussing the role of evidence during teaching rounds; and self perceived confidence in clinical decision making.

Results The handheld computer improved participants' educational experience with evidence based medicine the most, with significant improvements in all outcome scores. More modest improvements were found with the pocket card, whereas the control group showed no appreciable changes in any of the key outcomes. No significant deterioration was observed in the improvements even after withdrawal of the handheld computer during an eight week washout period, suggesting at least short term sustainability of effects.

Conclusions Rapid and convenient access to valid and relevant evidence on a portable computing device can improve learning in evidence based medicine, increase current and future use of evidence, and boost students' confidence in clinical decision making.

Introduction

Despite the widespread dissemination of evidence based medicine into curricula, much of what is known about the outcomes of teaching methods relies on observational data, mostly from before and after studies. Although evaluation of the quality of research evidence is a core competency of evidence based medicine, the quantity and quality of such evidence for teaching evidence based medicine are poor.1

Information available to clinicians at the point of care in an “evidence cart” increased the extent to which evidence was sought and incorporated into decisions on patient care.2 Such a cart would only be useful during team ward rounds, however, and could not be easily transplanted to ambulatory or other settings where most doctor-patient interactions occur. Handheld computers (personal digital assistants), containing concise summaries of evidence and decision making tools, can perform more functions than an evidence cart and hold promise in further promoting the practice and learning of evidence based medicine anywhere.3

We conducted a randomised controlled trial to test whether providing medical students with a handheld computer clinical decision support tool coupled with a brief teaching intervention could improve learning in evidence based medicine.

Participants and methods

All 169 fourth year undergraduates attending the University of Hong Kong consented to participate in the study during their senior clerkship in 2001. It was emphasised that participation was voluntary and would not affect academic records, and they were assured of anonymity and confidentiality.

The senior clerkship is organised into three teaching blocks: block A (internal medicine), block B (surgery), and block C (multidisciplinary, comprising microbiology, obstetrics and gynaecology, pathology, paediatrics, psychiatry, public health, and radiology). Students were assigned to start in one block and rotated through all three blocks for eight weeks each.

Assuming a medium effect size (f=0.25), α=0.05, and β=0.2 (80% power), and using one way analysis of variance to test for differences between three independent groups, the required sample size was 159, divided equally between the three intervention arms.4
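The sample size calculation above can be checked numerically. The sketch below is a minimal, standard-library-only reconstruction and not the authors' procedure. It assumes Cohen's convention that the noncentrality parameter is λ = f²N, where N is the total sample size, and it assumes a balanced design. The function names (`anova_power`, `required_n_per_group` and the helpers) are our own illustrative choices and are not part of any package. Power is computed as the tail of a noncentral F distribution, written as a Poisson-weighted mixture of regularised incomplete beta functions.

```python
import math


def _betacf(a, b, x, max_iter=1000, eps=1e-14):
    """Continued fraction for the incomplete beta function (modified Lentz)."""
    tiny = 1e-300
    qab, qap, qam = a + b, a + 1.0, a - 1.0
    c, d = 1.0, 1.0 - qab * x / qap
    d = 1.0 / (d if abs(d) > tiny else tiny)
    h = d
    for m in range(1, max_iter + 1):
        m2 = 2 * m
        # Even step, then odd step, of the continued fraction.
        for aa in (m * (b - m) * x / ((qam + m2) * (a + m2)),
                   -(a + m) * (qab + m) * x / ((a + m2) * (qap + m2))):
            d = 1.0 + aa * d
            d = 1.0 / (d if abs(d) > tiny else tiny)
            c = 1.0 + aa / c
            c = c if abs(c) > tiny else tiny
            h *= d * c
        if abs(d * c - 1.0) < eps:
            break
    return h


def reg_inc_beta(a, b, x):
    """Regularised incomplete beta function I_x(a, b)."""
    if x <= 0.0:
        return 0.0
    if x >= 1.0:
        return 1.0
    log_front = (math.lgamma(a + b) - math.lgamma(a) - math.lgamma(b)
                 + a * math.log(x) + b * math.log1p(-x))
    if x < (a + 1.0) / (a + b + 2.0):
        return math.exp(log_front) * _betacf(a, b, x) / a
    return 1.0 - math.exp(log_front) * _betacf(b, a, 1.0 - x) / b


def f_cdf(x, d1, d2):
    """CDF of the central F(d1, d2) distribution."""
    return reg_inc_beta(d1 / 2.0, d2 / 2.0, d1 * x / (d1 * x + d2))


def f_critical(alpha, d1, d2):
    """Upper-alpha critical value of F(d1, d2), found by bisection."""
    lo, hi = 0.0, 1.0
    while f_cdf(hi, d1, d2) < 1.0 - alpha:
        hi *= 2.0
    for _ in range(100):
        mid = (lo + hi) / 2.0
        if f_cdf(mid, d1, d2) < 1.0 - alpha:
            lo = mid
        else:
            hi = mid
    return hi


def ncf_sf(x, d1, d2, nc):
    """P(F' > x) for noncentral F: Poisson(nc/2) mixture of beta CDFs."""
    if nc <= 0:
        return 1.0 - f_cdf(x, d1, d2)
    y = d1 * x / (d1 * x + d2)
    half = nc / 2.0
    cdf, j = 0.0, 0
    while True:
        weight = math.exp(-half + j * math.log(half) - math.lgamma(j + 1))
        cdf += weight * reg_inc_beta(d1 / 2.0 + j, d2 / 2.0, y)
        if j > half and weight < 1e-15:
            break
        j += 1
    return 1.0 - cdf


def anova_power(n_per_group, k_groups=3, effect_f=0.25, alpha=0.05):
    """Power of a balanced one way ANOVA, assuming lambda = f**2 * N."""
    n_total = n_per_group * k_groups
    d1, d2 = k_groups - 1, n_total - k_groups
    crit = f_critical(alpha, d1, d2)
    return ncf_sf(crit, d1, d2, effect_f ** 2 * n_total)


def required_n_per_group(target_power=0.80, **kwargs):
    """Smallest balanced group size whose power reaches target_power."""
    n = 2
    while anova_power(n, **kwargs) < target_power:
        n += 1
    return n


if __name__ == "__main__":
    n = required_n_per_group()
    print(n, 3 * n, round(anova_power(n), 3))
```

When run as a script, it prints the per-group size, the total and the achieved power. With the stated inputs, we would expect roughly 53 per group (159 in total), in line with the figure quoted above.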

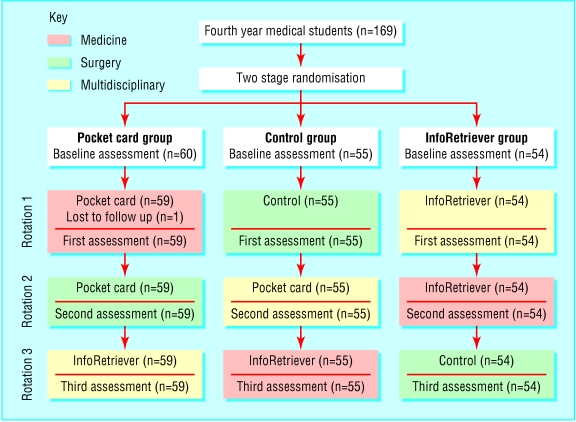

The students were randomised in two stages. Firstly, they were divided into three groups of about equal size using random numbers generated by computer at the faculty's Medical Education Unit, independently from the research team. Secondly, the groups were randomly allocated to start the clerkship in one of the three teaching blocks (groups A to C). Assignment and randomisation were concealed from the students and investigators.
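The two-stage allocation can be sketched as follows. This is a hypothetical illustration and not the Medical Education Unit's procedure. It rests on three assumptions:

- Python's `random.Random` stands in for the computer random number generator.
- Stage 1 uses a shuffle-and-deal rule, so the group sizes differ by at most one.
- Stage 2 matches the groups to starting blocks at random.

The arm sizes in table 1 are less evenly balanced than this rule would produce, so the actual procedure evidently differed in detail.

```python
import random


def two_stage_randomisation(student_ids, blocks=("A", "B", "C"), seed=None):
    """Hypothetical two-stage allocation.

    Stage 1: shuffle the students and deal them into len(blocks) groups.
    Stage 2: randomly match the groups to their starting teaching blocks.
    """
    rng = random.Random(seed)
    ids = list(student_ids)
    rng.shuffle(ids)
    k = len(blocks)
    groups = [ids[i::k] for i in range(k)]  # sizes differ by at most one
    starting_blocks = list(blocks)
    rng.shuffle(starting_blocks)
    return {block: sorted(group) for block, group in zip(starting_blocks, groups)}


allocation = two_stage_randomisation(range(169), seed=2001)
print({block: len(group) for block, group in sorted(allocation.items())})
```

Seeding the generator makes the allocation reproducible for audit. Concealment from students and investigators is a matter of who runs the script and holds the output, not of the code itself.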

Educational interventions

We used a crossover design with three intervention arms:

- InfoRetriever software (InfoPOEMs; Charlottesville, VA) loaded onto a personal digital assistant;
- a pocket card containing guidelines on clinical decisions;
- a control arm.

InfoRetriever is designed for rapid access to relevant, current, best medical evidence at the point of care. It contains seven evidence databases (including abstracts from the Cochrane Systematic Reviews Database and patient oriented evidence that matters), clinical decision rules and practice guidelines, risk calculators, and basic information on drugs.5 This software and a digital version of the pocket card were loaded onto a personal digital assistant (iPAQ 3630, 2001 release; Compaq, Hong Kong).

The pocket card was similar in format to a pharmaceutical pocket card and contained information such as the evidence based decision making cycle, levels and sources of evidence, and abbreviated guidelines on appraising the relevance and validity of articles about diagnostic tests, prognosis, treatment, and practice guidelines.6,7 The card was designed to remind and prompt students to apply evidence based medicine techniques in their clinical learning.

Each active intervention (InfoRetriever and pocket card) was accompanied by two interactive sessions of two hours each. The same clinician conducted these sessions in groups of about 20 students.

During the first session, the principles and practice of evidence based medicine were revisited and reinforced; the students had already received 20 hours of teaching in evidence based medicine during the first three years of the curriculum. The students were given specific guidance on how evidence based medicine techniques could and should be used in the clinical setting. The clinician then demonstrated, using a simulated patient case, how to apply the pocket card (in conjunction with traditional library facilities such as Medline searches) or InfoRetriever.

For the second session, the students applied the same techniques to a patient they had recently clerked: identifying an answerable clinical question, searching for and appraising the evidence, and applying the newly acquired information to clinical decisions. They shared their findings and experience with the rest of the group, and interactive discussion, led by the clinician, was encouraged.

For the group that was assigned the InfoRetriever intervention for rotations 1 and 2, this active intervention was withdrawn during rotation 3, the washout period.

Measurement of outcomes

We defined a priori five key outcomes most relevant to the learning needs and objectives of the students, assessed by self report through a locally validated, standardised questionnaire.8 The items gauged respondents' current and future behavioural outcomes, such as personal application and current use of evidence based medicine, future use of evidence based medicine, self reported actual use of evidence in clinical learning, and self perceived confidence in clinical decision making. These outcomes were measured by two summary factor scores and three individual item scores derived from the questionnaire (see table A on bmj.com). We excluded knowledge and attitudinal items because these have often been criticised as unreliable: knowledge items should be tested in an objective examination format, and attitudinal items rarely translate into actual behaviour.1

The students completed the questionnaire at baseline and after rotation through each of the teaching blocks. Response rates were 100% throughout. Responses were anonymous and were linked to individual respondents through a unique identifier; only independent research assistants were able to match identifiers to students. Investigators had access only to aggregate results and were blinded to data at the individual level.

Statistical analysis

We tested baseline equivalence of the three study arms using analysis of variance for continuous variables and χ2 tests for categorical variables. Effects of the educational interventions in each arm were assessed on an intention to treat basis by changes in mean scores for the five outcomes using analysis of covariance.9,10 We also used paired t tests to look for changes in mean scores within groups as the same groups of students progressed through different interventions longitudinally. All analyses were performed with SPSS version 10.
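Analysis of covariance adjusts follow-up scores for baseline rather than analysing raw change scores, the approach recommended by Vickers and Altman.9 The sketch below is a minimal two-arm illustration of that adjustment, using the common-slope ANCOVA estimate (the pooled within-group regression slope of follow-up on baseline). It makes no claim to reproduce the trial's three-arm SPSS models. The function names and the toy numbers are hypothetical and are not trial data.

```python
from statistics import fmean


def _centred_sums(pairs):
    """Means and centred sums of products for (baseline, follow_up) pairs."""
    xs = [x for x, _ in pairs]
    ys = [y for _, y in pairs]
    mx, my = fmean(xs), fmean(ys)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    return mx, my, sxy, sxx


def ancova_difference(group_1, group_2):
    """Baseline-adjusted difference in follow-up means (group_1 - group_2)."""
    mx1, my1, sxy1, sxx1 = _centred_sums(group_1)
    mx2, my2, sxy2, sxx2 = _centred_sums(group_2)
    slope = (sxy1 + sxy2) / (sxx1 + sxx2)  # pooled within-group slope
    return (my1 - my2) - slope * (mx1 - mx2)


def change_score_difference(group_1, group_2):
    """Unadjusted difference in mean change (follow-up minus baseline)."""
    return (fmean(y - x for x, y in group_1)
            - fmean(y - x for x, y in group_2))


# Toy data with a baseline imbalance between the two groups.
toy_1 = [(0, 1), (2, 2)]
toy_2 = [(1, 0), (3, 1)]
print(ancova_difference(toy_1, toy_2))        # 1.5
print(change_score_difference(toy_1, toy_2))  # 2.0
```

With baseline imbalance and a within-group slope below 1, the two estimates diverge. This is why baseline imbalances in the item scores (table 1) matter for the choice of analysis.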

Results

The figure shows the progress of students through the trial. One student initially randomised to block A withdrew from medical school and therefore dropped out of the study during the first rotation.

Figure 1. Study organisation

The characteristics of the students at baseline were similar between intervention arms for age, sex, educational background, and examination scores by year 3 (table 1). Imbalances were, however, evident in the three item scores, which we accounted for by using analysis of covariance.9,4

Table 1.

Characteristics of students at baseline in two active intervention arms (InfoRetriever and pocket card) and control arm. Values are numbers (percentages) of students unless stated otherwise

| Characteristic | Pocket card group (n=59) | Control group (n=55) | InfoRetriever group (n=54) |
|---|---|---|---|
| Mean (SD) age (years) | 22.4 (1.1) | 22.3 (0.7) | 22.5 (1.3) |
| Women | 25 (42) | 25 (45) | 20 (37) |
| Educational background: | | | |
| Local secondary school | 44 (75) | 46 (84) | 45 (83) |
| Local international or overseas secondary school | 11 (19) | 8 (15) | 6 (11) |
| University | 4 (7) | 1 (2) | 3 (6) |
| Mean (SD) examination scores by year 3 | 68.1 (3.7) | 69.7 (4.1) | 68.8 (3.6) |
| Mean (SD) factor scores: | | | |
| Personal application and current use of evidence based medicine | 2.2 (0.5) | 2.4 (0.5) | 2.3 (0.6) |
| Future use of evidence based medicine | 3.9 (0.6) | 3.7 (0.6) | 3.8 (0.7) |
| Individual item scores: | | | |
| Frequency of looking up evidence* | 1.2 (1.2) | 1.1 (1.0) | 0.7 (0.8) |
| Frequency of raising role of current best evidence† | 2.6 (1.0) | 2.9 (1.0) | 2.5 (0.8) |
| Confidence in clinical decision making‡ | 2.8 (0.8) | 2.9 (0.7) | 2.6 (0.8) |

*On average, how often do you look up evidence during or after clerking each patient on the ward or in the clinic?

†How frequently have you raised the role of current best evidence during teaching rounds or bedside teaching?

‡How much confidence do you have in your clinical decision making?

Table 2 shows changes in mean scores from baseline to the end of the first rotation. Both the InfoRetriever and pocket card groups showed improvements in scores for personal application and current use of evidence based medicine. Both groups also reported raising the role of evidence more often during clinical teaching rounds, with a significant dose-response gradient in relation to the intensity of the intervention (P for linear trend 0.001). Only the InfoRetriever group showed significant gains in self reported use of evidence while clerking patients and in self perceived confidence in clinical decision making. The control group showed no statistically significant changes in any of the outcome measures.
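The dose-response claim can be roughly cross-checked against the first-rotation changes reported in table 2. The sketch below is our own approximation, not the authors' test. It makes three assumptions:

- Each standard error is back-calculated from its 95% confidence interval as width / (2 × 1.96).
- Linear contrast weights of −1, 0 and +1 are applied to the control, pocket card and InfoRetriever arms.
- The arms are independent and the contrast is approximately normally distributed.

Because it uses unadjusted changes rather than the ANCOVA models, it should only give a P value of the same order as the published one.

```python
import math


def se_from_ci(lower, upper, z=1.96):
    """Approximate standard error from a symmetric 95% confidence interval."""
    return (upper - lower) / (2 * z)


def linear_trend(arms, weights=(-1, 0, 1)):
    """Linear contrast across independent arms ordered by intensity.

    arms: sequence of (mean_change, ci_lower, ci_upper) tuples.
    Returns (contrast, z, two-sided P) under a normal approximation.
    """
    contrast = sum(w * mean for w, (mean, _, _) in zip(weights, arms))
    variance = sum((w * se_from_ci(lo, hi)) ** 2
                   for w, (_, lo, hi) in zip(weights, arms))
    z = contrast / math.sqrt(variance)
    p = math.erfc(abs(z) / math.sqrt(2))  # two-sided normal P value
    return contrast, z, p


# Table 2, "frequency of raising role of current best evidence",
# ordered control -> pocket card -> InfoRetriever.
raising_role = [(0.15, -0.16, 0.45), (0.32, 0.04, 0.60), (0.87, 0.56, 1.18)]
contrast, z, p = linear_trend(raising_role)
print(f"contrast={contrast:.2f}, z={z:.2f}, P={p:.4f}")
```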

Table 2.

Changes in mean scores from baseline to first assessment in three intervention arms. Values are mean differences (95% confidence intervals) unless stated otherwise

| Scores | Pocket card group | Control group | InfoRetriever group | P value: between groups overall | P value: group A v B | P value: group B v C | P value: group A v C | P value for linear trend‡ |
|---|---|---|---|---|---|---|---|---|
| Factor scores: | | | | | | | | |
| Personal application and current use of evidence based medicine | 0.47 (0.31 to 0.63) | −0.07 (−0.25 to 0.10) | 0.45 (0.29 to 0.61) | <0.001 | <0.001*** | <0.001*** | 0.84 | <0.001 |
| Future use of evidence based medicine | 0.01 (−0.15 to 0.17) | −0.01 (−0.17 to 0.14) | 0.03 (−0.15 to 0.22) | 0.47 | NA | NA | NA | NA |
| Individual item scores§: | | | | | | | | |
| Frequency of looking up evidence | −0.26 (−0.59 to 0.08) | −0.25 (−0.52 to 0.03) | 0.56 (0.25 to 0.86) | 0.012 | 0.70 | 0.0039* | 0.032 | <0.001 |
| Frequency of raising role of current best evidence | 0.32 (0.04 to 0.60) | 0.15 (−0.16 to 0.45) | 0.87 (0.56 to 1.18) | 0.024 | 0.87 | 0.036 | 0.015* | 0.001 |
| Confidence in clinical decision making | 0.09 (−0.13 to 0.30) | 0.04 (−0.21 to 0.28) | 0.30 (0.06 to 0.55) | 0.60 | NA | NA | NA | NA |

Levels of significance after Bonferroni correction: *<0.017; **<0.003; ***<0.0003.

NA=not available (P>0.05 in between group comparison overall).

Group A=pocket card group; group B=control group; group C=InfoRetriever group.

‡Control group v pocket card group v InfoRetriever group during first rotation.

§Full questions in footnote to table 1.
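The significance thresholds in the footnotes above match conventional levels of 0.05, 0.01 and 0.001 divided by three pairwise comparisons (0.0167, 0.0033 and 0.00033, shown rounded). The sketch below assumes that is how the thresholds were derived; the function name is our own. It writes the marking rule out explicitly using the unrounded thresholds, so a P value just above a rounded cut-off (for example, 0.0032) is still marked.

```python
def bonferroni_marker(p, comparisons=3, levels=(0.05, 0.01, 0.001)):
    """One star for each conventional level that p clears after dividing
    that level by the number of pairwise comparisons (Bonferroni)."""
    return "*" * sum(p < level / comparisons for level in levels)


for p in (0.036, 0.015, 0.0039, 0.001, 0.0001):
    print(p, bonferroni_marker(p) or "(none)")
# 0.036 (none)
# 0.015 *
# 0.0039 *
# 0.001 **
# 0.0001 ***
```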

During the second rotation, the InfoRetriever and pocket card groups continued with their interventions, whereas the control group received the pocket card. Changes in mean scores from baseline to the second assessment followed a similar pattern (table 3). The control group showed improvements in both factor scores, representing current and future use of evidence based medicine. The InfoRetriever group scored slightly higher than at the first assessment in all five outcomes, whereas the pocket card group's scores were not appreciably different from those in the previous rotation.

Table 3.

Changes in mean scores from baseline to second assessment. Values are mean differences (95% confidence intervals) unless stated otherwise

| Scores | Pocket card group | Control group | InfoRetriever group | P value: between groups overall | P value: pocket card v control | P value: control v InfoRetriever | P value: pocket card v InfoRetriever |
|---|---|---|---|---|---|---|---|
| Factor scores: | | | | | | | |
| Personal application and current use of evidence based medicine | 0.38 (0.21 to 0.55) | 0.20 (0.03 to 0.36) | 0.59 (0.44 to 0.74) | 0.004 | 0.52 | 0.001** | 0.022 |
| Future use of evidence based medicine | 0.16 (0.03 to 0.29) | 0.20 (0.03 to 0.36) | 0.29 (0.12 to 0.45) | 0.30 | NA | NA | NA |
| Individual item scores‡: | | | | | | | |
| Frequency of looking up evidence | −0.36 (−0.67 to −0.05) | −0.18 (−0.38 to 0.01) | 0.57 (0.28 to 0.86) | <0.001 | 0.67 | <0.001** | 0.002** |
| Frequency of raising role of current best evidence | 0.51 (0.22 to 0.80) | 0.16 (−0.13 to 0.46) | 0.93 (0.67 to 1.18) | 0.027 | 0.45 | 0.008* | 0.058 |
| Confidence in clinical decision making | 0.29 (0.06 to 0.52) | 0 (−0.24 to 0.24) | 0.57 (0.32 to 0.83) | 0.095 | NA | NA | NA |

Significance after Bonferroni correction: *<0.017; **<0.003; ***<0.0003.

NA=not available (P>0.05 in between group comparison overall).

‡Full questions in footnote to table 1.

To exclude confounding by teaching block (medicine, surgery, and multidisciplinary), we performed a sensitivity analysis. It compared changes in mean scores for the five outcomes between two groups:

- the control group (second assessment minus baseline, where the first rotation was control);
- the InfoRetriever group (first assessment minus baseline).

The superior effects of InfoRetriever were evident for four of the five outcomes. The lack of benefit for future use of evidence based medicine is also consistent with the main analyses (see tables 2 and 3 and table B on bmj.com).

To better study the effect of progressing from control to pocket card, we pooled the results of the control group (first to second assessment) and the pocket card group (baseline to first assessment; table 4). Both factor scores showed significant improvements. The mean score for frequency of raising the role of evidence during bedside rounds achieved only borderline significance. The remaining two outcome measures did not change significantly. The changes in both groups from the second to the third assessment were similarly combined to reflect the effect of progressing from pocket card to InfoRetriever. Both factor scores showed further increases, and all three individual item scores showed significant improvements.
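The pooling in table 4 depends on which group changed intervention between which assessments. The sketch below encodes the crossover schedule as we read it from the text:

- The InfoRetriever and pocket card groups keep their interventions for rotations 1 and 2.
- The control group receives the pocket card in rotation 2.
- The control and pocket card groups both receive InfoRetriever in rotation 3.
- The InfoRetriever group enters washout in rotation 3.

The period before baseline is treated as control. The `SCHEDULE` structure and the `transitions` helper are our own illustration, not the authors' code. Listing the transitions recovers the group and interval pairs named in the footnotes to table 4.

```python
ASSESSMENTS = ("baseline", "first", "second", "third")

# arms[i] is the intervention received in the interval ending at
# ASSESSMENTS[i]; arms[0] is the period before baseline (treated as control).
SCHEDULE = {
    "pocket card group": ("control", "pocket card", "pocket card", "InfoRetriever"),
    "control group": ("control", "control", "pocket card", "InfoRetriever"),
    "InfoRetriever group": ("control", "InfoRetriever", "InfoRetriever", "washout"),
}


def transitions(schedule, start, end):
    """List (group, from_assessment, to_assessment) for every interval in
    which a group moved from intervention `start` to intervention `end`."""
    hits = []
    for group, arms in schedule.items():
        for i in range(len(arms) - 1):
            if arms[i] == start and arms[i + 1] == end:
                hits.append((group, ASSESSMENTS[i], ASSESSMENTS[i + 1]))
    return hits


for start, end in [("control", "pocket card"), ("pocket card", "InfoRetriever")]:
    print(f"{start} -> {end}:", transitions(SCHEDULE, start, end))
```

Keeping the schedule as data makes it easy to check that every pooled contrast draws on intervals with the same before-and-after interventions.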

Table 4.

Pooled effects of the progression from control to pocket card and from pocket card to InfoRetriever. Values are mean differences (95% confidence intervals) unless stated otherwise

| Scores | Control to pocket card* | P value | Pocket card to InfoRetriever† | P value |
|---|---|---|---|---|
| Factor scores: | | | | |
| Personal application and current use of evidence based medicine | 0.38 (0.26 to 0.49) | <0.001 | 0.19 (0.08 to 0.30) | <0.001 |
| Future use of evidence based medicine | 0.12 (0.01 to 0.24) | 0.04 | 0.12 (0.03 to 0.21) | 0.007 |
| Individual item scores‡: | | | | |
| Frequency of looking up evidence | −0.12 (−0.32 to 0.09) | 0.25 | 0.48 (0.22 to 0.74) | <0.001 |
| Frequency of raising role of current best evidence | 0.18 (−0.03 to 0.38) | 0.086 | 0.32 (0.13 to 0.51) | 0.001 |
| Confidence in clinical decision making | 0.03 (−0.13 to 0.18) | 0.73 | 0.19 (0.04 to 0.33) | 0.011 |

*Pocket card group from baseline to first assessment combined with control group from first to second assessment.

†Pocket card and control groups from second to third assessment.

‡Full questions in footnote to table 1.

We also needed to exclude confounding by period effects, that is, the possibility that increasing experience rather than the educational intervention was responsible for progression. To do so, we carried out a sensitivity analysis comparing changes in mean outcome scores between the InfoRetriever group (first assessment minus baseline) and the pocket card group (third assessment minus baseline). Both groups received the InfoRetriever intervention for one block, during the multidisciplinary teaching block. This was the first rotation for the InfoRetriever group and the third rotation for the pocket card group.

Period effects did not appreciably or significantly influence four of the five outcomes; that is, the effect of InfoRetriever did not change with time as students matured and progressed through the year (see table C on bmj.com). One possible remaining confounder is that the pocket card group had already received the pocket card intervention for two blocks, plus an additional tutorial briefing of two sessions of two hours each. This could have accounted for the inconsistency in the higher factor scores.

The InfoRetriever group showed improvements in all five outcomes, and these gains continued to trend upward over time in three outcomes (table 5). During the washout period there was no significant deterioration in any outcome measure. This suggests that the effect persisted, at least in the short term, after the personal digital assistant was withdrawn.

Table 5.

Changes in mean scores for InfoRetriever group from baseline to final assessment. Values are mean differences (95% confidence intervals) unless stated otherwise

| Scores | Baseline to first assessment (InfoRetriever) | P value | First to second assessment (InfoRetriever) | P value | Second to third assessment (control washout period) | P value |
|---|---|---|---|---|---|---|
| Factor scores: | | | | | | |
| Personal application and current use of evidence based medicine | 0.45 (0.29 to 0.61) | <0.001*** | 0.14 (−0.01 to 0.29) | 0.07 | −0.10 (−0.37 to 0.18) | 0.48 |
| Future use of evidence based medicine | 0.03 (−0.15 to 0.22) | 0.72 | 0.25 (0.10 to 0.40) | 0.001* | 0.01 (−0.18 to 0.20) | 0.92 |
| Individual item scores†: | | | | | | |
| Frequency of looking up evidence | 0.56 (0.25 to 0.86) | <0.001** | 0.01 (−0.37 to 0.39) | 0.96 | −0.20 (−0.60 to 0.20) | 0.33 |
| Frequency of raising role of current best evidence | 0.87 (0.56 to 1.18) | <0.001*** | 0.06 (−0.25 to 0.36) | 0.72 | 0.19 (−0.14 to 0.52) | 0.25 |
| Confidence in clinical decision making | 0.30 (0.06 to 0.55) | 0.017* | 0.26 (0.08 to 0.45) | 0.007** | 0.04 (−0.22 to 0.30) | 0.74 |

Levels of significance after Bonferroni correction: *<0.017; **<0.003; ***<0.0003.

†Full questions in footnote to table 1.
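The stepwise changes in table 5 can be accumulated to gauge the net gain from baseline to the end of the washout period. The sketch below uses only the published table 5 values. It assumes that the same students contribute to every assessment, so mean changes over consecutive intervals add up. Confidence intervals cannot be summed in the same way, because the intervals share assessments. The structure name and helper are our own. As a consistency check, the baseline-to-second sums (0.59, 0.28, 0.57, 0.93 and 0.56) agree with the InfoRetriever column of table 3 to within 0.01.

```python
# Table 5, InfoRetriever group: (mean difference, lower 95% CI, upper 95% CI)
# for baseline->first and first->second (InfoRetriever), then second->third
# (washout after the device was withdrawn).
TABLE_5 = {
    "Personal application and current use":
        [(0.45, 0.29, 0.61), (0.14, -0.01, 0.29), (-0.10, -0.37, 0.18)],
    "Future use":
        [(0.03, -0.15, 0.22), (0.25, 0.10, 0.40), (0.01, -0.18, 0.20)],
    "Frequency of looking up evidence":
        [(0.56, 0.25, 0.86), (0.01, -0.37, 0.39), (-0.20, -0.60, 0.20)],
    "Frequency of raising role of current best evidence":
        [(0.87, 0.56, 1.18), (0.06, -0.25, 0.36), (0.19, -0.14, 0.52)],
    "Confidence in clinical decision making":
        [(0.30, 0.06, 0.55), (0.26, 0.08, 0.45), (0.04, -0.22, 0.30)],
}


def summarise(table):
    """Net mean change from baseline to third assessment, and whether the
    washout interval's 95% CI includes zero (no significant deterioration)."""
    summary = {}
    for outcome, steps in table.items():
        net = round(sum(mean for mean, _, _ in steps), 2)
        _, lo, hi = steps[-1]
        summary[outcome] = (net, lo <= 0.0 <= hi)
    return summary


for outcome, (net, spans_zero) in summarise(TABLE_5).items():
    print(f"{outcome}: net change {net:+.2f}; washout CI includes 0: {spans_zero}")
```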

Discussion

Providing students with a decision support tool on a personal digital assistant has the potential to improve their educational experience with evidence based medicine. Furthermore, this improvement was in addition to that achieved by the pocket card, suggesting that neither the pocket card itself nor the two accompanying teaching sessions could wholly account for the observed gains. Although the results preclude the assessment of the long term effects of InfoRetriever, there did not seem to be any significant deterioration in the improvements attained after withdrawal of the personal digital assistant during an eight week washout period, suggesting short term sustainability of effects. We caution, however, that the potential for confounding by teaching block, intervention timing, and progression, as well as the effect of student experience and maturity, should be considered in interpreting the findings. Sensitivity analyses did, however, support our hypothesis of the educational effectiveness of InfoRetriever (see tables B and C on bmj.com).

To our knowledge this study is the first to evaluate, through a randomised trial design, the educational effectiveness of a handheld clinical decision support tool as measured by five predetermined self reported outcomes. It is also one of only a few trials on evidence based medicine learning in the undergraduate setting, the traditional focus being on postgraduate residency programmes.10,11

Improving access to the medical literature through a personal digital assistant at the point of care yielded considerable positive changes in students' self reported actual use of evidence while clerking patients and seemed to boost their confidence in clinical decision making generally. These findings were consistent with a study using an evidence cart during ward rounds.2 Evidence from other studies suggests that improved patient outcomes may result from this evidence based approach.12-14

In addition, ready access to current best evidence prompted the students with personal digital assistants in our study to raise the role of evidence during ward rounds or bedside teaching more often, as ascertained through self reports. Such activity promotes active learning, which is crucial to achieve deep learning and retained knowledge and is a cornerstone of adult learning theory.15 These attributes are hallmarks of a modern medical curriculum that have been incorporated into many reformed curricula internationally.

Those students who were assigned the InfoRetriever intervention reported that they were more likely to be willing to adopt evidence based medicine techniques in their future clinical practice. This is important given the emphasis on lifelong learning and continuous professional development recognised and espoused by most medical organisations worldwide.

The prerequisites for a valid evaluation of educational interventions have been previously outlined.16 They include a rigorous study design with control groups, standardised and validated instruments for measuring outcomes, and high participation and response rates.16 Our study satisfies all three core criteria, thereby ensuring the internal validity of our findings and conclusions.

Limitations of study

Several caveats bear mention. Firstly, we had no objective or evaluative outcome measures: all five outcomes were self reported behaviour and future intentions. Also, the mean score differences between groups did not give a clear indication of the absolute magnitude of benefit, although standard outcome measures for educating undergraduates in evidence based medicine are limited. Patient related outcomes are not directly applicable to junior clinical clerks. Commonly adopted tests of ability in evidence based medicine, such as critical appraisal skills, may be no better proxies for the desired educational benefits.8 In the absence of better alternatives, we nevertheless measured the changes resulting from the educational interventions with a locally validated, standardised questionnaire, to ensure consistency and applicability. In routine practice more generally, the impact of educational initiatives in evidence based medicine can be measured through standardised clinical audits. These can use a before and after design, or a crossover randomised design similar to ours, to estimate the proportion of interventions that are grounded in current best evidence.17

Secondly, our study design was complex and the duration of intervention was relatively brief in the overall context of the medical curriculum. These pitfalls are common in medical education research, where schools are often reluctant to allow students to be selectively exposed to “unproven” new interventions; we therefore adopted a complicated crossover design. In addition, medical students in their clinical years rotate rapidly through a series of teaching blocks, and it is often difficult to arrange the logistics needed to undertake a trial. The window for intervention and measurement is therefore often shorter than optimal.

Thirdly, cross contamination of interventions between study arms is a potential limitation in educational trials of this kind.15,16 Such contamination would, however, only dilute the observed effects, so our findings might have underestimated the true magnitude of benefit. Fourthly, differences in the core content and timing of the three teaching blocks could have influenced part of the observations, because a particular block might have offered more or fewer opportunities for learning evidence based medicine. The crossover design would likely have buffered some of these effects. Future research could examine this issue further by running multiple trials of an intervention at different study sites or by replication.

Finally, it is difficult to predict the generalisability of our findings; whether other schools with different institutional arrangements and student bodies would achieve similar improvements with InfoRetriever is unknown.16,18 We therefore repeat the calls for collaborative, multicentre efforts in this kind of educational research in evidence based medicine. Such efforts would broaden the representativeness of study results and enhance the statistical power of trials to detect subtle changes in relevant outcomes.8

What is already known on this topic

Information in an evidence cart at the point of care increased the extent to which evidence was sought and incorporated into decisions about patient care

The potential benefits of portable clinical decision tools to support students learning evidence based medicine are unknown

What this study adds

Rapid access to evidence on a portable computing device can improve learning of evidence based medicine in medical students

Other benefits are increased current and future use of evidence and more confidence in clinical decision making

Supplementary Material

Additional tables appear on bmj.com


We thank Compaq Computers (Hong Kong) and InfoPOEMs for technical support; Dorothy Lam, Jacqueline Wong, and Matthew Tse for informatics support and assistance with data collection; Marie Chi for secretarial assistance in the preparation of the manuscript; the Undergraduate Education Committee of the Faculty of Medicine; and the medical students who participated in the trial.

Contributors: GML designed the study in consultation with THL and obtained funding for the project. GML, JMJ, KYKT, and WWTL were responsible for implementing the protocol. KYKT and WWTL collected and collated all data items. JMJ, GML, IOLW, KYKT, and LMH analysed the data. JMJ wrote the initial draft of the manuscript, and all authors contributed to the revision of subsequent drafts and approved the final version. GML will act as guarantor for the paper.

Funding: The University of Hong Kong provided a teaching development grant; Compaq Computers (Hong Kong) supplied 140 iPAQ pocket PC computers; and InfoPOEMs provided a training site licence for the InfoRetriever software at a discounted rate. The guarantor accepts full responsibility for the conduct of the study, had access to the data, and controlled the decision to publish.

Competing interests: None declared.

Ethical approval: Ethical approval for the study was granted by the Faculty of Medicine Research Ethics Committee, University of Hong Kong.

References

1. Hatala R, Guyatt G. Evaluating the teaching of evidence-based medicine. JAMA 2002;288:1110-2.

2. Sackett DL, Straus SE. Finding and applying evidence during clinical rounds: the “evidence cart.” JAMA 1998;280:1336-8.

3. Ebell M, Rovner D. Information in the palm of your hand. J Fam Pract 2000;49:243-51.

4. Cohen J. Statistical power analysis for the behavioral sciences, 2nd ed. Hillsdale, NJ: Erlbaum, 1988.

5. InfoPOEMs website. www.InfoPOEMs.com/ (accessed 24 Nov, 2002).

6. Leung GM. Evidence-based practice revisited. Asia Pac J Public Health 2001;13:116-21.

7. Information Mastery Working Group. Course materials from the information mastery workshop organised by the University of Virginia, Charlottesville (4-7 Apr, 2001).

8. Johnston JM, Leung GM, Fielding R, Tin KYK, Ho LM. The development and validation of a knowledge, attitude, and behaviour questionnaire to assess undergraduate evidence-based practice teaching and learning. Med Educ (in press).

9. Vickers AJ, Altman DG. Analysing controlled trials with baseline and follow up measurements. BMJ 2001;323:1123-4.

10. Bennett KJ, Sackett DL, Haynes RB, Neufeld VR, Tugwell P, Roberts R. A controlled trial of teaching critical appraisal of the clinical literature to medical students. JAMA 1987;257:2451-4.

11. Green ML. Evidence-based medicine training in graduate medical education: past, present and future. J Eval Clin Pract 2000;6:121-38.

12. Marshall JG. The impact of the hospital library on clinical decision making: the Rochester study. Bull Med Libr Assoc 1992;80:169-78.

13. Haynes RB, McKibbon A, Walker CJ, Ryan N, Fitzgerald D, Ramsden MF. Online access to MEDLINE in clinical settings. Ann Intern Med 1990;112:78-84.

14. Langdorf MI, Koenig KL, Brault A, Bradman S, Bearie BJ. Computerized literature research in the ED: patient care impact. Acad Emerg Med 1995;2:157-9.

15. Ghali WA, Saitz R, Eskew AH, Gupta M, Quan H, Hershman WY. Successful teaching in evidence-based medicine. Med Educ 2000;34:18-22.

16. Hutchinson L. Evaluating and researching the effectiveness of educational interventions. BMJ 1999;318:1267-8.

17. Lai TYY, Wong VWY, Leung GM. Evidence-based ophthalmology: a clinical audit of the emergency unit of a regional eye hospital. Br J Ophthalmol 2003;37:385-90.

18. Campbell M, Fitzpatrick R, Haines A, Kinmonth AL, Sandercock P, Spiegelhalter D, et al. Framework for design and evaluation of complex interventions to improve health. BMJ 2000;321:694-6.
