Some 200,000 people live with partial or nearly total permanent paralysis in the United States, with spinal cord injuries adding 11,000 new cases each year. Most research aimed at recovering motor function has focused on repairing damaged nerve fibers, which has succeeded in restoring limited movement in animal experiments. But regenerating nerves and restoring complex motor behavior in humans are far more difficult, prompting researchers to explore alternatives to spinal cord rehabilitation. One promising approach involves circumventing neuronal damage by establishing connections between healthy areas of the brain and virtual devices, called brain–machine interfaces (BMIs), programmed to transform neural impulses into signals that can control a robotic device. While experiments have shown that animals using these artificial actuators can learn to adjust their brain activity to move robot arms, many issues remain unresolved, including what type of brain signal would provide the most appropriate inputs to program these machines.

As they report in this paper, Miguel Nicolelis and colleagues have helped clarify some of the fundamental issues surrounding the programming and use of BMIs. Presenting results from a series of long-term studies in monkeys, they demonstrate that the same set of brain cells can control two distinct movements, the reaching and grasping of a robotic arm. This finding has important practical implications for spinal-cord patients: if motor functions are not locked to dedicated sets of cells, then surgeons have far more flexibility in how and where they can introduce electrodes or other functional enhancements into the brain. The researchers also show how monkeys learn to manipulate a robotic arm using a BMI, and they suggest how to compensate for delays and other limitations inherent in robotic devices to improve performance.

While other studies have focused on discrete areas of the brain—the primary motor cortex in one case and the parietal cortex in another—Nicolelis et al. targeted multiple areas in both regions to operate robotic devices, based on evidence indicating that neurons involved in motor control are found in many areas of the brain. The researchers gathered data on both brain signals and motor coordinates—such as hand position, velocity, and gripping force—to create multiple models for the BMI. They used different models according to which task the monkeys were learning—a reaching task, a hand-gripping task, and a reach-and-grasp task.

The BMI worked best, Nicolelis et al. show, when the programming models incorporated data recorded from large groups of neurons from both frontal and parietal brain regions, supporting the idea that each of these areas contains neurons directing multiple motor coordinates. After combining all the motor parameter models to optimize control of the robotic arm through the BMI, the researchers fixed those parameters and transferred control to the BMI, away from the monkeys' direct manipulation of a hand-held pole. The monkeys quickly learned that the robotic arm moved without their overt manipulations, and they periodically stopped moving their arms. Amazingly, when the researchers removed the pole, the monkeys were able to make the robotic arm reach and grasp without moving their own arms, though they did have visual feedback on the robotic arm's movements. Even more surprising, the monkeys' ability to manipulate the arm through “brain control” gradually improved.

One way the brain retains flexibility in responding to multiple tasks is through visual feedback. The researchers suggest that the success of the model may be the result of providing the monkeys with continuous feedback on their performance. This feedback may help the brain integrate intention and action, including the action of the robotic arm, allowing the monkeys to get better at manipulating the robotic arm without moving their own arms.

By charting the relationship between neural signals and movement, Nicolelis et al. demonstrate how BMIs can work with healthy neural areas to reconfigure the neurons that issue the brain's motor commands and help restore intentional movement. These findings, they say, suggest that such artificial models of arm dynamics could one day be used to retrain the brain of a patient with paralysis, offering patients not only better control of prosthetic devices but the sense that these devices are truly an extension of themselves.

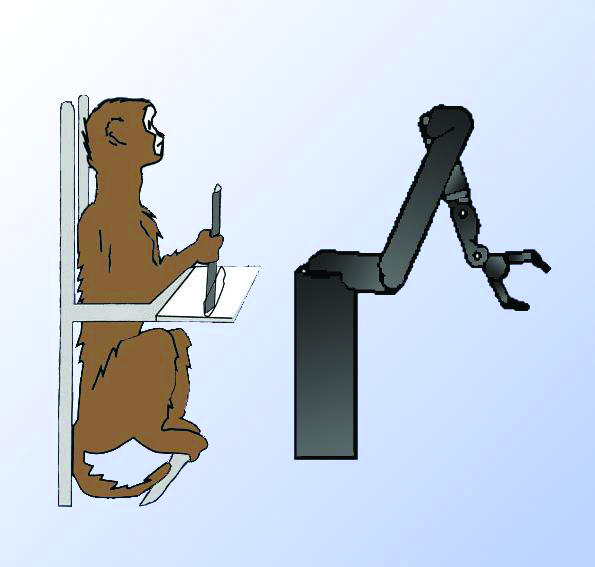

Monkey learns to control BMI.