Abstract

Many important decisions involve outcomes that are either probabilistic or delayed. Based on similarities in decision preferences, models of decision making have postulated that the same psychological processes may underlie decisions involving probabilities (i.e., risky choice) and decisions involving delay (i.e., intertemporal choice). Equivocal behavioral evidence has made this hypothesis difficult to evaluate. However, a combination of functional neuroimaging and behavioral data may allow identification of differences between these forms of decision making. Here, we used functional magnetic resonance imaging (fMRI) to examine brain activation in subjects making a series of choices between pairs of real monetary rewards that differed either in their relative risk or their relative delay. While both sorts of choices evoked activation in brain systems previously implicated in executive control, we observed clear distinctions between these forms of decision making. Notably, choices involving risk evoked greater activation in posterior parietal and lateral prefrontal cortices, whereas choices involving delay evoked greater activation in the posterior cingulate cortex and the striatum. Moreover, activation of regions associated with reward evaluation predicted choices of a more-risky option, whereas activation of control regions predicted choices of more-delayed or less-risky options. These results indicate that there are differences in the patterns of brain activation evoked by risky and intertemporal choices, suggesting that the two domains utilize at least partially distinct sets of cognitive processes.

Keywords: risk, delay, utility, posterior parietal cortex, intraparietal sulcus, choice

1. Introduction

Uncertainty arises when one does not know which of several outcomes will occur. In real-world decision making, uncertainty is present both when an outcome occurs with some probability and when an outcome occurs after some delay. In either case, a decision maker must consider the possibility that the outcome may not be realized. Classical models of decision making have considered risky and intertemporal choices to be distinct categories. However, researchers have noticed several similarities between the preferences of decision makers when choosing between risky outcomes and when choosing between delayed outcomes.

For example, subjects exhibit some similar decision biases in risky and intertemporal choice. Decision makers tend to overvalue certain outcomes (the certainty effect): given a choice between a 100% chance of $32 and an 80% chance of $40, most decision makers prefer the certain $32 (Allais, 1953). However, if probabilities are reduced by half (i.e., a choice between a 50% chance of $32 and a 40% chance of $40), the larger but riskier reward may now be preferred. In both pairs the outcomes are matched for expected value – the riskier option offers 25% more money in exchange for a 20% lower probability of success – and, thus, a reversal in preference would not be consistent with normative models. Decision makers similarly overweight immediate rewards. When choosing between $100 now and $110 in two weeks, they prefer the smaller, sooner outcome, but when choosing between $100 in 36 weeks and $110 in 38 weeks, they prefer the larger, later outcome. In both cases, the larger later reward offers an extra $10 for a delay of two weeks, making this pattern of behavior (the immediacy effect) internally inconsistent (Thaler, 1981). Moreover, there are similarities in the discounting functions for risky and delayed outcomes: when probability is converted to odds-against, value decreases hyperbolically with both increasing time and increasing odds (Green, et al., 1999; Rachlin, et al., 1991).
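The immediacy effect and the hyperbolic forms mentioned above can be illustrated with a minimal sketch. It assumes the standard one-parameter hyperbolic forms, V = A / (1 + kD) for delay and V = A / (1 + hθ) with odds against θ = (1 − p) / p for risk. The function names and the discount rate of 0.1 per week are hypothetical choices made for illustration; they are not parameters estimated in this study.

```python
import math


def hyperbolic_delay_value(amount, delay, k):
    """Hyperbolic delay discounting: V = A / (1 + k * D)."""
    return amount / (1.0 + k * delay)


def exponential_delay_value(amount, delay, r):
    """Exponential (normative) delay discounting: V = A * exp(-r * D)."""
    return amount * math.exp(-r * delay)


def hyperbolic_risk_value(amount, probability, h):
    """Hyperbolic probability discounting over odds against: theta = (1 - p) / p."""
    odds_against = (1.0 - probability) / probability
    return amount / (1.0 + h * odds_against)


def prefers_later(value_fn, rate, sooner, later):
    """Return True if the larger, later option has the higher discounted value."""
    (amount_s, delay_s), (amount_l, delay_l) = sooner, later
    return value_fn(amount_l, delay_l, rate) > value_fn(amount_s, delay_s, rate)


RATE = 0.1  # hypothetical per-week discount rate, chosen only for illustration
near_pair = ((100, 0), (110, 2))    # $100 now vs. $110 in 2 weeks
far_pair = ((100, 36), (110, 38))   # $100 in 36 weeks vs. $110 in 38 weeks

hyperbolic_choices = tuple(
    prefers_later(hyperbolic_delay_value, RATE, *pair) for pair in (near_pair, far_pair)
)
exponential_choices = tuple(
    prefers_later(exponential_delay_value, RATE, *pair) for pair in (near_pair, far_pair)
)

print("hyperbolic prefers later (near, far):", hyperbolic_choices)
print("exponential prefers later (near, far):", exponential_choices)
```

Under these assumptions the hyperbolic chooser switches from the sooner to the later option when both delays are pushed back by 36 weeks, whereas the exponential chooser makes the same choice in both pairs. With h = 1 the risk form reduces to expected value (A × p), so the certainty effect would require h to differ from 1.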

Because of these and other similarities, some researchers have proposed that risky and intertemporal choice utilize the same psychological mechanism (Rotter, 1954). Such proposals usually take one of two forms. First, the processes used by intertemporal choice may be a subset of those used by risky choice because any delayed outcome is inherently risky (Green and Myerson, 1996; Keren and Roelofsma, 1995). Alternatively, the processes used by risky choice might be a subset of those used by intertemporal choice because for repeated trials, the smaller the probability of receiving an outcome, the longer the time to receiving the outcome will be (Hayden and Platt, 2007; Mazur, 1989; Rachlin, et al., 1986).

Although the similarities in biases and discount functions provide some evidence for same-mechanism theories, other behavioral evidence argues against this view. If risk and delay were processed by the same psychological mechanism, risk preferences and delay preferences should be strongly correlated across individuals. Some studies have found evidence of such correlations (Crean, et al., 2000; Mitchell, 1999; Myerson, et al., 2003; Reynolds, et al., 2003; Richards, et al., 1999), while others find correlations to be weak or absent (Ohmura, et al., 2005; Reynolds, et al., 2004). Furthermore, changes in payout magnitude have opposite effects in risky and intertemporal choice. Decision makers are more willing to wait for large outcomes than they are for small ones, but they are less willing to take risks for large outcomes than for small ones (Chapman and Weber, 2006; Green, et al., 1999; Prelec and Loewenstein, 1991; Rachlin, et al., 2000; Weber and Chapman, 2005). Differences such as these have led to the proposition that risky and intertemporal choice do not use the same processes, but share some psychophysical properties that produce behavioral similarities (Prelec and Loewenstein, 1991). Another possibility lies between the same-mechanism and different-mechanism proposals: it is possible that the processes used by risky and intertemporal choice overlap, with some processes unique to each domain and some shared by both.

Neuroscientific data may clarify the similarities and differences between probabilistic and intertemporal choice where behavioral evidence alone cannot. There are numerous functional neuroimaging studies of probabilistic choice in humans (Dickhaut, et al., 2003; Hsu, et al., 2005; Huettel, et al., 2005; Huettel, et al., 2006; Kuhnen and Knutson, 2005; Paulus, et al., 2003; Rogers, et al., 1999), most implicating the lateral and inferior prefrontal cortex (PFC), the posterior parietal cortex (PPC), and the insular cortex in decision making under risk. Yet, complementary studies on processing of delay are few. McClure and colleagues found that a diverse system including ventral striatum, the ventromedial PFC, the posterior cingulate cortex, and other regions was activated by immediately available rewards but not by delayed ones (McClure, et al., 2007; McClure, et al., 2004). Several groups have shown similar elicitation of the reward system, specifically the ventral striatum, for selection of immediate rewards (Tanaka, et al., 2004; Wittmann, et al., 2007). A recent study by Kable and Glimcher demonstrated that this system tracked the subjective value of outcomes, regardless of their time until delivery, indicating a broader role for these reward-related regions in the representation of utility (Kable and Glimcher, 2007). Supporting this idea, Hariri and colleagues demonstrated that behavioral measures of delay discounting track reward sensitivity in the ventral striatum (Hariri, et al., 2006).

Pharmacological experiments have likewise produced equivocal results. Some studies of human subjects have found cigarette usage influences delay discounting but not risk discounting (Mitchell, 1999; Ohmura, et al., 2005), others report correlations with risk discounting but not delay discounting (Reynolds, et al., 2003), and still others report correlations with both risk discounting and delay discounting (Reynolds, et al., 2004). Nicotine deprivation (in smokers) has been found to influence both risk and delay discounting (Mitchell, 2004) while alcohol consumption has been found to affect neither risk nor delay discounting (Richards, et al., 1999). Data from non-human animals are also mixed. Lesions in the rat orbitofrontal cortex (Kheramin, et al., 2003; Mobini, et al., 2000) or in the nucleus accumbens (Cardinal and Cheung, 2005; Cardinal and Howes, 2005), which influence the function of the dopaminergic system, have been found to cause both risk aversion and steeper discount rates. However, lesions of the dorsal and median raphe nuclei in rats, which result in serotonin depletion, increase temporal discount rates but do not change risk preferences (Mobini, et al., 2000).

Although there remain no direct comparisons between probabilistic and intertemporal choice, these prior human and animal studies suggest a possible distinction between lateral parietal and prefrontal regions associated with evaluation of risky gambles, and medial parietal and striatal regions associated with evaluation of delayed outcomes. To fill this gap in the literature, we compared decisions involving risk and decisions involving delay within a single functional magnetic resonance imaging (fMRI) experiment. All decisions involved real rewards and thus were highly consequential to the subjects. In two risk conditions, subjects chose either between a certain amount and a risky gamble, or between two risky gambles. In two delay conditions, they chose either between an immediate outcome and a delayed outcome, or between two delayed outcomes. In the control condition, subjects simply picked which of two outcomes was larger (Figure 1). This design allowed us to identify differences in evoked brain activation, even if risk and delay modulated behavior similarly in some circumstances. Additionally, we examined how brain activation in response to risky and intertemporal choice relates to the risk and time preferences of individual subjects: were there regions whose activation predicted whether particular individuals made risk-seeking/-averse or delay-seeking/-averse choices?

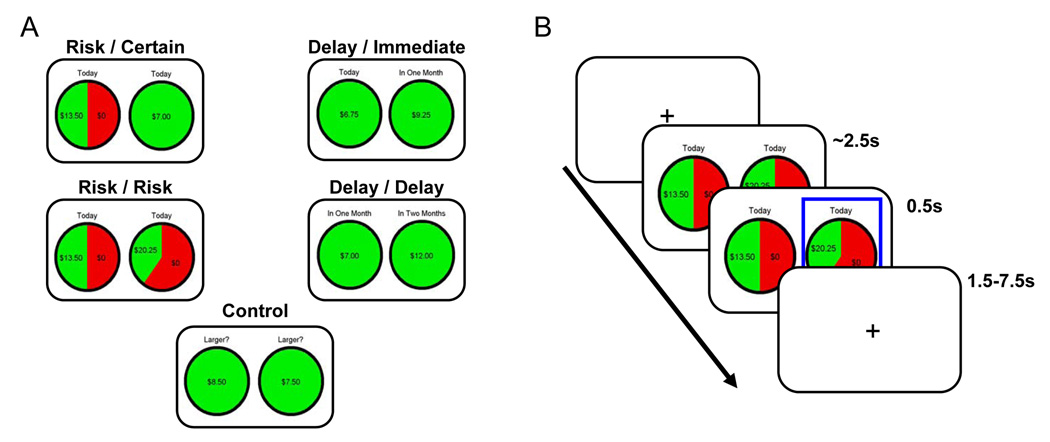

Figure 1. Task design.

(A) Five experimental conditions were used in the fMRI task: Risk/Certain, Risk/Risk, Delay/Immediate, Delay/Delay, and Control. Each consisted of a pair of monetary gambles between which the subject could choose. Risky gambles involved a monetary gain that could be obtained with some probability (e.g., a 50% chance of receiving $13.50). Delay gambles involved a known monetary gain that would be delivered after some known interval (e.g., $12 in two months). (B) At the beginning of the trial, a pair of gambles or delayed amounts was shown and subjects had unlimited time to make their choice (mean response time was approximately 2.5s). The selected box was highlighted for 0.5s before the intertrial interval (1.5–7.5s). No feedback about choice outcomes was provided until after the fMRI session.

2. Results

2.1. Behavioral Data

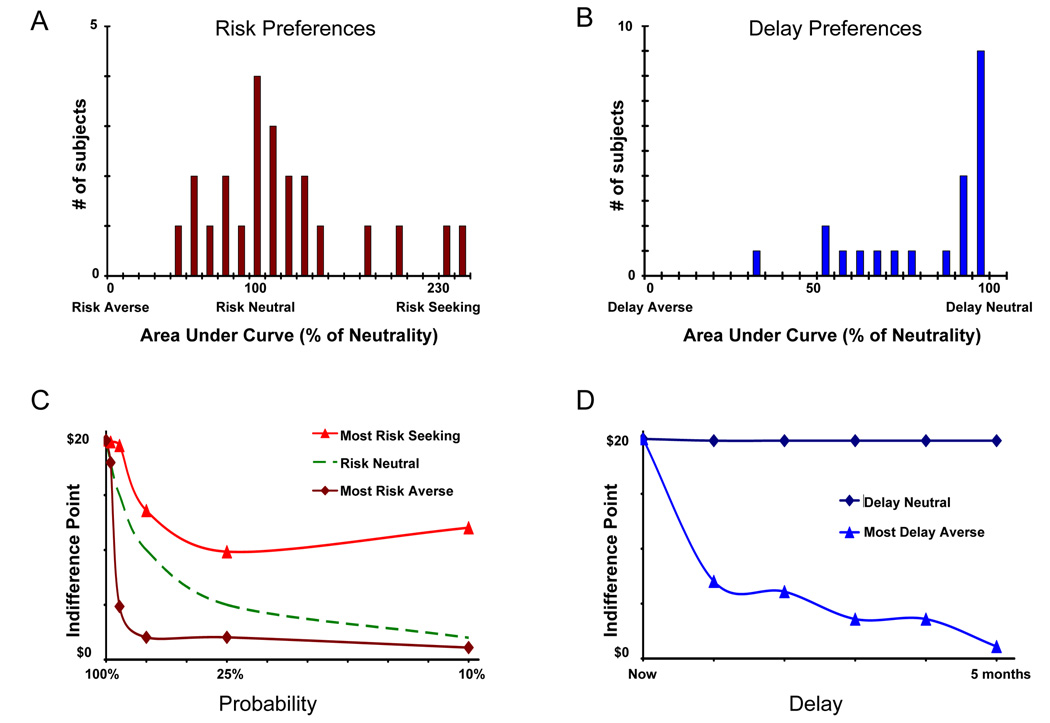

We first obtained measures of risk and delay preference for a set of young adult subjects (Figure 2) using an independent preference elicitation task (see Experimental Procedures). Risk preferences across subjects followed an approximately normal distribution that was slightly biased toward risk-aversion. Delay preferences, in contrast, were strongly skewed: near-delay-neutrality was the modal response. Thus, there was greater across-subjects variability in their attitudes toward risk than their attitudes toward delay. Risk preferences were not significantly correlated with delay preferences across subjects [r(21)=0.18, p=0.42]. These preference measures were used to derive the decision problems used in the subsequent fMRI scanner session.

Figure 2. Risk and delay preferences in the preference elicitation task.

For each subject, we estimated risk preferences and delay preferences in an independent preference elicitation task. We used the area under the indifference curves (see Experimental Procedures for details) as our measure of preference. (A) Across subjects, risk preferences were centered roughly around risk neutrality, with some subjects risk-seeking and others risk-averse. (B) Delay preferences were highly skewed, with the modal response being delay neutrality. (C) Examples of risk equivalence curves for extreme subjects (solid lines), with an idealized risk-neutral response shown for comparison (dashed lines). Each point represents the amount of money (y-axis) for which a subject equally values a given probability (x-axis) of winning $20. (D) Examples of equivalence curves for delay-neutral and delay-averse subjects; indifference points were calculated as in C, with temporal delay along the x-axis.
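As a rough illustration of an area-under-the-curve preference measure, the sketch below applies the trapezoid rule to a set of indifference points and scales the result to the range 0 to 1. The exact procedure is given in Experimental Procedures and may differ; the function name, the normalization, and all data points other than the $20 reference amount are hypothetical.

```python
def normalized_auc(xs, ys, max_amount):
    """Trapezoid-rule area under an indifference curve, scaled to [0, 1].

    xs: probabilities or delays in ascending order
    ys: indifference amounts (same length as xs)
    max_amount: the reference reward (here $20), used for scaling
    """
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("xs and ys must have equal length of at least 2")
    area = 0.0
    for i in range(1, len(xs)):
        area += (xs[i] - xs[i - 1]) * (ys[i] + ys[i - 1]) / 2.0
    return area / ((xs[-1] - xs[0]) * max_amount)


REFERENCE = 20.0  # the $20 reference amount from the figure

# Risk: certainty equivalents for each probability of winning $20.
probabilities = [0.0, 0.25, 0.5, 0.75, 1.0]
risk_neutral = [REFERENCE * p for p in probabilities]  # equals expected value
risk_averse = [0.0, 2.0, 6.0, 12.0, 20.0]              # hypothetical subject

# Delay: present-value equivalents of $20 at each delay (months, hypothetical).
delays = [0.0, 1.0, 2.0, 4.0]
delay_neutral = [REFERENCE] * len(delays)              # no discounting at all

auc_risk_neutral = normalized_auc(probabilities, risk_neutral, REFERENCE)
auc_risk_averse = normalized_auc(probabilities, risk_averse, REFERENCE)
auc_delay_neutral = normalized_auc(delays, delay_neutral, REFERENCE)

print(auc_risk_neutral, auc_risk_averse, auc_delay_neutral)
```

Under this scaling, a risk-neutral curve has an area of 0.5, risk-averse curves fall below 0.5, and a delay-neutral curve has an area of 1.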

The pattern of risk and delay preferences expressed in the scanner session (Table 1) was similar to that expressed in the preference elicitation task, for both risky [r(23)=0.51, p=0.01] and intertemporal [r(23)=0.77, p=0.0001] choices. Subjects were equally likely to choose risky and certain outcomes [χ2(1, N=23)=2.59, p=0.11] and were willing to sacrifice time to receive a larger outcome [χ2(1, N=23)=0.37, p=0.54]. When considering only those subjects who showed delay aversion, there were nonsignificant trends towards both the immediacy effect and the certainty effect: 46% chose the more delayed option with immediacy present and 64% with immediacy absent [χ2(1, N=9)=2.34, p=0.13], while 38% chose the more risky option with certainty present and 52% with certainty absent [χ2(1, N=9)=1.89, p=0.17].
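The p-values reported for these one-degree-of-freedom chi-square tests can be checked by hand. The sketch below relies on the identity that, for one degree of freedom, the upper-tail probability equals erfc(√(χ²/2)); the function name is a hypothetical helper, and the statistics are the rounded values quoted in the text.

```python
import math


def chi2_df1_pvalue(chi2):
    """Upper-tail p-value of a chi-square statistic with 1 degree of freedom."""
    return math.erfc(math.sqrt(chi2 / 2.0))


# (chi-square statistic, p-value as reported in the text)
reported = [(2.59, 0.11), (0.37, 0.54), (2.34, 0.13), (1.89, 0.17)]

for statistic, reported_p in reported:
    computed_p = chi2_df1_pvalue(statistic)
    print(f"chi2={statistic:.2f}  computed p={computed_p:.3f}  reported p={reported_p:.2f}")
```

The computed values should land close to the reported ones; small differences are expected because the test statistics themselves are rounded to two decimals.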

Table 1. Behavioral data.

Each row indicates one trial type, as defined by the experimental condition (risk, delay, or control) and the subject’s choice. We separate trials based on whether certainty/immediacy is present or absent, to reflect the five basic classes of trials shown in Figure 1. For each type, we indicate the mean (SD) response times in seconds and the percentage (n) of trials in which that choice was selected.

| Condition | Choice | RT (certainty/immediacy present) | % choice (certainty/immediacy present) | RT (certainty/immediacy absent) | % choice (certainty/immediacy absent) |
|---|---|---|---|---|---|
| Risk | Certain | 2.4s (1.6) | 49.7% (639) | 2.9s (2.8) | 46.7% (587) |
| Risk | Risky | 3.0s (2.0) | 50.3% (647) | 3.0s (2.0) | 53.3% (671) |
| Delay | Immediate | 2.7s (1.5) | 21.4% (270) | 3.0s (1.6) | 13.8% (172) |
| Delay | Delayed | 2.1s (1.4) | 78.6% (991) | 2.1s (1.3) | 86.2% (1070) |
| Control | Smaller | 1.4s (0.87) | 0.8% (10) | -- | -- |
| Control | Larger | 1.7s (1.0) | 99.2% (1310) | -- | -- |
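The choice percentages in Table 1 follow directly from the trial counts in parentheses. A minimal sketch of that arithmetic is shown below; the dictionary layout and function name are hypothetical, while the counts are taken from the table.

```python
# Trial counts from Table 1, keyed by (condition, certainty/immediacy status).
counts = {
    ("Risk", "present"): {"Certain": 639, "Risky": 647},
    ("Risk", "absent"): {"Certain": 587, "Risky": 671},
    ("Delay", "present"): {"Immediate": 270, "Delayed": 991},
    ("Delay", "absent"): {"Immediate": 172, "Delayed": 1070},
    ("Control", "present"): {"Smaller": 10, "Larger": 1310},
}


def choice_percentages(cell):
    """Convert the trial counts in one table cell to percentages (one decimal)."""
    total = sum(cell.values())
    return {choice: round(100.0 * n / total, 1) for choice, n in cell.items()}


percentages = {key: choice_percentages(cell) for key, cell in counts.items()}

for key, cell in percentages.items():
    print(key, cell)
```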

Mean response times for each condition are displayed in Table 1. In the delay condition, the mean response time was longer when choosing the delayed outcome than when choosing the immediate outcome [F(1,11) = 101.39, p<0.0001] and was also longer when both outcomes were delayed than when one outcome was immediate [F(1,22) = 5.08, p<0.035]. There was no significant interaction [F(1,7) = 3.44, p=0.11]. For risky choices, subjects were faster when choosing the less risky outcome than when choosing the more risky outcome [F(1,22) = 22.12, p<0.0001], and they were also faster when certainty was present than when both outcomes were risky [F(1,22) = 7.32, p=0.013]. The interaction was significant [F(1,22) = 8.46, p=0.009], indicating that subjects were faster to choose a less risky option when that option was certain than when it was risky. Response times for the risk condition were significantly longer than response times for the delay condition [F(1,22) = 162.48, p<0.0001]. Additionally, response times for both risky [F(1,22) = 327.25, p<0.0001] and delayed choices [F(2,44) = 111.94, p<0.0001] were significantly longer than the control condition.

2.2. fMRI Data

We adopted a forward inference approach (Henson, 2006) to identify qualitative differences between processes associated with decision making under risk and processes associated with decision making under delay. Regions were identified as associated with one process (i.e., risk or delay) if they exhibited significantly greater activation during that condition compared to both the other condition and to the control condition. Use of this conjunction criterion allows us to rule out the alternative explanation that differences between regions reflect a single process, one that increases activation in some regions while decreasing activation in others. We additionally included the utility of the selected choice option in the model as a nuisance regressor.
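The conjunction criterion can be sketched as a voxelwise test: a voxel counts as condition-specific only if it exceeds threshold in both contrasts, which is equivalent to thresholding the minimum of the two statistics. The sketch below is a simplification under stated assumptions: the z-values are invented, the function name is hypothetical, and cluster-level correction and the cluster-extent criterion used in the actual analysis are omitted.

```python
Z_THRESHOLD = 2.3  # voxelwise z threshold reported in the figure captions


def conjunction_mask(z_first, z_second, threshold=Z_THRESHOLD):
    """Mark voxels that exceed threshold in BOTH contrasts (minimum-statistic rule)."""
    if len(z_first) != len(z_second):
        raise ValueError("contrast maps must cover the same voxels")
    return [min(a, b) > threshold for a, b in zip(z_first, z_second)]


# Hypothetical z-statistics for four voxels.
risk_gt_delay = [3.1, 2.9, 0.4, -2.8]
risk_gt_control = [4.0, 1.2, 3.5, -0.5]
delay_gt_risk = [-z for z in risk_gt_delay]  # the reversed contrast
delay_gt_control = [0.2, 0.1, 1.0, 3.0]

risk_specific = conjunction_mask(risk_gt_delay, risk_gt_control)
delay_specific = conjunction_mask(delay_gt_risk, delay_gt_control)

print("risk-specific voxels: ", risk_specific)
print("delay-specific voxels:", delay_specific)
```

In this toy example the second voxel differs between risk and delay but not from control, and the third differs from control but not between risk and delay, so neither passes the conjunction.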

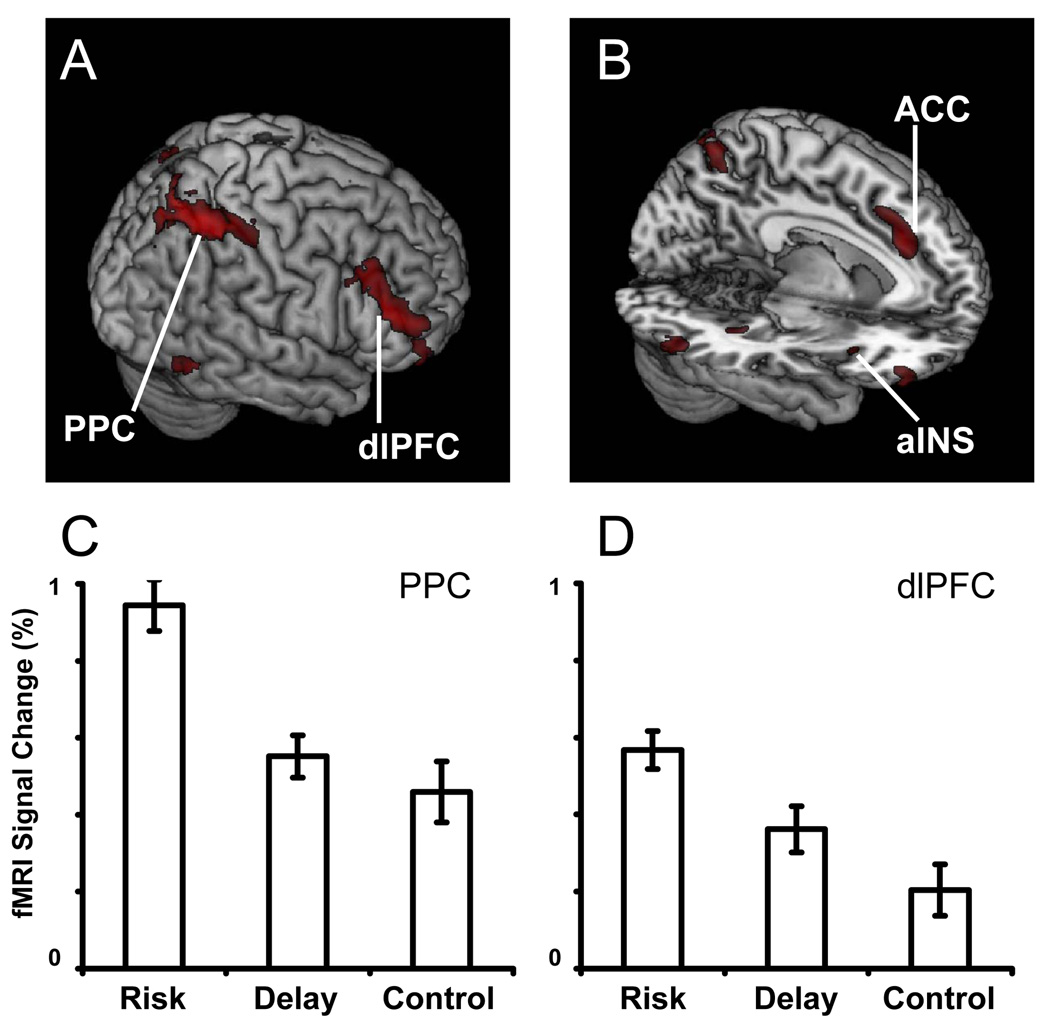

2.2.1. Decision Making under Risk

To evaluate the overall effects of the presence of risk upon brain systems for decision making, we collapsed across the Risky/Risky and Certain/Risky conditions. Increased activation to these conditions, relative to the delay conditions, was found bilaterally in regions including posterior parietal cortex (PPC), lateral prefrontal cortex (LPFC), posterior hippocampus, orbitofrontal cortex (OFC), anterior insula, and anterior cingulate. All of these regions also showed greater activation in the risk conditions than the control condition. Figure 3 displays regions that exhibited greater activation to risk in both the risk>delay and risk>control contrasts, while Table 2 provides coordinates of clusters identified in the conjunction of these contrasts.

Figure 3. Neural effects of risk during decision making.

(A,B) We identified regions that exhibited greater activation in the risk conditions than in both the control and delay conditions. Notably, we observed increased activation to decisions involving risk in the dorsolateral prefrontal cortex (dlPFC) and the posterior parietal cortex (PPC), as well as the anterior cingulate cortex (ACC) and anterior insula (aINS). Active voxels passed a corrected significance threshold of z > 2.3. (C,D) Shown are the mean activations of the PPC and dlPFC, expressed as percent signal change, to each of the three conditions. In these regions, activation was greatest to risk trials, intermediate to delay trials, and least to control trials.

Table 2. Brain regions whose activation increased to risk.

We list significant clusters of activation (z>2.3; 32 significant voxels) to the conjunction of the Risk > Control and Risk > Delay conditions. Shown are the Brodmann Area, the size of the conjunction cluster, the coordinates of the local maxima (in MNI space), and the maximal z-statistic. Coordinates represent the local maxima in the Risk > Control contrast. If multiple local maxima existed in the same region, only the maximum with the highest z-score is shown.

| Region | BA | Size | x | y | z | Max Z |
|---|---|---|---|---|---|---|
| Left | | | | | | |
| Lingual Gyrus | 17 | 219 | −20 | −90 | −7 | 4.11 |
| Middle Occipital Gyrus | 19 | 56 | −56 | −67 | −8 | 3.07 |
| Sup. Par. Lobule / Precuneus | 7 | 2872 | −28 | −57 | 47 | 4.80 |
| Middle Frontal Gyrus | 6 | 137 | −28 | 4 | 52 | 3.89 |
| Inferior Frontal Gyrus | 9 | 315 | −45 | 8 | 30 | 3.74 |
| Middle Frontal Gyrus | 46 | 283 | −50 | 33 | 17 | 3.66 |
| Midline | | | | | | |
| Anterior Cingulate Cortex | 9 | 973 | −3 | 29 | 35 | 4.37 |
| Right | | | | | | |
| Orbitofrontal Cortex | 11 | 70 | 22 | 35 | −23 | 3.61 |
| Superior Frontal Sulcus | 6 | 316 | 26 | 3 | 51 | 4.07 |
| Sup. Par. Lobule / Precuneus | 7 | 4773 | 28 | −54 | 50 | 6.33 |
| Hippocampus | -- | 134 | 30 | −37 | −7 | 3.72 |
| Anterior Insula | 47 | 122 | 31 | 19 | −10 | 3.31 |
| Middle Frontal Gyrus | 11 | 516 | 41 | 51 | −10 | 4.39 |
| Middle Frontal Gyrus | 46 | 1355 | 44 | 35 | 17 | 4.89 |
| Inferior Frontal Gyrus | 44 | 288 | 49 | 8 | 25 | 3.51 |
| Inferior Temporal Gyrus | 19 | 429 | 49 | −59 | −6 | 4.48 |

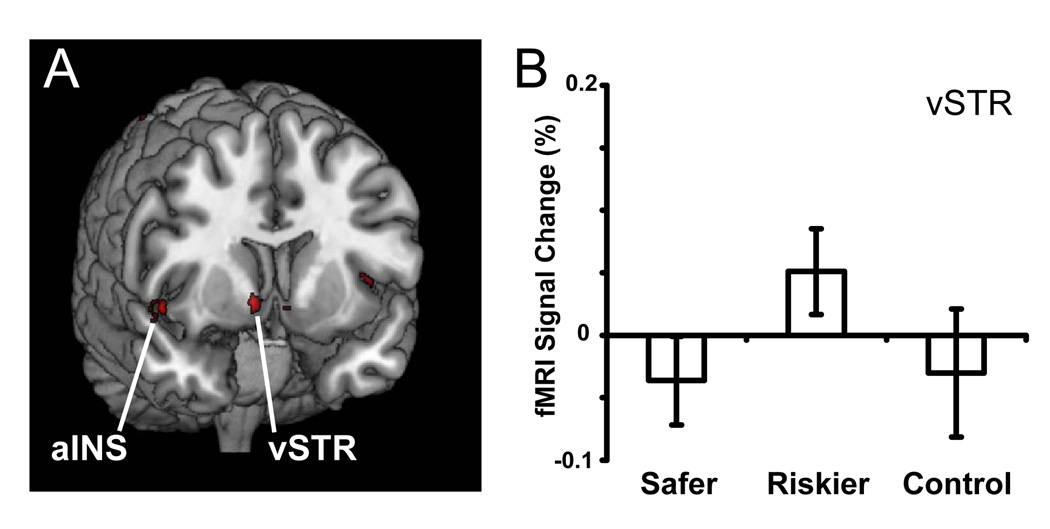

We next identified brain regions whose activation predicted whether subjects chose the more risky or less risky gamble in a pair. Choices of the riskier of the two options were predicted by increased activation in the anterior and posterior cingulate, the caudate, the insula, and ventrolateral PFC (Figure 4 and Table 3). The only cluster whose activation was predictive of choosing the safer of the two options was located in dorsolateral prefrontal cortex (dlPFC).

Figure 4. Regions whose activation predicted choices of the riskier option.

(A) We additionally identified regions whose activation was greater for choices of the riskier option than for choices of the safer option, on trials involving risky choice. Choice of the risky option was predicted by increased activation in the anterior insula (aINS), the ventral striatum (vSTR), the caudate (not shown) and the ventromedial PFC (not shown). Active voxels passed a corrected significance threshold of z > 2.3. (B) Within the ventral striatum, activation increased to choices of the riskier option, but not to choices of the safe option nor to decisions in the control condition.

Table 3. Brain regions whose activation predicted choices.

Within each experimental condition, we identified brain regions whose activation predicted particular choices made by our subjects. Listed are significant clusters of activation (z>2.3; 32 significant voxels), described by their Brodmann Area, the size of the cluster, the coordinates of the local maxima (in MNI space), and the maximal z-statistic. If multiple local maxima existed in the same region, only the maximum with the highest z-score is shown. No significant clusters predicted choices of the more-delayed option when making decisions under delay.

| Region | BA | Size | x | y | z | Max Z |
|---|---|---|---|---|---|---|
| Risk – Predicts Choice of Riskier Option | | | | | | |
| Left | | | | | | |
| Insula | 44 | 59 | −40 | 6 | 6 | 2.82 |
| Posterior Cingulate | 30 | 208 | −8 | −56 | 18 | 3.31 |
| Midline | | | | | | |
| Anterior Cingulate | 24 | 78 | 2 | 32 | 10 | 2.77 |
| Anterior Cingulate | 24 | 76 | 2 | 34 | 0 | 2.84 |
| Right | | | | | | |
| Medial Frontal Gyrus | 10 | 409 | 10 | 60 | 14 | 3.34 |
| Caudate | -- | 154 | 10 | 8 | −2 | 3.07 |
| Postcentral Gyrus | 5 | 83 | 38 | −46 | 64 | 2.95 |
| Superior Temporal Gyrus/Insula | 22 | 194 | 50 | 8 | −6 | 2.85 |
| Inferior Frontal Gyrus | 47 | 108 | 58 | 38 | 0 | 3.17 |
| Risk – Predicts Choice of Safer Option | | | | | | |
| Right | | | | | | |
| Middle Frontal Gyrus | 6 | 89 | 36 | 6 | 52 | 2.8 |
| Delay – Predicts Choice of Later Option | | | | | | |
| Left | | | | | | |
| Superior Frontal Sulcus | 9 | 35 | −30 | 34 | 40 | 2.47 |

2.2.2. Decision Making under Delay

We collapsed across the Delay/Delay and Delay/Immediate conditions to identify regions preferentially engaged by decisions involving delay. Regions whose activation significantly increased when delay was present included the lateral parietal cortex, the vmPFC, dlPFC, and the posterior cingulate and adjacent precuneus. We note that while some of these regions have been associated with evaluation of delayed rewards (Kable and Glimcher, 2007; McClure, et al., 2007; McClure, et al., 2004), they have also been implicated in task-independent (i.e., default-network) processing (Gusnard and Raichle, 2001; Raichle, et al., 2001). Thus, to distinguish delay-specific effects from non-specific effects of task difficulty, we used the conjunction analysis outlined at the beginning of this section. We found that three of these regions also exhibited increased activation to delay trials compared to control trials: the posterior cingulate / precuneus, the caudate, and dlPFC. Figure 5 displays regions exhibiting greater activation to delay in both the delay>risk and delay>control contrasts, while Table 4 provides coordinates of clusters identified in each contrast.

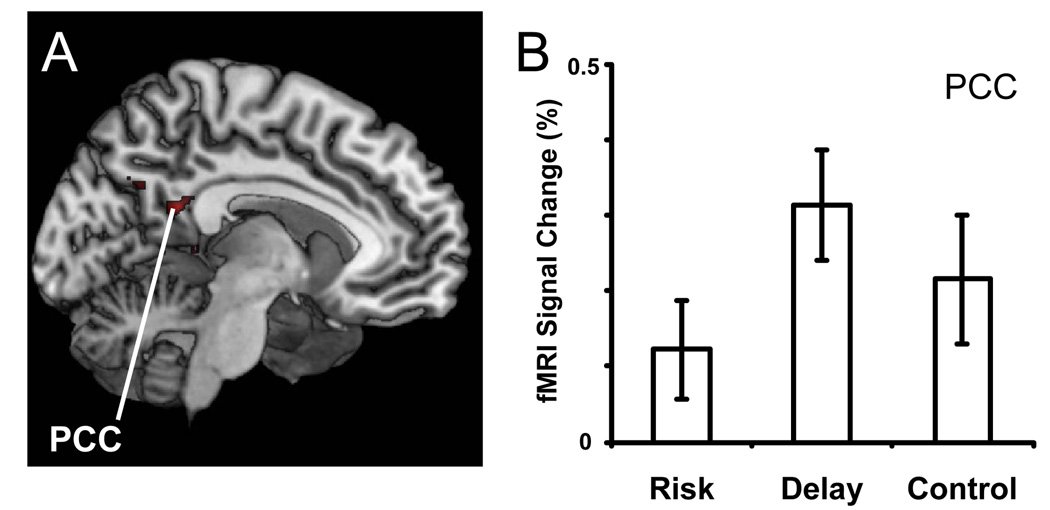

Figure 5. Neural effects of delay during decision making.

(A) We identified regions that exhibited greater activation in the delay conditions than in both the control and risk conditions. Notably, increased activation to decisions involving delay was observed in the posterior cingulate (PCC) and the caudate (not shown). Active voxels passed a corrected significance threshold of z > 2.3. (B) Shown is the mean PCC activation, expressed as percent signal change, to each of the three conditions. In this region, activation was greatest to delay trials, intermediate to control trials, and least to risk trials.

Table 4. Brain regions whose activation increased to delay.

We list significant clusters of activation (z>2.3; 32 significant voxels) to the conjunction of the Delay > Control and Delay > Risk conditions. Shown are the Brodmann Area, the size of the conjunction cluster, the coordinates of the local maxima (in MNI space), and the maximal z-statistic. Coordinates represent the local maxima in the Delay > Control contrast. If multiple local maxima existed in the same region, only the maximum with the highest z-score is shown.

| Region | BA | Size | x | y | z | Max Z |
|---|---|---|---|---|---|---|
| Left | | | | | | |
| Superior Frontal Sulcus | 9 | 37 | −23 | 31 | 39 | 3.26 |
| Precuneus | 30 | 32 | −18 | −41 | 7 | 2.98 |
| Precuneus | 7 | 35 | −9 | −62 | 32 | 2.80 |
| Midline | | | | | | |
| Posterior Cingulate | 23 | 219 | −4 | −46 | 21 | 3.44 |
| Right | | | | | | |
| Caudate | -- | 51 | 18 | 1 | 23 | 2.93 |

Given that our subjects expressed a wide range of delay preferences, from considerable aversion to delay to perfect delay neutrality, we additionally repeated our analyses on the subset of nine subjects who showed the greatest delay discounting. Activation within the posterior cingulate and caudate was still greater in the delay condition than the risk condition. Finally, we identified brain regions whose activation predicted whether subjects chose the later or sooner option in a pair. Choices of the later option were predicted by increased dlPFC activation (Table 3); there were no regions whose activation predicted choices of the more-immediate option.

3. Discussion

We sought to characterize whether the nominal distinction between two forms of decision making, choices about risky options and choices about delayed options, was mirrored by a distinction in their neural substrates. Using forward-inference criteria for distinguishing cognitive processes (Henson, 2006), we found evidence for a neural dissociation between risk and delay. Activation in control regions including the posterior parietal cortex and lateral prefrontal cortex was greater to decisions involving risk, whereas activation in the posterior cingulate cortex and caudate increased to decisions involving delay. This dissociation does not mean that risky and intertemporal choices rely on completely distinct neural substrates; on the contrary, these decisions undoubtedly take advantage of common mechanisms for setting up decision problems and processing the value of choice options. However, our results provide evidence for a partial dissociation in the neural systems, and thus potentially the forms of computations, that underlie these two forms of decision making.

3.1 Behavioral measures of decision preference

Subjects’ choices demonstrated a wide range of risk preferences around risk neutrality. They did not show the risk-averse behavior more commonly seen in choices between gambles representing gains, nor did they show the commonly found preference for certainty (Kahneman and Tversky, 1979). These results may indicate that subjects responded as if they were to be paid for all choices instead of the two for which they were actually paid. In such repeated-gambles experiments, risk-seeking behavior generally increases relative to one-shot gambles, and preference for certainty is reduced (Barron and Erev, 2003; Keren and Wagenaar, 1987).

The majority of subjects in the study were relatively delay-neutral, although delay aversion is usual for positive monetary outcomes. This may be due to the use of Amazon.com gift certificates, which could have induced anticipatory feelings during the delay period that are themselves valued. (For example, one subject remarked to the experimenter that by the time he received the gift certificate he would have forgotten all about being in the study; thus, the reward would be a nice surprise.) There is evidence that such anticipation leads to delay-neutral or even delay-seeking behavior (Loewenstein, 1987). Even so, a prior fMRI study of choice under delay used Amazon.com gift certificates as payment, although no explicit calculation of delay preference was reported (McClure, et al., 2004). However, we note that the average monetary benefit to waiting was smaller in McClure et al. (2004) than in the present experiment. The effect of choosing repeatedly between pairs of delayed outcomes is not well understood, making it possible that the use of repeated choices influenced the risk preferences of our subjects.

3.2 Neural activation to risk

In the present experiment, we observed consistent risk-specific activation in the PPC, along the intraparietal sulcus, both to risky decisions themselves and to the utility associated with a risky gamble. Activation in the intraparietal sulcus is observed in decision making tasks (Huettel, et al., 2005; Huettel, et al., 2006; Paulus, et al., 2001), potentially reflecting a role of this region in the mapping of decision value onto task output (e.g., responses, spatial locations) as shown by both functional neuroimaging (Bunge, et al., 2002; Huettel, et al., 2004) and primate electrophysiology (Platt and Glimcher, 1999).

One interpretation of this result is that decisions under risk involve an increased demand for numerical estimation and calculation, processes supported in part by PPC (Cantlon, et al., 2006; Dehaene, et al., 1998; Dehaene, et al., 2003; Piazza, et al., 2004; Venkatraman, et al., 2005). Based on a review of neuroimaging and lesion studies, Dehaene and colleagues proposed that distinct regions within the PPC are associated with three types of numerical processing: the angular gyrus supports verbal retrieval of calculations and their rules, the anterior IPS supports inexact calculations and numerical comparisons, and the posterior IPS supports numerical estimation (Dehaene, et al., 2003). Our loci of risk-related activation are consistent with the latter two functions, inexact calculation and numerical estimation, but not with the first, exact calculation. We emphasize that canonical perspectives on delay discounting posit that choice under delay involves more complex value estimation (e.g., working with exponential or hyperbolic functions) than choice under risk. Thus, this finding provides evidence against the hypothesis that risk and delay involve similar systems for computation of the value of choice options.

Of particular interest were the findings that increased activation in vmPFC, ventral striatum, and insular cortex predicted the selection of the more-risky choice option. The vmPFC has often been linked to emotion, especially emotions related to decision making (Damasio, et al., 1996), and previous studies have found that its activation tracks the regret associated with a decision (Coricelli, et al., 2005). As a behavioral parallel, the affect associated with risky decisions generally increases for decisions with greater consequences (Mellers, et al., 1997). Moreover, recent work suggests that regions within vmPFC may code for the decision utility associated with a choice option, rather than the experienced utility of an obtained reward (Plassman, et al., 2007). Prior research has also shown that activation in these regions not only tracks anticipated and delivered rewards, but also predicts choices of more-risky options (Kuhnen and Knutson, 2005), consistent with our results. However, we additionally found increased activation in insular cortex to risky choices. Prior research has shown that insular activation increases with the risk of a decision (Paulus, et al., 2003; Preuschoff, et al., 2008), but can also push behavior away from risky options (Kuhnen and Knutson, 2005). Collectively, these results indicate a clear role for insular cortex in risky choice, but future research will be necessary to identify the specific computations that region supports.

3.3 Neural activation to delay

As noted earlier, few studies have explicitly examined the neural mechanisms that might underlie utility calculations for delayed outcomes. Two seminal studies conducted by McClure and colleagues (McClure, et al., 2004) and by Kable and Glimcher (Kable and Glimcher, 2007) implicated medial frontal cortex, the striatum (Hariri, et al., 2006), and the posterior cingulate cortex in the evaluation of delayed gambles. Consistent with these studies, we found that activation within the posterior cingulate cortex and caudate increased to decisions involving delay compared to the risk and control conditions. (Note that although there was a significant difference between delay and risk in the medial frontal cortex, there was no clear difference between delay and control in that region.) Our results therefore support the conception that these regions, which reflect important components of a neural system for valuation (Kable and Glimcher, 2007), contribute to decision making under delay.

The posterior cingulate cortex, along with other regions including part of medial prefrontal cortex, has been hypothesized to be part of the brain’s default network (Gusnard and Raichle, 2001; Mason, et al., 2007; Raichle, et al., 2001). The existence of this network has been postulated because of a set of commonly observed results: increased metabolism when a subject is in an awake but inactive resting state, greater activation in non-task conditions than task conditions, and a high degree of functional connectivity during both resting state and active tasks (Bellec, et al., 2006; Damoiseaux, et al., 2006). Given these characteristics, one possible interpretation of our delay-related activation is that the decisions about delay were simply easier than those about risk, consistent with the relatively low discount rates exhibited by many of our subjects. Although otherwise plausible, this interpretation is precluded by our analysis approach and contradicted by our results. Mean response time was considerably longer for the Delay condition than the Control condition, making untenable a task-difficulty explanation of the Delay > Control activation. Moreover, our use of a conjunction criterion (i.e., increased activation in both the Delay > Risk and Delay > Control contrasts) allows us to reject the idea that a single cognitive process could account for both our risk-related and delay-related activations (Henson, 2006).

A different, and more speculative, interpretation is that default network processes were used by our subjects to evaluate the delay gambles. It has been widely speculated that the mind-wandering that produces default mode activation may consist, in part, of planning for future events (e.g., Buckner and Carroll, 2007). Many areas of the default network have also been found to be active in tasks involving autobiographical episodic memory (Cabeza, et al., 2004) and in tasks that involve thinking about the autobiographical future (Addis, et al., 2007; Okuda, et al., 2003; Szpunar, et al., 2007). Areas in the precuneus and posterior cingulate cortex that showed effects of delay in the present experiment have also been implicated in imagining the past and the future (Addis, et al., 2007; Okuda, et al., 2003; Szpunar, et al., 2007). Therefore, the default network activation in the present experiment may be caused by prospective thinking, as needed to evaluate the potential future value of a gift-certificate reward. We note, however, that neurons within posterior cingulate cortex have been shown to track elements of risky decisions (McCoy and Platt, 2005), and thus further work will be necessary to elucidate the specific computations performed by this region.

3.4 Conclusions

The present study used a combination of behavioral and neuroscientific data to investigate the relations between risk and delay in decision making. Our results provide clear evidence that risky and intertemporal choices invoke different patterns of neural activation, consistent with the idea that they engage partially distinct cognitive processing. When faced with decisions involving risk, subjects engage brain regions associated with estimation and calculation, most notably the intraparietal sulcus. In contrast, intertemporal choice activates regions potentially associated with remembering past events and planning for future ones, suggesting that intertemporal choice may invoke visualizing oneself receiving the outcome to a greater extent than risky choice. Given the limitations of functional neuroimaging, these differences between conditions undoubtedly are matched by substantial similarities. Future research will be necessary to evaluate models for risky and intertemporal choice, to move beyond differences between conditions to develop specific models for choice computations.

4. Experimental Procedures

4.1. Subjects

Twenty-three subjects (12 male) aged 19–36 years (mean age: 23y) participated in an experiment that combined behavioral and fMRI testing. All acclimated to the MRI environment through a mock-scanner session. All gave informed consent according to a protocol approved by the Institutional Review Board of the Duke University Medical Center. Subjects were compensated with a guaranteed $15 in cash in addition to a bonus payout in Amazon.com gift certificates. The average bonus payouts, which depended on the choices made by the subject, were $16 for the behavioral session and $16 for the fMRI session.

4.2. Experimental Stimuli and Tasks

All subjects participated in a two-part experiment. In the first part, which was conducted in a small sound-attenuating chamber outside the scanner, subjects made a series of choices between different risky or delayed outcomes. We used these data to estimate parameters corresponding to their preferences for or aversions to risk and delay. The second part was conducted in the fMRI scanner, so that brain activation could be measured during decision making. Stimuli for both portions of the experiment were presented using the Psychophysics Toolbox (Brainard, 1997; Pelli, 1997) for MATLAB (Mathworks, Inc.).

4.2.1. Preference Elicitation Task

Outside of the scanner and before their fMRI session, subjects chose between pairs of monetary gambles presented as pie charts. On one option in each pair, the subject could win $20 with some probability or with some delay. For risky choices, the probability of receiving the payout was indicated by the proportion of the pie chart that was green; for intertemporal choices, the delay until the payout would be received was displayed above the pie chart. We used 10 payouts: immediate delivery of $20 with probability of 10%, 25%, 50%, 75%, or 90% or certain delivery of $20 with a delay of 1, 2, 3, 4, or 5 months.

The other choice in each pair was always a certain, immediate payout. The magnitude of this control gamble started at $10, and then was varied between $0 and $20 in a binary search algorithm according to the subject’s choices. Doing this for 6 successive choices provided the subject’s indifference point: the amount of money the subject found neither better nor worse than the risky/delayed $20, with a precision of $0.31. Two additional questions using values slightly above and below the indifference point were presented to check for errors. If the subject’s response to these questions was not consistent with the calculated indifference point, the sequence of choices was started over. (Subjects could also restart any series of choices if they felt that they had made an error.) Choices were self-paced and subjects completed the screening task in approximately 10 minutes.
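
The titration described above can be sketched as a bisection over the certain amount. This is a minimal illustration rather than the authors' MATLAB code: the function name, the `prefers_certain` callback, and the choice to report the midpoint of the final interval are all assumptions made for the example.

```python
def titrate_indifference_point(prefers_certain, low=0.0, high=20.0, n_choices=6):
    """Bisect [low, high] according to a subject's successive choices.

    prefers_certain(amount) should return True when the subject takes the
    certain, immediate `amount` over the risky or delayed $20 option.
    Returns (estimate, interval_width, offers_shown).
    """
    offers = []
    for _ in range(n_choices):
        offer = (low + high) / 2.0      # first offer is $10 for a $0-$20 range
        offers.append(offer)
        if prefers_certain(offer):
            high = offer                # indifference point lies at or below the offer
        else:
            low = offer                 # indifference point lies above the offer
    # Assumption: report the midpoint of the interval that remains.
    return (low + high) / 2.0, high - low, offers


# Hypothetical subject whose true indifference point is $13.00.
estimate, width, offers = titrate_indifference_point(lambda amount: amount > 13.0)
print(offers)            # certain amounts shown on the six trials
print(estimate, width)   # remaining interval is 20 / 2**6 = 0.3125 wide
```

Six halvings of the $20 range leave an interval of 20/2^6 = $0.3125, which agrees with the stated precision of $0.31.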

Subjects were compensated according to one of their expressed preferences in the preference screening task, using a variant on a technique common in experimental economics, the Becker-DeGroot-Marschak procedure (Becker, et al., 1964). Under this procedure, subjects have a financial incentive to make decisions in line with their true preferences. Briefly, we randomly selected one of the ten payouts and then subjects rolled a 20-sided die to determine a value from $1 to $20. If the value they rolled was higher than the calculated indifference point for that condition, then they received the value of the die roll, payable immediately. If not, they received the delayed amount or gamble, the latter resolved by rolling the 20-sided die.
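
The payout rule above can be written out as a short sketch. The function and parameter names are hypothetical, and the way a risky gamble is mapped onto the 20-sided die (each face worth 5% probability) is an assumption; the text states only that the gamble was resolved by rolling the die.

```python
import random

FULL_AMOUNT = 20  # dollars offered by every risky or delayed option


def bdm_payout(indifference_point, probability=1.0, delay_months=0, rng=random):
    """Return (dollars, delay_in_months) for the one randomly selected payout."""
    roll = rng.randint(1, 20)               # die roll sets a value from $1 to $20
    if roll > indifference_point:
        return roll, 0                      # rolled value is paid immediately
    if probability < 1.0:
        # Assumed mapping: the gamble wins on the lowest `winning_faces` faces.
        winning_faces = round(probability * 20)
        won = rng.randint(1, 20) <= winning_faces
        return (FULL_AMOUNT if won else 0), 0
    return FULL_AMOUNT, delay_months        # certain $20 delivered after the delay


# Hypothetical example: the 3-month option, with an indifference point of $12.50.
print(bdm_payout(12.5, delay_months=3, rng=random.Random(1)))
```

Because the die roll, not the stated indifference point, sets the amount received, misreporting can only cause a subject to end up with the alternative that he or she values less.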

Subjects were paid using emailed Amazon.com gift certificates. For immediate outcomes, gift certificates were sent while the subject was in the laboratory. For delayed outcomes, the subject was emailed their gift certificate after the specified delay. This method of payment was chosen largely to ensure accurate temporal delivery of the delayed gambles; for comparison, distributing cash requires subjects to return to the laboratory, while the mailing of checks increases temporal uncertainty and requires an additional trip to deposit or cash the check. We note that subjects were told before the session that their payment would include gift certificates and that subjects reported being motivated by the opportunity to earn the gift certificates.

To analyze the preference elicitation data, we converted all probabilities to odds-against ratios. This ensures that both probability and delay preferences are bounded similarly (i.e., a lower bound of zero and no upper bound). We then plotted indifference curves over probability and delay. The area under these discounting curves provides a theory-independent estimate of risk and delay preferences (Myerson, et al., 2001); for comparison with the k-values obtained during the scanning session, we reverse its sign so that more positive values indicate greater aversion.
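
As a rough sketch of this analysis, the code below converts probabilities to odds against and computes a normalized area under the indifference curve by summing trapezoids. The function names are hypothetical, and anchoring the curve at an undiscounted point (x = 0, value = full amount) is an assumption of this example, not a detail given in the text.

```python
def odds_against(p):
    """Odds against winning: 0 for a certain outcome, unbounded as p approaches 0."""
    return (1.0 - p) / p


def area_under_curve(x, indifference_points, amount=20.0):
    """Trapezoidal area under the normalized indifference curve (0 to 1).

    x holds delays (months) or odds against; both axes are rescaled to [0, 1].
    """
    points = sorted(zip(x, indifference_points))
    x_max = points[-1][0]
    # Assumption: the curve starts at an undiscounted anchor point (0, 1).
    xs = [0.0] + [xi / x_max for xi, _ in points]
    ys = [1.0] + [yi / amount for _, yi in points]
    return sum((xs[i + 1] - xs[i]) * (ys[i] + ys[i + 1]) / 2.0
               for i in range(len(xs) - 1))


def aversion_score(x, indifference_points, amount=20.0):
    """Sign-reversed area, so that more positive values mean greater aversion."""
    return -area_under_curve(x, indifference_points, amount)


# Hypothetical indifference points for the five probabilities used in the task.
probabilities = [0.10, 0.25, 0.50, 0.75, 0.90]
risk_x = [odds_against(p) for p in probabilities]
print(aversion_score(risk_x, [1.5, 4.0, 9.0, 14.0, 17.5]))
```

An area of 1 corresponds to no discounting at all, so a subject who always demands the full $20 receives the least negative aversion score under this convention.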

4.2.2. fMRI Experimental Task

During the fMRI session, subjects chose between pairs of gambles. The form of each gamble was similar to that used in the preference elicitation task, but the pairing and order of gambles was different. Two of our conditions involved risk, but not delay: Certain/Risky (C/R) and Risky/Risky (R/R). Two other conditions involved delay, but not risk: Immediate/Delayed (I/D) and Delayed/Delayed (D/D). In the last condition, Control (C/C), the subject saw two certain, immediate outcomes and could simply choose the larger amount (Figure 1).

Outcome probabilities in the C/R and R/R conditions had either high variance (50% and 25% for R/R and 100% and 50% for C/R) or low variance (50% and 40% for R/R and 100% and 80% for C/R). Delays were either 0 months and 1 month (I/D) or 1 month and 2 months (D/D). The expected value of one option in each choice pair was chosen at random from a range of $6.70 to $7.75 for the two risk conditions, and from a range of $5 to $7 for the two delay conditions. The expected value of the second option was determined by multiplying the expected value of the first option by a randomly chosen multiplier. This multiplier was between 0.9 and 1.25 in the risk conditions and between 1.2 and 1.8 in the delay conditions. Values for the payouts in the control condition were chosen randomly from a range of $5–$10.
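
One way to picture the trial construction is the sketch below. Several details are assumptions made only for illustration: values are drawn uniformly, the first expected value is assigned to the safer or sooner option, the multiplier is applied to the riskier or later option, and the 100%/80% pair is treated as a Certain/Risky pair because one of its options is certain. All names are hypothetical.

```python
import random

# Probability pairs (high-variance pair first, low-variance pair second) and delays in months.
RISK_PROBABILITIES = {"C/R": [(1.0, 0.5), (1.0, 0.8)],
                      "R/R": [(0.5, 0.25), (0.5, 0.4)]}
DELAY_MONTHS = {"I/D": (0, 1), "D/D": (1, 2)}


def make_risk_pair(condition, rng=random):
    """Build two gambles whose expected values follow the stated ranges."""
    p_first, p_second = rng.choice(RISK_PROBABILITIES[condition])
    ev_first = rng.uniform(6.70, 7.75)
    ev_second = ev_first * rng.uniform(0.9, 1.25)
    # The displayed amount is whatever makes probability * amount equal the EV.
    return [{"p": p_first, "amount": ev_first / p_first},
            {"p": p_second, "amount": ev_second / p_second}]


def make_delay_pair(condition, rng=random):
    """Build two certain outcomes that differ in delay."""
    d_first, d_second = DELAY_MONTHS[condition]
    value_first = rng.uniform(5.0, 7.0)
    value_second = value_first * rng.uniform(1.2, 1.8)
    return [{"delay": d_first, "amount": value_first},
            {"delay": d_second, "amount": value_second}]


print(make_risk_pair("R/R", random.Random(0)))
print(make_delay_pair("I/D", random.Random(0)))
```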

The gambles remained on the screen until the subjects indicated their choice by pressing a button. No feedback was provided during the scanner session. Trials were separated by a jittered interval of 2–8s. Subjects performed 5–6 runs of the task, each 6 minutes in duration. Subjects made as many choices as time allowed during the scanner session, averaging 215 trials per subject. The trials were randomly distributed among the five conditions. Two of the fMRI trials were randomly selected and subjects were paid according to the outcomes of their choices on those trials. As in the preference elicitation task, subjects played any risky gambles by rolling a die and received their payments in the form of Amazon.com gift certificates.

4.3. fMRI Methods and Analysis

The fMRI data were collected using an echo-planar imaging sequence with standard imaging parameters (TR = 2s; TE = 27ms; 30 axial slices parallel to the AC-PC plane, voxel size of 4 × 4 × 4mm) on a GE 3T scanner with an 8-channel head coil.

Functional images were corrected for subject motion and time of acquisition within a TR and were normalized into a standard stereotaxic space (Montreal Neurological Institute, MNI) for intersubject comparison, using FSL (Smith, et al., 2004). A smoothing filter of width 8mm was applied following normalization. All analyses were conducted via multiple regression using FSL’s FEAT tool (Smith, et al., 2005). Initial multiple regression analyses were conducted on individual runs for each subject, including the task-related regressors as described below and the subjects’ motion parameters. We then combined data across runs within a subject using a fixed-effects analysis, and then combined data across subjects using a random-effects analysis via FSL’s FLAME tool (Beckmann, et al., 2003). Our third-level analyses were controlled for multiple comparisons using a voxel-significance threshold of z > 2.3 and a minimum cluster size of 32 voxels. All figures show voxels passing this threshold (colormap: dark red > 2.3; bright red > 4.0).

We identified areas of significant activation using a multiple regression analysis. There were five regressors in the model. Three were condition-related: risk, collapsing across both types of risky trials; delay, collapsing across both types of delay trials; and control. Each of these regressors was modeled as a 1s impulse response, time-locked to the onset of the decision gamble, that was then convolved with a double-gamma hemodynamic response function. To account for subjects’ economic preferences, we also included two regressors, one for risk and one for delay, reflecting the utility of the chosen gambles. These were calculated by fitting hyperbolic curves to subjects’ in-scanner choices over probability (using an odds-against ratio) and over delay. For each subject we calculated the hyperbolic parameter k that produced a discount function consistent with the largest number of scanner-session choices. We used this k-value to calculate the utility of the chosen option for each choice. The utility regressors were normalized individually for each subject over each run so that the maximal utility gamble had a value of 1 and the minimal utility gamble had a value of -1. We orthogonalized the regressors in our model such that variance shared between condition regressors and the utility regressors was assigned to the utility regressor.
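
The k-fitting and normalization steps can be illustrated with a small sketch. It assumes the standard hyperbolic form V = A / (1 + kx), where x is the delay or the odds against, a simple grid search over candidate k values, and a linear rescaling to the range -1 to 1. The grid, the tie-breaking rule, and all function names are assumptions for this example and are not taken from the paper.

```python
def hyperbolic_value(amount, x, k):
    """Discounted value; x is a delay (months) or an odds-against ratio."""
    return amount / (1.0 + k * x)


def count_consistent(choices, k):
    """Number of choices in which the chosen option has the higher discounted value.

    Each choice is ((amount1, x1), (amount2, x2), chose_first).
    Exact ties are treated here as predicting the second option.
    """
    consistent = 0
    for (a1, x1), (a2, x2), chose_first in choices:
        predicts_first = hyperbolic_value(a1, x1, k) > hyperbolic_value(a2, x2, k)
        consistent += (predicts_first == chose_first)
    return consistent


def fit_k(choices, k_grid):
    """Grid value of k consistent with the most choices (first such value on ties)."""
    return max(k_grid, key=lambda k: count_consistent(choices, k))


def rescale_to_unit_range(utilities):
    """Linearly map utilities so that the minimum is -1 and the maximum is +1."""
    lowest, highest = min(utilities), max(utilities)
    if highest == lowest:
        return [0.0 for _ in utilities]
    return [2.0 * (u - lowest) / (highest - lowest) - 1.0 for u in utilities]


# Hypothetical Immediate/Delayed choices: (amount, delay in months) for each option.
choices = [((5.0, 0), (7.0, 1), True),
           ((5.0, 0), (9.0, 1), False),
           ((6.0, 0), (8.0, 1), True)]
k_grid = [i / 10 for i in range(11)]      # candidate k values 0.0, 0.1, ..., 1.0
k_hat = fit_k(choices, k_grid)
chosen = [(5.0, 0), (9.0, 1), (6.0, 0)]   # the option selected on each trial
print(k_hat, rescale_to_unit_range([hyperbolic_value(a, x, k_hat) for a, x in chosen]))
```

With so few choices, a range of k values fits equally well; the sketch simply returns the first best-fitting grid value, whereas the paper does not say how such ties were resolved.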

Separate analysis models subdivided trials according to the chosen option (i.e., more or less risky, more or less delayed) to identify regions whose activation predicted choice. All subjects were included in the analysis of risky trials, whereas only those subjects who exhibited consistent delay discounting (N = 7) were included in the analyses of delay trials. Additional analyses subdivided the risk and delay conditions according to whether or not the option had contained a certain or immediate outcome. We note that there were no significant effects of certainty/immediacy, and thus we do not discuss those analyses further. Finally, to control for the observed differences in response time across trial types, we changed our regression models by setting each event duration to that trial’s response time, and then repeated all analyses. This had minimal effects upon our results – it slightly reduced the spatial extent of most activations, but did not eliminate or introduce activated regions – and thus we report only results from the regression model using a unit duration across all events.

Acknowledgments

This research was supported by the US National Institute of Mental Health (NIMH-70685) and by the Duke Institute for Brain Sciences. Dr. Weber is now with the Department of Psychology at Iowa State University.

Abbreviations

- ACC: Anterior Cingulate

- DLPFC: Dorsolateral Prefrontal Cortex

- IPS: Intraparietal Sulcus

- LPC: Lateral Parietal Cortex

- LPFC: Lateral Prefrontal Cortex

- MPC: Medial Parietal Cortex

- PCC: Posterior Cingulate Cortex

- PFC: Prefrontal Cortex

- PPC: Posterior Parietal Cortex

- VMPFC: Ventromedial Prefrontal Cortex

References

- Addis DR, Wong AT, Schacter DL. Remembering the past and imagining the future: Common and distinct neural substrates during event construction and elaboration. Neuropsychologia. 2007;45:1363–1377. doi: 10.1016/j.neuropsychologia.2006.10.016.

- Allais M. Le comportement de l’homme rationnel devant le risque: Critique des postulats et axiomes de l’École Américaine. Econometrica. 1953;21:503–546.

- Barron G, Erev I. Small feedback-based decisions and their limited correspondence to description-based decisions. Journal of Behavioral Decision Making. 2003;16:215–233.

- Becker G, DeGroot M, Marschak J. Measuring utility by a single-response sequential method. Behavioural Science. 1964;9. doi: 10.1002/bs.3830090304.

- Beckmann CF, Jenkinson M, Smith SM. General multilevel linear modeling for group analysis in FMRI. NeuroImage. 2003;20:1052–1063. doi: 10.1016/S1053-8119(03)00435-X.

- Bellec P, Perlbarg V, Jbabdi S, Pelegrini-Issac M, Anton JL, Doyon J, Benali H. Identification of large-scale networks in the brain using fMRI. NeuroImage. 2006;29:1231–1243. doi: 10.1016/j.neuroimage.2005.08.044.

- Brainard DH. The psychophysics toolbox. Spatial Vision. 1997;10:433–436.

- Buckner RL, Carroll DC. Self-projection and the brain. Trends in Cognitive Sciences. 2007;11:49–57. doi: 10.1016/j.tics.2006.11.004.

- Bunge SA, Hazeltine E, Scanlon MD, Rosen AC, Gabrieli JD. Dissociable contributions of prefrontal and parietal cortices to response selection. NeuroImage. 2002;17:1562–1571. doi: 10.1006/nimg.2002.1252.

- Cabeza R, Prince SE, Daselaar SM, Greenberg DL, Budde M, Dolcos F, LaBar KS, Rubin DC. Brain Activity during Episodic Retrieval of Autobiographical and Laboratory Events: An fMRI Study using a Novel Photo Paradigm. J. Cogn. Neurosci. 2004;16:1583–1594. doi: 10.1162/0898929042568578.

- Cantlon JF, Brannon EM, Carter EJ, Pelphrey KA. Functional imaging of numerical processing in adults and 4-y-old children. PLoS Biology. 2006;4:844–854. doi: 10.1371/journal.pbio.0040125.

- Cardinal RN, Cheung TH. Nucleus accumbens core lesions retard instrumental learning and performance with delayed reinforcement in the rat. BMC Neuroscience. 2005;6. doi: 10.1186/1471-2202-6-9.

- Cardinal RN, Howes NJ. Effects of lesions of the nucleus accumbens core on choice between small certain rewards and large uncertain rewards in rats. BMC Neuroscience. 2005;6. doi: 10.1186/1471-2202-6-37.

- Chapman GB, Weber BJ. Decision biases in intertemporal choice and choice under uncertainty: Testing a common account. Memory and Cognition. 2006;34:589–602. doi: 10.3758/bf03193582.

- Coricelli G, Critchley HD, Joffily M, O'Doherty JP, Sirigu A, Dolan RJ. Regret and its avoidance: a neuroimaging study of choice behavior. Nature Neuroscience. 2005;8:1255–1262. doi: 10.1038/nn1514.

- Crean JP, de Wit H, Richards JB. Reward discounting as a measure of impulsive behavior in a psychiatric outpatient population. Experimental and Clinical Psychopharmacology. 2000;8:155–162. doi: 10.1037//1064-1297.8.2.155.

- Damasio AR, Everitt BJ, Bishop D. The Somatic Marker Hypothesis and the Possible Functions of the Prefrontal Cortex [and Discussion]. Philosophical Transactions: Biological Sciences. 1996;351:1413–1420. doi: 10.1098/rstb.1996.0125.

- Damoiseaux JS, Rombouts SA, Barkhof F, Scheltens P, Stam CJ, Smith SM, Beckmann CF. Consistent resting-state networks across healthy subjects. Proc Natl Acad Sci U S A. 2006;103:13848–13853. doi: 10.1073/pnas.0601417103.

- Dehaene S, Dehaene-Lambertz G, Cohen L. Abstract representation of numbers in the animal and human brain (vol 21, pg 355, 1998). Trends in Neurosciences. 1998;21:509–509. doi: 10.1016/s0166-2236(98)01263-6.

- Dehaene S, Piazza M, Pinel P, Cohen L. Three parietal circuits for number processing. Cognitive Neuropsychology. 2003;20:487–506. doi: 10.1080/02643290244000239.

- Dickhaut J, McCabe K, Nagode JC, Rustichini A, Smith K, Pardo JV. The impact of the certainty context on the process of choice. Proceedings of the National Academy of Sciences. 2003;100:3536–3541. doi: 10.1073/pnas.0530279100.

- Green L, Myerson J. Exponential versus hyperbolic discounting of delayed outcomes: Risk and waiting time. American Zoologist. 1996;36:496–505.

- Green L, Myerson J, Ostaszewski P. Amount of reward has opposite effects on the discounting of delayed and probabilistic outcomes. Journal of Experimental Psychology-Learning Memory and Cognition. 1999;25:418–427. doi: 10.1037//0278-7393.25.2.418.

- Gusnard DA, Raichle ME. Searching for a baseline: Functional Imaging and the Resting Human Brain. Nature Reviews Neuroscience. 2001;2:685–694. doi: 10.1038/35094500.

- Hariri AR, Brown SM, Williamson DE, Flory JD, de Wit H, Manuck SB. Preference for immediate over delayed rewards is associated with magnitude of ventral striatal activity. J Neurosci. 2006;26:13213–13217. doi: 10.1523/JNEUROSCI.3446-06.2006.

- Hayden BY, Platt ML. Temporal Discounting Predicts Risk Sensitivity in Rhesus Macaques. Current Biology. 2007;17:49–53. doi: 10.1016/j.cub.2006.10.055.

- Henson R. Forward inference using functional neuroimaging: dissociations versus associations. Trends Cogn Sci. 2006;10:64–69. doi: 10.1016/j.tics.2005.12.005.

- Hsu M, Bhatt M, Adolphs R, Tranel D, Camerer CF. Neural systems responding to degrees of uncertainty in human decision-making. Science. 2005;310:1680–1683. doi: 10.1126/science.1115327.

- Huettel SA, Misiurek J, Jurkowski AJ, McCarthy G. Dynamic and strategic aspects of executive processing. Brain Research. 2004;1000:78–84. doi: 10.1016/j.brainres.2003.11.041.

- Huettel SA, Song AW, McCarthy G. Decisions under uncertainty: Probabilistic context influences activation of prefrontal and parietal cortices. Journal of Neuroscience. 2005;25:3304–3311. doi: 10.1523/JNEUROSCI.5070-04.2005.

- Huettel SA, Stowe CJ, Gordon EM, Warner BT, Platt ML. Neural signatures of economic preferences for risk and ambiguity. Neuron. 2006;49:765–775. doi: 10.1016/j.neuron.2006.01.024.

- Kable JW, Glimcher PW. The neural correlates of subjective value during intertemporal choice. Nature Neuroscience. 2007;10:1625–1633. doi: 10.1038/nn2007.

- Kahneman D, Tversky A. Prospect Theory: Analysis of decision under risk. Econometrica. 1979;47:263–291.

- Keren G, Roelofsma P. Immediacy And Certainty In Intertemporal Choice. Organizational Behavior and Human Decision Processes. 1995;63:287–297.

- Keren G, Wagenaar WA. Violation Of Utility-Theory In Unique And Repeated Gambles. Journal of Experimental Psychology-Learning Memory and Cognition. 1987;13:387–391.

- Kheramin S, Body S, Ho MY, Velazquez-Martinez DN, Bradshaw CM, Szabadi E, Deakin JFW, Anderson IM. Role of the orbital prefrontal cortex in choice between delayed and uncertain reinforcers: a quantitative analysis. Behavioural Processes. 2003;64:239–250. doi: 10.1016/s0376-6357(03)00142-6.

- Kuhnen CM, Knutson B. The neural basis of financial risk taking. Neuron. 2005;47:763–770. doi: 10.1016/j.neuron.2005.08.008.

- Loewenstein G. Anticipation and the Valuation of Delayed Consumption. Economic Journal. 1987;97:666–684.

- Mason MF, Norton MI, Van Horn JD, Wegner DM, Grafton ST, Macrae CN. Wandering Minds: The Default Network and Stimulus-Independent Thought. Science. 2007;315:393–395. doi: 10.1126/science.1131295.

- Mazur JE. Theories of Probabilistic Reinforcement. Journal of the Experimental Analysis of Behavior. 1989;51:87–99. doi: 10.1901/jeab.1989.51-87.

- McClure SM, Ericson KM, Laibson DI, Loewenstein G, Cohen JD. Time Discounting for Primary Rewards. J. Neurosci. 2007;27:5796–5804. doi: 10.1523/JNEUROSCI.4246-06.2007.

- McClure SM, Laibson DI, Loewenstein G, Cohen JD. Separate neural systems value immediate and delayed monetary rewards. Science. 2004;306:503–507. doi: 10.1126/science.1100907.

- McCoy AN, Platt ML. Risk-sensitive neurons in macaque posterior cingulate cortex. Nature Neuroscience. 2005;8:1220–1227. doi: 10.1038/nn1523.

- Mellers BA, Schwartz A, Ho K, Ritov I. Decision affect theory: Emotional reactions to the outcomes of risky options. Psychological Science. 1997;8:423–429.

- Mitchell SH. Measures of impulsivity in cigarette smokers and non-smokers. Psychopharmacology. 1999;146:455–464. doi: 10.1007/pl00005491.

- Mitchell SH. Effects of short-term nicotine deprivation on decision-making: Delay, uncertainty and effort discounting. Nicotine & Tobacco Research. 2004;6:819–828. doi: 10.1080/14622200412331296002.

- Mobini S, Chiang TJ, Ho MY, Bradshaw CM, Szabadi E. Effects of central 5-hydroxytryptamine depletion on sensitivity to delayed and probabilistic reinforcement. Psychopharmacology. 2000;152:390–397. doi: 10.1007/s002130000542.

- Myerson J, Green L, Hanson JS, Holt DD, Estle SJ. Discounting delayed and probabilistic rewards: Processes and traits. Journal of Economic Psychology. 2003;24:619–635.

- Myerson J, Green L, Warusawitharana M. Area under the curve as a measure of discounting. Journal of the Experimental Analysis of Behavior. 2001;76:235–243. doi: 10.1901/jeab.2001.76-235.

- Ohmura Y, Takahashi T, Kitamura N. Discounting delayed and probabilistic monetary gains and losses by smokers of cigarettes. Psychopharmacology. 2005;182:508–515. doi: 10.1007/s00213-005-0110-8.

- Okuda J, Fujii T, Ohtake H, Tsukiura T, Tanji K, Suzuki K, Kawashima R, Fukuda H, Itoh M, Yamadori A. Thinking of the future and past: the roles of the frontal pole and the medial temporal lobes. NeuroImage. 2003;19:1369–1380. doi: 10.1016/s1053-8119(03)00179-4.

- Paulus MP, Hozack N, Zauscher B, McDowell JE, Frank L, Brown GG, Braff DL. Prefrontal, parietal, and temporal cortex networks underlie decision-making in the presence of uncertainty. NeuroImage. 2001;13:91–100. doi: 10.1006/nimg.2000.0667.

- Paulus MP, Rogalsky C, Simmons A, Feinstein JS, Stein MB. Increased activation in the right insula during risk-taking decision making is related to harm avoidance and neuroticism. NeuroImage. 2003;19:1439–1448. doi: 10.1016/s1053-8119(03)00251-9.

- Pelli DG. The VideoToolbox software for visual psychophysics: Transforming numbers into movies. Spatial Vision. 1997;10:437–442.

- Piazza M, Izard V, Pinel P, Le Bihan D, Dehaene S. Tuning curves for approximate numerosity in the human intraparietal sulcus. Neuron. 2004;44:547–555. doi: 10.1016/j.neuron.2004.10.014.

- Plassman H, O'Doherty JP, Rangel A. Orbitofrontal Cortex Encodes Willingness to Pay in Everyday Economic Transactions. Journal of Neuroscience. 2007;27:9984–9988. doi: 10.1523/JNEUROSCI.2131-07.2007.

- Platt ML, Glimcher PW. Neural correlates of decision variables in parietal cortex. Nature. 1999;400:233–238. doi: 10.1038/22268.

- Prelec D, Loewenstein G. Decision-Making Over Time And Under Uncertainty - A Common Approach. Management Science. 1991;37:770–786.

- Preuschoff K, Quartz SR, Bossaerts P. Human insula activation reflects risk prediction errors as well as risk. J Neurosci. 2008;28:2745–2752. doi: 10.1523/JNEUROSCI.4286-07.2008.

- Rachlin H, Brown J, Cross D. Discounting in judgments of delay and probability. Journal of Behavioral Decision Making. 2000;13:145–159.

- Rachlin H, Logue AW, Gibbon J, Frankel M. Cognition and Behavior in Studies of Choice. Psychological Review. 1986;93:33–45.

- Rachlin H, Raineri A, Cross D. Subjective Probability And Delay. Journal of the Experimental Analysis of Behavior. 1991;55:233–244. doi: 10.1901/jeab.1991.55-233.

- Raichle ME, MacLeod AM, Snyder AZ, Powers WJ, Gusnard DA, Shulman GL. A default mode of brain function. Proceedings of the National Academy of Sciences. 2001;98:676–682. doi: 10.1073/pnas.98.2.676.

- Reynolds B, Karraker K, Horn K, Richards JB. Delay and probability discounting as related to different stages of adolescent smoking and non-smoking. Behavioural Processes. 2003;64:333–344. doi: 10.1016/s0376-6357(03)00168-2.

- Reynolds B, Richards JB, Horn K, Karraker K. Delay discounting and probability discounting as related to cigarette smoking status in adults. Behavioural Processes. 2004;65:35–42. doi: 10.1016/s0376-6357(03)00109-8.

- Richards JB, Zhang L, Mitchell SH, de Wit H. Delay or probability discounting in a model of impulsive behavior: Effect of alcohol. Journal of the Experimental Analysis of Behavior. 1999;71:121–143. doi: 10.1901/jeab.1999.71-121.

- Rogers RD, Owen AM, Middleton HC, Williams EJ, Pickard JD, Sahakian BJ, Robbins TW. Choosing between small, likely rewards and large, unlikely rewards activates inferior and orbital prefrontal cortex. Journal of Neuroscience. 1999;19:9029–9038. doi: 10.1523/JNEUROSCI.19-20-09029.1999.

- Rotter JB. Social Learning and Clinical Psychology. Englewood Cliffs, NJ: Prentice-Hall; 1954.

- Smith SM, Beckmann CF, Ramnani N, Woolrich MW, Bannister PR, Jenkinson M, Matthews PM, McGonigle DJ. Variability in fMRI: A re-examination of inter-session differences. Human Brain Mapping. 2005;24:248–257. doi: 10.1002/hbm.20080.

- Smith SM, Jenkinson M, Woolrich MW, Beckmann CF, Behrens TEJ, Johansen-Berg H, Bannister PR, De Luca M, Drobnjak I, Flitney DE, Niazy RK, Saunders J, Vickers J, Zhang Y, De Stefano N, Brady JM, Matthews PM. Advances in functional and structural MR image analysis and implementation as FSL. NeuroImage. 2004;23:S208–S219. doi: 10.1016/j.neuroimage.2004.07.051.

- Szpunar KK, Watson JM, McDermott KB. Neural substrates of envisioning the future. Proceedings of the National Academy of Sciences. 2007;104:642–647. doi: 10.1073/pnas.0610082104.

- Tanaka SC, Doya K, Okada G, Ueda K, Okamoto Y, Yamawaki S. Prediction of immediate and future rewards differentially recruits cortico-basal ganglia loops. Nature Neuroscience. 2004;7:887–893. doi: 10.1038/nn1279.

- Thaler R. Some Empirical-Evidence On Dynamic Inconsistency. Economics Letters. 1981;8:201–207.

- Venkatraman V, Ansari D, Chee MWL. Neural correlates of symbolic and non-symbolic arithmetic. Neuropsychologia. 2005;43:744–753. doi: 10.1016/j.neuropsychologia.2004.08.005.

- Weber BJ, Chapman GB. Playing for peanuts: Why is risk seeking more common for low-stakes gambles? Organizational Behavior and Human Decision Processes. 2005;97:31–46.

- Wittmann M, Leland DS, Paulus MP. Time and decision making: differential contribution of the posterior insular cortex and the striatum during a delay discounting task. Exp Brain Res. 2007;179:643–653. doi: 10.1007/s00221-006-0822-y.