Abstract

A challenge in pharmacy education is to document student learning and retention. With the release of Standards 2007 by the Accreditation Council for Pharmacy Education, the pressure to do so has increased for colleges and schools of pharmacy, which must meet those standards. One possible response to this challenge is to administer progress examinations that assess students' knowledge base at specified points in the curriculum. The University of Houston College of Pharmacy has developed an annual comprehensive assessment to evaluate student learning and retention at each level of the didactic portion of the curriculum. The development, utilization, and results of these MileMarker Assessments are described.

Keywords: progress examinations, assessment, student learning and retention, MileMarker

INTRODUCTION

It is becoming increasingly common, and necessary, for schools and colleges to develop methods and processes for assessing their curricula and programs. In many cases, this process is driven by their respective accrediting agencies. This is especially true in pharmacy, where the Accreditation Council for Pharmacy Education (ACPE) requires the use of assessments in all aspects of a self-study. Although assessment tools can be developed to evaluate courses, programs, or student and employer satisfaction, perhaps the major impetus for educators is to determine whether students are actually learning and retaining information.

Methods that can be used to assess student learning fall into 2 general categories: formative and summative assessment.1 Direct evidence of student learning can be provided by portfolios, capstone projects, standardized tests, course-embedded activities, and in-class examinations.2 Indirect evidence can be obtained from student focus groups; employer, student, or alumni surveys; and data on career success after graduation (position, admission to graduate programs, job placement). A combination of methods is likely to yield a more comprehensive understanding of how successfully a curriculum or program facilitates student learning.2

Formative assessments have minimal impact on grades. They provide students with ongoing feedback on their performance, a means to practice what is being learned, and a way to gauge their progress throughout the academic year, and they give educators a means of informing students of their progress throughout the semester.1,3 In contrast, summative assessments are high stakes and provide a means of judging student performance and assigning a grade at the end of a designated period. Summative assessments provide the information necessary to determine a student's grade point average, success in a course, and preparedness to continue into subsequent courses and, ultimately, to award a degree indicating his/her readiness to enter a chosen career path.3

Medical schools use a well-defined process for assessing the competencies of their students at several stages in their academic careers. In pharmacy, the concept of an assessment to determine a student's preparedness to progress into the experiential portion of the curriculum or to enter the profession is not new. However, such assessment is only now becoming an important national initiative, as indicated by discussions regarding progress examinations.4 In addition, ACPE Standards 2007, Guideline 15.1, recommends that schools incorporate “… periodic, psychometrically sound, comprehensive, knowledge-based and performance-based formative and summative assessments…”5 Attempts to develop examinations for placing students into experiential courses or for evaluating general competency have not been widespread and have met with varying success.6,7 In 2000, only 19.6% of 46 colleges and schools were administering cumulative examinations, and 5 planned to implement such a process.8 Another report described interest in assessing students as they progress through the pharmacy curriculum.9 At that institution, an assessment was administered in each of the 4 years of the curriculum, and the number of questions increased with each subsequent assessment to reflect the knowledge students had amassed as they completed each year of their academic training.9

As the assessment program at the University of Houston College of Pharmacy evolved, we needed to determine whether our students actually acquired and retained knowledge that could be applied in subsequent courses and advanced pharmacy practice experiences (APPEs). Students often perform well on classroom examinations to earn a grade, but it is not uncommon for them to forget the information within a relatively short period. When our students were retested on material from a recently completed semester, their scores provided little evidence that they had retained the newly acquired material. Thus, we found it essential to emphasize to students that learning is a continuous process in which new material builds upon a solid base of previously learned material.

To address the dynamic changes in the profession of pharmacy, the University of Houston College of Pharmacy has continually endeavored to improve pharmacy education through ongoing student and curricular evaluation. The purpose of our assessment efforts is to ensure that our students receive the best education possible and are well prepared to enter the profession upon graduation. As one part of this ongoing process, the College developed and implemented MileMarker Assessments to assess student learning and retention. MileMarker Assessments began as a pilot project in 1997, and the MileMarker Assessment process was approved by the College of Pharmacy faculty in 1999. The first MileMarker Assessment tested only retention of knowledge from the previous fall semester and was given at the beginning of a spring semester without advance notice to the students. The results were dismal, providing further evidence that information learned in a semester is rapidly lost. The MileMarker Assessments took a number of forms and went through several revisions before reaching their current form, which was first administered in 2000. The purpose of this manuscript is to describe the development, utilization, and success of annual comprehensive assessments of learning and retention at the University of Houston College of Pharmacy.

IMPLEMENTATION OF THE MILEMARKER ASSESSMENTS

To assess student learning and retention of information, we developed what we call the MileMarker Assessments. These assessments were designed with the following objectives in mind:

(1) To obtain quantitative assessment data on student learning and retention of knowledge.

(2) To encourage review of newly acquired knowledge and instill lifelong learning practices.

(3) To determine the students' knowledge base as a preparation for subsequent courses and advanced pharmacy practice experiences.

(4) To demonstrate the interrelationship of material from different courses and disciplines, from basic science to clinical application.

(5) To serve as an objective measure of the success of the curriculum and the students.

The team appointed to develop the assessments and oversee the process included preceptors, practitioners, and faculty members. Additional faculty members were charged with writing cases relevant to the content of the courses. After review, the cases were distributed to all faculty members for input and suggestions.

MileMarker Assessments are cumulative and comprehensive, using a case-based format with 200 single-answer multiple-choice questions. The case-based questions are submitted by the faculty members assigned to teach the relevant material, and the number of questions drawn from each course is proportional to the number of credit hours devoted to that course. The MileMarker team and faculty members with expertise in each subject area reviewed and rated each question using a technique developed by Angoff.10 The experts were asked to predict what percentage of minimally competent students (C students) would answer a specific question correctly. These ratings were averaged to give each question its Angoff value. The team then calculated the mean Angoff value of all questions selected for the assessment, and this mean set the passing score for each MileMarker Assessment.
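The two computations described above, allocating questions by credit hours and deriving a passing score from Angoff ratings, can be sketched in Python. This is a minimal illustration, not the College's actual procedure: the course names, credit hours, and judge ratings are hypothetical, and the largest-remainder rounding used to turn proportional shares into whole question counts is an assumption, since the text does not say how fractions are handled.

```python
from __future__ import annotations

from math import floor
from statistics import mean


def allocate_questions(credit_hours: dict[str, float], total: int = 200) -> dict[str, int]:
    """Split `total` questions across courses in proportion to credit hours.

    Fractional shares are resolved with the largest-remainder method
    (an assumption; the source does not state a rounding rule).
    """
    hours = sum(credit_hours.values())
    quotas = {course: total * h / hours for course, h in credit_hours.items()}
    alloc = {course: floor(q) for course, q in quotas.items()}
    leftover = total - sum(alloc.values())
    # Hand the remaining questions to the courses with the largest fractional parts.
    by_remainder = sorted(quotas, key=lambda course: quotas[course] - alloc[course], reverse=True)
    for course in by_remainder[:leftover]:
        alloc[course] += 1
    return alloc


def angoff_cut_score(ratings: list[list[float]]) -> float:
    """Passing score (percent) from Angoff ratings.

    ratings[i] holds each judge's estimate (0-100) of the percentage of
    minimally competent students who would answer item i correctly.
    """
    item_values = [mean(judges) for judges in ratings]  # per-question Angoff value
    return mean(item_values)  # mean over all questions on the assessment


# Hypothetical inputs for illustration only.
hours = {"Course A": 6, "Course B": 4, "Course C": 3, "Course D": 2}
print(allocate_questions(hours))
cut = angoff_cut_score([[70, 60, 65], [80, 75, 85], [45, 50, 55]])
print(f"passing score: {cut:.1f}% ({cut / 100 * 200:.0f} of 200 items)")
```

With these hypothetical inputs, 200 questions spread over 15 credit hours yield 80, 53, 40, and 27 questions, and 3 items with mean ratings of 65, 80, and 50 give a passing score of 65%, or 130 of 200 items.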

Each year, faculty members are asked to review the questions for accuracy, applicability, and relevance, and an item analysis of the results is provided as additional information. This review may lead to a question being refined (stem or foils) or replaced by one deemed more suitable. The Angoff process is repeated for amended or new questions. Each year, faculty members also provide new questions to update the assessment or to add to the MileMarker question bank.
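The text does not specify which item statistics the faculty receive. Two common choices in classical test theory are the difficulty index (the proportion of students answering an item correctly) and a discrimination index such as the corrected item-total (point-biserial) correlation. The sketch below computes both under that assumption, using a hypothetical 0/1 response matrix.

```python
from __future__ import annotations

from math import sqrt


def pearson(x: list[float], y: list[float]) -> float:
    """Pearson correlation; with a 0/1 item vector this is the point-biserial r."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        return 0.0  # undefined when all scores are identical; report 0 here
    return sxy / sqrt(sxx * syy)


def item_analysis(responses: list[list[int]]) -> list[tuple[float, float]]:
    """Return (difficulty, discrimination) for each item.

    responses[s][i] is 1 if student s answered item i correctly, else 0.
    """
    n_items = len(responses[0])
    totals = [sum(row) for row in responses]
    results = []
    for i in range(n_items):
        item = [row[i] for row in responses]
        difficulty = sum(item) / len(item)  # proportion answering correctly
        # Corrected item-total: correlate with the total of the *other* items.
        rest = [t - x for t, x in zip(totals, item)]
        results.append((difficulty, pearson(item, rest)))
    return results


# Hypothetical responses: 4 students x 3 items.
responses = [
    [1, 1, 1],
    [1, 1, 0],
    [1, 0, 0],
    [0, 0, 0],
]
for i, (p, r) in enumerate(item_analysis(responses), start=1):
    print(f"item {i}: difficulty={p:.2f}, discrimination={r:.2f}")
```

An item with a very high or very low difficulty index, or a discrimination near zero or negative, would be a natural candidate for the refinement or replacement described above.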

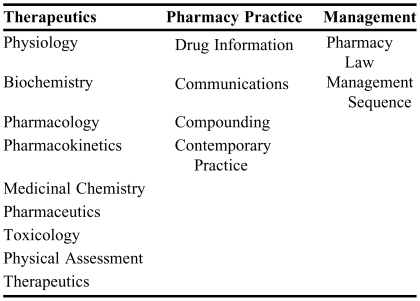

Beginning in 2000, 3 MileMarker Assessments have been administered to each pharmacy class over the first 3 years (P1, P2, and P3) of the curriculum, which constitute the didactic portion of the pharmacy program. MileMarkers are designed to test specific outcomes expected of students at various stages in the curriculum. Focus areas of each MileMarker Assessment include therapeutics, pharmacy practice, and management. Table 1 provides the distribution of material in each category on the MileMarker Assessment instruments.

Table 1.

Distribution of Material on the MileMarker Assessments

MileMarkers I and II, which are both formative and summative, are administered during the first 2 days of the fall semester of years 2 and 3, respectively. MileMarker I covers year 1 material. Although MileMarker II emphasizes P2 material, approximately 30% of its questions relate to P1 material. Credit awarded to students for passing MileMarker I and/or II counts toward the overall passing score for MileMarker IIIa. Performance on these assessments does not affect progression in the program. However, students who fail MileMarker I and/or II (performing below minimum competency) must complete an appropriate remediation assignment based on their identified area(s) of weakness, and they do not receive points toward MileMarker III. Students receive individual score reports that detail their overall performance as well as their scores in each subject area, informing them of their strengths and weaknesses.

Students are expected to pass MileMarker III, which is summative and given near the end of the third didactic year. While third-year material is emphasized, P1 and P2 material is also included. The passing score is determined through the Angoff procedure. Students who fail to meet or exceed this minimum standard must retake the entire assessment (MileMarker IIIb) before the start of experiential rotations. Students who fail to meet or exceed the predetermined competency score on MileMarker IIIb do not progress into their APPEs until they are successful; additional opportunities to take MileMarker III are provided at specified intervals. Students taking MileMarker III also receive individualized, detailed score reports.

STUDENT PERFORMANCE ON THE MILEMARKERS

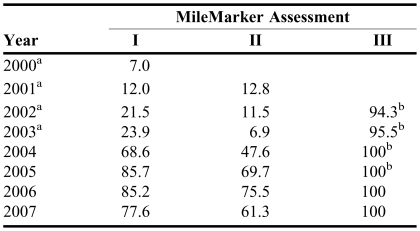

When the current format was first approved by the College faculty, only MileMarker III was summative; it determined progression into the experiential year of the curriculum. MileMarkers I and II were to be strictly formative. As a result, student performance on the first 2 MileMarker Assessments was low, as indicated in Table 2. This was attributed to the absence of any reward or penalty tied to the outcome, even though the College recognized the students with the best performance with gifts of useful reference books. Many students openly admitted that they made no effort to prepare for the assessment because of the lack of incentives. In response to this poor performance on MileMarkers I and II, the faculty voted in 2004 to add rewards (credit toward MileMarker IIIa) and punishment (a remediation requirement) to the process, as described earlier. Since MileMarkers I and II were made summative with these rewards and punishment, pass rates have increased, and the improvement in performance has been statistically significant (p < 0.01) for both MileMarker I and II (Table 2). Success has been much greater on MileMarker III, with pass rates at or near 100%, which can be attributed to MileMarker III always having been high stakes. In the first 2 years that MileMarker III was offered, 5 students were held back because of their performance on the assessment; all 5 passed on their third attempt after being held back for 1 APPE. Over the past 4 years, the pass rate has been 100%, and for the last 2 years all students have passed on their first attempt.

Table 2.

Pass Rates on the MileMarker Assessments, %

Prior to points/remediation

Postincentive performance, t-test p < 0.01 for MileMarker I and II

Pass rate after 2 attempts

DISCUSSION

Acceptance by all stakeholders was, and still is, essential for the success of our MileMarker Assessments. Faculty buy-in and engagement were particularly critical. Faculty members are asked to participate in all aspects of developing, writing, and validating the instrument, and results are shared with the faculty in a timely manner. As a result, faculty members feel ownership of the process and its outcomes.

Acceptance by students is also essential. When the MileMarker Assessments were first introduced, there was considerable resistance from students, much of it caused by perceived inequities in the process: senior students were not required to take the MileMarkers, while new students were required to take all 3, beginning in the fall of their second year. Over time, however, the MileMarkers have become an accepted and expected form of assessment alongside traditional course examinations and skills demonstrations.

The results of the MileMarker Assessments appear to corroborate investigations showing that the threat of punishment has a stronger influence on behavior than rewards.11,12 The greatest improvement in performance on MileMarkers I and II occurred once remediation became a possible consequence, in contrast to when the only possibility was receiving an award or credit toward MileMarker III. Similarly, MileMarker III, which has always been summative, has been a strong motivating force, and success on this assessment has been high as a result.

Our MileMarker Assessments provide the College with objective data on student learning and retention. The results have also provided preliminary data that corroborate findings from other College assessment practices. Each semester, students are asked to rate their level of confidence in response to course proficiency statements. Students who felt confident about an area also did well in that area on the course's final examination, and a majority also performed well in the same area on the MileMarker. Conversely, areas in which students expressed low confidence correlated with low performance on both the final examination and the MileMarker. Thus, the quantitative MileMarker data complement the qualitative data obtained from student feedback. This observation is encouraging and could lead to proactive interventions for students with identified weaknesses.

Developing the MileMarker Assessments has provided new insight into how our curriculum is taught. The assessments have brought the goals and objectives of the courses and curriculum together with actual teaching and examination, and the faculty members involved in the ongoing process have developed a broader view of the educational process beyond their individual courses. The development and implementation of the MileMarker Assessments was, and still is, time consuming and not without problems. Overall, however, our experience has been informative, and the MileMarker Assessments have been successful in achieving the objectives for which they were designed.

ACKNOWLEDGEMENTS

The author would like to thank Thomas Lemke, PhD, for his valuable input regarding the MileMarkers as well as assessment in general. Dr. Lemke spearheaded the development of the MileMarker Assessments at the University of Houston College of Pharmacy.

REFERENCES

- 1. Abate MA, Stamatakis MK, Haggett RR. Excellence in curricular development and assessment. Am J Pharm Educ. 2003;67:Article 89.

- 2. Kirschenbaum HL, Brown ME, Kalis MM. Programmatic curricular outcomes assessment at colleges and schools of pharmacy in the United States and Puerto Rico. Am J Pharm Educ. 2006;70:Article 8. doi:10.5688/aj700108.

- 3. Miller AH, Imrie BW, Cox K. Student Assessment in Higher Education. London: Kogan Page Ltd; 1998. pp. 32–6.

- 4. Plaza CM. Progress Examinations in Pharmacy Education. Am J Pharm Educ. 2007;71:Article 66. doi:10.5688/aj710466.

- 5. Accreditation Council for Pharmacy Education. Accreditation Standards and Guidelines. http://www.acpe-accredit.org/standards/default.asp. Accessed December 14, 2007.

- 6. Gehres RW, Mergener MA, Sarnoff D. Comprehensive placement examinations for sequencing pharmacy students into experiential courses and for determining appropriate remediation. Am J Pharm Educ. 1989;53:16–19.

- 7. Pray WS, Popovich NG. The development of a standardized competency examination for Doctor of Pharmacy students. Am J Pharm Educ. 1985;49:1–9.

- 8. Ryan GJ, Nykamp D. Use of cumulative examinations at US schools of pharmacy. Am J Pharm Educ. 2000;64:409–12.

- 9. Supernaw RB, Mehvar R. Methodology for the assessment of competence and the definition of deficiencies of students in all levels of the curriculum. Am J Pharm Educ. 2002;66:1–4.

- 10. Angoff WH. Scales, norms, and equivalent scores. In: Thorndike RL, editor. Educational Measurement. Washington, DC: American Council on Education; 1971. pp. 514–5.

- 11. Sansgiry SS, Chanda S, Lemke TL, Szilagyi JE. Effect of incentives on student performance on Milemarker examinations. Am J Pharm Educ. 2006;70:Article 103. doi:10.5688/aj7005103.

- 12. Oliver P. Rewards and punishments as selective incentives for collective action: theoretical investigations. Am J Sociol. 1980;85:1356–75.