Abstract

Spoken word recognition shows gradient sensitivity to within-category voice onset time (VOT), as predicted by several current models of spoken word recognition, including TRACE (McClelland & Elman, Cognitive Psychology, 1986). It remains unclear, however, whether this sensitivity is short-lived or whether it persists over multiple syllables. VOT continua were synthesized for pairs of words like barricade and parakeet, which differ in the voicing of their initial phoneme but otherwise overlap for at least four phonemes, creating an opportunity for “lexical garden-paths” when listeners encounter the phonemic information consistent with only one member of the pair. Simulations established that phoneme-level inhibition in TRACE eliminates sensitivity to VOT too rapidly to influence recovery. However, in two Visual World experiments, look-contingent and response-contingent analyses demonstrated effects of word-initial VOT on lexical garden-path recovery. These results are inconsistent with inhibition at the phoneme level and support models of spoken word recognition in which sub-phonetic detail is preserved throughout the processing system.

Keywords: Spoken Word Recognition, Speech Perception, Gradiency, Eye Movements, Lexical ambiguity

Speech perception has been classically framed as a problem of overcoming variability in the signal to recover underlying linguistic categories, such as features, phonemes and words. This variability arises because the signal unfolds as a transient series of acoustic events created by partially overlapping articulatory gestures. These gestures are conditioned by the segment currently being uttered and by the properties of preceding and upcoming segments, and these coarticulatory processes impose significant variability on the signal. Even multiple utterances of the same word produced by a single speaker in a consistent context show significant variability (Newman, Clouse, & Burnham, 2001). Therefore, different tokens of the same sound and the same word are likely to vary along a number of different dimensions.

While acoustic cues are variable, listeners must ultimately make a more-or-less discrete decision about word identity. Although early work suggested that within-category variation was lost during categorization, especially for consonants (Liberman, Harris, Hoffman & Griffith, 1957; Liberman, Harris, Kinney & Lane, 1961; Ferrero, Pelamatti & Vagges, 1982; Kopp, 1969; Larkey, Wald & Strange, 1978), more recent research demonstrates that spoken word recognition is exquisitely sensitive to sub-phonetic variation. For example, listeners use segmental duration to help distinguish between a monosyllabic word, such as ham, and a potential carrier word, such as hamster (Davis, Gaskell & Marslen-Wilson, 2002; Gow & Gordon, 1995; Salverda, Dahan & McQueen, 2003; Salverda, Dahan, Tanenhaus, Crosswhite, Masharov & McDonough, 2007). Misleading coarticulatory information in vowels delays recognition, especially when the misleading information is temporarily consistent with another lexical candidate (Marslen-Wilson & Warren, 1994; McQueen, Norris & Cutler, 1999; Dahan, Magnuson, Tanenhaus & Hogan, 2001b; Whalen, 1991). In addition, allophonic variation in /t/ can increase or decrease lexical activation (McLennan, Luce & Charles-Luce, 2003; Connine, 2004).

Some of the most striking evidence for sensitivity to sub-phonetic variation comes from studies examining within-category differences along lexically contrastive dimensions that were once believed to be perceived categorically, such as Voice Onset Time (VOT). VOT is defined as the time between the release of the oral closure (e.g., at the lips for /b/ and /p/) and the onset of glottal pulsing from the vibrating vocal folds. It is the strongest cue for distinguishing voiced plosives like /b/, /d/, and /g/ from voiceless plosives like /p/, /t/ and /k/: VOT is shorter for voiced than for voiceless sounds. Considerable variation in VOT is created by the surrounding context. Factors such as prosodic strength (Fougeron & Keating, 1997), speaking rate (Miller, Green & Reeves, 1986), and place of articulation (Lisker & Abramson, 1964) all add variation to VOT. It also varies systematically between speakers (Allen, Miller & De Steno, 2003) and as a function of whether the segment arose from a speech error (Goldrick & Blumstein, 2006). This variability means that for a given segment and VOT there is some degree of uncertainty about the voicing category of that segment.

In a seminal study, Andruski, Blumstein and Burton (1994) assessed cross-modal lexical priming as listeners heard fully voiceless targets or stimuli with 1/3 or 2/3 of the prototypical VOT value. Importantly, both the 1/3- and 2/3-voiced conditions represented within-category variants (reduced VOTs) that were nevertheless categorized reliably as tokens of the same voiceless phoneme. Andruski et al. found reduced priming in the 2/3-voiced condition (compared to the fully voiceless condition), demonstrating that within-category variation in the signal can affect online word recognition. Utman, Blumstein and Burton (2000) replicated these findings by showing greater priming for competitor words (e.g., dime, after hearing time). In several eye-tracking studies, McMurray and colleagues extended the Andruski et al. (1994) results by demonstrating that activation of target words and their competitors shows gradient sensitivity to very small, within-category differences in VOT (McMurray, Tanenhaus & Aslin, 2002; McMurray, Aslin, Tanenhaus, Spivey & Subik, in press). Finally, a recent imaging study by Blumstein and colleagues reported activation to within-category differences in VOT in cortical areas believed to be sensitive to early speech processing (Blumstein, Myers & Rissman, 2005).

The studies reviewed above clearly establish that word recognition shows sensitivity to fine-grained sub-phonetic detail. However, they do not establish whether within-category detail is short-lived or maintained for longer durations. If the spoken word recognition system maintains within-category detail, that detail could be useful for interpreting and integrating later-arriving input. However, many models of speech processing, including models of phonetic and phonemic categories based on attractor dynamics (e.g., McClelland & Elman, 1986; Kuhl, 1991; McMurray & Spivey, 1999; McMurray, Horst, Toscano & Samuelson, in press; Damper & Harnad, 2000), predict that initial gradient sensitivity to within-category detail is rapidly lost as the system gravitates toward an attractor state and a categorical representation of the phoneme. In simulations reported after Experiment 1, we show that, under most circumstances, the influential TRACE model (McClelland & Elman, 1986) makes just this prediction.

The experiments reported here evaluate the time-course of sensitivity to fine-grained within-category differences in VOT by creating VOT continua for words such as barricade and parakeet ([bεɹəkeɪd] and [pεɹəkit]). These words differ in initial voicing and then overlap for multiple segments before the point of phonemic disambiguation (the vowel in the final syllable). We used these stimuli to induce lexical garden-paths in which the final phonetic material is inconsistent with the preferred interpretation based on the initial segment (i.e., the nonwords barakeet and parricade). If a continuous representation of VOT is available at the point-of-disambiguation, then recovery should be easier when the VOT is closer to the category boundary, because the evidence for the preferred interpretation of the initial phoneme would be weaker than when the VOT is further from the boundary. If gradient sensitivity is short-lived, such that the representation of the initial phoneme is more categorical, then within-category differences in VOT should have minimal effects on garden-path recovery.

The empirical literature does not establish whether sensitivity to sub-phonetic detail is retained across multiple phonetic segments or decays quickly, as predicted by attractor models. To the best of our knowledge, only a handful of studies have examined the time course of sub-phonetic detail. Andruski et al. (1994) found that sub-phonetic differences as assessed in a priming task decayed within 250 ms. Using similar methods, Utman et al. (2000) found longer-lasting effects (at an ISI of 250 ms). However, both studies only found within-category sensitivity for a single token near the boundary, so it is unclear how long a gradient representation within the category could be maintained. McMurray, Tanenhaus, Aslin and Spivey (2003) found evidence for sensitivity in visual fixations one second after word onset, but these effects could be due to fixations that were programmed in response to input from early in processing. All of these studies examined such effects only with single-syllable minimal-pair words in a decontextualized presentation. None of them examined whether this gradiency is preserved in a way that is functionally available to further word recognition processes.

Work by Luce and Cluff (1998) does suggest that the word recognition system might keep multiple options available for quite some time (which they characterize as a delayed commitment process). They demonstrated that offset-embedded words (e.g., lock in hemlock) are active well after the target word (hemlock) is unambiguously recognized, suggesting that the system can maintain alternative interpretations after they have been ruled out. However, this study did not examine representations below the lexical level. It is quite possible that sublexical representations (e.g., phonemes) settle on a fairly discrete representation (in accord with attractor models), while lexical commitments operate over a longer time course.

The most relevant studies were conducted by Connine and colleagues (Connine, 1987; Connine, Blasko & Hall, 1991). Connine et al. (1991) created ambiguity at a phonetic level that could be resolved by later sentential context. They embedded a dent/tent VOT continuum in sentences in which the subsequent context favored one interpretation over the other (e.g., “Because the d/tent on the pick-up was hard to find…” or “After the d/tent in the campground collapsed…”). Participants were instructed to identify the first phoneme of the target word as either /d/ or /t/. Results showed a small boundary shift in response to sentential context; contexts favoring a voiced interpretation yielded more /d/ responses than those favoring a voiceless interpretation. Connine et al. (1991) found effects of context when the disambiguating information was 0, 1 or 3 syllables away from the target, and even when it crossed a clause boundary, but not with a 6–8 syllable delay. They concluded that phonetic codes persist (and are available for integration with semantic material) for up to about a second and, more importantly, that the system is capable of deferring a sub-lexical commitment (or at least modifying one) when the signal is phonetically and semantically ambiguous. Converging evidence comes from an unpublished study by Samuel (personal communication), who reports that phoneme restoration can be affected by subsequent sentential context.

While the Connine et al. (1991) study shows that ambiguous phonetic material can be retained for several syllables, the effect of the subsequent sentential context was limited to VOTs near the category boundary. As we demonstrate in the simulations reported later, these are the only cases where TRACE successfully recovers from lexical garden-paths. Thus, it is possible that maintenance of phonetic detail is limited to phonetically ambiguous stimuli.

In sum, prior work makes a clear case for initial gradiency but is unclear about whether it persists (Andruski et al., 1994; Utman et al., 2000; McMurray et al., 2003). It demonstrates that lexical commitments are delayed (Luce & Cluff, 1998), but that they may be malleable only near the category boundary (Connine et al., 1991). All of this evidence is consistent with a model in which sub-phonetic detail is rapidly lost while lexical commitments are more graded.

Thus, the current studies examined whether sub-phonetic detail is rapidly lost, as predicted by TRACE, when the VOT is substantially further from the category boundary. We examined garden-path recovery for pairs of words such as barricade/parakeet and bassinet/passenger because they afforded sufficient time after the initial consonant to measure the maintenance of within-category information. The words in each pair begin with a bilabial or coronal stop that differs in voicing, but otherwise overlap for at least four phonemes. We created a synthetic VOT continuum, in 5 ms steps, that spanned the target word and the cross-voicing non-word (e.g., from barricade to parricade). Word recognition was assessed using the visual world paradigm (Cooper, 1974; Tanenhaus, Spivey-Knowlton, Eberhard & Sedivy, 1995). While their eye movements were monitored, participants heard the word and selected its depicted referent from a computer display that contained five pictures: the two competitors (e.g., a barricade and a parakeet), two unrelated distracters, and a large red “X”. We added the X to the standard four-picture display introduced by Allopenna, Magnuson and Tanenhaus (1998) to avoid forcing participants to choose among lexical alternatives when they might have heard a non-word mispronunciation (e.g., parricade or barakeet).

Our study was designed to answer two questions. First, are listeners able to quickly revise their lexical hypotheses when subsequent information is inconsistent with a strongly preferred lexical bias? Our simulations with TRACE predict that revision will be difficult or impossible, except for tokens near the VOT category boundary. Second, and most importantly, we assess whether a gradient representation of the initial segment affects the revision process. If information about the VOT of the first phoneme is available when subsequent information that is inconsistent with the preferred lexical hypothesis arrives, then the time to recover from the “lexical garden-path” will be a function of VOT. As VOT approaches the category boundary (from the misperceived, “garden-path” end of the continuum), the time to revise the initial (provisional) lexical commitment should decrease.

The strongest test comes from trials in which participants initially interpret the input as the competitor and then revise this provisional interpretation after the disambiguating information arrives. Look-contingent analyses allow us to isolate trials on which participants are substantially down this garden path: trials on which they are fixating the cross-category competitor (e.g., barricade when the auditory stimulus was b/parakeet) when the disambiguating information arrives (e.g., ...keet), on the assumption that the picture a participant is fixating reflects his or her lexical hypothesis at that moment. For those trials, we can then measure the time it takes to arrive at the target when (a) the next look is to the referent consistent with the last syllable (e.g., the parakeet) and (b) the participant selects that picture as the referent. If the system preserves gradient information about VOT, the time to launch this corrective saccade should be a function of the distance of the initial VOT from the category boundary.
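A minimal Python sketch of the look-contingent selection described above follows. It is an illustration under stated assumptions rather than the analysis code used here: the `Look` record and its region labels are hypothetical, the competitor look is identified at the POD plus an assumed 200 ms saccade-planning delay (borrowed from the pre-POD analysis reported under Results), and latency is measured from the POD.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Look:
    """One look: a saccade plus the ensuing fixation (times in ms from word onset)."""
    start_ms: float
    end_ms: float
    region: str  # hypothetical labels: "target", "competitor", "filler", "X"


def recovery_latency(looks: List[Look], pod_ms: float, clicked: str,
                     planning_ms: float = 200.0) -> Optional[float]:
    """Latency (ms after the POD) of the corrective look to the target, or None
    if the trial is not a garden-path trial meeting criteria (a) and (b).

    Assumes `looks` is sorted by start time.
    """
    t = pod_ms + planning_ms  # assumed earliest point at which the POD can drive a saccade
    idx = next((i for i, lk in enumerate(looks) if lk.start_ms <= t < lk.end_ms), None)
    # The listener must be fixating the cross-category competitor at time t.
    if idx is None or looks[idx].region != "competitor":
        return None
    # Criterion (b): the target picture was selected as the referent.
    if clicked != "target" or idx + 1 >= len(looks):
        return None
    # Criterion (a): the very next look goes to the target.
    nxt = looks[idx + 1]
    if nxt.region != "target":
        return None
    return nxt.start_ms - pod_ms
```

For a trial with looks to a filler (0–350 ms), the competitor (350–700 ms) and then the target (from 700 ms), a POD of 240 ms and a click on the target, the sketch returns a recovery latency of 460 ms; trials that do not start on the competitor are simply excluded.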

Experiment 1

Methods

Participants

Eighteen University of Rochester undergraduates served as participants in this experiment. All reported normal hearing and normal or corrected-to-normal vision. Informed consent was obtained in accordance with University and APA ethical guidelines. Testing was conducted in two sessions lasting approximately one hour and participants were compensated $10 for each session. Data from one participant who did not return for the second session were excluded.

Auditory Stimuli

Experimental materials consisted of ten pairs of phonemically similar words, each beginning with labial or coronal consonants (6 b/p pairs and 4 t/d pairs). Pairs were selected that differed in initial voicing of the first consonant, but were identical for the next 3–5 phonemes. Table 1 lists these pairs, including the amount of overlap in both number of phonemes and ms. Two 10-step (0–45 ms) VOT continua were created from each pair (one for each word).¹

Table 1.

Experimental items used in experiments 1 – 3. Point of disambiguation refers to the index of the phoneme at which the two words could be differentiated (assuming ambiguous initial voicing).

| Voiced | Voiceless | Point of Disambiguation (phonemes) | Point of Disambiguation (ms) |
|---|---|---|---|
| Bumpercar | Pumpernickel | 6 | 240 |
| Barricade | Parakeet | 6 | 270 |
| Bassinet | Passenger | 6 | 335 |
| Blanket | Plankton | 6 | 225 |
| Beachball | Peachpit | 5 | 280 |
| Billboard | Pillbox | 5 | 210 |
| Drain Pipes | Train Tracks | 6 | 285 |
| Dreadlocks | Treadmill | 5 | 170 |
| Delaware | Telephone | 5 | 225 |
| Delicatessen | Television | 5 | 165 |
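As a quick check on Table 1, the Python sketch below averages its two POD columns. Reading the phoneme column as the index of the first disambiguating phoneme, so that the number of phonemes heard before disambiguation is one less, follows the table caption but is our interpretation.

```python
from statistics import mean

# Rows of Table 1: (voiced word, voiceless word, POD index in phonemes, POD in ms)
TABLE_1 = [
    ("Bumpercar", "Pumpernickel", 6, 240),
    ("Barricade", "Parakeet", 6, 270),
    ("Bassinet", "Passenger", 6, 335),
    ("Blanket", "Plankton", 6, 225),
    ("Beachball", "Peachpit", 5, 280),
    ("Billboard", "Pillbox", 5, 210),
    ("Drain Pipes", "Train Tracks", 6, 285),
    ("Dreadlocks", "Treadmill", 5, 170),
    ("Delaware", "Telephone", 5, 225),
    ("Delicatessen", "Television", 5, 165),
]

mean_pod_ms = mean(row[3] for row in TABLE_1)
mean_pod_index = mean(row[2] for row in TABLE_1)
# If the POD is the index of the first disambiguating phoneme, the number of
# phonemes heard before disambiguation is one less (an interpretive assumption).
phonemes_before_pod = mean_pod_index - 1

print(f"mean POD: {mean_pod_ms} ms, index {mean_pod_index}, "
      f"{phonemes_before_pod} phonemes before the POD")
```

This prints a mean POD of 240.5 ms at a mean index of 5.5, i.e. 4.5 phonemes before disambiguation, in line with the 240 ms and 4.5 phonemes given in the stimulus description.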

VOT continua were synthesized with the KlattWorks (McMurray, in preparation) interface to the Klatt (1980) synthesizer, modeled on tokens of natural speech. Several recordings of each pair were made by an adult male speaker in a quiet room. From these recordings, we selected the tokens that best matched each other in speaking rate and voice quality and for which automated formant analysis yielded the best results. Pitch and formant tracks were then extracted automatically from the selected tokens using Praat (Boersma & Weenink, 2005) and used as the basis for the F0–F5 parameters of the synthesizer.

For each pair, synthesis was based on the voiced endpoint (e.g., barricade). Formant frequencies were based on the measurements made with Praat. The remaining parameters (voicing [AV], aspiration [AH], frication [AF] and formant bandwidths [b1–b6]) were selected to best match the spectrogram and envelope of the original recorded token. Several recorded tokens had non-zero VOTs (5–10 ms). For these, the VOT was set to 0 ms by adjusting the onset of voicing to be temporally coincident with the release burst.

After constructing a natural-sounding voiced token (e.g., barricade), we created the voiceless competitor (e.g., parakeet) in three steps, chosen to yield maximally natural synthetic speech that was completely identical across the overlapping segments within each pair. First, the parameters of the voiced token were copied. Second, the VOT of the copy was set to 45 ms by setting the amplitude of voicing (AV) to 0 and aspiration (AH) to 60 dB for the first 10 frames (45 ms). Third, parameters after the point of disambiguation (e.g., /eɪ/ in the final syllable of barricade) were modified so that the remainder of the word best matched the natural voiceless token, by comparing the spectrograms of the synthetic and natural versions. The stimuli are available from the first author.

Having synthesized the two tokens for each of the 10 pairs, we constructed twenty 10-step VOT continua (one from each token). To construct continua from the voiced words (e.g., barricade), the onset of voicing was cut back in 5 ms increments and replaced with 60 dB of aspiration. To construct continua from the voiceless words (e.g., parakeet), the onset of voicing was moved earlier in 5 ms increments, and the duration of aspiration was decreased accordingly. These procedures guaranteed that, for a given VOT, targets and competitors were parametrically and acoustically identical until after the point of disambiguation (POD: /eet/ vs /ade/). After synthesis, the POD was measured directly from the synthetic stimuli. The mean POD for the set was 240 ms, or 4.5 phonemes after word onset (Table 1). KlattWorks scripts for each of these continua are available on the web at [JML online archive].
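The cutback procedure for the voiced-endpoint continua can be sketched in Python as below. It operates on a hypothetical representation (two per-frame amplitude tracks, `AV` for voicing and `AH` for aspiration in dB, starting at the release burst), and the 5 ms frame duration is an assumption; this is not the KlattWorks script format, and the voiceless-endpoint continua, built by shortening aspiration, are not covered.

```python
from typing import Dict, List


def cutback_continuum(av: List[float], ah: List[float], frame_ms: float = 5.0,
                      n_steps: int = 10, step_ms: float = 5.0,
                      aspiration_db: float = 60.0) -> Dict[float, Dict[str, List[float]]]:
    """Build a VOT continuum from a 0 ms VOT (voiced) token.

    For each step, voicing is removed from the first VOT / frame_ms frames after
    the release burst and replaced with aspiration; later frames are untouched,
    so all steps are identical after the aspirated interval.
    """
    if len(av) != len(ah):
        raise ValueError("AV and AH tracks must have the same number of frames")
    continuum = {}
    for step in range(n_steps):
        vot = step * step_ms                 # 0, 5, ..., 45 ms with the defaults
        n_frames = round(vot / frame_ms)     # frames of cut-back voicing
        if n_frames > len(av):
            raise ValueError("VOT exceeds token duration")
        new_av, new_ah = list(av), list(ah)  # copy so the source token is unchanged
        for i in range(n_frames):
            new_av[i] = 0.0                  # no glottal pulsing before voicing onset
            new_ah[i] = aspiration_db        # aspiration fills the cut-back interval
        continuum[vot] = {"AV": new_av, "AH": new_ah}
    return continuum
```

Because every step is derived from the same parameter tracks, frames after the cut-back interval stay identical across the continuum, mirroring the identity constraint described in the text.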

In addition to these 20 VOT continua, 10 pairs of filler items were also synthesized (Table 2). Filler items began with continuants and fricatives so as to overlap minimally with the target continua, and the filler set roughly mirrored the distribution of voiced and voiceless labials and coronals in the target set (four /l/- and four /s/-initial items; six /r/- and six /f/-initial items). Filler items were not phonetically similar to each other and had minimal overlap. Filler items were synthesized in the same way as the target items, using automatically extracted pitch and formant frequencies and matching the synthetic spectrograms to the spectrograms of the recorded items.

Table 2.

Filler items used in experiment 2.

| /l/ | /r/ | /s/ | /f/ |
|---|---|---|---|
| Lemonade | Restaurant | Saxophone | Photograph |
| Limousine | Rabbit | Secretary | Fountain |
| Lobster | Reptile | Sunbeam | Factory |
| Lantern | Raspberry | Spiderweb | Farmyard |
| | Referee | | Fireplace |
| | Rectangle | | Footstep |

We assumed that participants would perceive some of the test stimuli as mispronounced, especially stimuli taken from the end of the VOT continuum opposite the word's own voicing (e.g., barricade with a VOT of 45 ms might be heard as the non-word parricade). To minimize the likelihood that mispronunciation would be a cue distinguishing the target/competitor pairs from the filler pairs, we created a mispronounced version of each filler item: /l/-initial items were “mispronounced” as /r/-initial (e.g., rimousine); /r/-initial items were mispronounced as /l/-initial (lestaurant); /s/-initial items were mispronounced as /f/-initial (faxophone); and /f/-initial items were mispronounced as /s/-initial (sotograph). Note that /l/ items never appeared with /r/ items (/l/ was paired with /s/), and /f/ items did not appear with /s/ items, so the mispronunciations did not introduce competition among the displayed alternatives due to phonetic similarity. During the course of the experiment, the correctly pronounced version of each filler item was heard on 75% of the filler trials, and the mispronounced version on the other 25%.

Visual Stimuli

Visual stimuli were 40 color drawings corresponding to the 20 target/competitor words (10 pairs) and the 20 filler items. For each item, several pictures were downloaded from a large commercial clipart database. Groups of 3–4 viewers selected the one picture judged most representative, easiest to identify, and least similar to the others in the complete set. In a few cases, images were edited to remove extraneous components or to alter colors.

Procedure

When participants arrived in the lab, informed consent was obtained and the instructions were given. An Eyelink II eye tracker was calibrated using the standard 9-point calibration procedure. The experiment was programmed using PsyScope (Cohen, MacWhinney, Flatt & Provost, 1993). On each trial, participants saw five pictures in a pentagonal formation on a 20” computer monitor. Each picture was equidistant from the center of the screen and was 250 x 250 pixels in size. While the five screen locations did not change, the assignment of pictures to these locations was randomized on each trial. The pictures corresponded to the target (e.g., barricade), the competitor (e.g., parakeet), and the two matching filler items (e.g., restaurant and photograph) that had been randomly selected (for that participant) to form a set of four. The fifth picture was a large red X that participants were instructed to select when they heard a mispronounced word.

Each trial began with a display of the pictures and a small blue circle in the center of the screen. After 750 ms the circle turned red, signaling the participant to click on it with the computer mouse. The red circle then disappeared and one of the auditory tokens was played. Participants clicked on a picture, which ended the trial. There was no time limit on the trials, but subjects typically responded in less than 2 s (M = 1601 ms, SD = 166.7 ms). Eye-movement analyses focused on the period shortly before and after the POD of the stimulus (M = 240 ms), well before the typical response, so very late mouse-click responses were unlikely to affect the eye-movement data.

Design

Experiment 1 used 10 target/competitor pairs, each composed of 10-step VOT continua (one per word). Pairs were designed so that either word could serve as the spoken target: on some trials the voiced word (barricade) was the target and the voiceless word (parakeet) was the competitor; on other trials the voiceless word (parakeet) was the target and the voiced word (barricade) was the competitor. With an equal number of filler trials, this yielded 10 (items) x 2 (voiced vs. voiceless target) x 10 (VOTs) x 2 (fillers) = 400 trials for a single repetition of the design. We estimated that 7 repetitions of each VOT would be needed for adequate statistical power, yielding a total of 2800 trials, far too many for a participant to complete. Thus, we adopted a Latin-square design in which each participant was randomly assigned 5 of the 10 item-sets. There were no constraints on this randomization (each subject’s 5 item-sets were chosen independently of the other subjects’), although the distribution was roughly equivalent across subjects². This led to 700 experimental trials (5 items x 2 target-types x 10 VOTs x 7 reps). We further reduced the number of fillers to slightly fewer than the number of experimental trials (632). This yielded 1332 total trials per participant, which were administered in two sessions, each lasting approximately one hour.
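The trial counts above follow from simple products of the design factors; the Python sketch below reproduces them. All numbers are taken from the text, and the variable names are ours.

```python
# Design factors taken from the text.
N_ITEMS = 10            # target/competitor pairs
N_TARGET_TYPES = 2      # voiced vs. voiceless target
N_VOTS = 10             # VOT steps (0-45 ms in 5 ms steps)
FILLER_MULTIPLIER = 2   # an equal number of filler trials
N_REPS = 7              # repetitions judged necessary for adequate power
ITEMS_PER_PARTICIPANT = 5
N_FILLER_TRIALS = 632   # as reported

one_repetition = N_ITEMS * N_TARGET_TYPES * N_VOTS * FILLER_MULTIPLIER
full_design = one_repetition * N_REPS
experimental_per_participant = ITEMS_PER_PARTICIPANT * N_TARGET_TYPES * N_VOTS * N_REPS
total_per_participant = experimental_per_participant + N_FILLER_TRIALS

print(one_repetition, full_design, experimental_per_participant, total_per_participant)
```

Running it prints `400 2800 700 1332`, matching the figures in the paragraph above.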

To maintain consistency with prior work (McMurray et al., 2002, in press), filler items were consistently paired with experimental items by initial consonant. If fillers had been selected at random on each trial, participants might have noticed a relationship between the voicing pairs (since, for example, barricade would consistently be presented with parakeet, but not with the other two items). Thus, sets of four items were randomly selected from each category and were paired consistently throughout the experiment. Each set (randomly determined for each participant) contained one continuant (/l/ or /r/) and one fricative (/s/ or /f/) filler. B/p pairs were paired with /r/- and /f/-initial fillers; d/t pairs were paired with /l/- and /s/-initial fillers. Pairs were randomly assigned, with the exception that filler items could not be semantically related to each other or to the target/competitor pairs.
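The pairing constraints above can be expressed as a short randomization routine. This Python sketch is hypothetical (the function names are ours, and the experiment itself ran in PsyScope): it relies on Table 2 containing exactly six /r/ and six /f/ fillers for the six b/p pairs and four /l/ and four /s/ fillers for the four d/t pairs, and it does not implement the semantic-relatedness check.

```python
import random
from typing import Dict, List, Tuple

Pair = Tuple[str, str]

BP_PAIRS: List[Pair] = [("bumpercar", "pumpernickel"), ("barricade", "parakeet"),
                        ("bassinet", "passenger"), ("blanket", "plankton"),
                        ("beachball", "peachpit"), ("billboard", "pillbox")]
DT_PAIRS: List[Pair] = [("drain pipes", "train tracks"), ("dreadlocks", "treadmill"),
                        ("delaware", "telephone"), ("delicatessen", "television")]
FILLERS: Dict[str, List[str]] = {
    "l": ["lemonade", "limousine", "lobster", "lantern"],
    "r": ["restaurant", "rabbit", "reptile", "raspberry", "referee", "rectangle"],
    "s": ["saxophone", "secretary", "sunbeam", "spiderweb"],
    "f": ["photograph", "fountain", "factory", "farmyard", "fireplace", "footstep"],
}


def assign_filler_sets(rng: random.Random) -> Dict[Pair, Tuple[str, str]]:
    """Give each target/competitor pair a fixed pair of fillers for one participant:
    b/p pairs get one /r/ and one /f/ filler; d/t pairs get one /l/ and one /s/ filler.
    The semantic-relatedness constraint from the text is NOT checked here."""
    shuffled = {k: rng.sample(v, len(v)) for k, v in FILLERS.items()}  # shuffled copies
    sets: Dict[Pair, Tuple[str, str]] = {}
    for pair, rf, ff in zip(BP_PAIRS, shuffled["r"], shuffled["f"]):
        sets[pair] = (rf, ff)
    for pair, lf, sf in zip(DT_PAIRS, shuffled["l"], shuffled["s"]):
        sets[pair] = (lf, sf)
    return sets


def choose_item_sets(rng: random.Random, k: int = 5) -> List[Pair]:
    """Unconstrained random choice of k of the 10 item-sets for one participant."""
    return rng.sample(BP_PAIRS + DT_PAIRS, k)
```

Each filler is used exactly once per participant, so a given filler always appears alongside the same target/competitor pair, which is the property the consistent pairing was meant to secure.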

Eye-movement Recording and Analysis

Eye-movements were recorded at a 250 Hz sampling rate using an SR Research Eyelink II eyetracker. This tracker uses the pupil and corneal reflection to determine eye position, and a set of infrared lights mounted on the corners of the computer monitor to compensate for head movements. Moment-by-moment eye-position coordinates were automatically parsed into saccades, fixations, and blinks using the conservative default settings. Each saccade and the subsequent fixation were then combined into a single “look”, which began at the onset of the saccade and ended at the offset of the ensuing fixation. All analyses reported here were conducted on the basis of these looks.
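Combining parsed events into looks can be sketched in Python as below. The `Event` and `GazeLook` records are hypothetical stand-ins for the tracker's parsed output, not the EyeLink data format. Two further choices are assumptions: a fixation with no preceding saccade (e.g., at trial onset) is treated as a look of its own, and a blink breaks the saccade-fixation pairing.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Event:
    kind: str                  # "saccade", "fixation", or "blink"
    start_ms: float
    end_ms: float
    x: Optional[float] = None  # gaze position, fixations only
    y: Optional[float] = None


@dataclass
class GazeLook:
    start_ms: float            # saccade onset (or fixation onset if no saccade preceded it)
    end_ms: float              # offset of the fixation
    x: Optional[float]
    y: Optional[float]


def events_to_looks(events: List[Event]) -> List[GazeLook]:
    """Merge each saccade with the fixation that follows it into one look."""
    looks: List[GazeLook] = []
    pending: Optional[Event] = None
    for ev in events:
        if ev.kind == "saccade":
            pending = ev
        elif ev.kind == "fixation":
            start = pending.start_ms if pending is not None else ev.start_ms
            looks.append(GazeLook(start, ev.end_ms, ev.x, ev.y))
            pending = None
        else:
            pending = None  # a blink breaks the saccade-fixation pairing (assumed)
    return looks
```

Counting the saccade as part of the look means a look begins when the eyes start moving toward a picture, so look onsets reflect the moment a new hypothesis begins to drive behavior.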

The Eyelink II system shows an inherent drift in its report of eye-gaze. To counter this, the drift correction procedure was run every 40 trials. In addition, the area-of-interest corresponding to each picture was expanded by 40 pixels to relax the criterion for what counted as a fixation. This did not result in any overlap between the areas-of-interest of the five pictures. Thus any look for which the fixation fell within this expanded area-of-interest was counted as a look to the corresponding picture.
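The expanded areas of interest can be sketched in Python as follows. The 250-pixel pictures and the 40-pixel expansion come from the text; the pentagon radius (360 px) and the 1600 x 1200 display are hypothetical values, chosen so that the expanded regions do not overlap, as reported above.

```python
import math
from itertools import combinations
from typing import List, Optional, Tuple

PICTURE_PX = 250     # picture size, from the text
AOI_PADDING_PX = 40  # AOI expansion, from the text
RADIUS_PX = 360      # hypothetical distance from screen center to each picture center

Box = Tuple[float, float, float, float]  # (left, top, right, bottom) in screen pixels


def pentagon_aois(cx: float, cy: float, radius: float = RADIUS_PX,
                  size: float = PICTURE_PX, pad: float = AOI_PADDING_PX) -> List[Box]:
    """Expanded AOIs for five pictures equidistant from the screen center,
    starting at the top and proceeding clockwise (screen y grows downward)."""
    half = size / 2 + pad
    boxes = []
    for k in range(5):
        theta = math.radians(-90 + 72 * k)
        x, y = cx + radius * math.cos(theta), cy + radius * math.sin(theta)
        boxes.append((x - half, y - half, x + half, y + half))
    return boxes


def boxes_overlap(a: Box, b: Box) -> bool:
    return a[0] < b[2] and b[0] < a[2] and a[1] < b[3] and b[1] < a[3]


def aoi_index(x: float, y: float, boxes: List[Box]) -> Optional[int]:
    """Index of the (expanded) AOI containing a fixation, or None."""
    for i, (left, top, right, bottom) in enumerate(boxes):
        if left <= x <= right and top <= y <= bottom:
            return i
    return None


# Hypothetical 1600 x 1200 display, centered at (800, 600).
aois = pentagon_aois(800, 600)
assert not any(boxes_overlap(a, b) for a, b in combinations(aois, 2))
```

A fixation that lands up to 40 pixels outside a picture's edge is still credited to that picture, while fixations near the center of the screen fall in no region.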

Results

Three separate sets of analyses were performed. The first confirmed that our stimuli produced initial gradient effects of VOT, replicating the pattern found in McMurray et al. (2002, in press); finding this pattern was a prerequisite for addressing questions about time-course. The second examined phoneme identification (mouse-click) responses to assess whether participants’ overt labeling showed an ability to recover from initial ambiguity. In particular, it asked whether participants clicked the target consistent with the end of the stimulus, or whether they generally stayed on the garden path and classified the stimuli as non-words (or as the competitor). The third analysis addressed our primary question: do differences in VOT systematically affect ambiguity resolution? This analysis examined trials in which participants’ initial fixation was directed to the competitor and measured the latency to fixate the target.

Eye movement evidence for initial gradiency

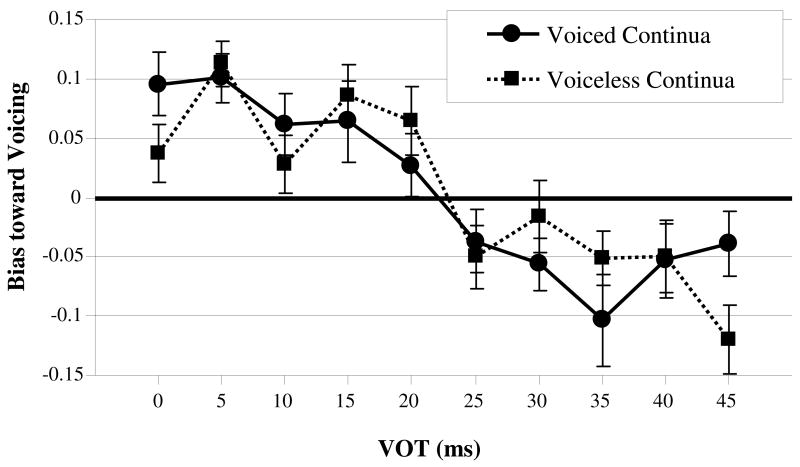

On each trial, we limited our analysis to the last fixation prior to the point of disambiguation (plus 200 ms to account for saccadic planning). From this segment of each trial, the proportion of fixations to the voiced and voiceless targets was computed for each participant at each VOT. This was then converted to a bias measure by subtracting the proportion of fixations to the voiceless target from the proportion of fixations to the voiced target. Thus, a value of 0 indicates that participants were equally likely to fixate both, positive values indicate a bias toward a voiced interpretation, and negative values indicate a bias toward a voiceless interpretation.
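The bias measure can be computed per participant as in the Python sketch below. Using all trials at a given VOT as the denominator of each proportion is an assumption; the text does not state whether trials with a final pre-POD fixation on a filler or the X were excluded.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def voicing_bias(trials: Iterable[Tuple[int, str]]) -> Dict[int, float]:
    """Bias = P(voiced) - P(voiceless) at each VOT, for one participant.

    Each trial is (vot_ms, fixated), where `fixated` names the picture under the
    last fixation before POD + 200 ms: "voiced", "voiceless", or anything else
    (a filler, the X, or no fixation). Proportions use all trials at that VOT
    as the denominator (an assumption).
    """
    counts = defaultdict(lambda: {"n": 0, "voiced": 0, "voiceless": 0})
    for vot, fixated in trials:
        c = counts[vot]
        c["n"] += 1
        if fixated in ("voiced", "voiceless"):
            c[fixated] += 1
    return {vot: (c["voiced"] - c["voiceless"]) / c["n"]
            for vot, c in sorted(counts.items())}
```

For example, two voiced, one voiceless and one filler fixation at 0 ms give a bias of 0.25, while two voiceless fixations at 45 ms give -1.0.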

Figure 1 shows that as VOT increased from 0 to 45 ms, participants were increasingly likely to commit to the voiceless interpretation, replicating the gradient effects of VOT reported by McMurray et al. (2002, in press). This did not appear to vary as a function of the lexical-endpoint of the continuum: the effect was the same for the barricade/parricade and the parakeet/barakeet continua. This pattern of results is consistent with the fact that these initial eye-movements were generated prior to the POD and well before the offset of the word.

Figure 1.

Degree of bias toward voiced or voiceless competitors prior to the point of disambiguation as a function of VOT in Experiment 1.

Statistical support for these patterns came from an ANOVA examining this bias measure as a function of VOT and lexical-endpoint (whether the lexical endpoint of the continuum was voiced, as in barricade, or voiceless, as in parakeet). We expected an effect of VOT but no effect of lexical-endpoint: because these eye-movements were launched prior to the point of disambiguation, listeners could not yet know which word they were hearing (see Table 3; results are referenced by row). There was a significant main effect of VOT (row 1), with more fixations directed to the voiceless target as VOT increased. This relationship took the form of a linear trend (row 2). Lexical-endpoint was not significant (row 3) and did not interact with VOT (row 4).

Table 3.

Statistical tests examining effect of VOT and target-type on the proportions of fixations to the competitor in Experiment 1.

| Row | Factor | Test | Df | F | P |
|---|---|---|---|---|---|
| 1 | VOT | F1 | 9, 144 | 16.9 | <0.0001 |
| | | F2 | 9, 81 | 6.5 | <0.0001 |
| | | minF’ | 9, 144 | 4.7 | 0.0001 |
| 2 | VOT (trend) | F1 | 1, 16 | 46.3 | <0.0001 |
| | | F2 | 1, 9 | 10.2 | 0.01 |
| | | minF’ | 1, 13 | 8.4 | 0.013 |
| 3 | Lexical-Endpoint | F1 | | <1 | |
| | | F2 | | <1 | |
| 4 | Endpoint x VOT | F1 | 9, 144 | 1.6 | >.1 |
| | | F2 | 9, 81 | 1.8 | .13 |
| | | minF’ | 9, 215 | <1 | |
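The minF’ entries in Table 3 combine each by-subjects F1 with the corresponding by-items F2. The Python sketch below uses the standard minF’ formula; the function name is ours. Recomputing from the rounded F values in the table reproduces the minF’ entries to within rounding (for row 1 the error df comes out near 143 rather than 144, a difference within the rounding of the reported F values).

```python
from typing import Tuple


def min_f_prime(f1: float, f1_error_df: float,
                f2: float, f2_error_df: float) -> Tuple[float, float]:
    """minF' from a by-subjects F1 and a by-items F2 for the same effect.

    Returns (minF', error df). The numerator df is the effect df shared by F1 and F2.
    """
    min_f = (f1 * f2) / (f1 + f2)
    error_df = (f1 + f2) ** 2 / (f1 ** 2 / f2_error_df + f2 ** 2 / f1_error_df)
    return min_f, error_df


# Row 2 of Table 3 (linear trend of VOT): F1(1, 16) = 46.3, F2(1, 9) = 10.2
f, df = min_f_prime(46.3, 16, 10.2, 9)
print(f"minF'(1, {df:.0f}) = {f:.1f}")  # minF'(1, 13) = 8.4
```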

While the trend analysis demonstrates that the linear (gradient) model provides a good description of the relationship between VOT and initial commitment, we cannot conclude from the trend alone that it is a better fit than other non-linear functions. Of greatest concern, of course, is the possibility of a categorical step function at the boundary. Additionally, even if a linear model is a better fit overall, it may still derive from an underlying categorical relationship if there is variability between participants in the location of the category boundary. Thus, we conducted an additional analysis to more firmly establish the gradiency of the initial commitment.

In this analysis, two models were compared to determine the best fit to the data (see Appendix B for details). In the first (linear) model, the relationship between increasing VOT and bias toward the voiceless interpretation was modeled as a linear function. In the second (categorical) model, this relationship was modeled as a step function approximated by a three-parameter logistic function. In this function the lower asymptote, the upper asymptote, and the cross-over point (category boundary) were free to vary, but the slope was fixed at a very steep value to approximate a step function. Both models were simple mixed effects models in which each participant's data were fit to the linear and logistic functions separately (to avoid issues of between-subject variability in category boundary). Thus, the linear model as a whole had separate slopes and intercepts for each subject, while the logistic model had separate upper asymptotes, lower asymptotes, and cross-over points for each subject.
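The comparison above can be sketched for a single participant as follows. This is a minimal illustration under stated assumptions, not the mixed-effects procedure of Appendix B: the line is fit by ordinary least squares, and the "categorical" model is a logistic whose lower asymptote, upper asymptote, and boundary are free while its steepness is fixed (the value of 10 per ms is our assumption; the text says only that the slope was fixed at a very steep value). Because a fixed steepness makes the model linear in the two asymptotes for any given boundary, the asymptotes are solved exactly and the boundary is found by grid search.

```python
import math


def _sigmoid(z):
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def fit_linear(vots, bias):
    """Ordinary least-squares line: bias = intercept + slope * vot (2 params)."""
    n = len(vots)
    mx = sum(vots) / n
    my = sum(bias) / n
    sxx = sum((x - mx) ** 2 for x in vots)
    sxy = sum((x - mx) * (y - my) for x, y in zip(vots, bias))
    slope = sxy / sxx
    intercept = my - slope * mx
    rss = sum((y - (intercept + slope * x)) ** 2 for x, y in zip(vots, bias))
    return {"slope": slope, "intercept": intercept, "rss": rss}


def fit_step(vots, bias, steepness=10.0, grid_step=0.5):
    """Near-step logistic (3 params): lower, upper, boundary; steepness fixed.

    For each candidate boundary the model is linear in (lower, upper), so
    those two are solved by least squares; the boundary is grid-searched.
    """
    best = None
    lo, hi = min(vots), max(vots)
    n_grid = int(round((hi - lo) / grid_step))
    for k in range(n_grid + 1):
        b = lo + k * grid_step
        s = [_sigmoid(steepness * (x - b)) for x in vots]  # weight on upper
        u = [1.0 - si for si in s]                          # weight on lower
        suu = sum(ui * ui for ui in u)
        svv = sum(si * si for si in s)
        suv = sum(ui * si for ui, si in zip(u, s))
        suy = sum(ui * y for ui, y in zip(u, bias))
        svy = sum(si * y for si, y in zip(s, bias))
        det = suu * svv - suv * suv
        if abs(det) < 1e-12:
            continue  # asymptotes not identifiable for this boundary
        lower = (suy * svv - svy * suv) / det
        upper = (svy * suu - suy * suv) / det
        rss = sum((y - (lower * ui + upper * si)) ** 2
                  for y, ui, si in zip(bias, u, s))
        if best is None or rss < best["rss"]:
            best = {"lower": lower, "upper": upper, "boundary": b, "rss": rss}
    return best


if __name__ == "__main__":
    vots = [0, 5, 10, 15, 20, 25, 30, 35, 40, 45]
    step_like = [0.1] * 5 + [0.9] * 5  # synthetic, not experimental data
    print(fit_linear(vots, step_like)["rss"], fit_step(vots, step_like)["rss"])
```

On synthetic step-shaped data the step model has the smaller residual error, and on a synthetic straight line the linear fit is exact. Residual sums of squares from fits like these are what a likelihood-based criterion such as BIC then penalizes for parameter count.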

The non-linear model has an advantage in principle because it has one extra free parameter for each subject. This makes it difficult to compare the models directly on goodness of fit. However, the Bayesian Information Criterion (BIC; Schwarz, 1978) was designed to facilitate this comparison (when both models are fit to the same data) by penalizing a goodness-of-fit measure (in this case, log-likelihood) according to the number of parameters and the sample size. The model with the lower BIC is the better fit.
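A minimal sketch of the BIC comparison, assuming independent Gaussian errors so that the maximized log-likelihood follows directly from the residual sum of squares (the exact likelihood used in Appendix B may differ). The function names and example numbers are hypothetical. Both models also estimate an error variance, so counting or omitting that parameter shifts both BICs equally and does not change which model wins.

```python
import math


def gaussian_log_likelihood(rss, n):
    """Maximized log-likelihood of n observations with i.i.d. normal errors,
    with the error variance set to its maximum-likelihood estimate rss / n."""
    sigma2 = rss / n
    return -0.5 * n * (math.log(2.0 * math.pi * sigma2) + 1.0)


def bic(log_likelihood, n_params, n):
    """Schwarz's BIC = k * ln(n) - 2 * ln(L); lower values indicate a better model."""
    return n_params * math.log(n) - 2.0 * log_likelihood


if __name__ == "__main__":
    # Hypothetical participant: 10 data points; the 3-parameter step model
    # has slightly smaller residual error than the 2-parameter line.
    n = 10
    bic_linear = bic(gaussian_log_likelihood(0.50, n), n_params=2, n=n)
    bic_step = bic(gaussian_log_likelihood(0.48, n), n_params=3, n=n)
    print(f"linear BIC = {bic_linear:.2f}, step BIC = {bic_step:.2f}")
```

In this made-up example the linear model has the lower BIC even though its residual error is slightly larger, because the extra parameter costs more than the small gain in fit.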

Both models were fit to a dataset consisting of the voicing-bias measure averaged at each VOT for each continuum and each subject. The two models accounted for similar, modest proportions of the variance (linear: 11.1%; logistic: 11.8%). However, the BIC measure strongly favored the linear model, yielding a BIC of 68.8 for the linear model and 180.2 for the categorical model. Moreover, an analysis of the BIC for individual subjects revealed that 15 of the 17 subjects showed lower BIC values for the linear than for the logistic model. Thus, we can safely rule out a non-linear (categorical) model as the underlying form of the function relating the initial voicing bias to VOT.

Mouse-click evidence for recovery

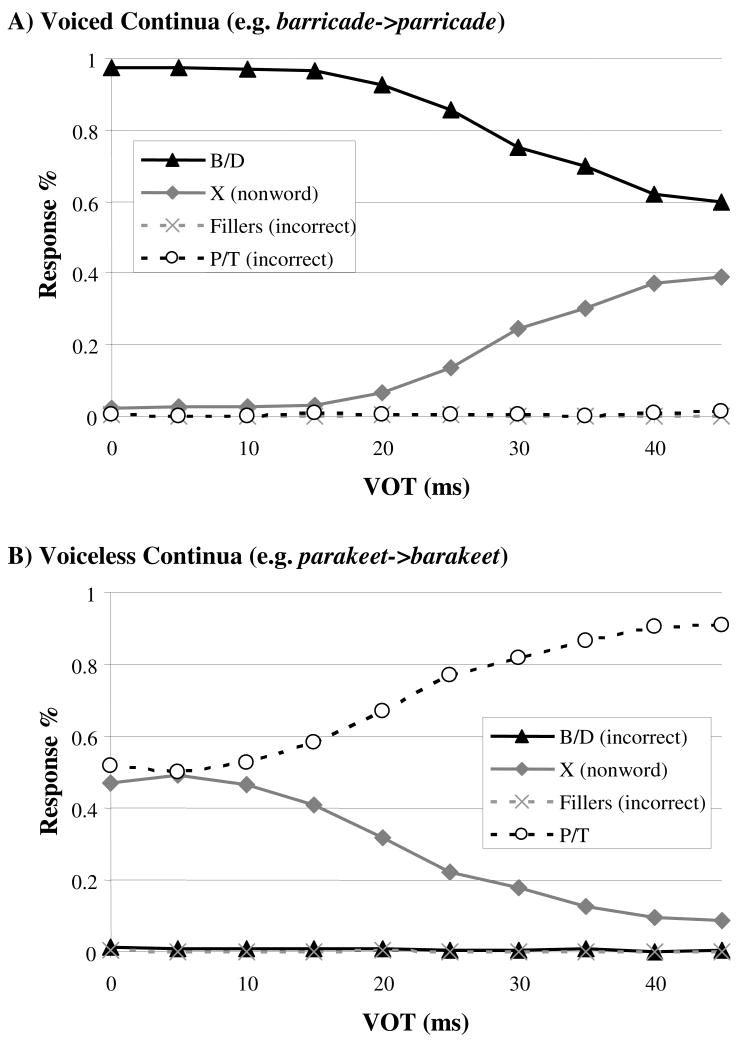

Participants chose the target that was consistent with the final disambiguating segment 83% of the time for the voiced targets and 70.5% of the time for the voiceless targets. These sub-asymptotic percentages can be attributed to the ambiguous initial segment and were largely due to increased non-word responding when the VOT indicated an initial phoneme that conflicted with the target (e.g., barricade with a VOT of 45 ms). When nonword (X) responses were excluded, these figures increased to 99.4% and 98.6%, respectively—subjects rarely chose the competitor. Moreover, when the VOT of the initial consonant was consistent with the correct pronunciation (e.g., barricade with a VOT of 0), participants responded correctly to 92% and 86% of the items from the voiced and voiceless regions of the continua, respectively.

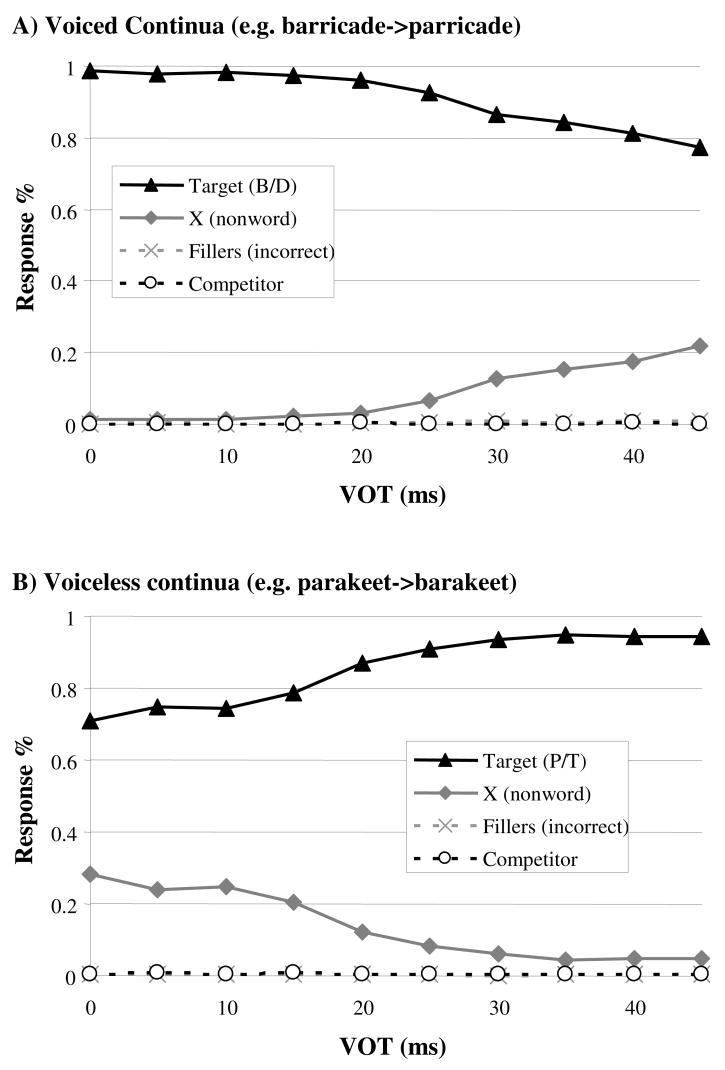

Figure 2 shows the percentage of trials in which participants clicked the voiced target (B/D), the voiceless competitor (P/T), the X, or the fillers for trials in which the target was voiced (panel A) or voiceless (panel B) as a function of VOT. These data show that identification is systematically related to VOT: as the VOT departs from the endpoint (0 ms for voiced words, 45 ms for voiceless words), identification responses to the target decrease and identification responses to the non-word increase.

Figure 2.

Identification results for Experiment 1. Panel A: identification for voiced targets (e.g. barricade). Panel B: identification for voiceless targets (e.g. parakeet).

These results also indicate that participants’ final judgment about the identity of the target word was not irretrievably influenced by the initial consonant. Participants rarely clicked the competitor word (e.g., parakeet when the target was from the barricade continuum), averaging 0.4% (SD=.4%) for the voiced continua (e.g., those based on barricade) and 0.6% (SD=1.2%) for the voiceless (e.g., those based on parakeet). Thus, true, irrecoverable garden-paths were rare.

The more likely response given misleading onsets was to click the X (non-word). However, as shown in Figure 2A, even when the onset consonant was maximally discrepant from the target word (e.g., it was incorrectly pronounced as parricade with a VOT of 45 ms), participants recovered from the phonetic mismatch and selected the target picture (the barricade) that was consistent with the disambiguating information. Moreover, 10 of the 17 participants almost never used the X response category: their average rate of target responding across the entire VOT continuum was 95.6% (SD=4.5%), and they averaged 88.9% target responding on the maximally distal VOT (SD=13.4%). Thus, these 10 participants were consistently able to recover from a mismatch in the initial consonant at VOT values well beyond the category boundary.

A similar pattern was observed for the voiceless continua (Figure 2B), with participants selecting the picture of the parakeet far more than the barricade or the X overall. Even for the most extreme mismatch (e.g., barakeet with a VOT of 0 ms), participants selected the parakeet 51.6% of the time (SD=38.5%). Moreover, 9 of the 17 participants almost never used the X response category: their average rate of target responding across the entire VOT continuum was 90.9% (SD=12.4%), and was 84.8% at the most extreme VOT (SD=16.0%).

Finally, the overall pattern of identification results was not consistent with a sharp categorical boundary of any kind—identification functions transitioned slowly and smoothly towards non-words. Thus, the identification results demonstrate that many participants were able to fully recover from a lexical garden-path and all participants were able to recover partially. Moreover, recovery appears to be a gradient function of VOT. A stronger test of the gradiency hypothesis involves an assessment of how much temporary activation is elicited by the garden-path information from the “incorrect” initial consonant. Such a test cannot be provided by mouse-click judgments alone, but rather requires an on-line assessment such as eye-movements to the pictured alternatives.

Eye movement evidence for gradient recovery

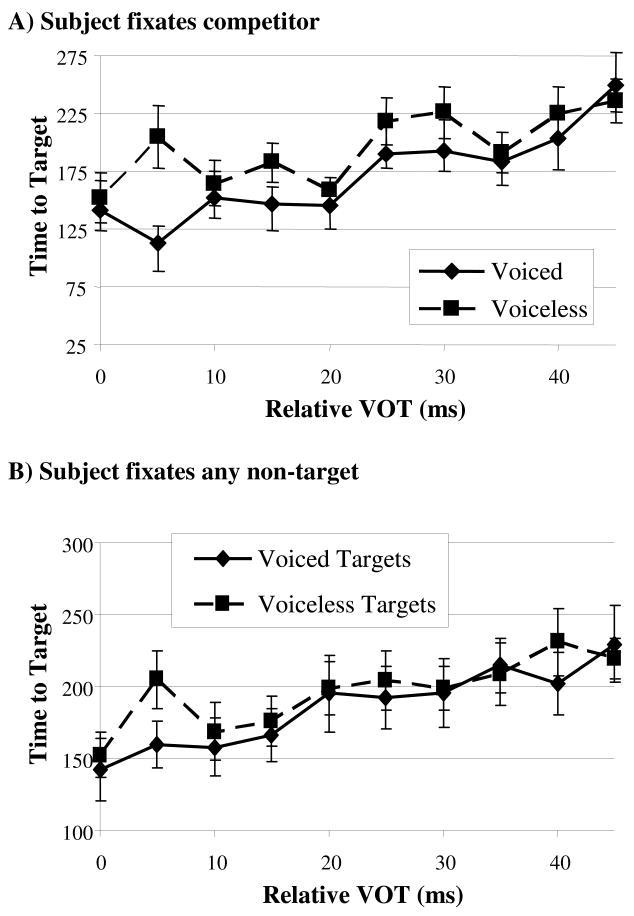

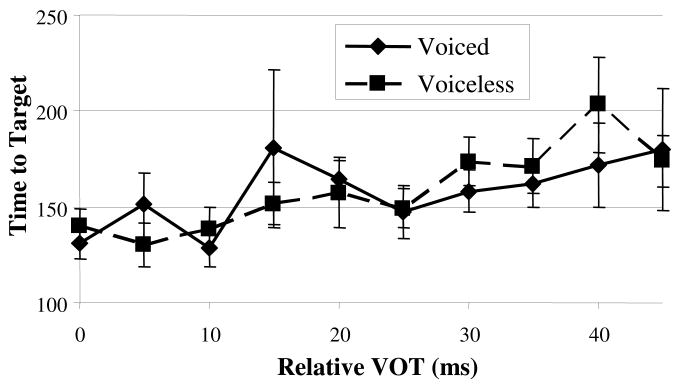

To assess our hypothesis that VOT would systematically affect listeners' ability to resolve the initial ambiguity, we conducted two series of analyses based on the sequence of fixations. Trials were included in the first series of analyses if (1) the participant was fixating the competitor prior to the POD (plus the 200 ms of oculomotor planning time), and (2) the next fixation after the POD was directed to the target. For these trials, the time between the POD and the fixation to the target was the dependent variable (time-to-target). Unfortunately, these criteria led to a fairly sparse dataset. On average, participants had 2.55 trials contributing to each VOT, but the number of trials varied considerably (SD within participants = 1.31; SD between participants = .77), yielding 60 empty cells out of the 340 cells in the ANOVA design (2 target-types × 10 VOTs × 17 participants).
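The two inclusion criteria can be sketched as a per-trial filter. This assumes one particular reading of the text: the fixation in progress at POD + 200 ms must be on the competitor, and the very next fixation must land on the target. The `Fixation` record and the object labels are hypothetical, and the `strict=False` option corresponds to the relaxed "any non-target" criterion used in the follow-up analyses.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Fixation:
    obj: str      # hypothetical labels: "target", "competitor", "filler"
    start: float  # fixation onset, ms from word onset
    end: float    # fixation offset, ms from word onset


def time_to_target(fixations: List[Fixation], pod_ms: float,
                   planning_ms: float = 200.0,
                   strict: bool = True) -> Optional[float]:
    """Return ms from the POD to the onset of the next (target) fixation,
    or None if the trial fails the inclusion criteria.

    strict=True: the fixation in progress at POD + planning_ms must be on
    the competitor. strict=False: it may be on any non-target object.
    """
    cutoff = pod_ms + planning_ms
    idx = next((i for i, f in enumerate(fixations)
                if f.start <= cutoff < f.end), None)
    if idx is None:
        return None  # e.g., mid-saccade or missing data at the cutoff
    current = fixations[idx]
    if current.obj == "target":
        return None
    if strict and current.obj != "competitor":
        return None
    if idx + 1 >= len(fixations) or fixations[idx + 1].obj != "target":
        return None  # the next fixation must be directed to the target
    return fixations[idx + 1].start - pod_ms
```

For example, a trial with a competitor fixation spanning the cutoff followed by a target fixation starting 300 ms after the POD returns 300, while a trial whose next fixation goes to a filler is excluded.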

To deal with the sparse data problem, a hierarchical regression analysis was conducted by treating VOT as a continuous covariate (Figure 3A). In this analysis, VOT was recoded as distance from the word-endpoint of the continuum, which we term relative VOT (rVOT). Thus, a 0 ms VOT in the b/parricade continuum was coded as an rVOT of 0, while a VOT of 0 in the b/parakeet continuum was coded as an rVOT of 45. This meant that for both voiced (b/parricade) and voiceless (b/parakeet) continua, we predicted an increase in time-to-target as rVOT increased. In addition, whether the continuum had a voiced or voiceless target (lexical-endpoint), as well as the interaction, were included as covariates. We did not expect that the lexical-endpoint would influence recovery (as this would suggest, for example, that participants were faster overall to recover in voiced continua or voiceless continua), nor did we expect an interaction. This type of analysis more gracefully copes with missing data because it treats VOT as a single continuous covariate rather than a set of 10 independent cells. Complete results for these analyses are presented in Table 4.
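The rVOT recoding amounts to taking the absolute distance between a token's VOT and the VOT of the lexical endpoint of its continuum. A minimal sketch, assuming the continua span 0 to 45 ms in 5 ms steps as described above; the constant and function names are ours.

```python
VOICED_ENDPOINT_MS = 0.0      # VOT of the voiced (b/d) end of each continuum
VOICELESS_ENDPOINT_MS = 45.0  # VOT of the voiceless (p/t) end


def relative_vot(vot_ms: float, target_is_voiced: bool) -> float:
    """Distance (ms) between a token's VOT and its continuum's lexical endpoint."""
    endpoint = VOICED_ENDPOINT_MS if target_is_voiced else VOICELESS_ENDPOINT_MS
    return abs(vot_ms - endpoint)


if __name__ == "__main__":
    # b/parakeet continuum (voiceless target): VOT 0 ms maps to rVOT 45.
    print([relative_vot(v, target_is_voiced=False) for v in range(0, 50, 5)])
```

Because both continuum types are mapped onto the same "distance from the word" scale, a single slope for rVOT can express the predicted slowing for both voiced and voiceless targets.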

Figure 3.

Time to fixate the target after the point of disambiguation as a function of distance from the lexical endpoint (rVOT) for continua based on voiced and voiceless targets. Panel A: time-to-target for trials in which the participant was fixating the competitor just before the point of disambiguation. Panel B: time-to-target for trials in which the participant was fixating any non-target.

Table 4.

Results of a regression analysis examining the effect of VOT and lexical endpoint on time-to target in Experiment 1.

| Analysis | Step | Factor | R² change | df | F / t | p |
|---|---|---|---|---|---|---|
| Subject analysis | 1 | Subject | .139 | 15, 263 | 2.6 | .001 |
| | 2 | Main effects | .131 | 2, 261 | 23.5 | <0.001 |
| | | Lexical endpoint | | 261 | 2.7 | .007 |
| | | rVOT | | 261 | 6.3 | <0.001 |
| | 3 | Interaction | .006 | 1, 260 | 2.3 | >0.1 |
| Item analysis | 1 | Items | .054 | 9, 171 | 1.1 | >0.1 |
| | 2 | Main effects | .237 | 2, 169 | 28.3 | <.0001 |
| | | Lexical endpoint | | 169 | 3.3 | .001 |
| | | rVOT | | 169 | 6.7 | .001 |
| | 3 | Interaction | .001 | | <1 | |

On the first step of the regression, participant codes were added to the model and accounted for 13.9% of the variance. On the second step, rVOT and lexical-endpoint accounted for an additional 13.1% of the variance. Lexical-endpoint was significant individually, with participants fixating the target approximately 24 ms faster for voiced continua than for voiceless continua (B = 24.4 ms, 95% CI: ±17.6 ms). This asymmetry is likely due to the fact that our continua appeared to have boundaries that were not centered between 0 and 45 ms of VOT: more tokens were perceived as voiced than as voiceless. Thus, there was a token-frequency bias to recover to voiced sounds, which could account for the significant effect of endpoint. Crucially, time-to-target was significantly related to rVOT (B = 1.98 ms per ms of rVOT, 95% CI: ±.61). As the VOT departed from the lexical endpoint, participants took longer to switch from the competitor to the target. Finally, the interaction term, added on the third step, accounted for only 0.6% additional variance and was not significant (footnote 4). Thus, the effect of rVOT did not differ for the two types of continua.
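Each step of the hierarchical regression is evaluated with an F test on the increment in R². A minimal sketch of that test, using the conventional formula F = (ΔR²/df₁)/((1 − R²_full)/df₂), where R²_full is the cumulative R² after the step and df₂ is the residual df of that model; the function name is ours.

```python
def f_change(r2_change: float, r2_full: float, df_num: int, df_den: int) -> float:
    """F test for the R^2 increment when a block of df_num predictors is added.

    r2_full is the cumulative R^2 after the block is added; df_den is the
    residual df of that fuller model.
    """
    return (r2_change / df_num) / ((1.0 - r2_full) / df_den)


if __name__ == "__main__":
    # Subject analysis, step 2 of Table 4: R^2 rises from .139 to .139 + .131.
    print(round(f_change(0.131, 0.139 + 0.131, 2, 261), 1))
```

Applied to step 2 of the subject analysis this gives about 23.4, against 23.5 in Table 4; the small gap presumably reflects rounding of the reported R² values.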

A second regression analysis, which entered item codes rather than participant codes on the first step, revealed similar effects (also in Table 4). On the first step, item codes accounted for 5.4% of the variance but did not reach significance, suggesting that variance in time-to-target may be due more to between-participant factors (e.g., generalized reaction time) than to item-specific factors. When rVOT and lexical-endpoint were added on the second step, an additional 23.7% of the variance was accounted for. As before, lexical-endpoint was significant, with participants faster to fixate the target for voiced continua (B = 31.3 ms, 95% CI: ±18.4 ms). Importantly, rVOT was also significant (B = 2.13 ms per ms of rVOT, 95% CI: ±.632). Finally, the interaction term did not account for any additional variance on the third step (R² change = .003).

Follow-up analyses were conducted in which the inclusion criteria were relaxed: any trial in which the participant was fixating any non-target object prior to the POD was included. These less restrictive criteria allowed more data to contribute to the analyses (M = 7.4 trials per cell) and were necessary for comparison with Experiment 2. Because there were now no empty cells, we could use a 10 (rVOT) × 2 (voiced or voiceless target) ANOVA to assess effects of VOT on time-to-target (Table 5). Mean time-to-target at each VOT for this analysis is shown in Figure 3B. The effect of lexical-endpoint was not significant in this analysis (row 3), suggesting that the effect seen in the first analysis may have been relatively small. However, rVOT was highly significant (row 1). This was the result of a significant linear trend (row 2): as VOT departed from the value of the lexical endpoint, time-to-target systematically increased. rVOT did not interact with lexical-endpoint (row 4), suggesting that this effect did not differ for voiced and voiceless targets.

Table 5.

Results of an ANOVA examining time-to-target as a function of lexical-endpoint and rVOT in Experiment 1.

| Row | Factor | Test | df | F | p |
|---|---|---|---|---|---|
| 1 | rVOT | F1 | 9, 144 | 6.5 | <0.001 |
| | | F2 | 9, 81 | 3.9 | <0.0001 |
| | | minF’ | 9, 172 | 2.4 | 0.012 |
| 2 | rVOT (linear trend) | F1 | 1, 16 | 35.6 | <0.001 |
| | | F2 | 1, 9 | 16.0 | 0.003 |
| | | minF’ | 1, 17 | 11.0 | 0.004 |
| 3 | Lexical endpoint | F1 | 1, 16 | 2.4 | >0.1 |
| | | F2 | | <1 | |
| 4 | Endpoint × rVOT | F1 | | <1 | |
| | | F2 | | <1 | |

Gradient or Categorical Recovery?

Gradient or Categorical Recovery?

As in our analysis of the initial commitment, we again needed to confirm that the linear relationship seen in the prior analysis was a better fit than a non-linear (categorical) function once variability in category boundary was accounted for. We therefore used a procedure similar to the one described above, fitting two mixed effects models to the data. In these models, the linear or logistic functions described the relationship between VOT and time-to-target. Unlike the prior analysis, however, the time-to-target dataset was already limited to trials during which the participant was fixating particular objects prior to the point-of-disambiguation. Dividing the data further by participant would therefore have left a relatively small dataset for each fit. As a result, we used the data from the follow-up analysis, which computed time-to-target for trials in which the participant was fixating any non-target object prior to the point-of-disambiguation. This yielded, on average, 148.3 data points per participant (SD = 58.5).

Results strongly supported a gradient model over a categorical model. In absolute terms, both models captured a modest share of the variance, and the linear fit was better (linear: R² = .199; logistic: R² = .120). More importantly, the BIC measure showed a strong advantage for the linear model over the logistic model (linear: 32133; logistic: 32375), and every participant had a lower BIC score for the linear model than for the logistic model. Thus, we can conclusively rule out a categorical model as the underlying form of the function relating time-to-target to VOT.

Discussion

During the temporal interval prior to phonemic disambiguation, fixations to the voiced and voiceless targets showed gradient sensitivity to VOT, replicating earlier results and demonstrating that participants make provisional rather than categorical commitments. Importantly, participants were able to revise their initial interpretations when they encountered new phonetic evidence after an ambiguous initial segment. All participants showed an ability to identify some of the garden-path stimuli (e.g., parricade as a poor exemplar of barricade), although there were individual differences in how much of a mismatch was tolerated. Most crucially, the continuous-valued VOT of the initial segment was linearly related to the time needed to recover from the initial interpretation, suggesting that fine-grained information about VOT was retained over the 240 ms period prior to the POD.

We have argued that models that incorporate attractor dynamics at the phoneme level are likely to predict short-lived sensitivity to within-category differences in VOT. To illustrate this claim we conducted simulations using TRACE, as an example of an attractor-based model, because it successfully simulates a wide range of effects in spoken word recognition (for recent review see Gaskell, 2007), and because it includes quasi-featural level information that can be used to simulate sensitivity to sub-phonetic detail.

TRACE predicts that as a word unfolds over time, multiple lexical candidates become activated, including words that overlap at onset and words that rhyme (Allopenna, Magnuson & Tanenhaus, 1998). TRACE also exhibits initial fine-grained sensitivity to sub-phonetic detail, with activation for lexical competitors being proportional to continuous variation at the feature level. For example, TRACE successfully simulates the pattern of results presented in McMurray et al. (2002; in press) for monosyllabic words such as p/beach (we use p/b as shorthand for a VOT continuum). The question here, then, is whether TRACE can preserve this initial sensitivity to enable recovery from subsequent mismatching information.

TRACE Simulations

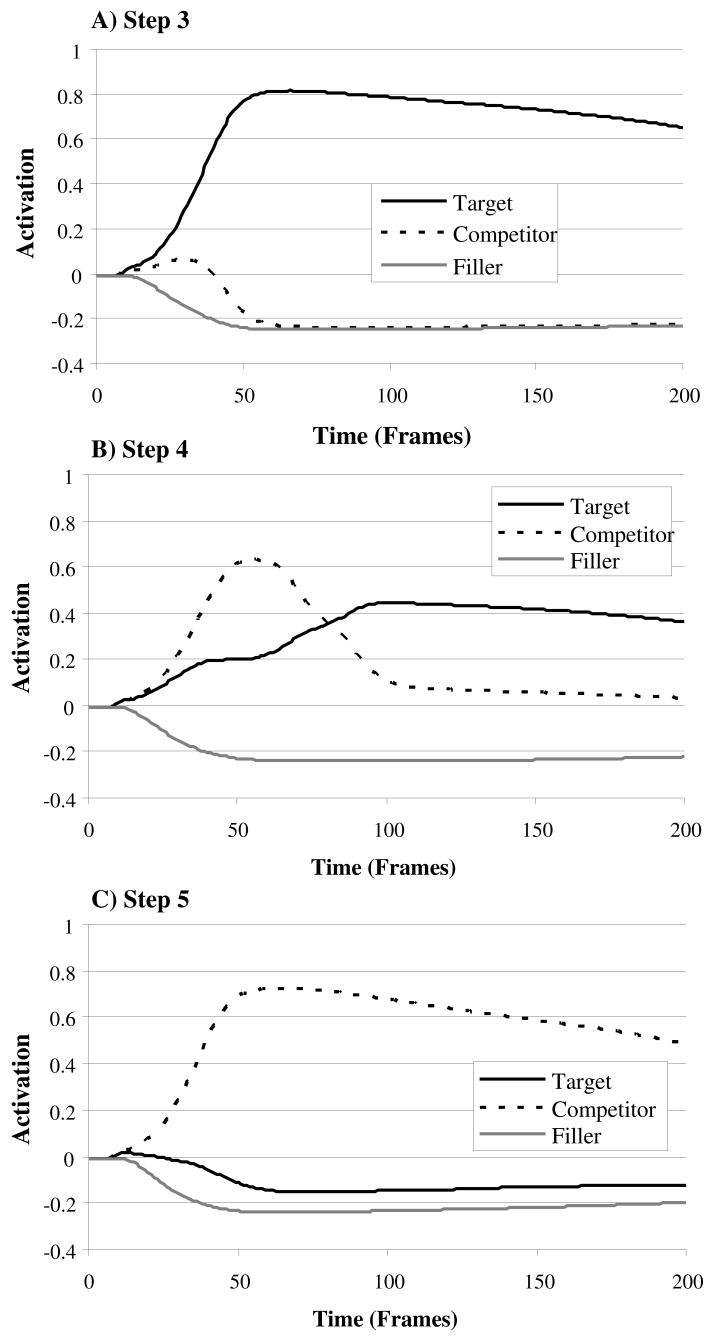

All simulations were conducted using the jTRACE simulator (Strauss, Harris & Magnuson, in press). Stimuli were analogous to those of Experiment 1 (though limited by TRACE's phonetic inventory), consisting of three pairs of temporarily ambiguous words: barricade/parakeet, billboard/pillbox, and beachball/peachpit. Each word pair was used to create a 9-step VOT continuum. Each pair was yoked with two fillers (one l-initial and one s-initial multisyllabic word). Each simulation was run for 200 processing cycles, with the feature-spread parameter set to its default (6). This means that there were six frames between the peak activation of each phoneme's featural input. Thus, the POD for the barricade/parakeet and beachball/peachpit continua was at frame 30, and for billboard/pillbox at frame 24.
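The frame arithmetic behind the POD values can be sketched as follows, under two assumptions that are ours rather than the simulation code's: each character stands for one phoneme, and phoneme i (counting from 0) peaks at frame i × 6. The spellings "barikad"/"parikit" and "bilbord"/"pilbaks" are hypothetical placeholders, not the transcriptions used in jTRACE; they are chosen only to show how a sixth-position divergence yields frame 30 and a fifth-position divergence yields frame 24.

```python
FRAMES_PER_PHONEME = 6  # default feature spread (Table 6)


def pod_frame(voiced_word: str, voiceless_word: str,
              frames_per_phoneme: int = FRAMES_PER_PHONEME):
    """Frame of the first divergence after the initial voiced/voiceless segment.

    Assumes one character per phoneme and that phoneme i (0-based) peaks at
    frame i * frames_per_phoneme. Returns None if the words never diverge.
    """
    # Start at position 1: position 0 is the b/p segment being manipulated.
    for i in range(1, min(len(voiced_word), len(voiceless_word))):
        if voiced_word[i] != voiceless_word[i]:
            return i * frames_per_phoneme
    return None


if __name__ == "__main__":
    print(pod_frame("barikad", "parikit"), pod_frame("bilbord", "pilbaks"))
```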

We first simulated the results from Experiment 1 using the standard TRACE parameters. Figure 4 reports TRACE simulations for parakeet and barricade, showing gradient effects of VOT near the category boundary but an inability to recover from the initial mismatch when the stimulus was one step over the category boundary. In fact, in Panel C, the competitor (e.g., parakeet after hearing parricade) is nearly as active as barricade is in Panel A. Figure 5 shows the final activation of each word in those same simulations as a function of VOT; in a sense, this reveals the model's final decision (analogous to the mouse-response data). At the disambiguating phoneme, TRACE shifts its preferred interpretation only when the initial VOT is at the category boundary, and thus shows little capacity to recover from garden-path effects. This contrasts with the mouse-click results of Experiment 1. Nor did the model end in a state in which no word was strongly active (the analogue of a non-word response). Rather, the model was fully garden-pathed to the competitor, an outcome participants almost never produced.

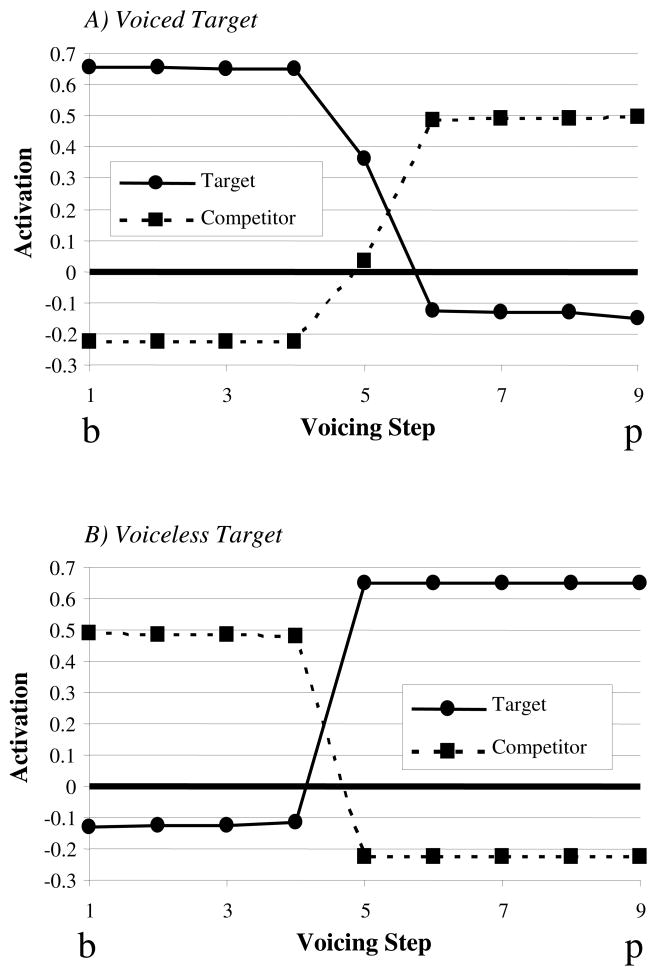

Figure 4.

Activation for the target (barricade), competitor (parakeet), and fillers as a function of time in TRACE given a 9-step VOT continuum. A) Step 3: a voiced sound adjacent to the category boundary. B) Step 4: near the category boundary. C) Step 5: a voiceless sound just over the category boundary.

Figure 5.

Final activation (after 200 processing cycles) for a barricade->parricade continuum (Panel A) or a barakeet->parakeet continuum (Panel B).

To determine the conditions under which TRACE could or could not simulate the data from Experiment 1, we then manipulated a number of parameters that control the dynamics of activation flow in TRACE. In particular, we sought to determine whether any instantiation of TRACE could defer commitment long enough to recover from the garden-path. For ease of comparison with the empirical data, TRACE activations were converted to fixation proportions using the Luce choice rule, as in previous simulations of eye-movement data with TRACE (Allopenna et al., 1998; Dahan et al., 2001a, 2001b; see Appendix A for details), although results were similar when the raw activations were examined.
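A minimal sketch of the basic Luce choice linking step: each pictured item's activation is exponentiated into a response strength, and its predicted fixation proportion is that strength divided by the summed strength of all pictured items. The scaling constant k = 7.0 and the example activations are assumptions for illustration; the details in Appendix A (for example, any additional normalization) may differ.

```python
import math
from typing import Dict


def luce_choice(activations: Dict[str, float], k: float = 7.0) -> Dict[str, float]:
    """Map model activations to predicted fixation proportions.

    Response strength is exp(k * activation); each item's predicted
    proportion is its strength over the summed strength of all displayed
    items. k is a free scaling constant (7.0 is an assumed value).
    """
    strengths = {item: math.exp(k * a) for item, a in activations.items()}
    total = sum(strengths.values())
    return {item: s / total for item, s in strengths.items()}


if __name__ == "__main__":
    # Hypothetical activations for one time slice of a four-picture display.
    print(luce_choice({"barricade": 0.6, "parakeet": 0.1,
                       "l_filler": 0.0, "s_filler": 0.0}))
```

Restricting the denominator to the displayed items reflects the assumption that listeners only fixate pictured referents; the exponent lets modest activation differences translate into large differences in predicted looks.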

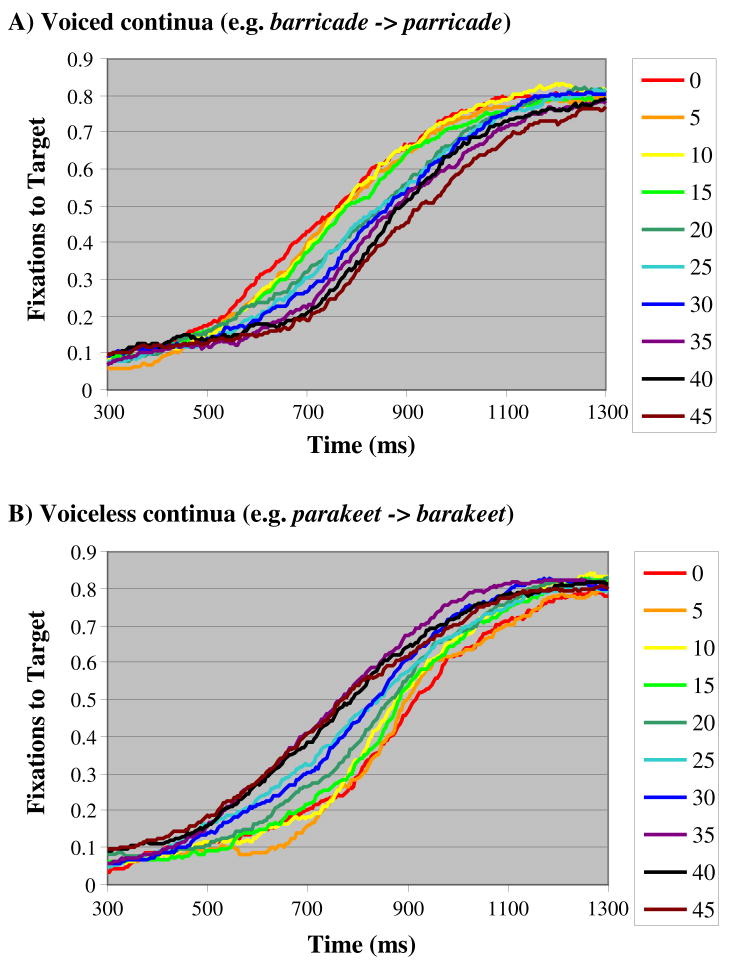

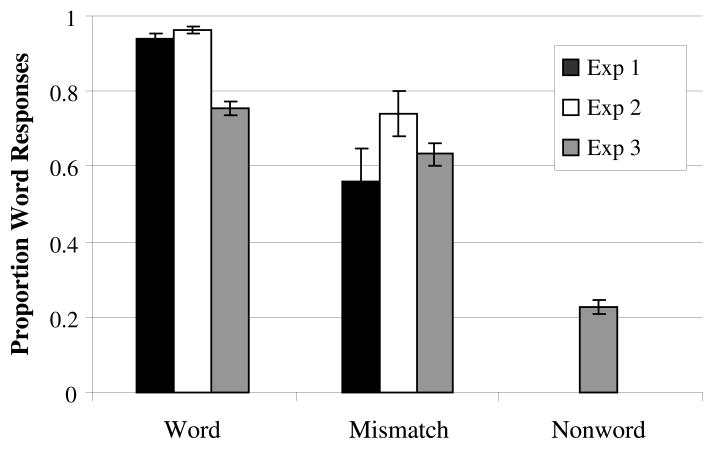

The Luce choice rule serves as a linking hypothesis: it transforms model activation into a quantity that can be compared with the empirical proportion of trials on which the participant is fixating each object at each point in time. The empirical data for Experiment 1 are shown in Figure 6. As the figure shows, participants correctly identified the target: all of the curves asymptote at about .8 (participants looked at the target 80% of the time regardless of VOT). The figure also shows that this process was systematically affected by the initial VOT: the rise to the target is delayed for non-prototypical VOTs, and this effect is small and graded with distance from the category boundary.

Figure 6.

Proportion of fixations to the target as a function of time and VOT for voiced (Panel A) and voiceless (Panel B) targets in Experiment 1. These data are provided as the closest analogue to the simulations reported in Figure 7.

Given this ability to make a more detailed comparison between model and data, we next sought to determine which versions of TRACE (if any) could successfully recover from the mismatch, and show gradient sensitivity to the initial phoneme. There are 19 parameters that control the activation flow of TRACE, making an exhaustive search of the parameter space impractical. Therefore we examined a variety of parameters that would control the speed at which the model commits to a single word, as well as parameters that affect its ability to retain alternatives over time. These included (a) feedback from word nodes to phoneme nodes; (b) lateral inhibition at the phoneme level and the word level, (c) rate of decay of activation; and (d) growth of activation. The complete set of parameters that were manipulated (along with their default and manipulated values) is provided in Table 6.

Table 6.

The complete set of parameters used in the TRACE simulations, including their default and manipulated values.

| Parameter | Default | Variant | Panel (Fig 11) | Description |
|---|---|---|---|---|
| Activation Flow | | | | |
| Input->Feature | 1 | - | | |
| Feature->Phoneme | .02 | .01 | I | ½ feature->phoneme |
| Phoneme->Word | .05 | .1 | J | Phoneme->word x2 |
| Word->Phoneme | .03 | 0 | B | No feedback |
| Lateral Inhibition | | | | |
| Feature Inhibition | .04 | - | | |
| Phoneme Inhibition | .04 | 0; .02 | C; D | No phoneme inhibition; ½ phoneme inhibition |
| Lexical Inhibition | .03 | .015 | E | ½ lexical inhibition |
| Decay | | | | |
| Feature Decay | .01 | .005 | F | ½ feature decay |
| Phoneme Decay | .03 | .06 | G | Phoneme decay x2 |
| Lexical Decay | .05 | .1 | H | Lexical decay x2 |
| Not manipulated | | | | |
| Feature rest. level | −.1 | - | | |
| Phoneme rest. level | −.1 | - | | |
| Word rest. level | −.01 | - | | |
| Input noise | 0 | - | | |
| Feature Spread | 6 | - | | |
| Min activation | −.3 | - | | |
| Max activation | 1 | - | | |

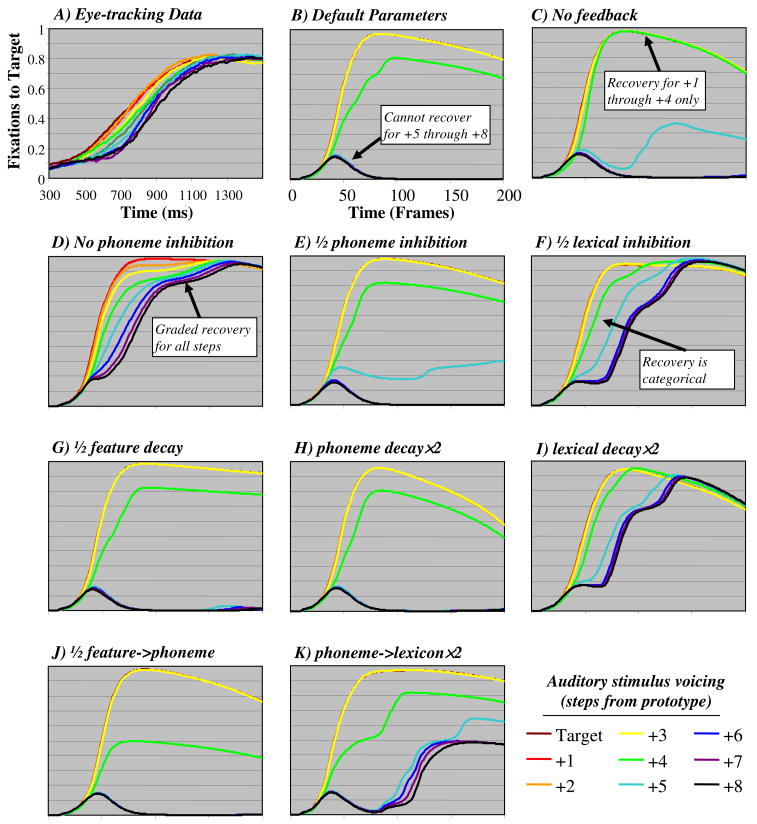

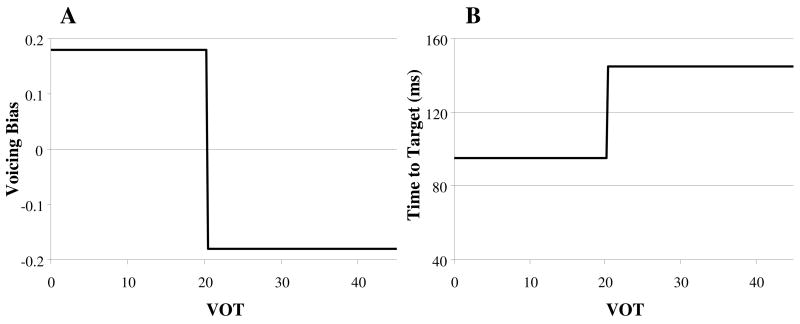

Figure 7 shows the results of a number of representative parameter manipulations. Each panel displays predicted fixation proportions over time for each continuum step under one TRACE parameter setting. To simplify the analysis, the continua with voiced targets (e.g., b/parricade) were combined with their voiceless counterparts (b/parakeet) by recoding the continuum step as distance from the target (e.g., a fully voiceless token became +8 for the b/parricade continuum and +0 for the p/barakeet continuum). The first panel (7A) shows a synopsis of the eye-tracking data from Figure 6 for ease of comparison.

Figure 7.

Predicted fixations as a function of time for TRACE simulations of Experiment 1. Each panel represents a single variant of one parameter. All panels have identical X and Y axes—labels were left off for visual clarity. See Appendix A for the specific parameter values.

Figure 7B displays the results for the default parameter set. The model correctly identifies the target at +0 through +3 steps from the lexical endpoint, but it consistently fails to activate the target at steps +5 through +8. When word-to-phoneme feedback was eliminated (Panel C), results were similar: the only difference was that the model could reactivate the target at step +5 for peachpit and billboard, but not for the other continua (hence the asymptote at .4).

We next examined the parameters that control lateral inhibition between phonemes and words. Inhibition in TRACE causes the most active word or phoneme to suppress activation for competitors, moving the system from a state in which multiple candidates (either phonemic or lexical) are active in parallel toward one in which a single item (phoneme or word) has all of the activation. Inhibition therefore seems a likely candidate for why TRACE makes too strong a commitment to a single word, and quickly loses within-category phonetic detail. Panel D shows the results when phoneme inhibition was eliminated. Here the model provides a good fit for the empirical results, exhibiting both recovery and gradiency. As panel E demonstrates, reducing phoneme inhibition by half is not sufficient to drive this pattern of results – it must be eliminated entirely. Lexical inhibition did not have this effect. Completely eliminating lexical inhibition made the model very unstable (it did not always identify the unambiguous words). However, when the inhibition was halved (Panel F), the model was now able to recover from the garden-path, but its recovery was slow and the influence of stimulus voicing was more or less categorical: steps +0 through +3 showed uniformly quick recovery; +6 through +8 showed equally slow recovery, and +4 and +5 were intermediate. This suggests that continuous variability in voicing may have been lost by earlier processes (in this case phoneme inhibition), but that the lack of commitment at the lexical level allows it to recover nonetheless (from what would be a complete feature mismatch).

The foregoing simulations suggest that inhibition is crucial both in determining whether gradiency can be preserved and in determining whether lexical activation can recover from mismatching input. However, given the flexible dynamics of TRACE, similar results might be achievable by other means. Thus, we next assessed the decay parameters. One possibility is that if feature information decays more slowly, it may remain available to facilitate reactivation. However, as Panel G shows, this was insufficient: the model was still unable to recover from mismatch. We also examined whether increasing the decay rate for lexical and phonetic representations would increase sensitivity to subphonetic detail over time. Doubling the phoneme decay rate (Panel H) had no effect. Doubling the lexical decay (Panel I) did have the desired effect. However, as in the lexical inhibition simulations, the model's recovery was categorical with respect to voicing: steps +0 through +3 were identical, +6 through +8 were equally slow, and +4 and +5 were in between. Once again, gradiency was lost because of earlier processes such as phoneme inhibition.

If increasing the decay of lexical activation can help the model recover from mismatch, we hypothesized that weakening its rate of growth might have similar effects. To test this hypothesis we halved the strength of the feedforward connections between features, phonemes and words. Weakening the feature-to-phoneme connections had little effect (Panel J). However, halving the phoneme-to-word weights (Panel K) permitted the model to recover from mismatch for at least some of the continua (billboard, pillbox, and peachpit but not beachball, barricade or parakeet). However, this recovery was delayed, and largely categorical.

In sum, across ten parametric variants, TRACE was largely unable to account for recovery from initial mismatch. The inability to recover from mismatch observed here stands in contrast to now classic (and much debated) simulations reported in McClelland and Elman (1986) demonstrating that TRACE successfully recognizes words like pleasant from mismatching input like bleasant. The discrepancy between these simulations and ours is most likely due to the lack of competitors for the target word in the limited lexicon of TRACE. Indeed, we were able to replicate McClelland & Elman’s (1986) demonstration that the word rugged can be recognized given a stimulus of lugged. However, there are no lug-initial words in the default TRACE lexicon. As lug-initial competitors are added to the lexicon, however, TRACE fails to recognize rugged. Of course, in a real lexicon, virtually every word will have many cohort competitors—thus, our simulations are more representative of TRACE’s behavior with a more realistic lexicon.

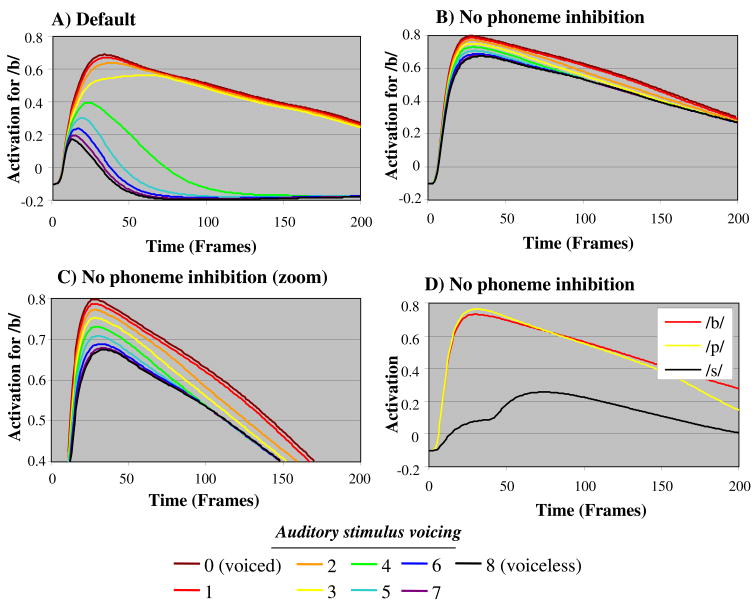

Returning to our simulations of the garden-path stimuli: even when TRACE was able to recover from the mismatch, most of the simulations did not show gradient recovery; that is, TRACE's ability to recover was not influenced by the voicing of the initial syllable. TRACE could simulate both effects only when phoneme inhibition was eliminated. The benefits of removing phoneme inhibition are clear when we examine activation of the phoneme units. Figure 8 shows the raw activation of the /b/ phoneme for a barricade/parricade continuum as a function of VOT. Panel A shows the default parameters of TRACE. From steps 1 through 4, the model fully activates /b/ with little delay. At step 5, the model ultimately settles on /p/ rather than /b/, but /b/ remains active long enough to be potentially useful. By step 6, however, activation for /b/ decays quite rapidly and is close to zero when the disambiguating information arrives. In contrast, Panel B shows the same activations when phoneme inhibition is removed. Here the pattern is quite different: from the beginning, both /b/ and /p/ are active and gradiently reflect the voicing in the input. Although activation for both gradually decays, it does so slowly relative to the default case, and the gradiency is preserved for both.
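To make the role of phoneme-level inhibition concrete, here is a deliberately tiny sketch of two competing phoneme units updated with an interactive-activation rule of the general kind TRACE uses. It is not jTRACE and not the authors' simulation code. Decay (.03), resting level (−.1), and the activation bounds (−.3, 1) echo the phoneme defaults in Table 6, but the bottom-up input strengths and the inhibition weight of 0.2 are arbitrary assumptions chosen so the effect is visible with only two units.

```python
def simulate_pair(b_input, p_input, inhibition, steps=100, decay=0.03,
                  rest=-0.1, a_min=-0.3, a_max=1.0):
    """Toy two-unit competition between /b/ and /p/ (not jTRACE).

    Each unit receives constant bottom-up input, is inhibited by its rival,
    and decays toward rest. Returns the final (b, p) activations.
    """
    act = [rest, rest]
    inputs = [b_input, p_input]
    for _ in range(steps):
        new_act = []
        for i in (0, 1):
            rival = act[1 - i]
            # Only positively active units send inhibition.
            net = inputs[i] - inhibition * max(rival, 0.0)
            if net > 0:
                delta = net * (a_max - act[i])   # excitation scaled by headroom
            else:
                delta = net * (act[i] - a_min)   # inhibition scaled by floor distance
            delta -= decay * (act[i] - rest)     # decay toward resting level
            new_act.append(min(a_max, max(a_min, act[i] + delta)))
        act = new_act  # synchronous update of both units
    return act[0], act[1]


if __name__ == "__main__":
    # A token whose input slightly favors /b/ over /p/.
    print("no inhibition:", simulate_pair(0.06, 0.04, inhibition=0.0))
    print("inhibition:   ", simulate_pair(0.06, 0.04, inhibition=0.2))
```

With input slightly favoring /b/ (0.06 vs. 0.04), the run without inhibition leaves both units active in proportion to their input (about 0.63 and 0.53), whereas with inhibition /p/ is driven below its resting level (about −0.25), so the graded evidence for /p/ is no longer available when later input arrives.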

Figure 8.

Activation in the phoneme units during recognition of a barricade->parricade continuum. A) Activation of /b/ as a function of time and VOT for default parameters. By step 5, /b/ is completely inactive. B) Activation of /b/ as a function of time and VOT for simulations without phoneme inhibition: /b/ is active across all VOTs. C) Zoomed-in view of simulations reported in B demonstrating that activation for /b/ is a gradient function of VOT. D) Activation for /b/, /p/, and a filler phoneme, /s/, as a function of time for simulations without phoneme inhibition. /b/ and /p/ are both active, and both are much more active than an unrelated phoneme.

In this modified TRACE model, phoneme activation more veridically reflects the input. Both /p/ and /b/ are similarly active because they are both labial stop consonants (the coronal fricative /s/, for example, was not active at this time). The relative difference between them reflects their differences in voicing. This veridical representation of the input then allows lexical competition and feedback to efficiently sort out the input since it is not hindered by an earlier decision. Even when the continuous value of the input is irrelevant (e.g., complete phonemic mismatch), such decisions can be detrimental. For example, /b/ and /p/ share manner and place features, even if voicing is unambiguous. By deciding that a given input is one or the other, the system ignores the possibility that it was incorrect on only one of the three features and is prevented from revising this decision. Thus, minimal sublexical processing may in fact be optimal, provided that phoneme-level inhibition is reduced to prevent early commitment.

Experiment 2

Although the results of Experiment 1 provide compelling evidence for long-lasting gradiency on the way to resolving lexical ambiguity, their robustness would be bolstered by addressing a potential problem with the design. In Experiment 1, the presence of pictures corresponding to the voiced and voiceless competitors in the display might have increased the activation of each competitor. Pairs like the ones we used are unlikely to be equally salient in normal language use (one is unlikely to talk about barricades and parakeets in the same conversation). We were therefore concerned that our results might overestimate the extent to which listeners can recover from lexical garden-paths and the duration over which sub-phonetic detail is available. Experiment 2 addresses this concern in a Visual World study by not displaying the competitor with the target. In Experiment 3, we go beyond the Visual World paradigm entirely by conducting an auditory lexical decision study designed to further evaluate the possibility that listeners in Experiments 1 and 2 were unusually good at recovering from lexical garden-paths because a small set of stimuli was presented repeatedly and in the context of visual referents.

Methods

Participants

Twenty University of Rochester undergraduates served as participants in this experiment. All reported normal hearing and normal or corrected-to-normal vision. Informed consent was obtained in accordance with University and APA ethical guidelines. Testing was conducted in two sessions lasting approximately one hour each, and participants were compensated $10 per session. One participant was excluded from analysis for failing to return for the second session of testing.

Stimuli

Experimental materials consisted of the same 10 pairs of phonemically similar words (Table 1) used in Experiment 1 (10-step continua), along with the same visual stimuli.

Procedure

The task used in Experiment 2 was the same as in Experiment 1, except for the particular set of pictures visible on the screen. As before, there was no deadline for responses, and participants responded in about a second and a half (M = 1560 ms, SD = 158.9 ms).

Design

Experiment 2 used a different arrangement of pictures than Experiment 1. Specifically, the competitor (e.g., parakeet on barricade trials) was not visible at the same time as the target. However, because participants were likely to be at ceiling in identifying a target from among three completely unrelated objects, it might not have been possible to detect any effects of the non-displayed competitor in the pattern of fixations to the target. Thus, the competitor object was replaced with one of the other target objects that shared the initial phoneme (e.g., barricade and bassinet). When this non-target word was presented in the context of the display containing the target object, it served, in effect, as a cohort competitor with very short overlap. This technique has been used in other studies that have examined possible effects of non-displayed competitors on target fixations (e.g., Dahan et al., 2001b). As in Experiment 1, the target was consistently paired with this competitor (as well as two fillers) throughout the experiment, although this set of four pictures was randomized between participants, and pairings were selected to avoid any semantic overlap.

To achieve this design, four voiced words (e.g., barricade) were chosen along with their voiceless counterparts (e.g., parakeet) to create a list of eight possible targets. This list was selected to include no more than two of the t/d pairs and no more than four of the b/p pairs, so that enough items would remain to select competitors that matched on the same phoneme (not just the same voicing condition). Next, competitors were chosen to match the first phonemes of the eight selected targets. Note that these selections were made independently of each other. For example, while barricade may have had beachball as a competitor, parakeet was not guaranteed to have beachball’s counterpart, peachpit (and it rarely did). Because on any given trial either of the two paired items could serve as the target (in this example, beachball or barricade), each participant was exposed to eight sets of visual items but sixteen different continua.

Given this slightly larger number of continua compared to Experiment 1, the number of repetitions at each step was reduced from seven to five. We used the same 10-step continua as in Experiment 1, resulting in 800 experimental trials. An additional 640 filler trials were included, yielding 1440 trials, which were run in two sessions lasting approximately an hour each.
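As a check on the arithmetic of the design (every number below is taken from the description above; only the variable names are ours), the trial counts can be reproduced as follows:

```python
# Trial counts for the Experiment 2 design; all values come from the text.
N_VISUAL_SETS = 8      # four voiced targets plus their four voiceless counterparts
ITEMS_PER_SET = 2      # the target or its same-onset competitor can be the spoken word
VOT_STEPS = 10         # the 10-step continua from Experiment 1
REPS_PER_STEP = 5      # reduced from seven in Experiment 1
FILLER_TRIALS = 640

n_continua = N_VISUAL_SETS * ITEMS_PER_SET                    # 16 continua
experimental_trials = n_continua * VOT_STEPS * REPS_PER_STEP  # 800 trials
total_trials = experimental_trials + FILLER_TRIALS            # 1440 trials
print(n_continua, experimental_trials, total_trials)          # 16 800 1440
```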

Results

The analysis of Experiment 2 was similar to that of Experiment 1. We first asked whether VOT influenced the likelihood that participants would choose the target picture rather than the X when the onset of the auditory stimulus mismatched. We then examined the time to fixate the target on trials in which the initial fixation was on one of the competitors and the first fixation after the point-of-disambiguation was to the target. In contrast to Experiment 1, however, we did not examine the probability of fixating the competitor object prior to the point-of-disambiguation to establish that participants were making an initial graded commitment (as in McMurray et al., 2002). This was not possible in Experiment 2 because there was no competitor present on the screen to fixate.

Mouse click evidence for gradient recovery

Participants chose the target that was consistent with the final segment 91% of the time for voiced targets and 85% of the time for voiceless targets. When non-word (X) responses were excluded, these figures increased to 99.3% and 98.9%, respectively, demonstrating that non-word responses accounted for the large majority of non-target responses. Interestingly, overall X (non-word) responding was lower in this experiment than in Experiment 1, despite identical auditory stimuli. Recall that we were concerned that the availability of the competitor in the display in Experiment 1 might have inflated effects of within-category phonetic detail and ease of garden-path recovery. Instead, participants were more successful in Experiment 2 at using subsequent phonetic context to resolve a mismatching onset segment when the competitor was not displayed. Thus, the system seems capable of recovering from mismatching onsets quite gracefully. In fact, the visual competitor may have enhanced competition between the two lexical competitors, so that on some trials neither alternative won and a non-word response was given.

Figure 9A shows the proportion of responses to the target, competitor, and X for the voiced continua (e.g., barricade/parricade). As in Experiment 1, the overall pattern of identification results was not consistent with a sharp categorical boundary: the proportion of non-word choices increased gradually as a function of VOT. In addition, as in Experiment 1, even at the most extreme mismatch (parricade with a VOT of 45 ms), participants chose the target 73.3% of the time (SD = 26.2%), and only 3 of the 20 participants chose the X more often than the target. For voiceless continua (Figure 9B), participants chose the target significantly more often than the X at all VOTs, and only 5 of the 20 participants chose the X more often than the target at the most extreme mismatch (barakeet with a 0 ms VOT).

Figure 9.

Identification results for Experiment 2. Panel A: identification for voiced targets (e.g. barricade). Panel B: identification for voiceless targets (e.g. parakeet).

Eye movement evidence for gradient recovery

The next series of analyses examined the sequence of fixations to determine if recovery from the garden-path was affected by VOT. Since the competitor belonged to the same voicing category as the target, it did not represent a garden-path interpretation. Thus, the present analysis adopted the more relaxed criteria used in the second analysis of Experiment 1. Trials were included in these analyses if 1) the participant was fixating any object other than the target prior to the POD, and 2) the next fixation after the POD was directed to the target. For each trial, the time between the POD and this first fixation was coded as the time-to-target. Mean time-to-target as a function of VOT is shown in Figure 10.
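The inclusion rule and the time-to-target measure can be expressed as a small function. This is a sketch under stated assumptions: the Fixation record and its field names are hypothetical, times are assumed to be in ms on the same clock as the POD, and "fixating prior to the POD" is operationalized as the most recent fixation that began at or before the POD. The original coding may have treated saccades, blinks, or fixations spanning the POD differently.

```python
from typing import List, NamedTuple, Optional


class Fixation(NamedTuple):
    """Hypothetical record for one fixation on one trial."""
    obj: str     # picture fixated, e.g. "target", "competitor", "filler", "X"
    start: int   # fixation onset in ms (same clock as the POD)
    end: int     # fixation offset in ms


def time_to_target(fixations: List[Fixation], pod: int) -> Optional[int]:
    """Return ms from the POD to the first post-POD fixation, or None
    if the trial does not meet the two inclusion criteria."""
    fixations = sorted(fixations, key=lambda f: f.start)
    before = [f for f in fixations if f.start <= pod]
    after = [f for f in fixations if f.start > pod]
    # Criterion 1: a non-target object was being fixated before the POD.
    if not before or before[-1].obj == "target":
        return None
    # Criterion 2: the next fixation after the POD is directed to the target.
    if not after or after[0].obj != "target":
        return None
    return after[0].start - pod


if __name__ == "__main__":
    trial = [Fixation("filler", 200, 450),
             Fixation("competitor", 480, 900),
             Fixation("target", 960, 1400)]
    print(time_to_target(trial, pod=700))  # 260
```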

Figure 10.

Time to fixate the target after the point of disambiguation as a function of distance from lexical endpoint in VOT for voiced and voiceless targets. Time-to-target was computed for trials in which the subject was fixating any non-target object.

These data were analyzed in a 2 (lexical endpoint) × 10 (rVOT) ANOVA (Table 7). One participant was excluded for missing data. The ANOVA yielded a non-significant main effect of lexical endpoint by both participants and items (row 1). More importantly, the main effect of rVOT was significant (row 2), as was its linear trend (row 3). Participants were faster to fixate the correct target for VOTs near the prototypical value (i.e., away from the category boundary). This effect did not interact with lexical endpoint (row 4). Thus, even with no visible competitor on the screen, variation in VOT was systematically related to the time it took to correctly identify the target after the POD.
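The linear-trend test in row 3 of Table 7 is a one-degree-of-freedom contrast over the ten rVOT levels. The text does not say exactly how it was computed; a standard by-participants version, sketched here with hypothetical function names and input layout, applies orthogonal linear coefficients to each participant's cell means and tests the resulting contrast scores against zero, so that F(1, n − 1) = t².

```python
import math
from statistics import mean, stdev


def linear_contrast(k):
    """Orthogonal linear-trend coefficients for k equally spaced levels
    (for k = 10: -9, -7, ..., 7, 9)."""
    return [2 * i - (k - 1) for i in range(k)]


def linear_trend_F(cell_means):
    """By-participants linear trend test.

    cell_means: one list per participant holding that participant's mean
    time-to-target at each rVOT level, in order (hypothetical layout).
    Returns (F, (df_num, df_den)) with F = t**2 on the contrast scores."""
    k = len(cell_means[0])
    c = linear_contrast(k)
    scores = [sum(ci * m for ci, m in zip(c, row)) for row in cell_means]
    t = mean(scores) / (stdev(scores) / math.sqrt(len(scores)))
    return t * t, (1, len(scores) - 1)
```

The by-items (F2) version is the same computation with one row per item instead of one per participant.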

Table 7.

Results of an ANOVA examining the effect of lexical endpoint and rVOT on the time-to-target in Experiment 2.

| Row | Factor | Test | df | F | p |
|---|---|---|---|---|---|
| 1 | Lexical-Endpoint | F1 | 1, 18 | 3.4 | 0.083 |
| | | F2 | 1, 9 | 3.5 | 0.093 |
| | | minF’ | 1, 24 | 1.7 | 0.2 |
| 2 | rVOT | F1 | 9, 162 | 4.8 | 0.0001 |
| | | F2 | 9, 81 | 3.3 | 0.002 |
| | | minF’ | 9, 185 | 2.0 | 0.04 |
| 3 | rVOT (trend) | F1 | 1, 18 | 29.8 | 0.0001 |
| | | F2 | 1, 9 | 30.6 | 0.0001 |
| | | minF’ | 1, 24 | 15.1 | 0.0007 |
| 4 | Endpoint × rVOT | F1 | | <1 | |
| | | F2 | | <1 | |
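The minF’ rows of Table 7 combine the by-participants (F1) and by-items (F2) statistics. A sketch of the standard min F′ formula, minF′ = F1·F2 / (F1 + F2), with denominator df = (F1 + F2)² / (F1²/n2 + F2²/n1), where n1 and n2 are the denominator df of F1 and F2 (the function name is ours):

```python
def min_f_prime(f1, f2, df1_error, df2_error):
    """Combine a by-participants F1 and a by-items F2 into min F'.

    df1_error, df2_error: denominator df of F1 and F2.
    Returns (min F', denominator df); the numerator df is that of F1/F2."""
    min_f = (f1 * f2) / (f1 + f2)
    df = (f1 + f2) ** 2 / (f1 ** 2 / df2_error + f2 ** 2 / df1_error)
    return min_f, df


# Row 1 of Table 7: F1(1, 18) = 3.4, F2(1, 9) = 3.5
f, df = min_f_prime(3.4, 3.5, 18, 9)
print(round(f, 1), round(df))  # 1.7 24
```

Applied to rows 1 and 3, this reproduces the tabled minF’ values (1.7 and 15.1, each on 1 and 24 df).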

Because the foregoing analysis excluded one participant (who was missing a data point at a single VOT), it was repeated as a hierarchical regression analysis, similar to the one conducted in Experiment 1 (see Table 8). Again, participant codes were entered in the first step and accounted for 42.5% of the variance. In the second step, rVOT and lexical endpoint accounted for an additional 2.1% of the variance, but only rVOT reached significance individually (B = .81 ms/VOT, 95% CI of slope = .43). Finally, the interaction term was added and accounted for virtually no additional variance.

Table 8.

Results of a hierarchical regression analysis examining the effect of lexical endpoint and rVOT on the time-to-target in Experiment 2.

| Analysis | Step | Factor | R² change | df | F/t | p |
|---|---|---|---|---|---|---|
| Subject analysis | 1 | Subject | .425 | 19, 380 | 14.8 | 0.0001 |
| | 2 | Main effects | .021 | 2, 378 | 7.2 | 0.001 |
| | | Lexical Endpoint | | 378 | .9 | >.1 |
| | | rVOT | | 378 | 3.7 | 0.001 |
| | 3 | Interaction | .0001 | 1, 377 | <1 | |
| Item analysis | 1 | Items | .506 | 9, 190 | 21.6 | 0.0001 |
| | 2 | Main effects | .076 | 2, 188 | 17.13 | 0.0001 |
| | | Lexical Endpoint | | 188 | 2.4 | 0.016 |
| | | rVOT | | 188 | 5.3 | 0.0001 |
| | 3 | Interaction | .006 | 1, 187 | 2.9 | 0.088 |
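Each R² change in Table 8 is tested with the usual incremental F statistic, F = (ΔR² / df_added) / ((1 − R²_full) / df_error), where df_error is the residual df of the fuller model. A minimal sketch (the function name is ours) that recovers tabled values from the reported R² figures:

```python
def f_change(r2_full, r2_reduced, df_added, df_error):
    """Incremental F for adding df_added predictors to a regression.

    r2_full / r2_reduced: R^2 with and without the added predictors.
    df_error: residual df of the fuller model (n - predictors - 1)."""
    return ((r2_full - r2_reduced) / df_added) / ((1.0 - r2_full) / df_error)


# Subject analysis, step 2: R^2 rises from .425 to .446 with 2 predictors.
print(round(f_change(0.425 + 0.021, 0.425, 2, 378), 1))  # 7.2
# Subject analysis, step 1: participant codes against an empty model.
print(round(f_change(0.425, 0.0, 19, 380), 1))  # 14.8
```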

Results were similar when this analysis was repeated with items as the fixed effect. On the first step, item codes accounted for 50.6% of the variance. When rVOT and lexical endpoint were added in the second step, an additional 7.6% of the variance was accounted for, and both factors were individually significant (Endpoint: B = 9.84 ms, 95% CI of slope = 8.0; rVOT: B = .75 ms/VOT, 95% CI of slope = .48). Finally, the interaction term did not account for significant additional variance on the third step.

Categorical or Gradient?

To verify that the linear model relating time-to-target and VOT was superior to a logistic (categorical) model, we again compared two mixed-effects models in which linear and logistic functions were fit to each participant’s data individually. As before, a dataset was constructed for each participant, consisting of the time-to-target on trials in which the participant was fixating any non-target object. On average, there were 252.0 data points per participant (SD = 75.1).

Results strongly supported the linear model. The linear model accounted for 9.9% of the variance, and the categorical model for 2.5%. More importantly, the BIC measure showed a difference of 620.1 in favor of the linear model (Linear: 62440, Logistic: 63060), and all 20 participants had a lower BIC score for the linear model than the logistic model. Thus, this analysis provides evidence against a categorical model and in favor of a gradient model as the underlying form of the function relating time-to-target to VOT.
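The logic of this comparison can be sketched for a single dataset. This is not the procedure used here: the analysis above fit mixed-effects models, whereas this sketch fits one dataset by least squares, approximates the logistic fit by a grid search over slope and crossover (solving the baseline and height exactly at each grid point), and uses the Gaussian form of BIC, n·ln(RSS/n) + k·ln(n), with k = 2 for the linear model and k = 4 for the logistic. The grid values and function names are hypothetical. Lower BIC is better.

```python
import math


def sigmoid(t):
    """Numerically stable logistic function."""
    if t >= 0:
        return 1.0 / (1.0 + math.exp(-t))
    e = math.exp(t)
    return e / (1.0 + e)


def fit_line(x, y):
    """Least-squares fit of y = a + b*x; returns the residual sum of squares."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxx = sum((xi - mx) ** 2 for xi in x)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    b = sxy / sxx
    a = my - b * mx
    return sum((yi - (a + b * xi)) ** 2 for xi, yi in zip(x, y))


def fit_logistic(x, y, slopes=(0.1, 0.2, 0.5, 1.0, 2.0, 5.0, 10.0), step=0.25):
    """Approximate least-squares fit of y = base + height * sigmoid(s*(x - c)).

    For a fixed slope s and crossover c the model is linear in base and
    height, so those are solved exactly; only (s, c) are grid-searched."""
    lo, hi = min(x), max(x)
    best = math.inf
    for s in slopes:
        for i in range(int(round((hi - lo) / step)) + 1):
            c = lo + i * step
            z = [sigmoid(s * (xi - c)) for xi in x]
            mz = sum(z) / len(z)
            if sum((zi - mz) ** 2 for zi in z) == 0.0:
                continue  # degenerate transform; height not estimable
            best = min(best, fit_line(z, y))
    return best


def bic(rss, n, k):
    """Gaussian BIC, up to a constant shared by both models (rss must be > 0)."""
    return n * math.log(rss / n) + k * math.log(n)


def compare(x, y):
    """Return (BIC_linear, BIC_logistic) for one dataset."""
    n = len(x)
    return bic(fit_line(x, y), n, 2), bic(fit_logistic(x, y), n, 4)
```

On data whose means rise linearly with x, the linear model wins because the logistic cannot lower the residuals yet pays for two extra parameters; on step-like data, the logistic wins by a wide margin.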

Discussion

Experiment 2 replicated the central results of Experiment 1. Recovery from a lexical garden-path was again related to the VOT of the initial consonant, demonstrating that sensitivity to fine-grained sub-phonetic detail persists for at least 240 ms after the POD. The results further demonstrate that gradient effects do not depend on the presence of a visual competitor within the display. In fact, the ability of listeners to recover from the mismatch (as seen in their mouse-click responses) was significantly enhanced when the visual competitor was removed in Experiment 2. One likely explanation for this difference is that in Experiment 1, the integration of visual and linguistic information made garden-path recovery more difficult. That is, participants who were looking at the competitor while it was consistent with the input had additional evidence favoring the competitor, whereas participants in Experiment 2 had no picture of the competitor available to fixate. Thus, there were two sources of information to prevent (or delay) an eye movement to the target in Experiment 1, but only one source (linguistic) in Experiment 2. An alternative, more strategic explanation is that the presence of the visual competitor simply raised participants’ threshold for what counts as a positive exemplar of the target.