Abstract

Eye tracking was combined with the visual half-field procedure to examine hemispheric asymmetries in meaning selection and revision. In two experiments, gaze was monitored as participants searched a four-word array for a target that was semantically related to a lateralized ambiguous or unambiguous prime. Primes were preceded by a related or unrelated centrally-presented context word. In Experiment 1, unambiguous primes were paired with concordant weakly-related context words and strongly-related targets that were similar in associative strength to discordant subordinate-related context words and dominant-related targets in the ambiguous condition. Context words and targets were reversed in Experiment 2. A parallel study involved the measurement of event-related potentials (ERPs; Meyer, A. M., and Federmeier, K. D., 2007. The effects of context, meaning frequency, and associative strength on semantic selection: Distinct contributions from each cerebral hemisphere. Brain Res. 1183, 91–108). Similar to the ERP findings, gaze revealed context effects for both visual fields/hemispheres when subordinate-related targets were presented: initial gaze revealed meaning activation when an unrelated context was utilized, whereas later gaze also revealed activation in the discordant context, indicating that meaning revision had occurred. However, eye tracking and ERP measures diverged when dominant-related targets were presented: for both visual fields/hemispheres, initial gaze indicated the presence of meaning activation in the discordant context, and, for the right hemisphere, discordant context information actually facilitated gaze relative to unrelated context information. These findings are discussed with respect to the activeness of the task and hemispheric asymmetries in the flexible use of context information.

Keywords: Lexical Ambiguity, Cerebral Hemispheres, Context Effect, Eye Tracking

Most English words are ambiguous (Rodd, Gaskell, and Marslen-Wilson, 2004), having either multiple unrelated meanings (homonymy) or multiple related senses (polysemy). For example, the homonym bank can refer to a financial institution or the edge of a river, and the polysemous word lamb can refer to food or a living animal. Lexical ambiguity has been the subject of much research over the past several decades; many studies (e.g., Duffy et al., 1988; Simpson, 1981; Swinney and Hakes, 1976; Tabossi, 1988; Vu et al., 1998) have presented homonyms in a biasing context in order to explore the possible effects of non-lexical sources of information on lexical access (e.g., the semantic context in a sentence like "The office walls were so thin that they could hear the ring …"; Onifer & Swinney, 1981). Several classes of models have developed out of this research: exhaustive models (Swinney, 1979), which argue that multiple meanings of an ambiguous word are automatically activated; selective models (Swinney & Hakes, 1976), which suggest that only the contextually-consistent meaning is initially activated; and hybrid models, which argue that context interacts with meaning frequency (e.g., Duffy et al., 1988; Tabossi, 1988). For example, the hybrid reordered access model developed by Duffy et al. postulates that the dominant (most frequent) meaning is activated first in a neutral context, but that context biased toward the subordinate (less frequent) meaning results in simultaneous activation of both meanings. Although exhaustive models prevailed for a time, the constraining effects of context are widely acknowledged today, with current disputes focusing on the potential interaction between meaning frequency and contextual constraint (see, e.g., Binder and Rayner, 1999, and Kellas and Vu, 1999).

In recent years, studies of ambiguity resolution have increasingly focused on selection processes, rather than initial meaning access (see Gorfein, 2001). Such studies have used a variety of tasks (including naming, relatedness judgment, sentence verification, self-paced reading, and eye tracking) to probe activation for unselected meanings of an ambiguous word, subsequent to selection of the contextually-consistent meaning. Findings suggest that selection involves the inhibition of unselected meanings, which then results in difficulty accessing those meanings (Gernsbacher, Varner, and Faust, 1990; Gernsbacher, Robertson, and Werner, 2001; Simpson and Kang, 1994; Simpson and Adamopoulos, 2001) or integrating them with discourse (Morris and Binder, 2001). In contrast to the interactive effects of meaning frequency and context on lexical access (e.g., Duffy et al., 1988), similar inhibition effects have been observed following dominant- and subordinate-biased contexts (Simpson and Kang, 1994; Gernsbacher et al., 2001; Morris and Binder, 2001).

As interest in the time course of meaning selection has increased, researchers have moved from the use of traditional behavioral measures, which provide information regarding the cognitive processing that occurs at a specific, discrete time point, toward methodologies such as event-related potentials (ERPs; Van Petten and Kutas, 1987) and eye tracking (Rayner and Duffy, 1986), which allow for continuous sampling of cognitive processing and provide multidimensional indices of processing. For example, the visual world paradigm (Cooper, 1974; Tanenhaus et al., 1995) has been combined with eye tracking in order to explore the time course of ambiguity resolution. In one such study, participants listened to neutral or subordinate-biased sentences containing an ambiguous word (e.g., “First, the man got ready quickly, but then he checked the pen …” or “First, the welder locked up carefully, but then he checked the pen …”) while viewing an array of pictures that included a dominant referent (a writing instrument), a subordinate referent (an animal enclosure), and two unrelated distractors (Huettig and Altmann, 2007). At the onset of the ambiguous word in the subordinate-biased context, the probability of fixating the subordinate referent was greater than that for distractors, indicating that the context was successful in directing attention toward the “animal enclosure” concept. However, this did not prevent activation of the dominant meaning when the ambiguous word was processed. At the offset of the ambiguous word in both contexts, the probability of fixating each referent image was greater than that for the unrelated distractors, indicating that multiple meanings had been accessed. The findings of this study appear to be consistent with both exhaustive and hybrid models but inconsistent with selective models. 
However, it is possible that above-baseline fixation of the dominant referent in the subordinate-biased context reflects backward priming from the referent to the ambiguous word (Kiger and Glass, 1983; Van Petten and Kutas, 1987), or priming that originates when the referent is viewed prior to the presentation of the ambiguous word. In other words, although the referent image was intended to serve as a probe for activation of the dominant meaning, the dominant meaning may be activated as a result of the temporal proximity between the referent image and the ambiguous word. To rule out such an interpretation, it may be necessary to temporally separate the prime and target or use a complementary methodology such as ERPs.

Eye-tracking and ERPs have previously been utilized in parallel to obtain converging or complementary evidence regarding issues in language comprehension (e.g., Ledoux, Traxler, and Swaab, 2007; Knoeferle et al., 2005; Knoeferle et al., 2008). Because ERPs provide multiple, functionally-dissociable measures of word and sentence processing, they have proven useful for examining the nature and time course of meaning activation and revision. Two components that have played a particularly important role in studies of language processing are the N400 (Kutas and Hillyard, 1980) and the late positive complex, or LPC (e.g., Curran et al., 1993). The N400 is a negative-going potential that peaks around 400 ms after the onset of a meaningful stimulus. Its amplitude is reduced in the presence of supportive context information, such as a related word or a congruent sentence (see Kutas and Federmeier, 2001, for a review). Differences in N400 amplitude relative to an unrelated baseline have been used to examine the extent to which specific meanings of ambiguous words are activated or selected (e.g., Van Petten and Kutas, 1987). The LPC, a positive-going potential following the N400, has instead been linked to more explicit aspects of meaning selection and revision, such as the realization that a target word is related to an unselected meaning of a previously-presented ambiguous word (Swaab, Brown, & Hagoort, 1998). Through these components, it has proven possible to dynamically track not only whether particular word meanings are active, but when and how that activation occurs. For example, some studies have found evidence that contextual information initially suppresses activation of the inconsistent meaning of an ambiguous word, as suggested by a lack of N400 facilitation to a target word related to that dispreferred meaning. More positive LPC responses to these targets, however, suggested that the suppressed meaning was eventually (re)activated (Swaab et al., 1998, 2003).

Hemispheric Asymmetries in Ambiguity Resolution

In addition to the fact that the multiple meanings associated with a given lexical item may become active at different points in time, there are reasons to believe that meaning activation and selection may take place differentially in the two cerebral hemispheres (e.g., Jung-Beeman, 2005; Burgess and Simpson, 1988; Tompkins et al., 2000). However, the findings of studies investigating hemispheric asymmetries in the selection of ambiguous word meanings have often been conflicting, in both neutral (e.g., Burgess and Simpson, 1988; Hasbrooke and Chiarello, 1998) and biasing contexts (e.g., Coney and Evans, 2000; Faust and Gernsbacher, 1996; Swaab et al., 1998; Tompkins et al., 2000). These studies have used either visual half-field (VF) presentation methods (Gazzaniga et al., 1962) with behavioral measures in neurologically-intact individuals<sup>1</sup>, or behavioral or ERP measures with participants who have unilateral brain damage. Disparate findings have been observed both within and between participant populations. For example, with the presentation of homonyms as primes for lateralized dominant- or subordinate-related targets (e.g., BANK-MONEY or BANK-RIVER), Burgess and Simpson (1988) found that the LH initially activates both meanings but selects the dominant meaning, whereas the RH appears to maintain both meanings. Yet, another study using very similar methods reported the opposite pattern: the RH selected the dominant meaning, whereas the LH failed to select a meaning (Hasbrooke and Chiarello, 1998)<sup>2</sup>.

When sentence context information is available to constrain the possible meaning of homonyms, VF studies with neurologically-intact participants suggest that the LH is more likely to select the meaning that is consistent with context (Faust and Gernsbacher, 1996; Faust and Chiarello, 1998), whereas the RH fails to select (but see also Coney and Evans, 2000). These studies, along with evidence suggesting that LH damage is associated with meaning selection deficits (Copland et al., 2002; Swaab et al., 1998), point to a critical role for the LH in the control of meaning selection. However, deficits in context-based meaning selection have also been observed in patients with unilateral RH damage (McDonald et al., 2005; Tompkins et al., 2000). Thus, it may be that the functions of both hemispheres are essential to normal meaning processing, although the literature to date does not provide a clear picture of what those asymmetric functions are.

Building on work using VF presentation methods with ERP measures to examine hemispheric differences in language comprehension (see, e.g., review by Federmeier, Wlotko, and Meyer, in press), we (Meyer and Federmeier, 2007) examined hemispheric asymmetries in ambiguity resolution, focusing on the effects of context, meaning frequency, and associative strength. ERPs were recorded as neurologically-intact participants decided if a lateralized ambiguous or unambiguous prime was related in meaning to a centrally-presented target. Prime-target pairs were preceded by a centrally-presented context word that could be related or unrelated in meaning. Unambiguous primes were paired with concordant weakly-related context words and strongly-related targets (e.g., taste-sweet-candy) that were similar in associative strength to discordant subordinate-related context words and dominant-related targets in a condition using ambiguous primes (e.g., river-bank-deposit). Context words and targets were reversed in a second experiment, resulting in strongly-related context words and weakly-related targets in the unambiguous condition, and dominant-related context words and subordinate-related targets in the ambiguous condition. In each experiment, there were four general conditions: UU (unrelated context, unrelated target), UR (unrelated context, related target), RR (related context, related target), and RU (related context, unrelated target). The last condition was included in the experiment to prevent the targets from being predictable, but was not analyzed because it was not of theoretical relevance. The UU condition was used as a baseline.

In both experiments, when the context word was unrelated (UR condition), N400 responses were more positive than baseline (facilitated) for all targets associated with ambiguous primes, except when subordinate targets were presented on left visual field-right hemisphere (LVF-RH) trials in Experiment 2. Thus, in the absence of biasing context information, the hemispheres seem to be differentially affected by meaning frequency, with the left maintaining multiple meanings and the right selecting the dominant meaning (cf. Hasbrooke and Chiarello, 1998). In the presence of discordant context information in either experiment (ambiguous RR condition), N400 facilitation was absent in both visual fields, indicating that the contextually-consistent meaning of the ambiguous word had been selected by both hemispheres. Later increases in LPC amplitude to these items indicated that the inconsistent meaning was eventually recovered (cf. Swaab et al., 1998), and this recovery occurred more quickly for dominant-related targets (cf. Duffy et al., 1988).

In contrast to the ambiguous conditions, N400 facilitation occurred in both of the unambiguous conditions (RR and UR) in both experiments. However, there were some asymmetries in the pattern of response. Specifically, in Experiment 2 the LH showed less facilitation for the weakly-related target when a strongly-related context had been presented (compared to the unrelated context condition), suggesting that with LH processing, context information shaped meaning selection even for these unambiguous words. In Experiment 1, the RH also showed greater facilitation than the LH for the strongly-related target when a weakly-related context was presented, suggesting that it obtained more of a boost from weak contextual information. Overall, the priming patterns across the experiments indicated that both hemispheres process ambiguous and unambiguous words in parallel, but that the LH may be more likely to focus activation on a single, contextually-relevant sense, whereas the RH is more sensitive to meaning frequency when contextual information is absent.

Our findings were inconsistent with the primary tenet of the coarse coding hypothesis (Beeman et al., 1994; Jung-Beeman, 2005), namely that the RH activates a broader range of semantic features than the LH. We found that whereas the LH activated both the dominant and subordinate meanings of ambiguous words out of context, the RH initially either inhibited or failed to activate the subordinate meaning, both of which are inconsistent with coarse coding. However, our results did align with a secondary assumption of the coarse coding hypothesis regarding the nature of meaning processing by the LH, which is postulated to strongly activate central features of words. This focal activation is assumed to be influenced by attention: priming by weak associates does occur in the RVF-LH, and, under conditions that promote automatic processing, such priming is equivalent across the hemispheres (Beeman et al., 1994). Thus, the hypothesis suggests that weakly-related information can be activated in the LH, but that controlled processes focus attention on more strongly-related information. Our findings for the LH were consistent with the idea of controlled processing being stronger in this hemisphere, at least for unambiguous words, as the LH was more likely to focus activation on a single contextually-relevant sense.

The Current Study

Given the finding that the LH may be more likely to engage in controlled meaning selection processes, in the current study our aim was to seek converging or complementary evidence for our ERP study through the use of eye tracking and an active meaning selection task. The combination of VF methods with eye tracking is novel to the literature; thus, a secondary goal of the study was to test this new method pairing. We modified the procedure used in the ERP study, such that the target word was presented with three unrelated distractors, and participants were asked to search for a related word while their eye movements were monitored. Our primary dependent measure is the gaze proportion for each word within the array. If participants are more likely to look at a related word than to look at unrelated words within a given time window, it can be inferred that the related meaning is active at that time. As in the previous study, participants also performed a relatedness judgment task, pressing a “yes” or “no” button to indicate whether or not one of the four words in the array was related to the lateralized prime. In Experiment 1, subordinate- or weakly-related context words and dominant- or strongly-related targets were presented. In order to fully explore the effects of context, meaning frequency, and associative strength, we reversed the context words and targets in Experiment 2, which allowed us to examine the processing of subordinate- or weakly-related targets following a dominant- or strongly-related context. The two experiments were run in parallel, with random assignment of participants. Similar to our ERP study (Meyer and Federmeier, 2007), there were four general conditions in each experiment: UU (unrelated context, unrelated target), UR (unrelated context, related target), RR (related context, related target), and RU (related context, unrelated target). As in our ERP study, the RU condition was not analyzed due to its lack of theoretical relevance. The UU condition was used as a baseline for the behavioral analysis, but not for the eye-tracking analysis. For the latter, we followed the standard practice in visual world studies (e.g., Huettig and Altmann, 2007) of using as a baseline the average gaze directed to the unrelated distractors that were presented along with the critical word on related trials.

It is important to note that the methods used in this study differ in some respects from those used in typical visual world eye-tracking studies. In such studies, participants’ eye movements are monitored as they listen to instructions directing them to manipulate objects (Tanenhaus et al., 1995) or to click on clip-art pictures using a computer mouse (Allopenna et al., 1998), or as they passively view pictures that are referred to by auditory sentence stimuli (Altmann and Kamide, 1999). In the current study, visual context words and primes were used rather than auditory stimuli, the visual stimuli consisted of words rather than pictures<sup>3</sup>, semantically-related items were used rather than referents<sup>4</sup>, and participants were given explicit instructions to search for related items. This explicit task has more in common with the search tasks used in studies of visual attention (e.g., Walsh et al., 1999).

Right hemisphere dominance in spatial attention and visual search tasks is well established in the literature (see O’Shea et al., 2006, for a review). The right hemisphere advantage for spatial attention is especially prevalent in right-handed individuals, with 95% of these participants showing greater right cerebral perfusion during a spatial attention task (Floel et al., 2005). Thus, it may be the case that the participants in the current study (all right-handed) will exhibit above-baseline gaze for the related target more rapidly on trials in which the prime is presented to the LVF-RH. On the other hand, a RVF-LH advantage for word recognition is also well established in the literature (e.g., Jordan et al., 2003), making it difficult to predict the likely direction of a general hemispheric advantage for this type of task. To make it less likely that the VF of target presentation would interact with the VF of prime presentation, each word in the target array was placed so that its closest edge was approximately 7 degrees from the central fixation point. Thus, when the targets were initially presented they were not within the functional field of view in which information processing can occur, which for letters and words is limited to 5 degrees surrounding fixation when the display contains multiple items (see Irwin, 2004).

Turning to more specific predictions, we expected that initial above-baseline gaze would reflect initial meaning selection, whereas later above-baseline gaze would reflect meaning revision processes, similar to the N400 and LPC components, respectively. Thus, based on the N400 facilitation effects we observed (Meyer and Federmeier, 2007), for both experiments we predicted initial above-baseline gaze for all related targets in the unrelated context (UR) condition, except for subordinate-related targets presented on LVF-RH trials (the ambiguous UR condition in Experiment 2). This pattern would indicate that the LH maintains multiple meanings, whereas the RH selects the dominant meaning or activates the subordinate meaning more slowly.

Following a discordant context (ambiguous RR condition) in both experiments, we predicted that above-baseline gaze to related targets would occur slowly for both hemispheres (compared to the ambiguous UR condition and the unambiguous UR and RR conditions), paralleling the LPC effects we observed and indicating that meaning selection is driven by context (Meyer and Federmeier, 2007). Further, we predicted that revision would occur more quickly for dominant-related targets (Experiment 1), which would be consistent with models arguing that the dominant meaning is automatically accessed in a subordinate-biased context (Duffy et al., 1988; Tabossi et al., 1987).

In the concordant context (unambiguous RR) condition in both experiments, we predicted initial above-baseline gaze to related targets for both hemispheres. However, we expected weaker activation in the LH (compared to the RH or the unambiguous UR condition), similar to the N400 effects that we observed in both ERP experiments (Meyer & Federmeier, 2007).

Results

Recognition Accuracy

Mean A' was .79 (SE = .01)<sup>5</sup>, indicating that participants attended to the context words and could discriminate between these words and distractors.
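As a point of reference, A' can be computed from a participant's hit and false-alarm rates. The sketch below assumes the commonly used computing formula for the nonparametric sensitivity index; the text does not state which variant was used, so the formula, the function name `a_prime`, and the treatment of "hits" (recognized context words) and "false alarms" (accepted distractors) are assumptions made for illustration only.

```python
def a_prime(hit_rate: float, false_alarm_rate: float) -> float:
    """Nonparametric sensitivity index A' (0.5 = chance, 1.0 = perfect).

    Assumed formula (not confirmed by the article):
      H >= F: 0.5 + (H - F)(1 + H - F) / (4 H (1 - F))
      H <  F: 0.5 - (F - H)(1 + F - H) / (4 F (1 - H))
    """
    h, f = hit_rate, false_alarm_rate
    if not (0.0 <= h <= 1.0 and 0.0 <= f <= 1.0):
        raise ValueError("rates must lie in [0, 1]")
    if h == f:
        # Equal hit and false-alarm rates mean chance-level discrimination.
        return 0.5
    if h > f:
        return 0.5 + ((h - f) * (1 + h - f)) / (4 * h * (1 - f))
    # Below-chance performance mirrors the formula around 0.5.
    return 0.5 - ((f - h) * (1 + f - h)) / (4 * f * (1 - h))
```

Under this formula, a value near .79 lies well above the chance level of .5, which is consistent with the interpretation that participants discriminated context words from distractors.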

Relatedness Judgment Performance

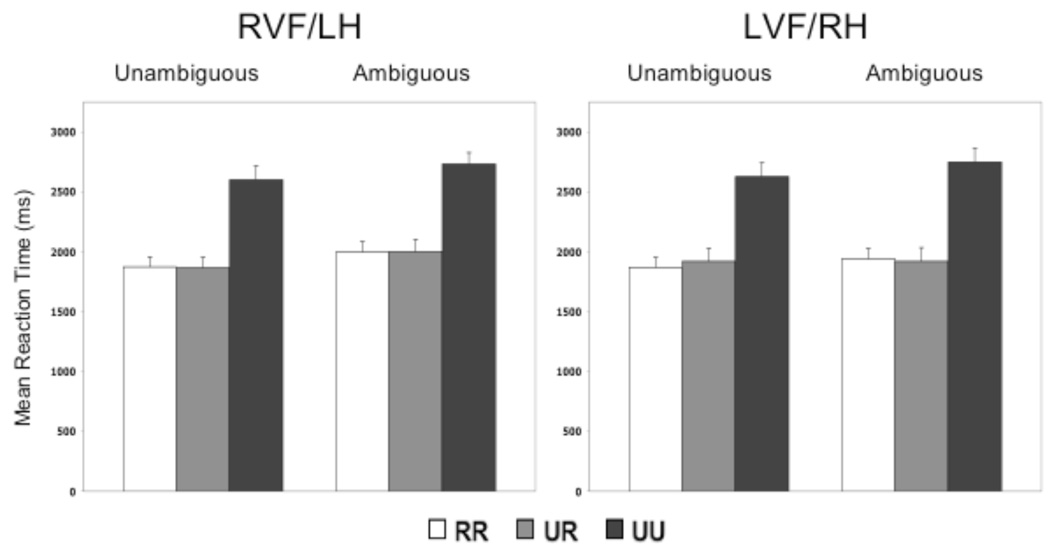

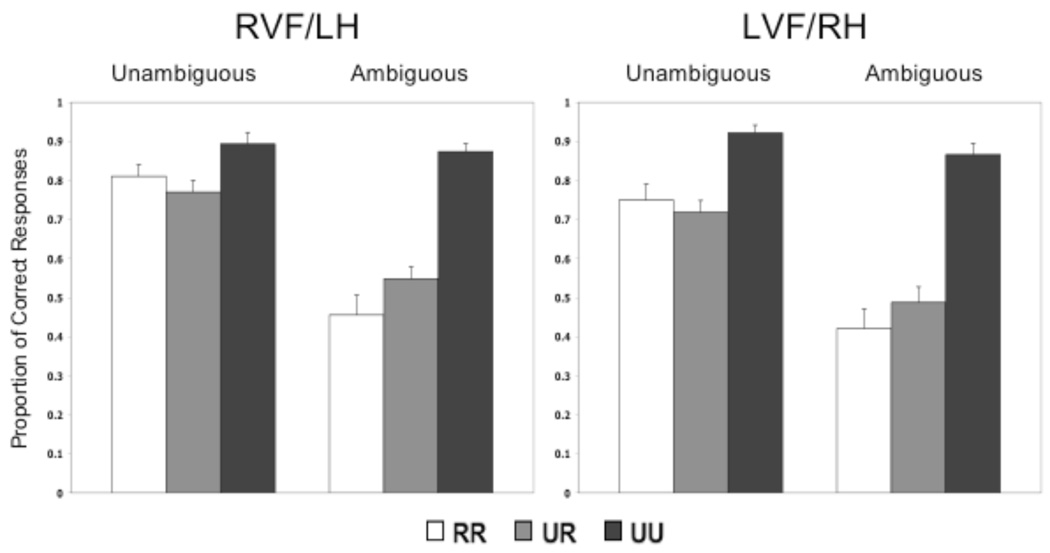

Reaction time (RT) and accuracy data are presented in Figure 1 and Figure 2, respectively. RT and accuracy data were subjected to separate 2 (Visual Field: RVF vs. LVF) × 2 (Ambiguity: Unambiguous vs. Ambiguous) × 3 (Relatedness: RR vs. UR vs. UU) analyses of variance (ANOVAs). The RT analysis utilized individual participants’ median response times from trials involving a correct judgment response (the means and standard errors presented in the figures are based on these medians). None of the interaction effects were significant (all p’s > .22). There were significant main effects of Ambiguity [F(1, 21) = 14.02, p < .01, MSE = 44,965] and Relatedness [F(2, 42) = 95.67, p < .001, MSE = 174,696], whereas the main effect of Visual Field was not significant [F(1, 21) < 1]. Responses were faster in the unambiguous condition, indicating that distinct meanings made the task more difficult. Responses were also faster in the related conditions, indicating that participants complied with the instruction to respond as soon as they saw a related word.

Figure 1. Mean RT from the relatedness judgment task, Experiment 1. The means and standard error bars are based on individual participants’ median RTs.

Figure 2. Relatedness judgment accuracy, Experiment 1. Bars represent standard error.

An ANOVA involving the proportion of correct responses revealed main effects of Visual Field [F(1, 21) = 11.25, p < .01, MSE = .01], Ambiguity [F(1, 21) = 28.41, p < .001, MSE = .01], and Relatedness [F(2, 42) = 6.09, p < .01, MSE = .03]. Consistent with the RVF-LH advantage for word recognition that is typically observed in VF studies (e.g., Jordan et al., 2003), accuracy was higher on RVF-LH trials. Accuracy was also higher in the unambiguous condition, paralleling the faster RTs in that condition. Responses were more accurate on unrelated trials, whereas RTs for this condition were slower; thus, participants may have traded off speed for accuracy in this condition.

Because the Visual Field × Ambiguity × Relatedness interaction was significant [F(2, 42) = 4.41, p < .05, MSE = .01], the Ambiguity × Relatedness interaction was examined separately in each visual field. In the LVF-RH, the interaction was significant [F(2, 42) = 3.70, p < .05, MSE = .01]: in the ambiguous condition, responses were more accurate for the unrelated (UU) condition than for either of the related conditions [F(2, 42) = 8.24, p < .01, MSE = .01], whereas in the unambiguous condition, the effect of Relatedness was not significant [F(2, 42) < 1]. The Ambiguity × Relatedness interaction was also significant in the RVF [F(2, 42) = 11.43, p < .001, MSE = .01], but the pattern of effects was different. In the ambiguous condition, accuracy was reduced for the RR condition relative to either the UU or the UR conditions [F(2, 42) = 9.29, p < .001, MSE = .01]. The effect of Relatedness was also significant in the RVF unambiguous condition [F(2, 42) = 4.34, p < .05, MSE = .01], with less accurate responses to the UR condition than to the other two condition types.

Thus, whereas response accuracy in the LVF-RH was unaffected by the presence of the context word, response accuracy in the RVF-LH was sensitive to context: participants were less accurate at detecting the semantic relationship when the context word was discordant (in the ambiguous condition) and more accurate (relative to the UR condition) when the context word was concordant.

Eye Tracking

Total eye-tracking data loss (including saccades directed to the lateralized prime) accounted for 11% of the data. Our analysis of the remaining data focuses on gaze proportions during an epoch of 1650 ms that began at the onset of the target array<sup>6</sup>. This epoch includes data from the period prior to the average relatedness judgment RT for related conditions, which was 1923 ms. The 1650 ms epoch was divided into 300 ms time windows, beginning at 150 ms.<sup>7</sup>
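The windowing scheme described above can be sketched as follows. This is an illustration under stated assumptions rather than the analysis code used in the study: the sample format (time in ms from array onset paired with a region label), the region names, and the choice to compute proportions over valid (non-lost) samples only are all hypothetical.

```python
from collections import Counter

def time_windows(start_ms=150, end_ms=1650, width_ms=300):
    """Split the epoch into consecutive windows of equal width."""
    return [(t, t + width_ms) for t in range(start_ms, end_ms, width_ms)]

def gaze_proportions(samples, window, regions):
    """Proportion of valid gaze samples falling on each region in a window.

    samples: iterable of (time_ms, region) pairs; region is None when the
             sample was lost (hypothetical encoding of data loss).
    window:  (start_ms, end_ms), start inclusive, end exclusive.
    regions: labels of the array positions to report.
    """
    lo, hi = window
    valid = [r for t, r in samples if lo <= t < hi and r is not None]
    counts = Counter(valid)
    n = len(valid)
    return {r: (counts[r] / n if n else 0.0) for r in regions}
```

With the default arguments, `time_windows()` yields the five windows used in the analyses: 150–450, 450–750, 750–1050, 1050–1350, and 1350–1650 ms.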

Initial Target Array Apprehension

Participants typically viewed the array in a clockwise or counterclockwise pattern, beginning with the upper left quadrant. The average gaze proportion for the upper left quadrant was .62 in the 150–450 ms window, while the proportion for the other three quadrants was at .10 or less. The gaze proportion for the upper right and lower left quadrants increased in the 450–750 ms window (to .27 and .25, respectively). The gaze proportion for the lower right quadrant increased more slowly, being equal to .22 in the 750–1050 ms window and .28 in the 1050–1350 ms window.

Interactions between Prime VF and Target VF

In order to ascertain whether processing was affected by a general match (or mismatch) between the spatial position of the target in the array and the position of the prime, we compared (within each window, for each combination of prime VF and target VF) the average gaze directed to related targets with the average gaze directed to words in the same VF when the related target was presented in the other VF. Above-baseline gaze to related targets occurred earlier (in the 450–750 ms window) when the prime was presented to the LVF-RH; this was true for targets in both the left and right halves of the array [t(21) = 2.97, p < .01, and t(21) = 3.72, p < .01, respectively]. For primes presented to the RVF-LH, above-baseline gaze began in the 750–1050 ms window for targets in both the left and right halves of the array [t(21) = 4.16, p < .001, and t(21) = 2.67, p < .05, respectively]. To compare effect sizes across the two halves of the target array, unrelated gaze proportions were subtracted from related gaze proportions and the resulting difference measures were compared using t tests. For LVF primes, effect sizes did not differ as a function of target position within the 450–750 ms window, t(21) = 0.82, p = .42. In contrast, for RVF primes, effect sizes did differ for targets in the right and left halves of the array within the 750–1050 ms window, t(21) = 2.46, p < .05, with greater effect sizes when the target location mismatched the prime location (i.e., when the target was on the left: M = .18, vs. M = .08 for targets on the right). Thus, there did not appear to be a tendency for greater priming when primes and targets were in the same VF (and thus potentially initially directed to the same hemisphere), perhaps because the words in the target array were located outside of the functional field of view when gaze was directed at the fixation cross (see Irwin, 2004). Nevertheless, earlier gaze to related targets on LVF prime trials may reflect a general RH advantage for spatial attention (see O’Shea et al., 2006), although a more detailed analysis indicates that earlier gaze following LVF primes is limited to conditions involving a related context word (see below).

Gaze to Targets

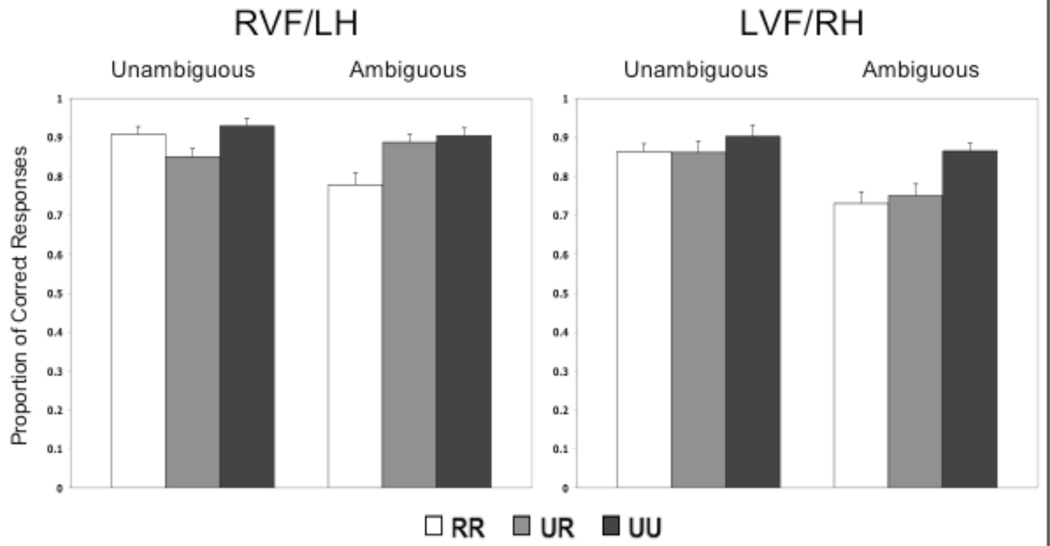

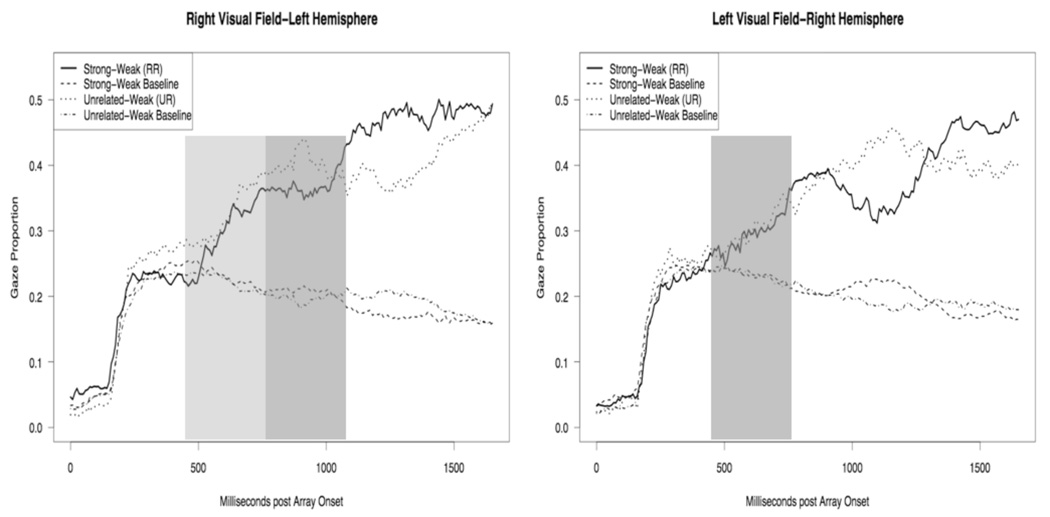

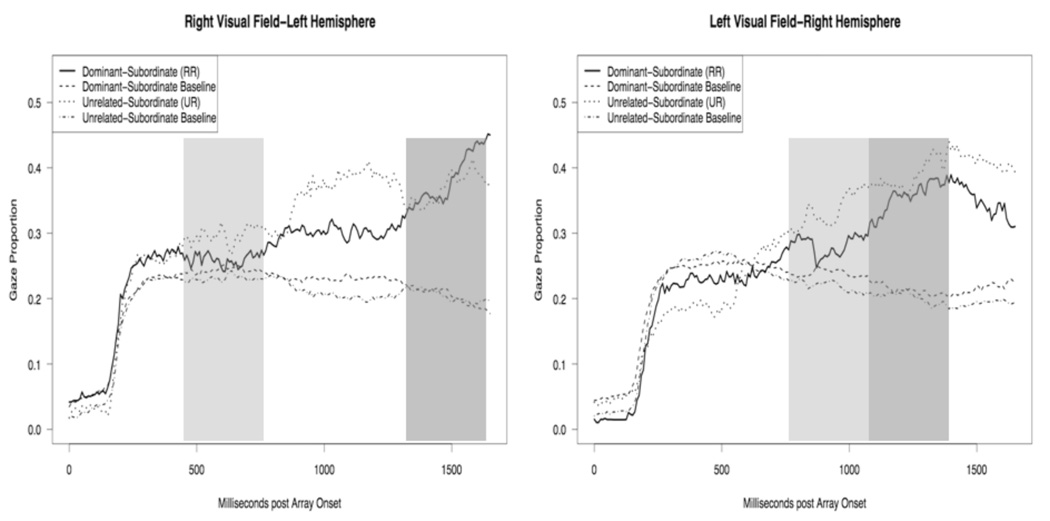

Further analyses were conducted with the data collapsed over target position (in our description of these analyses, “Visual Field” thus always refers to prime VF). As is typical in visual world studies (e.g., Huettig and Altmann, 2007), the average gaze directed to the three unrelated distractors was used as a baseline. Beginning 150 ms after target array onset, Visual Field (RVF vs. LVF) × Ambiguity (Unambiguous vs. Ambiguous) × Context (RR vs. UR) × Relatedness (Target vs. Distractors) ANOVAs were conducted within 300 ms time windows. Figure 3 and Figure 4 depict the gaze data from unambiguous and ambiguous conditions, respectively.

Figure 3. Gaze proportions from unambiguous conditions, Experiment 1. For this figure and those that follow, “baseline” refers to the average gaze directed at the three unrelated distractors. Lighter shading indicates above-baseline gaze in one context condition (UR or RR), and darker shading indicates above-baseline gaze in both conditions. In the LVF-RH, gaze was marginally significant in the UR condition during the 450–750 ms window, p = .08.

Figure 4. Gaze proportions from ambiguous conditions, Experiment 1.

Above-baseline gaze first occurred in the 450–750 ms window, when the main effect of Relatedness became significant, F(1, 21) = 31.0, p < .001, MSE = .01; see Figure 3 and Figure 4. This effect was significant throughout the remainder of the 1650 ms epoch [750–1050 ms: F(1, 21) = 105.9, p < .001, MSE = .02; 1050–1350 ms: F(1, 21) = 80.4, p < .001, MSE = .05; 1350–1650 ms: F(1, 21) = 69.52, p < .001, MSE = .08]. The only other significant main effect occurred in the 1050–1350 ms window, when gaze (to both related and unrelated words) was greater in the Unambiguous condition, F(1, 21) = 5.73, p < .05, MSE = .005; see Figure 3 and Figure 4. These main effects were moderated by an Ambiguity × Context × Relatedness interaction in the last two time windows [1050–1350 ms: F(1, 21) = 5.47, p < .05, MSE = .01; 1350–1650 ms: F(1, 21) = 5.58, p < .05, MSE = .01]. This interaction was explored by examining potential Context × Relatedness interactions within each Ambiguity condition. In the Unambiguous condition (see Figure 3), the Context × Relatedness interaction was significant in both windows [1050–1350 ms: F(1, 21) = 8.65, p < .01, MSE = .01; 1350–1650 ms: F(1, 21) = 7.46, p < .05, MSE = .02]. Within both windows, above-baseline gaze occurred in both context conditions, but the effect was larger in the UR condition [1050–1350 ms: RR η² = .65, UR η² = .77; 1350–1650 ms: RR η² = .61, UR η² = .78]. In the Ambiguous condition (see Figure 4), the Context × Relatedness interaction was not significant (F < 1 in both time windows), but above-baseline gaze occurred in both windows [1050–1350 ms: F(1, 21) = 47.61, p < .001, MSE = .03; 1350–1650 ms: F(1, 21) = 54.06, p < .001, MSE = .04].
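For readers who wish to relate the reported F ratios to effect sizes, the following sketch converts an F statistic to partial eta squared. The text does not specify whether the reported η² values are partial, so treating them as such is an assumption, and the function name is illustrative.

```python
def partial_eta_squared(f_value: float, df_effect: int, df_error: int) -> float:
    """Partial eta squared recovered from an F ratio and its degrees of freedom.

    Uses the identity eta_p^2 = (F * df_effect) / (F * df_effect + df_error).
    Assumption: the article's eta-squared values are of this (partial) kind.
    """
    if f_value < 0 or df_effect <= 0 or df_error <= 0:
        raise ValueError("F must be non-negative and dfs must be positive")
    return (f_value * df_effect) / (f_value * df_effect + df_error)

# Illustration with a reported test from the Ambiguous condition,
# F(1, 21) = 47.61 (1050-1350 ms window):
example = partial_eta_squared(47.61, 1, 21)
```

For single-degree-of-freedom effects such as these, F equals the square of the corresponding t value, so the same quantity can be obtained from a paired t statistic as t² / (t² + df).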

Thus, the results of the ANOVAs indicate that meaning activation had occurred and affected eye position by around 450 ms after array presentation. Above-baseline gaze to related targets then continued for the remainder of the epoch. In later time windows, this effect was stronger on UR trials within the unambiguous condition. These results therefore suggest that both hemispheres initially select dominant-related or strongly-associated meanings, regardless of context, but that a weakly-associated concordant context reduces the strength of the selection effect during later time windows that may reflect more explicit meaning processing.

To more fully examine the time course of meaning activation and selection within each condition, planned t tests were used to compare the average gaze to related targets with the average gaze directed to the three unrelated distractors within the same context condition (RR or UR). Table 1 reports the results of the planned comparisons.

Table 1.

t Values from Planned Comparisons, Experiment 1

| | Unambiguous | | | | Ambiguous | | | |
|---|---|---|---|---|---|---|---|---|
| | RVF-LH | | LVF-RH | | RVF-LH | | LVF-RH | |
| Window (in ms) | RR | UR | RR | UR | RR | UR | RR | UR |
| 150–450 | −1.00 | −2.51 | 1.59 | 0.51 | 1.27 | 0.03 | 0.21 | 0.78 |
| 450–750 | 1.16 | 1.17 | 2.78 | 1.87 | 1.07 | 1.18 | 3.54 | 1.62 |
| 750–1050 | 4.36 | 3.32 | 3.47 | 4.96 | 3.81 | 3.13 | 4.09 | 5.25 |
| 1050–1350 | 6.72 | 6.39 | 3.97 | 8.24 | 4.50 | 4.37 | 5.36 | 4.82 |
| 1350–1650 | 5.20 | 7.20 | 5.10 | 7.61 | 5.92 | 7.22 | 3.72 | 4.59 |

df = 21, α = .05, critical t = 2.08

For targets following unambiguous primes (see Figure 3), the earliest activation was seen in the 450–750 ms window of the LVF-RH condition: gaze was above baseline when a concordant (RR) context was presented; the comparison approached significance when an unrelated (UR) context was presented (p = .08), and became significant in the following window. For the RVF-LH, the earliest activation was seen in the 750–1050 ms window, when both unambiguous conditions were above baseline. Thus, it appears that meaning selection in the RH was facilitated by the presence of a weakly-related context, whereas context did not influence initial meaning selection in the LH.

For targets following ambiguous primes (see Figure 4), the earliest activation was again seen in the 450–750 ms window of the LVF-RH condition, with above-baseline gaze when a discordant (RR) context was presented. Activation in the unrelated (UR) context condition began in the 750–1050 ms window. In the RVF-LH, activation for both context conditions occurred in the 750–1050 ms window. Similar to the unambiguous conditions, the subordinate-related context facilitated selection of the dominant-related target in the RH, whereas context did not influence initial meaning selection in the LH.

Discussion

The results of this experiment revealed a time course of meaning activation that differed in the two hemispheres. The earliest evidence for meaning selection was seen for targets presented to the LVF-RH, in the presence of related context information (RR condition). Whether context information was concordant and weakly-related or discordant and related to the subordinate meaning of an ambiguous prime, gaze to strongly- and dominant-related targets was above baseline in the 450–750 ms time window for the RR condition with LVF-RH presentation. Above-baseline gaze to these same LVF-RH targets in an unrelated context and to all RVF-LH targets (independent of context) occurred later, in the 750–1050 ms window. In still later time windows, a context effect was seen in both VFs, in the form of stronger gaze effects on unrelated context (UR) than related context (RR) trials within the unambiguous condition.

These findings indicate that for the RH, subordinate-related context words facilitate initial processing of the dominant meaning (despite the discordance between prime and target). This is not a pattern predicted by any extant model of lexical ambiguity resolution, since models that incorporate context sensitivity generally assume that context-based selection will reduce, rather than enhance, activation of the alternative meaning (e.g., Swinney and Hakes, 1976; Vu et al., 1998). However, the pattern is consistent with the predictions of the coarse coding hypothesis (Beeman et al., 1994; Jung-Beeman, 2005), which postulates that meaning activation in the RH is diffuse, and thus more likely to encompass multiple, disparate senses of an ambiguous word. The initial gaze pattern observed for the unambiguous condition is also consistent with coarse coding -- weakly-related words facilitated the processing of strongly-related information (cf. summation priming, Beeman et al., 1994). A similar pattern was seen in the ERP version of this experiment (Meyer and Federmeier, 2007): N400 priming in the concordant RR condition was larger in the RH than in the LH, suggesting that the RH is more likely to use weakly-related information to facilitate the processing of more strongly-related information. However, in the present experiment, gaze effects later in the epoch (perhaps reflecting more explicit processing of semantic relationships) were then stronger in unrelated (UR) than in weakly-related (RR) contexts, suggesting that, with time, the RH may use context information to narrow its scope of activation. Behavioral patterns (subsequent to the gaze effects) did not reveal any influence of context for LVF trials in either the ambiguous or the unambiguous conditions; however, responses were overall slower and less accurate for detection of the related targets in the ambiguous condition, suggesting that ambiguity impaired meaning selection for the RH.

In contrast, when primes were initially processed by the LH, evidence for meaning activation began within the same time window for all conditions, suggesting that subordinate- and weakly-related contexts did not initially either suppress or facilitate the processing of dominant meanings and strongly-associated information in the LH. These results are consistent with hybrid models (Duffy et al., 1988; Tabossi et al., 1987), which argue that the dominant meaning is automatically activated in a subordinate-biased context, but are also consistent with exhaustive models (Swinney, 1979), which argue that all meanings of an ambiguous word are initially activated, regardless of the context. This pattern was also seen in a previous visual world study that involved a passive viewing task and target pictures that depicted subordinate and dominant referents (Huettig and Altmann, 2007). Later in the epoch, context effects were seen for the unambiguous condition, with greater gaze to related targets following an unrelated than a weakly-related context word (i.e., UR > RR, the same pattern that was observed for LVF-RH trials). Interestingly, these effects were reversed in the pattern of behavioral responses: participants looked longer at UR than at RR targets, but were less accurate at explicitly reporting the presence of a related word in the UR condition. In the ambiguous condition, responses were instead less accurate for RR than for UR trials, suggesting that selection of the subordinate meaning did impair downstream selection of the dominant target. The overall pattern of behavioral responses for the RVF-LH, with reduced accuracy both when a discordant context is presented (for ambiguous trials) and when a concordant context is lacking (for unambiguous trials), is consistent with claims that LH meaning selection is more sensitive to context (Faust and Chiarello, 1998; Faust and Gernsbacher, 1996).

It is noteworthy that for the ambiguous condition, the eye-tracking results of the current experiment are largely inconsistent with the context effects observed in our ERP study using the same materials (Meyer and Federmeier, 2007). In the ERP version of Experiment 1, both hemispheres showed initial activation (in the N400 window) of the dominant meaning when an unrelated context was presented (UR condition). However, for the discordant RR condition, activation of the dominant meaning occurred later, in the LPC windows, indicating that the subordinate context had initially suppressed the dominant meaning of the ambiguous word, which was recovered later through meaning revision processes. This initial suppression of the dominant meaning was not revealed in the eye gaze patterns of the present experiment. Instead, the LH showed initial activation for the RR and UR conditions within the same time window, and the RH actually showed earlier activation in the RR condition. Thus, it may be the case that the initial gaze proportions reported in the current experiment are more reflective of the later meaning revision processes thought to be indexed by the LPC, rather than the initial meaning activation and selection processes indexed by the N400. Alternatively, it is possible that the active search for related information (as in the present experiment) yields a different pattern of meaning activation and selection than do more passive language comprehension tasks (as in the ERP version of this experiment).

Clear hemispheric asymmetries were present in Experiment 2 of our ERP study, which involved dominant- or strongly-related primes and subordinate- or weakly-related targets. In particular, we found differences between the hemispheres in the initial (N400) processing of subordinate meanings of ambiguous words (cf. Burgess and Simpson, 1988; Hasbrooke and Chiarello, 1998). If these effects are also evident in gaze patterns in a more active task, then for the ambiguous UR condition, we would expect to find initial above-baseline gaze directed to related targets on RVF-LH trials, but not LVF-RH trials. On the other hand, if gaze reflects processes associated with the LPC, then the initial gaze data from Experiment 2 may be consistent with the LPC findings from our ERP study: for both VFs, we would expect to find initial gaze directed to related targets in the ambiguous UR condition.

Experiment 2

Results

Recognition Accuracy

Mean A' was .81 (SE = .01), indicating that participants attended to the context words and were able to discriminate between these words and distractors.
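For readers unfamiliar with A', the sketch below shows one common way to compute it from a hit rate and a false-alarm rate. The text does not state which computational formula was used, so the formula here (the standard nonparametric form with a symmetric branch for below-chance performance) is an assumption, as are the function name and the example rates.

```python
def a_prime(hit_rate, false_alarm_rate):
    """Nonparametric sensitivity index A' (0.5 = chance, 1.0 = perfect).
    Assumed formula; the source does not specify its computation."""
    h, f = hit_rate, false_alarm_rate
    if h == f:
        return 0.5
    if h > f:
        return 0.5 + ((h - f) * (1 + h - f)) / (4 * h * (1 - f))
    # Below-chance performance: mirror-image formula.
    return 0.5 - ((f - h) * (1 + f - h)) / (4 * f * (1 - h))

# Hypothetical rates for one participant (not data from the study).
example = a_prime(0.8, 0.2)
```

Under this formula, values near .5 indicate chance-level discrimination between context words and distractors, so a mean of .81 reflects discrimination well above chance.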

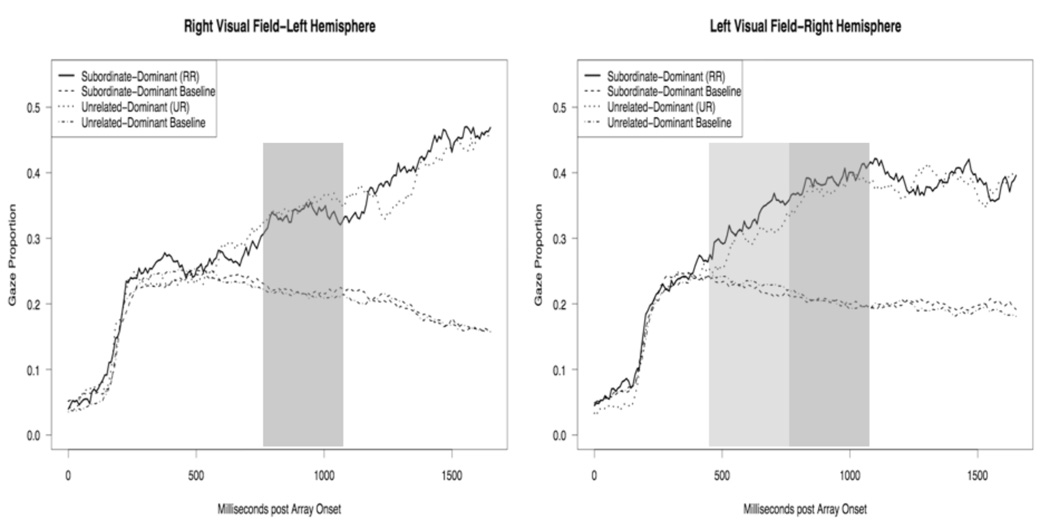

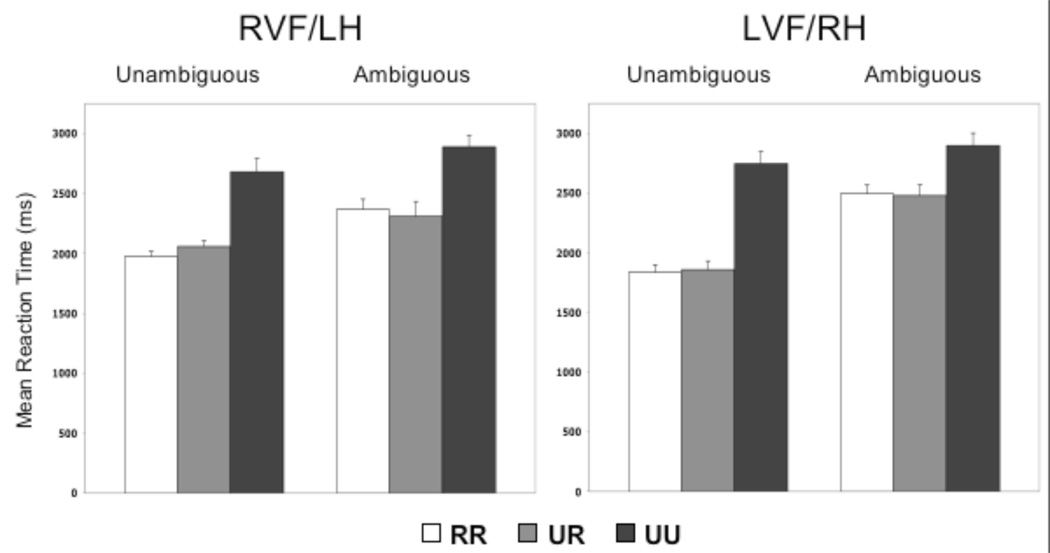

Relatedness Judgment Performance

The RT and accuracy data are presented in Figure 5 and Figure 6, respectively. These data were subjected to separate 2 (Visual Field) × 2 (Ambiguity) × 3 (Relatedness) ANOVAs. In the RT analysis, there were significant main effects of Ambiguity [F(1, 21) = 69.70, p < .001, MSE = 137,387] and Relatedness [F(2, 42) = 42.04, p < .001, MSE = 278,741], whereas the main effect of Visual Field was not significant [F(1, 21) < 1]. As in Experiment 1, responses were faster for unambiguous and related conditions. Because the Ambiguity × Relatedness interaction was also significant, the effect of Relatedness was examined for each ambiguity condition. The effect was significant in both the ambiguous condition [F(2, 42) = 15.56, p < .001, MSE = 219,442] and the unambiguous condition [F(2, 42) = 51.71, p < .001, MSE = 174,280], and in both cases responses were faster for related conditions. Thus, although the effect was larger for the unambiguous condition (η2 = .71, vs. η2 = .43 for the ambiguous condition), the same pattern of faster responses in related conditions was seen in each.
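The effect sizes above can be recovered from the reported F ratios. The sketch below assumes that η2 denotes partial eta squared, which the text does not state explicitly; under that assumption, the values match the reported .71 and .43 after rounding. The function name is illustrative.

```python
def partial_eta_squared(f_value, df_effect, df_error):
    """Partial eta squared recovered from an F ratio and its degrees of freedom."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)

# F ratios for the effect of Relatedness on RT, as reported above.
unambiguous = partial_eta_squared(51.71, 2, 42)
ambiguous = partial_eta_squared(15.56, 2, 42)
```

The same conversion applies to the single-df effects reported for the gaze data, where df_effect is 1 and df_error is 21.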

Figure 5.

Mean RT from the relatedness judgment task, Experiment 2. The means and standard error bars are based on individual participants’ median RTs.

Figure 6.

Relatedness judgment accuracy, Experiment 2. Bars represent standard error.

The same analysis performed on response accuracy revealed main effects of Visual Field [F(1, 21) = 6.56, p < .05, MSE = .01], Ambiguity [F(1, 21) = 66.26, p < .001, MSE = .04], and Relatedness [F(2, 42) = 51.52, p < .001, MSE = .04]. As in Experiment 1, responses were more accurate for RVF trials and in unambiguous and unrelated conditions. The Ambiguity × Relatedness interaction was also significant [F(2, 42) = 25.55, p < .001, MSE = .02]. In the ambiguous condition, the effect of Relatedness was significant [F(2, 42) = 80.89, p < .001, MSE = .03], with more accurate responses in the UU condition, and greater accuracy for UR compared to RR. The effect of Relatedness was also significant in the unambiguous condition [F(2, 42) = 9.82, p < .001, MSE = .03], but in this case the UU condition was more accurate than the related conditions, while UR and RR were not different. These findings indicate that both hemispheres were negatively affected by a discordant dominant-related context.

Compared to the overall relatedness judgment responses in Experiment 1 [RT: M = 2174.93, SE = 81.74; Accuracy: M = .85, SE = .01], overall responses in the current experiment [RT: M = 2381.87, SE = 48.45; Accuracy: M = .71, SE = .01] were slower and less accurate [RT: t(42) = −2.18, p < .05; Accuracy: t(42) = 7.37, p < .001]. Given that the data for the two experiments were collected in parallel from participants who were drawn from the same subject pool and randomly assigned to an experiment, it is likely that the slower, less accurate responses seen in the second experiment are due to the greater difficulty associated with the relatedness judgment task when the target is weakly related to the prime. Supporting this argument, in the UU condition there were no significant differences across experiments in either RT or accuracy [RT: t(42) =−.89, p = .38; Accuracy: t(42) = .44, p = .66]. Furthermore, a similar pattern was present in the accuracy data from our ERP experiments (the RT data were not analyzed; Meyer and Federmeier, 2007).
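The between-experiment RT comparison can be checked from the summary statistics alone. The sketch below assumes equal group sizes (22 participants per experiment, as implied by df = 42), in which case the pooled-variance t statistic reduces to the difference in means divided by the root sum of the squared standard errors. The function name is illustrative.

```python
import math

def t_from_summary(mean_1, se_1, mean_2, se_2):
    """Independent-samples t from group means and standard errors,
    assuming equal group sizes."""
    return (mean_1 - mean_2) / math.sqrt(se_1 ** 2 + se_2 ** 2)

# Overall RT in Experiment 1 versus Experiment 2, as reported above.
t_rt = t_from_summary(2174.93, 81.74, 2381.87, 48.45)
```

This reproduces the reported RT statistic after rounding. The accuracy statistic cannot be checked in the same way, because the accuracy standard errors are reported to only two decimal places.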

Eye Tracking

Analyses were conducted in the same manner as in Experiment 1. Total eye-tracking data loss (including saccades directed to the lateralized prime) accounted for 13% of the data.

Initial Target Array Apprehension

Participants typically viewed the array in a clockwise or counterclockwise pattern, beginning with the upper left quadrant, similar to Experiment 1. The average gaze proportion for the upper left quadrant was .57 in the 150–450 ms window, whereas the proportion for the other quadrants was at .12 or less. The proportion for the upper right and lower left quadrants increased in the 450–750 ms window, becoming .29 and .20, respectively, and continued to increase to .38 and .23 in the 750–1050 ms window. The proportion for the lower right quadrant increased more slowly, becoming .18 in the 750–1050 ms window and .28 in the 1050–1350 ms window.
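As an illustration of how quadrant gaze proportions of this kind could be derived, the sketch below bins gaze samples into the five 300 ms analysis windows and computes the share of samples falling in each quadrant. The sample format, the quadrant labels, the half-open window boundaries, and the exclusion of lost samples from the denominator are all assumptions made for the example, not a description of the study's actual pipeline.

```python
from collections import Counter, defaultdict

# Analysis windows in ms, taken from the text; treating them as
# half-open intervals [start, end) is an assumption.
WINDOWS = [(150, 450), (450, 750), (750, 1050), (1050, 1350), (1350, 1650)]

def gaze_proportions(samples):
    """samples: iterable of (time_ms, quadrant) pairs.
    Returns {window: {quadrant: proportion of that window's samples}}."""
    counts = defaultdict(Counter)
    for time_ms, quadrant in samples:
        for start, end in WINDOWS:
            if start <= time_ms < end:
                counts[(start, end)][quadrant] += 1
                break
    return {
        window: {q: n / sum(tally.values()) for q, n in tally.items()}
        for window, tally in counts.items()
    }

# Hypothetical samples: (time since array onset in ms, quadrant label).
demo = gaze_proportions([(200, "UL"), (300, "UL"), (400, "UR"), (500, "UR")])
```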

Interactions between Prime and Target VF

For all combinations of prime VF and target VF, above-baseline gaze began in the 750–1050 ms window [LVF prime, LVF target: t(21) = 3.89, p < .001; LVF prime, RVF target: t(21) = 2.48, p < .05; RVF prime, LVF target: t(21) = 2.80, p < .05; RVF prime, RVF target: t(21) = 2.32, p < .05]. For each prime VF, effect sizes did not differ across target VF (both p’s > .65). Thus, unlike Experiment 1, in this case there was no evidence of a RH advantage or an interaction between prime VF and target VF.

Gaze to Targets

Figure 7 and Figure 8 depict the gaze data from unambiguous and ambiguous conditions, respectively. A main effect of Visual Field was present between 150 and 750 ms [150–450: F(1,21) = 6.21, p < .05, MSE = .002; 450–750: F(1,21) = 4.27, p = .05, MSE = .001], indicating that gaze (to both targets and distractors) was greater following presentation of the prime to the RVF-LH. A main effect of Ambiguity occurred between 450 and 1650 ms [450–750: F(1,21) = 11.02, p < .01, MSE = .002; 750–1050: F(1,21) = 13.81, p < .01, MSE = .003; 1050–1350: F(1,21) = 5.10, p < .05, MSE = .005; 1350–1650: F(1,21) = 5.11, p < .05, MSE = .01], indicating that gaze (to both targets and distractors) was greater following unambiguous primes (see Figure 7 and Figure 8). A main effect of Relatedness was present between 450 and 1650 ms [450–750: F(1,21) = 17.52, p < .001, MSE = .01; 750–1050: F(1,21) = 65.53, p < .001, MSE = .02; 1050–1350: F(1,21) = 80.03, p < .001, MSE = .04; 1350–1650: F(1,21) = 75.8, p < .001, MSE = .06], indicating that above-baseline gaze was directed to related targets during these windows.

Figure 7.

Gaze proportions from unambiguous conditions, Experiment 2. In the RVF-LH, gaze was marginally significant in the RR condition during the 450–750 ms window, p = .06.

Figure 8.

Gaze proportions from ambiguous conditions, Experiment 2.

The Visual Field × Ambiguity × Relatedness interaction was significant between 150 and 450 ms, F(1,21) = 4.77, p < .05, MSE = .004. For the RVF-LH condition, the Ambiguity × Relatedness interaction was not significant (F < 1), whereas the main effect of Relatedness was significant, F(1,21) = 5.48, p < .05, MSE = .004, indicating that gaze to related targets was greater than baseline. For the LVF-RH condition, the Ambiguity × Relatedness interaction was significant, F(1,21) = 5.47, p < .05, MSE = .004. Gaze to related targets was lower than baseline in the ambiguous condition [t(21) = −2.59, p < .05], whereas there was no difference in the unambiguous condition [t(21) = .31, p = .76].

A significant VF × Relatedness interaction was present in the 450–750 ms window, F(1,21) = 6.83, p < .05, MSE = .004. The effect of relatedness was significant in both VF conditions, but was larger in the RVF-LH [RVF: F(1,21) = 16.25, p < .001, MSE = .01, η2 = .44; LVF: F(1,21) = 8.32, p < .01, MSE = .003, η2 = .28].

An Ambiguity × Relatedness interaction occurred between 450 and 1050 ms [450–750: F(1,21) = 9.84, p < .01, MSE = .01; 750–1050: F(1,21) = 14.31, p < .01, MSE = .01]. In the 450–750 ms window, the effect of Relatedness was significant within the unambiguous condition [F(1,21) = 47.21, p < .001, MSE = .001; see Figure 7], but not the ambiguous condition [F(1,21) = 1.41, p = .24, MSE = .002; see Figure 8]. In the 750–1050 ms window, the effect of Relatedness was significant in both conditions, but was larger in the Unambiguous condition [Unambiguous: F(1,21) = 120.20, p < .001, MSE = .003, η2 = .85; Ambiguous: F(1,21) = 30.15, p < .001, MSE = .003, η2 = .59].

In the 1050–1350 ms window, there was a significant VF × Ambiguity × Context × Relatedness interaction, F(1,21) = 9.30, p < .01, MSE = .01. In the LVF-RH, only the main effect of Relatedness was significant, F(1,21) = 71.14, p < .001, MSE = .02, indicating above-baseline gaze. In the RVF-LH, the Ambiguity × Context × Relatedness interaction was significant, F(1,21) = 11.27, p < .01, MSE = .003. In the UR context condition, only the main effect of Relatedness was significant, F(1,21) = 40.79, p < .001, MSE = .02, indicating above-baseline gaze. The Ambiguity × Relatedness interaction was significant in the RR context condition, F(1,21) = 10.99, p < .01, MSE = .02. Above-baseline gaze occurred in the Unambiguous condition, t(21) = 5.45, p < .001 (see Figure 7), but not the Ambiguous condition, t(21) = 1.78, p = .09 (see Figure 8).

An Ambiguity × Context × Relatedness interaction was present in the 1350–1650 ms window, F(1,21) = 4.95, p < .05, MSE = .01. Only the main effect of Relatedness was significant in the UR context condition, F(1,21) = 57.89, p < .001, MSE = .04, indicating above-baseline gaze. The Ambiguity × Relatedness interaction was significant in the RR context condition, F(1,21) = 10.79, p < .01, MSE = .02. Above-baseline gaze occurred in both Ambiguity conditions, but the effect was larger in the unambiguous condition [Unambiguous: t(21) = 7.59, p < .001, η2 = .73 (see Figure 7); Ambiguous: t(21) = 4.88, p < .001, η2 = .53 (see Figure 8)].

The results of the ANOVAs revealed earlier gaze to related targets following presentation of the prime to the RVF-LH, as well as earlier above-baseline gaze in the unambiguous condition. In the ambiguous condition, a discordant (RR) context delayed gaze to related targets in the RVF-LH, and also decreased the magnitude of the Relatedness effect in both VF conditions.

Table 2 reports the results of the planned comparisons. For targets following unambiguous primes (see Figure 7), above-baseline gaze began in the 450–750 ms window of both LVF-RH conditions. In the same window of the RVF-LH condition, priming occurred in the UR condition, whereas priming in the RR condition was marginal (p = .06), and became significant in the following window.

Table 2.

t Values from Planned Comparisons, Experiment 2

| | Unambiguous | | | | Ambiguous | | | |
|---|---|---|---|---|---|---|---|---|
| | RVF-LH | | LVF-RH | | RVF-LH | | LVF-RH | |
| Window (in ms) | RR | UR | RR | UR | RR | UR | RR | UR |
| 150–450 | 0.06 | 1.74 | −0.82 | 0.99 | 1.11 | 1.29 | −1.20 | −2.63 |
| 450–750 | 2.00 | 4.67 | 2.60 | 2.60 | 0.58 | 2.42 | −0.53 | −0.60 |
| 750–1050 | 4.92 | 6.94 | 5.46 | 5.25 | 1.60 | 4.14 | 1.19 | 4.55 |
| 1050–1350 | 5.45 | 5.12 | 4.15 | 6.63 | 1.78 | 5.06 | 4.00 | 6.22 |
| 1350–1650 | 7.19 | 6.05 | 6.50 | 6.55 | 3.80 | 3.79 | 4.02 | 5.20 |

df = 21, α = .05, critical t = 2.08

For targets following ambiguous primes (see Figure 8), facilitation began in the 450–750 ms window of the RVF-LH condition when an unrelated (UR) context was presented, and in the 1350–1650 ms window when a discordant (RR) context was presented. In the LVF-RH condition, priming began in the 750–1050 ms window when an unrelated context was presented, and in the 1050–1350 ms window when a discordant context was presented. Thus, the planned comparisons indicate that gaze was delayed for both hemispheres when a discordant context was presented, but recovery occurred more quickly for the RH. When an unrelated context was presented, priming occurred earlier for the LH.
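The onset windows described above can be read directly off Table 2. The sketch below is an illustrative check rather than part of the original analysis: it takes the t values for the ambiguous conditions from the table and returns the first window in which t exceeds the critical value of 2.08. The dictionary layout and function name are hypothetical.

```python
CRITICAL_T = 2.08  # df = 21, alpha = .05, from the note under Table 2
WINDOWS = ["150-450", "450-750", "750-1050", "1050-1350", "1350-1650"]

# t values for the ambiguous conditions, copied from Table 2.
AMBIGUOUS_T = {
    ("RVF-LH", "RR"): [1.11, 0.58, 1.60, 1.78, 3.80],
    ("RVF-LH", "UR"): [1.29, 2.42, 4.14, 5.06, 3.79],
    ("LVF-RH", "RR"): [-1.20, -0.53, 1.19, 4.00, 4.02],
    ("LVF-RH", "UR"): [-2.63, -0.60, 4.55, 6.22, 5.20],
}

def onset_window(t_values, critical=CRITICAL_T):
    """First window (in ms) with above-baseline gaze, or None if there is none."""
    for window, t in zip(WINDOWS, t_values):
        if t > critical:
            return window
    return None

onsets = {cond: onset_window(ts) for cond, ts in AMBIGUOUS_T.items()}
```

Only positive t values count toward onset here, so a significantly below-baseline value (such as the LVF-RH UR value in the first window) is not treated as activation.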

Despite the fact that the related targets were more strongly associated in Experiment 1, above-baseline gaze in the unrelated context (UR) condition typically occurred earlier in the current experiment. However, behavioral responses were slower and less accurate in the related conditions of Experiment 2, suggesting that participants found the task to be more difficult when the targets were weakly associated, and searched the display more quickly in order to complete the task prior to the response deadline. In support of this explanation, there was a numerical trend toward a greater proportion of between-quadrant saccades in Experiment 2 (M = .122, SE = .011), compared to Experiment 1 (M = .105, SE = .012), which is consistent with the idea that participants moved their eyes more quickly.

Discussion

As in Experiment 1, the results revealed differing time courses of meaning selection and revision for the two hemispheres. In particular, in an unrelated (UR) context, gaze to subordinate associates of ambiguous primes reached above baseline levels more quickly with RVF-LH presentation than with LVF-RH presentation. Gaze to subordinate targets in both hemispheres then manifested an effect of context, with delayed responses to these items in a discordant (RR) context condition, compared to an unrelated (UR) context. This effect of context was longer-lasting in the RVF-LH (and a tendency for the same pattern was also seen in the RVF-LH for the unambiguous condition). The effect of context was apparent in the behavioral data as well, as relatedness judgment accuracy was higher in the UR ambiguous condition compared to the discordant (RR) condition for both VFs.

Different from the pattern seen in Experiment 1, but replicating the pattern seen in our ERP study (Meyer and Federmeier, 2007), in the RH, meaning activation in the unambiguous UR condition preceded activation in the ambiguous UR condition. Since the UR conditions were controlled for associative strength with the prime, this pattern indicates that processing in the RH is affected by meaning frequency, over and above the effects of associative strength. Thus, it would seem that the RH activates the subordinate meanings of ambiguous words more slowly, or activates them but then either inhibits them or allows them to decay (such that they must be reactivated later). This activation/reactivation process takes longer in the presence of discordant contextual information (pointing to the dominant meaning of the homograph). Note that this inhibitory effect of context on the processing of the subordinate-related target stands in contrast to the facilitating effect of a subordinate-related context on dominant meaning processing that was observed in Experiment 1. Thus, whereas the pattern in Experiment 1 was consistent with the idea, derived from coarse coding (Beeman et al., 1994), that the RH might have an advantage for activating and maintaining multiple meanings of words, this pattern was not seen in Experiment 2 -- indeed, the data from Experiment 2 were inconsistent with core tenets of the coarse coding hypothesis. Whereas coarse coding posits a RH advantage for the activation and maintenance of more distantly-related information (such as the subordinate meaning of an ambiguous word, although the coarse coding hypothesis does not specifically discuss the role of meaning frequency), Experiment 2 – and our prior ERP results – actually revealed a RH disadvantage for processing targets related to the subordinate meaning of ambiguous words.

For the LH, in both experiments, activation patterns in the UR conditions were similar for ambiguous and unambiguous conditions, suggesting no special effect of meaning frequency independent of associative strength. However, in the present experiment, but not in Experiment 1, a discordant context had an inhibitory effect on gaze: above-baseline gaze to the subordinate targets was delayed by at least 600 ms when the context information pointed to the dominant meaning of the ambiguous word (the RR condition) as compared to when it was unrelated in meaning (the UR condition). A similar pattern was seen in the corresponding ERP experiment. Across the experiments, the pattern for the LH (and to a lesser extent for the RH) is consistent with the predictions of the reordered access model (Duffy et al., 1988), which suggests that meaning activation depends on both meaning frequency and context. In particular, following a subordinate-biased context, the dominant meaning may be activated quickly, but following a dominant-biased context, the subordinate meaning may be activated (or reactivated) slowly. Interestingly, a hint of this pattern was evident in the unambiguous condition as well, as gaze did not significantly rise above baseline for the unambiguous RR condition in the 450–750 ms time window in which gaze was above baseline for UR. This, again, is similar to the pattern seen in the ERPs, and suggests that the LH may tend to use context to focus its activation even for words with more homogeneous meaning features.

Overall, whereas the pattern of eye gaze results for Experiment 1 diverged from the corresponding ERP data, the pattern of results for Experiment 2 largely replicated the ERP findings, which showed delayed activation of the subordinate meaning in the RH (manifesting on the LPC but not on the N400) and inhibitory effects of discordant context information for both hemispheres. As will be discussed in more detail in the general discussion, the fact that gaze measures and ERP measures sometimes converge and sometimes diverge points to the possibility of interesting differences between tasks in which meaning is actively sought as opposed to more passively assessed.

General Discussion

This study had two goals: to combine (for the first time) eye tracking with the VF paradigm in order to study hemispheric asymmetries in language processing, and, in doing so, to seek converging evidence for our ERP study of ambiguity resolution (Meyer and Federmeier, 2007). Gaze was monitored as participants searched an array of four words for a target that was related in meaning to an ambiguous or unambiguous lateralized prime. Primes were preceded by a centrally-presented context word. Experiment 1 involved the presentation of dominant-related/strongly-associated targets in the presence of unrelated or subordinate-biased/weakly-associated context words. Context and target words were reversed in Experiment 2 in order to examine the processing of subordinate-related/weakly-associated targets in the presence of unrelated or dominant-biased/strongly-associated context words.

When context information was unrelated (UR condition), gaze patterns across the two experiments indicated that dominant and subordinate meanings generally became active in the same time window as the corresponding strongly- and weakly-associated targets. The only exception to this occurred for LVF-RH primes in Experiment 2, in which there was delayed activation of the subordinate meaning. Thus, there was evidence that the RH is sensitive to meaning frequency, over and above the effect of associative strength -- a conclusion that was also indicated by our prior ERP data (Meyer and Federmeier, 2007).

When context information biased processing toward the dominant meaning of an ambiguous prime (Experiment 2 RR condition), activation of the subordinate meaning was delayed, indicating initial selection of the dominant meaning. This pattern was quite consistent across measures, as accuracy was also reduced in this condition, and a similar pattern of delayed access to the subordinate meaning was seen with ERPs (Meyer and Federmeier, 2007). Gaze patterns indicated that recovery of the subordinate meaning happened more quickly in the RH. The LH also showed a tendency toward slower activation of weakly-related information in a strongly-related context.

In Experiment 1, the presence of a subordinate-biased context actually facilitated processing of the dominant meaning in the RH (relative to the unrelated context condition), similar to the facilitation seen in this hemisphere for strongly-related targets in the presence of a concordant, but weakly-related context word. In contrast, in the LH, initial activation was unaffected by context. However, the discordant context did reduce behavioral accuracy for the LH, suggesting that the subordinate context impaired explicit processing of the dominant meaning. This discrepancy between gaze and behavior may be due to a greater sensitivity of gaze as a measure of implicit aspects of meaning activation. ERPs (Meyer and Federmeier, 2007) showed a still different pattern, with both hemispheres showing delayed access to the dominant meaning, indicating initial selection of the subordinate meaning. These discrepancies may reflect an interaction between meaning frequency and the nature of the task. The visual search task employed in the present study was more active than the task used in the ERP experiments, in which participants simply judged the relatedness of the prime and a single target, without having to engage in a search for related information. In the less active task, both hemispheres selected the contextually-consistent meaning of ambiguous words, regardless of meaning frequency. In contrast, in the more active task, the dominant meaning remained active in an inconsistent context. These findings suggest that meaning frequency or associative strength has a greater effect in an active task (cf. Meyer, 2005). One explanation for such an effect is that the explicit search for meaning enhances bottom-up processing, making the strength of the relationship a more salient variable. This would seem especially likely to occur if context was not reliably helpful in the performance of the task. In the current study, the context and target words were related to the same meaning of the prime (though unassociated to one another) in only one condition, unambiguous RR, which comprised only 12.5% of trials.

Behavioral VF studies of lexical ambiguity, largely using passive tasks, have typically found evidence that the LH selects the contextually-consistent meaning, whereas the RH does not select (for homographs with two meanings of roughly equal frequency, see Faust and Gernsbacher, 1996; for homographs with a dominant meaning, see Faust and Chiarello, 1998). However, measures that may be more sensitive to early, implicit aspects of meaning processing point to context-based selection in the RH as well; ERPs evidenced selection of both meanings (Meyer and Federmeier, 2007) and gaze patterns in the present study evidenced selection for the dominant meaning. In addition, behavioral measures in the present study showed evidence of RH selection of the dominant meaning in Experiment 2. Thus, it seems that asymmetric selection effects in behavior may be less likely to occur when the response occurs in the context of an active search for related meanings.

For the RH, there is converging evidence from gaze and ERPs that indicates that the subordinate meaning is activated more slowly or that it is subjected to an inhibition or decay process. Findings from behavioral VF studies are conflicting regarding this issue, with some evidence of slower activation (occurring by a 750 ms SOA, Burgess and Simpson, 1988) and some evidence of early activation (at SOAs between 80 and 200 ms, Chiarello et al., 1995), possibly followed by inhibition or decay (by a 750 ms SOA, Hasbrooke and Chiarello, 1998). These findings, along with those of other studies using similar materials (e.g., Kandhadai and Federmeier, 2007), are inconsistent with the main tenet of the coarse coding hypothesis, which argues that meaning activation is broader in the RH, including both strongly-related information such as the dominant meaning and weakly-related information such as subordinate meanings (Beeman et al., 1994; Jung-Beeman, 2005). Instead, studies that have manipulated SOA or utilized measures that allow continuous sampling of cognitive processing have found evidence that the subordinate meaning is activated in both hemispheres, but with a different time course in each (Burgess and Simpson, 1988; Meyer and Federmeier, 2007; see also Coulson and Severens, 2007, for similar findings in the context of pun comprehension).

However, several aspects of our ERP, gaze, and behavioral data suggest that the RH might have an advantage in making broader or more flexible use of context information. First, compared to the LH, the RH showed greater N400 priming when a weakly-biased context preceded strongly-related targets, and the LH also showed reduced N400 priming (compared to the unrelated context condition) when a strongly-biased context preceded weakly-related targets. Second, in the current study, the RH showed no decrease in accuracy when contextually-inconsistent dominant targets were presented, whereas the LH did display lower accuracy. Third, compared to both the LH and the unrelated context condition, above-baseline gaze occurred more quickly in the RH when a subordinate- or weakly-related context preceded a dominant- or strongly-related target. Finally, gaze also indicated that the RH recovered the contextually-inconsistent subordinate meaning more quickly than the LH, and there was some indication of faster recovery in the ERP data as well. Other studies that have examined the processing of non-literal aspects of language have similarly yielded evidence that the RH is able to flexibly integrate an unexpected meaning based on prior context (e.g., in a joke such as “When I asked the bartender for something cold and full of rum, he recommended his wife.”; Coulson and Williams, 2005).

Compared to our ERP experiments (Meyer and Federmeier, 2007), the RH advantage for a broader or more flexible use of context was more apparent in the gaze data of the current experiments. This pattern suggests that the advantage may result from or at least be more prominent in active tasks. Interestingly, some of the strongest evidence for the coarse coding hypothesis comes from the literature on insight problems that involve a directed search for information, such as the compound remote associates test (e.g., attempting to think of a word that can be combined with boot, summer, and ground to form compound words or phrases [camp]; see Bowden et al., 2005). Thus, rather than focusing on differences in the scope of meaning activation, a promising area of future research on hemispheric asymmetries in language comprehension would be to focus on the possibility that a broader and/or more flexible use of context information occurs when the right hemisphere’s processing is engaged by an active task.

Experimental Procedure

Experiment 1

Participants

The final set of participants included 22 native English speakers (12 female) with no early (< age 5) exposure to a second language. The mean age was 19.3 (range = 18 to 25). All participants were right-handed as determined by the Edinburgh handedness inventory (Oldfield, 1971); mean laterality quotient was 0.77 (range = 0.18 to 1.0), with 1.0 being strongly right-handed and −1.0 being strongly left-handed. Twelve participants reported having immediate family members who were left-handed.

Apparatus

An Applied Science Laboratories Model 504 High-Speed Eye-Tracking System was used. The eye-tracking system consists of a pan/tilt infrared camera and a control unit, sampling at 120 Hz. Accuracy is within one degree, and precision is within half of one degree. The vertical and horizontal eye position coordinates were recorded on disk during each time sample, and these coordinates were used to determine participants' gaze to entities such as related words, unrelated words, and the fixation cross. Visual stimuli were presented on a 21” monitor. Head movements were virtually eliminated through the use of a chin rest.

Materials

The stimuli consisted of the word triplets utilized by Meyer and Federmeier (2007), which were augmented with additional unrelated words (selected from the MRC database, Coltheart, 1981) to create the four-word target arrays. A total of 104 ambiguous and 104 unambiguous words were used. Both word types were short (mean length of 4.4 letters for ambiguous, 4.8 letters for unambiguous), concrete (mean concreteness rating of 505 for ambiguous, 514 for unambiguous), imageable (mean imageability rating of 518 for ambiguous, 551 for unambiguous), and moderately frequent (mean log frequency of 1.59 for ambiguous, 1.85 for unambiguous; Kucera and Francis, 1967). For each ambiguous word, one dominant-related and one subordinate-related word were selected; the mean dominant and subordinate meaning frequencies were 74% and 13%, respectively (Twilley et al., 1994). Primes were associated with dominant-related words with a mean strength of 0.13 and with subordinate-related words with a mean strength of 0.03 (all association proportions are taken from Nelson et al., 1998). For each unambiguous word, one strongly-related word (mean associative strength 0.14) and one weakly-related word (mean associative strength 0.05) were also selected. In addition, five unrelated words were selected for each ambiguous and unambiguous word. For all related and unrelated word types, the selected words were concrete (mean concreteness ratings between 474 and 510), imageable (mean imageability ratings between 495 and 530), and of moderate frequency (Kucera-Francis mean log frequency between 1.12 and 1.70). Mean word length was between 5.0 and 5.7 letters. At least 75% of the words in each target condition had a noun meaning, approximately half had a verb meaning, and the overall distribution of word types was similar.

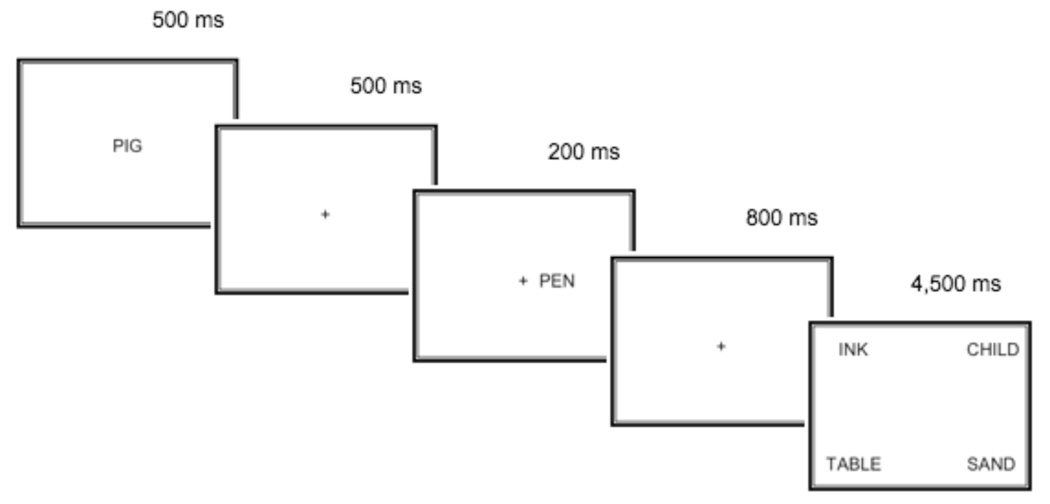

Ambiguous trials involved the presentation of a subordinate-related or unrelated context word and an array containing either a dominant-related target (with three distractors) or four unrelated targets (e.g., pig-pen-ink if both context and target were related to the prime; see top half of Table 3 and Figure 9). The parallel unambiguous trials involved the presentation of a related weak context or an unrelated context and an array containing a related strong target or four unrelated targets (e.g., taste-sweet-candy if both context and target were related to the prime). For all conditions, context words and targets were unassociated. There were thus four possible conditions: UU (unrelated context, unrelated target), UR (unrelated context, related target), RR (related context, related target), and RU (related context, unrelated target). This last condition was included in the experiment to prevent the targets from being predictable, but was not analyzed because it was not of theoretical relevance for the current experiment. The UU condition was included in the analysis of the behavioral data, but not the eye-tracking data. As is typical in visual world studies (e.g., Huettig and Altmann, 2007), we used the average gaze to the three unrelated distractors (from the RR or UR condition) as a baseline in the eye-tracking analyses.

Table 3.

Example Triplets

Experiment 1

| Condition | Ambiguous (Subordinate-Dominant) | Ambiguous (Subordinate-Dominant) | Unambiguous (Weak-Strong) | Unambiguous (Weak-Strong) |
|---|---|---|---|---|
| Unrelated-Related | Ever-Pen-Ink | Boom-Bank-Deposit | Check-Sweet-Candy | Script-Chair-Desk |
| Related-Related | Pig-Pen-Ink | River-Bank-Deposit | Taste-Sweet-Candy | Couch-Chair-Desk |
| Unrelated-Unrelated | Ever-Pen-Braid | Boom-Bank-New | Check-Sweet-Fiber | Script-Chair-Sinister |
| Related-Unrelated | Pig-Pen-Braid | River-Bank-New | Taste-Sweet-Fiber | Couch-Chair-Sinister |

Experiment 2

| Condition | Ambiguous (Dominant-Subordinate) | Ambiguous (Dominant-Subordinate) | Unambiguous (Strong-Weak) | Unambiguous (Strong-Weak) |
|---|---|---|---|---|
| Unrelated-Related | Shutter-Ruler-King | Student-Yellow-Coward | Fate-Door-Handle | Charm-Horse-Ranch |
| Related-Related | Measure-Ruler-King | Golden-Yellow-Coward | Window-Door-Handle | Ride-Horse-Ranch |
| Unrelated-Unrelated | Shutter-Ruler-Bait | Student-Yellow-Girl | Fate-Door-Wasp | Charm-Horse-Planet |
| Related-Unrelated | Measure-Ruler-Bait | Golden-Yellow-Girl | Window-Door-Wasp | Ride-Horse-Planet |

Figure 9.

An example of the procedure used in both experiments (stimuli taken from Experiment 1).

When the four relatedness conditions (UU, UR, RR, and RU) were crossed with VF (left or right), there were eight conditions for each ambiguity type, resulting in a total of 16 conditions. Eight stimulus lists (208 trials each) were created, and each ambiguous or unambiguous word appeared in the eight possible conditions across these lists. Within each condition and across lists, the target was presented an equal number of times in each quadrant.
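The counterbalancing described above can be illustrated with a minimal sketch. It assumes a simple Latin-square rotation; the text states only that each word appeared in all eight conditions across the eight lists, so the rotation rule, the function name `build_lists`, and the condition labels are illustrative assumptions rather than the authors' actual procedure. Quadrant counterbalancing is not modeled.

```python
from collections import Counter
from itertools import product

RELATEDNESS = ["UU", "UR", "RR", "RU"]   # context-target relatedness
VISUAL_FIELDS = ["LVF", "RVF"]           # visual field of the lateralized prime
CONDITIONS = list(product(RELATEDNESS, VISUAL_FIELDS))  # 8 per ambiguity type


def build_lists(n_items=104, n_lists=8):
    """Assign each item to one condition per list (assumed Latin-square rotation).

    Item i in list l receives condition (i + l) mod 8, so every item
    passes through all eight conditions across the eight lists.
    """
    return [
        {item: CONDITIONS[(item + list_idx) % len(CONDITIONS)]
         for item in range(n_items)}
        for list_idx in range(n_lists)
    ]


ambiguous_lists = build_lists()
unambiguous_lists = build_lists()

# Each list holds 104 ambiguous + 104 unambiguous trials.
trials_per_list = len(ambiguous_lists[0]) + len(unambiguous_lists[0])
items_per_condition = Counter(ambiguous_lists[0].values())
print(trials_per_list, dict(items_per_condition))
```

Under this rotation, each list contains 13 items per condition for each ambiguity type (104 / 8), which matches the 208 trials per list reported in the text.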

Procedure

Participants were seated in a dimly-lit room, 90 centimeters from a computer screen. They were informed that the following series of stimulus items would be presented: a centrally-presented context word, a lateralized word, and an array of four words (see Figure 9). Participants were instructed to read the lateralized word without moving their eyes from a central fixation cross, and informed that the primary task was to decide if one of the four words in the array had a meaning that was related to the meaning of the lateralized word. Participants were also told that the context word might be related to the second word and that reading the context word could facilitate perception of the rapidly-presented, lateralized prime. In addition, they were informed that there would be a recognition test over the context words between blocks.

On each trial, context words were presented centrally for 500 ms, with a 1000 ms stimulus onset asynchrony (SOA). The prime (ambiguous or unambiguous) was presented with its inner edge located two degrees to the left or right of fixation for 200 ms, with a 1000 ms SOA. The fixation cross then disappeared at the onset of the target array, which was presented for 4500 ms. Items in the target array were placed with their inner edge located five degrees from the horizontal and vertical midlines, or approximately seven degrees diagonally from the fixation cross. Participants were instructed to make their relatedness judgment response by pressing a “yes” or “no” button (the Y and N keys on a standard keyboard were used) when they saw a related word or determined that none of the four words was related. For a given participant, each response button was paired with the left or right hand; response/hand pairings were counterbalanced across participants.
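The display geometry can be checked with a short calculation. The sketch below is illustrative only: the function names are hypothetical, the diagonal is computed with a flat (small-angle) approximation, and the conversion to centimeters assumes the 90 cm viewing distance given in the Procedure.

```python
import math


def diagonal_eccentricity(horizontal_deg, vertical_deg):
    """Approximate diagonal offset in degrees (small-angle, flat-screen assumption)."""
    return math.hypot(horizontal_deg, vertical_deg)


def degrees_to_cm(angle_deg, viewing_distance_cm):
    """On-screen distance from fixation subtending angle_deg at the given distance."""
    return viewing_distance_cm * math.tan(math.radians(angle_deg))


VIEWING_DISTANCE_CM = 90.0  # from the Procedure

# Array items: inner edge 5 degrees from each midline.
print(round(diagonal_eccentricity(5.0, 5.0), 2))           # about 7.07 degrees
print(round(degrees_to_cm(5.0, VIEWING_DISTANCE_CM), 2))   # offset of array items, cm
print(round(degrees_to_cm(2.0, VIEWING_DISTANCE_CM), 2))   # offset of prime inner edge, cm
```

The five-degree horizontal and vertical offsets give a diagonal of roughly 7.07 degrees, consistent with the value of about seven degrees reported in the text.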

There were four blocks of trials, with 52 trials per block. Between blocks, participants took a short break and were also given a recognition memory test over a subset of the centrally-presented context words from the previous block, to ensure that participants attended to these words.

Data Analysis

If gaze within a quadrant was greater than three degrees away from the horizontal and vertical midlines of the display, it was scored as being on the word within that quadrant. Gaze proportions were calculated within participants and then averaged across participants for each condition. Eye tracking and behavioral data from a given trial were excluded from analysis if participants moved their eyes from the central fixation cross during the presentation of the lateralized word. Samples affected by tracking failure or blinking were also excluded from the reported gaze proportions. Gaze directed to the central fixation area was included in the calculation, and thus the plotted proportions do not sum to 1. In the statistical analyses, the average gaze to the unrelated distractor words was used as a baseline.
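The scoring rule can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' analysis code: coordinates are assumed to be in degrees relative to the fixation cross with positive y upward, lost samples (tracking failure or blinks) are represented as `None`, and all names (`score_sample`, `gaze_proportions`, `off_word`) are hypothetical. The treatment of samples that fall outside both the word regions and the central fixation area is not specified in the text; here they are simply counted as not on any word.

```python
QUADRANTS = ("upper_left", "upper_right", "lower_left", "lower_right")
THRESHOLD_DEG = 3.0  # from the text: more than 3 degrees from both midlines


def score_sample(x_deg, y_deg):
    """Label one gaze sample as a quadrant word, 'off_word', or None if lost."""
    if x_deg is None or y_deg is None:
        return None  # tracking failure or blink: excluded from proportions
    if abs(x_deg) > THRESHOLD_DEG and abs(y_deg) > THRESHOLD_DEG:
        vertical = "upper" if y_deg > 0 else "lower"
        horizontal = "right" if x_deg > 0 else "left"
        return f"{vertical}_{horizontal}"
    return "off_word"  # central fixation area or between-word regions (assumption)


def gaze_proportions(samples, target_quadrant):
    """Proportion of valid samples on the target and the mean over the 3 distractors."""
    labels = [score_sample(x, y) for x, y in samples]
    valid = [label for label in labels if label is not None]
    if not valid:
        return None  # no usable samples
    n_valid = len(valid)
    on_target = sum(label == target_quadrant for label in valid) / n_valid
    distractors = [q for q in QUADRANTS if q != target_quadrant]
    # Baseline: gaze to the three distractors, averaged per distractor.
    baseline = sum(valid.count(q) for q in distractors) / (len(distractors) * n_valid)
    return {"target": on_target, "distractor_baseline": baseline}


example = [(5.0, 5.0), (5.0, 5.0), (-5.0, 5.0), (0.0, 0.0), (None, None)]
print(gaze_proportions(example, "upper_right"))
```

Because off-word samples remain in the denominator, the target and distractor proportions need not sum to 1, in line with the description above. In practice these proportions would be computed per time window and per condition within each participant before averaging.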

Experiment 2

Participants