Abstract

Purpose

Recent studies from our laboratory have suggested that reduced audibility in the high frequencies (due to the bandwidth of hearing instruments) may play a role in the delays in phonological development often exhibited by children with hearing impairment. The goal of the current study was to extend previous findings on the effect of bandwidth on fricatives/affricates to more complex stimuli.

Method

Nine fricatives/affricates embedded in 2-syllable nonsense words were filtered at 5 and 10 kHz and presented to normal-hearing 6–7 year olds who repeated words exactly as heard. Responses were recorded for subsequent phonetic and acoustic analyses.

Results

Significant effects of talker gender and bandwidth were found, with better performance for the male talker and the wider bandwidth condition. In contrast to previous studies, relatively small (5%) mean bandwidth effects were observed for /s/ and /z/ spoken by the female talker. Acoustic analyses of stimuli used in the previous and the current studies failed to explain this discrepancy.

Conclusions

It appears likely that a combination of factors (i.e., dynamic cues, prior phonotactic knowledge, and perhaps other unidentified cues to fricative identity) may have facilitated the perception of these complex nonsense words in the current study.

Previous studies have shown that even mild-to-moderate hearing loss in young children can affect communication abilities, vocabulary development, verbal abilities, reasoning skills, and psychosocial development (Bess, Dodd-Murphy, & Parker, 1998; Davis, Elfenbein, Schum, & Bentler, 1986; Davis, Shepard, Stelmachowicz, & Gorga, 1981; Markides, 1970; 1983). Particular difficulties demonstrated by these children are the production of fricatives and affricates (Elfenbein, Hardin-Jones, & Davis, 1994) and increased errors in noun and verb morphology (Davis et al., 1986; McGuckian & Henry, 2007; Norbury, Bishop, & Briscoe, 2001).

Such performance deficits are often attributed to reduced signal audibility. One factor that influences audibility of the high-frequency components of speech is the bandwidth of hearing instruments. A wider bandwidth can be achieved in custom devices (e.g., in-the-canal and in-the-ear instruments) than in the behind-the-ear (BTE) instruments that are typically used with infants and young children. These differences occur because of tubing resonances associated with the earmold coupling for BTE instruments (Killion, 1980). Thus, even with digital technology, the usable upper limit of bandwidth for these instruments generally is 5–6 kHz. Such bandwidth restriction is less likely to influence the perception of speech for adults with post-lingual hearing loss because linguistic and semantic cues supplement missing acoustic information in conversational speech. For children with acquired hearing loss (HL) who are still in the process of developing speech and language skills, however, this form of signal degradation may be more detrimental.

Of particular concern is the audibility of the high-frequency fricative /s/ (and its voiced cognate /z/) because of its linguistic importance in the English language. They are the 4th and 12th most frequently occurring phonemes, respectively (Tobias, 1959) and serve multiple linguistic functions, including rules for marking plurality, possession, and verb tense (Denes, 1963; Rudmin, 1983). They are also among the most frequently misperceived phonemes for adults with hearing loss (Dubno & Dirks, 1982; Owens, 1978; Owens, Benedict, & Schubert, 1972). In addition, language samples from children with mild-to-moderate hearing loss include numerous errors in noun and verb morphology (e.g., sock vs. socks, jump vs. jumps) (Elfenbein et al., 1994; McGuckian & Henry, 2007; Norbury et al., 2001).

Numerous studies of the acoustic spectra of /s/ have examined talker gender differences (Boothroyd, Erickson, & Medwetsky, 1994; Boothroyd & Medwetsky, 1992; Hughes & Halle, 1956; Jongman, Wayland, & Wong, 2000; Yeni-Komshian & Soli, 1981) while others have included data from children (McGowan & Nittrouer, 1988; Munson, 2004; Nissen & Fox, 2005; Nittrouer, 1995; Nittrouer, Studdert-Kennedy, & McGowan, 1989; Stelmachowicz, Pittman, Hoover, & Lewis, 2001). It is somewhat difficult to characterize results across studies due to differences in vowel context, acoustic analysis procedures, and experimental details. In general, however, the spectral peak for male talkers tends to occur in the 4.2–6.9 kHz range while for adult female and child talkers, the corresponding range is 6.3–8.8 kHz. Thus, the effective bandwidth¹ of current BTE hearing aids (approximately 5–6 kHz) is likely to limit the audibility of /s/ and /z/ for female and child talkers. As a result, children with hearing loss may hear the plural form of words reasonably well when spoken by an adult male, but inconsistently or not at all when spoken by an adult female or a child. It is important to note that early childhood experiences often involve interactions with female caregivers and other children. As such, children may experience inconsistent exposure to /s/ across different talkers, situations, and contexts. Such inconsistencies in stimulus input may help explain delays in the understanding and use of morphological rules (e.g., understanding and using rules for possession, plurality, and verb tense).

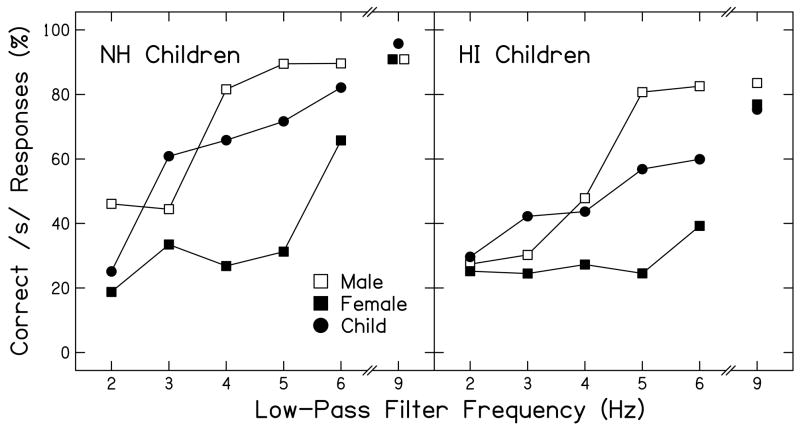

Kortekaas & Stelmachowicz (2000) evaluated the effects of low-pass filtering on the perception of /s/ in adults and three groups of normal-hearing children (5, 7, and 10 yr. olds). Results revealed that the children required a wider signal bandwidth than the adults to perceive /s/ correctly. In a follow-up study, Stelmachowicz et al. (2001) investigated the effect of stimulus bandwidth on the perception of /s/ by children and adults with normal hearing (NH) and HL. Consonant-vowel (CV) and vowel-consonant (VC) nonsense syllables (/si/, /fi/, /θi/, /is/, /if/, /iθ/) were produced by an adult male, an adult female, and a child talker and were presented in a 3-choice, closed-set format. Stimuli were low-pass filtered at six frequencies from 2–9 kHz. For the listeners with HL, stimuli were altered to provide frequency/gain characteristics recommended by the Desired Sensation Level Procedure (Seewald et al., 1997). Figure 1 shows the percent of correct /s/ responses as a function of low-pass filter frequency for the three talkers. The left and right panels show data from 20 children with NH and 20 children with HL (5-to-8 years), respectively. In general, the performance of the children with HL was poorer than that of the children with NH. For both groups, mean performance for the male talker reached its maximum at a bandwidth of 5 kHz. For the female and child talkers, however, mean performance continued to improve up to a bandwidth of 9 kHz for the listeners with and without HL. These results are consistent with the acoustic characteristics of /s/ as spoken by these three talkers.

Fig. 1.

Percent of correct /s/ responses as a function of low-pass filter frequency for children with NH and HL. The parameter in each panel is talker gender (adapted from Stelmachowicz et al., 2001).

In a subsequent study, Stelmachowicz, Pittman, Hoover, and Lewis (2002) investigated the ability of children with HL to perceive the bound morphemes -s and -z (e.g., cat vs. cats, bug vs. bugs) when listening through personal hearing aids. A picture-based test of perception, consisting of 20 nouns in either plural or singular form, was developed. Test items (e.g., “Show me babies”) were spoken by both male and female talkers, and the child’s task was to point to the correct picture. Data were obtained from 40 children (5–13 years) with a wide range of sensorineural hearing losses. Results revealed significant main effects for both talker and plurality. Mean performance for the female talker was poorer than for the male talker, and a factor analysis revealed that mid-frequency audibility (2–4 kHz) appeared to be most important for perception of the fricative noise for the male talker, while a wider frequency range (2–8 kHz) was important for the female talker.

An additional concern regarding audibility of high-frequency speech cues is the ability of children with HL to adequately monitor their own speech. Numerous factors influence self-monitoring, including degree and configuration of HL, and hearing-aid characteristics. In addition, Cornelisse, Gagné, and Seewald (1991) showed that the spectrum of self-generated speech (measured at the ear) contains less energy above 2 kHz than in face-to-face conversation at 0° azimuth. Pittman, Stelmachowicz, Lewis, and Hoover (2003) compared the spectral characteristics of speech simultaneously recorded at the ear and at a position 30 cm in front of the mouth for both adults and children (2–4 years of age) with NH. Long-term average spectra were calculated for sentences and short-term spectra were calculated for selected phonemes. Results revealed a reduction in amplitude (for speech levels measured at the ear) of approximately 8–10 dB for frequencies ≥ 4 kHz relative to speech received at a 0° azimuth. The lower intensity of higher frequencies in self-generated speech is likely to be exacerbated by the bandwidth limitations of hearing aids. As a result, the ability of children with HL to adequately monitor their fricative productions may be compromised.

Recently, Stelmachowicz, Lewis, Choi, and Hoover (2007) investigated the effects of stimulus bandwidth (5 kHz vs. 10 kHz) on a variety of auditory tasks (i.e., novel word learning, listening effort, the perception of nonsense syllables and words) for children with NH and children with mild to moderate HL. In general, the children with HL showed poorer performance than the children with NH on all tasks. However, results for both groups revealed no effect of bandwidth on novel word learning or listening effort, but significant bandwidth effects for nonsense syllable and word recognition. In addition, significantly larger bandwidth benefits for /s/ and /z/ were observed for the children with HL in comparison to the group with NH.

Indirect evidence in support of a bandwidth effect comes from a longitudinal study of early phonological development in children with both NH and HL (Moeller et al., 2007a). In this study, 21 children with NH and 12 children with mild-to-profound sensorineural HL were videotaped interacting with their mothers every 6–8 weeks from 9 months to 3 years of age. The mean age at which the children with HL were aided was 5 months. Despite early intervention, the group with HL showed statistically significant delays in the acquisition of many phonemes relative to their peers with NH. At 16 months of age, children in the group with HL produced only 80% of the vowels produced by the children with NH. Values for the non-fricatives (stops, nasals, glides, and liquids) and fricatives/affricates were 70% and 40%, respectively. In addition, results suggested that delays occurred for 10 of the 10 fricatives/affricates analyzed, not just the few selected fricatives studied in the previous investigations. In general, many fricatives tend to have peak frequencies higher than 5 kHz (Baken & Orlikoff, 2000). It is possible that the limited bandwidth of current hearing instruments may have contributed to these delays. Since approximately 50% of the consonants in English are fricatives, these delays are likely to have a substantial influence on later speech and language development.

When learning new words under natural circumstances, it is assumed that young children will repeat what they hear and that, if the signal is altered or distorted in some way, productions will reflect these stimulus alterations. One way to assess the effects of stimulus bandwidth on phonological development would be to fit two groups of children with HL with typical and extended bandwidth hearing instruments and follow them in a longitudinal study. Unfortunately, a wearable BTE device with an extended high-frequency response is not currently available². Thus, in the current study we assessed the effects of bandwidth on speech production by asking young children with NH to repeat low-pass filtered and unfiltered two-syllable nonsense words (e.g., /rǝˈziv/) that they believed to be words from a foreign language. This approach was used to extend the previous finding on bandwidth restriction using nonsense monosyllables and to minimize the likelihood that children would rely upon their current lexical knowledge to repair speech sounds that had been altered in some way. It was hypothesized that speech production would be affected by the quality of speech information in the signal from the “alien language” model. Based on considerations of the acoustic cues for fricatives, it was predicted that overall performance would be better for the male talker and that a restricted bandwidth would have a greater influence on performance for the female talker than for the male talker. To the extent that the input bandwidth affects the production of fricatives, these results may have implications for the determination of hearing-aid bandwidth and/or recommended hearing-aid gain for children with HL.

Method

Subjects

Twenty-four children with NH (6–7 years) who were native speakers of English participated in this study. Subjects were restricted to this age range because it is likely that they would have developed sufficient metalinguistic knowledge to understand that different spoken languages exist in the world. All children were screened for NH bilaterally (≤ 15 dB HL from 250–8000 Hz). To ensure that children could adequately produce the nine fricatives/affricates used in the study, they were shown pictures of common objects that contained the target phonemes and were asked to name them. They were also asked to produce each fricative or affricate in initial, middle, and final positions in nonsense syllables (e.g., /sⱭ/, /ⱭsⱭ/, /Ɑs/).

Stimuli

Table 1 shows the 14 two-syllable nonsense words used as stimuli. These words were created combining nine fricatives and affricates (/s/, /z/, /θ/, /ð/, /∫/, /t∫/, /dƷ/, /f/, /v/), seven vowels (four corner vowels: /i/, /æ/, /Ɑ/, /u/; two diphthongs: /eI/, /oƱ/; a weak vowel /ǝ/), and 10 additional non-fricative consonants/consonant clusters. Phonemes were combined so that: 1) all syllables within words were legal English syllables, and 2) each word consisted of one or two fricatives/affricates, two vowels, and an additional non-fricative consonant/consonant cluster. Additionally, each target consonant appeared in the initial, medial, and final positions. Stress patterns varied across words, but included a disproportionate number of words with stress on the second syllable to help reinforce the idea that these were non-English words. All 14 test words were spoken by a male talker and a female talker. The female talker was one of the talkers from the Stelmachowicz et al. (2007) study. Stimuli were digitally recorded at a sampling rate of 44.1 kHz (16 bits) using a dynamic microphone with a 0.055–14 kHz response (Shure, SM48) connected to Computerized Speech Lab, Model 4500 (Kay Elemetrics Corp). The tokens were then low-pass filtered at 5 kHz and 10 kHz with a rejection rate >100 dB/octave for a total of 56 items (14 words × 2 talkers × 2 bandwidths).
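The two bandwidth conditions can be illustrated with a minimal sketch of a steep low-pass filter. The text does not say how the filtering was implemented, so the Hamming-windowed sinc FIR design, the 201-tap length, and the helper names `lowpass_fir` and `gain_db` below are all assumptions made for illustration; only the 5 and 10 kHz cutoffs and the 44.1 kHz sampling rate come from the description above. The sketch is not claimed to meet the reported >100 dB/octave rejection rate.

```python
import math

def lowpass_fir(cutoff_hz, fs_hz, num_taps=201):
    """Hamming-windowed sinc low-pass FIR taps, normalized to unity gain at DC.

    Illustrative design only; the original filter implementation is not known.
    """
    mid = (num_taps - 1) / 2
    fc = cutoff_hz / fs_hz  # cutoff in cycles per sample
    taps = []
    for n in range(num_taps):
        k = n - mid
        # Ideal low-pass impulse response, with the k == 0 limit handled explicitly.
        ideal = 2 * fc if k == 0 else math.sin(2 * math.pi * fc * k) / (math.pi * k)
        window = 0.54 - 0.46 * math.cos(2 * math.pi * n / (num_taps - 1))
        taps.append(ideal * window)
    total = sum(taps)
    return [t / total for t in taps]

def gain_db(taps, freq_hz, fs_hz):
    """Magnitude response of the FIR filter at a single frequency, in dB."""
    w = 2 * math.pi * freq_hz / fs_hz
    re = sum(t * math.cos(w * n) for n, t in enumerate(taps))
    im = sum(t * math.sin(w * n) for n, t in enumerate(taps))
    return 20 * math.log10(math.hypot(re, im))

FS_HZ = 44100  # sampling rate reported in the text
taps_5k = lowpass_fir(5000, FS_HZ)    # narrow-bandwidth condition
taps_10k = lowpass_fir(10000, FS_HZ)  # wide-bandwidth condition

# Energy near 8 kHz (roughly where female /s/ peaks) survives only the 10 kHz filter.
for name, taps in (("5 kHz", taps_5k), ("10 kHz", taps_10k)):
    print(name, round(gain_db(taps, 8000, FS_HZ), 1), "dB at 8 kHz")
```

Under these assumptions, the 5 kHz filter strongly attenuates an 8 kHz component while the 10 kHz filter passes it essentially unchanged, which is the contrast the two conditions were designed to create.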

Table 1.

Test stimuli (1–14). Items C1–C4 were included in the test set, but were not scored. The target phonemes are double-underlined.

| Stimulus No. | Nonsense Words |
|---|---|
| 01 | brǝvi∫ |
| 02 | ðǝˈgeIs |
| 03 | Ɑˈθup |
| 04 | rǝˈziv |
| 05 | ˈdƷæmⱭf |
| 06 | zǝˈfin |
| 07 | θǝˈsⱭg |
| 08 | ˈt∫Ɑðɚ |
| 09 | ˈpoƱt∫ið |
| 10 | fǝˈ∫ul |
| 11 | ˈvidⱭθ |
| 12 | ˈkudƷⱭz |
| 13 | ∫ǝˈrⱭdƷ |
| 14 | ˈsoƱkǝt∫ |
| C1 | Ɑˈfup |
| C2 | ˈdƷæmⱭ |
| C3 | ǝˈsⱭg |
| C4 | ˈvidⱭ |

Pilot data revealed that children consistently omitted /f/ and /θ/ in the words /ˈdƷæmⱭf/, /θǝˈsⱭg/, /ˈvidⱭθ/, and /Ɑˈθup/ when repeating these words produced by the female talker. Thus four additional words with /θ/ and /f/ omitted (/ˈdƷæmⱭ/, /ǝˈsⱭg/, /ˈvidⱭ/) or substituted with the most frequent error (/Ɑˈfup/) were created for the male talker condition only, but were not scored. This was necessary to ensure that listeners would not “repair” words spoken by the female talker based on their knowledge of the same words spoken by the male talker. These additional words were low-pass filtered as described above. To simulate typical listening environments and the reduced audibility of the low-amplitude components of speech experienced by listeners with HL, all stimuli were presented in a background of speech-shaped noise at a +15 dB SNR (based on rms amplitude). The noise was mixed with each stimulus waveform. The onset of the noise occurred 100 ms prior to presentation of the target stimulus and terminated 100 ms after the target.
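The noise-mixing step described above can be sketched as follows. This is a hedged illustration, not the authors' procedure: the function names `rms` and `mix_at_snr` are hypothetical, white Gaussian noise stands in for the speech-shaped noise, and a 1 kHz tone stands in for a speech token. Only the +15 dB SNR (based on rms amplitude), the 100 ms noise lead and lag, and the 44.1 kHz sampling rate are taken from the text.

```python
import math
import random

def rms(samples):
    """Root-mean-square amplitude of a sequence of samples."""
    return math.sqrt(sum(x * x for x in samples) / len(samples))

def mix_at_snr(speech, noise, snr_db, fs_hz, pad_ms=100):
    """Scale noise to the requested rms-based SNR and add the speech to it.

    The noise starts pad_ms before the speech and ends pad_ms after it.
    Returns the mixture and the gain that was applied to the noise.
    """
    pad = int(round(fs_hz * pad_ms / 1000))
    total = len(speech) + 2 * pad
    if len(noise) < total:
        raise ValueError("noise segment is too short for this token")
    noise = noise[:total]
    gain = rms(speech) / (rms(noise) * 10 ** (snr_db / 20))
    mixed = [gain * n for n in noise]
    for i, s in enumerate(speech):
        mixed[pad + i] += s
    return mixed, gain

FS_HZ = 44100
rng = random.Random(1)  # fixed seed so the sketch is repeatable
# Stand-ins (assumptions): a 0.5 s tone for the token, white noise for the masker.
speech = [0.5 * math.sin(2 * math.pi * 1000 * t / FS_HZ) for t in range(FS_HZ // 2)]
noise = [rng.gauss(0.0, 1.0) for _ in range(len(speech) + 2 * 4410)]

mixed, gain = mix_at_snr(speech, noise, snr_db=15, fs_hz=FS_HZ)
achieved_snr_db = 20 * math.log10(rms(speech) / (gain * rms(noise[:len(mixed)])))
```

Here the SNR is defined over the whole noise segment, including the 100 ms lead and lag; whether the original rms was computed this way is not stated in the text.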

Procedures

Prior to data collection, each child viewed a 30-second animated slideshow (Microsoft PowerPoint, 2003) in which an alien creature from the planet “Nano” told the children that they would be asked to repeat words in his native language. Additionally, the examiner told the children to imitate the words as closely as possible, including any “accent” they may hear. These instructions were intended to deter children from “repairing” an unknown nonsense word by defaulting to a similar English word, which often happens when words are outside the lexicon of a child (e.g., upon hearing the word “hurl”, children often respond with “girl”). To allow children an opportunity to refine their productions, each word was presented and recorded twice, but only the second response was used in the analysis. On rare occasions when a sample was corrupted with noise or was peak clipped, the first response was used. The 64 words (14 words × 2 bandwidths × 2 talkers + 8 unscored words) were presented randomly at an rms level of 65 dB SPL via wideband supra-aural headphones (Sennheiser, HD 25-1). The children’s responses were digitally recorded in a sound booth using a condenser microphone with a flat response (+/− 2 dB) from 0.2 to 20 kHz (AKG Acoustics C535 EB). Speech tokens were amplified (Shure M267) and sampled at a rate of 44.1 kHz with a quantization of 16 bits. Children were given no feedback on the accuracy of their productions.
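The composition of the 64-item presentation list can be checked with a short sketch. The word labels follow Table 1; the assumption that the four unscored items were recorded by the male talker only and filtered at both bandwidths follows the Stimuli section, while the variable names and the fixed random seed are illustrative choices rather than details of the original procedure.

```python
import itertools
import random

scored_words = [f"{i:02d}" for i in range(1, 15)]  # stimuli 01-14 in Table 1
control_words = ["C1", "C2", "C3", "C4"]           # unscored items in Table 1
talkers = ["male", "female"]
bandwidths_khz = [5, 10]

# 14 words x 2 talkers x 2 bandwidths = 56 scored items
scored = list(itertools.product(scored_words, talkers, bandwidths_khz))
# 4 control words x male talker only x 2 bandwidths = 8 unscored items
unscored = list(itertools.product(control_words, ["male"], bandwidths_khz))

trials = scored + unscored
rng = random.Random(0)  # seed chosen only to make this sketch repeatable
rng.shuffle(trials)

print(len(scored), len(unscored), len(trials))
```

The sketch covers a single randomized pass through the list; the repeated presentation of each word, with the second response scored, is not modeled here.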

Two judges with experience in phonetic coding transcribed the consonants in each word using broad transcription. Broad transcription, rather than narrow transcription, was chosen to capture phonemic changes due to bandwidth restriction that would be considered communicatively meaningful (e.g., in English real words, phoneme substitution can change word meaning as in, “park /pⱭɹk/” vs. “part /pⱭɹt/,” but both /pʰⱭɹk/ and /pⱭɹk/ are understood as variants of “park”). Judges were blinded with respect to talker and bandwidth conditions. Only the target fricatives were scored. In the event of a disagreement, a third judge transcribed the disputed test items. This procedure was necessary for less than 1% of the sample.

Results and Discussion

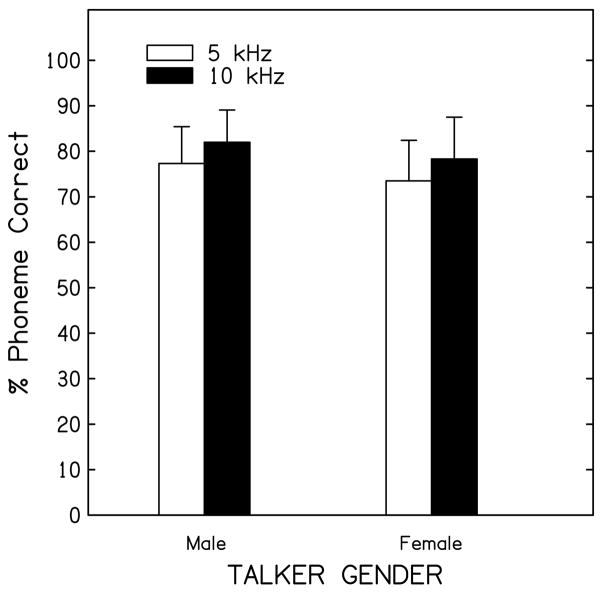

The dependent variable (% correct fricatives) was subjected to a repeated-measures ANOVA with bandwidth (5 kHz, 10 kHz) and talker (male, female) as within-subjects factors. Figure 2 shows the effects of bandwidth and talker gender on all fricative scores. Collapsed across bandwidths, performance was significantly better for the male talker compared to the female talker (F(1,22) = 9.17, p<0.01). In addition, a significant bandwidth effect was observed (F(1,22) = 22.39, p<0.001). Surprisingly, the trends are similar for both talkers with an average increase in fricative perception of 5% at the wider bandwidth.

Fig. 2.

Percentage of correct phonemes as a function of talker gender for stimuli low-pass filtered at 5 and 10 kHz. Error bars represent +/− 1 SD from the mean.

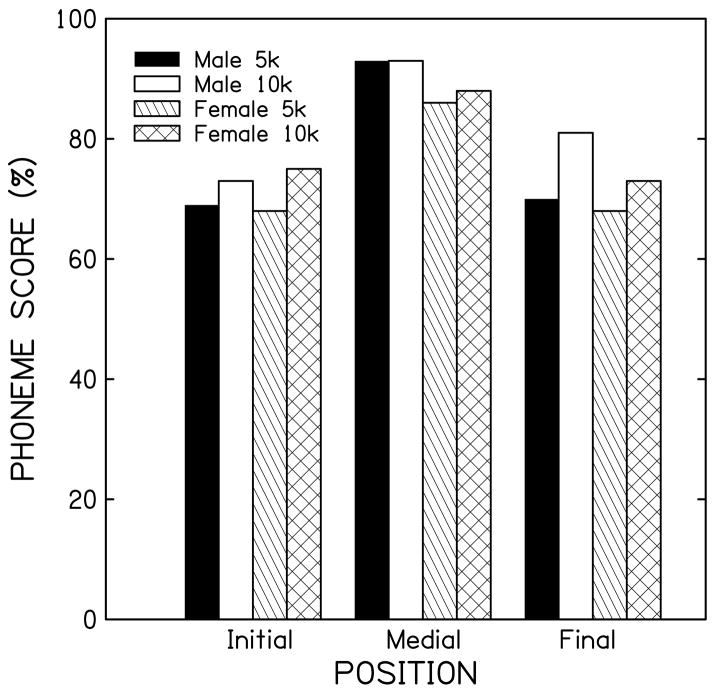

Figure 3 shows fricative scores as a function of target position in the word for the male and female talkers at both bandwidths. Performance was highest for the medial position, possibly because secondary cues to fricative identity may have been provided by both onset and offset transitions.

Fig. 3.

Phoneme score as a function of consonant position in nonsense words. The parameters are talker gender and bandwidth condition.

Error Rates and Error Patterns

While the mean bandwidth effects shown in Figures 2 and 3 are rather small, relatively large effects were observed for specific phonemes. To explore the bandwidth effect in more detail, error rates and error patterns were examined for each talker/bandwidth combination. Table 2 shows scores (in % correct) for all nine fricatives across both talker and bandwidth. Thirteen of the 18 cases showed positive changes in performance (ranging from 1–24%) at the 10 kHz bandwidth. As might be expected for children with NH, overall performance was relatively high with the poorest scores occurring for /θ/, /ð/, and /f/ which are relatively low in amplitude. These three fricatives showed the largest effects of bandwidth suggesting that the limited bandwidth altered their acoustic cues. Planned comparison paired t-tests were performed for each fricative to determine if clinically relevant (i.e., ≥10%) bandwidth differences were statistically significant. A Bonferroni correction was used to control familywise error (p < 0.05). Significant bandwidth effects were observed only for /θ/ spoken by both male and female talkers, and for /f/ spoken by the male talker. These results suggest that even if overall results reveal statistically significant differences in the perception of fricatives filtered at 5 and 10 kHz, the differences were small for most of the phonemes. It is likely that the significant bandwidth effect was driven by the combination of small improvements across a large number of fricatives and moderate improvements for a few fricatives.
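The selection of planned comparisons and the Bonferroni-adjusted criterion can be reproduced from the Table 2 scores with a short sketch. The percentages are copied from Table 2; the dictionary layout and variable names are illustrative, and the sketch covers only the bookkeeping (which comparisons qualify and what per-test alpha results), not the paired t-tests themselves, which require the per-child data.

```python
# Percent correct at (5 kHz, 10 kHz) for each phoneme, copied from Table 2.
table2 = {
    "male": {"θ": (29, 53), "ð": (46, 50), "t∫": (99, 96), "dƷ": (97, 96),
             "f": (68, 78), "v": (86, 93), "∫": (85, 89), "s": (94, 97),
             "z": (92, 88)},
    "female": {"θ": (32, 49), "ð": (53, 63), "t∫": (97, 94), "dƷ": (86, 85),
               "f": (56, 57), "v": (75, 79), "∫": (87, 89), "s": (92, 97),
               "z": (86, 92)},
}

# Bandwidth effect = score at 10 kHz minus score at 5 kHz.
diffs = {
    (talker, phoneme): wide - narrow
    for talker, scores in table2.items()
    for phoneme, (narrow, wide) in scores.items()
}

n_positive = sum(1 for d in diffs.values() if d > 0)

# Comparisons meeting the "clinically relevant" criterion of >= 10 points.
candidates = {key for key, d in diffs.items() if d >= 10}

family_alpha = 0.05
alpha_per_test = family_alpha / len(candidates)

print(n_positive, sorted(candidates), alpha_per_test)
```

Four talker/phoneme combinations meet the 10-point criterion, so the per-test criterion works out to 0.05 / 4 = 0.0125, which matches the value given in the Table 2 caption.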

Table 2.

Mean percent correct scores by phoneme for both talker and bandwidth. A significance level of 0.05 was adjusted for multiple comparisons using the Bonferroni method. Difference scores marked with an asterisk indicate statistically significant differences (p < 0.0125).

| | Male Talker | | | Female Talker | | |
|---|---|---|---|---|---|---|
| Phoneme | 5 kHz | 10 kHz | Diff | 5 kHz | 10 kHz | Diff |
| /θ/ | 29% | 53% | 24%* | 32% | 49% | 17%* |
| /ð/ | 46% | 50% | 4% | 53% | 63% | 10% |
| /t∫/ | 99% | 96% | −3% | 97% | 94% | −3% |
| /dƷ/ | 97% | 96% | −1% | 86% | 85% | −1% |
| /f/ | 68% | 78% | 10%* | 56% | 57% | 1% |
| /v/ | 86% | 93% | 7% | 75% | 79% | 4% |
| /∫/ | 85% | 89% | 4% | 87% | 89% | 2% |
| /s/ | 94% | 97% | 3% | 92% | 97% | 5% |
| /z/ | 92% | 88% | −4% | 86% | 92% | 6% |
| Mean | 83% | 86% | | 79% | 82% | |

The significant results with /θ/ and /f/ are not surprising because previous studies of consonant perception in noise with adults revealed that these are among the least accurately perceived consonants and are frequently confused with each other (Cutler, Weber, Smits, & Cooper, 2004; Miller & Nicely, 1955). However, the error patterns for these phonemes in the current study were somewhat unexpected. Table 3 lists errors >10% across the three positions for /θ/ and /f/. Underlined entries indicate confusions that were rarely observed (< 5%) in previous studies at 0 dB SNR (recall that the SNR in the current study was +15 dB). Notably, the fact that different error patterns were observed for the female and male talkers suggests that children did not use knowledge of nonsense words spoken by the male talker at the 10 kHz bandwidth to identify words spoken by the female talker at a similar or narrower bandwidth.

Table 3.

The most commonly observed errors (>10%) for /θ/ and /f/. Numbers in parentheses show the frequency of each response category. Underlined entries indicate the errors that were minimal (< 5%) at 0 dB SNR in Cutler et al. (2004) or Miller & Nicely (1955).

| Condition | /θ/ (frequency/all errors) | /f/ (frequency/all errors) |
|---|---|---|
| Male 5 kHz | Omission (24/51) | /θ/ (9/23) |
| | /f/ (7/51) | |
| Male 10 kHz | /d/ (10/34) | /θ/ (7/16) |
| | /s/ (8/34) | |
| Female 5 kHz | /s/ (16/48) | Omission (19/32) |
| | Omission (10/48) | |
| | /ð/ (9/48) | |
| | /f/ (8/48) | |
| Female 10 kHz | /s/ (18/37) | Omission (15/31) |
| | /ð/ (7/37) | |

Note. Total number of cases per condition was 72.

The higher omission rate for female /f/ was probably due to its particularly low amplitude in the final position in combination with the narrower bandwidth (5 kHz). Consistent with this explanation, 14 of 19 female /f/ omissions at 5 kHz and all 15 omissions at 10 kHz occurred with /f/ in the final position. Voicing errors (/ð/) were found for female /θ/, probably due to the presence of background noise.

Among the unexpected errors, /d/ responses for /θ/ were observed only for the male talker in the initial position. In contrast, the /s/ responses for female /θ/ at both bandwidths and for the male /θ/ at 10 kHz occurred in all three positions. These unexpected errors were not reported in the previously mentioned consonant confusion studies with adults.

Comparison to Previous Studies

The most surprising finding in this study was the lack of a bandwidth effect for the phoneme /s/ spoken by the female talker. As discussed previously, significant bandwidth effects for the perception of /s/ spoken by a female talker were observed in the Stelmachowicz et al. (2001) study and the Stelmachowicz et al. (2007) study. Procedural differences between these earlier studies and the current investigation may have contributed to these differences. In the Stelmachowicz et al. (2001) study, one token each for /si/ and /is/ spoken by a female talker was low-pass filtered at six frequencies from 2 to 9 kHz and presented in quiet. The vowel /i/ was used to minimize the influence of the vocalic transition. The format was a 3-alternative forced choice (3 AFC) task with the most commonly occurring errors as foils (/fi/ and /θi/ for /si/; /if/ and /iθ/ for /is/). For 5–8 year old children with NH, the mean difference in performance between the 5 kHz and 9 kHz bandwidth conditions was 14% (see Fig. 1). In the Stelmachowicz et al. (2007) study, nine fricatives and affricates were used to construct VC nonsense syllables (also in an /i/ context). To provide a range of token variability, three different female talkers produced five exemplars of each VC for a total of 15 tokens for each fricative. Stimuli were presented at a +10 dB SNR in a closed set response format with 11 choices (9 phonemes, the vowel /i/ in isolation, and an “other” category). In that study, the mean difference in perception of /s/ (10 kHz – 5 kHz) for the 7–14 year old children with NH was 24%. In the current study, /s/ occurred in the initial, medial, and final positions of two-syllable nonsense words (constructed by following English phonotactics) and a wider range of vowels was used. Stimuli were presented at a +15 dB SNR. As discussed previously, the mean performance difference (10 kHz – 5 kHz) for the female talker tokens was only 5%. 
In short, experimental variations across these three studies include differences in SNR (quiet, +10 dB, +15 dB), response format, talkers, and speech materials (CV and VC; VC; two-syllable nonsense words).

With respect to SNR differences across studies, previous studies have shown that, for listeners with NH, a +15 dB SNR is essentially equivalent to presenting stimuli in quiet (Crandell & Smaldino, 1995). Yet, large bandwidth effects were observed in our previous studies (Stelmachowicz et al., 2001; 2007), which were conducted in quiet and +10 dB SNR, and minimal bandwidth effects were found in the current study (+15 dB SNR). The response format also differed across the three studies (3 AFC; 11 AFC; open set), but it is unclear how this factor might account for the observed performance differences. The remaining two differences among the studies are talkers and materials. Recall that one of the three talkers (DIH) in the Stelmachowicz et al. (2007) study was the sole female talker in the current investigation. For comparison, data from the earlier study were re-analyzed to assess bandwidth effects for /is/ tokens produced only by talker DIH. Results revealed a mean bandwidth effect of 19%, which was statistically significant (F(1, 31) = 13.65, p < 0.01).

Acoustic Analyses of /s/ Stimuli

Spectral moments

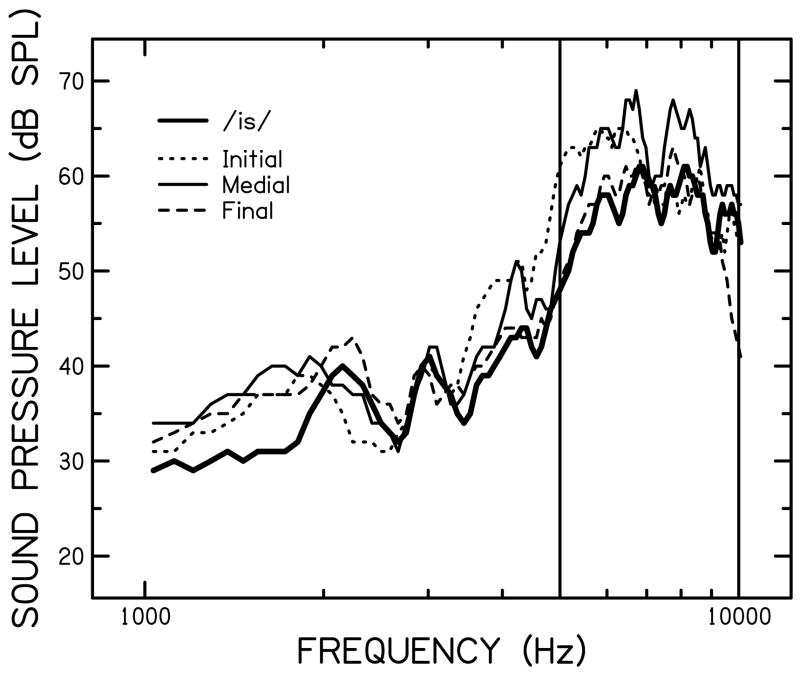

Because the effects of bandwidth differed markedly between the current study and one of the earlier studies (Stelmachowicz et al., 2007) in which the same talker participated, acoustic analyses of the fricative /s/ in each case were conducted. Fig. 4 shows the spectra of /s/ from the previous study (heavy line) in comparison to /s/ in three positions in the current study measured for the entire /s/ duration. Vertical lines are drawn at 5 and 10 kHz to indicate the cutoff frequencies of the two bandwidth conditions. Visual examination of this figure suggests that the primary energy in all four spectra was concentrated at frequencies above 5 kHz, but amplitude differences in the 3–5 kHz region occurred as a function of the position of /s/ for the complex words in the current study. As would be expected, the spectra of /s/ from the earlier study are most similar to the final position in the current study. In the 5 kHz condition in the current study, children may have been able to use spectral information in the 3–5 kHz region to identify /s/. To quantify acoustic differences in the fricative noise for both the 5 kHz and 10 kHz bandwidth conditions in these two studies, four spectral moments for /s/ tokens spoken by the same female talker were measured using Praat (Boersma & Weenink, 2006). Spectral moments were calculated from an 80 ms segment centered at the middle of the fricative noise. For both stimuli at both bandwidths, the frequency range for analysis was restricted to between 0.9 and 12 kHz in order to analyze only the frication component (cf. Jesus & Shadle, 2002) and to ensure that the roll off was higher than the cutoff frequencies. Within the selected 80-ms segment, three overlapping 40-ms Hamming windows (512 FFT) were used. The measurements from these three windows were averaged.
Table 4 shows the results of these analyses (by bandwidth) and frication duration for both studies along with the listeners’ performance changes (% correct) across the bandwidth conditions. When the measurements for the stimuli in the 10 kHz condition were examined, /is/ in the previous study was found to be similar to the medial and final positions in the current study. This is consistent with the similar listener performance for the 10 kHz bandwidth condition in the two studies. An analogous comparison for the 5 kHz condition, however, revealed no clear trend to explain the performance differences between the two studies. The common changes across the four stimuli as a function of bandwidth were a downward shift in the center of gravity, reduced spectral variance, and reduced skewness. The shifts in center of gravity and skewness, though not the shift in variance, are in the direction of typical spectral moments for /f/ and /θ/ (lower center of gravity, higher variance, and lower skewness; see Nissen and Fox, 2005). The phoneme /θ/ was, in fact, the most common error substitute for /s/ in the 5-kHz condition in the earlier study (15%) and the current study (8%).
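The four spectral moments can be illustrated with a minimal sketch that treats the normalized power spectrum as a distribution over frequency. This is an assumption about the computation rather than a reproduction of the Praat analysis: the function name `spectral_moments` is hypothetical, the kurtosis is expressed as excess kurtosis, and the input is a toy bell-shaped spectrum centered at 7 kHz rather than a measured /s/ token. The 0.9–12 kHz analysis range is taken from the text; the windowing and averaging across three frames are not modeled.

```python
import math

def spectral_moments(freqs_hz, power, fmin_hz=900.0, fmax_hz=12000.0):
    """Return (center of gravity, SD, skewness, excess kurtosis) of a power spectrum.

    Power values inside [fmin_hz, fmax_hz] are used as weights over frequency.
    """
    pairs = [(f, p) for f, p in zip(freqs_hz, power) if fmin_hz <= f <= fmax_hz]
    total = sum(p for _, p in pairs)
    if total <= 0:
        raise ValueError("no spectral energy in the analysis range")
    cog = sum(f * p for f, p in pairs) / total

    def central(k):
        # k-th central moment of the power-weighted frequency distribution
        return sum((f - cog) ** k * p for f, p in pairs) / total

    m2 = central(2)
    sd = math.sqrt(m2)
    skewness = central(3) / m2 ** 1.5
    kurtosis = central(4) / m2 ** 2 - 3.0
    return cog, sd, skewness, kurtosis

# Toy spectrum (assumption): bell-shaped energy centered at 7 kHz, 50 Hz bins.
freqs = [50.0 * i for i in range(281)]  # 0 to 14 kHz
wide = [math.exp(-0.5 * ((f - 7000.0) / 1000.0) ** 2) for f in freqs]
# Idealized 5 kHz low-pass: remove all energy above the cutoff.
narrow = [p if f <= 5000.0 else 0.0 for f, p in zip(freqs, wide)]

cog_w, sd_w, skew_w, kurt_w = spectral_moments(freqs, wide)
cog_n, sd_n, skew_n, kurt_n = spectral_moments(freqs, narrow)
```

With this toy input, removing the energy above 5 kHz lowers the center of gravity, narrows the spread, and makes the skewness more negative, which is the same direction of change reported for the measured tokens.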

Fig. 4.

Sound pressure level (in dB) as a function of frequency for /s/ in initial, medial, and final word positions in the current study. For comparative purposes, the thick line shows final position /s/ produced by the same talker (DIH) in the Stelmachowicz et al. (2007) study.

Table 4.

Spectral moments and duration for /s/ spoken by the female talker in the current study (rows 3–5) and the same talker (DIH) in the Stelmachowicz et al. (2007) study (last row). Results are shown for both the 10- and 5-kHz conditions.

| | % correct | | Center of Gravity (Hz) | | Variance (Hz) | | Skewness | | Kurtosis | | Duration (ms) |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Bandwidth (kHz) | 10 | 5 | 10 | 5 | 10 | 5 | 10 | 5 | 10 | 5 | |
| /ˈsoƱkǝt∫/ (Initial) | 100 | 92 | 6475 | 4302 | 1055 | 647 | 0.80 | −1.97 | 1.31 | 6.27 | 231 |
| /θǝˈsⱭg/ (Medial) | 92 | 83 | 7485 | 4072 | 1078 | 949 | 0 | −1.91 | −0.51 | 2.30 | 179 |
| /ðǝˈgeIs/ (Final) | 100 | 100 | 7388 | 3800 | 1144 | 979 | −0.54 | −1.25 | 0.56 | 0.58 | 369 |
| /is/ (Final) | 92 | 73 | 7755 | 4027 | 1036 | 999 | −0.31 | −1.83 | 1.24 | 3.13 | 326 |

Acoustic Analyses of Children’s /s/ Imitations

It is also of interest to explore whether children’s imitation of fricatives might differ acoustically across the two bandwidth conditions. That is, even though the phonemic confusion analyses using broad phonetic transcription did not reveal bandwidth differences, it is still possible that children’s correct /s/ productions might reveal subtle acoustic differences as a function of stimulus bandwidth. To test this hypothesis, acoustic analyses were performed on children’s recordings for correct /s/ productions. The phoneme /s/ was chosen because the current finding for this phoneme was inconsistent with our previous results and because the relatively high performance allowed analysis of 98% of the /s/ samples. Only the correct responses were analyzed to avoid confounding acoustic differences that would occur for phoneme substitutions. Using the acoustic analysis method described earlier, four spectral moments were measured for all 12 conditions (2 bandwidths × 2 talkers × 3 positions) for all the correctly produced /s/ tokens (/ˈsoƱkǝt∫/, /θǝˈsⱭg/, /ðǝˈgeIs/) for each of the 24 children. A repeated-measures ANOVA with children’s age as a between-subjects factor and position of /s/ and bandwidth as within-subjects factors was conducted separately for each of the moment measures. No significant bandwidth or age effects were observed for any of the moments, suggesting that, regardless of their age, children did not alter their productions to match the spectral characteristics of the input. The only significant result was the effect of position on spectral variance (F(2,28) = 4.96, p < 0.02). A follow-up test (Tukey HSD) revealed that the /s/ produced in the initial position (1847 Hz) had significantly greater spectral variance than the /s/ produced in the final position (1631 Hz) (p < 0.05). This difference is probably due to the vowel context.
For /s/ in real English words (i.e., seal, sock, and soup) produced by 5-year-olds, Nissen and Fox (2005) reported a variance of 1730 Hz for the /i/ context, 1860 Hz for the /Ɑ/ context, and 2400 Hz for the /u/ context. Thus, although the position difference is statistically significant, the spectral variances for /s/ productions in the current study are consistent with the Nissen and Fox results, including the trend for increased variance with vowel backness. Therefore, contrary to our hypothesis, children’s /s/ productions were not affected by the bandwidth restriction of the stimuli.

Influence of Stimulus Complexity

Another possible explanation for the high performance for /s/ in the current study is related to stimulus complexity. There are at least two possible mechanisms: the use of acoustic cues and the use of prior phonological knowledge. In the Stelmachowicz et al. (2007) study, stimulus materials were CV and VC syllables where the vowel context was /i/ and the F2 transition was minimal. In such a context, children’s responses might have been based primarily on center of gravity. However, when the stimulus structure was more complex and the variability in vowel context was increased, children may have shifted their attention to the dynamic cues. Specifically, dynamic cues can provide coarticulatory information that facilitates the prediction of subsequent phonemes. Cues associated with the vocalic transitions in the current study might have been more prominent than in the previous studies, thus facilitating the perception and production of /s/. The fact that overall performance was highest for phonemes in the medial position (see Fig. 3) is consistent with this explanation. Previous studies have shown that young children tend to focus more on transitional cues than on steady-state information (Morrongiello, Robson, Best, & Clifton, 1984; Nittrouer & Studdert-Kennedy, 1987). To explore the possibility that children in the current study used the F2 transition to determine fricative identity, the extent and direction of the second formant (F2) transitions were measured for the syllable /is/ spoken by talker DIH in the Stelmachowicz et al. (2007) study and for /s/ in initial, medial, and final positions in the current study. Using a 25-ms Hamming window (512-point FFT) for frequencies up to 12 kHz, analysis was performed for stimuli in both bandwidth conditions following procedures described by Jongman et al. (2000); the two measurement windows are described in the Footnotes. Table 5 shows F2 transitions in Bark scale (column 2) and F2 values measured at the two windows in Hz (column 3).
The F2 at the boundaries (underlined) showed coarticulatory effects from the surrounding vowels and varied from 1570 Hz (with /oʊ/) to 2550 Hz (with /i/) as the F2 for the vowels increased. Jongman et al. (2000) reported a similar average value (1967 Hz) for female talkers across six vowel contexts, /i, eI, æ, Ɑ, oƱ, u/. The largest and the smallest F2 transitions occurred for the CV and VC, respectively, in the medial position of /θǝˈsⱭg/. The transition values for the VC positions (/ðǝˈgeIs/ and /is/) are similar to each other and to the initial position /ˈsoʊkǝt∫/. In the Stelmachowicz et al. (2007) study, the vowel context /i/ was chosen to minimize the influence of F2 transitions. Because a wider range of vowel contexts was used in the present study, it is possible that larger vocalic transitions might have occurred, facilitating the perception of /s/. Yet, with one exception (/θǝˈsⱭg/), the F2 transition data in Table 5 suggest that the extent of the F2 transitions for /s/ in the current study was not considerably greater than that in the previous study. Since dynamic cues are thought to aid perception, the presence of two transitions could facilitate performance for the medial position relative to the initial and final positions. The higher overall phoneme scores for the medial position (Fig. 3) support this notion. However, as shown in Table 6, when position effects for /s/ were analyzed independently, the percent correct /s/ scores were, on average, lowest in the medial position. This occurred despite the fact that the F2 transition from /s/ into /Ɑ/ in /θǝˈsⱭg/ was the largest among those examined (see Table 5). These results suggest that the higher performance for the medial position seen in Fig. 3 is due to phonemes other than /s/. Since the same dynamic information was available at both bandwidths, the use of F2 transitional cues as measured here cannot explain the lack of a bandwidth effect in the current study.
It is possible, however, that other aspects of F2 below 5 kHz that were not measured contributed to the salience of /s/ in the present study. Another possibility is that the acoustic cues below 5 kHz were equally salient across studies but that children weighted those cues more heavily in two-syllable words than in CV and VC syllables.

Table 5.

F2 formant transitions (in Bark) for /s/ in three positions spoken by the female talker in the current study (rows 2–5) and similar results for /is/ from the same talker in the Stelmachowicz et al. (2007) study (row 6). In column 2, sign (+/−) indicates the direction of the transition. Column 3 depicts the beginning and end points of the F2 measurements in Hz (vowel midpoint to/from VC or CV boundary). F2 for the boundary is underlined. Because the frequency range of the F2 transition was always well below 5 kHz and F2 transitions were identical between the two bandwidth conditions, only the results for the 10 kHz stimuli are reported here.

| Stimulus | F2 Transition (Bark) | F2 (Hz) |
|---|---|---|
| ˈsoƱkǝt∫ (CV) | −1.02 | <u>1570</u>/1350 |
| θǝˈsⱭg (VC) | +0.58 | 1708/<u>1866</u> |
| θǝˈsⱭg (CV) | −2.03 | <u>1867</u>/1378 |
| ðǝˈgeIs (VC) | −0.99 | 2693/<u>2292</u> |
| /is/ (VC) | −0.81 | 2920/<u>2550</u> |
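The relation between the Hz values and the Bark transitions in Table 5 can be illustrated with a short calculation. The article does not state which Hz-to-Bark conversion was used. The sketch below assumes the Zwicker and Terhardt (1980) approximation and takes each transition as the Bark value at the later time point minus the Bark value at the earlier one, following the sign convention given in the table caption. The function names and row labels are hypothetical. Under these assumptions the computed values agree closely with column 2 of the table.

```python
import math

def hz_to_bark(f_hz):
    """Zwicker & Terhardt (1980) approximation (assumed; not stated in the article)."""
    return 13.0 * math.atan(0.00076 * f_hz) + 3.5 * math.atan((f_hz / 7500.0) ** 2)

def f2_transition_bark(start_hz, end_hz):
    """Signed transition: Bark at the later point minus Bark at the earlier point."""
    return hz_to_bark(end_hz) - hz_to_bark(start_hz)

# F2 (Hz) pairs from Table 5, ordered in time (first value / second value).
table5_f2_hz = {
    "initial CV": (1570, 1350),
    "medial VC": (1708, 1866),
    "medial CV": (1867, 1378),
    "final VC": (2693, 2292),
    "/is/ VC (2007 study)": (2920, 2550),
}

transitions = {
    label: round(f2_transition_bark(start, end), 2)
    for label, (start, end) in table5_f2_hz.items()
}

for label, value in transitions.items():
    print(f"{label}: {value:+.2f} Bark")
```

Working in Bark rather than Hz compresses differences at higher frequencies, so a 370-Hz fall near 2.9 kHz (/is/) counts as a smaller transition than a 220-Hz fall near 1.5 kHz (initial position).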

Table 6.

Percent correct /s/ scores by talker, bandwidth, and position.

| Talker | Bandwidth (kHz) | Initial | Middle | Final |
|---|---|---|---|---|
| Male | 5 | 87.5 | 91.7 | 100.0 |
| Male | 10 | 95.8 | 91.7 | 100.0 |
| Female | 5 | 91.7 | 83.3 | 100.0 |
| Female | 10 | 100.0 | 91.7 | 100.0 |
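The position and bandwidth comparisons drawn from Table 6 can be checked with simple arithmetic. The sketch below is illustrative only: the variable names are hypothetical, and the unweighted averaging across talkers, bandwidths, and positions is an assumption rather than the analysis reported in the article.

```python
# Percent correct /s/ scores from Table 6, keyed by (talker, bandwidth in kHz).
scores = {
    ("Male", 5): {"Initial": 87.5, "Middle": 91.7, "Final": 100.0},
    ("Male", 10): {"Initial": 95.8, "Middle": 91.7, "Final": 100.0},
    ("Female", 5): {"Initial": 91.7, "Middle": 83.3, "Final": 100.0},
    ("Female", 10): {"Initial": 100.0, "Middle": 91.7, "Final": 100.0},
}
positions = ["Initial", "Middle", "Final"]

# Mean score for each position across both talkers and both bandwidths.
position_means = {
    pos: sum(cell[pos] for cell in scores.values()) / len(scores)
    for pos in positions
}

# Mean bandwidth effect (10 kHz minus 5 kHz) for each talker across positions.
bandwidth_effect = {
    talker: sum(scores[(talker, 10)][pos] - scores[(talker, 5)][pos] for pos in positions)
    / len(positions)
    for talker in ("Male", "Female")
}

for pos, mean in position_means.items():
    print(f"{pos}: {mean:.1f}% correct")
for talker, effect in bandwidth_effect.items():
    print(f"{talker}: {effect:.1f} percentage-point bandwidth effect")
```

Averaged this way, the medial position has the lowest mean /s/ score, and the bandwidth effect is larger for the female talker than for the male talker.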

Another potential cue to perception is the use of phonotactic knowledge. It is known that as early as 9–10 months of age, infants prefer to listen to stimuli that contain phoneme sequences that are common in their ambient language (see Werker & Yeung, 2005 for a summary). Children undergo further refinement of phonological knowledge during childhood. In general, probabilistic phonotactic knowledge has been shown to influence wordlikeness judgments and recognition memory of nonsense words for adults (Frisch, Large, & Pisoni, 2000) and imitation of nonsense words by children (Munson, Edwards, & Beckman, 2005). When asked to imitate a mispronounced monosyllabic word (e.g., /θʌn/), preschoolers with NH often respond with real words (e.g., “sun”), while early school-age children tend to produce faithful imitations (Mowrer & Scoville, 1978). However, none of the 6–7 year old NH children in the current study responded with real words. One difference between the Mowrer and Scoville study and the current study was that the stimuli in the current study were two-syllable nonsense words with varying stress patterns that could not easily be converted into real words. In the previous studies, even though children were not told the focus of the study, it is possible that they quickly learned to direct their attention to the only obvious difference across stimuli, the consonant. In the current study, children also were not told the focus of the study (i.e., fricatives). However, a higher level of attention and memory may have been required to remember the correct sequence of multiple phonemes in the unfamiliar nonsense words. To perform at their best, children might have subconsciously reduced cognitive demands by encoding words using the residual acoustic cues weighted by the phonotactic probability in English. All words in the current study were presented in background noise, so some degree of frication was always present. 
Even when the identity of a phoneme was diminished by bandwidth restriction, the noise was still audible. Since /s/ is the fourth most frequent consonant and the most frequent fricative in English (Tobias, 1959), when the phoneme was masked by the noise, children might have interpreted this noise as /s/ based on prior phonological knowledge; this strategy might have increased the total number of /s/ responses, which in turn, increased the number of correct /s/ responses.

Recall that nonsense word repetition was used in this study so that we might predict the effects of bandwidth restriction on word imitation by children with HL. It is not clear, however, whether the confusion patterns or encoding strategies observed here also would occur for young children with HL. Moeller et al. (2007a; 2007b) found that young hearing-aid users were delayed in the onset of canonical babble, syllable complexity, the acquisition of fricatives/affricates, and vocabulary growth. These findings were attributed to reduced auditory experiences (due to the negative consequences of distance, reverberation, and noise) as well as to the limited bandwidth of hearing aids. Interestingly, Cleary, Dillon, & Pisoni (2002) found that some 8–9 year old children with cochlear implants were able to use sublexical phonological knowledge to decode spoken nonsense words. Munson et al. (2005) suggested that children with phonological delays might have trouble extracting categorical phonemic representations that link lexical, articulatory, and acoustic representations to each other. It is possible that reduced auditory experiences also might delay the acquisition of phonological knowledge and/or use of acoustic cues by young hearing-aid users.

Alternatively, the results from NH children in the current study could be interpreted to suggest that limiting signal bandwidth may not be as problematic for multi-syllabic words as for VC and CV syllables. However, in a study of 40 children with HL (5–18 yrs), Elfenbein et al. (1994) found that even children with mild hearing losses frequently misarticulated fricatives. In order to fully understand the effects of bandwidth restriction on the perception and production of fricative sounds and on phonological development, additional studies with children with HL over a range of ages are needed.

Conclusions

The overall goal of the current study was to explore the effects of stimulus bandwidth on the production of fricatives by young children with NH. Based on previous perceptual studies, it was hypothesized that a restricted stimulus bandwidth would have a negative effect upon fricative imitation. Although significant talker gender differences and talker gender by bandwidth interactions were observed, the differences were relatively small. In particular, the negative effects of filtering on the fricatives /s/ and /z/ spoken by the female talker were smaller than reported in previous perceptual studies. Explanations for these differences across studies were sought in terms of: 1) the effects of bandwidth restriction on the particular stimuli used in each study, 2) acoustic analyses (F2 transitions, spectral moments), and 3) prior phonotactic knowledge. Recall that nonsense words were used in an attempt to minimize reliance on prior lexical knowledge when repeating words so that we might interpret results within the context of early phonological development. It is possible that a combination of factors facilitated the perception of /s/ by the 6–7 year old NH children in this study. Specifically, children might have used acoustic information, probabilistic expectations, and perhaps other unidentified cues to fricative perception to facilitate the perception of /s/ in the 5 kHz bandwidth condition. It remains to be seen if children with hearing loss perform in a similar manner.

Acknowledgments

This work was supported by grants from the National Institute on Deafness and Other Communication Disorders, National Institutes of Health (R01 DC04300 and P30 DC04662).

Footnotes

Patricia G. Stelmachowicz, Kanae Nishi, Sangsook Choi, Dawna E. Lewis, Brenda M. Hoover, and Darcia Dierking, Boys Town National Research Hospital. Andrew Lotto, University of Arizona, Speech, Language and Hearing Sciences.

Sangsook Choi is now at the Department of Speech Language and Hearing Science, Purdue University.

The ANSI standard for designation of hearing aid bandwidth is −20 dB re: the high-frequency average full-on gain. Thus, the manufacturer’s specified bandwidth will generally over-estimate the actual bandwidth of audible speech.

Although in-the-ear, in-the-canal, and recently developed open-fit hearing instruments (with the receiver in the ear canal) can provide an extended high-frequency response (i.e., 7 kHz), these instruments are too large for the ear canals of infants and young children. It is difficult to achieve a wider bandwidth for behind-the-ear hearing aids. The combined length of the tone hook and tubing creates tubing resonances that must be damped, and in doing so the high-frequency response is compromised.

F2 values were obtained from two windows in each stimulus: 1) at the boundary between /s/ and the adjacent vowel and 2) at the midpoint of the adjacent vowel. The transition was calculated by subtracting the F2 at the boundary from the F2 at vowel midpoint.

References

- Baken RJ, Orlikoff RF. Clinical measurement of speech and voice. 2nd ed. Clifton Park, NY: Delmar Learning; 2000.

- Bess FH, Dodd-Murphy J, Parker RA. Children with minimal sensorineural hearing loss: Prevalence, educational performance, and functional status. Ear and Hearing. 1998;19:339–354. doi: 10.1097/00003446-199810000-00001.

- Boersma P, Weenink D. Praat: doing phonetics by computer (Version 4.4.13) [Computer program]. 2006. Retrieved from http://www.praat.org/

- Boothroyd A, Erickson FN, Medwetsky L. The hearing aid input: A phonemic approach to assessing the spectral distribution of speech. Ear and Hearing. 1994;15:432–442.

- Boothroyd A, Medwetsky L. Spectral distribution of /s/ and the frequency response of hearing aids. Ear and Hearing. 1992;13:150–157. doi: 10.1097/00003446-199206000-00003.

- Cleary M, Dillon C, Pisoni D. Imitation of nonwords by deaf children after cochlear implantation: Preliminary findings. Annals of Otology, Rhinology, and Laryngology. 2002;111:91–96. doi: 10.1177/00034894021110s519.

- Cornelisse LE, Gagné JP, Seewald R. Ear level recordings of the long-term average spectrum of speech. Ear and Hearing. 1991;12:47–54. doi: 10.1097/00003446-199102000-00006.

- Crandell C, Smaldino J. Speech perception in the classroom. In: Crandell CC, Smaldino JJ, Flexer C, editors. Sound field FM amplification: Theory and practical applications. San Diego: Singular; 1995. pp. 29–48.

- Cutler A, Weber A, Smits R, Cooper N. Patterns of English phoneme confusions by native and non-native listeners. Journal of the Acoustical Society of America. 2004;116:3668–3678. doi: 10.1121/1.1810292.

- Davis JM, Elfenbein J, Schum R, Bentler RA. Effects of mild and moderate hearing impairments on language, educational, and psychosocial behavior of children. Journal of Speech and Hearing Disorders. 1986;51:53–62. doi: 10.1044/jshd.5101.53.

- Davis JM, Shepard N, Stelmachowicz P, Gorga M. Characteristics of hearing-impaired children in the public schools: Part II--Psychoeducational data. Journal of Speech and Hearing Disorders. 1981;46:130–137. doi: 10.1044/jshd.4602.130.

- Denes PB. On the statistics of spoken English. Journal of the Acoustical Society of America. 1963;35:892–904.

- Dubno JR, Dirks DD. Evaluation of hearing-impaired listeners using a nonsense-syllable test. I. Test reliability. Journal of Speech and Hearing Research. 1982;25:135–141. doi: 10.1044/jshr.2501.135.

- Elfenbein JL, Hardin-Jones MA, Davis JM. Oral communication skills of children who are hard of hearing. Journal of Speech and Hearing Research. 1994;37:216–226. doi: 10.1044/jshr.3701.216.

- Frisch SA, Large NR, Pisoni DB. Perception of wordlikeness: Effects of segment probability and length on the processing of nonwords. Journal of Memory and Language. 2000;42:481–496. doi: 10.1006/jmla.1999.2692.

- Hughes GW, Halle M. Spectral properties of voiceless fricative consonants. Journal of the Acoustical Society of America. 1956;28:301–310.

- Jesus LMT, Shadle CH. A parametric study of spectral characteristics of European Portuguese fricatives. Journal of Phonetics. 2002;30:437–464.

- Jongman A, Wayland R, Wong S. Acoustic characteristics of English fricatives. Journal of the Acoustical Society of America. 2000;108:1252–1263. doi: 10.1121/1.1288413.

- Killion MC. Problems in the application of broadband hearing aid earphones. In: Studebaker GA, Hochberg I, editors. Acoustical factors affecting hearing aid performance. Baltimore, MD: University Park Press; 1980. pp. 219–264.

- Kortekaas R, Stelmachowicz P. Bandwidth effects on children’s perception of the inflectional morpheme /s/: Acoustical measurements, auditory detection, and clarity rating. Journal of Speech, Language, and Hearing Research. 2000;43:645–660. doi: 10.1044/jslhr.4303.645.

- Markides A. The speech of deaf and partially-hearing children with special reference to factors affecting intelligibility. British Journal of Disorders in Communication. 1970;5:126–140. doi: 10.3109/13682827009011511.

- Markides A. The speech of hearing impaired children. Dover, New Hampshire: Manchester University Press; 1983.

- McGowan RS, Nittrouer S. Differences in fricative production between children and adults: Evidence from an acoustic analysis of /∫/ and /s/. Journal of the Acoustical Society of America. 1988;83:229–236. doi: 10.1121/1.396425.

- McGuckian M, Henry A. The grammatical morpheme deficit in moderate hearing impairment. International Journal of Language and Communication Disorders. 2007;42:17–36. doi: 10.1080/13682820601171555.

- Miller GA, Nicely PE. An analysis of perceptual confusions among some English consonants. Journal of the Acoustical Society of America. 1955;27:338–352.

- Moeller MP, Hoover B, Putman C, Arbataitis K, Bohnenkamp G, Peterson B, Wood S, Lewis D, Pittman A, Stelmachowicz PG. Vocalizations of infants with hearing loss compared to infants with normal hearing: Part I – Phonetic Development. Ear and Hearing. in press-a. doi: 10.1097/AUD.0b013e31812564ab.

- Moeller MP, Hoover B, Putman C, Arbataitis K, Bohnenkamp G, Peterson B, Lewis D, Pittman A, Stelmachowicz PG. Vocalizations of infants with and without Hearing Loss: Part II – Transition to Words. Ear and Hearing. in press-b. doi: 10.1097/AUD.0b013e31812564c9.

- Morrongiello BA, Robson RC, Best CT, Clifton RK. Trading relations in the perception of speech by 5-year-old children. Journal of Experimental Child Psychology. 1984;37:231–250. doi: 10.1016/0022-0965(84)90002-x.

- Mowrer D, Scoville A. Response bias in children’s phonological systems. Journal of Speech and Hearing Disorders. 1978;43:473–481. doi: 10.1044/jshd.4304.473.

- Munson B. Variability in /s/ production in children and adults: Evidence from dynamic measures of spectral mean. Journal of Speech, Language, and Hearing Research. 2004;47:58–69. doi: 10.1044/1092-4388(2004/006).

- Munson B, Edwards J, Beckman ME. Relationships between nonword repetition accuracy and other measures of linguistic development in children with phonological disorders. Journal of Speech, Language, and Hearing Research. 2005;48:61–78. doi: 10.1044/1092-4388(2005/006).

- Nissen SL, Fox RA. Acoustic and spectral characteristics of young children’s fricative productions: A developmental perspective. Journal of the Acoustical Society of America. 2005;118:2570–2578. doi: 10.1121/1.2010407.

- Nittrouer S. Children learn separate aspects of speech production at different rates: Evidence from spectral moments. Journal of the Acoustical Society of America. 1995;97:520–530. doi: 10.1121/1.412278.

- Nittrouer S, Studdert-Kennedy M. The role of coarticulatory effects in the perception of fricatives by children and adults. Journal of Speech and Hearing Research. 1987;30:319–329. doi: 10.1044/jshr.3003.319.

- Nittrouer S, Studdert-Kennedy M, McGowan RS. The emergence of phonetic segments: evidence from the spectral structure of fricative-vowel syllables spoken by children and adults. Journal of Speech and Hearing Research. 1989;32:120–132.

- Norbury CF, Bishop DVM, Briscoe J. Production of English finite verb morphology: A comparison of SLI and mild-moderate hearing impairment. Journal of Speech, Language, and Hearing Research. 2001;44:165–178. doi: 10.1044/1092-4388(2001/015).

- Owens E. Consonant errors and remediation in sensorineural hearing loss. Journal of Speech and Hearing Disorders. 1978;43:331–347. doi: 10.1044/jshd.4303.331.

- Owens E, Benedict M, Schubert ED. Consonant phonemic errors associated with pure-tone configurations and certain kinds of hearing impairment. Journal of Speech and Hearing Research. 1972;15:308–322. doi: 10.1044/jshr.1502.308.

- Pittman AL, Stelmachowicz PG, Lewis DE, Hoover BM. Spectral characteristics of speech at the ear: implications for amplification in children. Journal of Speech, Language, and Hearing Research. 2003;46:649–657. doi: 10.1044/1092-4388(2003/051).

- Rudmin F. The why and how of hearing /s/. Volta Review. 1983;85:263–269.

- Seewald RC, Cornelisse LE, Ramji KV, Sinclair ST, Moodie KS, Jamieson DG. DSL V4.1 for Windows: A software implementation of the desired sensation level (DSL i/o) method for fitting linear gain and wide-dynamic-range compression hearing instruments. London, Ontario, Canada: University of Western Ontario; 1997.

- Stelmachowicz PG, Pittman AL, Hoover BM, Lewis DE. The effect of stimulus bandwidth on the perception of /s/ in normal and hearing-impaired children and adults. Journal of the Acoustical Society of America. 2001;110:2183–2190. doi: 10.1121/1.1400757.

- Stelmachowicz PG, Pittman AL, Hoover BM, Lewis DE. Aided perception of /s/ and /z/ by hearing-impaired children. Ear and Hearing. 2002;23:316–324. doi: 10.1097/00003446-200208000-00007.

- Stelmachowicz PG, Lewis DE, Choi S, Hoover BM. The effect of stimulus bandwidth on auditory skills in normal-hearing and hearing-impaired children. Ear and Hearing. 2007;28:483–494. doi: 10.1097/AUD.0b013e31806dc265.

- Tobias JV. Relative occurrence of phonemes in American English. Journal of the Acoustical Society of America. 1959;31:631.

- Yeni-Komshian GH, Soli SD. Recognition of vowels from information in fricatives: perceptual consequences of fricative-vowel coarticulation. Journal of the Acoustical Society of America. 1981;70:966–975. doi: 10.1121/1.387031.