Abstract

Most decision-making capacity (DMC) research has focused on measuring the decision-making abilities of patients, rather than on how such persons may be categorized as competent or incompetent. However, research ethics policies and practices either assume that we can differentiate or attempt to guide the differentiation of the competent from the incompetent. Thus there is a need to build on the recent advances in capacity research by conceptualizing and studying DMC as a categorical concept. This review discusses why there is a need for such research and addresses challenges and obstacles, both practical and theoretical. After discussing the potential obstacles and suggesting ways to overcome them, it explains why clinicians with expertise in capacity assessments may be the best source of a provisional “gold standard” for criterion validation of categorical capacity status. The review provides discussions of selected key methodological issues in conducting research that treats DMC as a categorical concept, such as the issue of the optimal number of expert judges needed to generate a criterion standard and the kinds of information presented to the experts in obtaining their judgments. Future research needs are outlined.

Keywords: decision-making capacity, competence, research ethics, categorical determinations of capacity, expert judgment

Introduction

“Are [persons who perform much worse than control subjects on competence-related measures] therefore incompetent to consent to participation in research? Perhaps surprisingly, it is difficult to say.”1(p260)

Persons with schizophrenia as a group perform less well than normal controls on measures of decision-making capacity (DMC), but there is considerable heterogeneity in their performance.2–4 Thus, even if a person has schizophrenia, diagnosis cannot be equated with decisional incapacity.5 But how are we to distinguish those who are competent from those who are not? That is, how can we translate data on the decision-making ability of subjects (generally treated as a dimensional concept) into data on the categorical capacity status of those subjects? This article's goal is to provide a general framework for thinking about and empirically investigating this surprisingly difficult question.

Importance of Studying Categorical Capacity Determinations

The determination of capacity in the clinical and research setting involves at least 3 elements: the abilities manifested by the patient; the contextual factors of the decision, of which the risk-benefit profile of the various decision options is primary; and the translation of the first 2 elements into a categorical determination, usually by an authorized clinician (such as a psychiatrist or psychologist).5 Most capacity research has focused on the first element, that is, the decision-making abilities of the subject. The role of contextual factors and the translation into a categorical judgment have been relatively less explored.

There are probably several reasons for this trend. First, since the controversies that often generate this area of research have been framed around the issue of whether certain groups of patients are too impaired to give consent, it is natural that the initial focus has been on defining the level and nature of decision-making abilities in certain groups of patients. This approach has yielded important and useful evidence. For example, the now widely established view that persons with schizophrenia show diverse levels of performance is based upon studies that used DMC as a dimensional concept.2 Showing that patient/subjects can perform as well as normal subjects with some types of interventions can be immensely informative.3, 6 Characterizing the neuropsychological, psychopathological, and general functional correlates of decisional impairment can be done without a categorical dependent variable.3–4, 7

Second, studying categorical determinations of capacity is complex because there are “value judgments” involved: to show that someone does not perform well on an instrument seems like a fact about the world; to pronounce that that person therefore must be stripped of his or her decisional authority seems beyond the reach of empirical research. However, an important goal of capacity research is precisely to arrive at policies and practices that help guide the differentiation of (or policies that assume that we can differentiate) the competent from the incompetent. In the real world, a potential research subject is either allowed or not allowed to consent for him- or herself; such decisions force a categorization. This indeed is a value judgment, but that by itself does not mean that we cannot study it empirically. The ultimate goal is to provide a way of making reliable competence determinations that balances autonomy and welfare in a way that reflects societal values. If data to guide such decisions are not provided, the vacuum will inevitably be filled by ill-informed practices.

Another potential reason why capacity research has not focused on categorical capacity determinations is that the “value judgment” about a person's DMC is difficult to study because it must reflect the contextual factors (such as the risk-benefit profiles of decision options) of the decision-making situation. These factors vary by context, making conclusions with external validity difficult to draw. Indeed, the issue of translating dimensional data into categorical data has a long history of controversy because there are potential adverse effects of focusing on the “cutoff scores” of capacity-assessment instruments.8 Such a focus may wrongly be taken to mean that cutoff scores are inherent features of the instrument (rather than needing context-by-context validation and context-sensitive application), which is, as noted by Grisso and Appelbaum, “conceptually illogical.”8 A superficial understanding and use of cutoff scores would surely invite massive “clinical misuse.”8

This difficulty, however, is relatively easily addressed in the research consent context because it is reasonable to assume that those who are eligible to enter a particular research protocol face similar risk-benefit prospects. Assuming that reasonable risk-benefit generalizations can be made across similar types of research protocols, the research consent context provides a useful venue for studying ways of translating impairment into categorizations of competence.

The need for capacity research to provide conclusions about categorical capacity status has been implicitly acknowledged in studies in which the researchers have used various methods to provide some categorical conclusions. One option has been to provide an a priori cutoff score or criterion.7, 9 The advantage of such an approach is that it gives the readers more “usable” information: the subjects are identified as either impaired or not, or capable or not. However, these cutoff scores remain somewhat arbitrary and may or may not reflect societal values. Such ad hoc solutions to the problem could in fact promote practices that have little ethical validity, since even if the dimensional data regarding DMC are valid, that does not guarantee a valid categorizing scheme.

Another frequently used method of generating categorical conclusions about subjects' DMC is the psychometric standard (e.g., using a cutoff score that is 2 standard deviations below the mean of control group performance).10–12 This norm-based method has a venerable tradition in psychological measurement and can provide important comparative information. But it has 2 drawbacks. First, it has no intrinsic connection to the ethical issue at hand, because statistical norms carry no ethical weight by themselves. Second, the psychometric method does not provide independent validation; it is simply a statistical method of creating normed comparisons.
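As an illustration only, the psychometric standard can be sketched in a few lines of Python. The function names and the control-group scores below are hypothetical and are not drawn from any of the cited studies; the sketch also assumes that the sample standard deviation of the control group is the intended yardstick.

```python
from statistics import mean, stdev


def psychometric_cutoff(control_scores, n_sd=2.0):
    """Score lying n_sd sample standard deviations below the control-group mean."""
    return mean(control_scores) - n_sd * stdev(control_scores)


def is_below_cutoff(score, cutoff):
    """True if a subject's score falls below the norm-based cutoff."""
    return score < cutoff


# Hypothetical control-group scores on a capacity instrument (not real data).
control_scores = [20, 22, 24, 26, 28]
cutoff = psychometric_cutoff(control_scores)

print(f"cutoff = {cutoff:.2f}")
print(is_below_cutoff(17, cutoff), is_below_cutoff(18, cutoff))
```

Nothing in this calculation refers to the risks or benefits of the decision at hand, which is the first drawback noted above: the cutoff moves with the control sample, not with the ethical stakes.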

Another method of generating categorizations of capacity status is to use clinician judgments of capacity.13–20 This method has the following advantages. First, it is ethically preferable because, in fact, our society authorizes clinicians to make such decisions. Second, by using clinician judgments, an independent criterion validation can be performed. Third, by validating decisional ability measures against clinician judgments, one can generate data that could be useful in stereotyped risk-benefit situations such as the research consent context. For the research consent context in which the risk-benefit profile can be held constant, and where the thresholds for competence can be benchmarked against an instrument for assessing decisional abilities, it may even be possible to train research assistants to perform substantive screening evaluations of decision-making competence.

Thus, from the point of view of ethical validity, clinician judgments are the provisional “gold standard.” The main drawback is that, at this stage of research on expert judgments of capacity, we know relatively little about how clinicians make capacity judgments. In terms of the reliability of clinician judgments (an essential requirement for any criterion standard), studies so far have produced somewhat mixed results. Marson et al. have shown that, in the context of Alzheimer's disease, physicians of different specialties apparently make diverse capacity judgments (group kappa of 0.14 among 5 judges), even when they base their decisions on identical clinical information.21 Others have shown that geriatric and consultation/liaison psychiatrists can often achieve a considerable degree of agreement.13 Although it appears that giving the experts clear instructions specific to each legal standard may be helpful,22 we clearly need more data on how reliable clinician judgments are. In my experience with Alzheimer's disease patients, pair-wise kappa scores among clinician judges have been quite good, ranging from 0.42 to 0.76.13, 23 Few expert judgment data from patients with schizophrenia are available. In a preliminary analysis of 34 patients with schizophrenia, pair-wise kappa scores of expert judgments ranged from 0.78 to 0.86 (unpublished data, presented by S. Kim at the 2003 Annual Meeting of the American College of Neuropsychopharmacology).
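For readers less familiar with the statistic, the following is a minimal sketch of how a pair-wise kappa could be computed, assuming that Cohen's kappa for two judges is the statistic meant. The ratings are invented for illustration (1 = judged incompetent, 0 = judged competent) and do not come from any study cited here; the group kappa reported by Marson et al. is a different, multi-judge statistic and is not reproduced.

```python
def cohen_kappa(ratings_a, ratings_b):
    """Cohen's kappa for two judges who rated the same subjects."""
    if len(ratings_a) != len(ratings_b) or not ratings_a:
        raise ValueError("need two equal-length, non-empty rating lists")
    n = len(ratings_a)
    # Proportion of subjects on whom the two judges agree.
    observed = sum(a == b for a, b in zip(ratings_a, ratings_b)) / n
    # Agreement expected by chance from each judge's marginal rates.
    categories = set(ratings_a) | set(ratings_b)
    expected = sum(
        (ratings_a.count(c) / n) * (ratings_b.count(c) / n) for c in categories
    )
    if expected == 1.0:
        raise ValueError("kappa is undefined when chance agreement equals 1")
    return (observed - expected) / (1 - expected)


# Hypothetical judgments for 10 subjects (not real data).
judge_1 = [1, 1, 1, 1, 0, 0, 0, 0, 1, 0]
judge_2 = [1, 1, 1, 0, 0, 0, 0, 1, 1, 0]

print(f"kappa = {cohen_kappa(judge_1, judge_2):.2f}")
```

In this invented example the judges agree on 8 of 10 subjects, chance agreement is 0.5, and kappa is therefore 0.6.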

Methodological Issues

There are many methodological and practical issues that arise when employing expert categorical judgments in capacity research. The following is not intended as an exhaustive discussion but, rather, as an initial framework with elaboration of selected key issues. The methodological issues can be divided into 2 broad areas: issues regarding who the “expert” capacity evaluators are and issues related to the information they are given to render their categorical decisions.

Expert Clinician Factors

Who may serve as “expert judges” conducting capacity determinations in capacity research studies? I suggest 2 minimum qualifications. First, they should be clinicians who have adequate experience with neuropsychiatric patients (such as supervised experience during training or, ideally, further practice experience); it seems important that they not be distracted by the novelty of seeing a patient impaired by a mental illness, and they should have experience forming working relationships, even if only for an interview, with ill patients. This requirement helps minimize extraneous influences on the evaluative judgment and helps ensure optimal performance in the patient/subjects. Second, they should have specific expertise in conducting capacity determinations. For example, some part of their professional work should be devoted to conducting capacity determinations. Since very few clinicians, if any, primarily evaluate persons' capacity for research consent decisions, generally the experts will be persons who conduct capacity evaluations in the clinical setting, such as consultation psychiatrists in general hospitals or geriatric psychiatrists. In fact, they need not be psychiatrists, as some psychologists also have similar scope of practice and experience.14

This recommendation for clinicians with expertise in capacity assessments is based on the common practice of physicians (usually psychiatrists) conducting capacity assessments for treatment decisions in health care settings. However, some may argue that the normative standard should reflect a wider input, such as persons who are stakeholders or persons who usually have a role in the policy-making process or in the implementation of policy. Such persons could include patient representatives, bioethicists, researchers, judges, Institutional Review Board members, and others. For instance, studies have been conducted comparing lawyers' versus health care professionals' judgments of patients' capacity for consenting to electroconvulsive therapy treatments.24 If such persons are used to conduct validation studies of competence, it would seem important to ensure that there is sufficient training provided, as well as sufficient background education on neuropsychiatric conditions, to maximize validity. It remains to be seen whether a sufficiently strong normative argument can be made for involving such nonclinicians and whether sufficiently reliable empirical evidence can be generated about their ability to make valid judgments.

One of the most important methodological issues regarding the use of expert clinicians is the number of experts to employ to provide criterion validation. Although studies have been conducted in which capacity measurements have been validated against a single expert,11, 15, 20 this risks loss in reliability given the potential variability among clinicians who conduct capacity assessments.21, 24

How many judges should be used to validate the final categorical status of the subjects? The answer will be a balance of the incremental advantage of having more judges in relation to cost, the availability of experts, and the purpose of the study. For instance, an initial pilot study might employ a single expert to assess whether there is sufficient correlation between an instrument and categorical judgments, but if the goal is to provide more reliable data for the implementation of policy (e.g., if a capacity-assessment instrument is to be routinely used in research with significant risk involving a population known to have a high incidence of incapacity), then several experts might be used to generate the criterion thresholds.

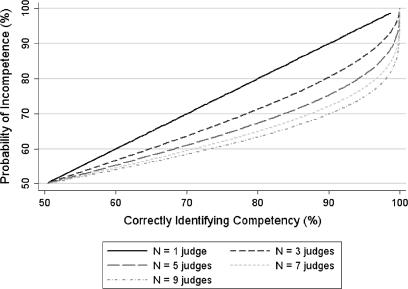

What is the incremental advantage in accuracy as the number of expert judges increases? Figure 1 provides a simulation that demonstrates the statistical gain in increasing the number of judges used in determining the categorical DMC status of subjects. Given that the real-world normative standard is the judgments of experts, for the purposes of this simulation, the true competency status of a subject is defined by a majority view of the universe of expert judges. Thus, any subject who is likely to be judged incompetent by greater than 50% of judges has a “true” status of incompetence. The y-axis in figure 1 corresponds to the proportion of the universe of expert judges who find a subject incompetent (which is the probability that a subject is actually incompetent). However, if a subject's performance is somewhat marginal, there will be greater disagreement among the universe of experts; for instance, only 60% of judges may find that subject incompetent. For such a subject, if our “expert group” (i.e., a random subset of experts from the universe of experts) consisted of a single randomly selected expert, the likelihood of correctly identifying the subject as incompetent would be 60%. However, if we randomly chose a higher number of experts and used their majority or higher agreement as the final judgment, then we would have a greater than 60% chance of correctly identifying the subject as incompetent. For example, if N = 5 judges are used, there is a nearly 70% chance of correctly identifying the subject as incompetent.

Fig. 1. A Statistical Simulation of the Effect of Number of Expert Judges on Accuracy of Capacity Determinations Given a Prior Probability of Competence

Note: The simulation uses a binomial distribution where the competency status is a dichotomous random variable, with each expert's judgment representing an independent trial. The x-axis is the probability of the number of “successes” for N number of trials, defined as majority or better agreement within the expert group of varying numbers of experts. The y-axis represents the probability that a subject is actually incompetent.

This situation can be captured by a binomial distribution, if competency status is taken as a dichotomous random variable and the number of expert judges in the group (figure 1 simulates for 1 to 9 judges) as the number of Bernoulli trials.25(pp147–153) A helpful way to think about this simulation is to treat the random sample of experts who render judgments as a “probe” for detecting what the universe of judges would determine. Suppose, for example, a person is decisionally impaired so that 65% of the universe of judges would find that person incompetent. Then what is the probability that a random group of N judges would give independent opinions whose majority or higher agreement would be in the direction of finding this person incompetent? If N = 1, it is 65%; however, if, for instance, 7 experts are used, then the probability of detecting incompetence rises to about 80%. For a patient who has a 75% probability of incompetence, a 5-judge panel would detect incompetence with 90% probability. Figure 1 is simply a graphical representation of this idea for subjects with varying probabilities of incompetence (y-axis) judged by expert groups whose size varies from 1 to 9 judges.
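The binomial calculation described above can be sketched as follows. This is not the code used to produce figure 1; it is a minimal illustration that assumes independent judges, a strict majority rule (more than half of the panel), and a hypothetical function name. With an even number of judges a tie is counted here as failing to detect incompetence, which is a choice of this sketch and not something the simulation specifies.

```python
from math import comb


def prob_majority_incompetent(p, n_judges):
    """Probability that a strict majority of n_judges independent judges
    finds a subject incompetent, when a proportion p of the universe of
    judges would find that subject incompetent."""
    if not 0.0 <= p <= 1.0:
        raise ValueError("p must lie between 0 and 1")
    if n_judges < 1:
        raise ValueError("n_judges must be at least 1")
    needed = n_judges // 2 + 1  # smallest number of votes forming a majority
    return sum(
        comb(n_judges, k) * p**k * (1 - p) ** (n_judges - k)
        for k in range(needed, n_judges + 1)
    )


# Tabulate odd panel sizes for the three subjects discussed in the text.
for p in (0.60, 0.65, 0.75):
    row = ", ".join(
        f"N={n}: {prob_majority_incompetent(p, n):.3f}" for n in (1, 3, 5, 7, 9)
    )
    print(f"p={p:.2f} -> {row}")
```

Under these assumptions the sketch reproduces the figures quoted in the text: about 0.68 for p = 0.60 with 5 judges, about 0.80 for p = 0.65 with 7 judges, and about 0.90 for p = 0.75 with 5 judges.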

Of course, the real world is much more complicated. It is unlikely that one could ever select a truly random sample of judges from the universe of expert clinicians who regularly perform capacity evaluations. Also, even if it were possible to determine the views of the actual universe of judges, it is not clear, as a normative matter, that a simple majority or a similar rule is the correct rule. Perhaps, given our society's relative favoring of autonomy, the y-axis probabilities should be set such that a person would be deemed incompetent only if 60% of the universe of judges would deem that person incompetent. (Note, however, that in this case the simulation in figure 1 is still informative, as one needs only to look at the graph from the y-axis value of 60% and above.) Despite these limitations of an idealized simulation, it is still useful to examine figure 1 because it nicely captures the important statistical considerations involved in choosing the number of expert judges in a validation study; it demonstrates that using more than 1 judge as a criterion standard improves the likelihood of predicting the correct competency status and gives a quantitative estimate of this incremental improvement.

Materials and Instructions for the Experts

How should the experts be instructed, if at all? This may have consequences for the reliability of their judgments. Marson et al. have found that the reliability of their experts' judgments improved when they were explicitly trained on assessing competence related to each legal standard.21–22 There are good reasons to implement some type of training of expert clinicians at the outset of a project. Even clinicians who routinely conduct capacity evaluations rarely have an opportunity to “calibrate” their judgments by comparing them with others' judgments or even by having to talk about their perceptions and judgments. The capacity-assessment education that clinicians will have received in their training is likely to be heterogeneous. And clinicians from different jurisdictions may have differing practices, as treatment-consent DMC standards do vary by jurisdiction.5 Finally, although it is not difficult to find clinicians who routinely perform capacity evaluations for medical treatment decisions, it is relatively difficult to find clinicians who conduct them for research participation decisions.

What exactly should the training look like? Since there are no explicit legal standards for DMC for research consent, the training would involve reminders of broad principles that are common to assessments of decision-making abilities.5(p211) An especially important principle is the risk-sensitive or “sliding scale” approach to categorical judgments of DMC, and this principle needs to be combined with information regarding the current federal regulations that outline how research involving adults is to be assessed in terms of risks and potential benefits (45 CFR 46.111(a)(1)–(2)), as well as the positions of various commissions and work groups on this issue.26

On what information should the experts base their judgments? The method that probably has the most face validity is to give each clinician a chance to interview each subject.17 This would allow each clinician the opportunity to probe, explore, and confirm his or her clinical impressions. The main drawback is that the increased face validity comes at the expense of introducing many more uncontrolled variables (thus potentially decreasing reliability) and tremendously increased cost and effort.

The other option (more widely used) is to provide judges with information from a capacity-assessment interview, in the form of a video, audio, or transcript (transcripts can be combined with video or audio information). Given the advances in computer-based video technology, it is now relatively inexpensive and straightforward to use digitized video that can be played on universally available video graphic software. I favor audiovisual format over either audio or transcripts. In my experience, the pauses, the facial and body language, the tone of voice, and so on convey valuable information about whether the subject is appropriately engaged with the interview and answering questions correctly.

Another very important design issue is the distribution of abilities exhibited by the group of subjects reviewed by the clinician experts. If the experts are exposed to persons whose decision-making abilities are obviously intact or obviously absent, then the agreement among the experts will be very high. Some published studies have impressive agreement rates, but further examination of their patient samples indicates that the agreement may be an artifact, as would occur when a significantly impaired patient group is mixed in with normal controls or when there is a bimodal distribution of abilities.16–17 Such a finding gives a falsely optimistic impression about the degree of reliability that can be achieved using expert judgments. Thus, unless one has the budget to recruit a very large random sample of patient/subjects and to have experts view such a large sample of tapes, the patient sample needs to be stratified according to their dimensional decisional abilities in such a way that a relatively significant proportion of the subject sample is in the range of performance in which they would benefit from expert consultation. Otherwise, the study yields high agreement among experts that can be uninformative at best (since even nonexperts could have given the same judgments) and misleading at worst (creating a false impression of uniformly high agreement among judges).
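The effect of sample composition on agreement can be illustrated with the same idealized model used for figure 1. The sketch below assumes two judges drawn independently from the universe of experts, so that for a subject whom a proportion p of judges would find incompetent, the two agree with probability p² + (1 − p)². The function name and both sets of p values are hypothetical; it reports raw agreement only, not kappa.

```python
def expected_pairwise_agreement(p_values):
    """Expected proportion of subjects on whom two independent judges agree,
    given each subject's probability p of being judged incompetent."""
    if not p_values:
        raise ValueError("p_values must not be empty")
    # Two judges agree if both say incompetent (p*p) or both say competent.
    return sum(p * p + (1 - p) * (1 - p) for p in p_values) / len(p_values)


# Hypothetical samples (not real data).
# Bimodal: clearly intact controls mixed with clearly impaired patients.
bimodal_sample = [0.02] * 5 + [0.98] * 5
# Gray zone: subjects about whom experts would genuinely disagree.
gray_zone_sample = [0.40, 0.45, 0.50, 0.55, 0.60] * 2

print(f"bimodal:   {expected_pairwise_agreement(bimodal_sample):.3f}")
print(f"gray zone: {expected_pairwise_agreement(gray_zone_sample):.3f}")
```

Under these assumptions the bimodal sample yields expected agreement of about 0.96 and the gray-zone sample about 0.51, although the judges are equally skilled in both cases. The difference comes entirely from which subjects were sampled.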

Potential Directions for Future Research

The expert judgments given by clinicians experienced in capacity determinations can be used to fulfill 2 types of research goals. First, their views can provide criterion validation of the competence status of a sample of subjects. Such a study can provide useful cutoff scores for the capacity-assessment instruments used, with the caveat that such scores are applicable only under contexts that are similar to the context of the experts' validation, for example, within the same research protocol or across similar protocols that have similar risks and burdens. These studies assume that the experts' judgments provide a normative standard. The above discussion has been focused on this type of study.

Another goal in studying the categorical judgments of clinician experts is to learn more about how clinicians in fact render their capacity judgments. In a developing field such as decisional capacity research, there is a need to study these judgments in their own right.27 This kind of problem is quite common in clinical medicine, since even when advanced technologies are used, the quantitative data still need to be translated into meaningful clinical decisional guides.28

There are many potential research questions regarding expert judgments of capacity. A nonexhaustive list might include the following:

What is the range of impairment (as measured by capacity interviews or by other symptom/cognitive measures) that is associated with the greatest amount of disagreement among judges? Reliably and validly defining such a “gray zone” of uncertainty can be extremely valuable for policy making, since specific safeguards can be designed for that range.

Do clinicians in fact follow normative recommendations? For example, do clinicians make capacity judgments incorporating risk-benefit considerations?

What explains the variability among clinicians' judgments? It would be useful to estimate the degree and sources of the variability, as such information can be useful in training programs to promote more reliable and valid capacity judgments. For example, do personal philosophies in balancing autonomy and welfare affect their judgments? How do such views conform with societal values? Also, it will be important to assess whether the judges are influenced by or “key in” on specific types of symptoms,29 for example, psychotic symptoms exhibited by patients in capacity interviews. What type of training is optimal to increase both the validity and the reliability of expert judgments?

Conclusions

A significant goal of capacity research—such as research focusing on the capacity of persons with schizophrenia to give consent to research—is to help formulate and implement ethically valid policies. These policies either assume that we can reliably and validly differentiate or attempt to guide the differentiation of those who are competent from those who are not. Thus, a cornerstone of research ethics policy regarding the decisionally impaired is a reliable and valid means of distinguishing the competent from the incompetent. It is highly unlikely that such means can be generated a priori by intuition or can be inferred from data that treat DMC as a dimensional concept only. Although there are unique challenges and potential pitfalls to studying DMC as a categorical concept, they can be overcome to provide the needed evidence for rational policy and practice.

Acknowledgments

This research was supported by National Institutes of Health grant K23 MH67142. I thank H. Myra Kim, Sc.D., for assistance with the simulation and also the reviewers who made many excellent suggestions to improve the article.

References

- 1. Appelbaum PS. Patients' competence to consent to neurobiological research. In: Shamoo A, editor. Ethics in Neurobiological Research With Human Subjects. Amsterdam: Gordon and Breach Publishers; 1997. pp. 253–263.

- 2. Grisso T, Appelbaum PS. The MacArthur Treatment Competence Study. III: abilities of patients to consent to psychiatric and medical treatments. Law Human Behav. 1995;19:149–174. doi: 10.1007/BF01499323.

- 3. Carpenter WT, Jr., Gold J, Lahti A, et al. Decisional capacity for informed consent in schizophrenia research. Arch Gen Psychiat. 2000;57:533–538. doi: 10.1001/archpsyc.57.6.533.

- 4. Palmer BW, Dunn LB, Appelbaum PS, Jeste DV. Correlates of treatment-related decision-making capacity among middle-aged and older patients with schizophrenia. Arch Gen Psychiat. 2004;61:230–236. doi: 10.1001/archpsyc.61.3.230.

- 5. Grisso T, Appelbaum PS. Assessing Competence to Consent to Treatment: A Guide for Physicians and Other Health Professionals. New York: Oxford University Press; 1998.

- 6. Dunn L, Lindamer L, Palmer BW, Golshan S, Schneiderman L, Jeste DV. Improving understanding of research consent in middle-aged and elderly patients with psychotic disorders. Am J Geriat Psychiat. 2002;10:142–150.

- 7. Moser DJ, Schultz SK, Arndt S, et al. Capacity to provide informed consent for participation in schizophrenia and HIV research. Am J Psychiat. 2002;159:1201–1207. doi: 10.1176/appi.ajp.159.7.1201.

- 8. Grisso T, Appelbaum PS. Values and limits of the MacArthur Treatment Competence Study. Psychol Public Pol L. 1996;2:167–181.

- 9. Wirshing DA, Wirshing WC, Marder SR, Liberman RP, Mintz J. Informed consent: assessment of comprehension. Am J Psychiat. 1998;155:1508–1511. doi: 10.1176/ajp.155.11.1508.

- 10. Marson DC, Ingram KK, Cody HA, Harrell LE. Assessing the competency of patients with Alzheimer's disease under different legal standards. A prototype instrument. Arch Neurol-Chicago. 1995;52:949–954. doi: 10.1001/archneur.1995.00540340029010.

- 11. Schmand B, Gouwenberg B, Smit JH, Jonker C. Assessment of mental competency in community-dwelling elderly. Alz Dis Assoc Dis. 1999;13:80–87. doi: 10.1097/00002093-199904000-00004.

- 12. Grisso T, Appelbaum PS, Hill-Fotouhi C. The MacCAT-T: a clinical tool to assess patients' capacities to make treatment decisions. Psychiatr Serv. 1997;48:1415–1419. doi: 10.1176/ps.48.11.1415.

- 13. Kim SYH, Caine ED, Currier GW, Leibovici A, Ryan JM. Assessing the competence of persons with Alzheimer's disease in providing informed consent for participation in research. Am J Psychiat. 2001;158:712–717. doi: 10.1176/appi.ajp.158.5.712.

- 14. Pruchno RA, Smyer MA, Rose MS, Hartman-Stein PE, Henderson-Laribee DL. Competence of long-term care residents to participate in decisions about their medical care: a brief, objective assessment. Gerontologist. 1995;35:622–629. doi: 10.1093/geront/35.5.622.

- 15. Carney M, Neugroschl R, Morrison R, Marin D, Siu A. The development and piloting of a capacity assessment tool. J Clin Ethic. 2001;12:17–22.

- 16. Fazel S, Hope T, Jacoby R. Assessment of competence to complete advance directives: validation of a patient centered approach. Brit Med J. 1999;318:493–497. doi: 10.1136/bmj.318.7182.493.

- 17. Etchells E, Darzins P, Silberfeld M, et al. Assessment of patient capacity to consent to treatment. J Gen Intern Med. 1999;14:27–34. doi: 10.1046/j.1525-1497.1999.00277.x.

- 18. Tymchuk AJ, Ouslander JG, Rahbar B, Fitten J. Medical decision-making among elderly people in long term care. Gerontologist. 1988;28(Suppl):59–63. doi: 10.1093/geront/28.suppl.59.

- 19. Molloy DW, Silberfeld M, Darzins P, et al. Measuring capacity to complete an advance directive. J Am Geriatr Soc. 1996;44:660–664. doi: 10.1111/j.1532-5415.1996.tb01828.x.

- 20. Janofsky JS, McCarthy RJ, Folstein MF. The Hopkins Competency Assessment Test: a brief method for evaluating patients' capacity to give informed consent. Hosp Community Psych. 1992;43:132–136. doi: 10.1176/ps.43.2.132.

- 21. Marson DC, McInturff B, Hawkins L, Bartolucci A, Harrell LE. Consistency of physician judgments of capacity to consent in mild Alzheimer's disease. J Am Geriatr Soc. 1997;45:453–457. doi: 10.1111/j.1532-5415.1997.tb05170.x.

- 22. Marson DC, Earnst K, Jamil F, Bartolucci A, Harrell L. Consistency of physicians' legal standard and personal judgments of competency in patients with Alzheimer's disease. J Am Geriatr Soc. 2000;48:911–918. doi: 10.1111/j.1532-5415.2000.tb06887.x.

- 23. Karlawish JHT, Casarett DJ, James BD, Xie SX, Kim SYH. The ability of persons with Alzheimer disease (AD) to make a decision about taking an AD treatment. Neurology. 2005;64:1514–1519. doi: 10.1212/01.WNL.0000160000.01742.9D.

- 24. Bean G, Nishisato S, Rector NA, Glancy G. The assessment of competence to make a treatment decision: an empirical approach. Can J Psychiat. 1996;41:85–92. doi: 10.1177/070674379604100205.

- 25. Pagano M, Gauvreau K. Principles of Biostatistics. Belmont, CA: Duxbury Press; 1993.

- 26. Wendler D, Prasad K. Core safeguards for clinical research with adults who are unable to consent. Ann Intern Med. 2001;135:514–523. doi: 10.7326/0003-4819-135-7-200110020-00011.

- 27. Kim SYH, Karlawish JHT, Caine ED. Current state of research on decision-making competence of cognitively impaired elderly persons. Am J Geriat Psychiat. 2002;10:151–165.

- 28. Grant E, Duerinckx AJ, El Saden S, et al. Doppler sonographic parameters for detection of carotid stenosis: is there an optimum method for their selection? Am J Roentgenol. 1999;172:1123–1129. doi: 10.2214/ajr.172.4.10587159.

- 29. Earnst K, Marson DC, Harrell LE. Cognitive models of physicians' legal standard and personal judgments of competency in patients with Alzheimer's disease. J Am Geriatr Soc. 2000;48:919–927. doi: 10.1111/j.1532-5415.2000.tb06888.x.