Abstract

By testing the facial fear-recognition ability of 341 men in the general population, we show that 8.8% have deficits akin to those seen with acquired amygdala damage. Using psychological tests and functional magnetic resonance imaging (fMRI), we tested the hypothesis that poor fear recognition would predict deficits in other domains of social cognition and, in response to socially relevant stimuli, abnormal activation in brain regions that putatively reflect engagement of the “social brain.” On tests of “theory of mind” ability, 25 “low fear scorers” (LFS) performed significantly worse than 25 age- and IQ-matched “normal (good) fear scorers” (NFS). Using fMRI, we compared activity evoked by neutral faces portraying different head and eye-gaze orientations during a gender judgement task in 12 NFS and 12 LFS subjects. Despite comparable between-group accuracy in gender discrimination, LFS demonstrated significantly reduced activation in the amygdala, fusiform gyrus, and anterior superior temporal cortices when viewing faces with direct versus averted gaze. In a functional connectivity analysis, NFS showed enhanced connectivity between the amygdala and anterior temporal cortex in the context of direct gaze; this enhanced coupling was absent in LFS. We suggest that important individual differences in social cognitive skills are expressed within the healthy male population and appear to have a basis in a compromised neural system that underpins social information processing.

INTRODUCTION

Answering the question of how social stimuli are processed is fundamental to understanding social and emotional behavior. Cognitive neuroscientists have highlighted a modularity within neural systems supporting the processing of social stimuli, such as faces (e.g., Kanwisher, McDermott, & Chun, 1997), and related social cognitive¹ skills, such as the ability to infer others’ thoughts, feelings, and desires (see Gallagher & Frith, 2003, for a review). There is increasing support for the concept of a “social brain” specialized for orchestrating appropriate conspecific behaviors in higher-order primates (Brothers, 1990). Its components include the amygdala, superior temporal gyrus (STG), fusiform gyrus (FG), temporal poles (TPs), and medial prefrontal cortex (MPfC).

The amygdala is primarily associated with processing emotional stimuli, such as facial expressions of emotion. For example, functional imaging studies report amygdala activation to emotional relative to neutral faces, most reliably for fearful expressions (e.g., Winston, O’Doherty, & Dolan, 2003; Morris et al., 1996). Furthermore, damage to one or both amygdalae causes deficits in recognizing “basic” emotional expressions, again most reliably for fear (e.g., Calder, Young, et al., 1996; Adolphs, Tranel, Damasio, & Damasio, 1994), and impairs the ability to infer more complex “social” emotions, such as guilt (Adolphs, Baron-Cohen, & Tranel, 2002).

Recently, however, research has highlighted a role for the amygdala in more general social cognitive processes. For example, individuals with acquired amygdala damage show deficits on “theory of mind” (ToM) tasks, which test the capacity to attribute independent mental states to others, an ability that provides a basis for explaining and predicting others’ behavior (Shaw et al., 2004; Stone, Baron-Cohen, Calder, Keane, & Young, 2003). Damage to the amygdala may also interfere with a natural predilection to imbue the world with social meaning (Heberlein & Adolphs, 2004). Similarly, neuroimaging studies have shown that the amygdala becomes activated when healthy subjects attach social meaning to animations of geometric shapes in motion (Martin & Weisberg, 2003; Schultz et al., 2003). Finally, there is some evidence that autism—a disorder primarily of social cognition—is associated with abnormal anatomy and function of the amygdala (for a review, see Sweeten, Posey, Shekhar, & McDougle, 2002).

A face with gaze directed straight towards the viewer is often replete with both emotional and social significance and can signal such diverse states as threat, sexual desire, or simply the desire to communicate. Correspondingly, neuroimaging findings show that faces with direct, relative to averted, gaze engage diffuse areas of the social brain, including the amygdala (Whalen et al., 2004; Wicker, Perrett, Baron-Cohen, & Decety, 2003; Kawashima et al., 1999), the FG (George, Driver, & Dolan, 2001), the STG (Wicker et al., 2003; Calder, Lawrence, et al., 2002), and the TP and MPfC (Kampe, Frith, & Frith, 2003).

To date, few studies have examined individual differences in social cognitive ability and linked these to underlying neural function. As part of a larger study on the genetics of social cognition, we examined the emotional expression recognition ability of a large sample of adult men (see Methods). Given that poor recognition of fear, compared to other emotions, is most often associated with amygdala damage, we examined fear recognition abilities within the sample. Interestingly, we found that 8.8% of the sample, despite an absence of psychological or neurological problems, showed deficits in fear recognition akin to those reported in patients with amygdala damage.

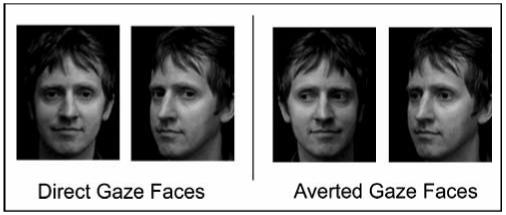

We hypothesized that low fear recognition ability would be related to reduced functional integrity of the amygdala and associated regions of the social brain (namely, the FG, STG, TP, and MPfC). First, we predicted that “low fear scorers” (LFS), compared to “normal fear scorers” (NFS), would show deficits on a test of ToM abilities, a social cognitive task known to involve these brain regions (see Saxe, Carey, & Kanwisher, 2004, for a review). Second, we predicted that LFS, compared to NFS, would show reduced activation in the amygdala and associated brain regions when processing socially relevant stimuli. We tested this prediction by comparing neural activation evoked by faces with high social significance (direct gaze) with that evoked by faces with lower social significance (averted gaze; see Figure 1). We manipulated social significance by altering eye gaze, rather than emotional expression, for two reasons. Because the subject groups had been selected on the basis of differences in fear recognition ability, a group difference in response to another socially relevant dimension of face stimuli would provide a more stringent test of our hypotheses. In addition, as discussed above, faces with direct gaze are known to engage diffuse areas of the social brain, including areas associated with ToM processing. Specifically, we predicted that LFS, compared to NFS, would show reduced activation of the bilateral amygdala, anterior STG, TP, and MPfC when viewing direct versus averted gaze stimuli.

Figure 1.

Example stimuli used to compare neural activity evoked by direct gaze with that evoked by averted gaze. Identical sets of stimuli from 11 additional sitters were formed. Mirror images of each picture were created, producing double the number of stimuli.

METHODS

We obtained ethical permission to conduct all aspects of our study from the Institute of Child Health/Great Ormond Street Hospital research ethics committee. Subjects gave written informed consent before taking part in any aspect of our study.

Population Study

On-line Test of Emotional Expression Recognition

An e-mail was circulated to each department of University College London, asking students and staff to participate in an on-line psychology experiment. A total of 341 men took part (mean age was 29.9 years, SD = 8.95).

The Ekman-Friesen Test of Facial Affect Recognition (Ekman & Friesen, 1976) was administered. Subjects viewed 60 halftone photographs of emotionally expressive faces, presented in the same order as in the original Ekman-Friesen Test (Ekman & Friesen, 1976). There were 10 exemplars of each of the six basic emotions (happiness, sadness, fear, surprise, anger, and disgust). The six emotion labels were presented adjacent to each image, and participants indicated which emotion they thought was being portrayed with a mouse click. Subjects had unlimited time to answer. Before beginning the experiment, subjects completed six practice examples, one for each emotion. No feedback was given on these items; the practice stimuli were not used in the subsequent test, and responses to them were not analyzed.

The subjects were classified according to their performance on recognition of fearful facial expressions. Subjects scoring 5/10 or less for fear recognition (8.8% of the population) were labeled as LFS. This cutoff was chosen as it encompasses the range of scores reported for patients with damaged amygdalae on similar tests (Broks et al., 1998; Calder, Young, et al., 1996; Young, Hellawell, VanDeWal, & Johnson, 1996). The mean score on the test was 8.2, and individuals scoring greater than or equal to 8/10 for fear recognition were labeled as NFS. This dichotomous approach, using extremes of the population, was chosen in preference to a correlational approach in order to maximize the potential differences associated with variation in fear-recognition ability.
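The grouping rule above can be written as a small function. This is a minimal sketch: the function names and the trial format (a list of (true emotion, chosen label) pairs) are hypothetical, and scores of 6 or 7 out of 10, which fall into neither extreme group, are returned as None.

```python
from typing import Optional, Sequence, Tuple

LFS_MAX = 5  # "5/10 or less" for fear -> low fear scorer (LFS)
NFS_MIN = 8  # "greater than or equal to 8/10" for fear -> normal fear scorer (NFS)


def fear_score(trials: Sequence[Tuple[str, str]]) -> int:
    """Count correctly labeled fear items; each trial is (true_emotion, chosen_label)."""
    return sum(1 for true, chosen in trials if true == "fear" and chosen == "fear")


def classify_fear_score(score: int, n_items: int = 10) -> Optional[str]:
    """Return 'LFS', 'NFS', or None for intermediate scores (6 or 7 of 10)."""
    if not 0 <= score <= n_items:
        raise ValueError(f"fear score must lie between 0 and {n_items}")
    if score <= LFS_MAX:
        return "LFS"
    if score >= NFS_MIN:
        return "NFS"
    return None  # intermediate scorers are excluded from the extreme-groups comparison
```

Returning None, rather than a third label, keeps the intermediate scorers visibly outside the dichotomous comparison.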

Neuropsychological Examination

Twenty-five LFS volunteered to participate in further experiments and stratified randomization was used to age-match 25 NFS volunteers (NFS, 30 ± 7.6 years; LFS, 31 ± 10.3 years, mean ± SD). None of the subjects had any history of psychological or neurological disorder.

The full-scale IQ of each subject was estimated using the two-subtest form of the Wechsler Abbreviated Scale of Intelligence (WASI). LFS and NFS were then examined on the Frith-Happé theory of mind task (Castelli, Happé, Frith, & Frith, 2000).

Retest of Emotion Recognition Abilities

As a validation of our on-line test, the LFS and NFS repeated the Ekman-Friesen Test of Facial Affect Recognition in the laboratory (see Results).

Frith-Happé Theory of Mind Task: Attributing Mental States to Animated Shapes

This animated task has been clinically validated in studies of children (Abell, Happé, & Frith, 2000) and adults (Castelli, Frith, Happé, & Frith, 2002; Castelli, Happé, et al., 2000). Eight silent cartoons, each featuring a large red triangle and a smaller blue triangle, were shown on a computer screen. Each animation lasted between 34 and 45 sec. There were two conditions with four animations in each condition. In one type of animation, the actions of one character responded simply to those of the other. Animations of this type tended to elicit goal-directed action descriptions (e.g., following, fighting) and are therefore referred to as “goal-directed” (GD) animations. The “scripts” of the GD cartoons involved the triangles chasing each other, following each other, fighting with each other, and dancing with each other. The second type of cartoon, by contrast, showed one character reacting to the other character’s mental state. This type of action pattern usually elicited more mental state (ToM) descriptions (e.g., mocking, coaxing) and will hereafter be referred to as ToM animations. The “scripts” of the ToM cartoons involved the triangles persuading, surprising, mocking, and seducing each other (see Abell et al., 2000, for more details).

Participants were read the task instructions (Castelli, Frith, et al., 2002; Castelli, Happé, et al., 2000), which asked them to describe what they thought the triangles were doing. The animations were shown in a random order. Participants’ spoken responses were recorded, transcribed, and scored. Following Castelli, Happé, et al. (2000) and Castelli, Frith, et al. (2002), responses to each animation were scored on three dimensions: intentionality (degree of intentional attribution; range 0-5, from absence of intentional language at one extreme to elaborate use at the other), appropriateness (range 0-2, from incorrect at one extreme to highly appropriate at the other), and length of description (range 0-4, from no response to four or more clauses). Responses were scored by two trained raters who were blind to NFS/LFS group membership. Where the raters’ scores differed, the item was discussed and a compromise score was agreed upon.

Group differences in behavioral scores were tested using t tests or Mann-Whitney tests, as appropriate. The significance threshold was set at p < .05, corrected for multiple comparisons with the Bonferroni-Holm method (Hochberg & Tamhane, 1987).
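For readers unfamiliar with the Bonferroni-Holm step-down procedure, the Python sketch below shows the standard rule: sort the m p values, compare the k-th smallest (k = 1, ..., m) with alpha/(m - k + 1), and stop at the first comparison that fails. The function name is hypothetical, and the strict inequality mirrors the p < .05 criterion stated above.

```python
from typing import List, Sequence


def holm_reject(p_values: Sequence[float], alpha: float = 0.05) -> List[bool]:
    """Holm step-down: for each input p value, report whether its null is rejected."""
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])  # indices, smallest p first
    reject = [False] * m
    for rank, idx in enumerate(order):  # rank 0 corresponds to k = 1
        if p_values[idx] < alpha / (m - rank):
            reject[idx] = True
        else:
            break  # once one test fails, all larger p values are retained
    return reject


# Hypothetical p values for four comparisons (not data from this study).
print(holm_reject([0.010, 0.040, 0.030, 0.005]))
```

With these illustrative values, 0.005 and 0.010 pass their thresholds (0.0125 and about 0.0167), but 0.030 exceeds 0.025, so it and 0.040 are retained.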

Functional Imaging Experiment

Twelve LFS and twelve NFS volunteers were randomly selected from the right-handed members of the earlier groups and provided written informed consent to take part in the functional magnetic resonance imaging (fMRI) study.

Stimuli

Stimuli were selected from those used in a previous study (George et al., 2001) and consisted of images of 12 people (6 men and 6 women) with a neutral expression. Each individual was photographed in full-face frontal view and with the head rotated 30° toward the right; for each of these head views, there were two images, one with the sitter looking straight at the camera and one with gaze averted by 30° (Figure 1). Each face was centered in the image frame so that the bridge of the nose between the two eyes, where faces are usually fixated at first glance (Yarbus, 1967), always fell in the same location for frontal and deviated faces. Four additional stimuli were then generated for each face by mirror-imaging those already obtained. In total, there were 96 images differing in gaze direction (48 direct gaze and 48 averted gaze).
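The stimulus set is a full factorial crossing of sitter, head view, gaze direction, and mirroring. The Python sketch below enumerates it to check the counts given above; the sitter labels and the assignment of the first six sitters as men are hypothetical placeholders.

```python
from collections import Counter
from itertools import product

SITTERS = [f"sitter{i:02d}" for i in range(1, 13)]  # hypothetical labels for the 12 people
SEX = {s: ("male" if i < 6 else "female") for i, s in enumerate(SITTERS)}  # 6 men, 6 women
HEAD_VIEWS = ("frontal", "deviated")  # full-face vs. head rotated 30 degrees
GAZE = ("direct", "averted")          # looking at the camera vs. gaze averted by 30 degrees
MIRRORED = (False, True)              # original image vs. its mirror image

stimuli = [
    {"sitter": s, "sex": SEX[s], "head": h, "gaze": g, "mirrored": m}
    for s, h, g, m in product(SITTERS, HEAD_VIEWS, GAZE, MIRRORED)
]

# The eight subconditions (head x gaze x mirroring) each contain one image per sitter.
subconditions = Counter((st["head"], st["gaze"], st["mirrored"]) for st in stimuli)
gaze_counts = Counter(st["gaze"] for st in stimuli)

print(len(stimuli), dict(gaze_counts), len(subconditions))
```

The same eight head-by-gaze-by-mirroring groups of 12 images correspond to the eight blocks described in the Task section.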

Task

Subjects were required to make a gender judgment for each face. Each stimulus was presented for 700 msec with an interstimulus interval of 500 msec. Stimuli were presented in blocks. The eight subconditions of stimuli (i.e., the four main conditions further divided by mirror imaging of the stimuli) resulted in eight blocks of 12 stimuli. The order of the blocks, and of the stimuli within each block, was randomized separately for each subject. Between blocks, a blank screen was presented for 5498 msec. Before each block, a fixation cross (placed midway between where the eyes appear in the face stimuli) was shown for 1000 msec.
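The block timing can be turned into onset times for the statistical model. This is a minimal Python sketch under stated assumptions: the 500-msec interstimulus interval is taken to follow every stimulus (including the last in a block), each block is taken to start right after its 1000-msec fixation cross, and the 5498-msec blank screen separates consecutive blocks. The per-subject shuffle uses Python’s `random` module as a stand-in for whatever randomization was actually used, and the function names are hypothetical.

```python
import random
from typing import List, Sequence

STIM_MS = 700        # stimulus duration
ISI_MS = 500         # interstimulus interval
N_PER_BLOCK = 12     # stimuli per block
FIXATION_MS = 1000   # fixation cross shown before each block
BLANK_MS = 5498      # blank screen between blocks
BLOCK_MS = N_PER_BLOCK * (STIM_MS + ISI_MS)  # 14,400 msec under the assumption above


def randomized_order(items: Sequence[str], seed: int) -> List[str]:
    """Subject-specific random order (of blocks, or of stimuli within a block)."""
    order = list(items)
    random.Random(seed).shuffle(order)
    return order


def block_onsets_ms(n_blocks: int, start_ms: int = 0) -> List[int]:
    """Onset of the first face in each block, measured from the start of the run."""
    onsets, t = [], start_ms
    for b in range(n_blocks):
        t += FIXATION_MS      # fixation cross precedes each block
        onsets.append(t)
        t += BLOCK_MS         # twelve faces, each followed by the ISI
        if b < n_blocks - 1:
            t += BLANK_MS     # blank screen between blocks
    return onsets


block_order = randomized_order([f"subcondition{i}" for i in range(1, 9)], seed=1)
print(list(zip(block_order, block_onsets_ms(len(block_order)))))
```

Under these assumptions, consecutive block onsets are 20,898 msec apart (1000 + 14,400 + 5498).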

fMRI Data Acquisition and Preprocessing

A Siemens 1.5T Sonata system (Siemens, Erlangen, Germany) was used to acquire BOLD contrast-weighted echo-planar images (EPIs) for functional scans. Volumes, which consisted of 48 horizontal slices of 2 mm thickness with a 1-mm gap, were acquired continuously every 4.32 sec, enabling whole-brain coverage. In-plane resolution was 3 × 3 mm. Each subject’s head was mildly restrained within the head coil to discourage movement. Subjects made their responses during scanning with a button box held in the right hand. The first six EPI volumes were discarded to allow for T1 equilibration effects. Functional data sets were then preprocessed using SPM2 (Wellcome Department of Imaging Neuroscience; www.fil.ion.ucl.ac.uk/spm) on a MATLAB platform (MathWorks Inc., Natick, MA): head movement was corrected by realignment, and the functional scans were normalized to an EPI template corresponding to the MNI reference brain in standard space. Normalized images were smoothed using an 8-mm Gaussian kernel.
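A few quantities implied by these acquisition and preprocessing parameters can be checked directly. The sketch below assumes that slices were acquired at equal intervals within each 4.32-sec volume and that the 8-mm kernel size is a full width at half maximum (FWHM), the convention SPM uses when specifying smoothing kernels; it converts the FWHM to a Gaussian standard deviation via FWHM = 2 sqrt(2 ln 2) sigma.

```python
import math

N_SLICES = 48
SLICE_THICKNESS_MM = 2.0
SLICE_GAP_MM = 1.0
TR_S = 4.32        # one whole-brain volume every 4.32 sec
N_DISCARDED = 6    # initial volumes dropped for T1 equilibration
FWHM_MM = 8.0      # smoothing kernel, assumed to be specified as FWHM

# Superior-inferior extent from the bottom of the first slice to the top of the last.
coverage_mm = N_SLICES * SLICE_THICKNESS_MM + (N_SLICES - 1) * SLICE_GAP_MM
slice_interval_s = TR_S / N_SLICES   # time between successive slice acquisitions
discarded_s = N_DISCARDED * TR_S     # scanning time spent on discarded volumes
sigma_mm = FWHM_MM / (2.0 * math.sqrt(2.0 * math.log(2.0)))

print(f"coverage {coverage_mm:.0f} mm, slice interval {slice_interval_s * 1000:.0f} ms, "
      f"discarded {discarded_s:.2f} s, kernel sigma {sigma_mm:.2f} mm")
```

This gives about 143 mm of slab coverage, 90 msec per slice, 25.92 sec of discarded scans, and a kernel standard deviation of roughly 3.4 mm.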

Statistical Analysis

Data analyses were conducted using SPM2, applying a mass univariate general linear model (GLM) (Friston, Holmes, et al., 1995). A mixed-effects two-level framework was used (Holmes & Friston, 1998). In individual subject analyses, each of the four main stimulus categories (averted gaze/deviated head, averted gaze/straight head, direct gaze/deviated head, and direct gaze/straight head) was modeled separately by convolving a box-car function with a synthetic hemodynamic response function. A high-pass filter with a cutoff of 238 sec was applied to remove low-frequency noise.
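The modeling steps above (a box-car convolved with a hemodynamic response function, plus a high-pass filter) can be sketched in Python with NumPy and SciPy. This is an illustration under stated assumptions, not SPM2’s code: the HRF is a double-gamma function with SPM-like default shapes (gamma densities with shapes 6 and 16, undershoot scaled by 1/6), the convolution is done on a 0.1-sec grid and sampled at the start of each volume, and the filter mimics SPM’s discrete cosine transform (DCT) high-pass set, whose number of cosine regressors depends on run length, TR, and the 238-sec cutoff.

```python
import numpy as np
from scipy.stats import gamma


def canonical_hrf(dt: float = 0.1, length_s: float = 32.0) -> np.ndarray:
    """Double-gamma HRF (shapes 6 and 16, undershoot ratio 1/6), scaled to sum to 1."""
    t = np.arange(0.0, length_s, dt)
    h = gamma.pdf(t, a=6) - gamma.pdf(t, a=16) / 6.0
    return h / h.sum()


def block_regressor(onsets_s, durations_s, n_scans: int, tr: float, dt: float = 0.1) -> np.ndarray:
    """Box-car for one condition, convolved with the HRF and sampled once per volume."""
    n_fine = int(round(n_scans * tr / dt))
    boxcar = np.zeros(n_fine)
    for onset, duration in zip(onsets_s, durations_s):
        start = int(round(onset / dt))
        stop = int(round((onset + duration) / dt))
        boxcar[start:stop] = 1.0
    convolved = np.convolve(boxcar, canonical_hrf(dt))[:n_fine]
    scan_idx = np.round(np.arange(n_scans) * tr / dt).astype(int)
    return convolved[scan_idx]


def dct_highpass_basis(n_scans: int, tr: float, cutoff_s: float = 238.0) -> np.ndarray:
    """Cosine drift regressors in the style of SPM's DCT filter (constant term excluded)."""
    order = int(np.floor(2.0 * n_scans * tr / cutoff_s + 1.0))
    t = np.arange(n_scans)
    columns = [np.sqrt(2.0 / n_scans) * np.cos(np.pi * (2 * t + 1) * k / (2 * n_scans))
               for k in range(1, order)]
    if not columns:
        return np.zeros((n_scans, 0))
    return np.column_stack(columns)


def highpass(y: np.ndarray, basis: np.ndarray) -> np.ndarray:
    """Remove the low-frequency components spanned by the DCT basis."""
    if basis.shape[1] == 0:
        return y.copy()
    return y - basis @ (np.linalg.pinv(basis) @ y)
```

One regressor of this kind per stimulus category, together with a constant, would form the first-level design matrix; the same filter is applied to the data and the design so that slow drifts do not load onto the condition effects.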

Subject-specific parameter estimates were calculated for each stimulus category at every voxel. Specific effects (e.g., direct gaze - averted gaze) were tested by applying linear contrasts to the parameter estimates for each stimulus category. Contrast images from each subject were entered into second-level (random effects) analyses. In the a priori regions of interest (see Introduction), the statistical threshold was set at p < .001, uncorrected for multiple comparisons.
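The first-level contrast and the random-effects step can be illustrated for a single voxel. The Python sketch below assumes an ordinary least-squares fit with the design columns in the order the four stimulus categories are listed in the Statistical Analysis section, followed by a constant, and it omits SPM’s temporal autocorrelation modeling. The contrast weights [-1, -1, 1, 1, 0] express direct gaze minus averted gaze, and the second level is a one-sample t test on the per-subject contrast values; the design and data in the demonstration are synthetic.

```python
import numpy as np
from scipy import stats

# Design-matrix column order assumed here (the last column is a constant).
COLUMNS = ["averted/deviated", "averted/straight", "direct/deviated", "direct/straight", "constant"]
C_DIRECT_MINUS_AVERTED = np.array([-1.0, -1.0, 1.0, 1.0, 0.0])


def fit_glm(X: np.ndarray, y: np.ndarray) -> np.ndarray:
    """Ordinary least-squares parameter estimates for one voxel's time series."""
    beta, *_ = np.linalg.lstsq(X, y, rcond=None)
    return beta


def contrast_value(X: np.ndarray, y: np.ndarray, c: np.ndarray) -> float:
    """Linear contrast of the parameter estimates (one value per subject and voxel)."""
    return float(c @ fit_glm(X, y))


def random_effects_t(con_values) -> tuple:
    """Second level: one-sample t test of subjects' contrast values against zero."""
    res = stats.ttest_1samp(np.asarray(con_values, dtype=float), popmean=0.0)
    return float(res.statistic), float(res.pvalue)


rng = np.random.default_rng(0)
X = np.column_stack([rng.random((60, 4)), np.ones(60)])  # synthetic design, not real regressors
y = X @ np.array([1.0, 2.0, 4.0, 5.0, 10.0])             # noiseless synthetic voxel
print(contrast_value(X, y, C_DIRECT_MINUS_AVERTED))      # approximately 6 (= 4 + 5 - 1 - 2)
```

Taking the contrast within each subject and testing it across subjects is what allows the inference to generalize to the population rather than to the scanned sessions.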

Functional Connectivity

To investigate functional connectivity between the amygdala and other brain regions in the context of direct gaze we tested for “psychophysiological interactions.” These are condition-dependent (e.g., direct vs. averted gaze) changes in the covariation of response between a reference brain region and other brain regions (Friston, Buchel, et al., 1997). Separately for each subject, values of adjusted responses to direct gaze faces relative to averted gaze were extracted from the voxel in the left amygdala maximally activated in the direct-averted gaze contrast of the main analysis (maximal voxel: x -21, y -3, z -21). Using a specially developed routine in SPM2, we first deconvolved the adjusted data and extracted amygdala activity at the time of the direct gaze blocks. The resulting condition-specific estimate of neuronal activity was then reconvolved with a synthetic hemodynamic response function. This procedure was repeated for the averted gaze blocks. The resulting regressors were entered as variables of interest into a separate analysis. Linear contrasts were applied to the parameter estimates for the regressors in order to identify regions where responses exhibited significant condition-dependent interactions with amygdala activity. This was done separately for each subject. The resulting contrast images were entered into a second-level (random effects) analysis in order to allow generalization to the population.
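The psychophysiological interaction steps can be illustrated with a simplified Python sketch. It does not reproduce SPM2’s deconvolution routine: it starts from a hypothetical neural-level seed time series on a fine time grid (the quantity that the deconvolution step estimates), multiplies it by indicator functions for the direct- and averted-gaze blocks, reconvolves each product with a double-gamma HRF (SPM-like default shapes, as an assumption), and samples the result at each volume. A contrast of the two resulting regressors then tests for a condition-dependent change in coupling with the seed. All function names are hypothetical.

```python
import numpy as np
from scipy.stats import gamma


def canonical_hrf(dt: float, length_s: float = 32.0) -> np.ndarray:
    """Double-gamma HRF with SPM-like default shapes (an assumption), summing to 1."""
    t = np.arange(0.0, length_s, dt)
    h = gamma.pdf(t, a=6) - gamma.pdf(t, a=16) / 6.0
    return h / h.sum()


def ppi_regressors(neural_seed, direct, averted, dt: float, tr: float):
    """Reconvolve seed x condition products and sample them once per volume.

    neural_seed, direct, averted: equal-length arrays on a grid of step dt (sec);
    direct and averted are 0/1 indicators of the two kinds of block.
    """
    hrf = canonical_hrf(dt)
    n = len(neural_seed)
    scan_idx = np.round(np.arange(0.0, n * dt, tr) / dt).astype(int)
    scan_idx = scan_idx[scan_idx < n]

    def to_bold(x):
        return np.convolve(x, hrf)[:n][scan_idx]

    return to_bold(neural_seed * direct), to_bold(neural_seed * averted)


def coupling_contrast(ppi_direct, ppi_averted, y) -> float:
    """Fit y on both PPI regressors plus a constant; return beta_direct - beta_averted."""
    X = np.column_stack([ppi_direct, ppi_averted, np.ones_like(ppi_direct)])
    beta, *_ = np.linalg.lstsq(X, y, rcond=None)
    return float(beta[0] - beta[1])
```

A fuller PPI model would typically also include the seed time course and the psychological (condition) regressors as covariates, so that the interaction is not confounded with main effects.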

RESULTS

Behavioral Findings

Population Study

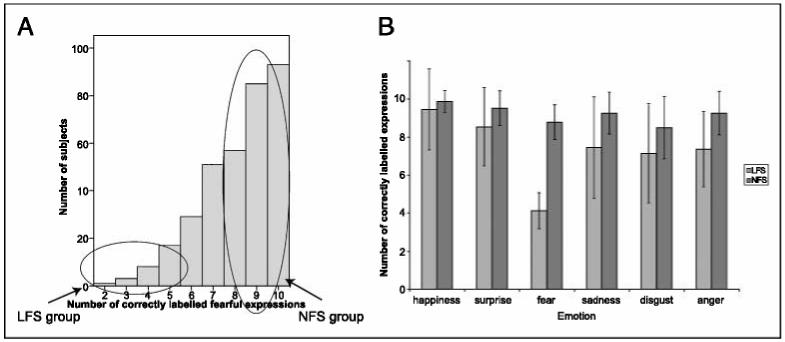

There were 341 men among the respondents to the Web-based survey of emotional recognition ability. Of these male respondents, 8.8% scored 5/10 or less for fear recognition and were labeled as LFS; 68.9% of the population scored at least 8/10 for fear recognition and were labeled as NFS (Figure 2A). The reaction times for fear responses were very similar for both groups—LFS: (mean ± SD) 925 ± 332 msec; NFS: 958 ± 384 msec; t(48) = -0.33, p = .74—suggesting that differences in fear recognition were not due to differences in attention span. Milder impairments in the responses of LFS were apparent in the recognition of sad and angry facial expressions (Figure 2B).
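As a quick arithmetic check, the reaction-time comparison above can be recomputed from the summary statistics with SciPy, assuming 25 subjects per group (consistent with 48 degrees of freedom) and a pooled-variance t test. Because the reported means and SDs are rounded, the recomputed values are only approximate.

```python
from scipy.stats import ttest_ind_from_stats

# Summary statistics for fear-response reaction times (msec) as reported above.
res = ttest_ind_from_stats(mean1=925, std1=332, nobs1=25,   # LFS
                           mean2=958, std2=384, nobs2=25,   # NFS
                           equal_var=True)
print(f"t(48) = {res.statistic:.2f}, p = {res.pvalue:.2f}")
```

This yields a t of about -0.33 and a two-tailed p of roughly .75, close to the reported t(48) = -0.33, p = .74.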

Figure 2.

Results of the Ekman-Friesen test of facial affect recognition. (A) Distribution of fear recognition scores among the 341 male respondents to the on-line Ekman-Friesen test (max score = 10). Mean score ± SD = 8.2 ± 1.7. Those scoring ≤ 5 were labeled as low fear scorers (LFS); those scoring ≥ 8 were labeled as normal fear scorers (NFS). (B) Mean number of correct responses for all six basic emotions on the Ekman-Friesen test for the 25 LFS and 25 NFS who took part in further study. Scores are out of 10. Error bars indicate ± 1 standard deviation. As well as the difference in fear recognition, LFS were impaired, relative to NFS, at recognition of sad and angry expressions. Sadness: Mann-Whitney U = 113.5, p < .05; anger: Mann-Whitney U = 84.5, p < .01, Bonferroni corrected.

Neuropsychological Tests

Age-matched subsamples of 25 LFS and 25 NFS were retested on their ability to correctly identify emotional expressions, as well as undertaking tests of gaze perception and “mentalizing” ability. The IQs of the two groups did not differ significantly (mean ± SD: LFS 117 ± 10.2, NFS 121 ± 8.5; t(48) = -1.3, p = .20).

Retest of Emotion Recognition Abilities

Subjects were retested on the Ekman-Friesen Test of Facial Affect Recognition. Although both groups showed higher fear recognition scores on retest, there remained a highly significant difference between LFS and NFS (mean ± SD: LFS 6.9 ± 2.3, NFS 9.7 ± 0.7; Mann-Whitney U = 66.5, p < .001). The milder differences in the ability to correctly label sad and angry expressions also remained but, on this occasion, did not reach our corrected threshold for statistical significance (sadness: LFS 7.6 ± 2.0, NFS 8.4 ± 1.2, U = 159, p = .09; anger: LFS 8.2 ± 2.2, NFS 9.0 ± 1.0, U = 170.5, p = .15).

Theory of Mind Task

Table 1 shows results for this task. As predicted, LFS made fewer, and less appropriate, mental state attributions to the ToM animations than did NFS. These differences were significant at the 5% level, corrected for multiple comparisons (see Table 1). The two groups did not differ in their intentionality ratings of the GD animations. LFS showed a trend towards lower appropriateness scores for these animations but this did not reach significance. There were no differences between the two groups on the length of their descriptions.

Table 1.

Scores on the Frith-Happé ToM Task. Mean Scores (Standard Deviations)

| Rating Type (Range) and Group | ToM Animations | GD Animations |
|---|---|---|
| Intentionality (0-5) | | |
| LFS | 3.7 (0.8)* | 3.1 (0.6) |
| NFS | 4.2 (0.5) | 3.1 (0.5) |
| Appropriateness (0-3) | | |
| LFS | 1.1 (0.5)* | 1.4 (0.3) |
| NFS | 1.4 (0.4) | 1.6 (0.4) |
| Length (0-4) | | |
| LFS | 3.7 (0.5) | 3.5 (0.7) |
| NFS | 3.8 (0.4) | 3.5 (0.7) |

ToM = theory of mind; GD = goal directed.

*p < .05, comparing LFS to NFS, corrected for multiple comparisons.

Neuroimaging Findings

Task Performance

Mean accuracy across both groups was 96% on the gender discrimination task. There were no significant differences between the NFS and LFS subject groups (percent correct ± SD: NFS 97 ± 2.5, LFS 94 ± 4.5; z = -1.7, p = .1).

Direct Gaze versus Averted Gaze

Across both subject groups, direct, relative to averted, eye gaze enhanced activity within the bilateral amygdala, the bilateral FG, and regions surrounding the anterior STG, all areas attributed to the social brain (Brothers, 1990; see Table 2).

Table 2.

Regions Activated in a Direct-Averted Gaze Contrast

| Group | Brain Region | MNI Coordinates (x, y, z) | Z Value |
|---|---|---|---|
| Combined | Left amygdala | -21, -3, -21 | 3.29 |
| | Right amygdala | 21, -5, -15 | 3.24 |
| | Left lateral FG | -54, -63, -12 | 3.14 |
| | Left lateral FG | -48, -51, -18 | 3.04 |
| | Right FG | 36, -57, -18 | 2.89* |
| | Left anterior STG | -57, 3, -3 | 3.61 |
| | Left anterior middle temporal gyrus | -60, 0, -18 | 3.37 |
| NFS only | Left amygdala | -27, -3, -15 | 3.30 |
| | Right amygdala | 21, -6, -12 | 3.68 |
| | Left lateral FG | -51, -63, -12 | 3.60 |
| | Left anterior STG | -57, 6, -3 | 3.16 |
| | Left anterior middle temporal gyrus | -48, 0, -18 | 3.27 |
| | Right TP | 39, 21, -36 | 3.17 |
| | Right MPfC | 9, 69, 12 | 3.04 |
| LFS only | No significant voxels | | |

FG = fusiform gyrus; STG = superior temporal gyrus; TP = temporal pole; MPfC = medial prefrontal cortex.

Activations are significant at p < .001 (uncorrected), except *p = .002 (uncorrected).

We then tested the hypothesis that direct gaze would activate these regions more strongly in NFS than in LFS. In the group-by-condition interaction contrast, (NFS direct gaze - NFS averted gaze) - (LFS direct gaze - LFS averted gaze), we observed greater activity in NFS than in LFS in the left amygdala (x -27, y 0, z -18; Z = 3.29), left lateral posterior FG (x -51, y -60, z -12; Z = 3.13), and left anterior STG (x -57, y 3, z -3; Z = 3.82) (Figure 3). For each of these regions, equivalent areas in the right hemisphere showed a trend towards greater activation in NFS than in LFS (right amygdala, x 30, y -6, z -21, Z = 2.40; right posterior FG, x 45, y -69, z -9, Z = 1.50; right anterior STG, x 60, y 6, z -3, Z = 1.71). Moreover, when the direct gaze - averted gaze contrast was performed separately in each group, NFS activated the left and right amygdala, left anterior STG, left lateral FG, right TP, and right MPfC, whereas LFS showed no voxels within these areas above the threshold of p < .001, uncorrected (see Table 2).
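In a random-effects framework, a group-by-condition interaction of this kind is commonly tested by entering each subject’s first-level direct minus averted contrast value into a two-sample t test (NFS vs. LFS), and SPM reports the resulting statistics as equivalent Z scores. The Python sketch below illustrates both steps for a single voxel; the function names and example contrast values are hypothetical, and with 12 subjects per group the test would have 22 degrees of freedom.

```python
from typing import Sequence, Tuple

from scipy import stats


def interaction_t(con_nfs: Sequence[float], con_lfs: Sequence[float]) -> Tuple[float, int]:
    """Pooled-variance two-sample t test of NFS vs. LFS direct-minus-averted contrasts."""
    res = stats.ttest_ind(con_nfs, con_lfs, equal_var=True)
    df = len(con_nfs) + len(con_lfs) - 2
    return float(res.statistic), df


def t_to_z(t: float, df: int) -> float:
    """Z score with the same one-tailed p value as t on df degrees of freedom."""
    return float(stats.norm.isf(stats.t.sf(t, df)))


# Hypothetical per-subject contrast values (arbitrary units), not data from this study.
t_stat, dof = interaction_t([2.0, 3.0, 4.0], [0.0, 1.0, 2.0])
print(t_stat, dof, t_to_z(t_stat, dof))
```

Because the t distribution has heavier tails than the normal, the equivalent Z is smaller than t for positive values, and the two converge as the degrees of freedom grow.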

Figure 3.

(Top) regions where NFS show greater responses to direct versus averted gaze than LFS. (Bottom) parameter estimates of the activity in the maxima, showing estimates of mean corrected percent signal change (relative to overall mean activity at each voxel), averaged across repetitions and subjects, for the two groups (NFS and LFS) and two conditions (direct or averted gaze). Error bars indicate standard errors.

Functional Connectivity

We next tested for group differences in the functional connectivity of amygdala responses as a function of the gaze direction of the perceived faces (see Methods). NFS, compared to LFS, showed greater functional coupling of the left amygdala with the left anterior STG (x -54, y -3, z 0; Z = 3.56) and right TP (x 36, y 3, z -48; Z = 3.01) when processing direct eye gaze.

DISCUSSION

Our findings indicate that a significant minority (8.8%) of the healthy male population (within a university environment) have a marked deficit in the recognition of emotional expressions, especially fear. These deficits are at a level akin to those reported in patients with acquired amygdala damage (Calder, Young, et al., 1996). An associated finding is that these deficits extend to encompass other socially relevant skills. These deficits are associated with abnormalities in the brain’s response to socially relevant facial cues as well as in coupling between brain regions previously implicated in processing social information and the development of social skills.

In the Frith-Happé task, LFS attributed fewer and less appropriate mental states to the triangles in the ToM cartoons, but showed no deficits in the attribution of mental states to the GD cartoons. This pattern of scores is remarkably similar to that seen with high-functioning autistic/Asperger syndrome subjects as reported by Castelli, Frith, et al. (2002). Amygdala-damaged patients also show deficits on various ToM tasks (Shaw et al., 2004; Stone et al., 2003; Fine, Lumsden, & Blair, 2001), including one requiring anthropomorphizing of abstract animations (Heberlein & Adolphs, 2004). Also, normal subjects show activation in the amygdala, FG, TP, and MPfC when attributing social meaning to such abstract animations (Martin & Weisberg, 2003; Schultz et al., 2003). Hence, we show that poor ability to recognize fear from facial expressions among members of the general population is predictive of a pattern of social cognitive deficits consistent with impaired functioning within the so-called social brain (amygdala, FG, STG, TP, and MPfC).

It should be noted, however, that although we chose a categorical experimental design, comparing very low to normal fear scorers (see Methods), these data should not necessarily be interpreted as implying the existence of a “special population” of LFS. It may indeed prove to be the case that there are genetic and/or other biological variables that can predispose one to poor fear recognition and any associated social cognitive deficits. However, fear recognition score appears to be a continuous variable (see Figure 2A) and its variation may reflect normal individual differences with multiple and complex etiologies.

In the neuroimaging experiment, subjects were not required to make explicit judgements about eye gaze; hence, any processing of gaze direction was incidental. We replicated earlier findings suggesting an involvement of the amygdala in processing direct gaze (Whalen et al., 2004; Wicker et al., 2003; George et al., 2001; Kawashima et al., 1999). Theories of amygdala function stress its role in rapid, and often automatic, detection of biologically (emotionally) significant events (Dolan, 2002). The amygdala acts both to heighten perception of a salient stimulus and to enhance memory of its occurrence, thus affecting both immediate and future behavior (for a review, see Dolan, 2002). Amygdala activation to direct eye gaze may serve to increase attention toward the gazer and to enhance perception of the face and situation. In this context, the difference between NFS and LFS in amygdala responses to direct eye gaze (NFS showed strong bilateral amygdala activation, whereas LFS showed no amygdala voxels above threshold) provides support for a fundamental contribution of the amygdala to the development of social cognitive abilities.

Another area showing greater activation to social stimuli among the NFS was the left anterior STG (see Figure 3). Several studies have implicated the STG in processing of seen gaze direction (e.g., Hoffman & Haxby, 2000; Puce, Allison, Bentin, Gore, & McCarthy, 1998); however, the locus of activity in these studies was far posterior to the one reported here, in an area surrounding the temporoparietal junction (TPJ). The discrepancy between these earlier studies and our own is likely to be due to the focus of the previous studies on neural correlates of the perception of averted gaze direction, rather than on the contrast of direct versus averted gaze.

Areas corresponding to an anterior STG locus, similar to that activated in our study, were activated in a direct-averted gaze contrast in the study by Calder, Lawrence, et al. (2002), albeit in the right hemisphere. We found significant anterior STG activation to direct gaze only in the left hemisphere, although there was a trend toward significance in equivalent areas in the right hemisphere. Interestingly, Wicker et al. (2003) found bilateral activation of the anterior STG in the same area as our locus, both when subjects had to judge emotion from eyes, keeping gaze constant, and in a direct-averted gaze contrast. An interaction analysis showed that the anterior STG was selectively activated when subjects interpreted emotions that were personally directed.

Wicker et al. (2003) hypothesize that the anterior STG may be a component of a neural system for processing “second-person” intentional relations through eye contact. Baron-Cohen (1994) has argued that such a system is a precursor to the “ToM module.” Evidence in favor of this perspective comes from several neuroimaging studies, which show that the anterior STG is one of a number of brain regions associated with the attribution of mental states (see Saxe et al., 2004, for a review). Thus, the activation of the anterior STG in NFS when someone looks directly at them may reflect engagement of “mentalizing networks,” even though the explicit task did not require ToM reasoning. LFS, however, lack this activation, perhaps indicating that they do not automatically analyze the intentions of someone looking directly at them. This may underlie their relatively poor performance on the Frith-Happé ToM task in the current study.

In another study of eye gaze processing, Kampe et al. (2003) report activation in the MPfC and the TP in response to direct gaze faces. The authors suggest that activity in these regions, which are associated with ToM/mentalization processing (for a review, see Gallagher & Frith, 2003), reflects the representation of communicative intent—a process that relies on mentalizing ability (Leslie & Happé, 1989). We observed activation of both these regions in NFS, but not LFS, in response to direct gaze. Moreover, as was seen with the anterior STG, the functional connectivity of the TP with the amygdala was strengthened during direct eye gaze in NFS but not in LFS. The lack of response in these regions demonstrated by the LFS group is consistent with a crucial contribution of MPfC and TP to rapid, automatic engagement of mentalizing.

Our functional connectivity analysis suggests links, in NFS, between areas involved in decoding the intentions of the gazer (anterior STG and TP) and those that extract affective significance from a face (amygdala). Although the direction of the relationship cannot be determined, this may be a specific example of the amygdala’s neuromodulatory role in enhancing the processing of biologically/socially relevant stimuli in other brain regions (Dolan, 2002). That is to say, when an arousing stimulus (such as someone staring straight at you) signals the need to analyze the intent of a conspecific, the amygdala may promote recruitment of mentalizing regions. If this mechanism is impaired, deficits in mentalizing ability may arise.

An important caveat is that although we presented a fixation cross in the eye region of the stimuli before each block, we did not monitor subjects’ fixation during the experiment, for example, via eye-tracking technology. Nevertheless, LFS behavioral performance in the fMRI experiment was high (>94% correct responses), suggesting that their attention was directed toward the stimuli. However, we cannot rule out the possibility that LFS may not have been tracking the eye region of the stimuli as much as NFS, spending more time on other facial features such as the mouth. This suggestion merits further exploration because recent evidence by Adolphs, Gosselin, et al. (2005) shows that a patient with bilateral amygdala damage (S.M.) fails to track eyes normally and that this may be a causal factor in her poor fear-recognition ability. If LFS, like patient S.M., are tracking eyes abnormally, this may lead to their low fear scores as well as their failure to activate the amygdala and other areas in response to direct gaze. Future work needs to examine the relationship between the tracking of eyes and fear recognition in the normal population. How aberrant tracking of eyes may lead (perhaps developmentally) to deficits on social cognitive tasks that do not require the processing of face stimuli (such as the Frith-Happé ToM task) is another intriguing and pertinent question.

It has been argued that social cognition represents a distinct cognitive “domain” or “module” (e.g., Brothers, 1990). One form of evidence for modularity is the existence of individuals with a selective deficit in the area of cognition under question, especially if this deficit can be associated with a reduction in functional capability in a given neural system (Gardner, 1983). In the domain of social cognition, individuals with autism have been cited as a population with a selective social cognitive deficit (Karmiloff-Smith, Klima, Bellugi, Grant, & Baron-Cohen, 1995; Brothers, 1990), and such individuals fail to activate areas of the social brain when processing socially relevant stimuli (for reviews, see Schultz, 2005; Gallagher & Frith, 2003; Baron-Cohen et al., 2000). Patients with prosopagnosia or lesions to the orbitofrontal cortex provide examples of individuals with selective deficits in subsystems of the social cognitive “module” resulting from circumscribed brain damage (Brothers, 1990). In the present study we show that subjects from the normal population can demonstrate variability in their social cognitive abilities in the absence of a corresponding variability in global cognitive abilities (there were no differences in IQ between our two groups) and that these deficits are associated with reduced activity and functional connectivity within the social brain. As such, we provide evidence in support of a social cognitive module, subserving behavior in response to conspecifics.

However, it should be noted that the roles of the structures purported to be involved in the social module are not restricted to mediating behavior in social situations. For example, the amygdala has an established and significant role supporting fear conditioning (LeDoux, 2003) and the FG has been shown to be involved in processing any set of stimuli in which a person becomes “expert” (Gauthier, Skudlarski, Gore, & Anderson, 2000). Therefore, the structural architecture of cognitive modules should be thought of as, in some sense, “fluid,” their exact makeup depending on the nature of the cognitive task at hand.

Our study defines a neurobiological network wherein functional variation is associated with individual differences in social cognition. Sources of this variation may in part be genetic. Linking deviation in social cognitive performance to neural functioning and, ultimately, to genetics may prove to be a fruitful method for understanding the etiology of individual differences in social functioning.

Acknowledgments

The authors thank Natalie George for providing stimuli used in this experiment and John Morris for help and advice.

Footnotes

The definition of “social cognition” varies across fields, as well as authors and contexts. Here, we are referring to those cognitive processes that underpin behavior during a social interaction, including the human capacity to perceive the intentions and dispositions of others.

The data reported in this experiment have been deposited with the fMRI Data Center (www.fmridc.org). The accession number is 2-2003-1206T.

REFERENCES

- Abell F, Happé F, Frith U. Do triangles play tricks? Attribution of mental states to animated shapes in normal and abnormal development. Cognitive Development. 2000;15:1–20.

- Adolphs R, Baron-Cohen S, Tranel D. Impaired recognition of social emotions following amygdala damage. Journal of Cognitive Neuroscience. 2002;14:1264–1274. doi: 10.1162/089892902760807258.

- Adolphs R, Gosselin F, Buchanan TW, Tranel D, Schyns P, Damasio AR. A mechanism for impaired fear recognition after amygdala damage. Nature. 2005;433:68–72. doi: 10.1038/nature03086.

- Adolphs R, Tranel D, Damasio H, Damasio A. Impaired recognition of emotion in facial expressions following bilateral damage to the human amygdala. Nature. 1994;372:669–672. doi: 10.1038/372669a0.

- Baron-Cohen S. How to build a baby that can read minds: Cognitive mechanisms in mindreading. Cahiers de Psychologie Cognitive (Current Psychology of Cognition). 1994;13:513–552.

- Baron-Cohen S, Ring HA, Bullmore ET, Wheelwright S, Ashwin C, Williams SCR. The amygdala theory of autism. Neuroscience and Biobehavioral Reviews. 2000;24:355–364. doi: 10.1016/s0149-7634(00)00011-7.

- Broks P, Young AW, Maratos EJ, Coffey PJ, Calder AJ, Isaac CL, Mayes AR, Hodges JR, Montaldi D, Cezayirli E, Roberts N, Hadley D. Face processing impairments after encephalitis: Amygdala damage and recognition of fear. Neuropsychologia. 1998;36:59–70. doi: 10.1016/s0028-3932(97)00105-x.

- Brothers L. The social brain: A project for integrating primate behaviour and neurophysiology in a new domain. Concepts in Neuroscience. 1990;1:27–51.

- Calder AJ, Lawrence AD, Keane J, Scott SK, Owen AM, Christoffels I, Young AW. Reading the mind from eye gaze. Neuropsychologia. 2002;40:1129–1138. doi: 10.1016/s0028-3932(02)00008-8.

- Calder AJ, Young AW, Rowland D, Perrett DI, Hodges JR, Etcoff NL. Facial emotion recognition after bilateral amygdala damage: Differentially severe impairment of fear. Cognitive Neuropsychology. 1996;13:699–745.

- Castelli F, Frith C, Happé F, Frith U. Autism, Asperger syndrome and brain mechanisms for the attribution of mental states to animated shapes. Brain. 2002;125:1839–1849. doi: 10.1093/brain/awf189.

- Castelli F, Happé F, Frith U, Frith C. Movement and mind: A functional imaging study of perception and interpretation of complex intentional movement patterns. Neuroimage. 2000;12:314–325. doi: 10.1006/nimg.2000.0612.

- Dolan RJ. Emotion, cognition, and behavior. Science. 2002;298:1191–1194. doi: 10.1126/science.1076358.

- Ekman P, Friesen W. Pictures of facial affect. Palo Alto, CA: Consulting Psychologists Press; 1976.

- Fine C, Lumsden J, Blair RJR. Dissociation between “theory of mind” and executive functions in a patient with early left amygdala damage. Brain. 2001;124:287–298. doi: 10.1093/brain/124.2.287.

- Friston KJ, Büchel C, Fink G, Morris JS, Rolls ET, Dolan RJ. Psychophysiological and modulatory interactions in neuroimaging. Neuroimage. 1997;6:218–229. doi: 10.1006/nimg.1997.0291.

- Friston KJ, Holmes AP, Worsley K, Poline J-B, Frith C, Frackowiak RSJ. Statistical parametric maps in functional imaging: A general linear approach. Human Brain Mapping. 1995;2:189–210.

- Gallagher HL, Frith CD. Functional imaging of “theory of mind.” Trends in Cognitive Sciences. 2003;7:77–83. doi: 10.1016/s1364-6613(02)00025-6.

- Gardner H. Frames of mind: The theory of multiple intelligences. New York: Basic Books; 1983.

- Gauthier I, Skudlarski P, Gore JC, Anderson AW. Expertise for cars and birds recruits brain areas involved in face recognition. Nature Neuroscience. 2000;3:191–197. doi: 10.1038/72140.

- George N, Driver J, Dolan RJ. Seen gaze-direction modulates fusiform activity and its coupling with other brain areas during face processing. Neuroimage. 2001;13:1102–1112. doi: 10.1006/nimg.2001.0769.

- Heberlein AS, Adolphs R. Impaired spontaneous anthropomorphizing despite intact perception and social knowledge. Proceedings of the National Academy of Sciences, U.S.A. 2004;101:7487–7491. doi: 10.1073/pnas.0308220101.

- Hochberg Y, Tamhane AC. Multiple comparison procedures. New York: Wiley; 1987.

- Hoffman EA, Haxby JV. Distinct representations of eye gaze and identity in the distributed human neural system for face perception. Nature Neuroscience. 2000;3:80–84. doi: 10.1038/71152.

- Holmes AP, Friston KJ. Generalisability, random effects and population inference. Neuroimage. 1998;7:S754.

- Kampe KKW, Frith CD, Frith U. “Hey John”: Signals conveying communicative intention toward the self activate brain regions associated with “mentalizing,” regardless of modality. Journal of Neuroscience. 2003;23:5258–5263. doi: 10.1523/JNEUROSCI.23-12-05258.2003.

- Kanwisher N, McDermott J, Chun MM. The fusiform face area: A module in human extrastriate cortex specialized for face perception. Journal of Neuroscience. 1997;17:4302–4311. doi: 10.1523/JNEUROSCI.17-11-04302.1997.

- Karmiloff-Smith A, Klima E, Bellugi U, Grant J, Baron-Cohen S. Is there a social module? Language, face processing, and theory of mind in individuals with Williams syndrome. Journal of Cognitive Neuroscience. 1995;7:196–208. doi: 10.1162/jocn.1995.7.2.196.

- Kawashima R, Sugiura M, Kato T, Nakamura A, Hatano K, Ito K, Fukuda H, Kojima S, Nakamura K. The human amygdala plays an important role in gaze monitoring: A PET study. Brain. 1999;122:779–783. doi: 10.1093/brain/122.4.779.

- LeDoux J. The emotional brain, fear, and the amygdala. Cellular and Molecular Neurobiology. 2003;23:727–738. doi: 10.1023/A:1025048802629.

- Leslie AM, Happé F. Autism and ostensive communication: The relevance of metarepresentation. Development and Psychopathology. 1989;1:205–212.

- Martin A, Weisberg J. Neural foundations for understanding social and mechanical concepts. Cognitive Neuropsychology. 2003;20:575–587. doi: 10.1080/02643290342000005.

- Morris JS, Frith CD, Perrett DI, Rowland D, Young AW, Calder AJ, Dolan RJ. A differential neural response in the human amygdala to fearful and happy facial expressions. Nature. 1996;383:812–815. doi: 10.1038/383812a0.

- Puce A, Allison T, Bentin S, Gore JC, McCarthy G. Temporal cortex activation in humans viewing eye and mouth movements. Journal of Neuroscience. 1998;18:2188–2199. doi: 10.1523/JNEUROSCI.18-06-02188.1998.

- Saxe R, Carey S, Kanwisher N. Understanding other minds: Linking developmental psychology and functional neuroimaging. Annual Review of Psychology. 2004;55:87–124. doi: 10.1146/annurev.psych.55.090902.142044.

- Schultz RT. Developmental deficits in social perception in autism: The role of the amygdala and fusiform face area. International Journal of Developmental Neuroscience. 2005;23:125–141. doi: 10.1016/j.ijdevneu.2004.12.012.

- Schultz RT, Grelotti DJ, Klin A, Kleinman J, Van der Gaag C, Marois R, Skudlarski P. The role of the fusiform face area in social cognition: Implications for the pathobiology of autism. Philosophical Transactions of the Royal Society of London, Series B, Biological Sciences. 2003;358:415–427. doi: 10.1098/rstb.2002.1208.

- Shaw P, Lawrence EJ, Radbourne J, Bramham J, Polkey CE, David AS. The impact of early and late damage to the human amygdala on ‘theory of mind’ reasoning. Brain. 2004;127:1535–1548. doi: 10.1093/brain/awh168.

- Stone VE, Baron-Cohen S, Calder AJ, Keane J, Young A. Acquired theory of mind impairments in individuals with bilateral amygdala lesions. Neuropsychologia. 2003;41:209–220. doi: 10.1016/s0028-3932(02)00151-3.

- Sweeten TL, Posey DJ, Shekhar A, McDougle CJ. The amygdala and related structures in the pathophysiology of autism. Pharmacology, Biochemistry and Behavior. 2002;71:449–455. doi: 10.1016/s0091-3057(01)00697-9.

- Whalen PJ, Kagan J, Cook RG, Davis FC, Kim H, Polis S, McLaren DG, Somerville LH, McLean AA, Maxwell JS, Johnstone T. Human amygdala responsivity to masked fearful eye whites. Science. 2004;306:2061. doi: 10.1126/science.1103617.

- Wicker B, Perrett DI, Baron-Cohen S, Decety J. Being the target of another’s emotion: A PET study. Neuropsychologia. 2003;41:139–146. doi: 10.1016/s0028-3932(02)00144-6.

- Winston JS, O’Doherty J, Dolan RJ. Common and distinct neural responses during direct and incidental processing of multiple facial emotions. Neuroimage. 2003;20:84–97. doi: 10.1016/s1053-8119(03)00303-3.

- Yarbus AL. Eye movements and vision. New York: Plenum; 1967.

- Young AW, Hellawell DJ, VanDeWal C, Johnson M. Facial expression processing after amygdalotomy. Neuropsychologia. 1996;34:31–39. doi: 10.1016/0028-3932(95)00062-3.