Abstract

Human and mouse subjects tried to anticipate at which of 2 locations a reward would appear. On a randomly scheduled fraction of the trials, it appeared with a short latency at one location; on the complementary fraction, it appeared after a longer latency at the other location. Subjects of both species accurately assessed the exogenous uncertainty (the probability of a short versus a long trial) and the endogenous uncertainty (from the scalar variability in their estimates of an elapsed duration) to compute the optimal target latency for a switch from the short- to the long-latency location. The optimal latency was arrived at so rapidly that there was no reliably discernible improvement over trials. Under these nonverbal conditions, humans and mice accurately assess risks and behave nearly optimally. That this capacity is well-developed in the mouse opens up the possibility of a genetic approach to the neurobiological mechanisms underlying risk assessment.

Keywords: genetics, human, interval timing, optimality, statistical decision theory

Humans and other animals make decisions in the face of uncertainties arising from both exogenous and endogenous stochastic processes. The uncertainty about the outcome of a coin toss is exogenous, whereas the uncertainty in your estimate of the time elapsed since you started reading this paragraph is endogenous. Historically, most of the research conducted in the area of decision-making in the face of risk has focused on the nonnormative phenomena repeatedly demonstrated in decision making that relies on verbally or numerically specified exogenous probabilities (1, 2).

A recent, very different approach to this problem tests subjects' ability to choose the optimal aiming point in a rapid target-touching task in which endogenous motor variability creates risks of either missing the positive pay-off region or straying into a negative pay-off region (3). From the perspective of normative statistical decision theory, choosing an optimal aiming point is as complex as, or more complex than, identifying the optimal choice in the verbal tasks. It requires weighing both pay-off magnitudes and probabilities, including very small probabilities in the tails of the distribution of landing points around a target point. Surprisingly, under most conditions, subjects rapidly identify a nearly optimal target point (4, 5). They also optimize the timing of their motor end-points (6, 7).

Inspired by this work, we adapted a simple timing task that requires subjects to judge when it is time to switch from betting on an option with a short-latency payoff to betting on an option with a longer-latency payoff. The subjects were adult humans and a common strain of laboratory mouse (C57BL/6). The task was essentially the same for both species: over many repeated trials, subjects had to anticipate the appearance of a reward at 1 of 2 locations. On some fraction of the trials, the reward appeared at one location after a fixed short latency; on the complementary fraction, it appeared at the other location after a fixed longer latency. The trials were randomly intermixed, so subjects did not know at the outset whether a trial was short or long. They began each trial by waiting at the short-latency location. If and when they judged that the short latency had passed without a payoff, they switched to the long-latency location. If they switched too soon on a short trial or too late on a long trial, they lost the reward (or, under some conditions with human subjects, they were assessed penalty points).

The probability of a given outcome depended jointly on the probability of a short versus a long trial and on the probability of the subject's switching too soon or too late. Our data are the switch latencies on the long trials, that is, the trials on which switching paid off. Subjects' switch latencies varied from trial to trial because of the well-established scalar variability (Weber's law characteristic) in estimates of elapsed duration, which is seen in both human and nonhuman subjects (8–11); repeated estimates of the same duration are accurate only to within roughly ±15% (12). The fraction of short trials, which varied between blocks of many hundred trials each, was the parameter of the exogenous stochastic process. The variability in a subject's estimate of the time elapsed in a trial and the subject's choice of a target latency for switching were the parameters of the Gaussian endogenous process. From the objective probability of a short trial and an estimate of the standard deviation of a subject's estimates of elapsed duration, it is possible to compute the optimal target time for a switch, that is, the optimal value of the mean switch latency. In our data analysis, we asked how closely our estimate of the optimal switch time, T̂o, corresponded to our estimate of the mean of the distribution of a subject's switch latencies, T̂.
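The scalar property described above can be illustrated with a short simulation. This is a minimal sketch, not the authors' analysis code: it assumes Gaussian switch latencies whose SD is w·T, with w = 0.15 chosen to match the roughly ±15% precision cited above, and the function names are hypothetical. Because the SD scales with the target, the coefficient of variation (SD/mean) stays near w whatever the target.

```python
import random
from statistics import mean, stdev


def simulate_switch_latencies(target, weber, n, seed=0):
    """Draw n switch latencies from a Gaussian with mean `target` and SD weber * target."""
    rng = random.Random(seed)
    return [rng.gauss(target, weber * target) for _ in range(n)]


def estimate_weber_fraction(latencies):
    """Estimate w as the coefficient of variation (sample SD / sample mean)."""
    return stdev(latencies) / mean(latencies)


# Usage: the estimated Weber fraction is about the same for a 2.5-s and a 5-s target,
# even though the SD of the 5-s latencies is about twice as large.
short_lat = simulate_switch_latencies(target=2.5, weber=0.15, n=20000, seed=1)
long_lat = simulate_switch_latencies(target=5.0, weber=0.15, n=20000, seed=2)
print(estimate_weber_fraction(short_lat), estimate_weber_fraction(long_lat))
```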

Results

Fig. 1 A and B plot T̂o, our estimate of the optimal mean switch latency, against T̂, our estimate of the mean of the distribution from which the observed switch latencies were drawn, for the human and mouse subjects, respectively. For each subject, we computed the mutual regression between the 2 estimates across conditions. For human subjects, the average slope was 0.47, which was significantly >0 [t(6) = 3.12, P < 0.05] and <1 [t(6) = 3.53, P < 0.05]. For mouse subjects, the average slope was 1.31, which was significantly >0 [t(11) = 6.15, P < 0.0001] and not significantly different from 1 (P = 0.18). Fig. 1C plots group averages of T̂o and T̂ as functions of the probability of a short trial in the mouse data.
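The slope tests above can be sketched as follows. This sketch substitutes ordinary least squares for the paper's "mutual regression" (whose exact estimator is not specified here) and computes a one-sample t statistic on per-subject slopes, first against 0 and then against 1; the function names and the toy inputs are hypothetical, and the P values would come from a t distribution with n − 1 degrees of freedom.

```python
import math
from statistics import mean, stdev


def ols_slope(x, y):
    """Ordinary least-squares slope of y on x (stand-in for the paper's mutual regression)."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    return sxy / sxx


def one_sample_t(values, mu0):
    """Return (t, df) for H0: the population mean of `values` equals mu0."""
    n = len(values)
    t = (mean(values) - mu0) / (stdev(values) / math.sqrt(n))
    return t, n - 1


# Usage with made-up numbers: one slope per subject, then test the slopes against 0 and 1.
toy_slopes = [ols_slope([2.2, 2.4, 2.6], [2.3, 2.4, 2.5]),
              ols_slope([2.2, 2.4, 2.6], [2.2, 2.5, 2.6])]
print(one_sample_t(toy_slopes, 0.0), one_sample_t(toy_slopes, 1.0))
```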

Fig. 1.

Performance of human and mouse subjects. (A and B) T̂o versus T̂ for human and mouse subjects, respectively, with different symbols for different subjects. (C) T̂ and T̂o as functions of the probability of a short trial in mouse subjects (means ± 1 SE). (D) The proportion of experimental conditions (sessions in humans or experimental phases in mice) in which performance improved significantly (white bar) or worsened significantly (black bar) between the first and last quartiles or the first and last deciles in the sequence of trials within a given condition.

Fig. 1 A–C show that the observed mean switch latencies closely tracked our estimates of the optimal target latencies. The average absolute temporal distance between the two, |T̂ − T̂o|, was 172 ms (SE 33 ms) in the human subjects, which was 5% of the 3-s range of possible values that T̂ could take. In the mouse subjects, the average absolute distance was 436 ms (SE 60 ms), which was 7% of the 6-s range of possible values that T̂ could take.

These absolute temporal differences are not meaningful until we take into account the statistical uncertainties surrounding the estimates from which they derive. T̂, the mean of a sample of switch latencies, is only an estimate of the mean of the population from which the sample was drawn. Similarly, our values for T̂o depend on the within-sample variability, from which an estimate of the population SD derives. To take into account the statistical uncertainty about the true values of the population parameters, T and σ, we plotted for each subject in each condition the likelihood function and the relative expected-gain function on the plane defined by the possible values of T and σ (Fig. 2).

Fig. 2.

Representative plots of level curves of the likelihood function (green ovals), the optimal mean switch latency (red dots), and relative gain contours (black curves) under varying conditions. p(S), probability of a short trial; gain and loss (humans only), the points won (gain) or lost for each of the 4 possible outcomes of a trial.

Assuming an uninformative prior, the likelihood function tells us how likely it is that any given parameter pair, 〈Ti, σi〉, is the pair of true values for the mean and variability of the subject's underlying switch-latency distribution, given the data in our sample from that distribution. The source distributions were assumed to be normal, because most of the datasets were better fit by normal distributions than by lognormal or Weibull distributions.
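The likelihood surface over the (T, σ) plane can be sketched as a grid evaluation of the normal log-likelihood, rescaled so that its maximum is 1 (the relative likelihood). This is a minimal illustration under the stated assumptions (normal source distribution, flat prior); the grid, the function names, the toy latencies, and the helper for the e⁻ᵏ contours are hypothetical, not the authors' code.

```python
import math


def normal_loglik(data, mu, sigma):
    """Log-likelihood of the sample under N(mu, sigma^2)."""
    n = len(data)
    ss = sum((x - mu) ** 2 for x in data)
    return -n * math.log(sigma * math.sqrt(2.0 * math.pi)) - ss / (2.0 * sigma ** 2)


def relative_likelihood_grid(data, mus, sigmas):
    """Map each (mu, sigma) grid point to L(mu, sigma) / max L over the grid (flat prior)."""
    loglik = {(m, s): normal_loglik(data, m, s) for m in mus for s in sigmas}
    peak = max(loglik.values())
    return {key: math.exp(v - peak) for key, v in loglik.items()}


def inside_contour(rel_lik, k):
    """True if a point lies inside the e**-k relative-likelihood contour (k = 1, 2, ...)."""
    return rel_lik >= math.exp(-k)


# Usage with toy switch latencies (s); the grid mode approximates <T-hat, sigma-hat>.
latencies = [2.31, 2.58, 2.44, 2.70, 2.39, 2.52]
mus = [2.0 + 0.01 * i for i in range(101)]
sigmas = [0.02 + 0.01 * j for j in range(49)]
rel = relative_likelihood_grid(latencies, mus, sigmas)
mode = max(rel, key=rel.get)
print(mode, sum(inside_contour(v, 1) for v in rel.values()))
```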

The expected gain at a point in the parameter plane is the expected gain for a decision maker with known variability σi in his or her representation of elapsed time who uses decision criterion Ti. The “relative” expected gain is this expected gain divided by the expected gain for a decision maker with the same temporal uncertainty (σi) who uses the “optimal” decision criterion (target switch time).
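The relative expected gain at one point 〈Ti, σi〉 can be sketched as follows. Here σ is held fixed (not scaled with T), the optimal criterion for that σ is found by a grid search over T, and the four outcome payoffs are passed as a dictionary with hypothetical keys. The ratio is undefined when the maximum expected gain is ≤0, so the sketch refuses to compute it in that case.

```python
import math


def norm_cdf(x, mu, sigma):
    """Cumulative Gaussian Phi(x; mu, sigma)."""
    return 0.5 * (1.0 + math.erf((x - mu) / (sigma * math.sqrt(2.0))))


def expected_gain_fixed_sigma(T, sigma, p_short, S, L, g):
    """Expected gain for criterion T when the timing SD is a fixed sigma.

    g uses hypothetical keys: 'early_S' = g(s<S), 'ontime_S' = g(s>S),
    'intime_L' = g(s<L), 'late_L' = g(s>L).
    """
    p_early = norm_cdf(S, T, sigma)
    p_intime = norm_cdf(L, T, sigma)
    short_term = g["early_S"] * p_early + g["ontime_S"] * (1.0 - p_early)
    long_term = g["intime_L"] * p_intime + g["late_L"] * (1.0 - p_intime)
    return p_short * short_term + (1.0 - p_short) * long_term


def relative_gain(T, sigma, p_short, S, L, g, pad=1.0, step=0.005):
    """EG(T, sigma) divided by the best EG over a grid of criteria for the same sigma."""
    grid = [k * step for k in range(1, int(round((L + pad) / step)) + 1)]
    best = max(expected_gain_fixed_sigma(t, sigma, p_short, S, L, g) for t in grid)
    if best <= 0:
        raise ValueError("maximum expected gain <= 0: relative gain is undefined")
    return expected_gain_fixed_sigma(T, sigma, p_short, S, L, g) / best


# Usage: is a toy estimate <T-hat, sigma-hat> = <2.45 s, 0.25 s> inside the 0.99 contour?
g01 = {"early_S": 0, "ontime_S": 1, "intime_L": 1, "late_L": 0}
print(relative_gain(2.45, 0.25, 0.5, 2.0, 3.0, g01) >= 0.99)
```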

With both the relative likelihood function for T and σ and the relative expected-gain function mapped onto the common parameter plane, the subject's optimality may be measured either by the relative likelihood of the closest point on the ridge of the gain function (the subject's statistical distance from the optimal point) or by the relative expected gain at 〈T̂, σ̂〉, the mode of the likelihood function, which is marked by a “+” in the innermost ovals in Fig. 2. This mode is the best (maximum-likelihood) estimate of the population parameters.

The second measure, the estimated reduction in expected gain that the subject incurs by using a nonoptimal target latency, is the more appropriate one from the subject's perspective. A subject whose expected gain is 99% of the gain to be expected from an optimal choice of target latency would rationally be indifferent to the possibility that his or her target latency was “very significantly” different from the optimal target latency.

Fig. 2 shows level curves (equal-likelihood contours) of the likelihood function, together with contours of the relative expected-gain function, for 2 different conditions (one with a human subject and one with a mouse subject). Fig. 2 also shows the locus of the optimal decision criterion (mean switch latency) as a function of the variability in the switch latencies. The relative-likelihood contours are at successive natural-log units (e⁻¹, e⁻², etc.). If the innermost relative-likelihood contour encompasses a red dot (a point on the ridge of the expected-gain function), then the statistical distance from optimality is negligible. Conversely, if the outermost relative-likelihood contour does not encompass a red dot, then the difference between the subject's estimated decision criterion and the optimal criterion is, from a purely statistical perspective, highly significant. Whether this statistical difference is of any practical consequence depends on the relative expected gain at the “+” in the center of the innermost relative-likelihood contour. When the “+” is inside the 0.99 relative-gain contour, the best estimate of the subject's expected gain is >99% of the maximum possible expected gain for a subject with that degree of variability in switch latencies (hereafter, the relative score). In such cases, the statistical distance from the optimal, whether large or small, is of no consequence.

The median relative score was 0.98 (interquartile interval: 0.02) for human subjects, excluding conditions in which the maximum possible expected gain was ≤0, and 0.99 (interquartile interval: 0.01) for mouse subjects. Thus, we conclude that both humans and mice can compute a temporal decision criterion, a target switch time, that is close enough to the optimal one to very nearly maximize their expected gain.

Subjects did not appear to converge on a nearly optimal decision criterion through trial-and-error hill-climbing processes like those proposed by operant psychologists (13, 14) and advocated within the reinforcement-learning tradition in computer science (15). If they had, we should consistently have observed that |T̂o − T̂|, the difference between our estimate of the optimal criterion and our estimate of the subject's decision criterion, grew smaller as the number of trials under a given condition increased. However, in the great majority of cases, there was no discernible improvement in the location of a subject's target switch latency over trials (Fig. 1D).
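One simple way to check for the gradual improvement that hill-climbing predicts is to compare |T̂ − T̂o| in the first and last quartiles of the trials within a condition and to ask, with a permutation test, whether the improvement exceeds what shuffling the trial order produces. This is a hypothetical sketch with illustrative names; the test actually used for Fig. 1D is not specified in this section and may differ.

```python
import random


def quartile_errors(latencies, t_opt):
    """|mean - t_opt| for the first and last quartiles of chronologically ordered latencies."""
    q = len(latencies) // 4
    if q == 0:
        raise ValueError("need at least 4 trials")
    first = abs(sum(latencies[:q]) / q - t_opt)
    last = abs(sum(latencies[-q:]) / q - t_opt)
    return first, last


def improvement_p_value(latencies, t_opt, n_perm=2000, seed=0):
    """One-sided permutation P value for 'the last quartile is closer to t_opt than the first'."""
    first, last = quartile_errors(latencies, t_opt)
    observed = first - last
    rng = random.Random(seed)
    shuffled = list(latencies)
    hits = 0
    for _ in range(n_perm):
        rng.shuffle(shuffled)  # destroys any trend over trials
        f, l = quartile_errors(shuffled, t_opt)
        if f - l >= observed:
            hits += 1
    return (hits + 1) / (n_perm + 1)


# Usage with toy latencies that drift toward a hypothetical optimum of 2.5 s.
toy = [3.0] * 25 + [2.6] * 50 + [2.5] * 25
print(quartile_errors(toy, 2.5), improvement_p_value(toy, 2.5))
```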

Discussion

In this simple timing task, which we believe captures the essence of temporal decision making that confronts human and nonhuman animal subjects in everyday life, both humans and mice are nearly optimal decision makers in the face of uncertainties of both external and internal origin. This nearly optimal performance would seem to imply that they rapidly form an accurate representation of both their endogenous uncertainty (how good they are) (cf. 16) and the exogenous uncertainty (the objective risk) and that they combine these uncertainty estimates in a normative way. The nearly optimal performance of humans in our task contrasts with the traditional view that humans are nonnormative decision-makers under probabilistic conditions.

Given our results, it can be argued that the nonrational decisions made under probabilistic conditions in traditional decision-making tasks derive in substantial measure from the form in which the probabilistic information is presented to the subjects (see also refs. 17–19). Specifically, in traditional paper-and-pencil decision-making tasks, subjects are given the exogenous probabilistic information in one-shot verbal descriptions and asked to combine it with other probabilistic information and/or magnitudes. By contrast, in our task, where subjects performed near optimally, they experience both the exogenous and the endogenous uncertainty, and they must combine these representations of uncertainty and magnitudes to arrive at an appropriate duration target.

We conclude that decision-making processes may take normative account of probabilistic input only when the probabilities are derived from direct experience of the generative stochastic process, and that language-derived representations of uncertainty may not be able to access these processes. Alternatively, the linguistic interface may lead to systematic distortions of the specified uncertainties (e.g., overestimation of low probabilities).

In our experiments, the output of the decision-making process in both humans and mice was instantiated by temporally controlled responses. Given the ubiquity of timing as a cognitive domain (from fish to humans), using a temporal discrimination paradigm as the context for decision-making under uncertainty gives our decision-making task a translational character. The translational value of this cognitive domain is increasingly appreciated in preclinical research (20, 21).

The similarity of the results from human and mouse subjects in this complex temporal decision-making task provides the behavioral tools needed to study the genetic and neurobiological underpinnings of risk assessment in animal models. Risk is not an exotic aspect of a uniquely human life; risk is inherent in life at every level of complexity. Thus, it is not far-fetched to suppose that mechanisms for near-optimal risk assessment in many everyday contexts evolved long ago (cf. 19). An obvious question is whether those mechanisms can be tapped to mediate the many human assessments of risk that depend on verbally or symbolically transmitted information about probabilities.

Methods

Subjects.

Human.

The subjects were undergraduate students, graduate students, and postdoctoral volunteers at Rutgers University. There were 7 subjects, 4 males and 3 females, aged 21 to 34 years. They gave informed consent and were paid for their participation.

Mice.

Twelve naïve C57BL/6N female mice (Harlan, Indianapolis, IN) were used in this experiment. They were 8 weeks old on arrival. The mice were housed individually in polypropylene cages. The cabinets that held the cages were lit on a 12:12 light/dark cycle (lights on at 8:00 PM). The experiments were run during the dark cycle. The mice were maintained at 85% of their free-feeding weight by being fed lab chow after each session. During the sessions, they were fed 20-mg Noyes food pellets (PJAI-0020) as a reinforcer. Water was available ad libitum in the home cages and experimental chambers. They were treated in accordance with the Guide for the Care and Use of Laboratory Animals (22).

Apparatus.

Human.

The temporal parameters, the presentation of the stimulus, and the recording of the responses were controlled by a Macintosh computer running OS X 10.3.9. The controlling program was written in MATLAB 7.04 using the Psychophysics Toolbox extensions (23).

Mice.

Six operant chambers (Med Associates, ENV 307-W: 21.6 cm × 17.8 cm × 12.7 cm) inside ventilated, sound-attenuated boxes (Med Associates, ENV-018-M: 55.9 cm × 55.9 cm × 35.6 cm) were used in this experiment. Two opposing sidewalls were made of metal; the other 2 walls and the ceiling were made of clear Plexiglas. Each chamber had a grid floor with 25 evenly spaced metal rungs. Two pellet dispensers (ENV-203-20) delivered food to 2 cubic hoppers (Med Associates ENV-203-20, 24 mm on each side) spaced 5 inches (12.7 cm) apart on one wall. On the opposite wall, another cubic hopper was used to initiate trials. A water bottle also protruded into the chamber along that wall. The hoppers were illuminable and equipped with an infrared (IR) beam that detected nose pokes. A white-noise generator delivered an 80-dB, flat 10–25,000-Hz white noise for programmable durations. The experiment was controlled by software (Med-PC IV, Med Associates) that logged and time-stamped the events: the onsets and offsets of interruptions of the IR beams in the hoppers, the onsets and offsets of the white noise, and the delivery of food pellets. Event times were recorded with a resolution of 20 ms.

Procedure

General Procedure.

There were 2 types of trials. On short trials, a signal (a visual stimulus for humans and visual/auditory stimuli for mice) appeared and stayed on for a short latency. The subjects were rewarded if they made the response associated with the short latency at (or, for mice, after) the termination of the signal. On long trials, the same signal appeared but stayed on for a long latency, and the subjects were rewarded if they made the response associated with the long latency at (or, for mice, after) the termination of the signal. The response for the long latency was at a different location from the response for the short latency. All subjects started responding at the short-latency location and switched to the long-latency location if and when they judged that the trial was a long trial. If the subject emitted the long-latency response at (or, for mice, after) the termination of the signal on a short trial (i.e., an early switch) or the short-latency response at (or, for mice, after) the termination of the signal on a long trial (i.e., a late switch), the trial was missed. Two parameters were varied in the expectation that they would affect the mean switch latency: (i) the probability of a short trial and (ii) the payoff matrix, that is, the rewards for catching short- or long-latency targets and the penalties for switching early on short-latency trials and late on long-latency trials. The payoff matrix was varied only with human subjects.

Humans.

The short latency was 2 s, and the long latency was 3 s. A cross (+) appeared in the middle of the computer screen to start a trial. Pressing the “V” key caused an open red square to appear to the left of the +; pressing the “B” key caused it to appear to the right. The open square disappeared (and so could no longer catch the target) when the key was released. Thus, the subject was required to keep a key pressed at all times during the trial. Whether the trial was short or long, if the open square was in the correct location at the time of signal termination, points were added to the subject's score. There were 2 types of errors: a premature switch (before the short trial interval had elapsed) and a late switch (after the long trial interval had elapsed). Points were deducted for either error. At the short or long latency, a target appeared on the screen in the location associated with that latency. If the subject caught the target, the target was green; if the subject missed it, the target was red. Because of this feedback, the subjects re-experienced the short and long durations on every trial. Each subject participated in at most 20 sessions, and each session had 3 phases.
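The scoring rule for a single human trial can be summarized as a small function. This is a sketch: `switch_time` is the moment the subject moved from the short-latency to the long-latency key (`math.inf` if it never switched), the default payoffs of ±10 points are hypothetical values inside the −50 to 50 range used in testing, and the outcome names are illustrative.

```python
import math

# Hypothetical default payoff matrix (points); the experiment varied payoffs between sessions.
DEFAULT_PAYOFF = {"short_hit": 10, "early_switch": -10, "long_hit": 10, "late_switch": -10}


def score_trial(is_short, switch_time, short_latency=2.0, long_latency=3.0, payoff=None):
    """Return (outcome, points) for one trial.

    The outcome is judged at signal termination: on a short trial the subject must still be at
    the short location at short_latency; on a long trial it must already be at the long
    location at long_latency.
    """
    payoff = DEFAULT_PAYOFF if payoff is None else payoff
    if is_short:
        outcome = "early_switch" if switch_time < short_latency else "short_hit"
    else:
        outcome = "long_hit" if switch_time < long_latency else "late_switch"
    return outcome, payoff[outcome]


# Usage: a switch at 2.4 s wins on both trial types; never switching loses a long trial.
print(score_trial(True, 2.4), score_trial(False, 2.4))
print(score_trial(False, math.inf))
```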

Phase 1: Training.

The + appeared in the middle of the screen and stayed on for either the short or the long latency. On the first 2 presentations, the subjects were told the correct answer (the screen displayed either “Short” or “Long”). For the next 10 trials, the subjects had to classify the duration of the + by pressing either “S” or “L” after its termination, and they were given feedback after each trial. This phase ensured that the subjects learned (or re-experienced) the reference durations before the testing trials.

Phase 2: Practice.

Subjects performed the task as described above, but no points were awarded or deducted. The probability of a short trial [p(S)] in this practice phase matched the probability in the testing phase. In this phase, the subjects were expected to form an estimate of the variability in their representation of elapsed time, in the unlikely event that they did not bring such an estimate with them to the experiment.

Phase 3: Testing.

Testing consisted of 500 trials. Subjects were allowed to take a break after every 10 trials and were prompted to take a break after every 100 trials. The testing phase was identical to the practice phase except that its goal was to maximize the subject's score. The score was updated after every trial and shown to the subject according to his or her assigned type-of-feedback condition. In the first condition, the score was shown as a numeral, and the target that appeared at the end of a trial, on the side associated with that trial type, was a filled square. In the other condition, the target was a set of filled circles whose number represented how many points had been gained or lost, and the updated cumulative score was shown as the length of a line running across the screen. The line got longer after the subject was rewarded and shorter after the subject was penalized; it was green if the cumulative score was above zero and red otherwise. Payoffs (rewards and penalties) ranged from −50 to 50 points. The probability of a short trial was also manipulated. Both manipulations were made only between sessions. Type of feedback had no effect.

Mice.

This procedure is an adaptation of the switch paradigm described by Balci et al. (24). Illumination of the control hopper (opposite the 2 feeding hoppers) signaled that a trial could be initiated. A mouse initiated a trial by poking into this illuminated control hopper. This response requirement ensured that the mouse was in a fixed location at the start of a trial. The trial-initiating poke turned off the light in the control hopper, turned on the lights in both feeding hoppers, and turned on a white noise. The noise went off at the end of the fixed interval for that trial (either 3 s or 6 s). The hopper lights turned off after a nose poke into either feeding hopper at or after the termination of the noise. A reward was delivered only if the first nose poke at or after the termination of the noise was in the appropriate hopper. After each trial, there was a 30-s fixed delay plus a variable interval with a mean of 60 s (drawn from an exponential distribution) before the next trial could be initiated. Although, in theory, mice could have waited for the termination of the temporal signal before emitting the short- or long-latency response, this did not occur during the experiment.
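The mouse trial schedule can be sketched as a generator of signal durations and intertrial intervals. Two assumptions are made here that the text does not state: trial types are drawn independently with probability p(S), and the 60-s variable interval is the mean of the exponential component. The function name and defaults are hypothetical.

```python
import random


def mouse_trial_schedule(n_trials, p_short, short_s=3.0, long_s=6.0,
                         fixed_delay=30.0, mean_vi=60.0, seed=0):
    """Return a list of (signal_duration_s, intertrial_interval_s) pairs.

    Assumptions: trial types are independent Bernoulli draws with probability p_short, and
    the variable interval is exponential with mean `mean_vi`.
    """
    rng = random.Random(seed)
    schedule = []
    for _ in range(n_trials):
        duration = short_s if rng.random() < p_short else long_s
        iti = fixed_delay + rng.expovariate(1.0 / mean_vi)  # fixed delay + exponential VI
        schedule.append((duration, iti))
    return schedule


# Usage: the first few trials of a schedule with p(S) = 0.25, as for Group 1 in phase 6.
for duration, iti in mouse_trial_schedule(100, 0.25, seed=7)[:5]:
    print(f"signal {duration:.0f} s, next trial available after {iti:.1f} s")
```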

The feeding hopper associated with the short duration was counterbalanced across mice. Phases 1–4 served as training. Phases 5–7 served as the testing phases and were the phases used in the analysis. Each phase continued until the performance of the mouse stabilized. Unlike the human experiment, the mouse experiment did not manipulate the payoff matrix; only the probability of a short trial was manipulated across phases. Only the last 10 sessions of each phase were included in the analysis.

Phase 1.

Three days before the experiment, the mice were food-deprived to 85% of their free-feeding weight. In addition, on each of these 3 days the mice were placed in the experimental chambers for 30 min, and five 20-mg Noyes food pellets (PJAI-0020) were left in their home cages to familiarize them with the pellets used in the experiment.

Phases 2–4.

We have found that mice do not readily discriminate a 1:2 duration ratio when it is used for the initial training set. Thus, we started training with a 1:3 duration ratio, where the short duration was 3 s and the long duration was 9 s. After training with this pair of temporal intervals, and after several parameter manipulations (i.e., changing the probability of a given trial's occurrence), the trial duration ratio was reduced to 1:2, using a 3-s short trial and a 6-s long trial. These values were used throughout the rest of the experiment.

Phase 5.

In phase 5, the probability of a given trial being short was 0.5. This phase was run for 22 daily sessions.

Phase 6.

In phase 6, the probability of a short trial was 0.25 for half the mice (Group 1) and 0.75 for the other half (Group 2). This phase was run for 20 daily sessions.

Phase 7.

In phase 7, the probability of a short trial for Group 1 was 0.9, whereas for Group 2 the probability was 0.1. This phase was run for 22 daily sessions.

Optimal Decision-Making Model.

The data were compared to a model in which subjects switch from the short location to the long location at the time that maximizes their expected gain. The expected gain function is

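$$\mathrm{EG}(T) = p(S)\left[g(s<S)\,\Phi(S;T,wT) + g(s>S)\left(1-\Phi(S;T,wT)\right)\right] + \left[1-p(S)\right]\left[g(s<L)\,\Phi(L;T,wT) + g(s>L)\left(1-\Phi(L;T,wT)\right)\right]$$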

where s is the switch time (the latency of the subject's departure from the short location); T is the subject's target (intended) departure time, that is, the decision criterion; w is the subject's Weber fraction (the constant of proportionality relating the variability in switch latencies to the mean switch latency); p(S) is the probability of a short trial, and 1 − p(S) is the complementary probability of a long trial; S is the short reward latency and L the long reward latency; g(s < S) is the loss from a premature departure on a short trial; g(s > S) is the gain from staying at the short-latency location until t > S on a short trial; g(s < L) is the gain from departing while t < L on a long trial; and g(s > L) is the loss from departing too late on a long trial. The probability of a premature departure is p(s < S) = Φ(S; T, wT), the value at S of the cumulative Gaussian with mean T and standard deviation wT. Its complement, p(s > S) = 1 − Φ(S; T, wT), is the probability of an on-time departure. Similarly, on a long trial, the probability of an in-time departure is p(s < L) = Φ(L; T, wT), and its complement, p(s > L), is the probability of a too-late departure.
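The expected-gain function can be written directly in code. This sketch implements it term by term from the definitions above, using the standard-library error function for Φ; the argument names are hypothetical. Because the outcome probabilities sum to 1 within each trial type, setting every gain to 1 yields an expected gain of 1, and replacing the gains (0, 1, 1, 0) by (−1, 1, 1, −1) maps EG to 2·EG − 1, an increasing linear transformation that leaves the location of the optimal T unchanged.

```python
import math


def phi(x, mu, sd):
    """Cumulative Gaussian Phi(x; mu, sd), computed with the error function."""
    return 0.5 * (1.0 + math.erf((x - mu) / (sd * math.sqrt(2.0))))


def expected_gain(T, w, p_short, S, L, g_early, g_ontime, g_intime, g_late):
    """Expected gain for target switch time T with scalar timing noise (SD = w * T).

    g_early  = g(s < S): premature departure on a short trial
    g_ontime = g(s > S): staying past S on a short trial
    g_intime = g(s < L): departing before L on a long trial
    g_late   = g(s > L): departing too late on a long trial
    """
    if T <= 0 or w <= 0:
        raise ValueError("T and w must be positive")
    sd = w * T
    p_premature = phi(S, T, sd)  # p(s < S)
    p_in_time = phi(L, T, sd)    # p(s < L)
    short_term = g_early * p_premature + g_ontime * (1.0 - p_premature)
    long_term = g_intime * p_in_time + g_late * (1.0 - p_in_time)
    return p_short * short_term + (1.0 - p_short) * long_term


# Usage: human durations (S = 2 s, L = 3 s), p(S) = 0.5, w = 0.15, +/-1 payoffs.
print(expected_gain(2.45, 0.15, 0.5, 2.0, 3.0, -1, 1, 1, -1))
```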

Fig. 3 illustrates the dependence of the probabilities of the different possible outcomes on the (cumulative) distribution of the switch times.

Fig. 3.

The cumulative distribution of switch times, Φ(s; T̂, wT̂), determines the probabilities of premature departure, p(s < S); on-time departure, p(s > S); in-time departure, p(s < L); and too-late departure, p(s > L). In-time departures are rewarded; whether a premature or too-late departure is rewarded depends on the complementary probabilities of the trial being short or long.

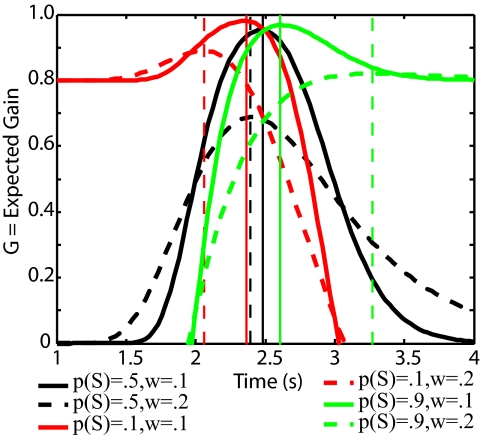

Using the expected-gain formula, we computed the expected gain for multiple values of T uniformly spaced between 0 and L + 1 s (L + 3 s for mice) in small increments (e.g., 0, 0.005, 0.01, …, 3.995, 4 s for humans). The T that yielded the maximum expected gain was defined as the optimal switch point, To. The optimal point thus depends on 9 quantities. Fig. 4 illustrates the output of this computation for different levels of exogenous uncertainty (the probabilities of short and long trials) and endogenous uncertainty (3 of the 9 critical quantities). The other 6 quantities are the short and long durations and the payoff matrix (4 signed magnitudes). Only one of these quantities, the endogenous uncertainty, was estimated from the empirical data.
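The grid search for To can be sketched as below. It evaluates the expected gain at T = step, 2·step, …, L + pad (pad = 1 s for the human durations, 3 s for the mouse durations), skipping T = 0 because the SD w·T vanishes there, and returns the best grid point. The function names and the ordering of the gains tuple are hypothetical. With ±1 payoffs, S = 2 s, L = 3 s, and w = 0.1, the optimum falls between S and L and moves later as p(S) increases.

```python
import math


def phi(x, mu, sd):
    """Cumulative Gaussian Phi(x; mu, sd)."""
    return 0.5 * (1.0 + math.erf((x - mu) / (sd * math.sqrt(2.0))))


def expected_gain(T, w, p_short, S, L, gains):
    """gains = (g(s<S), g(s>S), g(s<L), g(s>L)); the timing SD is w * T."""
    g_early, g_ontime, g_intime, g_late = gains
    p_premature, p_in_time = phi(S, T, w * T), phi(L, T, w * T)
    return (p_short * (g_early * p_premature + g_ontime * (1.0 - p_premature))
            + (1.0 - p_short) * (g_intime * p_in_time + g_late * (1.0 - p_in_time)))


def optimal_target(w, p_short, S, L, gains, pad=1.0, step=0.005):
    """Return the grid value of T in (0, L + pad] that maximizes the expected gain."""
    n_steps = int(round((L + pad) / step))
    grid = [k * step for k in range(1, n_steps + 1)]  # start above 0: SD = w*T is 0 at T = 0
    return max(grid, key=lambda T: expected_gain(T, w, p_short, S, L, gains))


# Usage: human durations, +/-1 payoffs, w = 0.1, three values of p(S).
pm1 = (-1, 1, 1, -1)
for p in (0.1, 0.5, 0.9):
    print(p, optimal_target(0.1, p, 2.0, 3.0, pm1))
```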

Fig. 4.

The effects of the relative probability of short and long trials versus the effects of different levels of timing variability (noise, uncertainty) on the expected-gain curve. Vertical lines show the modes (the locations of the optimal target times, To). The computations were made with g(s < S) = −1, g(s > S) = 1, g(s < L) = 1, and g(s > L) = −1. The locations of the optimal targets do not change if the negative gains are set to 0. The solid curves were computed with w = 0.1 (±10% temporal precision); the dashed curves were computed with w = 0.2 (±20% temporal precision). Colors indicate the relative probabilities of short and long trials: red, p(S) = 0.1 (long trials 9× more likely than short trials); black, p(S) = 0.5 (the two kinds of trials equally likely); green, p(S) = 0.9 (short trials 9× more likely than long trials).

Note that in Fig. 4 the effect of a 2-fold change in timing imprecision on the location of the optimal target (vertical lines) is greater than the effects of an order-of-magnitude change in the relative likelihoods of the 2 kinds of trials. Thus, the accurate representation of the variability in one's timing is critical to the determination of an optimal switch criterion. Detailed information about the data analysis can be found in the supporting information (SI) Text and Figs. S1 and S2.


Acknowledgments.

We thank Efstathios Papachristos and Daniel Gottlieb for many helpful discussions and Aaron Kheifets and Jacqueline Gibson for experimental assistance. This work was supported by National Institute of Mental Health Grant R21 MH63866 (to C.R.G.).

Footnotes

The authors declare no conflict of interest.

This article contains supporting information online at www.pnas.org/cgi/content/full/0812709106/DCSupplemental.

References

1. Kahneman D, Tversky A. Prospect theory: An analysis of decision under risk. Econometrica. 1979;47:263–291.

2. Tversky A, Fox CR. Weighing risk and uncertainty. Psychol Rev. 1995;102:269–283.

3. Trommershäuser J, Maloney LT, Landy MS. Decision making, movement planning, and statistical decision theory. Trends Cogn Sci. 2008;12:291–297. doi: 10.1016/j.tics.2008.04.010.

4. Trommershäuser J, Maloney LT, Landy MS. Statistical decision theory and trade-offs in the control of motor response. Spat Vis. 2003;16:255–275. doi: 10.1163/156856803322467527.

5. Trommershäuser J, Maloney LT, Landy MS. Statistical decision theory and the selection of rapid, goal-directed movements. J Opt Soc Am A. 2003;20:1419–1432. doi: 10.1364/josaa.20.001419.

6. Hudson TE, Maloney LT, Landy MS. Optimal movement timing with temporally asymmetric penalties and rewards. PLoS Comput Biol. 2008;4:e1000130. doi: 10.1371/journal.pcbi.1000130.

7. Dean M, Wu S-W, Maloney LT. Trading off speed and accuracy in rapid, goal-directed movements. J Vis. 2007;7:1–12. doi: 10.1167/7.5.10.

8. Fetterman JG. The psychophysics of remembered duration. Anim Learn Behav. 1995;23:49–62.

9. Gallistel CR, Gibbon J. Time, rate, and conditioning. Psychol Rev. 2000;107:289–344. doi: 10.1037/0033-295x.107.2.289.

10. Gibbon J, Allan L, editors. Timing and Time Perception. New York: New York Academy of Sciences; 1984.

11. Rakitin BC, et al. Scalar expectancy theory and peak-interval timing in humans. J Exp Psychol Anim Behav Process. 1998;24:15–33. doi: 10.1037//0097-7403.24.1.15.

12. Gallistel CR, King A, McDonald R. Sources of variability and systematic error in mouse timing behavior. J Exp Psychol Anim Behav Process. 2004;30:3–16. doi: 10.1037/0097-7403.30.1.3.

13. Staddon J. Rationality, melioration, and law-of-effect models for choice. Psychol Sci. 1992;3:136–141.

14. Vaughan WJ. Choice: A local analysis. J Exp Anal Behav. 1985;43:383–405. doi: 10.1901/jeab.1985.43-383.

15. Sutton RS, Barto AG. Reinforcement Learning: An Introduction. Cambridge, MA: MIT Press; 1998.

16. Kepecs A, Uchida N, Zariwala HA, Mainen ZF. Neural correlates, computation, and behavioural impact of decision confidence. Nature. 2008;455:227–231. doi: 10.1038/nature07200.

17. Maloney LT, Trommershäuser J, Landy MS. In: Gray W, editor. Integrated Models of Cognitive Systems. New York: Oxford Univ Press; 2006. pp. 297–315.

18. Barron G, Erev I. Small feedback-based decisions and their limited correspondence to description-based decisions. J Behav Decis Making. 2003;16:215–233.

19. Weber EU, Shafir S, Blais A-R. Predicting risk-sensitivity in humans and lower animals: Risk as variance or coefficient of variation. Psychol Rev. 2004;111:430–445. doi: 10.1037/0033-295X.111.2.430.

20. Balci F, et al. Pharmacological manipulations of interval timing on the peak procedure in mice. Psychopharmacology. 2008. doi: 10.1007/s00213-008-1248-y.

21. Balci F, Meck W, Moore H, Brunner D. In: Bizon JL, Woods AG, editors. Animal Models of Human Cognitive Aging. Totowa, NJ: Humana Press; 2009.

22. National Research Council. Guide for the Care and Use of Laboratory Animals. Washington, DC: National Academy Press; 1996.

23. Brainard DH. The psychophysics toolbox. Spat Vis. 1997;10:433–436.

24. Balci F, et al. Interval-timing in the genetically modified mouse: A simple paradigm. Genes Brain Behav. 2008;7:373–384. doi: 10.1111/j.1601-183X.2007.00348.x.
