Abstract

The Adaptive Visual Analog Scales is a freely available computer software package designed to be a flexible tool for the creation, administration, and automated scoring of both continuous and discrete visual analog scale formats. The continuous format is a series of individual items that are rated along a solid line and scored as a percentage of distance from one of the two anchors of the rating line. The discrete format is a series of individual items that use a specific number of ordinal choices for rating each item. This software offers separate options for the creation and use of standardized instructions, practice sessions, and rating administration, all of which can be customized by the investigator. A unique participant/patient ID is used to store scores for each item and individual data from each administration is automatically appended to that scale’s data storage file. This software provides flexible, time-saving access for data management and/or importing data into statistical packages. This tool can be adapted to gather ratings for a wide range of clinical and research uses and is freely available at www.nrlc-group.net.

The visual analog scale (VAS) is a common method for rapidly gathering quantifiable subjective ratings in both research and clinical settings. There are a number of different versions of this type of scale that fall into two general categories. One is a continuous scale where items are rated by placing a single mark on a straight line. The other is a discrete visual analog scale (DVAS) which is an ordered-categorical scale where the rater makes a single choice from a set of graduated ratings. Both types of scales are anchored at each end by labels that typically describe the extremes of a characteristic being rated. The anchors are usually descriptors of attitudes or feelings and may be bipolar scales, representing exact opposites (e.g., cold – hot), or unipolar scales (e.g., never – more than 5 per day), representing concepts without exact opposites (Nyren, 1988). These scales require little effort/motivation from the rater (resulting in high rates of compliance), allow careful discrimination of values (Ahearn, 1997), and are a rapid means of gathering ratings from both adults (e.g., Flynn, van Schaik, & van Wersch, 2004) and children (e.g., van Laerhoven, van der Zaag-Loonen, & Derkx, 2004).

A PubMed (June 25, 2008) search of the term “visual analog scale” returned 5,594 articles, which indicates just how commonly these scales are applied in research and medical settings. Different visual analog scales have been used to examine effects across multiple disciplines including, but not limited to, the presence of symptoms of side effects following pharmacological manipulations (Cleare & Bond, 1995), health functional status (Casey, Tarride, Keresteci, & Torrance, 2006), self-perception of performance (Dougherty, Dew, Mathias, Marsh, Addicott, & Barratt, 2007), mood (Bond & Lader, 1974; Steiner & Steiner, 2005), physician rapport (Millis et al., 2001), and ratings of pain (Choiniere, Auger, & Latarjet, 1994; Gallagher, Bijur, Latimer, & Silver, 2002; Ohnhaus & Adler, 1975).

The continuous version of the VAS typically uses a line that is 100 mm long and positioned horizontally, although occasionally a vertical orientation may be used (for reviews see Ahearn, 1997; Wewers & Lowe, 1990). This method of rating is thought to provide greater sensitivity for reliable measurement of subjective phenomena, such as various qualities of pain or mood (Pfennings, Cohen, & van der Ploeg, 1995). This method may be preferred by raters when they perceive their response as falling between the categories of a graduated scale (Aitken, 1969; Holmes & Dickerson, 1987), because it allows more freedom to express a uniquely subjective experience compared to choosing from a set of restricted categories (Aitken, 1969; Brunier & Graydon, 1996).

An alternative to the continuous version of the VAS rating scale is the categorical DVAS, which uses an ordinal (often treated as interval) forced-choice method (e.g., Likert or Guttman scales). The Likert (Likert, 1972) and Likert-type scales are probably the two most popular and well-known versions of the DVAS (for summary of scale differences, see Uebersax, 2006). The Likert scale was originally developed to quantify social attitudes using very specific rules: it is anchored using bipolar and symmetrical extremes, and is individually numbered on a five-point scale that includes a neutral middle choice (e.g., 1 = Strongly Agree, 2 = Agree, 3 = Neither Agree nor Disagree, 4 = Disagree, 5 = Strongly Disagree). These choices are presented horizontally in evenly spaced gradations. A subtle adaptation of the Likert scale is the Likert-type scale in which the anchors are unipolar, without direct opposites (e.g., Never – Most of the time), and can include an even number of choices (e.g., Never, Sometimes, Most of the time, Always) to require the individual to choose a specific rating rather than a neutral or exact middle choice.

The VAS (i.e., continuous scale) was first introduced in 1921 by two employees of the Scott Paper Company (Hayes & Patterson, 1921). They developed the scale as a method for supervisors to rate their workers. This permitted the supervisors a means to rate performance using quantitatively descriptive terms on a standardized scale. Two years later, Freyd (1923) published guidelines for the construction of these scales, which included the use of a line that was no longer than 5 inches (i.e., 127 mm), with no breaks or divisions in the line, and the use of anchor words to represent the extremes of the trait being measured. He also suggested that the scales should occasionally vary the direction of the favorable extremes, to prevent a tendency for placing marks along one margin of the page. This type of scale’s broad continuum of ratings appears to be a more sensitive method for discriminating performance on an interval rather than ordinal scale (Reips & Funke, in press).

Working with Gardner Murphy, Rensis Likert developed the Likert (DVAS) scale in the early 1930s to measure social attitudes. His primary intent was to create a scale that would allow empirical testing and statistical analyses for determining the independence of different social attitudes and for identifying the characteristics that would form a cluster within a particular attitude (Likert, 1972). Likert created his scale using statements that corresponded to a set of values from 1 to 5, which allowed the rater to assign a value to a statement. This was a major departure from the previous method created in 1928 by Louis Thurstone. In Thurstone’s method for measuring religious attitudes, a panel of judges assigned values to statements regarding attitudes and the respondent agreed or disagreed with each statement. The mean of the values of the agreed-upon statements was calculated as an index of that person’s attitude. One important drawback of Thurstone’s method was the potential confound of attitudes imposed by the judges when assigning statement values. By allowing individual respondents to assign their own values to statements in his survey, the method created by Likert allowed for scale values that were independent of the attitudes of the panel of judges. Likert went on to apply this method of attitude survey to business management and supervision (Likert, 1961, 1963).

While visual analog scales are very popular methods of collecting ratings, the use of technology may promote the ease of their design and administration. One particular drawback of using the traditional paper-and-pencil visual analog scale is the effort required to design and to manually score them. This manuscript describes a computer software package for the creation, administration, and automated scoring of both continuous and discrete visual analog scales. This method was designed to provide flexible, time-saving access for data management and/or importing data into statistical packages.

Adaptive Visual Analog Scales (AVAS)

The Adaptive Visual Analog Scales (AVAS) is a new, freely available software package designed to be a flexible tool for the creation, administration, and automated scoring of visual analog scales. This computer software package (compatible with Microsoft Windows 2000 and later) includes the program and a manual describing the installation and use of the software. This software may be downloaded at no charge from www.nrlc-group.net. A brief description of the program and its components follows.

Startup Menu and Program Settings

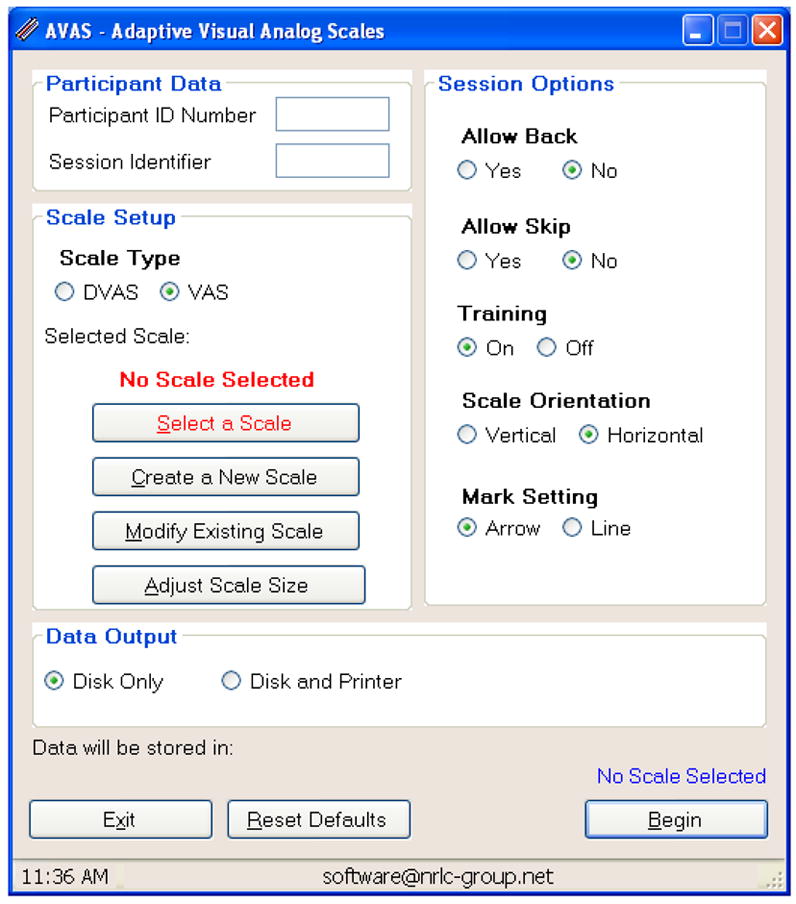

Once the program is successfully installed and initiated (AVAS.exe), the Startup Menu (Figure 1) appears on the computer monitor; this menu is used to enter participant information and to create and adjust parameters for any scale. To ensure the most accurate timing, it is recommended that other programs that are being used be closed prior to starting the AVAS. Each parameter option, or frame, is described below.

Figure 1.

The Adaptive Visual Analog Scales (AVAS) has a number of parameters that can be manipulated by the experimenter. The program’s startup menu depicts the areas used for adjusting parameters of this versatile software package. These parameters are described in detail in the text.

Participant Data Frame. This frame is used to enter unique identifying information for each participant (i.e., Participant ID Number) and for each testing session (i.e., Session Identifier). Information entered in these text boxes is used to create the file name for the data stored on the computer and for identification on the printout that is produced at the end of a session. Any combination of alphanumeric characters may be used as a unique identifier for each user and testing session.


Scale Setup Frame. This frame is used to select, create, or modify scales. At the top of this frame, the type of scale (DVAS or VAS) is chosen. For the DVAS scale, each individual test item involves presentation of several words or phrases. The participant selects the response that best represents their rating for that item. The word or phrase is selected by clicking the button that is immediately adjacent to the phrase. For the VAS, a rating line is presented and bordered at both ends by boundary markers (small lines set at right angles to the rating line), which are critically important for conveying the limits of the response area to the participant (Huskisson, 1983). The participant makes their response by clicking on the line at some point between the boundary markers to indicate their rating.

Select a Scale. Choosing this option opens the “Data Folder,” where previously created and saved scales are selected and loaded for test administration. The name of the currently selected scale appears in red, and selection buttons located directly below can be used to modify the scale selection.


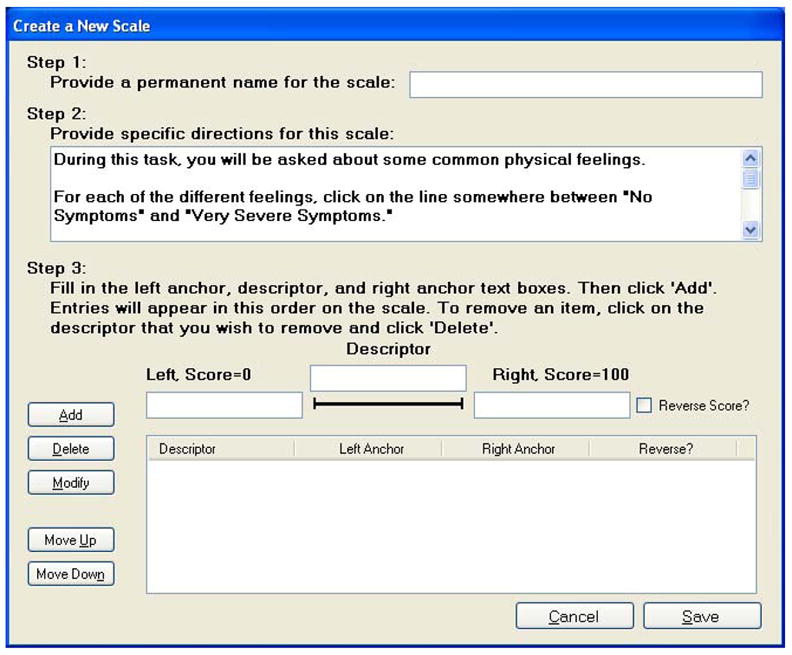

Create a New Scale. When a new scale is needed, choosing this option opens a window in which the user can produce and save a new scale. As shown in Figure 2, there are three steps to creating a new visual analog scale: (1) creating a unique name for the scale, (2) creating instructions, and (3) creating scale items. For the DVAS, each item is labeled by a word or phrase to be rated, and then individual rating descriptors are defined in the “Responses” section. A maximum of 10 response choices is allowed for each item.

For the VAS, two methods may be used when creating a new scale: unique descriptors may be coupled with a line that uses the same anchors for each item, or the anchors themselves may be unique descriptors representing opposing ends of a scale (e.g., happy - sad). The VAS does not allow for multiple descriptors or anchors along the rating line (e.g., Never, Sometimes, Often, Always) because previous research indicates that this tends to yield discontinuous results (Scott & Huskisson, 1976), which resemble the DVAS.

Finally, to control for a participant’s tendency to position responses in the same place for each item (Freyd, 1923), the scoring direction of extremes of any anchor may be reversed using the Reverse Score? option.

Modify Existing Scale. Once a scale has been created it can always be edited by selecting the modify option.

Adjust Scale Size. The default size of the scale is 500 pixels, corresponding to 132 mm, with the screen resolution set to 1024 × 768 pixels and a screen size of 17 inches. However, the Adjust Scale Size option can be used to change the length of the rating line to fit other screen sizes and resolutions. The length of the rating line is primarily determined by the number of pixels (at a particular screen resolution). By changing the number of pixels, the line length is adjusted to fit various screen sizes, as needed. Regardless of the size (i.e., number of pixels), the rating line is bounded at both ends by boundary markers (small lines set at right angles to the rating line), which are critically important for conveying the limits of the response area to the participant (Huskisson, 1983).
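The relationship between pixel count and physical line length can be sketched as follows. This is an illustrative calculation, not part of the AVAS program. The function names are hypothetical, and the value of 96 pixels per inch is an assumption: it is the nominal density at which 500 pixels works out to roughly the 132 mm quoted above. The physical density of a particular monitor may differ and is best measured directly.

```python
MM_PER_INCH = 25.4


def line_length_mm(pixels: int, pixels_per_inch: float) -> float:
    """Physical length in millimetres of a line drawn `pixels` long."""
    return pixels / pixels_per_inch * MM_PER_INCH


def pixels_for_length(target_mm: float, pixels_per_inch: float) -> int:
    """Nearest whole number of pixels that renders a line `target_mm` long."""
    return round(target_mm / MM_PER_INCH * pixels_per_inch)


# Assumed density of 96 pixels per inch (not stated in the text above).
print(round(line_length_mm(500, 96), 1))  # 132.3
print(pixels_for_length(100, 96))         # 378
```

Under the same assumption, a conventional 100 mm rating line would need about 378 pixels, which is the kind of value that could be entered through the Adjust Scale Size option.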


Session Options Frame. Items contained within this frame are used to modify the appearance and administration of the scale. These options can be selected by clicking on the buttons.

Allow Back. This gives the option of either allowing or restricting the participant from returning to previously rated items. If the option to allow a participant to return to a previously rated item is selected, the response latency for their final choice is recorded. The participant does not have to change their response when using the Allow Back feature, and items already rated retain their rating unless they are changed. If they return to a previously rated item and change their response, this response latency is also recorded as the time between this new re-appearance of the item and the final rating. Response latencies for each rating of an item are recorded separately. An asterisk will appear next to the latency if the item has been revised during the Allow Back procedure.

Allow Skip. Choosing this will either allow or restrict the participant from advancing to the next item without responding.

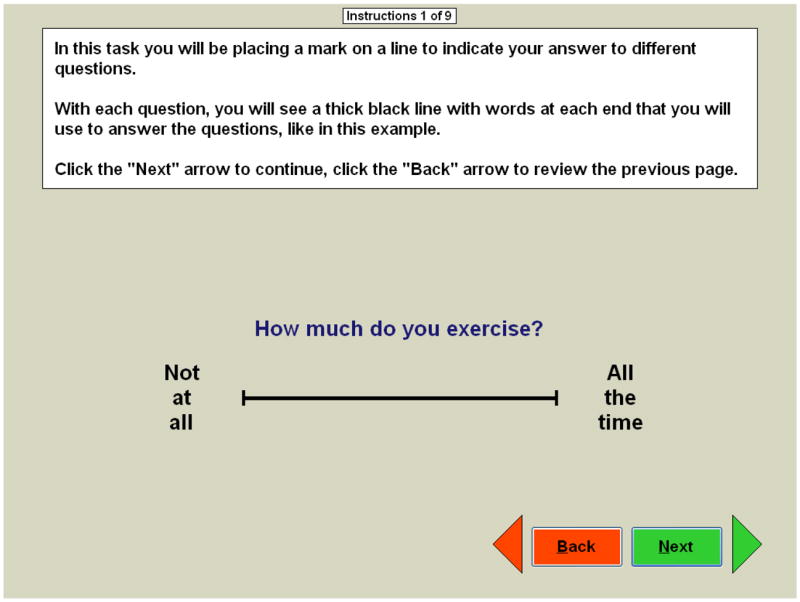

Training. Both scale types include standardized instructions that provide general information about how to conduct the ratings (Figure 3). For the VAS, a practice session can be created for administration following this standardized instruction. During practice trials the participant can be instructed to answer a question by placing a mark at some predetermined point along the line. If the participant responds incorrectly (i.e., not at the instructed position along the line), feedback is provided about the error. For example, if the practice trial directed the participant to place a mark near the “Never” anchor and instead they placed the mark near the “All the Time” anchor, then this response is incorrect and they would be given another opportunity to respond. With this option turned on, the sequence of instructions and the scale presentation occurs without interruption. If a participant has performed this task previously, the instructions may be turned off and the task will begin with presentation of the test items. Responses made during the practice are not recorded in the Data Output. For the DVAS, which generally requires less instruction, the training feature is not available.
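The practice-trial logic described above might look something like the following sketch. It is not the AVAS implementation. The function names and the 15-point tolerance are hypothetical, since the text does not state how close to the instructed position a mark must fall to count as correct.

```python
from typing import Iterable, Optional


def is_correct(click_pct: float, target_pct: float, tolerance_pct: float = 15.0) -> bool:
    """True when the mark lies within the (assumed) tolerance of the instructed position."""
    return abs(click_pct - target_pct) <= tolerance_pct


def attempts_until_correct(
    clicks_pct: Iterable[float], target_pct: float, tolerance_pct: float = 15.0
) -> Optional[int]:
    """Number of attempts needed to satisfy the practice item, or None if never satisfied.

    Each incorrect click stands for one round of error feedback followed by
    another opportunity to respond. Practice responses are not stored.
    """
    for attempt, click in enumerate(clicks_pct, start=1):
        if is_correct(click, target_pct, tolerance_pct):
            return attempt
    return None


# Instructed to mark near "Never" (0%); first mark lands near "All the Time".
print(attempts_until_correct([95.0, 8.0], target_pct=0.0))  # 2
```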

Scale Orientation. This option orients the direction of the rating line of a VAS in either a vertical or horizontal direction. While there has been some debate about the appropriate orientation of the scale for making ratings (Ahearn, 1997; Champney, 1941), the horizontal orientation purportedly results in better distributions of ratings (Scott & Huskisson, 1976) and is the most common orientation reported in the literature. The scale orientation may not be set for the DVAS scale, which is always administered in a vertical orientation.

Mark Setting. This option is used to determine whether a line or arrow is displayed to mark the participants’ selection on the rating line.


Data Output Frame. This frame is used to specify the format of the data output generated at the end of an AVAS session.

Disk Only. Selecting this option automatically saves the data to a Microsoft Excel© spreadsheet. When a new visual analog scale is created, an Excel© file is automatically generated with the name of the new scale. This file will be created on the C:\ drive, in either the VAS or DVAS folder (located in C:\Program Files\AVAS\VAS or C:\Program Files\AVAS\DVAS). The first row of the Excel© data file is automatically populated with the scale descriptors (or polar anchors for the VAS) as variable labels in separate columns. Each time a participant completes a testing session this file is automatically appended with the new data in the next available row of the spreadsheet. As a result, it is important to assign unique participant numbers and/or session identifiers prior to beginning a testing session. If a unique identifier is not used, previous data will be overwritten by the most recent session results. This Excel© file is useful for data management and for importing the data into statistical software packages.
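The one-file-per-scale, one-row-per-session layout described above can be illustrated with a short sketch. This is not the AVAS code: it writes a CSV file with Python's standard `csv` module rather than an Excel© spreadsheet, and the function names, column names, and file path are hypothetical. The second helper shows one way to check whether a participant and session identifier pair is already present before a session begins.

```python
import csv
from pathlib import Path
from typing import Sequence


def append_session(
    data_file: str,
    item_labels: Sequence[str],
    participant_id: str,
    session_id: str,
    scores: Sequence[float],
) -> None:
    """Append one session as a new row; write the label row first if the file is new."""
    path = Path(data_file)
    is_new = not path.exists()
    with path.open("a", newline="", encoding="utf-8") as handle:
        writer = csv.writer(handle)
        if is_new:
            # First row: item descriptors (or anchors) used as variable labels.
            writer.writerow(["participant_id", "session_id", *item_labels])
        writer.writerow([participant_id, session_id, *scores])


def identifier_in_use(data_file: str, participant_id: str, session_id: str) -> bool:
    """True if a row with this participant and session identifier already exists."""
    path = Path(data_file)
    if not path.exists():
        return False
    with path.open(newline="", encoding="utf-8") as handle:
        rows = csv.reader(handle)
        next(rows, None)  # skip the label row
        return any(row[:2] == [participant_id, session_id] for row in rows)
```

A file built this way can be read directly by most statistical packages, with each item appearing as its own variable.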

Disk and Printer. In addition to saving the data to the computer data file as described above, selecting this option will send the data to the computer’s default printer. Whereas the Excel© file is used for data storage and analysis, the printout is structured to allow easy and immediate review of the data following the participant’s completion of the task. When testing situations do not allow for a printer connection, the computer’s printer default can be set to the PDF file format, allowing for printing to be completed later. To use this function the computer must have Adobe Acrobat (see http://www.adobe.com/products/acrobat/) or similar software (e.g., doPDF; www.dopdf.com).

Begin, Reset Defaults, and Exit Buttons. Three buttons appear at the bottom of the Startup Menu that can be used to initiate administration of the visual analog scale (Begin), to return the program’s parameters to their default settings (Reset Defaults), or to exit the program (Exit).

Status Bar. Information at the bottom of the startup window displays the computer’s clock time and the contact e-mail (software@nrlc-group.net) for the Adaptive Visual Analog Scales user support.

Figure 2.

The “Create a New Scale” function allows for construction of a visual analog scale using anchors and descriptors chosen by the experimenter or administrator.

Figure 3.

The “Training” function allows for construction of a customized instruction and practice for a specific VAS.

Adaptive Visual Analog Scales Administration

The session is initiated by using the Begin button, which will start the standardized instructions. Following the instructions, the participant will see individual screens of each item in the scale’s list; each individual item is presented sequentially on the computer screen with no limitation placed on response time to individual items. Once a rating has been selected, the participant is free to change the rating, advance to the next item (“Next”), skip the item (“Skip”), or return to a previously rated item (“Back”), depending on the parameters selected. The participant is free to change their response prior to advancing the program and it is the last of the selections that they make that is recorded. The time between this final response and the initial appearance of the item to be rated is recorded as the response latency for that item. If the response is changed after using the Back function, response latency is the difference in time between the re-appearance of the item and their final response.
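The latency rules above can be summarized in a small sketch. It is an illustration under stated assumptions rather than the AVAS source: the class and method names are hypothetical, timestamps are passed in as plain seconds instead of being read from a clock, and the asterisk convention follows the description given under Allow Back.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class ItemRecord:
    rating: Optional[float] = None
    latencies: List[float] = field(default_factory=list)  # seconds, one per rated visit
    revised: bool = False

    def record_visit(
        self,
        shown_at: float,
        final_click_at: Optional[float] = None,
        rating: Optional[float] = None,
    ) -> None:
        """Log one presentation of the item.

        Only the last selection made before advancing counts. A visit with no
        selection leaves any earlier rating and latency untouched.
        """
        if final_click_at is None:
            return
        if self.rating is not None and rating != self.rating:
            self.revised = True
        self.rating = rating
        # Latency runs from the (re-)appearance of the item to the final response.
        self.latencies.append(final_click_at - shown_at)

    def latency_label(self) -> str:
        """Most recent latency, flagged with an asterisk if the item was revised."""
        if not self.latencies:
            return ""
        return f"{self.latencies[-1]:.2f}{'*' if self.revised else ''}"


item = ItemRecord()
item.record_visit(shown_at=10.0, final_click_at=12.5, rating=40.0)
item.record_visit(shown_at=40.0, final_click_at=41.25, rating=65.0)  # revised via Back
print(item.latency_label())  # 1.25*
```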

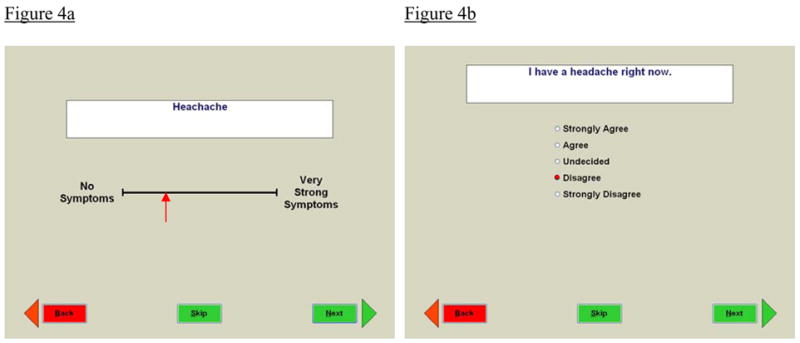

For the DVAS scales, each item consists of a series of descriptive words or phrases accompanied by rating buttons (Figure 4b). Ratings are made by clicking on the button adjacent to the word or phrase that best describes the participant’s rating of the item.

Figure 4.

Figure 4a. During administration of the VAS, the monitor shows a black line with boundary marks, anchors, and a descriptor. The participant is to rate the descriptor at some point between the boundary marks by clicking on the line.

Figure 4b. During the DVAS, the monitor shows a descriptive word or phrase accompanied by rating buttons. The participant can click on a rating button to indicate their response selection.

For the VAS, depending on the option selected (see Training under Session Options, above), the standardized instructions may or may not be followed by a practice session. Items are presented in a text box near the rating line, with the predefined anchors appearing at both ends of the rating line (see Figure 4a). Anchors appear next to the boundaries at each end of the rating line because this is thought to be superior to placement below or above the rating line (Huskisson, 1983). If anchor words are also the descriptors against which the participant will rate responses (e.g., happy - sad), no separate descriptor or text box will appear. Anchor words are limited to 30 characters each and descriptors are limited to 140 characters. If an anchor consists of more than one word, words are wrapped and placed on separate lines to fit on the screen. Descriptor words/phrases fit onto one line across the top of the screen on a horizontally oriented VAS, and on a vertically oriented scale they break across multiple lines to fit on the screen. The participant can click on the line to mark their ratings for each item. The rating mark will be either a line or arrow, depending upon the settings (see Mark Setting under Session Options, above). The placement of the mark may be moved or adjusted by clicking a different point along the line, which is described for the participant in the general instructions (see Training, above). As with the DVAS, after an item has been rated, the participant can advance to the next item, return to a previous item, or skip the item that they are currently rating, depending on the parameters selected.

After completing all ratings contained within a scale, the output data are automatically spooled to the disk and/or printer and the participant is presented with the message “You have finished. Thank you.” If the “Allow Back” option is selected, the participant is presented with the option to either confirm their completion of the scale by clicking the “Submit” button or return to previously rated items using the “Back” button. Once test administration has begun, the program can be terminated at any time by pressing the “Esc” key. If the program is terminated early, the data from the session will not be recorded.

Data Output

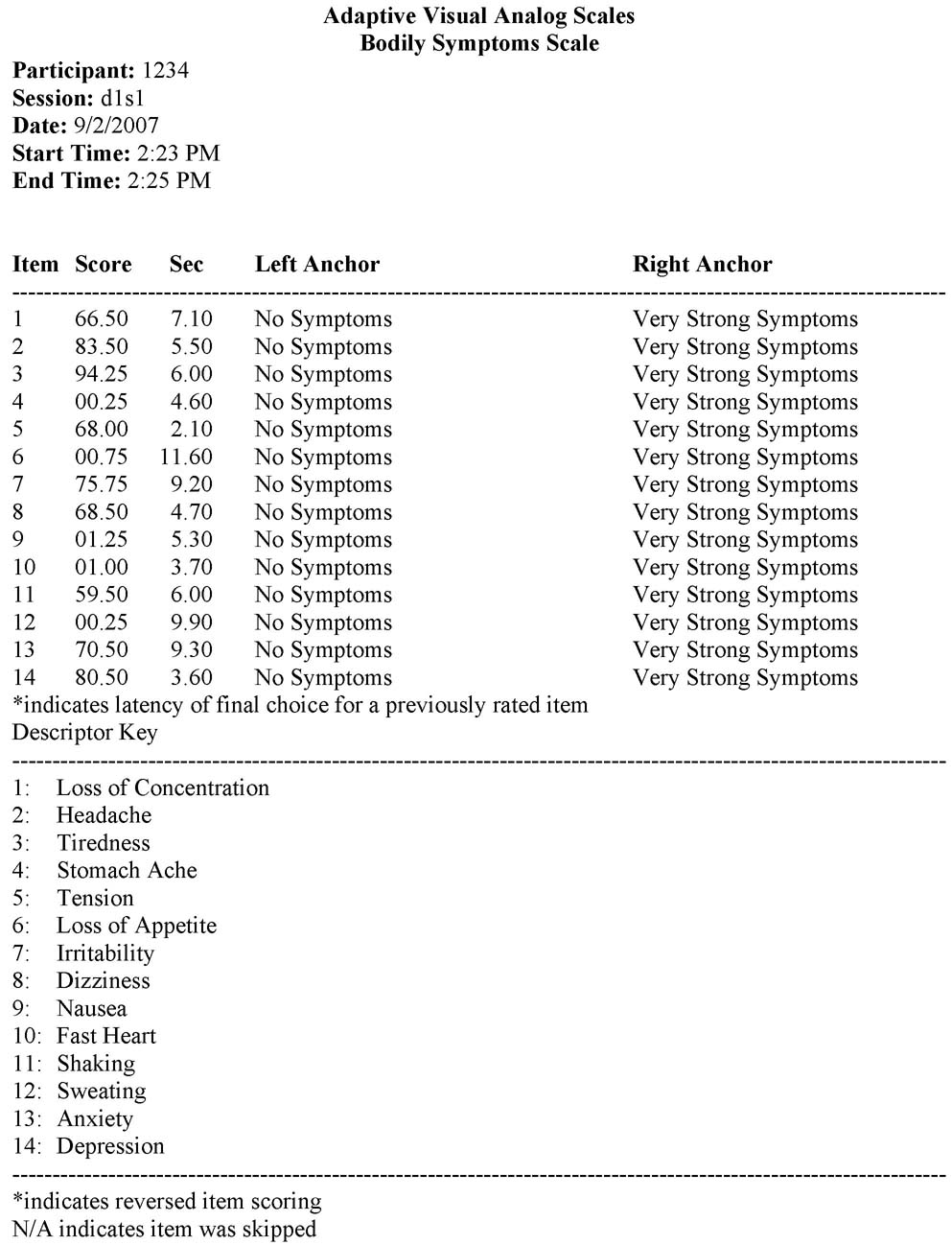

An example of the printed output from the Adaptive Visual Analog Scales appears in the Appendix. This output is divided into three sections. Items at the top of the printout include the name of the scale, participant identification number, session identifier, testing date, and testing time (acquired automatically from the computer’s calendar and clock). For the DVAS, the middle portion of the printout lists the item number, numerical value, response latency, and the phrase selection of the rating. For the VAS, the middle portion of the printout lists the item number, rating value, response latency, and rating line anchors. At the bottom of the printout is a key listing the descriptors that were rated. Rating scores are presented as a percentage, and a larger number indicates more extreme or stronger symptoms. See Create a New Scale under Scale Setup, above, for descriptions of anchors and polarity that are automatically scored in reverse, to unify positive and negative polarity for all items.
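As a rough illustration of percentage scoring with optional reversal, consider the sketch below. It is not taken from the AVAS program. The function name and pixel coordinates are hypothetical, the first boundary marker is assumed to be the zero point, and clamping clicks that fall outside the boundary markers is an added assumption.

```python
def score_vas(
    click_px: float, line_start_px: float, line_end_px: float, reverse: bool = False
) -> float:
    """Score a click as a percentage of the distance along the rating line.

    Assumes the boundary marker at `line_start_px` is the zero anchor. With
    `reverse=True` the score is measured from the opposite anchor instead.
    """
    length = line_end_px - line_start_px
    if length <= 0:
        raise ValueError("line_end_px must be greater than line_start_px")
    # Assumption: clicks beyond a boundary marker are treated as the extreme value.
    clamped = min(max(click_px, line_start_px), line_end_px)
    percent = (clamped - line_start_px) / length * 100.0
    return 100.0 - percent if reverse else percent


# A 500-pixel line drawn from x = 100 to x = 600, clicked at x = 225.
print(score_vas(225, 100, 600))                # 25.0
print(score_vas(225, 100, 600, reverse=True))  # 75.0
```

Reversing individual items in this way puts every item on a common direction, so that a larger score always indicates a more extreme rating.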

Comparison of Adaptive Visual Analog Scale and Paper Ratings

To compare the relative ratings of the computerized administration of the AVAS with traditional paper methods, we recruited 30 healthy adults from the community. Using a within-subjects design, all participants completed both the paper- and the computer-based measures of the Bodily Symptoms Scale (Cleare & Bond, 1995). Order of administration of the two types of measures was randomized to prevent systematic differences resulting from the order of administration of paper- and computer-based measures. The training portion of the computer-based AVAS rating was administered to all participants immediately prior to completing the rating items. None of the participants reported difficulty understanding or performing the computer-based measure. There were no significant differences by method of rating for total score on the Bodily Symptoms Scale (t(29) = −.96, p = .35; AVAS M = 129.8, SD = 166.2; paper M = 118.1, SD = 155.2). The correlations across each of the 14 items for the two forms of administration ranged from r = .40 (p = .08) to r = .99 (p < .001), with a median correlation of r = .92 (p < .001). Eight of the fourteen items had correlations greater than .92, two were greater than .83, and three had correlations greater than .62. The single correlation less than .62 (i.e., r = .40) was the very first item rated (Loss of Concentration).
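For readers who wish to run a similar comparison on their own pilot data, the two statistics used above (a paired t statistic for total scores and a Pearson correlation for each item) can be computed as in the following sketch. The numbers are invented for illustration and are not the study data. The function names are hypothetical, and p values are omitted because they require a t distribution that the Python standard library does not provide.

```python
import math
from statistics import mean, stdev
from typing import Sequence, Tuple


def paired_t(x: Sequence[float], y: Sequence[float]) -> Tuple[float, int]:
    """Paired-samples t statistic and degrees of freedom for matched scores."""
    diffs = [a - b for a, b in zip(x, y)]
    n = len(diffs)
    t = mean(diffs) / (stdev(diffs) / math.sqrt(n))
    return t, n - 1


def pearson_r(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson product-moment correlation between two matched score lists."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


# Hypothetical ratings from five participants on one item.
computer = [10.0, 20.0, 30.0, 40.0, 50.0]
paper = [12.0, 18.0, 33.0, 41.0, 46.0]

t_value, df = paired_t(computer, paper)
r_value = pearson_r(computer, paper)
print(f"t({df}) = {t_value:.2f}, r = {r_value:.2f}")
```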

To investigate why the lowest correlation occurred on the very first item, we would suggest pilot testing the rating scale of interest to determine whether this is a function of the item being rated or the fact that it was the first item on the list. Testing list placement as the source of the low agreement between the items could be accomplished by adding unrelated “dummy” items to the beginning of the list, and/or changing the presentation order of the ratings.
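One way to prepare such a pilot list is sketched below. This helper is hypothetical and sits outside the AVAS program: it simply builds the item order that would then be entered when creating a scale, with optional dummy items placed first and an optional seeded shuffle of the real items. The item names other than Loss of Concentration are invented for the example.

```python
import random
from typing import List, Optional, Sequence


def build_presentation_order(
    items: Sequence[str],
    dummy_items: Sequence[str] = (),
    shuffle: bool = False,
    seed: Optional[int] = None,
) -> List[str]:
    """Return the list of items in the order they would be presented.

    Dummy items always come first so that no real item occupies the first
    position. The real items are shuffled only when `shuffle` is True.
    """
    order = list(items)
    if shuffle:
        random.Random(seed).shuffle(order)
    return list(dummy_items) + order


real_items = ["Loss of Concentration", "Headache", "Nausea"]
print(build_presentation_order(real_items, dummy_items=["Practice item"]))
```

Comparing agreement for the same item across several such orders would help separate an effect of list position from an effect of the item itself.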

Software Program Utility and Conclusions

The use of visual analog scales has far exceeded the original uses proposed by its early creators and proponents (Freyd, 1923; Hayes & Patterson, 1921). These instruments have a long-standing history of use for data collection across a wide variety of disciplines, especially in the medical field (e.g., pain and fatigue assessments) and in biomedical research (e.g., self-perception and mood assessments). Given the popularity of these types of assessments, it is important to consider the benefits and limitations of the different response formats when choosing either the continuous (VAS) or discrete (DVAS) method. Results of studies comparing the two types of scales have found that each may yield different information. For example, Flynn and colleagues (2004) compared a VAS with a Likert scale to evaluate psychosocial coping strategies in a sample of college students. While the Likert (DVAS) scale captured a wider range of coping patterns, the VAS captured a greater number of relationships. A study of measurement preference compared the Likert to the VAS in 120 hospitalized children from 6 to 18 years old (van Laerhoven et al., 2004). The children’s responses showed a bias for consistently scoring at the left end of the VAS, even after it was revised in an effort to prevent that bias. Alternatively, when those children completed a 5-point Likert version of the same questionnaire (e.g., “very nice” to “very annoying”), they chose the middle, neutral option (i.e., “in between”) more frequently. With the exception of immigrant children (who liked marking the line on the VAS better), the children preferred to use the Likert scale regardless of age or gender. In addition to their preference for the Likert scale, the children overlooked, or chose not to answer, more of the items on the VAS (3.0%) compared to the Likert (0.5%). 
Despite the children’s general preference for the Likert scale, the comparison between responses on the two types of scales showed strong correlations among the seven response options (r = .76 to .82). Collectively, these studies illustrate that different individuals may respond differently to each of the scales, which may be a function of language skills (van Laerhoven et al., 2004), gender, age, physical, and/or intellectual abilities (Flynn et al., 2004). When using the AVAS, if you have concerns about your participants’ comfort with computerized response to questions, it is advisable to pilot test the AVAS alongside a paper-and-pencil version of your questions to confirm the correspondence of these methods for your local sample.

Aside from the fact that these two types of scales may yield different information and that there may be a preferential bias for one method over the other, these two types of scales also present unique disadvantages that are important to consider when selecting which method to use in a given population. A drawback of the VAS is that raters may have difficulty understanding how to respond and may require instruction that is more detailed. For this reason, the AVAS has an option for standardized automated training to instruct the participant as to how to make their ratings. Another problem is the difficulty interpreting the clinical relevance or meaning of a 10 mm or 20 mm change on a VAS item compared to a one-point change on the DVAS which some believe is more easily interpreted (Brunier & Graydon, 1996; Guyatt, Townsend, Berman, & Keller, 1987). On the other hand, despite the evenly spaced intervals, a drawback of the DVAS is that the respondent may not consider the magnitude of the difference between the individual choices to be the same. An additional barrier to accurate assessments with the DVAS is if a rater is unable to decide what category to choose when they believe their most accurate response falls between categories.

While these disadvantages are relevant to both the computerized and paper-and-pencil measures, it is important to note that the widely used paper-and-pencil versions of this instrument have additional disadvantages. Use of the paper-and-pencil versions can be hampered by inconsistency in the instruction and practice procedures, difficulty in the construction of new or modified testing instruments, and variability in scoring reliability (e.g., different raters producing different measurements of a participant’s ratings). In response to these drawbacks, the Adaptive Visual Analog Scales (AVAS) has been designed as a flexible software package to standardize and expedite the creation, administration, and scoring of responses on multiple types of visual analog scales. The automated nature of the AVAS provides a standardized method of instruction and practice with an option for the participant to repeat instructions if desired. New scales may be constructed, or previous scales modified, to suit particular clinical or research needs. The program also standardizes scoring of VAS responses by automatically calculating and storing the measurement data of participants’ responses, thereby reducing the potential of introducing errors that may result from manual scoring and data entry. The scored data is automatically saved in an Excel file that is specific to the scale being administered, which provides for easy analyses or import to other statistical software.

As society continues to incorporate the use of computer technology at a rapid pace, computerized assessments will increasingly become the preferred format for testing. Automated scoring and data storage of research data will save vast amounts of time devoted to manual scoring, eliminate the variability between scorers, and eliminate loss of data by preventing the participant from skipping a response to an item. Collectively, the AVAS software package will provide researchers with efficient data collection and extraordinary flexibility for customization of testing parameters to suit a particular question or testing sample, both of which make the application of this software package exceedingly useful across a wide variety of disciplines.

Acknowledgments

This research was sponsored by grants from the National Institutes of Health (R01-AA014988, R21-DA020993, R01-MH077684, and T32-AA007565). Dr. Dougherty gratefully acknowledges support from the William & Marguerite Wurzbach Distinguished Professorship. We thank Karen Klein (Research Support Core, Wake Forest University Health Sciences) for her editorial contributions to this manuscript.

Appendix

An example of output from the AVAS

References

- Ahearn EP. The use of visual analog scales in mood disorders: A critical review. Journal of Psychiatric Research. 1997;31:569–579. doi: 10.1016/s0022-3956(97)00029-0.

- Aitken RC. Measurement of feelings using visual analogue scales. Proceedings of the Royal Society of Medicine. 1969;62:989–993.

- Bond A, Lader MH. The use of analogue scales in rating subjective feelings. British Journal of Medical Psychology. 1974;47:211–218.

- Brunier G, Graydon J. A comparison of two methods of measuring fatigue in patients on chronic haemodialysis: visual analogue vs Likert scale. International Journal of Nursing Studies. 1996;33:338–348. doi: 10.1016/0020-7489(95)00065-8.

- Casey R, Tarride JE, Keresteci MA, Torrance GW. The Erectile Function Visual Analog Scale (EF-VAS): A disease-specific utility instrument for the assessment of erectile function. Canadian Journal of Urology. 2006;13:3016–3025.

- Champney H. The measurement of parent behavior. Child Development. 1941;12:131–166.

- Choiniere M, Auger FA, Latarjet J. Visual analogue thermometer: A valid and useful instrument for measuring pain in burned patients. Burns. 1994;20:229–235. doi: 10.1016/0305-4179(94)90188-0.

- Cleare AJ, Bond AJ. The effect of tryptophan depletion and enhancement on subjective and behavioral aggression in normal male subjects. Psychopharmacology. 1995;118:72–81. doi: 10.1007/BF02245252.

- Dougherty DM, Dew RE, Mathias CW, Marsh DM, Addicott MA, Barratt ES. Impulsive and premeditated subtypes of aggression in conduct disorder: Differences in time estimation. Aggressive Behavior. 2007;33:574–582. doi: 10.1002/ab.20219.

- Freyd M. The graphic rating scale. Journal of Educational Psychology. 1923;14:83–102.

- Flynn D, van Schaik P, van Wersch A. A comparison of multi-item Likert and visual analogue scales for the assessment of transactionally defined coping function. European Journal of Psychological Assessment. 2004;20:49–58.

- Gallagher EJ, Bijur PE, Latimer C, Silver W. Reliability and validity of a visual analog scale for acute abdominal pain in the ED. American Journal of Emergency Medicine. 2002;20:287–290. doi: 10.1053/ajem.2002.33778.

- Guyatt GH, Townsend M, Berman LB, Keller JL. A comparison of Likert and visual analogue scales for measuring change in function. Journal of Chronic Diseases. 1987;40:1129–1133. doi: 10.1016/0021-9681(87)90080-4.

- Hayes MHS, Patterson DG. Experimental development of the graphic rating method. Psychological Bulletin. 1921;18:98–99.

- Holmes S, Dickerson J. The quality of life: design and evaluation of a self-assessment instrument for use with cancer patients. International Journal of Nursing Studies. 1987;24:15–24. doi: 10.1016/0020-7489(87)90035-6.

- Huskisson EC. Visual analogue scales. In: Melzack R, editor. Pain Measurement and Assessment. Raven Press; New York: 1983. pp. 33–40.

- Likert R. New Patterns of Management. McGraw-Hill Book Company, Inc; NY: 1961.

- Likert R. The Human Organization: Its Management and Value. McGraw-Hill Book Company, Inc; NY: 1963.

- Likert R. A technique for the measurement of attitudes. In: Hollander EP, Hunt RG, editors. Classic Contributions to Social Psychology. Oxford University Press; New York: 1972.

- Millis SR, Jain SS, Eyles M, Tulsky D, Nadler SF, Foye PM, DeLisa JA. Visual analog scale to rate physician – patient rapport. American Journal of Physical Medicine & Rehabilitation. 2001;80:324.

- Nyren O. Visual analogue scale. In: Hersen M, Bellack AS, editors. Dictionary of Behavioral Assessment Techniques. Pergamon Press; New York: 1988.

- Ohnhaus EE, Adler R. Methodological problems in the measurement of pain: a comparison between the verbal rating scale and the visual analogue scale. Pain. 1975;1:379–384. doi: 10.1016/0304-3959(75)90075-5.

- Pfennings L, Cohen L, van der Ploeg H. Preconditions for sensitivity in measuring change: Visual analogue scales compared to rating scales in a Likert format. Psychological Reports. 1995;77:475–480. doi: 10.2466/pr0.1995.77.2.475.

- Reips UD, Funke F. Interval level measurement with visual analogue scales in Internet-based research: VAS Generator. Behavior Research Methods. doi: 10.3758/brm.40.3.699. in press.

- Scott J, Huskisson EC. Graphic representation of pain. Pain. 1976;2:175–184.

- Steiner M, Steiner DL. Validation of a revised visual analog scale for premenstrual mood symptoms: Results from prospective and retrospective trials. Canadian Journal of Psychiatry. 2005;50:327–332. doi: 10.1177/070674370505000607.

- Uebersax JS. Likert scales: dispelling the confusion. [Accessed: December 18, 2007];Statistical Methods for Rater Agreement. 2006 website. Available at: http://ourworld.compuserve.com/homepages/jsuebersax/likert2.htm.

- Van Laerhoven H, van der Zaag-Loonen HJ, Derkx BH. A comparison of Likert scale and visual analogue scales as response options in children’s questionnaires. Acta Paediatrica. 2004;93:830–835. doi: 10.1080/08035250410026572.

- Wewers ME, Lowe NK. A critical review of visual analogue scales in the measurement of clinical phenomena. Research in Nursing & Health. 1990;13:227–236. doi: 10.1002/nur.4770130405.