Abstract

The role of visual feedback during the production of American Sign Language was investigated by comparing the size of signing space during conversations and narrative monologues for normally sighted signers, signers with tunnel vision due to Usher syndrome, and functionally blind signers. The interlocutor for all groups was a normally sighted deaf person. Signers with tunnel vision produced a greater proportion of signs near the face than blind and normally sighted signers, who did not differ from each other. Both groups of visually impaired signers produced signs within a smaller signing space for conversations than for monologues, but we hypothesize that they did so for different reasons. Signers with tunnel vision may align their signing space with that of their interlocutor. In contrast, blind signers may enhance proprioceptive feedback by producing signs within an enlarged signing space for monologues, which do not require switching between tactile and visual signing. Overall, we hypothesize that signers use visual feedback to phonetically calibrate the dimensions of signing space, rather than to monitor language output.

The perceptual loop theory of self-monitoring posits that auditory speech output is parsed by the comprehension system (Levelt, 1989; Postma, 2000). According to this theory, a centralized monitor is located in the conceptual processing system and receives input from both an inner (prearticulatory) loop and an outer (auditory) loop. The outer perceptual loop feeds auditory speech into the comprehension system where it is checked against the speaker's original intentions such that speech errors and appropriateness errors can be detected. This theory is particularly parsimonious because the same system that comprehends the speech of others can also be used to comprehend the speaker's own speech (Levelt, 1989). For sign language, however, visual input from one's own signing is distinct from visual input that the comprehension system receives from another's signing. Visual feedback during signing does not contain information about facial expressions, the view of the hands is from the back, movement direction is reversed, and manual signs tend to fall within the lower periphery of the visual field, where vision is relatively poor. Thus, the visual input that the comprehension system parses to understand the signing of other individuals is quite different from the visual input that the system receives from self-produced signing. To investigate whether visual feedback plays any role during sign production, we examined whether signers with visual impairments due to Usher syndrome alter the size of signing space. If changes in visual feedback alter the nature of sign production, then it would suggest that signers are indeed sensitive to visual feedback while signing.

Usher syndrome is an autosomal recessive genetic condition that causes serious hearing loss usually present at birth and progressive vision loss caused by retinitis pigmentosa (RP). RP causes night blindness and peripheral vision loss through the progressive degeneration of the retina. Deaf individuals with Usher syndrome begin to experience symptoms of RP, such as loss of peripheral vision, usually in adolescence or early adulthood. Over time, RP can lead to complete blindness. Deaf individuals in the United States who have Usher syndrome may acquire American Sign Language (ASL) during childhood from their deaf families or from exposure to ASL by deaf peers, educators, and/or hearing parents who learn ASL.

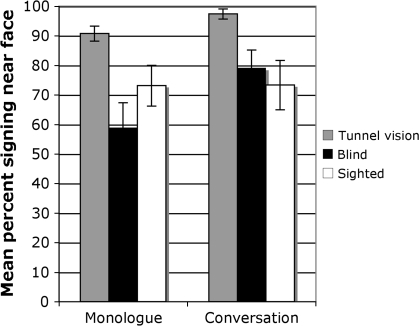

Anecdotal reports suggest that adult ASL signers with tunnel vision due to RP produce signs within a smaller signing space. An example is shown in Figure 1 taken from a commercially available videotape. The signer with Usher syndrome produced the ASL sign INTRODUCE high in signing space, close to his face. This sign is normally produced near the waist. If visual monitoring is important to sign production, this would explain why signers with tunnel vision have such a constrained signing space—they need to visually perceive their own output. It has also been observed, again anecdotally, that when signers with Usher syndrome become completely blind, their signing space increases. It is possible that these signers have been “released” from visual monitoring because they can no longer see their own output.

Figure 1. Illustration of the sign INTRODUCE produced by a signer with Usher syndrome. The sign INTRODUCE is produced near the waist by normally sighted signers. The image of the signer with Usher syndrome is reproduced with permission from the video Deaf-Blind Getting Involved: A Conversation, produced by Sign Media, Inc., ©1992 Theresa Smith.

If signers with tunnel vision systematically produce signs within a restricted and raised signing space, but blind signers do not, it will provide evidence for the importance of visual self-monitoring during sign production. However, another possible explanation for such a result is that the restricted signing space is actually a signal to the sighted addressee to produce signs in a smaller space so that the individual with Usher syndrome can see their signing. That is, the person with Usher syndrome is indicating (either consciously or unconsciously) “sign like me so I can see you.” Because the face conveys grammatical information in ASL, signs need to be produced in a smaller space near the face in order to be perceived through the narrowed visual field of a person with tunnel vision. In contrast, completely blind signers comprehend ASL through tactile signing, and thus, such a communicative signal is not necessary. Tactile signing is a variety of ASL that is perceived by placing the hand lightly on the back of a signer's hand in order to perceive the signs through touch and movement (Collins & Petronio, 1998; Petronio, 1988; Petronio & Dively, 2006). When deaf-blind signers communicate with a normally sighted signer, they produce “visual” ASL but perceive tactile ASL using the hand-on-hand method of sign perception.

To investigate the size of signing space used by signers with visual impairment, we asked deaf signers with normal vision, with tunnel vision, or with no functional vision (blind signers) to communicate with a deaf ASL signer who had normal vision. To investigate whether a smaller signing space is used as a communicative signal for the other person to sign smaller, participants engaged in a conversation and produced a monologue in the form of a story narrative. If restricted signing space is a cue to the sighted signer to also use a smaller signing space, then signers with tunnel vision should use a smaller signing space only during conversation because monologues do not involve turn taking with the sighted signer.

Method

Participants

Fifteen deaf signers participated in the study. Five participants (three males and two females) were born with Usher syndrome and reported tunnel vision with a visual field of between 5 and 20 degrees. Five participants with Usher syndrome (two males and three females) reported no functional vision and used tactile ASL for communication. Five participants (three males and two females) were normally sighted ASL signers. All participants were prelingually deaf, had no cognitive disabilities, and used ASL (either visual or tactile) as their preferred and primary means of communication. Thirteen signers were native or near-native signers, exposed to ASL at birth or in early childhood. Two participants acquired ASL at age 11 (one who had tunnel vision and one with no functional vision).

Procedure

All participants interacted individually with a sighted deaf signer who was fluent in ASL. Participants engaged in a conversation about their life experiences and opinions about interpreters, and they produced a monologue in the form of a story narrative (The Three Bears story for 12 participants and a different story for 3 participants who were less familiar with The Three Bears story). The sighted interlocutor used tactile ASL with the functionally blind participants and visual ASL with the sighted signers and with the signers who had tunnel vision. The interlocutor was naive to the experimental questions under investigation. A video camera was placed next to the interlocutor such that a front view of the participant's signing was filmed. The participant's torso and the signing space around the head and shoulders were visible, and the participant's hands did not leave the field of view.

For each participant, the percent of signing that was produced near the face was measured using body landmarks that defined a rectangle that included the participant's head and upper torso. The lower limit of the rectangle was defined as the top third of the participant's chest (just below the shoulders); the upper boundary of the rectangle was defined as the top of the participant's head, and the sides of the rectangle were defined by the participant's shoulders. The percent of time that each participant's dominant hand fell within this rectangle while signing was calculated for the monologue and for an excerpt of conversation. The conversational excerpt was taken 5 min into the conversation and consisted of continuous signing that lasted from 1 to 2 min (average length = 1.6 min). The average monologue length was 2.1 min. The total average language sample was 3 min 36 s.
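The measure described above can be sketched in a few lines of Python. This is only an illustration, not the coding procedure used in the study: it assumes that the dominant hand's position has been sampled once per video frame in image coordinates (with y increasing downward), and the names `Rectangle` and `percent_near_face`, as well as the sample coordinates, are hypothetical.

```python
from dataclasses import dataclass
from typing import Iterable, Tuple


@dataclass(frozen=True)
class Rectangle:
    """Body-defined rectangle in image coordinates (y grows downward)."""
    left: float    # x of one shoulder
    right: float   # x of the other shoulder
    top: float     # y of the top of the head
    bottom: float  # y of the top third of the chest

    def contains(self, x: float, y: float) -> bool:
        return self.left <= x <= self.right and self.top <= y <= self.bottom


def percent_near_face(hand_positions: Iterable[Tuple[float, float]],
                      rect: Rectangle) -> float:
    """Percent of sampled frames in which the dominant hand is inside rect."""
    positions = list(hand_positions)
    if not positions:
        raise ValueError("no hand positions were provided")
    inside = sum(1 for x, y in positions if rect.contains(x, y))
    return 100.0 * inside / len(positions)


# Hypothetical landmarks and hand positions, for illustration only.
rect = Rectangle(left=100, right=300, top=50, bottom=250)
frames = [(150, 100), (200, 240), (250, 300), (90, 100)]
print(percent_near_face(frames, rect))  # 50.0
```

Because equally spaced frames stand in for time, the proportion of frames inside the rectangle approximates the percent of signing time spent near the face.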

The percentage of time that signing occurred within this body-defined rectangle was the dependent measure, and the data were analyzed using a 3 (group: tunnel vision, blind, sighted signers) × 2 (genre: conversation, monologue) repeated-measures analysis of variance (ANOVA). For all analyses, variability is reported with repeated-measures 95% confidence interval (CI) half-widths based on single degree-of-freedom comparisons (Masson & Loftus, 2003).

Results

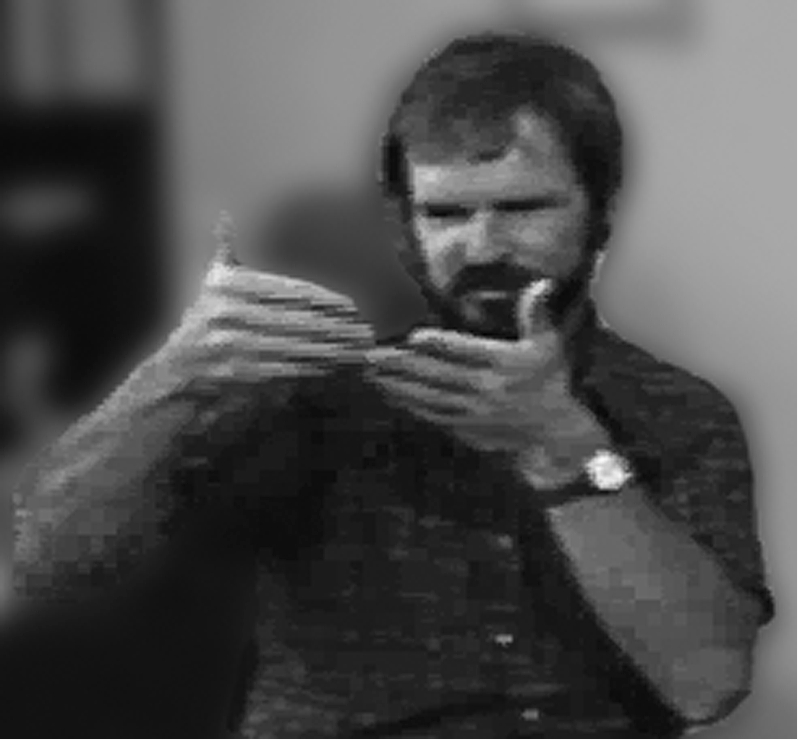

The results are shown in Figure 2. The mixed-design repeated-measures ANOVA revealed a main effect of group, F(2, 12) = 6.306, p = .013, CI = ±16.47. Tukey's honestly significant difference test revealed that signers with tunnel vision produced more signing near the face than either sighted signers (p = .042) or blind signers (p = .016), whereas sighted and blind signers did not differ from each other (p = .846). The ANOVA also revealed that a greater percentage of signing was produced near the face for conversations than for monologues, F(1, 12) = 5.797, p = .033, CI = ±17.78. The interaction between participant group and genre did not reach significance, F(2, 12) = 2.485, p = .125, CI = ±30.79. However, planned comparisons revealed that signers with tunnel vision produced a higher percentage of signing near the face during the conversation than during the monologue (97.3% vs. 90.7%; t(4) = 5.32, p = .006), as did blind signers, although the latter difference was only marginally significant (79.1% vs. 58.9%; t(4) = 2.643, p = .057). The percentage of signing near the face did not differ between conversation and monologue for the sighted signers (73% for both genres).
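As a quick consistency check on the statistics reported above, the p values can be recomputed from the test statistics and their degrees of freedom. The sketch below is not the analysis code used in the study; it relies on two closed-form expressions that hold only for these particular degrees of freedom (a t distribution with 4 degrees of freedom, and an F distribution with 2 numerator degrees of freedom), and the function names are hypothetical.

```python
import math


def t_two_tailed_p_df4(t: float) -> float:
    """Two-tailed p value for a t statistic with exactly 4 degrees of freedom.

    Uses the closed-form CDF for df = 4:
    F(t) = 1/2 + (3/8) * x * (1 - x**2 / 12), where x = t / sqrt(1 + t**2 / 4).
    """
    t = abs(t)
    x = t / math.sqrt(1.0 + t * t / 4.0)
    cdf = 0.5 + 0.375 * x * (1.0 - x * x / 12.0)
    return 2.0 * (1.0 - cdf)


def f_upper_tail_p_df1_2(f: float, df2: float) -> float:
    """Upper-tail p value for an F statistic with 2 numerator df.

    For df1 = 2 the survival function reduces to (1 + 2f/df2) ** (-df2/2).
    """
    return (1.0 + 2.0 * f / df2) ** (-df2 / 2.0)


# Planned comparisons (five signers per group, so df = 4).
print(round(t_two_tailed_p_df4(5.32), 3))    # tunnel vision group
print(round(t_two_tailed_p_df4(2.643), 3))   # blind group

# Main effect of group and the group-by-genre interaction, F(2, 12).
print(round(f_upper_tail_p_df1_2(6.306, 12), 3))
print(round(f_upper_tail_p_df1_2(2.485, 12), 3))
```

Under these assumptions the recomputed values agree, to three decimal places, with the p values reported for the two planned comparisons, the main effect of group, and the interaction.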

Figure 2. Mean percent of signing that was produced near the face for signers with tunnel vision, blind signers (no functional vision), and normally sighted signers. Bars indicate standard error.

Discussion

The results confirm anecdotal reports that signers with tunnel vision due to Usher syndrome produce signs within a restricted signing space. The fact that these signers kept their hands near their face while signing a monologue suggests that they do so to maintain better visual feedback while signing, rather than to signal their addressee to sign smaller. Supporting this hypothesis is the finding that completely blind signers, who have no visual feedback, did not differ from normally sighted signers with respect to the amount of signing produced near the face. These results are consistent with a recent study by Emmorey, Gertsberg, Korpics, and Wright (2008), who used Optotrak technology to study changes in the signing space of normally sighted signers who were blindfolded or wore goggles that created tunnel vision. The signing space of blindfolded signers was unaffected, but tunnel vision goggles caused signers to produce signs within a narrower vertical dimension of signing space. The temporary change in signing space caused by tunnel vision goggles for normally sighted signers was much less dramatic than the change we observed here for signers with tunnel vision due to Usher syndrome, but it was consistent across signers. Thus, results from both normally sighted signers and from signers with visual impairments indicate that eliminating the visual periphery reduces the size of signing space (particularly within the vertical dimension), but completely removing visual feedback does not alter the size of signing space (see also Siegel, Clay, & Naeve, 1992).

These data suggest that visual feedback plays at least some role during sign production. A potential reason for signing near the face is to prevent the hands from continually disappearing and reappearing within the visual field. Deaf signers are particularly sensitive to objects moving in and out of the visual periphery (Bavelier et al., 2000; Bosworth & Dobkins, 2001), and therefore, those with tunnel vision might adjust the size of signing space to avoid such visually distracting input. However, recently Arena, Finlay, and Woll (2007) found that normally sighted signers' hands frequently move outside their field of view. Arena et al. measured each signer's visual field and the location of their hands in signing space using Optotrak technology. Their results suggest that the smaller signing space we observed for signers with tunnel vision is unlikely to arise simply as an effort to avoid visual distraction because the hands move in and out of the visual field for normally sighted (as well as for visually impaired) signers.

Following Arena et al. (2007), we hypothesize that visual feedback may function primarily to calibrate the size of signing space with respect to where the hands appear within that space, rather than to keep the hands within view during signing. That is, signers with restricted vision reduce signing space in order to better estimate the dimensions of their signing and to visually assess the location of their hands. Normally sighted signers may also use visual feedback to phonetically calibrate the size of signing space and to help maintain visual awareness of where their hands are (in addition to proprioceptive awareness of the location of their hands).

We also found that signers with tunnel vision produced signs within an even more restricted signing space during conversation than during the monologue. We speculate that this additional reduction in signing space may result from a form of alignment (Pickering & Garrod, 2004), in which both participants in the dialogue align with respect to the size of signing space. That is, the normally sighted signer must sign within a restricted space near the face in order for the signer with tunnel vision to visually perceive the signing. Therefore, the signer with tunnel vision may match the restricted signing space of his or her interlocutor as a type of phonetic alignment. Both participants in the dialogue converge on a restricted signing space near the face. Just as speakers align with respect to accent and speech rate (Giles, Coupland, & Coupland, 1991; Giles & Powesland, 1975), signers may align with respect to the size of signing space.

For the blind signers, the size of signing space during conversation did not differ from that of normally sighted signers; rather, blind signers produced signs within a larger signing space during the monologue (i.e., a smaller percentage of signing was near the face; see Figure 2). One possible explanation for the enlarged signing space during the monologue is that blind signers must rely solely on kinesthetic monitoring, and increasing the size of hand and arm movements increases the proprioceptive signal (i.e., the sense of the location and position of the hand and arm with respect to the body). Enhancing proprioceptive feedback may aid self-monitoring for completely blind signers. During a conversation, however, these signers frequently switch between perceiving tactile signing and producing visual signing. The hand-on-hand method of perceiving tactile ASL requires that signs be produced within a smaller signing space in front of the body so that the blind perceiver can easily feel the signs. We speculate that blind signers may also produce visual ASL within a smaller signing space, thus aligning with their conversational partner who is producing tactile signing within a restricted space. As a consequence, signing space is reduced for conversation compared to monologues.

What do these modality differences in perceptual feedback imply for models of language production? Models that can account for monitoring of both signed and spoken language output should be favored based on parsimony. The fact that we found a systematic reduction in signing space for signers with tunnel vision provides some evidence for the perceptual loop theory, as applied to sign language monitoring. However, it is currently unclear whether the visual input received from one's own signing could be parsed and understood by the comprehension system (e.g., critical information about nonmanual markers is absent). Our results also provide support for psycholinguistic models that posit a role for proprioceptive feedback for monitoring speech (e.g., Lackner & Tuller, 1979). It is likely, however, that signers and speakers rely differentially on proprioceptive monitoring. The fact that speakers detect fewer errors during mouthed speech than during voiced speech indicates that they do not rely solely on proprioceptive monitoring (Postma & Noordanus, 1996). Auditory feedback provides essential information about acoustic properties of self-produced speech that is not available through proprioception, such as voicing or nasalization. Postma (2000) hypothesizes that proprioceptive and somatosensory monitoring of speech are critical for fine tuning and calibrating the production of speech but may play little role in error detection. In contrast, we hypothesize that visual monitoring of sign is used to fine-tune production, whereas proprioceptive and somatosensory monitoring are used to detect sign errors.

Some evidence for error detection based on proprioception is found in the self-corrections and repairs produced by the blind signers. For example, one blind signer wanted to fingerspell “Salt Lake,” and he fingerspelled S-L-A, interrupted himself, and then produced the correct spelling (S-A-L-T). In this case, error detection depended on feeling the position of the fingers and contact between the fingers that formed the letter handshapes. Another blind signer started to sign FATHER (five handshape moves toward the forehead where the sign is made) but switched to the compound MOTHER-FATHER (parents) before completing the sign. In this case, error detection required sensing the position and trajectory of the arm moving in space. Several of these types of corrections were observed, indicating that blind signers can detect production errors based entirely on proprioceptive and somatosensory feedback.

To conclude, models of human language processing should be able to account for data from both signed and spoken languages. Our data suggest that if the perceptual loop hypothesis for self-monitoring is to be maintained, the comprehension system must be able to access phonological representations by parsing either a motor (i.e., proprioceptive) signal or a sensory (visual or auditory) signal.

Funding

National Institutes of Health (grant R01 DC13249).

Acknowledgments

We thank Victor Ferreira, Tamar Gollan, Robert Slevc, and Bencie Woll for helpful comments on an earlier draft of the manuscript and Michael Schilling II for help with data collection. Finally, we would like to thank all the deaf and deaf-blind signers who participated in this research. No conflict of interest declared.

References

- Arena V, Finlay A, Woll B. Seeing sign: The relationship of visual feedback to sign language sentence structure. 2007, March.

- Bavelier D, Tomann A, Hutton C, Mitchell T, Corina D, Liu G, et al. Visual attention to the periphery is enhanced in congenitally deaf individuals. Journal of Neuroscience. 2000;20:RC93. doi: 10.1523/JNEUROSCI.20-17-j0001.2000.

- Bosworth RG, Dobkins KR. The effects of spatial attention on motion processing in deaf and hearing subjects. Brain and Cognition. 2001;49:170–181. doi: 10.1006/brcg.2001.1497.

- Collins S, Petronio K. What happens in tactile ASL? In: Lucas C, editor. Pinky extension and eye gaze: Language use in Deaf communities. Washington, DC: Gallaudet University Press; 1998. pp. 18–37.

- Emmorey K, Gertsberg N, Korpics F, Wright CE. The influence of visual feedback and register changes on sign language production: A kinematic study with deaf signers. 2008.

- Giles H, Coupland N, Coupland J. Accommodation theory: Communication, context, and consequence. In: Giles H, Coupland N, Coupland J, editors. Contexts of accommodation: Developments in applied sociolinguistics. Cambridge, United Kingdom: Cambridge University Press; 1991. pp. 1–68.

- Giles H, Powesland PF. Speech styles and social evaluation. London: Academic Press; 1975.

- Lackner JR, Tuller BH. Role of efference monitoring in the detection of self-produced speech errors. In: Cooper WE, Walker ECT, editors. Sentence processing. Hillsdale, NJ: Erlbaum; 1979. pp. 281–294.

- Levelt WJM. Speaking: From intention to articulation. Cambridge, MA: Massachusetts Institute of Technology Press; 1989.

- Masson MEJ, Loftus GR. Using confidence intervals for graphically based data interpretation. Canadian Journal of Experimental Psychology. 2003;57:203–220. doi: 10.1037/h0087426.

- Petronio K. Interpreting for deaf-blind students: Factors to consider. American Annals of the Deaf. 1988;133:226–229. doi: 10.1353/aad.2012.0799.

- Petronio K, Dively VL. YES, #NO, visibility and variation in ASL and tactile ASL. Sign Language Studies. 2006;7(1):57–98.

- Pickering MJ, Garrod S. Toward a mechanistic psychology of dialogue. Behavioral and Brain Sciences. 2004;27:169–226. doi: 10.1017/s0140525x04000056.

- Postma A. Detection of errors during speech production: A review of speech monitoring models. Cognition. 2000;77(2):97–132. doi: 10.1016/s0010-0277(00)00090-1.

- Postma A, Noordanus C. Production and detection of speech errors in silent, mouthed, noise-masked, and normal auditory feedback speech. Language and Speech. 1996;39:375–392.

- Siegel GM, Clay JL, Naeve SL. The effects of auditory and visual interference on speech and sign. Journal of Speech and Hearing Research. 1992;35:1358–1362. doi: 10.1044/jshr.3506.1358.