Abstract

We report a new brain signature of memory trace activation in the human brain revealed by magnetoencephalography and distributed source localization. Spatiotemporal patterns of cortical activation can be traced in the time course of source images underlying magnetic brain responses to speech and noise stimuli, especially the generators of the magnetic mismatch negativity. We found that acoustic signals perceived as speech elicited a well-defined spatiotemporal pattern of sequential activation of superior–temporal and inferior–frontal cortex, whereas identical stimuli, when perceived as noise, did not elicit temporally structured activation. Strength of local sources constituting large-scale spatiotemporal patterns reflected additional lexical and syntactic features of speech. Morphological processing of the critical sound as verb inflection led to particularly pronounced early left inferior–frontal activation, whereas the same sound functioning as inflectional affix of a noun activated superior–temporal cortex more strongly. We conclude that precisely timed spatiotemporal patterns involving specific cortical areas may represent a brain code of memory circuit activation. These spatiotemporal patterns are best explained in terms of synfire mechanisms linking neuronal populations in different cortical areas. The large-scale synfire chains appear to reflect the processing of stimuli together with the context-dependent perceptual and cognitive information bound to them.

Keywords: inflectional affix, language, MEG, noise, spatiotemporal pattern, speech

An important question in cognitive neuroscience addresses the nature of memory traces that store experiences, familiar objects, and spoken words in the human brain. Neurophysiological studies in monkeys have demonstrated local cortical circuits generating precisely timed sequences of nerve cell activation, so-called synfire chains, that may contribute to specific perceptual, cognitive, and behavioral processes (Abeles 1991; Abeles et al. 1993; Prut et al. 1998; Ikegaya et al. 2004). However, memory circuits are not necessarily local. Cognitive processing implies binding of information across modalities (auditory and motor in the case of spoken words Rizzolatti et al. 2001), and the circuits storing such cross-modality information span wide cortical areas (Fuster et al. 2000; Fuster 2003). Therefore, neuronal populations in different areas of cortex may become active in a defined spatiotemporal order indexing specific stimulus information (Pulvermüller 1999; Fuster 2003; Feldman 2006; Plenz and Thiagarajan 2007). To capture the activation of interarea circuits, it is necessary to investigate the dynamic spatiotemporal patterns with which excitation emerges in different cortical areas and, especially, their stimulus specificity. Functional imaging studies measuring metabolic change suffer from the slowness of such change, which does not allow the tracking of the exact timing of neuronal activation spreading in the millisecond range. However, such interarea spatiotemporal mapping of memory circuits is possible using whole-brain neurophysiological imaging with magnetoencephalography (MEG).

At the large-scale level of whole-brain recordings with MEG, one early brain response, which peaks as early as 100–200 ms after critical stimulus onset, has proven fruitful in revealing the existence of memory traces in the human brain. This brain response, the mismatch negativity (MMN) and its magnetic equivalent, has been found to be larger to familiar language sounds than to sounds of a foreign language (Näätänen et al. 1997, 2001) and is further enhanced to meaningful words and sounds as compared with meaningless sound sequences (Korpilahti et al. 2001; Pulvermüller et al. 2001; Pettigrew et al. 2004; Frangos et al. 2005; Shtyrov et al. 2005; Hauk et al. 2006; Pulvermüller and Shtyrov 2006). The MMN may therefore be a useful tool for investigating the brain dynamics of speech and sound processing.

Large-scale neurophysiological imaging methods, including MEG, measure neuronal mass activity and are capable of mapping its time course with greatest precision. They do not, however, provide direct information about the locus of the sources that contribute to these dynamics. Inherent to the method is the problem of determining sources in a 3-dimensional space from a 2-dimensional surface topography. This so-called inverse problem does not have a unique solution (von Helmholtz 1853), and it is for this very reason that source estimation procedures require assumptions restricting the space of possible solutions (Hämäläinen et al. 1993; Ilmoniemi 1993). Methods calculating equivalent current dipoles, ECDs, build upon the assumption that surface topographies are produced by single dipoles or sets of point sources. Difficulties of single or multiple dipole approaches emerge from the distributed character of most cortical activations, which is misrepresented by dipoles, inaccuracies in the estimation of source depth, and difficulties in a priori determining the number of sources (see, e.g., Hauk 2004; Huang et al. 2006).

Distributed source estimation techniques are not subject to these problems, as a large number of concurrently active sources is allowed, and a number of source constellations are determined, which all explain the surface topography equally well. One of these solutions is then selected because it is most parsimonious according to mathematically defined criteria. The most established approach, the so-called L2 norm or classical minimum norm solution, minimizes the sum of squares of strength for all coactive sources, and the L1 norm is based on the sum of rectified source strengths (Ilmoniemi 1993; Hämäläinen and Ilmoniemi 1994; Fuchs et al. 1999). These methods also do have their own specific limitations and disadvantages. The L2 norm is known to make focal sources appear more distributed, resulting in limited spatial resolution, and making it difficult to separate overlapping source time courses, especially if the sources are close together. L1 norm solutions can, in principle, localize focal sources and are therefore less likely to lead to interference between source time courses. However, limitations of this method include its high computational demands, temporal discontinuities (spiking character of source time courses), and spatial instability of the solution (great activation differences between adjacent sources). There are ways to overcome these limitations, for example, by collapsing source data over regions of interest (ROIs) (to compensate for spatial instability) and by sacrificing some of the excellent temporal resolution of MEG (to compensate for temporal discontinuity). In essence, L1 methods have a great potential of tracking the specific time courses of distant cortical sources, while still outperforming L2 in spatial resolution and fMRI in the temporal domain (Uutela et al. 1999; Stenbacka et al. 2002; Pulvermüller et al. 2003; Auranen et al. 2005; Osipova et al. 2006).
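The two selection criteria described above can be written compactly. The following is only a sketch in notation that does not appear in the original text: $\mathbf{b}$ is assumed to denote the vector of measured fields, $\mathbf{L}$ the forward (lead-field) matrix, and $j_i$ the strength of source $i$ out of $N$ candidate sources; noise regularization and source orientation are ignored here.

```latex
\hat{\mathbf{j}}_{L2} = \arg\min_{\mathbf{j}} \sum_{i=1}^{N} j_i^{2}
\quad \text{subject to} \quad \mathbf{b} = \mathbf{L}\,\mathbf{j},
\qquad
\hat{\mathbf{j}}_{L1} = \arg\min_{\mathbf{j}} \sum_{i=1}^{N} \lvert j_i \rvert
\quad \text{subject to} \quad \mathbf{b} = \mathbf{L}\,\mathbf{j}.
```

Because the squared penalty grows quickly for large values, the L2 criterion favors spreading current over many weak sources, whereas the absolute-value penalty tolerates a few strong sources. This is consistent with the smearing of L2 solutions and the focality of L1 solutions noted above.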

Neuroimaging studies applied different strategies to pin down cortical dynamics of memory circuits. Metabolic imaging showed, and it is generally agreed, that language elements, for example, meaningful words and sounds distinguishing between them, are cortically stored as distributed neuronal ensembles binding perception, articulation, and semantic information (Barsalou 1999; Pulvermüller 1999, 2005; Fuster 2003; Rizzolatti and Craighero 2004; Feldman 2006; Kiefer et al. 2007). One approach to tackling the dynamics of memory circuit activation in the brain therefore compares meaningful spoken language with nonlinguistic sounds with similar acoustic spectrotemporal characteristics, for example, speech and signal-correlated noise, SCN (Scott and Johnsrude 2003). However, in this case, the spectrotemporal match is usually not perfect and differences in brain activation may therefore be driven by the remaining acoustic differences between speech and noise stimuli. A different approach uses familiar meaningful stimuli, for which a memory trace is present in the brain, and meaningless items of a very similar type as controls, as in the comparison of spoken words and meaningless pseudowords (Holcomb and Neville 1990; Compton et al. 1991; Bentin et al. 1999). If averages over large numbers of words and pseudowords are taken, it may appear unlikely that acoustic, phonetic, and phonological features of the stimulus groups differ. However, as a large number of features may differ between different speech and speech-like stimuli, excluding all possibly relevant confounds by stimulus matching appears impossible.

A solution to the multiple confounds problem in speech research is possible by adopting a strategy well established in psychoacoustics, namely investigating brain responses to identical stimuli whose perceptual and cognitive processing is changed by different contexts (cf. Micheyl et al. 2003; Carlyon 2004). A research strategy for neuroscience experiments of this type is offered by the MMN paradigm (Näätänen et al. 2001). Brain responses to frequently presented context stimuli are recorded along with responses to rare deviant stimuli, each of which consists of the context stimulus cross-spliced with the critical stimulus attached to its end. As frequent standard stimulus and rare deviant stimulus are, in this case, identical up to the starting point of the critical stimulus, it becomes possible, by calculating the MMN brain response, to subtract out the contribution of the context, leaving only the brain response to the critical part of the deviant stimulus as perceived in the respective context (Pulvermüller and Shtyrov 2006). By changing contexts and subtracting out their contribution to the brain response, specific perceptual and linguistic processes triggered by the critical stimulus in these contexts can thus be monitored.

Here, we chose contexts that made the critical stimulus item either part of a meaningful word, thus activating a memory network in the brain, or part of a noise stimulus, therefore also changing its perceptual characteristics. Interestingly, there are language sounds that only sound like speech when presented in the context of speech but are, however, perceived as unfamiliar noise if presented in isolation or embedded into sounds other than speech (Liberman 1996). The word-final phonemic sound [t] in Finnish, for example, can signal a meaningful grammatical word ending when placed after the stem of a noun or verb. If the brief noise constituting the plosion of the [t] terminates a meaningless spoken syllable, it does not have a clear role as part of a meaningful item, even though it is still perceived as the spoken sound [t]. However, in the context of noise, the very same critical stimulus is perceived as a meaningless unfamiliar chirp-like sound. As a function of context, the same noise burst can therefore generate different percepts thereby creating the opportunity to study acoustic and linguistic processing.

In the present study, we investigated MEG brain activity elicited by brief noise bursts placed in 4 different contexts (noise, pseudoword, noun, verb context). Previous research indicated that the magnitude and location of cortical activation can distinguish between speech and noise (Palva et al. 2002; Scott and Johnsrude 2003; Uppenkamp et al. 2006). Furthermore, there is much discussion about whether even fine-grained morphological and syntactic information linked to nouns and verbs may be reflected by specific local cortical processes (Hillis and Caramazza 1995; Shapiro and Caramazza 2003b; Bak et al. 2006). Here we ask whether the timing of cortical source activation is speech specific and possibly reflects word and morpheme type.

Materials and Methods

Subjects

Participants were 16 healthy right-handed (Oldfield 1971) monolingual native speakers of Finnish aged 21–39 (6 males) without left-handed family members. They had normal hearing and did not report any history of neurological illness or drug abuse. They were paid for their participation after signing an informed consent form. Ethical permission for the study was granted by the Helsinki University Central Hospital Ethics Board.

Stimuli

The standard stimuli were the syllables vyö, lyö, and ryö and a spectrally similar SCN. The same 4 sounds with the consonant [t] added at the end (which, in noise context, sounded like a chirp noise) were used as deviant stimuli in their respective recording sessions. Whereas the first and the second word are stems of a Finnish noun and verb, respectively, meaning “belt” and “hit”, the third item is a meaningless pseudoword in Finnish. For stimulus production, the CVV syllables vyö, lyö, and ryö were spoken repeatedly by a female native speaker of Finnish and recorded digitally (sampling rate 44.1 kHz). As acoustic events following each other within a window of approximately 200 ms may, depending on their spectrotemporal similarity, perceptually interact with each other, possibly giving rise to phenomena such as differential auditory masking, illusions, or streaming (Näätänen 1995; Fishman et al. 2001; Micheyl et al. 2003; Carlyon 2004), great effort was spent to match the stimuli for a range of acoustic features. To this end, tokens of each syllable type with the same duration and F0 frequency and matched with regard to the envelope of the acoustic wave form were selected and adjusted to have the same sound energy (root mean square of the signal). For producing SCN, we used the spectral characteristics obtained from the speech stimuli using the Fast Fourier Transform. To further approximate the spoken stimulus properties, the amplitude of the noise stimulus was modulated using the temporal envelope of the speech signals. This meticulous stimulus generation was done to ensure, as carefully as possible, that stimuli were matched for acoustic and spectrotemporal characteristics, including length, acoustic energy, spectral composition, and temporal envelope. The resulting 4 “standard” stimuli were all 310 ms long.
The final [t] sound in the 4 “deviant” counterparts of these stimuli was obtained from the recording of a similarly matched syllable työt (to avoid a coarticulation bias, which would have resulted in case the [t] had been spoken directly after one of the actual standards) and was then cross-spliced onto each original CVV syllable after a silent closure time of 55 ms. Stimulus length for these slightly longer “deviant” stimuli was 400 ms, and the onset of the final noise constituting the [t] was always at 365 ms. The word final [t] sound always indicates nominative plural on nouns and second-person singular on verbs. The affixed pseudoword was meaningless.
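Two of the matching steps described above, equalizing root-mean-square energy and imposing the temporal envelope of speech on noise, can be illustrated with a minimal sketch. It is not the procedure used in the study: the spectral shaping via the Fast Fourier Transform is omitted, the envelope is approximated by a moving average of the rectified signal, and all function names, the window length, and the random seed are hypothetical choices made for illustration.

```python
import math
import random


def rms(signal):
    """Root mean square of a sequence of samples."""
    return math.sqrt(sum(x * x for x in signal) / len(signal))


def scale_to_rms(signal, target_rms):
    """Rescale a signal so that its RMS equals target_rms."""
    current = rms(signal)
    if current == 0:
        raise ValueError("cannot rescale a silent signal")
    gain = target_rms / current
    return [x * gain for x in signal]


def envelope(signal, window):
    """Crude temporal envelope: moving average of the rectified signal.

    Assumption: the study does not state how the envelope was extracted.
    """
    rectified = [abs(x) for x in signal]
    half = window // 2
    out = []
    for i in range(len(rectified)):
        lo = max(0, i - half)
        hi = min(len(rectified), i + half + 1)
        out.append(sum(rectified[lo:hi]) / (hi - lo))
    return out


def envelope_matched_noise(speech, window=64, seed=0):
    """White noise modulated by the speech envelope and matched in RMS.

    Spectral shaping (matching the long-term spectrum) is NOT done here.
    """
    rng = random.Random(seed)
    env = envelope(speech, window)
    noise = [rng.gauss(0.0, 1.0) * e for e in env]
    return scale_to_rms(noise, rms(speech))
```

Given a list of speech samples, `envelope_matched_noise(speech)` returns a noise signal of the same length and the same RMS whose amplitude rises and falls with the speech token.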

To examine the influence of context on the perception of the critical stimulus, the cross-spliced [t]/noise, 7 subjects different from the MEG participants were presented with the 4 deviant stimuli and, in a separate condition, the critical stimulus out of context. Stimulus order was randomized separately for each participant. Ratings of speech likeness of the critical sound on a 10-point scale (1—not speech like, 10—like natural speech) differed between noise and speech contexts (context lyö: mean = 6.86 [SE = 0.63], vyö: 7.71 [0.64], ryö: 6.29 [0.99], noise: 3.00 [1.09], out-of-context: 3.29 [0.94], F4,24 = 8.39, P < 0.00022). These psychoacoustic data confirm that the contexts biased the auditory system toward noise or speech perception of the critical stimulus, that is, toward a chirp or [t] sound.

Design

In 4 separate blocks, each pair of standard and deviant stimuli, which only differed in the presence or absence of the final chirp noise, was presented in a passive oddball task. In each experimental block, standard and deviant stimuli occurred with a probability of 0.86 and 0.14, respectively. The sequence was pseudorandomized and block order counterbalanced. Presentation was binaural at 50 dB above the individual hearing threshold and the stimulus-onset asynchrony was 900 ms. Crucially, the same critical stimulus and the same acoustic contrast were present in all 4 contexts, that of noun, verb, pseudoword, and noise. As the MMN reflects acoustic stimulus features and especially acoustic contrasts between standard and deviant stimulus (Näätänen and Alho 1997), the present paradigm controls for acoustic effects on the brain response. A minimum of 150 artifact-free deviant trials were recorded in each block, resulting in slightly over 60 min of experimental time. To further control for any possible acoustic effects of the final plosion sound on the MMN, we also obtained brain responses (300 artifact-free trials minimum) to the 4 longer stimuli, each presented repetitively as frequent standard stimulus in a separate recording block. Subjects were instructed to ignore the auditory stimuli but focus their attention on a self-selected video film presented throughout the recording. Video films were silent and did not contain written language.
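The reported experimental time of slightly over an hour for the oddball blocks follows from the design parameters given above. The short calculation below is a sketch that assumes exactly the minimum of 150 deviant trials per block and no rejected trials, so it yields a lower bound; the function name is hypothetical.

```python
import math


def min_block_duration_s(n_deviants, p_deviant, soa_s):
    """Smallest number of trials that contains n_deviants at the given
    deviant probability, and the resulting block duration in seconds."""
    n_trials = math.ceil(n_deviants / p_deviant)
    return n_trials, n_trials * soa_s


# Values taken from the design: 150 deviants, P(deviant) = 0.14, SOA = 0.9 s
n_trials, block_s = min_block_duration_s(150, 0.14, 0.9)
total_min = 4 * block_s / 60  # 4 oddball blocks
```

With these values each block needs a little over 1000 trials, and the 4 blocks together take roughly 64 min.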

MEG Recording and Data Analysis

Subjects were comfortably seated under the MEG helmet after head coordinates and head shapes had been recorded using a 3D-Space Fasttrack Digitisation system to allow for anatomical colocalization. Nasion and preauricular points were used as anatomical anchor points. The evoked magnetic fields were recorded (passband 0.03–200 Hz, sampling rate 600 Hz) with a whole-head 306-channel MEG setup (Elekta Neuromag, Helsinki, Finland) during the auditory stimulation (Ahonen et al. 1993). The recordings started 100 ms before stimulus onset and ended 900 ms thereafter. The responses were averaged online, separately for each type of stimulus in each condition. Epochs with voltage variation exceeding 150 μV at either of 2 bipolar eye movement electrodes or with field intensity variation exceeding 3000 femtotesla per centimeter (fT/cm) at any MEG channel were excluded from averaging. The averaged responses were filtered off-line (passband 1–20 Hz), and linear detrending was applied to the entire epoch. A silent period of 50 ms before the critical stimulus (chirp/[t] sound) onset was used as the baseline.
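The rejection criteria and the baseline window can be made concrete with a small sketch. It assumes that "variation" means the peak-to-peak range within an epoch and that the baseline is removed by subtracting its mean; neither detail is stated explicitly, and the function and constant names are hypothetical. The timing constants come from the text: 600 Hz sampling, epochs starting 100 ms before stimulus onset, and critical stimulus onset at 365 ms.

```python
SFREQ_HZ = 600.0           # sampling rate
EPOCH_START_MS = -100.0    # epoch start relative to stimulus onset
CRITICAL_ONSET_MS = 365.0  # onset of the chirp/[t] sound
BASELINE_MS = 50.0         # silent period used as baseline


def ms_to_sample(t_ms):
    """Index of the sample recorded t_ms after stimulus onset."""
    return int(round((t_ms - EPOCH_START_MS) * SFREQ_HZ / 1000.0))


def peak_to_peak(channel):
    """Range of a channel within one epoch (assumed meaning of 'variation')."""
    return max(channel) - min(channel)


def epoch_is_clean(meg_channels, eog_channels, meg_limit=3000.0, eog_limit=150.0):
    """True if no MEG channel (fT/cm) or EOG channel (microvolts) exceeds its limit."""
    return (all(peak_to_peak(ch) <= meg_limit for ch in meg_channels)
            and all(peak_to_peak(ch) <= eog_limit for ch in eog_channels))


def baseline_correct(channel):
    """Subtract the mean of the 50 ms preceding critical stimulus onset."""
    start = ms_to_sample(CRITICAL_ONSET_MS - BASELINE_MS)
    stop = ms_to_sample(CRITICAL_ONSET_MS)
    base = sum(channel[start:stop]) / (stop - start)
    return [x - base for x in channel]
```

Under these assumptions the baseline spans 30 samples, from 315 to 365 ms after stimulus onset.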

The so-called “identity MMN” (see Pulvermüller and Shtyrov 2006) was obtained for each context condition (noun, verb, pseudoword, and noise) by subtracting the averaged neurophysiological response to the identical stimulus presented as a frequently repeated item (“control deviant stimulus”, see also Fig. 1) from the averaged response to the deviant stimulus. Estimation of distributed cortical sources was performed using the L1-Minimum Current Estimation module of the Elekta Neuromag MEG analysis software (Uutela et al. 1999). Singular value decomposition (SVD) was applied to reduce the influence of noise (Uusitalo and Ilmoniemi 1997). To reduce the temporal instability of L1 solutions, source estimations were performed over time intervals of 5 ms. Source solutions were calculated independently for each subject, condition, and time step. To standardize the individual solutions, the current estimates of each subject and the grand average source constellations were projected on the 1231 possible source loci of a triangularized gray matter surface of an averaged brain (Uutela et al. 1999). For statistical evaluation, maximum activation values and activation latencies were computed for relatively large (radii ranging between 1 and 2 cm) ellipsoid ROIs, capturing the majority of the inferior–frontal, inferior–central, and superior–temporal areas. These areas were selected because they are most significant for acoustic and speech processes (Pulvermüller et al. 2003; Uppenkamp et al. 2006). Large ROIs were chosen to minimize the likelihood of spatial errors and to overcome the spatial instability of local L1 solutions. Figure 2 indicates the left hemispheric inferior–frontal, inferior–central, and superior–temporal ROIs on the triangularized gray matter surface. Homotopic regions were used for the right hemisphere. Maximal source strengths in a time window of 50–250 ms after critical stimulus onset were extracted for each subject and condition in the 3 ROIs in both hemispheres.
Significance of activation was tested by comparing activation with baseline activity in the same ROIs. ROI-specific activation latencies were determined by measuring the point in time where 50% of the local maximal activation was reached for the first time (latency of half maximum). Local source strengths and latencies were then compared between ROIs and context conditions using repeated-measures analyses of variance and planned comparison F-tests.
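The identity MMN subtraction and the latency of half maximum can be sketched as follows. This is an illustration under stated assumptions rather than the analysis code of the study: ROI source strengths are taken to be non-negative values sampled at the 5 ms time steps, the search for the half maximum is restricted to the same 50–250 ms window as the maximum, and the function names are hypothetical.

```python
def identity_mmn(deviant, control_deviant):
    """Deviant response minus the response to the identical stimulus
    presented as a frequently repeated item (sample by sample)."""
    if len(deviant) != len(control_deviant):
        raise ValueError("responses must have the same length")
    return [d - c for d, c in zip(deviant, control_deviant)]


def half_max_latency(times_ms, strengths, window=(50.0, 250.0)):
    """Return (latency, peak): the first time in the window at which the
    source strength reaches 50% of its maximum within that window."""
    in_window = [(t, s) for t, s in zip(times_ms, strengths)
                 if window[0] <= t <= window[1]]
    if not in_window:
        raise ValueError("no samples inside the analysis window")
    peak = max(s for _, s in in_window)
    for t, s in in_window:
        if s >= 0.5 * peak:
            return t, peak
```

Times are expressed relative to critical stimulus onset, so the returned latency is directly comparable between ROIs and context conditions.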

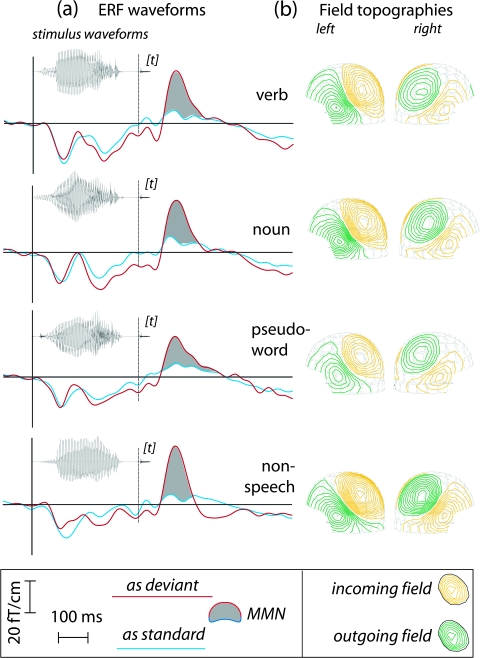

Figure 1.

Stimuli, event-related fields and MMN topographies. (a) Critical stimuli presented as frequently repeated items (blue curves) and rare deviant stimuli (red curves) of an oddball paradigm elicited event-related fields that did not differ between each other up to the final [t] sound (vertical lines in the middle). 100–150 ms after this critical sound, there was a small N100m response when stimuli were repeatedly presented (see blue curves), with a MMN overlaid on top of it when the critical [t] sound was the rare deviant stimulus (see red curves). The critical difference, the MMN, is shaded in gray. (b) The diagrams on the right show the topographies of the MMN recorded above the left and right hemispheres.

Figure 2.

Top diagram: Left hemispheric activation landscape obtained in the present experiment, including foci in superior–temporal, central, and inferior–frontal cortex. Yellow ellipses indicate the ROIs that formed the basis of statistical source analysis. The time course of activation in the 3 ROIs is shown for the grand average of source estimates for all stimuli taken together.

Results

General Pattern of Activation

Standard stimuli including the context stimuli (noise and CVV stimuli without the critical stimulus, the final [t] sound) and frequently repeated “control deviant” stimuli (which also included the critical stimulus) elicited relatively flat responses reflecting the acoustic dynamics in the input. Critically, event-related fields did not distinguish between the 4 “control deviants,” which were used in the calculation of MMNs. A reliable N100m complex followed the [t] sound in all conditions but did not significantly differentiate between them (blue lines in Fig. 1a). In contrast, the same items (CVV/noise plus critical stimulus) presented as rare deviant stimuli produced pronounced differences in brain activation between the 4 conditions (red curves in Fig. 1a). These were equally manifest in the magnetic MMN.

The subtraction of “control deviant” from deviant responses yielded identity MMNs peaking at 100–150 ms after onset of the [t] sound (shaded areas in Fig. 1a). The percentage of activity underlying the MMN peak that was explained by ROIs (MMN sources in all ROIs divided by the sum of all sources in the entire brain volume) was 92.7%. This implies that the a priori choice of ROIs was appropriate for capturing most of the variance in MMN activation. ROI analysis revealed significant left hemispheric sources of the MMN for each of the 4 deviant stimuli in superior–temporal, inferior–central, and inferior–frontal ROIs (F values > 4, P values < 0.05). A typical left hemispheric activation pattern is shown in Figure 2, with ROIs and their dynamics indicated. Whereas superior–temporal and inferior–central activation were also present in the right hemisphere for all deviants, reliable right inferior–frontal activation significantly above the level of the baseline was only seen in the noise and pseudoword conditions. Left hemispheric and superior–temporal sources underlying the MMN revealed differences between conditions in the timing of their activation and in their activation strength.

Activation Time Course Reflects Speech–Nonspeech Distinction

Analysis of MMN activation times in different ROIs indicated simultaneous superior–temporal activation in both hemispheres (95 ms on both sides, difference nonsignificant [ns]). Within the left hemisphere, activation times differed between ROIs, F2,30 = 4.57, P < 0.02. Inferior–frontal activation tended to follow superior–temporal activation; the inferior–central focus was sparked near simultaneously with the latter. Figures 3 and 4 indicate, and statistical analysis confirmed, that the activation time difference between superior–temporal and inferior–frontal areas depended on stimulus context. Interestingly, the delay of inferior–frontal relative to superior–temporal activation was significant for linguistic contexts constituted by nouns, verbs, and pseudowords, F1,15 = 11.81, P < 0.004, but there was no such difference in ROI activation times for the same critical stimulus presented in noise context (F < 1, Fig. 3).

Figure 3.

Timing of inferior–frontal (IF), inferior–central (IC), and superior–temporal (ST) source activations. Averages over all subjects (bars) and standard errors (lines) of the latencies of half maxima are shown relative to critical stimulus onset. Note the significant differences between ST and IF activation times in the speech conditions but not in the noise condition.

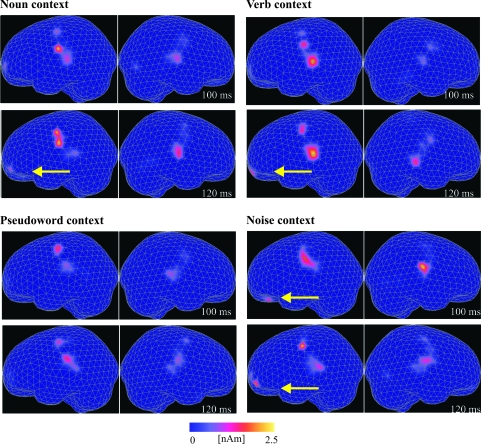

Figure 4.

Illustration of spatiotemporal patterns of MMN brain activity elicited when the critical stimulus was presented in the noun, verb, pseudowords, and noise contexts. Stimulus-specific grand averages of activation landscapes are illustrated for 2 time steps, 100 and 120 ms after critical stimulus onset. Source estimates for the left and right hemisphere are shown side by side for each time segment. Note yellow arrows indicating that superior–temporal and inferior–frontal sources in the left hemisphere emerged in sequence for words (100 vs 120 ms; see set of diagrams at the top) and simultaneously for noise (100 ms in both areas; diagrams on the lower right).

Critically, the difference in spatiotemporal patterns between speech and noise became manifest in a statistically significant interaction between the factors stimulus context (speech vs noise) and ROI (inferior–frontal vs superior–temporal), F1,15 = 5.37, P < 0.04. Half-maxima of superior–temporal cortex activation were measured at 97 ms for word and at 91 ms for pseudoword contexts (difference ns); those in inferior–frontal cortex followed after a 12 ms delay at 109 and 103 ms, respectively. The comparison of inferior–central to inferior–frontal activation tended to show the same differences, but in this case statistical tests did not reach significance (Fig. 3).

Local Source Strengths Reflect Linguistic Differences

The strength of early local cortical source activation distinguished between noun, verb, pseudoword, and noise contexts. An analysis of variance compared maximal source strengths in ROIs with significant activation across all stimulus context conditions (left inferior–frontal, inferior–central, and superior–temporal and right superior–temporal). There was a main effect of ROI, F3,45 = 9.14, P < 0.0001, and, critically, an interaction of the stimulus context and ROI factors, F9,135 = 4.27, P < 0.0001 (Figs 5 and 6).

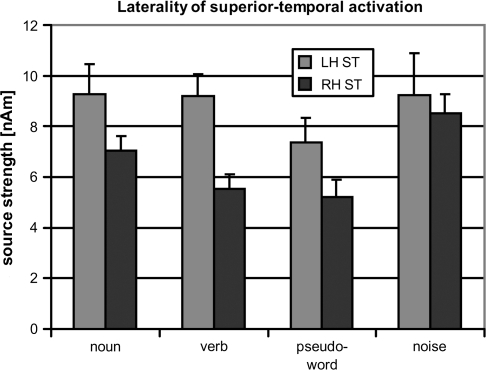

Figure 5.

Differential laterality of superior–temporal source strengths. Averages over all participants (bars) and standard errors (lines) of ROI-specific activation are given. Significant superior–temporal laterality emerged for speech but not for noise contexts. LH - left hemisphere, RH - right hemisphere, ST - superior-temporal ROI.

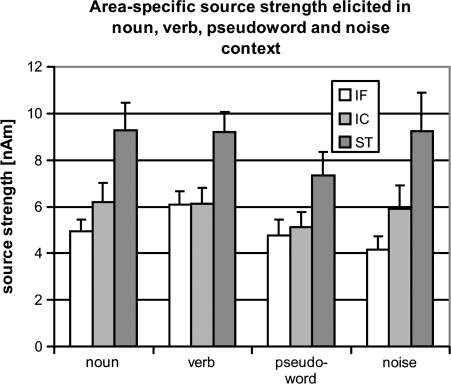

Figure 6.

Maximal source strengths elicited in the superior–temporal, inferior–central and inferior–frontal ROIs in the left hemisphere. Averages over all participants (bars) and standard errors (lines) of ROI-specific activation are shown. ST - superior-temporal, IC - inferior-central, IF - inferior-frontal.

Superior–temporal sources in the left and right hemispheres were compared between the 4 conditions, yielding main effects of laterality (stronger sources in the left hemisphere; F1,15 = 4.25, P < 0.05) and stimulus context (activation strong for noise and words but weak for pseudowords; F3,45 = 3.08, P < 0.04). The interaction of both factors was also significant, F3,45 = 4.56, P < 0.007, demonstrating that laterality changed with stimulus context (Fig. 5). Planned comparison tests documented significant laterality of superior–temporal ROI activation for linguistic contexts (noun, F1,15 = 4.40, P < 0.03; verb, F1,15 = 10.37, P < 0.003; pseudoword, F1,15 = 5.56, P < 0.02) but not for noise context.

The right hemispheric superior–temporal source was largest for noise context and significantly reduced relative to noise for all speech conditions (F values >8, P values <0.01). Noun contexts yielded stronger right superior–temporal activation than verb, F1,15 = 9.59, P < 0.004, and pseudoword contexts, F1,15 = 3.59, P < 0.04.

The left hemispheric superior–temporal source was significantly stronger for word than for pseudoword contexts, F1,15 = 3.23, P < 0.05 (cf. Pulvermüller et al. 2001), without a general significant difference between noise and speech stimuli. Left hemispheric inferior–central activation, which occurred near simultaneously with the left superior–temporal activation, showed a tendency toward the same effects, but comparisons did not reach significance.

Source dynamics in inferior–frontal cortex also showed differences between stimulus contexts, F3,45 = 2.66, P < 0.05. Figure 6 plots source strengths obtained in the 3 left hemispheric ROIs in all 4 conditions. Verb context led to strongest activation (6.1 nAm), followed by noun (4.9 nAm) and pseudoword (4.8 nAm) contexts; noise contexts elicited the smallest activation (4.2 nAm). As the critical stimulus in verb contexts elicited stronger activation than in noun context, F1,16 = 4.40, P < 0.03, it appears that particularly strong inferior–frontal activation observed in this study reflected the processing of grammatical features of inflectional affixes of verbs.

Discussion

This study investigated spatiotemporal dynamics of local cortical sources elicited by identical stimuli presented in different contexts, where they were perceived as noise, meaningless sound, and grammatical suffix of noun and verb, respectively. Main findings were the following.

Timing of local cortical source activations distinguished speech from noise: a significant delay between superior–temporal and inferior–frontal activation was found to be associated with speech processing (Fig. 3).

Superior-temporal activation indicated lexical context: magnitude of left superior–temporal sources reflected memory trace activation for spoken words. Meaningful noun and verb contexts led to stronger superior–temporal source activation than the meaningless pseudoword context (Fig. 5).

Local cortical activation reflected processing of specific inflectional affixes: left inferior–frontal source activation was stronger when verb affixes were processed compared with noun affix processing, and the reverse was found for right superior–temporal activation (Figs 5 and 6).

As the contribution of stimulus contexts to the brain response was removed by using control conditions, this study could reveal brain responses to identical critical stimuli placed in different contexts. Because physically identical stimuli elicited different patterns of magnetic brain activation, we conclude that it was the contextual bias of the cortical processes elicited by these critical stimuli that led to the differential cortical activation documented. We argue here that the contextual influences on brain activation are related to phonological and semantic levels. One may still argue that differential spectrotemporal similarity between context and critical stimuli could, in theory, lead to differential nonlinguistic early perceptual interactions, as known from streaming or masking (Fishman et al. 2001; Micheyl et al. 2003, 2007). However, we would like to draw attention to the meticulous matching of spectrotemporal features of standard stimuli, which, together with the fact that identical critical parts of deviant stimuli were used, makes it unlikely that the spectral differences between stimuli per se explain the present results. Such differences may predict an activation difference in superior–temporal cortex (possibly even lower structures), where early nonlinguistic perceptual processes are typically manifest, but cannot, in our view, easily explain the critical observations of the present study about differential laterality and well-timed activation of widespread cortical sources.

Limitations arising from the impossibility of guaranteeing exact acoustic and psycholinguistic matching of speech and noise stimuli can be overcome by 1) using identical stimuli perceived and processed differently in different contexts and 2) removing additive contributions of these contexts. As the investigation targeted identical stimuli, the present results allow for conclusions on critical perceptual and cognitive processes, independent of the physical features of the critical stimuli. On the other hand, the use of a small stimulus set may appear as a disadvantage of the present research strategy. Using few well-controlled stimuli to address general questions is, however, an established strategy in a range of research fields, especially in psychophysics and psychoacoustics (Carlyon 2004), from where the strategy has been imported to brain science. There are further arguments in favor of a single-item approach. To reveal the earliest brain responses reflecting higher cognitive processes, it is essential to minimize stimulus variance. Averaging over the brain correlates of variable speech stimuli, for example, will blur and possibly remove early focal and short-lived brain activity, thereby making it impossible to study precise early activation time courses (Pulvermüller and Shtyrov 2006). Furthermore, the inflectional system of most languages includes only a small number of inflectional affixes (English has 4 for verbs and 1 for nouns), and, as we demonstrate here, even items as similar as the final “s” in “sees” and “seas” may have different brain correlates. Therefore, some areas of the neuroscience of language require a single-item approach.

Timing of Local Cortical Sources Tells Speech from Noise

A significant interaction demonstrated that source activation latencies, and therefore spatiotemporal patterns, differed between noise, pseudoword, and word contexts. There was no significant between-area difference in activation latencies for noise contexts. In contrast, all speech stimuli elicited a significantly earlier superior–temporal activation compared with their inferior–frontal activation, the relevant delay being 12 ms.
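The between-area latency comparison described above can be illustrated with a minimal sketch. It assumes that the latency of a local source is taken as the time of its largest absolute amplitude; this criterion, the function names, the 4 ms sampling interval, and the sample values are hypothetical and are not taken from the analysis reported here.

```python
def peak_latency_ms(time_course, sample_interval_ms, onset_ms=0.0):
    """Return the latency (ms) of the largest absolute value in a source time course.

    Assumption: latency is defined by the peak; other criteria are possible.
    """
    if not time_course:
        raise ValueError("time course is empty")
    peak_index = max(range(len(time_course)), key=lambda i: abs(time_course[i]))
    return onset_ms + peak_index * sample_interval_ms


def interarea_delay_ms(temporal_course, frontal_course, sample_interval_ms):
    """Frontal peak latency minus temporal peak latency.

    A positive value means the superior-temporal source peaks first.
    """
    return (peak_latency_ms(frontal_course, sample_interval_ms)
            - peak_latency_ms(temporal_course, sample_interval_ms))


# Invented source strengths sampled every 4 ms (illustration only, not data).
superior_temporal = [0.0, 0.2, 0.9, 1.6, 1.1, 0.5, 0.2, 0.1]
inferior_frontal = [0.0, 0.1, 0.3, 0.6, 0.9, 1.3, 1.0, 0.7]

delay = interarea_delay_ms(superior_temporal, inferior_frontal, sample_interval_ms=4.0)
print(f"inferior-frontal peak lags superior-temporal peak by {delay:.0f} ms")
```

In this toy case the two peaks fall at different samples, so the delay is nonzero; two time courses peaking at the same sample would give a delay of zero, as in the pattern described for noise contexts.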

Earlier MEG work had reported activation spreading from temporal to frontal cortex in response to tone and word stimuli (Dale et al. 2000; Rinne et al. 2000; Marinkovic et al. 2003; Pulvermüller et al. 2003; Hauk and Pulvermüller 2004; Dhond et al. 2007). As we show here, the minimal but well-defined delay between superior–temporal and inferior–frontal sources in the left hemisphere may index the signature of distributed memory circuits for speech. In line with the spatial aspect of the present findings, earlier work has suggested that frontotemporal circuits are involved in speech processing in humans (e.g., Scott and Johnsrude 2003; Pulvermüller 2005; Uppenkamp et al. 2006) and that similar circuits can play a role in animal communication (Rizzolatti and Craighero 2004; Kanwal and Rauschecker 2007). Our spatiotemporal results suggest that these frontotemporal circuits can be conceptualized as neuronal assemblies whose neuronal elements are held together by long-distance links ensuring precisely timed functional interaction.

Precisely timed activation patterns, or “neuronal avalanches” (Plenz and Thiagarajan 2007), are known from multiple-unit recordings in animals and are commonly attributed to neuronal circuits called synfire chains (Abeles 1982). Synfire chains consist of component neuron sets linked to each other in a stepwise manner, thus generating a specific spatiotemporal pattern of neuronal activity when the chain ignites. Neurophysiological evidence from the animal literature supports the existence of synfire chains in local cortical areas and also demonstrates that their activation correlates with specific behaviors (Abeles et al. 1993; Vaadia et al. 1995; Plenz and Thiagarajan 2007). The specific spatiotemporal patterns of activity revealed here by MEG source analysis are best explained by the existence of interarea synfire chains spread out over superior–temporal and inferior–frontal cortex and specifically processing speech stimuli. We therefore suggest that the stimulus-elicited activation of long-term memory circuits for spoken words and morphemes contributes to the emergence of the precisely timed pattern of cortical activation spreading between cortical areas.
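The stepwise linkage of neuron sets can be made concrete with a toy sketch. It is a deliberately simplified illustration and not a model fitted to the present data: the all-to-all links between successive pools, the all-or-none threshold rule, the function name `run_chain`, and every parameter value are assumptions introduced here.

```python
def run_chain(num_pools, pool_size, threshold, initially_active, step_delay_ms):
    """Propagate activity through a feed-forward chain of neuron pools.

    Assumption: every neuron in pool k+1 receives input from every neuron in
    pool k and fires one step later if at least `threshold` inputs were active.
    Returns a list of (pool_index, time_ms, active_count) for pools that fired.
    """
    events = []
    active = min(initially_active, pool_size)
    for pool in range(num_pools):
        if pool > 0:
            # All-or-none transmission: the whole next pool fires, or none of it.
            active = pool_size if active >= threshold else 0
        if active == 0:
            break  # activity died out; later pools stay silent
        events.append((pool, pool * step_delay_ms, active))
    return events


# Strong input ignites the chain and yields a fixed spatiotemporal pattern.
ignited = run_chain(num_pools=4, pool_size=5, threshold=3,
                    initially_active=4, step_delay_ms=5.0)
# Weak input activates part of the first pool only and does not propagate.
fizzled = run_chain(num_pools=4, pool_size=5, threshold=3,
                    initially_active=2, step_delay_ms=5.0)

for pool, time_ms, count in ignited:
    print(f"pool {pool} fires at {time_ms:.0f} ms ({count} neurons)")
print(f"weak input reached {len(fizzled)} pool(s)")
```

Under these assumptions, sufficiently strong input reproduces the same order and timing of pool activations on every run, whereas subthreshold input fails to spread beyond the first pool.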

Whereas pseudowords may partly activate memory circuits for words and morphemes (Wennekers et al. 2006), as reflected by reduced activation but a still measurable time delay between superior–temporal and inferior–frontal sources, unfamiliar noise sounds may not activate corresponding memory traces. Their processing is best described in terms of acoustic processes followed by attention switching (Rinne et al. 2000, 2005). The brain signatures for such acoustic processing and attention switching include a symmetric bilateral response in superior–temporal cortex (Näätänen et al. 2001; Patterson et al. 2002). The bilateral nature of the frontal brain response to the brief spectrally rich critical stimuli may reflect aspects of their spectrotemporal characteristics (Zatorre et al. 2002).

Left Superior–Temporal Activation Indicates Lexical Context

Memory circuit activation became manifest both in the fine-grained timing of local sources and in their strength. Whereas strong symmetric superior–temporal activation was elicited by critical stimuli in noise contexts, speech contexts led to left-lateralized superior–temporal activity. Therefore, it appears that, similar to the timing of local cortical sources, the laterality of superior–temporal activation reflects the speech/noise contrast. However, right frontal activation tended to indicate a different distinction, as it was still significant for noise and pseudoword contexts but not significant for word contexts (cf. Shtyrov et al. 2005).

Local source strengths distinguished between speech stimuli. Left hemispheric superior–temporal activation in word contexts was stronger than that in pseudoword contexts. This lexical enhancement replicates earlier results (Korpilahti et al. 2001; Pulvermüller et al. 2001; Shtyrov and Pulvermüller 2002; Endrass et al. 2004; Pettigrew et al. 2004; Sittiprapaporn et al. 2004; Shtyrov et al. 2007). The right superior–temporal source reflected the speech–noise distinction, as it was stronger for noise than for all speech contexts examined.

Local Signatures of Inflectional Processing: Noun vs Verb Affixes

An extensive debate in cognitive neuroscience addresses the question of whether nouns and verbs have different brain correlates. In spite of positive results, earlier work addressing this question is still under discussion (Miceli et al. 1984; Damasio and Tranel 1993; Daniele et al. 1994; Pulvermüller et al. 1999; Shapiro et al. 2005). Any set of nouns and verbs is characterized, apart from membership in different lexical and syntactic categories, by semantic features that may underlie differences in the brain activity these items elicit. Even if pseudowords are placed in verb and noun contexts, such as “to wug” and “the wug” (Shapiro and Caramazza 2003), these contexts constitute a bias toward action or object reading, thus implying semantic differences. A potentially fruitful strategy is offered by the study of inflectional affixes of nouns and verbs, as these items would be linked to noun- and verb-related grammatical information without referring to objects or actions. Also, the inflectional system appears to be a rich target of neuroscience research (Marslen-Wilson and Tyler 2007). The present paradigm, where the contribution of processes elicited by noun and verb stems is removed from the brain response, offers a unique opportunity to investigate the brain correlates of grammatical information linked to noun and verb affixes. Surprisingly, we found reliable differences in brain activation between noun and verb affixes. The noun affix led to stronger excitation in right superior–temporal cortex than the verb affix (cf. Shapiro et al. 2005), whereas the latter activated left inferior–frontal cortex more strongly than the former (cf. Shapiro et al. 2005, 2006). This is consistent with psycholinguistic theories postulating distinct brain mechanisms for grammatical information related to nouns and verbs (cf. Shapiro and Caramazza 2003a, 2003b).
Critically, our present results suggest highly specific cortical activation patterns and possibly underlying neural circuits for inflectional affixes of nouns and verbs. It appears that these affixes, respectively, spark synfire chains with similar temporal structure but differential interactions between local neuronal assemblies in inferior–frontal and superior–temporal areas.

Summary

Spatiotemporal patterns and local sources extracted from MEG recordings can identify cortical memory circuits of different types. The present results indicate that the speech–noise contrast and a range of fine-grained psycholinguistic differences between speech–language materials are reflected by spatiotemporal patterns of cortical activity, especially by the timing of local cortical sources and by their magnitude. We also report differences in local source strengths in inferior–frontal and superior–temporal lobe that may index specific morphological processes elicited by noun and verb inflection. Mechanistically, the cortical circuits underlying these specific rapid spatiotemporal patterns revealed by MEG appear as variants of the synfire chains documented by intracortical recordings. As spatiotemporal patterns and local sources differed already 100 ms after stimulation, we note that functional specificity of perceptual and cognitive brain processes arises surprisingly early.

Funding

Medical Research Council (U1055.04.003.00001.01, U1055.04.003.00003.01); European Community under the “Information Society Technologies” and the “New and Emerging Science and Technology” Programmes (IST-2001-35282, NESTCOM).

Acknowledgments

We are grateful to Risto Ilmoniemi, Risto Näätänen, Ian Nimmo-Smith, Juha Montonen, Elina Pihko, and Johanna Salonen for their contributions to this work at different stages.

Conflict of Interest: None declared.

References

- Abeles M. Local cortical circuits. An electrophysiological study. Berlin (Germany): Springer; 1982.

- Abeles M. Corticonics—neural circuits of the cerebral cortex. Cambridge (MA): Cambridge University Press; 1991.

- Abeles M, Bergman H, Margalit E, Vaadia E. Spatiotemporal firing patterns in the frontal cortex of behaving monkeys. J Neurophysiol. 1993;70:1629–1638. doi: 10.1152/jn.1993.70.4.1629.

- Ahonen AI, Hämäläinen MS, Ilmoniemi RJ, Kajola MJ, Knuutila JE, Simola JT, Vilkman VA. Sampling theory for neuromagnetic detector arrays. IEEE Trans Biomed Eng. 1993;40:859–869. doi: 10.1109/10.245606.

- Auranen T, Nummenmaa A, Hamalainen MS, Jaaskelainen IP, Lampinen J, Vehtari A, Sams M. Bayesian analysis of the neuromagnetic inverse problem with l(p)-norm priors. Neuroimage. 2005;26:870–884. doi: 10.1016/j.neuroimage.2005.02.046.

- Bak TH, Yancopoulou D, Nestor PJ, Xuereb JH, Spillantini MG, Pulvermüller F, Hodges JR. Clinical, imaging and pathological correlates of a hereditary deficit in verb and action processing. Brain. 2006;129:321–332. doi: 10.1093/brain/awh701.

- Barsalou LW. Perceptual symbol systems. Behav Brain Sci. 1999;22:577–609; discussion 610–660. doi: 10.1017/s0140525x99002149.

- Bentin S, Mouchetant-Rostaing Y, Giard MH, Echallier JF, Pernier J. ERP manifestations of processing printed words at different psycholinguistic levels: time course and scalp distribution. J Cogn Neurosci. 1999;11:235–260. doi: 10.1162/089892999563373.

- Carlyon RP. How the brain separates sounds. Trends Cogn Sci. 2004;8:465–471. doi: 10.1016/j.tics.2004.08.008.

- Compton PE, Grossenbacher P, Posner MI, Tucker DM. A cognitive-anatomical approach to attention in lexical access. J Cogn Neurosci. 1991;3:304–312. doi: 10.1162/jocn.1991.3.4.304.

- Dale AM, Liu AK, Fischl BR, Buckner RL, Belliveau JW, Lewine JD, Halgren E. Dynamic statistical parametric mapping: combining fMRI and MEG for high-resolution imaging of cortical activity. Neuron. 2000;26:55–67. doi: 10.1016/s0896-6273(00)81138-1.

- Damasio AR, Tranel D. Nouns and verbs are retrieved with differently distributed neural systems. Proc Natl Acad Sci U S A. 1993;90:4957–4960. doi: 10.1073/pnas.90.11.4957.

- Daniele A, Giustolisi L, Silveri MC, Colosimo C, Gainotti G. Evidence for a possible neuroanatomical basis for lexical processing of nouns and verbs. Neuropsychologia. 1994;32:1325–1341. doi: 10.1016/0028-3932(94)00066-2.

- Dhond RP, Witzel T, Dale AM, Halgren E. Spatiotemporal cortical dynamics underlying abstract and concrete word reading. Hum Brain Mapp. 2007;28:355–362. doi: 10.1002/hbm.20282.

- Endrass T, Mohr B, Pulvermüller F. Enhanced mismatch negativity brain response after binaural word presentation. Eur J Neurosci. 2004;19:1653–1660. doi: 10.1111/j.1460-9568.2004.03247.x.

- Feldman J. From molecule to metaphor: a neural theory of language. Cambridge (MA): MIT Press; 2006.

- Fishman YI, Reser DH, Arezzo JC, Steinschneider M. Neural correlates of auditory stream segregation in primary auditory cortex of the awake monkey. Hear Res. 2001;151:167–187. doi: 10.1016/s0378-5955(00)00224-0.

- Frangos J, Ritter W, Friedman D. Brain potentials to sexually suggestive whistles show meaning modulates the mismatch negativity. Neuroreport. 2005;16:1313–1317. doi: 10.1097/01.wnr.0000175619.23807.b7.

- Fuchs M, Wagner M, Kohler T, Wischmann HA. Linear and nonlinear current density reconstructions. J Clin Neurophysiol. 1999;16:267–295. doi: 10.1097/00004691-199905000-00006.

- Fuster JM. Cortex and mind: unifying cognition. New York: Oxford University Press; 2003.

- Fuster JM, Bodner M, Kroger JK. Cross-modal and cross-temporal association in neurons of frontal cortex. Nature. 2000;405:347–351. doi: 10.1038/35012613.

- Hämäläinen MS, Hari R, Ilmoniemi RJ, Knuutila J, Lounasmaa OV. Magnetoencephalography—theory, instrumentation, and applications to noninvasive studies of the working human brain. Rev Mod Phys. 1993;65:413–497.

- Hämäläinen MS, Ilmoniemi RJ. Interpreting magnetic fields of the brain: minimum norm estimates. Med Biol Eng Comput. 1994;32:35–42. doi: 10.1007/BF02512476.

- Hauk O. Keep it simple: a case for using classical minimum norm estimation in the analysis of EEG and MEG data. Neuroimage. 2004;21:1612–1621. doi: 10.1016/j.neuroimage.2003.12.018.

- Hauk O, Pulvermüller F. Neurophysiological distinction of action words in the fronto-central cortex. Hum Brain Mapp. 2004;21:191–201. doi: 10.1002/hbm.10157.

- Hauk O, Shtyrov Y, Pulvermüller F. The sound of actions as reflected by mismatch negativity: rapid activation of cortical sensory-motor networks by sounds associated with finger and tongue movements. Eur J Neurosci. 2006;23:811–821. doi: 10.1111/j.1460-9568.2006.04586.x.

- Hillis AE, Caramazza A. Representation of grammatical categories of words in the brain. J Cogn Neurosci. 1995;7:396–407. doi: 10.1162/jocn.1995.7.3.396.

- Holcomb PJ, Neville HJ. Auditory and visual semantic priming in lexical decision: a comparison using event-related brain potentials. Lang Cogn Process. 1990;5:281–312.

- Huang MX, Dale AM, Song T, Halgren E, Harrington DL, Podgorny I, Canive JM, Lewis S, Lee RR. Vector-based spatial-temporal minimum L1-norm solution for MEG. Neuroimage. 2006;31:1025–1037. doi: 10.1016/j.neuroimage.2006.01.029.

- Ikegaya Y, Aaron G, Cossart R, Aronov D, Lampl I, Ferster D, Yuste R. Synfire chains and cortical songs: temporal modules of cortical activity. Science. 2004;304:559–564. doi: 10.1126/science.1093173.

- Ilmoniemi RJ. Models of source currents in the brain. Brain Topogr. 1993;5:331–336. doi: 10.1007/BF01128686.

- Kanwal JS, Rauschecker JP. Auditory cortex of bats and primates: managing species-specific calls for social communication. Front Biosci. 2007;12:4621–4640. doi: 10.2741/2413.

- Kiefer M, Sim EJ, Liebich S, Hauk O, Tanaka J. Experience-dependent plasticity of conceptual representations in human sensory-motor areas. J Cogn Neurosci. 2007;19:525–542. doi: 10.1162/jocn.2007.19.3.525.

- Korpilahti P, Krause CM, Holopainen I, Lang AH. Early and late mismatch negativity elicited by words and speech-like stimuli in children. Brain Lang. 2001;76:332–339. doi: 10.1006/brln.2000.2426.

- Liberman AM. Speech: a special code. 1996.

- Marinkovic K, Dhond RP, Dale AM, Glessner M, Carr V, Halgren E. Spatiotemporal dynamics of modality-specific and supramodal word processing. Neuron. 2003;38:487–497. doi: 10.1016/s0896-6273(03)00197-1.

- Marslen-Wilson WD, Tyler LK. Morphology, language and the brain: the decompositional substrate for language comprehension. Philos Trans R Soc Lond B Biol Sci. 2007;362:823–836. doi: 10.1098/rstb.2007.2091.

- Miceli G, Silveri M, Villa G, Caramazza A. On the basis of agrammatics' difficulty in producing main verbs. Cortex. 1984;20:207–220. doi: 10.1016/s0010-9452(84)80038-6.

- Micheyl C, Carlyon RP, Gutschalk A, Melcher JR, Oxenham AJ, Rauschecker JP, Tian B, Courtenay Wilson E. The role of auditory cortex in the formation of auditory streams. Hear Res. 2007;229:116–131. doi: 10.1016/j.heares.2007.01.007.

- Micheyl C, Carlyon RP, Shtyrov Y, Hauk O, Dodson T, Pulvermüller F. The neurophysiological basis of the auditory continuity illusion: a mismatch negativity study. J Cogn Neurosci. 2003;15:747–758. doi: 10.1162/089892903322307456.

- Näätänen R. The mismatch negativity: a powerful tool for cognitive neuroscience. Ear Hear. 1995;16:6–18.

- Näätänen R, Alho K. Mismatch negativity–the measure for central sound representation accuracy. Audiol Neurootol. 1997;2:341–353. doi: 10.1159/000259255.

- Näätänen R, Lehtokoski A, Lennes M, Cheour M, Huotilainen M, Iivonen A, Valnio A, Alku P, Ilmoniemi RJ, Luuk A, et al. Language-specific phoneme representations revealed by electric and magnetic brain responses. Nature. 1997;385:432–434. doi: 10.1038/385432a0.

- Näätänen R, Tervaniemi M, Sussman E, Paavilainen P, Winkler I. ‘Primitive intelligence’ in the auditory cortex. Trends Neurosci. 2001;24:283–288. doi: 10.1016/s0166-2236(00)01790-2.

- Oldfield RC. The assessment and analysis of handedness: the Edinburgh Inventory. Neuropsychologia. 1971;9:97–113. doi: 10.1016/0028-3932(71)90067-4.

- Osipova D, Rantanen K, Ahveninen J, Ylikoski R, Happola O, Strandberg T, Pekkonen E. Source estimation of spontaneous MEG oscillations in mild cognitive impairment. Neurosci Lett. 2006;405:57–61. doi: 10.1016/j.neulet.2006.06.045.

- Palva S, Palva JM, Shtyrov Y, Kujala T, Ilmoniemi RJ, Kaila K, Naatanen R. Distinct gamma-band evoked responses to speech and non-speech sounds in humans. J Neurosci. 2002;22:RC211. doi: 10.1523/JNEUROSCI.22-04-j0003.2002.

- Patterson RD, Uppenkamp S, Johnsrude IS, Griffiths TD. The processing of temporal pitch and melody information in auditory cortex. Neuron. 2002;36:767–776. doi: 10.1016/s0896-6273(02)01060-7.

- Pettigrew CM, Murdoch BE, Ponton CW, Finnigan S, Alku P, Kei J, Sockalingam R, Chenery HJ. Automatic auditory processing of English words as indexed by the mismatch negativity, using a multiple deviant paradigm. Ear Hear. 2004;25:284–301. doi: 10.1097/01.aud.0000130800.88987.03.

- Plenz D, Thiagarajan TC. The organizing principles of neuronal avalanches: cell assemblies in the cortex? Trends Neurosci. 2007;30:101–110. doi: 10.1016/j.tins.2007.01.005.

- Prut Y, Vaadia E, Bergman H, Haalman I, Slovin H, Abeles M. Spatiotemporal structure of cortical activity: properties and behavioral relevance. J Neurophysiol. 1998;79:2857–2874. doi: 10.1152/jn.1998.79.6.2857.

- Pulvermüller F. Words in the brain's language. Behav Brain Sci. 1999;22:253–336.

- Pulvermüller F. Brain mechanisms linking language and action. Nat Rev Neurosci. 2005;6:576–582. doi: 10.1038/nrn1706.

- Pulvermüller F, Kujala T, Shtyrov Y, Simola J, Tiitinen H, Alku P, Alho K, Martinkauppi S, Ilmoniemi RJ, Näätänen R. Memory traces for words as revealed by the mismatch negativity. Neuroimage. 2001;14:607–616. doi: 10.1006/nimg.2001.0864.

- Pulvermüller F, Lutzenberger W, Preissl H. Nouns and verbs in the intact brain: evidence from event-related potentials and high-frequency cortical responses. Cereb Cortex. 1999;9:498–508. doi: 10.1093/cercor/9.5.497.

- Pulvermüller F, Shtyrov Y. Language outside the focus of attention: the mismatch negativity as a tool for studying higher cognitive processes. Prog Neurobiol. 2006;79:49–71. doi: 10.1016/j.pneurobio.2006.04.004.

- Pulvermüller F, Shtyrov Y, Ilmoniemi RJ. Spatio-temporal patterns of neural language processing: an MEG study using minimum-norm current estimates. Neuroimage. 2003;20:1020–1025. doi: 10.1016/S1053-8119(03)00356-2.

- Rinne T, Alho K, Ilmoniemi RJ, Virtanen J, Naatanen R. Separate time behaviors of the temporal and frontal mismatch negativity sources. Neuroimage. 2000;12:14–19. doi: 10.1006/nimg.2000.0591.

- Rinne T, Degerman A, Alho K. Superior temporal and inferior frontal cortices are activated by infrequent sound duration decrements: an fMRI study. Neuroimage. 2005;26:66–72. doi: 10.1016/j.neuroimage.2005.01.017.

- Rizzolatti G, Craighero L. The mirror-neuron system. Annu Rev Neurosci. 2004;27:169–192. doi: 10.1146/annurev.neuro.27.070203.144230.

- Rizzolatti G, Fogassi L, Gallese V. Neurophysiological mechanisms underlying the understanding and imitation of action. Nat Rev Neurosci. 2001;2:661–670. doi: 10.1038/35090060.

- Scott SK, Johnsrude IS. The neuroanatomical and functional organization of speech perception. Trends Neurosci. 2003;26:100–107. doi: 10.1016/S0166-2236(02)00037-1.

- Shapiro K, Caramazza A. Grammatical processing of nouns and verbs in left frontal cortex? Neuropsychologia. 2003a;41:1189–1198. doi: 10.1016/s0028-3932(03)00037-x.

- Shapiro K, Caramazza A. The representation of grammatical categories in the brain. Trends Cogn Sci. 2003b;7:201–206. doi: 10.1016/s1364-6613(03)00060-3.

- Shapiro KA, Moo LR, Caramazza A. Cortical signatures of noun and verb production. Proc Natl Acad Sci U S A. 2006;103:1644–1649. doi: 10.1073/pnas.0504142103.

- Shapiro KA, Mottaghy FM, Schiller NO, Poeppel TD, Fluss MO, Muller HW, Caramazza A, Krause BJ. Dissociating neural correlates for nouns and verbs. Neuroimage. 2005;24:1058–1067. doi: 10.1016/j.neuroimage.2004.10.015.

- Shtyrov Y, Osswald K, Pulvermüller F. Memory traces for spoken words in the brain as revealed by the haemodynamic correlate of the mismatch negativity (MMN). Cereb Cortex. 2008;18(1):29–37. doi: 10.1093/cercor/bhm028.

- Shtyrov Y, Pihko E, Pulvermüller F. Determinants of dominance: is language laterality explained by physical or linguistic features of speech? Neuroimage. 2005;27:37–47. doi: 10.1016/j.neuroimage.2005.02.003.

- Shtyrov Y, Pulvermüller F. Memory traces for inflectional affixes as shown by the mismatch negativity. Eur J Neurosci. 2002;15:1085–1091. doi: 10.1046/j.1460-9568.2002.01941.x.

- Sittiprapaporn W, Chindaduangratn C, Kotchabhakdi N. Long-term memory traces for familiar spoken words in tonal languages as revealed by the Mismatch Negativity. Songklanakarin J Sci Technol. 2004;26:1–8.

- Stenbacka L, Vanni S, Uutela K, Hari R. Comparison of minimum current estimate and dipole modeling in the analysis of simulated activity in the human visual cortices. Neuroimage. 2002;16:936–943. doi: 10.1006/nimg.2002.1151.

- Uppenkamp S, Johnsrude IS, Norris D, Marslen-Wilson W, Patterson RD. Locating the initial stages of speech-sound processing in human temporal cortex. Neuroimage. 2006;31:1284–1296. doi: 10.1016/j.neuroimage.2006.01.004.

- Uusitalo MA, Ilmoniemi RJ. Signal-space projection method for separating MEG or EEG into components. Med Biol Eng Comput. 1997;35:135–140. doi: 10.1007/BF02534144.

- Uutela K, Hämäläinen M, Somersalo E. Visualization of magnetoencephalographic data using minimum current estimates. Neuroimage. 1999;10:173–180. doi: 10.1006/nimg.1999.0454.

- Vaadia E, Haalman I, Abeles M, Bergman H, Prut Y, Slovin H, Aertsen A. Dynamics of neuronal interactions in monkey cortex in relation to behavioural events. Nature. 1995;373:515–518. doi: 10.1038/373515a0.

- von Helmholtz H. Über einige Gesetze der Vertheilung elektrischer Ströme in körperlichen Leitern, mit Anwendung auf die thierisch-elektrischen Versuche. Ann Phys Chem. 1853;89:211–233, 353–377.

- Wennekers T, Garagnani M, Pulvermüller F. Language models based on Hebbian cell assemblies. J Physiol Paris. 2006;100:16–30. doi: 10.1016/j.jphysparis.2006.09.007.

- Zatorre RJ, Belin P, Penhune VB. Structure and function of auditory cortex: music and speech. Trends Cogn Sci. 2002;6:37–46. doi: 10.1016/s1364-6613(00)01816-7.