Abstract

BACKGROUND

Residents demonstrate scholarly activity by presenting posters at academic meetings. Although recommendations from national organizations are available, there is no evidence identifying which poster components are most important.

OBJECTIVE

To develop and test an evaluation tool to measure the quality of case report posters and identify the specific components most in need of improvement.

DESIGN

Faculty evaluators reviewed case report posters and provided on-site feedback to presenters at the poster sessions of four annual academic general internal medicine meetings. A newly developed ten-item evaluation form measured poster quality in three domains: content, conclusions, and format (5-point Likert scale; 1 = lowest, 5 = highest).

Main outcome measure(s): Evaluation tool performance (Cronbach’s alpha and inter-rater reliability), overall poster scores, differences in scores across meetings and evaluators, and the specific poster components most in need of improvement.

RESULTS

Forty-five evaluators from 20 medical institutions reviewed 347 posters. Cronbach’s alpha for the evaluation form was 0.84, and inter-rater reliability (Spearman’s rho) was 0.49 (P < 0.001). The median score was 4.1 (Q1–Q3, 3.7–4.6; Q1 = 25th percentile, Q3 = 75th percentile). The median score was higher at the national meeting than at the regional meetings (4.4 vs. 4.0, P < 0.001). Scores did not differ by evaluator characteristics. The areas most needing improvement were clearly stating learning objectives, tying conclusions to learning objectives, and using an appropriate amount of words.

CONCLUSIONS

Our evaluation tool provides empirical data to guide trainees as they prepare posters for presentation, which may improve poster quality and enhance their scholarly productivity.

Key Words: case report, poster, evaluation tool, academic meetings

BACKGROUND

The Accreditation Council for Graduate Medical Education (ACGME) requires residents to participate in scholarly activities and endorses case reports as one such venue.1,2 Case reports, or clinical vignettes, are drawn from everyday patient encounters and require neither financial resources nor formal research training; they are therefore well suited for resident scholarly activity.3 Case reports add value to medical education by reflecting contemporary clinical practice and real-time medical decision making.4,5 Many journals publish case reports,4,6,7 and a case report may be one of the first manuscripts a resident writes4,8 or presents in poster format.9

Trainees and junior faculty can begin their academic careers with a poster presentation.8,9 In addition to showcasing scholarly work, poster sessions give trainees a place to network, find mentors, and interact with potential future employers. Poster sessions add to meeting content by disseminating early research findings,10 sharing interesting clinical cases, and fostering collaboration within the scientific community.10–12 Across specialties, many poster presentations are subsequently published as full manuscripts.10–17

When preparing a poster, trainees find guidance within their institution, from national organizations,18–20 in published recommendations,12,21–23 or on the Internet. Although the ACGME requires institutions to support trainees’ scholarly activities,2 not all institutions are able to provide adequate mentoring.9 Recommendations from national organizations and published references detail multiple components of a standard poster and are often presented as “how-to tips” or general guides,20–24 but no evidence currently supports these recommendations, and no published data identify which components of a poster are most important. As a result, the quality of posters presented at academic medical meetings is highly variable.

We implemented a mentorship program designed to give trainees feedback on their case report posters at academic meetings. Within this program, our study aims were to (1) develop and test an evaluation tool to measure the quality of the posters and (2) identify the specific poster components most in need of improvement.

METHODS

Evaluation Tool

We developed a one-page standardized form to evaluate the poster components. Two authors (LLW, AP) developed the initial form, incorporating elements from the peer-review submission process of the Society of General Internal Medicine (SGIM) vignette committee and the American College of Physicians’ poster judging criteria.18 Members of the 2006 Southern Regional SGIM planning committee refined the form through an iterative process. The final version had ten items, each rated on a five-point Likert scale (1 = lowest rating, 5 = highest rating) and grouped into three domains: (1) content (clear learning objectives, clear case description, relevant content area); (2) conclusions (tied to the objectives, supported by the content, increased the understanding of a disease process or improved diagnosis/treatment); and (3) format (clarity, appropriate amount of words, effective use of color, effective use of pictures and graphics) (Appendix). We calculated each poster’s score as the mean of its ten item ratings.
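A poster’s overall score, as described above, is the unweighted mean of its ten item ratings. The Python sketch below shows that arithmetic; the short item keys are hypothetical labels for the Appendix items (not names used by the authors), and the range check simply enforces the 1–5 Likert scale.

```python
# Hypothetical short labels for the ten Appendix items, grouped by domain.
ITEMS = (
    # Content
    "objectives_clear", "content_relevant", "case_description_clear",
    # Conclusions
    "tied_to_objectives", "supported_by_content", "improves_understanding",
    # Presentation format
    "key_points_clear", "amount_of_words", "use_of_color", "use_of_graphics",
)


def poster_score(ratings: dict) -> float:
    """Return the unweighted mean of the ten 1-5 Likert ratings for one poster."""
    missing = [item for item in ITEMS if item not in ratings]
    if missing:
        raise ValueError(f"missing ratings for: {missing}")
    for item in ITEMS:
        if not 1 <= ratings[item] <= 5:
            raise ValueError(f"{item} must be rated 1-5, got {ratings[item]}")
    return sum(ratings[item] for item in ITEMS) / len(ITEMS)


# Example: a poster rated 4 on every item except objectives and word count (3 each).
example = {item: 4 for item in ITEMS}
example["objectives_clear"] = 3
example["amount_of_words"] = 3
print(poster_score(example))  # 3.8
```

Because every item carries equal weight, each one-point drop on any single item lowers the overall score by 0.1.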

Evaluation and Feedback Process

We sought to provide feedback on all posters presented at four case report poster sessions. The sessions were held at academic general internal medicine annual meetings: three regional meetings (Southern Society of General Internal Medicine, 2006–2008) and one national meeting (Society of General Internal Medicine, 2007). Each session lasted 90 minutes. Case reports were selected for poster presentation according to each meeting’s requirements and peer-review process.

Faculty evaluators were clinician educators from academic medical centers. We invited a convenience sample of evaluators from the meetings’ planning committees and from the reviewers in the initial peer-review process. Each evaluator reviewed 5–10 posters during the poster session and gave the presenter immediate feedback, both verbally (if the presenter was available) and in writing via the completed feedback form. At the end of each poster session, we collected a copy of each completed form without poster identification information. We also collected anonymous evaluator characteristics: faculty rank, primary academic role, and number of years on faculty. Evaluators received no specific training, and posters were assigned randomly. The University of Alabama at Birmingham Institutional Review Board approved the study.

ANALYSIS

We used standard descriptive statistics and calculated Cronbach’s alpha to determine the internal consistency of the evaluation tool. We assessed inter-rater reliability among faculty evaluators with Spearman’s rho, using 46 posters from one meeting (Southern Society of General Internal Medicine, 2008). We used the Kruskal-Wallis test to compare ratings by evaluator characteristics (faculty rank, primary academic role, years on faculty) and the Mann-Whitney test to compare ratings by meeting type (regional or national). We considered P < 0.05 statistically significant.
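Cronbach’s alpha compares the sum of the individual item variances with the variance of the total score: alpha = k/(k − 1) × (1 − sum of item variances / variance of total scores), where k is the number of items (ten on this form). The Python sketch below computes it from a posters × items matrix using only the standard library; it illustrates the formula and is not the authors’ analysis code, and the example ratings are invented.

```python
from statistics import variance


def cronbach_alpha(scores: list) -> float:
    """Cronbach's alpha for a matrix with one row per poster and one column per item."""
    if len(scores) < 2:
        raise ValueError("need ratings for at least two posters")
    k = len(scores[0])
    if k < 2 or any(len(row) != k for row in scores):
        raise ValueError("need at least two items, rated for every poster")
    # Sample variance of each item (column) across posters.
    item_vars = [variance([row[j] for row in scores]) for j in range(k)]
    # Sample variance of each poster's total (row sum) score.
    total_var = variance([sum(row) for row in scores])
    if total_var == 0:
        raise ValueError("total scores do not vary; alpha is undefined")
    return k / (k - 1) * (1 - sum(item_vars) / total_var)


# Invented ratings: four posters scored on three items.
ratings = [
    [4, 5, 4],
    [3, 3, 2],
    [5, 5, 5],
    [2, 3, 3],
]
print(round(cronbach_alpha(ratings), 3))  # 0.947
```

Using the sample variance (n − 1 denominator) for both the item variances and the total variance leaves their ratio unchanged, so the population-variance convention gives the same alpha.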

RESULTS

A total of 45 evaluators representing 20 medical institutions (United States and Canada) completed reviews of 347 of 432 scheduled posters (80.3%) at four meetings (Southern SGIM 2006 = 63, 2007 = 84, 2008 = 123; National SGIM 2007 = 77); unreviewed posters reflected lack of evaluator time or poster absence. The 432 scheduled posters came from 70 institutions in 24 states and one province in two countries (United States and Canada).

Evaluation Tool: Overall Scores and Tool Performance

The Cronbach’s alpha for the ten items on the evaluation form was 0.84. The inter-rater reliability (Spearman’s rho) among 46 posters was 0.49 (P < 0.001); across the 10 items, 38% of the ratings were identical and a further 48% differed by one point on the 5-point Likert scale. The median score was 4.1 (Q1–Q3, 3.7–4.6; Q1 = 25th percentile, Q3 = 75th percentile). The score was higher for the national meeting (median 4.4; Q1–Q3, 4.0–4.7) than for the regional meetings (median 4.0; Q1–Q3, 3.6–4.4) (P < 0.001). The score did not differ by evaluator years on faculty (P = 0.10), faculty rank (P = 0.14), or primary academic role (P = 0.06).
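The reliability and agreement figures above can be computed from paired ratings. The Python sketch below assumes the reliability subsample is stored as two equal-length lists, one per evaluator, holding the ratings each gave to the same poster items (this data layout is an assumption; the text does not describe it). It computes Spearman’s rho as the Pearson correlation of average ranks, which handles the many ties typical of a 5-point scale, and the share of pairs that are identical or exactly one point apart. The ratings are invented.

```python
def average_ranks(values: list) -> list:
    """1-based ranks; tied values share the mean of the positions they occupy."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    start = 0
    while start < len(order):
        end = start
        # Extend the block while the next sorted value ties with the current one.
        while end + 1 < len(order) and values[order[end + 1]] == values[order[start]]:
            end += 1
        shared_rank = (start + end) / 2 + 1
        for pos in range(start, end + 1):
            ranks[order[pos]] = shared_rank
        start = end + 1
    return ranks


def spearman_rho(x: list, y: list) -> float:
    """Spearman's rho computed as the Pearson correlation of average ranks."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two paired lists of equal length >= 2")
    rx, ry = average_ranks(x), average_ranks(y)
    mean_x, mean_y = sum(rx) / len(rx), sum(ry) / len(ry)
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(rx, ry))
    sd_x = sum((a - mean_x) ** 2 for a in rx) ** 0.5
    sd_y = sum((b - mean_y) ** 2 for b in ry) ** 0.5
    return cov / (sd_x * sd_y)


def agreement(x: list, y: list) -> tuple:
    """Fractions of paired ratings that are identical and that differ by exactly one point."""
    diffs = [abs(a - b) for a, b in zip(x, y)]
    exact = sum(d == 0 for d in diffs) / len(diffs)
    one_apart = sum(d == 1 for d in diffs) / len(diffs)
    return exact, one_apart


# Invented paired ratings from two evaluators on the same five items.
evaluator_a = [5, 4, 4, 3, 2]
evaluator_b = [4, 4, 5, 3, 1]
print(round(spearman_rho(evaluator_a, evaluator_b), 3))  # 0.763
print(agreement(evaluator_a, evaluator_b))  # (0.4, 0.6)
```

In the study, identical (38%) plus one-point (48%) agreement gives the 86% cited in the Discussion. For a P value, a statistics library such as SciPy (`scipy.stats.spearmanr`) would be the usual choice rather than this hand-rolled version.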

Poster Components Most Needing Improvement

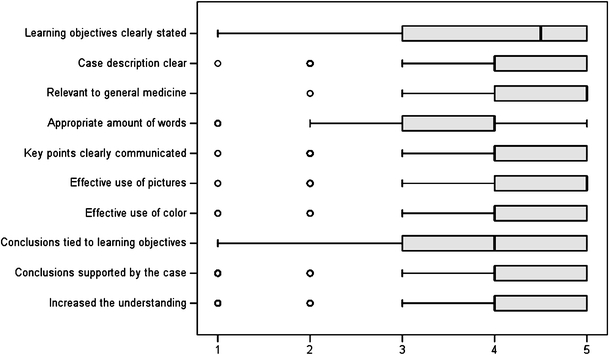

Scores for each poster component are shown in Figure 1. The lowest-scoring components were clearly stating the learning objectives (content), tying the conclusions to the learning objectives (conclusions), and using an appropriate amount of words (format). All poster components correlated with the overall poster score; conclusions tied to learning objectives had the highest correlation coefficient (0.79).
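Identifying the weakest components amounts to summarizing each item across posters and sorting. The Python sketch below ranks components from lowest to highest mean score and reports each component’s Pearson correlation with the overall poster mean. Using the mean (rather than the median) and Pearson correlation are assumptions, since the text does not state which summary statistic or correlation coefficient was used; component names and ratings are invented.

```python
from statistics import mean


def pearson_r(x: list, y: list) -> float:
    """Pearson correlation coefficient of two equal-length lists."""
    mx, my = mean(x), mean(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = sum((a - mx) ** 2 for a in x) ** 0.5
    sy = sum((b - my) ** 2 for b in y) ** 0.5
    return cov / (sx * sy)


def component_summary(posters: list) -> list:
    """For each component: (name, mean score, r with overall poster mean), lowest mean first.

    `posters` holds one dict per poster mapping component name -> 1-5 rating.
    """
    overall = [mean(list(p.values())) for p in posters]
    rows = []
    for component in posters[0]:
        scores = [p[component] for p in posters]
        rows.append((component, mean(scores), pearson_r(scores, overall)))
    return sorted(rows, key=lambda row: row[1])


# Invented ratings for three posters on three components.
posters = [
    {"objectives": 3, "words": 4, "color": 5},
    {"objectives": 2, "words": 3, "color": 4},
    {"objectives": 4, "words": 3, "color": 5},
]
for name, avg, r in component_summary(posters):
    print(f"{name}: mean {avg:.2f}, r = {r:.2f}")
```

Because each component is itself part of the overall mean, these item-total correlations are somewhat inflated; correlating each item with the mean of the remaining items (an item-rest correlation) is a common, more conservative alternative.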

Figure 1.

Poster component scores (5-point Likert scale; 1 = lowest rating, 5 = highest rating).

DISCUSSION

Many organizations and articles provide how-to tips to guide trainees in preparing a poster for presentation.18–24 However, these recommendations lack supporting evidence and validation. We developed an evaluation tool to measure the quality of case report posters that, when tested in a broad sample of evaluators and posters, had high internal consistency. Furthermore, we provide empirical evidence on the specific poster components most needing improvement: clearly stating learning objectives, tying the conclusions to the learning objectives, and using an appropriate amount of words.

In our literature review, we found few published evaluation tools that objectively measure scientific posters. In the nursing literature, Bushy published a 30-item tool, the Quality Assurance (QA)-Poster Evaluation Tool, in 1990; it was later modified to a 10-item tool.25,26 It scores posters on overall appearance and content but has not been formally evaluated for validity. In the neurology literature, Smith et al. evaluated 31 scientific posters at one national neurology meeting to generate poster assessment guidelines for “best poster” prizes. They found high correlation among neurologist evaluators (0.75) for first impressions of a poster, and found that posters with high scientific merit but unattractive appearance risked being overlooked.27 For case report posters specifically, we found only one evaluation tool, used by the American College of Physicians to judge posters at its meetings.28 It includes items on case significance, presentation, methods, visual impact, and presenter interview, but it has no specific checklist items and has not been validated.

The evaluation tool we developed can be used at academic meetings to score case report posters. First, the tool had face validity: we used criteria from national academic organizations and refined the tool through an iterative process with academic clinician educators. Second, Cronbach’s alpha of 0.84 indicates high internal consistency. Third, the tool showed discriminant validity: scores from the national meeting were higher than those from the regional meetings. The national meeting accepted approximately 50% of case report submissions, whereas the regional meetings accepted all submissions; with a more competitive selection process, national scores would be expected to be higher. Finally, scores had similar ranges across faculty evaluators and did not differ by years on faculty, evaluator rank, or academic role. Although inter-rater reliability was modest, 86% of ratings were either identical or within one point on the 5-point Likert scale. In light of these findings, we believe our evaluation tool may be valuable for other educators because it yields reliable and valid data and specifies the components of a successful poster.

Mentoring trainees in poster presentation is important, and the scholarly significance of presenting a poster at an academic meeting should not be underestimated. Several studies demonstrate the scholarly importance of research abstracts initially presented as posters. Across medical specialties, 34% to 77% of posters presented at meetings were subsequently published in peer-reviewed journals.10,14–17 In one study, 45% of abstracts presented over 2 years at a national pediatric meeting were subsequently published in peer-reviewed journals.10 Abstracts selected for poster presentation have higher rates of publication than those not accepted for presentation (40% vs. 22%), and posters have publication rates,10,17 times to publication, and journal impact factors10 similar to those of oral presentations. Thus, a poster appears to be an important step in the dissemination of scholarly work.

A case report presentation, whether as a poster or a publication, gives trainees a venue for scholarly activity.1,4,8,9 In a national survey of internal medicine residents,3 53% of respondents had presented a case report abstract. Compared with residents who presented research abstracts, residents who presented a case report were more likely to have initiated the project on their own and less likely to have had a mentor. Sixty-eight percent of respondents planned to submit their project for publication. These findings highlight the need for dedicated faculty to mentor trainees through writing a case report and creating an effective poster presentation.1,2 Our study can inform faculty mentors who may feel inexperienced in helping trainees with a case report poster.

Our study has some limitations. We evaluated only case report posters at four general internal medicine meetings. Because the project was designed to provide educational mentorship, the feedback was not anonymous; this may have tempered the critiques and contributed to the overall high median scores. We evaluated the poster independently of the presenter and did not attempt to evaluate the presenter’s knowledge, presentation skills, or interaction with the evaluator; nevertheless, the presenter’s skills may have influenced the scores. Despite the overall high median scores and these limitations, we were still able to identify specific areas that scored well below the 25th percentile.

In conclusion, our study validates an evaluation tool that identified the poster components most in need of improvement. The tool can be used to judge case report posters for awards or ranking. Our results can guide mentors and trainees in optimizing their poster presentations, which may improve poster quality and enhance trainees’ scholarly productivity. Case report posters should clearly state learning objectives, tie the conclusions to the learning objectives, and use an appropriate amount of words. Future work should adapt and further validate our evaluation form for broader settings and for scientific posters. A successful poster may advance not only the educational goals of the presentation but also the personal and professional goals of trainees.

Acknowledgements

The authors have no potential conflicts of interest to report. Dr. Lisa L. Willett had full access to all the data in the study and takes responsibility for the integrity of the data and the accuracy of the data analysis. The authors wish to thank the members of the 2006 Southern Regional SGIM planning committee and the faculty evaluators who provided feedback at the meetings.

Funding: None.

Conflict of Interest: None disclosed.

Appendix: Evaluation Form

Poster Presenter Academic Institution is in: ❒ Canada (1) ❒ USA (2) (List State):


| Item | Strongly agree | Agree | Neutral | Disagree | Strongly disagree |
|---|---|---|---|---|---|
| Content | | | | | |
| Learning objectives: Objectives are clearly stated | 5 | 4 | 3 | 2 | 1 |
| Main body: Content area relevant to General Internal Medicine | 5 | 4 | 3 | 2 | 1 |
| Main body: Case description/research methods clear | 5 | 4 | 3 | 2 | 1 |
| Conclusions | | | | | |
| Are tied in to the objectives | 5 | 4 | 3 | 2 | 1 |
| Can be supported by the content of the vignette or data | 5 | 4 | 3 | 2 | 1 |
| Increase the understanding of a disease process or improve diagnosis or treatment | 5 | 4 | 3 | 2 | 1 |
| Presentation format | | | | | |
| Key points clearly communicated | 5 | 4 | 3 | 2 | 1 |
| Appropriate amount of words | 5 | 4 | 3 | 2 | 1 |
| Effective use of colors | 5 | 4 | 3 | 2 | 1 |
| Effective use of pictures/graphics | 5 | 4 | 3 | 2 | 1 |

What is the best component of this poster?

What is one area that could improve this poster?


| Evaluator information | | | | | |
|---|---|---|---|---|---|
| Faculty rank | Instructor (1) | Assistant professor (2) | Associate professor (3) | Professor (4) | Other (5) |
| Primary role | Division director (1) | Program director (PD) (2) | Associate or assistant PD (3) | Clerkship director (4) | Other (5) |
| Years on faculty | 1–5 (1) | 6–10 (2) | >10 (3) | | |

Footnotes

Presented in part at the 2008 Society of General Internal Medicine Southern Regional Meeting, February 22, 2008, New Orleans, Louisiana.

References

- 1. Accreditation Council for Graduate Medical Education (ACGME). Educational Program, Residents’ Scholarly Activities. http://www.acgme.org/acWebsite/navPages/commonpr_documents/IVB123_EducationalProgram_ResidentScholarlyActivity_Documentation.pdf. Accessed October 17, 2008.

- 2. Accreditation Council for Graduate Medical Education (ACGME). ACGME Program Requirements for Residency Education in Internal Medicine, Effective July 1, 2007. http://www.acgme.org/acWebsite/downloads/RRC_progReq/140_im_07012007.pdf. Accessed October 17, 2008.

- 3. Rivera JA, Levine RB, Wright SM. Completing a scholarly project during residency training: perspectives of residents who have been successful. J Gen Intern Med. 2005;20:366–9.

- 4. Drenth JPH, Smits P, Thien T, Stalenhoef AFH. The case for case reports in the Netherlands Journal of Medicine. Neth J Med. 2006;64:262–4.

- 5. Vandenbroucke JP. In defense of case reports and case series. Ann Intern Med. 2001;134:330–4.

- 6. The New England Journal of Medicine. http://authors.nejm.org/Help/acHelp.asp. Accessed October 17, 2008.

- 7. Estrada CA, Heudebert GR, Centor RM. JGIM new section: case reports and clinical vignettes. J Gen Intern Med. 2005;20:971.

- 8. Levine RB, Hebert RS, Wright SM. Resident research and scholarly activity in internal medicine residency training programs. J Gen Intern Med. 2005;20:155–9.

- 9. Alguire PC, Anderson WA, Albrecht RR, Poland GA. Resident research in internal medicine training programs. Ann Intern Med. 1996;124:321–8.

- 10. Carroll AE, Sox CM, Tarini BA, Ringold S, Christakis DA. Does presentation format at the Pediatric Academic Societies’ annual meeting predict subsequent publication? Pediatrics. 2003;112:1238–41.

- 11. Nguyen V, Tornetta P III, Bkaric M. Publication rates for the scientific sessions of the OTA: Orthopaedic Trauma Association. J Orthop Trauma. 1998;12:457–9.

- 12. Erren TC, Bourne PE. Ten simple rules for a good poster presentation. PLoS Comput Biol. 2007;3(5):e102.

- 13. Preston CF, Bhandari M, Fulkerson E, Ginat D, Koval KJ, Egol KA. Podium versus poster publication rates at the Orthopaedic Trauma Association. Clin Orthop Relat Res. 2005;437:260–4.

- 14. Sprague S, Bhandari M, Devereaux PJ, et al. Barriers to full-text publication following presentation of abstracts at annual orthopaedic meetings. J Bone Joint Surg Am. 2003;85-A:158–63.

- 15. Bhandari M, Devereaux PJ, Guyatt GH, et al. An observational study of orthopaedic abstracts and subsequent full-text publications. J Bone Joint Surg Am. 2002;84-A:615–21.

- 16. Weber EJ, Callaham ML, Wears RL, Barton C, Young G. Unpublished research from a medical specialty meeting: why investigators fail to publish. JAMA. 1998;280:257–9.

- 17. Gandhi SG, Gilbert WM. Society of gynecologic investigation: what gets published. Reprod Sci. 2004;11:562–5.

- 18. American College of Physicians. Preparing a poster presentation. http://www.acponline.org/srf/abstracts/pos_pres.htm. Accessed October 17, 2008.

- 19. Society of General Internal Medicine. Poster tips. http://sgim.org/userfiles/file/PosterTips.pdf. Accessed October 17, 2008.

- 20. Purrington CB. Advice on designing scientific posters. Swarthmore College; 2006. http://www.swarthmore.edu/NatSci/cpurrin1/posteradvice.htm. Accessed October 17, 2008.

- 21. Wolcott TG. Mortal sins in poster presentations or, how to give the poster no one remembers. Newsletter of the Society for Integrative and Comparative Biology. 1997;Fall:10–11.

- 22. Boullata JI, Mancuso CE. A “how-to” guide in preparing abstracts and poster presentations. Nutr Clin Pract. 2007;22:641–6.

- 23. Block S. The DOs and DON’Ts of poster presentation. Biophys J. 1996;71:3527–9.

- 24. Durbin CG Jr. Effective use of tables and figures in abstracts, presentations, and papers. Respir Care. 2004;49:1233–7.

- 25. Bushy A. A tool to systematically evaluate QA poster displays. J Nurs Qual Assur. 1990;4(4):82–5.

- 26. Garrison A, Bushy A. The research poster appraisal tool (R-PAT-II): designing and evaluating poster displays. JHQ Online. July/August 2004:W4-24–W4-29. http://www.nahq.org/journal.

- 27. Smith PE, Fuller G, Dunstan F. Scoring posters at scientific meetings: first impressions count. J R Soc Med. 2004;97:340–1.

- 28. American College of Physicians. http://www.acponline.org/residents_fellows/competitions/abstract/prepare/judge_criteria.pdf. Accessed September 24, 2008.