Abstract

Long-term memory (LTM) is a multifactorial construct, composed of different stages of information processing and different cognitive operations that are mediated by distinct neural systems, some of which may be more responsible for the marked memory problems that limit the daily function of individuals with schizophrenia. From the outset of the CNTRICS initiative, this multidimensionality was appreciated, and an effort was made to identify the specific memory constructs and task paradigms that hold the most promise for immediate translational development. During the second CNTRICS meeting, the LTM group identified item encoding and retrieval and relational encoding and retrieval as key constructs. This article describes the process that the LTM group went through in the third and final CNTRICS meeting to select nominated tasks within the 2 LTM constructs and within the reinforcement learning construct that were judged most promising for immediate development. This discussion is followed by each nominating author's description of their selected task paradigm, ending with some thoughts about future directions.

Keywords: episodic memory, schizophrenia, relational memory, item memory

Introduction

From the outset, the CNTRICS initiative appreciated that long-term memory (LTM) is a broad and multidimensional construct, encompassing multiple stages of information processing and engaging distinct neural systems, some of which are likely to be more central to the memory problems that limit the daily function of individuals with schizophrenia. A great deal of this understanding grew from clinical research employing traditional neuropsychological memory tasks such as the California Verbal Learning Test1 and Wechsler Memory Scale.2 However, unlike the previous MATRICS initiative3 that focused on these neuropsychological measures, the goal of the CNTRICS initiative was to identify tasks from the cognitive neuroscience world that hold promise for translational development for drug discovery.4 Accordingly, during the second of 3 CNTRICS meetings, 2 LTM domains5 were identified as the most promising constructs for immediate translational development: (1) relational encoding and retrieval, defined as “the processes involved in memory for stimuli/elements and how they were associated with coincident context, stimuli or events” and (2) item encoding and retrieval, defined as “the processes involved in memory for individual stimuli or elements irrespective of contemporaneously presented context or elements.” The LTM group was also assigned the construct of reinforcement learning, defined as “acquired behavior as a function of both positive and negative reinforcers including the ability to (a) associate previously neutral stimuli with value, as in Pavlovian conditioning; (b) rapidly modify behavior as a function of changing reinforcement contingencies; and (c) slowly integrate over multiple reinforcement experiences to determine probabilistically optimal behaviors in the long run.” At the end of the second meeting, a call went out to the scientific community to engage in an online submission process to nominate tasks that assess these 3 constructs to be considered for ongoing development.

As part of the nomination process, scientists were asked to provide evidence for each task's construct validity, link to neural circuits, clarity of cognitive mechanisms, availability of an animal model, link to neural systems through neuropsychopharmacology, amenability for use in neuroimaging, evidence of impairment in schizophrenia, and psychometric characteristics. In the third CNTRICS meeting, the LTM breakout group was asked to select the 2 most promising tasks within each construct based on these same criteria, with the understanding that, although not every task would meet all requirements (eg, animal model, psychometric characteristics), tasks without clear evidence of construct validity and a link to a neural circuit should not be given further consideration. The purpose of this article is to report on the outcome of this deliberation process and provide the reader with the nominating authors’ description of the (1) item encoding and retrieval, (2) relational encoding and retrieval, and (3) reinforcement learning tasks that were judged to be ready for immediate translational development.

For the construct of relational encoding and retrieval, 2 tasks, the associative inference paradigm (AIP) and the relational and item encoding and retrieval (RIER) task, were judged ready for further development and are described below. The third nominated task was a transitive inference paradigm (TIP). Group members were impressed with the TIP's link to neural circuits, availability of an animal model, link to neuropsychopharmacology, and evidence of impairment in schizophrenia. However, the greater complexity of the TIP relative to the AIP resulted in a somewhat lower score for construct validity and led to the decision to select the AIP over the TIP for immediate development. Two tasks were considered for the item encoding and retrieval construct: the RIER and the inhibition of current irrelevant memories task. Of these, only the RIER (which assesses both item and relational memory) was chosen; the nominating author's acknowledgment that the inhibition of current irrelevant memories task has “unknown” construct validity precluded it from further consideration. Within the reinforcement learning construct, the complementary nature of several of the nominated tasks led the working group to recommend 3 tasks for further development: the probabilistic reward task, the probabilistic selection task, and the probabilistic reversal learning task. The weather prediction task was the fourth task nominated. Because its nominating author described 3 different possible learning strategies, questions arose about the task's construct validity, and it did not receive further consideration. The recommended tasks are shown in table 1. Below are the nominating authors' descriptions of the selected tasks within each of the 3 LTM constructs.

Table 1.

Long-Term Memory in Schizophrenia

| Construct | Recommended for immediate development |
| --- | --- |
| Relational encoding and retrieval: The processes involved in memory for stimuli/elements and how they were associated with coincident context, stimuli, or events. | (1) Associative inference paradigm; (2) Relational and item encoding and retrieval (RIER) task |
| Item encoding and retrieval: The processes involved in memory for individual stimuli or elements irrespective of contemporaneously presented context or elements. | (1) RIER task |
| Reinforcement learning: Acquired behavior as a function of both positive and negative reinforcers including the ability to (a) associate previously neutral stimuli with value, as in Pavlovian conditioning; (b) rapidly modify behavior as a function of changing reinforcement contingencies; and (c) slowly integrate over multiple reinforcement experiences to determine probabilistically optimal behaviors in the long run. | (1) Probabilistic reward task; (2) Probabilistic selection task; (3) Probabilistic reversal learning task |

Relational Encoding and Retrieval

Associative Inference Paradigm

Description.

Relational representations bind distinct elements of an event into a memory representation that captures the relationships between the elements.6,7 Relational representations are thought to underlie mnemonic flexibility that allows for the generative use of stored knowledge about elements of experience to address new questions posed by the environment. The AIP provides a means to examine mnemonic flexibility and the nature of the relational representations that support the use of memory in novel situations.

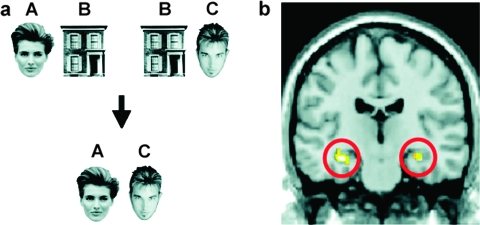

In the AIP, participants receive explicit training on 2 sets of paired associates (eg, AB and BC) and are then tested on whether they can infer from these associations the relationship between A and C. Specifically, participants learn an initial set of AB associations, where each A might consist of a unique face and each B a unique house (figure 1a). Then, participants learn an overlapping set of associations consisting of the same B stimuli (eg, the same houses) paired with a new set of C stimuli (eg, another unique set of faces). Thus, during learning, each B stimulus (a house) is associated with 2 different stimuli, A and C (2 unique faces), though the A and C stimuli are not directly experienced together.

Fig. 1.

Associative Inference Paradigm. a) Participants encode overlapping face-house pairs (AB, BC) and are tested on the inferential relationship between pairs (AC). b) Anterior hippocampal activation associated with inferential retrieval of AC pairs.13 Reprinted with Permission from Wiley 8/27/08.

During a subsequent memory test, participants make 2-alternative forced-choice judgments that depend on memory for the learned associations (AB and BC) and the inferential relationship between A and C. For all test trials, the incorrect choice item (foil) is a stimulus that had been studied in another pairing, ensuring that the 2 choice stimuli are equally familiar. This aspect of the design provides construct validity because performance requires memory for the relations between stimuli rather than memory for individual items. That is, memory judgments for trained pairs (AB, BC) cannot be determined from stimulus familiarity and must be made based on learned associations. Similarly, for inferential pairs (AC) whose relationship was not studied, participants must retrieve the relation between the A and C stimuli that emerges from the overlapping associations with the same B stimulus.
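To make the test logic concrete, the following is a minimal Python sketch of how AIP study pairs and 2-alternative forced-choice test trials could be assembled. It assumes a design of n triads (A_i, B_i, C_i); the function `build_aip`, the `TestTrial` record, the choice of cue for each trial type, and the stimulus labels are hypothetical and are not taken from the published task. The property it encodes is the one described above: every foil is a studied item from a different triad, so target and foil are equally familiar, and the AC pairs never appear at study.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class TestTrial:
    kind: str    # "AB" or "BC" (trained) or "AC" (inferential, never studied)
    cue: str
    target: str
    foil: str    # studied item from another triad, so equally familiar


def build_aip(n_triads: int, seed: int = 0):
    """Hypothetical AIP design: triad i links face A_i, house B_i, and face C_i."""
    if n_triads < 2:
        raise ValueError("need at least 2 triads to draw foils from another pairing")
    rng = random.Random(seed)
    A = [f"faceA{i}" for i in range(n_triads)]
    B = [f"house{i}" for i in range(n_triads)]
    C = [f"faceC{i}" for i in range(n_triads)]

    ab_study = list(zip(A, B))  # first learning phase
    bc_study = list(zip(B, C))  # same houses, new faces; A and C never co-occur

    trials = []
    for i in range(n_triads):
        others = [k for k in range(n_triads) if k != i]  # foils come from other triads
        trials.append(TestTrial("AB", A[i], B[i], B[rng.choice(others)]))
        trials.append(TestTrial("BC", B[i], C[i], C[rng.choice(others)]))
        trials.append(TestTrial("AC", A[i], C[i], C[rng.choice(others)]))
    rng.shuffle(trials)
    return ab_study, bc_study, trials


ab, bc, test = build_aip(n_triads=6, seed=1)
ac_trials = [t for t in test if t.kind == "AC"]  # the inferential probes
```

Because AC targets and foils are both C items that were each studied exactly once, a correct AC choice cannot rest on familiarity alone, which is the construct-validity argument made in the text.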

Construct Validity.

The logic of the AIP rests on the theoretical argument that relational representations separately code elements of an event, maintaining the compositionality of the elemental representations and organizing them in terms of their relations to one another.6,7 The compositional nature of these relations allows for reactivation of representations from partial input (pattern completion),8,9 a process thought to underlie event recollection. Further, maintenance of the compositionality of elements in relational representations allows flexible use of learned information at retrieval, making it possible to infer relations across stimuli without explicit training. This flexibility is important for associative inference decisions that putatively require inference to solve novel (untrained) stimulus configurations at test (AC retrieval decisions). However, relational representations may also contribute to inferential decisions through pattern completion at encoding (D. Shohamy and A. D. Wagner, unpublished data).9,10 When learning an overlapping set of pairs, AB and BC, pattern completion to AB during presentation of BC would enable an AC relation to be encoded. At test, inferential logic is not required to make an AC decision because a stored AC relation exists and can be directly retrieved.

Neural Systems.

Neuroanatomical and computational models have proposed that the medial temporal region, and the hippocampus in particular, is essential for the pattern completion processes that support performance in the AIP.9,11,12 Recent neuroimaging work in humans has revealed activation in anterior hippocampal regions at retrieval during inferential judgments relative to judgments about explicitly learned associations (figure 1b).13

Pharmacological and Behavioral Manipulation.

Data on pharmacological manipulation effects on the AIP are not yet available. Behavioral variation in performance has been observed in the AIP, with awareness of the overlapping relationships between stimuli during encoding perhaps being essential to inferential performance at test. For example, participants who are explicitly informed about the overlapping relationships between stimuli prior to learning or who spontaneously acquire such awareness during learning perform more accurately on AC judgments than uninformed or unaware subjects.14

Animal Models.

Complementary animal work has documented impaired associative inference judgments following hippocampal lesions. In rats, lesions of the hippocampus proper impair AC judgments without disrupting the ability to encode or retrieve the explicitly trained associations (AB and BC).15

Performance in Schizophrenia.

How schizophrenia affects performance in the AIP is currently unknown. However, in the hierarchical TIP, schizophrenia impairs relational judgments (BD) with performance on nonrelational judgments (AE) remaining intact.16 Functional neuroimaging has further demonstrated that impaired memory performance in schizophrenia during conscious recollection17 and the hierarchical inference task18 is associated with decreased activation in hippocampal regions.

Psychometric Data.

These data are not yet available for the AIP.

Future Directions.

The AIP has a clearly defined construct and good theoretical work done on neural systems. Future studies should build psychometric evidence of test-retest reliability, extend examination of the AIP to schizophrenia populations, and further examine pharmacological effects on performance. In particular, medial temporal lobe regions that are thought to support relational memory performance in the AIP receive input from and provide feedback to dopamine (DA) releasing neurons in the midbrain that are associated with reward and motivation.19 Alterations in medial temporal lobe-midbrain interactions may exist in schizophrenia given the abnormal transmission of DA observed in the disease, and these alterations may have important implications for relational memory function. Medications used to treat schizophrenia may also influence interactions between medial temporal lobe structures and midbrain DA regions, thus affecting memory. Determining how medication affects medial temporal lobe function and performance in the AIP may yield new insights into disease treatment.

Item Encoding and Retrieval and Relational Encoding and Retrieval

Relational and Item Encoding and Retrieval Task

Description.

The RIER task combines 2 paradigms previously used to study item-specific and relational episodic memory in schizophrenia. The first is a levels-of-processing (LOP) task designed to control for group differences in item-specific encoding strategies and, thereby, generate equivalent recognition and source memory performance in schizophrenia patients and controls.20,21 The second is a relational encoding task22 that produces a significant recognition deficit in patients with schizophrenia. During the encoding phase of the RIER, participants are presented with 2 trial types in separate blocks. During “item-specific” encoding blocks, each trial shows a single object, and participants rate whether it is pleasant or unpleasant. During “relational” encoding blocks, each trial shows 3 objects, and participants judge whether the objects are in the correct order in terms of weight (from lightest to heaviest). In each study block, participants encode 12 objects, and 3 blocks are completed for each encoding condition. The sequence of encoding blocks is counterbalanced to minimize order effects. During the retrieval phase, participants first complete a yes/no item recognition test consisting of a random sequence of 72 previously studied objects (36 item-specific and 36 relational) and 72 previously unseen foil objects. Next, participants complete an associative recognition test consisting of objects that were previously studied on relational trials. The test includes 18 “intact” pairs consisting of objects that were originally studied on the same trial and 18 “recombined” pairs consisting of objects that were originally studied on different trials. Participants indicate whether each pair is intact or recombined.
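The counts in this design (3 blocks of 12 objects per condition, 72 old and 72 new items at item recognition, 18 intact and 18 recombined pairs at associative recognition) can be made explicit with a small Python sketch that assembles the lists. The function `build_rier`, the alternating counterbalancing scheme keyed to participant parity, and the sampling rule that lets a relational object appear in more than 1 test pair are assumptions for illustration, not details of the published task.

```python
import itertools
import random

BLOCKS_PER_CONDITION = 3
OBJECTS_PER_BLOCK = 12
N_PER_CONDITION = BLOCKS_PER_CONDITION * OBJECTS_PER_BLOCK  # 36 objects per condition


def build_rier(object_pool, participant_id, seed=0):
    """Hypothetical sketch of RIER encoding blocks and the 2 recognition tests."""
    pool = list(object_pool)
    if len(pool) < 4 * N_PER_CONDITION:
        raise ValueError("need 144 objects: 72 studied plus 72 foils")
    rng = random.Random(seed)
    rng.shuffle(pool)
    item_objs = pool[:N_PER_CONDITION]
    rel_objs = pool[N_PER_CONDITION:2 * N_PER_CONDITION]
    foils = pool[2 * N_PER_CONDITION:4 * N_PER_CONDITION]

    # Item-specific blocks: 12 single objects, each rated pleasant/unpleasant.
    item_blocks = [("item", item_objs[i:i + OBJECTS_PER_BLOCK])
                   for i in range(0, N_PER_CONDITION, OBJECTS_PER_BLOCK)]
    # Relational blocks: 4 triplets (12 objects), each judged for weight order.
    triplets = [tuple(rel_objs[i:i + 3]) for i in range(0, N_PER_CONDITION, 3)]
    per_block = OBJECTS_PER_BLOCK // 3
    rel_blocks = [("relational", triplets[i:i + per_block])
                  for i in range(0, len(triplets), per_block)]

    # Assumed counterbalancing: conditions alternate, and participant parity
    # decides which condition comes first.
    if participant_id % 2 == 0:
        first, second = item_blocks, rel_blocks
    else:
        first, second = rel_blocks, item_blocks
    encoding = [block for pair in zip(first, second) for block in pair]

    # Yes/no item recognition: all 72 studied objects plus 72 unseen foils.
    item_test = [(o, "old") for o in item_objs + rel_objs] + [(o, "new") for o in foils]
    rng.shuffle(item_test)

    # Associative recognition: 18 intact (same triplet) and 18 recombined pairs.
    intact_pool = [p for t in triplets for p in itertools.combinations(t, 2)]
    triplet_of = {o: k for k, t in enumerate(triplets) for o in t}
    recombined_pool = [p for p in itertools.combinations(rel_objs, 2)
                       if triplet_of[p[0]] != triplet_of[p[1]]]
    pair_test = ([(p, "intact") for p in rng.sample(intact_pool, 18)]
                 + [(p, "recombined") for p in rng.sample(recombined_pool, 18)])
    rng.shuffle(pair_test)
    return encoding, item_test, pair_test
```

For example, `build_rier([f"obj{i}" for i in range(144)], participant_id=1)` returns 6 encoding blocks starting with a relational block, a 144-item recognition list, and a 36-pair associative test.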

Construct Validity.

Behavioral research has distinguished between item-specific and relational encoding strategies.22–24 Common item-specific encoding strategies involve making a semantic decision about an item (eg, “pleasant”/“unpleasant,” “abstract”/“concrete”), whereas relational encoding strategies include imagining 2 or more items interacting or linking 2 or more words in the context of a sentence or story. Relational encoding is thought to promote memory for associations among items, whereas item-specific encoding enhances the distinctiveness of individual items.23–26 In the episodic memory literature, relational encoding has been linked to the function of the hippocampus, which is thought to support the binding of novel representations.27–29 The distinction between relational and item-specific encoding has also been supported by neuroimaging studies of working memory (WM) that have revealed dissociations between brain regions involved in item-specific WM maintenance and regions involved in manipulating relationships between items while they are being maintained. Research has shown that the dorsolateral prefrontal cortex (DLPFC) is selectively activated on trials in which relationships among items are processed.30 Moreover, engagement of the DLPFC during relational WM processing predicts successful LTM retrieval.22,31,32 Several studies have shown that although both relational and item-specific encoding tasks are effective, they tend to have different effects on memory performance.23–26 For example, relational encoding is optimal when memory for associations between items will be tested (eg, paired associate learning), whereas item-specific encoding is optimal when memory for item details is tested (R. S. Blumenfeld and C. Ranganath, unpublished data).
The available evidence therefore indicates that the construct of relational encoding and retrieval has validity at both the cognitive and neural level of analysis and that it is supported by both hippocampal and DLPFC-mediated mechanisms.

In prior work,22 the associative portion of the RIER task was shown to promote LTM by building associations between triplets of words, whereas no such benefit was seen for a control task that involved passive rehearsal of words. Preliminary results (see below) suggest that performance on this relational condition is impaired in patients relative to controls. In contrast, item-specific semantic encoding has been shown to improve memory by facilitating encoding of distinctive item-specific information, because it does not encourage building relationships among items.24 As described below, item-specific semantic encoding tasks promote robust levels of memory performance in patients with schizophrenia.20,33,34

Neural Systems.

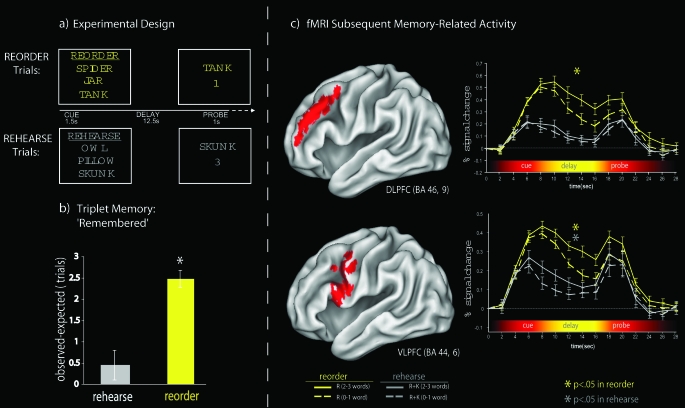

Several recent studies have demonstrated that DLPFC activation during relational encoding reliably predicts successful LTM.22 However, DLPFC activity is generally not correlated with successful item-specific encoding (see Blumenfeld and Ranganath31 for review). For example, in a recent study from Dr Ranganath's laboratory22 using a variant of the relational encoding task used in the RIER paradigm, participants were scanned while performing 2 WM tasks (figure 2a). On “rehearse” trials, subjects were required to rehearse a set of 3 words across a 12-second delay period, whereas on “reorder” trials, participants were required to rearrange a set of 3 words over the delay according to the weight of the object that each word referred to. Although both conditions required maintenance across the delay, reorder trials also required participants to evaluate relationships between items in the memory set along a single dimension (weight). Analyses of subsequent LTM performance showed significantly more reorder trials in which all 3 items were recollected than would be expected based on overall item hit rates alone (figure 2b), but the same was not true for rehearse trials. This result suggests that, on reorder trials, participants successfully encoded relationships between the items in each memory set. Consistent with the idea that the DLPFC is involved in relational processing in WM, DLPFC activation was greater during reorder trials than during rehearse trials (figure 2c). Furthermore, DLPFC activation during reorder trials, but not rehearse trials, was positively correlated with subsequent LTM performance. In contrast, activation in the left ventrolateral prefrontal cortex (VLPFC) (Brodmann area 44/6) and in the hippocampus was correlated with subsequent memory performance on both rehearse and reorder trials.
Results from this study and others32 suggest that the DLPFC may be specifically recruited during relational encoding, adding support to the validity of this neural construct.
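The observed-versus-expected comparison behind figure 2b can be sketched as follows, under the assumption (ours, not necessarily the authors' exact procedure) that each of the 3 words on a trial is remembered independently with probability equal to the overall hit rate h, so that all 3 words are expected to be remembered on n × h³ of n trials. The function name and input format are hypothetical.

```python
def triplet_benefit(remembered):
    """Observed minus expected number of fully recollected triplets.

    remembered: one (bool, bool, bool) tuple per WM trial, marking whether each
    word was later judged "remembered" (hypothetical input format).
    Assumes independence: each word is remembered with the overall hit rate h,
    so all 3 are expected on n * h**3 of n trials.
    """
    n = len(remembered)
    if n == 0:
        raise ValueError("no trials")
    hit_rate = sum(sum(trial) for trial in remembered) / (3 * n)
    observed = sum(all(trial) for trial in remembered)
    expected = n * hit_rate ** 3
    return observed - expected


# Remembered words clustered within trials, as relational encoding would predict
clustered = [(True, True, True), (True, True, True),
             (False, False, False), (False, False, False)]
# Same overall hit rate (0.5), but remembered words scattered across trials
scattered = [(True, True, False), (True, False, True),
             (False, True, False), (False, False, True)]
```

Both example lists have a hit rate of 0.5, so 0.5 fully recollected trials are expected in each; the clustered pattern yields a positive difference and the scattered pattern a negative one, mirroring the reorder versus rehearse contrast.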

Fig. 2.

Results From Blumenfeld and Ranganath.22 a) Example stimuli and task timing for working memory trials. b) Difference between observed and expected numbers of recollected triplets from each memory set. The mean difference between the observed number of trials for which all 3 words were successfully judged as remembered and the expected number of such trials given the overall hit rate is plotted separately for reorder and rehearse trials. A positive difference indicates that subsequent memory performance benefited from enhanced inter-item associations. Error bars depict the SEM across subjects, and the asterisk denotes that the observed-expected difference was statistically significant for reorder trials. c) Time course of activation in prefrontal regions of interest (ROIs), plotted separately for the reorder and rehearse tasks. Activation in the left dorsolateral prefrontal cortex (DLPFC) and anterior ventrolateral prefrontal cortex (aVLPFC) was correlated with subsequent LTM performance specifically during reorder trials. In contrast, delay period activation in the posterior ventrolateral prefrontal cortex (pVLPFC) was predictive of subsequent LTM on both rehearse and reorder trials. The error bars in the time courses reflect the SEM at each time point for the reorder and rehearse tasks for each ROI. Reprinted with permission from Society for Neuroscience.

Pharmacological and Behavioral Manipulation.

Data on pharmacological manipulation effects on the RIER are not yet available. As noted above, when patients are provided with an item-specific encoding strategy, there are no longer group differences in item recognition and source retrieval performance20,21 or in functional magnetic resonance imaging (fMRI) activation in the VLPFC.33,34

Animal Models.

The kinds of relational encoding processes that are manipulated in the RIER paradigm have not been extensively investigated in animal models, in part because it is difficult to directly manipulate encoding strategies in nonhuman animals. Some relevant evidence, however, comes from studies of WM tasks in monkeys. For example, a single-unit recording study35 showed that neurons in the monkey dorsal prefrontal cortex encoded information about temporal order relationships between a series of items presented in a WM task. In contrast, ventral prefrontal neurons tended to encode the physical features of objects to be maintained. Another study demonstrated that lesions to mid-DLPFC impaired memory for sequences of actions.36 This prior work demonstrates the feasibility of investigating associative memory in animal models although direct translation of the RIER to rodent models may not be feasible, in which case nonhuman primate models may be more appropriate.

Performance in Schizophrenia.

Research by Dr Ragland and others has revealed consistent evidence of episodic memory deficits in schizophrenia linked to impaired organizational processes. In initial experiments, subjects were tested with explicit word list encoding tasks in which no strategy was provided.37,38 During debriefing, controls were more likely to engage in item-specific semantic processing, and we suspected that patients were employing a less effective strategy. A levels-of-processing paradigm39 tested whether providing a semantic, item-specific encoding strategy could improve patients’ performance and prefrontal function. As predicted, patients showed the same benefit as healthy controls from item-specific semantic processing on both recognition20,34 and source memory tasks21 and showed robust activation in the ventrolateral prefrontal cortex.33,34 These results suggest that item-specific encoding processes may be relatively spared compared with relational encoding, and they motivate inclusion of a semantic item-specific encoding condition in the RIER paradigm to address issues of generalized deficit.

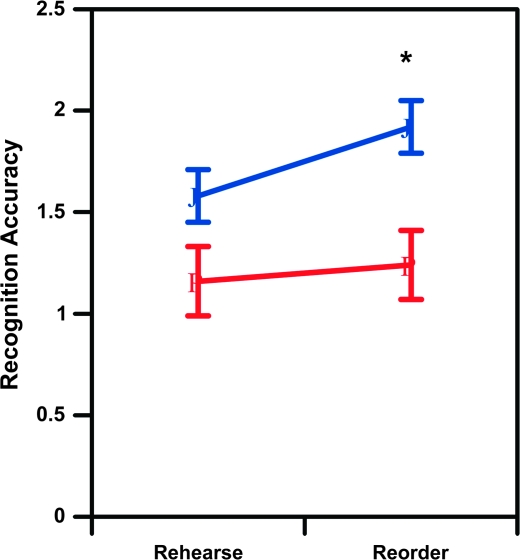

In contrast, pilot data from Dr Ragland and Dr Ranganath using the relational encoding condition from the RIER task suggest that when individuals with schizophrenia are provided with a relational encoding strategy, they may perform more poorly than controls (figure 3). Specifically, preliminary data from a sample of 15 patients with schizophrenia (8 females) and 16 demographically matched healthy volunteers showed main effects of group [F(1,29) = 7.04, p < .05] and task [F(1,29) = 13.7, p < .001], and a task by group interaction [F(1,29) = 5.06, p < .05] on recognition accuracy. As can be seen in figure 3, this interaction was due to patients having worse recognition accuracy than healthy volunteers in the relational memory reorder condition [F(1,29) = 10.0, p < .005], but not in the item-specific rehearse condition [F(1,29) = 3.84, p < .06].

Fig. 3.

Recognition accuracy of controls (blue circles) and patients (red triangles) for rehearse and reorder tasks. Error bars depict the SEM across subjects, and the asterisk denotes a significant group difference for the reorder but not rehearse task.

Psychometric Data.

These data are not yet available for the RIER.

Future Directions.

The RIER task has well-established construct validity, identified prefrontal cognitive control systems underpinning task performance, and preliminary evidence for a relative deficit in relational vs item-specific encoding and retrieval performance in schizophrenia. Improved understanding of the specific role that the hippocampus and medial temporal lobe (MTL) play in task performance, development of animal models, and building psychometric evidence of test-retest reliability were identified as important future directions. Although relational memory can be examined in animal models, direct translation of the RIER to animal models will require directly manipulating encoding strategies. Meeting this goal may be more feasible in nonhuman primates than in rodents. As with all LTM tasks, establishing adequate test-retest reliability is also a challenge given the likelihood of substantial practice effects on task performance. This may necessitate development of parallel forms of the RIER.

Reinforcement Learning

Probabilistic Reward Task

Description.

This task is based on a differential reinforcement schedule that provides an objective assessment of participants' propensity to modulate behavior as a function of reward history.40 The task, which was modified from an earlier paradigm described by Tripp and Alsop,41 is rooted within the behavioral model of signal detection42 and the generalized matching law.43,44

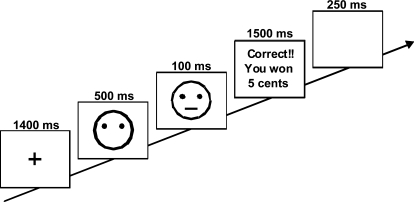

Figure 4 provides an illustration of the probabilistic reward task (adapted from Pizzagalli et al40). The task includes 300 trials, divided into 3 blocks of 100 trials separated by 30-second breaks. Each trial starts with the presentation of an asterisk for 1400 milliseconds, immediately followed by a schematic mouthless cartoon face presented for 500 milliseconds. Next, either a short (11.5 mm) or long (13 mm) mouth is briefly presented on the screen for 100 milliseconds. The mouthless face then remains visible until the participant responds. On each trial, participants are asked to determine which mouth stimulus was presented by pressing either the “z” key or the “/” key on a PC keyboard (key assignment counterbalanced across subjects). Within each block, the 2 mouth stimuli are presented equally often in a pseudorandomized sequence allowing no more than 3 consecutive presentations of the same stimulus. Within each block, only 40 correct trials are followed by reward feedback (eg, “Correct!! You won 5 cents”), presented for 1500 milliseconds immediately after a correct response. If reward feedback is presented, an additional blank screen is presented for 250 milliseconds; on nonrewarded trials, a blank screen is presented for 1750 milliseconds.
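A minimal Python sketch of the block sequence constraint described above: 100 trials per block with equal numbers of short and long mouths and no more than 3 consecutive presentations of the same stimulus. Rejection sampling (reshuffling until the run constraint holds) is an assumed method, not necessarily how the original software generated its sequences; the timing constants simply restate the durations given in the text, and the names are hypothetical.

```python
import random
from itertools import groupby

# Durations (ms) restated from the text.
FIXATION_MS = 1400          # asterisk
FACE_MS = 500               # mouthless face before the mouth appears
MOUTH_MS = 100              # short (11.5 mm) or long (13 mm) mouth
REWARD_FEEDBACK_MS = 1500
POST_REWARD_BLANK_MS = 250
NONREWARD_BLANK_MS = 1750

TRIALS_PER_BLOCK = 100
MAX_RUN = 3


def longest_run(seq):
    """Length of the longest run of identical consecutive items."""
    return max(len(list(group)) for _, group in groupby(seq))


def block_sequence(rng, n_trials=TRIALS_PER_BLOCK, max_run=MAX_RUN):
    """Equal numbers of short and long mouths with no run longer than max_run.

    Rejection sampling (an assumed method): reshuffle until the run constraint
    holds, which samples uniformly among all valid sequences.
    """
    if n_trials % 2:
        raise ValueError("n_trials must be even for equal stimulus counts")
    seq = ["short"] * (n_trials // 2) + ["long"] * (n_trials // 2)
    while True:
        rng.shuffle(seq)
        if longest_run(seq) <= max_run:
            return list(seq)


rng = random.Random(2024)
blocks = [block_sequence(rng) for _ in range(3)]  # 3 blocks x 100 trials
```

One consequence of the stated timings is that the post-response interval is 1750 milliseconds whether or not reward feedback is shown (1500 + 250 = 1750), so rewarded and nonrewarded trials do not differ in length.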

Fig. 4.

Summary of Task Design. On each trial, participants indicate via button press whether a short or long mouth was presented. Figure modified with permission from Pizzagalli et al.40 Reprinted with Permission from Elsevier.

Critically, an asymmetric reinforcer schedule is used to induce a response bias.45 Correct identification of one mouth (the “rich stimulus”) is rewarded 3 times more frequently than correct identification of the other mouth (the “lean stimulus”). Only 40 correct trials are rewarded in each block (30 rich, 10 lean) to ensure that each participant is exposed to the same (or a very similar) number of rewards. To achieve this goal, a controlled reinforcer procedure is used: if a participant makes an incorrect response on a trial scheduled to be rewarded, the feedback is delayed until the next correct response to the same stimulus.
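The controlled reinforcer procedure can be sketched in Python as a count of pending rewards per stimulus: a reward scheduled on an error trial is held and given on the next correct response to the same stimulus. The function names, the input format, and the assumption that the 30 rich and 10 lean rewarded trials are chosen at random from each block are hypothetical.

```python
import random


def schedule_rewards(stimuli, rng, n_rich=30, n_lean=10):
    """Pick which rich and lean trials are scheduled for reward (random choice assumed)."""
    rich = [i for i, s in enumerate(stimuli) if s == "rich"]
    lean = [i for i, s in enumerate(stimuli) if s == "lean"]
    return set(rng.sample(rich, n_rich)) | set(rng.sample(lean, n_lean))


def deliver_rewards(stimuli, correct, scheduled):
    """Controlled reinforcer procedure.

    stimuli: per-trial "rich"/"lean"; correct: per-trial bool;
    scheduled: indices of trials scheduled for reward.
    A reward scheduled on an error trial is held and given on the next
    correct response to the same stimulus. Returns the indices of trials
    on which reward feedback is actually shown.
    """
    pending = {"rich": 0, "lean": 0}
    rewarded = []
    for t, (stim, ok) in enumerate(zip(stimuli, correct)):
        if t in scheduled:
            pending[stim] += 1
        if ok and pending[stim] > 0:
            pending[stim] -= 1
            rewarded.append(t)
    return rewarded


stimuli = ["rich", "lean"] * 50  # 50 trials of each stimulus per block
scheduled = schedule_rewards(stimuli, random.Random(0))
```

A reward still pending at the end of a block is simply never delivered in this sketch, which is one reason the text speaks of the same "or a very similar" number of rewards per participant.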

Before the task, participants are instructed that the goal is to win as much money as possible and that not all correct responses will receive reward feedback. Importantly, participants are not informed that one of the stimuli will be rewarded more frequently. Because of the probabilistic nature of the task, participants cannot infer which stimulus is more advantageous from the outcome of a single trial; instead, to optimize their choices, participants need to “integrate” reinforcement history over time. Depending on the monetary reward used for each rewarded trial, participants earn approximately $6 (5 cents per reward)40 or approximately $24 (20 cents per reward).46
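The approximate earnings follow directly from the fixed number of rewards, 40 in each of the 3 blocks:

```latex
\begin{aligned}
\text{rewards per session} &= 3 \text{ blocks} \times 40 \text{ rewards} = 120\\
120 \times \$0.05 &= \$6\\
120 \times \$0.20 &= \$24
\end{aligned}
```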

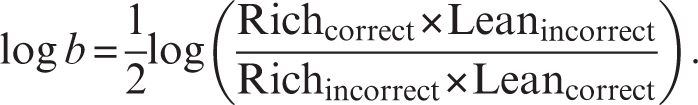

The main variable of interest is response bias, which can be computed41,42 as

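$$\log b = \frac{1}{2}\log\left(\frac{\text{Rich}_{\text{correct}} \times \text{Lean}_{\text{incorrect}}}{\text{Rich}_{\text{incorrect}} \times \text{Lean}_{\text{correct}}}\right)$$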

As evident from the formula, a high response bias emerges when participants tend to correctly identify the stimulus associated with more frequent rewards (rich hits) and to misclassify the lean stimulus (lean misses). To examine general task performance, secondary analyses consider hit rates [(number of hits)/(number of hits + number of misses)], reaction time, and discriminability. Discriminability, which assesses subjects’ ability to perceptually distinguish between the stimuli and can thus be used as an index of task difficulty, is computed as

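$$\log d = \frac{1}{2}\log\left(\frac{\text{Rich}_{\text{correct}} \times \text{Lean}_{\text{correct}}}{\text{Rich}_{\text{incorrect}} \times \text{Lean}_{\text{incorrect}}}\right)$$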
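Both indices can be computed from the 4 cell counts of a block (rich correct, rich incorrect, lean correct, lean incorrect): response bias is half the log of (rich correct × lean incorrect)/(rich incorrect × lean correct), and discriminability is half the log of (rich correct × lean correct)/(rich incorrect × lean incorrect). The Python sketch below uses base-10 logs and adds 0.5 to every cell to avoid division by zero or log(0) when a cell is empty; both choices are assumptions here rather than details stated in the text, and the function names are hypothetical.

```python
import math


def _cells(rich_correct, rich_incorrect, lean_correct, lean_incorrect, correction):
    # Add a constant to every cell (0.5 assumed by default) so no cell is zero.
    return (rich_correct + correction, rich_incorrect + correction,
            lean_correct + correction, lean_incorrect + correction)


def response_bias(rich_correct, rich_incorrect, lean_correct, lean_incorrect, correction=0.5):
    """log b = 1/2 * log10[(rich correct * lean incorrect) / (rich incorrect * lean correct)]."""
    rc, ri, lc, li = _cells(rich_correct, rich_incorrect, lean_correct, lean_incorrect, correction)
    return 0.5 * math.log10((rc * li) / (ri * lc))


def discriminability(rich_correct, rich_incorrect, lean_correct, lean_incorrect, correction=0.5):
    """log d = 1/2 * log10[(rich correct * lean correct) / (rich incorrect * lean incorrect)]."""
    rc, ri, lc, li = _cells(rich_correct, rich_incorrect, lean_correct, lean_incorrect, correction)
    return 0.5 * math.log10((rc * lc) / (ri * li))


def hit_rate(hits, misses):
    """(number of hits) / (number of hits + number of misses)."""
    return hits / (hits + misses)


# A hypothetical block with 50 rich and 50 lean trials: more rich hits, more lean misses
bias = response_bias(45, 5, 35, 15)
disc = discriminability(45, 5, 35, 15)
```

With identical accuracy for both stimuli the bias term is 0, while swapping the rich and lean counts flips its sign; discriminability, in contrast, grows with overall accuracy regardless of which stimulus is favored.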

In addition to these variables, the probability of specific responses as a function of the immediately preceding trial can be computed to evaluate the strength of a response bias as a function of (a) which stimulus had been rewarded in the preceding trial and (b) proximity of reward delivery. For example, the probability of selecting “rich” or “lean” in trials immediately following a correctly identified, rewarded rich trial vs a correctly identified, nonrewarded rich trial may be computed.47 Finally, in several studies, we have found that reward learning, which can be measured by subtracting response bias in block 1 from response bias in block 3, showed strong construct and predictive validity.40,47
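The trial-by-trial analysis and the reward learning score can be sketched as follows. The `Trial` record, the helper names, and the coding of responses as "rich"/"lean" are hypothetical; the example conditions on trials that immediately follow a correctly identified rich trial and splits them by whether that preceding trial was rewarded, as in the comparison described above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Trial:
    stim: str       # stimulus shown: "rich" or "lean"
    resp: str       # participant's choice: "rich" or "lean"
    rewarded: bool  # reward feedback shown on this trial


def p_response_after(trials, condition, response):
    """P(response on trial t | trial t-1 satisfies condition); NaN if no such trials."""
    matches = total = 0
    for prev, cur in zip(trials, trials[1:]):
        if condition(prev):
            total += 1
            matches += cur.resp == response
    return matches / total if total else float("nan")


def rewarded_rich_hit(t):
    return t.stim == "rich" and t.resp == "rich" and t.rewarded


def unrewarded_rich_hit(t):
    return t.stim == "rich" and t.resp == "rich" and not t.rewarded


def reward_learning(bias_block1, bias_block3):
    """Reward learning: response bias in block 3 minus response bias in block 1."""
    return bias_block3 - bias_block1


trials = [
    Trial("rich", "rich", True),
    Trial("lean", "rich", False),
    Trial("rich", "rich", False),
    Trial("lean", "lean", False),
    Trial("rich", "rich", True),
    Trial("rich", "rich", False),
]
p_after_reward = p_response_after(trials, rewarded_rich_hit, "rich")
p_after_no_reward = p_response_after(trials, unrewarded_rich_hit, "rich")
```

In this toy sequence, "rich" is chosen after both rewarded rich hits but not after the single nonrewarded rich hit that has a following trial, which is the kind of contrast used to separate responsiveness to single rewards from a bias sustained without immediate reward.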

Construct Validity.

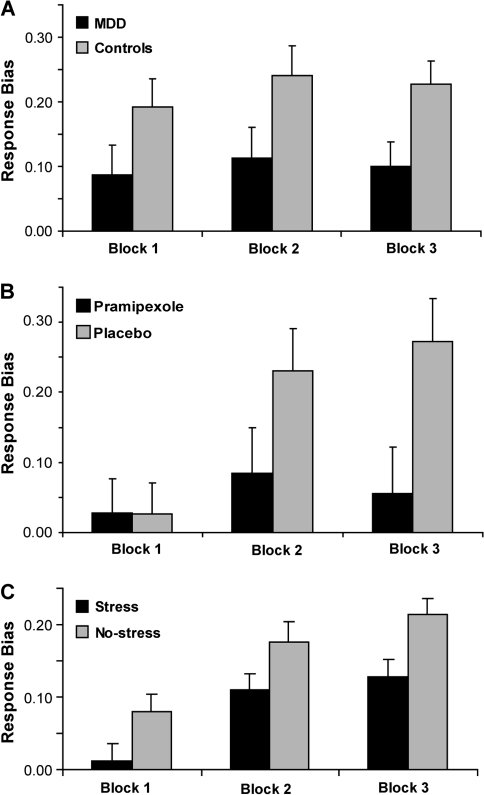

Initial construct validity comes from studies evaluating samples hypothesized to be characterized by dysfunctional reinforcement learning.48,49 Subjects with elevated depressive symptoms,40 unmedicated patients with major depressive disorder (MDD),50 and medicated euthymic patients with bipolar disorder47 showed reduced response bias toward the more frequently rewarded stimulus (figure 5a). Moreover, trial-by-trial probability analyses revealed that MDD subjects were impaired at expressing a response bias toward the more frequently rewarded cue in the absence of immediate reward (manifested as increased miss rates), whereas they were responsive to delivery of single rewards. Increased miss rates for the more frequently rewarded stimulus correlated with anhedonic symptoms (r = 0.52, P < .05), even after considering anxiety symptoms and general distress.50

Fig. 5.

Selected Findings Derived From the Probabilistic Reward Task. Response bias toward the more frequently rewarded stimulus is reduced in (a) unmedicated major depressive disorder subjects;50 (b) healthy controls receiving a single dose of a D2/3 agonist assumed to activate dopamine (DA) autoreceptors and thus reduce phasic DA bursts to unpredictable reward;47 and (c) healthy controls exposed to an acute stressor.67 A and C reprinted with permission from Elsevier. B reprinted with permission from Springer Science and Business Media.

Neural Systems.

Compared with learners, nonlearners showed significantly lower activation in response to reward feedback in dorsal anterior cingulate cortex (dACC) regions that have been previously implicated in integrating reinforcement history over time.51,52 This result is consistent with the hypothesis that nonlearners have blunted reinforcement sensitivity. Moreover, the ability to develop a response bias toward the more frequently rewarded stimulus correlated with dACC activation (r = 0.40, P < .030). A final feature of this study was that some of the participants performed a monetary incentive delay (MID) task53,54 during fMRI. Relative to nonlearners, learners showed larger basal ganglia responses to reward feedback (monetary gains) in the MID task. These findings suggest that participants developing a response bias toward the more frequently rewarded stimulus had stronger dACC and basal ganglia responses to reward outcomes.

Pharmacological and Behavioral Manipulation.

Two studies have shown that response bias in the probabilistic reward task is modulated by pharmacological manipulations affecting DA either directly46 or indirectly.55 In the first study, a single 0.5 mg dose of the D2/3 agonist pramipexole or placebo was administered to healthy volunteers 2 hours before performing the task.46 Consistent with predictions based on animal evidence,56–58 pramipexole impaired reinforcement learning (see Frank and O'Reilly59 for a prior human study postulating similar effects). Compared with placebo, subjects receiving pramipexole showed lower response bias toward the more frequently rewarded stimulus (figure 5b).46 Control analyses confirmed that reduced response bias was not due to transient adverse effects.

The aim of a second pharmacological study was to test the hypothesis that nicotine might increase responsiveness to reward-related cues,55 based on prior findings that nicotine increases appetitive responding through activation of presynaptic nicotinic receptors on mesocorticolimbic DA neurons in animals60,61 and increases the incentive value of monetary reward in humans.62 Using a randomized, double-blind, placebo-controlled crossover design, Barr et al55 administered a single dose of transdermal nicotine (7–14 mg) to 30 psychiatrically healthy adult nonsmokers. Nicotine increased response bias toward the more frequently rewarded stimulus, and this effect persisted over time: during the placebo session, participants who had received nicotine in the first session showed a greater response bias than those who received nicotine in the second session (1 week later).

Animal Models.

In collaboration with Athina Markou at University of California at San Diego, Dr Pizzagalli is developing a task analogous to the human probabilistic reward task for use in rodent studies.

Performance in Schizophrenia.

In a recent study, Heerey et al63 used the probabilistic reward task in a sample of 40 clinically stable, medicated outpatients with schizophrenia. Compared with healthy controls, patients had a reduced ability to discriminate between the stimuli but a similar response bias. The authors concluded that schizophrenia is characterized by intact sensitivity to reward and an intact ability to modify responses based on reinforcement. Although intriguing, these findings are somewhat difficult to interpret because all patients were medicated at the time of testing. Moreover, no information was provided about the smoking status of participants, in particular whether patients and controls were matched for this variable. In light of the recent finding that nicotine enhances response bias in the probabilistic reward task,55 and given the high rates of smoking in schizophrenia,64,65 it is unclear whether the null findings reported by Heerey et al63 might be partially due to group differences in smoking status and/or history.

Psychometric Data.

The test-retest reliability of the probabilistic reward task over approximately 38 days was r = 0.57, P < .004. Satisfactory test-retest reliability of reward learning (r = 0.56 over an average period of 39 days) also emerged in an independent sample.66 In a recent study evaluating monozygotic (n = 20) and dizygotic (n = 15) twin pairs, the heritability of reward responsiveness was estimated to be 48%.67 Due to the limited sample size of this twin study, these heritability estimates should be considered preliminary.

Future Directions.

As noted above, the probabilistic reward task has a growing body of evidence supporting its construct validity and neural bases. However, there are several important avenues for future development of this task. First, additional work is needed to determine the degree to which performance on this task is sensitive to reward learning deficits in schizophrenia. The one published study of this task in schizophrenia did not reveal deficits in reward sensitivity. If additional work confirms that reward processing, as measured by this task, is intact in schizophrenia, the task may not be particularly useful for schizophrenia research (though it may still be very valuable for work on mood disorders or other disorders with impaired reward sensitivity). Second, the current version of the task is quite long (300 trials), which reduces the feasibility of using it in clinical trial settings. Thus, one important future direction is to determine whether a shorter version (eg, only 1–2 blocks) would have sufficient sensitivity and reliability. Third, the test-retest reliability, while good for an experimental task, is lower than would be optimal for a task to be used in clinical trials, and efforts to increase reliability would be beneficial.
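One rough way to reason about whether a shortened version could retain adequate reliability is the Spearman-Brown prophecy formula, which predicts the reliability of a test whose length is multiplied by a factor k: r_k = k·r / (1 + (k − 1)·r). This is only a sketch under strong assumptions: it treats the removed trials as parallel to the retained ones, which is doubtful for a measure such as reward learning that is defined by the change from block 1 to block 3, and it simply reuses the reported test-retest value of 0.57 as the full-length reliability.

```python
def spearman_brown(reliability: float, length_factor: float) -> float:
    """Predicted reliability when test length is multiplied by length_factor.

    Assumes added or removed trials are parallel to the retained ones;
    length_factor < 1 shortens the task (e.g. 200/300 keeps 2 of 3 blocks).
    """
    if not 0.0 <= reliability <= 1.0:
        raise ValueError("reliability must lie in [0, 1]")
    if length_factor <= 0:
        raise ValueError("length_factor must be positive")
    k = length_factor
    return k * reliability / (1 + (k - 1) * reliability)


# Illustrative projection from the reported r = 0.57 for the 300-trial task.
for n_trials in (100, 200, 300):
    predicted = spearman_brown(0.57, n_trials / 300)
    print(f"{n_trials} trials: predicted r = {predicted:.2f}")
```

Under these assumptions, dropping to 200 trials projects a reliability of about 0.47 and dropping to 100 trials about 0.31, which illustrates why shortening would need to be paired with efforts to make each trial more informative.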

Probabilistic Selection Task

Description.

The probabilistic selection task68,69 measures participants’ ability to learn from positive and negative feedback by integrating reinforcement probabilities over many trials. Three different stimulus pairs (AB, CD, EF) are presented in random order, and participants have to learn to choose 1 of the 2 stimuli in each pair. Feedback follows the choice to indicate whether it was correct or incorrect, but this feedback is probabilistic: in AB trials, a choice of stimulus A leads to positive feedback in 80% of trials, whereas a choice of stimulus B leads to negative feedback in 80% of these trials. The CD and EF pairs are less reliable: stimulus C is correct in 70% of trials, while E is correct in 60% of trials. Over the course of training, participants learn to choose stimuli A, C, and E more often than B, D, or F. Note that learning to choose A over B could be accomplished either by learning that choosing A leads to positive feedback or that choosing B leads to negative feedback (or both). To evaluate whether participants learn more about positive or negative outcomes of their decisions, performance is subsequently probed in a test/transfer phase in which all novel combinations of stimuli are presented and no feedback is provided. Positive feedback learning is assessed by reliable choice of the most positive stimulus A in this test phase when it is paired with other stimuli (AC, AD, AE, and AF). Negative feedback learning is assessed by reliable avoidance of the most negative stimulus B when it is paired with the same stimuli (BC, BD, BE, and BF). The extent to which participants perform better in choose-A or avoid-B pairs has been associated with a “Go” or “NoGo” learning bias and is very sensitive to dopaminergic state, manipulation, and genetics.59,69–73
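The task structure described above can be summarized in a short sketch: the reward probabilities implied by the training pairs, probabilistic training feedback, the novel test pairs, and scoring of choose-A and avoid-B accuracy. The function names and the `go_bias` difference score (choose-A accuracy minus avoid-B accuracy) are hypothetical illustrations, not taken from the original task software, and feedback is assumed to be drawn independently on each trial.

```python
import itertools
import random
from typing import Dict, List, Tuple

# P(positive feedback) for the first stimulus of each training pair;
# the second stimulus receives the complementary probability.
TRAINING_PAIRS: Dict[str, float] = {"AB": 0.8, "CD": 0.7, "EF": 0.6}
REWARD_PROB: Dict[str, float] = {}
for pair, p in TRAINING_PAIRS.items():
    REWARD_PROB[pair[0]] = p
    REWARD_PROB[pair[1]] = round(1 - p, 2)

CHOOSE_A = [("A", s) for s in "CDEF"]  # AC, AD, AE, AF
AVOID_B = [("B", s) for s in "CDEF"]   # BC, BD, BE, BF


def training_feedback(choice: str, rng: random.Random) -> bool:
    """Probabilistic feedback for a training choice: True = 'correct'."""
    return rng.random() < REWARD_PROB[choice]


def test_pairs() -> List[Tuple[str, str]]:
    """All stimulus combinations not presented together during training."""
    trained = {tuple(p) for p in TRAINING_PAIRS}
    return [p for p in itertools.combinations("ABCDEF", 2) if p not in trained]


def score_test_phase(choices: Dict[Tuple[str, str], List[str]]) -> Dict[str, float]:
    """`choices` maps each test pair to the stimuli chosen on its presentations."""
    def accuracy(pairs, target, want_target):
        n = hits = 0
        for pair in pairs:
            for chosen in choices.get(pair, []):
                n += 1
                hits += (chosen == target) == want_target
        return hits / n if n else float("nan")

    choose_a = accuracy(CHOOSE_A, "A", want_target=True)
    avoid_b = accuracy(AVOID_B, "B", want_target=False)
    return {"choose_A": choose_a, "avoid_B": avoid_b, "go_bias": choose_a - avoid_b}
```

A positive `go_bias` in this sketch corresponds to relatively better learning from positive than from negative feedback; because both scores come from the same participant, the difference partly factors out overall performance level.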

In addition to the probabilistic reinforcement learning biases, the task can also probe other aspects of reinforcement-based decision making. For example, the tendency to rapidly learn from a single instance of reinforcement in the initial trials of the task is thought to rely on a process distinct from that involved in integrating feedback probabilities over trials.71 Similarly, when faced with novel test pairs, participants adaptively modulate their response times in proportion to the degree of reinforcement conflict. High conflict choices involving stimuli with similar reinforcement probabilities are associated with longer response times than choices involving stimuli with divergent reinforcement probabilities, a process thought to depend on interactions between dorsomedial frontal cortex and the subthalamic nucleus.72
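To make the notion of reinforcement conflict concrete, the sketch below labels each novel test pair by conflict level. It assumes a win/lose split (stimuli rewarded on more than 50% of training trials count as "winners"): pairs of 2 winners or 2 losers are labeled high conflict, and winner-loser pairs low conflict. This split is an assumption of the sketch; with the training probabilities above, it coincides with ranking pairs by the gap in reinforcement probability.

```python
import itertools
from typing import List, Tuple

# Reward probabilities implied by the training schedule (80/70/60%).
REWARD_PROB = {"A": 0.8, "B": 0.2, "C": 0.7, "D": 0.3, "E": 0.6, "F": 0.4}
TRAINED = {("A", "B"), ("C", "D"), ("E", "F")}


def novel_pairs() -> List[Tuple[str, str]]:
    """Test-phase pairs that never appeared together during training."""
    return [p for p in itertools.combinations(sorted(REWARD_PROB), 2) if p not in TRAINED]


def gap(a: str, b: str) -> float:
    """Absolute difference in reinforcement probability between two stimuli."""
    return round(abs(REWARD_PROB[a] - REWARD_PROB[b]), 2)


def conflict_level(a: str, b: str) -> str:
    """'high' for win/win or lose/lose pairs, 'low' for win/lose pairs (assumed split)."""
    return "high" if (REWARD_PROB[a] > 0.5) == (REWARD_PROB[b] > 0.5) else "low"


for a, b in sorted(novel_pairs(), key=lambda p: gap(*p)):
    print(f"{a}{b}: gap={gap(a, b):.1f}, conflict={conflict_level(a, b)}")
```

Response times in the test phase could then be compared between the 2 conflict levels.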

Construct Validity.

Performance on the probabilistic selection task is defined by the ability to choose the probabilistically most optimal stimulus. Of course, many factors can contribute to better or worse performance aside from reinforcement learning, including attention, motivation, fatigue, WM, etc. However, the main measure of interest in the task is within subject (ie, the ability to choose the most positive stimulus is contrasted with that of avoiding the most negative stimulus), thereby controlling for overall performance levels and specifically assessing the contribution of reinforcement. This relative positive to negative feedback learning measure is reliably altered by dopaminergic manipulation in a range of populations, and moreover, similar effects in positive vs negative learning have been observed in other tasks meant to measure similar constructs but using different stimuli, motor responses, and task rules (ie, probabilistic Go/NoGo learning task59 and probabilistic reversal learning task74).

Neural Systems.

In this task, positive and negative feedback learning are thought to rely on striatal D1 and D2 receptors, respectively. As described above, probabilistic positive and negative feedback learning are sensitive to dopaminergic manipulation. Increases in dopaminergic stimulation, likely in the striatum, lead to better positive feedback learning but impair negative feedback learning.59,71,72 These effects are thought to arise because DA bursts that occur during positive outcomes75 support “Go learning” via D1 receptors,68,76 whereas DA dips that occur during negative outcomes75 support “NoGo learning” via D2 receptor disinhibition.68,76 Thus, an increase in striatal DA (eg, due to pharmacology) would continually stimulate D2 receptors and effectively block the effects of the DA dips needed for NoGo learning.68,76 Conversely, DA depletion is associated with relatively better negative feedback learning but worse positive feedback learning. At the individual difference level, genes that control D1 and D2 DA function in the striatum are predictive of probabilistic positive and negative learning, whereas genes that control DA function in prefrontal cortex are predictive of rapid trial-to-trial learning from negative feedback.70 Negative feedback learning is also associated with an enhanced error-related negativity (a brain potential originating from anterior cingulate cortex)76,77 and with activation of this same region in functional neuroimaging.73 Lesions to medial prefrontal cortex are associated both with deficits in the acquisition of reinforcement contingencies (patients took longer to learn) and with impaired negative feedback learning in the test phase.78 Finally, the subthalamic nucleus (a component of the basal ganglia network) is believed to delay responses during high conflict decisions. Supporting this claim, deep brain stimulation of the subthalamic nucleus causes premature responding in these high conflict choices.71

Pharmacological and Behavioral Manipulation.

As previously noted, the probabilistic selection task is sensitive to pharmacological manipulation. Dopaminergic medications, including levodopa and D2 agonists, impair negative feedback learning in patients with Parkinson disease while sometimes improving positive feedback learning.72 In attention deficit/hyperactivity disorder, stimulant medications (methylphenidate and amphetamine), which elevate striatal DA, improved positive but not negative feedback learning.77 In healthy participants, low doses of D2 agonists and antagonists, which may act presynaptically to modulate DA release, predictably alter positive and negative feedback learning.59

Animal Models.

There is currently no available animal model of the probabilistic selection task. However, preliminary unpublished results from Claudio DaCunha's laboratory in Brazil suggest that rats can learn a reduced form of the task using odor discrimination and 2 pairs of stimuli. In a related project, Rui Costa and colleagues have developed a forced-choice task requiring mice to learn to choose and avoid behaviors associated with positive and negative tastants.79 Mice with elevated striatal DA levels showed enhanced bias to approach rewarding tastants together with a reduced bias to avoid aversive tastants, similar to the data reported in humans. In monkeys, striatal D1 receptor blockade abolishes the normal response speeding observed when a large reward is available (a measure of Go learning), whereas D2 receptor blockade leads to greater response slowing when smaller than average rewards are available (a measure of NoGo learning).80

Performance in Schizophrenia.

In a preliminary study, patients with schizophrenia showed large deficits in learning the standard version of the probabilistic selection task, which uses Japanese Hiragana characters as stimuli.81 Subsequent testing using verbalizable stimuli (pictures of everyday objects such as bicycles) showed that patients can learn the task but show selective deficits in early acquisition (thought to rely on prefrontal structures), which correlated with their negative symptoms.81 In the test phase, patients showed intact “NoGo” learning but selectively impaired “Go” learning. Further, all of the genes whose polymorphisms predict learning in this task are candidate genes for schizophrenia.

Psychometric Data.

Practice effects for the probabilistic selection task have been assessed in Frank and O'Reilly.59 Different stimuli are used across sessions. On average, participants are faster to learn the task after multiple sessions, but this practice does not affect relative positive vs negative feedback learning.

Future Directions.

As with the probabilistic reward task, the probabilistic selection task has good construct validity and a solid body of theoretical and empirical work on its neural substrates. One issue regarding construct validity is the degree to which the speed of initial learning influences the ability to learn from positive vs negative reinforcement. Fast learners quickly stop choosing the “B” stimulus, so they experience relatively little negative feedback associated with it and may therefore show reduced “negative feedback” learning. However, this may not reflect a true impairment in learning from negative feedback but rather an absence or reduction of the actual experience of negative feedback during the task. Like many paradigms from cognitive neuroscience, the current version of the probabilistic selection task does not have an immediate animal homologue and is likely too long to be used in standard clinical trials, though it may be amenable to use in early-phase studies. Work examining the degree to which the task could be shortened or simplified, while still retaining construct validity, would facilitate its use in clinical trials. In addition, more information is needed on all psychometric aspects of the task, including test-retest reliability, floor and ceiling effects, and practice effects.

Probabilistic Reversal Learning Task

Description.

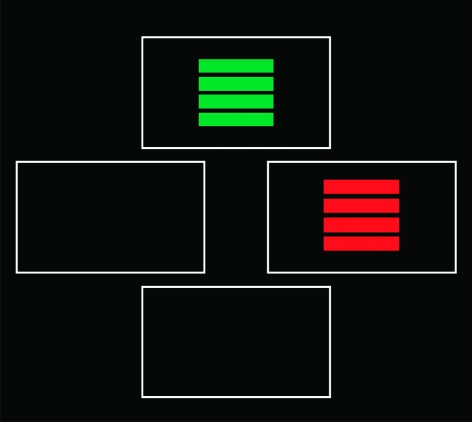

This task was developed by Trevor Robbins and Robert Rogers and first published in Lawrence et al82 and Swainson et al.83 On each trial, subjects are presented with 2 visual patterns (rectangles of colored stripes; figure 6), which appear in 2 randomly chosen boxes out of 4 possible boxes. The task consists of 2 stages, starting with a simple probabilistic visual discrimination in which subjects make a 2-alternative forced choice between 2 colors. The “correct” stimulus (which is always the first stimulus touched) receives an 80:20 ratio of positive:negative feedback, and the opposite ratio of reinforcement is given for the “incorrect” stimulus. In the second, reversal stage, the contingencies are reversed without warning, so that the previously “incorrect” color is now correct and the previously “correct” color is now incorrect.
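The 2-stage contingency structure can be sketched as a small schedule object. This is a hypothetical implementation, not the original software: it assumes 40 trials per stage (consistent with the 80-trial total mentioned in the scoring description), draws feedback independently on each trial rather than from a fixed pseudorandom sequence, and implements the rule that the first stimulus touched becomes the "correct" stimulus.

```python
import random
from typing import Optional, Tuple


class ReversalTask:
    """Hypothetical 2-stage probabilistic reversal schedule (not the original software)."""

    def __init__(self, colors: Tuple[str, str] = ("red", "green"),
                 trials_per_stage: int = 40, p_correct: float = 0.8,
                 seed: Optional[int] = None) -> None:
        self.colors = colors
        self.trials_per_stage = trials_per_stage  # assumed 40 + 40 = 80 trials
        self.p_correct = p_correct                # 80:20 positive:negative feedback
        self.rng = random.Random(seed)
        self.correct: Optional[str] = None        # fixed by the first touch
        self.trial = 0                            # number of completed trials

    def respond(self, choice: str) -> Tuple[bool, bool]:
        """Return (chose_correct_stimulus, positive_feedback) for one trial."""
        if choice not in self.colors:
            raise ValueError(f"unknown stimulus: {choice!r}")
        if self.trial >= 2 * self.trials_per_stage:
            raise RuntimeError("task finished")
        if self.correct is None:
            # The first stimulus touched is designated "correct".
            self.correct = choice
        if self.trial == self.trials_per_stage:
            # Stage 2 begins: contingencies reverse without warning.
            self.correct = next(c for c in self.colors if c != self.correct)
        self.trial += 1
        chose_correct = choice == self.correct
        p_positive = self.p_correct if chose_correct else 1 - self.p_correct
        return chose_correct, self.rng.random() < p_positive


task = ReversalTask(seed=0)
outcomes = [task.respond("red") for _ in range(80)]  # a fully perseverative subject
print(sum(ok for ok, _ in outcomes[:40]), sum(ok for ok, _ in outcomes[40:]))
```

A subject who never switches away from the first color is correct on every stage 1 trial and on no stage 2 trial, while still receiving occasional misleading positive feedback after the reversal.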

Fig. 6.

Screen Display of the Probabilistic Reversal Learning Task.

Although all subjects receive a total of 80 trials, a learning criterion of 8 consecutive correct trials is generally imposed for the purposes of analysis. Main performance measures are failure or success at each stage, mean errors to criterion, and mean latencies. Failure/success rates are analyzed using the likelihood-ratio method for contingency tables.84 Subjects failing stage 1 are excluded from subsequent analyses of error rates and latencies at stage 2. They are included when error rates and latencies at stage 1 are analyzed (to obtain a measure of acquisition). Measures of perseveration and maintenance can also be obtained. Consecutive-perseverative responses refer to consecutive responses made, directly following reversal of the reinforcement contingencies, to the color that was correct during stage 1 but is now incorrect in stage 2. Stage 1-perseverative errors (in the terminology of Jones and Mishkin85) refer to errors in blocks of 8 trials (of the reversal stage), in which performance within these blocks falls significantly below chance (≤1 correct response). Both these perseveration scores tend to correlate significantly with total errors made in stage 2 of the task. Therefore, each should be converted to a score indicating the proportion of total errors in stage 2. Maintenance errors refer to the errors made subsequent to criterion being reached (ie, the number of responses to incorrect stimulus/total trials remaining), subject to there being at least 10 trials after criterion is reached.
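The stage 2 scoring rules above can be sketched from a list of correct/incorrect responses. Several details are assumptions of this sketch rather than specifications from the task authors: the 8-trial blocks for stage 1-perseverative errors are non-overlapping and start at the reversal (an incomplete final block is ignored); a failed stage counts all of its errors as errors to criterion; and, because there are only 2 colors, every stage 2 error is treated as a response to the color that was correct in stage 1. All function names are hypothetical.

```python
from typing import Dict, List, Optional


def trials_to_criterion(correct: List[bool], run: int = 8) -> Optional[int]:
    """Trials up to and including the one completing `run` consecutive correct."""
    streak = 0
    for i, ok in enumerate(correct):
        streak = streak + 1 if ok else 0
        if streak == run:
            return i + 1
    return None  # criterion never reached: stage failed


def score_stage2(correct: List[bool], run: int = 8, block: int = 8,
                 min_after: int = 10) -> Dict[str, object]:
    n_crit = trials_to_criterion(correct, run)
    before = correct[:n_crit] if n_crit is not None else correct
    errors_to_criterion = before.count(False)
    total_errors = correct.count(False)

    # Consecutive-perseverative responses: unbroken run of errors right after reversal.
    consecutive = 0
    for ok in correct:
        if ok:
            break
        consecutive += 1

    # Stage 1-perseverative errors: errors inside 8-trial blocks with <= 1 correct.
    stage1_persev = 0
    for start in range(0, len(correct), block):
        chunk = correct[start:start + block]
        if len(chunk) == block and chunk.count(True) <= 1:
            stage1_persev += chunk.count(False)

    # Maintenance: error proportion after criterion, only if >= min_after trials remain.
    maintenance = None
    if n_crit is not None and len(correct) - n_crit >= min_after:
        remaining = correct[n_crit:]
        maintenance = remaining.count(False) / len(remaining)

    def prop(x: int) -> float:
        # Perseveration scores expressed as a proportion of total stage 2 errors.
        return x / total_errors if total_errors else 0.0

    return {
        "passed": n_crit is not None,
        "errors_to_criterion": errors_to_criterion,
        "consecutive_perseverative_prop": prop(consecutive),
        "stage1_perseverative_prop": prop(stage1_persev),
        "maintenance_error_rate": maintenance,
    }


stage2 = [False] * 8 + [True] * 8 + [False, True] * 6
print(score_stage2(stage2))
```

In the example, the subject perseverates for 8 trials, reaches criterion on trial 16, and then errs on half of the 12 remaining trials, so the 2 perseveration scores each equal 8 of 14 total errors and the maintenance error rate is 0.5.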

Construct Validity.

The probabilistic reversal learning task gauges adaptive behavior, which requires anticipation of biologically relevant events, ie, rewards and punishments, by learning signals of their occurrence. The ability to predict events interacts with the ability to strengthen and weaken actions when these actions are closely followed by rewards and punishments. These processes are often collectively referred to as reinforcement learning. Models of reinforcement learning use a temporal difference prediction error signal, representing the difference between expected and obtained events, to update their predictions based on states of the environment.86 Probabilistic learning tasks are commonly used to assess reinforcement learning and neural activity associated with prediction errors.87–89 For example, O'Doherty et al88,89 used probabilistic learning tasks to establish that activity in the (ventral) striatum and orbitofrontal cortex was positively correlated with the prediction error signal.
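In a single-step choice task with no successor state, the temporal difference prediction error reduces to the obtained reward minus the expected value, which is the Rescorla-Wagner delta rule. The sketch below applies that rule to a sequence of outcomes spanning a reversal; the learning rate of 0.3 and the initial expectation of 0.5 are arbitrary assumptions, and the function name is hypothetical.

```python
from typing import Dict, List, Tuple


def delta_rule(outcomes: List[Tuple[str, float]], alpha: float = 0.3,
               initial: float = 0.5) -> Tuple[Dict[str, float], List[float]]:
    """Update stimulus values with prediction error delta = reward - expectation."""
    values: Dict[str, float] = {}
    errors: List[float] = []
    for stimulus, reward in outcomes:
        expected = values.get(stimulus, initial)
        delta = reward - expected            # prediction error
        values[stimulus] = expected + alpha * delta
        errors.append(delta)
    return values, errors


# Ten rewarded choices of "red", then the contingency reverses and "red" is punished.
values, errors = delta_rule([("red", 1.0)] * 10 + [("red", 0.0)] * 10)
print([round(e, 2) for e in errors])
```

The printed errors shrink as the expectation approaches the reward, then jump to a large negative value on the first trial after the reversal before shrinking again as the value is relearned.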

More generally, it should be noted that demands for reinforcement learning are particularly high in probabilistic reversal learning paradigms. However, reversal learning constitutes a special case of reinforcement learning, and adequate performance also depends on other processes, including prepotent response inhibition and stimulus switching. On a related note, simple reinforcement learning models do not capture all aspects of probabilistic reversal learning as implemented in our paradigm. For example, Hampton et al90 have shown that behavioral performance and neural activity (in the ventromedial prefrontal cortex) during probabilistic reversal learning were fit better by a model that also simulated knowledge of the abstract task structure than by a simple reinforcement learning model that did not incorporate such higher order knowledge about interdependencies between actions.
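The contrast between the 2 model classes can be illustrated with a deliberately simplified sketch. The "structured" update below is a hypothetical stand-in, not the model used by Hampton et al: it assumes that exactly 1 of the 2 stimuli is correct at any time, so evidence about the chosen stimulus is mirrored onto the unchosen one, whereas the simple model-free update changes only the chosen stimulus's value. The learning rate of 0.3 is arbitrary.

```python
from typing import Dict


def simple_update(q: Dict[str, float], chosen: str, reward: float,
                  alpha: float = 0.3) -> Dict[str, float]:
    """Model-free update: only the chosen stimulus's value changes."""
    new = dict(q)
    new[chosen] += alpha * (reward - q[chosen])
    return new


def structured_update(q: Dict[str, float], chosen: str, reward: float,
                      alpha: float = 0.3) -> Dict[str, float]:
    """Toy structure-aware update: assumes one of 2 stimuli is correct, so the
    unchosen stimulus's value mirrors the updated value of the chosen one."""
    new = simple_update(q, chosen, reward, alpha)
    other = next(s for s in q if s != chosen)
    new[other] = 1.0 - new[chosen]
    return new


q = {"red": 0.8, "green": 0.2}
print(simple_update(q, "red", 0.0))      # green stays at 0.2
print(structured_update(q, "red", 0.0))  # green rises as red falls
```

After a single punishment of the preferred stimulus, only the structure-aware learner raises its estimate of the alternative, which is the kind of interdependency between actions that a simple reinforcement learning model lacks.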

Neural Systems.

A number of neuropsychological studies have revealed that (probabilistic and deterministic) reversal learning is disrupted by frontal lobe lesions.91–94 The deficits appear restricted to patients with ventromedial frontal lesions, do not extend to patients with dorsolateral prefrontal cortex (DLPFC) lesions, and cannot be attributed to problems with initial acquisition of stimulus-reinforcement contingencies. Recently, Hornak et al93 observed deficits on the probabilistic reversal learning task in a small number of patients with DLPFC lesions, but posttest debriefing revealed that these patients had failed to attend to the crucial feedback provided on the screen. In a further study, a group of patients with frontal variant frontotemporal dementia showed impairments on the probabilistic reversal learning task while showing intact performance on executive functions associated with the DLPFC.95

The first fMRI study of this task96 revealed suprathreshold activity in the ventrolateral prefrontal cortex (VLPFC), anterior cingulate cortex, and posterior parietal cortex during the final reversal errors relative to the baseline correct responses. A priori hypotheses allowed more focused region of interest analyses, which revealed that activity in the VLPFC was significantly larger on the final reversal errors than on the other (eg, probabilistic) errors that did not lead to switching. It should be noted that at a lower statistical threshold (uncorrected for multiple comparisons), the DLPFC was also active during the final reversal errors. Such additional (albeit weaker) DLPFC activity was confirmed in later fMRI studies with this task97–101 and with a slightly adapted version of the task.100 Finally, more recent studies that employed optimized acquisition sequences have also revealed reversal-related activity in the more anterior orbitofrontal cortex.97,100 In our initial study, reversal-related activity was also observed in the ventral striatum, centered on the nucleus accumbens. Reversal-related activity was also observed in the nucleus accumbens in patients with Parkinson disease who had abstained from their medication.97 However, the exact computation carried out by the nucleus accumbens during probabilistic reversal learning is still under investigation.

Pharmacological and Behavioral Manipulation.

Probabilistic reversal learning is sensitive to dopaminergic and serotoninergic manipulations but not to noradrenergic manipulations in humans. Withdrawal of dopaminergic medication, such as levodopa and DA receptor agonists, in patients with mild Parkinson disease (PD) improves performance.101 This finding is consistent with the DA overdose hypothesis, according to which medication doses that remediate the DA-depleted dorsal striatum in mild PD overdose the relatively intact ventral striatum. These results are also consistent with Mehta et al,102 who showed that administration of the DA receptor agonist bromocriptine impaired performance on this task in young healthy volunteers while improving spatial memory. Additional support for the overdose hypothesis came from a recent pharmacological fMRI study, which revealed that dopaminergic medication in mild PD patients abolished reversal-related activity in the ventral striatum (particularly in the nucleus accumbens).97 The effect of medication was particularly large on final reversal errors that led to behavioral switching. Interestingly, another pharmacological fMRI study in healthy volunteers revealed that administration of methylphenidate (60 mg oral) also abolished reversal-related activity in the ventral striatum (particularly in the ventral putamen), an effect that was again particularly prominent on the final reversal errors.98 Discrepancies in the precise localization of this effect within the ventral striatum indicate the need for further work.

Serotoninergic manipulation also affects performance on the task. However, the nature of the effect is qualitatively different from that observed after dopaminergic manipulation. Chamberlain et al103 observed that acute administration of citalopram, a selective serotonin reuptake inhibitor, but not atomoxetine, a selective noradrenaline reuptake inhibitor, increased the number of switches after probabilistic errors (ie, misleading punishment). This effect was attributed to a presynaptic mechanism of action, paradoxically reducing serotonin levels. Consistent with this hypothesis, Evers et al104 found that the dietary acute tryptophan depletion procedure, which lowers serotonin synthesis, enhanced neural activity in the dorsomedial prefrontal cortex during punishment on switch and nonswitch trials. Thus, in contrast to dopaminergic medication, serotoninergic manipulation affected the processing of punishment irrespective of switching. Interestingly, patients with major depression, which has been associated with serotoninergic abnormality, were found to suffer a deficit on probabilistic reversal learning that did not reflect perseverative responding but rather reflected an inability to maintain responding to the usually correct stimulus in the face of misleading, probabilistic errors.99,105 Finally, Taylor-Tavares et al99 observed an inverse correlation between suppressed activity in the amygdala during probabilistic, misleading errors and the tendency to switch after these misleading errors. Critically, this relationship was abolished in depressed patients. Additional pharmaco-fMRI studies examined different versions of the current task paradigm.106–111
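The switching measure discussed above can be sketched as the proportion of probabilistic (misleading) errors that are followed by a change of choice on the next trial. Here a probabilistic error is taken to mean negative feedback after choosing the currently correct stimulus; this operationalization and the names `RevTrial` and `switch_after_misleading_errors` are assumptions of the sketch rather than the exact scoring used in the cited studies.

```python
from typing import List, NamedTuple


class RevTrial(NamedTuple):
    choice: str              # stimulus chosen on this trial
    choice_correct: bool     # chose the currently correct stimulus
    positive_feedback: bool  # feedback actually delivered


def switch_after_misleading_errors(trials: List[RevTrial]) -> float:
    """Proportion of probabilistic (misleading) errors followed by a switch."""
    n = switches = 0
    for prev, cur in zip(trials, trials[1:]):
        if prev.choice_correct and not prev.positive_feedback:
            n += 1
            switches += cur.choice != prev.choice
    return switches / n if n else float("nan")


session = [
    RevTrial("red", True, True),
    RevTrial("red", True, False),   # misleading punishment ...
    RevTrial("green", False, False),  # ... followed by a switch
    RevTrial("red", True, False),   # misleading punishment ...
    RevTrial("red", True, True),    # ... followed by staying
]
print(switch_after_misleading_errors(session))
```

On this reading, a manipulation that increases inappropriate switching would raise this proportion, whereas a maintenance deficit like the one described in depression would also appear as a higher value.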

Animal Models.

There is a long history of animal work on reversal learning.85,112,113 When assessing convergence between animal and human studies, it is important to consider subtle differences in task design. First, studies with experimental animals have used deterministic rather than probabilistic contingencies, so that animals never obtain “misleading” punishment or reward. Second, the nature of reward and punishment is qualitatively different: reward consists of prolonged periods of access to juice or food in animals but (often) bonus points or positive feedback in humans, whereas punishment may consist of periods of darkness or reward omission in animals but bonus point loss or negative feedback in humans.

Nevertheless, there is remarkable convergence. Consistent with neuroimaging studies in humans, animal work has implicated the orbitofrontal cortex in reversal learning.85,113,114 Single-cell recordings have also revealed that the firing of orbitofrontal neurons changes with alterations in reward contingencies.115,116 More recent single-cell recording studies suggest that the activity of orbitofrontal neurons reverses more slowly than that of neurons in the amygdala,117,118 which suggests that the orbitofrontal cortex may facilitate flexibility indirectly in downstream regions (such as the amygdala) by signaling the expected value of outcomes rather than by directly inhibiting previously relevant responses.

In addition to orbitofrontal and amygdala findings,85 animal studies have implicated the ventral striatum in reversal learning. Specifically, lesions of the ventrolateral part of the head of the caudate nucleus induced a perseverative response tendency during (object) reversal learning in monkeys.112 Subsequent work with rodents and nonhuman primates indicates that lesions of the nucleus accumbens also disrupt performance on (object and/or spatial) reversal learning tasks.119,120 However, the impairment following nucleus accumbens lesions is generally not restricted to the reversal stages of the task but extends to initial acquisition, suggesting a more general role in learning stimulus-reinforcement contingencies rather than in reversal specifically.119

Psychopharmacological work with marmosets supports human evidence that reversal learning is sensitive to serotoninergic manipulations. Clarke et al121–123 revealed that depletion of serotonin in the orbitofrontal cortex with the neurotoxin 5,7-dihydroxytryptamine (5,7-DHT) impaired reversal learning and induced perseverative responding to the previously rewarded stimulus. Note that the perseverative nature of the deterministic reversal deficit after 5,7-DHT lesions in marmosets is qualitatively different from the inappropriate switching seen after tryptophan depletion in humans. This discrepancy may reflect differences in the tasks used in marmosets and humans (deterministic vs probabilistic; emphasis on punishment) or, more likely, differences in the degree of serotonin depletion produced by each manipulation.124,125

Performance in Schizophrenia.

Waltz and Gold126 recently employed a modified version of the probabilistic reversal learning task in 34 patients with schizophrenia and 26 controls. Although patients and controls performed similarly on the initial acquisition of probabilistic contingencies, patients showed substantial learning impairments when reinforcement contingencies were reversed, achieving significantly fewer reversals.

Psychometric Data.

These data are not yet available for the probabilistic reversal learning task.

Future Directions.

The probabilistic reversal learning task has clearly established validity for both the reinforcement learning and the reversal learning constructs of the task paradigm. As in the other reinforcement learning paradigms, there is also solid work identifying neural substrates, developing animal models, and investigating effects of pharmacological manipulation. In future work, it will be important to more fully investigate the impact of schizophrenia on task performance. Establishing that patient impairments are specific to the reversal condition will help rule out alternative explanations based on a generalized deficit. As with most tasks being translated from the cognitive neuroscience domain, substantial work will also be needed to establish and optimize psychometric characteristics.

Acknowledgments

Appreciation goes to Dr Cameron Carter and Dr Deanna Barch, who spearheaded the CNTRICS initiative. Appreciation also goes to Jody Conrad, Carol Cox, and Deb Tussing, who were central to the successful organization of the CNTRICS meetings. Finally, we thank the additional members of the breakout group, including Deanna Barch, Robert Buchanan, Nelson Cowan, Melissa Fisher, Michael Green, Ann Kring, Gina Kuperberg, Steve Marder, Daniel Mathalon, Michael Minzenberg, Trevor Robbins, Martin Sarter, Larry Seidman, Carol Tamminga, Steve Taylor, Andy Yonelinas, Jong Yoon, and Jared Young.

References

- 1. Delis DC, Kramer JH, Kaplan E, Ober BA. California Verbal Learning Test (CVLT) Manual. New York, NY: The Psychological Corporation; 1987.

- 2. Wechsler DA. Wechsler Adult Intelligence Scale-Revised (WAIS-R) Manual. Cleveland, OH: The Psychological Corporation; 1981.

- 3. Nuechterlein KH, Green MF, Kern RS, et al. The MATRICS Consensus Cognitive Battery, part 1: test selection, reliability, and validity. Am J Psychiatry. 2008;165:203–213. doi: 10.1176/appi.ajp.2007.07010042.

- 4. Carter CS, Barch DM. Cognitive neuroscience-based approaches to measuring and improving treatment effects on cognition in schizophrenia: the CNTRICS initiative. Schizophr Bull. 2007;33:1131–1137. doi: 10.1093/schbul/sbm081.

- 5. Ranganath C, Minzenberg M, Ragland JD. The cognitive neuroscience of memory function and dysfunction in schizophrenia. Biol Psychiatry. 2008;19:417–427. doi: 10.1016/j.biopsych.2008.04.011.

- 6. Eichenbaum H. A cortical-hippocampal system for declarative memory. Nat Rev Neurosci. 2000;1:41–50. doi: 10.1038/35036213.

- 7. Eichenbaum H, Cohen NJ. From Conditioning to Conscious Recollection: Memory Systems of the Brain. New York, NY: Oxford University Press; 2001.

- 8. Marr D. Simple memory: a theory for archicortex. Philos Trans R Soc Lond B Biol Sci. 1971;262:23–81. doi: 10.1098/rstb.1971.0078.

- 9. O'Reilly RC, Rudy JW. Conjunctive representations in learning and memory: principles of cortical and hippocampal function. Psychol Rev. 2001;108:311–345. doi: 10.1037/0033-295x.108.2.311.

- 10. Frank MJ, Rudy JW, O'Reilly RC. Transitivity, flexibility, conjunctive representations, and the hippocampus. II. A computational analysis. Hippocampus. 2003;13:341–354. doi: 10.1002/hipo.10084.

- 11. Nakazawa K, Quirk MC, Chitwood RA, et al. Requirement for hippocampal CA3 NMDA receptors in associative memory recall. Science. 2002;297:211–218. doi: 10.1126/science.1071795.

- 12. Nakazawa K, Sun LD, Quirk MC, Rondi-Reig L, Wilson MA, Tonegawa S. Hippocampal CA3 NMDA receptors are crucial for memory acquisition of one-time experience. Neuron. 2003;38:305–315. doi: 10.1016/s0896-6273(03)00165-x.

- 13. Preston AR, Shrager Y, Dudukovic NM, Gabrieli JD. Hippocampal contribution to the novel use of relational information in declarative memory. Hippocampus. 2004;14:148–152. doi: 10.1002/hipo.20009.

- 14. Lee SS, Jensen AR. The effect of awareness on three-stage mediated association. J Verb Learn Verb Behav. 1968;7:1005–1009.

- 15. Bunsey M, Eichenbaum H. Conservation of hippocampal memory function in rats and humans. Nature. 1996;379:255–257. doi: 10.1038/379255a0.

- 16. Titone D, Ditman T, Holzman PS, Eichenbaum H, Levy DL. Transitive inference in schizophrenia: impairments in relational memory organization. Schizophr Res. 2004;68:235–247. doi: 10.1016/S0920-9964(03)00152-X.

- 17. Heckers S, Rauch SL, Goff D, et al. Impaired recruitment of the hippocampus during conscious recollection in schizophrenia. Nat Neurosci. 1998;1:318–323. doi: 10.1038/1137.

- 18. Ongur D, Cullen TJ, Wolf DH, et al. The neural basis of relational memory deficits in schizophrenia. Arch Gen Psychiatry. 2006;63:356–365. doi: 10.1001/archpsyc.63.4.356.

- 19. Lisman JE, Grace AA. The hippocampal-VTA loop: controlling the entry of information into long-term memory. Neuron. 2005;46:703–713. doi: 10.1016/j.neuron.2005.05.002.

- 20. Ragland JD, Moelter ST, McGrath C, et al. Levels-of-processing effect on word recognition in schizophrenia. Biol Psychiatry. 2003;54:1154–1161. doi: 10.1016/s0006-3223(03)00235-x.

- 21. Ragland JD, Valdez JN, Loughead J, Gur RC, Gur RE. Functional magnetic resonance imaging of internal source monitoring in schizophrenia: recognition with and without recollection. Schizophr Res. 2006;87:160–171. doi: 10.1016/j.schres.2006.05.008.

- 22. Blumenfeld RS, Ranganath C. Dorsolateral prefrontal cortex promotes long-term memory formation through its role in working memory organization. J Neurosci. 2006;26:916–925. doi: 10.1523/JNEUROSCI.2353-05.2006.

- 23. Bower GH. Imagery as a relational organizer in associative learning. J Verb Learn Verb Behav. 1970;9:529–533.

- 24. Hunt RR, Einstein GO. Relational and item-specific information in memory. J Verb Learn Verb Behav. 1981;20:497–514.

- 25. Bower GH. Organizational factors in memory. Cogn Psychol. 1970;1:18–46.

- 26. Hunt RR, McDaniel MA. The enigma of organization and distinctiveness. J Mem Lang. 1993;32:421–445.

- 27. Eichenbaum H. Remembering: functional organization of the declarative memory system. Curr Biol. 2006;16:R643–R645. doi: 10.1016/j.cub.2006.07.026.

- 28. Eichenbaum H, Yonelinas AR, Ranganath C. The medial temporal lobe and recognition memory. Annu Rev Neurosci. 2007;30:123–152. doi: 10.1146/annurev.neuro.30.051606.094328.

- 29. Squire LR. Memory systems of the brain: a brief history and current perspective. Neurobiol Learn Mem. 2004;82:171–177. doi: 10.1016/j.nlm.2004.06.005.

- 30. Postle BR, Berger JS, D'Esposito M. Functional neuroanatomical double dissociation of mnemonic and executive control processes contributing to working memory performance. Proc Natl Acad Sci USA. 1999;96:12959–12964. doi: 10.1073/pnas.96.22.12959.

- 31. Blumenfeld RS, Ranganath C. Prefrontal cortex and long-term memory encoding: an integrative review of findings from neuropsychology and neuroimaging. Neuroscientist. 2007;13:280–291. doi: 10.1177/1073858407299290.

- 32. Murray LJ, Ranganath C. The dorsolateral prefrontal cortex contributes to successful relational memory encoding. J Neurosci. 2007;27:5515–5522. doi: 10.1523/JNEUROSCI.0406-07.2007.

- 33. Ragland JD, Gur RC, Valdez JN, et al. Levels-of-processing effect on frontotemporal function in schizophrenia during word encoding and recognition. Am J Psychiatry. 2005;162:1840–1848. doi: 10.1176/appi.ajp.162.10.1840.

- 34. Bonner-Jackson A, Haut K, Csernansky JG, Barch DM. The influence of encoding strategy on episodic memory and cortical activity in schizophrenia. Biol Psychiatry. 2005;58:47–55. doi: 10.1016/j.biopsych.2005.05.011.

- 35. Ninokura Y, Mushiake H, Tanji J. Integration of temporal order and object information in the monkey lateral prefrontal cortex. J Neurophysiol. 2004;91:555–560. doi: 10.1152/jn.00694.2003.

- 36. Petrides M. Impairments on nonspatial self-ordered and externally ordered working memory tasks after lesions of the mid-dorsal lateral part of the lateral frontal cortex of monkey. J Neurosci. 1995;15:359–375. doi: 10.1523/JNEUROSCI.15-01-00359.1995.

- 37. Ragland JD, Gur RC, Raz J, et al. Effect of schizophrenia on frontotemporal activity during word encoding and recognition: a PET cerebral blood flow study. Am J Psychiatry. 2001;158:1114–1125. doi: 10.1176/appi.ajp.158.7.1114.

- 38. Ragland JD, Gur RC, Valdez J, et al. Event-related fMRI of frontotemporal activity during word encoding and recognition in schizophrenia. Am J Psychiatry. 2004;161:1004–1015. doi: 10.1176/appi.ajp.161.6.1004.

- 39. Craik FIM, Lockhart RS. Levels of processing: a framework for memory research. J Verb Learn Verb Behav. 1972;11:671–684.

- 40. Pizzagalli DA, Jahn AL, O'Shea JP. Toward an objective characterization of an anhedonic phenotype: a signal-detection approach. Biol Psychiatry. 2005;57:319–327. doi: 10.1016/j.biopsych.2004.11.026.

- 41. Tripp G, Alsop B. Sensitivity to reward frequency in boys with attention deficit hyperactivity disorder. J Clin Child Psychol. 1999;28:366–375. doi: 10.1207/S15374424jccp280309.

- 42. Davison MC, Tustin RD. The relation between the generalized matching law and signal-detection theory. J Exp Anal Behav. 1978;29:331–336. doi: 10.1901/jeab.1978.29-331.

- 43. Baum WM. On two types of deviation from the matching law: bias and undermatching. J Exp Anal Behav. 1974;22:231–242. doi: 10.1901/jeab.1974.22-231.

- 44. Herrnstein RJ. On the law of effect. J Exp Anal Behav. 1970;13:243–266. doi: 10.1901/jeab.1970.13-243.

- 45. McCarthy D, Davison M. Signal probability, reinforcement, and signal detection. J Exp Anal Behav. 1979;32:373–382. doi: 10.1901/jeab.1979.32-373.

- 46. Pizzagalli DA, Evins AE, Schetter EC, et al. Single dose of a dopamine agonist impairs reinforcement learning in humans: behavioral evidence from a laboratory-based measure of reward responsiveness. Psychopharmacology (Berl). 2008;196:221–232. doi: 10.1007/s00213-007-0957-y.

- 47. Pizzagalli DA, Goetz E, Ostacher M, Iosifescu D, Perlis RH. Euthymic patients with bipolar disorder show decreased reward learning in a probabilistic reward task. Biol Psychiatry. 2008;64:162–168. doi: 10.1016/j.biopsych.2007.12.001.

- 48. Hasler G, Drevets WC, Manji HK, Charney DS. Discovering endophenotypes for major depression. Neuropsychopharmacology. 2004;29:1765–1781. doi: 10.1038/sj.npp.1300506.

- 49. Leibenluft E, Charney DS, Pine DS. Researching the pathophysiology of pediatric bipolar disorder. Biol Psychiatry. 2003;53:1009–1020. doi: 10.1016/s0006-3223(03)00069-6.

- 50. Pizzagalli DA, Iosifescu D, Hallett LA, Ratner KG, Fava M. Reduced hedonic capacity in major depressive disorder: evidence from a probabilistic reward task. J Psychiatr Res. In press. doi: 10.1016/j.jpsychires.2008.03.001.

- 51. Kennerley SW, Walton ME, Behrens TEJ, Buckley MJ, Rushworth MFS. Optimal decision making and the anterior cingulate cortex. Nat Neurosci. 2006;9:940–947. doi: 10.1038/nn1724.

- 52. Rushworth MF, Buckley MJ, Behrens TE, Walton ME, Bannerman DM. Functional organization of the medial frontal cortex. Curr Opin Neurobiol. 2007;17:220–227. doi: 10.1016/j.conb.2007.03.001.

- 53. Dillon DG, Holmes AJ, Jahn AL, Bogdan R, Wald LL, Pizzagalli DA. Dissociation of neural regions associated with anticipatory versus consummatory phases of incentive processing. Psychophysiology. 2008;45:36–49. doi: 10.1111/j.1469-8986.2007.00594.x.

- 54. Knutson B, Fong GW, Bennett SM, Adams CM, Hommer D. A region of mesial prefrontal cortex tracks monetarily rewarding outcomes: characterization with rapid event-related fMRI. NeuroImage. 2003;18:263–272. doi: 10.1016/s1053-8119(02)00057-5.

- 55. Barr RS, Pizzagalli DA, Culhane MA, Goff DC, Evins AE. A single dose of nicotine enhances reward responsiveness in non-smokers: implications for development of dependence. Biol Psychiatry. 2008;63:1061–1065. doi: 10.1016/j.biopsych.2007.09.015.

- 56. Fuller RW, Clemens JA, Hynes MD III. Degree of selectivity of pergolide as an agonist at presynaptic versus postsynaptic dopamine receptors: implications for prevention or treatment of tardive dyskinesia. J Clin Psychopharmacol. 1982;2:371–375.

- 57. Tissari AH, Rossetti ZL, Meloni M, Frau MI, Gessa GL. Autoreceptors mediate the inhibition of dopamine synthesis by bromocriptine and lisuride in rats. Eur J Pharmacol. 1983;91:463–468. doi: 10.1016/0014-2999(83)90171-1.

- 58. Piercey MF, Hoffmann WE, Smith MW, Hyslop DK. Inhibition of dopamine neuron firing by pramipexole, a dopamine D3 receptor-preferring agonist: comparison to other dopamine receptor agonists. Eur J Pharmacol. 1996;312:35–44. doi: 10.1016/0014-2999(96)00454-2.

- 59. Frank MJ, O'Reilly RC. A mechanistic account of striatal dopamine function in human cognition: psychopharmacological studies with cabergoline and haloperidol. Behav Neurosci. 2006;120:497–517. doi: 10.1037/0735-7044.120.3.497.

- 60. Dani JA, Harris RA. Nicotine addiction and comorbidity with alcohol abuse and mental illness. Nat Neurosci. 2005;8:1465–1470. doi: 10.1038/nn1580.

- 61. Kenny PJ, Markou A. Nicotine self-administration acutely activates brain reward systems and induces a long-lasting increase in reward sensitivity. Neuropsychopharmacology. 2006;31:1203–1211. doi: 10.1038/sj.npp.1300905.

- 62. Dawkins L, Powell JH, West R, Powell J, Pickering A. A double-blind placebo controlled experimental study of nicotine: I. Effects on incentive motivation. Psychopharmacology (Berl). 2006;189:355–367. doi: 10.1007/s00213-006-0588-8.

- 63. Heerey EA, Bell-Warren KR, Gold JM. Decision-making impairments in the context of intact reward sensitivity in schizophrenia. Biol Psychiatry. 2008;64:62–69. doi: 10.1016/j.biopsych.2008.02.015.

- 64. de Leon J, Tracy J, McCann E, McGrory A, Diaz FJ. Schizophrenia and tobacco smoking: a replication study in another US psychiatric hospital. Schizophr Res. 2002;56:55–65. doi: 10.1016/s0920-9964(01)00192-x.

- 65. Kumari V, Postma P. Nicotine use in schizophrenia: the self medication hypotheses. Neurosci Biobehav Rev. 2005;29:1021–1034. doi: 10.1016/j.neubiorev.2005.02.006.

- 66. Santesso DL, Dillon DG, Birk JL, et al. Individual differences in reinforcement learning: behavioral, electrophysiological, and neuroimaging correlates. NeuroImage. 2008;42:807–816. doi: 10.1016/j.neuroimage.2008.05.032.

- 67. Bogdan R, Pizzagalli DA. The heritability of hedonic capacity and perceived stress: a twin study evaluation of candidate depressive phenotypes. Psychol Med. 2008;28:1–8. doi: 10.1017/S0033291708003619.

- 68. Frank MJ, Seeberger LC, O'Reilly RC. By carrot or by stick: cognitive reinforcement learning in Parkinsonism. Science. 2004;306:1940–1943. doi: 10.1126/science.1102941.

- 69. Frank MJ, Woroch BS, Curran T. Error-related negativity predicts reinforcement learning and conflict biases. Neuron. 2005;47:495–501. doi: 10.1016/j.neuron.2005.06.020.