Abstract

While evidence-based medicine has increasingly broad-based support in health care, it remains difficult to get physicians to actually practice it. Across most domains in medicine, practice has lagged behind knowledge by at least several years. The authors believe that the key tools for closing this gap will be information systems that provide decision support to users at the time they make decisions, which should result in improved quality of care. Furthermore, providers make many errors, and clinical decision support can be useful for finding and preventing such errors. Over the last eight years the authors have implemented and studied the impact of decision support across a broad array of domains and have found a number of common elements important to success. The goal of this report is to discuss these lessons learned in the interest of informing the efforts of others working to make the practice of evidence-based medicine a reality.

Delivering outstanding medical care requires providing care that is both high-quality and safe. However, while the knowledge base regarding effective medical therapies continues to improve, the practice of medicine continues to lag behind, and errors are distressingly frequent.1

Regarding the gaps between evidence and practice, Lomas et al.2 evaluated a series of published guidelines and found that it took an average of approximately five years for these guidelines to be adopted into routine practice. Moreover, evidence exists that many guidelines—even those that are broadly accepted—are often not followed.3,4,5,6,7 For example, approximately 50% of eligible patients do not receive beta blockers after myocardial infarction,8 and a recent study found that only 33% of patients had low-density lipoprotein (LDL) cholesterol levels at or below the National Cholesterol Education Program recommendations.5 Of course, in many instances, relevant guidelines are not yet available, but even in these instances, practitioners should consider the evidence if they wish to practice evidence-based medicine, and a core part of practicing evidence-based medicine is considering guidelines when they do exist.

Although we strive to provide the best possible care, many studies within our own institution have identified gaps between optimal and actual practice. For example, in a study designed to assess the appropriateness of antiepileptic drug monitoring, only 27% of antiepileptic drug levels had an appropriate indication and, among these, half were drawn at an inappropriate time.9 Among digoxin levels, only 16% were appropriate in the inpatient setting, and 52% were appropriate in the outpatient setting.10 Of clinical laboratory tests, 28% were ordered too early after a prior test of the same type to be clinically useful.11 For evaluation of hypothyroidism or hyperthyroidism, the initial thyroid test performed was not the thyroid-stimulating hormone level in 52% of instances.12 Only 17% of diabetics who needed eye examinations had them, even after visiting their primary care provider.13 The Centers for Disease Control and Prevention (CDC) guidelines for vancomycin use were not followed 68% of the time.14 Safety also is an issue: in one study, we identified 6.5 adverse drug events per 100 admissions, and 28% were preventable15; for example, many patients received medications to which they had a known allergy. Clearly, there are many opportunities for improvement.

We believe that decision support delivered using information systems, ideally with the electronic medical record as the platform, will finally provide decision makers with tools making it possible to achieve large gains in performance, narrow gaps between knowledge and practice, and improve safety.16,17 Recent reviews have suggested that decision support can improve performance, although it has not always been effective.18,19 These reviews have summarized the evidence that computerized decision support works, in part, based on evidence domain. While this perspective has been very useful and has suggested, for example, that decision support focusing on preventive reminders and drug doses has been more effective than decision support targeting assistance regarding diagnosis, it does not tell one how best to deliver it.

In all the areas discussed above, we have attempted to intervene with decision support to improve care with some successes20,21 and many partial or complete failures.22,23,24 For the purposes of this report, we consider decision support to include passive and active referential information as well as reminders, alerts, and guidelines. Many others also have evaluated the impact of decision support,19,20 and we are not attempting to provide a comprehensive summary of how decision support can improve care but rather to provide our perspective on what worked and what did not.19 Thus, the goal of this report is to present generic lessons from our experiences that may be useful to others, including informaticians, systems developers, and health care organizations.

Study Site

Brigham and Women's Hospital (BWH) is a 720-bed tertiary care hospital. The hospital has an integrated hospital information system, accessed via networked desktop personal computers, that provides clinical, administrative, and financial functions.25,26 A physician order entry application was implemented initially in 1993.27,28 Physicians enter all patient orders into this application, with the majority being entered in coded form. The information system in general, and the physician order entry system in particular, delivers patient-specific decision support to clinicians in real time. Most active decision support to date has focused on drugs,29 laboratory testing,30,22 and radiology procedures.24 In addition, a wide array of information is available online for physicians to consult, including literature searching, Scientific American Medicine, and the Physician's Desk Reference, among others; these applications are used hundreds of times daily. In the ambulatory practices associated with the hospital, we have developed an electronic medical record, which is the main record used in most practices31 and which includes an increasing amount of decision support.32

We currently are in the process of developing additional applications for the Partners network, which includes BWH and Massachusetts General Hospital, several smaller community hospitals, and Partners Community Healthcare, a network of more than 1,000 physicians across the region. These include a Longitudinal Medical Record, which will serve as a network-wide record across the continuum of care and will include ambulatory order entry, an enterprise master patient index, and a clinical data repository.31

Ten Commandments for Effective Clinical Decision Support

1. Speed Is Everything.

We have found repeatedly,33 as have others,34 that the speed of an information system is the parameter that users value most. If the decision support is wonderful, but takes too long to appear, it will be useless. When infrastructure problems slow the speed of an application, user satisfaction declines markedly. Our goal is subsecond “screen flips” (the time it takes to transition from one screen to the next), which appears anecdotally to be the threshold that is important to our users. While this may be a difficult standard to achieve, it should be a primary goal.

Evidence supporting this comes, in part, from user surveys regarding computerized physician order entry. In one such survey, we found that the primary determinant of user satisfaction was speed and that this rated much higher than quality improvement aspects.35 In fact, users perceived physician order entry primarily as an efficiency technology,35 even though we found in a formal time–motion study that it took users significantly longer to write orders using the computer than with paper, in part, because many screens were involved.36 Others have had similar results.37 Thus, while the hospital administration and clinical leadership's highest priorities are likely to be costs and quality, the top priority of users will be the speed of the information system.

2. Anticipate Needs and Deliver in Real Time.

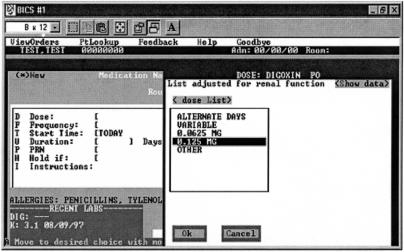

It is not enough for the information a provider needs simply to be available someplace in the system; applications must anticipate clinician needs and bring information to clinicians at the time they need it (Figure 1). All health professionals in the United States face increasing time pressure and can ill afford to spend even more time seeking bits of information. Simply making information accessible electronically, while better than nothing, has little effect.38 Systems can serve the critical role of gathering and making associations between pieces of information that clinicians might miss because of the sheer volume of data, for example, emphasizing a low potassium level in a patient receiving digoxin.

Figure 1.

Ordering digoxin. This screen from an application illustrates how an application can anticipate provider needs (here, the most recent digoxin and potassium levels and the dose forms that digoxin comes in) and bring this information to the clinician at the point of care.
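The idea of gathering related results at the moment of ordering can be sketched in a few lines. This is a minimal illustration, not the application shown in Figure 1: the `LabResult` record, the function names, and the 3.5 mmol/L potassium cutoff are all assumptions introduced here for the example and are not clinical guidance.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import Optional

# Hypothetical cutoff for illustration only; not taken from the article.
LOW_POTASSIUM_MMOL_L = 3.5


@dataclass
class LabResult:
    test: str          # e.g. "potassium" or "digoxin"
    value: float
    drawn_at: datetime


def most_recent(results: list[LabResult], test: str) -> Optional[LabResult]:
    """Return the latest result for the named test, or None if there is none."""
    matching = [r for r in results if r.test == test]
    return max(matching, key=lambda r: r.drawn_at, default=None)


def digoxin_order_context(results: list[LabResult]) -> dict:
    """Collect what an ordering screen could show next to a digoxin order."""
    potassium = most_recent(results, "potassium")
    digoxin = most_recent(results, "digoxin")
    return {
        "latest_potassium": potassium,
        "latest_digoxin": digoxin,
        # Emphasize the association a busy clinician might otherwise miss.
        "low_potassium_flag": potassium is not None
        and potassium.value < LOW_POTASSIUM_MMOL_L,
    }
```

The point of the sketch is that the clinician asks for nothing: the lookup is triggered by the order itself, and the flag is computed from data already in the system.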

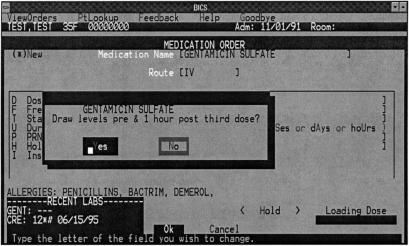

Optimal clinical decision support systems should also have the capability to anticipate the subtle “latent needs” of clinicians in addition to more obvious needs. “Latent needs” are needs that are present but have not been consciously realized. Decision support provided through the computer can fill many such latent needs, for example, notifying the clinician to lower a drug dose when a patient's kidney function worsens.39 Another example of a latent need arises when one order or piece of information suggests that an action should follow (Figure 2).40 Overhage et al.40 have referred to these orders or information as corollary orders. Like Overhage et al.,40 our group has found that displaying suggested orders across a wide range of order types substantially increases the likelihood that the desired action will occur.41

Figure 2.

Example of a corollary order. Ordering gentamicin sulfate prompts a suggestion for a corollary order: drawing levels before and one hour after the third dose.
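A corollary order can be thought of as a lookup from a triggering order to the follow-up orders worth suggesting. The sketch below assumes a simple in-memory rule table; the table structure and function name are hypothetical, and only the gentamicin entry is drawn from the Figure 2 caption.

```python
# Hypothetical rule table: triggering order -> suggested follow-up orders.
# The gentamicin entry mirrors the Figure 2 caption; the structure is assumed.
COROLLARY_RULES: dict[str, list[str]] = {
    "gentamicin sulfate": [
        "gentamicin level before the third dose",
        "gentamicin level one hour after the third dose",
    ],
}


def suggest_corollary_orders(order_name: str, existing_orders: set[str]) -> list[str]:
    """Return follow-up orders to offer, skipping any that are already ordered."""
    suggestions = COROLLARY_RULES.get(order_name.lower(), [])
    return [s for s in suggestions if s not in existing_orders]
```

Filtering out orders that already exist keeps the suggestion from becoming noise, which matters given how quickly clinicians learn to ignore unhelpful prompts.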

3. Fit into the User's Workflow.

Success with alerts, guidelines, and algorithms depends substantially on integrating suggestions with practice.38 We have built a number of “stand-alone” guidelines for a variety of conditions, including sleep apnea, for example. However, use counts have been very low, even for excellent guidelines. The vancomycin guideline mentioned above was available for passive consultation, yet was rarely used; only after bringing the guideline to the user on a single screen at the time the clinician was in the process of ordering vancomycin did we see an impact. Understanding clinician workflow, particularly when designing applications for the outpatient setting, is critical.

4. Little Things Can Make a Big Difference.

The point here is that usability matters a great deal. Developers must make it easy for a clinician to “do the right thing.” In the human factors world, usability testing has had a tremendous impact on improving systems,42 and what appear to be nuances can make the difference between success and failure. While it should be obvious that clinical computing systems are no different, usability testing has not necessarily been a routine part of designing them. We have had many experiences in which a minor change in the way screens were designed had a major impact on provider actions. For example, in one application, when the default was set to have clinicians enter the diagnosis as free text rather than from a code list below, a much larger proportion of diagnoses were entered as free text than when we had defaulted to the most likely coded alternative. This, in turn, had downstream consequences for decision support; for example, providers did not get reminders about diabetes if diabetes was entered as free text. In a more dramatic example, the Regenstrief group found that displaying computerized reminders suggesting vaccinations for inpatients had no impact when the reminders were easy to ignore, but when the screen flow was altered to make it harder for physicians to ignore the reminder suggestions, they saw a large positive impact.43

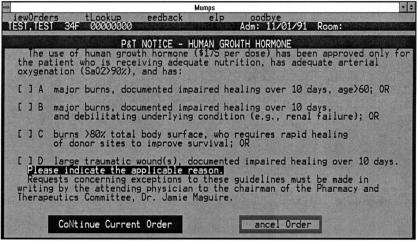

5. Recognize that Physicians Will Strongly Resist Stopping.

Across a wide array of interventions, we have found that physicians strongly resist suggestions not to carry out an action when we do not offer an alternative, even if the action they are about to carry out is virtually always counterproductive. In a study of decision support regarding abdominal radiography,24 suggestions that no radiograph be ordered at all were accepted only 5% of the time, even though studies ordered when the alerts were overridden yielded almost no useful findings. Similarly, for tests that were clearly redundant with another test of the same kind performed earlier that day, we found that clinicians overrode reminders a third of the time, even though such results were never useful (Figure 3).44

Figure 3.

Alert for a “redundant” laboratory order. We have found repeatedly that physicians resist stopping; in this instance, alerts for redundant orders often are overridden when there is no alternative plan of action suggested, even when the testing almost never identifies anything useful.
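The redundancy check behind an alert of this kind can be sketched as a comparison between the time of the new order and the time the same test was last performed. The minimum repeat intervals below are hypothetical placeholders, not the rules used in the study, and the function name and message wording are likewise assumptions.

```python
from datetime import datetime, timedelta
from typing import Optional

# Hypothetical minimum repeat intervals, for illustration only.
MIN_REPEAT_INTERVAL = {
    "digoxin level": timedelta(hours=24),
    "tsh": timedelta(days=30),
}


def redundancy_alert(test: str, ordered_at: datetime,
                     last_done_at: Optional[datetime]) -> Optional[str]:
    """Return an alert message if the test repeats too soon, else None."""
    interval = MIN_REPEAT_INTERVAL.get(test)
    if interval is None or last_done_at is None:
        return None  # no rule for this test, or no earlier result on file
    elapsed = ordered_at - last_done_at
    if elapsed < interval:
        hours = elapsed.total_seconds() / 3600
        return (f"{test} was last performed {hours:.0f} h ago; "
                "cancel this order, or override to proceed.")
    return None
```

The sketch returns a message rather than blocking the order, in keeping with the general approach of letting clinicians override.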

In counter detailing about drugs, we have also found that if clinicians have strong beliefs about a medication, and either no alternative or an unpalatable alternative is offered, clinicians routinely override suggestions not to order the original medication. One example was intravenous ketorolac, which clinicians believed was more effective than oral nonsteroidals for pain relief, despite little evidence to support this.

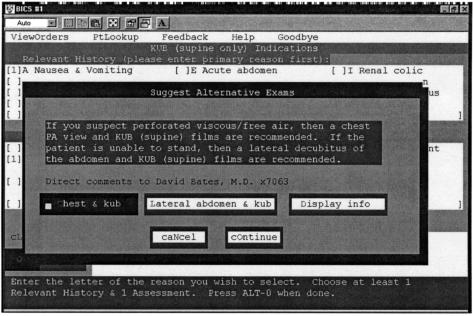

Our general approach has been to allow clinicians to exercise their own judgment and override nearly all reminders and to “get past” most guidelines. However, situations arise in which this may not be desirable. For example, we noted several years ago that we were using approximately $600,000 of human growth hormone per year, even though we are not a pediatric hospital. A drug utilization evaluation found that most of this was being used by surgeons in the intensive care unit who believed that giving daily growth hormone to patients with “failure to wean” from the ventilator helped them get off the ventilator more rapidly. However, an examination of the evidence found that there was only one abstract to support this belief. Subsequently, the Pharmacy and Therapeutics Committee formed a group that developed a single-screen, computerized guideline for this medication. Because the medication is never needed urgently and the costs involved were so large ($180 per dose), the guideline stated that one of the indications on the screen had to be present, or the user would have to apply in writing to the chairman of the Pharmacy and Therapeutics Committee (Figure 4). This had a large impact, and utilization decreased to about one third of the former level. However, over time, utilization began to climb again. An evaluation by pharmacy found that users had begun to “game the system,” that is, to state that one of the indications under the guideline was present when, in fact, it was not. However, because order entry requires physicians to log in, it was possible to identify the ordering clinicians involved. Targeted discussions with these physicians quickly resulted in utilization returning to its earlier, reduced level. Thus, in such situations, ongoing monitoring may be required.

Figure 4.

Computerized guidelines. These guidelines for the use of human growth hormone were developed to help prevent inappropriate and unnecessary use of this expensive medication. Introducing computerized guidelines to the process of ordering human growth hormone decreased utilization by two thirds.

6. Changing Direction Is Easier than Stopping.

In many situations, we have found that the computer is an enormously powerful tool for getting physicians to “change direction,” and large savings can result. Changing physician behavior in this way is especially effective when the issue at hand is one attribute of an order the physician probably does not have strong feelings about, such as the dose, route, or frequency of a medication or the views in a radiographic study. For example, after doing an equivalence study and surveying patients (unpublished data), the Pharmacy and Therapeutics Committee at our institution determined that intravenous ondansetron was as effective when given at a lower dose three times daily as when given four times daily, the previous routine. Simply changing the default dose and frequency on the ordering screen had a dramatic effect on physician behavior. In the four weeks before changing the default frequency, 89.7% of the orders for ondansetron were for four times daily and 5.9% were for three times daily. In the four weeks after changing the default frequency, 13.7% of ondansetron orders were for four times daily, and 75.3% were for three times daily. The cost savings associated with this change were approximately $250,000 in the first year after intervention alone.41

Another example of decision support successfully changing physician direction involves abdominal radiograph orders (Figure 5). For abdominal films, for certain indications, only one view is needed, while for other indications both upright and flat films are needed.24 In one study, the computer order entry system asked the clinician to provide his or her indication, then suggested the appropriate views if the choice of views did not match the indication. Clinicians accepted these suggestions almost half the time.24

Figure 5.

Changing direction. In the case of abdominal radiography, suggesting the appropriate views given the indication often results in a change.

7. Simple Interventions Work Best.

Our experience has been that if you cannot fit a guideline on a single screen, clinicians will not be happy about using it. Writers of paper-based guidelines do not have such constraints and tend to go on at some length. While there is some utility in having the complete backup guideline as reference material, as McDonald and Overhage have pointed out,45 such guidelines are not usable online without modification, especially during the provision of routine care. An example of a suitably brief statement would be “use aspirin in patients status-post myocardial infarction unless otherwise contraindicated.” We have built a number of computerized guidelines,14,22,24,46 and have repeatedly found this issue to be important. In one study, when clinicians ordered several frequently overused rheumatologic tests on inpatients, we developed a Bayesian program that asked clinicians to enter probabilities of disease.46 However, clinicians needed to provide several pieces of information to get to the key decision support. Eleven percent of intervention orders were cancelled versus less than 1% in the controls. However, we found that in instances in which they cancelled, physicians never got to the decision support piece of the intervention; all of the effect appeared to be due to a barrier effect.

Another guideline we evaluated was an adaptation of the CDC guideline for prescribing vancomycin.14 Getting the guideline to fit on a single screen required substantial condensation and simplification, but we believe this was a key factor in convincing clinicians to use it. In a randomized, controlled trial evaluating the reduction of vancomycin use using computer guidelines, intervention physicians wrote 32% fewer orders than control physicians and had 28% fewer patients for whom a vancomycin order was initiated or renewed. The duration of vancomycin therapy was also 36% lower for patients of the intervention physicians than for patients of the control physicians.14 Based on a small number of chart reviews, these decreases were clinically appropriate, although a significant amount of inappropriate use persisted.

8. Ask for Additional Information Only When You Really Need It.

To provide advanced decision support—and especially to implement guidelines—one frequently needs data that are not already in the information system and can be obtained only from the clinician. Examples are the weight of a patient about to be prescribed a nephrotoxic drug and the symptom status of a patient with congestive heart failure. Some situations are perceived as sufficiently risky by clinicians that they will be willing to provide a number of pieces of information, for example, if they are prescribing chemotherapy. However, in many others, the clinician may not want to get a piece of information that is not immediately at hand, such as the weight or whether a young woman could be pregnant. In a trial we performed of guided medication dosing for inpatients with renal insufficiency,39 we needed to have the patient weight to make suggestions (other variables including age, gender, and creatinine level were all available). Getting consensus that it was acceptable to demand this was in many ways the most difficult part of the project. In approximately one third of patients, no weight was entered at first, although this proportion eventually fell. Overall, our experience has been that the likelihood of success in implementing a computerized guideline is inversely proportional to the number of extra data elements needed. Plans must be made to cover situations in which the provider does not give a piece of information for some reason, and, over time, it makes sense to try to make sure that key pieces of data (such as weight) are collected as part of routine care.
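The dependence on a single missing data element can be made concrete with a short sketch. The article does not say which renal function estimate the trial used; the Cockcroft-Gault equation is assumed here only because it relies on the same four inputs (age, gender, creatinine level, and weight). The function names and the fallback message are hypothetical.

```python
from typing import Optional


def creatinine_clearance(age_years: float, sex: str,
                         serum_creatinine_mg_dl: float,
                         weight_kg: Optional[float]) -> Optional[float]:
    """Estimate creatinine clearance (mL/min) with the Cockcroft-Gault equation.

    Returns None when weight is missing, so the caller can fall back
    instead of blocking the order.
    """
    if weight_kg is None:
        return None
    clearance = ((140 - age_years) * weight_kg) / (72 * serum_creatinine_mg_dl)
    if sex == "female":
        clearance *= 0.85  # standard Cockcroft-Gault adjustment
    return clearance


def dosing_message(clearance: Optional[float]) -> str:
    """Hypothetical fallback policy: ask for the weight, never block."""
    if clearance is None:
        return "Weight not recorded: no renal dose suggestion; please enter weight."
    return f"Estimated creatinine clearance: {clearance:.0f} mL/min"
```

For a 60-year-old man weighing 72 kg with a creatinine of 1.0 mg/dL, the estimate is 80 mL/min; with the weight missing, the sketch degrades to a prompt rather than failing, which is one way to plan for data the provider does not supply.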

9. Monitor Impact, Get Feedback, and Respond.

If reminders are to be delivered, there should be a reasonable probability that they will be followed, although this probability should likely vary substantially according to the type of reminder. For strongly “action-oriented” suggestions, we try to have clinicians respond positively more than approximately 60% of the time; this is a threshold we reached empirically and somewhat arbitrarily. For other situations, for example when suggesting that a specific type of utilization be avoided, lower thresholds may be reasonable. It is clear that if numerous suggestions are delivered that rarely are useful, clinicians will simply tune them out and miss even very important suggestions.47 For example, with drug–drug interactions in pharmacies, so many notifications are delivered to pharmacists with similar priority levels that pharmacists have been found to override a third of reminders about a life-threatening interaction.48 Therefore, we carefully evaluate and prune our knowledge base. For drug–drug interactions, we have downgraded the severity level for many. Achieving the right balance between over- and underalerting is difficult and should be an important topic for further research.

Many of our interventions did not turn out as expected and had lower impact than anticipated, for a variety of reasons. We have learned that it is essential to track impact early when supplying decision support by assessing how often suggestions are followed, and then make appropriate midcourse corrections. For instance, when presenting reminders to use aspirin in patients with coronary artery disease, we found that a major reason for failure to comply was that patients were already receiving warfarin, which we should have anticipated but did not.

10. Manage and Maintain Your Knowledge-based Systems.

Maintaining the knowledge within the system and managing the individual pieces of the system are critical to successful delivery of decision support. We have found it useful to track the frequency of alerts and reminders and user responses and have someone, usually in information systems, evaluate the resulting reports on a regular basis. Thus, if it becomes clear that a drug–drug interaction is suddenly coming up tens of times per day yet is always being overridden, an appropriate corrective action can be taken. The effort required to monitor and address issues in such systems is considerable and is easy to underestimate. It is also critical to keep up with the pace of change of medical knowledge. We have attempted to assign each area of decision support to an individual, and require the individual to assess their assignment periodically to ensure that the knowledge base remains applicable.
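The kind of regular report described here can be sketched as a tally of how often each alert fires and how often it is accepted. The event format, the function name, and the `min_fired` cutoff are assumptions made for illustration; the 0.60 default echoes the approximate acceptance target mentioned earlier for action-oriented suggestions and would need tuning per alert type.

```python
from collections import Counter


def alerts_needing_review(events: list[tuple[str, bool]],
                          min_acceptance: float = 0.60,
                          min_fired: int = 10) -> list[tuple[str, int, float]]:
    """Summarize (alert_id, accepted) events and flag low-acceptance alerts.

    Returns (alert_id, times_fired, acceptance_rate) tuples, worst first.
    Alerts that fired fewer than min_fired times are skipped as too sparse.
    """
    fired = Counter()
    accepted = Counter()
    for alert_id, was_accepted in events:
        fired[alert_id] += 1
        if was_accepted:
            accepted[alert_id] += 1
    flagged = [
        (alert_id, count, accepted[alert_id] / count)
        for alert_id, count in fired.items()
        if count >= min_fired and accepted[alert_id] / count < min_acceptance
    ]
    return sorted(flagged, key=lambda row: row[2])
```

An alert that fires often and is always overridden surfaces at the top of the list, giving the person responsible for that area of the knowledge base a concrete starting point for review.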

Discussion

The costs of medical care continue to rise, and society is no longer willing to give the medical profession a blank check. An early consequence of rising costs was the increasing penetration of managed care, which has attempted to minimize the provision of unnecessary care, while providing the care that is important. However, even in most managed care situations, many unnecessary things get done, while other effective interventions do not get carried out in part because the number of potentially beneficial things to accomplish is so large that physicians cannot effectively keep track of them all. Information systems represent a critical and underused tool for managing utilization and improving both efficiency and quality.1

Especially when combined with an electronic record, decision support is one of the most potent ways to change physician behavior. Some find this approach threatening and fear the loss of physician autonomy.49 While such fears may not be entirely unfounded, we think the computer can essentially provide a “better cockpit” for clinicians, which can help them avoid errors, be more thorough, and stay closer to the findings of the evidence base. A one-dimensional scale of “degrees of computerization” has been suggested: (1) The computer offers no assistance; humans must do it all. (2) The computer offers a complete set of action alternatives, and (3) narrows the selection down to a few, (4) suggests one, and (5) executes that selection if the human approves, or (6) allows the human a restricted time to veto before automatic execution, or (7) executes automatically, then necessarily informs the human, or (8) informs him or her after execution only if he or she asks, or (9) informs him or her after execution only if it, the computer, decides to. (10) The computer decides everything and acts autonomously, ignoring the human.50

Most of medicine in the United States is still at level 1, which we believe is far from optimal. We have found that clinical decision support is most likely to be accepted if it approaches level 5, and while there are comparatively few situations in which this can be achieved, they are very high yield. For instance, there are many “consequent actions,” in which one action suggests that another is very likely indicated, for example, ordering aminoglycoside levels after ordering aminoglycosides, and physicians are much more likely to carry out such actions when appropriate suggestions are made.40 In addition, there are many straightforward situations in which it makes sense to make it as easy as possible for a clinician to carry out an action, for example, ordering a mammogram for a 55-year-old woman. In other instances, level 2 or 3 may be optimal.

Several important, recent trials from England have identified no benefit with computer-based guidelines in chronic diseases in primary care; the studies targeted hypertension, angina, and asthma.51,52,53 In the most recent of these evaluations, the investigators concluded that key issues were the timing of triggers, ease of use of the system, and helpfulness of the content.53 These fit with our comments regarding workflow and making messages highly directive. Our own results with chronic diseases and complex guidelines have been largely similar.38 These results are disappointing because chronic disease management is so important; perhaps the biggest challenge is identifying accurately where the patient is in their care so that helpful suggestions can be made. This remains a vitally important frontier in decision support.

Surprises

A number of features we believed would be valued have either caused major problems, or received relatively little use, or both. Regarding ordering, a major issue was implementation of “free text” ordering—orders that were written simply using free text. While we developed a parser that worked reasonably well, orders written using this mechanism often proved difficult to categorize according to type. As a consequence, relevant decision support was not delivered. Moreover, in these instances, the computer did not know to which ancillary area to send such orders and they thus ended up in a generic pile. However, the clinician had the impression that his or her order had been communicated. A related feature with similar problems was personal order sets. Because of the way the application was designed, such order sets ended up bypassing most decision support. To our surprise, neither free text orders nor personal order sets were used frequently or were highly valued.35 As a result, we no longer allow either free text orders or personal order sets, although divisions and departments can develop order sets.

Another tool that we thought would be highly valued was links to referential information supporting the decision support. Although this has been used to date less often than we would have hoped, users do rate it as important to them, and part of the reason these get relatively little use may be that the information we have displayed has, as of yet, been quite limited. We currently are building a tool to allow real-time links to Web-based evidence, which should be much more comprehensive and may be more highly valued.

Another surprise came in delivering reminders about redundant tests.22 We found that these reminders were effective when delivered, but that the overall impact of the decision support was much lower than expected, because the system was often bypassed by test orders going directly to the laboratory, and because tests ordered using order sets were not put through these screens.

Limitations and Conclusions

Our findings are limited in that many of our experiences to date come from a single, large, tertiary care institution and one large integrated delivery system, so that issues in other types of institutions may vary. Further, a major part of our user group consists of residents in training, although many attending physicians use the system on a regular basis.

We conclude that decision support provided using information systems represents a powerful tool for improving clinical care and patient outcomes, and we are hopeful that these thoughts will be useful to others building such systems. Moving toward more evidence-based practice has the potential to improve quality and safety while simultaneously reducing costs. We believe that implementation of computerized decision support through electronic medical records will be the key to actually accomplishing this. However, much remains to be learned about how to best influence physician behavior using decision support—especially around implementing complex guidelines—and this is likely to become an increasingly important area of research as we struggle to provide higher-quality care at lower cost.

Supported in part by RO1 HS07107 from the Agency for Health Care Policy and Research.

References

- 1. Institute of Medicine. Crossing the Quality Chasm: A New Health System for the 21st Century. Washington, DC: National Academy Press, 2001.

- 2. Lomas J, Sisk JE, Stocking B. From evidence to practice in the United States, the United Kingdom, and Canada. Milbank Q. 1993;71:405–10.

- 3. Schectman JM, Elinsky EG, Bartman BA. Primary care clinician compliance with cholesterol treatment guidelines. J Gen Intern Med. 1991;6:121–5.

- 4. Troein M, Gardell B, Selander S, Rastam L. Guidelines and reported practice for the treatment of hypertension and hypercholesterolaemia. J Intern Med. 1997;242:173–8.

- 5. Marcelino JJ, Feingold KR. Inadequate treatment with HMG-CoA reductase inhibitors by health care providers. Am J Med. 1996;100:605–10.

- 6. Cabana MD, Rand CS, Powe NR, et al. Why don't physicians follow clinical practice guidelines? A framework for improvement. JAMA. 1999;282:1458–65.

- 7. Grimshaw JM, Russell IT. Effect of clinical guidelines on medical practice: a systematic review of rigorous evaluations. Lancet. 1993;342:1317–22.

- 8. Bradford WD, Chen J, Krumholz HM. Under-utilisation of beta-blockers after acute myocardial infarction. Pharmacoeconomic implications. Pharmacoeconomics. 1999;15:257–68.

- 9. Schoenenberger RA, Tanasijevic MJ, Jha A, Bates DW. Appropriateness of antiepileptic drug level monitoring. JAMA. 1995;274:1622–6.

- 10. Canas F, Tanasijevic M, Ma'luf N, Bates DW. Evaluating the appropriateness of digoxin level monitoring. Arch Intern Med. 1999;159:363–8.

- 11. Bates DW, Boyle DL, Rittenberg E, et al. What proportion of common diagnostic tests appear redundant? Am J Med. 1998;104:361–8.

- 12. Solomon CG, Goel PK, Larsen PR, Tanasijevic M, Bates DW. Thyroid function testing in an ambulatory setting: identifying suboptimal patterns of use [abstract]. J Gen Intern Med. 1996;11(suppl):88.

- 13. Karson A, Kuperman G, Horsky J, Fairchild DG, Fiskio J, Bates DW. Patient-specific computerized outpatient reminders to improve physician compliance with clinical guidelines. J Gen Intern Med. 2000;15(suppl 1):126.

- 14. Shojania KG, Yokoe D, Platt R, Fiskio J, Ma'luf N, Bates DW. Reducing vancomycin utilization using a computerized guideline: results of a randomized control trial. J Am Med Inform Assoc. 1998;5:554–62.

- 15. Bates DW, Cullen D, Laird N, et al. Incidence of adverse drug events and potential adverse drug events: implications for prevention. JAMA. 1995;274:29–34.

- 16. Bates DW, Cohen M, Leape LL, Overhage JM, Shabot MM, Sheridan T. Reducing the frequency of errors in medicine using information technology. J Am Med Inform Assoc. 2001;8:299–308.

- 17. Middleton B, Renner K, Leavitt M. Ambulatory practice clinical information management: problems and prospects. Healthc Inf Manag. 1997;11(4):97–112.

- 18. Hunt DL, Haynes RB, Hanna SE, Smith K. Effects of computer-based clinical decision support systems on physician performance and patient outcomes: a systematic review. JAMA. 1998;280:1339–46.

- 19. Johnston ME, Langton KB, Haynes RB, Mathieu A. Effects of computer-based clinical decision support systems on clinician performance and patient outcome. A critical appraisal of research. Ann Intern Med. 1994;120:135–42.

- 20. Bates DW, Kuperman G, Teich JM. Computerized physician order entry and quality of care. Qual Manag Healthc. 1994;2(4):18–27.

- 21. Bates DW, Teich J, Lee J, et al. The impact of computerized physician order entry on medication error prevention. J Am Med Inform Assoc. 1999;6:313–21.

- 22. Bates DW, Kuperman G, Rittenberg E, et al. A randomized trial of a computer-based intervention to reduce utilization of redundant laboratory tests. Am J Med. 1999;106:144–59.

- 23. Solomon DH, Shmerling RH, Schur P, Lew R, Bates DW. A computer-based intervention to reduce unnecessary serologic testing. J Rheumatol. 1999;26:2578–84.

- 24. Harpole LH, Khorasani R, Fiskio J, Kuperman GJ, Bates DW. Automated evidence-based critiquing of orders for abdominal radiographs: impact on utilization and appropriateness. J Am Med Inform Assoc. 1997;4:511–21.

- 25. Safran C, Slack WV, Bleich HL. Role of computing in patient care in two hospitals. MD Comput. 1989;6:141–8.

- 26. Glaser JP, Beckley RF, Roberts P, Marra JK, Hiltz FL, Hurley J. A very large PC LAN as the basis for a hospital information system. J Med Syst. 1991;15:133–7.

- 27. Teich JM, Hurley JF, Beckley RF, Aranow M. Design of an easy-to-use physician order entry system with support for nursing and ancillary departments. Proc Annu Symp Comput Appl Med Care. 1992:99–103.

- 28. Teich JM, Spurr CD, Flammini SJ, et al. Response to a trial of physician based inpatient order entry. Proc Annu Symp Comput Appl Med Care. 1993:316–20.

- 29. Bates DW, Leape LL, Cullen DJ, et al. Effect of computerized physician order entry and a team intervention on prevention of serious medication errors. JAMA. 1998;280:1311–6.

- 30. Bates DW, Kuperman G, Jha A, et al. Does the computerized display of charges affect inpatient ancillary test utilization? Arch Intern Med. 1997;157:2501–8.

- 31. Teich JM, Sittig DF, Kuperman GJ, Chueh HC, Zielstorff RD, Glaser JP. Components of the optimal ambulatory care computing environment. Medinfo. 1998;9:t-7.

- 32. Spurr CD, Wang SJ, Kuperman GJ, Flammini S, Galperin I, Bates DW. Confirming and delivering the benefits of an ambulatory electronic medical record for an integrated delivery system. TEPR 2001 Conf Proc. 2001. (CD-ROM).

- 33. Lee F, Teich JM, Spurr CD, Bates DW. Implementation of physician order entry: user satisfaction and usage patterns. J Am Med Inform Assoc. 1996;3:42–55.

- 34. McDonald CJ. Protocol-based computer reminders, the quality of care and the non-perfectability of man. N Engl J Med. 1976;295:1351–5.

- 35. Lee F, Teich JM, Spurr CD, Bates DW. Implementation of physician order entry: user satisfaction and self-reported usage patterns. J Am Med Inform Assoc. 1996;3:42–55.

- 36. Shu K, Boyle D, Spurr C, et al. Comparison of time spent writing orders on paper with computerized physician order entry. Medinfo. 2001;10(pt 2):2–11.

- 37. Overhage JM, Perkins S, Tierney WM, McDonald CJ. Controlled trial of direct physician order entry: effects on physicians' time utilization in ambulatory primary care internal medicine practices. J Am Med Inform Assoc. 2001;8:361–71.

- 38. Maviglia SM, Zielstorff RD, Paterno M, Teich JM, Bates DW, Kuperman GJ. Automating complex guidelines for chronic disease: lessons learned. J Am Med Inform Assoc. 2003;10:154–65.

- 39. Chertow GM, Lee J, Kuperman GJ, et al. Guided medication dosing for inpatients with renal insufficiency. JAMA. 2001;286:2839–44.

- 40. Overhage JM, Tierney WM, Zhou XH, McDonald CJ. A randomized trial of “corollary orders” to prevent errors of omission. J Am Med Inform Assoc. 1997;4:364–75.

- 41. Teich JM, Merchia PR, Schmiz JL, Kuperman GJ, Spurr CD, Bates DW. Effects of computerized physician order entry on prescribing practices. Arch Intern Med. 2000;160:2741–7.

- 42. Norman DA. The Design of Everyday Things. New York: MIT Press, 2000.

- 43. Dexter PR, Perkins S, Overhage JM, Maharry K, Kohler RB, McDonald CJ. A computerized reminder system to increase the use of preventive care for hospitalized patients. N Engl J Med. 2001;345:965–70.

- 44. Bates DW, Kuperman GJ, Rittenberg E, et al. Reminders for redundant tests: results of a randomized controlled trial. Symp Comp Appl Med Care. 1995:935.

- 45. McDonald CJ, Overhage JM. Guidelines you can follow and trust: an ideal and an example. JAMA. 1994;271:872–3.

- 46. Solomon DH, Shmerling RH, Schur P, Lew R, Bates DW. A computer based intervention to reduce unnecessary serologic testing. J Rheumatol. 1999;26:2578–84.

- 47. Abookire SA, Teich JM, Sandige H, et al. Improving allergy alerting in a computerized physician order entry system. Proc AMIA Symp. 2000:2–6.

- 48. Cavuto NJ, Woosley RL, Sale M. Pharmacies and prevention of potentially fatal drug interactions. JAMA. 1996;275:1086–7.

- 49. Bogner MS (ed). Human Error in Medicine. Hillsdale, NJ: Lawrence Erlbaum Associates, 1994.

- 50. Sheridan TB, Thompson JM. People versus computers in medicine. In Bogner MS (ed). Human Error in Medicine. Hillsdale, NJ: Lawrence Erlbaum Associates, 1994, pp 141–59.

- 51. Montgomery AA, Fahey T, Peters TJ, MacIntosh C, Sharp DJ. Evaluation of computer based clinical decision support system and risk chart for management of hypertension in primary care: randomised controlled trial. BMJ. 2000;320:686–90.

- 52. Eccles M, McColl E, Steen N, et al. Effect of computerised evidence based guidelines on management of asthma and angina in adults in primary care: cluster randomised controlled trial. BMJ. 2002;325:941.

- 53. Rousseau N, McColl E, Newton J, Grimshaw J, Eccles M. Practise based, longitudinal, qualitative interview study of computerized evidence based guidelines in primary care. BMJ. 2003;326:314–8.