Abstract

This study evaluated functional benefits of bilateral stimulation in 20 children aged 3–14 years: 10 use two cochlear implants (CIs) and 10 use one CI and one hearing aid (HA). Localization acuity was measured with the minimum audible angle (MAA). Speech intelligibility was measured in quiet and in the presence of two-talker competing speech using the CRISP forced-choice test. Results show that both groups perform similarly when speech reception thresholds are evaluated. However, the benefit of wearing two devices compared with a single device (improved MAA and speech thresholds) appears to be significantly greater in the group with two CIs than in the bimodal group. Individual variability also suggests that some children perform similarly to normal-hearing children, while others clearly do not. Future advances in binaural fitting strategies and in speech processing schemes that maximize binaural sensitivity are likely to increase the binaurally driven advantages available to persons with bilateral CIs.

Keywords: Bilateral, Binaural, Cochlear implant, Hearing aid, Children, Speech, Localization

Cochlear implants (CIs) have become a powerful means of providing hearing to deaf persons. Although the vast majority of CI users can understand speech in quiet (Zeng, 2004; Stickney et al, 2004; Holt & Kirk, 2005) and report improved quality of life after implantation (Summerfield et al, 2002), they continue to have difficulty in many circumstances. For instance, most CI users have difficulty locating sounds in their environment; all sounds appear to come either directly from the implanted ear or from inside the head. In addition, their ability to understand speech in everyday noisy and reverberant environments is quite poor (e.g., Fu et al, 1998; Nelson & Jin, 2004; Stickney et al, 2004, 2005). Continuous efforts are being made to improve performance with single CIs by developing better speech processing strategies (e.g., Rubinstein & Hong, 2003; Green et al, 2005; Nie et al, 2005; Yang & Fu, 2005).

Bilateral CIs (BI-CIs) are also being provided to a growing number of patients in an attempt to improve quality of life and listening in everyday noisy situations. This clinical approach rests primarily on the assumption that, since normal-hearing people rely on two ears (binaural hearing) for sound localization and speech understanding in noise, deaf individuals should also receive input in both ears in order to maximize their performance. Bilaterally implanted adults show clear and significant benefits when using two CIs compared with a single CI. As in normal-hearing persons, speech understanding in noise is better in bilateral users when both ears are activated than in a monaural condition (Gantz et al, 2002; Tyler et al, 2002; Muller et al, 2002; Litovsky et al, 2004; Schleich et al, 2004). The benefits are thought to arise from a combination of effects, including the head shadow effect, binaural squelch and binaural summation, all of which can be achieved, at least to some extent, without fine-structure information or precise inter-aural time difference (ITD) resolution.

In adult patients, bilateral CIs typically yield a robust improvement over one CI in discriminating the right from the left hemifield (e.g., Gantz et al, 2002; Tyler et al, 2002). More stringent measures of localization precision using a multi-loudspeaker array have also been made in a number of studies; errors are generally smaller when bilateral CI users listen with both CIs than with either ear alone (e.g., van Hoesel & Tyler, 2003; Litovsky et al, 2004; Nopp et al, 2004). When a coordinated stimulation approach is used, such that both implants are controlled by a single research processor, adults with BI-CIs have excellent sensitivity to inter-aural level differences (ILDs), and many have very good sensitivity to ITDs (e.g., van Hoesel & Tyler, 2003; van Hoesel et al, 2002; Litovsky et al, 2005). Performance appears to depend on whether adults were exposed to binaural stimulation early in life. For example, some adults who had binaural experience before losing their hearing show excellent sensitivity to ITDs, even following a long period of auditory deprivation (Long et al, 2003; Litovsky et al, 2005). In contrast, adults who were deprived of hearing in early childhood, or born without hearing, have very poor sensitivity to ITDs (Litovsky et al, 2005; Jones et al, 2005).

The issue of early experience may be significant when bilateral CIs are provided to, and evaluated in, children. Our lab has been studying performance in children with bilateral CIs, some of whom are tested at a single interval following bilateral activation and others at repeated intervals over a period of 2 years or longer. All of these children received their two CIs in sequential procedures, typically several years apart. Hence, their auditory deprivation prior to having bilateral CIs is a 2-stage process: initial bilateral deafness followed by monaural deafness. Most of the children studied here were born without hearing, and therefore never had the opportunity to learn to hear with acoustic input or to use binaural cues.

In a recent study we found that, on measures of localization acuity, most children show benefit from bilateral CIs, and the amount of benefit depends on individual children’s experience both before and after implantation (Litovsky et al, 2006). From that work we concluded that children engage in a learning process for a period of time following the activation of bilateral hearing, during which they learn how to use bilateral information. Anecdotal observations in our lab suggest that, when bilateral hearing is first activated, the concept of “where” sounds come from is typically somewhat foreign and difficult for the children to grasp. Within the first 1–2 years, 70% of the children we studied appear to learn this very important function, and the majority of those children can eventually discriminate right/left source positions better with two ears than with a single ear (Litovsky et al, 2006). Because their auditory history is distinctive, these children differ from most adults with bilateral CIs, who can typically localize sounds within a few months of receiving their second CI. First, most of the children never experienced binaural acoustic stimulation, which may contribute to the difficulties they exhibit in learning to localize. Second, following sequential procedures they have to unlearn hearing with a single CI alone and learn a new set of cues that can be applied when bilateral stimulation is available.

With regard to speech understanding, very little is known about the potential benefits of bilateral CIs in children. If the auditory cues that underlie bilateral speech benefits do not require the same learning process, then such benefits might emerge very soon after bilateral activation.

An additional issue, also raised in our earlier work, concerns children who are fitted with a hearing aid (HA) in the non-implanted ear. This is often done to provide continued stimulation to that ear, but the extent to which performance in these children improves beyond that achieved with the CI alone is unknown. There is evidence that HAs in the non-implanted ear can offer benefits on measures of speech understanding in noise in adult listeners (e.g., Armstrong et al, 1997; Ching et al, 2001, 2004; Tyler et al, 2002; Kong et al, 2005) and on localization acuity (Ching et al, 2001; Tyler et al, 2002). Similarly, benefits have been seen in some children on tasks involving sound location identification and speech understanding in noise, although the effect sizes are very small (e.g., Ching et al, 2001). Kong et al. (2005) have suggested that HAs can improve performance because fine-structure acoustic information at low frequencies is combined with high-frequency envelope information from the CI, compensating for limitations in CI signal processing and electrode design. This electro-acoustic benefit clearly depends on the patient’s amount of usable low-frequency residual hearing, but the amount of residual hearing required for the benefits to emerge is not clear.

In the present paper we report findings from two groups of children: one with bilateral CIs (CI-CI) and a second, bimodal group who wear a CI in one ear and a hearing aid in the other (CI-HA). The purpose of this paper is to evaluate the potential benefits of bilateral and bimodal fittings, within and across groups, by comparing speech intelligibility in quiet and in the presence of competing speech. The competitors are placed either at the same location as the target speech or 90 deg to the side, near either the first-implanted ear or the second-implanted/hearing-aid ear. These configurations provide a means of assessing masking, as well as spatial release from masking (SRM), under bilateral and monaural listening conditions. For the same children, we also compare measures of localization acuity (MAA) gathered in a manner similar to that reported by Litovsky et al. (2006). Finally, information about individual children’s auditory histories and amplification is provided, to help the reader consider possible reasons for individual variability within each group.

Methods

Subjects (total N = 20):

Group 1, CI-CI

Ten children, ages 3 to 14 at the time of testing, participated. All children received their first CI at least one year before the second CI. Testing was conducted at various intervals following activation of the second CI. Of the 10 children, 9 were fitted with Nucleus devices: 7 with two N24 devices, one with two N22 devices, and one with an N22 in the first-implanted ear and an N24 in the second-implanted ear. One child was fitted with two Clarion devices. The speech processors had auto-sensitivity settings that activated the automatic gain control at 67 dB SPL or higher; hence the levels chosen for this study were systematically kept at 66 dB SPL or lower. One child (CIAE) was diagnosed with Waardenburg Type I but has no other disabilities. Table 1 includes the relevant demographic details for each participant. In addition, all children participated in intensive auditory-verbal and/or speech therapy for several years, and all were at their age-appropriate grade level and educated in a mainstream school environment.

Table 1.

Biographical Summary: CI-CI

| Subject Code | History | Date of Birth | Gender | Age at Visit (yrs) | Months Post-Activation of CIs | First CI | Second CI | Hearing Aid History |
|---|---|---|---|---|---|---|---|---|
| CIAA | ID NIHS at birth; diagnosis: fixed stapes bones & nerve damage | 10/28/1994 | Female | 9 | 1st CI: 57; 2nd CI: 9 | N24 in Right ear; activated Sep 1999 | N24 in Left ear; activated Dec 2002 | Worn from 14 mos to 4 yrs old |
| CIAB | ID at age 11 mos; unknown etiology | 9/11/1990 | Female | 14 | 1st CI: 93; 2nd CI: 23 | N22 in Right ear; activated Sep 1996 | N24 in Left ear; activated Jan 2003 | Bilateral fitting Jan 1992; minimal success |
| CIAC | ID at age 12 mos; unknown etiology; bilateral profound loss; Right ear worsened from 95 dB to over 110 dB at age 4.5 yrs | 10/14/1994 | Female | 10 | 1st CI: 76; 2nd CI: 22 | N22 in Right ear; activated May 1999 | N22 in Left ear; activated Feb 2003 | Bilateral fitting Dec 1995; worn but with little success |
| CIAE | ID at 11 mos; Waardenburg Type I; bilateral profound loss | 9/19/1997 | Female | 8 | 1st CI: 74; 2nd CI: 26 | N24 in Right ear; activated April 1999 | N24 Contour in Left ear; activated April 2003 | Bilateral fitting Sep 1998; worn until April 1999 but did not get much benefit |
| CIAG | ID at birth; progressive loss; etiology unknown | 8/9/2000 | Male | 4 | 1st CI: 29; 2nd CI: 12 | N24 in Right ear; activated May 2002 | N24 in Left ear; activated Sept 2003 | Bilateral fitting at 5 weeks of age |
| CIAT | ID at 17 mos; CMV | 9/23/1997 | Male | 7 | 1st CI: 26; 2nd CI: 3 | N24 in Left ear; activated Aug 2002 | N24 in Right ear; activated July 2004 | Bilateral fitting with minimal results |
| CIAY | ID at 3.1 yrs with a rapidly progressive SNHL | 4/9/1999 | Male | 6 | 1st CI: 13; 2nd CI: 3 | N24 in Right ear; activated June 2004 | N24 in Left ear; activated April 2005 | Bilateral fitting in Aug 2002; HL progressed and stronger aids were not beneficial a year later |
| CIBC | ID at 20 mos; etiology unknown | 11/23/1996 | Female | 8 | 1st CI: 75; 2nd CI: 3 | Clarion S-series in Left ear; activated Jan 1999 | Auria 90K in Right ear; activated Jan 2005 | Hearing aids were never worn |
| CIBI | ID at 9 mos; Mondini malformation | 12/7/2001 | Female | 3 | 1st CI: 44; 2nd CI: 10 | N24 in Right ear; activated Feb 2003 | N24 in Left ear; activated Oct 2004 | Bilateral fitting in Oct 2002 with Phonak Pico Forte |
| CIBO | ID at 26 mos; Enlarged Vestibular Aqueduct (EVA) Syndrome | 1/9/2000 | Female | 5 | 1st CI: 37; 2nd CI: 24 | N24 Contour in Right ear; activated Nov 2002 | N24 Contour in Left ear; activated Dec 2003 | Bilateral fitting in March 2002 with Widex Diva |

Loudness

The right and left speech processors were each programmed independently by the child’s clinician, and the ‘comfortable’ volume level for each unilateral program was recorded. Attempts to equalize loudness across the two ears were made with a number of children during early stages of the study. Because those measures were not always reliable, we at least verified that a centered auditory image was heard from the front location at a comfortable level. Prior to testing, each speech processor was activated separately, and the child was asked to report whether the loudness of a 60 dB SPL speech signal was comfortable. Both processors were then activated together and comfortable loudness was confirmed. The child was then presented with a speech sound from the front loudspeaker (0°) and asked to indicate its perceived location, to determine whether it appeared centered. If the perceived location was clearly skewed towards one side, the volume controls were adjusted until a comfortable and centered image was reported. This process was problematic for some of the CI-CI children, especially when testing took place within a few months of activation of the second CI (some children were reluctant to increase the volume or sensitivity settings of the processor on the second-implanted ear). These difficulties suggest that further work on loudness balancing is important, not only in research but also in clinical fitting approaches.

Group 2, CI-HA

Ten children, ages 6 to 14 at the time of testing, participated. All were identified as having a hearing loss by the age of 2 years and implanted between the ages of 1.5 and 8.5 years. Of the 10 participants, 8 had a Nucleus (22, 24 or Freedom) device and 2 had the Med-El C40+ device (see Table 2 for details regarding etiology, age, and type of CI and HA). The implant speech processor and the hearing aid were each programmed independently by the child’s clinician. As with the children with BI-CIs, loudness balancing was attempted by presenting a speech sound from the front loudspeaker (0°), asking the child to indicate the perceived loudness, and selecting levels consistent with the child’s report of the sound being comfortable. The “loudness balance” task was more difficult for some of the CI-HA children, since sound perceived through the two devices can be entirely different and therefore difficult to compare.

Table 2.

Biographical Summary: CI-HA

| Subject Code | History | Date of Birth | Gender | Age at Visit (yrs) | Time Post-Activation of CI (months) | Type of Device: First CI | Type of Device: Hearing Aids | Aided Thresholds in HA Ear (dB) |
|---|---|---|---|---|---|---|---|---|
| CIAD | ID at 19 mos; bilateral severe to profound loss gradually progressed over the next 6 mos. Not formally tested, but Waardenburg Syndrome is suspected | 12/10/1993 | Male | 10 | 91 | N22 in Left ear; activated Jan 1996 at age 2 yrs | Phonak 2P3AZ BTE in Right ear; fit July 2003 | 30–35 @ .25–4.0 kHz |
| CIAF | ID at age 11 mos; unknown etiology (mother suspects DPT immunization). Hearing in Left ear dropped off significantly at age 3 yrs; Right ear remained stable | 9/2/1997 | Female | 6 | 7 | N24 in Left ear; activated Sep 2003 at age 6 yrs | Oticon DigiFocus II Super Power in Right ear since Sept 2003; previously wore Phonak SonoForte BTE AU since identification | 80–100 @ .25–4.0 kHz |
| CIAO | ID at 1.5 yrs; meningitis | 10/12/1995 | Female | 8 | 29 | N24 in Left ear; activated Feb 2001 at age 5.5 yrs | Bilateral fitting in 1996; wears a Phonak SonoForte 2 in Right ear | 35–40 @ .25–.5 kHz; 50–60 @ 1–4 kHz |
| CIAR | ID at 7 mos; etiology unknown | 4/20/1995 | Male | 9 | 8 | Med-El C40+ in Right ear; activated Dec 2003 at age 8.5 yrs | Initially fitted bilaterally at 7 mos; currently wears a DigiFocus II in Left ear | 30–40 @ .25–4.0 kHz |
| CIAS | ID at 2 yrs; etiology unknown; hearing loss progressed within the first 2 yrs from mild to severe and then profound | 3/20/1990 | Male | 14 | 78 | N24 in Right ear; activated March 1998 at age 8 yrs | Initially fitted bilaterally with Unitron US80; subsequently fitted in Left ear with Phonak Supero 412 and Oticon Sumo; currently wears Widex P37 | 60 @ .25–1 kHz; 100 @ 2–4 kHz |
| CIBA | ID at 2 yrs of age; Connexin 26 mutation | 8/28/1995 | Male | 9 | 73 | N24 in Left ear; activated March 1999 at age 3.5 yrs | Initially fitted bilaterally with Phonak SonoForte in Aug 1997; in Nov 2004 fitted with Phonak Supero 412 in Right ear | 30–50 @ .25–4.0 kHz |
| CIBD | ID at 2 yrs of age; Mondini malformation. Identified with profound SNHL in right ear and severe fluctuating SNHL in left ear. At age 3 he bumped his head, and mother reports that he lost hearing in his left ear | 10/11/1994 | Male | 10 | 82 | N24 in Right ear; activated Oct 1998 at age 4 yrs | Fitted only in Left ear with Phonak Novo Forte E4 in 1996 | 30 @ .25–3.0 kHz |
| CIBH | ID at birth; Mondini malformation | 10/2/1998 | Male | 6 | 65 | Med-El C40+ in Left ear; activated March 2000 at age 17 mos | Bilateral fitting at 3 mos; after first CI, continued use in Right ear | 55 @ .25 kHz; 80–100 @ .5–4.0 kHz |
| CIBK | ID at 17 mos; Connexin 26 mutation | 1/3/1999 | Male | 6 | 56 | N24 in Right ear; activated Feb 2001 at age 2 yrs | Initially fitted bilaterally with Oticon DigiFocus II in June 2000; continued use in Left ear after first CI | 60 @ .25–1.0 kHz; 80–100 @ 2–4 kHz |
| CIBL | ID at age 12 mos in left ear and at 7 yrs in right ear; Cogan’s Syndrome | 6/9/1993 | Female | 12 | 31, except a 3-mo break at 23–26 mos due to device failure | Freedom in Left ear; activated June 2005 at age 8.5 yrs | Fit monaurally in Sept 2000 with Phonak Supero, Right ear only | 40 @ .5–3 kHz; 80 @ 4 kHz |

Testing environment

Testing was conducted in sound-treated booths (IAC; 1.8 × 1.8 m or 2.8 × 3.25 m) with a reverberation time (RT60) of 250 ms. Subjects sat at a small table facing the loudspeakers. All testing was conducted using a “listening game” platform in which interactive computer software engaged the child in the tasks and provided feedback on every trial. The child was asked to keep the head oriented towards the front; if noticeable head movement occurred, data from that trial were discarded and an additional trial was presented in that condition.

Design and testing intervals

Ideally, data collection for the CI-CI group would have taken place for all subjects after the same amount of bilateral experience. Because we depended on participants’ willingness to enroll in the study, personal constraints dictated much of the timing; hence the number of months after activation of the second CI at the time of testing varies across subjects. Similarly, the ages and amount of auditory exposure of children in the CI-HA group varied. During each visit to the lab, children were tested on a number of measures. The speech measures were given higher priority; hence MAA data are not available for four of the CI-CI and two of the CI-HA children. To avoid possible effects of learning, attention, fatigue, loss of interest in the tasks and other confounds, testing was carefully balanced for the various measures and listening modes (BI, first CI, and second CI or HA) across days and, within each day, across morning and afternoon sessions.

EXPERIMENT 1: SPEECH INTELLIGIBILITY

The CRISP test (Litovsky, 2003, 2005) was used to evaluate speech intelligibility in quiet and in the presence of different-sex speech interferers.

Stimuli and setup

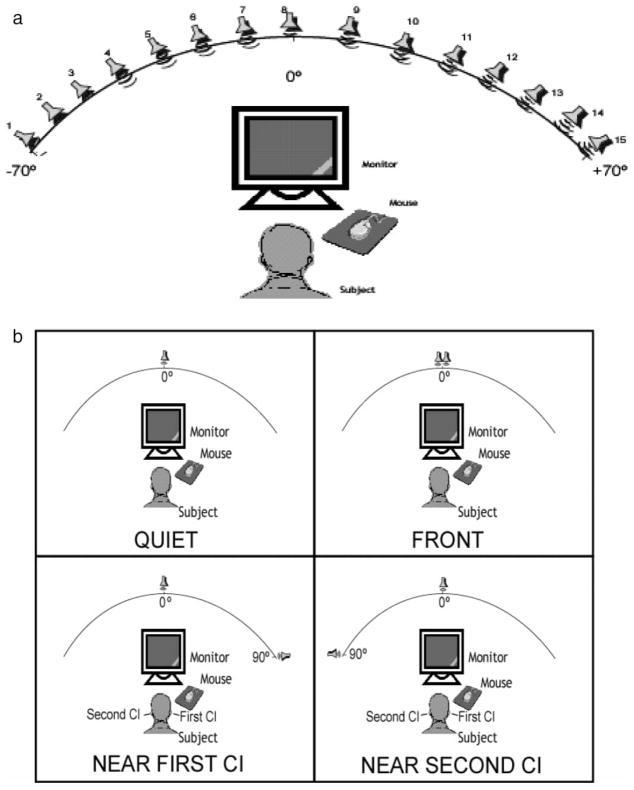

All stimuli were recorded at a sampling rate of 44 kHz and stored as wav files. Target stimuli consisted of a closed set of 25 two-syllable children’s spondees spoken by a male voice (e.g., Litovsky, 2005). The root-mean-square (RMS) value of all words was equalized. The interferer was a two-talker stimulus consisting of two different running-speech streams (Rothauser et al, 1969) recorded by the same female voice. Target stimulus selection, level control and output, as well as response acquisition, were implemented in dedicated software written in C++. The target and interferers were fed to separate channels, amplified (Crown D-75) and presented from separate loudspeakers (Cambridge Soundworks, Center/Surround IV; matched within 1 dB from 100 to 8,000 Hz) (see Figure 1b). The target stimulus was always presented from a loudspeaker at front (0°). That loudspeaker and a second one were placed at a distance of 1.5 m from the listener’s head. To vary the location of the interferer, the second loudspeaker was placed at center/front (0°), left (−90°) or right (+90°).
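The RMS equalization step can be sketched as below. This is a minimal Python illustration, not the study’s C++ software: it assumes each word is available as a list of floating-point samples, and, as a hypothetical choice, it scales every word to the mean RMS of the set, since the text does not state the reference value.

```python
import math


def rms(samples):
    """Root-mean-square amplitude of a non-empty sequence of samples."""
    return math.sqrt(sum(s * s for s in samples) / len(samples))


def equalize_rms(words, target_rms=None):
    """Scale each waveform so that all words share the same RMS value.

    ASSUMPTION: when target_rms is None, the mean RMS of the set is used
    as the common reference (hypothetical; not specified in the study).
    """
    levels = [rms(w) for w in words]
    if any(level == 0 for level in levels):
        raise ValueError("cannot equalize a silent waveform")
    if target_rms is None:
        target_rms = sum(levels) / len(levels)
    return [[s * target_rms / level for s in w] for w, level in zip(words, levels)]


# Toy "words" (not real stimuli) with RMS values of 0.5 and 0.1.
words = [[0.5, -0.5, 0.5, -0.5], [0.1, -0.1]]
equalized = equalize_rms(words)
print([round(rms(w), 6) for w in equalized])  # both values are about 0.3
```

Scaling by a single gain per word changes only the overall level, so the spectral and temporal shape of each spondee is preserved.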

Figure 1.

Schematic diagram of testing setup. 1a) Array of 15 loudspeakers mounted on an arc with a radius of 1.5 m at ear level, positioned every 10° (−70° to +70°). 1b) One loudspeaker was mounted on the arc at center (0°) for the target, and a second loudspeaker was mounted at center, right or left for the interferers. The left/right loudspeakers were re-positioned at the required angles for each testing condition.

Procedure

Prior to testing, a brief familiarization task was administered to each child to determine whether she or he could readily identify every target word, since the experiment was designed to measure the effect of speech and noise interferers on word recognition, not vocabulary knowledge. During familiarization the child was asked to identify the pictured spondees; within minutes, it was clear whether the child was comfortable and familiar with every target word on the list. The task itself was a four-alternative forced-choice procedure (Litovsky, 2005). On every trial a target word was chosen randomly from the list and preceded by the carrier phrase “Ready? Point to the....”, also spoken by a male talker. The child was then asked to select the picture matching that word from an array of four pictures on the computer screen, only one of which matched the target. Following each trial, a piece of a puzzle picture was filled in on the screen. In conditions containing interfering sounds, the interferer was turned on first, the target was then presented, and the interferer continued for approximately 1–2 seconds after the target was turned off. Subjects were instructed to ignore the female voice and to listen carefully to the male voice.

Data analysis

Speech reception thresholds (SRTs) were estimated using the method described by Litovsky (2005), with data collected by adaptive tracking. The interferer level was fixed at a comfortable speech level of 60 dB SPL, and the target speech level was varied adaptively. The starting step size was 8 dB, and the level was initially decreased after every single correct response. Decreases continued until the first incorrect response, at which point the algorithm switched to a 3-down/1-up rule. SRTs for each condition were computed using the Matlab psignifit toolbox (version 2.5.41), applying the methods described by Wichmann and Hill (2001a, b). A logistic function was fit to all data points from each experimental run for each participant using a constrained maximum likelihood (ML) algorithm, and the SRT was taken as the point on the psychometric function where performance was 79.4% correct. This is the performance level on which a 3-down/1-up rule converges, since three consecutive correct responses at that level occur with probability 0.794³ ≈ 0.5.
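The adaptive track and threshold estimate can be sketched as follows. This is a hypothetical Python illustration, not the study’s software or psignifit itself. The starting level and the step-size rule after the first reversal are assumptions (the text gives only the 8 dB starting step). The logistic fit uses a plain grid search with a fixed 0.25 guess rate (four-alternative task) and no lapse parameter, whereas psignifit uses a constrained maximum-likelihood optimizer.

```python
import math

# Convergence point of a 3-down/1-up track: p**3 = 0.5, so p is about 0.794.
TARGET_PC = 0.5 ** (1 / 3)


class Staircase:
    """Sketch of the adaptive track for the target level (dB SPL).

    Phase 1: level drops by `step` after each correct response until the
    first incorrect response. Phase 2: 3-down/1-up rule.
    ASSUMPTIONS (not stated in the text): the start level, and that the
    step halves at each reversal down to `min_step`.
    """

    def __init__(self, start_level, step=8.0, min_step=2.0):
        self.level, self.step, self.min_step = start_level, step, min_step
        self.phase_one = True
        self.run = 0               # consecutive correct responses in phase 2
        self.last_direction = 0    # -1 = last move down, +1 = last move up
        self.levels, self.responses = [], []

    def _move(self, direction):
        if self.last_direction and direction != self.last_direction:
            self.step = max(self.min_step, self.step / 2)  # hypothetical rule
        self.last_direction = direction
        self.level += direction * self.step

    def record(self, correct):
        """Log the response at the current level, then update the level."""
        self.levels.append(self.level)
        self.responses.append(correct)
        if self.phase_one and correct:
            self._move(-1)
        elif not correct:
            self.phase_one = False  # first miss switches to 3-down/1-up
            self.run = 0
            self._move(+1)
        else:
            self.run += 1
            if self.run == 3:
                self.run = 0
                self._move(-1)


def p_correct(x, alpha, beta, guess=0.25):
    """Logistic psychometric function for 4AFC (guess rate 0.25, no lapses)."""
    return guess + (1 - guess) / (1 + math.exp(-(x - alpha) / beta))


def fit_logistic(levels, responses, guess=0.25, n_alpha=201):
    """Maximum-likelihood fit by grid search (a stand-in for psignifit)."""
    counts = {}  # level -> (trials, correct)
    for x, r in zip(levels, responses):
        n, k = counts.get(x, (0, 0))
        counts[x] = (n + 1, k + int(r))
    lo, hi = min(counts), max(counts)
    best = None
    for i in range(n_alpha):
        alpha = lo + (hi - lo) * i / (n_alpha - 1)
        for beta in (0.5 * j for j in range(1, 41)):  # 0.5 to 20 dB
            ll = 0.0
            for x, (n, k) in counts.items():
                p = min(p_correct(x, alpha, beta, guess), 1 - 1e-12)
                ll += k * math.log(p) + (n - k) * math.log(1 - p)
            if best is None or ll > best[0]:
                best = (ll, alpha, beta)
    return best[1], best[2]


def threshold(alpha, beta, pc=TARGET_PC, guess=0.25):
    """Level at which the fitted function reaches `pc` correct."""
    return alpha - beta * math.log((1 - guess) / (pc - guess) - 1)
```

Trial levels and responses logged by `Staircase.record` can be passed to `fit_logistic`, and `threshold` then returns the level at about 79.4% correct; subtracting the 60 dB SPL interferer level expresses it relative to the competitor, as in the figures.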

EXPERIMENT 2: MINIMUM AUDIBLE ANGLE (MAA)

Fifteen loudspeakers were arranged on a horizontal arc as shown in Figure 1a, with a radius of 1.5 m, positioned at 10° intervals (−70° to +70°). When smaller separations were needed, loudspeakers were placed at angular separations of 2.5° and 5.0°. During each block of trials, two loudspeakers were selected at equal left/right angles and remained fixed for 20 trials. Testing was conducted at numerous angles for each subject. Each block lasted 3–5 minutes, depending on the age and, to some degree, the attention and motivation of the child. Because measurements had to be completed at numerous angles, testing required 40 to 60 minutes in total. Tucker Davis Technologies hardware (TDT System III; RP2, PM2, AP2) with a personal computer host was used for stimulus presentation, control of the multiplexer for loudspeaker switching, and amplification.

Stimuli and setup

Stimuli were a subset of the spondaic words used in Experiment 1. These stimuli have been shown to be effective for MAA testing in pediatric CI and HA users (Litovsky et al, 2006). Stimulus levels averaged 60 dB SPL and were randomly varied between 56 and 64 dB SPL (roved ± 4 dB).

Procedure

Stimuli were presented from either the left or the right, and the child used the computer mouse to select icons on the screen indicating left vs. right positions. A few of the younger children preferred to point with a finger and have the experimenter enter the response into the computer. Following each response, feedback was provided by flashing the correct-location icon on the screen. In this 2-alternative forced-choice procedure, source direction (left/right) varied randomly from trial to trial. Angle size varied from block to block according to a modified adaptive rule based on the child’s performance: the angle was decreased following blocks in which overall performance was ≥ 15/20 (75%) correct; otherwise it was increased. Decisions regarding the step size for increasing or decreasing the angle followed rules similar to those used in classic adaptive procedures (e.g., Litovsky, 1997; Litovsky and Macmillan, 1994). MAA thresholds for each listening mode for each subject were defined as the smallest angle at which performance reached 70.9% correct. Listening modes in which performance was consistently below 70.9% correct at all angles tested are denoted as ‘NM’ (non-measurable) in the figures.
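The block-wise rule and the MAA definition above can be sketched as follows. This is an illustrative Python sketch, not the study’s TDT-based software: the function names are hypothetical, the one-step move along a list of available angles stands in for the unspecified step-size rules, and the ±4 dB level rove is assumed to be uniform.

```python
import math
import random

TRIALS_PER_BLOCK = 20
CRITERION = 15          # >= 15/20 (75%) correct -> smaller angle next block
THRESHOLD_PC = 0.709    # MAA criterion from the text


def roved_level(rng, mean_db=60.0, rove_db=4.0):
    """Presentation level roved over 56-64 dB SPL (uniform rove is an assumption)."""
    return rng.uniform(mean_db - rove_db, mean_db + rove_db)


def next_angle(angle, n_correct, angles):
    """Angle (deg) for the next block.

    ASSUMPTION: moves one step along a hypothetical sorted list of available
    angles; the study's exact step-size rules are not given here.
    """
    angles = sorted(angles)
    i = angles.index(angle)
    if n_correct >= CRITERION:
        return angles[max(i - 1, 0)]
    return angles[min(i + 1, len(angles) - 1)]


def maa(results):
    """results maps angle -> (n_correct, n_trials); returns the smallest
    angle with >= 70.9% correct, or 'NM' if no angle reaches it."""
    passed = [a for a, (k, n) in results.items() if k / n >= THRESHOLD_PC]
    return min(passed) if passed else "NM"


def p_chance(k, n=TRIALS_PER_BLOCK):
    """Probability of >= k correct out of n by guessing in a 2AFC task."""
    return sum(math.comb(n, j) for j in range(k, n + 1)) / 2 ** n


rng = random.Random(0)
print(round(roved_level(rng), 1))                        # a level within 56-64
print(next_angle(20, 16, [5, 10, 20, 30]))               # 10 (criterion met)
print(maa({40: (18, 20), 20: (15, 20), 10: (12, 20)}))   # 20
print(round(p_chance(CRITERION), 4))                     # 0.0207
```

With 20-trial blocks, the 15/20 block criterion and the 70.9% threshold criterion select the same blocks, because 14/20 (70%) falls just below 70.9%. Reaching 15/20 by guessing alone has a probability of about 0.021.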

Results

Speech intelligibility

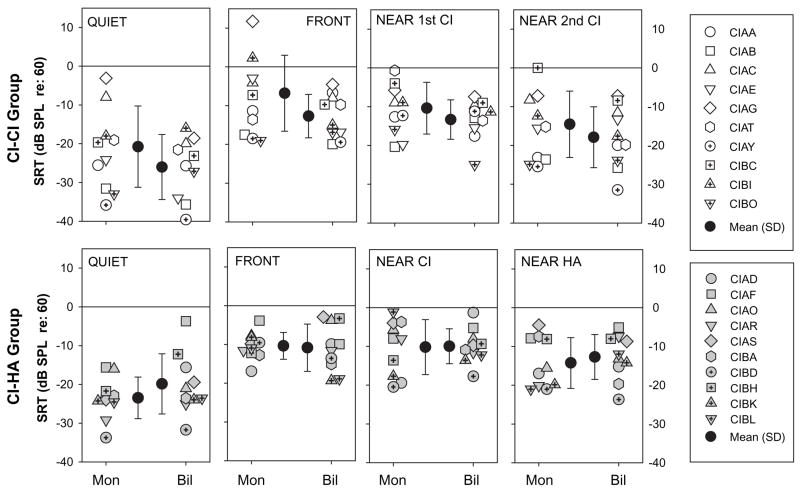

Speech reception thresholds (SRTs) for the two groups of children are compared in Figure 2 for the four conditions (quiet, front, and near each device) in the two listening modes (first CI only, bilateral). SRTs are plotted relative to the level of the competing speech signals which, when presented, were at 60 dB SPL. In quiet, average SRTs for both groups were between −20 and −26 dB (i.e., absolute levels of roughly 34–40 dB SPL); however, the variability in SRTs was large, suggesting that while some children were able to hear the target speech at very low levels, others required higher levels. In the presence of competing sounds, either in front or on the side, SRTs increased significantly, indicating masking (see below for details). SRTs were analyzed with a mixed-design 3-way analysis of variance (ANOVA), treating group as the between-subjects variable and listening mode (monaural, bilateral) and condition (quiet, front, and near each device) as the repeated-measures variables. A significant effect of condition was found [F(3,18) = 17.85, p < .0001]. Post-hoc Scheffé tests revealed that SRTs were lower in quiet than in all other conditions (p < .001), confirming the occurrence of masking, and that SRTs were higher when the competitors were in front than when they were near the second CI (or HA) (p < .005), confirming that separating the masker from the target results in spatial release from masking (see details below). SRTs were also lower when the competitors were near the second CI (or HA) than near the first CI (p < .05). The lack of an effect of group further suggests that children with bilateral CIs and with bimodal hearing had, on average, similar SRTs.

Figure 2.

Speech reception thresholds (SRTs) are plotted for the two groups of children, CI-CI on top and CI-HA on the bottom. Panels include results from the quiet condition (left) and the three conditions with competing talkers, located either in front, at 90° near the first CI, or at 90° near the second CI. Each panel contains SRTs under monaural (first or single CI) and bilateral (CI-CI or CI-HA) listening modes. Individual results are shown as well as group mean (± SD). Note that SRTs are plotted relative to the level of the competing speech signals which, when presented, were at 60 dB SPL.

Binaural hearing is known to provide an advantage for understanding speech in the presence of competing signals. This advantage, also known as spatial release from masking (SRM), is ~5–7 dB in normal-hearing children ages 4–7 when tested under conditions identical to those used here (Litovsky, 2005). Figure 2 and the statistics on SRTs indicate that SRM occurred in a number of cases; however, given the scale of the figure, this important measure is difficult to read from it directly. SRM is defined as the difference between SRTs with the competing speech in front (co-located with the target) and at 90 deg to the side; positive values indicate a release from masking. Because hearing is typically asymmetrical in the populations studied here, we measured SRM separately for competing speech near the first CI and near the other ear (second CI or HA). Figure 3 shows average (± SD) SRM values for the two groups of children when using bilateral devices (CI-CI or CI-HA). SRM values were analyzed with a 2-way ANOVA treating group as the between-subjects variable and side (near first CI, near second CI/HA) as the repeated-measures variable. A significant main effect of side [F(1,18) = 6.5, p < .02] suggests that SRM was greater when the competing speech was near the second CI/HA than when it was near the first CI. No effect of group was found.
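Because SRM is a simple difference of two SRTs, it can be computed per child and per side as in the Python sketch below. The SRT values are invented for illustration only, and the helper names are hypothetical rather than taken from the study’s software. Since the competitor was fixed at 60 dB SPL in every condition, SRM is the same whether SRTs are expressed relative to the competitor or in absolute dB SPL.

```python
MASKER_DB_SPL = 60.0  # fixed competitor level used in the study


def to_absolute(srt_relative_db, masker_db_spl=MASKER_DB_SPL):
    """Convert an SRT expressed re: the competitor level into dB SPL."""
    return srt_relative_db + masker_db_spl


def srm(srt_front, srt_side):
    """Spatial release from masking (dB): front SRT minus side SRT.

    Positive values mean that moving the competitor to 90 deg lowered the
    SRT; negative values indicate spatial disruption.
    """
    return srt_front - srt_side


# Hypothetical SRTs (dB re: competitor) for one child, NOT data from this study.
child = {"front": -4.0, "near_first_ci": -6.0, "near_second_device": -10.0}
for side in ("near_first_ci", "near_second_device"):
    print(side, srm(child["front"], child[side]))
# near_first_ci 2.0
# near_second_device 6.0
```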

Figure 3.

Spatial release from masking (SRM) values are plotted for the bilateral listening conditions, when the competing speech was either at 90° near the first CI (left panel) or at 90° near the second CI (right panel). Each panel compares results from the two groups of children. Individual results are shown as well as group mean (± SD). The legend indicates subject codes, and for the CI-CI children, the number of months after activation of the second CI.

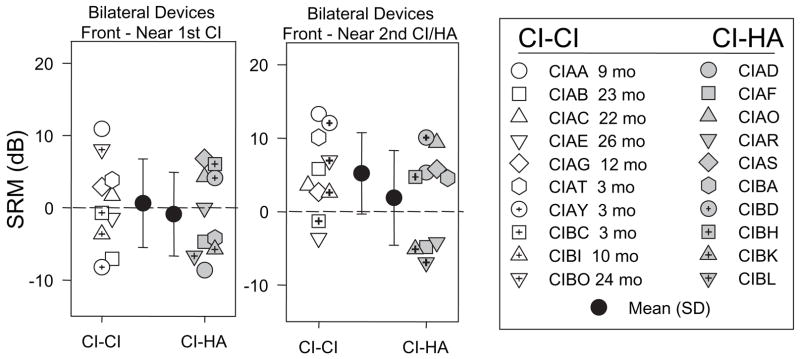

An important question regarding bilateral amplification is whether the second CI/HA provides a functional benefit. The speech intelligibility measures used here offer one means of assessing the differences in performance when children listen through their first (or only) CI, and when a second device (second CI or HA) is added. To evaluate the bilateral benefit, SRTs in the bilateral listening mode were subtracted from SRTs in the monaural (first CI) mode; positive difference scores indicate a benefit (reduction of SRTs in the bilateral mode), whereas negative values indicate a disadvantage, or disruption, due to the addition of a second CI/HA. Bilateral advantage scores (in dB) are plotted in Figure 4, by group and condition. A mixed 2-way ANOVA was conducted on these scores, treating group as the between-subjects variable and condition as the repeated-measures variable. There was a significant effect of group [F(1,18)= 9.962, p < .005], with no other effects or interactions. This finding suggests that the bilateral advantage is significantly higher for the CI-CI group compared with the CI-HA group regardless of whether testing was conducted in quiet, or in the presence of interferers.
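The bilateral advantage score is likewise a per-child difference. A minimal Python sketch, with hypothetical helper names and invented SRT values (not study data), computes the score and a group mean (± SD) of the kind plotted in Figure 4; the ANOVA itself is not reproduced.

```python
from statistics import mean, stdev


def bilateral_advantage(srt_monaural, srt_bilateral):
    """Monaural (first CI) SRT minus bilateral SRT, in dB.

    Positive = benefit from adding the second device; negative = disruption.
    """
    return srt_monaural - srt_bilateral


def group_summary(pairs):
    """Mean and sample SD of advantages for (monaural, bilateral) SRT pairs."""
    advantages = [bilateral_advantage(m, b) for m, b in pairs]
    return mean(advantages), stdev(advantages)


# Hypothetical (monaural, bilateral) SRTs for three children in one condition.
pairs = [(-2.0, -6.0), (-5.0, -3.0), (-4.0, -5.0)]
print(group_summary(pairs))  # (1.0, 3.0)
```

Here the three individual scores are 4, −2 and 1 dB, illustrating how a modest positive group mean can combine clear benefit in one child with disruption in another.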

Figure 4.

The bilateral advantage (see text) is plotted for the four listening conditions (quiet, or with interfering speech in front, near the first CI, or near the second CI/HA); within each panel, individual results are shown as well as the group mean (± SD). The legend indicates subject codes and, for the CI-CI children, the number of months after activation of the second CI.

In Figure 4, the horizontal lines at zero indicate no bilateral advantage or disruption. For all conditions, group average values are positive for the CI-CI group and either negative or near zero for the CI-HA group. The individual data points for both groups are, however, important to consider, as the addition of a second device produced advantages for some children while being disruptive for others. This is especially important for the children in the CI-HA group who diverge from the group mean and show a bilateral advantage. These advantages are not directly and consistently related to the children’s audiometric thresholds in the HA ear (see Table 2). For instance, CIBA had 30–50 dB thresholds at .25–4 kHz in the HA ear and showed a bilateral advantage on several conditions, whereas CIBH had 80–100 dB thresholds at .5–4 kHz and showed a bilateral disadvantage on most conditions. These two cases alone would suggest that the audiogram predicts performance. However, CIBL, whose thresholds were rather good (40 dB at .5–3 kHz), showed a mixture of bilateral advantage and disruption, depending on the condition.

Although the amount of bilateral experience was identified as an important factor for performance on the MAA task (Litovsky et al, 2006), it does not appear to be important for the bilateral advantage in the speech task. As can be seen in Figure 4, children can show large advantages with as little as 3 months of bilateral stimulation (e.g., CIBC and CIBI on conditions with interferers).

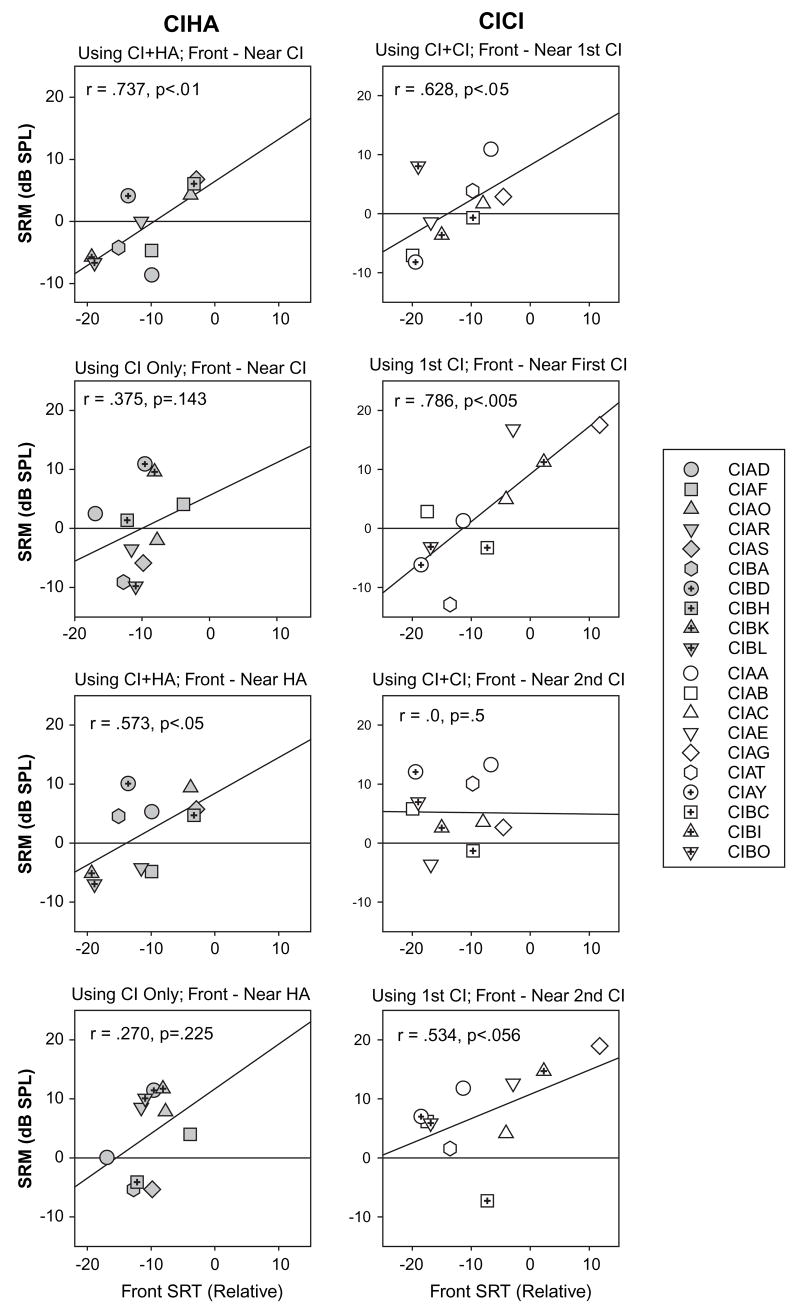

Next, the data were examined to determine whether SRM can be predicted from performance on conditions in which spatial cues are not available. Figure 5 shows correlations between SRM values and SRTs in the front (non-spatial) condition. In the CI-CI group, significant correlations were obtained for SRM when the competitors were near the first-CI side, in both the bilateral (r = .628, p < .05) and monaural (r = .786, p < .005) listening modes. The correlations suggest that, in the bilateral mode, children with high SRTs due to masking in the front condition are likely to benefit from spatial separation of the two sounds (e.g., subjects CIAA, CIAG). In contrast, children with low SRTs (little masking) in the front condition are likely to perform worse when spatial separation is introduced (e.g., subjects CIAB and CIAY), a kind of “anti-SRM” or spatial disruption. This effect might occur if the microphone in the implant device has a directional property that selectively amplifies signals from the side (microphone properties were not available). Alternatively, a perceptual explanation is that a masker that is segregated from the target speech and nearer to the side of the head could attract more attention, resulting in a disruptive effect.

Figure 5.

Correlations are shown between SRM values and SRTs obtained in the front (non-spatial) condition. Results are shown separately for the CI-CI group (right) and the CI-HA group (left). Each panel contains values obtained for a different listening condition, with the children using either both devices or one device, and with the competing speech either near the first CI or on the other side. The exact condition is indicated above each graph, and the correlation values are indicated within each plot.

When the competitors are displaced towards the side with the second CI, the CI-CI group generally shows a positive SRM, hence the lack of correlation with the front masking condition. That is, children with sequential bilateral CIs generally cope better with displacement of interferers towards the second CI than towards the first CI. In the CI-HA group, correlations were significant only when bilateral devices were used, with the competitors displaced towards either the CI side (r = .737, p < .01) or the HA side (r = .573, p < .05). This finding suggests that in this group, children who have high levels of masking in front (absence of spatial cues) tend to benefit from spatial separation of the target and interferer, regardless of the direction in which the interferer is displaced.

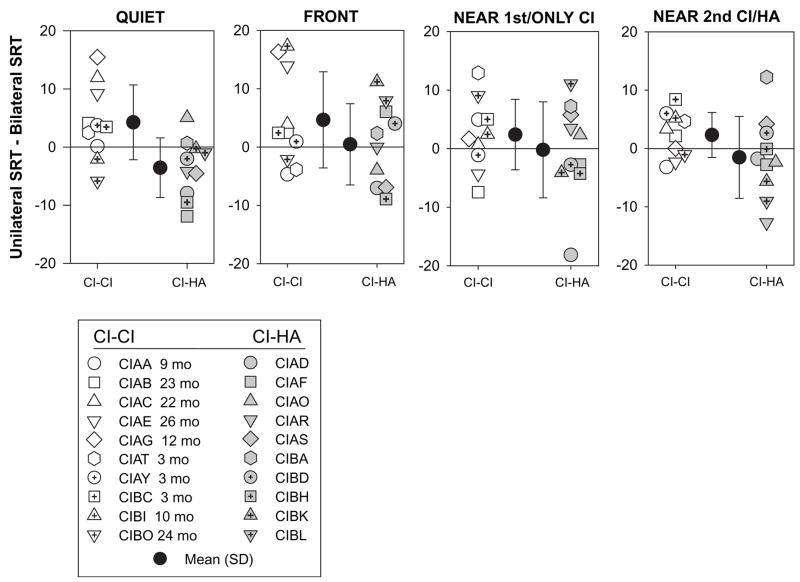

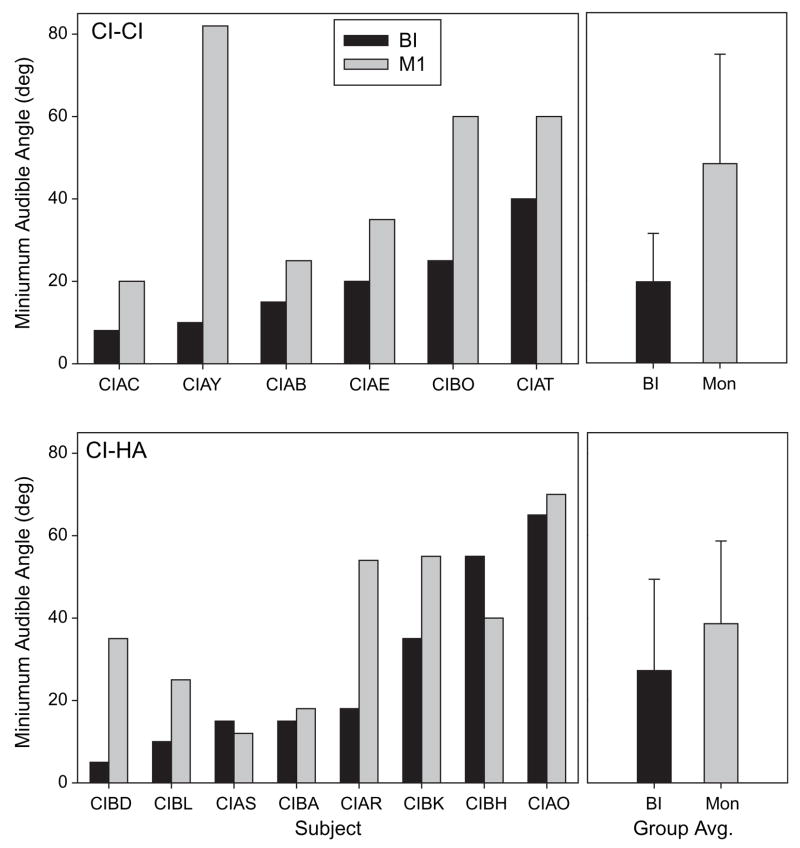

Minimum audible angle

Of the 20 subjects tested on the speech measures described thus far, 14 (6 CI-CI and 8 CI-HA) also participated in the MAA study. Individual MAA thresholds are plotted in Figure 6 in ascending order of bilateral threshold, alongside group means (± SD). In the CI-CI group, MAA thresholds were lower (better) in the bilateral mode than in the monaural mode for all children; the size of this bilateral benefit, however, differed amongst the children, ranging from 11° to 72°. In the CI-HA group there are also clear examples of a bilateral benefit, in 4/8 children, ranging from 20° to 36°. Of the remaining 4 children, two had small bilateral benefits of 3°, and two showed bilateral disruption of 15° and 3°. Paired-sample t-tests were conducted for each group to examine the effect of listening mode. In the CI-CI group, MAAs were significantly smaller in the bilateral mode (20° average) than in the monaural mode (50° average) [t(5) = −2.630, p < .05, two-tailed], suggesting a bilateral benefit for localization acuity. In contrast, in the CI-HA group there was no significant difference between bilateral and monaural modes. This lack of a significant difference is most likely due to the high variability in performance amongst those children. A few of the CI-HA children performed as well as the CI-CI children in the bilateral mode, but a number had very high MAA thresholds in the bilateral mode. In addition, in a few cases the monaural MAA performance was also excellent (e.g., CIAS, CIBA), suggesting that these children were able to use spatial cues to some extent with their CI alone. As with the speech measures, bilateral advantages here are not directly and consistently related to the children’s audiometric thresholds in the ear with the HA (see Table 2). Between-subjects t-tests (with unequal group sizes) revealed no significant difference between the groups in either the bilateral or the monaural listening mode.
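
The two kinds of t-test mentioned above can be sketched with the standard library. This is a minimal Python illustration, not the authors' analysis: the function names and MAA values are hypothetical, and the between-subjects test is written as Welch's unequal-variance t-test, which is an assumption, since the text states only that group sizes were unequal.

```python
import math
from statistics import mean, stdev


def paired_t(monaural: list[float], bilateral: list[float]) -> tuple[float, int]:
    """Paired-sample t on (bilateral - monaural) MAA differences, in degrees.

    Negative t means smaller (better) MAAs in the bilateral mode; df = n - 1.
    """
    if len(monaural) != len(bilateral):
        raise ValueError("paired samples must have equal length")
    diffs = [b - m for m, b in zip(monaural, bilateral)]
    n = len(diffs)
    t = mean(diffs) / (stdev(diffs) / math.sqrt(n))
    return t, n - 1


def welch_t(a: list[float], b: list[float]) -> tuple[float, float]:
    """Welch's t for two independent groups of unequal size and variance.

    Returns t and the Welch-Satterthwaite degrees of freedom.
    """
    va = stdev(a) ** 2 / len(a)
    vb = stdev(b) ** 2 / len(b)
    t = (mean(a) - mean(b)) / math.sqrt(va + vb)
    df = (va + vb) ** 2 / (va ** 2 / (len(a) - 1) + vb ** 2 / (len(b) - 1))
    return t, df


# Illustrative MAAs (degrees) for three children; not data from this study.
t, df = paired_t(monaural=[50.0, 40.0, 60.0], bilateral=[20.0, 30.0, 30.0])
print(round(t, 2), df)  # -3.5 2
```

Taking differences as bilateral minus monaural reproduces the sign convention of the reported statistic, where a negative t reflects smaller MAAs with two devices.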

Figure 6.

MAA values are shown for individual children on the left, and group means (± SD) on the right. Values are compared for monaural (gray fill) and bilateral (black fill) listening modes. Results are shown separately for the CI-CI group (top) and CI-HA group (bottom). Subject codes are indicated below each graph.

Finally, the data were examined to determine whether there is a relationship between individual children’s performance on the MAA task in the bilateral mode and the bilateral benefit observed with the speech task. Surprisingly, none of the speech measures correlated with the MAA results, suggesting that performance on these two tasks may depend on somewhat different auditory mechanisms.

Discussion

This study aimed to determine whether there are identifiable benefits from bilateral stimulation in children. Two groups of children participated: those with bilateral CIs, and those with bimodal hearing who wear a CI in one ear and a HA in the opposite ear. All children traveled to Madison, WI, from various localities to participate in these studies, and their CI mappings and HA fittings were performed by their own clinicians prior to enrolling in the study, so device settings were not standardized across children; this may be an important point to consider. The children also varied in age, type of device used, amount of experience with their first CI, and, for the bilaterally implanted children, amount of bilateral experience. The findings lead to some general conclusions about the effects of bilateral stimulation on the functional abilities of the children studied here. The speech intelligibility measures showed that children with bilateral CIs and with bimodal hearing had, on average, similar SRTs. That is, the level at which they were able to correctly identify spondaic words, either in quiet or in the presence of competing sounds, did not differ between groups. It is noteworthy that in quiet, average SRTs for both groups were between −20 and −26 dB relative to the 60 dB SPL competitor level (actual SRTs were 30–40 dB SPL), which is higher than the average SRT (27 ± 5.25 dB SPL) reported for normal-hearing children ages 4–7 tested with identical methods (Litovsky, 2005). However, there was great variability in the SRTs measured here; several of the children had results within the reported normal limits, while others’ performance was substantially worse than that of normal-hearing children. The results therefore suggest that, while some children with degraded speech inputs are able to extract sufficient information from their surroundings, others are clearly less likely to succeed. An age effect may also have occurred; the two youngest (6-year-old) children in this group generally had higher SRTs than the older children.
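
The conversion from SRTs expressed relative to the competitor to absolute levels is a single addition, and a comparison with the normal-hearing reference can be sketched in the same way. This is a minimal Python sketch, not the authors' procedure: the constants come from the text, but the function names, the one-sided cutoff, and the default of 1 SD are hypothetical choices, because the text does not say how "normal limits" were defined.

```python
# Constants taken from the text: competitor level and the normal-hearing
# reference SRT (mean and SD) reported by Litovsky (2005).
COMPETITOR_DB_SPL = 60.0
NH_MEAN_DB_SPL = 27.0
NH_SD_DB = 5.25


def absolute_srt(relative_db: float, reference_db_spl: float = COMPETITOR_DB_SPL) -> float:
    """Convert an SRT expressed in dB re: the competitor level to dB SPL."""
    return reference_db_spl + relative_db


def within_normal_limit(srt_db_spl: float, n_sd: float = 1.0) -> bool:
    """True if the SRT is no higher than the NH mean + n_sd * SD.

    The one-sided cutoff and the default n_sd = 1 are illustrative
    assumptions; the study does not state how 'normal limits' were defined.
    """
    return srt_db_spl <= NH_MEAN_DB_SPL + n_sd * NH_SD_DB


for rel in (-20.0, -26.0):
    spl = absolute_srt(rel)
    print(rel, spl, within_normal_limit(spl), within_normal_limit(spl, n_sd=2.0))
```

A one-sided cutoff is used because a lower SRT means better performance, so only SRTs above the normal-hearing range count as poorer than normal.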

An important difference between bilateral and bimodal children arises when comparing their performance with a single (first) CI and with both ears activated. The two-ear advantage, a reduction in SRTs with two devices compared with a single device, was seen more clearly and was significantly larger in the children with two CIs; in this group, average SRTs improved in the bilateral mode for all listening conditions. In contrast, in the CI-HA group, the addition of a HA in the non-implanted ear did not result in overall better SRTs; while a few individuals showed improvement on some conditions with the addition of a HA (e.g., CIBA, CIBL), others had a bilateral disruption, evidenced by increased SRTs with the addition of the HA. Overall, these findings suggest that children with bilateral CIs gain more from a second CI than children who wear a HA in the opposite ear gain from the HA. It is important to note that this finding is based on the CRISP-Spondee closed-set test and has not been confirmed with other measures of speech intelligibility. Results from the CI-CI group are consistent with prior reports of bilateral advantages in adults with bilateral CIs (Gantz et al, 2002; Tyler et al, 2002; Muller et al, 2002; Litovsky et al, 2004; Schleich et al, 2004). There is, however, an important difference between most adults and children with bilateral CIs. The vast majority of adults studied to date acquired their deafness post-lingually, after having learned to hear acoustically and having acquired some binaural abilities. The children who participated in this study were pre-lingually deaf and did not have the same opportunity to establish language skills, or to develop binaural abilities, prior to receiving their CIs. The results presented here therefore suggest that the ability to take some advantage of two CIs does not require prior binaural experience.

The magnitudes of the benefits in the two populations are hard to compare because of the differences in measures (adults have not been tested on the spondees), as well as possible confounds of auditory experience, age and cognition. As the children grow older, however, measures in early-implanted children will become comparable to measures in adult implantees. Results obtained in the CI-HA group agree with a recent report (Ching et al, 2005) indicating that while adults benefit from using bimodal hearing devices on measures of speech in noise, children are less likely to do so.

Spatial release from masking (SRM), a measure of the extent to which listeners are able to rely on spatial segregation of target and competing speech to better understand the target, was not statistically different between the two groups. It may be interesting to note, however, that when the competitors were near the side of the (newer) second CI or the HA (i.e., when the first-CI ear was protected from the competitor by head shadow), average SRM values were 5.2 dB and 1.8 dB, respectively. SRM values for many of the CI-CI children were within the 5–7 dB range obtained in normal-hearing children (Litovsky, 2005; Johnstone & Litovsky, 2006). One other consideration, however, is that the benefit in this condition is not likely to be due to binaural effects such as “squelch” (e.g., Tyler et al, 2003; Schleich et al, 2004), but more likely to the head-shadow advantage at the first-CI ear. Previous studies on the advantages of bilateral CIs in adults have also found that squelch effects are small compared with other bilateral benefits, such as head shadow (van Hoesel, 2004; Litovsky et al, 2004; Schleich et al, 2004), most likely due to limitations in current fittings and speech processing strategies that do not maximize binaural information.

Measures of MAA obtained here suggest that bilateral listening modes are helpful to both groups of children, but the bilateral benefit was statistically significant only for children with bilateral CIs, not for children with bimodal (CI-HA) hearing. This is in part because all CI-CI children showed a bilateral benefit to some extent, while some of the CI-HA children had very high bilateral MAAs, indicating a lack of benefit from the HA. In addition, the CI-HA children slightly outperformed the CI-CI children in the monaural (first CI) condition, leaving less room for bilateral improvement. These measures should, however, be used with caution when making decisions about whether to provide children with bilateral CIs. Children in the CI-HA group typically report benefits from their HA and prefer to wear it rather than wear their CI alone. In contrast, for all but one (CIBC) of the CI-CI children, attempts were made to fit the second ear with a HA prior to the second implantation, but there appeared to be no benefit from the HA.

Conclusion

Children with bilateral stimulation, whether two CIs or a CI and a HA, show some benefits on perceptual measures aimed at identifying bilateral advantages. However, children with two CIs showed larger bilateral advantages than children with bimodal hearing on two specific measures. The first is the advantage for speech intelligibility when using two devices compared with a single device. The second is the advantage gained from bilateral devices on the localization acuity (minimum audible angle) task. The decision regarding who should receive two CIs remains at the clinical level and depends on numerous factors that are yet to be fully understood and agreed upon. The tests described here may be useful when such decisions are being made. An important consideration, not directly addressed here, is the effect of auditory experience and plasticity on children’s ability to benefit from bilateral CIs. Should children who receive two implants do so by a certain age? The results show that benefits from two CIs are not restricted to the younger children. At the moment, however, the sample size is too small to make general recommendations regarding age, and further work is needed in which young-implanted bilateral users are carefully assessed for bilateral benefits. Finally, limitations in current clinical fitting approaches and speech processing strategies constrain the extent to which CI users can function like normal-hearing persons with intact binaural hearing. CI users have only a limited number of electrodes distributed along the tonotopic axis of the cochlea, and electrode placement can vary dramatically. In addition, implant speech processors do not preserve rapidly varying frequency modulations, which carry fine-structure information that is useful in binaural processing.
Even for envelope-driven stimulation, binaural sensitivity would likely be maximized by fitting strategies and hardware designs that match the information delivered to specific electrode sites along the two CI arrays, both spectrally and temporally. Future advances in binaural fitting strategies will no doubt contribute to increasing the binaurally driven advantages in persons with bilateral CIs.

Acknowledgments

The authors are very grateful to the many people who helped with data collection and analysis in our lab, including Corina Vidal, Smita Agrawal, Tanya Jensen, Dianna Brown and Soha Garadat. We are grateful to Gongqiang Yu for invaluable software and hardware support, and to Tina Grieco for helpful discussions and feedback on the manuscript. Many thanks also to the audiologists and surgeons who referred the children to our lab and encouraged their participation, including R. Peters and J. Lake (Dallas), J. Madell (NY), S. Waltzman (NY), M. Neault (Boston), N. Young (Chicago), and J. Hitchcock (Rochester, MN). L. Gregoret has also been incredibly helpful with recruitment and encouragement. Cochlear Americas provided startup support during the initial stages of this project. Most of all, we are grateful to all the parents and children for their willingness to travel to Madison, their positive attitudes and hard work. Work supported by NIH-NIDCD R01 DC003083 and R21 DC006641 to R.Y.L.

Abbreviations

- CI: Cochlear implant

- HA: Hearing aid

- MAA: Minimum audible angle

- SRM: Spatial release from masking

- SRT: Speech reception threshold

- CI-CI: Bilateral cochlear implants group

- CI-HA: Bimodal group

References

- Armstrong M, Pegg P, James C, Blamey P. Speech perception in noise with implant and hearing aid. Am J Otol. 1997;18:S140–1.

- Ching TY, Psarros C, Hill M, Dillon H, Incerti P. Should children who use cochlear implants wear hearing aids in the opposite ear? Ear Hear. 2001;22:365–80. doi: 10.1097/00003446-200110000-00002.

- Ching TY, Incerti P, Hill M. Binaural benefits for adults who use hearing aids and cochlear implants in opposite ears. Ear Hear. 2004;25:9–21. doi: 10.1097/01.AUD.0000111261.84611.C8.

- Ching TY, van Wanrooy E, Hill M, Dillon H. Binaural redundancy and inter-aural time difference cues for patients wearing a cochlear implant and a hearing aid in opposite ears. Int J Audiol. 2005;44:513–21. doi: 10.1080/14992020500190003.

- Fu QJ, Shannon RV, Wang X. Effects of noise and spectral resolution on vowel and consonant recognition: acoustic and electric hearing. J Acoust Soc Am. 1998;104:3586–96. doi: 10.1121/1.423941.

- Gantz BJ, Tyler RS, Rubinstein JT, Wolaver A, Lowder M, Abbas P, Brown C, Hughes M, Preece JP. Binaural cochlear implants placed during the same operation. Otol Neurotol. 2002;23:169–80. doi: 10.1097/00129492-200203000-00012.

- Green T, Faulkner A, Rosen S. Enhancement of temporal periodicity cues in cochlear implants: Effects on prosodic perception and vowel identification. J Acoust Soc Am. 2005;118:375–385. doi: 10.1121/1.1925827.

- Holt RF, Kirk KI. Speech and language development in cognitively delayed children with cochlear implants. Ear Hear. 2005;26:132–48. doi: 10.1097/00003446-200504000-00003.

- Johnstone PM, Litovsky RY. Effect of masker type on speech intelligibility and spatial release from masking in children and adults. J Acoust Soc Am. 2006. In press. doi: 10.1121/1.2225416.

- Jones GL, Litovsky RY, Agrawal SS, van Hoesel RJ. Effect of stimulation rate and interaural electrode pairing on ITD sensitivity in bilateral cochlear implant users. CIAP Abstracts. 2005:21.

- Kong YY, Stickney GS, Zeng FG. Speech and melody recognition in binaurally combined acoustic and electric hearing. J Acoust Soc Am. 2005;117:1351–61. doi: 10.1121/1.1857526.

- Litovsky RY, Macmillan NA. Sound localization precision under conditions of the precedence effect: effects of azimuth and standard stimuli. J Acoust Soc Am. 1994;96:752–8. doi: 10.1121/1.411390.

- Litovsky RY. Developmental changes in the precedence effect: Estimates of Minimal Audible Angle. J Acoust Soc Am. 1997;102:1739–1745. doi: 10.1121/1.420106.

- Litovsky RY. U.S. Patent No. 6,584,440. Method and system for rapid and reliable testing of speech intelligibility in children. 2003.

- Litovsky RY, Parkinson A, Arcaroli J, Peters R, Lake J, Johnstone P, Yu G. Bilateral cochlear implants in adults and children. Arch Otolaryngol Head Neck Surg. 2004;130:648–655. doi: 10.1001/archotol.130.5.648.

- Litovsky RY, Agrawal SS, Jones GL, Henry BA, van Hoesel RJ. Effect of interaural electrode pairing on binaural sensitivity in bilateral cochlear implant users. Assoc Res Otolaryngol. 2005:168.

- Litovsky RY. Speech intelligibility and spatial release from masking in young children. J Acoust Soc Am. 2005;117:3091–3099. doi: 10.1121/1.1873913.

- Litovsky RY, Johnstone PM, Godar S, Agrawal SS, Parkinson A, Peters R, Lake J. Bilateral cochlear implants in children: localization acuity measured with minimum audible angle. Ear Hear. 2006;27:43–59. doi: 10.1097/01.aud.0000194515.28023.4b.

- Long CJ, Eddington DK, Colburn HS, Rabinowitz WM. Binaural sensitivity as a function of interaural electrode position with a bilateral cochlear implant user. J Acoust Soc Am. 2003;114:1565–1574. doi: 10.1121/1.1603765.

- Muller J, Schon F, Helms J. Speech understanding in quiet and noise in bilateral users of the MED-EL COMBI 40/40+ cochlear implant system. Ear Hear. 2002;23:198–206. doi: 10.1097/00003446-200206000-00004.

- Nelson PB, Jin SH. Factors affecting speech understanding in gated interference: cochlear implant users and normal-hearing listeners. J Acoust Soc Am. 2004;115:2286–94. doi: 10.1121/1.1703538.

- Nie K, Stickney G, Zeng FG. Encoding frequency modulation to improve cochlear implant performance in noise. IEEE Trans Biomed Eng. 2005;52:64–73. doi: 10.1109/TBME.2004.839799.

- Nopp P, Schleich P, D’Haese P. Sound localization in bilateral users of MED-EL COMBI 40/40+ cochlear implants. Ear Hear. 2004;25:205–214. doi: 10.1097/01.aud.0000130793.20444.50.

- Rothauser EH, Chapman WD, Guttman N, Nordby KS, Silbiger HR, Urbanek GE, Weinstock M. IEEE recommended practice for speech quality measurements. IEEE Trans Aud Electroacoust. 1969;17:227–246.

- Rubinstein JT, Hong R. Signal coding in cochlear implants: exploiting stochastic effects of electrical stimulation. Ann Otol Rhinol Laryngol Suppl. 2003;191:14–19. doi: 10.1177/00034894031120s904.

- Schleich P, Nopp P, D’Haese P. Head shadow, squelch, and summation effects in bilateral users of the MED-EL COMBI 40/40+ cochlear implant. Ear Hear. 2004;25:197–204. doi: 10.1097/01.aud.0000130792.43315.97.

- Stickney GS, Zeng FG, Litovsky R, Assmann P. Cochlear implant speech recognition with speech maskers. J Acoust Soc Am. 2004;116(2):1081–91. doi: 10.1121/1.1772399.

- Stickney GS, Nie K, Zeng FG. Contribution of frequency modulation to speech recognition in noise. J Acoust Soc Am. 2005;118(4):2412–20. doi: 10.1121/1.2031967.

- Summerfield AQ, Marshall DH, Barton GR, Bloor KE. A cost-utility scenario analysis of bilateral cochlear implantation. Arch Otolaryngol Head Neck Surg. 2002;128(11):1255–62. doi: 10.1001/archotol.128.11.1255.

- Tyler RS, Parkinson AJ, Wilson BS, Witt S, Preece JP, Noble W. Patients utilizing a hearing aid and a cochlear implant: speech perception and localization. Ear Hear. 2002;23:98–105. doi: 10.1097/00003446-200204000-00003.

- Tyler RS, Dunn CC, Witt SA, Preece JP. Update on bilateral cochlear implantation. Curr Opin Otolaryngol Head Neck Surg. 2003;11(5):388–93. doi: 10.1097/00020840-200310000-00014.

- van Hoesel R. Exploring the benefits of bilateral cochlear implants. Audiol Neurootol. 2004;9:234–246. doi: 10.1159/000078393.

- van Hoesel R, Ramsden R, O’Driscoll M. Sound-direction identification, interaural time delay discrimination, and speech intelligibility advantages in noise for a bilateral cochlear implant user. Ear Hear. 2002;23:137–149. doi: 10.1097/00003446-200204000-00006.

- van Hoesel R, Tyler R. Speech perception, localization and lateralization with bilateral cochlear implants. J Acoust Soc Am. 2003;113:1617–30. doi: 10.1121/1.1539520.

- Wichmann FA, Hill NJ. The psychometric function: I. Fitting, sampling, and goodness of fit. Percept Psychophys. 2001a;63:1293–1313. doi: 10.3758/bf03194544.

- Wichmann FA, Hill NJ. The psychometric function: II. Bootstrap-based confidence intervals and sampling. Percept Psychophys. 2001b;63:1314–1329. doi: 10.3758/bf03194545.

- Yang LP, Fu QJ. Spectral subtraction based speech enhancement for cochlear implant patients in background noise. J Acoust Soc Am. 2005;117:1001–1004. doi: 10.1121/1.1852873.

- Zeng FG. Trends in cochlear implants. Trends Amplif. 2004;8(1):1–34. doi: 10.1177/108471380400800102.