Abstract

Health technology assessments (HTAs) are an as yet unexploited source of comprehensive, systematically generated information that could be used by research funding agencies to formulate researchable questions that are relevant to decision-makers. We describe a process that was developed for distilling evidence gaps identified in HTAs into researchable questions that a provincial research funding agency can use to inform its research agenda. The challenges of moving forward with this initiative are discussed. Using HTA results to identify research gaps will allow funding agencies to reconcile the different agendas of researchers who conduct clinical trials and healthcare decision-makers, and will likely result in more balanced funding of pragmatic and explanatory trials. This initiative may require a significant cultural shift from the current, mostly reactive, funding environment based on an application-driven, competitive approach to allocating scarce research resources to a more collaborative, contractual one that is proactive, targeted and outcomes-based.

Abstract (translated from the French)

Health technology assessments (HTAs) are an as yet unexploited source of detailed, systematically produced information. They could be used by research funding organizations to formulate research questions that are relevant to decision-makers. A process was developed to transform the evidence gaps identified in HTAs into research questions that a provincial funding organization can use to guide its own research program. The challenges of implementing such an initiative are discussed. Using HTA results to identify research gaps will allow funding organizations to reconcile the differing objectives of researchers and healthcare decision-makers, and will probably lead to more balanced funding of pragmatic and explanatory trials. This initiative could require a major cultural shift: abandoning the current funding framework for allocating scarce research resources, which is mainly reactive and based on a competitive, application-driven approach, in favour of an approach that is contractual, proactive, targeted and more oriented toward collaboration and outcomes.

In most countries, the demand for healthcare outstrips the resources available to provide it. This imbalance, and the concomitant pressure on decision-makers to allocate resources appropriately, has been a prominent factor in the rise of health technology assessment (HTA). HTA is a form of policy research that seeks to inform decision-making, in both policy and practice, by systematically examining the effects of a particular technology on the individual and society with respect to its safety, efficacy, effectiveness and cost-effectiveness, as well as its social, economic and ethical implications, and identifying areas that require further research (Office of Technology Assessment 1976; Banta et al. 1999). A health technology, in this instance, is any intervention administered with the aim of improving the health status of patients or of populations (Muir Gray 1997). HTA activities are currently undertaken in more than 23 countries, and the majority are publicly funded and administered by national or regional governments (INAHTA 2008).

The Relevance Gap in Health Research

Basic research is often supported by public funds, while the private sector usually concentrates on applied research and technology development. A major problem with this arrangement is that developments from industry are often driven by technological and financial factors rather than the health needs of the population (Banta et al. 1999). In addition, it is not uncommon for publicly funded academic institutions to produce research that does not directly address a relevant patient or health system need, or improve on previous inconclusive clinical or population studies, and may even duplicate prior work on a question that has already been answered (Savulescu et al. 1996; Hotopf 2002; Tunis et al. 2003; Zwarenstein and Oxman 2006).

Despite substantial increases in public and private funding for clinical research over the last decade, research output still often fails to provide specific answers for many common, important questions posed by healthcare decision-makers (Pearson and Jones 1997; Hotopf 2002; Lenfant 2003). This lack is most apparent in the conclusions of systematic literature reviews, HTA reports and clinical practice guidelines. These research syntheses are designed to provide a comprehensive summation of the available evidence for decision-makers in the healthcare system, but this aim is routinely stymied by the poor quality and inadequate quantity of the available evidence (Tunis et al. 2003). The relevance disconnect between the research agenda and societal need undermines efforts to improve the scientific basis of healthcare decision-making and limits the ability of public and private insurers to develop evidence-based coverage policies (Tunis et al. 2003).

Identifying Evidence Gaps, Research Gaps and Researchable Questions

Health services research is crucial to controlling healthcare costs in the long term and ensuring optimal use of healthcare resources because it can help identify innovative, less expensive alternative therapies that should be promoted, as well as costly, harmful and ineffective treatments that should be sidelined (Lenfant 1994; Claxton and Sculpher 2006). When comparing health technologies, the questions posed by healthcare decision-makers are often structured differently from those addressed in clinical trials (Figure 1). Traditional explanatory or mechanistic trials, which recruit homogeneous populations and determine how an intervention works under ideal conditions (efficacy trials), rarely satisfy all the needs of decision-makers striving to make evidence-based determinations, particularly at the policy level. In contrast, pragmatic or practical clinical trials, which assess the extent to which an intervention produces a result under ordinary circumstances (effectiveness trials), are formulated according to the information needed to make a decision and are conducted in heterogeneous patient populations under “real-world” conditions (Table 1) (de Zoysa et al. 1998; Roland and Torgerson 1998; Tunis et al. 2003; The Cochrane Collaboration 2005).

FIGURE 1.

The relationship between evidence gaps and research gaps

TABLE 1.

Summary of main differences between explanatory and pragmatic trials

| Study Characteristic | Explanatory Trials | Pragmatic Trials |
|---|---|---|
| Objective | Test of efficacy; tests a specific component of treatment | Test of effectiveness; tests a package of care rather than individual components contributing to that care |
| Inclusion/exclusion criteria | Many; narrowly defined | Few; broadly defined |
| Patient group | Selected and homogeneous | Diverse and heterogeneous; participants reflect the population for which the treatment is intended |
| Randomization | Usually randomization by participant | Often quasi-experimental designs with no randomization |
| Blinding | Often double-blind | Participants blinded to treatment allocation when possible; data collectors and analysts often blinded to treatment allocation |
| Intervention | Standardized; simple interventions, often a discrete single activity | May be a discrete single activity, but often involves a complex intervention |
| Control | Often placebo-controlled | Standard care (clinically relevant interventions or no treatment) |
| Ancillary therapy | Rarely present | Often present; reflects clinical practice |
| Outcomes | Single-objective, often laboratory-based, outcomes | Wider spectrum; measures that are familiar to prescribing clinicians and relevant to everyday life, such as function and quality of life |
| Setting | Experimental setting | Routine care setting |
| Technical skill and experience of practitioners | Usually experts | Wide variation |
| Compliance | Maximized; measured to assure high level | Not essential, often low; often measured as an outcome; includes non-compliers and dropouts |
| Sample size | Small; standard statistical determination of sample size | Large |
| Co-morbidities | Often none | Often present |
| Informed consent | Lengthy | Brief |
| Follow-up | Usually short-term | Longer-term |
| Data collection | Extensive | Limited |
| Confounding | Controlled where possible | Not controlled |
| Internal validity (the extent to which the study design is likely to have precluded bias) | Higher | Lower |
| External validity (degree of generalizability of the results) | Lower | Higher |

The majority of trials conducted to date have been explanatory trials because most major research funding organizations do not have an explicit mandate to fund studies that are important to decision-makers (Hotopf 2002; Tunis et al. 2003). In an attempt to redress this deficiency, various research funding agencies in the USA and Canada have sponsored pragmatic trials in recent years, but these organizations still do not have a systematic mechanism for identifying decision-makers' areas of priority (Tunis et al. 2003; Glasgow et al. 2006). The resulting evidence gap can be defined as all the evidence missing from a body of research on a particular topic that would otherwise potentially answer the questions of decision-makers (clinicians, other practitioner groups, administrators, policy makers) (Figure 1). By systematically summarizing the available evidence in response to policy-driven questions, HTAs routinely identify evidence gaps that are relevant to policy makers and the attendant “research gaps,” that is, the additional research needed, from a policy maker's perspective, to address the evidence gap in the available primary research. There are almost always fewer research gaps than evidence gaps because, while it would be nice to know everything (the evidence gap), most of the time decision-makers must be content with picking a few aspects of the evidence gap that would be the most useful for informing decisions and the most practicable to answer within the time and resource constraints of the research environment (the research gap).

Thus, HTAs are an as yet unexploited source of systematically generated, comprehensive information that could be used by research funding agencies to bridge the evidence gap and formulate researchable questions that are relevant to decision-makers. However, relying solely on the producers of HTA reports to identify research gaps will result in an extensive list, but not necessarily one that is relevant to clinicians or policy makers (de Vet et al. 2001), since HTAs are circumscribed by the inherent limitations of the evidence base they summarize. Any endeavour to derive researchable questions from the research gaps identified by HTAs must include researchers, policy makers, clinicians, consumers and the public, since each group will often have different opinions on the need for future research and how it should be designed, financed and developed (Black 2001; Lomas et al. 2003).

Using HTA to Inform the Research Funding Agenda

World experience

From the results of a recent survey of members of the International Network of Agencies for Health Technology Assessment, it appears that only two countries, Belgium and the United Kingdom, have a formal process for linking the identification of research gaps from HTA reports to the research funding process (Table 2) (Scott et al. 2006). Among many other HTA agencies, the use of HTA reports to help funding agencies address evidence gaps usually occurs in an ad hoc, serendipitous fashion, if at all. The agencies surveyed identified a number of challenges in pursuing such a process, including insufficient resources, in terms of personnel and time, to commit to such a project; the difficulty of providing clear explanations and valid recommendations for future research from HTAs; a reluctance to interfere with an already established research agenda; and the logistical complexity of establishing such a long-term strategy.

TABLE 2.

Summary of the INAHTA member survey results and environmental scan

| Agency/Country | Formal Process |
|---|---|
| Institute of Technology Assessment (ITA), Austria | No* |
| Belgian Health Care Knowledge Centre (KCE), Belgium | Yes*. Unanswered questions identified by HTA reports can be submitted as a new topic proposal for the following year's work program. Topic proposals are reviewed annually. Selected proposals, which are chosen according to their health policy relevance or potential impact, are funded by the KCE. |
| Agence d'évaluation des technologies et des modes d'intervention en santé (AETMIS), Canada | No* |
| Danish Centre for Evaluation and HTA (DACEHTA), Denmark | No*† |
| Hungarian HTA agency (HunHTA), Hungary | No* |
| The Advisory Council on Health Research (RGO); Organization for Health Research and Development (ZonMw), Netherlands | Unclear whether topics for future research come from HTA reports*. A subprogram of the Health Care Efficiency Research Program focuses on closing the knowledge gaps to promote the use of cost-effective interventions. Details of the process are unclear. The RGO sets priorities for the HTA program of ZonMw, which then invites researchers to formulate research proposals on the specific research topics. |
| Norwegian Knowledge Centre for the Health Services (NOKC), Norway | No* |
| The Catalan Agency for Health Technology Assessment and Research (CAHTA), Spain | Unclear whether topics for future research come from HTA reports†. The agency designs and assesses research protocols and projects. CAHTA also manages a biennial call for clinical and healthcare services research proposals that are financed by the CatSalut and the Inter-department Research and Technological Innovation Commission (CIRIT). |
| Basque Office for Health Technology Assessment (OSTEBA), Spain | No* |
| The Swedish Council on Technology Assessment in Health Care (SBU), Sweden | No* |
| The Health Technology Assessment Program (NCCHTA), United Kingdom | Yes*†. A formal section in HTA reports identifies further research required. This information is fed into the NCCHTA prioritization process, together with research recommendations from other high-quality systematic reviews, Cochrane Reviews, NICE guidance, NHS programs, a Web-based call for suggestions and special-interest groups. The HTA program invites bids for the prioritized research. The Health Technology Assessment Commissioning Board (HTACB) oversees the commissioning process. |
| National Institute for Health and Clinical Excellence (NICE), United Kingdom | Yes†. The Research and Development Programme fills evidence gaps identified in NICE reports by actively promoting them to research funding bodies. The program normally commissions research in partnership with another funding organization. |
| The Agency for Healthcare Research and Quality (AHRQ), United States | No* |
| Veterans Affairs Technology Assessment Program (VATAP), United States | No* |

HTA – health technology assessment; NHS – National Health Service

Sources: *Personal communication; †agency website.

The system in the United Kingdom, which seems to be the most comprehensive and systematic, is facilitated by two agencies, the National Coordinating Centre for Health Technology Assessment (NCCHTA) and the National Institute for Health and Clinical Excellence (NICE). NICE issues guidance for the National Health Service on public health, clinical practice and the use of health technologies. Evidence gaps identified by NICE guidance reports are fed into the NICE Research and Development Programme, where they are prioritized by a Research and Development Advisory Committee according to their importance, relevance and feasibility. As NICE is unable to commission research directly, the research recommendations and their priority ranking are published on the NICE website. High-priority topics are actively promoted to public and private research funding bodies (Claxton and Sculpher 2006; NICE 2006).

In contrast, the NCCHTA, which contracts review groups to undertake the HTA reports used to inform NICE guidance, is one of the largest public funders of research in the United Kingdom. It has an annual budget of GB£13 million, 90% of which is spent on new randomized controlled trials (Stevens and Milne 2004; NCCHTA 2007a). The HTA reports identify areas where further research is required, and this information is used by the NCCHTA, together with research recommendations from other sources, to establish a list of research topics. Informal descriptions of the research questions (vignettes) are then submitted to the relevant advisory panel and an HTA Prioritisation Strategy Group, which prioritize the topics according to their importance, urgency and potential cost (Claxton and Sculpher 2006; NCCHTA 2007a,b). Although this system appears to work well, the separation of research prioritization and commissioning from reimbursement decisions is not ideal (Claxton and Sculpher 2006; NCCHTA 2007a).

Canadian experience: A pilot project in Alberta, Canada

In Alberta, a unique situation exists in which an independent, government-sponsored HTA program is housed within a provincial research funding organization, the Alberta Heritage Foundation for Medical Research (AHFMR). The AHFMR disburses over $65 million each year and is one of the main sources of public funding for biomedical and health research in the province of Alberta (AHFMR 2003). Funding applications are assessed for their feasibility, importance and originality by external reviewers with expertise in the relevant field. The applications are then ranked by an AHFMR committee of reviewers (AHFMR 2003). While the AHFMR designates broad research priority areas for different categories of funding, there is no mechanism for systematically and objectively identifying evidence gaps. Therefore, a pilot project was undertaken to

1. assess how well HTA reports published by the AHFMR HTA program could identify evidence gaps and delineate the concomitant research gaps; and
2. develop a process for distilling researchable questions from the research gaps identified by an AHFMR HTA report to inform the research funding programs of the AHFMR.

Objective 1: To Assess How Well AHFMR HTA Reports Delineate Research Gaps

An internal assessment was conducted of a consecutive series of HTA reports – four full HTA reports and four shorter reports (TechNotes) – published by the HTA program between 2002 and 2003 (Scott et al. 2006). All bar one of the reports were produced in response to questions posed by health ministry policy makers, who are the main clients of the HTA program.

The problem of limited evidence was noted repeatedly across the reviewed HTA reports, but evidence and research gaps were not consistently or clearly highlighted and were often embedded within lengthy discussion sections. More useful information on evidence gaps was gleaned from personal interviews with the HTA researchers than from reading their reports (Scott et al. 2006).

Objective 2: To Develop a Process for Distilling Relevant Researchable Questions from the Research Gaps Identified in AHFMR HTA Reports

Two questionnaires were developed, one for researchers and one for clinicians/policy makers, to simultaneously formulate researchable questions from the research gaps uncovered in HTA reports and to capture the different perspectives and priorities of a representative cross-section of stakeholders (Figure 2). Three of the four questions were the same; the fourth item was omitted from the researcher questionnaire. The questionnaires focused on two HTA reports (Ospina and Harstall 2002, 2003) published by the HTA program on chronic pain and were piloted with an Information Sharing Group on Chronic Pain comprising one policy maker, one policy maker/health services researcher, one clinician, one clinician/health administrator and one HTA researcher who co-authored the reports.

FIGURE 2.

Questionnaire used for interviewing members of the Information Sharing Group on Chronic Pain

- Does this report sufficiently answer the research question(s)? (yes / no / partially)
- What are the main research and clinical questions that remain unresolved after reading this report?
- What are the main policy questions that remain unresolved after reading this report?
- Please prioritize these issues from the perspective of what are, in your opinion, the most important issues that need to be answered about this topic to improve this aspect of care/service within the Alberta health system.
- What kind of information/data/study would best answer the unresolved issues (research, clinical and policy), in your opinion?
- *Please identify a research group or individual(s) who would be willing to assist with designing/formulating/undertaking a research proposal to address the outstanding question(s).
- Are you willing to be an active participant in upcoming research projects?

*This question was not posed to the HTA researcher.

The results were compiled into a list of research questions on chronic pain, reflecting the three stakeholder perspectives (health services research, clinical and policy), along with the names of potential researchers identified in the questionnaire as being willing to undertake the research. An illustrative set of results is summarized in Table 3. These were presented to the Vice President of Programs and the Director of Grants and Awards of the AHFMR. The consensus was that the process held promise and could work on a case-by-case basis. In addition, the following comments were made:

- A dedicated group needs to be identified that will have the commitment to shepherd the process from start to finish. It is important for the HTA program to link the stakeholders.
- Research in Alberta is largely investigator driven, so a paradigm shift is required. The research gaps project may be an important step in achieving this.
- The level of complexity of the process should reflect the research dollars available for funding.
- Using HTAs to identify research gaps could provide the AHFMR and its stakeholders with a mechanism for pinpointing research needs.

TABLE 3.

Summary of the research gaps/opportunities identified from an HTA report on multidisciplinary programs for chronic pain

| Research gaps noted in the text of the report per Objective 1 |
|---|
| Research gaps/researchable questions identified by the report per Objective 2* |
| Research and clinical questions: |
| Policy questions: |
| What kind of information/data/study would best answer the unresolved issues? |

*Answers to questions 1, 2 and 3 of the survey only.

Moving from Theory to Practice

Implementation issues

Prioritizing the Research Questions

Priority should be given to medical and health services research that is most likely to improve health and the performance of the healthcare system (Fraser 2000). Since it is unrealistic to expect that all HTA reports will automatically undergo the process outlined to identify research gaps, formalized, objective criteria for prioritizing which HTA reports are chosen must be developed. Also, in cases where a number of researchable questions are identified from an HTA with no clear front runner, there must be an established process and criteria for prioritizing these questions.
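One way to picture such criteria-based prioritization is a simple weighted score. The sketch below is illustrative only: the criteria names, the weights, the 1-to-5 scale and the example questions are all hypothetical and are not taken from the Alberta pilot project or from any agency's actual process.

```python
from dataclasses import dataclass

# Hypothetical criteria and weights, chosen only for illustration.
WEIGHTS = {"importance": 0.5, "feasibility": 0.3, "urgency": 0.2}


@dataclass
class ResearchQuestion:
    text: str
    scores: dict  # criterion name -> panel score on an assumed 1-5 scale


def priority(question, weights=WEIGHTS):
    """Weighted sum of the panel's scores for one question."""
    return sum(weights[c] * question.scores[c] for c in weights)


def rank(questions, weights=WEIGHTS):
    """Return the questions ordered from highest to lowest priority."""
    return sorted(questions, key=lambda q: priority(q, weights), reverse=True)


# Placeholder questions with made-up scores.
questions = [
    ResearchQuestion("Question A", {"importance": 5, "feasibility": 2, "urgency": 3}),
    ResearchQuestion("Question B", {"importance": 4, "feasibility": 4, "urgency": 4}),
    ResearchQuestion("Question C", {"importance": 2, "feasibility": 5, "urgency": 1}),
]
ranked = [q.text for q in rank(questions)]
```

In practice the criteria and their weights would have to be agreed on by the stakeholders themselves; a score of this kind could support, but not replace, the panel's judgement.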

Assembling a representative group that can provide a balanced review of the funding proposals may be challenging. In addition, the entire process must be shepherded to ensure that it is timely, that the proposals focusing on the research gaps do not get sidelined by other funding priorities and that the needs of all stakeholders are taken into account in the research design. Questions identified in HTA reports, which are often based on international research, must also be contextualized against local needs, the extant research capacity and the mandate of the funding agency. This is particularly relevant for Alberta, which has sizable financial resources available but only three million residents (AHFMR 2006; Statistics Canada 2006). If Alberta does not have the required clinical expertise for a specific research proposal, consideration should be given to the question of pursuing an out-of-province collaboration and possible sources of additional research dollars. The role of other Canadian HTA agencies in coordinating and establishing research policy also needs to be ascertained to ensure a unified strategy. For example, the Ontario Health Technology Advisory Committee has already established a Program for Assessment of Technology in Health (PATH) that undertakes field evaluations to collect primary data in parallel with an HTA for new technologies that have a scant evidence base (Goeree and Levin 2006).

To ensure acceptance by stakeholders in the research community, additional targeted funds may need to be found for identified research gaps rather than shifting money within the pool of currently available dollars. Care must also be taken to ensure that explanatory trials and basic curiosity-driven research are not underfunded as a result of an increased focus on policy-related research (Califf and Woodlief 1997; “Research Funding” 2003).

Obtaining Clinical, Policy, Consumer and Public Input

The Alberta pilot project showed that the research questions identified varied with the respondent's interests, role and educational background, thus emphasizing the need for multiple perspectives to increase relevance. The project owed much to the serendipitous existence of the Information Sharing Group, which provided a pool of accessible, motivated and knowledgeable clinicians, researchers and policy makers who could participate in the process. In most cases, such a group is not likely to be available, so who then provides clinical and policy input? And who provides public input? One possible solution is to engage the policy maker(s) who asked the question in the first place, and the clinicians who were either external reviewers for the HTA report, or who may have provided clinical expertise during its synthesis. Professional organizations may also be able to identify clinical experts, and lobby groups and consumer advocacy agencies may be a potential source of consumer participants. Involving the public and consumers in the production of HTA reports, rather than just at the tail end, would make this process even more seamless.

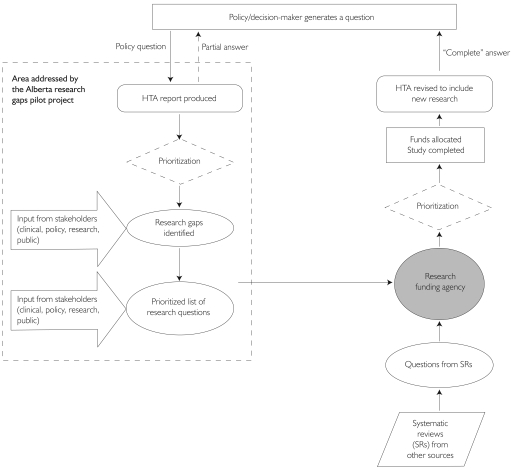

Establishing a Feedback Loop

For the process of identifying research gaps to be effective, the funded research needs to be fed back into another HTA, or some other mechanism, to provide the answer to the decision-maker who originally asked the question and close the loop (Figure 3). Therefore, criteria need to be established at the outset for determining when the question has been answered, or when further research is unlikely to yield any significant additional value (Claxton et al. 2004). These criteria will also help in gauging whether the research dollars were well spent. This decision would most likely be made within the funding agency by a committee that would ideally include the multidisciplinary team that helped formulate the initial research question. The cycle may have to go through a number of iterations before there is sufficient evidence to satisfy the decision-maker (Irwig et al. 1998).
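The decision made at the end of each pass through the cycle can be sketched as a small rule. This is a toy illustration under stated assumptions: the function name, its three inputs and the comparison of expected benefit with research cost are hypothetical stand-ins for whatever criteria the committee establishes at the outset.

```python
def next_step(question_answered, expected_benefit, research_cost):
    """Decide what happens after one pass through the feedback loop.

    All three inputs are hypothetical judgements supplied by the
    review committee; the benefit-versus-cost comparison is an
    assumed stopping criterion, used here only for illustration.
    """
    if question_answered:
        # Loop closed: the answer goes back to whoever asked the question.
        return "report to decision-maker"
    if expected_benefit <= research_cost:
        # More research is unlikely to add enough value to justify its cost.
        return "stop: further research unlikely to add value"
    # Otherwise the cycle goes through another iteration.
    return "fund further research"
```

For example, `next_step(False, 5.0, 2.0)` returns `"fund further research"`, which corresponds to another iteration of the cycle.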

FIGURE 3.

Flow diagram of the conceptual framework for the feedback loop involving research gaps identified by HTAs

Good coordination and communication among all the actors in the process are essential for success, and the process must be timely to ensure that the end result is still relevant to the policy maker and the current clinical context. In the Alberta pilot project, the HTA reports were the common link between the disparate stakeholders and, thus, provided a focal point for getting all the key players at the table and potentially narrowing the problematic discontinuity in decision-making between the research commissioning and reimbursement spheres.

Issues from the funder's perspective

The proposed approach of utilizing HTA reports to identify evidence gaps and subsequently derive research questions should in theory appeal to funders. After all, the relevance to decision-making, and the importance of the area in which the evidence gaps have been identified, should already be evident from the fact that the original question leading to the HTA came directly from the policy makers' environment. This is a big advantage for research funders eager to demonstrate the impact of the research they support on the efficiency of the health system. The process could also help funders find the appropriate balance between supporting broadly based, investigator-driven research (usually referred to as basic research, even within the areas of health services and population-based research) and the more applied, targeted research based on an HTA-linked process.

However, even the most elaborate process for identifying evidence gaps and defining researchable questions must ultimately link those questions with appropriately qualified, available investigators who are willing to do the research. For most publicly run, non-profit funders, this may require a significant cultural shift from a reactive, application-driven, competitive process for allocating scarce research resources to one that is more proactive, targeted and outcomes-based, and that relies on a more collaborative, contractual approach. Research funders still face a number of barriers to developing such an approach, including the scarcity of funds available for investigator-driven research, an academic recognition system that generally does not value “contract” research to the same extent as conventional, peer-reviewed, competitive research, and the perception that such applied research should be supported from within the healthcare system itself.

Methodological issues: Pragmatic versus explanatory trials

HTA is policy driven, so most research gaps identified by HTAs will involve questions of effectiveness rather than efficacy. Using HTAs to formulate researchable questions for funding may encourage more pragmatic clinical trials (Table 1). This, in turn, could force an expansion of HTA quality assessment criteria and entail more methodological development and training in the HTA community to tackle such issues as interpreting discrepant results between explanatory and pragmatic trials and ensuring accurate synthesis of the research evidence.

Key Elements in Using HTAs to Identify Research Gaps

During the Alberta pilot project, it became apparent that certain elements are essential for moving the initiative forward.

Explicit, actionable research questions

To be a facilitating factor in setting the research agenda, HTAs must be more explicit and consistent in defining specific research gaps and questions. However, such definition cannot be done in a contextual vacuum. The pilot project demonstrated the importance of incorporating input from motivated stakeholders.

Good communication

Because research funders and policy makers often have different priorities, HTA researchers must be able to “translate” HTA-derived research recommendations into a language that funders understand. Involving the funders in the design of the questionnaire provided a forum for ascertaining and understanding their priorities. In addition, we found the funders surprisingly open to exploring ways of increasing the relevance and impact of their disbursement decisions, so HTA agencies need not be shy in approaching local research funding agencies with such proposals.

On June 30, 2006, after 11 years at the AHFMR, the HTA program moved to the Institute of Health Economics, a prominent provincial HTA agency. While ostensibly problematic, this move actually proved beneficial. Firstly, the potential conflict of interest that may have been cited by other provincial HTA groups was removed by the disengagement. Secondly, the relationship forged between the AHFMR and the HTA program will now provide a model for establishing similar partnerships with other external HTA agencies.

Commitment

The infrastructure needed for prioritizing HTA-derived research proposals and managing the process outlined already exists within the AHFMR. Nonetheless, the composition of existing panels and committees that oversee funding allocation will have to change to incorporate a broader range of stakeholders than is currently represented. An intensive, ongoing commitment of resources and guidance from the HTA program is also necessary until the process gains enough momentum to be self-perpetuating. Even in the long term, HTA researcher input will remain a crucial part of the translation process between policy makers and the funding agency.

Conclusion

A process was developed in Alberta for distilling evidence gaps identified in HTAs on chronic pain management into researchable questions that a provincial research funding agency can use to inform its research agenda. This novel approach also identified a research team to coordinate and potentially conduct the necessary research studies. Although there will always be evidence gaps, such a process could serve as a starting point for funding agencies to fill some of these gaps by reconciling the different agendas of researchers and healthcare decision-makers. A detailed review of more established programs, particularly the system in the United Kingdom, may help to inform these efforts. The producers of HTAs can augment such processes by identifying and explicitly describing evidence gaps in the clinical research in their reports and involving clinicians, policy makers, consumers and the public in the production of HTAs.

Using HTA results to identify research gaps may be a way for funding agencies to better incorporate the needs of healthcare decision-makers into the research agenda and to demonstrate the impact of the research they fund. Like the research endeavour itself, the orchestration of such a paradigm shift will involve an incremental evolution from this first step.

Acknowledgements

We are indebted to Dr. R. Taylor for provision of information and for valuable comments on the draft version of the report upon which this manuscript is based. We are grateful to the following individuals for their participation in and contribution to the pilot project: Mr. M. Taylor, Ms. L. Chan, Ms. P. Corabian, Ms. L. Dennett, Dr. B. Guo, Dr. D. Juzwishin, Ms. M. Ospina and Dr. Z. Tang. None of the authors have any personal conflicts of interest.

We also wish to thank the INAHTA member agencies who responded to the environmental scan surveys conducted in 2004 and 2006. Members of the Information Sharing Group on Chronic Pain were Mr. H. Borowski, Dr. S. Rashiq, Dr. D. Schopflocher and Dr. P. Taenzer.

Contributor Information

N. Ann Scott, Research Associate, Health Technology Assessment Unit, Institute of Health Economics, Edmonton, AB.

Carmen Moga, Research Associate, Health Technology Assessment Unit, Institute of Health Economics, Edmonton, AB.

Christa Harstall, Director, Health Technology Assessment Unit, Institute of Health Economics, Edmonton, AB.

Jacques Magnan, Vice President – Programs, Alberta Heritage Foundation for Medical Research, Edmonton, AB.

References

- Alberta Heritage Foundation for Medical Research (AHFMR). AHFMR 99-02 Triennial Report. 2003. Retrieved January 23, 2008. <http://www.ahfmr.ab.ca/publications>.

- Alberta Heritage Foundation for Medical Research (AHFMR). Highlights. 2006. Retrieved January 23, 2008. <http://www.ahfmr.ab.ca/publications>.

- Banta H.D., Oortwijn W.J. European Commission, Directorate-General for Employment, Industrial Relations and Social Affairs, Directorate V/F. Health Technology Assessment in Europe: The Challenge of Coordination. Luxembourg: Office for Official Publications of the European Communities; 1999.

- Black N. Evidence Based Policy: Proceed with Care. British Medical Journal. 2001;323(7307):275–79. doi: 10.1136/bmj.323.7307.275.

- Califf R.M., Woodlief L.H. Pragmatic and Mechanistic Trials. European Heart Journal. 1997;18(3):367–70. doi: 10.1093/oxfordjournals.eurheartj.a015256.

- Claxton K., Ginnelly L., Sculpher M., Philips Z., Palmer S. A Pilot Study on the Use of Decision Theory and Value of Information Analysis as Part of the NHS Health Technology Assessment Programme. Health Technology Assessment. 2004;8:1–118. doi: 10.3310/hta8310. Retrieved January 23, 2008. <http://www.hta.nhsweb.nhs.uk/fullmono/mon831.pdf>.

- Claxton K., Sculpher M. Using Value of Information Analysis to Prioritise Health Research: Some Lessons from Recent UK Experience. Pharmacoeconomics. 2006;24:1055–68. doi: 10.2165/00019053-200624110-00003.

- The Cochrane Collaboration. Glossary of Terms in the Cochrane Collaboration. Chichester, UK: John Wiley & Sons; 2005. Version 4.2.5. Retrieved January 23, 2008. <http://www.cochrane.org/resources/handbook/glossary.pdf>.

- de Vet H.C., Kroese M.E., Scholten R.J., Bouter L.M. A Method for Research Programming in the Field of Evidence-Based Medicine. International Journal of Technology Assessment in Health Care. 2001;17(3):433–41. doi: 10.1017/s0266462301106148.

- de Zoysa I., Habicht J.-P., Pelto G., Martines J. Research Steps in the Development and Evaluation of Public Health Interventions. Bulletin of the World Health Organisation. 1998;76(2):127–33.

- Fraser D.W. Overlooked Opportunities for Investing in Health Research and Development. Bulletin of the World Health Organisation. 2000;78(8):1054–61.

- Glasgow R.E., Davidson K.W., Dobkin P.L., Ockene J., Spring B. Practical Behavioral Trials to Advance Evidence-Based Behavioral Medicine. Annals of Behavioral Medicine. 2006;31(1):5–13. doi: 10.1207/s15324796abm3101_3.

- Godwin M., Ruhland L., Casson I., MacDonald S., Delva D., Birtwhistle R., Lam M., Seguin R. Pragmatic Controlled Clinical Trials in Primary Care: The Struggle between External and Internal Validity. BMC Medical Research Methodology. 2003;3:28. doi: 10.1186/1471-2288-3-28.

- Goeree R., Levin L. Building Bridges between Academic Research and Policy Formulation: The PRUFE Framework – An Integral Part of Ontario's Evidence-Based HTPA Process. Pharmacoeconomics. 2006;24(11):1143–56. doi: 10.2165/00019053-200624110-00010.

- Hotopf M. The Pragmatic Randomised Controlled Trial. Advances in Psychiatric Treatment. 2002;8:326–33.

- International Network of Agencies for Health Technology Assessment (INAHTA). 2008. Retrieved January 23, 2008. <http://www.inahta.org>.

- Irwig L., Zwarenstein M., Zwi A., Chalmers I. A Flow Diagram to Facilitate Selection of Interventions and Research for Health Care. Bulletin of the World Health Organisation. 1998;76(1):17–24.

- Lenfant C. Research Needs and Opportunities. Maintaining the Momentum. Circulation. 1994;90(5):2192–93. doi: 10.1161/01.cir.90.5.2192.

- Lenfant C. Clinical Research to Clinical Practice – Lost in Translation? New England Journal of Medicine. 2003;349(9):868–74. doi: 10.1056/NEJMsa035507.

- Lomas J., Fulop N., Gagnon D., Allen P. On Being a Good Listener: Setting Priorities for Applied Health Services Research. Milbank Quarterly. 2003;81(3):363–88. doi: 10.1111/1468-0009.t01-1-00060.

- MacRae K.D. Pragmatic versus Explanatory Trials. International Journal of Technology Assessment in Health Care. 1989;5(3):333–39. doi: 10.1017/s0266462300007406.

- Muir Gray J.A. Evidence-Based Healthcare. New York: Churchill Livingstone; 1997.

- National Coordinating Centre for Health Technology Assessment (NCCHTA) Annual Report of the NIHR Health Technology Assessment Programme 2006. 2007a Retrieved January 23, 2008. <http://www.hta.nhsweb.nhs.uk/publicationspdfs/annualreports/annualreportfinalweb.pdf>.

- National Coordinating Centre for Health Technology Assessment (NCCHTA) The Principles Underlying the Work of the National Coordinating Centre for Health Technology Assessment. 2007b Retrieved January 23, 2008. <http://www.hta.nhsweb.nhs.uk/about/probity.pdf>.

- National Institute for Health and Clinical Excellence (NICE). National Institute for Clinical Excellence Research and Development Strategy. 2006. Retrieved January 23, 2008. <http://www.nice.org.uk/page.aspx?o=295953>.

- Office of Technology Assessment. Development of Medical Technology: Opportunities for Assessment. 1976. Retrieved January 23, 2008. <http://govinfo.library.unt.edu/ota/Ota_5/DATA/1976/7617.PDF>.

- Ospina M., Harstall C. Prevalence of Chronic Pain: An Overview. 2002 HTA #29. Retrieved January 23, 2008. <http://www.ihe.ca/hta/publications.html>.

- Ospina M., Harstall C. Multidisciplinary Pain Programs for Chronic Pain: Evidence from Systematic Reviews. 2003 HTA #30. Retrieved January 23, 2008. <http://www.ihe.ca/hta/publications.html>.

- Pearson P., Jones K. Primary Care – Opportunities and Threats. Developing Professional Knowledge: Making Primary Care Education and Research More Relevant. British Medical Journal. 1997;314(7083):817–20. doi: 10.1136/bmj.314.7083.817.

- Research Funding: The Problem with Priorities. Nature Materials. 2003;2(10):639. doi: 10.1038/nmat992. Editorial.

- Roland M., Torgerson D.J. What Are Pragmatic Trials? British Medical Journal. 1998;316(7127):285. doi: 10.1136/bmj.316.7127.285.

- Savulescu J., Chalmers I., Blunt J. Are Research Ethics Committees Behaving Unethically? Some Suggestions for Improving Performance and Accountability. British Medical Journal. 1996;313(7069):1390–93. doi: 10.1136/bmj.313.7069.1390.

- Scott A., Moga C., Harstall C. Using HTA to Identify Research Gaps: A Pilot Study. 2006 HTA Initiative #24. Retrieved January 23, 2008. <http://www.ihe.ca/hta/publications.html>.

- Statistics Canada. Population by Year, by Province and Territory. 2006. Retrieved October 2007. <http://www40.statcan.ca/l01/cst01/demo02a.htm>.

- Stevens A., Milne R. Health Technology Assessment in England and Wales. International Journal of Technology Assessment in Health Care. 2004;20(1):11–24. doi: 10.1017/s0266462304000741.

- Tunis S.R., Stryer D.B., Clancy C.M. Practical Clinical Trials: Increasing the Value of Clinical Research for Decision Making in Clinical and Health Policy. Journal of the American Medical Association. 2003;290(12):1624–32. doi: 10.1001/jama.290.12.1624.

- Zwarenstein M., Oxman A. Why Are So Few Randomized Trials Useful, and What Can We Do about It? Journal of Clinical Epidemiology. 2006;59(11):1125–26. doi: 10.1016/j.jclinepi.2006.05.010.