Abstract

Aims

Many fMRI protocols for localizing speech comprehension have been described, but there has been little quantitative comparison of these methods. We compared five such protocols in terms of areas activated, extent of activation, and lateralization.

Methods

FMRI BOLD signals were measured in 26 healthy adults during passive listening and active tasks using words and tones. Contrasts were designed to identify speech perception and semantic processing systems. Activation extent and lateralization were quantified by counting activated voxels in each hemisphere for each participant.

Results

Passive listening to words produced bilateral superior temporal activation. After controlling for pre-linguistic auditory processing, only a small area in the left superior temporal sulcus responded selectively to speech. Active tasks engaged an extensive, bilateral attention and executive processing network. Optimal results (consistent activation and a strongly lateralized pattern) were obtained by contrasting an active semantic decision task with a tone decision task. There was a striking similarity between the network of brain regions activated by the semantic task and the network of brain regions that showed task-induced deactivation, suggesting that semantic processing occurs during the resting state.

Conclusions

FMRI protocols for mapping speech comprehension systems differ dramatically in pattern, extent, and lateralization of activation. Brain regions involved in semantic processing were identified only when an active, non-linguistic task was used as a baseline, supporting the notion that semantic processing occurs whenever attentional resources are not controlled. Identification of these lexical-semantic regions is particularly important for predicting language outcome in patients undergoing temporal lobe surgery.

Keywords: language, fMRI, speech, comprehension, semantics, temporal lobe

Introduction

Localization of language areas prior to brain surgery can help determine the risk of post-operative aphasia and may be useful for modifying surgical procedures to minimize such risk. Anterior temporal lobe resection is a common and highly effective treatment for intractable epilepsy (Wiebe et al., 2001; Tellez-Zenteno et al., 2005), but carries a 30–50% risk of decline in naming ability when performed on the left temporal lobe (Hermann et al., 1994; Langfitt & Rausch, 1996; Bell et al., 2000; Sabsevitz et al., 2003). In addition to retrieval of names, the left temporal lobe is classically associated with speech comprehension (Wernicke, 1874). These seemingly different language functions both depend on common systems for processing speech sounds (phonology) and word meanings (lexical semantics), both of which are located largely in the temporal lobe (Indefrey & Levelt, 2004; Awad et al., 2007). Identification of these phonological and lexical-semantic systems is therefore an important goal in the presurgical mapping of language functions.

Functional magnetic resonance imaging (fMRI) is used increasingly for this purpose (Binder, 2006). FMRI is a safe, non-invasive procedure for localizing hemodynamic changes associated with neural activity. Many fMRI studies of healthy adults have investigated the brain correlates of speech comprehension, but they have used a variety of activation procedures and obtained widely varying results, and these protocols have rarely been compared systematically or quantitatively. There is at present little agreement, for example, on which type of procedure produces the strongest activation, which is most specific for detecting processes of interest, and which is associated with the greatest degree of hemispheric lateralization.

Speech comprehension protocols can be categorized in general terms according to stimulus and task factors (Table 1). Speech is an acoustically complex stimulus that engages much of the auditory cortex bilaterally in pre-linguistic processing of spectral and temporal information (Binder et al., 2000; Poeppel, 2001). Most models of speech perception posit a late stage of auditory perception in which this complex acoustic information activates long-term representations for consonant and vowel phonemes (Pisoni, 1973; Stevens & Blumstein, 1981; Klatt, 1989). Many fMRI studies of speech perception have aimed to isolate this phonemic stage of the speech perception process by contrasting spoken words with non-speech auditory sounds that presumably 'subtract out' earlier stages of auditory processing. These non-speech control sounds have varied in their similarity to speech, ranging from steady-state 'noise' with no spectral or temporal information (Binder et al., 2000), to 'tones' possessing relatively simple spectral and temporal information (Démonet et al., 1992; Binder et al., 2000; Desai et al., 2005), to various synthetic speech-like sounds with spectrotemporal complexity comparable to speech (Scott et al., 2000; Dehaene-Lambertz et al., 2005; Liebenthal et al., 2005; Möttönen et al., 2006). As expected, the more similar the control sounds are to the speech sounds in terms of acoustic complexity, the less activation occurs in primary and association auditory areas in the superior temporal lobe (Binder et al., 2000; Scott et al., 2000; Davis & Johnsrude, 2003; Specht & Reul, 2003; Uppenkamp et al., 2006).

Table 1.

Five types of protocols for mapping speech comprehension areas.

| | Language Stimulus | Language Task State | Control Stimulus | Control Task State |
|---|---|---|---|---|
| 1. | Speech | Passive | none | Passive ("Rest") |
| 2. | Speech | Passive | Non-Speech | Passive |
| 3. | Speech | Active, Linguistic | none | Passive ("Rest") |
| 4. | Speech | Active, Linguistic | Non-Speech | Active, Nonlinguistic |
| 5. | Speech | Active, Semantic | Speech | Active, Phonological |

Another principle useful for categorizing speech comprehension studies is whether or not an active task is requested of the participants. Many studies employed passive listening to speech, whereas others required participants to respond to the speech sounds according to particular criteria. Active tasks focus participants' attention on a specific aspect of a stimulus, such as its form or meaning, which is assumed to cause 'top-down' activation of the neural systems relevant for processing the attended information. For example, some prior studies of speech comprehension sought to identify the brain regions specifically involved in processing word meanings (lexical-semantic system) by contrasting a semantic task using speech sounds with a phonological task using speech sounds (Démonet et al., 1992; Mummery et al., 1996; Binder et al., 1999).

A final factor to consider in categorizing speech comprehension studies is whether or not an active task is used for the baseline condition. Performing a task of any kind requires a variety of general functions such as focusing attention on the relevant aspects of the stimulus, holding the task instructions in mind, making a decision, and generating a motor response. Active control tasks are used to 'subtract out' these and other general processes that are considered non-linguistic in nature and therefore irrelevant for the purpose of language mapping. Another potential benefit of such tasks is that they provide a better-controlled baseline state than resting (Démonet et al., 1992). Evidence suggests that the conscious resting state is characterized by ongoing mental activity experienced as 'daydreams', 'mental imagery', 'inner speech' and the like, which is interrupted when an overt task is performed (Antrobus et al., 1966; Pope & Singer, 1976; Singer, 1993; Teasdale et al., 1993; Binder et al., 1999; McKiernan et al., 2006). It has been proposed that this 'task unrelated thought' depends on the same conceptual knowledge systems that underlie language comprehension and production of propositional language (Binder et al., 1999). Thus, resting states and states in which stimuli are presented with no specific task demands may actually be conditions in which there is continuous processing of conceptual knowledge and mental production of meaningful 'inner speech'.

Table 1 illustrates five types of contrasts that have been used to map speech comprehension systems. The first four are obtained by crossing either a passive or active task state with a control condition using either silence or a non-speech auditory stimulus. The last contrast uses speech sounds in both conditions while contrasting an active semantic task with an active phonological task. Though there are other possible contrasts not listed in the table (e.g., Active Speech vs. Passive Non-Speech), most prior imaging studies on this topic can be classified into one of these five general types. Within each type, of course, are many possible variations on both stimulus content (e.g., relative concreteness, grammatical class, or semantic category of words; sentences vs. single words) and specific task requirements.

Our aim in the current study was to provide a meaningful comparison between these five types of protocols through controlled manipulation of the factors listed in Table 1. Interpreting differences between any two of these types requires that the same or comparable word stimuli be used in each case. We used single words for each protocol, as these lend themselves readily to use in semantic tasks. Similarly, we used the same non-speech tone stimuli for the passive and active control conditions in protocols 2 and 4. Finally, because fMRI results are known to be highly variable across individuals and scanning sessions, we scanned the same 26 individuals on all five protocols in the same imaging session. The results were characterized quantitatively in terms of extent and magnitude of activation, specific brain regions activated, and lateralization of activation. The results provide the first clear picture of the relative differences and similarities, advantages and disadvantages of these speech comprehension mapping protocols.

Methods

Participants

Participants in the study were 26 healthy adults (13 men, 13 women), ranging in age from 18 to 29 years, with no history of neurologic, psychiatric, or auditory symptoms. All participants indicated strong right-hand preferences (laterality quotient > 50) on the Edinburgh Handedness Inventory (Oldfield, 1971). Participants gave written informed consent and were paid a small hourly stipend. The study received prior approval by the Medical College of Wisconsin Human Research Review Committee.

Task Conditions and Behavioral Measures

Task conditions during scanning included a resting state, a passive tone listening task, an active tone decision task, a passive word listening task, a semantic decision task, and a phoneme decision task (Table 2). Auditory stimuli were presented with a computer and a pneumatic audio system, as previously described (Binder et al., 1997). Participants kept their eyes closed during all conditions. For the resting condition, participants were instructed to remain relaxed and motionless. No auditory stimulus was presented other than the baseline scanner noise. Stimuli in the passive and active tone tasks were digitally synthesized 500-Hz and 750-Hz sinewave tones of 150 ms duration each, separated by 250-ms inter-tone intervals. These were presented as sequences of 3 to 7 tones. In the passive tone task, participants were asked simply to listen to these sequences. In the tone decision task, participants were required to respond by pressing a button with the left hand for any sequence containing two 750-Hz tones. The left hand was used in this and all other active tasks to minimize any leftward bias due to activation of the left hemisphere motor system.
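The tone-train timing and target rule described above can be sketched in Python. This is a minimal illustration under stated assumptions, not the stimulus software used in the study: the function names are hypothetical, tone frequencies are drawn at random, and "containing two 750-Hz tones" is read as exactly two high tones, which the text does not specify.

```python
import random

LOW_HZ, HIGH_HZ = 500, 750   # tone frequencies given in the text
TONE_MS, GAP_MS = 150, 250   # tone duration and inter-tone interval

def make_tone_train(n_tones, rng):
    """Return [(onset_ms, frequency_hz), ...] for a train of 3 to 7 tones."""
    if not 3 <= n_tones <= 7:
        raise ValueError("sequences contain 3 to 7 tones")
    freqs = [rng.choice((LOW_HZ, HIGH_HZ)) for _ in range(n_tones)]
    return [(i * (TONE_MS + GAP_MS), f) for i, f in enumerate(freqs)]

def train_duration_ms(train):
    """Time from the first tone onset to the last tone offset."""
    return train[-1][0] + TONE_MS

def is_tone_target(train):
    """Assumed rule (hypothetical): a target contains exactly two 750-Hz tones."""
    return sum(1 for _, f in train if f == HIGH_HZ) == 2

rng = random.Random(0)
train = make_tone_train(5, rng)
print(train_duration_ms(train))  # 4 * 400 + 150 = 1750
```

Because each additional tone adds 400 ms (150-ms tone plus 250-ms gap), trains span 950 ms (3 tones) to 2550 ms (7 tones) from first onset to last offset.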

Table 2.

Conditions used in the study.

| | Condition | Stimulus | Task |
|---|---|---|---|
| 1. | Rest | none | Rest. |
| 2. | Passive Tone | tones | Listen only. |
| 3. | Tone Decision | tones | Respond if sequence contains two high tones. |
| 4. | Passive Word | animal names | Listen only. |
| 5. | Semantic Decision | animal names | Respond if animal meets both semantic criteria. |
| 6. | Phoneme Decision | nonword syllables | Respond if item contains both target phonemes. |

Stimuli in the passive word and semantic decision tasks were 192 spoken English nouns designating animals (e.g., turtle), presented at the rate of one word every 3 seconds. In the passive word task, participants were asked simply to listen passively to these words. In the semantic decision task, participants were required to respond by a left hand button press for animals they considered to be both "found in the United States" and "used by people." No animal word was used more than once in the same task. The tone and word stimuli were matched on average intensity, average sound duration (750 ms), average trial duration (3 s), and frequency of targets (37.5% of trials). Characteristics of the tone and word stimuli and the rationale for the tone and semantic decision tasks are described elsewhere in greater detail (Binder et al., 1995; Binder et al., 1997).
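The stimulus matching stated above can be checked with simple arithmetic. The sketch assumes that a tone train's sound duration counts tone-on time only (150 ms per tone) and that train lengths of 3 to 7 tones occur equally often; neither assumption is stated in the text, but together they reproduce the 750-ms average.

```python
from fractions import Fraction
from statistics import mean

TONE_MS = 150          # duration of one tone
TRIALS_PER_BLOCK = 8   # stimulus presentations per 24-s block
BLOCK_S = 24

# Mean tone-on time, assuming train lengths 3..7 are equally likely.
mean_tone_on_ms = mean(n * TONE_MS for n in range(3, 8))

# Average trial duration: one 24-s block shared by 8 presentations.
trial_s = BLOCK_S / TRIALS_PER_BLOCK

# A 37.5% target rate corresponds to 3 targets per 8-trial block.
targets_per_block = Fraction(375, 1000) * TRIALS_PER_BLOCK

print(mean_tone_on_ms, trial_s, targets_per_block)  # 750 3.0 3
```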

Stimuli in the phoneme decision task were spoken consonant-vowel (CV) syllables, including all combinations of the consonants b, d, f, g, h, j, k, l, m, n, p, r, s, t, v, w, y, and z with the five vowels /æ/, /i/, /a/, /o/, and /u/. All syllables were edited to a duration of 400 ms. Each trial presented three CV syllables in rapid sequence, e.g., /pa dæ su/. Participants were required to respond by a left hand button press when a triplet of CV syllables included both of the consonants /b/ and /d/.
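The CV-syllable inventory and the Phoneme Decision target rule can be sketched as follows. Vowels are written with ASCII stand-ins (ae, i, a, o, u) rather than IPA symbols, and the random sampling of triplets is a hypothetical scheme; the text does not describe how triplets were assembled.

```python
import itertools
import random

CONSONANTS = list("bdfghjklmnprstvwyz")   # the 18 consonants listed in the text
VOWELS = ["ae", "i", "a", "o", "u"]       # ASCII stand-ins for /æ/, /i/, /a/, /o/, /u/

# Every consonant-vowel combination.
SYLLABLES = list(itertools.product(CONSONANTS, VOWELS))

def is_phoneme_target(triplet):
    """Target if the triplet's consonants include both /b/ and /d/."""
    onsets = {consonant for consonant, _ in triplet}
    return {"b", "d"} <= onsets

rng = random.Random(0)
triplet = rng.sample(SYLLABLES, 3)   # hypothetical sampling scheme

print(len(SYLLABLES))  # 18 consonants x 5 vowels = 90
print(is_phoneme_target([("p", "a"), ("d", "ae"), ("s", "u")]))  # /pa dæ su/ -> False
```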

Seven functional scans were acquired (Table 3). In each scan, one of the conditions alternated eight times with one of the other conditions in a standard block design. Each of these sixteen blocks lasted 24 s and included 8 stimulus presentations (i.e., 8 tone trains, 8 words, or 8 CV triplets). To avoid the possibility that participants might automatically perform the active decision tasks during the passive conditions, all passive scans were acquired before the participants received any training on the decision tasks or had knowledge that tasks were to be performed. Within this group of scans (scans 1–3), the order of scans was randomized and counterbalanced across participants.
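The block structure of each scan can be written out as an onset schedule. This sketch assumes that each scan starts with its first-listed condition and that the 8 stimuli are evenly spaced at 3-s intervals within a block; the 4-s repetition time and the 16-s lead-in of discarded volumes are taken from the Image Acquisition section. The function names are hypothetical.

```python
BLOCK_S = 24            # duration of each block
N_BLOCKS = 16           # 8 cycles of two alternating conditions
STIM_PER_BLOCK = 8      # tone trains, words, or CV triplets per block
TR_S = 4                # repetition time (Image Acquisition section)
LEAD_IN_S = 16          # 4 equilibrium volumes before the first block

def block_schedule(cond_a, cond_b):
    """Return (onset_s, condition) for each block, alternating A and B."""
    return [(LEAD_IN_S + i * BLOCK_S, cond_a if i % 2 == 0 else cond_b)
            for i in range(N_BLOCKS)]

def stimulus_onsets(block_onset_s):
    """Evenly spaced stimulus onsets within one block (assumed spacing)."""
    step = BLOCK_S / STIM_PER_BLOCK
    return [block_onset_s + k * step for k in range(STIM_PER_BLOCK)]

blocks = block_schedule("Passive Word", "Rest")
volumes_in_blocks = N_BLOCKS * BLOCK_S // TR_S
print(volumes_in_blocks)  # 384 s / 4 s = 96 volumes
```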

Table 3.

Composition and order of the functional scans.

| Scan | Condition 1 | Condition 2 |
|---|---|---|
| 1, 2, or 3 | Passive Tone | Rest |
| 1, 2, or 3 | Passive Word | Rest |
| 1, 2, or 3 | Passive Word | Passive Tone |
| (learn Tone Decision task) | | |
| 4 | Tone Decision | Rest |
| (learn Semantic Decision task) | | |
| 5, 6, or 7 | Semantic Decision | Rest |
| (learn Phoneme Decision task) | | |
| 5, 6, or 7 | Semantic Decision | Tone Decision |
| 6 or 7 | Semantic Decision | Phoneme Decision |

Following the passive scans, participants were instructed in the Tone Decision task and performed a Tone Decision vs. Rest scan to identify brain regions potentially involved in task-unrelated conceptual processing during the resting state. To avoid the possibility that participants might rehearse or review the semantic task during these resting blocks, the Tone Decision vs. Rest scan was always acquired first after the passive scans and before participants had any knowledge of the semantic task. The remaining three scans were acquired after training on the Semantic Decision task. These included a Semantic Decision vs. Rest scan, a Semantic Decision vs. Tone Decision scan, and a Semantic Decision vs. Phoneme Decision scan. The order of these three scans was randomized and counterbalanced across participants, except that the Phoneme Decision task was restricted to one of the final two scans. This restriction was to avoid the mental burden of having to learn and perform two new tasks simultaneously (Semantic Decision and Phoneme Decision) for scan 5.
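The ordering constraint on the last three scans can be made explicit by enumerating permutations. This is a sketch only: the scan labels are shorthand introduced here, and the rotation used to assign orders to participants (`order_for`) is hypothetical, since the text says only that orders were randomized and counterbalanced.

```python
from itertools import permutations

# Shorthand labels (introduced here) for scans 5-7.
FINAL_SCANS = ("Sem-Rest", "Sem-Tone", "Sem-Phon")

def allowed_orders():
    """All orders of scans 5-7 in which the Phoneme scan is not scan 5."""
    return [order for order in permutations(FINAL_SCANS)
            if order[0] != "Sem-Phon"]

orders = allowed_orders()

def order_for(participant_index):
    """Hypothetical rotation: cycle through the allowed orders."""
    return dict(zip((5, 6, 7), orders[participant_index % len(orders)]))

print(len(orders))  # 4 of the 3! = 6 possible orders
print(order_for(0))
```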

Performance on the Tone Decision and Phoneme Decision tasks was scored as the proportion of correct responses. Responses on the semantic decision task were scored using response data from a group of 50 normal right-handed controls on the same stimulus sets. Items responded to with a probability greater than .75 by controls (e.g., lamb, salmon, horse, goose) were categorized as targets, and items responded to with a probability less than .25 by controls (e.g., ape, cockroach, lion, stork) were categorized as distractors. Performance by each participant was then scored as the proportion of correct discriminations between targets and distractors.
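The semantic scoring procedure can be sketched as follows. The item probabilities are invented for illustration (the control norms are not reported here), and items with intermediate probabilities are left unscored, as the .75/.25 cutoffs imply.

```python
def categorize(p_respond):
    """Classify an item by the proportion of controls who responded to it."""
    if p_respond > 0.75:
        return "target"
    if p_respond < 0.25:
        return "distractor"
    return None  # intermediate items are not scored

def semantic_score(norms, responded):
    """Proportion of correct target/distractor discriminations.

    norms:     dict mapping item -> control response probability
    responded: set of items the participant responded to
    """
    correct = scored = 0
    for item, p in norms.items():
        category = categorize(p)
        if category is None:
            continue
        scored += 1
        # Correct = responding to a target or withholding for a distractor.
        if (category == "target") == (item in responded):
            correct += 1
    return correct / scored

# Illustrative probabilities only; not the study's control norms.
norms = {"lamb": 0.90, "horse": 0.95, "ape": 0.10, "lion": 0.05, "owl": 0.50}
print(semantic_score(norms, {"lamb", "horse", "lion"}))  # 3 of 4 scored items -> 0.75
```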

Image Acquisition

Scanning was conducted on a 1.5 Tesla General Electric Signa scanner (GE Medical Systems, Milwaukee, WI) using a 3-axis local gradient coil with an insertable transmit/receive birdcage radiofrequency coil. Padding was placed behind the neck and around the head as needed to relax the cervical spine and to fill the space between the head and inner surface of the coil. Functional imaging employed a gradient-echo echoplanar sequence with the following parameters: 40 ms echo time, 4 s repetition time, 24 cm field of view, 64×64 pixel matrix, and 3.75×3.75×7.0 mm voxel dimensions. Seventeen to 19 contiguous sagittal slice locations were imaged, encompassing the entire brain. One hundred sequential image volumes were collected in each functional run, giving a total duration of 6 min, 40 s for each run. Each 100-image functional run began with 4 baseline images (16 s) to allow MR signal to reach equilibrium, followed by 96 images during which two comparison conditions were alternated for eight cycles. High resolution, T1-weighted anatomical reference images were obtained as a set of 124 contiguous sagittal slices using a 3D spoiled-gradient-echo ("SPGR") sequence.
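The acquisition parameters are internally consistent, as a short calculation shows: the in-plane voxel size follows from field of view divided by matrix size, and run length and lead-in follow from volume counts times the repetition time. The 7.0-mm third voxel dimension is set by the slice prescription and is not derived here.

```python
FOV_MM = 240      # 24 cm field of view
MATRIX = 64       # 64 x 64 in-plane matrix
TR_S = 4          # repetition time in seconds
N_VOLUMES = 100   # image volumes per functional run
N_BASELINE = 4    # equilibrium images at the start of each run

in_plane_mm = FOV_MM / MATRIX
minutes, seconds = divmod(N_VOLUMES * TR_S, 60)
baseline_s = N_BASELINE * TR_S

print(in_plane_mm, f"{minutes} min {seconds} s", baseline_s)  # 3.75 6 min 40 s 16
```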

Image Processing and Subtraction Analysis

Image analysis was done with AFNI software (available at http://afni.nimh.nih.gov/afni) (Cox, 1996). Motion artifacts were minimized by registration of the raw echoplanar image volumes in each run to the first steady-state volume (fifth volume) in the run. Estimates of the three translation and three rotation movements at each point in each time-series were computed during registration and saved. The first four images of each run, during which spin relaxation reaches an equilibrium state, were discarded, and the mean, linear trend, and second-order trend were removed on a voxel-wise basis from the remaining 96 image volumes of each run.
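Removing the mean, linear, and second-order trends on a voxel-wise basis amounts to fitting a quadratic polynomial to each time series by least squares and keeping the residuals. The NumPy sketch below follows that reading; it is not AFNI code, and the array layout (time points × voxels) and function names are assumptions.

```python
import numpy as np

def detrend_quadratic(data):
    """Remove mean, linear and quadratic trends from each column.

    data: array of shape (n_timepoints, n_voxels).
    Returns the residuals of a least-squares 2nd-order polynomial fit.
    """
    n = data.shape[0]
    t = np.linspace(-1.0, 1.0, n)            # centered time axis for stability
    design = np.column_stack([np.ones(n), t, t**2])
    coef, *_ = np.linalg.lstsq(design, data, rcond=None)
    return data - design @ coef

def discard_and_detrend(run, n_discard=4):
    """Drop the equilibrium volumes, then detrend the remaining ones."""
    return detrend_quadratic(run[n_discard:])

rng = np.random.default_rng(0)
t = np.arange(100, dtype=float)
run = (500 + 0.2 * t + 0.01 * t**2)[:, None] + rng.normal(0, 1, (100, 3))
resid = discard_and_detrend(run)
print(resid.shape)  # (96, 3)
```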

Multiple regression analysis of each run in each subject was performed to identify voxels showing task-associated changes in BOLD signal. An idealized BOLD response was derived by convolving the 24-s on/off task alternation function with a canonical hemodynamic response modeled using a gamma function (Cohen, 1997). Movement vectors computed during image registration were included in the model to remove residual variance associated with motion-related changes in MRI signal. This analysis generated, for each functional run, a map of beta coefficients representing the magnitude of the response at each voxel and a map of correlation coefficients representing the statistical fit of the observed data to the idealized BOLD response function.
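A minimal NumPy sketch of this single-subject model follows. It assumes the gamma-variate form h(t) = t^b e^(−t/c) with b = 8.6 and c = 0.547 s, values commonly associated with Cohen (1997) but not stated in the text, and assumes the first-listed condition occupies the first block. The correlation is computed as a partial correlation after removing motion and intercept, which is one reasonable reading of "statistical fit"; AFNI's exact computation may differ.

```python
import numpy as np

TR_S = 4.0      # repetition time
BLOCK_S = 24.0  # duration of each on or off block
N_VOL = 96      # volumes retained after discarding 4 equilibrium images

def gamma_hrf(tr=TR_S, length_s=24.0, b=8.6, c=0.547):
    """Gamma-variate HRF t**b * exp(-t/c), sampled every TR and peak-normalized.

    b and c are assumed values, not parameters stated in the text.
    """
    t = np.arange(0.0, length_s, tr)
    h = t**b * np.exp(-t / c)
    return h / h.max()

def ideal_response(n_vol=N_VOL, tr=TR_S, block_s=BLOCK_S):
    """On/off boxcar (1 = first condition) convolved with the gamma HRF."""
    times = np.arange(n_vol) * tr
    boxcar = ((times // block_s) % 2 == 0).astype(float)
    return np.convolve(boxcar, gamma_hrf(tr))[:n_vol]

def fit_voxels(data, motion):
    """Regress each voxel on the ideal response plus six motion regressors.

    data:   (n_vol, n_voxels) detrended BOLD time series
    motion: (n_vol, 6) translation and rotation estimates from registration
    Returns (beta, r): response magnitude and partial correlation per voxel.
    """
    n_vol = data.shape[0]
    ideal = ideal_response(n_vol)
    nuisance = np.column_stack([np.ones(n_vol), motion])
    X = np.column_stack([ideal, nuisance])
    coef, *_ = np.linalg.lstsq(X, data, rcond=None)
    beta = coef[0]
    # Partial correlation: remove intercept and motion from both the ideal
    # response and the data, then correlate the residuals.
    proj = nuisance @ np.linalg.pinv(nuisance)
    ideal_res = ideal - proj @ ideal
    data_res = data - proj @ data
    r = (ideal_res @ data_res) / (
        np.linalg.norm(ideal_res) * np.linalg.norm(data_res, axis=0))
    return beta, r
```

Applied to a simulated voxel containing 3 × the ideal response plus noise, `fit_voxels` should return a beta near 3 and a correlation near 1, while a pure-noise voxel should give a correlation near 0.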

Group activation maps were created with a random-effects model treating subject as a random factor. The beta coefficient map from each subject was spatially smoothed with a 6-mm full-width-half-maximum Gaussian kernel to compensate for inter-subject variance in anatomical structure. These maps were then resized to fit standard stereotaxic space (Talairach & Tournoux, 1988) using piece-wise affine transformation and linear interpolation to a 1-mm³ voxel grid. A single-sample, two-tailed t test was then conducted at each voxel for each run to identify voxels with mean beta coefficients that differed from zero. These group maps were thresholded using a voxel-wise 2-tailed probability of P < 0.0001 (|t-deviate| ≥ 4.55) and minimum cluster size of 200 mm³, resulting in a whole-brain corrected, 2-tailed probability threshold of P < 0.05 for each group map, as determined by Monte-Carlo simulation.
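The group thresholding step can be sketched as a voxel-wise one-sample t statistic followed by a |t| cutoff and a minimum cluster size. The cutoff values (|t| ≥ 4.55 and 200 voxels on the 1-mm³ grid) are taken from the text as inputs; smoothing, spatial normalization, and the Monte-Carlo simulation that justifies the cluster size are not reproduced. Face-connected (6-neighbor) clustering and separate clustering of positive and negative effects are assumptions, and the function names are hypothetical.

```python
from collections import deque
import numpy as np

def one_sample_t(betas):
    """Voxel-wise one-sample t statistic; betas has shape (n_subjects, x, y, z)."""
    n = betas.shape[0]
    mean = betas.mean(axis=0)
    sd = betas.std(axis=0, ddof=1)
    with np.errstate(divide="ignore", invalid="ignore"):
        # Zero-variance voxels (e.g., outside the brain) are set to t = 0.
        return np.where(sd > 0, mean / (sd / np.sqrt(n)), 0.0)

NEIGHBORS = [(1, 0, 0), (-1, 0, 0), (0, 1, 0), (0, -1, 0), (0, 0, 1), (0, 0, -1)]

def label_clusters(mask):
    """Label face-connected (6-neighbor) clusters in a boolean 3-D mask."""
    labels = np.zeros(mask.shape, dtype=int)
    n_clusters = 0
    for seed in zip(*np.nonzero(mask)):
        if labels[seed]:
            continue
        n_clusters += 1
        labels[seed] = n_clusters
        queue = deque([seed])
        while queue:  # breadth-first flood fill from the seed voxel
            x, y, z = queue.popleft()
            for dx, dy, dz in NEIGHBORS:
                nb = (x + dx, y + dy, z + dz)
                inside = all(0 <= c < s for c, s in zip(nb, mask.shape))
                if inside and mask[nb] and not labels[nb]:
                    labels[nb] = n_clusters
                    queue.append(nb)
    return labels, n_clusters

def threshold_group_map(t, t_crit=4.55, min_voxels=200):
    """Keep voxels with |t| >= t_crit that lie in clusters of >= min_voxels.

    On a 1-mm^3 grid, 200 voxels correspond to the 200-mm^3 minimum.
    """
    keep = np.zeros(t.shape, dtype=bool)
    for sign_mask in (t >= t_crit, t <= -t_crit):
        labels, n_clusters = label_clusters(sign_mask)
        for k in range(1, n_clusters + 1):
            members = labels == k
            if members.sum() >= min_voxels:
                keep |= members
    return keep
```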

The final analysis determined the mean number of significantly activated voxels in each hemisphere for each task contrast. Individual masks of the supratentorial brain volume were created for each subject by thresholding the first echoplanar image volume to exclude voxels outside of the brain, followed by manual editing to remove the cerebellum and brainstem. The resulting mask was aligned to standard stereotaxic space and divided at the midline to produce separate left and right whole-hemisphere regions of interest (ROIs). The correlation coefficient maps from each subject were then thresholded at a whole-brain corrected P < 0.05 (voxel-wise P < 0.001 and minimum cluster size of 295 mm³) and converted to standard stereotaxic space. Activated voxels were then automatically counted in the left and right hemisphere ROIs for each subject and task contrast. A laterality index (LI) was computed for each subject and task contrast using the formula (L − R)/(L + R), where L and R are the number of voxels in the left and right hemisphere ROIs. LIs computed in this way are known to vary as a function of the significance threshold, becoming more symmetrical as the threshold is lowered (Adcock et al., 2003). LIs for some tasks have been shown to vary substantially depending on the brain region in which the voxels are counted (Lehéricy et al., 2000; Spreer et al., 2002). To permit a meaningful comparison between activation protocols, we therefore used the same threshold and whole-hemisphere ROI for all protocols.
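The voxel counting and laterality index can be sketched as follows, assuming the thresholded map and the brain mask are boolean arrays on the same stereotaxic grid. Splitting the mask by the sign of the x coordinate (negative x = left, as in Talairach space) is an assumed implementation of the midline division, and `li_by_threshold` is a hypothetical helper that ignores cluster extent, included only to illustrate the threshold dependence noted above.

```python
import numpy as np

def hemisphere_masks(brain_mask, x_coords):
    """Split a supratentorial mask at the midline (x < 0 left, x > 0 right)."""
    left = brain_mask & (x_coords < 0)
    right = brain_mask & (x_coords > 0)
    return left, right

def laterality_index(active, left, right):
    """LI = (L - R) / (L + R), with L and R the active-voxel counts per hemisphere."""
    n_left = int(np.count_nonzero(active & left))
    n_right = int(np.count_nonzero(active & right))
    if n_left + n_right == 0:
        return float("nan")  # undefined when no voxel survives the threshold
    return (n_left - n_right) / (n_left + n_right)

def li_by_threshold(stat, left, right, thresholds):
    """LI at several voxel-wise cutoffs (cluster extent ignored)."""
    return {thr: laterality_index(stat >= thr, left, right) for thr in thresholds}

# Toy 11 x 4 grid whose first axis runs from x = -5 (left) to x = +5 (right).
x = np.arange(-5, 6)[:, None] * np.ones((1, 4))
brain = np.ones_like(x, dtype=bool)
left, right = hemisphere_masks(brain, x)
active = np.zeros_like(brain)
active[:3] = True      # 12 voxels at x = -5..-3
active[8] = True       # 4 voxels at x = +3
print(laterality_index(active, left, right))  # (12 - 4) / 16 = 0.5
```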

Results

Task Performance

All participants learned the tasks easily and tolerated the scanning procedure well. Performance on the Tone Decision task was uniformly good, with participants attaining a mean score of 98.4% correct (SD = 1.9, range 89–100%). A paired t-test showed no difference in Tone Decision performance when the task was paired with rest compared to when it was paired with the Semantic Decision task (P = 0.256). Participants also performed well in discriminating targets from distractors on the Semantic Decision task, with a mean score of 92.3% correct (SD = 4.4, range 72–100%). It should be noted that judgments in the Semantic Decision task are subjective and depend on participants' personal experiences; hence there are no strictly correct or incorrect responses. Accuracy scores reflect the similarity between a participant's responses and those of the control group, and are intended merely to demonstrate compliance with the task. Repeated-measures ANOVA showed no difference in Semantic Decision performance when the task was paired with rest, with the Tone Decision task, or with the Phoneme Decision task (P = 0.745). Accuracy on the Phoneme Decision task averaged 92.4% correct (SD = 4.7, range 77–100%).

FMRI Results

Results for each of the main speech comprehension contrasts are described below, as well as several relevant contrasts between the control conditions. Peak activation coordinates for each contrast are given in the Appendix.

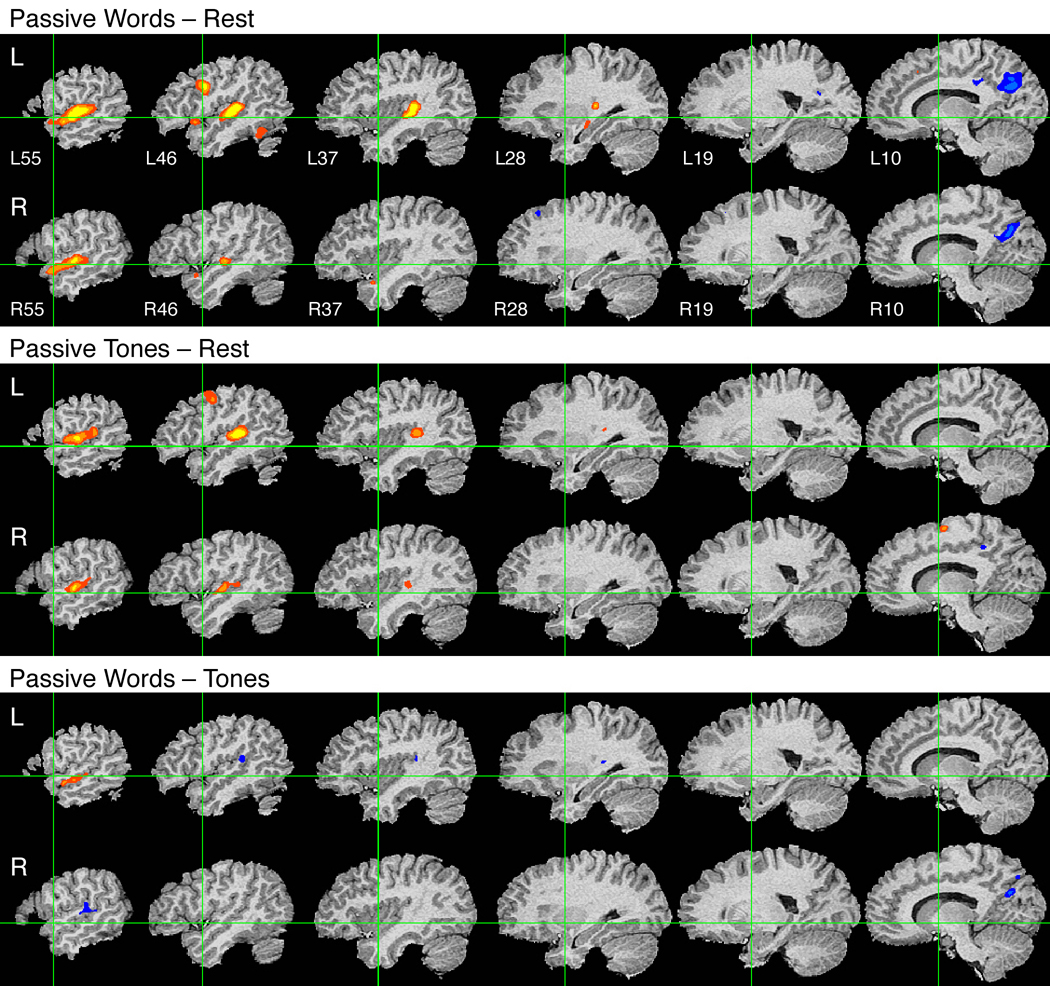

Passive Words vs. Rest

Activation during passive listening to words, compared to a resting state, occurred mainly in the superior temporal gyrus (STG) bilaterally, including Heschl's gyrus (HG) and surrounding auditory association cortex in the planum temporale (PT), lateral STG, and upper bank of the superior temporal sulcus (STS) (Figure 1, top panel). Smaller foci of activation were observed in two left hemisphere regions, including the inferior precentral sulcus (junction of Brodmann areas (BA) 6, 44, and 8) and the posterior inferior temporal gyrus (BA 37). Stronger activation during the resting state (blue areas in Figure 1, top) was observed in the posterior cingulate gyrus and precuneus bilaterally.

Figure 1.

Group fMRI activation maps from three passive listening experiments. Top: Passive listening to words relative to resting. Middle: Passive listening to tones relative to resting. Bottom: Passive listening to words relative to tones. Data are displayed as serial sagittal sections through the brain at 9-mm intervals. X-axis locations for each slice are given in the top panel. Green lines indicate the stereotaxic Y and Z origin planes. Hot colors (red-yellow) indicate positive activations and cold colors (blue-cyan) negative activations for each contrast. All maps are thresholded at a whole-brain corrected P < 0.05 using voxel-wise P < 0.0001 and cluster extent > 200 mm³. The three steps in each color continuum represent voxel-wise P thresholds of 10⁻⁴, 10⁻⁵, and 10⁻⁶.

Passive Words vs. Passive Tones

Much of the STG activation to words could be due to pre-linguistic processing of auditory information, and therefore not specific to speech or language. As shown in the middle panel of Figure 1, passive listening to tone sequences elicited a very similar pattern of bilateral activation in STG, HG, and PT, demonstrating that activation in these regions is not specific to speech.

The lower panel of Figure 1 shows a direct contrast between passive words and passive tones. Contrasting words with this non-speech auditory control condition should eliminate activation related to pre-linguistic auditory processing. A comparison of the top and bottom panels of Figure 1 confirms that activation in HG, PT, and surrounding regions of the STG was greatly reduced by incorporating this control condition. Activation for words relative to tones is restricted to ventral regions of the STG lying in the STS. This STS activation is clearly lateralized to the left hemisphere, consistent with the idea that additional activation for words over tones reflects language-related phoneme perception processes. No other areas showed greater activation for words over tones. Stronger activation for tones was noted in the posterior STG bilaterally, and in the right posterior cingulate gyrus.

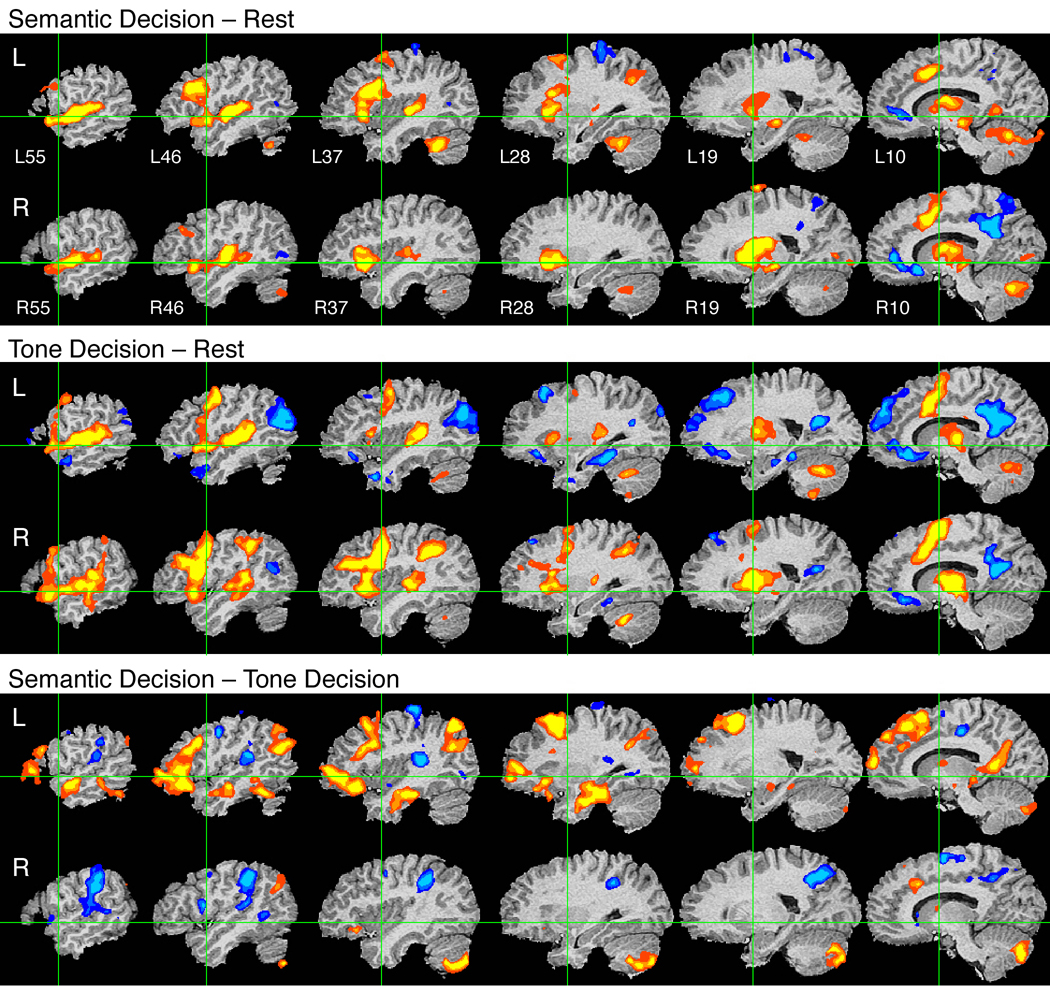

Semantic Decision vs. Rest

In contrast to the passive word listening condition, the Semantic Decision task requires participants to focus attention on the words, retrieve specific semantic knowledge, make a decision, and generate a motor response. Like the passive listening condition, this task produced bilateral activation of the STG due to auditory processing, though this activation was somewhat more extensive than in the passive listening condition (Figure 2, upper panel). In addition, the Semantic Decision task activated a complex, bilateral network of frontal, limbic, and subcortical structures. Activation in the lateral frontal lobe was strongly left-lateralized, involving cortex in the posterior inferior frontal gyrus (IFG; pars opercularis) and adjacent middle frontal gyrus (MFG), and distinct regions of ventral and dorsal premotor cortex (BA 6, frontal eye field). Strong, symmetric activation occurred in the supplementary motor area (SMA), anterior cingulate gyrus, and anterior insula. Smaller cortical activations involved the left intraparietal sulcus (IPS) and the right central sulcus, the latter consistent with use of a left hand response for the task. Bilateral activation also occurred in several subcortical regions, including the putamen (stronger on the right), anterior thalamus, medial geniculate nuclei, and paramedian mesencephalon. Finally, there was activation in the cerebellum bilaterally, involving both medial and lateral structures, and stronger on the left. Regions of task-induced deactivation (i.e., relatively higher BOLD signal in the resting condition) were observed in the posterior cingulate gyrus and adjacent precuneus bilaterally, the rostral and subgenual cingulate gyrus bilaterally, and the left postcentral gyrus.

Figure 2.

Group fMRI activation maps from three active listening experiments. Top: Semantic Decision relative to resting. Middle: Tone Decision relative to resting. Bottom: Semantic Decision relative to Tone Decision. Display conventions as described for Figure 1.

Semantic Decision vs. Tone Decision

Much of the activation observed in the Semantic Decision – Rest contrast could be explained by general executive, attention, working memory, and motor processes that are not specific to language tasks. As shown in the middle panel of Figure 2, the Tone Decision task elicited a similar pattern of bilateral activation in premotor cortex, SMA, anterior cingulate, anterior insula, and subcortical regions. In addition, the Tone Decision task activated cortex in the right hemisphere that was not engaged by the Semantic task, including the posterior IFG, dorsolateral prefrontal cortex (inferior frontal sulcus), supramarginal gyrus (SMG; BA 40), and middle portion of the middle temporal gyrus (MTG). Regions of task-induced deactivation were much more extensive with this task, involving large regions of the posterior cingulate gyrus and precuneus bilaterally, rostral/subgenual cingulate gyrus bilaterally, left orbital and medial frontal lobe, dorsal prefrontal cortex in the superior frontal gyrus (SFG) and adjacent MFG (mainly on the left), angular gyrus (mainly left), ventral temporal lobe (parahippocampus, mainly on the left), and the left anterior temporal pole.

The lower panel of Figure 2 shows the contrast between Semantic Decision and Tone Decision tasks. Using the Tone Decision task as a control condition should eliminate activation in general executive systems as well as in low-level auditory and motor areas. A comparison of the top and bottom panels of Figure 2 confirms these predictions, showing subtraction of the bilateral activation in dorsal STG, premotor cortex and SMA, anterior insula, and deep nuclei that is common to both tasks. Compared to the Tone task, the Semantic task produced relative BOLD signal enhancement in many left hemisphere association and heteromodal regions, including much of the prefrontal cortex (anterior and mid-IFG, SFG, and portions of MFG), several regions in the lateral and ventral temporal lobe (MTG, inferior temporal gyrus [ITG], anterior fusiform gyrus, parahippocampal gyrus, anterior hippocampus), the angular gyrus, and the posterior cingulate gyrus. Many of these regions (dorsal prefrontal cortex, angular gyrus, posterior cingulate) were not prominently activated when the Semantic Decision task was contrasted with Rest, suggesting that these regions are also active during the resting state. Their appearance in the Semantic Decision – Tone Decision contrast is due to their relative deactivation (or lack of tonic activation) during the Tone task. Other areas activated by the Semantic task relative to the Tone task included the right cerebellum and smaller foci in the pars orbitalis of the right IFG, right SFG, right angular gyrus, right posterior cingulate gyrus, and left anterior thalamus.

Several areas showed relatively higher BOLD signals during the Tone Decision task, including the PT bilaterally, the right SMG and anterior IPS, and scattered regions of premotor cortex bilaterally.

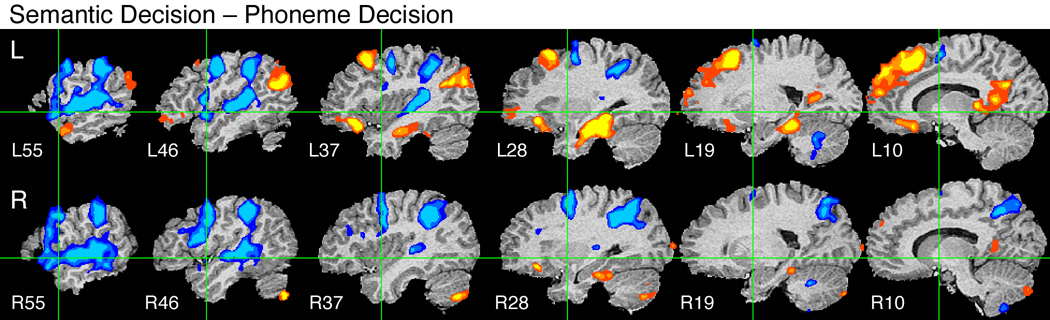

Semantic Decision vs. Phoneme Decision

Stimuli used in the Tone Decision task are acoustically much simpler than the speech sounds used in the Semantic Decision task and contain no phonemic information. The Phoneme Decision task, which requires participants to process meaningless speech sounds (pseudowords), provides a control for phonemic processing, allowing more specific identification of brain regions involved in semantic processing.

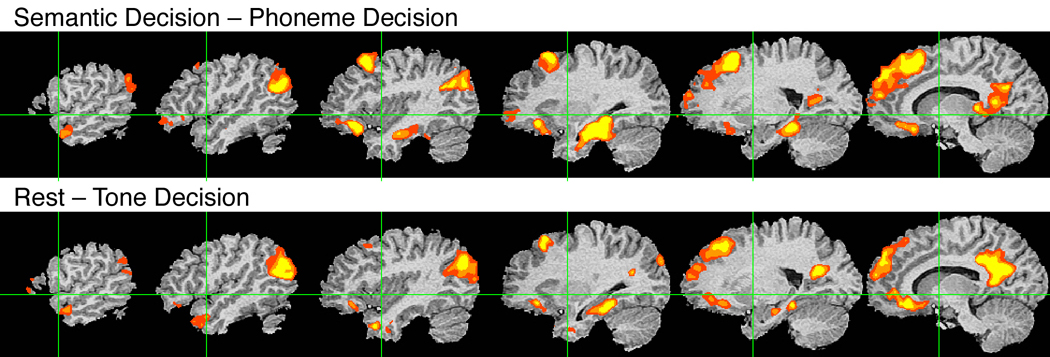

The following regions showed stronger activation for the Semantic compared to the Phoneme task (Figure 3): left dorsal prefrontal cortex (SFG and adjacent MFG), pars orbitalis (BA 47) of the left IFG, left orbital frontal cortex, left angular gyrus, bilateral ventromedial temporal lobe (parahippocampus, fusiform gyrus, and anterior hippocampus, more extensive on the left), bilateral posterior cingulate gyrus, and right posterior cerebellum. Small activations were observed in the anterior left STS and the right pars orbitalis. In contrast to the Semantic Decision – Tone Decision contrast (see Figure 2, bottom), there was little or no activation of dorsal regions of the left IFG or adjacent MFG, or of the lateral temporal lobe (MTG, ITG). These latter regions must have been activated in common during both the Semantic and Phoneme Decision tasks, and are therefore likely to be involved in pre-semantic phonological processes, such as phoneme recognition. Posterior regions of the left IFG and adjacent premotor cortex (BA 44/6) were in fact activated more strongly by the Phoneme task than the Semantic task. Other regions showing this pattern included the right posterior IFG and premotor cortex, extensive regions of the STG bilaterally, the SMG and anterior IPS bilaterally, and the SMA bilaterally.

Figure 3.

Group fMRI activation map from the Semantic Decision – Phoneme Decision contrast.

A notable aspect of the Semantic Decision activation pattern for this contrast is how closely it resembles the network of brain areas showing task-induced deactivation (i.e., stronger activation during the resting state) in the contrast between Tone Decision and Rest (blue areas in Figure 2, middle panel). Figure 4 shows these regions of stronger activation for the resting state, duplicated from Figure 2, together with the areas activated by the Semantic relative to the Phoneme task. In both the Semantic Decision – Phoneme Decision contrast and the Rest – Tone Decision contrast, stronger BOLD signals are observed in left angular gyrus, left dorsal prefrontal cortex (SFG and adjacent MFG), left orbital frontal cortex, left pars orbitalis, posterior cingulate gyrus, bilateral ventromedial temporal lobe (more extensive on the left), and left temporal pole.

Figure 4.

A comparison of left hemisphere areas activated by the Semantic Decision task relative to the Phoneme Decision task (top) and areas active during Rest relative to the Tone Decision task (bottom).

Activation Extent and Degree of Lateralization

For clinical applications, it is important not only that a language mapping protocol identify targeted linguistic systems, but also that it produce consistent activation at the single subject level and a left-lateralized pattern useful for determining language dominance. We quantified the extent and lateralization of activation for each task protocol by counting the number of voxels that exceeded a whole-brain corrected significance threshold in each participant (Table 4). Repeated-measures ANOVA showed effects of task protocol on total activation volume (F(4,100) = 19.528, P < 0.001), left hemisphere activation volume (F(4,100) = 23.070, P < 0.001), right hemisphere activation volume (F(4,100) = 15.133, P < 0.001), and laterality index (F(4,100) = 21.045, P < 0.001). Total activation volume was largest for the Semantic Decision – Tone Decision protocol, and was greater for this protocol than for all others (all pair-wise P < 0.05, Bonferroni corrected for multiple comparisons) except the Semantic Decision – Rest protocol. Total activation volume was greater for all of the active task protocols than for any of the passive protocols (all pair-wise P < 0.05, Bonferroni corrected). Left hemisphere activation volume was largest for the Semantic Decision – Tone Decision protocol, which produced significantly more activated left hemisphere voxels than any of the other four protocols (all pair-wise P < 0.05, Bonferroni corrected). The laterality index was greatest for the Semantic Decision – Tone Decision and Semantic Decision – Phoneme Decision protocols. LIs for these tasks did not differ, but both were greater than the LIs for Passive Words – Rest and Semantic Decision – Rest (all pair-wise P < 0.05, Bonferroni corrected). 
Single-sample t-tests showed that LIs for the Passive Words – Rest (P = 0.23) and Semantic Decision – Rest (P = 0.07) protocols did not differ from zero, whereas LIs for the other three protocols were all significantly greater than zero (all P < 0.0001). Finally, LIs for the Semantic Decision – Tone Decision and Semantic Decision – Phoneme Decision protocols showed much less variation across the group (smaller SD) than those for the two passive listening protocols, Passive Words – Rest and Passive Words – Passive Tones (all F ratios > 4.4, all P < 0.001).
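The per-participant measures behind Table 4 can be sketched as follows. The section reports laterality indices but does not state the formula, so this sketch assumes the conventional LI = (L − R)/(L + R); the voxel volume and the class and function names are likewise hypothetical, chosen only to illustrate the conversion from suprathreshold voxel counts to ml.

```python
from dataclasses import dataclass

# Hypothetical voxel volume: the voxel size of the normalized maps is not stated
# in this section, so 2 x 2 x 2 mm (8 mm^3 = 0.008 ml) is an assumption.
VOXEL_VOLUME_ML = 0.008


@dataclass
class HemisphereCounts:
    left_voxels: int   # suprathreshold voxels in the left hemisphere
    right_voxels: int  # suprathreshold voxels in the right hemisphere


def volumes_ml(counts: HemisphereCounts, voxel_ml: float = VOXEL_VOLUME_ML) -> tuple[float, float]:
    """Convert suprathreshold voxel counts to (left, right) activation volumes in ml."""
    return counts.left_voxels * voxel_ml, counts.right_voxels * voxel_ml


def laterality_index(counts: HemisphereCounts) -> float:
    """Assumed conventional LI = (L - R) / (L + R): +1 fully left, -1 fully right.

    Returns 0.0 when no voxel survives the threshold; excluding such
    participants instead would be an equally defensible choice."""
    total = counts.left_voxels + counts.right_voxels
    if total == 0:
        return 0.0
    return (counts.left_voxels - counts.right_voxels) / total


# Illustrative counts for one participant (not data from this study).
subject = HemisphereCounts(left_voxels=300, right_voxels=100)
print(volumes_ml(subject), laterality_index(subject))
```

Group statistics such as the single-sample t-tests against zero would then be run on the list of per-participant LIs for each protocol.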

Table 4.

Mean extent and lateralization of language-related activation for 5 fMRI contrasts. Numbers in parentheses are standard deviations. Voxel counts have been converted to normalized volumes of activation, expressed in ml.

| Contrast | Left hemisphere volume (ml) | Right hemisphere volume (ml) | Laterality index |
|---|---|---|---|
| Passive Words vs. Rest | 6.8 (7.0) | 4.0 (4.1) | .100 (.415) |
| Passive Words vs. Passive Tones | 3.0 (6.2) | 0.8 (1.6) | .522 (.457) |
| Semantic Decision vs. Rest | 18.0 (14.1) | 14.4 (12.7) | .117 (.315) |
| Semantic Decision vs. Tone Decision | 35.1 (25.2) | 9.3 (10.5) | .624 (.167) |
| Semantic Decision vs. Phoneme Decision | 16.8 (13.1) | 4.2 (4.1) | .620 (.196) |
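Because Table 4 reports the standard deviations of the LIs, the variance-ratio comparison described in the text can be roughly re-derived from them. This sketch simply squares the ratio of two SDs; since the tabulated SDs are rounded, the ratios are approximate, and P values (which would require the F distribution, with 25 and 25 degrees of freedom for 26 participants) are not computed here.

```python
# Standard deviations of the laterality index, taken from Table 4.
LI_SD = {
    "Passive Words vs. Rest": 0.415,
    "Passive Words vs. Passive Tones": 0.457,
    "Semantic Decision vs. Rest": 0.315,
    "Semantic Decision vs. Tone Decision": 0.167,
    "Semantic Decision vs. Phoneme Decision": 0.196,
}


def variance_ratio(sd_numerator: float, sd_denominator: float) -> float:
    """F ratio of two sample variances, computed from their standard deviations."""
    return (sd_numerator / sd_denominator) ** 2


passive_listening = ["Passive Words vs. Rest", "Passive Words vs. Passive Tones"]
active_control = ["Semantic Decision vs. Tone Decision", "Semantic Decision vs. Phoneme Decision"]

# Each passive listening protocol compared with each active-control protocol.
ratios = {
    (p, a): variance_ratio(LI_SD[p], LI_SD[a])
    for p in passive_listening
    for a in active_control
}
for (p, a), f in ratios.items():
    print(f"{p} / {a}: F = {f:.2f}")
```

All four ratios exceed 4.4 (the smallest, Passive Words vs. Rest against Semantic Decision vs. Phoneme Decision, is about 4.48). The same calculation for Semantic Decision vs. Rest against Semantic Decision vs. Tone Decision gives only about 3.56, which suggests that the reported comparison involved the two passive listening protocols.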

In summary, the active task protocols (Semantic Decision – Rest, Semantic Decision – Tone Decision, and Semantic Decision – Phoneme Decision) produced much more activation than the passive protocols, the Semantic Decision – Tone Decision protocol produced by far the largest activation volume in the left hemisphere, and the protocols that included a non-resting control (Passive Words – Passive Tones, Semantic Decision – Tone Decision, and Semantic Decision – Phoneme Decision) were associated with stronger left-lateralization of activation than the protocols that used a resting baseline. Of the five protocols, the Semantic Decision – Tone Decision protocol showed the optimal combination of activation volume, leftward lateralization, and consistency of lateralization.

For clinical applications, it is important to know the probability of detecting significant activation in targeted ROIs in individual patients. We previously constructed left frontal, temporal, and angular gyrus ROIs using activation maps from the Semantic Decision – Tone Decision contrast in a group of 80 right-handed adults (Frost et al., 1999; Szaflarski et al., 2002; Sabsevitz et al., 2003). With these three ROIs as targets, the Semantic Decision – Tone Decision protocol produced activated voxels (whole-brain corrected P < 0.05) in all three ROIs in every one of the 26 participants in the current study.
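The detection criterion amounts to simple set logic. The sketch below assumes that each participant's thresholded Semantic Decision – Tone Decision map and each ROI are stored as sets of voxel indices in the same stereotaxic space; the function names, ROI names, and voxel data are hypothetical placeholders, not the actual ROIs.

```python
# A voxel is identified by its (i, j, k) index in a common stereotaxic grid.
Voxel = tuple[int, int, int]


def detection_rate(participant_maps: list[set[Voxel]], roi: set[Voxel]) -> float:
    """Fraction of participants with at least one suprathreshold voxel inside the ROI."""
    if not participant_maps:
        raise ValueError("need at least one participant")
    hits = sum(1 for active in participant_maps if active & roi)
    return hits / len(participant_maps)


def detection_by_roi(participant_maps: list[set[Voxel]], rois: dict[str, set[Voxel]]) -> dict[str, float]:
    """Detection rate for each named ROI."""
    return {name: detection_rate(participant_maps, roi) for name, roi in rois.items()}


# Toy example: two participants and two hypothetical ROIs.
maps = [{(0, 0, 0), (4, 4, 4)}, {(4, 4, 4)}]
rois = {"frontal": {(0, 0, 0)}, "temporal": {(4, 4, 4), (5, 5, 5)}}
print(detection_by_roi(maps, rois))
```

A detection rate of 1.0 in every ROI corresponds to the result reported above for the 26 participants.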

Discussion

Our aim in this study was to compare five types of functional imaging contrasts used to examine speech comprehension networks. The contrasts produced markedly different patterns of activation and lateralization. These differences have important implications for the selection and interpretation of clinical fMRI language mapping protocols.

Passive Words – Rest

Many researchers have attempted to identify comprehension networks by contrasting listening to words with a resting state. Our Passive Words – Rest contrast confirms similar prior studies showing bilateral, symmetric activation of the STG, including primary auditory areas in Heschl's gyrus and planum temporale as well as surrounding association cortex, during passive word listening (Petersen et al., 1988; Wise et al., 1991; Mazoyer et al., 1993; Price et al., 1996; Binder et al., 2000; Specht & Reul, 2003). Interpretations of this STG activation vary, with some authors equating it with the 'receptive language area of Wernicke' and others arguing that it represents a pre-linguistic auditory stage of processing (Binder et al., 1996a; Binder et al., 2000). The latter account arises from the fact, often neglected in traditional models of language processing, that speech sounds are complex acoustic events. Speech phonemes (consonants and vowels), prosodic intonation, and speaker identity are all encoded in subtle spectral (frequency) and temporal patterns that must be recognized quickly and efficiently by the auditory system (Klatt, 1989). Analogous to the monkey STG, which is composed largely of neurons coding such auditory information (Baylis et al., 1987; Rauschecker et al., 1995), the human STG (including the classical Wernicke area) appears to be specialized for processing complex auditory information. Thus, much of the activation observed in contrasts between word listening and resting can be attributed to auditory perceptual processes rather than to recognition of specific words. According to this model, activation should occur in the same STG regions during listening to spoken nonwords (e.g., "slithy toves") and to complex sounds that are not speech.
Many imaging studies have confirmed these predictions (Wise et al., 1991; Démonet et al., 1992; Price et al., 1996; Binder et al., 2000; Scott et al., 2000; Davis & Johnsrude, 2003; Specht & Reul, 2003; Uppenkamp et al., 2006). Because auditory perceptual processes are represented in both left and right STG, this model also accounts for why the activation observed with passive listening is bilateral, and why lateralization measures obtained with this type of contrast are not correlated with language dominance as measured by the Wada test (Lehéricy et al., 2000).

Passive Words – Passive Tones

We used simple tone sequences to 'subtract out' activation in the STG due to auditory perceptual processes with the expectation that only relatively early auditory processing would be removed using this control. The Passive Words – Passive Tones contrast confirmed similar prior studies, showing activation favoring speech in the mid-portion of the STS, with strong left lateralization (Mummery et al., 1999; Binder et al., 2000; Scott et al., 2000; Ahmad et al., 2003; Desai et al., 2005; Liebenthal et al., 2005; Benson et al., 2006). Nearly all of the activation observed in the dorsal STG in the Passive Words – Rest contrast was removed by incorporating this simple non-speech control, confirming that this more dorsal activation is not specific to speech or to words. The resulting activation, though strongly left-lateralized, was much less extensive than with other protocols. Six participants had little or no measurable activation (<0.1 ml) with the Passive Words – Passive Tones protocol. Among the other 20 participants, the total activation volume for the Semantic Decision – Tone Decision protocol was, on average, 44 times greater than for Passive Words – Passive Tones.
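The 44-fold figure depends on how the six participants without measurable activation are handled, since including them would mean dividing by volumes near zero. A minimal sketch, assuming that "on average" means the mean of per-participant ratios (the text does not say whether a mean of ratios or a ratio of means was used) and applying the 0.1 ml cutoff given above; the function name and volumes are invented for illustration.

```python
def mean_volume_ratio(target_ml: list[float], reference_ml: list[float],
                      min_reference_ml: float = 0.1) -> tuple[float, int]:
    """Mean per-participant ratio target/reference.

    Participants whose reference volume is below min_reference_ml are treated as
    having no measurable activation and skipped, which avoids dividing by ~0.
    Returns (mean ratio, number of participants used)."""
    if len(target_ml) != len(reference_ml):
        raise ValueError("volume lists must be the same length")
    ratios = [t / r for t, r in zip(target_ml, reference_ml) if r >= min_reference_ml]
    if not ratios:
        raise ValueError("no participant has measurable reference activation")
    return sum(ratios) / len(ratios), len(ratios)


# Invented total volumes (ml) for three participants, not data from the study.
semantic_vs_tone = [20.0, 30.0, 10.0]
words_vs_tones = [0.5, 1.0, 0.05]
print(mean_volume_ratio(semantic_vs_tone, words_vs_tones))
```

Here the third participant falls below the cutoff and is excluded, so the mean is taken over the remaining two ratios.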

Semantic Decision – Rest

Active tasks that require participants to consciously process specific information about a stimulus are often used to enhance activation in brain regions associated with such processing. We designed a semantic decision task that required participants to retrieve specific factual information about a concept and use that information to make an explicit decision. Like the passive listening condition, this task produced bilateral activation of the STG due to auditory processing. This activation was somewhat more extensive than in the passive listening condition, consistent with previous reports that attention enhances auditory cortex activation (O'Leary et al., 1996; Grady et al., 1997; Jancke et al., 1999; Petkov et al., 2004; Johnson & Zatorre, 2005; Sabri et al., 2008). The Semantic Decision – Rest contrast also activated widespread prefrontal, anterior cingulate, anterior insula, and subcortical structures bilaterally. Some of these activations can be attributed to general task performance processes that are not specific to language. For example, any task that requires a decision about a stimulus must engage attentional systems that enable the participant to attend to and maintain attention on the stimulus. Similarly, any such task must involve maintenance of the task instructions and response procedure in working memory, a mechanism for making a decision based on the instructions, and a mechanism for executing a particular response. Dorsolateral prefrontal and inferior frontal cortex, premotor cortex, SMA, anterior cingulate, anterior insula, IPS, and subcortical nuclei have all been linked with these general attention and executive processes in prior studies (Paulesu et al., 1993; Braver et al., 1997; Smith et al., 1998; Honey et al., 2000; Adler et al., 2001; Braver et al., 2001; Ullsperger & von Cramon, 2001; Corbetta & Shulman, 2002; Krawczyk, 2002; Binder et al., 2004). 
Although there is modest leftward lateralization of the activation in some of these areas, most notably in the left dorsolateral prefrontal cortex, much of it is bilateral and symmetric, consistent with prior studies of attention and working memory.

Semantic Decision – Tone Decision

Active control tasks are used to subtract activation related to general task processes (Démonet et al., 1992). The aim is to design a control task that activates these systems to roughly the same degree as the language task while making minimal demands on language-specific processes. The Tone Decision task used in the present study requires subjects to maintain attention on a series of non-linguistic stimuli, hold these in working memory, generate a decision consistent with task instructions, and produce an appropriate motor response. Because many of these processes are common to both the Semantic Decision and Tone Decision tasks, activations associated with general executive and attentional demands of the Semantic Decision task are not observed in the Semantic Decision – Tone Decision contrast. As with the Passive Words – Passive Tones contrast, the tone stimuli used in the control task also cancel out activation in the dorsal STG bilaterally and even produce relatively greater activation of the planum temporale compared to words (Binder et al., 1996a). Other areas with relatively higher BOLD signals during the Tone Decision task included scattered regions of premotor cortex bilaterally, the right SMG, and right anterior IPS. These activations probably reflect the greater demands made by the Tone task on auditory short-term memory (Crottaz-Herbette et al., 2004; Arnott et al., 2005; Gaab et al., 2006; Brechmann et al., 2007; Sabri et al., 2008).

Most striking about the Semantic Decision – Tone Decision contrast, however, are the extensive, left-lateralized activations in the angular gyrus, dorsal prefrontal cortex, and ventral temporal lobe that were not visible in the Semantic Decision – Rest map. These areas have been linked with lexical-semantic processes in many prior imaging studies (Démonet et al., 1992; Price et al., 1997; Cappa et al., 1998; Binder et al., 1999; Roskies et al., 2001; Binder et al., 2003; Devlin et al., 2003; Scott et al., 2003; Spitsyna et al., 2006). Lesions in these sites produce deficits of language comprehension and concept retrieval in patients with Wernicke aphasia, transcortical aphasia, Alzheimer disease, semantic dementia, herpes encephalitis, and other syndromes (Alexander et al., 1989; Damasio, 1989; Gainotti et al., 1995; Dronkers et al., 2004; Nestor et al., 2006; Noppeney et al., 2007). These regions form a widely distributed, left-lateralized network of higher-order, supramodal cortical areas distinct from early sensory and motor systems. We propose that this network is responsible for storing and retrieving the conceptual knowledge that underlies word meaning. Processing of such conceptual knowledge is the foundation for both language comprehension and propositional language production (Levelt, 1989; Awad et al., 2007). It is these brain regions, in other words, that represent the 'language comprehension areas' in the human brain, as opposed to the early auditory areas, attentional networks, and working memory systems highlighted by the Semantic Decision – Rest contrast.

Why is activation in these regions not visible in the Semantic Decision – Rest contrast? The most likely explanation is that these regions are also active during the conscious resting state (Binder et al., 1999). These activation patterns suggest, in particular, that people retrieve and use conceptual knowledge and process word meanings even when they are outwardly 'resting'. Though counterintuitive to many behavioral neuroscientists, this notion has a long history in cognitive psychology, where it has been discussed under such labels as 'stream of consciousness', 'inner speech', and 'task-unrelated thoughts' (James, 1890; Hebb, 1954; Pope & Singer, 1976). Far from being a trivial curiosity, this ongoing conceptual processing may be the mechanism underlying our unique ability as humans to plan the future, interpret past experience, and invent useful artifacts (Binder et al., 1999). The existence of ongoing conceptual processing is supported not only by everyday introspection and a body of behavioral research (Antrobus et al., 1966; Singer, 1993; Teasdale et al., 1993; Giambra, 1995), but also by functional imaging studies (Binder et al., 1999; McKiernan et al., 2006; Mason et al., 2007). In particular, a recent study demonstrated a correlation between the occurrence of unsolicited thoughts and fMRI BOLD signals in the left angular gyrus and ventral temporal lobe (McKiernan et al., 2006). A key finding from both the behavioral and imaging studies is that ongoing conceptual processes are interrupted when subjects must attend and respond to an external stimulus. This observation allows us to explain why activation in this semantic network is observed when the Semantic Decision task is contrasted with the Tone Decision task but not when it is contrasted with a resting state. Unlike the resting state, the tone task interrupts semantic processing; thus, a difference in level of activation of the semantic system occurs only when the tone task is used as a baseline.

This account also explains why this semantic network was not visible in either of the passive listening contrasts. Passive listening makes no demands on attention or decision processes and is therefore similar to resting. According to the model presented here, conceptual processes continue unabated during passive listening regardless of the type of stimuli presented. The semantic network therefore remains equally active through all of these conditions and is not visible in contrasts between them. Based on these findings, we disagree with authors who advocate the use of passive listening paradigms for mapping language comprehension systems. This position was articulated strongly by Crinion et al. (2003), who compared active and passive contrasts using speech and reversed speech stimuli. Similar activations were observed with both paradigms, which the authors interpreted as evidence that active suppression of default semantic processing is not necessary. Two aspects of the Crinion et al. study are noteworthy, however. First, the active task required participants to detect 2 or 3 changes from a male to a female speaker during a story that lasted several minutes. This task was likely very easy and did not continuously engage participants' attention. Second, the activation observed with these contrasts was primarily in the left STS, resembling the Passive Words – Passive Tones contrast in the current study. There was relatively little activation of ventral temporal regions such as those activated here in the Semantic Decision – Tone Decision contrast. Thus, we interpret the activations reported by Crinion et al. as occurring mainly at the level of phoneme perception, though their sentence materials likely also activated dorsolateral temporal lobe regions involved in syntactic parsing (Humphries et al., 2006; Caplan et al., 2008).
In contrast to these systems, mapping conceptual/semantic systems in the ventral temporal lobe requires active suppression of ongoing conceptual processes.

The model just developed is outlined in schematic form in Table 5. The table indicates, for each of the experimental conditions, whether or not the condition engages any of the following six processes: auditory perception, phoneme perception, retrieval of concept knowledge, attention, working memory, and response production. A comparison of the + and − entries for any two of the conditions provides a prediction for which processes are likely to be represented in the contrast between those conditions. Reviewing the contrasts discussed above, for example, the Passive Words – Rest contrast is predicted to reveal activation related to auditory and phoneme perception, and the Passive Words – Passive Tones contrast is predicted to show activation related more specifically to phoneme perception. Semantic Decision – Rest is predicted to show activation in auditory and phoneme perception, attention, working memory, and response production systems. Semantic Decision – Tone Decision is predicted to show activation in phoneme perception and concept knowledge systems.

Table 5.

Hypothesized neural systems engaged by the six conditions used in the study.

| Process | 'Rest' | Passive Tones | Passive Words | Tone Decision | Phoneme Decision | Semantic Decision |
|---|---|---|---|---|---|---|
| Auditory Perception | − | + | + | + | + | + |
| Phoneme Perception | − | − | + | − | + | + |
| Concept Knowledge | + | + | + | − | − | + |
| Attention | − | − | − | + | + | + |
| Working Memory | − | − | − | + | + | + |
| Response Production | − | − | − | + | + | + |
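The subtraction logic of Table 5 is mechanical enough to express in a few lines. This sketch encodes each condition as a row of Booleans ('+' as True) and lists the processes engaged in the first condition of a contrast but not in the second; the names `TABLE_5` and `predicted_activation` are illustrative, not part of the study.

```python
PROCESSES = [
    "Auditory Perception", "Phoneme Perception", "Concept Knowledge",
    "Attention", "Working Memory", "Response Production",
]

# Table 5, one tuple per condition in the order of PROCESSES (True = '+').
TABLE_5 = {
    "Rest":              (False, False, True,  False, False, False),
    "Passive Tones":     (True,  False, True,  False, False, False),
    "Passive Words":     (True,  True,  True,  False, False, False),
    "Tone Decision":     (True,  False, False, True,  True,  True),
    "Phoneme Decision":  (True,  True,  False, True,  True,  True),
    "Semantic Decision": (True,  True,  True,  True,  True,  True),
}


def predicted_activation(condition_a: str, condition_b: str) -> list[str]:
    """Processes predicted to appear as positive activation in the contrast A - B:
    engaged ('+') in A but not ('-') in B."""
    a, b = TABLE_5[condition_a], TABLE_5[condition_b]
    return [p for p, in_a, in_b in zip(PROCESSES, a, b) if in_a and not in_b]


for a, b in [("Passive Words", "Rest"), ("Semantic Decision", "Tone Decision"), ("Rest", "Tone Decision")]:
    print(f"{a} - {b}: {predicted_activation(a, b)}")
```

Because the entries are binary, the model predicts only which systems differ between two conditions, not by how much; graded differences, such as the heavier auditory short-term memory load of the Tone task or the phonological working memory load of the Phoneme task, fall outside it.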

Semantic Decision – Phoneme Decision

The last contrast we examined aims to isolate comprehension processes related to retrieval of word meaning. Because the Tone Decision task uses non-speech stimuli, activation observed in the Semantic Decision – Tone Decision contrast represents both phoneme perception and lexical-semantic stages of comprehension. As illustrated in Table 5, the Phoneme Decision task, which incorporates nonword speech stimuli, is designed to activate the same auditory and phoneme perception processes engaged by the Semantic Decision task, but with minimal activation of conceptual knowledge. Areas activated in the Semantic Decision – Phoneme Decision contrast included the angular gyrus, ventral temporal lobe, dorsal prefrontal cortex, pars orbitalis of the IFG, orbital frontal cortex, and posterior cingulate gyrus, with a strongly left-lateralized overall pattern. These results are consistent with prior studies using similar contrasts (Démonet et al., 1992; Price et al., 1997; Cappa et al., 1998; Binder et al., 1999; Roskies et al., 2001; Devlin et al., 2003; Scott et al., 2003). Compared to the Semantic Decision – Tone Decision contrast, the Semantic Decision – Phoneme Decision contrast produces less extensive activation, consistent with the hypothesis that some of the activation observed in the former contrast is due to pre-semantic speech perception processes. It is also possible that the phoneme decision stimuli, though not words, were sufficiently word-like to partially or transiently activate word codes (Luce & Pisoni, 1998), so that some lexical-semantic activation was subtracted out of this contrast.

The Phoneme Decision task requires participants to identify individual phonemes in the speech input and hold these in memory for several seconds. Thus, this task makes greater demands on auditory analysis and phonological working memory than the Semantic Decision task. Posterior regions of the left IFG and adjacent premotor cortex (BA 44/6), and the SMG bilaterally, were activated more strongly by the Phoneme task than the Semantic task, supporting previous claims for involvement of these regions in phonological processes (Démonet et al., 1992; Paulesu et al., 1993; Buckner et al., 1995; Fiez, 1997; Devlin et al., 2003). Other areas activated more strongly by the Phoneme task included large regions of the STG bilaterally, the SMA bilaterally, the right posterior IFG and premotor cortex, and the anterior IPS bilaterally.

A close look at Table 5 reveals another contrast that could be used to identify activation related specifically to conceptual processing. In the contrast Rest – Tone Decision, the only system predicted to be more active during resting than during the tone task is the conceptual system. Figure 4 shows a side-by-side comparison of the regions activated by the Semantic Decision – Phoneme Decision contrast and the Rest – Tone Decision contrast. In both cases, activation is observed in the left angular gyrus, ventral temporal lobe, dorsal prefrontal cortex, orbital frontal lobe, and posterior cingulate gyrus. The similarity between these activation maps is striking, and particularly so because they were generated using such different task contrasts. The same brain areas are activated by (a) resting compared to a tone decision task, and (b) a semantic decision task compared to a phoneme decision task. This outcome is highly counterintuitive and can only be explained by a model, such as the one in Table 5, that includes activation of conceptual processes during the resting state (Binder et al., 1999). Note that this resting state activation is the same phenomenon often called 'the default state' in research on task-induced deactivations (Raichle et al., 2001). Our model provides an explicit account of the close similarity between semantic and task-induced deactivation networks, which has not been addressed in previous accounts of the default state.
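The similarity between the Semantic Decision – Phoneme Decision and Rest – Tone Decision maps is described here qualitatively. If one wanted to put a number on it, a common choice is the Dice coefficient of the two thresholded maps. This is an illustrative addition, not an analysis reported in this study, and the voxel sets below are invented.

```python
Voxel = tuple[int, int, int]


def dice(map_a: set[Voxel], map_b: set[Voxel]) -> float:
    """Dice coefficient 2|A & B| / (|A| + |B|) of two sets of suprathreshold voxels.

    1.0 means identical maps, 0.0 means no shared voxels."""
    if not map_a and not map_b:
        return 1.0  # convention: two empty maps are treated as identical
    return 2 * len(map_a & map_b) / (len(map_a) + len(map_b))


# Invented voxel sets standing in for the two thresholded group maps.
semantic_minus_phoneme = {(0, 0, 0), (1, 0, 0), (2, 0, 0), (3, 0, 0)}
rest_minus_tone = {(2, 0, 0), (3, 0, 0), (4, 0, 0), (5, 0, 0)}
print(dice(semantic_minus_phoneme, rest_minus_tone))
```

Dice depends on the thresholds used for both maps, so a comparison of this kind would need to report them alongside the coefficient.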

Implications for Language Mapping

These observations have several implications for the design of clinical language mapping protocols. We found that the Semantic Decision – Tone Decision contrast produced an optimal combination of consistent activation and a strongly left-lateralized pattern. These results are not due to any unique characteristics of this particular semantic task; many similar tasks could be designed to focus participants' attention on word concepts. Moreover, as should be clear from this study, activation patterns are not determined by a single task condition, but rather by differences in processing demands between two (or more) conditions. In designing protocols for mapping language comprehension areas, it is critically important to incorporate a contrasting condition that interrupts ongoing conceptual processes by engaging the subject in an attentionally-demanding task. The Tone Decision task accomplishes this by requiring continuous perceptual analysis of meaningless tone sequences. The Semantic Decision – Tone Decision contrast thus identifies not only differences related to pre-semantic phoneme perception but also differences in the degree of semantic processing. It is this strong contrast, not the Semantic Decision task alone, that accounts for the extensive activation.

Consistent and extensive activation, however, is not the only goal of language mapping. More important is that the activation represents linguistic processes of interest. All effortful cognitive tasks require general executive and attentional processes that may not be relevant for the purpose of language mapping. The Tone Decision task also engages these processes and thus 'subtracts' activation due to them, resulting in a map that more specifically identifies language-related activation. As with the semantic task, these characteristics of the tone task are not unique, and there are many possible variations on this task that could accomplish the same goals, perhaps more effectively.

The activation patterns we observed reinforce current views regarding the neuroanatomical representation of speech comprehension processes. In contrast to the traditional view that localizes comprehension processes in the posterior STG (Geschwind, 1971), modern neuroimaging and lesion correlation data suggest that comprehension depends on a widely distributed cortical network involving many regions outside the posterior STG and planum temporale. These areas include distributed semantic knowledge stores located in ventral temporal (MTG, ITG, fusiform gyrus, temporal pole) and inferior parietal (angular gyrus) cortices, as well as prefrontal regions involved in retrieval and selection of semantic information.

FMRI methods for mapping lexical-semantic processes have particular relevance for the presurgical evaluation of patients with intractable epilepsy. The most common surgical procedure for intractable epilepsy is resection of the anterior temporal lobe, and the most consistently reported language deficit after anterior temporal lobe resection is anomia (Hermann et al., 1994; Langfitt & Rausch, 1996; Bell et al., 2000; Sabsevitz et al., 2003). Although these patients have difficulty producing names, the deficit is not caused by a problem with speech articulation or articulatory planning, but by a lexical-semantic retrieval impairment. Difficulty retrieving names in such cases is a manifestation of partial damage to the semantic system that stores knowledge about the concept being named or to the connections between the concept and its phonological representation (Levelt, 1989; Lambon Ralph et al., 2001). Efforts to use fMRI to predict and prevent anomia from anterior temporal lobe resection should therefore focus on identification of this lexical-semantic retrieval system rather than on auditory processes or motor aspects of speech articulation, neither of which is affected by temporal lobe surgery or plays a role in language outcome.

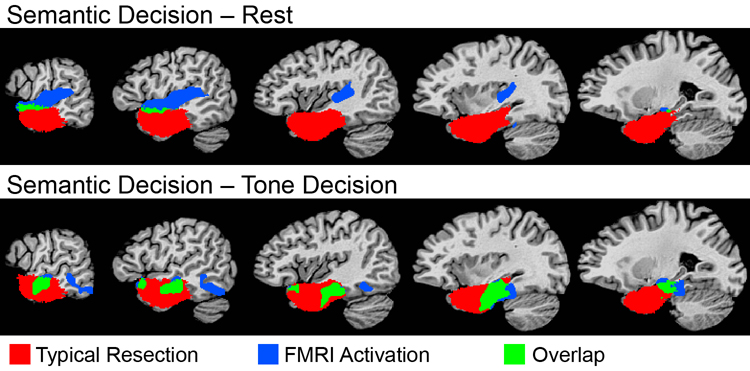

Consistent with this view is the fact that anterior temporal lobe resections commonly involve ventral regions of the temporal lobe while typically sparing most of the STG. Figure 5 shows an overlap map of 23 left anterior temporal lobe resections performed at our center, computed by digital subtraction of preoperative and postoperative anatomical scans in each patient. As shown in the figure, the typical resection overlaps ventral lexical-semantic areas activated in the Semantic Decision – Tone Decision contrast (green), but not STG areas activated in the Semantic Decision – Rest contrast. From a clinical standpoint, it is critical to detect these language zones that lie within the region to be resected, particularly since it is damage to these lexical-semantic systems that underlies the language deficits observed in these patients. In support of this model, lateralization of temporal lobe activation elicited with the Semantic Decision – Tone Decision contrast described here has been shown to predict naming decline after left anterior temporal lobe resection (Sabsevitz et al., 2003).

Figure 5.

Overlap of the Semantic Decision – Rest and Semantic Decision – Tone Decision activations with a map of left anterior temporal lobe (ATL) resections in 23 epilepsy patients. ATL resection volumes were mapped in each patient by registration and subtraction of pre- and post-operative structural MRI scans. These resection volumes were then combined in stereotaxic space to produce a map showing the probability of resection at each voxel. The probability map was thresholded to identify voxels resected in at least 5 of 23 patients (colored areas). Yellow indicates overlap of the ATL resection map with the Semantic Decision – Rest activation. Green indicates overlap of the ATL resection map with the Semantic Decision – Tone Decision activation. Blue indicates overlap of the ATL resection map with both of the fMRI maps.
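The map construction described in the Figure 5 caption reduces to counting, at each voxel, how many patients' resection volumes include it. A minimal sketch, assuming that registration and subtraction of the pre- and post-operative scans have already produced one set of resected voxels per patient in a common stereotaxic space; the function names and toy data are hypothetical.

```python
from collections import Counter

Voxel = tuple[int, int, int]


def resection_map(resections: list[set[Voxel]], min_patients: int) -> set[Voxel]:
    """Voxels resected in at least `min_patients` patients.

    Each set holds one patient's resected voxels, so a voxel is counted at most
    once per patient; thresholding the count at 5 with 23 patients is equivalent
    to a resection probability of at least 5/23."""
    counts = Counter(v for resected in resections for v in resected)
    return {v for v, n in counts.items() if n >= min_patients}


def overlap_colors(resected: set[Voxel], rest_map: set[Voxel], tone_map: set[Voxel]) -> dict[Voxel, str]:
    """Label resected voxels by overlap with the two fMRI maps, following the Figure 5 legend."""
    colors = {}
    for v in resected:
        in_rest, in_tone = v in rest_map, v in tone_map
        if in_rest and in_tone:
            colors[v] = "blue"    # overlaps both fMRI maps
        elif in_rest:
            colors[v] = "yellow"  # overlaps Semantic Decision - Rest only
        elif in_tone:
            colors[v] = "green"   # overlaps Semantic Decision - Tone Decision only
    return colors


# Toy data: three patients, threshold of two patients.
patients = [{(0, 0, 0), (1, 0, 0)}, {(0, 0, 0)}, {(0, 0, 0), (1, 0, 0), (2, 0, 0)}]
resected = resection_map(patients, min_patients=2)
print(overlap_colors(resected, rest_map={(0, 0, 0)}, tone_map={(0, 0, 0), (1, 0, 0)}))
```

Resected voxels that overlap neither fMRI map receive no overlap label in this sketch; in the figure they are simply part of the thresholded resection map.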

The current study focused on cognitive processes elicited by various tasks and did not attempt to resolve all issues regarding language mapping methods. For example, no attempt was made to compare lateralization in different ROIs. Previous studies showed that placement of ROIs in brain regions with lateralized activation (e.g., IFG) can circumvent the problem of nonspecific bilateral activation in other regions (Lehéricy et al., 2000). Furthermore, we restricted the current investigation to protocols involving single words, yet recent data suggest that spoken sentences may provide a more potent stimulus for eliciting temporal lobe activation (Vandenberghe et al., 2002; Humphries et al., 2006; Spitsyna et al., 2006; Awad et al., 2007). Development of more sensitive methods for identifying semantic networks in the anterior ventral temporal lobe is a particularly important goal for future research. We also did not investigate the relative ease with which these protocols can be applied to patients with neurological conditions. The Semantic Decision – Tone Decision protocol, as conducted here with a regular alternation between active tasks, has been used successfully by our group in over 200 epilepsy patients with full-scale IQ ranging as low as 70 (Binder et al., 1996b; Springer et al., 1999; Sabsevitz et al., 2003; Binder et al., 2008). Personnel with expertise in cognitive testing, such as a neuropsychologist or cognitive neurologist, are needed to train the patient to understand and perform the tasks, as is standard with Wada testing and other quantitative tests of brain function. Finally, the current study does not address whether removal of the temporal lobe regions activated in these protocols reliably causes language deficits. Does sparing or removal of these regions account for variation in language outcome across a group of patients? This question is beyond the scope of the current study but will be critical to address in future research.

Acknowledgments

Thanks to Julie Frost, William Gross, Edward Possing, Thomas Prieto, and B. Douglas Ward for technical assistance. Supported by National Institute of Neurological Disorders and Stroke grants R01 NS33576 and R01 NS35929, and by National Institutes of Health General Clinical Research Center grant M01 RR00058.

APPENDIX

Location of activation peaks in the atlas of Talairach and Tournoux (1988).

Passive Words > Rest

| Location | BA | x | y | z | z-score |
|---|---|---|---|---|---|
| Superior Temporal | | | | | |
| L planum temporale | 42/22 | −52 | −25 | 6 | 6.16 |
| L planum temporale | 42/22 | −43 | −31 | 7 | 6.04 |
| L Heschl's g. | 41/42 | −30 | −29 | 10 | 5.30 |
| L ant. STG | 22 | −48 | 6 | −4 | 4.99 |
| L ant. STS | 21/22 | −54 | −9 | −4 | 4.99 |
| R STG | 22 | 53 | −20 | 4 | 5.47 |
| R ant. STG | 22 | 58 | −6 | −2 | 5.01 |
| R ant. STG | 22/38 | 44 | 5 | −11 | 4.56 |
| R ant. STG | 22/38 | 35 | 5 | −17 | 4.52 |
| Other | | | | | |
| L precentral g. | 6 | −47 | −3 | 29 | 5.27 |
| L SFG | 6 | −6 | 20 | 44 | 4.86 |
| L post. ITG | 37 | −42 | −54 | −10 | 4.60 |

Passive Words > Passive Tones

| Location | BA | x | y | z | z-score |
|---|---|---|---|---|---|
| Superior Temporal | | | | | |
| L ant. STS | 21/22 | −54 | −10 | −6 | 4.79 |
| L STS | 21/22 | −50 | −29 | 1 | 4.36 |

Semantic Decision > Rest

| Location | BA | x | y | z | z-score |
|---|---|---|---|---|---|
| Medial Frontal | | | | | |
| L SMA | 6 | −1 | 5 | 46 | 7.03 |
| L SMA | 6 | −3 | −6 | 60 | 4.43 |
| L ant. cingulate g. | 24 | −10 | 10 | 40 | 6.24 |
| L ant. cingulate g. | 24 | −5 | 27 | 28 | 4.40 |
| R ant. cingulate g. | 32 | 3 | 16 | 41 | 6.42 |
| R SMA | 6 | 5 | 2 | 61 | 4.73 |
| Lateral Frontal | | | | | |
| L pars operc./IFS | 44/8 | −44 | 13 | 26 | 6.30 |
| L precentral s. | 6 | −39 | 4 | 31 | 5.72 |
| L precentral s. | 6/44 | −38 | 2 | 18 | 5.42 |
| L post. MFG | 6 | −30 | 4 | 56 | 4.90 |
| L post. MFG | 8 | −38 | −3 | 58 | 4.79 |
| L precentral g. | 6 | −38 | −9 | 50 | 4.48 |
| R pars triang./IFS | 45/46 | 44 | 23 | 30 | 4.71 |
| Anterior Insula | | | | | |
| L ant. insula | - | −33 | 19 | 2 | 5.74 |
| L ant. insula | - | −34 | 17 | 12 | 5.73 |
| R ant. insula | - | 33 | 18 | −3 | 6.19 |
| R ant. insula | - | 34 | 19 | 11 | 5.33 |
| Superior Temporal | | | | | |
| L HG | 41 | −49 | −15 | 1 | 7.00 |
| L STG | 22 | −60 | −23 | 6 | 6.35 |
| L ant. STG | 22 | −48 | −1 | −5 | 5.63 |
| L planum parietale | 22/40 | −35 | −38 | 19 | 4.54 |
| R HG | 42 | 46 | −20 | 12 | 6.47 |
| R STG | 22 | 58 | −12 | −1 | 6.45 |
| R post. STS | 21 | 53 | −35 | 6 | 5.25 |
| Other Cortical | | | | | |
| L IPS | 7 | −28 | −61 | 33 | 4.96 |
| L cingulate isthmus | 30 | −6 | −49 | 4 | 5.23 |
| R lingual g. | 17 | 18 | −90 | 0 | 4.61 |
| R lingual g. | 17 | 19 | −77 | 6 | 4.49 |
| Subcortical | | | | | |
| L caudate | - | −13 | −6 | 18 | 5.78 |
| L putamen | - | −20 | 1 | 5 | 4.98 |
| L globus pallidus | - | −15 | −8 | −3 | 4.78 |
| L med. geniculate body | - | −17 | −21 | −5 | 5.27 |
| L med. thalamus | - | −6 | −18 | 12 | 5.26 |
| L midbrain | - | −5 | −21 | −7 | 5.55 |
| R putamen | - | 20 | 1 | 16 | 6.48 |
| R putamen | - | 20 | 8 | 4 | 6.42 |
| R corona radiata | - | 22 | −15 | 19 | 5.65 |
| R ant. thalamus | - | 6 | −15 | 13 | 5.29 |
| R ant. thalamus | - | 7 | −10 | 3 | 4.73 |
| R ventral thalamus | - | 9 | −24 | −1 | 4.99 |
| R cerebral peduncle | - | 19 | −21 | −3 | 5.20 |
| midbrain tectum | - | −1 | −27 | 0 | 5.20 |
| Cerebellum | | | | | |
| L lat. cerebellum | - | −37 | −54 | −25 | 5.66 |
| L med. cerebellum | - | −9 | −65 | −17 | 5.34 |
| R med. cerebellum | - | 9 | −67 | −23 | 5.55 |
| R cerebellum | - | 30 | −50 | −25 | 4.43 |

Semantic Decision > Tone Decision

| Location | BA | x | y | z | z-score |
|---|---|---|---|---|---|
| Dorsal Prefrontal | | | | | |
| L SFS | 6 | −25 | 14 | 53 | 7.49 |
| L SFG | 8 | −8 | 31 | 46 | 6.02 |
| L SFS | 10 | −23 | 53 | 6 | 5.85 |
| L SFG | 6 | −5 | 18 | 57 | 5.84 |
| L SFG | 10 | −8 | 60 | 16 | 5.58 |
| L SFG | 9 | −11 | 49 | 37 | 5.30 |
| L post. MFG | 6 | −32 | 4 | 59 | 5.17 |
| L SFG | 8 | −19 | 35 | 48 | 4.92 |
| SFG | 6 | 0 | 18 | 45 | 5.95 |
| Lateral Frontal | | | | | |
| L ant. MFG | 10 | −33 | 47 | 4 | 6.24 |
| L ant. IFS | 46/10 | −39 | 36 | −1 | 6.20 |
| L IFS | 44/6 | −41 | 8 | 30 | 6.06 |
| L IFS | 46 | −35 | 16 | 26 | 5.16 |
| L pars triang. | 45 | −52 | 26 | 5 | 5.86 |
| L pars triang. | 45 | −46 | 17 | 24 | 5.61 |
| L pars triang. | 45 | −45 | 19 | 9 | 5.00 |
| L pars orbitalis | 47 | −44 | 22 | −4 | 5.83 |
| L pars orbitalis | 47 | −29 | 21 | −5 | 5.66 |
| L pars orbitalis | 47/45 | −49 | 34 | −1 | 5.17 |
| L pars orbitalis | 47 | −28 | 16 | −18 | 4.92 |
| R pars orbitalis | 47 | 39 | 25 | −7 | 5.18 |
| Medial Frontal | | | | | |
| L gyrus rectus | 12 | −2 | 28 | −15 | 5.13 |
| L ant. cingulate g. | 32 | −10 | 35 | 27 | 4.91 |
| L orbital frontal pole | 10/11 | −17 | 59 | −7 | 4.48 |
| Ventral Temporal | | | | | |
| L ant. STS | 21/22 | −57 | −9 | −8 | 5.67 |
| L ant. STS | 21/22 | −48 | −9 | −8 | 4.84 |
| L STS | 21/22 | −39 | −15 | −15 | 5.20 |
| L MTG | 21 | −56 | −39 | −6 | 4.91 |
| L post. ITG | 37 | −45 | −51 | −14 | 5.48 |
| L post. ITS | 37 | −45 | −45 | −2 | 4.61 |
| L fusiform g. | 20/36 | −32 | −31 | −16 | 5.94 |
| L ant. fusiform g. | 36 | −30 | −17 | −25 | 5.89 |
| L ant. fusiform g. | 20/36/38 | −29 | −9 | −32 | 4.93 |
| Angular Gyrus | | | | | |
| L angular g. | 39 | −37 | −66 | 50 | 6.36 |
| L angular g. | 39 | −45 | −68 | 25 | 5.96 |
| L angular g. | 39 | −42 | −75 | 32 | 5.31 |
| L angular g. | 39 | −30 | −59 | 29 | 5.14 |
| R angular g. | 39 | 46 | −67 | 34 | 4.80 |
| Posterior Medial | | | | | |
| L post. cingulate g. | 30 | −4 | −53 | 10 | 5.92 |
| L precuneus | 31 | −12 | −63 | 27 | 4.87 |
| L cingulate isthmus | 27 | −9 | −31 | −3 | 4.73 |
| Subcortical | | | | | |
| L ant. thalamus | - | −4 | −3 | 13 | 5.17 |
| L cerebral peduncle | - | −11 | −15 | −9 | 4.65 |
| R caudate head | - | 14 | 3 | 11 | 4.48 |
| Cerebellum | | | | | |
| L med. cerebellum | - | −6 | −77 | −30 | 5.57 |
| R med. cerebellum | - | 14 | −76 | −26 | 6.64 |
| R lat. cerebellum | - | 38 | −72 | −39 | 6.04 |
| R lat. cerebellum | - | 33 | −59 | −36 | 5.71 |
| R post. cerebellum | - | 23 | −83 | −34 | 5.53 |
| R cerebellum | - | 25 | −68 | −28 | 4.75 |

Semantic Decision > Phoneme Decision

| Location | BA | x | y | z | z-score |
|---|---|---|---|---|---|
| Dorsal Prefrontal | | | | | |
| L SFG | 6/8 | −15 | 18 | 50 | 6.65 |
| L SFG | 8 | −8 | 28 | 45 | 6.23 |
| L SFS | 6 | −25 | 16 | 55 | 6.19 |
| L SFG | 9 | −12 | 51 | 37 | 5.63 |
| L SFG | 8 | −7 | 34 | 54 | 5.55 |
| L SFG | 9 | −10 | 55 | 18 | 5.04 |
| L SFG | 9 | −16 | 43 | 30 | 4.98 |
| L SFG | 9 | −12 | 41 | 44 | 4.94 |
| Ventral Prefrontal | | | | | |
| L pars orbitalis | 47 | −33 | 28 | −8 | 6.76 |
| L pars orbitalis | 47/11 | −21 | 22 | −17 | 4.57 |
| L suborbital s. | 12/32 | −8 | 38 | −9 | 5.19 |
| L med. orbital g. | 11 | −11 | 23 | −13 | 5.09 |
| L ant. MFG | 10 | −44 | 41 | −6 | 4.54 |
| L ant. MFG | 10 | −30 | 51 | 0 | 4.42 |
| L frontal pole | 10 | −18 | 63 | 8 | 4.36 |
| R pars orbitalis | 47 | 30 | 29 | −8 | 5.39 |
| Ventral Temporal | | | | | |
| L hippocampus | - | −30 | −26 | −12 | 6.25 |
| L hippocampus | - | −28 | −36 | −4 | 5.79 |
| L hippocampus | - | −11 | −35 | 7 | 5.28 |
| L parahippocampus | 28/35/36 | −31 | −20 | −20 | 6.19 |
| L ant. fusiform g. | 36 | −28 | −13 | −28 | 4.86 |
| L ant. MTG | 21 | −53 | −5 | −18 | 5.00 |
| L ant. MTG | 21 | −62 | −6 | −12 | 4.95 |
| R collateral s. | 28/35/36 | 25 | −30 | −15 | 5.40 |
| R fusiform g. | 20/37 | 32 | −38 | −18 | 5.15 |
| Angular Gyrus | | | | | |
| L angular g. | 39 | −45 | −68 | 26 | 6.27 |
| L angular g. | 39 | −38 | −77 | 28 | 5.31 |
| L angular g. | 39 | −53 | −65 | 34 | 4.75 |
| Posterior Medial | | | | | |
| L post. cingulate g. | 30/23 | −4 | −51 | 11 | 5.60 |
| L post. cingulate g. | 23 | −20 | −61 | 14 | 4.75 |
| L post. cingulate g. | 23 | −8 | −52 | 28 | 4.66 |
| L precuneus | 31 | −10 | −63 | 25 | 5.29 |
| L cingulate isthmus | 30 | −13 | −42 | 0 | 4.68 |
| R post. cingulate g. | 23 | 2 | −37 | 31 | 5.28 |
| Occipital Pole | | | | | |
| L occipital pole | 17/18 | −19 | −103 | −5 | 5.48 |
| R occipital pole | 18 | 29 | −99 | 11 | 4.56 |
| R occipital pole | 17/18 | 15 | −103 | 10 | 4.53 |
| Cerebellum | | | | | |
| R lat. cerebellum | - | 44 | −73 | −34 | 5.83 |
| R lat. cerebellum | - | 32 | −70 | −36 | 5.67 |
| R post. cerebellum | - | 17 | −84 | −34 | 4.68 |
| R post. cerebellum | - | 31 | −76 | −25 | 4.56 |

Abbreviations: ant. = anterior, BA = approximate Brodmann area, g. = gyrus, HG = Heschl's gyrus, IFS = inferior frontal sulcus, IPS = intraparietal sulcus, ITG = inferior temporal gyrus, ITS = inferior temporal sulcus, L = left, lat. = lateral, med. = medial, MFG = middle frontal gyrus, MTG = middle temporal gyrus, operc. = opercularis, post. = posterior, R = right, s. = sulcus, SFG = superior frontal gyrus, SFS = superior frontal sulcus, SMA = supplementary motor area, STG = superior temporal gyrus, STS = superior temporal sulcus, triang. = triangularis. Coordinates x, y, and z are given in mm.
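The L and R prefixes in these tables follow the Talairach convention that negative x lies in the left hemisphere. The minimal sketch below assumes that the peaks have been transcribed into Python tuples; only the Passive Words > Rest rows are included. It tallies peaks by hemisphere and flags any location label that disagrees with the sign of x. The helper names are hypothetical.

```python
from collections import Counter

# Passive Words > Rest peaks transcribed from the appendix table:
# (location, x, y, z, z-score), Talairach coordinates in mm.
PASSIVE_WORDS_GT_REST = [
    ("L planum temporale", -52, -25, 6, 6.16),
    ("L planum temporale", -43, -31, 7, 6.04),
    ("L Heschl's g.", -30, -29, 10, 5.30),
    ("L ant. STG", -48, 6, -4, 4.99),
    ("L ant. STS", -54, -9, -4, 4.99),
    ("R STG", 53, -20, 4, 5.47),
    ("R ant. STG", 58, -6, -2, 5.01),
    ("R ant. STG", 44, 5, -11, 4.56),
    ("R ant. STG", 35, 5, -17, 4.52),
    ("L precentral g.", -47, -3, 29, 5.27),
    ("L SFG", -6, 20, 44, 4.86),
    ("L post. ITG", -42, -54, -10, 4.60),
]


def hemisphere(x):
    """Assign a hemisphere from the sign of the Talairach x coordinate."""
    if x < 0:
        return "L"
    if x > 0:
        return "R"
    return "midline"


def tally_by_hemisphere(peaks):
    """Count peaks per hemisphere using only the x coordinate."""
    return Counter(hemisphere(x) for _, x, _, _, _ in peaks)


def label_mismatches(peaks):
    """Return locations whose 'L'/'R' prefix disagrees with the sign of x."""
    bad = []
    for location, x, *_ in peaks:
        side = location.split(" ", 1)[0]
        if side in ("L", "R") and side != hemisphere(x):
            bad.append(location)
    return bad


print(tally_by_hemisphere(PASSIVE_WORDS_GT_REST))  # Counter({'L': 8, 'R': 4})
print(label_mismatches(PASSIVE_WORDS_GT_REST))     # []
```

Entries without a hemisphere prefix, such as "SFG" at x = 0 or "midbrain tectum" at x = −1, are skipped by the label check rather than forced into one hemisphere.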

References

- Adcock JE, Wise RG, Oxbury JM, Oxbury SM, Matthews PM. Quantitative fMRI assessment of the differences in lateralization of language-related brain activation in patients with temporal lobe epilepsy. Neuroimage. 2003;18:423–438. doi: 10.1016/s1053-8119(02)00013-7.

- Adler CM, Sax KW, Holland SK, Schmithorst V, Rosenberg L, Strakowski SM. Changes in neuronal activation with increasing attention demand in healthy volunteers: An fMRI study. Synapse. 2001;42:266–272. doi: 10.1002/syn.1112.

- Ahmad Z, Balsamo LM, Sachs BC, Xu B, Gaillard WD. Auditory comprehension of language in young children: Neural networks identified with fMRI. Neurology. 2003;60:1598–1605. doi: 10.1212/01.wnl.0000059865.32155.86.

- Alexander MP, Hiltbrunner B, Fischer RS. Distributed anatomy of transcortical sensory aphasia. Arch. Neurol. 1989;46:885–892. doi: 10.1001/archneur.1989.00520440075023.

- Antrobus JS, Singer JL, Greenberg S. Studies in the stream of consciousness: Experimental enhancement and suppression of spontaneous cognitive processes. Perceptual and Motor Skills. 1966;23:399–417.

- Arnott SR, Grady CL, Hevenor SJ, Graham S, Alain C. The functional organization of auditory working memory as revealed by fMRI. J. Cogn. Neurosci. 2005;17:819–831. doi: 10.1162/0898929053747612.

- Awad M, Warren JE, Scott SK, Turkheimer FE, Wise RJS. A common system for the comprehension and production of narrative speech. J. Neurosci. 2007;27:11455–11464. doi: 10.1523/JNEUROSCI.5257-06.2007.

- Baylis GC, Rolls ET, Leonard CM. Functional subdivisions of the temporal lobe neocortex. J. Neurosci. 1987;7:330–342. doi: 10.1523/JNEUROSCI.07-02-00330.1987.

- Bell BD, Davies KG, Hermann BP, Walters G. Confrontation naming after anterior temporal lobectomy is related to age of acquisition of the object names. Neuropsychologia. 2000;38:83–92. doi: 10.1016/s0028-3932(99)00047-0.

- Benson RR, Richardson M, Whalen DH, Lai S. Phonetic processing areas revealed by sinewave speech and acoustically similar non-speech. Neuroimage. 2006;31:342–353. doi: 10.1016/j.neuroimage.2005.11.029.

- Binder JR, Rao SM, Hammeke TA, Frost JA, Bandettini PA, Jesmanowicz A, Hyde JS. Lateralized human brain language systems demonstrated by task subtraction functional magnetic resonance imaging. Arch. Neurol. 1995;52:593–601. doi: 10.1001/archneur.1995.00540300067015.

- Binder JR, Frost JA, Hammeke TA, Rao SM, Cox RW. Function of the left planum temporale in auditory and linguistic processing. Brain. 1996a;119:1239–1247. doi: 10.1093/brain/119.4.1239.

- Binder JR, Swanson SJ, Hammeke TA, Morris GL, Mueller WM, Fischer M, Benbadis S, Frost JA, Rao SM, Haughton VM. Determination of language dominance using functional MRI: A comparison with the Wada test. Neurology. 1996b;46:978–984. doi: 10.1212/wnl.46.4.978.

- Binder JR, Frost JA, Hammeke TA, Cox RW, Rao SM, Prieto T. Human brain language areas identified by functional MRI. J. Neurosci. 1997;17:353–362. doi: 10.1523/JNEUROSCI.17-01-00353.1997.

- Binder JR, Frost JA, Hammeke TA, Bellgowan PSF, Rao SM, Cox RW. Conceptual processing during the conscious resting state: a functional MRI study. J. Cogn. Neurosci. 1999;11:80–93. doi: 10.1162/089892999563265.

- Binder JR, Frost JA, Hammeke TA, Bellgowan PSF, Springer JA, Kaufman JN, Possing ET. Human temporal lobe activation by speech and nonspeech sounds. Cereb. Cortex. 2000;10:512–528. doi: 10.1093/cercor/10.5.512.

- Binder JR, McKiernan KA, Parsons M, Westbury CF, Possing ET, Kaufman JN, Buchanan L. Neural correlates of lexical access during visual word recognition. J. Cogn. Neurosci. 2003;15:372–393. doi: 10.1162/089892903321593108.