Abstract

Numerous neuroimaging and neuropsychological studies have highlighted the role of the ventral, occipitotemporal visual processing stream in the representation and retrieval of semantic memory for the appearance of objects. Here, we examine the role of the parietal cortex in retrieval of object shape information. fMRI data were acquired as subjects listened to the names of common objects and made judgments about their shape. Recruitment of the left inferior parietal lobule (IPL) during shape retrieval was modulated by the amount of prior tactile experience associated with the objects. In addition, the same pattern of activity was observed in right postcentral gyrus, suggesting that the representation of object shape is distributed amongst regions that are relevant to the sensorimotor acquisition history of this attribute, as predicted by distributed models of semantic memory.

Keywords: conceptual memory, semantic memory, inferior parietal lobule, tactile, shape

Research focusing on object recognition addresses the question, “how do we match our current visual input to a stored representation of an object?” When researchers ask this question, they simultaneously ask how our knowledge of the appearance of an object is represented and accessed. Therefore, investigation into the representation of appearance information can inform our understanding of visual object recognition. In addition, cognitive psychologists have long been interested in the organizing principles of semantic memory and have recently begun to characterize the representation of specific object attributes, like object form, in addition to characterizing the representation of entire objects (for a review see Thompson-Schill, Kan & Oliver, 2006).

In the current study we investigated shape retrieval using the same task described in a prior study by this group (Oliver & Thompson-Schill, 2003), in order to assess whether shape retrieval recruits parietal cortex to varying degrees, as a function of the extent to which an object was experienced as a target of action. Under domain-specific distributed models (e.g., Allport, 1985; Martin, Ungerleider & Haxby, 2000), long-term knowledge about a particular object is distributed amongst the sensorimotor processing regions that were used to originally acquire that information. For example, if you learned about stethoscopes only by seeing them hanging around the necks of doctors, then your knowledge of stethoscopes would be stored predominantly in the regions of the brain that process visual information. Alternatively, if your experience with stethoscopes included using them to listen to patients’ lungs, then your representation of them would be more distributed amongst the sensorimotor regions (e.g., vision, tactile and motor) used to observe and interact with them. When applied to attribute knowledge, domain-specific distributed models predict that your knowledge about an object’s shape would be spread out amongst the sensorimotor regions that you utilized to gain knowledge about that attribute.

There are several reasons to expect that shape retrieval would recruit parietal cortex based on its putative roles in processing visual information. Based on their work in non-human primates, and on prior investigations of the two visual systems (Ingle, 1967), Ungerleider & Mishkin (1982) described a functional-anatomical division of labor between ventral and dorsal extrastriate visual cortex, which they characterized as specializations for object identification (“what”) and spatial localization (“where”), respectively. It is possible that the shape of an object could be learned partly by spatial processing performed to relate the parts of the object to one another. If this were the case, then retrieval of shape knowledge may involve parietal cortex due to a history of spatial processing. In contrast, acquisition (and thus retrieval) of color knowledge would not be expected to involve spatial processing (cf. Oliver & Thompson-Schill, 2003).

Parietal cortex may also represent shape knowledge because object shape can be acquired as one acts on objects in the world. For example, in order to form the appropriate grasp for an object, you must estimate the size and shape of an object and match those estimates with your finger aperture and orientation (Jeannerod et al., 1986). Therefore, there is reason to believe that, at least for some objects, there is a consistent relationship between an object’s shape and certain aspects of the actions that you perform on it. Based on neuropsychological evidence, Goodale and Milner (1992) proposed the alternate titles “what” and “how” for the two visual processing streams to emphasize the dorsal stream’s role in using visual information to perform actions. If the nature of representation described by domain-specific distributed models (e.g., Allport, 1985) is combined with Goodale and Milner’s (1992) account of the dorsal stream as a processor for visually-guided actions, then one would expect that knowledge about an object’s shape would be partially represented by visuo-motor codes in parietal cortex, provided that information about shape is correlated with information obtained through grasping interactions. That is, a neural pattern that codes object-specific grasp information (or other action-related properties) will necessarily also reflect any features that covary with this information. Shape and size are two likely candidates.

In a prior study, we explored the representation of shape, size and color knowledge (Oliver & Thompson-Schill, 2003) by asking subjects to retrieve this information for named objects while undergoing fMRI scanning. Retrieval of size and shape knowledge relative to retrieval of color knowledge was found to activate the right inferior parietal lobule (IPL) and the left superior parietal lobule (SPL). Activity in IPL has been observed for retrieval of geometric properties (shape and size) relative to retrieval of material attributes (i.e., roughness or hardness) (Newman et al., 2005). In the current study we further explore the role of parietal regions in shape retrieval. Subjects were asked to make shape decisions about a set of items that varied in terms of the amount of tactile experience typically associated with each object. It is assumed that objects that are frequently touched are also associated with a richer history as the targets of actions such as grasping. Therefore, we predict that activity in the parietal regions recruited for the shape retrieval task will be modulated by the tactile history of the object.

However, we recognize that it is also possible that one could learn about the shape of objects through tactile processing that is not directed towards an action. Prior research has shown that tactile processing in object recognition and shape discrimination involves regions within the parietal lobe including the anterior part of the supramarginal gyrus, the intraparietal sulcus and primary somatosensory cortex in the postcentral sulcus (e.g., Bodegard et al., 2001). In fact, the anterior portion of IPS that is involved in object grasping (Binkofski et al., 1998; Culham et al., 2003; Frey et al., 2005) has also been shown to be active for tasks requiring object shape information to be transferred between the visual and tactile modalities (Grefkes et al., 2002; Tanabe et al., 2005). Given that it is possible to learn about an object’s shape tactilely, it would not be surprising if object shape were learned partly through tactile processing. Therefore, objects with a greater history of interaction through the tactile modality may be associated with greater activity in the parietal lobe due to their tactile history in addition to their history as implements for actions. In this initial study we make no attempt to distinguish between these two alternatives.

Methods

Participants

Thirteen right-handed, native English speakers with no history of neurological disorders participated in this experiment. These individuals were drawn from the University of Pennsylvania community and were paid $40 for their participation. One subject’s data were removed from analyses due to excessive motion (more than 6 mm of translation or 6 degrees of rotation). The remaining subjects consisted of eight females and four males who ranged in age from 18 to 26. All subjects provided informed consent prior to participation and all procedures were approved by the human subjects review board of the University of Pennsylvania.

Materials

To select stimuli for the fMRI experiment, we initially evaluated a number of properties of a set of 205 real world objects, based on responses from a separate group of 30 subjects who completed a web survey. This group of subjects was drawn from the same community as the subjects in the fMRI experiment and received either course credit or $10 for their participation. No individual subject participated in both the web survey and the fMRI experiment.

For each item in the web survey, the name of the object was presented along with the following questions: (i) “Is the object composed mostly of curved edges or straight edges?” This question served as the shape retrieval task in the fMRI study; therefore, the responses to this survey question were used to select items with high agreement across subjects as to the correct answer. (ii) “How often do you interact with this object?” Responses to this question were used to generate general exposure (i.e., frequency of sensorimotor interaction of any type) scores for each item, and the response options were as follows: very infrequently, infrequently, sometimes, frequently, very frequently. (iii) “What percent of your interaction with this object includes tactile contact?”1 with the following options for responding: 0-10%, 10-20%, 20-30%, 30-40%, 40-50%, 50-60%, 60-70%, 70-80%, 80-90%, 90-100%. This question was used to generate tactile interaction ratings for each item.

From this initial set of 205 items, we selected 120 items for inclusion in the shape retrieval task used during fMRI scanning2. The items that were selected were classified as being “mainly composed of curved edges” or “mainly composed of straight edges” by at least 80% of the web survey participants. The average response to this question across subjects was not strongly correlated with the tactile experience and general exposure ratings described above, although it was somewhat negatively correlated with the log of lexical frequency (correlation with tactile experience rating, r = .10; correlation with general exposure, r = -.08; correlation with the log of lexical frequency, r = -.35). In addition, 75 pronounceable nonwords and 75 abstract real words were selected for a lexical decision baseline task. The real words were low in concreteness and imageability (ratings of less than 400 on a scale from 100-700) but were matched to the shape task items in terms of word frequency (t(159) = .93, p > .10), number of phonemes (t(167) = -.80, p > .10), and familiarity (t(152) = -1.32, p > .10). Ratings for each of these word features were taken from the MRC Psycholinguistics Database (Wilson, 1987) for those items that were listed there. For the items included in the shape judgment task, familiarity was moderately correlated with both the average tactile exposure rating and the average general exposure rating obtained from the web survey, r = .49 and r = .62, respectively. Concreteness was negatively correlated with the log of lexical frequency, r = -.28. None of the other word features exhibited a significant correlation with the tactile ratings, the general exposure ratings or the log of lexical frequency (all r’s < .17). The log of lexical frequency was not strongly correlated with the tactile ratings or the general exposure ratings, r’s < .13.

Procedure

While undergoing scanning, participants performed two tasks in an alternating, blocked sequence: shape judgments and lexical decisions. In both tasks stimuli were presented auditorily. In the shape retrieval task subjects were presented with object names and they were asked to decide, “Is the object composed of mostly curved edges or mostly straight edges?” In the lexical decision baseline task the subjects were presented with abstract real words and pseudowords and were asked to decide, “Is this a real word or not a real word?” Subjects received training on both tasks using a set of 56 non-experimental stimuli one to three days prior to the scan. During scanning the two tasks alternated every 10 trials and the trials were presented in 3 runs of 9 blocks each starting and ending with a block of the lexical decision task. Each block of 10 trials was preceded by an instruction cue spoken in a male voice. All experimental trials were presented in a female voice. In each task, auditory presentation of a new stimulus occurred every 2 seconds and subjects were given 1.85 seconds from the stimulus onset to make a keypress response. Subjects were instructed to respond quickly and accurately. All responses were made by pressing one of two buttons, and each response was expected to occur equally often in each of the tasks. The full set of 270 stimuli was presented over 3 runs. Each subject was presented with 1 of 4 item orders that were randomly ordered with respect to the level of tactile experience associated with the objects (according to the mean level from the group web survey) in the shape retrieval task. At the beginning of each run there were 10 practice shape retrieval trials that were not included in the analyses. These practice trials were included to allow the subjects time to reacquaint themselves with the task while the scanner reached steady state magnetization.
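The block structure described above can be checked with a short tally. The following is only an illustrative sketch: the function names and the task labels are hypothetical, and it assumes strict alternation within each run, beginning with a lexical decision block. Under those assumptions the layout gives 150 lexical decision trials and 120 shape trials, which sum to the 270 stimuli reported.

```python
# Layout taken from the Procedure text: 3 runs, 9 blocks per run,
# 10 trials per block, alternating tasks, first and last block lexical.
RUNS = 3
BLOCKS_PER_RUN = 9
TRIALS_PER_BLOCK = 10


def run_blocks(n_blocks=BLOCKS_PER_RUN):
    """Return the task label of each block in one run (hypothetical labels)."""
    return ["lexical" if i % 2 == 0 else "shape" for i in range(n_blocks)]


def trial_counts(runs=RUNS, trials_per_block=TRIALS_PER_BLOCK):
    """Count experimental trials per task over all runs (practice trials excluded)."""
    counts = {"lexical": 0, "shape": 0}
    for _ in range(runs):
        for task in run_blocks():
            counts[task] += trials_per_block
    return counts


counts = trial_counts()
print(counts, sum(counts.values()))
```

The lexical decision count matches the 75 nonwords plus 75 abstract words selected for the baseline task.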

Scanning procedure

Subjects were scanned using a 2.89 T Siemens scanner with a standard head coil and foam padding to secure the head in position. For each subject, T1-weighted anatomical images (TR = 1620 msec, TE = 3 msec, TI = 950 msec, voxel size = .75 mm × .75 mm × 3.0 mm) were acquired prior to functional imaging. Next, subjects performed the shape retrieval task and the lexical decision task while undergoing blood oxygen level dependent (BOLD) imaging (Ogawa et al., 1993). One hundred and ten volumes of 33 slices each were collected, one volume every 2 seconds, using interleaved, gradient echo, echoplanar imaging (TE = 30 msec, FOV = 19.2 cm × 19.2 cm, voxel size = 3 mm × 3 mm × 3 mm). This fMRI sequence was repeated 3 times. All stimuli and instructions were presented over headphones (Avotec, Inc.), using MATLAB (The Mathworks Inc.) with the psychophysics toolbox (Brainard, 1997), and responses were collected using a fiber optic response pad (Current Designs, Inc.).

Image processing

Voxbo software (www.voxbo.org) was used to process the functional data. Specifically, the images were reconstructed and a slice acquisition correction was applied. A six-parameter, rigid-body transformation was applied to correct for motion (Friston et al., 1995). Subjects with more than 6 mm of translational or 6 degrees of rotational motion were removed from the analysis, resulting in the exclusion of one subject. The data were normalized and smoothed with an 8 mm Gaussian filter using SPM2 (http://www.fil.ion.ucl.ac.uk/spm).

Each participant’s data were subjected to a general linear model for serial correlated error terms (Worsley & Friston, 1995) that included an estimate of intrinsic serial autocorrelation (Zarahn et al., 1997). This model included a diagonal set for the two tasks (shape retrieval and lexical decision) and a covariate describing the typical amount of tactile experience associated with the items in the shape task. This tactile experience covariate was generated from the group web-survey means for each item. In addition, this model also included the following covariates of no interest: scan effects, global signal, movement parameters for each of the 6 directions of movement, and the square root of reaction time (i.e., the square root of each subject’s own reaction time for each trial). The data were mean normed to correct for differences across scans and the data were filtered to remove variance occurring at the three lowest and one highest frequencies of the scan.

Results

Behavioral results

There was a significant difference in reaction time between the shape retrieval condition (mean = 1202 ms, SD = 87 ms) and the lexical decision baseline task (mean = 1106 ms, SD = 66 ms), t(11) = 7.8, p < .0001. However, there was no difference in accuracy between the two tasks, t(11)= 1.48, p=.17, with shape retrieval accuracy (mean = 77%, SD = 11%) similar to lexical decision accuracy (mean = 72%, SD = 5%).

fMRI Results

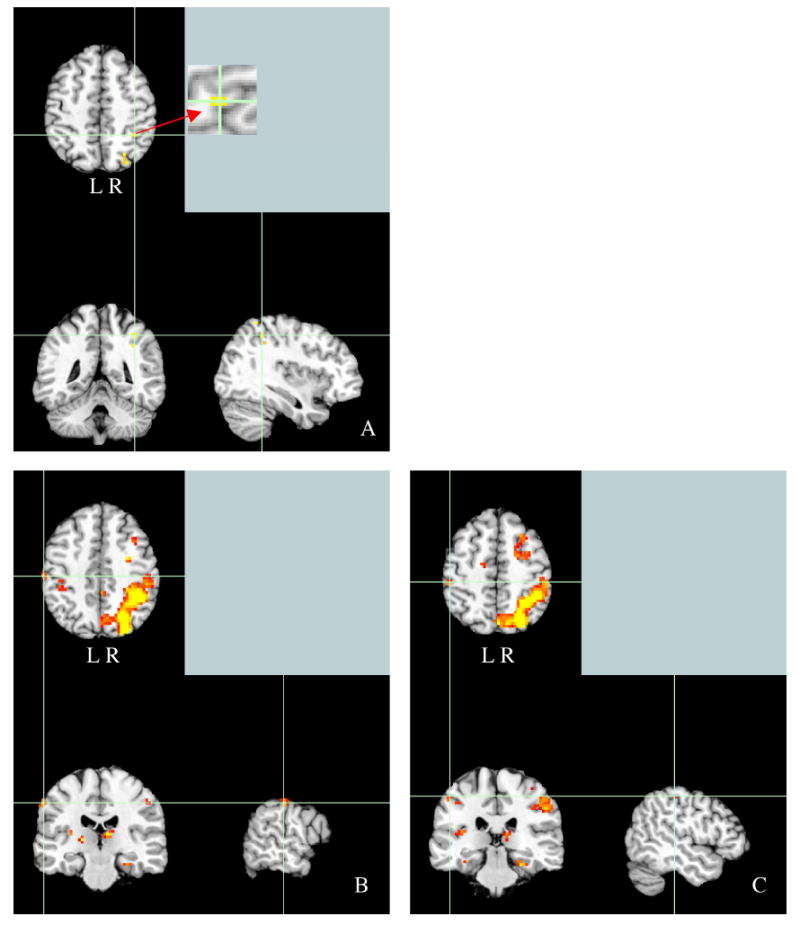

In order to characterize activation patterns associated with shape retrieval, a whole brain analysis was performed. Candidate “shape responsive” regions of parietal cortex were identified as any cluster of voxels responding more for shape retrieval than lexical decision; the false positive rate was controlled at α = .05, corrected for multiple comparisons, by performing 2000 Monte Carlo permutations of the data to derive the critical threshold (t = 8.29) (Nichols & Holmes, 2002). While these regions were significantly active for our shape retrieval task relative to lexical decision, there are a number of other differences between the two tasks that would not be expected to vary with tactile experience. Each cluster within parietal cortex was then identified as a region of interest (ROI) and tested for the effect of tactile experience (see Table 1 for all regions localized by this contrast and Figure 1 for images of the regions of interest showing effects of tactile experience). The localization of these regions was identified by converting the MNI coordinates to Talairach coordinates and identifying regions based on these coordinates using the Talairach Daemon (Lancaster et al., 2000).
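To illustrate how a permutation-corrected threshold of this kind can be derived, the sketch below builds a null distribution of the maximum t-statistic across voxels. It is not the analysis code used in this study: it assumes a group-level one-sample design in which each subject's contrast map is randomly sign-flipped, and the function names and the `data[subject][voxel]` layout are hypothetical.

```python
import math
import random


def t_stat(values):
    """One-sample t-statistic against zero."""
    n = len(values)
    mean = sum(values) / n
    var = sum((v - mean) ** 2 for v in values) / (n - 1)
    if var == 0:
        return 0.0  # degenerate voxel with no variance; ignored in this sketch
    return mean / math.sqrt(var / n)


def max_t_threshold(data, n_perm=2000, alpha=0.05, seed=0):
    """Critical t from the permutation distribution of the maximum statistic.

    data[s][v] is the contrast value of subject s at voxel v (assumed layout).
    """
    rng = random.Random(seed)
    n_sub, n_vox = len(data), len(data[0])
    max_ts = []
    for _ in range(n_perm):
        # Flip the sign of each subject's whole map under the null hypothesis.
        signs = [rng.choice((-1, 1)) for _ in range(n_sub)]
        max_ts.append(max(
            t_stat([signs[s] * data[s][v] for s in range(n_sub)])
            for v in range(n_vox)
        ))
    max_ts.sort()
    # Take the (1 - alpha) quantile of the sorted maxima.
    idx = min(n_perm - 1, math.ceil((1 - alpha) * n_perm) - 1)
    return max_ts[idx]
```

Because the threshold is taken from the distribution of the maximum over all voxels, any voxel exceeding it is significant with the familywise error rate controlled at α, which is why the resulting critical value is much larger than an uncorrected one.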

Table 1.

Tactile Experience, General Exposure and Lexical Frequency Effects (t-values) by Region and Model

| Local maxima | Number of voxels | Model 1: Tactile | Model 2: Tactile | Model 2: General exposure | Model 2: Word frequency |
|---|---|---|---|---|---|
| **Left ROIs** | | | | | |
| -30 -39 -21 Fusiform Gyrus | 4 | --- | --- | --- | --- |
| -54 -57 -12 Middle Temporal Gyrus | 37 | --- | --- | --- | --- |
| -48 -57 -9 Occipital Lobe | | | | | |
| -51 36 15 Inferior Frontal Gyrus | 2 | --- | --- | --- | --- |
| -39 -81 33 Precuneus | 4 | --- | --- | --- | --- |
| -42 -45 42 Inferior Parietal Lobule | 4 | --- | --- | --- | --- |
| -30 -78 48 Superior Parietal Lobule | 29 | --- | --- | --- | --- |
| -42 -48 51 Inferior Parietal Lobule | 4 | **1.93** | **1.81** | --- | **1.94** |
| -42 -54 63 Inferior Parietal Lobule | 4 | --- | --- | --- | --- |
| **Right ROIs** | | | | | |
| 36 -78 33 Precuneus | 7 | --- | --- | --- | --- |
| 39 -36 42 Inferior Parietal Lobule | 20 | --- | --- | --- | 1.37 |
| 57 -21 45 Postcentral Gyrus (BA3) | 11 | **1.82** | 1.48 | --- | --- |
| 48 -33 51 Postcentral Gyrus | 7 | **2.04** | **1.98** | 1.76 | --- |

Note: The local maxima of each region for the localizing contrast of shape retrieval relative to lexical decision are shown along with the t-values corresponding to the effects within each region. Effects significant at p < .05 are shown in bold font, effects with .05 < p < .10 are shown in regular typeface, and non-significant findings (p > .10) are noted as ---. The tactile covariates in Model 1 and Model 2 are identical.

Figure 1.

Panels A-C all represent regions of interest localized by comparing the shape retrieval task to the lexical decision task. A: Activity in the left inferior parietal lobule (MNI coordinates: -42 -48 51) localized at the permutation-corrected threshold of p < .05 (t = 8.29). B & C: Activity in the right postcentral gyrus (BA 2, MNI coordinates: 57 -21 45 and 48 -33 51) for the contrast of shape retrieval relative to lexical decision at the reduced threshold of t = 3.07.

A mean time series was calculated for each subject by averaging over all of the suprathreshold voxels in each ROI. The average time series across the voxels within each region of interest was used to calculate a variance-normalized effect size measure for tactile experience. These values were then subjected to a random effects analysis across subjects, using a one-sample t-test to determine whether each contrast was significantly greater than 0. The results from these t-tests are reported in Table 1 (under Model 1) for each region showing a significant effect of shape retrieval relative to lexical decision, and are described in more detail below.
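The group-level step described above amounts to a one-sample t-test on twelve per-subject effect sizes. The sketch below shows that computation with made-up numbers; the function name and the example values are hypothetical. The comparison against 1.796, the one-tailed critical value of t for 11 degrees of freedom at α = .05, is an assumption about the directionality of the test, which the text does not state explicitly.

```python
import math

# One-tailed critical t for df = 11 at alpha = .05 (assumed test direction).
T_CRIT_ONE_TAILED_DF11 = 1.796


def one_sample_t(effects):
    """Return (t, df) for a one-sample t-test of the mean against zero."""
    n = len(effects)
    mean = sum(effects) / n
    var = sum((e - mean) ** 2 for e in effects) / (n - 1)
    return mean / math.sqrt(var / n), n - 1


# Hypothetical per-subject effect sizes for one ROI (not the study's data).
example_effects = [0.4, -0.1, 0.3, 0.6, 0.0, 0.2, 0.5, -0.2, 0.3, 0.1, 0.4, 0.2]
t_value, df = one_sample_t(example_effects)
print(f"t({df}) = {t_value:.2f}, exceeds criterion: {t_value > T_CRIT_ONE_TAILED_DF11}")
```

Treating subjects as the random factor in this way means the test asks whether the effect is reliable across the sample rather than within any single subject's time series.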

The procedure that was used to localize regions of interest did not identify any regions in right parietal cortex; however, a prior study from this group investigating shape retrieval (Oliver & Thompson-Schill, 2003) reported a role for the right IPL in shape retrieval. To explore the role of tactile experience in this a priori region of interest, right parietal ROIs were defined by lowering the threshold for the contrast of shape retrieval compared to lexical decision until the number of positively activated voxels present in the right parietal lobe matched the total number of voxels (45) present in the left parietal lobe at the permutation-defined threshold corresponding to p < .05. For the right parietal lobe, the threshold that yielded 45 voxels was t = 3.07. Each cluster of voxels identified in the right parietal lobe using this procedure was then tested for effects of the covariates of interest. Results of the one-sample t-tests performed within each region of the right parietal lobe are included in Table 1 (under Model 1) and described below.
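The voxel-matching rule used to define the right parietal ROIs can be expressed compactly: the reduced threshold is the t-value of the k-th most active voxel, where k is the voxel count to be matched. The sketch below is a hypothetical illustration with a toy list of t-values, not the software used for the analysis.

```python
def threshold_for_voxel_count(t_values, target_count):
    """Lowest threshold at which `target_count` positive voxels survive.

    t_values: t-statistics for every voxel inside the anatomical mask
    (hypothetical input); ties at the threshold could admit extra voxels.
    """
    positive = sorted((t for t in t_values if t > 0), reverse=True)
    if target_count > len(positive):
        raise ValueError("not enough positively activated voxels in the mask")
    return positive[target_count - 1]


# Toy example: match a count of 4 voxels.
toy_t_values = [5.0, 3.07, 1.2, -0.5, 4.1, 3.5]
threshold = threshold_for_voxel_count(toy_t_values, 4)
surviving = [t for t in toy_t_values if t >= threshold]
print(threshold, len(surviving))
```

Matching on voxel count rather than on a fixed statistical threshold equates the spatial extent tested in the two hemispheres, at the cost of a more lenient criterion on the right.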

In one shape-responsive portion of the left IPL (MNI coordinates: -42 -48 51, region size = 4 voxels), the magnitude of activation during shape retrieval was correlated positively with tactile experience, t(11) = 1.93, p < .05. No other significantly shape-responsive regions were modulated in this way. Two of the subthreshold ROIs in right parietal cortex showed a pattern of effects similar to the left IPL region. Activity in two adjacent regions in right postcentral gyrus showed a positive relationship with tactile experience (MNI coordinates: 57 -21 45, BA3, region size = 11 voxels, t(11) = 1.82, p < .05; and MNI coordinates: 48 -33 51, region size = 7 voxels, t(11) = 2.04, p < .05).

An effect of the amount of tactile experience on recruitment of these regions is interesting to the extent that it is truly an effect of the amount of tactile experience associated with the objects rather than an effect of other variables that are correlated with tactile experience. The web survey that was used to assess tactile experience was designed to separately assess tactile experience and general sensorimotor experience. Specifically, subjects were asked to assess the total amount of experience that they had with the objects through any sensory modality and then separately to estimate the proportion of their total experience with the object that was tactile in nature. Perhaps not surprisingly these two measures are correlated with one another, r = .61. To establish whether the effect of tactile experience on recruitment of left IPL and right postcentral gyrus is specifically due to tactile experience rather than general sensorimotor experience, the same data were tested in a model that was identical to the one presented thus far, but that also includes a covariate describing the general amount of sensorimotor experience associated with the objects and a covariate describing the lexical frequency associated with the object names. The covariate describing general sensorimotor experience was generated based on the mean responses of all subjects who participated in the web survey. The lexical frequency covariate was generated using log-transformed Kucera & Francis (1967) ratings for the items in the shape task when available. The results of this analysis are presented in Table 1 (under Model 2) and are described below.

In the context of this model, the tactile covariate was found to be significant in left IPL, t(11) = 1.81, p < .05 (MNI coordinates: -42 -48 51, region size = 4 voxels), indicating that the effect of tactile exposure continues to explain unique variance when general sensorimotor experience and lexical frequency are modeled. The effect of the general sensorimotor covariate in this region was not significant, t(11) = .01, p > .10. However, the effect of lexical frequency in this region was significant, t(11) = 1.94, p < .05. No other effects of these three covariates approached significance in any of the other regions tested in the left parietal cortex. In this model, one of the right postcentral gyrus regions (described in the section above) was significantly modulated by tactile experience, t(11) = 1.98, p < .05 (MNI coordinates: 48 -33 51), while the other was only marginally modulated, t(11) = 1.48, p = .08 (MNI coordinates: 57 -21 45, BA3). The first of these regions was also marginally modulated by general sensorimotor experience, t(11) = 1.76, p = .05 (MNI coordinates: 48 -33 51). The second region did not show a significant effect of general sensorimotor experience, t(11) = 1.23, p > .10. Neither of these regions was significantly modulated by the lexical frequency of the items, p’s > .10. However, a separate region showed a marginal effect of lexical frequency, t(11) = 1.37, p = .098 (MNI coordinates: 39 -36 42, IPL, region size = 20 voxels).

While regions outside of parietal cortex were activated for the contrast of shape relative to lexical decisions, none of the other regions was modulated by the level of tactile exposure associated with the objects tested.

Discussion

Previous studies of shape knowledge retrieval in our lab (Oliver & Thompson-Schill, 2003) and others (Newman et al., 2005) have identified regions in parietal cortex, bilaterally, that play a role in long-term object shape knowledge. In the current study, regions were localized for their involvement in retrieving object shape knowledge from auditorily presented names relative to a lexical decision task. The response of these regions was tested for modulation by prior tactile experience with the objects tested. Three regions displaying this pattern of effects were identified: one in the left IPL, near the anterior IPS, and two in the right postcentral gyrus. We review the relevance of each of these activations to the prior literature on tactile and action processing below.

Left Inferior Parietal Lobule (IPL)

In the current study we observed that the left IPL’s involvement in object shape retrieval was positively associated with prior tactile experience with the objects. The particular location of the activity in left IPL (BA40) is within 7 mm of the more anterior of two dorsal IPS regions identified as shape sensitive (Sawamura et al., 2005, coordinates: -39, -48, 57; coordinates from this study: -42, -48, 51). The anterior, left-sided area described in the current study seems to be very near, but slightly superior to, the area that has been called the human homologue of monkey AIP (based on the coordinate range compiled by Johnson-Frey, 2005). In non-human primates, AIP has been shown to be important in processing the size, shape and orientation of objects for grasping (Taira et al., 1990; Murata et al., 1996; Sakata et al., 1995; Murata et al., 2000), and disruptions to the processing in this region have been linked to deficits in grasping (Gallese et al., 1994). In humans, this area is thought to reside near the intersection of IPS and the postcentral gyrus (Binkofski et al., 1998, 1999; Jancke et al., 2001; Shikata et al., 2001; Grefkes et al., 2002; Culham et al., 2003). Human IPS has also been linked to processing of object properties, like size and shape, that are utilized to grasp an object but not to merely reach for it (Culham et al., 2003). This region not only selects for object form, similar to ventral region LOC, but also selects for the grasp posture (Shmuelof & Zohary, 2005). A group lesion-deficit analysis linked damage to the anterior intraparietal sulcus with impairments in forming the correct grip aperture for objects (Binkofski et al., 1998). In addition, observation of actions that involve objects (e.g., reaching for and grasping a ball) is associated with greater activity in aIPS cortex than is observation of pantomimed actions (e.g., mimicking reaching for and grasping a ball) (e.g., Buccino et al., 2001).

The location of activation in the current study was near that observed for object-related hand actions (Talairach coordinates from Buccino et al., 2001: -36 -40 52; from the current study: -42 -45 49) and near the location of the activity reported by Johnson-Frey and colleagues (2005) for planning tool gestures with either hand. This area has been associated with activity in many tasks involving tool stimuli and other objects with strong motor associations (Okada et al., 2000; Chao & Martin, 2000; Grezes & Decety, 2002; Creem-Regehr & Lee, 2005; Weisberg, van Turennout, & Martin, 2006) and has been thought to represent attribute information associated with performing actions on objects (Chao & Martin, 2000). Therefore, the current study suggests that semantic representations of object shape recruit action-processing regions to the extent that the representation was acquired through tactile and/or action processing.
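The proximity of these peaks can be made concrete by computing the Euclidean distance between the quoted coordinates. The sketch below uses only the coordinates given in the text; the variable and function names are hypothetical, and it assumes that each pair of coordinates is expressed in the same stereotaxic space, so the values should be read as approximate.

```python
import math

# Peak coordinates quoted in the text (x, y, z in mm).
CURRENT_STUDY_MNI = (-42, -48, 51)
SAWAMURA_2005 = (-39, -48, 57)
CURRENT_STUDY_TAL = (-42, -45, 49)
BUCCINO_2001_TAL = (-36, -40, 52)


def peak_distance_mm(a, b):
    """Euclidean distance in mm between two (x, y, z) peaks."""
    return math.dist(a, b)


d_sawamura = peak_distance_mm(CURRENT_STUDY_MNI, SAWAMURA_2005)
d_buccino = peak_distance_mm(CURRENT_STUDY_TAL, BUCCINO_2001_TAL)
print(f"{d_sawamura:.2f} mm, {d_buccino:.2f} mm")  # prints "6.71 mm, 8.37 mm"
```

Both separations are on the order of the 8 mm smoothing kernel applied to the functional data, so the peaks cannot be confidently distinguished at this spatial resolution.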

As noted earlier, we cannot discriminate on the basis of this dataset alone whether the correlation of shape-sensitive IPL activity with the amount of tactile experience with objects is due to prior tactile experience per se or to prior experience performing actions on the objects. Tactile shape matching elicits activity in the contralateral postcentral gyrus extending into the SPL, in anterior IPS, and in the supramarginal gyrus of the IPL (Hadjikhani and Roland, 1998). A recent study characterizing the regions involved in tactile form processing relative to tactile texture processing identified the following five regions: postcentral sulcus (PCS), anterior intraparietal sulcus (aIPS), posterior intraparietal sulcus (pIPS), ventral intraparietal sulcus (vIPS), and the lateral occipital complex (LOC) (Peltier et al., 2007). By comparing processing of visually perceived form to visually perceived texture, these authors determined that LOC and IPS are likely to be multisensory shape processing regions while PCS mainly processes shape haptically. This result is consistent with a number of haptic shape processing studies that have also localized activity to portions of IPS (e.g., Zhang et al., 2004; Van de Winckel et al., 2005). For example, both the anterior supramarginal gyrus and the cortex around the IPS (near our left IPL activation) were reported to respond to tactile shape perception compared to rest, but not to lower level feature discrimination (e.g., discrimination of edges, curvature, roughness and brush velocity using mechanical stimulation; Bodegard et al., 2001). Supporting a role for representing shape long term, bilateral activation of the IPL has been observed during tactile recognition of familiar objects compared to tactile exploration of unfamiliar objects (Reed et al., 2004).

Taken together, these results suggest that the area of activity observed in this study may show significant modulation by tactile history either because it is a region sensitive to action relevant object features or because it is important in deriving object shape through the tactile modality. However, given that it appears to be involved specifically for the higher level representation of tactilely perceived object shape (Bodegard et al., 2001), this region may process shape, from both tactile and visual inputs (Peltier et al., 2007), for the more general purpose of performing actions including tactile object recognition. Therefore, the question of whether this area represents tactile experience with object shapes relative to action experience with objects may be less critical.

Lexical frequency was also correlated with activity in this portion of left IPL. It is unclear from this experiment whether the lexical frequency effect is attributable to language processing that is completely separate from sensorimotor representation or whether repeated exposure to the object names (as one would have for object names with high frequencies) strengthens existing sensorimotor representations. Importantly, the observed effect of tactile experience on the involvement of this region persists even when the lexical frequency of items is included in the model.

Right Postcentral Gyrus

The effects of tactile processing and general exposure that we observed in the postcentral gyrus for shape retrieval are consistent with the involvement of this region in haptic shape perception (Bodegard et al., 2000, 2001; Servos et al., 2001). As mentioned above, PCS may process shape mainly haptically, as opposed to other regions such as anterior IPS that may process shape multimodally (Peltier et al., 2007). This result is not surprising given that PCS (BA3) is a part of the primary somatosensory cortex (Van Westen et al., 2004).

While it is not possible to separately test for the effects of prior tactile experience and action experience with the objects tested, the fact that this shape-sensitive region is located in a primary tactile processing region and activity within it is modulated by the tactile history of the objects suggests that this region may represent shape information gleaned through tactile processing. However, even the activity we observed in postcentral gyrus has been observed in studies that focus on action representation. For example, activity in or near the postcentral gyrus has been observed when listening to tool sounds relative to animal sounds (Lewis et al., 2006) and during preparation of motor actions (Krams et al., 1998). Therefore, it is still not clear that the pattern of activity observed here can be attributed entirely to the tactile history of the object per se. Still, given that the right postcentral gyrus is within primary sensorimotor cortex and is proposed to be earlier in the tactile object processing stream than anterior IPS (Peltier et al., 2007), this activation may be more likely than our left IPL region to represent the tactile history of the objects. Further experimentation is nonetheless required to tease apart the contribution of each process (tactile processing and action processing) to the ultimate representation of shape in these regions.

One might expect effects of sensory experience to appear in the somatosensory cortex contralateral to the dominant hand of the subjects. However, right somatosensory cortex activation might be expected in this study given that a subset of the objects tested were most likely handled bimanually. In addition, prior research has supported bilateral involvement of primary somatosensory cortex even in unimanual stimulation (Nihashi et al., 2005). To be clear, we do not wish to claim that this effect is right lateralized. It is quite possible that a similar effect would be present in the left postcentral gyrus if this region were to be tested in isolation. While this region was not identified using the corrected threshold for the region-defining contrast, it is clear that at the reduced threshold used to define regions of interest on the right (t = 3.07), the left postcentral gyrus is included in a continuous swath of activity that extends along the left intraparietal sulcus (see Figure 1). The modulation of activity within the left postcentral gyrus was not specifically investigated given that this region was not separately isolated at the corrected threshold. Future research could focus on this region using a different type of region of interest approach such as growing a sphere based on coordinates or anatomically defining this structure.
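As an illustration of the coordinate-based alternative mentioned above, the following is a minimal sketch of growing a spherical region of interest around a seed voxel. It is not the procedure used in this study; the function name `sphere_roi`, the seed coordinates, the radius, and the volume dimensions are all hypothetical values chosen for illustration.

```python
from itertools import product


def sphere_roi(center, radius_vox, shape):
    """Return the set of voxel indices within radius_vox of center.

    center     : (x, y, z) seed voxel index (hypothetical seed)
    radius_vox : sphere radius in voxel units
    shape      : (nx, ny, nz) dimensions of the image volume
    Voxels falling outside the volume are dropped.
    """
    cx, cy, cz = center
    r = int(radius_vox)
    voxels = set()
    for dx, dy, dz in product(range(-r, r + 1), repeat=3):
        # Keep offsets whose Euclidean distance from the seed is <= radius.
        if dx * dx + dy * dy + dz * dz <= radius_vox ** 2:
            x, y, z = cx + dx, cy + dy, cz + dz
            if 0 <= x < shape[0] and 0 <= y < shape[1] and 0 <= z < shape[2]:
                voxels.add((x, y, z))
    return voxels


# Assumed example: a 2-voxel-radius sphere in a 20 x 20 x 20 volume.
roi = sphere_roi(center=(10, 10, 10), radius_vox=2, shape=(20, 20, 20))
print(len(roi))
```

A sphere defined this way depends only on the seed coordinate and radius, so it can be placed in a region that was not isolated by the region-defining contrast; the choice of seed and radius would still need independent justification.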

General Discussion

Prior research has found that retrieval of knowledge about graspable objects (i.e., imagining grasping tools) and viewing pictures of graspable objects are associated with parietal activity (Martin et al., 1996; Faillenot et al., 1997; Chao & Martin, 2000; Okada et al., 2000; Grezes & Decety, 2002; Creem-Regehr & Lee, 2005; Weisberg, van Turennout, & Martin, 2006). It is possible that the correlation we observed between activity in parietal regions during shape retrieval and amount of tactile experience with objects may be partly attributable to the inclusion of graspable objects like tools in our set of items. In fact, we would expect these items to be associated with greater motoric and tactile experience and to produce increased activity in the parietal cortex for this reason. Because our stimuli were not selected so as to be clear examples of “tools” or “non-tools”, it is difficult to establish a set of criteria that can be used to divide them into these categories to be separately tested for the effects of tactile experience. However, tools are thought to activate parietal regions due to their status as objects that are the targets of actions (Creem-Regehr & Lee, 2005) and, given that this is not at odds with what we argue in this paper, we did not feel it was necessary to test for category effects beyond effects of sensorimotor experience in this dataset.

Our stimuli exhibited a moderate correlation between tactile experience and general exposure (r = .61). However, after accounting for variance in the fMRI data that can be uniquely attributed to overall sensorimotor exposure and lexical frequency, the effect of tactile experience is still significant in the left IPL and right postcentral gyrus near IPL and marginally significant in area BA3. Thus, the effect of tactile experience is not likely to be due to greater general exposure with the objects. The observation that activity associated with shape retrieval can be modulated by the amount of tactile experience suggests that a history of spatial processing of objects (e.g., making point to point comparisons within the object) is not the only reason for the involvement of the IPL in shape retrieval. If shape retrieval recruited parietal regions due to a history of spatial processing alone, then this activity should not be modulated by tactile experience.
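The logic of testing the tactile effect after accounting for the other item-level variables can be sketched as a multiple regression in which tactile experience, general exposure, and lexical frequency are entered together. The example below is only an illustration under stated assumptions: it uses ordinary least squares on made-up item values rather than the fMRI model actually fit in this study, and the function name `ols` and all numbers are hypothetical.

```python
def ols(X, y):
    """Ordinary least squares via the normal equations (X'X) b = X'y."""
    n, p = len(X), len(X[0])
    # Build the augmented matrix [X'X | X'y].
    A = [
        [sum(X[i][r] * X[i][c] for i in range(n)) for c in range(p)]
        + [sum(X[i][r] * y[i] for i in range(n))]
        for r in range(p)
    ]
    # Gauss-Jordan elimination with partial pivoting.
    for col in range(p):
        pivot = max(range(col, p), key=lambda r: abs(A[r][col]))
        A[col], A[pivot] = A[pivot], A[col]
        for r in range(p):
            if r != col:
                f = A[r][col] / A[col][col]
                A[r] = [a - f * b for a, b in zip(A[r], A[col])]
    return [A[r][p] / A[r][r] for r in range(p)]


# Hypothetical item-level ratings (not data from this study).
tactile = [0.1, 0.4, 0.5, 0.7, 0.9, 0.3]
exposure = [0.2, 0.5, 0.3, 0.8, 0.7, 0.6]
frequency = [1.0, 2.0, 0.5, 1.5, 3.0, 2.5]

# Simulated response built so that only tactile experience and lexical
# frequency contribute; general exposure has a true weight of zero.
response = [1.0 + 0.5 * t + 0.2 * f for t, f in zip(tactile, frequency)]

# Design matrix: intercept plus the three covariates entered together.
X = [[1.0, t, e, f] for t, e, f in zip(tactile, exposure, frequency)]
intercept, b_tactile, b_exposure, b_frequency = ols(X, response)
```

Because the three predictors are estimated jointly, the weight recovered for tactile experience reflects only the variance it explains beyond general exposure and lexical frequency, which is the sense in which the effect is described as surviving the inclusion of those covariates.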

In the current experiment we used a functional region of interest approach. When a functional region of interest approach is employed, regions are defined based on a task comparison that is orthogonal to the comparison of interest and then a threshold is set to define the regions to be probed for experimental effects. Typically, this threshold is set to be below the Bonferroni-corrected threshold to ensure that patches of cortex that show the experimental effect of interest are not overlooked. When an uncorrected threshold is used to define regions, one runs the risk of introducing voxels into the experimental comparison that were only present in the region-defining comparison due to noise and, thus, are unlikely to be involved in the process of experimental interest. In addition, by utilizing an uncorrected threshold, one limits the claims that can be made regarding the data. Any observed significant effects of the experimental comparison are still valid, but they cannot be described as occurring in regions significantly involved in the region-defining contrast. Instead, they must be described as effects occurring in regions that show a trend for involvement in the region-defining contrast. Still, the technique of using an uncorrected threshold to define regions of interest is useful because it helps prevent important results from going unnoticed. In the current experiment we first probed regions that showed a significant effect of our region-defining comparison (shape retrieval relative to lexical decision) at the corrected threshold. This analysis identified regions that were significantly involved in this shape retrieval task that also showed significant modulation by tactile experience. However, the corrected threshold yielded only regions of interest in the left parietal cortex, and prior work from this lab suggested that right parietal regions may play a role in shape retrieval (Oliver & Thompson-Schill, 2003).
Given that right parietal cortex was of interest a priori, we also performed an analysis in which we selected an uncorrected threshold (for the region-defining contrast) to define regions in the right parietal cortex. The particular threshold we selected equated the number of voxels active in the right parietal cortex to that present in the left parietal cortex for the comparison thresholded at the Bonferroni corrected level.
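The voxel-count matching described above can be sketched as follows: count the voxels that survive the corrected threshold on the left, then pick the threshold on the right at which the same number of voxels survive. This is a minimal illustration rather than the analysis code used in this study; the function name `count_matched_threshold`, the t-statistics, and the stand-in corrected cutoff are all hypothetical.

```python
def count_matched_threshold(t_values, target_count):
    """Return the threshold at which target_count values survive (t >= threshold).

    The threshold is the target_count-th largest value, so ties at that
    value could let slightly more than target_count voxels through.
    """
    if not 0 < target_count <= len(t_values):
        raise ValueError("target_count must be between 1 and the number of voxels")
    ranked = sorted(t_values, reverse=True)
    return ranked[target_count - 1]


# Hypothetical t-statistics for voxels in each hemisphere (not study data).
left_t = [5.1, 4.8, 3.0, 4.6, 2.2]
right_t = [3.9, 3.1, 2.0, 3.4, 1.5, 2.8]
corrected_threshold = 4.5  # assumed stand-in for a Bonferroni-corrected cutoff

# Number of left-hemisphere voxels surviving the corrected threshold.
n_left = sum(t >= corrected_threshold for t in left_t)

# Uncorrected right-hemisphere threshold that yields the same voxel count.
right_threshold = count_matched_threshold(right_t, n_left)
n_right = sum(t >= right_threshold for t in right_t)
```

Matching on voxel count fixes the spatial extent of the right-hemisphere regions in advance, so the threshold is not tuned to the experimental effect being tested within them.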

One important aspect of the shape task performed in this experiment is that it does not involve visual stimuli. This is important because our interest is in the pattern of activity evoked during retrieval of appearance information. Our proposal is that by answering a question about an object’s shape in the absence of an image of the object, the network of brain regions that represent shape, whether they be visual, tactile or otherwise, will become engaged. If the object were visually present, then we would not know whether activity was due to reengagement of visual regions during retrieval or due to current visual processing. In this experiment regions were localized for responding more to a shape retrieval task than to a lexical decision task. The selection of the lexical decision baseline was motivated by a desire to ensure that the effect of interest, tactile experience, would not vary during the baseline trials, as this would reduce our power to detect this effect. However, given that there was no other task involving concrete objects to use as a comparison in this dataset, one should not conclude that these regions respond exclusively during retrieval of object shape. Future research will be needed to determine the specificity of the response of these regions. In particular, it would be interesting to identify shape-responsive regions that become consistently engaged across a variety of shape retrieval tasks. While an attempt was made to select a shape retrieval task that was not biased toward visual or tactile processing, we cannot know for certain which areas would be active across a variety of shape tasks until such an experiment is performed. It is also possible that, by asking subjects to retrieve the shape of objects without the objects present, subjects may engage in tactile imagery that is not part of the long-term representation of object shape.
While this is a possibility, we do not suspect that this task is more susceptible to this imagery than other non-visual shape retrieval tasks. To the extent that other non-visual tasks also engage tactile imagery without instruction one might consider such imagery to be an important part of how shape is retrieved and represented.

Sensorimotor models of conceptual representation (e.g., Allport, 1985; Martin, Ungerleider, & Haxby, 2000) are ambiguous with respect to whether it is the absolute or the relative amount of a particular type of sensorimotor experience that is critical in determining the degree to which that modality is involved in representation. For some objects this distinction predicts different outcomes during our shape retrieval task. For example, objects with a high proportion of tactile experience relative to total sensorimotor experience but with a low total amount of tactile exposure (e.g., a shoehorn) would be expected to recruit tactile and/or action processing regions more if the critical variable is the proportion of total experience that is tactile, whereas they would be expected to recruit these regions less if the absolute amount of tactile experience is the critical variable. We designed our experience survey specifically to separate out general sensorimotor experience from tactile experience. However, if it were the case that absolute tactile experience is the relevant variable to explain involvement of a region during retrieval, then we might expect to observe general exposure effects as well as tactile effects. Only one of the regions showing the tactile effect, in the right postcentral gyrus near the IPL, was found to have a marginal effect of general exposure. Therefore, this study does not clearly discriminate between the absolute experience versus proportion of experience characterizations of sensorimotor models. It would be interesting to specifically address this distinction in the future.
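The divergence between the two characterizations can be made concrete with a small worked example. The sketch below is purely illustrative: the exposure values and tactile proportions are invented, the exposure scale is arbitrary, and treating absolute tactile experience as the product of total exposure and tactile proportion is an assumption made here for illustration, not a measure used in this study.

```python
# Hypothetical values (not survey data): total exposure on an arbitrary
# scale, and the proportion of that exposure that is tactile.
items = {
    "shoehorn": {"total_exposure": 1.0, "tactile_proportion": 0.9},
    "mug": {"total_exposure": 8.0, "tactile_proportion": 0.7},
    "stop sign": {"total_exposure": 9.0, "tactile_proportion": 0.05},
}


def rank_items(items, score):
    """Return item names ordered from highest to lowest score."""
    return sorted(items, key=lambda name: score(items[name]), reverse=True)


# Relative account: the proportion of experience that is tactile.
by_proportion = rank_items(items, lambda v: v["tactile_proportion"])

# Absolute account (assumed here to be total exposure x tactile proportion).
by_absolute = rank_items(
    items, lambda v: v["total_exposure"] * v["tactile_proportion"]
)

print(by_proportion)
print(by_absolute)
```

Under these invented values the shoehorn ranks highest on the proportional measure but below the mug on the absolute measure, which is the kind of item for which the two accounts make different predictions.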

In summary, activity associated with shape retrieval was modulated by prior tactile experience in regions, such as the IPL and postcentral gyrus, that may be used to process shape information during tactile perception and action. This contributes to the previous literature by elucidating the role of parietal cortex in the retrieval of semantic knowledge about the appearance of objects (e.g., Oliver & Thompson-Schill, 2003) and supports domain-specific distributed models of semantic memory (e.g., Allport, 1985; Martin, Ungerleider, & Haxby, 2000).

Acknowledgments

This research was supported by the National Institutes of Health (R01 MH60414, R01 MH067008, R01 MH070850). We would like to thank Geoff Aguirre for advice on experimental design and analysis and Irene Kan, Laura Barde and Laurel Buxbaum for comments on a previous version of this manuscript. In addition, we thank Russell Epstein, Anjan Chatterjee, the members of the Thompson-Schill lab, and the Cognitive Tea group at the University of Pennsylvania for useful feedback on this study.

Appendix 1

| Shape Retrieval Items | Lexical Decision Nonwords | Lexical Decision Abstract Words |
|---|---|---|
| ant | barpple | ability |
| ape | blipers | adjective |
| aspirin | bouse | adverb |
| ax | branship | advice |
| barn | brompt | aim |
| barrel | cafer | allow |
| basketball | dak | ambition |
| bell | dreat | apathy |
| bell peppers | drenel | appear |
| biscuit | dricks | belief |
| blackberry | drond | bid |
| boat flag | droom | bother |
| box | eletation | clue |
| bracelet | fabbip | cure |
| brick | fervice | custom |
| brownie | finter | despair |
| bush | flanter | duty |
| butter | flink | ego |
| butterfly | focho | enough |
| button | fruged | envy |
| cabbage | gaft | extra |
| cabinet | garn | fate |
| canary | garput | glory |
| capsule | gaster | gone |
| cart | glicket | grudge |
| cathedral | gration | guilt |
| chalkboard | grister | heredity |
| chimney | griver | hint |
| cliff | hance | honesty |
| cloud | hity | honour |
| clown nose | joard | ideal |
| cotton | jur | impulse |
| crossing sign | loy | inquiry |
| crown | malander | insight |
| cucumber | millete | irony |
| cup | narket | lie |
| deck | nert | memory |
| diamond | nim | mercy |
| doughnut | nurken | merit |
| dove | padit | minor |
| dumpster | pedow | mood |
| easel | perken | motive |
| egg | pilk | myth |
| egg yolk | pilm | noun |
| elephant | piln | once |
| envelope | pistem | origin |
| fire engines | prand | outcome |
| fish | prent | overlap |
| flag | pruth | pardon |
| flagpole | quertle | pat |
| football | rall | patience |
| fork | sart | pause |
| frog | smuction | permit |
| gallery | snilk | phase |
| grape | swafe | phrase |
| grass skirt | tace | pity |
| handicapped sign | tanter | plea |
| house | trang | proof |
| jackhammer | tranklet | ration |
| kitten | trasen | remark |
| knife | trinks | reminder |
| lab coat | troam | safety |
| lake | tump | satire |
| lemon | tuply | scheme |
| lens | turple | sent |
| magazine | twelity | skill |
| map | vees | suspect |
| marble | verpet | talent |
| mast | vim | theme |
| match | vrown | topic |
| money | waper | trace |
| mug | wapler | verb |
| nest | whipic | vow |
| newspaper | wicture | worth |
| offramp signs | zan | wrath |
| orange | | |
| pear | | |
| pencil | | |
| phone book | | |
| pickle | | |
| pie | | |
| pig | | |
| plum | | |
| popcorn | | |
| potato | | |
| prison | | |
| radishes | | |
| railroad | | |
| rake | | |
| raspberry | | |
| record | | |
| roof | | |
| rubber gloves | | |
| ruler | | |
| saddle | | |
| saw | | |
| sheet | | |
| shoehorn | | |
| shopping cart | | |
| skunk | | |
| squirrel | | |
| stable | | |
| staple | | |
| station | | |
| stop sign | | |
| strawberry | | |
| sword | | |
| tangerine | | |
| tarp | | |
| tennis balls | | |
| tire | | |
| toad | | |
| toast | | |
| tomato | | |
| wallet | | |
| walnut | | |
| weedwacker | | |
| yield sign | | |
| zipper | | |

Footnotes

A longer format version of the question was included in the survey instructions. The instructions for the tactile rating were as follows: Indicate the proportion of your total interaction with the object that has been tactile. In other words, you should think about the total amount of time you have spent interacting with that object and make an estimate of the amount of that time that you spent actually physically touching the object (regardless of whether you were also using other senses to explore the object). Select the percentage that best matches this estimate. As you consider the total amount of time spent interacting with the objects you should include all times in which you were consciously aware of the objects presence by way of any of your senses (smell, taste, touch, vision, hearing). The instructions for the familiarity question were as follows: For this question please consider how frequently you encounter each object in the world (using any of your senses). If you encounter an object fairly regularly in your everyday life, then you should respond by selecting “very frequently” and if you never encounter an object in your daily life, then you should select “very infrequently”.

There were 120 trials in the fMRI task with one item mistakenly repeated resulting in 119 unique items.

References

- Allport DA. Distributed memory, modular subsystems and dysphasia. In: Newman SK, Epstein R, editors. Current Perspectives in Dysphasia. Edinburgh: Churchill Livingstone; 1985. pp. 207–244.

- Binkofski F, Buccino G, Posse S, Seitz RJ, Rizzolatti G, Freund H-J. A fronto-parietal circuit for object manipulation in man: Evidence from an fMRI study. European Journal of Neuroscience. 1999;11:3276–3286. doi: 10.1046/j.1460-9568.1999.00753.x.

- Binkofski F, Dohle C, Posse S, Stephan KM, Hefter H, Seitz RJ, Freund H-J. Human anterior intraparietal area subserves prehension: A combined lesion and functional MRI activation study. Neurology. 1998;50(5):1253–1259. doi: 10.1212/wnl.50.5.1253.

- Bodegard A, Geyer S, Grefkes C, Zilles K, Roland PE. Hierarchical processing of tactile shape in the human brain. Neuron. 2001;31:317–328. doi: 10.1016/s0896-6273(01)00362-2.

- Bodegard A, Ledberg A, Geyer S, Naito E, Zilles K, Roland PE. Object shape differences reflected by somatosensory cortical activation in human. Journal of Neuroscience. 2000;20(RC51):1–5. doi: 10.1523/JNEUROSCI.20-01-j0004.2000.

- Brainard DH. The Psychophysics Toolbox. Spatial Vision. 1997;10:433–436.

- Buccino G, Binkofski F, Fink GR, Fadiga L, Fogassi L, Gallese V, Seitz RJ, Zilles K, Rizzolatti G, Freund H-J. Action observation activates premotor and parietal areas in a somatotopic manner: An fMRI study. European Journal of Neuroscience. 2001;13:400–404.

- Chao LL, Martin A. Representation of manipulable man-made objects in the dorsal stream. Neuroimage. 2000;12:478–484. doi: 10.1006/nimg.2000.0635.

- Creem-Regehr SH, Lee JN. Neural representations of graspable objects: are tools special? Cognitive Brain Research. 2005;22:457–469. doi: 10.1016/j.cogbrainres.2004.10.006.

- Culham JC, Danckert SL, DeSouza FX, Gati JS, Menon RS, Goodale MA. Visually guided grasping produces fMRI activation in dorsal but not ventral stream brain areas. Experimental Brain Research. 2003;153:180–189. doi: 10.1007/s00221-003-1591-5.

- Faillenot I, Toni I, Decety J, Gregoire MC, Jeannerod M. Visual pathways for object-oriented action and object identification. Functional anatomy with PET. Cerebral Cortex. 1997;7:77–85. doi: 10.1093/cercor/7.1.77.

- Frey SH, Vinton D, Norlund R, Grafton ST. Cortical topography of human anterior intraparietal cortex active during visually guided grasping. Cognitive Brain Research. 2005;23:397–405. doi: 10.1016/j.cogbrainres.2004.11.010.

- Friston KJ, Ashburner J, Frith CD, Poline J-B, Heather JD, Frackowiak RSJ. Spatial registration and normalization of images. Human Brain Mapping. 1995;2:165–189.

- Gallese V, Murata A, Kaseda M, Niki N, Sakata H. Deficit of hand preshaping after muscimol injection in monkey parietal cortex. Neuroreport. 1994;5:1525–1529. doi: 10.1097/00001756-199407000-00029.

- Goodale MA, Milner AD. Separate visual pathways for perception and action. Trends in Neurosciences. 1992;15(1):20–25. doi: 10.1016/0166-2236(92)90344-8.

- Grefkes C, Weiss PH, Zilles K, Fink GR. Crossmodal processing of object features in human anterior intraparietal cortex: an fMRI study implies equivalences between humans and monkeys. Neuron. 2002;35:173–184. doi: 10.1016/s0896-6273(02)00741-9.

- Grezes J, Decety J. Does visual perception of object afford action? Evidence from a neuroimaging study. Neuropsychologia. 2002;40:212–222. doi: 10.1016/s0028-3932(01)00089-6.

- Hadjikhani N, Roland PE. Cross-modal transfer of information between the tactile and the visual representations in the human brain: A positron emission tomographic study. The Journal of Neuroscience. 1998;18(3):1072–1084. doi: 10.1523/JNEUROSCI.18-03-01072.1998.

- Ingle D. Two visual mechanisms underlying the behavior of fish. Basic Research in Psychology. 1967;31:44–51. doi: 10.1007/BF00422385.

- Jancke L, Kleinschmidt A, Mirzazade S, Shah NJ, Freund HJ. The role of the inferior parietal cortex in linking the tactile perception and manual construction of object shapes. Cerebral Cortex. 2001;11:114–121. doi: 10.1093/cercor/11.2.114.

- Jeannerod M. The formation of finger grip during prehension. A cortically mediated visuomotor pattern. Behavioral Brain Research. 1986;19:99–116. doi: 10.1016/0166-4328(86)90008-2.

- Johnson-Frey SH, Newman-Norlund R, Grafton ST. A distributed left hemisphere network active during planning of everyday tool use skills. Cerebral Cortex. 2005;15:681–695. doi: 10.1093/cercor/bhh169.

- Krams M, Rushworth MFS, Deiber M-P, Frackowiak RSJ, Passingham RE. The preparation, execution and suppression of copied movements in the human brain. Experimental Brain Research. 1998;120:386–398. doi: 10.1007/s002210050412.

- Kucera H, Francis WN. Computational Analysis of Present-day American English. Providence: Brown University Press; 1967.

- Lancaster JL, Woldorff MG, Parsons LM, Liotti M, Freitas CS, Rainey L, Kochunov PV, Nickerson D, Mikiten SA, Fox PT. Automated Talairach Atlas labels for functional brain mapping. Human Brain Mapping. 2000;10(3):120–131. doi: 10.1002/1097-0193(200007)10:3<120::AID-HBM30>3.0.CO;2-8.

- Lewis JW, Phinney RE, Brefczynski-Lewis JA, DeYoe EA. Lefties get it “right” when hearing tool sounds. Journal of Cognitive Neuroscience. 2006;18(8):1314–1330. doi: 10.1162/jocn.2006.18.8.1314.

- Martin A, Ungerleider LG, Haxby JV. Category-specificity and the brain: The sensory-motor model of semantic representations of objects. In: Gazzaniga MS, editor. The Cognitive Neurosciences. 2nd ed. Cambridge: MIT Press; 2000.

- Martin A, Wiggs CL, Ungerleider LG, Haxby JV. Neural correlates of category-specific knowledge. Nature. 1996;379:649–652. doi: 10.1038/379649a0.

- Murata A, Gallese V, Kaseda M, Sakata H. Parietal neurons related to memory-guided hand manipulation. Journal of Neurophysiology. 1996;75(5):2180–2186. doi: 10.1152/jn.1996.75.5.2180.

- Murata A, Gallese V, Luppino G, Kaseda M, Sakata H. Selectivity for the shape, size, and orientation of objects for grasping in neurons of monkey parietal area AIP. Journal of Neurophysiology. 2000;83(5):2580–2601. doi: 10.1152/jn.2000.83.5.2580.

- Newman SD, Klatzky RL, Lederman SJ, Just MA. Imagining material versus geometric properties of objects: an fMRI study. Cognitive Brain Research. 2005;23:235–246. doi: 10.1016/j.cogbrainres.2004.10.020.

- Nichols TE, Holmes AP. Nonparametric permutation tests for functional neuroimaging: A primer with examples. Human Brain Mapping. 2002;15(1):1–25. doi: 10.1002/hbm.1058.

- Nihashi T, Naganawa S, Sato C, Kawai H, Nakamura T, Fukatsu H, Ishigaki T, Aoki I. Contralateral and ipsilateral responses in primary somatosensory cortex following electrical median nerve stimulation: an fMRI study. Clinical Neurophysiology. 2005;116:842–848. doi: 10.1016/j.clinph.2004.10.011.

- Ogawa S, Menon RS, Tank DW, Kim S-G, Merkle H, Ellermann JM, Ugurbil K. Functional brain mapping by blood oxygenation level-dependent contrast magnetic resonance imaging: A comparison of signal characteristics with a biophysical model. Biophysical Journal. 1993;64:803–812. doi: 10.1016/S0006-3495(93)81441-3.

- Okada T, Tanaka S, Nakai T, Nishizawa S, Inui T, Sadato N, Yonekura Y, Konishi J. Naming of animals and tools: A functional magnetic resonance imaging study of categorical differences in the human brain areas commonly used for naming visually presented objects. Neuroscience Letters. 2000;296:33–36. doi: 10.1016/s0304-3940(00)01612-8.

- Oliver RT, Thompson-Schill SL. Dorsal stream activation during retrieval of object size and shape. Cognitive, Affective & Behavioral Neuroscience. 2003;3(4):309–322. doi: 10.3758/cabn.3.4.309.

- Peltier S, Stilla R, Mariola E, LaConte S, Hu X, Sathian K. Activity and effective connectivity of parietal and occipital cortical regions during haptic shape perception. Neuropsychologia. 2007;45:476–483. doi: 10.1016/j.neuropsychologia.2006.03.003.

- Reed CL, Shoham S, Halgren E. Neural substrates of tactile object recognition: An fMRI study. Human Brain Mapping. 2004;21:236–246. doi: 10.1002/hbm.10162.

- Sakata H, Taira M, Murata A, Mine S. Neural mechanisms of visual guidance of hand action in the parietal cortex of the monkey. Cerebral Cortex. 1995;5:429–438. doi: 10.1093/cercor/5.5.429.

- Sawamura H, Georgieva S, Vogels R, Vanduffel W, Orban GA. Using functional magnetic resonance imaging to assess adaptation and size invariance of shape processing by humans and monkeys. The Journal of Neuroscience. 2005;25(17):4294–4306. doi: 10.1523/JNEUROSCI.0377-05.2005.

- Servos P, Lederman S, Wilson D, Gati J. fMRI-derived cortical maps for haptic shape, texture and hardness. Cognitive Brain Research. 2001;12:307–313. doi: 10.1016/s0926-6410(01)00041-6.

- Shikata E, Hamzei F, Glauche V, Knab R, Dettmers C, Weiller C, Buchel C. Surface orientation discrimination activates caudal and anterior intraparietal sulcus in humans: An event-related fMRI study. Journal of Neurophysiology. 2001;85(3):1309–1314. doi: 10.1152/jn.2001.85.3.1309.

- Shmuelof L, Zohary E. Dissociation between ventral and dorsal fMRI activation during object and action recognition. Neuron. 2005;47:457–470. doi: 10.1016/j.neuron.2005.06.034.

- Taira M, Mine S, Georgopoulos AP, Murata A, Sakata H. Parietal cortex neurons of the monkey related to the visual guidance of hand movement. Experimental Brain Research. 1990;83:29–36. doi: 10.1007/BF00232190.

- Tanabe HC, Kato M, Miyauchi S, Hayashi S, Yanagida T. The sensorimotor transformation of cross-modal spatial information in the anterior intraparietal sulcus as revealed by functional MRI. Cognitive Brain Research. 2005;22(3):385–396. doi: 10.1016/j.cogbrainres.2004.09.010.

- Thompson-Schill SL, Kan IP, Oliver RT. Functional neuroimaging of semantic memory. In: Cabeza R, Kingstone A, editors. The Handbook of Functional Neuroimaging of Cognition. 2006.

- Ungerleider LG, Mishkin M. Two cortical visual systems. In: Ingle D, Goodale M, Mansfield R, editors. Analysis of Visual Behavior. Cambridge, MA: MIT Press; 1982. pp. 549–586.

- van de Winckel A, Sunaert S, Wenderoth N, Peeters R, Van Hecke P, Feys H, et al. Passive somatosensory discrimination tasks in healthy volunteers: Differential networks involved in familiar versus unfamiliar shape and length discrimination. Neuroimage. 2005;26:441–453. doi: 10.1016/j.neuroimage.2005.01.058.

- van Westen D, Fransson P, Olsrud J, Rosen B, Lundborg G, Larsson E-M. Fingersomatotopy in area 3b: an fMRI study. BMC Neuroscience. 2004;5(28):1–6. doi: 10.1186/1471-2202-5-28.

- Weisberg J, Van Turennout M, Martin A. A neural system of learning about object function. Cerebral Cortex. 2006;17:513–521. doi: 10.1093/cercor/bhj176.

- Wilson M. MRC Psycholinguistic Database: Machine usable dictionary (Version 2.00) [Online] 1987 Available: http://www.psy.uwa.edu/au/mrcdatabase/uwa_mrc.htm.

- Worsley KJ, Friston KJ. Analysis of fMRI time-series revisited-again. Neuroimage. 1995;2:173–181. doi: 10.1006/nimg.1995.1023.

- Zarahn E, Aguirre GK, D’Esposito M. Empirical analyses of BOLD fMRI statistics: I. Spatially unsmoothed data collected under null-hypothesis conditions. Neuroimage. 1997;5:179–197. doi: 10.1006/nimg.1997.0263.

- Zhang M, Weisser VD, Stilla R, Prather SC, Sathian K. Multisensory cortical processing of object shape and its relation to mental imagery. Cognitive Affective and Behavioral Neuroscience. 2004;4:251–259. doi: 10.3758/cabn.4.2.251.