Abstract

A Microsoft® Excel-based workbook designed for research analysts to use in a national study was retooled for treatment program directors and financial officers to allocate, analyze, and estimate outpatient treatment costs in the U.S. This instrument can also be used as a planning and management tool to optimize resources and forecast the impact of future changes in staffing, client flow, program design, and other resources. The Treatment Cost Analysis Tool (TCAT) automatically provides feedback and generates summaries and charts using comparative data from a national sample of non-methadone outpatient providers. TCAT is being used by program staff to capture and allocate both economic and accounting costs, and outpatient service costs are reported for a sample of 70 programs. Costs for an episode of treatment in regular, intensive, and mixed types of outpatient treatment were $882, $1,310, and $1,381, respectively (based on 20% trimmed means and 2006 dollars). An hour of counseling cost $64 in regular, $85 in intensive, and $86 in mixed programs. Group counseling hourly costs per client were $8, $11, and $10, respectively, for regular, intensive, and mixed programs. Future directions include use of a web-based interview version, much like some of the commercially available tax preparation software tools, and extensions for use in other modalities of treatment.

Keywords: Treatment costs, Cost analysis, Economic evaluation, Substance abuse treatment

1. Introduction

The past decade has seen considerable methodological advancement in cost analysis and the economic study of addiction in the United States. Several sound and proven methods have been developed and used to help structure and standardize estimates of substance abuse treatment services and their costs. These have included a micro-costing method for treatment services (Anderson et al., 1998), the Drug Abuse Treatment Cost Analysis Program (DATCAP; French et al., 1997), a Center for Substance Abuse Treatment (CSAT) Uniform System of Accounting and Cost Reporting (USACR; Capital Consulting, 1998) for substance abuse treatment programs and an accompanying costing tool, the Substance Abuse Treatment Cost Allocation and Analysis Template (SATCAAT; Harwood and McCliggott, 1998, October), the Cost, Procedure, Process, and Outcome Analysis (CPPOA; Yates, 1996, 1999), the Alcohol Drug Services Study (ADSS) Cost Data Audit Instrument (CDAI; Beaston-Blaakman et al., 2007b; Horgan et al., 2001; Shepard et al., 2000; Substance Abuse and Mental Health Services Administration, 2003), and the Substance Abuse Services Cost Analysis Program (SASCAP; Zarkin et al., 2004). Each has been used successfully in research studies to provide reliable estimates of treatment costs. However, cost data can serve a dual role, answering broad research and policy questions on the one hand and program management questions on the other (Cartwright, 2008). At present, research applications are better developed than management applications. Indeed, with the exception of the Yates (Yates, 1996, 1999) CPPOA methodology, existing approaches to cost data collection were all designed to be used by trained project staff to capture data for research studies. The CPPOA is distinct in that it was designed for use by individuals with varying levels of education and backgrounds. 
As a consequence, many of these other developments in the economic arena have not been readily available to practitioners for their direct use.

Cartwright (2008) reviews several costing methods, discussing their strengths, weaknesses, and applications. His review notes that the ADSS approach relies on a short instrument (making the burden on respondents smaller), but still employs economic concepts such as the costs of volunteer time. These features make the approach appealing for use in large-scale data collection efforts. They also give it the potential to serve a program management function as well as collecting research data. In ADSS, the greatest emphasis was placed on collecting labor costs. Staff time and wages, including the value of volunteer time, were captured for a range of personnel categories. Labor expenses constitute the bulk of costs for many programs, justifying detailed measurement in this area (Drummond et al., 2005). Nonetheless, some other cost contributors are worth considering. For example, when programs are operated by a broader parent organization, the administrative overhead (or indirect expenses) passed on to the program can be substantial (Zarkin et al., 2004). Ignoring these costs could lead to undervaluing the resources programs are using. Likewise, non-personnel costs, including buildings and equipment, can vary among programs. Capturing large capital expenses can be particularly challenging because of the need to consider costs over the product’s useful lifespan (Drummond et al., 2005). The Treatment Cost Analysis Tool (TCAT), the focus of this paper, includes explicit assessment of these areas. These are structured such that they provide a framework and some assistance to users who may be less familiar with the relevant costing concepts.

The purpose of this paper is to report costs from a large sample of outpatient drug-free (ODF) programs operating in four distinct regions in the U.S., to describe in detail the technical functions of the TCAT, and to illustrate the use of this costing tool. These cost estimates were developed by program personnel using the tool as part of a study funded by the National Institute on Drug Abuse. The goal of the parent project, in which the TCAT was first used, is to develop an information system that treatment programs can use to monitor their organizational health.

2. Method

2.1. Instrumentation

Using the ADSS CDAI as a starting point, the Treatment Cost Analysis Tool (TCAT) was developed with the intention of serving both research and management functions. The purpose of the TCAT is to collect, analyze, and report outpatient substance abuse treatment client volume and cost data including the allocation of overhead (indirect) costs associated with an administrative or parent agency. Administrative or parent agency overhead is obtained using provider estimates of the proportion of parent resources used by the program targeted in the cost analysis, combined with the dollar value of parent agency administrative costs (i.e., excluding all direct service program costs). The cost analysis tool is designed to be self-administered by program financial staff (or sometimes program directors) to capture these data for research studies and build a data set for economic analyses. Beyond research purposes, another purpose of the TCAT is to serve as a management tool to help community providers estimate their costs of service provision, compare their costs to other outpatient programs, and conduct planning and forecasting by using the tool to estimate the impact of “what if” cost scenarios by examining alternative business practices such as different client volume and staffing patterns.
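The overhead allocation described above can be illustrated with a short calculation. The following Python sketch is not taken from the TCAT workbook; the function names and dollar figures are hypothetical, and it assumes only what the text states: the program's share of overhead is the parent agency's administrative costs multiplied by the provider's estimate of the proportion of parent resources the program uses.

```python
def allocated_overhead(parent_admin_costs: float, share_used: float) -> float:
    """Parent administrative cost attributed to one program.

    parent_admin_costs: parent agency administrative costs, excluding
        all direct service program costs.
    share_used: provider's estimate of the proportion (0 to 1) of parent
        resources used by the program being analyzed.
    """
    if not 0.0 <= share_used <= 1.0:
        raise ValueError("share_used must be a proportion between 0 and 1")
    return parent_admin_costs * share_used


def full_program_cost(direct_costs: float, parent_admin_costs: float,
                      share_used: float) -> float:
    """Direct program costs plus the allocated share of parent overhead."""
    return direct_costs + allocated_overhead(parent_admin_costs, share_used)


# Hypothetical figures, for illustration only.
print(full_program_cost(200_000.0, 400_000.0, 0.25))  # 300000.0
```

Keeping the allocation in its own function makes it simple to rerun the analysis with a different estimated share, which is the kind of "what if" use the tool is intended to support.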

Key features include consistency checking, programmed calculations, and reporting functions in tabular and graphic formats, all within a single automated Microsoft Excel®-based workbook that uses linked worksheets. Currently, the TCAT uses inflation-adjusted data from the ADSS outpatient sample (n = 222) in bar charts so that managers can compare their cost data with those of other outpatient programs in a national sample. As experience and data from the TCAT and other cost analyses accumulate, new comparison data can be easily entered into the TCAT data table that is used to automatically generate these graphical comparison charts.

The TCAT allows treatment program personnel (rather than just research staff) to estimate accounting and economic treatment costs. Accounting costs include explicit monetary values associated with inputs used to provide treatment such as staff wages, fringe benefits, facility costs, other non-personnel costs, and so forth (Domino et al., 2005), which are standardized through a chart of accounts that serves as the foundation for a structured accounting system (Cartwright, 2008). Economic costs for treatment programs may differ from accounting costs because they also subsume some implicit costs such as the market value for donated goods and services used by a program (French et al., in press) including facilities and capital resources, and volunteers and unpaid interns. Although scientific research studies are still a primary motivator for measuring these costs, they can be used for administrative and management purposes as well.

This cost analysis tool was developed collaboratively by a team of colleagues from Texas Christian University and Brandeis University, as part of the Treatment Cost and Organizational Monitoring project (TCOM; Flynn et al., 2008). It was included in an information system for investigating program structures, operations, climate, resources, and costs as they are related to treatment service delivery. Within this multifaceted context, the cost assessment needed to produce reliable estimates at a reasonable expense and level of effort (see Luce et al., 1996). Building the TCAT upon the heritage of the ADSS approach was a promising way to meet these needs. However, the TCAT extends the level of detail for measuring costs for some essential treatment resources.

The TCAT also is distinct in that it incorporates analysis directly into the instrument, making it an analytic tool rather than simply a data collection device. It relies on the built-in facilities of the spreadsheet software for mathematical computation and graphical presentation for automating the cost analysis process. Table 1 provides an overview of the data entry and reporting elements. As users complete the TCAT, internal calculations provide results and diagnostic information. Specifically, the tool uses seven spreadsheets for data input. These capture information on expenditures concerning staff and payroll (or volunteer time), non-personnel items (including capital costs and donations), and any overhead allocations from a managing parent organization. Data on client volume and counseling activities also are measured. An additional, optional page or worksheet is included to assist in determining annualized costs for capital purchases (such as buildings or computer equipment). These input pages are linked to a series of seven output pages. Four pages present analytic results including unit costs (e.g., cost per episode or per enrolled day) and breakdowns across cost categories (e.g., personnel and non-personnel percentages). Three pages present comparative bar charts. (Analytic output is discussed in more detail below.)

Table 1.

TCAT Data Entry and Reporting Components

| Topic Area | Sample Elements | Possible Sources |
|---|---|---|
| Data entry | | |
| Parent organization | Total costs of parent agency; administrative costs of parent (excluding all direct service program costs); estimated proportion of parent resources used by the program of interest | Annual report |
| Program finances | Total costs; total revenue | Annual report |
| Clients and clinical activity | Annual admission and discharge/inactive counts; number of active clients (point prevalence); average length of stay; client capacity; individual counseling sessions attended per client per week; average number of clients per group therapy and educational sessions; group sessions attended per client per week; average group session length; average number of counselors per group session | Program MIS |
| Personnel costs | Number of staff by position (including volunteers and unpaid interns); average hourly rate by position; average number of hours worked per week by position; fringe benefit rate | Payroll reports |
| Capital costs | Costs or market value for capital resources (e.g., computer systems, buildings & facilities); expected useful life; general depreciation estimates (if applicable) | Accounting records; IRS depreciation guidelines |
| Non-personnel costs | Cost or market value of administrative items, dietary, housekeeping/laundry, medical care, laboratory, client transportation, rent/interest, taxes, insurance, etc. | Accounting records |
| Key results and comparisons (built-in) | | |
| Unit costs | Average costs per treatment episode, per enrolled client day, per counseling hour, and per group counseling hour per client | TCAT data entry pages |
| Personnel cost analysis | Total labor costs; total wages; total fringe benefits; added economic costs of volunteers and unpaid interns; labor costs as a percentage of total costs; percentage breakdown of labor costs for counselors, medical staff, and administration | TCAT data entry pages |
| Non-personnel cost analysis | Total non-personnel costs; non-personnel costs as a percentage of total costs | TCAT data entry pages |
| Benchmark comparisons | Bar charts compare respondent program to outpatient non-methadone ADSS sample (n = 222) on percentage distribution of personnel costs by type of staff, unit costs (per enrollment day, cost per counseling hour, and cost per group counseling hour per client), and hourly wages by position categories | TCAT data entry pages; built-in ADSS summary data^a |

^a Alcohol and Drug Services Study; Substance Abuse and Mental Health Services Administration, 2003.

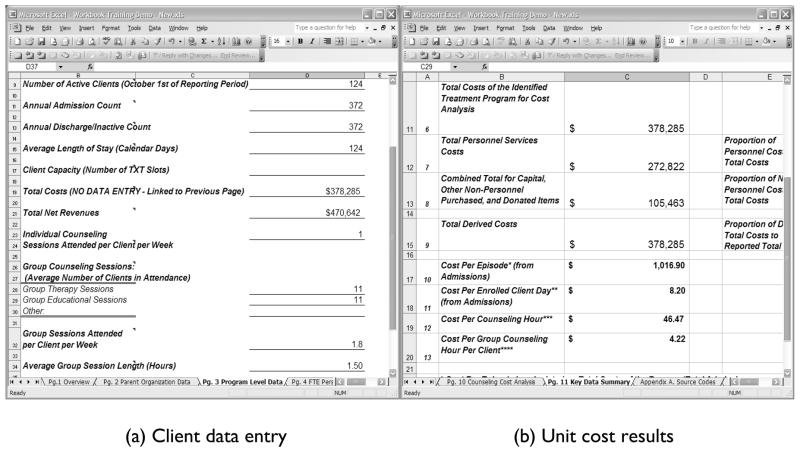

Figure 1 illustrates these input-output links within the TCAT. The left panel (Figure 1a) shows a portion of the data entry page concerning client volume and counseling activity. The analyst types appropriate values for client admissions, discharges, length of stay, and so forth in the cells of the spreadsheet (based on program records from the financial reporting period of interest). The right panel (Figure 1b) shows part of the summary page with several key cost analysis results. Values shown are generated internally by the tool. Pre-programmed calculations produce unit costs in Figure 1b (e.g., cost per episode) from values entered in Figure 1a (e.g., admissions and total costs). The analyst spends his or her time in monitoring and interpreting the analysis, and if necessary modifying the inputs, rather than in performing mathematical operations.

Figure 1.

Treatment Cost Analysis Tool (TCAT) sample pages.
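To make the link between the data entry page and the summary page concrete, the sketch below computes two of the unit costs from entered values. It is a minimal illustration, not the workbook's actual formulas: the text confirms only that cost per episode is derived from admissions and total costs, so the enrolled-day formula (total cost divided by active clients times 365) is an assumption, and all names and figures are hypothetical.

```python
DAYS_PER_YEAR = 365


def cost_per_episode(total_annual_cost: float, annual_admissions: float) -> float:
    """Average cost of one treatment episode, using admissions as the episode count."""
    return total_annual_cost / annual_admissions


def cost_per_enrolled_day(total_annual_cost: float, active_clients: float) -> float:
    """Average cost of one enrolled client day.

    Assumption: enrolled days per year are approximated as the active
    client count multiplied by 365.
    """
    return total_annual_cost / (active_clients * DAYS_PER_YEAR)


# Hypothetical program: $365,000 total cost, 400 admissions, 100 active clients.
print(cost_per_episode(365_000.0, 400))       # 912.5
print(cost_per_enrolled_day(365_000.0, 100))  # 10.0
```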

A variety of built-in “consistency checks” assist users while entering data and interpreting results. Inconsistencies can reflect data entry errors or unusual program operations that might threaten the validity of conclusions. These checks involve constructing and comparing parallel estimates from separate data elements, and using ranges drawn from prior research to identify unusual cases. For example, estimates of the average number of active clients in a program are constructed from annual admissions and discharges, and then these are compared with the point prevalence count. Specifically, multiplying the number of annual client admissions (or discharges) by the average length of stay and dividing by 365 produces a close approximation to the point prevalence count. Based on field experience, these 3 values should be within about 25% of one another; larger discrepancies may indicate an error in data entry, or a highly variable census. These admission- and discharge-based client counts, as well as the point prevalence count, are used in estimating occupancy rates (i.e., each count is divided by licensed slots, with expected occupancy rates of 70–100%). Cost per episode of treatment and cost per enrolled client day are also calculated using all 3 estimators (with values expected to range within 20% of one another). These consistency checks are viewed as both technical and methodological strengths that allow for reconciliation of discrepant data. The use of parallel estimates with expected ranges helps to ensure the collection of high quality data that can be checked during data entry by program staff, as well as later by research analysts after completion and submission of the Excel workbooks. Without consistency checks, opportunities to identify questionable data and reconcile discordant entries may be missed.
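The census and occupancy checks described above can be sketched in a few lines of Python. This is an illustrative reconstruction rather than the workbook's own logic. The class and function names are hypothetical, and because the text does not say exactly how "within about 25%" is measured, the sketch assumes the gap between the largest and smallest estimate is taken relative to the smallest.

```python
from dataclasses import dataclass

DAYS_PER_YEAR = 365
MAX_CENSUS_SPREAD = 0.25        # estimates should agree within about 25%
OCCUPANCY_RANGE = (0.70, 1.00)  # expected occupancy of 70-100%


@dataclass
class ClientVolume:
    annual_admissions: float
    annual_discharges: float
    avg_length_of_stay_days: float
    point_prevalence: float     # active clients counted on a single day
    licensed_slots: float


def census_estimates(v: ClientVolume) -> dict[str, float]:
    """Three parallel estimates of the average number of active clients."""
    return {
        "admissions": v.annual_admissions * v.avg_length_of_stay_days / DAYS_PER_YEAR,
        "discharges": v.annual_discharges * v.avg_length_of_stay_days / DAYS_PER_YEAR,
        "point_prevalence": v.point_prevalence,
    }


def relative_spread(values) -> float:
    """Gap between the largest and smallest value, relative to the smallest.

    Assumption: the source does not define how the 25% band is computed.
    """
    lo, hi = min(values), max(values)
    return (hi - lo) / lo


def consistency_flags(v: ClientVolume) -> list[str]:
    """Return warnings for review; an empty list means nothing was flagged."""
    flags = []
    estimates = census_estimates(v)
    if relative_spread(estimates.values()) > MAX_CENSUS_SPREAD:
        flags.append("census estimates differ by more than 25%")
    low, high = OCCUPANCY_RANGE
    for name, count in estimates.items():
        occupancy = count / v.licensed_slots
        if not low <= occupancy <= high:
            flags.append(f"occupancy from {name} is {occupancy:.0%}, outside 70-100%")
    return flags


# Hypothetical entries, for illustration only.
print(consistency_flags(ClientVolume(300, 280, 100, 85, 100)))
print(consistency_flags(ClientVolume(300, 280, 100, 150, 100)))
```

A flag does not necessarily mean the data are wrong. As noted above, it may reflect a data entry error or a program with a highly variable census, so flagged cases are candidates for follow-up rather than automatic exclusion.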

The set of automatically-generated analytic results within the TCAT allows users to see the product of the cost analysis procedure immediately. These can be helpful even to experienced analysts working in the field. They also offer some benefits of cost analysis to treatment program managers, even if they do not know all the computational details. Several different pages are included that range in level of detail. For example, the “key data summary” features a table listing important unit costs and a percentage breakdown of personnel versus non-personnel expenditures, whereas the “personnel cost analysis” explores personnel costs by job category and distinguishes wages, fringe benefits, and the cost of volunteers. For comparative purposes, three bar charts present both the program and ADSS (Substance Abuse and Mental Health Services Administration, 2003) data (inflated to the current year) for personnel cost breakdown, unit costs, and staff wages. These give program personnel an additional reference point for interpreting their service costs.

2.2. Program sample

Outpatient drug-free (ODF) treatment programs participating in the TCOM parent project provided data for the cost study. The program sample was not selected randomly, nor was it intended to be representative of the ODF population of treatment programs in the U.S. Inclusion criteria were used to provide a consistent definition of “an organization,” and to ensure sufficient amounts of data. Specifically, participating providers had to be primarily non-methadone, outpatient drug abuse treatment programs (although they could be embedded in the criminal justice or mental health system), and they had to have at least three clinical staff members. A quota-sampling plan was developed to provide adequate coverage of various program types (e.g., for-profit and not-for-profit business models, and varying levels of care) and geographic regions (Southeast, Great Lakes, Gulf Coast, and the Pacific Northwest). Regions were defined in conjunction with the Addiction Technology Transfer Centers (ATTCs; including the Southern Coast ATTC, Great Lakes ATTC, Gulf Coast ATTC, and Northwest Frontier ATTC). Each ATTC assisted with program recruitment and a target of approximately 25 programs was set for each region.

Overall, 115 programs from 9 states (Florida, Idaho, Illinois, Louisiana, Ohio, Oregon, Texas, Washington, and Wisconsin) enrolled in TCOM and provided initial descriptive data during 2004 and 2005. Of these, 94 participated in the first of three years of staff and client survey data collection (in 2005). Among the 21 non-participating programs, 6 closed between the time of initial data collection and the first-year surveys, 3 were undergoing significant reorganization, 2 were rebuilding following Hurricane Katrina, and 10 withdrew from the study. Of the 94 programs participating in survey data collection, 82 completed TCATs and e-mailed them to the project office as part of the 2005 field data collection effort. Seventy were usable for the present study. Twelve TCATs were not used: 2 came from programs that started or significantly changed operations during the fiscal year (1 new startup and 1 acquired in a merger), 2 were incomplete and the additional information could not be obtained, and 8 had discrepancies in client or financial data that could not be reconciled.

2.3. Data collection procedures

Data collection focused on assembling cross-sectional views of program operations, costs, staff, and clients in each of three years. The first wave went to the field in 2005, and the second and third waves followed at approximately one-year intervals. Program structure also was assessed upon enrollment in the project, and was updated in subsequent years, using the Survey of Structure and Operations (SSO; Knight et al., 2008). Staff completed the Survey of Organizational Functioning (SOF; Broome et al., 2007b) and clients completed the Client Evaluation of Self and Treatment (CEST; Joe et al., 2002), but these data are not used in the present study. Finally, program costs were evaluated using the TCAT. A program official (usually a financial officer or program director) completed the TCAT based on program records from the most recently completed fiscal year, and then project staff reviewed the files. A callback strategy (i.e., telephone and e-mail contact) was used to verify out-of-range, inconsistent, and missing data. Prior to data collection, program directors and financial officers from the participating outpatient non-methadone programs received a full day of training covering the use and interpretation of project instruments. Half of the day was spent on the TCAT, including simulated use of the cost workbook. On average, program personnel reported that it took 6 hours to complete the workbooks, including gathering, compiling, and entering data. All participation in the project was voluntary, and the research protocol and procedures for informed consent were approved by the TCU Institutional Review Board.

3. Results

Table 2 describes the 70 programs in the sample. One-third offered only “regular” outpatient services (defined as less than 6 hours of structured programming each week); another 13% offered only “intensive” services (defined as a minimum of 2 hours of structured programming on at least 3 days each week; Substance Abuse and Mental Health Services Administration, 2007). The remainder, about half the programs (54%), was of a “mixed” type that included both regular and intensive tracks that could not be separated administratively. Forty-one percent of the programs were accredited by either the Joint Commission on the Accreditation of Healthcare Organizations (JCAHO) or the Commission on Accreditation of Rehabilitation Facilities (CARF). Most were private, not-for-profit programs (71%) and were affiliated with a larger parent organization (86%). Programs varied considerably in their size, with almost half (49%) having an active client count of 50 or fewer and 9% having more than 300 clients.

Table 2.

Program sample characteristics

| Characteristic (n = 70) | Percent |
|---|---|
| Level of Care | |
| Regular | 33 |
| Intensive | 13 |
| Mixed (regular & intensive) | 54 |
| JCAHO/CARF accredited | 41 |
| Ownership | |
| Private, Not-for-profit | 71 |
| Private, For-profit | 21 |
| Public | 7 |
| Affiliation with larger parent organization | 86 |
| Size (active client count) | |
| 1–50 | 49 |
| 50–100 | 21 |
| 100–150 | 7 |
| 150–200 | 6 |
| 200–250 | 3 |
| 250–300 | 4 |
| 300+ | 9 |

Client volume and cost information are presented in Table 3 for each of the three major types of ODF programs (regular, intensive, and mixed). Costs reported here are inflated to 2006 U.S. dollar values using the Consumer Price Index for “services by other medical professionals” (Bureau of Labor Statistics, n.d.), to maximize their relevance. On average, regular outpatient programs tended to be moderately sized (average active client count of 85.5 and 275.4 annual admissions), with a typical length of stay just over 3 months (104.4 days). Consistent with their more specialized focus, intensive programs tended to be relatively small (averaging an active client count of 33.0 and annual admissions of 210.2) and to last about 2 months (58.7 days). Programs with mixed regular and intensive tracks tended to be the largest (with an average active client count of 140.2 and annual admissions of 458.8) and their length of stay was approximately 4 months (126.2 days). The relative size and duration of these programs also have implications for their costs.
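The inflation adjustment mentioned above follows the usual price-index ratio: a cost observed in one year is multiplied by the target-year index divided by the source-year index. The Python sketch below illustrates the arithmetic only. The function name is hypothetical, and the index values are placeholders chosen for easy arithmetic, not actual Bureau of Labor Statistics figures.

```python
def inflate(cost: float, index_source_year: float, index_target_year: float) -> float:
    """Express a cost in target-year dollars using a price-index ratio."""
    if index_source_year <= 0 or index_target_year <= 0:
        raise ValueError("price index values must be positive")
    return cost * index_target_year / index_source_year


# Placeholder index values (NOT real CPI data): 200.0 in the fiscal year
# in which costs were recorded, 210.0 in the target year.
print(inflate(1_000.0, 200.0, 210.0))  # 1050.0
```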

Table 3.

Program Characteristics and Costs by Outpatient Treatment Type

| | Regular (n = 23): Mean | Regular: SD | Intensive (n = 9): Mean | Intensive: SD | Mixed (n = 38): Mean | Mixed: SD |
|---|---|---|---|---|---|---|
| Active client count | 85.5 | 106.8 | 33.0 | 36.5 | 140.2 | 172.7 |
| Annual admissions | 275.4 | 285.2 | 210.2 | 185.1 | 458.8 | 523.3 |
| Annual discharges | 252.7 | 288.5 | 200.4 | 185.2 | 449.8 | 564.8 |
| Average length of stay (days) | 104.4 | 42.4 | 58.7 | 15.1 | 126.2 | 61.4 |
| Occupancy rate | 94% | 87% | 75% | 41% | 63% | 26% |
| Licensed slots | 101.1 | 133.4 | 41.3 | 48.2 | 203.1 | 201.3 |
| Total annual cost | $241,011 | $286,026 | $253,018 | $215,397 | $543,381 | $525,239 |
| 20% Trimmed mean | $162,761 | | $196,450 | | $406,526 | |
| Personnel/Labor | $174,883 | $199,140 | $177,552 | $150,522 | $372,333 | $354,330 |
| 20% Trimmed mean | $127,590 | | $141,657 | | $279,871 | |
| Non-Personnel | $67,689 | $94,572 | $75,244 | $77,117 | $167,991 | $176,133 |
| 20% Trimmed mean | $37,449 | | $51,486 | | $118,456 | |
| Treatment episode | $926 | $404 | $1,277 | $358 | $2,535 | $4,955 |
| 20% Trimmed mean | $882 | | $1,310 | | $1,381 | |
| Enrolled day | $9 | $3 | $23 | $7 | $18 | $25 |
| 20% Trimmed mean | $9 | | $23 | | $11 | |
| Counseling hour | $80 | $55 | $88 | $30 | $121 | $157 |
| 20% Trimmed mean | $64 | | $85 | | $86 | |
| Group counseling hour per client | $10 | $6 | $11 | $3 | $14 | $14 |
| 20% Trimmed mean | $8 | | $11 | | $10 | |

The cost data in Table 3 are summarized in two ways: using the conventional mean and standard deviation, and using the trimmed mean. Trimmed means are computed by excluding a predetermined number of the largest and smallest values in the data (Winer, 1971), in order to describe the central tendency of the main body of the distribution. They are therefore less sensitive to the extreme values that sometimes occur with financial data. The trimmed means in Table 3 exclude the highest 20% of cases and the lowest 20%. That degree of trimming usually strikes a good balance between the goals of controlling outliers and using the data efficiently (see Wilcox, 1998 for an introductory discussion).
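For readers unfamiliar with the statistic, a 20% trimmed mean can be sketched as follows. This Python example is illustrative and is not the code used to produce Table 3. In particular, the text does not state how the number of trimmed cases is rounded when 20% of the sample is not a whole number; the sketch assumes it is rounded down. The cost values are hypothetical.

```python
import math


def trimmed_mean(values, proportion: float = 0.20) -> float:
    """Mean after dropping the lowest and highest `proportion` of cases.

    Assumption: the number of cases cut from each tail is rounded down.
    """
    if not 0.0 <= proportion < 0.5:
        raise ValueError("proportion must be in [0, 0.5)")
    ordered = sorted(values)
    if not ordered:
        raise ValueError("values must not be empty")
    k = math.floor(proportion * len(ordered))   # cases trimmed per tail
    kept = ordered[k:len(ordered) - k]
    return sum(kept) / len(kept)


# Hypothetical episode costs with one unusually expensive program.
costs = [100.0, 200.0, 300.0, 400.0, 10_000.0]
print(sum(costs) / len(costs))  # 2200.0 (ordinary mean)
print(trimmed_mean(costs))      # 300.0  (20% trimmed mean)
```

The example shows why the trimmed mean is useful for financial data: a single extreme case pulls the ordinary mean far above what is typical, while the trimmed mean stays close to the bulk of the programs.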

The trimmed means in Table 3 are typically lower than the corresponding sample means, reflecting the potential impact of a small number of programs with unusually high costs. Not surprisingly, the types of programs showed variations in their overall costs. Of most relevance to practitioners, however, are the unit costs, such as cost of a treatment episode or an enrolled day. The trimmed means suggest typical costs for the regular programs would be $882 for an episode and $9 on a daily basis. In contrast, the higher levels of contact in intensive programs yield estimates of $1,310 for an episode and $23 for a day of care. Finally, in mixed programs the (trimmed) average cost for an episode was $1,381, for a cost of $11 per day.

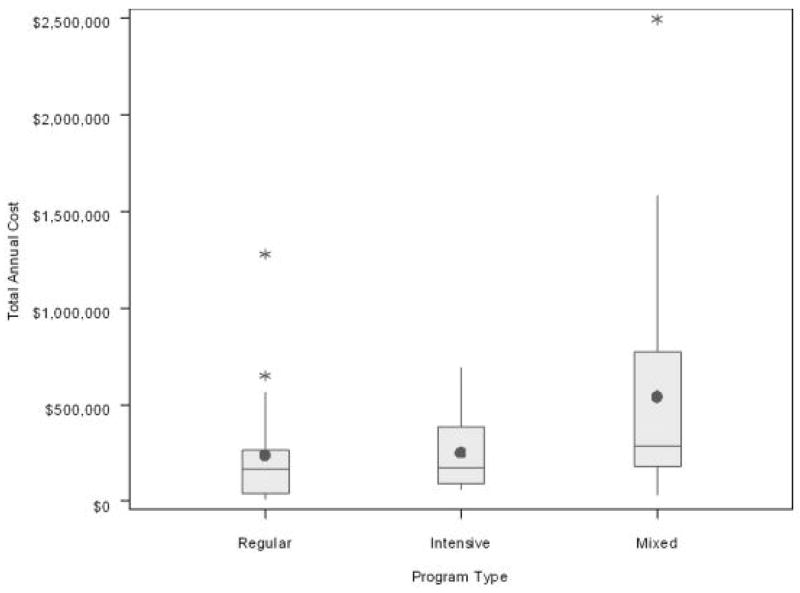

In addition to the differences between the three program types, the data suggest considerable differences within each type as well. These differences are hinted at by the magnitude of the standard deviations in Table 3, which are often substantial, especially for the mixed category. The situation is illustrated more clearly in Figure 2. The total annual costs for programs in each of the three types appear in box-and-whisker plots, showing the range of the data before trimming. The length of each box represents the middle 50% of programs (the “interquartile range”), and within each box the dot shows the sample mean and the horizontal line the sample median. The vertical lines, or “whiskers,” represent the upper and lower quarters of the distribution, out to a distance of 1.5 times the interquartile range; the asterisks show extreme programs that fall beyond that range. The plots show a substantial range of total cost figures among programs within both the regular and intensive types, that is, among programs offering the same general model of care. However, this situation is most extreme for the mixed programs. These programs are extraordinarily diverse in their configuration and, by extension, in their costs. More detailed study is clearly needed to explore the determinants of these costs.

Figure 2.

Total annual costs, in 2006 dollars, by outpatient program type.
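The elements of the box-and-whisker plots described above can be computed directly. The Python sketch below is a minimal illustration under stated assumptions: quartile definitions vary among statistical packages, and the "inclusive" method of the standard library is used here only as one reasonable choice, not necessarily the one used to draw Figure 2. The function name and the cost figures are hypothetical.

```python
import statistics


def box_plot_summary(values, whisker_factor: float = 1.5) -> dict:
    """Quartiles, whisker ends, and extreme cases for a box-and-whisker plot."""
    ordered = sorted(values)
    # Assumption: "inclusive" quartiles; other software may differ slightly.
    q1, median, q3 = statistics.quantiles(ordered, n=4, method="inclusive")
    iqr = q3 - q1
    lower_fence = q1 - whisker_factor * iqr
    upper_fence = q3 + whisker_factor * iqr
    inside = [x for x in ordered if lower_fence <= x <= upper_fence]
    return {
        "q1": q1,
        "median": median,
        "q3": q3,
        "mean": statistics.fmean(ordered),
        "whisker_low": inside[0],    # smallest case within 1.5 x IQR of the box
        "whisker_high": inside[-1],  # largest case within 1.5 x IQR of the box
        "extreme": [x for x in ordered if x < lower_fence or x > upper_fence],
    }


# Hypothetical total annual costs (in thousands of dollars).
summary = box_plot_summary([10, 20, 30, 40, 50, 60, 70, 80, 500])
print(summary["q1"], summary["median"], summary["q3"])
print(summary["extreme"])
```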

4. Discussion

The TCAT, a computerized and self-administered cost analysis tool for generating non-methadone outpatient substance abuse treatment service costs in the U.S., was used to allocate and analyze the full costs of treatment, including administrative overhead associated with a parent organization. Overall, seventy completed cost analyses from the first year of data collection generated the most current unit-of-service costs available for outpatient non-methadone treatment programs. With training and ongoing technical assistance as part of a reconciliation process, program staff (e.g., financial officers and program directors) can estimate and analyze the combined accounting and economic costs of treatment and generate important unit costs (e.g., cost per episode or per enrolled day) and breakdowns across cost categories (e.g., personnel and non-personnel percentages). Output from these analyses can be used for both program and research purposes. Distinct advantages of this cost analysis tool are the immediate feedback and comparative reporting functions that offer program personnel an additional reference point for interpreting their service costs. Also included are built-in consistency checks that provide opportunities to identify inconsistent data and rectify discrepant entries.

Lessons learned from earlier research-administered cost analyses were reinforced through the self-administered approach used in the TCAT. Collecting cost data is more complex and time-consuming than the methods typically used in other research field data collection efforts, such as cross-sectional surveys or self-administered questionnaires. Collecting quality cost data involves reconciliation, particularly when analyses indicate inconsistencies, potential data entry errors, and unusual program operations. This process requires time and effort in submitting, reviewing, responding to, and revising cost workbooks. However, the use of built-in “consistency checks,” such as constructing and comparing parallel estimates from separate data elements and using ranges drawn from prior research to identify unusual cases, helps facilitate progress toward attaining valid interpretations and conclusions.

Defining organizational units for analysis can be challenging given the different perspectives between community-based providers and researchers. In the present project, for pedagogical purposes, a family analogy was adopted to assist in defining and determining units of analyses or cost centers of interest. The administrative agency was identified as the “parent” and various treatment programs were categorized as “siblings,” such as the “A Street Methadone Clinic, B Street Outpatient Program, or C Street Residential House.” Use of this analogy was understandable, acceptable, and helpful in defining the sample and clearly identifying cost centers.

Early on, we discovered that some of the research design components had unintended but positive effects on program learning, which in some cases influenced managerial practices. For example, during a training session when participants were asked to identify cost centers according to types of services defined by the research team (i.e., regular and intensive), several program financial officers responded that they had not been keeping separate “books” for their intensive and regular programs/services, but they now saw a good reason to separate them. They asked if they could do so when they returned to their programs and then add these units to the study sample. Another unanticipated request came when several participants asked for advance copies of the TCAT for their own use outside of the study, for potential adaptation and use in other programs within their agencies.

Additional lessons and benefits came from developing new lines of communication and collaboration within participating programs. The TCAT requires input information or knowledge that is typically available from different sectors of a treatment agency: clinical information (such as client flow or length of stay) known by program/clinical directors, and financial information (such as total costs and labor costs) known by financial officers. Staff who performed these functions and who otherwise may have had very little contact were brought together in training sessions and subsequent collaborations while completing TCATs. Staff sometimes gained a better appreciation of one another’s perspective and information needs through these interactions. In some cases, agency financial staff were working from budgets that indicated different program staffing levels than were realized in clinics; when this occurred, clinical and financial staff were able to identify and rectify the discrepancies by working together.

Finally, the level of sophistication of financial systems varied substantially across the sample, and thus the amount of technical assistance needed with the cost tool also differed considerably. In addition, some individuals with a stronger business background saw an application for the TCAT as a planning and forecasting tool for program management. For example, a program director indicated the tool could be used for “what if” calculations to determine client flow patterns in relation to staffing, and the results could be used to negotiate additional counseling positions with a program’s parent or administrative agency.
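
A “what if” calculation of the kind the program director described might look like the following sketch. It is an illustration only and does not reproduce any TCAT worksheet: the function name, the inputs, and the steady-state assumption (average census equals weekly admissions multiplied by average weeks in treatment) are all hypothetical.

```python
import math


def counselors_needed(admissions_per_week, weeks_in_treatment,
                      counseling_hours_per_client_week,
                      counselor_hours_per_week):
    """Estimate counselor positions needed for a given client flow.

    Assumes a steady state, so that the average census is
    admissions per week times average weeks in treatment.
    """
    census = admissions_per_week * weeks_in_treatment
    weekly_demand = census * counseling_hours_per_client_week
    # Round up: a fractional position still requires another counselor.
    return math.ceil(weekly_demand / counselor_hours_per_week)


# Hypothetical scenario: what if admissions rise from 5 to 8 per week?
current_staffing = counselors_needed(5, 12, 1.0, 20)
projected_staffing = counselors_needed(8, 12, 1.0, 20)
```

Comparing the two results gives a program a concrete figure, here the number of additional positions implied by the higher admission rate, to bring to a negotiation with its parent agency.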

Limitations of the study include a non-random national sample that limits generalization to the broader outpatient treatment system in the U.S. However, as discussed by Knight and colleagues (2008), the sample was similar to the National Survey of Substance Abuse Treatment Services (N-SSATS; U.S. Department of Health and Human Services, 2006) sample for the participating regions, which was encouraging given that the sampling plan had to conform to funding constraints. To the best of our knowledge, ADSS (Substance Abuse and Mental Health Services Administration, 2003) was the first and only cost study to date in the U.S. to use a national sample to study the major treatment modalities, and using such a sample in the present study was beyond available resources. The only other large representative study was the one by Zarkin et al. (2004) that examined costs of methadone treatment. Because our sample was not nationally representative of outpatient treatment, caution should be used when generalizing from the results. Also, it should be noted that the TCAT measures costs from the perspective of the treatment program. Those wishing to conduct economic analyses from some other perspective, such as one that includes opportunity costs for client time, will require supplemental data.

Nonetheless, building upon the methodological foundations of the costing approach used in ADSS helps to make the TCAT a reasonable choice for assessing costs in future large-scale studies. ADSS relied on a relatively short and flexible instrument. Reduced length meant forgoing some detail, but that can be an appropriate trade-off when working with a large sample (Cartwright, 2008). Relative to the original ADSS instrument, the TCAT extends coverage of some cost components, particularly with regard to non-personnel costs and administrative overhead. However, it retains much of the flexibility and the computational elements of the original, which should make the TCAT useful in many of the same situations.

Another future direction is the use of an Internet version designed to approximate an interview format, similar to commercially available tax preparation software. Internet-based applications offer several advantages, including wider access, centralized data capture, and ease of maintenance and updates. Perhaps the greatest strength, however, is the capacity to customize and streamline the user experience to obtain detailed data with less burden. For example, collecting salary or wage information for each employee separately yields potentially more accurate results than collecting similar data on job categories (Beaston-Blaakman et al., 2007a). Unfortunately, providing individual information can mean extensive data entry and requires a lengthy instrument to accommodate the data. The TCAT’s Internet version (TCAT-I) addresses this challenge by displaying only as many entry rows as the program has staff members and by using drop-down lists to assist in data entry. Thus, the TCAT-I can achieve detail more conveniently.

Because cost analysis is the first step in economic evaluations and “only a partial form of economic appraisal” (Drummond et al., 2005, p. 2), the TCAT offers an opportunity to develop the data sets needed to conduct separate and subsequent economic investigations (e.g., cost-effectiveness and cost function analyses). Indeed, such analyses have already been produced from the TCAT and its predecessor, the CDAI (for example, see Beaston-Blaakman et al., 2007b; Broome et al., 2007a, 2008). Nor is the need for economic evaluation unique to the U.S. Nationalized or subsidized treatment systems also must allocate their budgets in a way that best supports outcomes, which can require monitoring and containing costs. The TCAT is available for use outside the U.S. with the caveat that adaptations may be needed depending upon the funding mechanisms, cost centers, and other treatment system differences that may not correspond with current practice in the U.S. Dollars can be easily converted to other currencies; however, as we have learned in our work in England and Italy, the ways in which substance abuse treatment services are delivered and funded are different from those in the U.S. Applications of the TCAT in countries other than the U.S. will potentially require changes in the scope of the tool, but some of the technology and methodology (e.g., consistency checking, programmed calculations, and reporting functions in tabular and graphic formats in a single Microsoft Excel®-based, automated workbook package using linked worksheets) should remain constant. For example, definitions of programs and units of analysis differ across countries, but once they have been clearly defined the TCAT can be modified for use in these settings.

In summary, the TCAT is a promising new costing tool that derives from established methodologies and reflects economic principles. In addition to its research applications, the potential for the TCAT to be used in a self-administered manner opens new possibilities for treatment programs to gain insight into their own costs and to use economic information to support management decision-making. Supporting researchers and practitioners in collecting and applying cost data is expected, in turn, to help improve the quality of care.

References

- Anderson DW, Bowland BJ, Cartwright WS, Bassin G. Service-level costing of drug abuse treatment. J Subst Abuse Treat. 1998;15:201–211. doi: 10.1016/s0740-5472(97)00189-x.

- Beaston-Blaakman A, Flynn PM, Reuben E, Shepard DS. Findings from a comparative study of brief cost methods in substance abuse treatment research. Addiction Health Services Research Conference; Athens, GA. 2007a.

- Beaston-Blaakman A, Shepard DS, Horgan C, Ritter G. Organizational and client determinants of cost in outpatient substance abuse treatment. Journal of Mental Health Policy and Economics. 2007b;1:3–13.

- Broome KM, Beaston-Blaakman A, Knight DK, Flynn PM. Organizational and clientele predictors of costs in outpatient drug abuse treatment. Economic models and methods; Symposium conducted at the annual Addiction Health Services Research Conference; Athens, GA. 2007a.

- Broome KM, Beaston-Blaakman A, Knight DK, Flynn PM. Organizational and clientele predictors of costs in outpatient drug abuse treatment. 2008. Manuscript submitted for publication.

- Broome KM, Flynn PM, Knight DK, Simpson DD. Program structure, staff perceptions, and client engagement in treatment. J Subst Abuse Treat. 2007b;33:149–158. doi: 10.1016/j.jsat.2006.12.030.

- Bureau of Labor Statistics. 2006 Consumer Price Index detailed report tables. n.d. Retrieved August 15, 2008 from http://www.bls.gov/cpi/cpi_dr.htm#2006.

- Capital Consulting. Measuring the cost of substance abuse treatment services: An overview. Center for Substance Abuse Treatment, Substance Abuse and Mental Health Services Administration; Rockville, MD: 1998.

- Cartwright WS. A critical review of accounting and economic methods for estimating the costs of addiction treatment. J Subst Abuse Treat. 2008;34:302–310. doi: 10.1016/j.jsat.2007.04.011.

- Domino M, Morrissey JP, Nadlicki-Patterson T, Chung S. Service costs for women with co-occurring disorders and trauma. J Subst Abuse Treat. 2005;28:135–143. doi: 10.1016/j.jsat.2004.08.011.

- Drummond MF, Sculpher MJ, Torrance GW, O’Brien B, Stoddart GL. Methods for the economic evaluation of health care programmes. Oxford University Press; Oxford, UK: 2005.

- Flynn PM, Knight DK, Broome KM, Simpson DD. Integrating treatment costs with organizational and client functioning. 2008. Manuscript submitted for publication.

- French MT, Dunlap LJ, Zarkin GA, McGeary KA, McLellan AT. A structured instrument for estimating the economic cost of drug abuse treatment: The Drug Abuse Treatment Cost Analysis Program (DATCAP). J Subst Abuse Treat. 1997;14:445–455. doi: 10.1016/s0740-5472(97)00132-3.

- French MT, Popovici I, Tapsell L. The economic costs of substance abuse treatment: Updated estimates and cost bands for program assessment and reimbursement. J Subst Abuse Treat. doi: 10.1016/j.jsat.2007.12.008. in press.

- Harwood HJ, McCliggott D. Unit costs and substance abuse treatment (Newsletter published by the Association for Health Services Research). Connection. 1998 October;5:8.

- Horgan CM, Reif S, Ritter GA, Lee MT. Organizational and financial issues in the delivery of substance abuse treatment services. In: Galanter M, editor. Recent developments in alcoholism: Volume 15 Services research in the era of managed care. Kluwer Academic/Plenum Publishers; New York: 2001. pp. 9–29.

- Joe GW, Broome KM, Rowan-Szal GA, Simpson DD. Measuring patient attributes and engagement in treatment. J Subst Abuse Treat. 2002;22:183–196. doi: 10.1016/s0740-5472(02)00232-5.

- Knight DK, Broome KM, Simpson DD, Flynn PM. Program structure and counselor-client contact in outpatient substance abuse treatment. Health Serv Res. 2008;43:616–634. doi: 10.1111/j.1475-6773.2007.00778.x.

- Luce BR, Manning WG, Siegel JE, Lipscomb J. Estimating costs in cost-effectiveness analysis. In: Gold MR, Siegel JE, Russell LB, Weinstein MC, editors. Cost-effectiveness in health and medicine. Oxford University Press; New York: 1996. pp. 176–213.

- Shepard DS, Hodgkin D, Anthony Y. Analysis of hospital costs: A manual for managers. World Health Organization, Health Systems Development Program; Geneva, Switzerland: 2000.

- Substance Abuse and Mental Health Services Administration. The ADSS Cost Study: Costs of substance abuse treatment in the specialty sector. Office of Applied Studies; Rockville, MD: 2003.

- Substance Abuse and Mental Health Services Administration. National Survey of Substance Abuse Treatment Services (N-SSATS): 2006. Office of Applied Studies; Rockville, MD: 2007.

- U.S. Department of Health and Human Services. National Survey of Substance Abuse Treatment Services (N-SSATS), 2005. Inter-University Consortium for Political and Social Research; Ann Arbor, MI: 2006.

- Wilcox RR. How many discoveries have been lost by ignoring modern statistical methods? Am Psychol. 1998;53:300–314.

- Winer BJ. Statistical principles in experimental design. McGraw-Hill, Inc.; New York: 1971.

- Yates BT. Analyzing costs, procedures, processes, and outcomes in human services. Sage; Thousand Oaks, CA: 1996.

- Yates BT. Measuring and improving cost, cost-effectiveness, and cost-benefit for substance abuse treatment programs. National Institute on Drug Abuse; Bethesda, MD: 1999.

- Zarkin GA, Dunlap LJ, Homsi G. The substance abuse services cost analysis program (SASCAP): A new method for estimating drug treatment services costs. Eval Program Plann. 2004;27:35–43.