Abstract

Background

Bayesian unsupervised learning methods have many applications in the analysis of biological data. For example, for the cancer expression array datasets presented in this study, they can be used to resolve possible disease subtypes and to indicate statistically significant dysregulated genes within these subtypes.

Results

In this paper we outline a marginalized variational Bayesian inference method for unsupervised clustering. In this approach latent process variables and model parameters are allowed to be dependent. This is achieved by marginalizing the mixing Dirichlet variables and then performing inference in the reduced variable space. An iterative update procedure is proposed.

Conclusion

Theoretically and experimentally we show that the proposed algorithm gives a much better free-energy lower bound than a standard variational Bayesian approach. The algorithm is computationally efficient and its performance is demonstrated on two expression array data sets.

Background

Unsupervised clustering methods from machine learning are very appropriate in extracting structure from biological data sets. There has been extensive work in this direction using hierarchical clustering analysis [1], K-Means clustering [2] and self-organizing maps [3]. Bayesian methods are an effective alternative since they provide a mechanism for inferring the number of clusters. They can easily incorporate priors which penalise over-complexed models which would fit to noise and they allow probabilistic confidence measures for cluster membership. In this paper, we focus on Bayesian models which use Dirichlet priors. Examples of these models include Latent Dirichlet Allocation [4] (LDA) for use in text modeling and Latent Process Decomposition (LPD) [5] for analysis of microarray gene expression datasets. One appealing feature of the latter models is that each data point can be stochastically associated with multiple clusters. One approach to model inference is to use methods such as Markov Chain Monte Carlo and Gibbs sampling. However, for the large datasets which occur in many biomedical applications these methods can be too slow for certain tasks such as model selection. This motivates our interest in computationally efficient variational inference methods [4-6].

Typically, these inference methods posit that all the latent variables and model parameters are independent of each other (i.e. a fully factorized family) which is a strong assumption. In this paper we propose and study an alternative inference method for LPD, which we call marginalized variational Bayesian (MVB). In this approach the latent process (cluster) variables and model parameters are allowed to be dependent on each other. As we will show in the next section, this assumption is made feasible by marginalizing the mixing Dirichlet variables, and then performing inference in the reduced variable space. This new approach to constructing an LPD model theoretically and experimentally provides much better free-energy lower bounds than standard a variational Bayes (VB) approach [6,7]. Moreover, the algorithm is computationally efficient and converges faster, as we demonstrate with experiments using expression array datasets.

Methods

The LPD probabilistic model

We start by recalling LPD [5]. Let d index samples, g the genes (attributes) and k the soft clusters (samples are represented as combinatorial mixtures over clusters). The numbers of clusters, genes and samples are denoted , , and respectively. For each data Ed, we have a multiple process (cluster) latent variable Zd = {Zdg: g = 1,..., } where each Zdg is a -dimensional unit-basis vector, i.e., choosing cluster k is represented by Zdg, k = 1 and Zdg, j = 0 for j ≠ k, otherwise. Given the mixing coefficient θd, the conditional distribution of Zd is given by . The conditional distributions, given the latent variables, is given by , where is the Gaussian distribution with mean μ and precision β.

Now we introduce conjugate priors over parameters θ, μ, β. Specifically, we choose p(θd) = Dir(θd|α), and , and p(β) distributed as ∏gk Γ(βgk|a0, b0) where Γ is defined by . We assume the data is i.i.d. and let Θ = {μ, β }. The joint distribution is given by

| (1) |

One can easily see that the marginal likelihood p(E|Θ) is the same as that in [5]. It is important to note that, in standard Gaussian mixture models [8], each data point is only related with a -dimensional latent variable which restricts the data to being in one cluster. Instead, in LPD each data point Ed is associated with multiple latent variables Zd = {Zdg: g = 1,..., }, and thus Ed is stochastically associated with multiple clusters.

Marginalized variational Bayes

In this section we describe a marginalized variational Bayesian approach for LPD. The target of model inference is to compute the posterior distribution p(θ, Z, Θ |E) = p(E, θ, Z|Θ)p(Θ)/p(E). Unfortunately, this involves computationally intensive estimation of the integral in the evidence p(E). Hence, we approximate the posterior distribution in a hypothesis family whose element are denoted by q(θ, Z, Θ).

The standard variational bayesian method [7,10] uses the equality:

| (2) |

Our optimization target is to maximize the free-energy: which, equivalently, minimizes the KL-divergence. One standard way is to choose the hypothesis family in a factorized form q(θ, Z, Θ) = q(θ)q(Z)q(Θ). In this setting, the free-energy lower bound (2) for the likelihood can be written as:

| (3) |

In this paper we study an alternative approach motivated by [9] which only marginalizes the latent variable θ and do variational inference only with respect to the leftover latent variable Z. In essence, we assume that the latent variables θ can be dependent on Z, Θ and the hypothesis family is chosen in the form of q(θ, Z, Θ) = q(θ |Z, Θ)q(Z)q(Θ). Since the distribution q(θ|Z, Θ) is arbitrary, let it be equal to . Putting this into equation (2) and observing that gives

| (4) |

| (5) |

Therefore, it is sufficient to maximize the lower bound

| (6) |

Observe that log . Consequently, we see that

| (7) |

As mentioned above, since θ can be dependent on Z, Θ, marginalized VB (MVB) yields a tighter lower bound for the likelihood than the standard VB approach in [6], thus potentially yielding better clustering results.

Model inference and learning

We now turn our attention to the derivation of updates for marginalized VB following the inference methodology [7,10]. For simplicity, let the posterior distribution q(Z), q(μ), q(β) be indexed by parameters. Specifically, we assume that , , and q(β) = ∏g, k Γ(βgk|agk, bgk). Correspondingly, the free-energy lower bound ℒ(q(Z), q(Θ)) in equation (6) becomes a variational functional over these parameters, and hence we use ℒ(R, μ, β) later on. The model inference can be summarized by the following coordinate ascent updates.

Let Z\dg denote the random variables excluding Zdg. For any d, g let Θ and Z\dg be fixed, then we take the functional derivative of the free-energy ℒ(q(Z), q(Θ)) w.r.t. q(Zdg) and obtain the update:

| (8) |

For the updates for q(Θ), we obtain

| (9) |

Marginalizing out θ in (1) yields

| (10) |

Estimating the expectations of the log likelihoods in equations (8) and (9), we derive the variational EM-updates as follows. Details are postponed to the Appendix.

E-step: using equation (8) and denoting the digamma function by ψ, we have

| (11) |

where Ndg, k is given by 0.5(ψ(agk)+log bgk) - 0.5agkbgk((Edg - mgk)2 + ) and rdg, k should be normalized to one over k.

M-step: using equation (9):

| (12) |

| (13) |

| (14) |

| (15) |

We pursue the above iterative procedure until convergence of the lower bound ℒ(R; Θ) whose evaluation is given in the Appendix. Since Zdg, k determines the cluster for the observed data point Ed at attribute g and rdg, k is its expectation, we intuitively assign data Ed to cluster arg max{∑grdg;k: k = 1,...., }. We can also do model selection over the number of clusters based on a free energy lower bound of the marginalized VB. Experiments in the next section show that this approach is reasonable.

Results

We ran marginalized VB on three data sets. The first was the wine data set from the UCI Repository [11]: this has 178 samples and each sample has 13 features. This data set was chosen for the purpose of validating the proposed method since there are 3 distinct clusters present (derived from 3 cultivars). As more biologically relevant examples we then selected two cancer expression array datasets. The first of these was a lung cancer data set [12] consisting of 73 samples and 918 features. The second was a leukemia data set [13] with 90 samples and 500 features. All the data sets were normalized to zero mean and unit variance and the hyper-parameters m0, v0, a0, and b0 were chosen to have the same values in both standard VB and marginalized VB. Since the datasets are normalized and m0, v0 are hyper-parameters of the Gaussian prior distribution over the mean for the data, it is reasonable to choose m0 = 0, v0 = 1. For similar reasons, given a0, b0 are hyper-parameters of the Gamma prior distribution over the precision (inverse variance) of the data and the mean of a Gamma distributed random variable is a0b0, we chose a0 = 20 and b0 = 0.05 throughout these experiments.

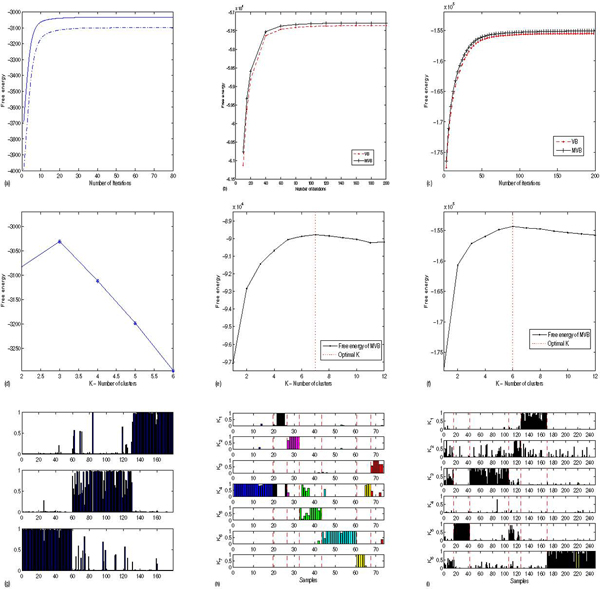

First we compared the free energy lower bound of marginalized VB and standard VB based on 30 random initialization. In Figure 1 (top row) we observe an improvement in the free energy as a function of iteration step, for marginalized VB over standard VB. In analogy to standard VB, marginalized VB can determine the appropriate number of soft clusters by estimating the free energy bound given by equation (6) in contrast to the hold-out cross-validation procedure for a maximum likelihood approach to LPD [5]. To investigate the effectiveness of this approach to model selection, free energies were averaged over 20 runs from different random initializations. As shown in Figure 1 (middle row), marginalized VB performed well in determining the correct number of clusters (three) in the UCI wine data set. For the cancer array datasets, the peak in the averaged free energy is less marked with an indication of six soft clusters for the leukemia data set and seven clusters for the lung cancer data set.

Figure 1.

Results for the wine data set (left column), lung cancer data set (middle column) and leukemia data set (right column). Top row (a-c): free energy bounds comparison (upper curve:MVB, lower curve:VB). Middle row (d-f): free energy (y-axis) versus , the number of clusters. Bottom row (g-i): the normalized ∑grdg, k gives a confidence measure that sample d belongs to a cluster k. For the two cancer datasets, samples separated by dashed lines belong to an identified class e.g. adenocarcinoma samples or small cell lung cancer samples (figure (h), see text).

In the bottom row of Figure 1, we see that marginalized VB shows quite promising clustering results using the normalized ∑grdg, k: these peaks indicate the confidence in the allocation of the dth sample to the kth cluster and accord well with known classifications. The lung cancer dataset of Garber et al [12] (middle column, Figure 1) consisted of 73 gene expression profiles from normal and tumour samples with the tumours labelled as squamous, large cell, small cell and adenocarcinoma. The samples are in the order in which they are presented in the original paper [12] with the dashed lines showing their original principal sample groupings. As with Garber et al [12] we identified seven clusters in the data with the adenocarcinoma samples falling into three separate clusters with strong correlation with clinical outcomes. For their ordering (which we follow) samples 1–19 belong to adenocarcinoma cluster 1, samples 20–26 belong to adenocarcinoma cluster 2, samples 27–32 are normal tissue samples, samples 33–43 are adenocarcinoma cluster 3, samples 44–60 are squamous cell carcinomas, samples 61–67 are small cell carcinomas and samples 68–73 are from large cell tumours.

As our last example, we applied the proposed MVB method to an oligonucleotide microarray dataset from 360 patients with acute lymphoblastic leukemia (ALL) from Yeoh et al [13]. ALL is known to have a number of subtypes with variable responses to chemotherapy. In many cases fusion genes are implicated in the genesis of the disease. For the Yeoh et al [13] dataset, samples were drawn from leukemias with rearrangements involving BCR-ABL, E2A-PBX1, TEL-AML1, rearrangements of MLL gene, hyperdiploid karyotope (more than 50 chromosomes) and T lineage leukemias (T-ALL). The free energy is plotted in Figure 1 (right column, middle row) with a peak suggesting 6 subtypes. The dashed lines represent the original demarcations of groups according to known genetic rearrangement. Samples 1–15 are BCR-ABL, 16–42 are E2A-PBX1, 43–106 Hyperdiploid > 50, 107–126 MLL, 206–248 T-ALL, 249–327 TEL-AML1, 328–335 Group23 and 127–205 are labelled as Others. Some groupings, such as E2A-PBX1, are very distinct. However, the overall groupings are not as well defined as with lung cancer.

Conclusion

We have proposed an efficient variational Bayesian inference method for LPD probabilistic models. By allowing the variables to be dependent on each other, the method can provide more accurate approximation than standard VB. Also, the method provides a principled approach to model selection via the free energy bound. Promising clustering results were also reported on lung cancer and leukemia data sets. Extensions of this method to semi-supervised clustering will be reported elsewhere.

Competing interests

The authors declare that they have no competing interests.

Authors' contributions

YY and CC conceived the method and drafted the manuscript. PL and YY coded the algorithm and implemented the experiments. All authors read and approved the final manuscript.

Appendix

In this appendix we derive the EM-updates and free energy bound for MVB.

Derivation of updates

Noting that, for any d, g, ∑kZdg, k = 1 and denoting the number of features by we obtain from equation (10):

| (16) |

Since , putting this observation into the log p(E, Z|Θ) yields:

where constant terms are independent of Zdg, k. Hence, substituting this into equation (8) we conclude that

| (17) |

To estimate the expectation of the Normal distribution, we use the following observations (e.g. [7]) for the Gamma and Normal distributions:

and

Consequently, simple manipulation yields:

equals, up to a constant term:

| (18) |

We also use approximating methods [9] to estimate log . For this purpose, we observe, for any positive random variable x, that

and , . Plugging the above observations into equation (17) yields the desired E-step updates.

For the updates for q(Θ), the updates are essentially the same as those in [6,7] since the associated terms with variables with Θ in [log p(E, Z|Θ)] are exact the same, that is, Θ only appears in the Normal distribution. Hence, noting that the product of two Gamma (Normal) distributions is a Gamma (Normal) distribution, we can obtain, from equations (16) and (9), the M-step updates.

Free energy bound

The free-energy lower bound of marginalized VB is defined by equation (6):

From the fact that Γ(x + 1) = xΓ(x) for any x > 0, we know that , where we use the convention . Putting this equation into the expression (10) of log likelihood, we obtain:

Since we used the convention , . It remains to estimate the term for g = 1,..., - 1. To this end, we use the approximation (18) again to get:

Consequently, we conclude:

| (19) |

where the convention is used again.

In addition,

For the KL divergences, we have:

and

Acknowledgments

Acknowledgements

This work is supported by EPSRC grant EP/E027296/1.

This article has been published as part of BMC Proceedings Volume 2 Supplement 4, 2008: Selected Proceedings of Machine Learning in Systems Biology: MLSB 2007. The full contents of the supplement are available online at http://www.biomedcentral.com/1753-6561/2?issue=S4.

Contributor Information

Yiming Ying, Email: enxyy@bris.ac.uk.

Peng Li, Email: enxpl@bris.ac.uk.

Colin Campbell, Email: C.Campbell@bris.ac.uk.

References

- Eisen MB, et al. Cluster analysis and display of genome-wide expression patterns. Proc Natl Acad Sci USA. 1998;95:14863–14868. doi: 10.1073/pnas.95.25.14863. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Tavazoie S, et al. Systematic determination of genetic network architecture. Nature Genetics. 1999;22:281–285. doi: 10.1038/10343. [DOI] [PubMed] [Google Scholar]

- Tamayo P, et al. Interpreting patterns of gene expression with self-organizing maps: methods and application to hematopoietic differentiation. Proc Natl Acad Sci USA. 1999;96:2907–2912. doi: 10.1073/pnas.96.6.2907. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Blei DM, Ng AY, Jordan MI. Latent Dirichlet Allocation. Journal of Machine Learning Research. 2003;3:993–1022. doi: 10.1162/jmlr.2003.3.4-5.993. [DOI] [Google Scholar]

- Rogers S, Girolami M, Campbell C, Breitling R. The Latent Process Decomposition of cDNA Microarray Datasets. IEEE/ACM Transactions on Computational Biology and Bioinformatics. 2005;2:143–156. doi: 10.1109/TCBB.2005.29. [DOI] [PubMed] [Google Scholar]

- Carrivick L, Campbell C. A Bayesian approach to the analysis of micoarray datasets using variational inference. Journal submission. 2007.

- Attias H. A variational Bayesian framework for graphical models. Advances in Neural Information Processing Systems. 2002;12:209–215. [Google Scholar]

- Bishop CM. Pattern recognition and machine learning. (Series: Information Science & Statistics), Springer; 2006. [Google Scholar]

- Teh YW, Newman D, Welling M. A collapsed variational bayesian inference algorithm for latent dirichlet allocation. Advances in Neural Information Processing Systems. 2006;19:1353–1360. [Google Scholar]

- Jordan MI, Ghahramani Z, Jaakkola T, Saul LK. An introduction to variational methods for graphical models. Machine Learning. 1999;37:183–233. doi: 10.1023/A:1007665907178. [DOI] [Google Scholar]

- Blake CL, Newman DJ, Hettich S, Merz CJ. UCI repository of machine learning databases. 1998. http://www.ics.uci.edu/~mlearn/mlrepository.html

- Garber E, et al. Diversity of gene expression in adenocarcinoma of the lung. Proc Natl Acad Sci U S A. 2001;98:13784–13789. doi: 10.1073/pnas.221451398. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Yeoh E-J, et al. Classification, subtype discovery, and prediction of outcome in pediatric acute lymphoblastic leukemia by gene expression profiling. Cancer Cell. 2002;1:133–143. doi: 10.1016/S1535-6108(02)00032-6. [DOI] [PubMed] [Google Scholar]