Abstract

Many drugs of abuse produce changes in impulsive choice, that is, choice for a smaller–sooner reinforcer over a larger–later reinforcer. Because the alternatives differ in both delay and amount, it is not clear whether these drug effects are due to the differences in reinforcer delay or amount. To isolate the effects of delay, we used a titrating delay procedure. In Phase 1, 9 rats made discrete choices between a single food pellet delivered after a variable delay (1 or 19 s, equal probability of each) and a single food pellet delivered after a titrated delay. The computer titrated the latter delay until the rats were indifferent between the two options. This indifference delay was used as the starting value for the titrating delay for all future sessions. We next evaluated the acute effects of nicotine (subcutaneous 1.0, 0.3, 0.1, and 0.03 mg/kg) on choice. If nicotine increases delay discounting, it should have increased preference for the variable delay. Instead, nicotine had very little effect on choice. In Phase 2, the titrated-delay alternative produced three food pellets instead of one, while the variable-delay (1 s or 19 s) alternative continued to produce one pellet. Under this procedure, nicotine increased preference for the one-pellet alternative. Nicotine-induced changes in impulsive choice are therefore likely due to differences in reinforcer amount rather than differences in reinforcer delay. In addition, it may be necessary to include an amount sensitivity parameter in any mathematical model of choice when the alternatives differ in reinforcer amount.

Keywords: risk, impulsive choice, reinforcer delay, reinforcer amount, nicotine, lever press, rats

Given a choice between two reinforcers, the preferred reinforcer is said to have a greater “value.” Prediction of choice depends upon identifying the function that accurately describes how different variables contribute to reinforcer value. Several mathematical models of choice have been proposed for that purpose (e.g., Bateson & Kacelnik, 1995; Davison, 1988; Gibbon, Church, & Fairhurst, 1988; Grace, 1993; Herrnstein, 1970; Hursh & Fantino, 1973; Killeen, 1968; 1982; Mazur, 1984). An assumption of most of these models is that the value of a reinforcer decreases as the delay to that reinforcer increases. The mathematical function that describes this relation is typically referred to as a delay discounting function. One such model that has been very successful in describing and predicting choices in discrete trial situations is Mazur's (1987) hyperbolic discounting function:

$$V = \frac{A}{1 + kD} \tag{1}$$

where V represents the current value of a delayed reinforcer, A represents the reinforcer amount, D represents the delay to the reinforcer, and k is a free parameter which reflects the rate at which the reinforcer loses value with increases in delay.

One prediction of Equation 1 is preference for a smaller–sooner reinforcer over a larger–later reinforcer, when the delay of the latter is such that its value is relatively lower than that of the former. The point at which this happens is determined in part by how quickly reinforcer value declines with increasing delay, which is reflected by the parameter k. Increases in k reflect decreases in the delay at which the large reinforcer would be equally preferred to the smaller–sooner reinforcer. Thus, k is frequently referred to as a measure of impulsive choice. It is also often described as a measure of “delay discounting,” because it indicates the rate at which a reinforcer's value decreases as delay increases.
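The inverse relation between k and the indifference delay can be made explicit with a short derivation. It assumes Equation 1 has Mazur's (1987) hyperbolic form, V = A/(1 + kD); the subscripts S (smaller–sooner) and L (larger–later) are notation introduced here for illustration. Setting the two values equal and solving for the delay to the larger reinforcer gives:

$$\frac{A_S}{1 + kD_S} = \frac{A_L}{1 + kD_L} \quad\Longrightarrow\quad D_L = \frac{A_L}{A_S}\,D_S + \frac{A_L - A_S}{k\,A_S}$$

For example, with one pellet delayed 1 s versus three pellets, the indifference delay is D_L = 3 + 2/k seconds. The second term shrinks as k grows, so a larger k implies a shorter delay at which the larger reinforcer is equally preferred.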

If an experimental manipulation produces an increase in the rate of delay discounting, this should also affect an animal's choices between reinforcers delivered after fixed versus variable delays (which we will refer to as fixed/variable choice). Given a choice between one response alternative that delivers a single reinforcer either immediately or after a delay 2t (with equal probability of each) and another alternative that delivers a single reinforcer after a fixed delay t, the alternative producing the variable delays will be preferred (Bateson & Kacelnik, 1995; Cicerone, 1976; Mazur, 1984, 1986; Pubols, 1958). This result is predicted by Equation 1: For any positive value of k, the average of V for an immediate reinforcer and V for a reinforcer with a delay of 2t is greater than V for a reinforcer with a delay of t (see Mazur, 1984, for a more complete explanation). Equation 1 also predicts that as k increases, the value of the variable alternative increases relative to the fixed alternative. Thus, any experimental manipulation that increases the rate of delay discounting (k) should increase preference for variable delays over fixed delays.
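The two predictions in this paragraph can be checked numerically. The following Python sketch assumes Equation 1 has the hyperbolic form V = A/(1 + kD); the function names and the illustrative values of k and t are hypothetical and are not taken from the study.

```python
def hyperbolic_value(amount, delay, k):
    """Equation 1 (assumed form): V = A / (1 + k * D)."""
    return amount / (1.0 + k * delay)


def variable_value(amount, delays, k):
    """Mean of Equation 1 over equally likely delays."""
    return sum(hyperbolic_value(amount, d, k) for d in delays) / len(delays)


def variable_to_fixed_ratio(k, t=10.0, amount=1.0):
    """Value of the variable option (delay 0 or 2t) relative to a fixed delay t."""
    variable = variable_value(amount, [0.0, 2.0 * t], k)
    fixed = hyperbolic_value(amount, t, k)
    return variable / fixed


if __name__ == "__main__":
    # Illustrative k values only; they are not estimates from any data set.
    for k in (0.05, 0.2, 1.0):
        print(f"k = {k}: variable/fixed = {variable_to_fixed_ratio(k):.3f}")
```

With t = 10 s, the ratio is above 1 for every positive k tried and grows with k (1.125 at k = 0.05 and 1.8 at k = 0.2), which is the pattern the paragraph describes: the variable alternative is preferred, and more strongly so as discounting steepens.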

According to Equation 1, an environmental or pharmacological manipulation that alters delay discounting (k) should affect choice in both fixed/variable choice procedures and impulsive choice procedures. The critical advantage of a fixed/variable procedure is experimental control: The choice alternatives can be made to differ in delay only, whereas in impulsive choice procedures, one alternative differs from the other in both reinforcer amount and delay. For example, Dallery and Locey (2005) used an adjusting delay procedure in which a computer adjusted the delay to a three-pellet reinforcer until it was equally preferred to a one-pellet reinforcer delayed 1 s. Then, twice per week they administered a different dose of nicotine and found that nicotine produced dose-dependent increases in preference for the smaller–sooner reinforcer (i.e., dose-dependent increases in impulsive choice). The critical question is whether these increases in impulsive choice were due to an increase in delay discounting (as represented by an increase in k in Equation 1), a decrease in amount sensitivity (i.e., a decrease in the effect of differences in amounts between the smaller–sooner and larger–later reinforcer), or some combination of both.

The present study was conducted to assess the effects of nicotine on choice when two response alternatives differ in delay only or in both delay and amount. A fixed/variable choice procedure similar to that used by Mazur (1984) was used to assess the effects of acute nicotine administration on choice controlled by delay. In the first phase, equal reinforcer amounts (one pellet) were available on each alternative. The procedure identified the titrated delay that was equally preferred to the variable delay, i.e., the indifference point between the two alternatives. This indifference point was then used as the initial titrating delay in all subsequent sessions. According to Equation 1, if nicotine increased the rate of delay discounting, the proportion of choices for the titrating alternative should have decreased. In the second phase, the reinforcer amounts differed between the alternatives (one pellet on the variable-delay alternative and three pellets on the titrated-delay alternative). If nicotine increased delay discounting, nicotine should have produced decreases in choice for the titrating delay in both phases of the experiment. If, instead, nicotine decreased amount sensitivity, nicotine should not have affected fixed/variable choice with equal reinforcer amounts (Phase 1) but should have produced an increase in preference for the small reinforcer (a decrease in preference for the titrating delay) with different reinforcer amounts (Phase 2).

Method

Subjects

Fourteen Long-Evans hooded male rats (Harlan, Indianapolis, IN) were housed in separate cages under a 12∶12 hr light/dark cycle with continuous access to water. Each rat was maintained at 80% of its free-feeding weight as determined at postnatal day 150. Twelve of the 14 rats were experimentally naïve, while Rats 100 and 103 had extensive histories with similar choice procedures.

Apparatus

Seven experimental chambers (30.5 cm long × 24 cm wide × 29 cm high) in sound-attenuating boxes were used. Each chamber had two nonretractable levers (2 cm long × 4.5 cm wide), 7 cm from the chamber floor. Each lever required a force of approximately 0.30 N to register a response. A 5 cm × 5 cm × 3 cm food receptacle was located 3.5 cm from each of the two levers and 1.5 cm from the chamber floor. The food receptacle was connected to an automated pellet dispenser containing 45-mg Precision Noyes food pellets (Formula PJPPP). Three horizontally aligned lights (0.8 cm diameter), separated by 0.7 cm, were centered 7 cm above each lever. From left to right, the lights were colored red, yellow, and green. A ventilation fan within each chamber and white noise from an external speaker masked extraneous sounds. A 28-V yellow house light was mounted 1.5 cm from the ceiling on the wall opposite the intelligence panel. Med-PC™ hardware and software controlled data collection and experimental events.

Procedure

Training

Lever pressing was initially trained on an alternative fixed ratio (FR) 1 random time (RT) 100-s schedule. The houselight was turned on for the duration of each training session. Training trials began with the onset of all three left lever lights. In the initial trial, both levers were active so that a single response on either lever resulted in immediate delivery of one food pellet. The RT schedule was initiated at the beginning of each trial so that a single pellet was delivered, response-independently, approximately every 100 s. Both response-dependent and response-independent food deliveries were accompanied by the termination of all three lever lights. After a 2-s feeding period, the lights were illuminated and a new trial began. After two consecutive presses of one lever, that lever was deactivated until the other lever was pressed. After a total of 60 food deliveries, the session was terminated. Training sessions were conducted for 1 week, at the end of which all response rates were above 10 per minute.

After lever pressing was established, the rats were exposed to 1 week of lever-alternation training. During these sessions, all three lights above each active lever flashed on a 0.3 s on/off cycle. Sessions consisted of 30 blocks of two trials. In the first trial of each block, both levers were active. In the second trial of each block, the lever that the rat pressed in the preceding trial was deactivated and the lights above that lever were turned off. A single response to an active lever resulted in an immediate pellet delivery and a 2-s blackout during which all lights above both levers were extinguished.

Titrated Delay Procedure (prebaseline)

Following initial training, experimental sessions were conducted 7 days a week at approximately the same time every day. Sessions were preceded by a 10-min blackout period during which the chamber was dark and there were no programmed consequences for responses on either lever. At the end of the presession blackout period, the houselight, the green light above the left lever, and the red light above the right lever were illuminated. The left, green-lit lever was the “variable” lever, on which a single response resulted in a single pellet delivery after a variable delay: either 1 s (p = .5) or 19 s (p = .5). The right, red-lit lever was the titrated-delay lever. A single response on this lever resulted in a single pellet (in Phase 1) or three pellets (in Phase 2) delivered after a delay. Following a lever press, the lights above both levers were extinguished and the appropriate delay period was initiated. During the delay period, the light corresponding to the pressed lever flashed 10 times. The flash intervals were equally spaced and determined by the duration of the delay period such that there were 11 equal-interval off periods, the last of which terminated in food delivery rather than light illumination. The houselight was extinguished following the 2-s feeding period and remained off for the duration of the intertrial interval (ITI). The ITI was 60 s minus the duration of the previous delay, so each new trial began 60 s after the preceding choice. Immediately following the ITI, a new trial began with the onset of the houselight; the green, left-lever light; and the red, right-lever light. Each session consisted of 60 trials.

The titrated delay began at 10 s in the first session. Each response on the variable lever decreased the delay by 10% during the ensuing trial, to a minimum of 1 s. Each response on the titrated lever increased the delay by 10% during the ensuing trial. If the same lever was pressed in two consecutive choice trials, that lever was deactivated in the ensuing trial. The light corresponding to a deactivated lever was not illuminated and responses on that lever had no programmed consequences. Responses during these forced trials had no effect on the value of the titrated delay. In subsequent sessions, the titrated delay began at the final value from the preceding session.
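The titration rule can be sketched in Python as follows. This is an illustration and not the Med-PC program used in the study: the function and constant names are hypothetical, and the sketch assumes that the run of identical choices resets after each forced trial, a detail the description leaves open.

```python
MIN_DELAY_S = 1.0  # floor on the titrated delay stated in the procedure
STEP = 0.10        # 10% change after each free-choice trial


def next_titrated_delay(delay, choice, forced=False):
    """Return the titrated delay for the ensuing trial.

    choice is "variable" or "titrated"; forced trials leave the delay unchanged.
    """
    if forced:
        return delay
    if choice == "variable":
        return max(MIN_DELAY_S, delay * (1.0 - STEP))
    if choice == "titrated":
        return delay * (1.0 + STEP)
    raise ValueError(f"unknown choice: {choice!r}")


def run_trials(start_delay, free_choices):
    """Replay free choices, inserting a forced trial after two identical choices.

    Returns (log, final_delay); each log entry is
    (trial_type, lever, titrated_delay_in_effect_on_that_trial).
    Assumption: the count of identical choices resets after a forced trial.
    """
    delay = start_delay
    log = []
    previous = None
    for choice in free_choices:
        log.append(("free", choice, delay))
        delay = next_titrated_delay(delay, choice)
        if choice == previous:
            # The repeated lever is deactivated, so the other lever is forced.
            other = "titrated" if choice == "variable" else "variable"
            log.append(("forced", other, delay))
            delay = next_titrated_delay(delay, other, forced=True)
            previous = None
        else:
            previous = choice
    return log, delay
```

Starting from 10 s, two titrated-lever choices raise the delay to 11 s and then 12.1 s, a forced variable-lever trial leaves it at 12.1 s, and a subsequent free choice of the variable lever lowers it to about 10.89 s.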

This prebaseline condition continued for a minimum of 60 sessions and until the titrated delay was stable for seven consecutive sessions. Stability was determined based on the average titrated delay in each session according to three criteria. First, each of the seven-session averages had to be within 20% or 1 s of the average of those seven values. Second, the average of the first three-session averages and the average of the last three-session averages were required to be within 10% or 1 s of the seven-session average. Third, there could be no increasing or decreasing trend in average titrated delay across the final three sessions (i.e., three consecutive increases or decreases in the average titrated delay).
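A minimal Python sketch of these three criteria is given below. Two readings are assumptions and are not stated in the text: "within 20% or 1 s" is taken to mean whichever tolerance is larger, and the trend criterion is taken to mean that the last three session-to-session changes are not all in the same direction. The function names are hypothetical.

```python
def within_tolerance(value, reference, pct, abs_s=1.0):
    """True if value is within pct of reference or within abs_s seconds of it."""
    return abs(value - reference) <= max(pct * abs(reference), abs_s)


def is_stable(session_means):
    """Apply the three prebaseline stability criteria to the last seven sessions.

    session_means holds the average titrated delay (s) of each session, in order.
    """
    if len(session_means) < 7:
        return False
    last7 = list(session_means[-7:])
    mean7 = sum(last7) / 7.0

    # Criterion 1: each session average within 20% or 1 s of the seven-session mean.
    c1 = all(within_tolerance(x, mean7, 0.20) for x in last7)

    # Criterion 2: means of the first three and last three sessions
    # within 10% or 1 s of the seven-session mean.
    first3 = sum(last7[:3]) / 3.0
    last3 = sum(last7[-3:]) / 3.0
    c2 = within_tolerance(first3, mean7, 0.10) and within_tolerance(last3, mean7, 0.10)

    # Criterion 3 (assumed reading): the last three session-to-session changes
    # are not all increases and not all decreases.
    changes = [b - a for a, b in zip(last7[-4:-1], last7[-3:])]
    c3 = not (all(c > 0 for c in changes) or all(c < 0 for c in changes))

    return c1 and c2 and c3
```

The 60-session minimum and the 100-session cap described in the text would be enforced by the caller and are not handled by this sketch.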

Once stability was achieved, the average titrated delay for the seven stable sessions was considered the indifference point: the titrated delay that was equally preferred to the variable delay used in this procedure. If the stability criteria were not met within 100 sessions (Rats 136, 139, and 140 in Phase 1 and Rats 139 and 161 in Phase 2), the median of the average titrated delays for the last 20 sessions was considered the indifference point. The one exception was Rat 118, which continued in the prebaseline condition for 212 sessions before reaching stability, as shown in Table 1. The indifference point determined during the prebaseline period was then fixed, and it was used as the initial delay value in all subsequent sessions within that phase.

Table 1.

Number of sessions and percentage of choices for the titrating-delay lever during the last 7 sessions for the pre-baseline and baseline conditions. Also, the indifference delay (in seconds) determined from the pre-baseline condition.

| Rat (Phase 1) | Pre-Baseline Sess. | Pre-Baseline Pref. | Baseline Sess. | Baseline Pref. | Ind. Delay (s) | Rat (Phase 2) | Pre-Baseline Sess. | Pre-Baseline Pref. | Baseline Sess. | Baseline Pref. | Ind. Delay (s) |
|---|---|---|---|---|---|---|---|---|---|---|---|
| 136 | 100ᵃ | 52.1% | 44 | 58.5% | 5.7 | 136 | 63 | 52.8% | 22 | 50.2% | 41.1 |
| 137 | 77 | 45.2% | 57 | 55.1% | 1.3 | 137 | 61 | 51.5% | 65 | 46.1% | 36 |
| 139 | 100ᵃ | 54.1% | 32 | 46.3% | 8 | 139 | 100ᵃ | 53.5% | 25 | 52.3% | 34.3 |
| 140 | 100ᵃ | 53.1% | 43 | 46.3% | 4.8 | 140 | 73 | 51.3% | 22 | 46.1% | 19.4 |
| 100 | 83 | 40.4% | 52 | 20.1% | 1.4 | 141 | 60 | 53.1% | 25 | 52.7% | 59.3 |
| 103 | 92 | 46.9% | 48 | 31.1% | 1 | 157 | 60 | 53.4% | 20 | 50.1% | 38.2 |
| 118 | 212 | 46.5% | 21 | 57.9% | 1.2 | 158 | 60 | 52.9% | 41 | 47.9% | 36.8 |
| 119 | 94 | 41.4% | 25 | 70.4% | 1.2 | 159 | 60 | 52.4% | 71 | 41.7% | 23.2 |
| 125 | 51 | 46.6% | 25 | 51.6% | 1.3 | 161 | 100ᵃ | 52.1% | 21 | 52.5% | 25.4 |

ᵃ Pre-baseline terminated without stability.

Baseline

For the first trial of each session, the value of the titrated delay started at the indifference delay determined for that particular rat. All other aspects of the procedure remained the same as described above. The baseline was continued for a minimum of 20 sessions and until the proportion of choices for the titrated alternative was stable. Stability was determined based on three criteria. First, each of the last seven-session choice proportions had to be within 10% of the average of those seven sessions. Second, the average of the first three-session choice proportions and the average of the last three-session choice proportions were required to be within 5% of the seven-session average. Finally, there could be no increasing or decreasing trend in choice proportions across the final three sessions (i.e., three consecutive increases or decreases in choice proportions).

Acute Drug Regimen

The same procedure described in the baseline was used throughout the drug regimen. Nicotine was dissolved in a potassium phosphate solution (1.13 g/L monobasic KPO4, 7.33 g/L dibasic KPO4, 9 g/L NaCl in distilled H2O; Fisher Scientific, Pittsburgh, PA) to adjust pH to 7.4. Subjects were administered nicotine by subcutaneous injection immediately prior to the 10-min presession blackout period. Doses were 1.0, 0.3, 0.1, and 0.03 mg/kg nicotine (Sigma Chemical Co., St. Louis, MO). Doses of nicotine were calculated as the base, and injection volume was based on body weight at the time of injection (1 ml/kg). Injections occurred twice per week (Wednesday and Sunday for Phase 1, Tuesday and Saturday for Phase 2). During each phase, rats experienced two complete sequences of doses in descending order with each sequence preceded by a vehicle injection. Rat 157 died following 1.0 mg/kg nicotine during the second acute sequence (of Phase 2). Therefore, only data from the first administration sequence are presented for Rat 157.

Phases 1 and 2

Both phases of the experiment consisted of all the posttraining procedures just mentioned (pre-baseline, baseline, and acute drug regimen). Phase 1 and Phase 2 were procedurally identical except for the number of pellets delivered as a consequence for choosing the titrated lever. During Phase 1, the reinforcer produced by the variable-delay lever and the titrated lever was a single pellet. Group A rats (n = 9, Rats 100, 103, 118, 119, 125, 136, 137, 139, and 140) were exposed to Phase 1, and 4 of those (Group B: Rats 136, 137, 139, and 140) continued to Phase 2. During Phase 2, the reinforcer amounts differed for the two alternatives. Choices for the titrated-delay lever resulted in three pellets instead of one (choices for the variable-delay lever still produced one pellet). Group B rats experienced all of Phase 1 before repeating the posttraining procedures with different reinforcer amounts (Phase 2). Five additional rats (Group C: Rats 141, 157, 158, 159, and 161) were also exposed to Phase 2. Group C rats only experienced the posttraining procedures under Phase 2 conditions.

Results

Table 1 shows the number of sessions each rat spent under (a) the experimental (pre-baseline) condition prior to the determination of an indifference delay and (b) the baseline condition prior to initiation of the drug regimen. Preference for the titrated lever (in percentage of choices for that lever during the final seven sessions of the condition) in each of those conditions is also shown. Finally, the indifference delay used for the baseline and subsequent conditions for each rat is also shown in Table 1. Data from Phase 1 are shown on the left and data from Phase 2 are shown on the right.

Figure 1 shows the average latency to make a choice as a function of dose, during acute administration in both Phase 1 (closed circles) and Phase 2 (open circles). Latency was measured as the time from the onset of the stimulus lights (at the beginning of each trial) to the first response on either lever. Consistent with previous research (Dallery & Locey, 2005), only the largest dose of nicotine had any substantial effect on choice latencies relative to vehicle and control sessions.

Fig. 1.

Average latency to make a response as a function of dose during Phase 1 (closed circles) and Phase 2 (open circles). Note logarithmic y-axis. “C” and “V” indicate control (no injection) and vehicle (potassium phosphate) injection, respectively. Vertical lines represent standard errors of the mean.

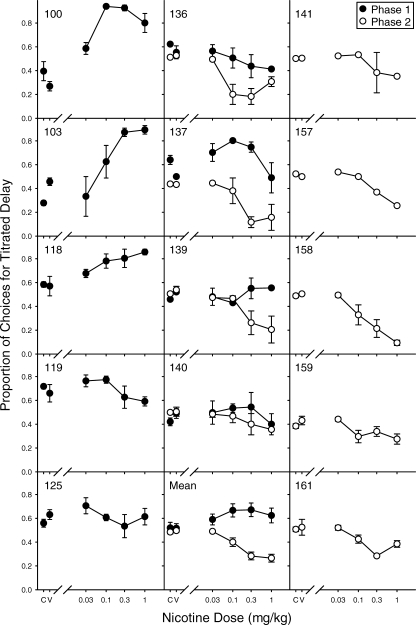

Figure 2 shows the effects of nicotine on choice for each subject in Phase 1 (closed circles) and Phase 2 (open circles). Specifically, the proportion of choices for the titrated delay is shown for each dose. Note that in each session, the value of the titrated delay started at the indifference delay determined for that particular rat. In Phase 1, only Rat 136 showed any consistent decrease in preference for the titrated delay relative to vehicle and control sessions. Several rats (e.g., 103 and 118) actually showed a dose-dependent increase in preference for the titrated delay relative to control sessions. Choices for the titrated delay also consistently increased following nicotine administration for Rat 100 with the peak effect under a relatively small (0.1 mg/kg) dose. Across rats, nicotine had no consistent dose-dependent effect on choice in Phase 1.

Fig. 2.

Effects of nicotine on choice during Phase 1 (closed circles) and Phase 2 (open circles). The proportion of choices for the titrated delay is shown for each dose of nicotine. “C” and “V” indicate control (no injection) and vehicle (potassium phosphate) injection, respectively. Vertical lines represent standard errors of the mean.

In Phase 2, all rats showed an increase in preference for the variable option under acute nicotine administration as indicated by the decrease in preference for the titrated delay. In most cases, the decrease was dose-dependent as reflected in the mean data. The descending order of doses did not seem to be responsible for any observed effects. Across the 8 rats experiencing both cycles, exactly 50% of the first cycle doses had greater effect than the corresponding dose during the second cycle with respect to magnitude of change in preference for the titrated delay.

Table 2 shows the average titrated delays for each rat during the acute drug regimens of both phases. The average titrated delays are generally consistent with the choice proportions shown in Figure 2. For most rats in Phase 1, the average titrated delay increased slightly under nicotine administration. In Phase 2, nicotine produced substantial dose-dependent decreases in the average titrated delay for most rats.

Table 2.

Average session titrated delays (seconds) at each dose for each rat during acute administration.

Phase 1

| Dose | 100 | 103 | 118 | 119 | 125 | 136 | 137 | 139 | 140 |
|---|---|---|---|---|---|---|---|---|---|
| Control | 2.2 | 1.0 | 2.0 | 4.4 | 2.0 | 11.8 | 4.0 | 8.1 | 4.3 |
| Vehicle | 1.1 | 1.1 | 2.0 | 2.6 | 2.5 | 7.6 | 1.4 | 9.8 | 5.1 |
| 0.03 mg/kg | 2.5 | 1.1 | 3.3 | 5.8 | 4.4 | 9.1 | 4.7 | 9.6 | 3.8 |
| 0.1 mg/kg | 14.0 | 3.6 | 5.0 | 5.2 | 3.2 | 5.3 | 6.1 | 8.0 | 3.4 |
| 0.3 mg/kg | 11.8 | 7.8 | 5.6 | 2.9 | 3.0 | 3.5 | 3.7 | 14.6 | 4.6 |
| 1.0 mg/kg | 6.5 | 8.6 | 7.5 | 2.0 | 3.2 | 3.1 | 1.6 | 11.7 | 2.8 |

Phase 2

| Dose | 136 | 141 | 137 | 139 | 140 | 157 | 158 | 159 | 161 |
|---|---|---|---|---|---|---|---|---|---|
| Control | 46.3 | 52.8 | 23.1 | 36.3 | 21.3 | 46.4 | 33.4 | 10.9 | 26.2 |
| Vehicle | 49.2 | 53.9 | 26.5 | 42.4 | 22.2 | 44.1 | 41.9 | 12.3 | 29.8 |
| 0.03 mg/kg | 46.9 | 63.5 | 24.1 | 33.7 | 15.3 | 41.5 | 31.3 | 13.3 | 30.8 |
| 0.1 mg/kg | 11.1 | 62.3 | 14.5 | 22.6 | 15.9 | 41.2 | 12.5 | 13.9 | 19.3 |
| 0.3 mg/kg | 12.0 | 37.2 | 8.8 | 12.1 | 13.0 | 22.9 | 12.4 | 12.7 | 10.7 |
| 1.0 mg/kg | 17.9 | 26.2 | 15.5 | 9.6 | 11.7 | 21.7 | 10.4 | 11.2 | 13.9 |

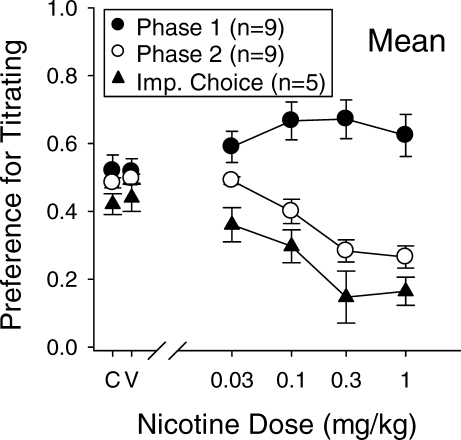

Figure 3 shows the mean proportion of choices for the titrated alternative as a function of acute nicotine dose for rats in Phase 1 (closed circles), rats in Phase 2 (open circles), and rats in the Dallery and Locey (2005) study (closed triangles). For Phase 2 and the impulsive choice study, the titrated alternative was a 3-pellet reinforcer as opposed to a 1-pellet reinforcer after a variable (Phase 2) or brief, 1-s (impulsive choice) delay. Any effect on choice in Phase 1 is not consistent with either of the other two procedures which used different reinforcer amounts on the two alternatives. Phase 2 results indicate a dose-dependent increase in preference for the smaller (one pellet), variably-delayed (1 s or 19 s) reinforcer, which is very similar to the dose-dependent increase in preference for the smaller (one pellet), sooner (1 s) reinforcer in the Dallery & Locey impulsive choice study.

Fig. 3.

Acute effects of nicotine on choice for the variable delay with equal reinforcer amounts (Phase 1, closed circles), choice for the variable delay with different reinforcer amounts (Phase 2, open circles), and impulsive choice (closed triangles) from Dallery and Locey (2005). Data shown as a proportion of choices for the titrated alternative as a function of dose. For both Phase 2 and the impulsive choice procedure, the titrated alternative was also the large, three-pellet, alternative. “C” and “V” indicate control (no injection) and vehicle (potassium phosphate) injection, respectively. Vertical lines represent standard errors of the mean.

Discussion

Previous research examining the relation between smoking or nicotine and intertemporal choice (Baker, Johnson, & Bickel, 2003; Bickel, Odum, & Madden, 1999; Dallery & Locey, 2005; Mitchell, 1999) has used impulsive choice procedures, making it impossible to separate nicotine effects on different reinforcer amounts and different reinforcer delays. However, the authors of these studies interpreted the relationship between nicotine and increased impulsive choice in terms of increased delay discounting. If nicotine does increase delay discounting, then it should have produced an increase in choice for the variable delay (decrease in preference for the titrated delay) in Phase 1 of the present experiment. As can be seen in Figure 2, if there was any effect of nicotine on fixed/variable choice, it produced the opposite effect: increasing preference for the titrated (fixed) delay (e.g., Rats 100, 103, and 118). How can these seemingly contradictory findings be reconciled?

According to Equation 1, reinforcer value is determined solely by A (amount), D (delay), and k (delay discounting). Nicotine cannot directly impact either reinforcer amount or reinforcer delay (e.g., three-pellet reinforcers are identical in amount—three pellets—regardless of nicotine dose). Therefore, if nicotine increases impulsive choice by changing reinforcer value, nicotine must increase delay discounting (k). It follows that increases in delay discounting should increase preference for variable delays in fixed/variable procedures. As such, nicotine should have increased preference for the variable delay in Phase 1, but no such increases were observed. Thus, Equation 1 cannot reconcile these findings.

In Phase 2, acute injections of nicotine produced dose-dependent increases in preference for the smaller, variable option over the larger, titrating option. Following administration of the most effective doses of nicotine (0.3 mg/kg and 1.0 mg/kg), rats were indifferent between one pellet after a variable delay and three pellets after about 15 s, compared to about 36 s following vehicle administration (see Table 2). As with nicotine-induced increases in impulsive choice (Dallery & Locey, 2005), Phase 2 results could be due to either the presence of different reinforcer delays or different reinforcer amounts. However, given that the same procedure produced no increases in delay-based risky choice in Phase 1 when reinforcer amounts were equal on the two alternatives, it is likely that the acute effects of nicotine on both impulsive choice and in this case, fixed/variable choice, are due to differences in reinforcer amounts on the two alternatives.

A comparison of dose-effect curves from Dallery and Locey (2005) and Figure 3 reveals similar effects on the proportion of choices for the large alternative. Although the procedures used in these two experiments were similar, the present fixed/variable procedure titrated the delay faster (10% per trial) than the procedure used in the context of impulsive choice (up to 10% per six trials). As such, it is difficult to compare the magnitude of effects across these studies. However, as shown in Figure 3, the lack of a nicotine effect in enhancing preference for the variable delay in Phase 1, combined with the similarity of the impulsive choice and Phase 2 variable-delay preference functions, provides compelling evidence that any acute effect of nicotine on impulsive choice is the result of differences in reinforcer amounts.

These data indicate that nicotine decreases preference for a three-pellet reinforcer relative to a one-pellet reinforcer, without any effect on delay discounting (k). In other words, nicotine simply decreases the value of a large reinforcer, relative to a small reinforcer (or increases the value of the small relative to the large, which is functionally identical). Equation 1 does not allow for such an effect. With Equation 1, a three-pellet reinforcer will always be thrice as valuable as an equally delayed one-pellet reinforcer. It follows that if nicotine decreased preference for three-pellet reinforcers relative to one-pellet reinforcers, Equation 1 would need to be modified to account for that effect. Specifically, an amount sensitivity parameter—a parameter that indicates the relative impact of different amounts on reinforcer value—should be added to provide a more accurate characterization of how amount and delay determine reinforcer value.

The need for separate amount and delay sensitivity parameters has been proposed by several researchers. For example, the concatenated generalized matching law (Killeen, 1972; Logue, Rodriguez, Pena-Correal, & Mauro, 1984), useful for the prediction and/or description of performance under concurrent interval and concurrent chain schedules, includes a parameter for reinforcer magnitude sensitivity. Pitts and Febbo (2004) used a slightly modified version of the concatenated generalized matching law in an attempt to isolate the behavioral mechanism of action in amphetamine-induced changes in impulsive choice using a concurrent-chains procedure. Both delay and amount sensitivity exponents were estimated, and amphetamine generally decreased sensitivity to reinforcer delay. Similarly, Kheramin et al. (2002) used an alternative mathematical model proposed by Ho, Mobini, Chiang, Bradshaw, and Szabadi (1999) in an attempt to separate the relative influence of amount sensitivity and delay discounting on impulsive choice in orbital prefrontal cortex-lesioned rats.

A number of mathematical models might prove to be effective in describing intertemporal choice under conditions where either delay or amount sensitivity vary. However, given the general success of Equation 1, a reasonable approach might be to start with a simple modification to account for the present data. We can add an amount sensitivity parameter z to Equation 1 such that:

$$V = \frac{A^{z}}{1 + kD} \tag{2}$$

Consider this equation applied to a simple impulsive choice example: one pellet delayed 1 s versus three pellets delayed 5 s. With k held constant at 1, a change in z from less than 1 to greater than 1 would shift preference from the one-pellet option to the three-pellet option. Unlike Equation 1, Equation 2 is capable of accounting for both the present data and the Dallery and Locey (2005) data. A nicotine-induced decrease in z would increase impulsive choice (Dallery & Locey, 2005) and variable-delay preference in a fixed/variable choice procedure with different reinforcer amounts (Phase 2) without having any effect on fixed/variable choice with equal reinforcer amounts (Phase 1).
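The example can be worked through numerically. The Python sketch below assumes that Equation 2 takes the form V = A^z/(1 + kD), which is the form consistent with the example in the text (the two options are equal in value at z = 1 when k = 1). The function names, the particular z values, and the 5-s fixed delay in the Phase 1 comparison are illustrative choices and are not parameters estimated from the data.

```python
def value_eq2(amount, delay, k, z):
    """Equation 2 (assumed form): V = A**z / (1 + k * D)."""
    return amount ** z / (1.0 + k * delay)


def prefers_large(z, k=1.0):
    """Example from the text: one pellet at 1 s versus three pellets at 5 s."""
    return value_eq2(3, 5.0, k, z) > value_eq2(1, 1.0, k, z)


def phase1_variable_advantage(z, k=1.0, fixed_delay=5.0):
    """Variable (1 s or 19 s) minus fixed value when both options pay one pellet."""
    variable = 0.5 * (value_eq2(1, 1.0, k, z) + value_eq2(1, 19.0, k, z))
    return variable - value_eq2(1, fixed_delay, k, z)


if __name__ == "__main__":
    for z in (0.5, 1.5):
        print(f"z = {z}: prefers three pellets? {prefers_large(z)}; "
              f"Phase 1 variable advantage = {phase1_variable_advantage(z):.4f}")
```

With k = 1, the one-pellet option is preferred at z = 0.5 and the three-pellet option at z = 1.5. The Phase 1 comparison is identical at both values of z because 1 raised to any power is 1, which is why a change in z alone would leave choice between equal amounts unaffected.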

Whatever amount sensitivity parameter or alternative mathematical model proves most useful in accounting for the present data, these results question the assumption that nicotine-induced increases in impulsive choice reflect increases in delay discounting. In so doing, these results challenge the assumption of identity between impulsive choice effects and delay discounting effects. For instance, several researchers have reported a magnitude effect in humans, such that increasing reinforcer magnitude decreases k (e.g., Green, Myerson, & McFadden, 1997; Kirby, 1997; Raineri & Rachlin, 1993). Perhaps it does. But at present, research only indicates that increasing reinforcer magnitude decreases impulsive choice. There is currently no evidence that this decrease in impulsive choice is due to a decrease in k (i.e., the extent to which delay decreases reinforcer value) rather than an increase in amount sensitivity (i.e., the relative impact of different amounts on reinforcer value).

One potential criticism of the current study is that we did not determine a range of indifference points to fit a discounting equation such as Equation 1 or 2. One might be tempted to conclude that we cannot make interpretations about changes in discounting or amount sensitivity, as in effect we only examined one indifference point. In light of these equations, however, any change in the proportion of choice should reflect a change in either delay discounting or amount sensitivity. The lack of any decrease in the proportion of choice in Phase 1 indicates that nicotine did not affect delay discounting, k. Only when different amounts were added did the proportion of choice change, which would be consistent with a change in amount sensitivity. Equation 2 can describe the pattern of results observed in the current study. Further work is needed to determine how well Equation 2 can describe more rigorous tests of amount sensitivity effects. For example, the case for an amount sensitivity interpretation would be more convincing if we were to replicate both phases of this experiment using different delays on the variable alternative to generate a range of indifference points. Indeed, such a procedure might eventually be necessary to evaluate Equation 2's account of the relationship between amount sensitivity and reinforcer value.

Another potential limitation is that some other variable not captured by Equations 1 or 2 was responsible for the changes in choice. The interpretation of a nicotine amount-sensitivity effect assumes that any change in preference between two alternatives is due to changes in the relative reinforcer value between those two alternatives. There are, however, other behavioral processes that could produce such changes. For instance, nicotine might affect stimulus control. A dose-dependent decrease in stimulus control should produce a dose-dependent shift towards indifference. Fortunately, with the present procedures, indifference is established during the baseline so that the procedure is sensitive to increases in preference for either alternative but not increases in indifference. Therefore, the dose-dependent increases in preference for the variable delay in Phase 2 cannot be accounted for by a decrease in stimulus control.

A final potential limitation stems from the differences in baseline performance across phases. Although the difference in reinforcer amounts was the only procedural difference between the two phases, this one procedural change did produce behavioral differences in baseline performance. This presents the possibility that the difference in baseline performance, rather than the difference in reinforcer amounts, was responsible for the difference in nicotine effects across phases. Although the latencies to make a choice were comparable between phases (see Figure 1), the indifference delays during Phase 2 (see Table 1) and in Dallery and Locey (2005) were longer than the indifference delays in Phase 1. Perhaps nicotine increases delay discounting only with large indifference delays, and the lack of an effect in Phase 1 may have reflected a floor effect. However, even for rats that produced relatively long indifference delays in Phase 1 under vehicle (rats 136, 139, and 140), nicotine did not substantially decrease the proportion of choices (Figure 2). Also, approximately 50% of choices were allocated to each alternative in vehicle and control sessions. As such, there was no floor effect with respect to proportion of choices in Phase 1 (as shown in Figure 2). Nevertheless, it is unclear whether the indifference delay must be above some threshold in order for nicotine to produce an effect. This possibility could be tested, however, by using different delays on the variable alternative. That is, the procedure could deliver a pellet after 10 or 60 seconds, for example, and a rat should show indifference between this alternative and the titrated alternative at a longer indifference delay.
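A rough sketch of why longer variable delays should yield a longer indifference delay follows. It rests on two assumptions that are not stated in this section: that Equation 1 is the hyperbolic form V = A / (1 + kD) with A = 1 pellet, and that the value of the variable alternative is the mean of the values of its two equally likely delays. The function names and the k values are hypothetical and serve only as illustration.

```python
def hyperbolic_value(delay, k):
    """Value of one pellet, assuming Equation 1 is V = 1 / (1 + k*D)."""
    return 1.0 / (1.0 + k * delay)

def predicted_indifference_delay(variable_delays, k):
    """Fixed delay whose value equals the mean value of the variable delays.

    Assumes each delay in `variable_delays` is equally likely and that the
    value of the variable option is the average of its component values.
    """
    mean_value = sum(hyperbolic_value(d, k) for d in variable_delays) / len(variable_delays)
    # Solve 1 / (1 + k*D) = mean_value for D.
    return (1.0 / mean_value - 1.0) / k

# Hypothetical discounting rates, chosen only for illustration.
for k in (0.1, 1.0):
    current = predicted_indifference_delay([1, 19], k)
    proposed = predicted_indifference_delay([10, 60], k)
    print(f"k={k}: 1/19 s -> {current:.2f} s, 10/60 s -> {proposed:.2f} s")
```

Under these assumptions the predicted indifference delay for the 10 s or 60 s alternative exceeds that for the 1 s or 19 s alternative at any positive k, which is the property the proposed test relies on.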

Equation 1 and similar mathematical models of choice have been effective in the description and prediction of choice, both within behavioral pharmacology and the experimental analysis of behavior in general. The present results suggest that it may be useful to consider additional behavioral mechanisms, such as changes in amount sensitivity, to account for drug-induced changes in intertemporal choice. Further work is needed to establish the generality of this conclusion. Such work should avoid exclusive reliance on procedures that conflate differences in reinforcer amount and delay, such as impulsive choice procedures. A critical complement will be procedures that isolate the relative contributions of delay discounting and amount sensitivity to choice. The value of such procedures, whether we call them “impulsive choice” procedures, “fixed/variable” procedures, “risky choice” procedures, or something else, should be judged by their capacity to identify functional relations between amount, delay, and choice.

Acknowledgments

The authors thank Noelle Eau Claire, Irene Glenn, Julie Marusich, and Bethany Raiff for their assistance with this project. This research was supported by a grant from the College of Liberal Arts and Sciences at the University of Florida and grant F31 DA021442 from the National Institute on Drug Abuse. The content is solely the responsibility of the authors and does not necessarily represent the official views of the National Institute on Drug Abuse or the National Institutes of Health. Portions of these data were presented at the 2005 meeting of the Southeastern Association for Behavior Analysis and the 2006 meetings of the Society for Research on Nicotine and Tobacco, the Association for Behavior Analysis, the College on Problems of Drug Dependence, and the Florida Association for Behavior Analysis. Portions of this manuscript were included as part of the dissertation of the first author at the University of Florida. Reprint requests should be sent to Matt Locey or Jesse Dallery, Department of Psychology, University of Florida, P.O. Box 112250, Gainesville, FL 32611 (email: mlocey@ufl.edu).

References

- Baker F, Johnson M.W, Bickel W.K. Delay discounting in current and never-before cigarette smokers: Similarities and differences across commodity, sign, and magnitude. Journal of Abnormal Psychology. 2003;112:382–392. doi: 10.1037/0021-843x.112.3.382.

- Bateson M, Kacelnik A. Preferences for fixed and variable food sources: Variability in amount and delay. Journal of the Experimental Analysis of Behavior. 1995;63:313–329. doi: 10.1901/jeab.1995.63-313.

- Bickel W.K, Odum A.L, Madden G.J. Impulsivity and cigarette smoking: Delay discounting in current, never, and ex-smokers. Psychopharmacology. 1999;146:447–454. doi: 10.1007/pl00005490.

- Cicerone R.A. Preference for mixed versus constant delay of reinforcement. Journal of the Experimental Analysis of Behavior. 1976;25:257–261. doi: 10.1901/jeab.1976.25-257.

- Dallery J, Locey M.L. Effects of acute and chronic nicotine on impulsive choice in rats. Behavioural Pharmacology. 2005;16:15–23. doi: 10.1097/00008877-200502000-00002.

- Davison M.C. Delay of reinforcers in a concurrent-chain schedule: An extension of the hyperbolic-decay model. Journal of the Experimental Analysis of Behavior. 1988;50:219–236. doi: 10.1901/jeab.1988.50-219.

- Gibbon J, Church R.M, Fairhurst S. Scalar expectancy theory and choice between delayed rewards. Psychological Review. 1988;95:102–114. doi: 10.1037/0033-295x.95.1.102.

- Grace R.C. Violations of transitivity: Implications for a theory of contextual choice. Journal of the Experimental Analysis of Behavior. 1993;60:185–201. doi: 10.1901/jeab.1993.60-185.

- Green L, Myerson J, McFadden E. Rate of temporal discounting decreases with amount of reward. Memory & Cognition. 1997;25:715–723. doi: 10.3758/bf03211314.

- Herrnstein R.J. On the law of effect. Journal of the Experimental Analysis of Behavior. 1970;13:243–266. doi: 10.1901/jeab.1970.13-243.

- Ho M.-Y, Mobini S, Chiang T.-J, Bradshaw C.M, Szabadi E. Theory and method in the quantitative analysis of “impulsive choice” behaviour: Implications for psychopharmacology. Psychopharmacology. 1999;146:362–372. doi: 10.1007/pl00005482.

- Hursh S.R, Fantino E. Relative delay of reinforcement and choice. Journal of the Experimental Analysis of Behavior. 1973;19:437–450. doi: 10.1901/jeab.1973.19-437.

- Kheramin S, Body S, Mobini S, Ho M.-Y, Velazquez-Martinez D.N, Bradshaw C.M, et al. Effects of quinolinic acid-induced lesions of the orbital prefrontal cortex on inter-temporal choice: A quantitative analysis. Psychopharmacology. 2002;165:9–17. doi: 10.1007/s00213-002-1228-6.

- Killeen P. On the measurement of reinforcement frequency in the study of preference. Journal of the Experimental Analysis of Behavior. 1968;11:263–269. doi: 10.1901/jeab.1968.11-263.

- Killeen P. The matching law. Journal of the Experimental Analysis of Behavior. 1972;17:489–495. doi: 10.1901/jeab.1972.17-489.

- Killeen P. Incentive Theory: II. Models for choice. Journal of the Experimental Analysis of Behavior. 1982;38:217–232. doi: 10.1901/jeab.1982.38-217.

- Kirby K.N. Bidding on the future: Evidence against normative discounting of delayed rewards. Journal of Experimental Psychology: General. 1997;126:54–70.

- Logue A.W, Rodriguez M.L, Pena-Correal T.E, Mauro B.C. Choice in a self-control paradigm: Quantification of experienced-based differences. Journal of the Experimental Analysis of Behavior. 1984;41:53–67. doi: 10.1901/jeab.1984.41-53.

- Mazur J.E. Tests of an equivalence rule for fixed and variable reinforcer delays. Journal of Experimental Psychology: Animal Behavior Processes. 1984;10:426–436.

- Mazur J.E. Fixed and variable ratios and delays: Further tests of an equivalence rule. Journal of Experimental Psychology: Animal Behavior Processes. 1986;12:116–124.

- Mazur J.E. An adjusting procedure for studying delayed reinforcement. In: Commons M.L, Mazur J.E, Nevin J.A, Rachlin H, editors. Quantitative analyses of behavior: the effects of delay and of intervening events on reinforcement value. Hillsdale, NJ: Erlbaum; 1987. pp. 55–73.

- Mitchell S.H. Measures of impulsivity in cigarette smokers and non-smokers. Psychopharmacology. 1999;146:455–464. doi: 10.1007/pl00005491.

- Pitts R.C, Febbo S.M. Quantitative analyses of methamphetamine's effects on self-control choices: Implications for elucidating behavioral mechanisms of drug action. Behavioural Processes. 2004;66:213–233. doi: 10.1016/j.beproc.2004.03.006.

- Pubols B.H. Delay of reinforcement, response perseveration, and discrimination reversal. Journal of Experimental Psychology. 1958;56:32–39. doi: 10.1037/h0044491.

- Raineri A, Rachlin H. The effect of temporal constraints on the value of money and other commodities. Journal of Behavioral Decision Making. 1993;6:77–94.