Abstract

Token reinforcement procedures and concepts are reviewed and discussed in relation to general principles of behavior. The paper is divided into four main parts. Part I reviews and discusses previous research on token systems in relation to common behavioral functions—reinforcement, temporal organization, antecedent stimulus functions, and aversive control—emphasizing both the continuities with other contingencies and the distinctive features of token systems. Part II describes the role of token procedures in the symmetrical law of effect, the view that reinforcers (gains) and punishers (losses) can be measured in conceptually analogous terms. Part III considers the utility of token reinforcement procedures in cross-species analysis of behavior more generally, showing how token procedures can be used to bridge the methodological gulf separating research with humans from that with other animals. Part IV discusses the relevance of token systems to the field of behavioral economics. Token systems have the potential to significantly advance research and theory in behavioral economics, permitting both a more refined analysis of the costs and benefits underlying standard economic models, and a common currency more akin to human monetary systems. Some implications for applied research and for broader theoretical integration across disciplines will also be considered.

Keywords: token reinforcement, conditioned reinforcement, symmetrical law of effect, cross-species analysis, behavioral economics

A token is an object or symbol that is exchanged for goods or services. Tokens, in the form of clay coins, first appeared in human history in the transition from nomadic hunter-gatherer societies to agricultural societies, and the expansion from simple barter economies to more complex economies (Schmandt-Besserat, 1992). Since that time, token systems, in one form or another, have provided the basic economic framework for all monetary transactions. From the supermarket to the stock market, any economic system of exchange involves some form of token reinforcement.

Token systems have been successfully employed as behavior-management and motivational tools in educational and rehabilitative settings since at least the early 1800s (see Kazdin, 1978). More recently, token reinforcement systems played an important role in the emergence of applied behavior analysis in the 1960s and 1970s (Ayllon & Azrin, 1968; Kazdin, 1977), and they remain among the most successful behaviorally based applications in the history of psychology.

Laboratory research on token systems dates back to pioneering studies by Wolfe (1936) and Cowles (1937) with chimpanzees as experimental subjects. Conducted at the Yerkes Primate Research Laboratories, and published as monographs in the Journal of Comparative Psychology, these papers describe an interconnected series of experiments addressing a wide range of topics, including discrimination of tokens with and without exchange value, a comparison of tokens and food reinforcers in the acquisition and maintenance of behavior, an assessment of response persistence under conditions of immediate and delayed reinforcement, preference between tokens associated with different reinforcer magnitudes and with qualitatively different reinforcers (e.g., food vs. water, play vs. escape), and social behavior engendered by token reinforcement procedures, to name just a few.

The focus of these early investigations was on conditioned reinforcement—the conditions giving rise to and maintaining the acquired effectiveness of the tokens as reinforcing stimuli. A central question concerned the degree to which tokens, as conditioned reinforcers, were functionally equivalent to unconditioned reinforcers. This emphasis on the conditioned reinforcement value of tokens was maintained in later work by Kelleher (1956), also with chimpanzees and also conducted at the Yerkes Laboratories (see Dewsbury, 2003, 2006, for an interesting historical account of this period at Yerkes). Kelleher's work was notable as the first to place token reinforcement in the context of reinforcement schedules, shifting the emphasis from response acquisition to maintenance, and from discrete-trial to free-operant methods. It also opened the study of token reinforcement to more refined techniques for assessing conditioned reinforcement value (e.g., chained and second-order schedules). Prior to Kelleher's work, conditioned reinforcement was generally studied in acquisition or extinction, and the effects were weak and transient. With the emerging technology of reinforcement schedules, however, conditioned reinforcement in general, and token reinforcement in particular, was studied in situations that permitted continued pairing of stimuli with food, producing more robust effects.

Kelleher's work suggested another important role of tokens: that of organizing coordinated sequences of behavior over extended time periods. An emphasis on conditioned reinforcement and temporal organization has been carried through in later investigations of token reinforcement with rats (Malagodi, Webbe, & Waddell, 1975) and pigeons (Foster, Hackenberg, & Vaidya, 2001). Token reinforcement procedures have also been extended to the study of choice and self-control (Jackson & Hackenberg, 1996), and to aversive control (Pietras & Hackenberg, 2005), where they have proven useful in cross-species comparisons and in behavioral-economic formulations more generally (Hackenberg, 2005).

Despite periods of productive research activity, the literature on token reinforcement has developed sporadically, with little integration across research programs. To date, no published review of laboratory research on token systems exists. The purpose of the present paper is to review what is known about token reinforcement under laboratory conditions and in relation to general principles of behavior. The extensive literature on token systems in applied settings is beyond the scope of the present paper, although the paper will consider some implications that a better understanding of token-reinforcement principles holds for applied research.

Two chief aims of the paper are to identify gaps in the research literature, and to highlight promising research directions in the use of token schedules under laboratory conditions. The paper is organized into four main sections. In Section I, past research on token systems will be reviewed and discussed in relation to common behavioral functions—reinforcement, temporal organization, antecedent stimulus functions, and aversive control. This kind of functional analysis provides an effective way to organize the literature, revealing continuities between token reinforcement and other types of contingencies.

Although resembling other contingencies, token-based procedures have distinctive characteristics that make them effective tools in the analysis of behavior more generally. The present paper considers three areas in which token systems have proven especially useful as methodological tools. Section II discusses the use of token systems in addressing the symmetrical law of effect—the idea that reinforcers (gains) and punishers (losses) can be viewed in conceptually analogous terms, as symmetrical changes along a common dimension. Such a view is foundational in major theoretical accounts of behavior, yet supporting laboratory evidence is surprisingly sparse. Token systems provide a methodological context in which to explore such questions across species and outcome types.

This common methodological context makes token systems useful in a comparative analysis more generally, where minimizing procedural differences is paramount. This is the main focus of Section III, a review and discussion of procedures and concepts in the area of choice and self-control, showing how token systems can be used to bridge key procedural barriers separating research with humans from that with other animals.

Section IV explores the implications of token systems for behavioral economics. Laboratory research in the experimental analysis of behavior has contributed to behavioral economics for over three decades (Green & Rachlin, 1975; Hursh, 1980; Lea, 1978), though with few exceptions (Kagel, 1972; Kagel & Winkler, 1972; Winkler, 1970), this work has not made direct contact with token reinforcement methods or concepts. This is unfortunate, because token systems have great potential for exploring a wide range of relationships between behavior and economic contingencies, and for uniting the different branches of behavioral economics.

I. Functional Analysis of Token Reinforcement

A token reinforcement system is an interconnected set of contingencies that specifies the relations between token production, accumulation, and exchange. Tokens are often manipulable objects (e.g., poker chips, coins, marbles), but can be nonmanipulable as well (e.g., stimulus lamps, points on a counter, checks on a list). A token, as the name implies, is an object of no intrinsic value; whatever functions a token has are established through relations to other reinforcers, either unconditioned (e.g., food or water) or conditioned (e.g., money or credit). As such, a token may serve multiple functions—reinforcing, punishing, discriminative, eliciting—depending on its relationship to these other events. The present review will be organized around known behavioral functions: reinforcement, temporal organization, antecedent stimulus functions (discriminative and eliciting), and punishment.

Reinforcing Functions

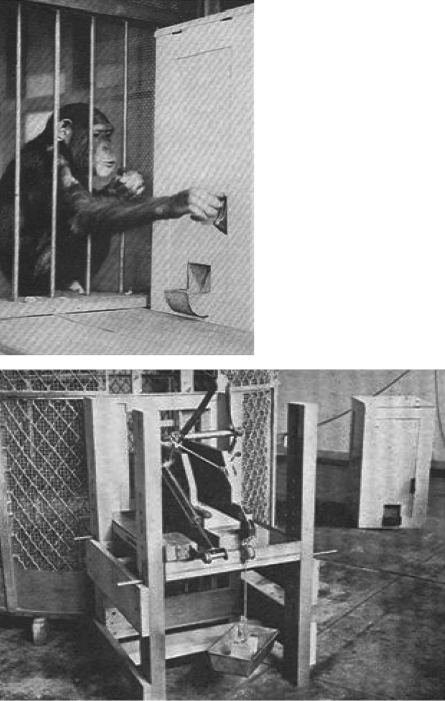

Tokens are normally conceptualized as conditioned reinforcers, established as such through their relationship to other reinforcers. In the studies by Wolfe (1936) and Cowles (1937), the chimpanzees were trained to deposit poker chips into a vending-machine apparatus (as shown in the top panel of Figure 1) for a variety of unconditioned reinforcers (e.g., grapes, water). Initially, depositing was modeled by the experimenter; exchange opportunities were continuously available and each token deposit was reinforced immediately with food, ensuring contiguous token–food pairings. The importance of such pairings was demonstrated in subsequent conditions, in which depositing was brought under stimulus control: The chimpanzees were trained to respond differentially with respect to tokens with exchange value (white tokens, exchangeable for grapes) and without exchange value (brass tokens, exchangeable for nothing). Such differential behavior was evidenced by (a) exclusive preference for white over brass tokens, and (b) extinction of depositing brass tokens. Periodic tests throughout the study verified the selective reinforcing effectiveness of the white tokens: When white and brass tokens were scattered on the floor, the chimpanzees picked up and deposited only the white (food-paired) tokens.

Fig. 1.

Subject and apparatus used in Wolfe (1936). Top: Chimpanzee depositing a poker-chip token into token receptacle. Bottom: Outside view of experimental apparatus. See text for details. From Yerkes (1943). Property of the Yerkes National Primate Research Center, Emory University. Reprinted with permission.

Acquisition and Maintenance of New Behavior

Once token depositing had been established, the chimpanzees were trained to operate a weight-lifting apparatus for access to tokens. The animals lifted a bar from a horizontal to a vertical position; the force required could be adjusted by adding weight to the bar, a stem of which extended outside the work chamber (see bottom of Figure 1). The main question was whether tokens could establish and maintain behavior as effectively as food reinforcers. Several of Wolfe's (1936) experiments addressed this question, systematically comparing token-maintained behavior with food-maintained behavior. In one experiment, for example, sessions in which tokens were earned alternated with sessions in which food was earned. Tokens were earned after 10 responses and exchanged immediately for food. For 3 of 4 subjects, there was little difference in the time required to complete 10 trials in the token versus food sessions. For the other subject, the time was considerably shorter in the food sessions. Similar results were obtained using a different procedure, in which the weight requirement increased in fixed increments with each reinforcer (an early type of escalating, or progressive, schedule). Again, for all but one subject, there was essentially no difference in the terminal weights achieved under token and food conditions. For the remaining animal, the weights were slightly but consistently higher under food than under tokens, indicating that food was the more effective reinforcer for that animal.

Using an apparatus similar to Wolfe's (1936), as well as some of the same subjects, Cowles (1937) compared the effects of tokens (poker chips) and food (raisins and seeds) under a variety of experimental arrangements. In one experiment, for example, chimpanzees were trained on a two-position discrimination task, in which the position of the alternative associated with tokens was alternated repeatedly until it was selected exclusively. Tokens could be exchanged for food at the end of the session, which lasted either 10 or 20 trials. The discrimination was rapidly learned by 2 of 3 subjects under this procedure, and improved with repeated testing (from 1st to 2nd session within a day) and across repeated reversals. In another experiment, the same 2 chimpanzees were trained on a 5-position discrimination-learning task, in which correct responses produced either tokens later exchangeable for food, or food alone. The discrimination was learned rapidly by both subjects, and appeared to improve over time. One subject learned better (higher accuracy and fewer trials to meet criterion) with food than with tokens, while the other learned equally well with both reinforcers. However, even for the latter, token sessions were characterized by more “off-task” behavior, especially early in the exchange ratio. Under conditions in which a limited number of trials were provided, learning was more rapid under food than under token-food conditions for both subjects. Thus, despite little difference in asymptotic performance between the two reinforcers, learning proceeded somewhat more rapidly with food than with tokens.

Subsequent experiments by Cowles (1937) showed that the tokens derived their reinforcing capacity from their relation to the terminal (food) reinforcers. In one experiment along these lines, Cowles compared the effects of food-paired tokens, nonfood-paired tokens, and food itself on the accuracy of a kind of delayed match-to-sample discrimination under conditions of immediate and delayed exchange. In delayed exchange, the tokens accumulated in a tray across a block of trials and were deposited in a room adjacent to that in which the tokens were earned. Accuracy was somewhat higher under food-paired than under nonfood-paired token conditions. In immediate exchange, the chimpanzees earned and exchanged tokens in the same room, and deposits were required to initiate the next trial. Accuracy increased under both food-paired and nonfood-paired token conditions, markedly so under paired conditions. Under conditions in which tokens were compared to food directly, accuracy was slightly but consistently better under food than food-paired token conditions, and significantly better under food than nonfood-paired token conditions.

In sum, the results of these experiments demonstrate that tokens function as effective reinforcers, albeit somewhat less effective than unconditioned reinforcers. This differential effectiveness of tokens versus food reinforcers is to be expected if the tokens are functioning as conditioned reinforcers. The research also showed that tokens derive their reinforcing functions through relations to food. Although this, too, is hardly surprising, few studies have demonstrated the necessary and sufficient conditions for tokens to function as conditioned reinforcers. Indeed, little is known about the training histories needed to establish reinforcing functions of tokens. Is explicit token-food pairing necessary? Must conditional stimulus (CS) functions of tokens be established before they will function as effective reinforcers? In short, what are the optimal conditions for establishing and maintaining tokens as reinforcers? These are fundamental but unresolved questions requiring additional research.

Generalized Reinforcing Functions

When tokens are paired with multiple terminal reinforcers, they are said to be generalized reinforcers (Skinner, 1953). It is common in token systems with humans, for example, to include a store in which tokens can be exchanged for an array of preferred items and activities. Such generalizability should greatly enhance the durability of tokens, making them less dependent on specific motivational conditions, although there is surprisingly little empirical support for this. Perhaps the closest approximation is an early experiment by Wolfe (1936). Three chimpanzees were given choices between black tokens (exchangeable for peanuts) and yellow tokens (exchangeable for water) under conditions of 16-hr deprivation from food or water (alternating every two sessions). All 3 subjects generally preferred the tokens appropriate to their current deprivation conditions, though the preference was not exclusive. Two additional subjects were therefore run under 24-hr deprivation conditions and were permitted free access to the alternate reinforcer for 1 hr prior to each session. Eliminating the motivation for one reinforcer was thought to heighten the motivation for the other. These subjects generally preferred the deprivation-specific reinforcer, and did so more strongly than the 3 subjects studied under more modest deprivation conditions.

Had a third token type been paired with both food and water, generalized reinforcing functions of the tokens could then have been evaluated. To date, however, generalized functions of tokens have yet to be explored. This remains a key topic of research on token reinforcement, especially in light of the immense theoretical importance attached to the concept of generalized reinforcement (Skinner, 1953). What types of experiences are necessary in establishing generalized functions? Once established, how do generalized reinforcers compare to more specific reinforcers? Are generalized reinforcers more durable than specific reinforcers? Are generalized reinforcers more resistant to extinction, or other disruptions, than more conventional reinforcers? Will generalized reinforcers be preferred to specific reinforcers? Will generalized reinforcers generate more work to obtain them? Are generalized reinforcers more aversive to lose than more specific reinforcers? In short, under what conditions will generalized reinforcers prove more valuable than specific reinforcers? These are just a few of the many foundational questions brought within the scope of an experimental analysis of generalized reinforcement.

Bridging Reinforcement Delay

Another function commonly ascribed to conditioned reinforcers is that of bridging temporal gaps between behavior and delayed reinforcers (Kelleher, 1966a; Skinner, 1953; Williams, 1994a, b). In an early experiment along these lines, Wolfe (1936) showed that tokens could maintain behavior in the face of extensive delays to food reinforcement. Four conditions were studied: (1) tokens were earned immediately, but could not be exchanged until after a delay (immediate token, delayed exchange/food); (2) food was earned for each response but delivered after a delay (no token, delayed food); (3) same as 2, except that brass chips were available during the delays (nonpaired token, delayed food); and (4) tokens were earned and could be deposited immediately, but food was delivered only after a delay (immediate token/exchange, delayed food). This latter condition differed from (1) in that no tokens were present during the delay interval.

In all four conditions, the delays increased progressively with each food delivery, until a breakpoint was reached—a point beyond which responding ceased (5 min without a response). Responding was clearly strongest in Condition 1 (immediate token, delayed exchange/food) for all 4 chimpanzees studied, reaching delays of 20 min and 1 hr for 2 subjects. For the other 2 subjects, breakpoints under Condition 1 were undefined, both in excess of 1 hr. The breakpoints under Conditions 2–4 were generally short (less than 3 min) for all subjects and did not differ systematically across conditions. Interestingly, there was evidence of pausing under the latter conditions, but not under Condition 1, indicating that the immediate delivery of a token helped sustain otherwise weak behavior temporally remote from food.
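The progressive-delay procedure and its breakpoint criterion can be made concrete with a small sketch. It assumes, hypothetically, that the delay starts at some value and grows by a fixed step after every food delivery (Wolfe's actual step sizes are not given here), and it takes as input the latency of each response measured from the previous food delivery. The function name `breakpoint_delay` and all parameter values are illustrative only.

```python
from typing import Optional, Sequence


def breakpoint_delay(
    latencies_s: Sequence[float],
    start_delay_s: float,
    step_s: float,
    criterion_s: float = 300.0,  # 5 min without a response ends the run
) -> Optional[float]:
    """Return the longest delay to food completed before responding ceased,
    or None if responding ceased before any food was earned.

    latencies_s[i] is the time from the previous food delivery (or session
    start) to the next response; a latency at or beyond the criterion means
    no response occurred within the criterion window.
    """
    completed: Optional[float] = None
    delay = start_delay_s
    for latency in latencies_s:
        if latency >= criterion_s:
            break  # breakpoint reached: responding has ceased
        completed = delay  # this response is followed by food after `delay`
        delay += step_s  # the delay escalates after each food delivery
    return completed


# Hypothetical run: 1-min starting delay, 1-min steps, responding stops on the 4th cycle.
print(breakpoint_delay([12.0, 40.0, 95.0, 310.0], start_delay_s=60.0, step_s=60.0))  # → 180.0
```

Reporting the last completed delay, rather than the first delay at which responding failed, is a design choice of this sketch; the text does not specify which convention Wolfe used.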

Performances in Conditions 1 and 4 are relevant to accounts of conditioned reinforcement based on concepts of marking—the facilitative effects of response-produced stimulus changes. According to this view, the rate-enhancing effects of stimuli paired with food arise not from the temporal pairing of stimulus with food (conditioned reinforcement), but from the response-produced stimulus change, which is said to mark, or make more salient, the response that produces the reinforcer (Lieberman, Davidson, & Thomas, 1985; Williams, 1991). If the tokens function mainly to mark events in time, then no differences would be expected under Conditions 1 and 4, as both involve similar response-produced stimulus changes: immediate token presentation. Conversely, if tokens function as conditioned reinforcers via temporal pairings with food, then greater persistence would be expected under Condition 1 than Condition 4. The higher breakpoints obtained under Condition 1 versus Condition 4 are clearly more consistent with the latter view, suggesting that the tokens were indeed serving as conditioned reinforcers—that their function depended on explicit pairing with food—rather than merely as temporal markers.

Similar results were reported in a nontoken context by Williams (1994b, Experiment 1), with response acquisition under delayed reinforcement. Rats' lever presses produced food after a 30-s delay. In marking conditions, each lever press produced a 5-s tone at the outset of the 30-s delay interval. In conditioned-reinforcement conditions, each lever press produced a 5-s tone at the beginning and end of the delay interval. Responding was acquired more rapidly under the latter conditions, showing the importance of the temporal pairing of the stimulus with food. In a second experiment, Williams compared acquisition under these latter conditions to that under conditions in which the tone remained on during the entire 30-s delay interval, designed to assess the bridging functions of the stimulus. Although both conditions included temporal pairing of tone and food, the bridging conditions proved less effective than conditions in which the tone was presented only at the beginning and end of the interval. Williams attributed these differences to the higher rate of food delivery (per unit time) in the presence of the briefer signals.

Some conditions in Wolfe's (1936) experiment also bear on potential bridging functions of the tokens. For the 2 subjects whose responding was maintained at 1-hr delays under Condition 1, delays exceeding 1 hr were examined. As before, the delay increased systematically across sessions. Because it was impractical to retain the subjects in the workspace under such long delays, the chimpanzees were permitted to leave the experimental space (and the token) 5 min after making a response and were returned 5 min prior to the end of the delay interval. Both subjects continued to respond at the maximum delays tested: 5 hr for one subject, 24 hr for the other. This suggests that the continued presence of the token was not essential to the reinforcing value of the tokens, as long as temporal pairing of tokens and food was intact. This conclusion must be tempered by the fact that these conditions were conducted following extended exposure to conditions in which the tokens filled the temporal gap, and may therefore have depended in part on that history. Additional research is needed to isolate the necessary and sufficient conditions under which response-produced stimuli enhance tolerance for delayed reinforcers. It should be apparent from this brief review, however, that token reinforcement procedures are well suited to the task.

Temporal Organization: Schedules of Token Reinforcement

Despite the conceptual importance of the pathbreaking work by Wolfe (1936) and Cowles (1937), research on token reinforcement lay dormant for approximately two decades. Kelleher revived research on token reinforcement in the 1950s, combining it with the emerging technology of reinforcement schedules. A fundamental insight of Kelleher's (and, later, Malagodi's) research was the conceptualization of a token reinforcement contingency as a series of three interconnected schedule components: (a) the token-production schedule, the schedule by which responses produce tokens, (b) the exchange-production schedule, the schedule by which exchange opportunities are made available, and (c) the token-exchange schedule, the schedule by which tokens are exchanged for other reinforcers. Research has shown that behavior is systematically related to both the separate and the combined effects of these schedule components. This research will be reviewed below, along with a discussion of the relevance of such work to other schedules used to study conditioned reinforcement.
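The three-component structure can be expressed as a small state machine. The sketch below assumes that all three components are fixed-ratio schedules, that lever presses have no effect during an exchange period, and that an exchange period ends when the last token is deposited; these rules, and the class name `TokenFRSchedule`, are illustrative assumptions rather than details of any particular study.

```python
from dataclasses import dataclass


@dataclass
class TokenFRSchedule:
    """Hypothetical token system in which all three components are FR schedules."""

    token_production: int     # lever presses required per token
    exchange_production: int  # tokens required to produce an exchange period
    token_exchange: int       # token deposits required per food delivery
    presses: int = 0          # presses toward the next token
    tokens: int = 0           # tokens currently held
    deposits: int = 0         # deposits toward the next food delivery
    in_exchange: bool = False
    food: int = 0             # food deliveries so far

    def press(self) -> None:
        """Lever press on the token-production schedule."""
        if self.in_exchange:
            return  # assumption: presses are ineffective during exchange
        self.presses += 1
        if self.presses == self.token_production:
            self.presses = 0
            self.tokens += 1
            if self.tokens == self.exchange_production:
                self.in_exchange = True  # exchange-production ratio met

    def deposit(self) -> None:
        """Token deposit on the token-exchange schedule."""
        if not self.in_exchange or self.tokens == 0:
            return  # deposits only count during an exchange period
        self.tokens -= 1
        self.deposits += 1
        if self.deposits == self.token_exchange:
            self.deposits = 0
            self.food += 1
        if self.tokens == 0:
            self.in_exchange = False  # assumption: exchange ends when tokens run out
            self.deposits = 0         # any partial deposit count is discarded


# FR 20 token production, FR 6 exchange production, FR 1 token exchange.
s = TokenFRSchedule(token_production=20, exchange_production=6, token_exchange=1)
for _ in range(120):
    s.press()
print(s.tokens, s.in_exchange)  # → 6 True
for _ in range(6):
    s.deposit()
print(s.food, s.in_exchange)  # → 6 False
```

Keeping the three ratios as separate fields makes it easy to vary one component while holding the others constant, which is the logic of the parametric studies reviewed below.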

Token-Production Schedules

The first demonstration of schedule-typical behavior under token reinforcement schedules was reported by Kelleher (1956). Two chimpanzees were first trained to press a lever on fixed-ratio (FR) and fixed-interval (FI) schedules of food reinforcement, in which food was produced by a specified number of responses (FR) or by the first response after a specified period of time had elapsed (FI). Once lever pressing was established, the subjects were trained to deposit poker-chip tokens in a receptacle for food. Unlike the earlier studies by Wolfe and Cowles, in which exchange responses were enabled or prevented by opening or closing a shutter in front of the token receptacle, Kelleher brought exchange responding under discriminative control: Deposits in the presence of an illuminated window produced food, whereas deposits when the window was dark did not. Once depositing was under stimulus control, the animals were taught to press a lever to obtain poker chips. Under both simple and multiple schedules, Kelleher found that FR and FI schedules of token production generated response patterning characteristic of FR and FI schedules of food production.
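The FR and FI definitions can be written as two small schedule objects sharing one interface, `respond(t)`, which returns whether a response at time `t` (in seconds) is reinforced. In Kelleher's token-production arrangement the consequence would be a token rather than food. The class names, and the convention that FI timing restarts at each reinforcer, are assumptions made for illustration.

```python
class FixedRatio:
    """FR n: every nth response is reinforced."""

    def __init__(self, n: int) -> None:
        self.n = n
        self.count = 0

    def respond(self, t: float) -> bool:
        # t is unused here; it is kept so both schedules share one interface.
        self.count += 1
        if self.count == self.n:
            self.count = 0
            return True
        return False


class FixedInterval:
    """FI T: the first response at least T seconds after the last reinforcer
    (or session start) is reinforced."""

    def __init__(self, interval_s: float) -> None:
        self.interval_s = interval_s
        self.last_reinforcer = 0.0

    def respond(self, t: float) -> bool:
        if t - self.last_reinforcer >= self.interval_s:
            self.last_reinforcer = t  # assumption: next interval timed from here
            return True
        return False


fr = FixedRatio(3)
print([fr.respond(t) for t in range(6)])  # → [False, False, True, False, False, True]
fi = FixedInterval(10.0)
print([fi.respond(t) for t in (5.0, 10.0, 15.0, 21.0)])  # → [False, True, False, True]
```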

In a subsequent study, Kelleher (1958) performed a more extensive analysis of FR schedules of token reinforcement. Responses in the presence of a white light produced tokens (poker chips) that could be exchanged for food during an exchange period (signaled by a red light) at the end of the session. With the exchange-production schedule held constant at FR 60 (i.e., 60 tokens were required to produce the exchange period) and the token-exchange schedule held constant at FR 1 (i.e., once the exchange period was reached, each of the 60 token deposits produced a food pellet), the token-production ratio was increased gradually from FR 60 to FR 125 over the course of 10 sessions. Responding was characterized by a two-state pattern: alternating bouts of responding and pausing, similar to that seen under FR schedules of food reinforcement. The break-run pattern persisted in extinction, when responses no longer produced tokens, an effect also seen with FR schedules of food reinforcement (Ferster & Skinner, 1957).

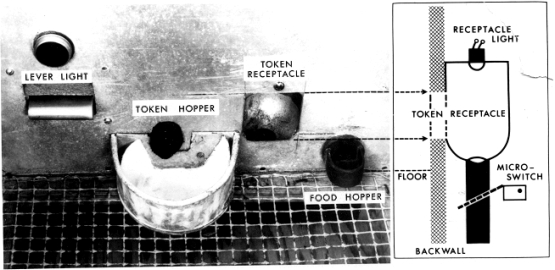

Malagodi (1967a, b, c) extended Kelleher's research on token-reinforced behavior, using rats rather than chimpanzees as subjects and marbles rather than poker chips as tokens. The basic apparatus is depicted in Figure 2, which shows a photograph of the front wall of the chamber and a diagram of the token receptacle. Lever presses produced marbles, dispensed into the token hopper. During scheduled exchange periods (denoted by a clicker and local illumination of the token receptacle), depositing the tokens in the token receptacle produced food, dispensed into the food hopper.

Fig. 2.

Photograph of intelligence panel and diagram (side view) of token receptacle used in Malagodi's research with rats as subjects and marbles as tokens. See text for details. From Malagodi (1967a). Reprinted with permission.

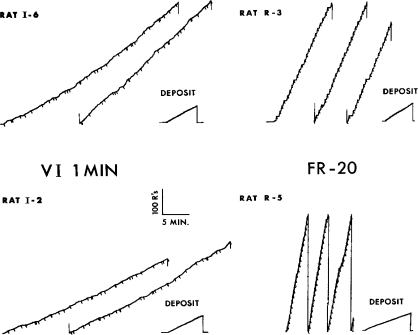

In several experiments, Malagodi (1967a, b, c) examined response patterning under various token-production schedules. Illustrative cumulative records from Malagodi (1967a) are shown in Figure 3, with VI and FR token-production responding on the left and right panels, respectively. The exchange-production and token-exchange (deposit) schedules were held constant at FR 1. Consistent with Kelleher's findings, FR token-production patterns showed a high steady rate with occasional preratio pausing, characteristic of FR patterning with food reinforcers. Similarly, VI token-production responding was characterized by a lower but steady rate, resembling response patterning on simple VI schedules. The token-exchange (deposit) records show a relatively constant rate, appropriate to the FR-1 exchange schedule. Similar schedule-typical response patterns under FR and VI token-production schedules were reported by Malagodi (1967b, c).

Fig. 3.

Representative cumulative records for rats exposed to token-reinforcement schedules. Left panels: VI 1-min token-production schedules. Right panels: FR 20 token-production schedules. Pips indicate token deliveries. Exchange-production and token-exchange schedules (labeled deposit in the figure) were both FR 1. From Malagodi (1967a). Reprinted with permission.
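A VI token-production schedule such as the VI 1-min schedule in Figure 3 can be sketched by arming reinforcement after a random interval and reinforcing the first response after arming. How Malagodi generated his intervals is not stated here; the sketch assumes exponentially distributed intervals, and the class name `VariableInterval` is hypothetical.

```python
import random
from typing import Optional


class VariableInterval:
    """VI schedule: the first response after a randomly timed interval is reinforced.

    Assumption: intervals are exponentially distributed with the given mean,
    and each new interval is timed from the reinforced response.
    """

    def __init__(self, mean_s: float, seed: Optional[int] = None) -> None:
        self.mean_s = mean_s
        self.rng = random.Random(seed)
        self.armed_at = self._next_interval_end(0.0)

    def _next_interval_end(self, t: float) -> float:
        return t + self.rng.expovariate(1.0 / self.mean_s)

    def respond(self, t: float) -> bool:
        if t >= self.armed_at:
            self.armed_at = self._next_interval_end(t)
            return True
        return False


# One response per second for a 10-hr simulated session on VI 60 s.
vi = VariableInterval(60.0, seed=1)
tokens = sum(vi.respond(float(t)) for t in range(36_000))
print(tokens)  # roughly 36,000 / 60, slightly fewer because responses are 1 s apart
```

Because reinforcement depends on elapsed time rather than response count, the number of tokens earned changes little with response rate once responding is steady, which is one reason VI schedules tend to support moderate, even rates.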

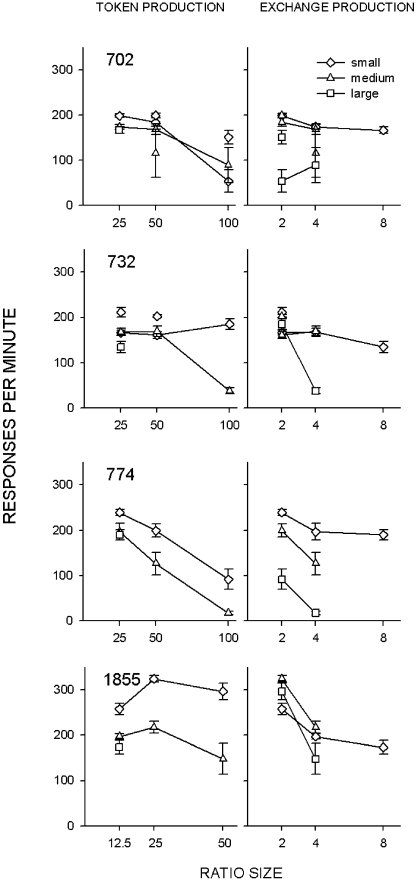

More recently, Bullock and Hackenberg (2006) expanded the analysis of token-production effects, parametrically examining behavior under both FR token-production and exchange-production schedules under steady-state conditions. The subjects were pigeons and the tokens consisted of stimulus lamps (LEDs) mounted in a horizontal array above the response keys in an otherwise standard experimental chamber. With the token-exchange schedule held constant at FR 1, the token-production ratio was varied systematically across conditions from FR 25 to FR 100 at each of three different exchange-production FR values (2, 4, and 8). Figure 4 shows response rates on the token-production schedule as a function of the token-production and exchange-production ratios. In general, response rates declined with increases in the token-production FR (left panels), especially at the higher exchange-production ratios (as denoted by symbols), an effect that corresponds well to that reported with simple FR schedules (Mazur, 1983). (Exchange-production effects [right panels] will be taken up in the next section.) The evidence for such an effect in Kelleher (1958) is only suggestive, because the token-production FR was increased incrementally over approximately 10 sessions and data were shown only for the lowest and highest ratios.

Fig. 4.

Token-production and exchange-production response rates as a function of FR token-production ratio (left panels) and exchange-production ratio (right panels) of 4 pigeons across the final five sessions of each condition. Note that the graphs in left and right panels are derived from the same data, plotted differently to highlight the impact of token-production and exchange-production ratios. Different symbols represent different ratio sizes for the exchange-production ratios (left panels) and token-production ratios (right panels). Unconnected points represent data from replicated conditions; error bars represent standard deviations. From Bullock & Hackenberg (2006). Copyright 2006, by the Society for the Experimental Analysis of Behavior, Inc. Reprinted with permission.
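The arithmetic behind the parametric design in Bullock and Hackenberg (2006) can be made explicit. With the token-exchange schedule at FR 1, each food delivery costs the token-production ratio in responses, but the exchange-production ratio multiplies the number of responses that must be emitted before any exchange period occurs. The sketch below tabulates both quantities; the function name is hypothetical, and the intermediate token-production value of 50 is an assumption, since only the endpoints (FR 25 and FR 100) are given in the text.

```python
def cycle_costs(token_fr: int, exchange_fr: int, token_exchange_fr: int = 1):
    """Return (responses per exchange-production cycle, responses per food)
    when all three token-schedule components are fixed ratios."""
    responses_per_cycle = token_fr * exchange_fr
    food_per_cycle = exchange_fr / token_exchange_fr
    return responses_per_cycle, responses_per_cycle / food_per_cycle


for exchange_fr in (2, 4, 8):
    for token_fr in (25, 50, 100):  # 50 is a hypothetical intermediate value
        per_cycle, per_food = cycle_costs(token_fr, exchange_fr)
        print(f"token FR {token_fr:3d}, exchange FR {exchange_fr}: "
              f"{per_cycle:4d} responses per cycle, {per_food:.0f} per food")
```

Under these assumptions the cost per food is the same at every exchange-production ratio, so any effect of the exchange-production ratio in Figure 4 is not an effect of per-food cost; it coincides instead with more responding required before the first exchange.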

Taken together, the results of Kelleher (1958) with chimpanzees and poker-chip tokens, of Malagodi (1967a, b, c) with rats and marble tokens, and of Bullock and Hackenberg (2006) with pigeons and stimulus-lamp tokens show that performance under schedules of token production resembles, in both patterning and rate, performance under schedules of food reinforcement (Mazur, 1983), suggesting that tokens do indeed serve as conditioned reinforcers. Token-reinforced behavior is also modulated, however, by the exchange-production schedule that determines how and when exchange opportunities are made available. In the Bullock and Hackenberg experiment, for example, response rates were generally low in the early segments of the exchange-production cycle, but increased throughout the cycle, as additional tokens were earned. Response rates were thus a joint function of the token-production schedule and exchange-production schedule.
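The joint dependence on token-production and exchange-production requirements can be made concrete with a little arithmetic. The sketch below is a hypothetical helper (the class and method names are illustrative, not taken from any published software). It assumes fixed-ratio requirements throughout and counts one exchange response per token deposited, as with the FR 1 token-exchange schedule described above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TokenSchedule:
    """Fixed-ratio requirements of a three-component token schedule (illustrative)."""
    token_production_fr: int     # responses required to earn one token
    exchange_production_fr: int  # tokens required to produce an exchange period
    token_exchange_fr: int = 1   # tokens deposited per food delivery (assumed to divide evenly)

    def responses_to_exchange(self, tokens_held: int) -> int:
        """Token-production responses still required before the exchange period."""
        return (self.exchange_production_fr - tokens_held) * self.token_production_fr

    def responses_per_cycle(self) -> int:
        """All responses in one cycle: token production plus one deposit per token."""
        tokens = self.exchange_production_fr
        return tokens * self.token_production_fr + tokens

    def food_per_cycle(self) -> float:
        """Food deliveries available in one exchange period."""
        return self.exchange_production_fr / self.token_exchange_fr

    def responses_per_food(self) -> float:
        """Overall response cost of each food delivery."""
        return self.responses_per_cycle() / self.food_per_cycle()


# One cell of a Bullock and Hackenberg (2006)-style grid: FR 50 per token, 4 tokens per exchange.
schedule = TokenSchedule(token_production_fr=50, exchange_production_fr=4)
print([schedule.responses_to_exchange(k) for k in range(4)])  # [200, 150, 100, 50]
print(schedule.responses_per_food())  # 51.0
```

Each token earned removes one token-production unit from the remaining requirement, so the number of tokens in the array is correlated with proximity to the exchange period; this is one way of seeing why within-cycle response rates can depend on both ratios at once.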

Exchange-Production Schedules

More direct evidence of exchange-production effects comes from studies in which the exchange-production schedule value or type is directly manipulated. For example, Webbe and Malagodi (1978) compared rats' response rates under VR and FR exchange-production schedules while holding constant the token-production schedule (FR 20) and token-exchange schedule (FR 1) requirements. In other words, a fixed number of lever presses was required to produce tokens (marbles) and to exchange tokens for food, but groups of tokens were required to produce an exchange period. In some conditions, the exchange-production schedule was FR 6 (six tokens required to produce exchange periods), and in others VR 6 (an average of six tokens required to produce exchange periods). Responding was maintained under both schedule types, but at higher rates under the VR schedules. Most of the rate differences were due to differential postexchange pausing produced by the schedules. The FR exchange-production schedule generated extended pausing early in the cycle, giving way to higher rates later in the cycle (the “break-run” pattern characteristic of simple FR schedules). This bivalued pattern was greatly attenuated under the VR exchange-production schedule.

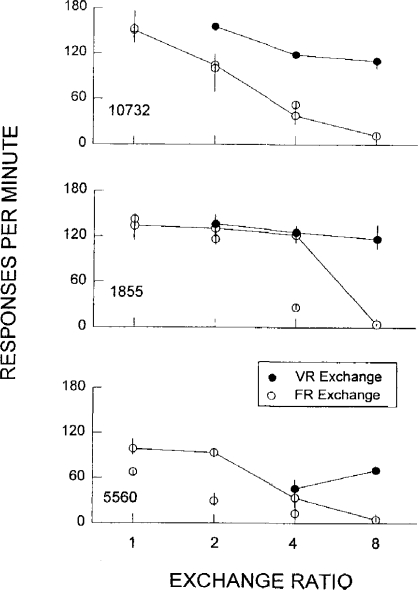

Foster et al. (2001) reported similar effects with FR and VR exchange ratios varied over a much wider range of values. Pigeons' keypecks produced tokens (stimulus lamps) according to an FR 50 schedule and exchange opportunities according to FR and VR schedules. Exchange schedule type (FR or VR) was varied within a session in a multiple-schedule arrangement, whereas the schedule value was systematically varied from 1 to 8 across conditions (requiring between 50 and 400 responses per exchange period). Response rates were consistently higher, and pausing consistently shorter, under VR than under comparable FR exchange-production schedules. Moreover, rates were more sensitive to exchange-production ratio size under FR than under VR schedules. These effects are portrayed in Figure 5, which shows response rates as a function of exchange-production ratio size and schedule type. Here, as in Bullock and Hackenberg (2006; right panels of Figure 4), response rates decreased with increases in the size of the FR exchange-production schedule. These effects on response rates, as well as those on preratio pausing (not shown), resemble effects typically obtained with simple FR and VR schedules, suggesting that extended segments of behavior may be organized by exchange schedules in much the same way that local response patterns are organized by simple schedules.
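The contrast between the two exchange-production schedule types can be sketched as a generator of per-cycle token requirements. This is a minimal illustration, not the procedure used by Foster et al. (2001): the function name is hypothetical, and the VR distribution (uniform on 1 through 2n − 1, which has mean n) is an assumption made only for illustration. With an FR 50 token-production schedule, exchange ratios of 1 through 8 correspond to 50 through 400 responses per exchange period (on average, under VR), but only under VR does the requirement change unpredictably from one exchange to the next.

```python
import random
from statistics import mean

TOKEN_PRODUCTION_FR = 50  # responses per token, as in Foster et al. (2001)


def exchange_requirements(kind: str, value: int, n_cycles: int, rng: random.Random) -> list[int]:
    """Tokens required to produce each successive exchange period.

    FR: every cycle requires exactly `value` tokens.
    VR: requirements vary from cycle to cycle around a mean of `value`. The
    uniform draw on 1..(2*value - 1) is an assumption made for illustration;
    the published studies may have used a different list of ratio values.
    """
    if kind == "FR":
        return [value] * n_cycles
    if kind == "VR":
        return [rng.randint(1, 2 * value - 1) for _ in range(n_cycles)]
    raise ValueError(f"unknown schedule type: {kind}")


rng = random.Random(0)
for value in (1, 2, 4, 8):
    fr = exchange_requirements("FR", value, 1000, rng)
    vr = exchange_requirements("VR", value, 1000, rng)
    # Columns: ratio value, FR responses per exchange, mean VR responses per exchange,
    # and the smallest and largest VR token requirements sampled.
    print(value, TOKEN_PRODUCTION_FR * fr[0], TOKEN_PRODUCTION_FR * mean(vr), min(vr), max(vr))
```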

Fig. 5.

Token-production response rates of 3 pigeons as a function of exchange-ratio size and schedule type: FR (open circles) and VR (closed circles) over the final five sessions of each condition. Unconnected points denote data from replicated conditions. Error bars indicate the range of values contributing to the means. From Foster, Hackenberg, & Vaidya (2001). Copyright 2001, by the Society for the Experimental Analysis of Behavior, Inc. Reprinted with permission.

Viewed in this way, token schedules closely resemble second-order schedules of reinforcement (Kelleher, 1966a), in which a pattern of behavior generated under one schedule (the first-order, or unit, schedule) is treated as a unitary response reinforced according to another schedule (the second-order schedule). In a token arrangement, first-order (token-production) units are reinforced according to a second-order (exchange-production) schedule. If the exchange-production schedules are acting as higher-order schedules, then the rate and patterning of token-production units should be schedule-appropriate. In the Foster et al. (2001) study, for example, the rate of FR 50 token-production units was higher under VR than under FR exchange, owing mainly to extended pausing in the early segments under the FR exchange-production ratio. That the functions relating pausing and response rate to schedule value and type also hold at the level of second-order schedules suggests that token-production units might profitably be viewed as individual responses themselves reinforced according to a second schedule, supporting a view of token schedules as a kind of second-order schedule.

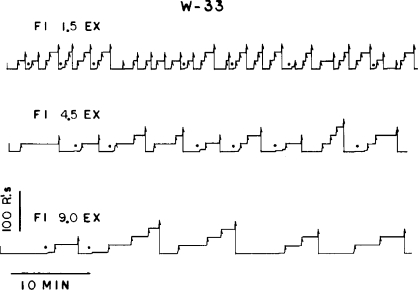

Further evidence of exchange-schedule modulation over token-reinforced behavior comes from studies utilizing time-based exchange-production schedules. In a study by Waddell, Leander, Webbe, and Malagodi (1972), rats' lever presses produced tokens (marbles) under FR 20 schedules in the context of FI exchange schedules, which varied parametrically from 1.5 to 9 min across conditions. The first token produced after the FI exchange interval timed out produced an exchange period (light and clicker) in the presence of which each token could be exchanged for a food pellet.

Token-production patterns were characterized by bivalued (break-run) patterns, such that responding either occurred at high rates or not at all, similar to that seen under simple FR schedules. These patterns are illustrated in Figure 6, which shows cumulative records of responding across the exchange-production FI cycle (within each panel) and as a function of exchange-schedule FI durations (across panels). The temporal pattern of token-production units within an exchange cycle was positively accelerated, similar to that seen under simple FI schedules, with longer pauses near the beginning of a cycle giving way to higher rates as the exchange period grew nearer. Overall rates decreased and quarter-life values increased with increases in the FI exchange schedule, similar to that seen under FI schedules of food presentation. The overall pattern of results is consistent with that seen under second-order schedules of brief stimulus presentation (Kelleher, 1966b), further supporting the view that token-production schedules are units conditionable with respect to second-order schedule requirements.
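Quarter-life, the index of within-interval patterning mentioned above, is conventionally the time taken to emit the first 25% of the responses in an interval. Values near 25% of the interval indicate roughly constant responding, and larger values indicate positively accelerated responding. The function below is a minimal sketch with a hypothetical name; published analyses sometimes interpolate between responses rather than taking the first response that reaches the 25% mark, as is done here, and the response times in the example are synthetic.

```python
import math


def quarter_life(response_times_s: list[float]) -> float:
    """Time (s from interval onset) by which 25% of the interval's responses occurred.

    Uses the first response at or beyond the 25% count; no interpolation.
    """
    if not response_times_s:
        raise ValueError("quarter-life is undefined for an interval with no responses")
    times = sorted(response_times_s)
    k = math.ceil(0.25 * len(times))  # number of responses making up the first quarter
    return times[k - 1]


interval_s = 100.0
steady = [float(t) for t in range(1, 101)]        # one response per second throughout
accelerated = [float(t) for t in range(51, 101)]  # no responding in the first half
print(quarter_life(steady) / interval_s)       # 0.25
print(quarter_life(accelerated) / interval_s)  # 0.63
```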

Fig. 6.

Representative cumulative records for one rat exposed to FR 20 token-production schedules as a function of exchange-production schedules: FI 1.5 min (top), FI 4.5 min (middle) and FI 9 min (bottom). Token deliveries are indicated by pips, exchange periods by resets of the response pen. From Waddell, Leander, Webbe, & Malagodi (1972). Reprinted with permission.

Token-Exchange Schedules

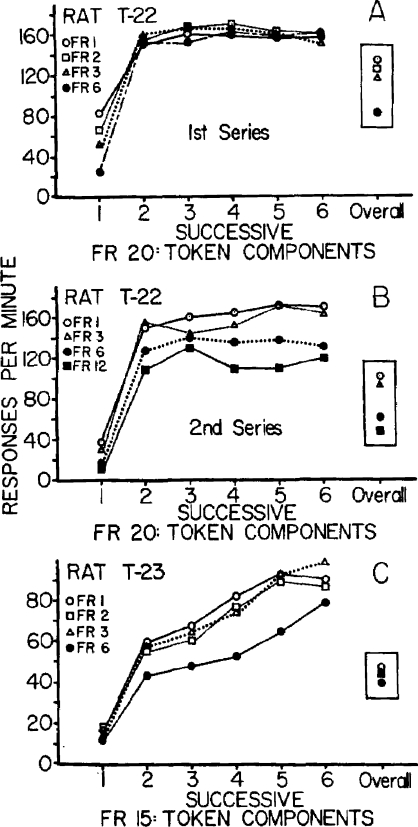

In the studies reviewed thus far, the token-production and/or exchange-production schedules have been manipulated in the context of a constant token-exchange schedule. That is, once the exchange period is produced, each token-exchange response removes a token and produces food. In the only published study in which the token-exchange schedule was manipulated (Malagodi, Webbe, & Waddell, 1975), rats' lever presses produced marble tokens according to an FR 20 token-production schedule, and exchange periods according to either FR 6 (2 rats) or FI t-s schedules (2 rats). The token-exchange ratio was systematically manipulated across conditions, such that multiple tokens were required to produce each food pellet.

Figure 7 shows initial-link response rates across successive token-production FRs as a function of the terminal-link token-exchange schedule for the pair of FR subjects (divided by phase for Rat T-22). Response rates increased in proximity to the terminal-link exchange schedule and were ordered inversely with the token-exchange FR. Similar effects were observed in the rats exposed to the FI exchange-production schedule (not shown). Together, these results are consistent with those obtained with chained schedules more generally (Ferster & Skinner, 1957; Hanson & Witoslawski, 1959). Moreover, the token-production FR units resembled simple FR units, consistent with findings in the literature on second-order schedules (Kelleher, 1966a), suggesting that token-production schedules create integrated response sequences reinforced according to second-order exchange-production and token-exchange schedules.

Fig. 7.

Response rates across successive FR token-production segments as a function of token-exchange FR for 2 rats. Overall mean response rates are shown on the right-hand side of each plot. From Malagodi, Webbe, & Waddell (1975). Copyright 1975, by the Society for the Experimental Analysis of Behavior, Inc. Reprinted with permission.

In sum, behavior under token reinforcement schedules is a joint function of the contingencies whereby tokens are produced and exchanged for other reinforcers. Other things being equal, contingencies in the later links of the chain exert disproportionate control over behavior. Token schedules are part of a family of sequence schedules that includes second-order and extended chained schedules. Like these other schedules, token schedules can be used to create and synthesize behavioral units that participate in larger functional units under the control of other contingencies. Token schedules differ, however, from these other sequence schedules in several important ways.

First, unlike conventional chained and second-order schedules, the added stimuli in token schedules are arrayed continuously, and each token is paired with food during exchange periods. These token–food relations may render token schedules more susceptible to stimulus–stimulus intrusions than other sequence schedules, as discussed below in relation to antecedent stimulus functions. Second, unlike other sequence schedules, token schedules provide a common currency (tokens) in relation to which reinforcer value can be scaled. This makes the procedures useful in exploring symmetries between the behavioral effects of losses and gains, as discussed in Section II. Third, the interdependent components of a token system (production and exchange for other reinforcers) closely resemble the point or money reinforcement systems commonly used in laboratory research with humans. This makes token procedures especially useful in narrowing the methodological gulf separating research with humans from that with other animals, as discussed in Section III. Finally, added stimuli (number of tokens) in token schedules are correlated not only with temporal proximity to food (as in other sequence schedules) but with amount of reinforcement available in exchange. This makes token schedules especially useful tools in studying economic concepts, such as unit price, as discussed in Section IV.

Token Accumulation

Another distinctive feature of token reinforcement schedules is the accumulation of tokens prior to exchange. In an early experiment by Cowles (1937), chimpanzees were studied under three conditions: (1) tokens were earned and accumulated but could not be exchanged until the end of the interval, (2) food was earned and accumulated but could not be consumed until the end of the interval, and (3) food was earned but did not accumulate and could not be consumed until the end of the interval. Subjects were trained under three different FI exchange values (1, 3, and 5 min) before the final value of 10 min was reached. For one subject, responding was maintained under (1) and (2) but not under (3). For the other subject, responding was weakly but consistently maintained under (1) but not under (2) or (3). For both subjects, the number of tokens earned decreased throughout the interval (counter to the typical FI pattern), an effect attributed to “token satiation.” In support of this notion, when tokens (5, 15, or 30) were provided at the beginning of the session, token-maintained behavior decreased, such that an approximately equal number of tokens (free + earned) was obtained. To complicate matters, however, the total number of tokens or grapes decreased across sessions, suggesting perhaps additional motivational factors.

More recently, Sousa and Matsuzawa (2001) reported accumulation of tokens (coins) by chimpanzees in a match-to-sample procedure. Correct responses produced a token that could be exchanged at any time by inserting it into a slot. Once inserted, a single exchange response would deliver food reinforcement. Performance initially established via food reinforcement showed little or no deterioration when token reinforcers were substituted for food. The token reinforcement contingency was also sufficient to generate accurate responding in new discriminations. Thus, consistent with the older studies by Cowles (1937) and Wolfe (1936), token reinforcement contingencies appear to be effective in establishing and maintaining discriminative performance in a variety of tasks. The authors also noted “spontaneous” savings of tokens in one of the chimps, spontaneous in the sense that it was not strictly required by the contingencies. The authors reasoned that such behavior was adaptive, requiring less effort in the long run. That is, accumulating groups of tokens before exchange means that the exchange response (in this case, moving from one part of the chamber to another) need only be executed once rather than repeatedly, increasing the overall reinforcer density (number of reinforcers per unit time). Although plausible, their procedures do not permit a clear test of this notion.

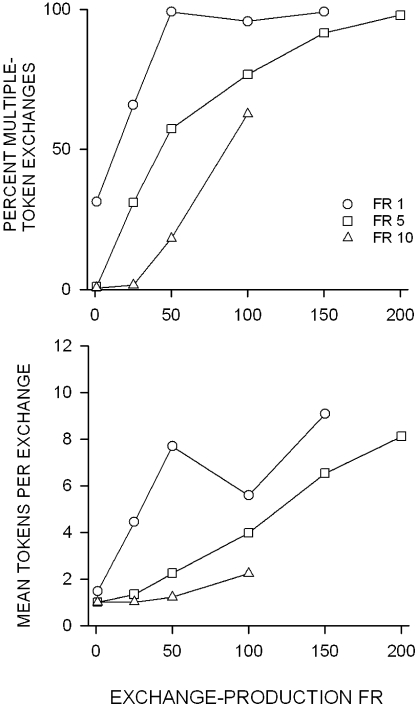

Yankelevitz, Bullock, and Hackenberg (2008) examined patterns of token accumulation under a variety of ratio-based token-production and exchange schedules, permitting clearer specification of the relative costs of accumulating and exchanging tokens. Cycles began with the illumination of a token-production key. Satisfying an FR on this key produced a token and simultaneously illuminated a second key, the exchange-production key. Satisfying the exchange-production ratio produced the exchange period, during which all of the tokens earned that cycle could be exchanged for food. Unlike the extended-chained schedules reviewed above, in which token-production and exchange-production schedules were arranged sequentially in chain-like fashion, the token-production and exchange-production ratios here were arranged concurrently after the first token had been earned, providing subjects with a choice between accumulating additional tokens and moving directly to the exchange period.

The token-production and exchange-production ratios were systematically varied across conditions, with the exchange-production ratio manipulated at three different token-production ratios. Summary data are shown in Figure 8. The top panel shows percentage of cycles in which accumulation occurred (two or more tokens prior to exchange) as a function of FR exchange-production size, averaged across 3 subjects. The bottom panel shows the average number of tokens accumulated per exchange, also expressed as a function of FR exchange-production size. The different FR token-production values are displayed separately (denoted by different symbols and data paths). In general, accumulation varied directly with the exchange-production ratio and inversely with the token-production ratio. In other words, as the costs associated with producing the exchange period increased, more tokens were accumulated prior to exchange. Conversely, as the costs of producing each token increased, fewer tokens were accumulated.

Fig. 8.

Mean token accumulation as a function of FR exchange-production ratio. Top panel: Percentage of cycles in which multiple tokens accumulated prior to exchange. Bottom panel: Mean tokens accumulated per exchange period. The different symbols represent different token-production FR sizes. Data are based on the final five sessions per condition, averaged across 3 subjects. (Adapted from Yankelevitz, Bullock, & Hackenberg, 2008).

Viewing the tokens as conditioned reinforcers, the results are consistent with prior research on reinforcer accumulation (Cole, 1990; Killeen, 1974; McFarland & Lattal, 2001), but over a wider parametric range of conditions. Moreover, as Cole and others have noted, accumulation procedures involve tradeoffs between short-term and longer-term reinforcement variables. Going immediately to exchange minimizes the upcoming reinforcer delay, but at the expense of increasing the overall response:reinforcer ratio. Conversely, accumulation increases the delay to the upcoming reinforcer, but reduces the number of responses per unit of food (unit price). Token accumulation procedures thus bear on current debates over the proper time scale over which behavior is related to reinforcement variables. This topic will be discussed in more detail in Section IV.
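The tradeoff can be expressed directly. If n tokens are accumulated under an FR t token-production ratio and an FR e exchange-production ratio, the cycle requires nt + e responses before any food is delivered, and the unit price is t + e/n responses per food unit. The sketch below uses response counts as a stand-in for delay (which assumes a roughly constant response rate) and ignores the responses made during the exchange period itself; the function name and the FR 10 and FR 50 values are hypothetical, not conditions from Yankelevitz et al. (2008).

```python
def accumulation_costs(tokens: int, token_fr: int, exchange_fr: int) -> tuple[int, float]:
    """Responses before the first food, and responses per unit of food (unit price),
    when `tokens` tokens are accumulated before the exchange-production ratio is completed.

    Counts token-production and exchange-production responses only; the
    responses made during the exchange period itself are ignored.
    """
    if tokens < 1:
        raise ValueError("at least one token must be earned before exchange")
    responses = tokens * token_fr + exchange_fr
    return responses, responses / tokens


# Hypothetical values: FR 10 token production, FR 50 exchange production.
for n in (1, 5, 10):
    print(n, accumulation_costs(n, token_fr=10, exchange_fr=50))
```

Going from 1 to 10 accumulated tokens raises the responses before food from 60 to 150 but cuts the unit price from 60 to 15. Larger exchange-production ratios enlarge the savings from accumulating (the e/n term), whereas larger token-production ratios enlarge the delay cost of each additional token (the nt term), which is consistent with the direction of the effects shown in Figure 8.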

Antecedent Stimulus Functions of Tokens

The evidence reviewed above strongly suggests that tokens serve conditioned reinforcing functions. As stimuli temporally related to other reinforcers, however, tokens also acquire antecedent functions, both discriminative and eliciting. Research bearing on these functions will be addressed in this section.

Discriminative Functions

In the Kelleher (1958) study reviewed above, low response rates in the early token-production segments gave way to high and roughly invariant rates in the later segments (in closer proximity to the exchange period and food). Providing free tokens at the outset of the session strengthened this weak early-link responding, suggesting that the tokens were serving a discriminative function, signaling temporal proximity to the exchange periods and food. Thus, despite no change in the schedule requirements (the same number of responses was required to produce tokens and exchange periods), responding was strengthened by arranging stimulus conditions more like those prevailing later in the cycle. The discriminative functions of stimuli early in the sequence parallel effects seen on extended-chained schedules with ratio components (Jwaideh, 1973; see review by Kelleher & Gollub, 1962).

Similar discriminative functions were likely at work in the so-called token satiation effect reported by Cowles (1937), in which providing free tokens at the beginning of a session resulted in fewer tokens earned. Recall that responding decreased throughout the interval, such that the stimulus conditions late in the interval controlled low rates of behavior. More direct evidence of discriminative effects of tokens on accumulation comes from the Yankelevitz et al. (2008) study, described in the preceding section. When the tokens were removed but all other contingencies held constant, accumulation decreased markedly, suggesting that the tokens were discriminative for further token earning.

Eliciting Functions

In addition to reinforcing and discriminative functions, pairing tokens and food may also establish CS functions of the tokens. In one of Cowles' (1937) discrimination experiments, food-paired tokens were provided for correct responses and nonpaired tokens for incorrect responses. In addition to serving as a more effective reinforcer, the paired but not the unpaired tokens evoked strong token-directed consummatory responses. The chimpanzees came to respond to the paired tokens as if they were food, putting them into their mouths, and so on. Such token-directed consummatory behavior has also been reported in other studies with manipulable tokens: poker chips (Kelleher, 1958), marbles (Malagodi, 1967d), and ball bearings (Boakes, Poli, Lockwood, & Goodall, 1978; Midgley, Lea, & Kirby, 1989).

In the Midgley et al. (1989) study, for example, rats were trained to deposit ball bearings in a floor receptacle by reinforcing precursor responses (e.g., handling, rolling tokens toward the receptacle) with food. In two experiments, depositing was successfully trained in 13 of 15 rats. In addition to the reinforced response, however, the authors noted frequent instances of “misbehavior,” defined as an excess frequency of precursor responses. That such behavior continued to occur despite postponing food deliveries suggests the involvement of evocative or eliciting functions of the tokens. A procedural analysis of token procedures lends plausibility to this account. Tokens are closely coupled with exchange periods and food throughout most token-reinforcement contingencies, ensuring repeated food–token pairings. In addition to the close temporal pairing of tokens and food during exchange periods, the presentation and accumulation of tokens on many token procedures is temporally correlated with food, differentially signaling delays to food. Thus, apart from the discriminative functions noted above, tokens may also evoke or elicit behavior based on their temporal relations to food.

Such token-evoked behavior is probably more likely with manipulable tokens such as marbles and poker chips than with nonmanipulable tokens such as lights. Manipulable tokens are handled in various ways throughout the sequence of earning and exchanging them for food, and this physical contact with the tokens then becomes part of the behavior engendered by the procedures. With nonmanipulable tokens, on the other hand, such physical contact with the tokens is permitted but not required by the contingencies. Although pigeons have been known to orient to and occasionally peck at token lights, such token-directed behavior occurs much less often than with manipulable tokens.

Behavior evoked or elicited by tokens is an interesting and important topic in its own right, and may play an important role in a comprehensive analysis of token systems. Because such behavior is often incompatible with behavior reinforced by tokens, however, it may be desirable when examining primarily operant functions of tokens to use nonmanipulable tokens, less susceptible to intrusions from embedded stimulus–food relations. Specific methodological choices therefore depend on the types of questions asked.

Punishing Functions

If tokens function as conditioned reinforcers, then the loss of tokens should function as conditioned punishers. Such token-loss contingencies, also known as response-cost contingencies, are common in application and in everyday life, but until recently have not been an explicit topic of laboratory investigation with nonhumans. The main obstacle appears to be methodological. With manipulable tokens, such as marbles or poker chips, the tokens are in a subject's possession until they are exchanged for food; there is no obvious way to remove a token once it has been earned. With nonmanipulable tokens, such as lights, however, tokens are earned and accumulated but are not physically in a subject's possession. Removing a token is just as straightforward as presenting one, by extinguishing rather than illuminating a token light. This makes such procedures especially well-suited to the study of conditioned punishment via token loss.

In the first published study along these lines, Pietras and Hackenberg (2005) examined token-loss contingencies in a multiple-schedule arrangement with pigeons. Tokens consisted of stimulus lights, mounted in a horizontal array above the response keys (as described above). Identical VR 4 (RI 30 s) schedules of token reinforcement operated in both components, such that tokens were produced on average every 30 s, and exchanges were produced on average following every four tokens. A conjoint FR schedule of token loss was arranged in one component (punishment component), such that every n responses (either 10 or 2, in different conditions) removed a token from the token array.

Response rates were differentially suppressed in the punishment component, demonstrating a clear punishment function of token loss. Because both the exchange-production and token-loss schedules were ratio based, exchanges and food were infrequent in the punishment component. Despite extremely low rates of food reinforcement, responding was not completely suppressed, reaching approximately 30–40% of baseline (unpunished) levels, even under the most stringent (FR 2) token-loss schedule. Only when the tokens were no longer produced under extinction conditions was responding eliminated. This suggests that the mere production of tokens as conditioned reinforcers maintained behavior even when they were only rarely exchanged for food. To test this, Pietras and Hackenberg (2005) conducted an additional condition, termed exchange extinction, in which the token-loss contingency was suspended—permitting production and accumulation of tokens—but tokens could not be exchanged for food. Exchange responses turned off tokens but did not produce food. Response rates under these conditions stabilized well above rates in the extinction condition and at approximately the same levels as in the punishment conditions, suggesting that responding was maintained by the production and accumulation of tokens as conditioned reinforcers even in the signaled absence of food. These effects parallel those seen with other conditioned reinforcers (Horney & Fantino, 1984).
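Rough arithmetic helps show why food was rare in the punishment component. An RI 30-s token schedule delivers, on average, at most about two tokens per minute, whereas an FR n loss schedule can remove a token for every n responses. The sketch below (hypothetical function names; the rate of 40 responses per minute is an illustrative assumption, not a value from the study) computes these upper bounds and the response rate above which potential losses exceed potential gains. Actual losses also depend on whether any tokens are present to remove, which this arithmetic ignores.

```python
def token_flow_per_min(responses_per_min: float, loss_fr: int,
                       mean_token_interval_s: float = 30.0) -> tuple[float, float]:
    """Upper bounds on tokens gained and tokens lost per minute.

    Gains: a random-interval schedule yields, on average, at most one token per mean interval.
    Losses: a conjoint FR schedule removes one token every `loss_fr` responses,
    provided a token is available to remove.
    """
    max_gain = 60.0 / mean_token_interval_s
    max_loss = responses_per_min / loss_fr
    return max_gain, max_loss


def break_even_rate(loss_fr: int, mean_token_interval_s: float = 30.0) -> float:
    """Responses per minute above which potential losses exceed potential gains."""
    return loss_fr * 60.0 / mean_token_interval_s


for loss_fr in (10, 2):
    print(loss_fr, token_flow_per_min(40.0, loss_fr), break_even_rate(loss_fr))
```

Under the FR 2 loss schedule, any rate above about 4 responses per minute could remove tokens faster than the RI schedule supplies them; under FR 10 the corresponding rate is about 20 responses per minute.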

Overall, the results are consistent with prior findings with human subjects: Response-contingent token loss suppresses behavior on which it is contingent (Munson & Crosbie, 1998; O'Donnell, Crosbie, Williams, & Saunders, 2000; Weiner, 1962, 1963, 1964). Contingent token loss also produces indirect effects on behavior, such as contrast (Crosbie, Williams, Lattal, Anderson, & Brown, 1997), comparable to that seen with electric-shock punishment (Brethower & Reynolds, 1962). Together with the Pietras and Hackenberg (2005) findings with pigeons, the results with human subjects appear to support an interpretation based on negative punishment (i.e., punishment via token loss). In negative-punishment studies, however, suppression due to punishment is confounded with changes in reinforcement density that normally accompany response suppression. That is, because negative punishment, by definition, involves reinforcer loss, reductions in responding may be attributed not to a direct punishment effect, but to concomitant changes in reinforcer density.

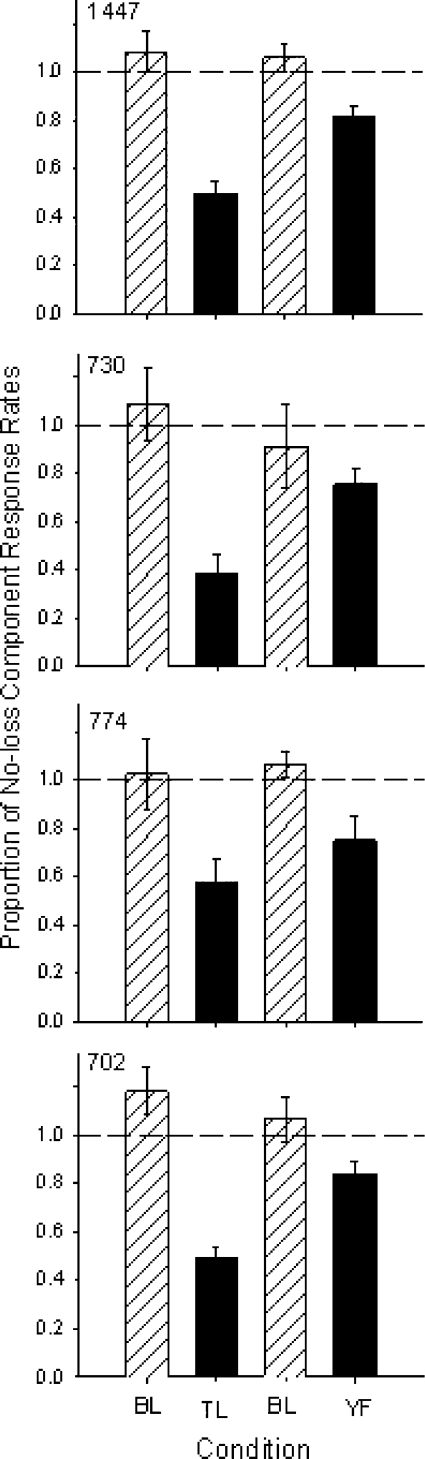

To overcome this interpretive difficulty, Raiff, Bullock, and Hackenberg (2008) compared response suppression under token-loss contingencies to that under yoked schedules with comparable food density. Like the Pietras and Hackenberg (2005) study, a multiple-schedule format was used with pigeons as subjects and lights as tokens. Identical schedules of token gain, VR 4 (RI 30 s), operated in both components; a conjoint FR 2 token-loss schedule was superimposed on the token schedule in one component. To separate the direct effects of punishment from indirect effects of changes in the density of food, a yoked-control condition was included, in which the punishment contingency was suspended but the density of food reinforcement was yoked to that from the token-loss condition.

Figure 9 shows summary data, averaged across the final five sessions under baseline (hatched bars) and loss (filled bars) conditions for each pigeon. The filled bars labeled TL (token loss) represent data from punishment conditions, those labeled YF (yoked food) from yoked control conditions, expressed as a proportion of the response rates in the no-loss components of the same sessions. Response rates were somewhat reduced in the YF condition, indicating some food-related decrements, but remained consistently higher than under TL conditions. Because the reinforcer densities were equivalent under the two loss conditions, the greater reductions under token-loss conditions support a punishment interpretation.

Fig. 9.

Response rates and standard deviations in baseline (hatched bars) and loss (filled bars) conditions, expressed as a percentage of rates in the no-loss component within the same session, for 4 pigeons across the final five sessions of each condition. The labels TL and YF represent data from Token-Loss and Yoked-Food conditions, respectively. See text for additional details. Adapted from Raiff, Bullock, & Hackenberg (2008).

Although the research on token loss to date is consistent with a punishment interpretation, much remains to be done. For example, little is known about how punishment effects relate to (a) punishment variables (e.g., rate, magnitude, and schedule of token loss), (b) reinforcement variables (e.g., rate, magnitude, and schedule of reinforcement maintaining the punished behavior), (c) motivational variables (e.g., deprivation, generalizability of the tokens), or (d) the availability of unpunished alternatives, to name just a few. The use of token systems may help revitalize the empirical study of aversive control, a field that has been in decline over the past few decades (Critchfield & Rasmussen, 2007).

II. Token Systems and the Symmetrical Law of Effect

One implication of the results of token-loss punishment studies is that reinforcers and punishers may be viewed in conceptually analogous terms, as symmetrical changes along a common dimension. This common dimension has long been assumed in major theories of behavior, though empirical support is surprisingly scarce. A major obstacle is methodological: reinforcers are typically appetitive events (e.g., food and water) whereas punishers are typically aversive events (e.g., electric shock). This poses serious scaling issues. For example, how does food compare to shock? Can they be measured in comparable units? One approach to the problem has been to scale reinforcers and punishers empirically, using post hoc descriptive modeling to fit the parameters (deVilliers, 1980; Farley, 1980; Farley & Fantino, 1978).

An alternative approach is to use token procedures in which gains and losses can be measured in common currency units. This was the strategy adopted by Critchfield, Paletz, MacAleese, and Newland (2003) in a study with human subjects, designed to test two different quantitative models of punishment: a direct suppression model and a competitive suppression model. The former assumes that punishment is a primary process and that losses and gains have symmetrical effects; the latter assumes that punishment is a secondary process and that losses and gains have asymmetrical effects. Human subjects were exposed to a variety of concurrent schedules in which choices could both produce and lose points (later exchangeable for money). The schedules of point gain were always unequal: One schedule paid off at a higher rate. The loss schedules were arranged in such a way that the predictions of the two models diverged. In 17 of 20 cases across three experiments in which the models made different predictions, the direct suppression model provided a better account of the data. These results are broadly consistent with the few studies conducted with nonhuman animals and electric-shock punishment (deVilliers, 1980; Farley, 1980), but the use of point gains and losses obviates the need for post hoc functional scaling of different consequence types.
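The two models can be contrasted in their simplest strict-matching forms, as they are often written: under direct suppression, punishers subtract from reinforcers on the same alternative; under competitive suppression, punishers on one alternative add to the relative value of the other. The versions fitted by Critchfield et al. (2003) may include additional parameters, so the functions below (hypothetical names) are only a sketch of the qualitative difference. Here r1 and r2 are reinforcement rates and p1 and p2 are punishment rates on alternatives 1 and 2, and the numbers in the example are hypothetical.

```python
def direct_suppression(r1: float, r2: float, p1: float, p2: float) -> float:
    """Predicted proportion of responses on alternative 1 (subtractive model)."""
    net1, net2 = r1 - p1, r2 - p2
    if net1 < 0 or net2 < 0 or net1 + net2 == 0:
        raise ValueError("model assumes non-negative net reinforcement on both alternatives")
    return net1 / (net1 + net2)


def competitive_suppression(r1: float, r2: float, p1: float, p2: float) -> float:
    """Predicted proportion of responses on alternative 1 (additive model)."""
    return (r1 + p2) / (r1 + r2 + p1 + p2)


# Hypothetical unequal gain rates (60 vs. 20), with losses superimposed on the richer side only.
print(direct_suppression(60, 20, 20, 0))       # about 0.67
print(competitive_suppression(60, 20, 20, 0))  # 0.6
```

Without punishment, both models predict 0.75 for these gain rates; adding losses to the richer alternative lowers the prediction to about 0.67 under direct suppression and to 0.6 under competitive suppression. Arranging loss schedules so that such predictions diverge is what allows the two accounts to be discriminated.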

More recently, Rasmussen and Newland (2008) studied concurrent-schedule performance in humans with schedules of point gain and loss, using the generalized matching law to quantify bias and sensitivity. Under no-punishment conditions, there was slight undermatching and little or no bias. When contingent point loss was superimposed on one of the two schedules, however, sensitivity was sharply reduced, with strong bias toward the unpunished alternative. The bias was more pronounced than would be expected from a consideration of relative reinforcement rates alone, suggesting asymmetrical effects of gains and losses. More specifically, the authors estimated that in this experiment a loss was worth approximately three times as much as a gain.
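Sensitivity and bias come from the generalized matching law, log(B1/B2) = a log(R1/R2) + log b, where B and R are response and reinforcement rates, a is sensitivity (a < 1 indicates undermatching), and b is bias (b ≠ 1 indicates a preference not accounted for by the reinforcement ratio). The sketch below fits a and b by ordinary least squares on base-10 log ratios; the function name and the synthetic data are illustrative only.

```python
import math


def fit_generalized_matching(b1, b2, r1, r2):
    """Return (sensitivity a, bias b) from paired response and reinforcement rates."""
    xs = [math.log10(x / y) for x, y in zip(r1, r2)]  # log reinforcement ratios
    ys = [math.log10(x / y) for x, y in zip(b1, b2)]  # log response ratios
    n = len(xs)
    if n < 2:
        raise ValueError("need at least two conditions")
    mx, my = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    a = sxy / sxx             # slope: sensitivity
    log_b = my - a * mx       # intercept: log bias
    return a, 10 ** log_b


# Synthetic data generated with a = 0.8 (undermatching) and b = 1.5 (bias toward alternative 1).
r1 = [10, 20, 40, 80, 160]
r2 = [160, 80, 40, 20, 10]
b1 = [1.5 * (x / y) ** 0.8 for x, y in zip(r1, r2)]
b2 = [1.0] * len(r1)
print(fit_generalized_matching(b1, b2, r1, r2))  # approximately (0.8, 1.5)
```

In these terms, the Rasmussen and Newland (2008) result corresponds to a smaller fitted a and a b favoring the unpunished alternative once point loss was added.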

Using a similar analytic strategy with human subjects, Magoon and Critchfield (2008) examined responding under similarly structured concurrent schedules of positive (point gain) and negative (point-loss avoidance) reinforcement. Two matching functions were obtained per subject: one under homogeneous conditions (all positive reinforcement) and one under heterogeneous conditions (positive and negative reinforcement, arranged concurrently). Because the gains and the avoided losses were of similar magnitude, any bias would reflect the differential impact of one consequence type over the other. No such bias was found, indicating symmetrical effects of losses and gains.

Extending these lines of research to token-based procedures with nonhumans would shed light on the cross-species generality of the symmetrical law of effect, putting a sharper quantitative point on the token-loss findings reviewed above. It would also provide a fresh perspective on a range of decision-making phenomena studied extensively in psychology and economics, such as risky choice. To take just one example, when choosing between certain and uncertain outcomes, human subjects are more likely to select the probabilistic (risky) option in a loss context than in a gain context (Kahneman & Tversky, 1979, 2000). In other words, humans tend to be risk-prone for losses and risk-averse for gains. So pervasive is this finding that it is deemed axiomatic in the most influential theories of human decision-making. Kahneman and Tversky's (1979) Prospect Theory, for example, holds that the subjective value function is concave for gains, convex for losses, and steeper for losses than for gains: Losses loom larger (carry greater subjective value) than corresponding gains.
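The shape of the value function can be made concrete with the power form commonly used for prospect theory. The sketch assumes illustrative parameters (a curvature of 0.88 for both gains and losses and a loss-aversion coefficient of 2.25, values later reported by Tversky and Kahneman) and, for simplicity, uses raw probabilities in place of prospect theory's probability-weighting function. Under these assumptions a single function yields risk aversion for gains and risk seeking for losses.

```python
ALPHA = 0.88   # assumed curvature for both gains and losses
LAMBDA = 2.25  # assumed loss-aversion coefficient (losses weighted 2.25x)


def value(x, alpha=ALPHA, lam=LAMBDA):
    """Reference-dependent value: concave for gains, convex and steeper for losses."""
    if x >= 0:
        return x ** alpha
    return -lam * (-x) ** alpha


def prospect(outcomes):
    """Value of a gamble given as (probability, amount) pairs.
    Simplification: probabilities are used directly as decision weights."""
    return sum(p * value(x) for p, x in outcomes)


sure_gain, risky_gain = prospect([(1.0, 50)]), prospect([(0.5, 100), (0.5, 0)])
sure_loss, risky_loss = prospect([(1.0, -50)]), prospect([(0.5, -100), (0.5, 0)])

print("gains: prefer", "sure" if sure_gain > risky_gain else "risky")   # sure
print("losses: prefer", "sure" if sure_loss > risky_loss else "risky")  # risky
```

Both gambles have the same expected amount as the corresponding sure outcome; the reversal from risk aversion to risk seeking comes entirely from the curvature of the function on either side of zero.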

Gain-Loss Symmetry in Comparative Perspective

The gain-loss asymmetry seen in many risky choice studies with humans is part of a larger pattern of biases known as framing effects—the tendency for decisions to be influenced by the context or format in which they are presented (Kahneman & Tversky, 2000). Frames are normally verbal constructions, such as hypothetical scenarios that describe different courses of action. This raises questions about the generality of framing-induced behavioral biases. Are these effects limited to language-proficient humans, or are they more fundamental? To answer this question, recent research has explored framing effects in nonhuman primates.

In a clever experimental adaptation of methods used with human subjects, Chen, Lakshminarayanan, and Santos (2006) tested loss aversion in captive capuchin monkeys. The monkeys were trained to exchange tokens (metal coins) with experimenters for preferred food items. The monkeys then received a budget of 12 tokens that could be spent on either of two options, and were exposed to several conditions designed to assess framing. In one, the monkeys were given choices between two variable options—one framed as a gain and the other as a loss. The gain option began each trial with one food item (displayed by the experimenter) to which a second item was added with 50% probability. The loss option began each trial with two food items from which one was lost with 50% probability. Both options thus provided the same overall return (1.5 food items per trial), but differed in how they were framed. The monkeys generally preferred the gain option—spending more of their token budget on it—a result not explainable on the basis of return rate alone. Instead, the authors argued that the monkeys have an inherent aversion to loss, similar to that commonly observed in humans (but see Silberberg, Roma, Huntsberry, Warren-Boulton, Sakagami, Ruggiero, et al., 2008, for an alternative interpretation).
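The equal-return property of the two options can be checked with exact arithmetic. The second function is a hypothetical reference-dependent account, assuming (i) that the food displayed at the start of a trial sets the reference point and (ii) that shortfalls from it are scaled by an assumed loss weight. It illustrates how reference-dependent coding could favor the gain option despite equal returns; it is not how Chen et al. (2006) modeled their data.

```python
from fractions import Fraction

HALF = Fraction(1, 2)

# Each option: (items displayed at trial start, change in items, probability of change)
GAIN_OPTION = (1, +1, HALF)  # one item shown, a second added with p = .5
LOSS_OPTION = (2, -1, HALF)  # two items shown, one removed with p = .5


def expected_items(option):
    """Mean number of food items obtained per trial."""
    shown, change, p = option
    return shown + p * change


def framed_value(option, loss_weight):
    """Hypothetical: expected change relative to the displayed amount
    (the assumed reference point), with losses scaled by loss_weight."""
    _, change, p = option
    weight = loss_weight if change < 0 else 1
    return p * weight * change


print(expected_items(GAIN_OPTION), expected_items(LOSS_OPTION))    # 3/2 3/2
print(framed_value(GAIN_OPTION, 2), framed_value(LOSS_OPTION, 2))  # 1/2 -1
```

Under this coding the gain option is favored even with a loss weight of 1; a weight above 1 only widens the gap. Whether the monkeys' preference reflects loss aversion or some other process is the question at issue between Chen et al. (2006) and Silberberg et al. (2008).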

In a similar vein, Brosnan, Jones, Lamberth, Mareno, Richardson, and Schapiro (2007) reported suggestive evidence in chimpanzees of an endowment (or status-quo) effect: the seemingly paradoxical tendency to disproportionately weight items already in one's possession. The results of these and other recent studies with primates have important implications for the cross-species generality of behavioral biases and their presumed evolutionary basis. It has been argued on the basis of these results that behavioral biases are not unique to humans but are shared by other primates, and in this sense may be a fundamental characteristic of primate cognition. Before this or any other explanation can be properly assessed, however, we need to know a great deal more about behavioral biases in nonhuman animals: the conditions that create them and the range of conditions (and species) under which they are observed. However such research turns out, it promises to reveal important information on the generality of effects deemed foundational to major theories of behavior.

III. Token Systems and Cross-Species Comparisons

A theme running through the studies reviewed in the preceding section is that of cross-species generality: the degree to which principles of behavior apply across species. Because they provide a common methodological context, token procedures are well suited to cross-species comparative studies. One area in which token systems have already proven useful in cross-species comparisons is that of choice and self-control. This section reviews and discusses such work.

In a series of important papers, Logue and colleagues (see review by Logue, 1988) examined humans' choices between smaller–sooner reinforcers (SSRs) and larger–later reinforcers (LLRs) using token reinforcers (points later exchangeable for money). In a study by Logue, Peña-Correal, Rodriguez, and Kabela (1986), for example, subjects chose between a small number of points available immediately and a larger number of points available after a delay. Postreinforcer delays followed the SSR, such that the overall rate of reinforcement was equal for both options. Under a wide range of conditions, humans generally preferred the LLR, the option providing the greater net reinforcement.
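The role of the postreinforcer delay can be illustrated with hypothetical values (not those used by Logue et al., 1986). The sketch assumes a single delay to point delivery for each option and no postreinforcer delay after the LLR; padding the SSR trial makes both trial types last equally long, so reinforcers are delivered at the same rate while the LLR yields more points per unit time. The `Option` class and function names are illustrative only.

```python
from dataclasses import dataclass


@dataclass
class Option:
    points: int     # points (tokens) delivered for choosing this option
    delay_s: float  # delay from choice to point delivery, in seconds


def postreinforcer_delay(ssr: Option, llr: Option) -> float:
    """Delay added after the SSR so both trial types last equally long
    (assumes no postreinforcer delay follows the LLR)."""
    return llr.delay_s - ssr.delay_s


def points_per_minute(option: Option, trial_s: float) -> float:
    """Rate of point earning when every trial lasts trial_s seconds."""
    return option.points / trial_s * 60


ssr = Option(points=2, delay_s=0.0)    # hypothetical smaller-sooner option
llr = Option(points=6, delay_s=20.0)   # hypothetical larger-later option
trial_s = ssr.delay_s + postreinforcer_delay(ssr, llr)  # same as llr.delay_s

for name, opt in (("SSR", ssr), ("LLR", llr)):
    print(f"{name}: one delivery per {trial_s:.0f} s, "
          f"{points_per_minute(opt, trial_s):.0f} points/min")
```

Because trial durations are equated, choosing the LLR on every trial maximizes points earned per session, which is why preference for the LLR in this arrangement can be read as sensitivity to net reinforcement.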

This basic result has been reported in several other studies of self-control with human subjects and token reinforcers (Flora & Pavlik, 1992; Logue, King, Chavarro, & Volpe, 1990). Although such performances have been taken as evidence of self-control, the delays to tokens (points) are only one of several delays in the procedure. Other, and perhaps more critical, delays are those to the exchange period (the period during which points are exchanged for money) and to the terminal reinforcers. In the Logue et al. (1986) study, as in most laboratory studies, the exchange delays were held constant, with exchange occurring at the end of a subject's participation in the experiment. If these later links in the chain (exchange and money) are viewed as the operative reinforcers, then the self-control seen in most laboratory studies with human subjects may instead be viewed as sensitivity to monetary reinforcer amount with monetary reinforcer delay held constant. Such sensitivity to reinforcer amount is an interesting and important finding in its own right, as there are few such data on reinforcer magnitude effects in humans. By itself, however, the finding may hold little relevance to the issue of self-control. To constitute self-control, the choice must involve different delays to the terminal reinforcers.

Reconceptualizing the traditional procedures as a token-reinforcement system suggests that the self-control observed in prior studies may be limited to cases in which self-control and impulsivity are defined with respect to token delays and the exchange delays are held constant. In support of this, Hyten, Madden, and Field (1994) found evidence of an exchange-delay effect with humans in a self-control procedure: Subjects were more likely to exhibit self-control (i.e., select the larger number of points on each trial) when the exchange delays were equal (e.g., a week after the session) than when they were unequal and shorter for the small-reinforcer option (see also Roll, Reilly, & Johanson, 2000, for analogous effects in choices between money and cigarettes).

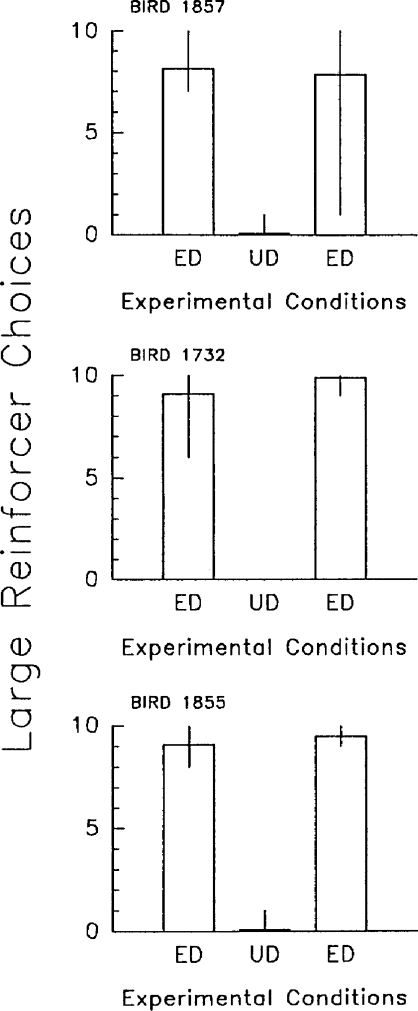

Jackson and Hackenberg (1996) reported similar findings in an analogous study with pigeons and token reinforcers. The tokens were stimulus lights, as described above, designed to mimic the display of points in analogous experiments with human subjects. Like points, the light tokens served as currency: Each token was worth 2-s access to food, in that it could be exchanged for that amount of food during scheduled exchange periods. In the basic procedure, pigeons were given repeated choices between an SSR (1 token delivered immediately) and an LLR (3 tokens delivered after a delay), with the overall rate of (token and food) reinforcement held constant. In some conditions (Experiment 4), the delays to the exchange periods (when any earned tokens could be exchanged for food) were equal irrespective of which choice had been made. These equal-exchange-delay (ED) conditions were designed to mimic the typical human experiment, in which the delays to the exchange period and money are held constant. In other conditions, the exchange periods were scheduled just after token delivery, and the exchange delays were therefore unequal and shorter for the SSR. These unequal-exchange-delay (UD) conditions more closely resemble the conditions under which self-control is typically studied with nonhuman subjects. If choices are governed not by the token delays but by the delays to exchange and food, then one would predict preference for the LLR under ED conditions and for the SSR under UD conditions.
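The prediction in the last sentence can be sketched with a hyperbolic discounting function, V = A/(1 + kD), applied to the food obtained at exchange rather than to the tokens themselves. The hyperbolic form is Mazur's widely used one, but its use here is an assumption, and the discount rate `K` and the exchange delays in the usage lines are hypothetical, not parameters estimated by Jackson and Hackenberg (1996). Each token is treated as worth 2 s of food, as in the procedure described above.

```python
FOOD_PER_TOKEN_S = 2.0  # each token exchangeable for 2-s access to food
K = 1.0                 # hypothetical discount rate (per second)


def discounted_value(tokens, delay_to_exchange_s, k=K):
    """Hyperbolic value V = A / (1 + k*D) of the food earned, where A is the
    food duration and D is the delay from choice to the exchange period."""
    amount = tokens * FOOD_PER_TOKEN_S
    return amount / (1 + k * delay_to_exchange_s)


def predicted_choice(ssr_exchange_delay, llr_exchange_delay):
    """Option with the higher discounted food value."""
    ssr = discounted_value(1, ssr_exchange_delay)  # SSR: 1 token
    llr = discounted_value(3, llr_exchange_delay)  # LLR: 3 tokens
    return "LLR" if llr > ssr else "SSR"


# UD: exchange follows each token delivery (hypothetical 0 s vs 6 s).
print("UD:", predicted_choice(0.0, 6.0))    # SSR
# ED: exchange at the same (hypothetical) 60-s point for both options.
print("ED:", predicted_choice(60.0, 60.0))  # LLR
```

With equal exchange delays the two values share the same denominator, so the larger amount wins for any positive k; with unequal delays, a steep enough discount rate lets the immediate exchange dominate.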

Summary results are shown in Figure 10, which displays the mean number of LLR choices per 10-trial session across exchange-delay conditions: UD for unequal exchange delays and ED for equal exchange delays. All 3 pigeons strongly preferred the small-reinforcer option under UD conditions and the large-reinforcer option under ED conditions. Thus, consistent with the Hyten et al. (1994) results with humans, the pigeons' choices were governed not by token delays but by the delays to the periods during which those tokens were exchanged for other reinforcers.

Fig. 10.

Mean number of large-reinforcer choices per 10-trial session for 3 pigeons in a token-based self-control procedure as a function of exchange-delay conditions. Delays to the exchange periods were either unequal and shorter for the small-reinforcer option (UD) or equal for both options (ED). Data are from the final 10 sessions per condition; error bars reflect the range of values contributing to the mean. From Jackson & Hackenberg (1996). Copyright 1996, by the Society for the Experimental Analysis of Behavior, Inc. Reprinted with permission.

Hackenberg and Vaidya (2003) replicated and extended these results, separately manipulating not only the exchange delays but also the terminal-reinforcer (food) delays. Together, the results of these studies demonstrate disproportionate control by the delays to reinforcers later in the chain (exchange and food) relative to the delays to token reinforcers. That delays to exchange and terminal reinforcers exert strong control over behavior is not surprising, given the exchange-schedule effects reviewed above and the extensive literature on extended-chain (Gollub, 1977) and concurrent-chain schedules (Fantino, 1977). Indeed, such effects follow directly from conceptualizing a token schedule as a kind of hybrid schedule with extended- and concurrent-chain components. Consistent with this view, in the conditions of the Jackson and Hackenberg (1996) study in which exchange periods followed blocks of choice trials (Experiments 1 and 2), so that tokens accumulated prior to exchange, choice latencies were long in the early trials of a block and short and undifferentiated in the later trials.
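When exchange periods follow blocks of trials, each successive choice in a block is closer in time to exchange, much as successive links of an extended chain are closer to the terminal reinforcer. The sketch below tabulates the remaining time to exchange at the start of each trial, assuming a hypothetical block of 10 trials that each last 30 s; these values are illustrative and not taken from Jackson and Hackenberg (1996). The early trials, with the longest delays to exchange, correspond to those with long choice latencies.

```python
def delays_to_exchange(trials_per_block, trial_duration_s):
    """Time from the start of each trial to the exchange period that ends the
    block, assuming every trial lasts trial_duration_s (hypothetical)."""
    return [
        (trials_per_block - i) * trial_duration_s
        for i in range(trials_per_block)
    ]


for trial, delay in enumerate(delays_to_exchange(10, 30.0), start=1):
    print(f"trial {trial:2d}: {delay:5.0f} s to exchange")
```

The delay to exchange falls linearly across the block, from 300 s on the first trial to 30 s on the last, so the first links of the block are the most remote from the food that the accumulated tokens will eventually buy.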

Taken as a whole, the results with humans (Hyten et al., 1994) and pigeons (Jackson & Hackenberg, 1996; Hackenberg & Vaidya, 2003) converge on a similar point: In token-based self-control procedures, choice patterns are governed not by token delays but by delays to the periods during which tokens are exchangeable for other reinforcers. When these delays are equal, preferences for the LLR usually prevail; when the delays are unequal, preferences for the SSR usually prevail. In other words, under conditions more typical of research with humans, more “human-like” performance is seen (i.e., self-control), whereas under conditions more typical of research with nonhuman animals, more “animal-like” performance is seen (i.e., impulsivity). This is not to imply that all differences between human and nonhuman self-control are due to procedural variables. Other factors, such as human verbal and rule-governed histories, may interact with procedural variables. It is not possible to isolate the impact of these or any other factors, however, unless procedural differences are taken into proper account. A failure to do so may result in misleading or premature conclusions in cross-species comparisons (Hackenberg, 2005).

IV. Token Systems and Behavioral Economics

Token reinforcement procedures are highly relevant to behavioral economics. From an economic perspective, tokens can be conceptualized as a type of currency, earned and exchanged for other commodities. Token production is analogous to a wage, exchange opportunities to procurement costs, accumulation to savings, terminal reinforcers to expenditures, and so on. To date, however, there has been little substantive contact between behavioral economics and token reinforcement. This is unfortunate, because token systems provide a controlled and analytically tractable environment in which to explore interactions between behavior and economic variables.
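The economic mapping in this paragraph can be expressed as a small data structure. In this hypothetical sketch, tokens produced per completed production requirement play the role of a wage, tokens held between exchanges are savings, tokens traded for terminal reinforcers are expenditures, and the responses required to open an exchange period stand in for a procurement cost. The class, field names, and the one-reinforcer-per-token exchange rate are illustrative assumptions, not drawn from any particular experiment.

```python
from dataclasses import dataclass


@dataclass
class TokenAccount:
    """Hypothetical ledger for a token economy."""
    wage: int = 1              # tokens produced per completed production requirement
    procurement_cost: int = 10  # responses required to open an exchange period
    savings: int = 0           # tokens accumulated and not yet exchanged
    expenditure: int = 0       # tokens exchanged for terminal reinforcers
    responses: int = 0         # total responses emitted

    def earn(self, requirements_met: int, responses_per_requirement: int) -> None:
        """Complete token-production requirements (the 'wage' side)."""
        self.responses += requirements_met * responses_per_requirement
        self.savings += requirements_met * self.wage

    def exchange(self, tokens: int) -> int:
        """Pay the procurement cost, then trade saved tokens for terminal
        reinforcers (assumed: one reinforcer per token). Returns reinforcers."""
        if tokens > self.savings:
            raise ValueError("cannot exchange more tokens than are saved")
        self.responses += self.procurement_cost
        self.savings -= tokens
        self.expenditure += tokens
        return tokens


account = TokenAccount(wage=1, procurement_cost=10)
account.earn(requirements_met=5, responses_per_requirement=20)
account.exchange(3)
print(account.savings, account.expenditure, account.responses)  # 2 3 110
```

Keeping earning, saving, and spending as separate quantities makes it straightforward to vary one economic variable (for example, the procurement cost) while holding the others fixed.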

In this section, the role of token systems in behavioral economics will be discussed. First, relations between tokens and consumer-demand concepts will be explored, showing how token systems permit more refined analyses of price than traditional formulations. Second, the utility of establishing common currency in assessing demand for qualitatively different commodities (including generalized commodities) will be discussed. Third, some applied implications of token economic systems will be considered. Finally, the role of token systems in forging a cross-disciplinary approach to behavioral economics will be discussed.

Demand Elasticity and Unit Price