Abstract

Objectives

This study sought to investigate user interactions with an electronic health records (EHR) system by uncovering hidden navigational patterns in the EHR usage data automatically recorded as clinicians navigated through the system's software user interface (UI) to perform different clinical tasks.

Design

A homegrown EHR was adapted to allow real-time capture of comprehensive UI interaction events. These events, constituting time-stamped event sequences, were used to replay how the EHR was used in actual patient care settings. The study site is an ambulatory primary care clinic at an urban teaching hospital. Internal medicine residents were the primary EHR users.

Measurements

Computer-recorded event sequences reflecting the order in which different EHR features were sequentially accessed.

Methods

We apply sequential pattern analysis (SPA) and a first-order Markov chain model to uncover recurring UI navigational patterns.

Results

Of 17 main EHR features provided in the system, SPA identified 3 bundled features: “Assessment and Plan” and “Diagnosis,” “Order” and “Medication,” and “Order” and “Laboratory Test.” Clinicians often accessed these paired features in a bundle together in a continuous sequence. The Markov chain analysis revealed a global navigational pathway, suggesting an overall sequential order of EHR feature accesses. “History of Present Illness” followed by “Social History” and then “Assessment and Plan” was identified as an example of such global navigational pathways commonly traversed by the EHR users.

Conclusion

Users showed consistent UI navigational patterns, some of which were not anticipated by system designers or the clinic management. Awareness of such unanticipated patterns may help identify undesirable user behavior as well as reengineering opportunities for improving the system's usability.

Introduction

Clinical practice in ambulatory care demands complex processing of data and information, usually at the point of care and during busy office hours. The increasing availability and capability of computerized systems offer great potential for effectively acquiring, storing, retrieving, and analyzing data and information; however, these desirable outcomes cannot be achieved if the systems fail to present data and information to the right people, at the right time, and in the right sequence. Mindfully designed software user interface (UI) and application flow (AF) are therefore of vital importance. Appealing, intuitive UI and AF designs also offer a superior user experience, which is key for any technological innovation to prevail.

Unfortunately, the lack of a systematic consideration of users, tasks, and environments often results in poor UI and AF designs in health information technology (IT) systems, a major impediment to their widespread adoption and routine use. 1,2 Poorly designed UI and AF may also account for unintended adverse consequences, leading to decreased time efficiency, jeopardized quality of care, and escalated threat to patient safety. 3–8 Consequently, these systems fail to deliver on their promise, user dissatisfaction increases over time, and systems are often abandoned. 2,9,10

It is evident that in addition to outcomes-based evaluation, health informatics research should also focus on analyzing end users' usage behavior to reveal the cognitive, behavioral, and organizational roots that have led to suboptimal outcomes and caused many health IT implementation projects to fail. 11–14 Human-centered computing has been increasingly recognized as an important means to address this gap. For example, Kushniruk and Patel 14 (2004) proposed to use cognitive engineering methods to improve the usability of clinical information systems, Johnson et al. 11 (2005) introduced a user-centered framework for guiding the redesign process of health care software user interfaces, and Harrison et al. 15 (2007) developed an interactive sociotechnical analysis model for studying human–machine interaction issues associated with introducing new technologies into health care. Usability studies, many instantiating the models and frameworks above, have been conducted to study a wide range of health IT applications, from electronic health records (EHR) systems 16–18 to computerized prescriber order entry (CPOE) systems 19 and emergency room medical devices. 20–22 The results amply show the value of applying a human-centered approach to improving the design of health IT.

Existing usability studies mainly employ research designs such as expert inspection, simulated experiments, and self-reported user satisfaction surveys. Some of these designs are difficult and/or expensive to conduct (e.g., videotaping computer use sessions to infer users' cognitive processes during interactions with a software system) or are subject to various sources of data unreliability, such as the Hawthorne effect commonly found in observational and experimental studies 23 and the halo effect and cognitive inconsistencies when eliciting end users' self-reports. 24 In this article, we illustrate a novel approach for studying user behavior with a homegrown EHR by uncovering hidden navigational patterns in the automatically recorded usage data. Because EHR usage data can usually be extracted from the system's transaction database, this approach allows for the discovery of realistic user behavior demonstrated in actual patient care settings. To accommodate the full spectrum of UI usage, we also built into the EHR a special, nonintrusive tracking mechanism that captures additional UI interaction events such as mouse clicks to expand or collapse a tree view. These transitory events may not result in a record in an EHR's transaction database, and thus may not be captured otherwise.

To analyze the collected EHR interaction data, we use: (1) sequential pattern analysis (SPA), which searches for recurring patterns in which a series of EHR feature accesses occurred consecutively in a given chronological sequence; (2) a within-session SPA that computes the probability of reusing certain EHR features or combinations of features during a single patient encounter; and (3) a first-order Markov chain model that reveals an overall sequential order of EHR feature accesses by estimating the transition probability of users navigating from one feature to another. Together, these methods delineate common navigational patterns as users navigate through the EHR's user interface to perform varying clinical tasks, reflecting patient care processes and clinical workflow.

It should be noted that although the uncovered UI navigational patterns reveal valuable insights into user behavior with the EHR, such patterns may not be a comprehensive representation of EHR use. For example, reading information from a computer display without interacting with the UI cannot be readily detected. Moreover, UI navigational patterns derived from user interactions with the EHR may not accurately represent the clinical behavior at the point of care because of the possibility of deferred documentation: clinicians may use alternative documentation strategies during a patient encounter and transcribe the data into the EHR afterward. Therefore, we recommend that this UI-driven behavior analysis approach be combined with other research designs, such as contextual inquiry and ethnographically based observations, to better delineate and understand user behavior with the EHR and its root causes.

Background

Since 2000, the research team has been working with practitioners at the Western Pennsylvania Hospital to design and develop a clinical decision-support system for enhancing the hospital's internal medicine residency training program. This system, called Clinical Reminder System (CRS), was a result of this joint effort. It is designed to manage an ambulatory clinic's routine operations, facilitate clinical documentation, and generate clinician-directed point-of-care reminders based on evidence-based clinical guidelines. 25 An increased emphasis on patient data management as a precursor to reminder generation has also led CRS to evolve over time into a standalone, lightweight EHR. Comprehensive patient data including patient descriptors, symptoms, and orders are captured in CRS, in addition to live or batched data feeds of billing transactions, patient registrations, and laboratory test results from other hospital information systems.

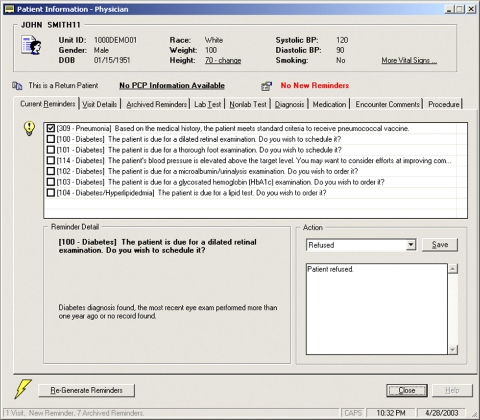

In February 2002, the first version of CRS (Figure 1) was deployed in the West Penn Medical Associates, an ambulatory primary care clinic at the hospital. This clinic is one of the rotation sites serving the hospital's internal medicine residency training program. The clerical staff and nurses in the clinic used the system to schedule appointments, manage workflow, and collect pre-encounter assessments such as vital signs; the residents and attending physicians used the system to document clinical findings, prescribe medications, enter orders, and generate patient-specific chronic disease management and preventive care reminders. Clinicians' interaction with the system was enabled through desktop computers installed in every examination room in the clinic.

Figure 1.

User interface of the pilot CRS implementation.

In a qualitative study evaluating this initial implementation, several negative themes emerged, including a salient user complaint that the system's UI/AF design lacked clear “navigational guidance.” 26 To address this issue, we initiated a reengineering effort and worked closely with the intended end users in the study clinic to jointly explore the problems identified. This reengineering process took over one and a half years to complete, resulting in a completely redesigned and redeveloped system. Enhancements included a full migration of CRS into a web-enabled application (the initial version was implemented in a client-server architecture using Microsoft Visual Basic, Microsoft, Redmond, WA), a more intuitive user interface layout, and enhanced features supporting the clinic's resident training activities. The reengineered CRS thus incorporated lessons learned from a suboptimal pilot implementation combined with a better understanding of the routine clinical practice requirements of its intended end users. The present study evaluates these new design assumptions and choices by analyzing the subsequent day-to-day usage of the reengineered system deployed anew in the same clinic. Some preliminary results of this research were previously reported by Zheng et al. in 2007. 27

Methods

Study Setting and Participants

The redeployment took place in June 2005. Hands-on training was provided to every clinical staff member in the subsequent month. In this study, we allowed three months to pre-populate patient data and to let clinicians adjust to the new designs and new features before starting the research data collection. The study was approved by the institutional review board of the Western Pennsylvania Hospital. The EHR usage data collected from its transaction database were appropriately anonymized to remove clinicians' identities. Our data analysis requires only UI interaction event types, session IDs, and timestamps. No patient health information was collected.

In this article, we analyzed 10-month EHR usage data recorded from October 1, 2005, to August 1, 2006. During this time period, 40 residents were registered in the system, 10 of whom were excluded because they recorded EHR usage in fewer than 5 patient encounters. Their limited exposure to the system was deemed inadequate to allow the development of mature user behavior. Because the attending physicians in the study clinic only used the system to review and approve the residents' work, their usage was not considered.

The provisioning of point-of-care reminders was previously found to significantly interrupt patient care practice and clinical workflow. 27 Because we did not have a readily available solution to the problem and the present research was focused on studying EHR usage patterns, we chose to defer the activation of the reminder generation functionality while the data collection for this study was being conducted. Hence, the usage of CRS analyzed in this article constitutes only the usage of its generic EHR features. Seventeen such features are provided that were considered essential by the intended end users in supporting their everyday work, such as family and social history, diagnosis and assessment, and medication management. These features are labeled in this article using the first letter of a feature's name unless there is a conflict; for example, A denotes “Assessment and Plan,” G denotes “AllerGies,” M denotes “Medication,” E denotes “Medication Side Effects,” and so forth. The full feature labeling scheme is provided in Table 1.

Table 1. Feature Labeling Scheme and the Overall Frequency of Use

| Label | Feature | Frequency of Use (%) |
|---|---|---|
| A | Assessment and Plan | 21.18 |
| B | Retaking BP | 0.34 |
| D | Diagnosis (problem list) | 16.36 |
| E | Medication Side Effects | 0.22 |
| F | Family History | 1.24 |
| G | AllerGies | 1.88 |
| H | History of Present Illness (HPI) | 7.26 |
| L | Laboratory Test | 3.58 |
| M | Medication | 14.53 |
| O | Order | 17.17 |
| P | Procedure | 0.38 |
| R | EncounteR Memo | 0.44 |
| S | Social History | 2.85 |
| T | Office Test | 0.62 |
| V | Vaccination | 0.83 |
| X | Physical EXamination | 6.69 |
| Y | Review of SYstems | 4.43 |

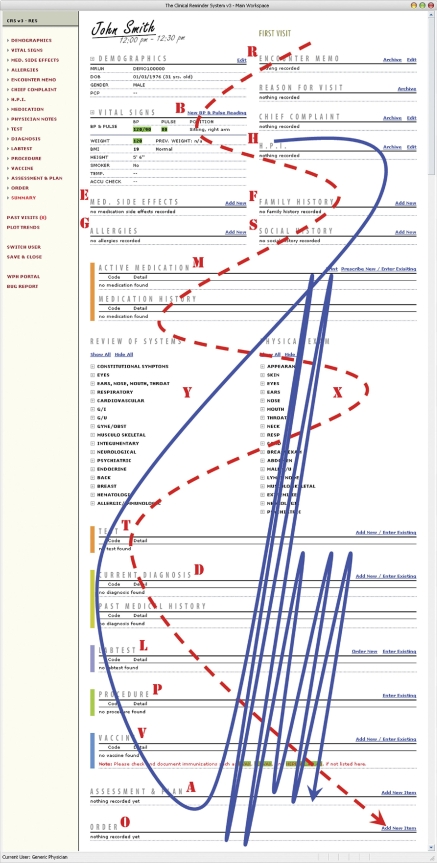

The reengineered CRS user interface is shown in Figure 2. Note that this UI layout features a unique design in which all essential EHR features are placed in a single workspace. The objective of this design is to maintain cognitive continuity when users navigate from one EHR feature to another. It also may help to avoid confusion caused by “fragmented displays” and “hidden information” problems, as discussed in several studies investigating unintended adverse consequences introduced by CPOE implementations (e.g., Ash et al., 2004; Koppel et al., 2005). Within this main workspace, users may scroll up or down to navigate to different EHR functional areas, or they may use the navigation menu provided to the left of the main workspace to quickly jump to a specific feature. The rationale for this UI design is discussed in more detail in Zheng et al., 25 2007.

Figure 2.

Reengineered CRS UI and the onscreen positioning of the 17 main EHR features. The screenshot is manipulated for print: on a regular computer display approximately 1/3 of the screen will be visible at one time. Dashed line = anticipated navigational pathway by design; solid line = actual navigational pathway observed.

Construction of Event Sequences

The EHR feature access events during each patient encounter were extracted from the EHR's transaction database, encoded according to the feature labeling scheme, and ordered chronologically based on their recorded timestamps. HMXAD, for example, is an event sequence composed of five sequential feature accesses: “History of Present Illness” (H) → “Medication” (M) → “Physical Examination” (X) → “Assessment and Plan” (A) → “Diagnosis” (D). In this study, successively accessing the same EHR feature was treated as a single interaction event, as these repeated accesses did not incur the cognitive challenge of “locating the next EHR feature to work on.” Below we present three analytical methods that we use for analyzing the event sequences thus constructed. Note that we use the timestamps only to generate the chronologically ordered sequences and do not consider the temporal relations in the data.
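The construction step described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the program used in the study (which was written in C#): the record layout `(session_id, timestamp, feature_label)` and the sample values are hypothetical, chosen only to mirror the session ID, timestamp, and event type fields described in this article.

```python
from itertools import groupby

# Hypothetical event records: (session_id, timestamp, feature_label).
# The values are invented for illustration and are not study data.
events = [
    ("s1", 3, "X"),
    ("s1", 1, "H"),
    ("s1", 2, "M"),
    ("s1", 4, "X"),  # successive repeat of X, collapsed below
    ("s1", 5, "A"),
    ("s1", 6, "D"),
    ("s2", 1, "H"),
    ("s2", 2, "S"),
]

def build_sequences(records):
    """Group events by session, order them by timestamp, and collapse
    successive accesses to the same feature into a single event."""
    by_session = {}
    for session_id, timestamp, label in records:
        by_session.setdefault(session_id, []).append((timestamp, label))
    sequences = {}
    for session_id, items in by_session.items():
        items.sort(key=lambda pair: pair[0])  # chronological order
        labels = [label for _, label in items]
        # groupby yields one key per run of identical adjacent labels
        sequences[session_id] = "".join(key for key, _ in groupby(labels))
    return sequences

sequences = build_sequences(events)
print(sequences)
```

Representing each encounter as a plain string of one-letter labels keeps the later pattern searches simple, because a run of consecutive feature accesses becomes an ordinary substring.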

Sequential Pattern Analysis

Sequential pattern analysis searches for recurring patterns within a large number of event sequences, where each sequence is composed of a series of timestamped events. 28 The SPA finds combinations of events appearing consistently in a given chronological order and recurring across multiple sequences. SPA has applications in many areas, such as predicting future merchandise purchases based on a customer's past shopping record 28 and providing personalized web content based on a user's past surfing history. 29 In this study, we use a simple form of SPA to detect sequential patterns composed of consecutive EHR feature access events. In other words, we use SPA to uncover those related EHR features that tend to be accessed one after another in a consistent sequential order. More sophisticated SPA algorithms and other analytical methods such as lag sequential analysis are capable of detecting sequential patterns with intermediate steps. We chose not to use these approaches because in this study context “locating the next EHR feature to work on” is the primary cognitive task a user is confronted with at each UI navigation episode. Which features will need to be located a few steps into the future, that is, the lagged sequential dependencies between EHR features, is less of an immediate concern.

If a combination of consecutive events, s, appears in X sequences in a Y-sequence space, we note that s receives a support of X divided by Y. In this study, s is reported as a sequential pattern if it receives a support of 0.15 or above from the empirical data, that is, clinicians accessed this sequential combination of EHR features in at least 15% of patient encounters. Note that if an event combination appears multiple times within a single sequence, it is counted only once (the within-sequence recurrence rate analysis is discussed in the next section). Also note that some sequential patterns may be a subset of longer patterns; for example, a hypothetical pattern abc is a subsequence contained in abcd. We report only the longest patterns in the article, referred to as maximal patterns in sequential pattern analysis.
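The support computation and the maximal-pattern filter can be illustrated with a short Python sketch. It assumes that event sequences are strings of one-letter feature labels, so that a pattern of consecutive events is a substring. The brute-force enumeration, the function names, and the toy sequences are illustrative assumptions and do not reproduce the authors' implementation or data.

```python
def support(pattern, sequences):
    """Fraction of sequences that contain `pattern` as consecutive events.
    A sequence counts once no matter how often the pattern recurs in it."""
    if not sequences:
        return 0.0
    hits = sum(1 for seq in sequences if pattern in seq)
    return hits / len(sequences)

def frequent_patterns(sequences, min_support=0.15, min_len=2):
    """Enumerate every consecutive pattern (naively) and keep those whose
    support meets the threshold."""
    candidates = set()
    for seq in sequences:
        for i in range(len(seq)):
            for j in range(i + min_len, len(seq) + 1):
                candidates.add(seq[i:j])
    result = {}
    for pattern in candidates:
        s = support(pattern, sequences)
        if s >= min_support:
            result[pattern] = s
    return result

def maximal(patterns):
    """Drop any pattern that is contained in a longer frequent pattern."""
    return {p: s for p, s in patterns.items()
            if not any(p != q and p in q for q in patterns)}

# Hypothetical toy sequences, not study data.
toy = ["HMXAD", "HXAD", "HSAD", "OM"]
frequent = frequent_patterns(toy, min_support=0.5)
print(maximal(frequent))
```

In this toy example AD, XA, and XAD all meet the 0.5 threshold, but only XAD is reported because the two shorter patterns are contained in it.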

Analysis of Within-session Recurrence Rates

An SPA leads to the discovery of maximal sequential patterns across encounter sessions. We are also interested in learning whether certain cross-session patterns have a tendency to recur within a session. Within-session recurrence rates are thus calculated as the number of event sequences in which a sequential pattern appears more than once divided by the total number of sequences that contain the pattern. An SPA, in combination with the within-session recurrence rate analysis, could provide empirical evidence with respect to which EHR features tend to be glued together in the EHR's UI navigation. Such knowledge may help inform more effective UI/AF designs; for example, the glued EHR features can then be placed next to each other in adjacent onscreen locations to facilitate UI navigation.
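As a minimal sketch of this calculation in Python, again treating each event sequence as a string of feature labels: the article does not say whether overlapping occurrences are counted, so the overlap-allowing count below is an assumption, and the toy sequences are hypothetical.

```python
def count_occurrences(pattern, seq):
    """Count occurrences of `pattern` in `seq`, allowing overlaps
    (an assumption; the article does not specify this detail)."""
    count = 0
    start = seq.find(pattern)
    while start != -1:
        count += 1
        start = seq.find(pattern, start + 1)
    return count

def recurrence_rate(pattern, sequences):
    """Among sequences containing `pattern`, the fraction in which it
    appears more than once."""
    containing = [seq for seq in sequences if pattern in seq]
    if not containing:
        return 0.0
    repeated = sum(1 for seq in containing
                   if count_occurrences(pattern, seq) > 1)
    return repeated / len(containing)

# Hypothetical toy sequences, not study data.
toy = ["HXADAD", "HAD", "ADOMADO", "HS"]
rate = recurrence_rate("AD", toy)
print(round(rate, 4))
```

Here three of the four sequences contain AD and two of those contain it twice, so the rate is 2/3. The denominator is the number of sequences containing the pattern, not the total number of sequences, which is what distinguishes this measure from support.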

First-order Markov Chain Analysis

The analyses above identify recurring sequential patterns, which are, however, fragmented regularities that do not contribute to the delineation of an overall sequential order of EHR feature accesses. This section introduces our use of a first-order Markov chain model to uncover such global navigational pathways.

A Markov chain is a stochastic process, with a memoryless property, in which a system changes its state at discrete points in time. 30 In the context of this study, we define a Markov state as the EHR feature access observed at a given point in time. A state change occurs when a user navigates from one feature to another on the EHR's UI. The sequential dependencies between EHR features during the state change, or EHR feature transition probabilities, can be estimated using the empirical data. A Markov chain can be constructed based on the feature transition probability matrix to reveal the hidden, global navigational pathways. In this study, we use a first-order Markov chain model in which the probability of observing a feature access event in a given state is solely dependent on the feature access observed in the immediately preceding state. We did not use higher-order Markov chain models or other lagged sequential analysis methods for the same reason discussed in the Sequential Pattern Analysis section.

The initial probability vector, that is, the probabilities of observing each of the EHR features being accessed in the initial Markov chain state, is computed using a maximum-likelihood estimate as the fraction of event sequences starting with a given feature. The transition probability of a → b is a maximum-likelihood estimate of observing this transition out of all possible transitions originating from a. For example, suppose there were three event sequences: AMRHFTXYXADAD, BMOMHFXADABLO, and DXADAPMOMAO (the first letter of each sequence is its starting feature; the transitions of interest here are those originating from M). The initial probability vector would be {0.33, 0.33, 0.33, 0, 0, …, 0}, because A, B, and D each leads once in these three observations. The transition probability from feature M to feature R, O, H, and A would be 0.2, 0.4, 0.2, and 0.2, respectively, because of the 5 transitions originating from M, the combinations MR, MH, and MA each occur once (20% probability of occurring) and MO occurs twice (40% probability of occurring). Zero probabilities indicate that such feature transitions were never observed in the empirical data. A brief description of the first-order Markov chain model we use in this article is provided in Appendix 1, available as a JAMIA online data supplement at www.jamia.org. An in-depth introduction to Markov chains and their mathematical properties can be found elsewhere. 30
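The maximum-likelihood estimates in this worked example can be reproduced with a short Python sketch. The three sequences are the ones given in the text; the function names are illustrative and the code is not the authors' implementation.

```python
from collections import Counter, defaultdict

# The three example sequences from the text.
sequences = ["AMRHFTXYXADAD", "BMOMHFXADABLO", "DXADAPMOMAO"]

def initial_probabilities(seqs):
    """Fraction of sequences that start with each feature."""
    starts = Counter(seq[0] for seq in seqs if seq)
    total = sum(starts.values())
    return {label: n / total for label, n in starts.items()}

def transition_probabilities(seqs):
    """For each feature a, the fraction of transitions leaving a
    that go to each feature b."""
    counts = defaultdict(Counter)
    for seq in seqs:
        for current, nxt in zip(seq, seq[1:]):
            counts[current][nxt] += 1
    return {current: {nxt: n / sum(row.values()) for nxt, n in row.items()}
            for current, row in counts.items()}

initial = initial_probabilities(sequences)
transitions = transition_probabilities(sequences)
print(initial)
print(transitions["M"])
```

Features that never start a sequence, or transitions that never occur, are simply absent from the dictionaries, which corresponds to the zero probabilities mentioned in the text.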

Results

During the 10-month study period, 30 active CRS users recorded EHR usage in 973 distinct patient encounters. The data tables of the CRS' transaction database (Oracle, Redwood Shores, CA) contain basic fields such as sessionID, userID (masked during the data collection), eventType, and timeStamp. We developed a computer program in Microsoft Visual C# (Microsoft, Redmond, WA) to extract these data fields to construct event sequences and to perform the data analyses.

The overall usage rate of each of the 17 main EHR features is reported in Table 1. As Table 1 shows, “Assessment and Plan” (21.18%), “Order” (17.17%), “Diagnosis” (16.36%), and “Medication” (14.53%) were among the most frequently accessed features; together, their usage constituted nearly 70% of all EHR user interactions. In contrast, features such as “Medication Side Effects” (0.22%), “Retaking BP” (0.34%), “Procedure” (0.38%), and “Encounter Memo” (0.44%) were rarely used.

Consecutive Feature Access Events

The SPA identified 11 maximal sequential patterns that satisfy a minimum support threshold of 15%. These patterns are shown in Table 2. ADAD (51.16%) and DADA (43.97%) are found to be the most commonly used sequential feature combinations, indicating that the CRS users often accessed “Assessment and Plan” and “Diagnosis” together and switched between these two features back and forth. A post hoc analysis was performed to determine whether accessing “Assessment and Plan” first occurred more often or vice versa. The results show that “Assessment and Plan” led in 89.18% of the … ADAD … or … DADA … sequence segments. Similarly, “Order” ⇆ “Medication” (32.77%) and “Order” ⇆ “Laboratory Test” (18.6%) are two other frequently appearing feature combinations, in which “Order” was more likely to be accessed before “Medication” (72.57%) or “Laboratory Test” (71.58%). Other patterns that received significant support from the empirical data include “Physical Examination” → “Assessment and Plan” ⇆ “Diagnosis” (XADA), observed in 40.17% of CRS use sessions; “Review of Systems” → “Physical Examination” → “Assessment and Plan” ⇆ “Diagnosis” (YXAD), which occurred in 21.78% of patient encounters; and “History of Present Illness” → “Social History,” which appeared in 19.03% of the constructed event sequences. Note that we use the symbol ⇆ to denote bidirectional feature transitions (for example, a clinician may switch between “Assessment and Plan” and “Diagnosis” back and forth multiple times), whereas the symbol → denotes one-way feature transitions.

Table 2. Sequential Patterns

| Pattern | Level of Support (%) |
|---|---|
| ADAD | 51.16 |
| DADA | 43.97 |
| XADA | 40.17 |
| OMOM | 32.77 |
| MOMO | 29.39 |
| YXAD | 21.78 |
| HS | 19.03 |
| OL | 18.6 |
| OMY | 16.7 |
| LO | 15.64 |
| HO | 15.01 |

Within-session Sequential Patterns

Table 3 shows the within-session recurrence rates of the sequential patterns identified in the previous step. Three patterns are found to have high probabilities of recurring within a single patient encounter context, namely “Assessment and Plan” ⇆ “Diagnosis,” “Order” ⇆ “Medication,” and “Order” ⇆ “Laboratory Test.” “Assessment and Plan” → “Diagnosis,” for example, indicates that conditional on an initial use of this sequential feature combination, there is a 70.22% chance that it will be used again at a later point during the same encounter. As noted earlier, “Assessment and Plan” ⇆ “Diagnosis,” “Order” ⇆ “Medication,” and “Order” ⇆ “Laboratory Test” are also cross-session sequential patterns. These pairs of features are hereby referred to as bundled features.

Table 3. Within-session Recurrence Rates of the Sequential Patterns

| Pattern | Within-session Recurrence Rate (%) |
|---|---|
| AD | 70.22 |
| MO | 64.98 |
| OL | 64.77 |
| DA | 64.35 |
| OM | 63.67 |
| LO | 51.35 |

Higher-order Pattern Detection

Bundled features were further collapsed to form new event sequences to allow for higher-order pattern detection. AD … AD in the sequence HADAD … ADADXY, for example, was collapsed to create a new sequence H-K-XY, which was then inspected by another pass of sequential pattern analysis. AD … ADO (“Assessment and Plan” ⇆ “Diagnosis” → “Order”) is the only higher-order pattern thus identified, supported by 15.64% of patient encounters. This pattern suggests that once a clinician completes working on the “Assessment and Plan” ⇆ “Diagnosis” bundled feature, she may immediately move on to write medication orders.
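The collapsing step can be sketched in Python as follows. The symbol K for the “Assessment and Plan” ⇆ “Diagnosis” bundle comes from the example above; the function name, the use of a regular expression, and the rule that only runs of two or more bundled accesses are collapsed are assumptions made for illustration, because the article does not spell out these details.

```python
import re

def collapse_bundle(seq, pair, symbol):
    """Replace each run of two or more consecutive accesses to the two
    bundled features with a single symbol. Because successive accesses to
    the same feature were already merged, such a run necessarily alternates
    between the two features."""
    pattern = "[" + re.escape(pair) + "]{2,}"
    return re.sub(pattern, symbol, seq)

collapsed = collapse_bundle("HADADADADXY", "AD", "K")
print(collapsed)
```

A lone A or D that is not adjacent to its partner is left unchanged under this rule. After collapsing, the higher-order pattern described above would appear as the ordinary two-event pattern KO, which another pass of the same pattern search can detect.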

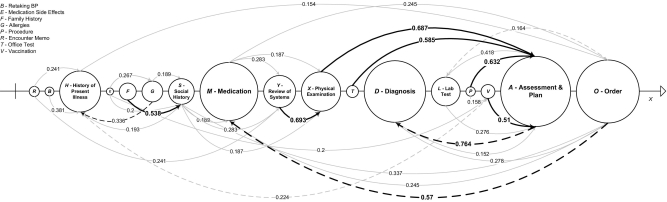

The Global Navigational Pathway

The estimated transition probabilities between the 17 main EHR features are provided in Appendix 2, available as a JAMIA online data supplement at www.jamia.org. Figure 3 depicts a network graph that presents the feature transition probabilities in a graphical format. In Figure 3, these 17 features are horizontally positioned according to their sequential placement on the EHR's UI. The directional arcs represent the feature transitions with respective estimated probabilities.

Figure 3.

The feature transition probability matrix plotted as a network graph. The 17 features are horizontally positioned according to their sequential placement on the EHR's UI. Size of a node is proportional to the feature's overall frequency of use. Feature transitions with a probability lower than 0.15 are not shown. Bold arcs = transitions with a probability over 0.5; dashed arcs = nonadjacent feature transitions running counter to the default UI layout.

In Figure 3, the feature transitions with a probability above 0.5 are highlighted using bold arcs. These high probabilities indicate dominant feature transitions; for example, after a clinician works on “Physical Examination,” the chance that she or he would immediately move on to use “Assessment and Plan” (0.687) is higher than the probabilities of using all other EHR features combined. Similarly, “Assessment and Plan” → “Diagnosis” (0.764) is a transition that is very likely to occur, suggesting that after documenting in “Assessment and Plan,” the next EHR feature a clinician would interact with is most likely to be “Diagnosis.” Further, “Order” has a high probability of transitioning to “Medication” (0.57), as does “Family History” → “Social History” (0.538). “Family History” → “Social History” is an anticipated pattern because these two features are co-located on the EHR's UI.

Furthermore, “Physical Examination” has a high probability of following “Review of Systems” (0.693), which is also anticipated because “Physical Examination” on the UI appears right next to “Review of Systems.” Nonetheless, after “Physical Examination,” the most likely EHR feature to be accessed next is not the adjacent feature on the screen (“Office Test”), but “Assessment and Plan,” which is farther away from where “Physical Examination” is located. Patterns that demonstrate switching to a distant feature also include “Procedure” → “Assessment and Plan,” “Office Test” → “Assessment and Plan,” and “Vaccination” → “Assessment and Plan,” with transition probabilities of 0.632, 0.585, and 0.51, respectively. These nonadjacent feature transitions running counter to the default UI layout are designated in Figure 3 using dashed arcs.

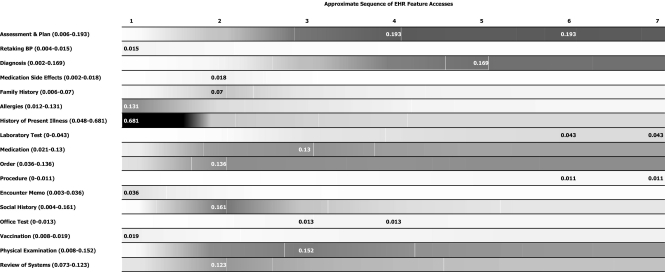

Based on the estimated feature transition probabilities, a Markov chain was constructed (Table 4) that converges in about 7 steps, possibly because a small set of EHR features (e.g., “Assessment and Plan”) received consistently heavy usage. The first column in Table 4 is the estimated initial probability vector. The nth column lists the likelihood of observing each of the row features in the nth step. To better illustrate the Markov chain, we turn Table 4 into a visual representation, or an EHR Feature Spectrum, shown in Figure 4. The grayscale gradient on the feature spectrum is proportional to the probabilities of observing a row feature in each of the 7 Markov chain steps. Darker areas are associated with higher probabilities.
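For readers unfamiliar with how such step-by-step probabilities arise, the distribution at each step of a first-order Markov chain is obtained by multiplying the previous step's distribution by the transition probability matrix. The Python sketch below illustrates this with a hypothetical three-feature chain; the matrix values are invented for illustration and are not the estimates from this study, which are given in Appendix 2.

```python
def step_distributions(initial, matrix, n_steps):
    """Return the state distribution at steps 1..n_steps.
    Step 1 is the initial probability vector; each later step is the
    previous distribution multiplied by the transition matrix."""
    dists = [list(initial)]
    size = len(initial)
    for _ in range(n_steps - 1):
        prev = dists[-1]
        nxt = [sum(prev[i] * matrix[i][j] for i in range(size))
               for j in range(size)]
        dists.append(nxt)
    return dists

# Hypothetical chain over three features, in the order H, X, A.
# Each row holds the transition probabilities out of one feature.
features = ["H", "X", "A"]
initial = [1.0, 0.0, 0.0]
matrix = [
    [0.0, 0.5, 0.5],  # from H
    [0.0, 0.0, 1.0],  # from X
    [0.0, 0.5, 0.5],  # from A
]

dists = step_distributions(initial, matrix, n_steps=7)
for step, dist in enumerate(dists, start=1):
    print(step, [round(p, 3) for p in dist])
```

In this toy chain the probabilities of X and A settle toward 1/3 and 2/3 after a few steps, which parallels the way the columns of the chain reported in this study stop changing after about 7 steps.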

Table 4. The 7-Step Markov Chain

| Feature | Step 1 | Step 2 | Step 3 | Step 4 | Step 5 | Step 6 | Step 7 |
|---|---|---|---|---|---|---|---|
| A - Assessment and Plan | 0.006 | 0.071 | 0.165 | 0.193 | 0.19 | 0.193 | 0.191 |
| B - Retaking BP | 0.015 | 0.008 | 0.005 | 0.004 | 0.004 | 0.004 | 0.004 |
| D - Diagnosis | 0.002 | 0.012 | 0.076 | 0.149 | 0.169 | 0.166 | 0.168 |
| E - Medication Side Effects | 0.002 | 0.018 | 0.003 | 0.002 | 0.002 | 0.002 | 0.002 |
| F - Family History | 0.006 | 0.07 | 0.026 | 0.017 | 0.015 | 0.014 | 0.014 |
| G - Allergies | 0.131 | 0.044 | 0.029 | 0.015 | 0.013 | 0.012 | 0.012 |
| H - History of Present Illness | 0.681 | 0.067 | 0.054 | 0.048 | 0.048 | 0.048 | 0.048 |
| L - Laboratory Test | 0 | 0.008 | 0.032 | 0.037 | 0.041 | 0.043 | 0.043 |
| M - Medication | 0.021 | 0.121 | 0.13 | 0.114 | 0.109 | 0.112 | 0.112 |
| O - Order | 0.036 | 0.136 | 0.133 | 0.129 | 0.134 | 0.135 | 0.135 |
| P - Procedure | 0 | 0.002 | 0.004 | 0.008 | 0.01 | 0.011 | 0.011 |
| R - Encounter Memo | 0.036 | 0.007 | 0.003 | 0.003 | 0.003 | 0.004 | 0.004 |
| S - Social History | 0.004 | 0.161 | 0.071 | 0.041 | 0.033 | 0.031 | 0.031 |
| T - Office Test | 0 | 0.007 | 0.013 | 0.013 | 0.012 | 0.012 | 0.012 |
| V - Vaccination | 0.019 | 0.008 | 0.012 | 0.013 | 0.017 | 0.018 | 0.018 |
| X - Physical Examination | 0.008 | 0.137 | 0.152 | 0.131 | 0.126 | 0.122 | 0.121 |
| Y - Review of Systems | 0.032 | 0.123 | 0.093 | 0.082 | 0.075 | 0.073 | 0.074 |

Figure 4.

The EHR feature spectrum in a timed space. Grayscale gradient is proportional to the probabilities of observing a row feature in each of the 7 Markov chain steps. Darker areas indicate higher probabilities. Numbers in parentheses = the range of probabilities of observing a row feature in these 7 Markov chain steps; numeric labels on grayscale stripes = the maximum probability of observing a row feature.

As both Table 4 and Figure 4 suggest, among the 17 main EHR features provided in CRS, “Retaking BP,” “Allergies,” “History of Present Illness,” “Encounter Memo,” and “Vaccination” have the highest probability of being accessed in the initial Markov state; in other words, if these features ever get used, they are most likely to be used at the very beginning of a patient encounter. Similarly, “Medication Side Effects,” “Family History,” “Order,” “Social History,” and “Review of Systems” are most likely to be accessed in the next step; “Medication,” “Office Test,” and “Physical Examination” are most likely to be accessed in the third step; and so forth.

The global navigational pathway can be immediately observed from ▶ as the sequence of column maximums. These values indicate which row feature has the greatest likelihood of being used in a given step. In contrast, row maximums in ▶ suggest in which step accessing a given row feature is most likely to occur. “History of Present Illness” → “Social History” → “Assessment and Plan” is the most commonly traversed pathway in the first three steps. Afterward, the use of “Assessment and Plan” dominates the system's state, because “Assessment and Plan” was the most intensively used feature and the most popular recipient node among all observed feature transitions. Aside from this favorite pathway, several other navigational routes were also frequented by the CRS users. “History of Present Illness” → “Physical Examination” → “Assessment and Plan” → “Diagnosis” → “Order” …, for example, is another popular pathway that emerged from the empirical data.
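The row-maximum and column-maximum readings described above can be sketched as follows. The probabilities are copied from the table rows shown in this section; rows that do not appear in this excerpt (for example, “Assessment and Plan”) are omitted, so the column maxima computed here describe this subset only and do not reproduce the full global pathway. The function names are illustrative and are not part of the original analysis.

```python
# Step-wise probabilities for a subset of rows from the table above.
# Rows outside this excerpt are intentionally left out.
STEP_PROBS = {
    "G - Allergies": [0.131, 0.044, 0.029, 0.015, 0.013, 0.012, 0.012],
    "H - History of Present Illness": [0.681, 0.067, 0.054, 0.048, 0.048, 0.048, 0.048],
    "L - Laboratory Test": [0, 0.008, 0.032, 0.037, 0.041, 0.043, 0.043],
    "M - Medication": [0.021, 0.121, 0.13, 0.114, 0.109, 0.112, 0.112],
    "O - Order": [0.036, 0.136, 0.133, 0.129, 0.134, 0.135, 0.135],
    "P - Procedure": [0, 0.002, 0.004, 0.008, 0.01, 0.011, 0.011],
    "R - Encounter Memo": [0.036, 0.007, 0.003, 0.003, 0.003, 0.004, 0.004],
    "S - Social History": [0.004, 0.161, 0.071, 0.041, 0.033, 0.031, 0.031],
    "T - Office Test": [0, 0.007, 0.013, 0.013, 0.012, 0.012, 0.012],
    "V - Vaccination": [0.019, 0.008, 0.012, 0.013, 0.017, 0.018, 0.018],
    "X - Physical Examination": [0.008, 0.137, 0.152, 0.131, 0.126, 0.122, 0.121],
    "Y - Review of Systems": [0.032, 0.123, 0.093, 0.082, 0.075, 0.073, 0.074],
}


def peak_step(probs):
    """Row maximum: the 1-based step at which a feature is most likely.

    Ties are resolved in favor of the earliest step.
    """
    return max(range(len(probs)), key=lambda i: probs[i]) + 1


def most_likely_feature(table, step):
    """Column maximum: the feature with the highest probability at a step."""
    return max(table, key=lambda name: table[name][step - 1])


peaks = {name: peak_step(probs) for name, probs in STEP_PROBS.items()}
for name, step in sorted(peaks.items(), key=lambda item: item[1]):
    print(f"{name}: most likely at step {step}")
```

Within this subset, the row maxima place “History of Present Illness” in the first step, “Social History” in the second, and “Physical Examination” in the third, which agrees with the step assignments reported in the text.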

Discussion

▶ compares the actual, observed navigational pathway (solid line) with the anticipated, ideal pathway (dashed line), which reflects the system's original UI/AF design principles. These principles were derived through extensive design discussions with the system's intended end users and the clinic management. Even allowing for the fact that actual EHR use must cater to specific patient care needs and contexts, substantial differences remain between the observed and the anticipated navigational pathways. This deviation suggests two possibilities: (1) there may be a significant gap between clinicians' day-to-day clinical practice and recommended standards, and what the design team learned from the design discussions with the end users might have been their perceptions of how ambulatory care should be practiced rather than how it was practiced in reality; and (2) the reengineered CRS may still have had issues in its UI/AF design that require further reengineering efforts. Below we discuss these two possible sources of the deviation in more detail.

Unanticipated/Undesirable User Behavior

The most noticeable divide between the actual and the anticipated pathways lies in the different utilization rates of EHR features that require structured data entry versus EHR features that allow for unstructured, narrative data. As shown in ▶, after entering free-text documentation in the “History of Present Illness” and “Social History” sections, clinicians tended to jump directly to “Assessment and Plan,” which is presented last on the EHR's UI, while skipping all intermediate features where codified patient data are expected. This indicates both limited use of structured data entry and an undesirable sequence of clinical documentation. According to recommended standards, “Assessment and Plan” should be used at the end of an encounter session to add summative highlights of structured data entered in other relevant sections. This finding suggests that certain critical patient care procedures (e.g., physical examination) were not routinely performed, were performed but not properly documented, or were not documented in a desirable sequence. In any of these cases, quality of care may be undermined and patients may be exposed to a higher risk of adverse events.

Second, counter to expectations, “Encounter Memo” was seldom used. “Encounter Memo” allows clinicians to document contextual information that may not fit in any other category, or transitory information that does not need to, or should not, appear in a patient's permanent record (for example, handoff notes). The end users on the system's design team reiterated that this was a critical feature; therefore, it was allocated to one of the most prominent screen positions, the top right corner. However, as the empirical data have shown, this feature was rarely utilized during the 10-month study period.

Insights into UI/AF Design

The navigational patterns identified also suggest design insights for further improving the UI/AF design of CRS. First, “History of Present Illness” should be placed in a distinctive, salient onscreen position because it is one of the most frequently used features and is usually accessed immediately after a clinician launches the system. Second, the three bundled features identified (“Assessment and Plan” ⇆ “Diagnosis,” “Order” ⇆ “Medication,” and “Order” ⇆ “Laboratory Test”) highlight the need to place them in adjacent UI locations. If that is not possible, navigational aids such as hyperlink shortcuts or “take me to” buttons should be provided in the system to facilitate such frequent feature switches. Third, the onscreen positions of “Medication Side Effects” and “Allergies” may need to be swapped: “Allergies” is used more often and has a much higher probability of transitioning to “Medication Side Effects” than the reverse. Similarly, the onscreen positions of “Family History” and “Social History” may also need to be swapped. Finally, some of the navigational patterns may suggest general EHR design insights that may not apply in this study context. For example, zero-transition probabilities indicate that such feature transitions never occurred in the empirical setting; having these features next to each other on a UI or presenting them consecutively in a stepwise guided wizard would therefore require further consideration.
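The kinds of evidence used in these design suggestions (transition probabilities, asymmetric transitions between two features, and transitions that never occur) can be derived from event sequences roughly as sketched below. The sequences are hypothetical and use single-letter feature codes from the table; they are not the study's data, and the function names are illustrative only.

```python
from collections import Counter, defaultdict

# Hypothetical encounter sequences (NOT study data), using feature codes
# from the table: G, H, L, M, O, S, X, Y.
SEQUENCES = [
    ["H", "S", "X", "O", "M"],
    ["H", "X", "O", "L", "O", "M"],
    ["G", "H", "Y", "X", "O", "M"],
    ["H", "S", "O", "M", "O", "L"],
]


def transition_probabilities(sequences):
    """Estimate first-order transition probabilities from adjacent pairs."""
    counts = defaultdict(Counter)
    for seq in sequences:
        for current, following in zip(seq, seq[1:]):
            counts[current][following] += 1
    return {
        src: {dst: n / sum(dsts.values()) for dst, n in dsts.items()}
        for src, dsts in counts.items()
    }


def never_observed(probs, features):
    """List ordered feature pairs whose transition was never observed."""
    return [
        (a, b)
        for a in features
        for b in features
        if a != b and probs.get(a, {}).get(b, 0.0) == 0.0
    ]


def asymmetry(probs, a, b):
    """P(a -> b) minus P(b -> a); a large positive value favors placing a before b."""
    return probs.get(a, {}).get(b, 0.0) - probs.get(b, {}).get(a, 0.0)


P = transition_probabilities(SEQUENCES)
features = sorted({f for seq in SEQUENCES for f in seq})
print("P(H -> S) =", P["H"]["S"])
print("Pairs never observed:", len(never_observed(P, features)))
print("Asymmetry H vs. S:", asymmetry(P, "H", "S"))
```

With real usage logs, a strongly positive asymmetry between two features would support ordering them accordingly on screen, whereas pairs returned by `never_observed` would be candidates for closer review before being placed adjacently or chained in a guided wizard.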

Study Limitations

This study has several limitations. First, actual EHR usage can only be collected through a system deployed in the field. The idiosyncrasies of this system will inevitably alter user behavior, making the results difficult to generalize. Second, our study participants were internal medicine residents. Their clinical practice can be very different from that of other internal medicine practitioners and medical professionals in other specialties. In addition, the residency training activities in the study clinic could have generated unique EHR usage that may not apply in other settings. Third, a comprehensive analysis of user interactions with an EHR needs to further delineate between sequences resulting from real-time recording of encounter information and those resulting from recording the data at the end of a patient visit. For example, if a clinician's physical and cognitive interactions with a patient are recorded in the EHR in real time during the patient encounter, the sequences are likely to reveal true point-of-care clinical behavior. However, if the relevant data are entered into the EHR afterward, the generated sequence may be a reflection of the order in which features are currently presented in the EHR interface. Although, as the results show, there is no predominance of deferred documentation in our empirical study, this limitation may lead to false conclusions. Fourth, real temporal relations need to be included in the study for better insights into the impact of feature access durations on sequences and revealed patterns. These extensions are part of our ongoing study. As such, the findings of this study do not necessarily suggest direct design insights for other EHR systems or other types of clinical information systems, but rather illustrate the promise of the methods in revealing user behavior with informatics applications at the point of care.

Future Directions

Using automatically recorded EHR usage data to uncover hidden UI navigational patterns may be a cost-effective approach for improving the usability of other EHRs or other types of clinical information systems. For example, numerous qualitative studies have analyzed the UI/AF-related unintended adverse consequences of introducing CPOE into the clinical workspace. 5–7 Such unintended consequences may manifest themselves as abnormal patterns in a CPOE's UI navigation. For instance, workarounds that bypass certain system-enforced blocks (e.g., patient safety assurance procedures) can be easily detected by looking for undesirable UI navigational patterns.
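As a rough illustration of how such detection might work, the sketch below scans recorded sessions for a contiguous run of events that matches a predefined undesirable pattern. The event names, the sessions, and the flagged pattern are all hypothetical; a real CPOE would define its own event vocabulary and its own list of patterns worth flagging.

```python
# Hypothetical event logs and pattern; none of these names come from a real system.
SESSIONS = {
    "s1": ["ORDER_ENTRY", "ALERT_SHOWN", "ORDER_REVISED", "ORDER_SIGNED"],
    "s2": ["ORDER_ENTRY", "ALERT_SHOWN", "ALERT_DISMISSED", "ORDER_SIGNED"],
}
UNDESIRABLE_PATTERNS = [
    ("ALERT_SHOWN", "ALERT_DISMISSED", "ORDER_SIGNED"),
]


def contains_pattern(sequence, pattern):
    """Return True if `pattern` occurs as a contiguous run in `sequence`."""
    n, m = len(sequence), len(pattern)
    return any(sequence[i:i + m] == pattern for i in range(n - m + 1))


def flag_sessions(sessions, patterns):
    """Map each session ID to the undesirable patterns found in it."""
    flagged = {}
    for session_id, events in sessions.items():
        hits = [p for p in patterns if contains_pattern(events, list(p))]
        if hits:
            flagged[session_id] = hits
    return flagged


flagged = flag_sessions(SESSIONS, UNDESIRABLE_PATTERNS)
print(flagged)
```

Exact contiguous matching is the simplest possible choice; tolerating intervening events would require a subsequence-based search such as the sequential pattern analysis used in this study.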

Future work may also consider providing a highly customizable EHR software user interface. However, although different clinicians have distinct practice styles, a highly customizable UI may not be as effective as a deliberately calibrated default layout in achieving desirable usability improvement goals. As shown in the literature, default screen layout and options exert a strong influence on clinician behavior, 31 whereas relying on end users' self-customization to let the usability of a system spontaneously improve often results in suboptimal outcomes for several cognitive and practical reasons. 32 To some extent, allowing for extensive user customization may also facilitate undesirable user behavior rather than foster healthier behavior.

Future work may also examine the boundary events connecting the patterns identified by SPA to look for potential regularities. Regularities at the boundaries (EHR feature accesses that occurred before, between, or after the commonly recurring sequential patterns) may reveal additional consistent behavior in users' interactions with the EHR.

This article provides evidence regarding the UI navigational behavior of EHR users. However, our analyses do not yet explain why users demonstrated the observed behaviors or, if such behaviors are undesirable, what remedies are possible. In our follow-up studies, we will use supplemental phenomenologically based methods such as observations and interviews to further understand the findings reported in this article. We plan to seek the end users' opinions on the cognitive, social, and organizational root causes of the demonstrated behavior and on how the system and its design processes could be further improved to address this complex interplay between users, computerized systems, and other dynamics in the clinical environment.

Conclusion

Many health IT implementation projects have failed because of poor UI/AF designs or a poor fit between a UI/AF design and a specific use context. In this article, we show a novel approach for improving the UI/AF design of a homegrown EHR and for fine-tuning that design to the end users' day-to-day clinical practice. This was achieved by analyzing the automatically recorded EHR usage data to uncover hidden UI navigational patterns. We show that clinicians demonstrated consistent patterns when navigating through the EHR's UI to perform different clinical tasks. Some of these patterns were unanticipated, deviating significantly from the ideal patterns according to the system's original design principles. Understanding the nature of this deviation can help identify undesirable user behavior and/or UI/AF design deficiencies, informing corrective actions such as focused user training or continued system reengineering. Finally, the lack of concordance between clinicians' actual clinical practice and recommended standards, as shown in this study, is an imperative concern that must be carefully addressed in the health IT design and implementation processes.

References

- 1. Tang PC, Patel VL. Major issues in user interface design for health professional workstations: summary and recommendations. Int J Biomed Comput 1994;34:139-148.

- 2. Zhang J. Human-centered computing in health information systems. Part 1: analysis and design. J Biomed Inform 2005;38:1-3.

- 3. Ash JS, Berg M, Coiera E. Some unintended consequences of information technology in health care: the nature of patient care information system-related errors. J Am Med Inform Assoc 2004;11:104-112.

- 4. Han YY, Carcillo JA, Venkataraman ST, et al. Unexpected increased mortality after implementation of a commercially sold computerized physician order entry system. Pediatrics 2005;116:1506-1512.

- 5. Koppel R, Metlay JP, Cohen A, et al. Role of computerized physician order entry systems in facilitating medication errors. JAMA 2005;293:1197-1203.

- 6. Campbell EM, Sittig DF, Ash JS, Guappone KP, Dykstra RH. Types of unintended consequences related to computerized provider order entry. J Am Med Inform Assoc 2006;13:547-556.

- 7. Ash JS, Sittig DF, Poon EG, Guappone K, Campbell E, Dykstra RH. The extent and importance of unintended consequences related to computerized provider order entry. J Am Med Inform Assoc 2007;14:415-423.

- 8. Linder JA, Schnipper JL, Tsurikova R, Melnikas AJ, Volk LA, Middleton B. Barriers to electronic health record use during patient visits. AMIA Annu Symp Proc 2006:499-503.

- 9. Massaro TA. Introducing physician order entry at a major academic medical center: I. Impact on organizational culture and behavior. Acad Med 1993;68:20-25.

- 10. Goddard BL. Termination of a contract to implement an enterprise electronic medical record system. J Am Med Inform Assoc 2000;7:564-568.

- 11. Johnson CM, Johnson TR, Zhang J. A user-centered framework for redesigning health care interfaces. J Biomed Inform 2005;38:75-87.

- 12. Kaplan B. Evaluating informatics applications—some alternative approaches: theory, social interactionism, and call for methodological pluralism. Int J Med Inform 2001;64:39-56.

- 13. Delpierre C, Cuzin L, Fillaux J, Alvarez M, Massip P, Lang T. A systematic review of computer-based patient record systems and quality of care: more randomized clinical trials or a broader approach? Int J Qual Health Care 2004;16:407-416.

- 14. Kushniruk AW, Patel VL. Cognitive and usability engineering methods for the evaluation of clinical information systems. J Biomed Inform 2004;37:56-76.

- 15. Harrison MI, Koppel R, Bar-Lev S. Unintended consequences of information technologies in health care—an interactive sociotechnical analysis. J Am Med Inform Assoc 2007;14:542-549.

- 16. Rose AF, Schnipper JL, Park ER, Poon EG, Li Q, Middleton B. Using qualitative studies to improve the usability of an EMR. J Biomed Inform 2005;38:51-60.

- 17. Patel VL, Kushniruk AW, Yang S, Yale JF. Impact of a computer-based patient record system on data collection, knowledge organization, and reasoning. J Am Med Inform Assoc 2000;7:569-585.

- 18. Sittig DF, Kuperman GJ, Fiskio J. Evaluating physician satisfaction regarding user interactions with an electronic medical record system. AMIA Annu Symp Proc 1999:400-404.

- 19. Rosenbloom ST, Geissbuhler AJ, Dupont WD, et al. Effect of CPOE user interface design on user-initiated access to educational and patient information during clinical care. J Am Med Inform Assoc 2005;12:458-473.

- 20. Zhang J, Johnson TR, Patel VL, Paige DL, Kubose T. Using usability heuristics to evaluate patient safety of medical devices. J Biomed Inform 2003;36:23-30.

- 21. Malhotra S, Laxmisan A, Keselman A, Zhang J, Patel VL. Designing the design phase of critical care devices: a cognitive approach. J Biomed Inform 2005;38:34-50.

- 22. Laxmisan A, Malhotra S, Keselman A, Johnson TR, Patel VL. Decisions about critical events in device-related scenarios as a function of expertise. J Biomed Inform 2005;38:200-212.

- 23. Mayo E. The Human Problems of an Industrial Civilization. New York: MacMillan; 1933.

- 24. Schwarz N, Oyserman D. Asking questions about behavior: cognition, communication, and questionnaire construction. Am J Eval 2001;22:127-160.

- 25. Zheng K, Padman R, Johnson MP, Engberg JB, Diamond HS. An adoption study of a clinical reminder system in ambulatory care using a developmental trajectory approach. Medinfo 2004;11:1115-1120.

- 26. Zheng K, Padman R, Johnson MP, Diamond HS. Understanding technology adoption in clinical care: clinician adoption behavior of a point-of-care reminder system. Int J Med Inform 2005;74:535-543.

- 27. Zheng K, Padman R, Johnson MP. User interface optimization for an electronic medical record system. Medinfo 2007;12:1058-1062.

- 28. Agrawal R, Srikant R. Mining sequential patterns. Proceedings of the 11th International Conference on Data Engineering. 1995. pp. 3-14.

- 29. Montgomery AL, Shibo L, Srinivasan K, Liechty JC. Modeling online browsing and path analysis using clickstream data. Marketing Sci 2004;23:579-595.

- 30. Grinstead CM, Snell JL. Markov chains. Introduction to Probability. Providence, RI: American Mathematical Society; 1997.

- 31. Halpern SD, Ubel PA, Asch DA. Harnessing the power of default options to improve health care. N Engl J Med 2007;357:1340-1344.

- 32. Mackay WE. Triggers and barriers to customizing software. Proc CHI 1991:153-160.