Abstract

One of the main purposes in computational anatomy is the measurement and statistical study of anatomical variations in organs, notably in the brain or the heart. Over the last decade, our group has progressively developed several approaches for this problem, all related to the Riemannian geometry of groups of diffeomorphisms and the shape spaces on which these groups act. Several important shape evolution equations that are now used routinely in applications have emerged over time.

Our goal in this paper is to provide an overview of these equations, placing them in their theoretical context, and giving examples of applications in which they can be used. We introduce the required theoretical background before discussing several classes of equations of increasing complexity. These equations include energy-minimizing evolutions derived from Riemannian gradient descent, geodesics, parallel transport and Jacobi fields.

1. Introduction

One of the main purposes in computational anatomy is the measurement and statistical study of anatomical variations in organs, notably in the brain or the heart. Over the last decade, our group has progressively developed several approaches for this problem, all related to the Riemannian geometry of groups of diffeomorphisms and the shape spaces on which these groups act. Several important shape evolution equations that are now used routinely in applications have emerged over time.

Our goal in this paper is to provide an overview of these equations, placing them in their theoretical context, and giving examples of applications in which they can be used. Because these equations all relate to geometric constructions on the group of diffeomorphisms, some theoretical background is needed; it is summarized in the next section. We will then discuss equations of increasing complexity, starting, in section 3, with energy-minimizing equations derived from gradient descent (using an appropriate definition of the gradient adapted to the Riemannian structure on diffeomorphisms and shapes). We will show applications in area minimization for surfaces, and in shape segmentation with “Diffeomorphic Active Contours”.

The next set of equations are geodesic equations, first on the group of diffeomorphisms, then on the shape spaces on which they project (here, we call “shape” any structure that can be deformed). We will in particular consider “shapes” that are represented by collections of (not necessarily labeled) points, for which the evolution equations reduce to relatively simple ordinary differential equations. Other examples of interest are shapes represented by images and by densities. For computational anatomy, geodesics are of primary importance, because they provide what are called normal coordinates, or exponential charts, which can be used to represent anatomical data relative to a template. We shall also refer to this as the momentum representation, because of the momentum-preservation property of the geodesic equation, as we will describe in section 4.

Geodesic equations correspond to moving in “straight lines” on a curved manifold. The next class of equations we will describe, parallel translation, corresponds to keeping track of a given direction while moving on a manifold. This will be discussed in section 5. The final class of equations we will discuss consists of the Jacobi equations (section 6). These equations describe the variation of geodesics with respect to changes in their initial conditions. They are useful when solving variational problems that optimize over geodesics.

Before describing these equations, we need to summarize the theoretical background supporting their construction. This is done in the next section.

2. Mathematical background and notation

There are a few fundamental ingredients in this theory, and our goal is to review them in this section without including the full mathematical machinery. So, even if many of the presented concepts are special cases of a more general (and more abstract) theory, we will only introduce the notions that are needed for the understanding of the rest of this paper.

2.1

We work in an arbitrary dimension, denoted d (although d = 3 in the examples and in most of the applications in computational anatomy). We assume that an open subset Ω of $\mathbb{R}^d$ has been chosen, and keep it fixed in the following. For the theory, Ω can be the whole space $\mathbb{R}^d$, although numerical implementations would rather have it bounded (with suitable boundary conditions).

2.2

A diffeomorphism of Ω is an invertible transformation from Ω to itself which is continuously differentiable (C¹) with a differentiable inverse. The identity map, id, is a diffeomorphism, the composition of two diffeomorphisms is a diffeomorphism, and the inverse of a diffeomorphism is a diffeomorphism as well. This implies that the set of diffeomorphisms of Ω, denoted Diff(Ω), has a group structure.

2.3

Diffeomorphisms being transformations of Ω, they alter quantities that are included in, or defined on Ω. We will be particularly interested in collections of points, functions and densities on Ω, and denote these objects with a common notation; most of the time, an object will be denoted m, belonging to some space M. So, depending on the context, M will be the set of all finite configurations of points in Ω, or a set of functions, or densities, defined on Ω. Our construction extends in fact to a wide class of objects, like curves, surfaces, measures, etc.

We will say that diffeomorphisms act on M if, for any diffeomorphism φ and any object m, a deformed object φ · m can be defined. The natural requirements that id · m = m and φ · (ψ · m) = (φ ∘ ψ) · m ensure that (φ, m) ↦ φ · m is a group action, a property that we will always assume. For configurations of points, $m = (x_1, \dots, x_N)$, we have $\varphi\cdot m = (\varphi(x_1), \dots, \varphi(x_N))$. For images, $m: \Omega \to \mathbb{R}$, we have $\varphi\cdot m = m\circ\varphi^{-1}$. Finally, for densities $m: \Omega \to [0, +\infty)$, we have $\varphi\cdot m = (m\circ\varphi^{-1})\,|\det D(\varphi^{-1})|$, where D is the differential. The interpretation of the action on functions is that (φ · m)(x) is the value of m at the point that has been moved to x by φ. For densities, φ · m is the new distribution of mass after deformation of the space.
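The group-action axioms can be checked numerically on simple one-dimensional examples. The sketch below is only an illustration under stated assumptions: a diffeomorphism is stored as a hypothetical (forward, inverse) pair of Python callables, because the action on images needs φ⁻¹, and the test maps (a shift and a dilation) and the image m = sin are arbitrary choices.

```python
import math

# A diffeomorphism is stored as a (forward, inverse) pair of callables,
# because the action on images needs phi^{-1}.
def compose(phi, psi):
    """Return phi o psi, whose inverse is psi^{-1} o phi^{-1}."""
    f1, i1 = phi
    f2, i2 = psi
    return (lambda x: f1(f2(x)), lambda x: i2(i1(x)))

def act_points(phi, pts):
    """Action on point configurations: phi . (x_1, ..., x_N) = (phi(x_1), ..., phi(x_N))."""
    return [phi[0](x) for x in pts]

def act_image(phi, m):
    """Action on images: phi . m = m o phi^{-1}, returned as a new function."""
    inverse = phi[1]
    return lambda x: m(inverse(x))

identity = (lambda x: x, lambda x: x)
shift = (lambda x: x + 0.3, lambda x: x - 0.3)   # phi(x) = x + 0.3
scale = (lambda x: 2.0 * x, lambda x: 0.5 * x)   # psi(x) = 2x

pts = [0.0, 1.0, -0.5]
m = math.sin

# Check phi . (psi . m) = (phi o psi) . m on both kinds of objects.
lhs_pts = act_points(shift, act_points(scale, pts))
rhs_pts = act_points(compose(shift, scale), pts)
lhs_img = act_image(shift, act_image(scale, m))
rhs_img = act_image(compose(shift, scale), m)
print(lhs_pts, rhs_pts)
print(lhs_img(0.7), rhs_img(0.7))
```

The inverse is carried along explicitly because composing inverses in reverse order, (φ ∘ ψ)⁻¹ = ψ⁻¹ ∘ φ⁻¹, is exactly what makes m ↦ m ∘ φ⁻¹ a left action rather than a right one.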

2.4

Vector fields are functions $v: \Omega \to \mathbb{R}^d$. Given a time-dependent vector field, i.e., a function v: (t, y) ↦ v(t, y) defined on [0, 1] × Ω (arbitrarily choosing the time scale to be 1), one defines the ordinary differential equation (ODE) on Ω by $dy/dt = v(t, y)$. The flow associated to this equation is the function (t, x) ↦ φ(t, x) such that, for a given x, t ↦ φ(t, x) is the solution of the ODE with initial condition y(0) = x, which is uniquely defined under standard smoothness assumptions on v.

For a fixed t, the function x ↦ φ(t, x) is a diffeomorphism of Ω, provided v has no normal component at the boundary of Ω (if Ω has a boundary). We will also assume that v vanishes at infinity (if Ω is unbounded). This relation between vector fields and diffeomorphisms is a key component of the analysis, as well as an important generative tool for diffeomorphisms [7, 10]. We will use the notation $\varphi^v(t, \cdot)$ for the diffeomorphism obtained in this way.
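The construction of φ^v can be illustrated with a standard ODE solver. The following is a minimal sketch assuming a classical fixed-step Runge-Kutta (RK4) integrator and a hypothetical time-dependent field v(t, y) = t(−y₂, y₁) in the plane. This field rotates points with angular speed t, so φ^v(1, ·) is a rotation by the angle ∫₀¹ t dt = 0.5. Integrating the same ODE backward from t = 1 to t = 0 approximates the inverse diffeomorphism.

```python
import numpy as np

def v_field(t, y):
    """Hypothetical time-dependent field v(t, y) = t * (-y_2, y_1): rotation at angular speed t."""
    return t * np.array([-y[1], y[0]])

def flow(v, x, t0=0.0, t1=1.0, n_steps=200):
    """Approximate the solution at time t1 of dy/dt = v(t, y), y(t0) = x, with classical RK4."""
    y = np.asarray(x, dtype=float)
    h = (t1 - t0) / n_steps          # negative when integrating backward in time
    t = t0
    for _ in range(n_steps):
        k1 = v(t, y)
        k2 = v(t + h / 2, y + h / 2 * k1)
        k3 = v(t + h / 2, y + h / 2 * k2)
        k4 = v(t + h, y + h * k3)
        y = y + h / 6 * (k1 + 2 * k2 + 2 * k3 + k4)
        t += h
    return y

x = np.array([1.0, 2.0])
y = flow(v_field, x)                        # phi^v(1, x)
x_back = flow(v_field, y, t0=1.0, t1=0.0)   # backward integration approximates phi^v(1, .)^{-1}
print(y, x_back)
```

Any sufficiently accurate integrator would do. RK4 is used here only because it is short and accurate enough for the check against the exact rotation.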

2.5

If v is a C¹ vector field (not time-dependent), the transformation id + tv: x ↦ x + tv(x) is a diffeomorphism for t small enough. For an object m ∈ M, we can therefore define the small deformation (id + tv) · m. The derivative of this expression with respect to t, at t = 0, is called the infinitesimal action of v on m and is denoted v · m. So,

$$v\cdot m = \frac{d}{dt}\Big|_{t=0}\, (\mathrm{id} + tv)\cdot m.$$

Working out this definition with our examples gives $v\cdot m = (v(x_1), \dots, v(x_N))$ for configurations of points $m = (x_1, \dots, x_N)$, $v\cdot m = -\nabla m^T v$ for images and $v\cdot m = -\nabla\cdot(mv)$ for densities (where ∇m is the column vector of derivatives and ∇ · (mv) is the divergence of mv).
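The formulas for images and densities can be checked by finite differences in one dimension, where D(φ⁻¹) reduces to 1/φ′. The sketch below assumes arbitrary test functions m(x) = 2 + sin x (positive, so it can also serve as a density) and v(x) = 0.5 cos 2x. It inverts id + tv by fixed-point iteration, which converges because t·max|v′| < 1 for small t, and compares the difference quotients with −m′v and −(mv)′.

```python
import math

def m(x):
    return 2.0 + math.sin(x)        # a positive function: used both as an image and as a density

def dm(x):
    return math.cos(x)

def v(x):
    return 0.5 * math.cos(2.0 * x)  # a smooth 1D vector field

def dv(x):
    return -math.sin(2.0 * x)

def inverse_small(t, x, iters=50):
    """Solve y + t*v(y) = x by fixed-point iteration (a contraction when t*max|v'| < 1)."""
    y = x
    for _ in range(iters):
        y = x - t * v(y)
    return y

def image_action(t, x):
    """((id + t v) . m)(x) = m((id + t v)^{-1}(x))."""
    return m(inverse_small(t, x))

def density_action(t, x):
    """((id + t v) . m)(x) = m(y) / |1 + t v'(y)| with y = (id + t v)^{-1}(x)."""
    y = inverse_small(t, x)
    return m(y) / abs(1.0 + t * dv(y))

t = 1e-6
for x in [0.0, 0.4, 1.3, -2.0]:
    fd_image = (image_action(t, x) - m(x)) / t
    fd_density = (density_action(t, x) - m(x)) / t
    # compare with -m'(x) v(x) and -(m v)'(x) = -(m'(x) v(x) + m(x) v'(x))
    print(x, fd_image, -dm(x) * v(x), fd_density, -(dm(x) * v(x) + m(x) * dv(x)))
```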

2.6

We now introduce a dot product, and its associated norm, on vector fields. More precisely, we will consider a space V of vector fields on Ω and a dot product (v, w) ↦ ⟨v, w⟩_V on V, denoting $|v|_V^2 = \langle v, v\rangle_V$.

The norm on V is associated to an operator L, in the sense

$$\langle v, w\rangle_V = \int_\Omega (Lv)(x)^T\, w(x)\,dx. \qquad (1)$$

The previous equation must be understood in a generalized sense. Most of the time (not always) Lv will be a singular object (a generalized function) which can only be computed via its action on smooth functions.

If V is a Hilbert space, which we will assume without further comment, this operator is invertible with a very well-behaved inverse $K = L^{-1}$. For all our models, K is a smooth kernel operator, in the sense that there exists a function (x, y) ↦ K(x, y) such that

$$(Kw)(x) = \int_\Omega K(x, y)\, w(y)\,dy. \qquad (2)$$

(We make the common abuse of notation of using the same letter for the operator and the kernel.)

In full generality, K(., .) is matrix valued, although all our applications use a scalar kernel.
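To make (2) concrete, the following sketch discretizes the kernel operator on a uniform one-dimensional grid. It assumes a scalar Gaussian kernel K(x, y) = exp(−|x − y|²/(2σ²)) with σ = 0.5; the kernel and the value of σ are illustrative choices, not ones prescribed here. The integral is replaced by a Riemann sum, and applying K to a discontinuous function returns a smooth one.

```python
import numpy as np

def gaussian_kernel(x, y, sigma):
    """Scalar Gaussian kernel K(x, y) = exp(-(x - y)^2 / (2 sigma^2)) for 1D sample arrays."""
    return np.exp(-(x[:, None] - y[None, :]) ** 2 / (2.0 * sigma ** 2))

def apply_K(w, grid, sigma):
    """(K w)(x) = integral of K(x, y) w(y) dy, approximated by a Riemann sum on a uniform grid."""
    dy = grid[1] - grid[0]
    return gaussian_kernel(grid, grid, sigma) @ w * dy

grid = np.linspace(-10.0, 10.0, 2001)
sigma = 0.5
rough = np.sign(np.sin(3.0 * grid))      # a discontinuous w
smooth = apply_K(rough, grid, sigma)     # K w is a smooth function
```

As a sanity check, for w ≡ 1 on a grid that is large compared to σ, (Kw)(0) should be close to ∫ exp(−y²/(2σ²)) dy = σ√(2π).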

2.7

This space V and its norm is the final ingredient needed to define Riemannian geometric concepts over diffeomorphisms and shapes.

Again, it is not our intent to invoke any structured mathematical theory in this paper, especially that of infinite-dimensional Lie groups, which suits the present construction. One can understand the Riemannian metric in Riemannian geometry [9] as a way to generalize the concept of kinetic energy to curved spaces. Taking the case of diffeomorphisms, we can consider a trajectory t ↦ φ_t ∈ Diff(Ω). The associated velocity is ρ_t = dφ_t/dt. A Riemannian metric provides the associated energy, in the form

$$E(\rho_t) = |\rho_t|_{\varphi_t}^2$$

for some norm that may depend on the current position in the group.

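Let us write

$$\rho_t = v_t\circ\varphi_t,$$

where

$$v_t = \rho_t\circ\varphi_t^{-1} = \frac{d\varphi_t}{dt}\circ\varphi_t^{-1}.$$

The interpretation is that $v_t\,dt$ is the additional infinitesimal displacement required to pass from the deformation φ_t to φ_{t+dt}, since $\varphi_{t+dt} \approx (\mathrm{id} + v_t\,dt)\circ\varphi_t$. In deformation models inspired by fluid mechanics, the kinetic energy is assumed to depend only on this displacement (this assumes that the properties of the deformed medium remain invariant). This means that E(ρ_t) only depends on v_t, and we will take

$$E(\rho_t) = |v_t|_V^2 = \langle v_t, v_t\rangle_V.$$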

2.8

One then defines minimizing geodesics as trajectories with minimal energy between their endpoints. Using the ODE representation, minimizing geodesics between the identity map and ψ ∈ Diff(Ω) are given by $t\mapsto\varphi^v(t, \cdot)$ (with the notation introduced in section 2.4), where v is the time-dependent vector field solution of the variational problem:

$$\text{minimize } \int_0^1 |v_t|_V^2\,dt \quad\text{subject to}\quad \varphi^v(1, \cdot) = \psi.$$

Note that a v with finite energy such that $\varphi^v(1, \cdot) = \psi$ may not always exist. In fact, the ψ's for which such a v exists form a subgroup of Diff(Ω), which is specified by V and denoted G_V.

The geodesic distance between id and ψ is the square root of the above integral for the optimal v, and will be denoted d_V(id, ψ). So we have

$$d_V(\mathrm{id}, \psi)^2 = \inf\Big\{\int_0^1 |v_t|_V^2\,dt \;:\; \varphi^v(1, \cdot) = \psi\Big\}.$$

The geodesic distance between two diffeomorphisms φ and ψ is given by $d_V(\mathrm{id}, \psi\circ\varphi^{-1})$.

2.9

Since we interpret $|v|_V^2$ as a kinetic energy, we will, using (1), interpret Lv as a momentum. As we have remarked, this momentum can be a singular object. This interpretation has some physical support. The choice Lv = ρv, where ρ is a scalar density, is the momentum that leads to the Euler equation in fluid mechanics [2, 3] (when restricting to divergence-free flows). Also, taking for L the operator $\mathrm{Id} - \alpha^2\partial_x^2$ associated with the first-order Sobolev (H¹) norm, in one dimension, leads to the model that supports the Camassa-Holm equation for waves in shallow water [6].

2.10

Before passing to the next section, it remains to describe how this structure on groups of diffeomorphisms “projects” on the object space M via the group action.

The simplest way is to start with the geodesic distance. We define

$$d_V(m, m') = \inf\{d_V(\mathrm{id}, \varphi) : \varphi\in G_V,\ \varphi\cdot m = m'\}.$$

(We use the same notation dV for both distances, which should not cause any confusion since they apply to different variables.)

So the geodesic distance on M coincides with the “Procrustean distance”, which consists in finding the least-cost deformation that takes m to m′.

The Riemannian structure on M requires, as we have seen, the definition, for all m, of a norm $|\xi|_m$ (derived from a dot product), where $\xi = dm_t/dt$ for some trajectory on M passing through m. This norm is in fact given by the infinitesimal version of the definition of the distance, namely,

$$|\xi|_m = \min\{|v|_V : v\in V,\ v\cdot m = \xi\}.$$

The vector field v that achieves this minimum is called the horizontal lift of ξ and will be denoted $v_\xi$ (or $v^m_\xi$ if the dependency on m is not clear). With this notation, we have

$$|\xi|_m^2 = |v_\xi|_V^2 = \langle v_\xi, v_\xi\rangle_V. \qquad (3)$$

A vector field v is called horizontal at m if it coincides with $v_\xi$ for ξ = v · m (that is, if it is the optimal infinitesimal deformation for the variation of m that it generates).
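For point sets, v · m = (v(x₁), …, v(x_N)), so the horizontal lift of ξ is the minimal-norm field of V that interpolates ξ at the points. For a reproducing kernel this minimizer is the kernel expansion v_ξ = Σ_k K(·, x_k)α_k, with the α_k solving Σ_l K(x_k, x_l)α_l = ξ_k. The sketch below assumes a scalar Gaussian kernel of width 1, and the landmarks and ξ are arbitrary. It computes α and |ξ|²_m = Σ_{k,l} α_kᵀK(x_k, x_l)α_l, and checks that adding an extra kernel center can only increase the norm of an interpolating field.

```python
import numpy as np

def gram(x, y, sigma=1.0):
    """Matrix of scalar Gaussian kernel values K(x_k, y_l) for point arrays x (N, d), y (M, d)."""
    d2 = ((x[:, None, :] - y[None, :, :]) ** 2).sum(axis=-1)
    return np.exp(-d2 / (2.0 * sigma ** 2))

def horizontal_lift(x, xi, sigma=1.0):
    """Coefficients alpha of v_xi = sum_k K(., x_k) alpha_k, chosen so that v_xi(x_k) = xi_k."""
    return np.linalg.solve(gram(x, x, sigma), xi)

def evaluate(z, x, alpha, sigma=1.0):
    """Evaluate v(z_j) = sum_k K(z_j, x_k) alpha_k at the rows of z."""
    return gram(z, x, sigma) @ alpha

def shape_norm2(x, xi, sigma=1.0):
    """|xi|_m^2 = |v_xi|_V^2 = sum_{k,l} alpha_k^T K(x_k, x_l) alpha_l."""
    alpha = horizontal_lift(x, xi, sigma)
    return float(np.sum(alpha * (gram(x, x, sigma) @ alpha)))

x = np.array([[0.0, 0.0], [1.0, 0.0], [0.0, 1.5]])     # landmarks m
xi = np.array([[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]])    # a tangent vector at m
alpha = horizontal_lift(x, xi)
print(shape_norm2(x, xi))
```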

We will discuss in section 4 how geodesics in M relate to geodesics in Diff(Ω).

3. Gradient Descent

3.1

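We now discuss gradient descent equations in Diff(Ω) and in shape spaces. Let us first consider a function E: φ ↦ E(φ) defined on diffeomorphisms. The gradient of E with respect to the Riemannian metric introduced in section 2.7 is defined by the identity

$$\langle\nabla E(\varphi), \delta\varphi\rangle_\varphi = \frac{d}{d\varepsilon}E(\varphi + \varepsilon\,\delta\varphi)\Big|_{\varepsilon=0}$$

for any small variation δφ of φ (within a suitable class). From the definition of the dot product, this identity can be rewritten

$$\langle\nabla E(\varphi)\circ\varphi^{-1}, \delta\varphi\circ\varphi^{-1}\rangle_V = \frac{d}{d\varepsilon}E(\varphi + \varepsilon\,\delta\varphi)\Big|_{\varepsilon=0}$$

or, letting $v = \delta\varphi\circ\varphi^{-1}$ (so that $\varphi + \varepsilon\,\delta\varphi = (\mathrm{id} + \varepsilon v)\circ\varphi$),

$$\frac{d}{d\varepsilon}E\big((\mathrm{id} + \varepsilon v)\circ\varphi\big)\Big|_{\varepsilon=0} = \langle\nabla E(\varphi)\circ\varphi^{-1}, v\rangle_V. \qquad (4)$$

The left-hand side of (4) is often called the shape derivative of E at φ [8]. If the gradient exists, both sides are continuous linear forms in v (for the V norm).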

3.2

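To implement gradient descent with respect to this metric, the first step is to identify a function φ ↦ S(φ) ∈ V such that

$$\langle S(\varphi), v\rangle_V = \frac{d}{d\varepsilon}E\big((\mathrm{id} + \varepsilon v)\circ\varphi\big)\Big|_{\varepsilon=0}\quad\text{for all } v\in V,$$

so that $\nabla E(\varphi) = S(\varphi)\circ\varphi$. Gradient descent is then simply

$$\frac{d\varphi_t}{dt} = -S(\varphi_t)\circ\varphi_t. \qquad (5)$$

We will see some simple examples of this construction [25] in the following sections. One important property of this equation is that, letting $v_t = -S(\varphi_t)$, it becomes $d\varphi_t/dt = v_t\circ\varphi_t$, so that φ_t is the flow associated to the time-dependent vector field v_t. As noticed in section 2.4, this implies that φ_t is a diffeomorphism, at least as long as v_t remains “well behaved”.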

3.3

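One can apply this to image matching and in fact retrieve an algorithm proposed in [7]. Consider two images m and m′ defined on Ω and the matching function

$$E(\varphi) = \int_\Omega \big(m\circ\varphi^{-1}(x) - m'(x)\big)^2\,dx.$$

Then, since $(\mathrm{id} + \varepsilon v)^{-1} = \mathrm{id} - \varepsilon v + o(\varepsilon)$, the shape derivative is given by

$$\frac{d}{d\varepsilon}E\big((\mathrm{id} + \varepsilon v)\circ\varphi\big)\Big|_{\varepsilon=0} = -2\int_\Omega \big(m\circ\varphi^{-1} - m'\big)\,\nabla(m\circ\varphi^{-1})^T v\,dx.$$

From the definition of the dot product in V (see equation (1)), we see that this implies

$$L S(\varphi) = -2\,\big(m\circ\varphi^{-1} - m'\big)\,\nabla(m\circ\varphi^{-1})$$

or (since $K = L^{-1}$)

$$S(\varphi) = -2\,K\Big(\big(m\circ\varphi^{-1} - m'\big)\,\nabla(m\circ\varphi^{-1})\Big). \qquad (6)$$

When $L = (\alpha\,\mathrm{Id} - \Delta)^k$ (where Δ is the Laplacian), the associated gradient descent evolution is the equation proposed in [7]. Note that, because the minimized energy does not include any control of the smoothness of the diffeomorphism, the evolution cannot be expected to stabilize and may develop singularities in the long run. If used as such, it must be combined with an appropriate stopping rule [25].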
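The gradient descent for image matching can be sketched in one dimension with the momentum LS(φ) = −2(m ∘ φ⁻¹ − m′)∇(m ∘ φ⁻¹) and S(φ) = K(LS(φ)), as derived above. The sketch makes several illustrative assumptions. The images are two Gaussian bumps on [0, 1], and K is a Gaussian kernel of width 0.1 applied by a Riemann sum. The inverse map φ⁻¹ is tracked on the grid, and the flow is discretized by explicit Euler steps φ ← (id + dt v) ∘ φ with linear interpolation. As the text warns, a stopping rule would be needed in practice; here the loop simply runs a fixed number of steps.

```python
import numpy as np

n = 401
grid = np.linspace(0.0, 1.0, n)
dx = grid[1] - grid[0]

def bump(center, width=0.08):
    return np.exp(-(grid - center) ** 2 / (2.0 * width ** 2))

m, m_target = bump(0.4), bump(0.5)          # template m and target m'
sigma = 0.1                                 # width of the Gaussian kernel K (assumed)
K = np.exp(-(grid[:, None] - grid[None, :]) ** 2 / (2.0 * sigma ** 2))

def energy(psi):
    """E(phi) = integral of (m o phi^{-1} - m')^2, where psi samples phi^{-1} on the grid."""
    deformed = np.interp(psi, grid, m)
    return float(np.sum((deformed - m_target) ** 2) * dx)

def descent_step(psi, dt):
    """One explicit Euler step of the gradient flow; returns the updated phi^{-1}."""
    deformed = np.interp(psi, grid, m)               # m o phi^{-1}
    grad = np.gradient(deformed, dx)                 # its spatial derivative
    momentum = -2.0 * (deformed - m_target) * grad   # L S(phi)
    v = -(K @ momentum) * dx                         # v = -S(phi) = -K(L S(phi)), Riemann sum
    # phi <- (id + dt v) o phi, so phi^{-1} <- phi^{-1} o (id + dt v)^{-1} ~ phi^{-1}(x - dt v(x))
    return np.interp(grid - dt * v, grid, psi)

psi = grid.copy()                                    # phi_0 = id
energies = [energy(psi)]
for _ in range(200):
    psi = descent_step(psi, dt=0.005)
    energies.append(energy(psi))
print(energies[0], energies[-1])
```

Because each update composes ψ with a small increasing map (the step is small compared to 1/max|v′|), the sampled φ⁻¹ stays strictly increasing, a discrete counterpart of the diffeomorphism property noted in section 3.2.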

3.4

For the sake of illustration, let's describe what a gradient descent evolution would be for a penalized cost function of the form

(with the matrix norm $|A|^2 = \mathrm{trace}(AA^T)$ in the penalty term). This will result in a function $S(\varphi) = S_1(\varphi) + \lambda S_2(\varphi)$, with $S_1$ given by (6). For $S_2$, we have

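To compute S_2, a first option is to integrate the above integral by parts and obtain the dot product of some function with v, which can be handled as before. Another option, which we now detail, is to use the kernel formulation and make a direct computation. Indeed, if K is smooth enough (and scalar, as in our applications), one can prove the identity (for a matrix-valued function y ↦ A(y))

$$\int_\Omega \mathrm{trace}\big(A(y)^T Dv(y)\big)\,dy = \Big\langle \int_\Omega A(y)\,\nabla_1 K(y, \cdot)\,dy,\ v\Big\rangle_V,$$

which follows by differentiating $v(y) = \int_\Omega K(y, x)\,(Lv)(x)\,dx$ under the integral sign and using (1). Here ∇₁K is the gradient of K with respect to its first variable, and the integral in the right side term represents the function

$$x\mapsto\int_\Omega A(y)\,\nabla_1 K(y, x)\,dy.$$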

Using this, we can write

3.5

We can also use gradient descent in the group of diffeomorphisms to implement segmentation algorithms. Assuming that the topology of the extracted object is known, we can represent the target object as a deformation φ · m of a template (or initial guess) m. Let, for example, m be a binary image, representing a 3D volume (so that $\varphi\cdot m = m\circ\varphi^{-1}$), and define

where f is some function, defined on the observed image, quantifying the relative likelihood for a point with coordinate y to belong to the interior of the shape rather than to the exterior. Assuming that f is smooth, and writing

we have, with a standard computation [8],

This expression can be used to compute the gradient of E for the group metric. If m is the interior of a surface, we can write

where Nφ is the unit normal to the deformed boundary, φ(∂m), and σφ the volume form on this boundary. This yields ∇E(φ) = S(φ) ∘ φ with

3.6

Here is an alternative approach, in which we use an additional constraint that discretizes the gradient using some form of finite elements.

Let x1, …, xN be control points that have been defined relative to the reference shape m. We want to compute the gradient under the additional restriction that it be finitely generated, in the sense that it can be written in the form with

The natural (and optimal) choice is to let be the orthogonal projection of S(φ) on the vector space

for the dot product ⟨., .⟩V (provided, of course, that K0 is chosen such that W ⊂ V). Let e1, …, ed be the canonical basis of $\mathbb{R}^d$. Since the family of vector fields

generates W, we need, to compute the orthogonal projection, to solve the linear system of equations

| (7) |

This system takes a very simple form when , i.e.

where is another kernel. In this case, (7) gives

| (8) |

The projected gradient descent can then be implemented as

where the $\alpha_k$'s solve System (8) at each time t.
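The projection step can be illustrated in the simplest special case K₀ = K, which is an assumption made only for this sketch and not the Gaussian choice with σ₀ > σ discussed next. In that case ⟨S, K(·, x_k)e_i⟩_V is simply the i-th coordinate of S(x_k), so system (7) becomes the interpolation problem Σ_l K(x_k, x_l)α_l = S(x_k). The gradient S is taken to be a hypothetical finitely generated field with random centers, so that all V-norms can be computed exactly. The code then checks the Pythagorean identity that characterizes an orthogonal projection.

```python
import numpy as np

def gram(x, y, sigma=1.0):
    """Scalar Gaussian kernel matrix K(x_k, y_l) for point arrays x (N, d) and y (M, d)."""
    d2 = ((x[:, None, :] - y[None, :, :]) ** 2).sum(axis=-1)
    return np.exp(-d2 / (2.0 * sigma ** 2))

rng = np.random.default_rng(0)
y = rng.normal(size=(6, 2))        # centers of a hypothetical gradient S = sum_j K(., y_j) gamma_j
gamma = rng.normal(size=(6, 2))
x = rng.normal(size=(3, 2))        # control points in the current configuration

# With K0 = K, <S, K(., x_k) e_i>_V = S(x_k)_i, so (7) reads sum_l K(x_k, x_l) alpha_l = S(x_k).
S_at_x = gram(x, y) @ gamma
alpha = np.linalg.solve(gram(x, x), S_at_x)    # projection: sum_k K(., x_k) alpha_k

norm2_S = np.sum(gamma * (gram(y, y) @ gamma))      # |S|_V^2
norm2_P = np.sum(alpha * (gram(x, x) @ alpha))      # |projection|_V^2
cross = np.sum(gamma * (gram(y, x) @ alpha))        # <S, projection>_V
residual2 = norm2_S - 2.0 * cross + norm2_P         # |S - projection|_V^2
print(norm2_S, norm2_P, residual2)
```

The projected gradient never has a larger V-norm than the full one, which is one reason this finitely generated descent direction is well behaved.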

This is particularly simple with Gaussian kernels. Defining

for , we can take (with σ0 > σ)

(using well known properties of the convolution of Gaussian kernels) and (8) becomes

| (9) |

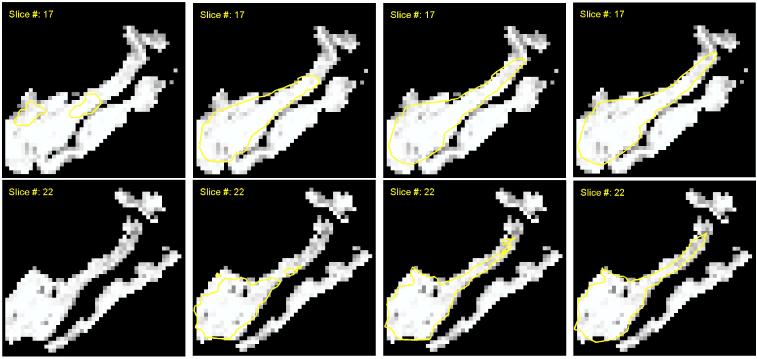

A preliminary experiment using this method is provided in figure 1.

Figure 1.

Evolution of a 3D diffeomorphic active contour in the hippocampal region. Two sections are provided (rows 1 and 2). The first image shows the position at the initial time, and is followed by two images at intermediate times and the final image after convergence. The grey levels are proportional to estimated probabilities of grey matter using the AKM method [22]. Fifty control points were used in this experiment.

3.7

We now briefly discuss the relation between the previous gradients in the group of diffeomorphisms and the projected metric on shapes that we have discussed in section 2.10.

Given a function defined on shapes, m ↦ F(m), we can always write m = φ · m0 and proceed as before for the minimization of E(φ) = F(φ · m0). It is easy to prove that the function S(φ) then only depends on m = φ · m0, so that we can write the whole computation in terms of F only, solving

$$\langle H(m), v\rangle_V = \frac{d}{d\varepsilon}F\big((\mathrm{id} + \varepsilon v)\cdot m\big)\Big|_{\varepsilon=0}\quad\text{for all } v\in V$$

to obtain H(m) ∈ V and defining the evolution

$$\frac{dm_t}{dt} = -H(m_t)\cdot m_t.$$

This approach is identical to gradient descent in the space of shapes with the metric defined in section 2.10.
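For landmarks, the shape-space gradient descent becomes an explicit ODE. The sketch below takes a hypothetical matching function F(m) = ½Σ_k |x_k − y_k|² toward target points y_k. Since the derivative of F in the direction v is Σ_k (x_k − y_k)ᵀv(x_k), the reproducing property gives H(m) = Σ_k K(·, x_k)(x_k − y_k). The evolution dm/dt = −H(m) · m then reads dx_k/dt = −Σ_l K(x_k, x_l)(x_l − y_l). A Gaussian kernel of width 1, the particular targets and the explicit Euler scheme are all illustrative assumptions.

```python
import numpy as np

def gram(x, sigma=1.0):
    """Scalar Gaussian kernel matrix K(x_k, x_l)."""
    d2 = ((x[:, None, :] - x[None, :, :]) ** 2).sum(axis=-1)
    return np.exp(-d2 / (2.0 * sigma ** 2))

x0 = np.array([[0.0, 0.0], [1.0, 0.0], [0.0, 1.0], [1.0, 1.0]])
y = 1.2 * x0 + np.array([0.3, -0.2])           # hypothetical target landmarks

def F(x):
    """F(m) = (1/2) sum_k |x_k - y_k|^2."""
    return 0.5 * float(np.sum((x - y) ** 2))

def H_coeffs(x):
    """Coefficients of H(m) = sum_k K(., x_k)(x_k - y_k)."""
    return x - y

x = x0.copy()
values = [F(x)]
dt = 0.05
for _ in range(200):
    # dm/dt = -H(m) . m, i.e. dx_k/dt = -sum_l K(x_k, x_l)(x_l - y_l); explicit Euler step
    x = x - dt * gram(x) @ H_coeffs(x)
    values.append(F(x))
print(values[0], values[-1])
```

Compared with the plain Euclidean gradient x_k − y_k, the kernel couples nearby landmarks, so the points move coherently, as a smooth deformation of the plane would move them.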

3.8

Gradient descent on the area can also be used to build flows that can smooth surfaces without changing their topology [32]. Letting m be an initial surface, the goal is to build a diffeomorphic evolution φt · m minimizing the area functional

Here, we slightly deviate from the general formulation to make a small change in (3), defining

with

Computing gradient descent with this metric provides, for a closed surface, an area-minimizing flow of the form (for a point p ∈ m)

| (10) |

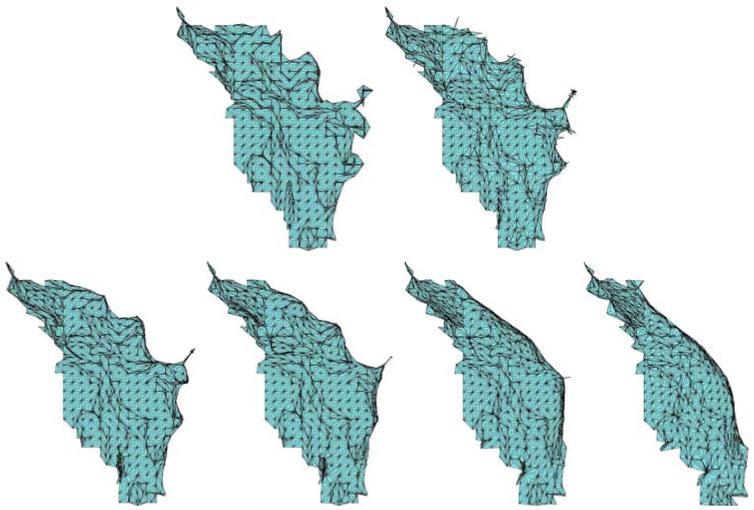

where H is the mean curvature and N the normal to the surface. (A strict application of 3.7 would correspond to taking ρ = 1.) Figure 2 provides an example of applying this algorithm to a surface (which is a piece of cortical surface located within the cingulate gyrus), compared to standard mean curvature flow (dp/dt = HN).

Figure 2.

Diffeomorphic mean curvature flow: a piece of cortical surface (First row, left) is smoothed using area minimizing flows. The right image in the first row shows the result of the classic mean curvature flow after t = 1, with already formed singularities. The second row shows the evolution of diffeomorphic mean curvature flow (10) at times t = 1, 2, 5, 10.

4. Geodesic Equations

4.1

We now switch to the equations that define “straight lines” in our manifolds. These are the Euler-Lagrange equations associated with the construction of paths with minimal kinetic energy between two given points (diffeomorphisms or shapes).

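In the group of diffeomorphisms, these equations have been described in [2, 3, 12, 16], and for the particular application to template matching in [19]. They are associated with the following evolution of the momentum

$$\frac{\partial}{\partial t}(Lv_t) + (Dv_t)^T Lv_t + D(Lv_t)\,v_t + (\nabla\cdot v_t)\,Lv_t = 0, \qquad (11)$$

combined with dφt/dt = vt ∘ φt. Equation (11) must be understood in a generalized sense, and can be rewritten as follows: for every smooth (time-independent) vector field w,

$$\frac{d}{dt}(Lv_t \mid w) = \big(Lv_t \mid Dw\,v_t - Dv_t\,w\big), \qquad (12)$$

where $(Lv\mid w) = \int_\Omega (Lv)^T w\,dx$ denotes the action of the momentum Lv on w.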

We will follow [12] and refer to Equation (11) as the EPDiff equation (it relates to the Euler-Poincaré theory applied to diffeomorphisms [16]).

Geodesics in a Riemannian manifold are curves that obey the geodesic equations (EPDiff in the case of diffeomorphisms). There is a risk of confusion with what we have defined as minimizing geodesics, which are solutions of the shortest path variational problem. Minimizing geodesics satisfy the geodesic equations, since these are the Euler-Lagrange equations for the shortest path. But not all geodesics are minimizing between their endpoints, although they usually are minimizing between points of their trajectory that are sufficiently close to each other.

4.2

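For further reference, we note that, letting $a_t = Lv_t$, the evolution of the diffeomorphism under the EPDiff equation can be written as follows,

$$\frac{d\varphi_t}{dt} = v_t\circ\varphi_t,\qquad v_t = K a_t,\qquad \frac{da_t}{dt} = -\big((Dv_t)^T a_t + (Da_t)\,v_t + (\nabla\cdot v_t)\,a_t\big). \qquad (13)$$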

4.3

EPDiff provides an important representation of diffeomorphisms via the Riemannian exponential map. This map takes as input an initial velocity v0 (or the corresponding momentum Lv0) and solves the EPDiff equation until time t = 1 (one can show that EPDiff has solutions over arbitrary time intervals provided K is smooth enough). The output of the map is the final diffeomorphism, φ1. We therefore have a representation

$$Lv_0 \longmapsto \varphi_1 = \exp_{\mathrm{id}}(v_0)$$

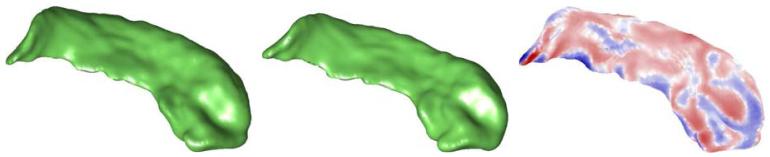

that relates the endpoint of a geodesic to the initial momentum. This is the momentum representation of the deformation. An example of this representation with images is provided in Figure 3.

Figure 3.

Visualizing the momentum on a binary volume. Left: template image; center: target image; right: momentum superimposed on the template image. The momentum can then be used as a deformation signature for shape studies.

4.4

EPDiff is a central equation in computational anatomy. A first reason for this is that it coincides with the Euler-Lagrange equation for the Large Deformation Diffeomorphic Metric Matching algorithm (LDDMM), which minimizes an energy that takes the form

$$E(\varphi) = d_V(\mathrm{id}, \varphi)^2 + U(\varphi),$$

where the data attachment term U is similar to the ones we have discussed above. Since the computation of d_V(id, φ) is a variational problem itself, any solution of the LDDMM problem must optimize over geodesics in some way ([15, 28, 4, 1, 27, 30]), which involves EPDiff.

One of the by-products is that solving the LDDMM problem automatically provides the momentum representation of the optimal diffeomorphism φ. This representation can then be used to analyze anatomical datasets. This approach has been used with hippocampi [28], in relation to Alzheimer's disease [29], or with the cingulate gyrus, in relation to schizophrenia [24]. Cardiac studies using this method have also been developed in [11].

4.5

We now discuss the geodesic equations in the object space, M. Because the Riemannian structure on M has been defined as the projection of the one on diffeomorphisms, geodesics on M are directly obtained from geodesics on GV. More precisely, a geodesic starting from m0 ∈ M must take the form mt = φt · m0, such that

(i) t ↦ φt is a geodesic on GV (it satisfies EPDiff);

(ii) the initial velocity at time t = 0 is horizontal at m0, as defined in section 2.10.

Property (ii) propagates over time: if it is true, then vt is horizontal at mt for all t.

Because horizontal vector fields have specific forms for specific types of objects, we will be able to simplify the projection of the EPDiff equation in an object-dependent manner. Note that, in spite of the apparent difference in their form, the equations that follow are all instances of the EPDiff equation with a specific choice for the initial momentum.

4.6

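When objects M are point sets, horizontal vector fields at m = (x1, …, xN) must take the form

$$v(x) = \sum_{k=1}^N K(x, x_k)\,\alpha_k,\qquad \alpha_1, \dots, \alpha_N\in\mathbb{R}^d. \qquad (14)$$

Working out the EPDiff equation for such vector fields yields the following geodesic equations on M, which form a system of ordinary differential equations. These equations are written in terms of mt = (x1(t), …, xN(t)) and at = (α1(t), …, αN(t)), with (for a scalar kernel)

$$\begin{aligned}
\frac{dx_k}{dt} &= v_t(x_k(t)),\\
v_t &= \sum_{l=1}^N K(\cdot, x_l(t))\,\alpha_l(t),\\
\frac{d\alpha_k}{dt} &= -\sum_{l=1}^N \big(\alpha_k(t)^T\alpha_l(t)\big)\,\nabla_1 K(x_k(t), x_l(t)),
\end{aligned}\qquad k = 1, \dots, N. \qquad (15)$$

Here, ∇1K represents the gradient of (x, y) ↦ K(x, y) with respect to the first coordinate, x.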

The momentum representation for landmarks is captured by the family of d-dimensional vectors a0. The initial conditions (m0, a0) uniquely specify the values of mt and at at all times.

Note that the momentum Lv associated to a vector field given by (14) is singular. In fact, in this case, we have (with some abuse of notation)

$$Lv = \sum_{k=1}^N \alpha_k\,\delta_{x_k},\quad\text{that is,}\quad \int_\Omega (Lv)^T w\,dx = \sum_{k=1}^N \alpha_k^T w(x_k)$$

for any w ∈ V. The relation between such singular solutions (sometimes called peakons) and soliton waves in fluid mechanics has been described in [13]. A numerical analysis of the stability of these equations is given in [17].
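The landmark geodesic system can be integrated directly. The sketch below assumes a scalar Gaussian kernel K(x, y) = exp(−|x − y|²/(2σ²)), so that ∇₁K(x, y) = −(x − y)K(x, y)/σ², and a fixed-step RK4 scheme; both are illustrative choices. It shoots the geodesic dx_k/dt = Σ_l K(x_k, x_l)α_l, dα_k/dt = −Σ_l (α_kᵀα_l)∇₁K(x_k, x_l) from (m₀, a₀) over [0, 1]. Two sanity checks follow from the equations. The energy ½|v_t|²_V = ½Σ_{k,l} α_kᵀK(x_k, x_l)α_l, computed from the singular form of Lv, should be conserved along the geodesic, and so should the total momentum Σ_k α_k for a translation-invariant kernel.

```python
import numpy as np

SIGMA = 1.0  # width of the scalar Gaussian kernel (assumed)

def kernel(x):
    """Gram matrix K(x_k, x_l) and pairwise differences x_k - x_l."""
    diff = x[:, None, :] - x[None, :, :]
    K = np.exp(-(diff ** 2).sum(axis=-1) / (2.0 * SIGMA ** 2))
    return K, diff

def rhs(x, alpha):
    """Right-hand side of the landmark geodesic equations for a Gaussian kernel."""
    K, diff = kernel(x)
    dx = K @ alpha                                     # v(x_k) = sum_l K(x_k, x_l) alpha_l
    grad1K = -diff / SIGMA ** 2 * K[:, :, None]        # grad_1 K(x_k, x_l)
    dots = alpha @ alpha.T                             # alpha_k^T alpha_l
    dalpha = -(dots[:, :, None] * grad1K).sum(axis=1)  # -sum_l (alpha_k^T alpha_l) grad_1 K(x_k, x_l)
    return dx, dalpha

def hamiltonian(x, alpha):
    """(1/2)|v|_V^2 = (1/2) sum_{k,l} alpha_k^T K(x_k, x_l) alpha_l."""
    K, _ = kernel(x)
    return 0.5 * float(np.sum(alpha * (K @ alpha)))

def shoot(x0, alpha0, n_steps=100):
    """Integrate the geodesic on [0, 1] with RK4; returns (x_1, alpha_1)."""
    x, a = x0.astype(float), alpha0.astype(float)
    h = 1.0 / n_steps
    for _ in range(n_steps):
        k1 = rhs(x, a)
        k2 = rhs(x + h / 2 * k1[0], a + h / 2 * k1[1])
        k3 = rhs(x + h / 2 * k2[0], a + h / 2 * k2[1])
        k4 = rhs(x + h * k3[0], a + h * k3[1])
        x = x + h / 6 * (k1[0] + 2 * k2[0] + 2 * k3[0] + k4[0])
        a = a + h / 6 * (k1[1] + 2 * k2[1] + 2 * k3[1] + k4[1])
    return x, a

x0 = np.array([[0.0, 0.0], [1.0, 0.0], [0.0, 1.0]])
a0 = np.array([[1.0, 0.0], [0.0, 0.5], [-0.5, 0.2]])
x1, a1 = shoot(x0, a0)
print(hamiltonian(x0, a0), hamiltonian(x1, a1))
```

With a single landmark, ∇₁K(x, x) = 0, so the momentum stays constant and the point moves in a straight line, x₁ = x₀ + α₀.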

4.7

When objects are images, horizontal vector fields are characterized by the property that Lv is orthogonal to the level lines of the image. (The formal statement, which includes singular momenta, is that $(Lv\mid w) = \int_\Omega (Lv)^T w\,dx$, understood in the generalized sense, vanishes for any test vector field w that is parallel to these level sets.)

An interesting example is when Lv0(x) is given by a0(x)∇m0(x). This is a function in the classical sense, parallel to the gradient of m0. (This is interesting because one can show that solutions of the image matching LDDMM problem take this form [5, 20].) Then the geodesic equations on M are

$$\begin{aligned}
\partial_t m_t + \nabla m_t^T v_t &= 0,\\
v_t &= K(a_t\nabla m_t),\\
\partial_t a_t + \nabla\cdot(a_t v_t) &= 0.
\end{aligned}\qquad (16)$$

4.8

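Equations for densities are very similar. Horizontal momenta at m now take the form m∇a: such a momentum vanishes on every test vector field w with ∇ · (mw) = 0, i.e., on every w that leaves m infinitesimally unchanged. When Lv0 = m0∇a0, the evolution equations are

$$\begin{aligned}
\partial_t m_t + \nabla\cdot(m_t v_t) &= 0,\\
v_t &= K(m_t\nabla a_t),\\
\partial_t a_t + \nabla a_t^T v_t &= 0.
\end{aligned}\qquad (17)$$

So the roles of m and a are reversed compared to the image case: m now satisfies the continuity equation and a the transport equation.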

4.9

Note that all the preceding geodesic equations take the form

$$\frac{dm_t}{dt} = H(m_t, v_t),\qquad v_t = F(m_t, a_t),\qquad \frac{da_t}{dt} = G(m_t, a_t, v_t), \qquad (18)$$

where the functions F, G, H are linear in a and v, but not necessarily in m. (This also includes the case of diffeomorphisms, simply replacing m by φ.) The function t ↦ at can be interpreted as a representation for horizontal momenta, via the relation Lv = LF(m, a).

4.10

An interesting variant of the preceding three geodesic equations comes from the theory of metamorphosis. Our models so far only move the objects along trajectories that correspond to diffeomorphic transformations. Taking the case of images, it is impossible, with this approach, to alter topology along geodesics, say to move from a sphere-like object to a torus.

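Metamorphosis [21, 26, 14] provides a mechanism that allows the evolution to deviate from pure deformations. The first equation in systems (15), (16), (17) is a conservation (advection) equation of the deformed object, mt, along the flow. The metamorphosis equations introduce a right-hand side to this equation, in order to provide a slightly inexact advection. The landmark equations, for example, become, introducing a parameter σ²,

$$\begin{aligned}
\frac{dx_k}{dt} &= v_t(x_k(t)) + \sigma^2\alpha_k(t),\\
v_t &= \sum_{l=1}^N K(\cdot, x_l(t))\,\alpha_l(t),\\
\frac{d\alpha_k}{dt} &= -\sum_{l=1}^N \big(\alpha_k(t)^T\alpha_l(t)\big)\,\nabla_1 K(x_k(t), x_l(t)).
\end{aligned}\qquad (19)$$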

For images, they are

| (20) |

and for densities

| (21) |

These equations have the fundamental property of remaining geodesic equations in the relevant object spaces (for different metrics, obviously). The parameter σ² is a weight defining how close to a pure deformation the evolution will be; σ² = 0 gives back the pure deformation equations.
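A landmark metamorphosis can be shot in the same way as a pure landmark geodesic. The sketch below assumes that the system has the form dx_k/dt = v(x_k) + σ²α_k, with v = Σ_l K(·, x_l)α_l and the momentum equation unchanged from the pure-deformation case. It also assumes a Gaussian kernel of width 1 and RK4 time stepping. Under this assumption the system is Hamiltonian, with H = ½Σ_{k,l} α_kᵀK(x_k, x_l)α_l + (σ²/2)Σ_k |α_k|², so H should be conserved. For a single landmark the point moves at speed (1 + σ²)α₀ instead of α₀.

```python
import numpy as np

WIDTH = 1.0  # width of the scalar Gaussian kernel (assumed)

def gaussian_gram(x):
    """Gram matrix K(x_k, x_l) and pairwise differences x_k - x_l."""
    diff = x[:, None, :] - x[None, :, :]
    return np.exp(-(diff ** 2).sum(axis=-1) / (2.0 * WIDTH ** 2)), diff

def rhs(x, alpha, sigma2):
    """Assumed landmark metamorphosis system: advection with an extra sigma2 * alpha_k term."""
    K, diff = gaussian_gram(x)
    dx = K @ alpha + sigma2 * alpha                    # v(x_k) + sigma2 * alpha_k
    grad1K = -diff / WIDTH ** 2 * K[:, :, None]        # grad_1 K(x_k, x_l)
    dalpha = -((alpha @ alpha.T)[:, :, None] * grad1K).sum(axis=1)
    return dx, dalpha

def hamiltonian(x, alpha, sigma2):
    """(1/2) sum_{k,l} alpha_k^T K(x_k, x_l) alpha_l + (sigma2 / 2) sum_k |alpha_k|^2."""
    K, _ = gaussian_gram(x)
    return 0.5 * float(np.sum(alpha * (K @ alpha))) + 0.5 * sigma2 * float(np.sum(alpha ** 2))

def shoot(x0, alpha0, sigma2, n_steps=100):
    """RK4 integration of the assumed metamorphosis system on [0, 1]."""
    x, a = x0.astype(float), alpha0.astype(float)
    h = 1.0 / n_steps
    for _ in range(n_steps):
        k1 = rhs(x, a, sigma2)
        k2 = rhs(x + h / 2 * k1[0], a + h / 2 * k1[1], sigma2)
        k3 = rhs(x + h / 2 * k2[0], a + h / 2 * k2[1], sigma2)
        k4 = rhs(x + h * k3[0], a + h * k3[1], sigma2)
        x = x + h / 6 * (k1[0] + 2 * k2[0] + 2 * k3[0] + k4[0])
        a = a + h / 6 * (k1[1] + 2 * k2[1] + 2 * k3[1] + k4[1])
    return x, a

x0 = np.array([[0.0, 0.0], [1.0, 0.0], [0.0, 1.0]])
a0 = np.array([[1.0, 0.0], [0.0, 0.5], [-0.5, 0.2]])
x1, a1 = shoot(x0, a0, sigma2=0.5)
print(hamiltonian(x0, a0, 0.5), hamiltonian(x1, a1, 0.5))
```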

5. Parallel Translation

5.1

We have noticed in paragraph 4.4 that the momentum representation (exponential map) is an important tool that can provide a normalized representation of a family of shapes relative to a fixed template. There are cases, however, in which the registration of all data to a single template may not be the most natural first step in a study. Consider, as an example, the situation in which the data is paired (each subject is associated to two shapes, say left and right), and the issue is to decide whether the two shapes are statistically identical or exhibit some asymmetry.

One possibility is to register all shapes to a template and design a statistical study of the asymmetry in terms of the momentum representation. A more direct and natural approach, however, would be to register the left shape to the right one and vice versa, and then measure whether the two processes are identical or not.

With LDDMM, the registrations result in momenta sitting on (or horizontal at) the left shape of each subject (for the left to right registrations) and on the right shape (for the right to left). These momenta are not directly comparable and need to be aligned in order to be horizontal at a single template. The operation that does this with a minimal metric distortion is parallel translation along geodesics, which is the subject of this section. It is important to notice that this operation is done after the within-subject difference has been measured, and not before. This reduces the risk of having this difference mixed with between-subject variation, which is likely to occur if the template registration is performed first.

Finally, let's emphasize that these ideas directly generalize to situations beyond asymmetry. They can be applied to any case in which several shapes are observed for each subject, and the elements of interest are relative differences, within subject, among these shapes. This includes time series and longitudinal data.

This approach using parallel transport has recently been used for several applications. In [31], the left-right asymmetry of the hippocampus for normal subjects has been confirmed as a validation study for the method. In [24], a longitudinal study showed specific evolution patterns for dementia of the Alzheimer's type. Another form of application of parallel transport combined with time series LDDMM is provided in the current issue [23].

5.2

Parallel translation on a manifold is an operation that takes a tangent vector at some point in the manifold and translates it along a given curve. In our discussion, this curve will always be a geodesic from a subject shape to the template. Instead of tangent vectors, however, we will transport momenta. The point of view is equivalent, and more suitable for our discussion. For completeness, let's mention that, for diffeomorphisms, momenta and tangent vectors at φ ∈ G are related via Lv ↔ v ∘ φ.

5.3

We first give the general form of the equation, with the notation used in (18). We assume that a path t ↦ mt is given, satisfying an equation of the form

$$\frac{dm_t}{dt} = H(m_t, v_t),\qquad v_t = F(m_t, a_t),$$

with the notation of section 4.9. The path t ↦ at is what we called the momentum parameter along mt, with corresponding deformation momentum Lvt = LF(mt, at). Let b0 be another momentum parameter at m0. The following equation translates b0 along the path t ↦ mt. We use the same notation as in (18)

| (22) |

Here we have denoted Fm and Hm the differentials for F and H with respect to the first variable, m. Note that the first equation defines db/dt implicitly, involving the inversion of the linear operator β ↦ H(m, F(m, β)) = F(m, β) ⋅ m.

If t ↦ at satisfies the third equation in (18), namely dat/dt = G(mt, at, vt), then mt is a geodesic, and we have the important property that at is its own parallel transport (i.e., bt = at is solution of the equation), since, in this case, the first equation reduces to

Another important feature is that the operation conserves the dot product: if $w_t$ and $\tilde w_t$ are the results of parallel transports along the same path, then $\langle w_t, \tilde w_t\rangle_V = \langle w_0, \tilde w_0\rangle_V$ for all t.

5.4

We now discuss this situation in the special cases that we have considered, starting with the full group of diffeomorphisms and equation (13). Here, we have (with m = φ) F(m, a) = Ka (so that Fm = 0), H(m, v) = v ∘ φ and G(m, a, v) given by the right-hand side of the third equation in (13). This implies that

$$H(m, F(m, \beta)) = (K\beta)\circ\varphi,$$

which can therefore be easily inverted in β. This provides the following form for parallel translation with diffeomorphisms.

| (23) |

with $a_t = Lv_t$ and $b_t = Lw_t$. (This equation, which we have written in classic form, also has a generalized counterpart, since a and b can be singular [18, 30].)

5.5

When considering object spaces, it is important to note that, while the geodesic equations in object spaces were simply special forms of EPDiff adapted to the specific expressions of horizontal vector fields, this is not the case with parallel translation. This is because horizontality is not conserved along (23). More precisely, if A(vt, wt) represents the right hand side of (23) (with at = Lvt and bt = Lwt), one can check that parallel translation along mt, as given by (22), is characterized by the two conditions,

,

wt is horizontal at mt.

5.6

For point sets, we have mt = (x1(t), …, xN(t)) and at = (α1(t), …, αN(t)), with

Using these expressions in (22), the equations for parallel translation are given as follows.

| (24) |

where ∇2 denotes the gradient with respect to the second argument. Replacing ηk, ξk, Dv(xk) and Dw(xk) by their expansions, this system can be rewritten solely in terms of mt, at and bt, yielding a finite-dimensional ODE, but with a relatively lengthy expression that we do not detail here (see [31]).
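For point sets, the linear operator β ↦ H(m, F(m, β)) that has to be inverted in (22) is small and explicit. Assume the usual scalar-kernel forms F(m, β) = Σl K(·, xl) βl and H(m, v) = (v(x1), …, v(xN)), with a Gaussian kernel chosen for illustration. Under that assumption, the operator is simply the N × N kernel matrix acting on the momentum parameter, and inverting it is one dense linear solve. The helper names `kernel_matrix`, `apply_operator` and `invert_operator` are hypothetical. This sketches only that step, not the full system (24).

```python
import numpy as np

def kernel_matrix(x, sigma):
    """Gaussian kernel matrix K(x_k, x_l) for landmarks x of shape (N, d) (assumed kernel)."""
    sq_dist = np.sum((x[:, None, :] - x[None, :, :]) ** 2, axis=-1)
    return np.exp(-sq_dist / (2.0 * sigma ** 2))

def apply_operator(x, beta, sigma):
    """beta -> H(m, F(m, beta)): the field sum_l K(., x_l) beta_l evaluated at each x_k."""
    return kernel_matrix(x, sigma) @ beta

def invert_operator(x, eta, sigma):
    """Solve H(m, F(m, beta)) = eta for beta; one N x N solve shared by the d coordinates."""
    return np.linalg.solve(kernel_matrix(x, sigma), eta)

rng = np.random.default_rng(0)
x = rng.normal(size=(6, 2))     # hypothetical landmark configuration m
beta = rng.normal(size=(6, 2))  # hypothetical momentum parameter
eta = apply_operator(x, beta, sigma=1.0)
beta_rec = invert_operator(x, eta, sigma=1.0)
```

Since the Gaussian kernel is positive definite, the matrix is invertible whenever the landmarks are distinct. This is why the point-set case only needs a small linear system.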

5.7

For images, let mt be a geodesic for the image metric with vt = K(at∇mt). Let , and assume that w0 = -K(b0∇m0) is given with . Then, the parallel translation of η0 along mt for the image metric is

| (25) |

To compute dbt/dt explicitly, one needs to invert the operator ζ ↦ K(ζ∇mt) ⋅ mt, which is the image-case form of β ↦ H(m, F(m, β)). One can prove that this operator is invertible under the additional condition that ζ = 0 where ∇mt = 0. Solving this numerically is, however, notably harder than solving the linear system needed for (24). An algorithm for this has been proposed in [31].
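As a rough illustration of what the inversion involves, here is a one-dimensional sketch under simplifying assumptions. It uses a scalar 1D image and a hypothetical exponential kernel sampled on a grid. The operator is written as ζ ↦ ∇m · K(ζ∇m), which matches the operator above up to sign under the common convention v ⋅ m = −∇m · v (also an assumption here). This form is symmetric positive semidefinite, so plain conjugate gradients applies; CG is a generic choice, not the algorithm of [31]. Started at ζ = 0, every iterate is a multiple of ∇m and therefore vanishes where ∇m = 0, which is the side condition mentioned above. The function names and the synthetic gradient `grad_m` are illustrative.

```python
import numpy as np

def exp_kernel_matrix(x, sigma):
    """Hypothetical kernel exp(-|x - y| / sigma) sampled on a 1D grid (positive definite)."""
    return np.exp(-np.abs(x[:, None] - x[None, :]) / sigma)

def make_operator(grad_m, K):
    """zeta -> grad_m * K(zeta * grad_m): symmetric PSD, zero wherever grad_m = 0."""
    def S(zeta):
        return grad_m * (K @ (zeta * grad_m))
    return S

def conjugate_gradient(S, r, tol=1e-10, max_iter=1000):
    """Plain CG for S(zeta) = r, started at zeta = 0 so all iterates stay in the range of S."""
    zeta = np.zeros_like(r)
    res = r.copy()
    p = res.copy()
    rs = res @ res
    r_norm = np.linalg.norm(r)
    for _ in range(max_iter):
        if np.sqrt(rs) <= tol * r_norm:
            break
        Sp = S(p)
        step = rs / (p @ Sp)
        zeta += step * p
        res -= step * Sp
        rs_new = res @ res
        p = res + (rs_new / rs) * p
        rs = rs_new
    return zeta

n = 64
x = np.linspace(0.0, 1.0, n)
grad_m = np.where(x < 0.3, 0.0, 1.0 + 0.5 * np.sin(6.0 * x))  # synthetic gradient, flat for x < 0.3
K = exp_kernel_matrix(x, sigma=0.1)
S = make_operator(grad_m, K)
zeta_true = np.cos(3.0 * x) * (grad_m != 0)  # satisfies zeta = 0 where grad_m = 0
zeta = conjugate_gradient(S, S(zeta_true))
```

Even in this toy setting, each application of the operator is a dense kernel product. The conditioning also depends on how small ∇m gets on its support. In 2D or 3D the kernel acts on vector fields, which is one reason this step is costlier than the small kernel solve for point sets.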

5.8

We now conclude with the case of densities, which, as can be expected, is symmetric to the image case. With the same notation, the equations are

| (26) |

6. Jacobi Equation

6.1

We conclude this review with the Jacobi equations, which are first-order variations of geodesics.

The geodesic evolutions are uniquely specified by the initial position, m0 (or φ0 = id for diffeomorphisms), and the initial velocity v0 (which must be horizontal at m0 for geodesics in object space). The Jacobi equation measures the variation of the geodesic when these initial conditions are changed.

A formal computation based on the general form of the geodesic equation (18) is straightforward. Given initial perturbations m0 → m0 + δm0 and a0 → a0 + δa0, we get mt → mt + δmt, at → at + δat and vt → vt + δvt with

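$$
\begin{aligned}
\delta v_t &= F_m(m_t, a_t)\,\delta m_t + F(m_t, \delta a_t),\\
\frac{d\,\delta m_t}{dt} &= H_m(m_t, v_t)\,\delta m_t + H(m_t, \delta v_t),\\
\frac{d\,\delta a_t}{dt} &= G_m(m_t, a_t, v_t)\,\delta m_t + G_a(m_t, a_t, v_t)\,\delta a_t + G_v(m_t, a_t, v_t)\,\delta v_t,
\end{aligned}
\qquad (27)
$$

where Gm, Ga and Gv denote the differentials of G with respect to m, a and v. We have used the fact that F is linear in a and H is linear in v.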

These equations must be combined with (18) to form a self-contained dynamical system in (mt, at, δmt, δat). The Jacobi field is, by definition, J(t) = δmt with δmt given by this system.
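The Jacobi field can be sanity-checked numerically without writing out the linearized system. Since δmt is the first-order variation of mt in the direction δa0, a central finite difference of the geodesic in a0 approximates it. The sketch below does this for point sets, assuming a scalar Gaussian kernel of width `SIGMA` (hypothetical). It uses the standard landmark form of the geodesic equations, dxk/dt = Σl K(xk, xl) αl and dαk/dt = −Σl (αk · αl) ∇1K(xk, xl), integrated with RK4. Because δm0 = 0, the field starts at zero. Its initial velocity is H(m0, F(m0, δa0)), which here is the kernel matrix at m0 applied to δa0. All names are illustrative.

```python
import numpy as np

SIGMA = 0.5  # hypothetical Gaussian kernel width

def landmark_rhs(x, a):
    """Point-set geodesic equations for K(x, y) = exp(-|x - y|^2 / (2 SIGMA^2))."""
    diff = x[:, None, :] - x[None, :, :]            # diff[k, l] = x_k - x_l
    K = np.exp(-np.sum(diff ** 2, axis=-1) / (2 * SIGMA ** 2))
    dx = K @ a                                      # v(x_k) = sum_l K(x_k, x_l) a_l
    # -grad_1 K(x_k, x_l) = K(x_k, x_l) (x_k - x_l) / SIGMA^2
    da = np.einsum("kl,kld->kd", (a @ a.T) * K, diff) / SIGMA ** 2
    return dx, da

def shoot(x0, a0, n_steps=200):
    """RK4 integration from (m0, a0) on [0, 1]; returns the positions at every time step."""
    h = 1.0 / n_steps
    x, a = x0.copy(), a0.copy()
    path = [x.copy()]
    for _ in range(n_steps):
        k1x, k1a = landmark_rhs(x, a)
        k2x, k2a = landmark_rhs(x + 0.5 * h * k1x, a + 0.5 * h * k1a)
        k3x, k3a = landmark_rhs(x + 0.5 * h * k2x, a + 0.5 * h * k2a)
        k4x, k4a = landmark_rhs(x + h * k3x, a + h * k3a)
        x = x + h / 6 * (k1x + 2 * k2x + 2 * k3x + k4x)
        a = a + h / 6 * (k1a + 2 * k2a + 2 * k3a + k4a)
        path.append(x.copy())
    return np.array(path)

def jacobi_field_fd(x0, a0, da0, eps=1e-5):
    """Central finite difference of t -> m_t in the direction da0 (with delta m0 = 0)."""
    return (shoot(x0, a0 + eps * da0) - shoot(x0, a0 - eps * da0)) / (2 * eps)

rng = np.random.default_rng(1)
x0 = rng.normal(size=(5, 2))        # hypothetical initial landmarks m0
a0 = 0.5 * rng.normal(size=(5, 2))  # hypothetical initial momentum a0
da0 = rng.normal(size=(5, 2))       # perturbation delta a0
J = jacobi_field_fd(x0, a0, da0)    # shape (201, 5, 2), with J[0] == 0
```

Up to discretization error, `jacobi_field_fd(x0, a0, 2 * da0)` is twice `J`, reflecting the linearity of the Jacobi equation in the perturbation.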

The equations can now be worked out in each case of interest. Note that they simplify further in the image and density cases, for which all functions are also linear in m, with G independent of m, yielding

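$$
\begin{aligned}
\delta v_t &= F(\delta m_t, a_t) + F(m_t, \delta a_t),\\
\frac{d\,\delta m_t}{dt} &= H(\delta m_t, v_t) + H(m_t, \delta v_t),\\
\frac{d\,\delta a_t}{dt} &= G_a(a_t, v_t)\,\delta a_t + G_v(a_t, v_t)\,\delta v_t,
\end{aligned}
\qquad (28)
$$

with Ga and Gv the differentials of G with respect to a and v.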

6.2

This equation is related to a variety of problems. The first one is the optimization of the LDDMM functional, namely

The geodesic distance between id and φ is equal to the norm of the initial velocity v0 along the geodesic. So, denoting by m1(m0, a0) the object obtained by solving equation (18) until time t = 1, the problem is equivalent to the minimization of

(We write the energy as a function of a0 only, because m0 is fixed in the LDDMM problem.)

To compute the effect of a small variation a0 → a0 + δa0, we write (formally applying the chain rule)

Now, (dm1/da0)δa0 is precisely the Jacobi field δm1 obtained by solving (27) until time t = 1, with initial conditions m0, a0, δm0 = 0 and δa0. If one is able to write the last term as

| (29) |

then a gradient descent procedure of the form a0 → a0 - ε (2a0 + b0) can be used to solve the LDDMM problem. (This is gradient descent for the metric .)

Such gradient descent procedures have been described for landmark matching [28] and image matching [30].

6.3

We now indicate how b0 can be computed in (29). The first step is to use the dual Jacobi equation. To define this equation, first replace δv by its expression in the last two equations of (27), to obtain a system that takes the form

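$$
\frac{d\,\delta m_t}{dt} = A(\delta m_t) + B(\delta a_t), \qquad
\frac{d\,\delta a_t}{dt} = C(\delta m_t) + D(\delta a_t), \qquad (30)
$$

where A, B, C and D also depend on mt and at.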

The functions A and C (resp. B and D) are linear in δm (resp. δa). The dual equation is then given by

| (31) |

where A*, B*, C*, D* are the duals of the operators A, B, C, D (with fixed m and a). They correspond to the usual transposed matrices for finite-dimensional evolutions (point sets) and to the L2 adjoints for images and densities. Given this system, b0 is computed as follows:

- Solve (31) backwards in time (from t = 1 to t = 0) with initial conditions .

- Let be the value obtained at t = 0 at the previous step; solve the equation
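The procedure above relies on the fact that the dual system carries a linear functional of (δm1, δa1) back to t = 0. A single backward solve therefore yields the whole gradient with respect to δa0. The sketch below shows this with random time-dependent matrices standing in for the blocks A, B, C, D of (30), which is an assumption. It uses a linear functional ⟨g, δm1⟩ chosen for illustration. The dual system is written in the standard adjoint form d/dt(pm, pa) = −(A*pm + C*pa, B*pm + D*pa), which may differ from (31) by a sign or time-reversal convention, with the hypothetical terminal condition (pm, pa)(1) = (g, 0). Then ⟨pm, δm⟩ + ⟨pa, δa⟩ is constant in time. With δm0 = 0, this gives ⟨g, δm1⟩ = ⟨pa(0), δa0⟩. The names `M`, `rk4`, `gradient_wrt_da0` and `forward_dm1` are illustrative.

```python
import numpy as np

rng = np.random.default_rng(2)
n = 3  # hypothetical size of delta m and of delta a
M0 = 0.5 * rng.normal(size=(2 * n, 2 * n))
M1 = 0.5 * rng.normal(size=(2 * n, 2 * n))

def M(t):
    """Stand-in for the block operator [[A, B], [C, D]] of (30) at time t (affine in t)."""
    return M0 + t * M1

def rk4(f, y0, t0, t1, n_steps=400):
    """Classical RK4 from t0 to t1; t1 < t0 integrates backwards in time."""
    h = (t1 - t0) / n_steps
    y, t = y0.copy(), t0
    for _ in range(n_steps):
        k1 = f(t, y)
        k2 = f(t + h / 2, y + h / 2 * k1)
        k3 = f(t + h / 2, y + h / 2 * k2)
        k4 = f(t + h, y + h * k3)
        y = y + h / 6 * (k1 + 2 * k2 + 2 * k3 + k4)
        t += h
    return y

def gradient_wrt_da0(g):
    """Backward dual solve p' = -M(t)^T p with p(1) = (g, 0); returns the delta-a part of p(0)."""
    p1 = np.concatenate([g, np.zeros(n)])
    p0 = rk4(lambda t, p: -M(t).T @ p, p1, 1.0, 0.0)
    return p0[n:]

def forward_dm1(da0):
    """Forward solve of the linear system with delta m0 = 0; returns delta m1."""
    y0 = np.concatenate([np.zeros(n), da0])
    return rk4(lambda t, y: M(t) @ y, y0, 0.0, 1.0)[:n]

g = rng.normal(size=n)   # hypothetical functional delta m1 -> <g, delta m1>
b = gradient_wrt_da0(g)  # one backward solve gives the gradient in every direction
da0 = rng.normal(size=n)
print(g @ forward_dm1(da0), b @ da0)  # equal up to integration error
```

The forward route would need one solve per direction of δa0. The dual route needs one backward solve in total, which is what makes it practical for images, where a0 is a function.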

6.4

Another situation in which the Jacobi equation is relevant is based on the remark that the solution of the LDDMM problem must satisfy

| (32) |

where a1 and m1 are the final momentum parameter and object of the geodesic starting at (m0, a0). (This is an application of the Pontryagin principle.)

The shooting method in optimal control consists of solving this equation directly in terms of the initial parameter, a0, since m0 is fixed. Newton's method for this purpose iteratively updates the current value of a0 with a0 → a0 + δa0, such that, given the current m1 and a1, their variations δm1 and δa1 satisfy

This requires explicitly computing the linear map δa0 ↦ (δm1, δa1) and inverting the previous equation to obtain δa0. This is tractable in finite dimensions and directly applicable to point sets [1].
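A minimal sketch of Newton shooting for point sets follows. It replaces the optimality condition (32) by a simpler, hypothetical exact-matching constraint m1(m0, a0) = y for a reachable target y. It also builds the matrix of δa0 ↦ δm1 column by column from finite-difference Jacobi fields rather than by integrating (27). The kernel width `SIGMA`, the Gaussian kernel and all function names are illustrative assumptions.

```python
import numpy as np

SIGMA = 0.5  # hypothetical Gaussian kernel width

def landmark_rhs(x, a):
    """Point-set geodesic equations for a scalar Gaussian kernel."""
    diff = x[:, None, :] - x[None, :, :]
    K = np.exp(-np.sum(diff ** 2, axis=-1) / (2 * SIGMA ** 2))
    return K @ a, np.einsum("kl,kld->kd", (a @ a.T) * K, diff) / SIGMA ** 2

def endpoint(x0, a0, n_steps=100):
    """m1(m0, a0): RK4 integration of the point-set geodesic up to t = 1."""
    h = 1.0 / n_steps
    x, a = x0.copy(), a0.copy()
    for _ in range(n_steps):
        k1 = landmark_rhs(x, a)
        k2 = landmark_rhs(x + 0.5 * h * k1[0], a + 0.5 * h * k1[1])
        k3 = landmark_rhs(x + 0.5 * h * k2[0], a + 0.5 * h * k2[1])
        k4 = landmark_rhs(x + h * k3[0], a + h * k3[1])
        x = x + h / 6 * (k1[0] + 2 * k2[0] + 2 * k3[0] + k4[0])
        a = a + h / 6 * (k1[1] + 2 * k2[1] + 2 * k3[1] + k4[1])
    return x

def jacobian_fd(x0, a0, eps=1e-6):
    """Matrix of delta a0 -> delta m1: one finite-difference Jacobi field per coordinate of a0."""
    cols = []
    for i in range(a0.size):
        e = np.zeros(a0.size)
        e[i] = eps
        e = e.reshape(a0.shape)
        cols.append(((endpoint(x0, a0 + e) - endpoint(x0, a0 - e)) / (2 * eps)).ravel())
    return np.stack(cols, axis=1)

def newton_shooting(x0, target, n_iter=20, tol=1e-10):
    """Newton iterations a0 -> a0 + delta a0 for the constraint m1(m0, a0) = target."""
    a0 = np.zeros_like(x0)
    for _ in range(n_iter):
        residual = (target - endpoint(x0, a0)).ravel()
        if np.linalg.norm(residual) < tol:
            break
        da0 = np.linalg.solve(jacobian_fd(x0, a0), residual)
        a0 = a0 + da0.reshape(a0.shape)
    return a0

rng = np.random.default_rng(3)
x0 = rng.normal(size=(4, 2))                           # hypothetical template landmarks m0
target = endpoint(x0, 0.3 * rng.normal(size=(4, 2)))  # synthetic, reachable target
a0 = newton_shooting(x0, target)
```

Starting from a0 = 0, the first Newton step uses the kernel matrix at m0 as the Jacobian. For a nearby target, the iteration then converges quadratically. Far-away targets may require damping or a better initial guess.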

6.5

Finally, we remark that the Jacobi equation is related to parallel translation as follows. Suppose one expresses d²δmt/dt² at t = 0 as a function of the other variables, i.e.,

This can be done by differentiating the second equation in (27) in time and replacing the first derivatives by their expressions in the system (using the fact that δm0 = 0). The first equation in each of (24), (25) and (26) is then exactly

This can be used to design a numerical implementation of parallel translation based on iterating Jacobi fields, as proposed in [30].

7. Conclusion

We have described, in this paper, a whole range of evolution equations (gradient descent, geodesics, parallel transport, Jacobi fields) that are related to important aspects of computational anatomy. These equations have all been used in medical imaging applications, for smoothing, segmentation, registration, longitudinal analysis, etc.

This provides a complementary angle on computational anatomy, which more often focuses on variational formulations such as LDDMM. Computing solutions of evolution equations has become an important component of the discipline, but it also raises new open problems. These concern the extension of these equations to new shape modalities and their numerical implementation, as well as potential new applications in the analysis of medical data. Significant developments in these directions can be expected in the future.

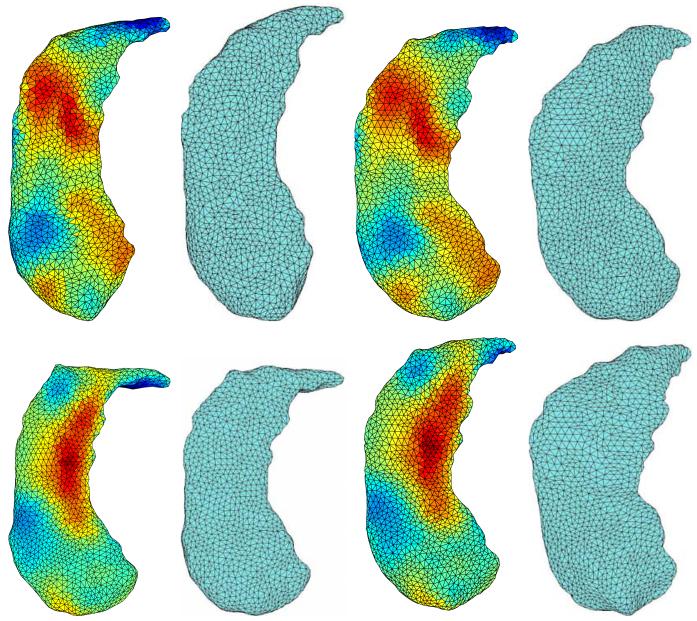

Figure 4.

Parallel translation on vertices of triangulated surfaces for paired data. Two examples are provided (one in each row). The first two images in each row represent shapes A and B, shape A being colored according to the Jacobian determinant of the optimal transformation from A to B (blue: atrophy; red: expansion). The last two images are the template, T, and a new synthetic shape S. S is obtained by parallel translating the initial momentum for the A-to-B transformation along the geodesic linking A to T, and then solving EPDiff starting from T with the translated momentum. In each row, T is colored according to the Jacobian determinant of the obtained deformation.

Acknowledgements

The research was partially supported by NIH 2P41 RR015241-07, 5U24 RR021382-05, 5P50 MH071616-04 and NSF DMS-0456253.

Footnotes

Publisher's Disclaimer: This is a PDF file of an unedited manuscript that has been accepted for publication. As a service to our customers we are providing this early version of the manuscript. The manuscript will undergo copyediting, typesetting, and review of the resulting proof before it is published in its final form. Please note that during the production process errors may be discovered which could affect the content, and all legal disclaimers that apply to the journal pertain.

References

- [1]. Allassonnière S, Trouvé A, Younes L. Geodesic shooting and diffeomorphic matching via textured meshes. Proc. of EMMCVPR; Springer; 2005.

- [2]. Arnold VI. Sur un principe variationnel pour les écoulements stationnaires des liquides parfaits et ses applications aux problèmes de stabilité non linéaires. J. Mécanique. 1966;5:29–43.

- [3]. Arnold VI. Mathematical Methods of Classical Mechanics. Springer; 1978. Second edition: 1989.

- [4]. Beg MF, Miller MI, Trouvé A, Younes L. Computing large deformation metrics via geodesic flows of diffeomorphisms. Technical report, Johns Hopkins University; 2002. To appear in International Journal of Computer Vision.

- [5]. Beg MF, Miller MI, Trouvé A, Younes L. Computing large deformation metric mappings via geodesic flows of diffeomorphisms. Int. J. Comp. Vis. 2005;61(2):139–157.

- [6]. Camassa R, Holm DD. An integrable shallow water equation with peaked solitons. Phys. Rev. Lett. 1993;71:1661–1664. doi: 10.1103/PhysRevLett.71.1661.

- [7]. Christensen GE, Rabbitt RD, Miller MI. Deformable templates using large deformation kinematics. IEEE Trans. Image Proc. 1996. doi: 10.1109/83.536892.

- [8]. Delfour MC, Zolésio J-P. Shapes and Geometries: Analysis, Differential Calculus, and Optimization. SIAM; 2001.

- [9]. Do Carmo MP. Riemannian Geometry. Birkhäuser; 1992.

- [10]. Dupuis P, Grenander U, Miller M. Variational problems on flows of diffeomorphisms for image matching. Quarterly of Applied Mathematics. 1998.

- [11]. Helm PA, Younes L, Beg MF, Ennis DB, Leclercq C, Faris OP, McVeigh E, Kass D, Miller MI, Winslow RL. Evidence of structural remodeling in the dyssynchronous failing heart. Circulation Research. 2005.

- [12]. Holm DD, Marsden JE, Ratiu TS. The Euler-Poincaré equations and semidirect products with applications to continuum theories. Adv. in Math. 1998;137:1–81.

- [13]. Holm DD, Ratnanather JT, Trouvé A, Younes L. Soliton dynamics in computational anatomy. NeuroImage. 2004;23:S170–S178. doi: 10.1016/j.neuroimage.2004.07.017.

- [14]. Holm DD, Trouvé A, Younes L. The Euler-Poincaré theory of metamorphosis. Quart. Appl. Math. 2008. To appear.

- [15]. Joshi S, Miller M. Landmark matching via large deformation diffeomorphisms. IEEE Trans. Image Proc. 2000;9(8):1357–1370. doi: 10.1109/83.855431.

- [16]. Marsden JE, Ratiu TS. Introduction to Mechanics and Symmetry. Springer; 1999.

- [17]. McLachlan RI, Marsland S. N-particle dynamics of the Euler equations for planar diffeomorphisms. Dynamical Systems. 2007;22(3):269–290.

- [18]. Michor P. Some geometric equations arising as geodesic equations on groups of diffeomorphisms and spaces of plane curves including the Hamiltonian approach. Lecture course, University of Vienna.

- [19]. Miller MI, Trouvé A, Younes L. On the metrics and Euler-Lagrange equations of computational anatomy. Annual Review of Biomedical Engineering. 2002;4:375–405. doi: 10.1146/annurev.bioeng.4.092101.125733.

- [20]. Miller MI, Trouvé A, Younes L. Geodesic shooting for computational anatomy. J. Math. Imaging and Vision. 2005. doi: 10.1007/s10851-005-3624-0.

- [21]. Miller MI, Younes L. Group action, diffeomorphism and matching: a general framework. Int. J. Comp. Vis. 2001;41:61–84. (Originally published in electronic form in: Proceedings of SCTV 99, http://www.cis.ohio-state.edu/ szhu/SCTV99.html)

- [22]. Priebe CE, Miller MI, Ratnanather JT. Segmenting magnetic resonance images via hierarchical mixture modelling. Comp. Stat. Data Anal. 2006;50(2):551–567. doi: 10.1016/j.csda.2004.09.003.

- [23]. Qiu A, Albert M, Younes L, Miller M. Time sequence diffeomorphic metric mapping and parallel transport track time-dependent shape changes. NeuroImage. 2008. doi: 10.1016/j.neuroimage.2008.10.039. (to appear)

- [24]. Qiu A, Younes L, Wang L, Ratnanather JT, Gillepsie SK, Kaplan K, Csernansky J, Miller MI. Combining anatomical manifold information via diffeomorphic metric mappings for studying cortical thinning of the cingulate gyrus in schizophrenia. NeuroImage. 2007;37(3):821–833. doi: 10.1016/j.neuroimage.2007.05.007.

- [25]. Trouvé A. Diffeomorphism groups and pattern matching in image analysis. Int. J. Comp. Vis. 1998;28(3):213–221.

- [26]. Trouvé A, Younes L. Metamorphoses through Lie group action. Found. Comp. Math. 2005:173–198.

- [27]. Vaillant M, Glaunès J. Surface matching via currents. Proceedings of Information Processing in Medical Imaging (IPMI 2005). Lecture Notes in Computer Science, vol. 3565. Springer; 2005.

- [28]. Vaillant M, Miller MI, Trouvé A, Younes L. Statistics on diffeomorphisms via tangent space representations. NeuroImage. 2004;23(S1):S161–S169. doi: 10.1016/j.neuroimage.2004.07.023.

- [29]. Wang L, Beg MF, Ratnanather JT, Ceritoglu C, Younes L, Morris J, Csernansky J, Miller MI. Large deformation diffeomorphism and momentum based hippocampal shape discrimination in dementia of the Alzheimer type. IEEE Transactions on Medical Imaging. 2006;26:462–470. doi: 10.1109/TMI.2005.853923.

- [30]. Younes L. Jacobi fields in groups of diffeomorphisms and applications. Quart. Appl. Math. 2007;65:113–134.

- [31]. Younes L, Qiu A, Winslow RL, Miller MI. Transport of relational structures in groups of diffeomorphisms. J. Math. Imaging and Vision. 2008. doi: 10.1007/s10851-008-0074-5.

- [32]. Zhang S, Younes L, Zweck J, Ratnanather JT. Diffeomorphic surface flows: a novel method of surface evolution. SIAM J. Appl. Math. 2008;68(3):806–824. doi: 10.1137/060664707.