Abstract

The neurophysiology of eye movements has been studied extensively, and several computational models have been proposed for decision-making processes that underlie the generation of eye movements towards a visual stimulus in a situation of uncertainty. One class of models, known as linear rise-to-threshold models, provides an economical, yet broadly applicable, explanation for the observed variability in the latency between the onset of a peripheral visual target and the saccade towards it. So far, however, these models do not account for the dynamics of learning across a sequence of stimuli, and they do not apply to situations in which subjects are exposed to events with conditional probabilities. In this methodological paper, we extend the class of linear rise-to-threshold models to address these limitations. Specifically, we reformulate previous models in terms of a generative, hierarchical model, by combining two separate sub-models that account for the interplay between learning of target locations across trials and the decision-making process within trials. We derive a maximum-likelihood scheme for parameter estimation as well as model comparison on the basis of log likelihood ratios. The utility of the integrated model is demonstrated by applying it to empirical saccade data acquired from three healthy subjects. Model comparison is used (i) to show that eye movements reflect not only marginal but also conditional probabilities of target locations, and (ii) to reveal subject-specific learning profiles over trials. These individual learning profiles are sufficiently distinct that test samples can be successfully mapped onto the correct subject by a naïve Bayes classifier. Altogether, our approach extends the class of linear rise-to-threshold models of saccadic decision making, overcomes some of their previous limitations, and enables statistical inference both about learning of target locations across trials and the decision-making process within trials.

Keywords: Saccades, Decision making, Reaction time, Bayesian learning, Model comparison

1. Introduction

In order to survive in a competitive, dynamic environment, animals must be able to integrate past experience with sensory evidence to infer the current state of the world and execute a behavioural response. Marked progress in our understanding of the neural basis of decision making has been achieved by focusing on sensory-driven decisions, such as the simple question of where to look next. Studying decision making in sensorimotor systems like the oculomotor system has the advantage that one can exploit a large body of neuroanatomical and neurophysiological knowledge that has been accumulated over the past decades. It seems conceivable that studying the neuronal mechanisms of visual-saccadic decision making could provide us with a blueprint of how the brain implements other sensorimotor decisions, or even deliver “a model for understanding decision making in general” (Glimcher, 2003).

The decision processes that underlie rapid eye movements towards a target have been studied in a variety of experimental paradigms. One seminal series of studies is based on the random dot-motion task designed by Newsome and colleagues (Newsome & Pare, 1988). In an initial fixed-duration version of this task, monkeys were trained to discriminate the motion direction of a set of moving dots with varying degrees of coherence, and indicate the perceived motion by a leftward or rightward saccade (Newsome, 1997; Newsome, Britten, & Movshon, 1989; Newsome, Britten, Salzman, & Movshon, 1990; Salzman, Britten, & Newsome, 1990). Subsequently, Shadlen, Britten, Newsome, and Movshon (1996) suggested a computational explanation of the neuronal mechanisms producing the resulting saccade and provided experimental verification of its key assumptions (Gold & Shadlen, 2000; Kim & Shadlen, 1999; Shadlen et al., 1996; Shadlen & Newsome, 2001). In particular, they identified a gradual rise of spiking activity in the lateral intraparietal (LIP) area integrating motion direction-specific signals from the middle temporal (MT) area (Shadlen & Newsome, 1996, 2001).

Using a reaction time version of the same task (Roitman & Shadlen, 2002), Shadlen and colleagues advanced the hypothesis that rising activity before a saccade, which had also been observed in the frontal eye fields (FEF), represented the logarithm of the ratio of the likelihoods that the two possible eye movements would be executed (Gold & Shadlen, 2000, 2001). Based on their decision-theoretic analysis, they suggested that log likelihood ratios might be used as “a natural currency for trading off sensory information, prior probability and expected value to form a perceptual decision” (Gold & Shadlen, 2001).

Another key series of studies was carried out by Hanes, Schall, and colleagues, who investigated an oddball task (as well as the countermanding paradigm; Hanes and Carpenter (1999)) to study how neural signals in the FEFs would finally trigger the initiation of saccades (Hanes & Schall, 1996; Hanes, Thompson, & Schall, 1995; Schall & Thompson, 1999; Thompson, Bichot, & Schall, 1997; Thompson, Hanes, Bichot, & Schall, 1996). In their oddball task, monkeys were trained to indicate, by an eye movement, the location of the oddball within a circular arrangement of visual stimuli around a central fixation dot. They showed that FEF activity was consistent with psychophysical models of oddball reaction time tasks (Luce, 1986; Ratcliff, 1978; Sternberg, 1969a, 1969b). Specifically, their findings supported the notion that the saccadic decision would be made as soon as gradually increasing neural activity in the FEFs had crossed a biophysical threshold (Hanes, Patterson, & Schall, 1998; Schall & Thompson, 1999).

Motivated by the question of why saccadic latencies displayed large variance in all of the above tasks, an even simpler reaction time paradigm was investigated by Carpenter and colleagues (Carpenter & Williams, 1995; Reddi & Carpenter, 2000). In their saccade-to-target reaction time task, human subjects were asked to shift their gaze from a central fixation stimulus to an eccentric target as soon as it appeared on the screen. The critical manipulation was to vary the uncertainty about where the target would appear (Basso & Wurtz, 1997, 1998). It was found that saccade latencies became shorter with increasing prior probability of the corresponding target location. Specifically, response speed was found to be proportional to the log prior probability of target location (Basso & Wurtz, 1997, 1998; Carpenter & Williams, 1995).

The behavioural and electrophysiological findings from all three paradigms described above are consistent with the notion of a saccade being elicited once some gradually rising neuronal activity crosses a biophysical threshold. This idea has been formalized in terms of various mechanisms known as rise-to-threshold accumulator models. These models aim to provide a computational abstraction of a biophysically conceivable mechanism that explains saccade latencies and their variability across trials (for reviews see Glimcher (2001, 2003), Gold and Shadlen (2001), Platt (2002), Ratcliff and Smith (2004), Schall (2001, 2003), Smith and Ratcliff (2004) and Usher and McClelland (2001)).

In the context of saccadic decision making with a fixed set of potential target locations, rise-to-threshold models assume that subjects maintain a set of hypotheses each of which corresponds to one such location (Carpenter & Williams, 1995; Gold & Shadlen, 2002; McMillen & Holmes, 2006; Shadlen & Gold, 2004). As the stimulus appears, a measure of evidence for each of these hypotheses is continuously refined, implemented as a competition between alternative decision signals in the brain. At any given point in post-stimulus time, these decision signals might, for example, represent the posterior probabilities of the target hypotheses, as derived from the subject’s prior (Basso & Wurtz, 1997, 1998; Platt & Glimcher, 1999) and the sensory evidence (i.e., the likelihood of the data) collected up to that point in time (Carpenter, 2004; Carpenter & Williams, 1995). As soon as one such signal reaches a preset threshold, a saccade is elicited towards the corresponding target. Depending on the way in which information is assumed to be accumulated over time, two specific types of rise-to-threshold model are often distinguished: random-walk models and linear rise-to-threshold models.

Random-walk or diffusion models are fundamentally based on a sequential probability ratio test that is carried out continually (Ratcliff, 1978; Ratcliff & Rouder, 1998; Ratcliff & Smith, 2004; Ratcliff, Van Zandt, & McKoon, 1999; Wald, 1945). In these models, each new incoming piece of sensory evidence either increases or decreases a single decision variable until it has drifted beyond a threshold associated with the saccadic movement towards a particular target. The decision variable represents the relative evidence for the two alternatives (Ratcliff & Rouder, 1998). However, in the case of a simple saccade-to-target task in a high-contrast setting with highly salient targets, it has been questioned whether a random-walk process for target detection provides a sufficient explanation for the large variability in latencies (Carpenter, 2004; Carpenter & Reddi, 2001; Reddi, 2001).
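To make the contrast with linear rise-to-threshold models concrete, the Python sketch below simulates one trial of a generic two-boundary random walk. It is a minimal illustration under our own assumptions (Gaussian increments with constant drift, symmetric boundaries at plus and minus a threshold, a fixed time step); it is not the full diffusion model of Ratcliff and colleagues, which adds further sources of variability. The function name and its parameters are hypothetical.

```python
import random


def random_walk_decision(drift, noise_sd, threshold, dt=0.001, max_time=2.0, rng=None):
    """Simulate one trial of a two-boundary random walk (diffusion-like) decision.

    A single decision variable starts at 0 and, at each time step, receives a
    Gaussian increment with mean drift * dt and standard deviation
    noise_sd * sqrt(dt). The response is 'A' when the variable reaches
    +threshold and 'B' when it reaches -threshold.
    Returns (choice, decision_time); choice is None if neither boundary is
    reached within max_time.
    """
    rng = rng or random.Random()
    step_sd = noise_sd * dt ** 0.5
    x, t = 0.0, 0.0
    while t < max_time:
        x += drift * dt + rng.gauss(0.0, step_sd)  # one new piece of evidence
        t += dt
        if x >= threshold:
            return "A", t
        if x <= -threshold:
            return "B", t
    return None, t


if __name__ == "__main__":
    rng = random.Random(0)
    trials = [random_walk_decision(0.5, 1.0, 0.3, rng=rng) for _ in range(1000)]
    times = sorted(t for choice, t in trials if choice is not None)
    print("median decision time (s):", times[len(times) // 2])
```

In this class of models, the trial-to-trial variability of decision times arises from noisy accumulation within each trial, not from a rate of rise that changes from one trial to the next.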

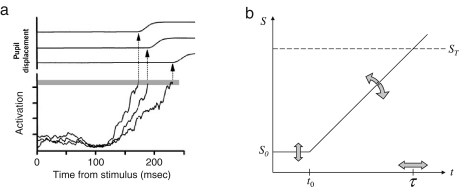

In linear rise-to-threshold models, randomness is introduced as trial-by-trial changes in the otherwise constant rate of rise of the decision signal. This notion has been formalized by Carpenter in a model termed ‘LATER’ (linear approach to threshold with ergodic rate; Carpenter and Williams (1995), Leach and Carpenter (2001), Reddi, Asrress, and Carpenter (2003)). Like other rise-to-threshold models, LATER proposes that a saccade towards a target is elicited as soon as a neural decision signal has reached a particular threshold. But unlike other rise-to-threshold models (e.g., Grice (1968) and Nazir and Jacobs (1991)), it assumes a fixed threshold and a linear increase whose rate is subject to variation across trials, yet fixed within a given trial (for a debate on the relationship between the two approaches see Carpenter and Reddi (2001), Ratcliff (2001), Usher and McClelland (2001)). The neurophysiological recordings by Schall and colleagues (Hanes & Schall, 1996; Schall & Thompson, 1999) are consistent with these key assumptions of the LATER model: they observed that the threshold for saccade release seemed to be constant, whereas the slope of the rise in activity varied considerably across trials (see Fig. 2a).

Fig. 2.

Neuronal responses of a decision process and translation into a computational model. (a) Neuronal responses prior to a saccade from three groups of trials. In their experiment, Schall and Thompson (1999) trained rhesus monkeys to stare at a central fixation stimulus and, as soon as eight secondary targets appeared, to elicit a saccade towards the oddball. The targets were arranged radially around the central fixation stimulus, and the location of the oddball was random. The diagram shows the recorded activity of single movement-related neurons in the saccadic movement maps of the frontal eye fields (FEF). Trials were grouped into those with slow, medium and fast saccades. The three plots show the averaged activity within these groups of trials, in each trial taking the activity from the neuronal response field corresponding to the correct target location. The activity patterns show that there is a fairly constant biophysical threshold at which a saccade is irrevocably elicited (grey bar), whereas the rate at which the signals rise varies between the groups of saccades. (Reprinted, with permission, from the Annual Review of Neuroscience, Volume 22 (c) 1999 by Annual Reviews, www.annualreviews.org) (b) Translation into a computational model of the decision process for a single trial. The rising activation in the FEFs is modelled as a linearly rising decision signal, which starts off at an initial level and rises at a variable rate until it reaches threshold at the time of saccade onset.

In their experiments on the saccade-to-target task, Carpenter and colleagues found that the observed saccadic latency was a function of the log probability of the corresponding target location: the more likely the target location, the shorter the latency (Carpenter & Williams, 1995). LATER accounts for this relationship by assuming that the learned a priori target probabilities determine the baseline levels of the decision signals, but not their rates of rise (cf. biased choice theory by Luce (1963)). Carpenter and colleagues used LATER to produce remarkably accurate predictions of human latency distributions in the saccade-to-target task as well as variations of it (Asrress & Carpenter, 2001; Carpenter & Williams, 1995; Leach & Carpenter, 2001; Reddi et al., 2003; Reddi & Carpenter, 2000).

A strength of the LATER model is the straightforward interpretability of its parameters. LATER has thus been used to relate various features of observed latency distributions to the putative underlying neurophysiological process (Anderson, 2008; Asrress & Carpenter, 2001; Carpenter & McDonald, 2007; Kurata & Aizawa, 2004; Leach & Carpenter, 2001; van Loon, Hooge, & van den Berg, 2002; Madelain, Champrenaut, & Chauvin, 2007; Reddi et al., 2003; Sinha, Brown, & Carpenter, 2006). Furthermore, various extensions have been proposed, such as arrangements of multiple LATER units in parallel (Carpenter, 2004; Robinson, 1973), mixture models (Nakahara, Nakamura, & Hikosaka, 2006), or the assumption that both the rate of rise and the baseline level of the decision signal are trial-by-trial random variables (Nakahara et al., 2006).

However, the simplicity of this model limits its applicability in three ways. First, linear rise-to-threshold models like LATER have only been applied to saccade-to-target situations in which no learning took place: in previous studies, prior probabilities of target location were always fixed in a given experimental session, and subjects were initially given extensive training until their performance levelled off. During learning, by contrast, the baseline levels of the decision signals are expected to change across trials. Even though the notion of variable baseline levels has been discussed before (Glimcher, 2001; Nakahara et al., 2006), no specific model has been put forward of how they might evolve dynamically depending on the history of previous trials. Second, LATER only accounts for simple marginal probabilities, where the probability distribution of target locations is described by a single vector of probabilities. It does not account for higher-order contingencies, that is, situations in which the target location probability depends on the target location during the previous trial. Third, within the class of linear rise-to-threshold models, no generative model has been proposed so far that would allow for statistical inference about parameter estimates and for model comparison (e.g., with regard to the type of learning that occurs across trials).

In this study, we propose a more general linear rise-to-threshold model for visual-saccadic decision making that overcomes the restrictions outlined above. First, we explicitly model how subjects’ priors are systematically altered by the sequence of stimuli observed so far. This approach makes it possible to investigate how learning dynamically shapes decision making about saccades. Second, our model is able to account for different forms of learning which can be evaluated by model comparison. In particular, this allows us to investigate whether subjects’ behaviour is not only driven by marginal but also by conditional probabilities. Third, based on computational considerations, we propose a specific parameterization of the model. This enables parameter estimation within a maximum likelihood scheme and the subsequent construction of a classifier that can be used to distinguish subjects with different learning profiles.

2. Methods

2.1. Task

For the present study, subjects were engaged in a sequential reaction time task (SRTT) during which they had to elicit saccades towards a given target in quick succession. The predictability of the target location was modified between blocks to induce varying forms of learning. The degree to which subjects learned the underlying contingency of a particular block was measured by the latencies of their saccades, that is, the time between stimulus onset and the beginning of the saccade towards the stimulus.

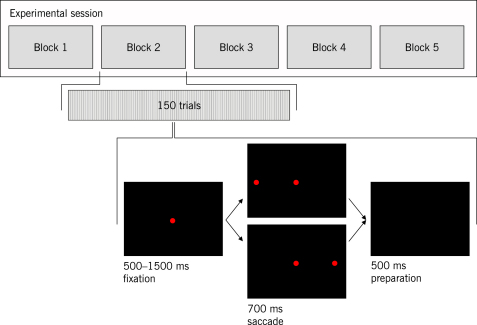

The specific setup adopted in this study was based on the saccade-to-target task proposed by Carpenter and Williams (1995). Subjects placed their heads on a chinrest in front of a computer screen in a dark, soundproof booth. At the beginning of a trial, they focused on a red fixation dot (hue 0°, luminance 0.5) at the centre of a black screen. After a random waiting period between 500 and 1500 ms, a second red dot, the target, appeared on the screen, located either 15° to the left or 15° to the right. Since the original fixation dot remained visible, this design represented an overlap task rather than a gap task (alternative types of waiting-period probability distribution are examined in Oswal, Ogden, and Carpenter (2007)). Subjects were asked to foveate the target as quickly as possible, but not at the cost of errors. After another 700 ms, both dots disappeared, and the screen remained blank for an inter-trial interval of 500 ms.

Based on this design, Carpenter and Williams (1995) investigated the effects of fixed state probabilities for the two target locations on saccadic reaction times. For example, prior to the actual experiment, subjects were trained extensively on a sequence of trials during which the target appeared on the left-hand side with a probability of 70%, and on the right-hand side with a probability of 30%.

In our study, we extended this experimental design in two ways (see Fig. 1). First, each block contained a comparatively small number of trials, and subjects were not trained on a particular setting before the beginning of data acquisition. In this way, data were acquired while learning was in progress. Pilot experiments showed that 150 trials were sufficient for the subjects’ performance to stabilize. Second, in addition to modifying target probabilities across blocks, the probability structure underlying the sequence of target locations was varied.

Fig. 1.

Experimental design. A complete session consists of 5 blocks, each of which contains 150 trials generated from the same block-specific transition matrix. All matrices shown in the main text were used to generate samples in each session. A trial consists of three consecutive stages: a fixation screen (showing a central red fixation dot); a target screen (showing both the fixation dot and an additional leftward or rightward target dot); an inter-trial interval (showing a black screen until the beginning of the next trial).

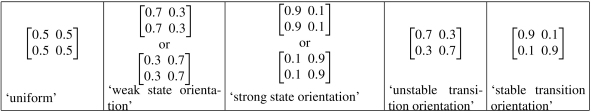

In a state-oriented block, as in previous experiments, the sequence of target locations was generated according to fixed state probabilities. They were specified as a vector whose two entries, summing to one, denote the marginal probabilities of leftward and rightward targets, respectively.

In a transition-oriented block, the probability distribution of the target location of the current trial was conditional on the target location of the previous trial. Thus, given a sequence of past trials, the probability distribution of the target on the next trial depended on the last item of the sequence, and only on this one. A sequence with this property is known as a first-order Markov chain, and the change from one trial to the next as a transition. The sequence of target locations was therefore specified by the transition matrix of its underlying Markov chain: each row corresponds to one possible target location on the previous trial (the top row to ‘left’, the bottom row to ‘right’) and contains the probabilities of a leftward and a rightward target on the current trial, given that the previous target appeared at that location. The first target in the sequence was drawn from a uniform initial distribution (0.5, 0.5); that is, the sequence of target locations was initialized randomly, either with a leftward or with a rightward target. The example in Table 1 shows a short sequence of trials generated from the transition matrix

Table 1.

Example of a sequence of target locations generated from the transition matrix

| Trial | 1 | 2 | 3 | 4 | 5 | 6 | … |
|---|---|---|---|---|---|---|---|
| Target probabilities | | | | | | | … |
| Target location drawn | | | | | | | … |

On trial 1, the target location is always drawn from a uniform distribution (0.5, 0.5). On all subsequent trials, its probability distribution depends on the target location of the previous trial.

For each trial, the table shows the probability distribution vector from which the current target location is drawn. For the first trial, it is (0.5, 0.5). In all subsequent trials, it is either the top or the bottom row of the transition matrix, depending on whether the previous target location was ‘left’ (top row) or ‘right’ (bottom row). The example illustrates that, given a transition matrix with high diagonal probabilities (a ‘stable’ transition matrix), the target tends to stay where it was on the previous trial, and only occasionally switches to the other side.
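The generative procedure behind Table 1 can be sketched in a few lines of Python. The function below is a hypothetical illustration, not the stimulus code used in the experiment (which was implemented in MATLAB and Cogent 2000): locations are coded 0 (left) and 1 (right), and the transition matrix is assumed to be a nested list whose rows sum to one.

```python
import random


def generate_targets(transition, n_trials, rng=None):
    """Draw target locations from a first-order Markov chain.

    transition[i][j] is the probability that the target of the next trial is at
    location j, given that the target of the current trial is at location i
    (0 = left, 1 = right); each row sums to one. The first target is drawn from
    the uniform initial distribution (0.5, 0.5).
    """
    rng = rng or random.Random()
    locations = [rng.randrange(2)]          # trial 1: left or right with equal chance
    for _ in range(n_trials - 1):
        row = transition[locations[-1]]     # top row after 'left', bottom row after 'right'
        locations.append(0 if rng.random() < row[0] else 1)
    return locations


if __name__ == "__main__":
    stable = [[0.9, 0.1], [0.1, 0.9]]       # hypothetical 'stable' matrix
    seq = generate_targets(stable, 20, random.Random(0))
    print("".join("LR"[loc] for loc in seq))
```

Because all blocks can be expressed as transition matrices, the same function also covers state-oriented blocks (two identical rows) and uniform blocks (all entries 0.5).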

Finally, in a uniform block, target locations occurred on the left-hand side and the right-hand side with equal chance, rendering the sequence of targets maximally unpredictable. This block structure served as a control condition in which no statistical learning across trials should take place.

In order to avoid drowsiness, which subjects in pilot experiments had displayed after 30 min of constant testing, a single experimental session was chosen to contain only 5 blocks. A break of 3 min between any two blocks was introduced to reduce the potential confound of learning effects carrying over from one block to another.

In order to allow for a unified formalism, all blocks were specified in terms of a transition matrix. The blocks for each session were chosen according to the scheme in Box I.

Box I.

Each session contained all five block types. Their order was randomized in each session, and the two alternative matrices underlying the state-oriented blocks were counterbalanced across subjects. In order to distinguish transition-oriented learning from simpler state-oriented learning, all transition-oriented blocks were designed in such a way that the states of the implied Markov chain, 1 and 2, had a uniform steady state distribution (see Papoulis (1991)). Hence, in transition-oriented blocks, targets would, on average, appear equally often on either side, and no state-oriented learning should take place.
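For a two-state chain, the steady-state distribution has a simple closed form, which gives a quick check that a candidate transition-oriented matrix does not also induce state-oriented learning. The Python sketch below assumes matrices given as nested lists with rows summing to one; the function names and the example matrices are illustrative and are not the matrices of Box I.

```python
def stationary_distribution(transition):
    """Steady-state distribution of a two-state Markov chain.

    With transition = [[1 - a, a], [b, 1 - b]] and a + b > 0, the stationary
    distribution pi (satisfying pi = pi @ transition) is (b / (a + b), a / (a + b)).
    """
    a = transition[0][1]   # probability of switching from left to right
    b = transition[1][0]   # probability of switching from right to left
    if a + b == 0:
        raise ValueError("the chain never switches; its steady state depends on the start")
    return (b / (a + b), a / (a + b))


def has_uniform_steady_state(transition, tol=1e-12):
    """True if, in the long run, targets appear equally often on either side."""
    p_left, p_right = stationary_distribution(transition)
    return abs(p_left - p_right) <= tol


if __name__ == "__main__":
    for name, m in [("stable", [[0.9, 0.1], [0.1, 0.9]]),
                    ("unstable", [[0.2, 0.8], [0.8, 0.2]]),
                    ("state-oriented 70/30", [[0.7, 0.3], [0.7, 0.3]])]:
        print(name, stationary_distribution(m), has_uniform_steady_state(m))
```

In this parameterization the steady state is uniform exactly when the two switch probabilities (the off-diagonal entries) are equal, whereas a state-oriented matrix with identical rows has that row as its steady state.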

Experimental data were collected from three healthy male right-handed authors of this article with normal vision aged between 23 and 40 years (KHB, KES, WDP; see Table 2). Eye movements were recorded at a sampling frequency of 120 Hz using an ASL 504 infrared remote optics eye tracker. Targets were presented on a 27 cm × 37 cm CRT screen at a viewing distance of 67 cm. Data acquisition and analysis were implemented using MATLAB, Cogent 2000, and ILAB (Gitelman, 2002).

Table 2.

Number of blocks (B) and trials (T) with successfully extracted saccade latencies, per subject and type of transition matrix

| Subject | Uniform (B) | Uniform (T) | Weak state orientation (B) | Weak state orientation (T) | Strong state orientation (B) | Strong state orientation (T) | Unstable transition orientation (B) | Unstable transition orientation (T) | Stable transition orientation (B) | Stable transition orientation (T) | All (B) | All (T) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| S-1 | 7 | 948 | 7 | 985 | 4 | 557 | 5 | 691 | 5 | 685 | 28 | 3866 |
| S-2 | 12 | 1727 | 12 | 1736 | 11 | 1554 | 11 | 1558 | 9 | 1285 | 55 | 7860 |
| S-3 | 11 | 1599 | 11 | 1594 | 12 | 1779 | 12 | 1755 | 11 | 1625 | 57 | 8352 |

Before extracting latencies from eye recordings, blinks were filtered by searching for invalid pupil size values. Pupil coordinates within a time window of 25 ms around the beginning and the end of a blink were removed. Saccades were then detected using a standard algorithm by Fischer, Biscaldi, and Otto (1993): in the raw recorded eye coordinates we looked for an initial pupil velocity of 250°/s and searched the consecutive 100 ms time window for a saccade of at least 10° that resulted in a fixation of at least 100 ms. Any latencies below 10 ms or above 800 ms were interpreted as artifacts and removed, as were blocks with an overall recognition rate below 80%. Altogether, 15% of the recorded blocks were rejected, as were 4% of the trials from accepted blocks. For the remaining trials, we computed the latency between target onset and the beginning of the first detected saccade.
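The detection steps described above can be sketched as a simple velocity-threshold procedure. The Python function below is a simplified approximation written for illustration, not a reimplementation of the algorithm of Fischer, Biscaldi, and Otto (1993) or of the ILAB code. It assumes a one-dimensional trace of horizontal gaze position in degrees sampled at 120 Hz, takes the saccade end to be the first sample at which speed falls back below threshold, uses a hypothetical fixation tolerance `fix_tol` of 1° that the text does not specify, and assumes that blinks have already been removed.

```python
def detect_saccade_latency(x_deg, onset_idx, fs=120.0, vel_thresh=250.0,
                           min_amp=10.0, search_ms=100.0, fix_ms=100.0,
                           fix_tol=1.0, min_lat_ms=10.0, max_lat_ms=800.0):
    """Latency (ms) from target onset to the first accepted saccade, or None.

    x_deg: horizontal gaze positions in degrees, one sample every 1/fs seconds.
    onset_idx: index of the sample at which the target appeared.
    """
    n_search = int(round(search_ms / 1000.0 * fs))
    n_fix = int(round(fix_ms / 1000.0 * fs))
    for i in range(onset_idx, len(x_deg) - 1):
        # 1. Candidate saccade start: sample-to-sample speed reaches vel_thresh.
        if abs(x_deg[i + 1] - x_deg[i]) * fs < vel_thresh:
            continue
        # 2. Saccade end: first later sample (within search_ms) at which the
        #    speed has dropped below vel_thresh again.
        end = None
        for j in range(i + 1, min(i + 1 + n_search, len(x_deg) - 1)):
            if abs(x_deg[j + 1] - x_deg[j]) * fs < vel_thresh:
                end = j
                break
        if end is None:
            continue
        # 3. Accept only large enough saccades followed by a stable fixation.
        amplitude = abs(x_deg[end] - x_deg[i])
        fixation = x_deg[end:end + n_fix]
        if (amplitude >= min_amp and len(fixation) == n_fix
                and max(fixation) - min(fixation) <= fix_tol):
            latency_ms = (i - onset_idx) * 1000.0 / fs
            # 4. Implausibly short or long latencies are treated as artifacts.
            return latency_ms if min_lat_ms <= latency_ms <= max_lat_ms else None
    return None
```

At 120 Hz, latencies are quantized in steps of about 8.3 ms, so saccade onset can only be resolved to within one sample.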

In order to obtain precise estimates of the latency distributions, each subject took part in many sessions; altogether, more than 20 000 trials were recorded across subjects.

2.2. Modelling

Various models have been proposed over the past two decades to explain the variability in the latencies between the appearance of a target and the initiation of an eye movement towards it. In one class of models, a decision signal is assumed to rise at a linear rate until reaching a fixed threshold. The release of a saccade is then modelled as the final outcome of this linear rise-to-threshold mechanism (Carpenter & Williams, 1995; Leach & Carpenter, 2001; Reddi et al., 2003). This type of model can be extended in two ways: (i) within an individual trial, the linear rise to threshold can be parameterized and turned into a generative model; (ii) across trials, the dynamics of alternative forms of learning can be integrated into the model.

The two levels can be formalized as hierarchically related intra-trial and inter-trial sub-models, respectively. They are described separately in the following sections. Put together, they predict saccade latencies on the basis of the sequence of target locations observed so far, as well as three model parameters.

2.2.1. Intra-trial modelling

We propose a generative intra-trial model that extends previous models of the relation between prior expectations about target location and saccadic onset times. It describes a computational abstraction of putative neurophysiological processes between target onset and the release of a saccade.

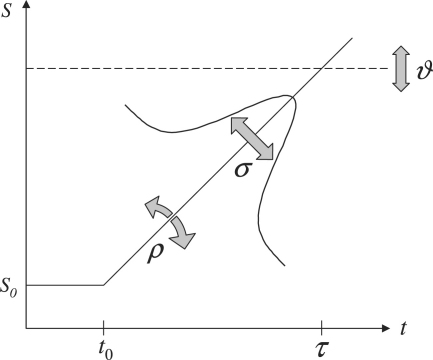

Each trial has two potential outcomes: a target appears either on the left-hand side or on the right-hand side of the screen. Within each trial, we model a subject’s belief in these two outcomes at any given point in time as two distinct decision signals that evolve linearly over time until one of them hits a fixed threshold. At the beginning of a trial, both decision signals have specific initial values; the initial level of the signal of the ‘winning’ hypothesis reflects the subject’s prior as provided by the inter-trial model described in Section 2.2.2. As the stimulus appears (at time zero), the two signals increase or decrease, respectively, representing the changing belief in the two hypotheses. As soon as one of them hits threshold, a saccade is released towards the corresponding target location. The rate at which the decision signals rise or fall varies over trials, accounting for the large variance of the resulting latency distribution (see Fig. 2).

The decision process can be parameterized by modelling subjects as Bayesian observers who collect evidence about the true state of the world and, combined with their prior expectations, accept one of two competing hypotheses about it (Knill & Pouget, 2004). In this scheme, the initial level of the ‘winning’ decision signal is associated with the subject’s prior, and the rate of rise is associated with the likelihood of the true hypothesis.

Evidence from several studies provides support for this parameterization. For instance, based on psychophysical experiments in humans, Carpenter and Williams (1995) plotted latencies on a reciprobit scale, on which latencies whose reciprocals are normally distributed fall on a straight line. They found that a change in the marginal probabilities of the two target locations led to a reciprobit swivel. This change in slope is consistent with a change in the threshold height but not with a change in the mean rate of rise, which would cause a reciprobit shift (Sinha et al., 2006). Further support for the notion that priors determine the initial level of the decision signal rather than its rate of rise comes from neurophysiological experiments in monkeys using a task similar to ours (Basso & Wurtz, 1997, 1998; Ratcliff, Cherian, & Segraves, 2003). These studies identified neurons in the superior colliculus whose firing rates just before target onset reflected the target probability but not target salience. Altogether, these human and primate studies provide a robust foundation for the assumption that the subjective prior systematically influences the initial level of the decision signal before it starts to rise until hitting threshold.
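Under LATER-style assumptions (a Gaussian rate of rise and a fixed distance between initial level and threshold within a condition), the difference between a swivel and a shift follows from simple arithmetic. The Python sketch below is our own illustration; the function name and the numbers, in arbitrary units, are hypothetical.

```python
def reciprobit_summary(mean_rate, sd_rate, delta):
    """Summarize the reciprocal-latency distribution of a LATER-style unit.

    Assumes latency T = delta / r, with r ~ N(mean_rate, sd_rate**2) and delta the
    distance between the initial level of the decision signal and the threshold.
    Then 1/T = r / delta is Gaussian with mean mean_rate/delta and SD sd_rate/delta.
    On a reciprobit plot (probit z against 1/T) its cumulative distribution is a
    straight line with slope 1/SD that meets 1/T = 0 (infinite latency) at
    z = -mean/SD.
    Returns (mean, sd, z_at_infinite_latency).
    """
    mean = mean_rate / delta
    sd = sd_rate / delta
    return mean, sd, -mean / sd


# Hypothetical values in arbitrary units.
base = reciprobit_summary(5.0, 1.0, 1.0)     # reference condition
swivel = reciprobit_summary(5.0, 1.0, 2.0)   # larger distance to threshold
shift = reciprobit_summary(6.0, 1.0, 1.0)    # larger mean rate of rise
```

Doubling the distance to threshold halves both the mean and the SD of the reciprocal latency, so the line rotates about a fixed intercept at infinite latency (a swivel); raising the mean rate leaves the slope unchanged and moves the intercept (a shift).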

Let the two possible states of the world be denoted by $H_1$ and $H_2$, corresponding to the target location being 1 (left) or 2 (right), respectively, within the current trial $k$. The sensory evidence for the hypotheses is provided by time-continuous visual input. Assuming this supportive evidence to be processed in small, discrete timesteps, in a lossless fashion without any form of temporal filter (Ludwig, Gilchrist, McSorley, & Baddeley, 2005), the evidence at time $t$ is referred to as $e_t$, and the accumulated evidence for one or another hypothesis up to time $t$ is denoted by $E_t = \{e_1, \dots, e_t\}$. Writing $p(e_t \mid H_i)$ for the probability density of the piece of evidence $e_t$ at time $t$ under hypothesis $H_i$, we make two simple assumptions. First, it is assumed that $p(e_t \mid E_{t-1}, H_i) = p(e_t \mid H_i)$, that is, $e_t$ and $E_{t-1}$ are conditionally independent given $H_i$. This means that $p(E_t \mid H_i) = \prod_{\tau=1}^{t} p(e_\tau \mid H_i)$. Second, since the sensory stimulus remains unchanged throughout the duration of the trial, the likelihood term $p(e_t \mid H_i) = p(e \mid H_i)$ is taken to be constant. It follows that

$$p(E_t \mid H_i) = p(e \mid H_i)^{t}. \tag{1}$$

Subjects are modelled as continuously evaluating a decision rule that determines whether they continue their observation or accept one of the hypotheses. From a Bayesian learning perspective it is intuitive to consider, as a decision variable, the subjective posterior probability $P(H_i \mid E_t)$ of each hypothesis, given the supporting evidence up to time $t$. In an iterative form, its dynamics can be written as

$$P(H_i \mid E_t) = \frac{p(e_t \mid H_i)\, P(H_i \mid E_{t-1})}{\sum_{j=1}^{2} p(e_t \mid H_j)\, P(H_j \mid E_{t-1})}, \tag{2}$$

illustrating how the prior probability is turned into a posterior probability as new evidence is processed. Using Bayes’ theorem and Eq. (1), we obtain the closed form

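$$P(H_i \mid E_t) = \frac{p(e \mid H_i)^{t}\, P(H_i)}{\sum_{j=1}^{2} p(e \mid H_j)^{t}\, P(H_j)}, \tag{3}$$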

in which the assumption of discretized time is no longer necessary. However, this quantity does not rise linearly over time. Therefore, as an alternative decision variable that can be constructed in the case of two possible target locations, we consider the log posterior ratio. Using (2), its iterative form can be written as

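$$\log\frac{P(H_1 \mid E_t)}{P(H_2 \mid E_t)} = \log\frac{P(H_1 \mid E_{t-1})}{P(H_2 \mid E_{t-1})} + \log\frac{p(e_t \mid H_1)}{p(e_t \mid H_2)}. \tag{4}$$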

Using (3), the closed form is

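$$\log\frac{P(H_1 \mid E_t)}{P(H_2 \mid E_t)} = \log\frac{P(H_1)}{P(H_2)} + t\,\log\frac{p(e \mid H_1)}{p(e \mid H_2)}, \tag{5}$$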

which can be written in an analogous fashion for its counterpart by interchanging $H_1$ and $H_2$. These log-odds are attractive candidates for computational models of neuronal processes of decision making because they (i) allow for optimal decision making and (ii) rise linearly over time, as shown in Fig. 2a. It is assumed that a hypothesis is accepted when its decision variable reaches a fixed threshold $\theta$. This yields a decision rule that is evaluated at each point in time $t$:

$$\text{accept } H_1 \text{ if } \log\frac{P(H_1 \mid E_t)}{P(H_2 \mid E_t)} \ge \theta, \qquad \text{accept } H_2 \text{ if } \log\frac{P(H_2 \mid E_t)}{P(H_1 \mid E_t)} \ge \theta, \tag{6}$$

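and otherwise neither is accepted. Note that equivalent optimal decision rules, although framed somewhat differently, have been proposed by previous authors. For example, in the case of a forced-choice task, Gold and Shadlen (2001) consider a decision rule that is based on the likelihood ratio of the two hypotheses: accept $H_1$ when $p(E_t \mid H_1)/p(E_t \mid H_2) > P(H_2)/P(H_1)$, and accept $H_2$ otherwise. This rule can be turned into a decision rule for our task by multiplying the right-hand side criterion by an additional factor that introduces the necessary ‘temporal gap’ in which neither hypothesis is accepted. Setting this factor to $e^{\theta}$ for accepting $H_1$ (and to $e^{-\theta}$ for accepting $H_2$), taking logarithms, and using Eq. (1), this modified rule can be rewritten as

$$\text{accept } H_1 \text{ if } \log\frac{P(H_1)}{P(H_2)} + t\,\log\frac{p(e \mid H_1)}{p(e \mid H_2)} \ge \theta, \qquad \text{accept } H_2 \text{ if } \log\frac{P(H_2)}{P(H_1)} + t\,\log\frac{p(e \mid H_2)}{p(e \mid H_1)} \ge \theta, \tag{7}$$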

which is precisely the same rule as in (6). This means that the two approaches are decision-equivalent.
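The linearity of the log posterior ratio and the threshold rule can be checked numerically. The sketch below is hypothetical in one important respect: because Eq. (1) is not reproduced here, it assumes that each unit of time delivers evidence with a constant likelihood, `lam1` under the first hypothesis and `lam2` under the second. It applies the iterative update in the spirit of Eq. (2), compares the resulting log posterior ratio with a closed form that is linear in time, and implements a threshold rule in the spirit of Eq. (6). All function names are illustrative.

```python
import math

# Hypothetical stand-in for Eq. (1): constant per-step likelihoods lam1 (H1), lam2 (H2).

def log_odds(p):
    """Log ratio of the posterior of H1 to that of H2 (two hypotheses)."""
    return math.log(p / (1.0 - p))

def bayes_step(p1, lam1, lam2):
    """One iterative update: posterior of H1 after one more unit of evidence."""
    num = lam1 * p1
    return num / (num + lam2 * (1.0 - p1))

def iterative_posterior(prior1, lam1, lam2, n_steps):
    """Posterior of H1 after 0, 1, ..., n_steps units of evidence."""
    trace = [prior1]
    for _ in range(n_steps):
        trace.append(bayes_step(trace[-1], lam1, lam2))
    return trace

def closed_form_log_odds(prior1, lam1, lam2, t):
    """Log prior ratio plus t times the log likelihood ratio: linear in t."""
    return log_odds(prior1) + t * math.log(lam1 / lam2)

def decide(prior1, lam1, lam2, threshold, max_steps=10_000):
    """Accept a hypothesis as soon as its log posterior ratio reaches the
    threshold; until then neither is accepted. Returns (hypothesis, time)."""
    p1 = prior1
    for t in range(max_steps + 1):
        lo = log_odds(p1)
        if lo >= threshold:
            return "H1", t
        if -lo >= threshold:
            return "H2", t
        p1 = bayes_step(p1, lam1, lam2)
    return None, max_steps

print(decide(0.5, 0.6, 0.4, threshold=2.0))  # ('H1', 5)
print(decide(0.8, 0.6, 0.4, threshold=2.0))  # ('H1', 2)
```

With a prior of 0.8 instead of 0.5, the same threshold is reached after 2 rather than 5 steps, which is the mechanism by which learned priors shorten latencies in this class of models.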

Both the decision variable for the true hypothesis in (7) and its counterpart for the alternative hypothesis start at specific initial levels that represent the subject’s prior, and then rise or fall, respectively, over time. This corresponds to the notion of the accumulation of supportive evidence for the two rival hypotheses. A saccade to the true target location is released at time when

| (8) |

where and denote the true and the false target location of the current trial , respectively.

The likelihood term can be thought of as a descriptor of a subject’s visual discrimination efficiency or processing capacity. The larger it is, the more quickly an observed sensory stimulus increases the subject’s posterior belief in the corresponding hypothesis, and the shorter the resulting saccade latency. One way of parameterizing this quantity is to assume arbitrary ‘evidence units’. With

| (9) |

the supportive evidence per unit time for the true hypothesis is larger than the evidence for the false hypothesis, by an amount determined by a second model parameter .

In addition to the parameters and , we must account for the fact that, across trials, the rate of the decision signal varies (see Fig. 2a). Previous experiments based on the same paradigm as in this study have found reciprocal latencies to conform to a Gaussian distribution (Carpenter & Williams, 1995). Because the latency equals the distance to threshold divided by the rate, the reciprocal latency is proportional to the rate; hence, in trial , the rate of the assumed decision signal, , has a Gaussian distribution as well, with denoting the difference between the threshold and the initial level of the decision variable (see Fig. 2b). This introduces a third model parameter that describes the standard deviation of . The parameterization of the intra-trial model is summarized in Fig. 3.
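The step from Gaussian reciprocal latencies to a Gaussian rate can be illustrated by simulation. Assuming a fixed distance between the initial level and the threshold, the reciprocal latency is simply the rate divided by that distance, so it inherits the rate's Gaussian shape, while the latencies themselves are right-skewed. The parameter values and function names below are hypothetical and chosen only to give latencies of roughly 200 ms.

```python
import numpy as np

def simulate_latencies(mu, sigma, distance, n, rng):
    """Linear rise-to-threshold sketch: the signal starts `distance` below the
    threshold and rises at a rate drawn afresh in every trial from N(mu, sigma^2)."""
    rates = rng.normal(mu, sigma, size=n)
    rates = rates[rates > 0]        # a non-positive rate would never reach threshold
    return distance / rates         # latency = distance / rate

def skewness(x):
    """Sample skewness (third standardized moment)."""
    z = (x - x.mean()) / x.std()
    return float(np.mean(z ** 3))

rng = np.random.default_rng(1)
mu, sigma, distance = 5.0, 1.0, 1.0  # hypothetical values (latency in seconds)
latencies = simulate_latencies(mu, sigma, distance, 100_000, rng)
recip = 1.0 / latencies

print(recip.mean(), recip.std())             # close to mu/distance = 5 and sigma/distance = 1
print(skewness(latencies), skewness(recip))  # latencies right-skewed, reciprocals near 0
```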

Fig. 3.

Intra-trial model parameterization. The proposed intra-trial model has three free parameters, represented by grey arrows. (i) and (ii) determine the mean and the standard deviation of the normally distributed slope of the decision signal that corresponds to the true target location of the current trial. The larger , the shorter the predicted saccade latency . The larger , the larger the variability of the distribution of . (iii) specifies the threshold the decision signal has to reach in order to evoke a saccade. The larger , the longer the latency and the smaller the influence of the initial value .

2.2.2. Inter-trial modelling

A central assumption of our model is that, at the beginning of each trial, the starting point of the decision signal associated with the true hypothesis corresponds to , i.e. the log ratio of the prior probabilities of the correct and the incorrect hypothesis, see Eq. (5). The evolution of the prior probabilities during a sequence of trials should reflect the learning of certain statistical properties of the target locations. In order to investigate what type of learning might happen during a sequence of trials , we propose three different inter-trial models that formalize alternative learning hypotheses.

2.2.2.1. The transition model

In the first candidate inter-trial model, we assume that subjects act like ideal observers: while responding to the sequence of stimuli within a block, they continuously refine an estimate of the underlying Markov transition matrix (see Minka, 2001).

Let be a hidden homogeneous transition matrix with a uniform initial distribution underlying the sequence of target locations . The states of the Markov chain, 1 and 2, encode leftward and rightward targets, respectively. Let denote the number of transitions , for , that have occurred in the sequence of trials observed so far. A maximum likelihood estimate of could be obtained by maximizing the log likelihood function

| (10) |

subject to . Using Lagrange’s method, the maximum likelihood estimates follow.
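Assuming Eq. (10) takes the standard form of a Markov-chain log likelihood, the Lagrange step can be spelled out as follows. The symbols $a_{ij}$ (matrix elements), $n_{ij}$ (number of transitions from state $i$ to state $j$) and $\lambda_i$ (Lagrange multipliers) are our own notation for this sketch.

$$
\Lambda(A, \lambda) = \sum_{i=1}^{2}\sum_{j=1}^{2} n_{ij}\log a_{ij} \;-\; \sum_{i=1}^{2}\lambda_i\Big(\sum_{j=1}^{2} a_{ij} - 1\Big),
\qquad
\frac{\partial \Lambda}{\partial a_{ij}} = \frac{n_{ij}}{a_{ij}} - \lambda_i = 0
\;\Rightarrow\; a_{ij} = \frac{n_{ij}}{\lambda_i},
$$

$$
\sum_{j} a_{ij} = 1 \;\Rightarrow\; \lambda_i = \sum_{k} n_{ik},
\qquad
\hat a_{ij} = \frac{n_{ij}}{\sum_{k} n_{ik}}.
$$

Each row is thus estimated by the relative frequency of the transitions leaving state $i$, which is undefined as long as $\sum_k n_{ik} = 0$.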

For example, having observed the first six trials in Table 1, an ideal observer will have counted two ‘left→left’ transitions, one ‘left→right’ transition, and so forth. From this follows an estimated transition matrix . It is the matrix that makes the observed sequence of trials most likely.

In this form, however, an individual matrix element remains undefined as long as . To avoid this, we assume an initial uniform prior of for all , which can be thought of as an imaginary ‘prior observation count’ of 1 for each transition event (Minka, 2001). The posterior predictive distribution based on trials then allows subsequent generative models to yield predictions for all trials. Specifically, at the beginning of trial , subjects are assumed to have constructed the estimate

| (11) |

such that and . Using this alternative formulation, the estimated transition matrix in the above example (Table 1) would be .
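The counting scheme can be sketched as follows. This is a minimal illustration, assuming that Eq. (11) has the usual add-one form $(n_{ij} + 1)/(\sum_k n_{ik} + 2)$; the state coding (0 = left, 1 = right), the function name and the six-trial sequence are hypothetical, and the sequence is not claimed to be the one in Table 1.

```python
import numpy as np

LEFT, RIGHT = 0, 1  # hypothetical coding of the two Markov-chain states

def estimate_transition_matrix(targets, prior_count=1.0):
    """Estimate the 2x2 transition matrix from a sequence of target locations.

    prior_count=0 gives the plain maximum likelihood estimate n_ij / sum_k n_ik
    (undefined for a row whose state has never been left); prior_count=1 adds
    one imaginary observation per transition, as in the uniform-prior scheme.
    """
    counts = np.full((2, 2), float(prior_count))
    for prev, nxt in zip(targets[:-1], targets[1:]):
        counts[prev, nxt] += 1
    return counts / counts.sum(axis=1, keepdims=True)

# Hypothetical six-trial sequence: left, left, left, right, right, left
seq = [LEFT, LEFT, LEFT, RIGHT, RIGHT, LEFT]
print(estimate_transition_matrix(seq, prior_count=0))  # ML: rows [2/3, 1/3] and [1/2, 1/2]
print(estimate_transition_matrix(seq))                 # add-one: rows [0.6, 0.4] and [0.5, 0.5]
```

The prior count keeps every element defined from the first trial onwards and pulls early estimates towards 0.5; its influence fades as real transitions accumulate.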

Estimating the transition matrix elements in this way can equivalently be viewed as a Bayesian update scheme. By counting how often each type of transition has occurred so far, an ideal observer can estimate the joint probabilities , from which estimates of the conditional probabilities can be derived (see Harrison, Duggins, & Friston, 2006, for an example).
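The Bayesian reading can be made explicit under the assumption that the uniform prior on each row of the matrix is a Dirichlet distribution with both parameters equal to 1 (for two states, a uniform Beta distribution). The add-one estimate is then exactly the posterior mean, and hence the posterior predictive probability of the next transition. The notation ($a_{ij}$, $n_{ij}$, and $s_t$ for the target location in trial $t$) is ours:

$$
p(a_{i1}, a_{i2}) = \mathrm{Dir}(1, 1)
\;\Rightarrow\;
p(a_{i1}, a_{i2} \mid n_{i1}, n_{i2}) = \mathrm{Dir}(n_{i1} + 1,\; n_{i2} + 1),
$$

$$
P(s_t = j \mid s_{t-1} = i, \text{past trials}) = \mathbb{E}\left[a_{ij} \mid n_{i1}, n_{i2}\right] = \frac{n_{ij} + 1}{n_{i1} + n_{i2} + 2}.
$$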

The initial uniform matrix at the beginning of an experimental block corresponds to maximal uncertainty. As more and more trials are observed, the posterior of the transition matrix is refined and gradually approaches the true matrix.

2.2.2.2. The state model

The inter-trial model outlined so far is transition-oriented in that it assumes an observer who estimates a transition matrix underlying the sequence of stimuli. Alternatively, one can imagine a state-oriented observer who simply estimates a vector of state probabilities by counting the frequencies of the two target locations while ignoring the transitions between them. At the beginning of trial , in analogy to the Bayesian update scheme outlined above, this estimate is

| (12) |

such that for all . Again, the initial prior is assumed to be uniform, .

Having observed the first six trials of the above example (Table 1), a ‘state’ observer will have counted four ‘left’ trials and two ‘right’ trials. Using (12), an estimated state probabilities vector follows. Using the same notation as in the transition model, this estimate can be written as .

2.2.2.3. The uniform model

The two alternative inter-trial models proposed so far account for different forms of learning, but they do not test whether learning occurs at all. Therefore, a third candidate model is proposed in which subjects are assumed to be entirely ignorant of the history of stimuli. In this uniform model, subjects maintain a uniform prior belief of 0.5 in either target location throughout the experiment. Using the same notation as in the transition model, this prior can be written as a constant estimate .
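The two simpler observers can be sketched in the same style. As before, this assumes that Eq. (12) has the add-one form $(n_i + 1)/(n + 2)$, with $n_i$ the number of earlier trials with target location $i$ and $n$ their total; the function names and the example sequence are hypothetical.

```python
import numpy as np

def state_estimate(history, prior_count=1.0):
    """'State' observer: probability of each location (0 = left, 1 = right) from
    the location frequencies seen so far plus one imaginary count per location;
    the order of trials, and hence the transitions, is ignored."""
    counts = np.bincount(np.asarray(history, dtype=int), minlength=2) + prior_count
    return counts / counts.sum()

def state_priors(targets):
    """Estimate available at the start of each trial (only earlier trials used)."""
    return np.array([state_estimate(targets[:t]) for t in range(len(targets))])

def uniform_priors(targets):
    """'Uniform' observer: ignores stimulus history; 0.5 for each location."""
    return np.full((len(targets), 2), 0.5)

seq = [0, 0, 0, 1, 1, 0]       # hypothetical sequence: four lefts, two rights
print(state_estimate(seq))     # [0.625 0.375], i.e. (4 + 1)/8 and (2 + 1)/8
print(state_priors(seq)[0])    # [0.5 0.5] before any trial has been observed
```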

Fig. 4 illustrates exemplary predictions generated by the alternative inter-trial models operating on alternative block structures. The individual diagrams show the extent to which the models are able to adapt to the transition matrix underlying the observed sequence of target locations. Crucially, the rate of convergence is highest when the model structure most closely matches the block structure. In particular, convergence takes longer when the true block structure is more complicated than the assumed one. For example, in the case of a uniform block, all three models eventually settle around 0.5/0.5 predictions, but the ‘state’ model and the ‘transition’ model take longer to converge. Thus, we will be able to make use of measured reaction times from all blocks to find out which model explains a particular subject’s behaviour best (Section 3.4).

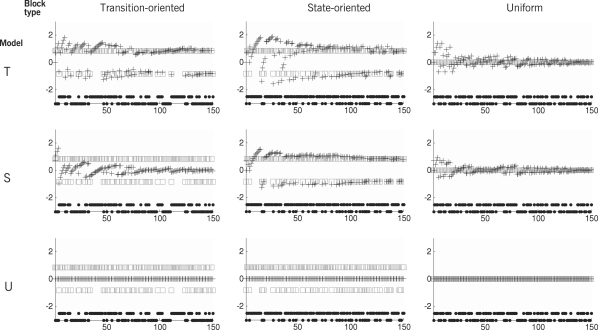

Fig. 4.

Log prior ratios predicted by the alternative inter-trial models. Each diagram is based on the combination of a particular block structure (transition-oriented, state-oriented, or uniform) and a particular inter-trial model (‘transition’ model, ‘state’ model, or ‘uniform’ model). For each trial, the diagrams show the target location (black dots), with high and low markers indicating leftward and rightward targets. Furthermore, they show the log ratio between the prior probability of the true and the false target location (grey squares) as well as a prediction for this log ratio, generated by the respective inter-trial model (black crosses). Since the models are always initialized with uniform priors, the predicted log ratio for the first trial is 0. The upper left diagram, for example, shows how the ‘transition’ model gradually adapts to the transition-oriented block structure underlying the observed sequence of trials. By contrast, the central diagram in the left column shows that the ‘state’ model is incapable of learning the structure of a transition-oriented block.
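The contrast between the ‘transition’ and the ‘state’ observer in a transition-oriented block can be reproduced qualitatively with a short simulation. Everything here is an illustrative assumption: a hypothetical block that favours switches (switch probability 0.8), add-one counting for both observers, and a fixed random seed. The function returns the log ratio of the prior probability of the true versus the false target location at the start of each trial.

```python
import numpy as np

def simulate_markov(A, n, rng):
    """Draw n target locations (0 = left, 1 = right) from a 2x2 transition
    matrix A, starting from a uniform initial distribution."""
    s = [int(rng.integers(2))]
    for _ in range(n - 1):
        s.append(int(rng.random() < A[s[-1], 1]))  # 1 with probability A[prev, 1]
    return s

def log_prior_ratios(targets, model):
    """Log ratio of the prior probability of the true vs. the false location at
    the start of each trial for a 'transition' or a 'state' observer
    (add-one counts, i.e. uniform initial priors)."""
    trans = np.ones((2, 2))
    state = np.ones(2)
    out, prev = [], None
    for target in targets:
        if model == "transition":
            p = trans[prev] / trans[prev].sum() if prev is not None else np.array([0.5, 0.5])
        else:
            p = state / state.sum()
        out.append(np.log(p[target] / p[1 - target]))
        if prev is not None:
            trans[prev, target] += 1   # learn the transition just observed
        state[target] += 1             # learn the location just observed
        prev = target
    return np.array(out)

rng = np.random.default_rng(0)
A = np.array([[0.2, 0.8], [0.8, 0.2]])  # hypothetical block favouring switches
seq = simulate_markov(A, 300, rng)
lr_trans = log_prior_ratios(seq, "transition")
lr_state = log_prior_ratios(seq, "state")
print(np.abs(lr_trans[-100:]).mean(), np.abs(lr_state[-100:]).mean())
```

Late in the block, the ‘transition’ observer's log prior ratios are positive on switch trials and negative on repeat trials, whereas the ‘state’ observer's hover near zero because left and right targets are about equally frequent overall.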

2.2.3. Model construction

The two sub-models outlined above can now be integrated into an overall generative model with three free parameters.

In our paradigm, reciprocal latencies have previously been observed to follow a normal distribution (Carpenter & Williams, 1995). Thus, the rate of the rising decision signal can be modelled as a random variable

| (13) |

where and describe the mean and the standard deviation of the rate across trials. Using , the reciprocal latency can then be modelled as a random variable

| (14) |

The difference between the initial level of the decision signal and its threshold can be expressed in terms of a model parameter and the log prior ratio as derived in Section 2.2.1:

| (15) |

The mean rate of the decision signal was derived in (5) and (9):

| (16) |

The variability of the rate across trials is described by the model parameter . The prior of the two hypotheses is given by the corresponding entry in the transition matrix, as estimated within the inter-trial model:

| (17) |

The overall probability distribution for reciprocal latencies, conditional on the model parameters, is then given by

| (18) |

Note that the overall model does not propose a single parameterized distribution , but a sequence of distributions . This is because of its dependence on the output of the inter-trial model, , which in turn depends on the sequence of target locations observed so far.
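Writing the chain (13) to (15) out explicitly shows why the model yields a Gaussian distribution of reciprocal latencies in every trial, but with trial-specific mean and variance. The symbols are illustrative assumptions rather than the original notation: $r_t$ is the rate in trial $t$, $\mu$ and $\sigma$ its mean and standard deviation, $\vartheta$ the threshold, $\pi_t$ the prior probability of the true target delivered by the inter-trial model, and $T_t$ the latency.

$$
r_t \sim \mathcal{N}(\mu, \sigma^2), \qquad
D_t = \vartheta - \log\frac{\pi_t}{1-\pi_t}, \qquad
\frac{1}{T_t} = \frac{r_t}{D_t}
\quad\Longrightarrow\quad
\frac{1}{T_t} \sim \mathcal{N}\!\left(\frac{\mu}{D_t},\; \frac{\sigma^2}{D_t^2}\right).
$$

Because $D_t$ is fixed within a trial, $1/T_t$ is a rescaled Gaussian; a higher prior $\pi_t$ shrinks $D_t$ and so raises the expected reciprocal latency, i.e. shortens the latency. The expression assumes that $\vartheta$ exceeds the log prior ratio and neglects the small probability of non-positive rates.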

2.3. Parameter estimation

Each candidate model constructed in the preceding section proposes a particular distribution of reciprocal saccade latencies parameterized by a vector . Fig. 3 indicates that the parameters are not perfectly independent in their effect on the resulting predictions. For example, an increase in predicted response speed can be obtained either by decreasing the threshold or by increasing the rate of the decision signal . Note, however, that this also alters the dependence of reaction times on the subject’s priors, so the parameters are not completely interchangeable. Nevertheless, to avoid numerical identifiability problems during parameter estimation, we reparameterized the model such that the rate is expressed per unit threshold, i.e. . Moreover, since the dispersion parameter must take a positive value, we estimated its logarithm instead. Thus, during numerical parameter estimation, the model was parameterized by .

The maximum likelihood principle selects those parameters of the distributions of under which the observed data are most probable. An estimate can be found by maximizing

| (19) |

with respect to . The implementation can be simplified by omitting the term which does not depend on the free parameters. Multiplying the expression by , the maximum likelihood estimate is then found as the solution to a minimization problem with respect to , , .
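The estimation step can be sketched in Python with SciPy. This is an illustration under stated assumptions, not the implementation used in this study: the parameter vector (m, θ, log σ), with m = μ/θ the mean rate per unit threshold, is a hypothetical version of the reparameterization described above; the priors are drawn at random as a stand-in for the inter-trial model output; and the derivative-free Nelder-Mead method of `scipy.optimize.minimize` replaces the gradient-descent scheme used here.

```python
import numpy as np
from scipy.optimize import minimize

def neg_log_likelihood(params, recip, log_prior_ratio):
    """Negative log likelihood of reciprocal latencies, omitting the constant
    (n/2) log(2 pi). Hypothetical parameterization:
    params = (rate per unit threshold m, threshold theta, log sigma)."""
    m, theta, log_sigma = params
    dist = theta - log_prior_ratio            # distance from start level to threshold
    if np.any(dist <= 0):
        return 1e12                           # threshold must lie above every start level
    mean = m * theta / dist                   # mu / D_t with mu = m * theta
    sd = np.exp(log_sigma) / dist             # sigma / D_t
    z = (recip - mean) / sd
    return np.sum(np.log(sd) + 0.5 * z ** 2)

# Synthetic data from hypothetical "true" values (not estimates from this study)
rng = np.random.default_rng(2)
n = 2000
prior_true = rng.uniform(0.2, 0.8, size=n)    # stand-in for inter-trial model output
lpr = np.log(prior_true / (1 - prior_true))
mu, theta, sigma = 15.0, 3.0, 3.0
recip = rng.normal(mu, sigma, size=n) / (theta - lpr)   # 1/T = r / D

fit = minimize(neg_log_likelihood, x0=[4.0, 2.0, 0.0], args=(recip, lpr),
               method="Nelder-Mead",
               options={"maxiter": 5000, "maxfev": 10000, "xatol": 1e-6, "fatol": 1e-6})
m_hat, theta_hat, log_sigma_hat = fit.x
print(m_hat * theta_hat, theta_hat, np.exp(log_sigma_hat))  # roughly 15, 3, 3
```

Because the synthetic data were generated from known values, the recovered estimates can be checked against them, which is a simple way to probe the identifiability concerns raised above before fitting real data.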

3. Results

3.1. Data analysis

In order to validate our paradigm, we replicated two key results by Carpenter and Williams (1995). First, latencies varied considerably over trials. Across our 20 078 trials, we found an overall mean of and a standard deviation of . Second, reciprocal latencies , with and , closely approximated a normal distribution (see Fig. 5).

Fig. 5.

Histogram of latencies and reciprocals. The diagrams are based on 28 blocks containing 3 866 trials from subject S-1. While the latencies themselves have often been described as log-normally distributed (Glimcher, 2003), their reciprocals can be approximated by a normal distribution (Carpenter & Williams, 1995).

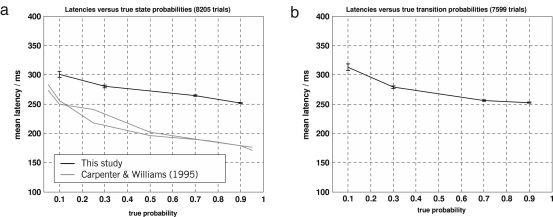

Above and beyond these descriptive features, Carpenter and colleagues showed that latencies declined as the learned state probability of the corresponding target location increased (Basso & Wurtz, 1997, 1998; Carpenter & Williams, 1995). Specifically, they found a significant negative linear relation between log prior probabilities and latencies. In our study, we replicated these results as well, finding a very similar negative linear relation between log state probabilities and latencies in our state-oriented blocks (). We further extended this analysis to transition probabilities. Using a linear regression analysis, we found a significant relation () between reciprocal latencies and log transition probabilities (see Fig. 6). Even though this analysis completely neglects the dynamics of learning, it already indicates that human observers are sensitive to transition probabilities in visual input statistics. Formal model comparison (Section 3.4) provides much stronger evidence for this claim.

Fig. 6.

Saccade latencies and error bars. (a) Saccade latencies versus true state probabilities, based on all trials from state-oriented blocks across all subjects. (b) Saccade latencies versus true transition probabilities, based on all transition-oriented blocks across all subjects. Both diagrams show how saccade latencies decrease with increasing true probability of the respective target location.

3.2. Parameter estimation

In order to obtain maximum likelihood parameter estimates from Eq. (19), a gradient-descent scheme was run separately on the data from each of the three subjects. The results are given in Table 3.

Table 3.

Maximum likelihood parameter estimation for different inter-trial models (values rounded to 3 significant figures)

| Inter-trial model | Subject S-1 | | | Subject S-2 | | | Subject S-3 | | |
|---|---|---|---|---|---|---|---|---|---|
| Uniform model | 0.0766 | 24.03 | −4.18 | 0.0327 | 7.87 | −5.06 | 0.0615 | 13.7 | −4.95 |
| State model | 0.199 | 59.6 | −3.28 | 0.126 | 29.6 | −3.77 | 0.631 | 113 | −2.85 |
| Transition model | 0.0724 | 23.5 | −4.26 | 0.109 | 26.1 | −3.92 | 1.37 | 200 | −2.28 |

In order to visualize the dependence between conditional probabilities and latencies, and provide face validity for our parameter estimates, one of the subjects was engaged in an additional session consisting of 10 transition-oriented blocks with identical sequences initially generated from a transition matrix. We fitted the model to the data and predicted the priors using the ‘transition’ model. Fig. 7 shows how observed latencies develop over time and how this is reflected by model predictions.

Fig. 7.

Averaged observed and predicted latencies. The diagram shows saccade latencies from an additional experimental session in which subject S-1 took part. The dataset consists of 10 transition-oriented blocks, each designed to contain an identical left/right sequence of 150 target locations. The trial-by-trial target locations are shown as separate lines of black dots at the bottom (leftward targets: lower line; rightward targets: upper line). The observed latencies, averaged over these 10 blocks, are plotted in black, and their respective model predictions in grey. Observations and predictions of trials in which the target location has stayed on the same side are shown as small black dots and grey circles, respectively. Observations and predictions of trials in which the target location has just switched to the other side are depicted as black crosses and grey squares, respectively. Predicted reaction times (grey circles and squares) show two key features of an ideal observer who is sensitive to transition probabilities. First, whenever there is a sequence of trials with identical target locations (a ‘run’), reaction times drop continuously as the estimated prior probability of that target location increases. Second, whenever the target changes to the other side (a ‘switch’), there is a single long-reaction-time trial, followed by a return to the previous, lower level of reaction times.

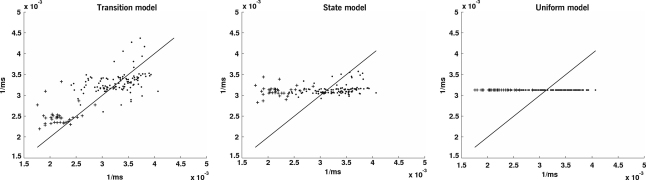

To compare the predictive power of the three competing inter-trial models qualitatively, Fig. 8 plots model predictions against measured reciprocal latencies. The individual diagrams show a marked separation between trials in which the target has just switched to the other side (crosses) and those in which it has not (dots). As expected from a subject who learns transition probabilities, switch trials lead to long latencies, i.e. small reciprocals; accordingly, crosses have low x-coordinates. Conversely, non-switch trials lead to short latencies, i.e. large reciprocals; accordingly, dots have high x-coordinates. Looking at the y-coordinates, the ‘transition’ model is the only model that predicts these two clusters of trials.

Fig. 8.

Averaged observed versus predicted reciprocal latencies from Fig. 7. In each of the diagrams, predicted reciprocal latencies (y-axis) are plotted against their observations (x-axis). The diagrams are based on the ‘transition,’ the ‘state,’ and the ‘uniform’ model, respectively, applied to the same dataset as in Fig. 7. Thus, the x-coordinates of the data points are the same in all three diagrams, whereas their y-coordinates differ. Predictions were generated by the alternative models after being individually fitted to the data. The main diagonal represents a perfect match between observations and predictions.

3.3. Statistical classification

The parameter estimates obtained for each subject are sufficiently distinct to demonstrate the use of a statistical classifier that maps an unseen test sample onto the correct class (Jain, Duin, & Mao, 2000). Such a classifier could be used to (i) separate groups of individuals that exhibit similar learning profiles, or (ii) find out whether there are systematic differences between healthy subjects and patients.

A class can be regarded as a discrete random variable and the eye movement data as a vector of continuous random variables with observed realizations . For illustration purposes, let the classes in correspond to the three subjects themselves. Then, given a test sample of unseen saccade data , the classifier is to return

| (20) |

Using Bayes’ theorem and the assumption that saccade latencies are drawn independently from their respective underlying distributions, the right-hand side can be rewritten as

| (21) |

| (22) |

| (23) |

We based the classifier on the ‘transition’ model, which showed a reasonable fit across all subjects, and trained it by finding maximum likelihood parameter estimates based on labelled training data from the three subjects (see Table 4). The unknown parameters of the distribution in Eq. (18) were then replaced by these estimates, yielding approximations for the class-conditional probability densities . The priors were chosen to be flat, i.e. 1/3 for each class. In future applications, when particular classes such as subgroups of a disease are to be distinguished, the priors would be given by the unconditional frequencies of the different conditions.
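A minimal sketch of the classifier in Eqs. (20) to (23) follows. It assumes that, for each class, the reciprocal latencies of the test sample are Gaussian with the trial-specific mean and standard deviation of the integrated model, that the log prior ratios for the test sequence come from the parameter-free ‘transition’ model (and are therefore identical for all classes), and that trials are independent. Working with log joint probabilities avoids numerical underflow of the product over many trials. All parameter values and function names are hypothetical.

```python
import numpy as np
from scipy.stats import norm

def log_joint(recip, lpr, params, class_prior):
    """log p(C_k) + sum_t log p(x_t | C_k); summing over trials is the naive
    Bayes (independence) assumption. params = (m, theta, sigma), hypothetical."""
    m, theta, sigma = params
    dist = theta - lpr                        # distance to threshold in each trial
    return np.log(class_prior) + norm.logpdf(recip, loc=m * theta / dist,
                                             scale=sigma / dist).sum()

def classify(recip, lpr, class_params, class_priors):
    """Return the index of the class with the largest log joint probability."""
    scores = [log_joint(recip, lpr, p, q) for p, q in zip(class_params, class_priors)]
    return int(np.argmax(scores)), scores

# Hypothetical per-subject parameters (not the estimates in Table 4)
class_params = [(5.0, 3.0, 3.0), (5.0, 4.0, 2.0), (6.0, 3.0, 4.0)]
class_priors = [1 / 3] * 3                    # flat priors

rng = np.random.default_rng(3)
p_true = rng.uniform(0.2, 0.8, size=500)      # stand-in for 'transition' model output
lpr = np.log(p_true / (1 - p_true))
m, theta, sigma = class_params[1]             # test sample drawn from the second class
recip = rng.normal(m * theta, sigma, size=500) / (theta - lpr)

label, scores = classify(recip, lpr, class_params, class_priors)
print(label)                                  # 1
```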

Table 4.

Parameter estimation for subsequent classification, based on a subset of the original data (S-1: 6 out of 8 blocks. S-2: 9 out of 12 blocks. S-3: 9 out of 12 blocks)

| Class | Training sample | Parameter estimate | Prior |
|---|---|---|---|
| S-1 | 3049 out of 3866 trials | 0.0724, 23.5, −4.26 | 0.333 |
| S-2 | 5999 out of 7860 trials | 0.116, 27.5, −3.89 | 0.333 |
| S-3 | 6135 out of 8352 trials | 1.543, 216.32, −2.147 | 0.333 |

Table 5 shows the resulting log joint probabilities as determined by the classifier.

Table 5.

Classification results

| Subject | Test sample | Class S-1 | Class S-2 | Class S-3 |
|---|---|---|---|---|
| S-1 | 817 trials | **0.495×10⁴** | 0.395×10⁴ | 0.234×10⁴ |
| S-2 | 1861 trials | 0.873×10⁴ | **1.05×10⁴** | 0.957×10⁴ |
| S-3 | 2217 trials | 0.880×10⁴ | 1.33×10⁴ | **1.39×10⁴** |

The classifier was run on three test samples taken from the three subjects. It computed the log joint probabilities for all classes , and assigned each test sample to the class that maximized this quantity (printed in bold font).

The table shows that the classifier assigned each test sample to the correct class.

Fig. 4 provides some intuition as to what specific properties of a sequence of latencies allow the classifier to distinguish between different inter-trial models. In a transition-oriented block (left column in the figure), for instance, the ‘transition’ model predicts that log prior ratios fall into two groups: negative and positive ones (top-left diagram). Accordingly, saccadic latencies are expected to be separated into long-latency and short-latency saccades. The ‘state’ model, by contrast, predicts the convergence of log prior ratios around zero (mid-left diagram), and saccadic latencies are expected to display relatively low variability around their mean. Finally, the ‘uniform’ model predicts that log prior ratios remain fixed at zero.

3.4. Model comparison

The maximum likelihood approach discussed so far yields not only a point estimate of the model parameters; it can also be used to compare the competing inter-trial models. The question of which model explains the observed data best can be posed explicitly by comparing

| (24) |

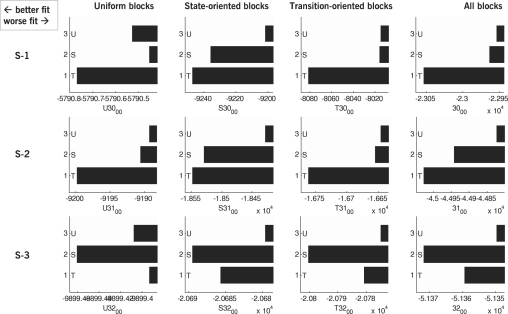

for each inter-trial model , where we approximate the unknown parameter distribution by a Dirac-delta distribution at . The likelihood term evaluated at the maximum likelihood parameter estimate, , is an approximation to the model evidence, . When competing models do not differ with regard to their parameterization (as in our case, where model differences are restricted to the parameter-free function describing the ideal observer), these models can be compared using their likelihood ratio, which is an approximation to the model evidence ratio, or Bayes factor (see Kass and Raftery (1995)). Since the logarithm is a monotonic function, it is common practice, and usually more convenient, to compute and report the log likelihood ratio. Hence, differences between bar lengths in Fig. 9 represent the log likelihood ratio between the associated models (note that differences between logs are mathematically equivalent to the log of the ratio).

Fig. 9.

Negative log likelihood of the inter-trial models fitted to alternative subsets of the data. Each diagram shows the negative likelihood of the ‘uniform,’ the ‘state,’ and the ‘transition’ model when fitted to the data of a particular subject (rows) confronted with a sequence of target locations generated from a uniform, state-oriented, or transition-oriented block (columns). The rightmost column shows the negative likelihood of the model fitted to all blocks of a particular subject. The smaller the negative log likelihood, the better the model fit.

The negative log likelihood values of the competing inter-trial models fitted to different subsets of the data are given in Fig. 9. Each diagram is based on a particular subject and a particular type of block structure. Note that it is meaningless to compare absolute values between subjects since they depend on the number of trials each subject completed. Instead, the likelihood value of each model must be interpreted in relation to the likelihood values of the other models from the same subject and block structure. Each diagram has been scaled individually so as to emphasize the full range between the lowest and the highest likelihood.

The data show that model fit differences vary considerably across subjects. In subjects S-1 and S-2, there is very strong evidence for the ‘transition’ model compared to the ‘uniform’ and the ‘state’ model. In subject S-1, for example, the likelihood ratio between the ‘transition’ model and the ‘state’ model, each fitted to all blocks, is , that is, the ‘transition’ model is much more likely to underlie the observed data than the ‘state’ model. Similarly, it is times more likely than the ‘uniform’ model. In subject S-3, by contrast, the ‘state’ model allows for the best fit. For this subject, the likelihood ratios are much smaller than in the other two subjects, but they still constitute strong evidence in favour of the ‘state’ model. For the experimental data presented here, there was always strong evidence for one particular model in each combination of subject and block type.
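The step from negative log likelihoods to a verbal statement about evidence can be illustrated with a small helper. It assumes natural logarithms and models with equal numbers of parameters, as in the comparison above, and maps the approximate log Bayes factor onto the scale of Kass and Raftery (1995), which is expressed in terms of twice the natural log Bayes factor (0 to 2, 2 to 6, 6 to 10, above 10). The function name and the example values are hypothetical.

```python
def evidence_category(nll_a, nll_b):
    """Approximate log Bayes factor of model a over model b from their negative
    log likelihoods (equal numbers of parameters assumed) and a verbal label
    on the Kass & Raftery (1995) scale for 2 ln B."""
    log_bf = nll_b - nll_a            # ln[p(y|a) / p(y|b)], approximately
    two_ln_b = 2.0 * log_bf
    if two_ln_b < 0:
        return log_bf, "favours model b"
    if two_ln_b <= 2:
        return log_bf, "not worth more than a bare mention"
    if two_ln_b <= 6:
        return log_bf, "positive"
    if two_ln_b <= 10:
        return log_bf, "strong"
    return log_bf, "very strong"

print(evidence_category(1000.0, 1012.0))  # (12.0, 'very strong')
```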

The most salient result within the diagrams is that for each subject the same model is found to be optimal for all three data sets. The probability of this happening by chance is within each subject, and therefore for the three subjects as a whole.
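The chance-level argument can be made concrete under a simple null hypothesis that we assume here: on each data set, each of the three models is equally likely to be the best, independently of the other data sets. Within a subject, the first data set may then pick any model and the remaining two must agree with it. The function name is hypothetical.

```python
from fractions import Fraction

def chance_same_winner(n_models=3, n_datasets=3, n_subjects=3):
    """Probability that one model wins on every data set of a subject, and on
    every data set of every subject (each subject's winner may differ), when
    winners are drawn uniformly and independently (assumed null model)."""
    per_subject = Fraction(1, n_models) ** (n_datasets - 1)
    return per_subject, per_subject ** n_subjects

print(chance_same_winner())  # (Fraction(1, 9), Fraction(1, 729))
```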

4. Discussion

In this article, we have extended the class of linear rise-to-threshold models of the relation between a priori probabilities of target location and saccadic latencies. These models are centered around the intuitive, and empirically supported, notion of a decision signal that rises over time until reaching a fixed threshold. We have presented a generative, hierarchical model that combines two separate sub-models for learning of target locations across trials and the decision-making process within a trial, respectively. This has enabled three lines of progress: (i) explicit modelling of how subjects’ priors change across trials as a function of stimulus history, which makes it possible to investigate how saccadic decision making is dynamically shaped by learning; (ii) a model parameterization, inspired by computational considerations, which enables maximum likelihood parameter estimation and the subsequent construction of classifiers for distinguishing subjects with different learning profiles; (iii) differentiation between specific forms of learning by model comparison, e.g., the question of whether saccades are more influenced by marginal or by conditional probabilities.

The focus of this paper is methodological, and its primary goal is not to provide major novel neurobiological insights. Nevertheless, we acquired eye movement data from three healthy subjects performing a well-established and simple binary saccadic task. In order to make our results comparable to previous ones, we used the same paradigm as Carpenter and Williams (1995). The aim of this empirical data analysis was to demonstrate the face validity of our modelling approach by showing that under realistic noise levels, as found in standard saccade measurements with infrared video technology, consistent and subject-specific learning profiles can be identified. Indeed, our analysis showed that (i) the behavioural pattern observed in each subject was consistent across all experimental sessions and that (ii) it was distinct from the patterns of the other subjects.

The question that led to these findings was what sort of learning underlies changes of prior probabilities across trials in a particular subject. In our analyses of empirical data, we evaluated three different inter-trial learning models: a ‘state’ model (which learns the marginal probabilities of target locations), a ‘transition’ model (which learns the conditional probabilities of a transition matrix), and a ‘uniform’ model (representing the hypothesis that no learning takes place and prior probabilities therefore remain constant). Fitting the three alternative models to different subsets of the data showed that there was least evidence for the ‘uniform’ model in all cases. We can conclude from this that human observers do take into account the probabilistic structure underlying the sequence of trials. This finding is consistent with simple linear regression analyses of the relation between log prior probability ratios and reciprocal saccade latencies. In these analyses, we fully replicated the previous results by Carpenter and colleagues, who had reported a significant negative correlation between log prior marginal probabilities and saccade latencies, i.e. a positive correlation with reciprocal latencies (Basso & Wurtz, 1997, 1998; Carpenter & Williams, 1995). In addition, we found a significant positive correlation between log prior transition probability ratios and reciprocal saccade latencies, i.e. latencies decreased as transition probabilities increased. This demonstrates that human observers are sensitive to conditional probabilities, not merely to the marginal probabilities, of target locations.

A particularly interesting finding is that in each of our three subjects there was one model (the ‘transition’ model in subjects S-1 and S-2, and the ‘state’ model in subject S-3) that performed best for all data sets, independent of the hidden probability structure underlying the stimulus sequence. The probability of obtaining such a pattern by chance is very small (). In addition, model comparison, on the basis of likelihood ratios, showed that in each condition the best model allowed for a much better fit than both other models. This suggests that each subject exhibited an inherent and individual learning profile, independent of the current block type. This notion is also confirmed by our classification results, in which all unknown test samples were mapped onto the correct subject.

Although related modelling principles have been used in previous studies, these were implemented for rather different experimental paradigms. For example, Maddox (2002) has looked at a broad range of mathematical frameworks for optimal decision criterion learning in perceptual categorization tasks. In this type of experiment, subjects are asked to assign the correct category to a given stimulus, and a typical modelling approach is to assume that they aim to maximize expected reward. Smith, Wirth, Suzuki, and Brown (2007) consider a paradigm in which subjects are required to associate different cues with different actions. They propose a novel way of analysing such interleaved learning by means of a state-space model that provides a single framework for all associations to be learned. Corrado, Sugrue, Seung, and Newsome (2005) and Lau and Glimcher (2005) analyse sequential choice tasks with probabilistic reinforcers. They propose attractive candidate models that are capable of implementing the matching law described by Herrnstein (1961). Finally, several alternative models for two-choice reaction time tasks were compared by Ratcliff and Smith (2004), who showed that these models, even though based on very different assumptions, often led to similar predictions, at least for very simple tasks.

The above studies describe powerful approaches for characterizing and analysing learning and choice behaviour for a variety of tasks. In this study, by contrast, we considered a different type of decision task, and we aimed for a different type of insight. In our paradigm, subjects were not asked to assign a given sample to one of several overlapping categories; decisions were not associated with a reward; and there were no probabilistic reinforcers. Instead, subjects were confronted with a highly salient target, there were only two alternative responses per trial, and no behavioural feedback (e.g., rewards) was given. Similarly, we did not aim to model the cognitive state of subjects who are evaluating the potential outcome of alternative decisions, or who are learning to associate a cue with a particular action. Instead, we attempted to model subjects’ reaction times in response to an extremely simple stimulus, and how these reaction times depended on learned statistical distributions of stimulus properties.

There are several potential lines of future research that could be based on the model presented in this paper. For example, an interesting extension would be to consider additional inter-trial models, e.g., differently parameterized ‘declining-memory’ models which represent forgetful observers, or observers that adapt their learning rate to the volatility of the environment (Behrens, Woolrich, Walton, & Rushworth, 2007). Because of different numbers of parameters in such models, however, model comparison could no longer be pursued on the basis of log likelihood ratios. Instead, one would require an approach that takes into account both model fit and model complexity, e.g., Bayesian model selection based on the model evidence (Penny, Stephan, Mechelli, & Friston, 2004). For example, different forms of online learning of the probability distribution from which a sequence of events is drawn have been used in the context of modelling neuroimaging data from serial reaction time task (SRTT) experiments (Harrison et al., 2006; Strange, Duggins, Penny, Dolan, & Friston, 2005).

Another open question is the extent to which the explanatory power of linear rise-to-threshold models is restricted to high-contrast settings, in which the time taken for the decision is often assumed to dominate the time taken for detection (Carpenter, 2004). This could be addressed by explicitly comparing linear-rise models with random-walk (or diffusion) models while systematically varying the salience of the target (for a debate on the relationship between the two approaches, see Carpenter and Reddi (2001) and Ratcliff (2001)).
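The difference between the two model classes can be made concrete with a minimal simulation sketch, assuming illustrative parameter names (`mu`, `sigma`, `drift`, `noise`, `threshold`) and a single response boundary. In the linear-rise sketch, in the spirit of LATER-type models, the rate of rise is fixed within a trial and varies only across trials, so the reciprocal latency (rate divided by threshold) is normally distributed, truncated at zero. In the diffusion sketch, variability instead arises from moment-to-moment noise within each trial. Mapping target salience onto `drift` would be one hypothetical way to set up the comparison described above; it is not a claim about either model's fitted parameters.

```python
import random
from typing import List


def later_latencies(n: int, mu: float, sigma: float, threshold: float,
                    rng: random.Random) -> List[float]:
    """Linear rise-to-threshold sketch: one noiseless linear rise per trial.

    The rate of rise is drawn from N(mu, sigma^2) once per trial, so all
    variability is between trials and latency = threshold / rate. Trials with a
    non-positive rate never reach threshold and are redrawn here for simplicity.
    """
    out: List[float] = []
    while len(out) < n:
        rate = rng.gauss(mu, sigma)
        if rate > 0:
            out.append(threshold / rate)
    return out


def diffusion_latencies(n: int, drift: float, noise: float, threshold: float,
                        dt: float, rng: random.Random,
                        max_steps: int = 100_000) -> List[float]:
    """Single-boundary random-walk (diffusion) sketch: noise accumulates within a trial.

    Evidence starts at 0 and moves by drift*dt plus Gaussian noise with standard
    deviation noise*sqrt(dt) on each step; latency is the first time it reaches
    `threshold`. Trials that have not crossed after max_steps are dropped.
    """
    out: List[float] = []
    for _ in range(n):
        x = 0.0
        for step in range(1, max_steps + 1):
            x += drift * dt + noise * (dt ** 0.5) * rng.gauss(0.0, 1.0)
            if x >= threshold:
                out.append(step * dt)
                break
    return out
```

For example, `later_latencies(1000, 5.0, 1.0, 1.0, random.Random(0))` gives latencies (in seconds, if the rate is per second) with a median near 0.2 s, and `diffusion_latencies(1000, 5.0, 1.0, 1.0, 0.001, random.Random(0))` gives a mean near 0.2 s; the two distributions differ in shape, which is what a model comparison across salience levels would exploit.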

Furthermore, there are alternative mathematical formulations of how the brain might implement Bayesian inference (Jazayeri & Movshon, 2006; Ma, Beck, Latham, & Pouget, 2006; Rao, 2004). Current linear-rise models assume an instantaneous implementation: the subject’s posterior belief in the competing hypotheses is computed from evidence that evolves over time, and each new piece of evidence immediately leads to an update. Alternatively, Bayesian inference could be expressed as a gradient-ascent scheme on the negative free energy (equivalently, gradient descent on the free energy), which would converge to the true posterior probability of either hypothesis.
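The contrast between an instantaneous Bayesian update and a gradient scheme can be sketched for two hypotheses (e.g., target left vs. right) with made-up prior and likelihood values. This is a generic variational illustration, not a model taken from the cited works. Free energy is written here in the sign convention in which it is minimized, F(q) = KL(q ‖ posterior) − ln p(y), so the loop below performs gradient descent on F, which is the same as gradient ascent on −F. The belief q is parameterized by softmax logits `phi` (an assumption of this sketch), and the exact posterior is its fixed point.

```python
import math
from typing import List, Sequence, Tuple


def exact_posterior(prior: Sequence[float], lik: Sequence[float]) -> List[float]:
    """One-shot Bayes' rule: posterior is proportional to prior times likelihood."""
    joint = [p * l for p, l in zip(prior, lik)]
    z = sum(joint)
    return [j / z for j in joint]


def softmax(phi: Sequence[float]) -> List[float]:
    m = max(phi)  # subtract the max for numerical stability
    e = [math.exp(v - m) for v in phi]
    s = sum(e)
    return [v / s for v in e]


def free_energy(q: Sequence[float], log_joint: Sequence[float]) -> float:
    """F(q) = sum_h q(h) [ln q(h) - ln p(y, h)] = KL(q || posterior) - ln p(y)."""
    return sum(qi * (math.log(qi) - lj) for qi, lj in zip(q, log_joint))


def gradient_posterior(prior: Sequence[float], lik: Sequence[float],
                       step: float = 0.5,
                       n_steps: int = 200) -> Tuple[List[float], List[float]]:
    """Approach the posterior by gradient descent on F over softmax parameters phi."""
    log_joint = [math.log(p) + math.log(l) for p, l in zip(prior, lik)]
    phi = [0.0] * len(prior)  # start from a uniform belief q
    history: List[float] = []
    for _ in range(n_steps):
        q = softmax(phi)
        history.append(free_energy(q, log_joint))
        g = [math.log(qi) - lj for qi, lj in zip(q, log_joint)]  # dF/dq up to a constant
        g_mean = sum(qi * gi for qi, gi in zip(q, g))
        grad = [qi * (gi - g_mean) for qi, gi in zip(q, g)]  # chain rule through softmax
        phi = [p - step * d for p, d in zip(phi, grad)]
    return softmax(phi), history
```

With `prior = [0.7, 0.3]` and `lik = [0.2, 0.6]`, `exact_posterior` returns [0.4375, 0.5625] in a single step, whereas `gradient_posterior` approaches the same values over iterations while the recorded free energy decreases toward −ln p(y) = −ln 0.32.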

Given the dependence of learning on synaptic plasticity and neuromodulatory systems, the modelling approach described in this paper could be of interest for clinical applications, particularly in psychiatry (Stephan, Baldeweg, & Friston, 2006). One potential long-term target for clinical application of learning models of eye movements is schizophrenia. Among the most promising endophenotypes of schizophrenia are abnormalities in both antisaccade tasks (McDowell et al., 2002) and learning tasks (Stephan et al., 2006). These abnormalities are usually observed as subtle statistical differences between groups of healthy subjects and patients. However, to our knowledge, these observations have not yet been used successfully to develop richer classification systems or corresponding subject-specific diagnostics. Further psychophysical investigation of saccade initiation and learning effects might help to detect systematic differences between healthy and diseased individuals and eventually improve early diagnosis. For example, classifiers (albeit more powerful ones than the example used in Section 3.3) could be used to map test samples from patients onto classes that correspond to different subgroups of a disease. In this way, Bayesian learning models could become a valuable tool for studying physiological and pathophysiological mechanisms of saccadic eye movements.
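A minimal Gaussian naive Bayes sketch of such a mapping is given below, assuming that each sample is summarized by a hypothetical feature vector (for instance, fitted model parameters) and that class labels such as `"subgroup_a"` are given. It is a generic textbook classifier, not a reconstruction of the classifier used in Section 3.3. Features are treated as conditionally independent Gaussians within each class, and a small variance floor (`var_floor`, an assumption) guards against zero variance.

```python
import math
from collections import defaultdict
from typing import Dict, Hashable, List, Sequence, Tuple

Params = Tuple[float, List[float], List[float]]  # (log prior, means, variances)


def fit_gaussian_nb(samples: Sequence[Sequence[float]], labels: Sequence[Hashable],
                    var_floor: float = 1e-6) -> Dict[Hashable, Params]:
    """Fit per-class feature means and variances, treating features as independent."""
    by_class: Dict[Hashable, List[Sequence[float]]] = defaultdict(list)
    for x, y in zip(samples, labels):
        by_class[y].append(x)
    n = len(samples)
    model: Dict[Hashable, Params] = {}
    for c, xs in by_class.items():
        d = len(xs[0])
        means = [sum(x[j] for x in xs) / len(xs) for j in range(d)]
        variances = [max(sum((x[j] - means[j]) ** 2 for x in xs) / len(xs), var_floor)
                     for j in range(d)]
        model[c] = (math.log(len(xs) / n), means, variances)
    return model


def log_posterior_unnorm(params: Params, x: Sequence[float]) -> float:
    """ln p(class) + sum_j ln N(x_j; mean_j, var_j), i.e. the naive Bayes score."""
    log_prior, means, variances = params
    return log_prior + sum(-0.5 * math.log(2 * math.pi * v) - (xj - m) ** 2 / (2 * v)
                           for xj, m, v in zip(x, means, variances))


def predict(model: Dict[Hashable, Params], x: Sequence[float]) -> Hashable:
    """Assign x to the class with the highest (unnormalized) log posterior."""
    return max(model, key=lambda c: log_posterior_unnorm(model[c], x))
```

The independence assumption keeps the number of estimated quantities linear in the number of features, which matters when only a few subjects per subgroup are available; a more powerful classifier would relax it.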

Footnotes

This research has been funded by the Wellcome Trust (VS/06/UCL/A18), the German Academic Exchange Service (DAAD, D/ 06/49008), and the Stiftung Familie Klee (Frankfurt/Main).

References

- Anderson B. Neglect as a disorder of prior probability. Neuropsychologia. 2008;46:1566–1569. doi: 10.1016/j.neuropsychologia.2007.12.006.

- Asrress K.N., Carpenter R.H.S. Saccadic countermanding: a comparison of central and peripheral stop signals. Vision Research. 2001;41:2645–2651. doi: 10.1016/s0042-6989(01)00107-9.

- Basso M.A., Wurtz R.H. Modulation of neuronal activity by target uncertainty. Nature. 1997;389:66–69. doi: 10.1038/37975.

- Basso M.A., Wurtz R.H. Modulation of neuronal activity in superior colliculus by changes in target probability. Journal of Neuroscience. 1998;18:7519. doi: 10.1523/JNEUROSCI.18-18-07519.1998.

- Behrens T.E.J., Woolrich M.W., Walton M.E., Rushworth M.F.S. Learning the value of information in an uncertain world. Nature Neuroscience. 2007;10:1214–1221. doi: 10.1038/nn1954.

- Carpenter R.H.S. Contrast, probability, and saccadic latency; evidence for independence of detection and decision. Current Biology. 2004;14:1576–1580. doi: 10.1016/j.cub.2004.08.058.

- Carpenter R.H.S., McDonald S.A. LATER predicts saccade latency distributions in reading. Experimental Brain Research. 2007;177:176–183. doi: 10.1007/s00221-006-0666-5.

- Carpenter R.H.S., Reddi B.A.J. Reply to ‘putting noise into neurophysiological models of simple decision making’. Nature Neuroscience. 2001;4:337. doi: 10.1038/85956.

- Carpenter R.H.S., Williams M.L.L. Neural computation of log likelihood in control of saccadic eye movements. Nature. 1995;377:59–62. doi: 10.1038/377059a0.

- Corrado G.S., Sugrue L.P., Seung H.S., Newsome W.T. Linear-nonlinear-Poisson models of primate choice dynamics. Journal of the Experimental Analysis of Behavior. 2005;84:581–617. doi: 10.1901/jeab.2005.23-05.

- Fischer B., Biscaldi M., Otto P. Saccadic eye movements of dyslexic adult subjects. Neuropsychologia. 1993;31:887–906. doi: 10.1016/0028-3932(93)90146-q.

- Gitelman D.R. ILAB: A program for postexperimental eye movement analysis. Behavior Research Methods, Instruments, & Computers. 2002;34:605–612. doi: 10.3758/bf03195488.

- Glimcher P.W. Making choices: The neurophysiology of visual-saccadic decision making. Trends in Neurosciences. 2001;24:654–659. doi: 10.1016/s0166-2236(00)01932-9.

- Glimcher P.W. The neurobiology of visual-saccadic decision making. Annual Review of Neuroscience. 2003;26:133–179. doi: 10.1146/annurev.neuro.26.010302.081134.

- Gold J.I., Shadlen M.N. Representation of a perceptual decision in developing oculomotor commands. Nature. 2000;404:390–394. doi: 10.1038/35006062.

- Gold J.I., Shadlen M.N. Neural computations that underlie decisions about sensory stimuli. Trends in Cognitive Sciences. 2001;5:10–16. doi: 10.1016/s1364-6613(00)01567-9.

- Gold J.I., Shadlen M.N. Banburismus and the brain: Decoding the relationship between sensory stimuli, decisions, and reward. Neuron. 2002;36:299–308. doi: 10.1016/s0896-6273(02)00971-6.

- Grice G.R. Stimulus intensity and response evocation. Psychological Review. 1968;75:359–373. doi: 10.1037/h0026287.

- Hanes D.P., Carpenter R.H.S. Countermanding saccades in humans. Vision Research. 1999;39:2777–2791. doi: 10.1016/s0042-6989(99)00011-5.

- Hanes D.P., Patterson W.F., Schall J.D. Role of frontal eye fields in countermanding saccades: Visual, movement, and fixation activity. Journal of Neurophysiology. 1998;79:817–834. doi: 10.1152/jn.1998.79.2.817.

- Hanes D.P., Schall J.D. Neural control of voluntary movement initiation. Science. 1996;274:427. doi: 10.1126/science.274.5286.427.

- Hanes D.P., Thompson K.G., Schall J.D. Relationship of presaccadic activity in frontal eye field and supplementary eye field to saccade initiation in macaque: Poisson spike train analysis. Experimental Brain Research. 1995;103:85–96. doi: 10.1007/BF00241967.

- Harrison L.M., Duggins A., Friston K.J. Encoding uncertainty in the hippocampus. Neural Networks. 2006;19:535–546. doi: 10.1016/j.neunet.2005.11.002.

- Herrnstein R.J. Relative and absolute strength of response as a function of frequency of reinforcement. Journal of the Experimental Analysis of Behavior. 1961;4:267–272. doi: 10.1901/jeab.1961.4-267.

- Jain A.K., Duin R.P.W., Mao J. Statistical pattern recognition: A review. IEEE Transactions on Pattern Analysis and Machine Intelligence. 2000;22:4–37.

- Jazayeri M., Movshon J.A. Optimal representation of sensory information by neural populations. Nature Neuroscience. 2006;9:690–696. doi: 10.1038/nn1691.

- Kass R.E., Raftery A.E. Bayes factors. Journal of the American Statistical Association. 1995;90:773–795.

- Kim J.N., Shadlen M.N. Neural correlates of a decision in the dorsolateral prefrontal cortex of the macaque. Nature Neuroscience. 1999;2:176–185. doi: 10.1038/5739.

- Knill D.C., Pouget A. The Bayesian brain: The role of uncertainty in neural coding and computation. Trends in Neurosciences. 2004;27:712–719. doi: 10.1016/j.tins.2004.10.007.

- Kurata K., Aizawa H. Influences of motor instructions on the reaction times of saccadic eye movements. Neuroscience Research. 2004;48:447–455. doi: 10.1016/j.neures.2004.01.003.

- Lau B., Glimcher P.W. Dynamic response-by-response models of matching behavior in rhesus monkeys. Journal of the Experimental Analysis of Behavior. 2005;84:555–579. doi: 10.1901/jeab.2005.110-04.

- Leach J.C., Carpenter R.H. Saccadic choice with asynchronous targets: Evidence for independent randomisation. Vision Research. 2001;41:3437–3445. doi: 10.1016/s0042-6989(01)00059-1.

- Van Loon E.M., Hooge I.T.C., Van den Berg A.V. The timing of sequences of saccades in visual search. Proceedings of the Royal Society B: Biological Sciences. 2002;269:1571–1579. doi: 10.1098/rspb.2002.2062.

- Luce R.D. Detection and recognition. In: Handbook of mathematical psychology. Vol. 1. 1963. pp. 103–189.

- Luce R.D. Response times: Their role in inferring elementary mental organization. Oxford University Press; USA: 1986.

- Ludwig C.J.H., Gilchrist I.D., McSorley E., Baddeley R.J. The temporal impulse response underlying saccadic decisions. Journal of Neuroscience. 2005;25:9907–9912. doi: 10.1523/JNEUROSCI.2197-05.2005.

- Ma W.J., Beck J.M., Latham P.E., Pouget A. Bayesian inference with probabilistic population codes. Nature Neuroscience. 2006;9:1432–1438. doi: 10.1038/nn1790.

- Maddox W.T. Toward a unified theory of decision criterion learning in perceptual categorization. Journal of the Experimental Analysis of Behavior. 2002;78:567. doi: 10.1901/jeab.2002.78-567.

- Madelain L., Champrenaut L., Chauvin A. Control of sensorimotor variability by consequences. Journal of Neurophysiology. 2007;98:2255–2265. doi: 10.1152/jn.01286.2006.

- McDowell J.E., Brown G.G., Paulus M., Martinez A., Stewart S.E., Dubowitz D.J. Neural correlates of refixation saccades and antisaccades in normal and schizophrenia subjects. Biological Psychiatry. 2002;51:216–223. doi: 10.1016/s0006-3223(01)01204-5.

- McMillen T., Holmes P. The dynamics of choice among multiple alternatives. Journal of Mathematical Psychology. 2006;50:30–57.

- Minka T.P. Bayesian inference, entropy, and the multinomial distribution. Microsoft Research; Cambridge, UK: 2001.

- Nakahara H., Nakamura K., Hikosaka O. Extended LATER model can account for trial-by-trial variability of both pre- and post-processes. Neural Networks. 2006;19:1027–1046. doi: 10.1016/j.neunet.2006.07.001.

- Nazir T.A., Jacobs A.M. The effects of target discriminability and retinal eccentricity on saccade latencies: An analysis in terms of variable-criterion theory. Psychological Research. 1991;53:281–289. doi: 10.1007/BF00920481.

- Newsome W.T. Deciding about motion: Linking perception to action. Journal of Comparative Physiology A. 1997;181:5–12. doi: 10.1007/s003590050087.

- Newsome W.T., Britten K.H., Movshon J.A. Neuronal correlates of a perceptual decision. Nature. 1989;341:52–54. doi: 10.1038/341052a0.

- Newsome W.T., Britten K.H., Salzman C.D., Movshon J.A. Neuronal mechanisms of motion perception. Cold Spring Harbor Symposia on Quantitative Biology. 1990;55:697–705. doi: 10.1101/sqb.1990.055.01.065.

- Newsome W.T., Pare E.B. A selective impairment of motion perception following lesions of the middle temporal visual area (MT). Journal of Neuroscience. 1988;8:2201–2211. doi: 10.1523/JNEUROSCI.08-06-02201.1988.

- Oswal A., Ogden M., Carpenter R.H.S. The time course of stimulus expectation in a saccadic decision task. Journal of Neurophysiology. 2007;97:2722–2730. doi: 10.1152/jn.01238.2006.

- Papoulis A. Probability, random variables, and stochastic processes. McGraw-Hill; New York: 1991.

- Penny W.D., Stephan K.E., Mechelli A., Friston K.J. Comparing dynamic causal models. Neuroimage. 2004;22:1157–1172. doi: 10.1016/j.neuroimage.2004.03.026.

- Platt M.L. Neural correlates of decisions. Current Opinion in Neurobiology. 2002;12:141–148. doi: 10.1016/s0959-4388(02)00302-1.

- Platt M.L., Glimcher P.W. Neural correlates of decision variables in parietal cortex. Nature. 1999;400:233–238. doi: 10.1038/22268.

- Rao R.P.N. Bayesian computation in recurrent neural circuits. Neural Computation. 2004;16:1–38. doi: 10.1162/08997660460733976.

- Ratcliff R. A theory of memory retrieval. Psychological Review. 1978;85:59–108.