Abstract

Neurons in the dorsal subdivision of the medial superior temporal area (MSTd) show directionally selective responses to both visual (optic flow) and vestibular stimuli that correspond to translational or rotational movements of the subject. Previous work has shown that MSTd neurons are clustered within the cortex according to their directional preferences for optic flow, suggesting that there may be a topographic mapping of self-motion vectors in MSTd. If MSTd provides a multisensory representation of self-motion information, then MSTd neurons may also be expected to show clustering according to their directional preferences for vestibular signals, but this has not been tested previously. We have examined clustering of vestibular signals by comparing the tuning of isolated single units (SUs) with the undifferentiated multiunit (MU) activity of several neighboring neurons recorded from the same microelectrode. We find that directional preferences for both translational and rotational vestibular stimuli, like those for optic flow, are clustered within area MSTd. MU activity often shows significant tuning for vestibular stimuli, although this MU selectivity is generally weaker for translation than for rotation. When directional tuning is observed in MU activity, the direction preference generally agrees closely with that of a simultaneously recorded SU. We also examined clustering of visual receptive field properties in MSTd by analyzing receptive field maps obtained using a reverse-correlation technique. We find that both the local directional preferences and overall spatial receptive field profiles are well clustered in MSTd. Overall, our findings have implications for how visual and vestibular signals regarding self-motion may be decoded from populations of MSTd neurons.

INTRODUCTION

Clustering of functional properties is a prominent feature of the cortex, which has been described on many scales, from columns to “patches” to topographic maps and cortical areas (Mountcastle 1957, 1997). One possibility is that clustering facilitates local information processing by placing functionally related neurons near each other in the cortex, thus simplifying the wiring of the cortex and reducing the risk that related neural discharges will be out of phase temporally due to conduction delays. Thus the presence of functional clustering for a particular stimulus parameter might indicate an important role of a cortical area in processing that parameter (but see Horton and Adams 2005 for counter-examples).

Neurons in the dorsal subdivision of the medial superior temporal area (MSTd) are multimodal, with responses to both visual and vestibular stimuli (Bremmer et al. 1999; Duffy 1998; Froehler and Duffy 2002; Gu et al. 2006; Page and Duffy 2003). Visual inputs largely derive from area MT, which is well known for having a robust columnar organization for direction of motion (Albright et al. 1984; DeAngelis and Newsome 1999; Malonek et al. 1994; Zeki 1974), binocular disparity (DeAngelis and Newsome 1999), and other stimulus attributes (Born and Bradley 2005; Born and Tootell 1992). Whereas a few earlier studies commented on a potentially clustered organization for optic flow signals in MSTd (Duffy and Wurtz 1991; Lagae et al. 1994; Saito et al. 1986), Britten (1998) was the first to examine this issue quantitatively. Using a few different types of optic flow stimuli, Britten (1998) provided quantitative evidence that nearby neurons in MST have similar tuning for optic flow. This suggests that area MSTd may contain a topographic map of heading based on optic flow, which may facilitate decoding of self-motion from population activity.

More recent work has shown that MSTd neurons also represent the direction of self-motion based on vestibular signals (Duffy 1998; Gu et al. 2006; Page and Duffy 2003). Specifically, individual MSTd neurons are tuned in three dimensions for both the direction of translation and the axis of rotation when animals are moved in the absence of optic flow (Gu et al. 2006; Takahashi et al. 2007). Moreover, these responses are vestibular in origin because they are abolished following lesions to the vestibular labyrinth (Gu et al. 2007; Takahashi et al. 2007). The majority of neurons in MSTd show selectivity for both optic flow and vestibular signals and their directional preferences for the two cues may be either congruent or opposite (Gu et al. 2006; Takahashi et al. 2007). Thus MSTd could contain a topographic map for direction of self-motion as defined by both visual and vestibular cues. This raises the question of whether vestibular signals in MSTd show a clustered organization similar to that seen for responses to optic flow (Britten 1998).

Herein we examine clustering of vestibular response properties in area MSTd and we compare the strength of this clustering with that seen for optic flow stimuli. To address this issue, we compare the translation and/or rotation selectivity of isolated single units (SUs) with the selectivity of multiunit (MU) signals that represent the combined activity of several other neurons near the tip of the electrode. This allows assessment of local clustering of tuning for translation and rotation. In addition, we investigate whether other basic visual response properties of MSTd neurons are clustered, including the local directional preference within the visual receptive field and the overall spatial profile of the receptive field. Our results indicate that directional selectivity for both vestibular and visual stimuli is clustered within area MSTd. These results reinforce the notion that MSTd plays important roles in visual/vestibular integration for self-motion perception.

METHODS

Extracellular recordings were made from four hemispheres in three male rhesus monkeys (Macaca mulatta). The methods for surgical preparation, training, and electrophysiological recording have been described in detail in previous publications (Fetsch et al. 2007; Gu et al. 2006; Takahashi et al. 2007). This study involves new analyses of data collected as part of these previous efforts. Accordingly, the methods will be described only briefly here. Each animal was chronically implanted with a circular molded, lightweight plastic ring for head restraint and a scleral search coil for monitoring eye movements inside a magnetic field (CNC Engineering, Seattle, WA). Behavioral training was accomplished using standard operant conditioning procedures. All animal care and experimental procedures conformed to guidelines established by the National Institutes of Health and were approved by the Animal Studies Committee at Washington University.

Vestibular and visual stimuli

During experiments, the monkey was seated comfortably in a primate chair, which was secured to a 6-degree-of-freedom motion platform (MOOG 6DOF2000E; East Aurora, NY). Three-dimensional (3D) movements along or around any arbitrary axis were delivered by this platform. Computer-generated visual stimuli were rear projected (Mirage 2000; Christie Digital Systems, Cypress, CA) onto a tangent screen placed 30 cm in front of the monkey (subtending 90 × 90° of visual angle), simulating self-motion through a 3D random-dot field (100 cm wide, 100 cm tall, and 40 cm deep). Visual stimuli were programmed using the OpenGL graphics library and generated using an OpenGL accelerator board (Quadro FX 3000G; PNY Technologies, Parsippany, NJ; see Gu et al. 2006 for details). The projector, screen, and magnetic field coil frame were mounted on the platform and moved together with the animal. Image resolution was 1,280 × 1,024 pixels and refresh rate was 60 Hz. Dot density was 0.01/cm³, with each dot rendered as a 0.15 × 0.15-cm triangle. Dot sizes were fixed in the virtual environment, such that dots grew larger on the display screen as they became closer to the eyes. Stimuli were presented stereoscopically as red/green anaglyphs, viewed through Kodak Wratten filters (red #29, green #61). The visual stimulus therefore contained a variety of depth cues, including horizontal disparity, motion parallax, and size information.

Electrophysiological recordings

Tungsten microelectrodes (FHC, Bowdoinham, ME; tip diameter 3 μm, impedance 1–2 MΩ at 1 kHz) were inserted into the cortex through a transdural guide tube, using a hydraulic microdrive (FHC). Behavioral control and data acquisition were accomplished using a commercially available software package (TEMPO; Reflective Computing, Olympia, WA). Neural voltage signals were amplified, filtered (400 to 5,000 Hz), discriminated (Bak Electronics, Mount Airy, MD), and displayed on an oscilloscope. The times of occurrence of action potentials and all behavioral events were recorded with 1-ms resolution. Eye-movement traces were sampled at a rate of 200 Hz. At the same time, raw neural signals were digitized at a rate of 25 kHz using a CED Power 1401 data acquisition system (Cambridge Electronic Design, Cambridge, UK) along with Spike2 software. These raw data were stored to disk for off-line spike sorting and additional analyses, including extraction of MU activity (see following text). Due to electrical noise from the MOOG motion platform, it was necessary to high-pass filter the neural signals sharply at 400 Hz, which precluded analysis of local field potentials.

Area MSTd was identified based on the patterns of gray and white matter transitions along electrode penetrations with respect to MRI scans, the response properties of single units and multiunits (direction selectivity, large receptive fields that often contained the fovea and portions of the ipsilateral visual field), and the eccentricity of receptive fields in underlying area MT (for details, see Gu et al. 2006).

Experimental protocol

We examined the 3D tuning of MSTd neurons for both translation and rotation by recording neural responses to stimuli defined by optic flow or physical motion (Gu et al. 2006; Takahashi et al. 2007). In each real (vestibular) or simulated (visual) motion stimulus, the animal was translated along or rotated around one of 26 directions sampled evenly from a sphere (Fig. 1A). Each movement trajectory (either real or visually simulated) had a duration of 2 s and consisted of a Gaussian velocity profile. For the translation protocol, the amplitude was 13 cm (total displacement), with a peak acceleration of about 0.1 g (∼0.98 m/s²) and a peak velocity of 30 cm/s. For the rotation protocol, the amplitude was 9°, with a peak angular velocity of about 20°/s (Gu et al. 2006; Takahashi et al. 2007). For both translation and rotation protocols, visual and vestibular stimuli were randomly interleaved within a single block of trials.
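As a rough consistency check on these stimulus parameters, the following sketch enumerates the 26 directions and relates the displacement and peak velocity of a Gaussian velocity profile to its peak acceleration. The function names are hypothetical, and the sketch assumes that truncation of the Gaussian at the edges of the 2-s trial is negligible; neither is part of the original methods.

```python
import math

def sphere_directions(step_deg=45):
    """Return (azimuth, elevation) pairs in degrees.

    With a 45-deg step this gives 8 azimuths at each of the elevations
    -45, 0, and +45 deg, plus the two poles: 26 directions in total.
    """
    dirs = [(az, el)
            for el in range(-90 + step_deg, 90, step_deg)
            for az in range(0, 360, step_deg)]
    dirs += [(0, -90), (0, 90)]  # poles: azimuth is arbitrary there
    return dirs

def gaussian_profile_stats(displacement_cm, peak_velocity_cm_s):
    """Width and peak acceleration of v(t) = v_peak * exp(-(t - t0)^2 / (2 sigma^2)).

    Assumes the full Gaussian fits within the trial, so that
      displacement = v_peak * sigma * sqrt(2 * pi)
      peak |acceleration| = v_peak / (sigma * sqrt(e)), reached at t0 +/- sigma.
    """
    sigma = displacement_cm / (peak_velocity_cm_s * math.sqrt(2.0 * math.pi))
    peak_acc = peak_velocity_cm_s / (sigma * math.sqrt(math.e))
    return sigma, peak_acc

directions = sphere_directions()
sigma_s, peak_acc_cm_s2 = gaussian_profile_stats(13.0, 30.0)
peak_acc_g = peak_acc_cm_s2 / 980.665  # convert cm/s^2 to units of g
```

Under these assumptions, a 13-cm displacement with a 30 cm/s peak velocity implies σ ≈ 0.17 s and a peak acceleration of roughly 0.1 g, in line with the values quoted above.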

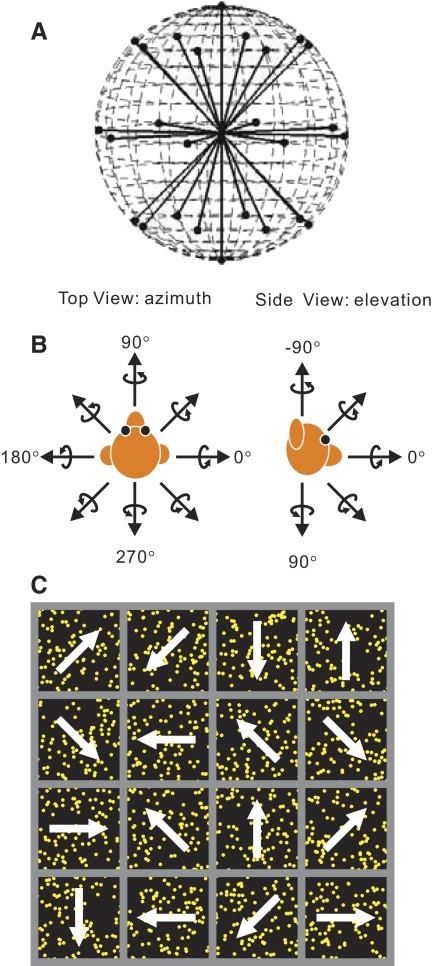

FIG. 1.

A: schematic illustration of the 26 rotational and translational directions tested. The 26 vectors sample all possible combinations of azimuth and elevation, in 45° increments, on a sphere. B, left: definition of azimuth angles. Right: definition of elevation angles. Straight arrows illustrate the direction of translation. Curved arrows illustrate the axis of rotation (according to the right-hand rule). C: reverse correlation stimulus for mapping medial superior temporal area (MSTd) receptive fields. The stimulus consisted of a 4 × 4 grid of stimulus subfields moving independently in one of 8 directions.

In a separate experimental protocol, we used a reverse-correlation technique to measure the spatial and directional receptive field structure of MSTd neurons. The display screen was divided into a 4 × 4 (or 6 × 6) grid of subfields (Fig. 1C). Within each subfield, we presented a coherently moving random-dot stimulus, which could drift in one of eight directions (45° apart) on the screen. The speed of motion was fixed at 40°/s, a value that activates most MSTd neurons (Churchland and Lisberger 2005; Duffy and Wurtz 1995). Motion occurred simultaneously in each subfield and the direction of motion of each patch changed randomly every 100 ms (six video frames). The direction of motion in each subfield was chosen randomly from a uniform distribution across the eight possible directions and each subfield was updated independently of the others. Each 2-s trial thus contained a temporal sequence of 20 directions of motion within each subfield, and a new random sequence of directions was presented each trial. Typically, about 60–100 trials (mean = 80) were necessary to obtain a reasonably smooth receptive field map. This protocol was not run for every neuron because good isolation was sometimes lost during the 3D translation/rotation runs.

For all stimulus conditions, the animal was required to fixate a central target (0.2 × 0.2°) for 200 ms before stimulus onset. The animals were rewarded with a drop of juice at the end of each trial for maintaining fixation throughout stimulus presentation. Trials were aborted and data discarded when the monkey's gaze deviated by >1° from the fixation target.

Data analysis

SINGLE- AND MULTIUNIT TUNING.

Action potentials from a single unit (SU) were isolated with a dual voltage–time window discriminator on-line (Bak Electronics, Mount Airy, MD). Multiunit activity was obtained by off-line processing of the digitized raw neural signal. A multiunit (MU) event was defined as any deflection of the analog voltage signal that exceeded a threshold level. The absolute frequency of the MU response is somewhat arbitrary, depending on the level of the event threshold. To standardize our measurements across recording sites, we adjusted the event threshold to obtain a spontaneous activity level that was 50 spikes/s greater than the spontaneous activity level of the SU. The resulting MU signal reflected the combined activities of several neurons near the electrode tip, including the isolated SU. To make our SU and MU measurements independent, we subtracted one count from time bins of the MU signal that occurred within ±1 ms of each SU spike. This prevented SU spikes from leaking into the MU signal. Counts were subtracted from the MU bins immediately neighboring each SU spike to account for possible jitter in the time binning of events in the SU and MU signals (which were collected using two different hardware systems). This ensures removal of the SU spikes from the MU signal, at the cost of also removing some additional MU events. To examine the efficacy of this procedure, we computed the cross-correlation function between simultaneous SU and MU recordings (e.g., Fig. 2B). This analysis was carried out for each pair of SU and MU recordings and showed that our procedure was quite effective at removing correlations between SU and MU responses (Fig. 4).
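The pruning and cross-correlation steps can be sketched as follows. This is a minimal illustration on synthetic data, assuming 1-ms bins and a floor at zero counts; the function names and the synthetic spike trains are hypothetical and do not reproduce the original analysis code.

```python
import numpy as np

def bin_events(times_ms, n_bins):
    """Count events in 1-ms bins; times are in ms from trial start."""
    idx = np.floor(np.asarray(times_ms, dtype=float)).astype(int)
    idx = idx[(idx >= 0) & (idx < n_bins)]
    return np.bincount(idx, minlength=n_bins)

def remove_su_from_mu(mu_counts, su_times_ms, halfwidth_bins=1):
    """Subtract one count from every MU bin within +/- halfwidth_bins of
    each SU spike, never letting a bin go below zero (an assumption)."""
    mu = np.asarray(mu_counts).astype(int).copy()
    n = mu.size
    for t in np.floor(np.asarray(su_times_ms, dtype=float)).astype(int):
        lo, hi = max(t - halfwidth_bins, 0), min(t + halfwidth_bins, n - 1)
        if lo <= hi:
            mu[lo:hi + 1] = np.maximum(mu[lo:hi + 1] - 1, 0)
    return mu

def cross_correlogram(su_counts, mu_counts, max_lag=20):
    """Coincidence counts between SU and MU for lags of -max_lag..+max_lag bins."""
    su = np.asarray(su_counts, dtype=float)
    mu = np.asarray(mu_counts, dtype=float)
    n = su.size
    lags = np.arange(-max_lag, max_lag + 1)
    cc = np.empty(lags.size)
    for i, lag in enumerate(lags):
        if lag >= 0:
            cc[i] = np.dot(su[:n - lag], mu[lag:])
        else:
            cc[i] = np.dot(su[-lag:], mu[:n + lag])
    return lags, cc

# Synthetic 2-s trial: 40 SU spikes embedded in ~50 events/s of background MU.
rng = np.random.default_rng(0)
n_bins = 2000
su_times = rng.choice(n_bins, size=40, replace=False) + 0.5
su_counts = bin_events(su_times, n_bins)
mu_raw = rng.poisson(0.05, n_bins) + su_counts      # SU leaks into MU
mu_clean = remove_su_from_mu(mu_raw, su_times)
lags, cc_raw = cross_correlogram(su_counts, mu_raw)
_, cc_clean = cross_correlogram(su_counts, mu_clean)
```

In this toy case the zero-lag peak in `cc_raw` comes entirely from the leaked SU spikes and is absent from `cc_clean`. Note that the ±1-bin window also deletes unrelated MU events that happen to fall next to an SU spike.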

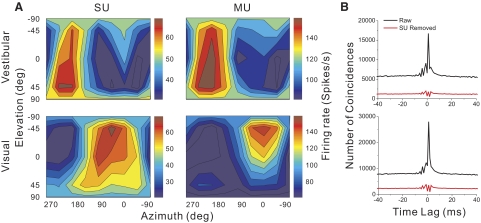

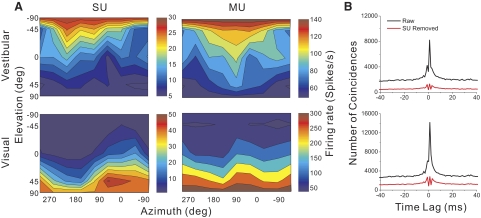

FIG. 2.

Example data from an MSTd neuron tested in the vestibular (top row) and visual (bottom row) translation conditions. A: 3-dimensional (3D) direction tuning profiles are shown for single-unit (SU, left column) and multiunit (MU, right column) activity. Color contour maps show the mean firing rate as a function of azimuth and elevation angles. Each contour map shows the Lambert cylindrical equal-area projection of the original spherical data. B: cross-correlation function between SU and MU responses. The abscissa is the time lag and the ordinate is the number of coincidences. The black curve represents the cross-correlation between SU and MU before SU spikes were excluded from MU. The red curve illustrates the cross-correlation after SU spikes were removed from the MU signal.

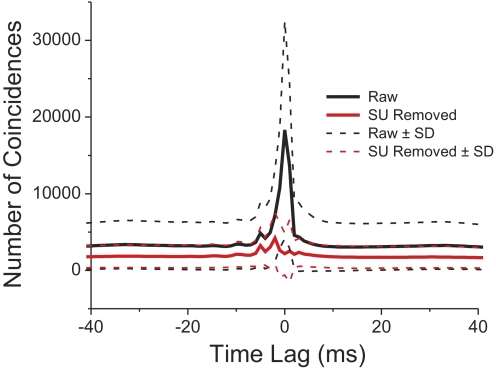

FIG. 4.

Averaged cross-correlation function for all pairs of SU and MU tested using the translation (n = 285) or rotation (n = 81) protocols. Translation and rotation data have been pooled. The solid black line shows the raw average cross-correlation before SU spikes were excluded from the MU signal. The dashed black lines represent ±1 SD around this mean. The solid red line shows the average cross-correlation function after SU spikes were removed from the MU signal. Dashed red lines represent ±1 SD around this mean.

For each SU/MU recording, we constructed 3D tuning profiles for both translation and rotation by plotting the mean firing rate during the central 1 s of the stimulus period as a function of azimuth and elevation (Gu et al. 2006; Takahashi et al. 2007). To plot these spherical data on Cartesian axes (e.g., Fig. 2), the data were transformed using the Lambert cylindrical equal-area projection (Snyder 1987). In these plots, the abscissa represents azimuth and the ordinate represents a sine-transformed version of elevation. We determined whether a neuron was spatially tuned for rotation and/or translation using a one-way ANOVA with a significance criterion of P < 0.05.
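The projection itself is simple to state. The sketch below assumes that elevation is measured from the horizontal plane (−90° to +90°, as in Fig. 1B), in which case the standard Lambert cylindrical equal-area projection maps azimuth to the horizontal axis and the sine of elevation to the vertical axis. The function name is hypothetical.

```python
import numpy as np

def lambert_cylindrical(azimuth_deg, elevation_deg):
    """Map spherical directions to plot coordinates.

    x is azimuth in degrees (unchanged); y is sin(elevation), so equal
    areas on the sphere occupy equal areas on the plot.
    """
    x = np.asarray(azimuth_deg, dtype=float)
    y = np.sin(np.deg2rad(np.asarray(elevation_deg, dtype=float)))
    return x, y

x, y = lambert_cylindrical([0, 90, 180, 270], [-90, 0, 30, 90])
```

Because the vertical coordinate compresses toward the poles, the single pole directions are not over-represented relative to the eight directions sampled at each of the other elevations.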

To quantify the strength of spatial tuning for translation and rotation, a direction discrimination index (DDI) was calculated for each data set (SU or MU) according to the following formula (DeAngelis and Uka 2003; Prince et al. 2002; Takahashi et al. 2007)

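$$
\mathrm{DDI} = \frac{R_{\max} - R_{\min}}{R_{\max} - R_{\min} + 2\sqrt{\mathrm{SSE}/(N - M)}} \tag{1}
$$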

where Rmax and Rmin are the maximum and minimum responses from the 3D tuning function, respectively; SSE is the sum squared error around the mean responses; N is the total number of observations (trials); and M is the number of stimulus directions (M = 26). The DDI compares the difference in firing between the preferred and null directions against response variability and quantifies a neuron's reliability for distinguishing between preferred and null motions. Neurons with large response modulations relative to the noise level will have DDI values close to 1, whereas neurons with weak response modulation will have DDI values close to 0. DDI is conceptually similar to a d′ metric in that it quantifies signal-to-noise ratio but it has the advantage of being bounded between 0 and 1, similar to other conventional metrics of response modulation.
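A minimal sketch of the DDI computation, using the form of the index given in the cited work, DDI = (Rmax − Rmin)/(Rmax − Rmin + 2√(SSE/(N − M))). The function name and the input layout (one array of single-trial firing rates per direction) are assumptions made for illustration.

```python
import numpy as np

def ddi(responses_by_direction):
    """Direction discrimination index.

    responses_by_direction: sequence of 1-D arrays, one per stimulus
    direction, each holding single-trial firing rates.
    """
    groups = [np.asarray(g, dtype=float) for g in responses_by_direction]
    means = np.array([g.mean() for g in groups])
    spread = means.max() - means.min()                      # Rmax - Rmin
    sse = sum(((g - g.mean()) ** 2).sum() for g in groups)  # around each mean
    n = sum(g.size for g in groups)                         # total trials, N
    m = len(groups)                                         # directions, M
    return spread / (spread + 2.0 * np.sqrt(sse / (n - m)))

noise_free = ddi([[1.0, 1.0], [5.0, 5.0]])   # modulation without variability
untuned = ddi([[1.0, 3.0], [1.0, 3.0]])      # variability without modulation
intermediate = ddi([[0.0, 2.0], [4.0, 6.0]])
```

The two limiting cases illustrate the bounds described above: modulation with no trial-to-trial variability gives a DDI of 1, and variability with no modulation gives 0. The index is undefined when both the modulation and the variability are zero.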

RECEPTIVE FIELD ANALYSIS.

To obtain a receptive field (RF) map for each MSTd neuron, the spike train was cross-correlated with the temporal sequence of motion directions for each subfield in the stimulus grid. This approach is similar to that of Borghuis et al. (2003) and is analogous to reverse-correlation techniques used previously in other stimulus domains (DeAngelis et al. 1993; Eckhorn et al. 1993; Jones and Palmer 1987; Ringach et al. 1997). This method produces a direction–time map for each individual subfield in the stimulus grid. If there is coupling between the stimulus and response over a range of correlation delays (T), then a pattern will emerge in the direction–time profiles (Fig. 9A); otherwise, the profiles will show no structure. These maps therefore reveal the direction tuning for each subfield that is contained in the MSTd neuron's RF. If a stimulus subfield is outside the RF, then its direction–time map will not show any structure. Note, however, that this particular method will reveal only portions of the RF that have directional selectivity. If there are also portions of an MSTd RF that are not direction selective, these maps will not identify those regions. To summarize the directional tuning and response modulation for each subfield, we identified the peak correlation delay (Tpeak) as the value of T at which the direction tuning curves have maximal variance. A horizontal cross section through the maps at Tpeak (e.g., horizontal lines in Fig. 9) yields a direction tuning curve for each subfield. These tuning curves were fit with a modified wrapped Gaussian function, r(θ) (Yang and Maunsell 2004)

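$$
r(\theta) = a \exp\left\{ \frac{-2\left[1 - \cos(\theta - \theta_{\mathrm{pref}})\right]}{\sigma^{2}} \right\} + b \tag{2}
$$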

where θpref indicates the preferred direction, σ represents the tuning bandwidth, a denotes the tuning curve amplitude, and b indicates the baseline firing rate.
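The construction of a direction–time map and the choice of Tpeak can be sketched for a single subfield as follows. The sketch assumes 1-ms bins and computes, for each delay T, the mean spike count T ms after each direction was on the screen; this is one simple way to realize the cross-correlation described above and is not necessarily the original implementation. Function names and the synthetic neuron are hypothetical.

```python
import numpy as np

def direction_time_map(spike_counts, dir_index, n_dirs=8, max_delay_ms=200):
    """Return an (n_delays, n_dirs) array of mean spike counts.

    spike_counts: spikes per 1-ms bin.
    dir_index: direction index (0..n_dirs-1) shown in the subfield in each bin.
    Row T holds the response T ms after each direction was presented.
    """
    spikes = np.asarray(spike_counts, dtype=float)
    dirs = np.asarray(dir_index)
    n = spikes.size
    rf = np.zeros((max_delay_ms + 1, n_dirs))
    for T in range(max_delay_ms + 1):
        stim, resp = dirs[:n - T], spikes[T:]
        for d in range(n_dirs):
            sel = stim == d
            rf[T, d] = resp[sel].mean() if sel.any() else 0.0
    return rf

def peak_delay(rf):
    """Delay at which the variance across directions is largest (Tpeak)."""
    return int(np.argmax(rf.var(axis=1)))

# Synthetic subfield: direction changes every 100 ms; the toy neuron fires
# in every bin that follows its preferred direction (index 2) by 60 ms.
rng = np.random.default_rng(0)
blocks = rng.permutation(np.tile(np.arange(8), 25))
dirs = np.repeat(blocks, 100)
spikes = np.zeros(dirs.size)
spikes[60:] = (dirs[:-60] == 2)
rf = direction_time_map(spikes, dirs)
t_peak = peak_delay(rf)
preferred = int(np.argmax(rf[t_peak]))
```

The row `rf[t_peak]` is the direction tuning curve that would then be passed to the wrapped Gaussian fit.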

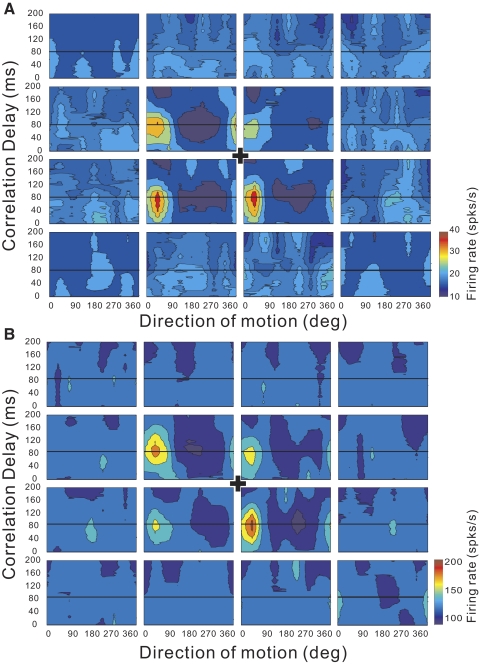

FIG. 9.

Direction–time receptive fields (RFs) are shown as contour plots for an example of SU (A) and MU (B) activity recorded simultaneously. In this case, the stimulated region of the visual field (90 × 90°) was divided into a 4 × 4 grid of 16 locations. Each subfield spanned 15 × 15 cm on the 60 × 60 cm tangent screen (viewed from 30 cm). As a result, the exact angular subtense of each subfield varied somewhat with its location. At each location, the stimulus could move in one of 8 directions. For each location, the neural response is cross-correlated with the motion impulse sequence (see methods). The direction of motion is indicated along the horizontal axis (with 0° indicating rightward and 90° indicating upward) and the reverse-correlation delay is indicated along the vertical axis. A “+” in the center denotes the center of the display screen where the monkey fixated. The optimal reverse-correlation delay is indicated by the black horizontal line in each panel.

To quantify the strength of direction selectivity for each subfield, we computed the vector sum of the normalized responses to the eight directions of motion. If the tuning curve is flat, the vector sum will be close to zero; larger values of the vector sum indicate stronger/narrower tuning. To assess the statistical significance of directional tuning for each subfield, we used a resampling method. For a range of negative (i.e., noncausal) correlation delays from 0 to −200 ms (in 1-ms steps), we again cross-correlated the spike train and stimulus sequence to obtain a set of “flat” direction tuning curves that reflect the noise level in our measurements. For each of these, we again computed the vector sum of the normalized responses. This yielded a distribution of vector sum values that could occur by chance given the noise level in the direction–time map. The direction tuning of a particular subfield was considered significant if its corresponding vector sum value lay outside the 95% confidence interval of this noise distribution.
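A sketch of the vector-sum metric and the noise-based significance criterion. Two details are assumptions, because the text does not specify them: responses are normalized by their sum, and the 95% confidence interval is taken as the 2.5th to 97.5th percentiles of the noise distribution. Function names are hypothetical.

```python
import numpy as np

def vector_sum_strength(tuning_curve, directions_deg=None):
    """Length of the vector sum of responses normalized to sum to 1.

    Assumes non-negative responses; returns 0 for a flat curve and 1 when
    all of the response falls on a single direction.
    """
    r = np.asarray(tuning_curve, dtype=float)
    if directions_deg is None:
        directions_deg = np.arange(r.size) * 360.0 / r.size
    theta = np.deg2rad(np.asarray(directions_deg, dtype=float))
    total = r.sum()
    if total == 0:
        return 0.0
    w = r / total
    return float(np.hypot((w * np.cos(theta)).sum(), (w * np.sin(theta)).sum()))

def is_significant(observed, noise_values, ci=95.0):
    """True if `observed` lies outside the central `ci` percent of the
    vector-sum values obtained at noncausal delays."""
    tail = (100.0 - ci) / 2.0
    lo, hi = np.percentile(noise_values, [tail, 100.0 - tail])
    return bool(observed < lo or observed > hi)

flat = vector_sum_strength(np.ones(8))
one_hot = vector_sum_strength([0, 0, 1, 0, 0, 0, 0, 0])
noise = np.linspace(0.0, 0.2, 201)  # stand-in for the noncausal-delay values
```

In practice, `noise_values` would hold one vector-sum value per noncausal delay (0 to −200 ms), each computed with `vector_sum_strength` on the corresponding tuning curve.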

In addition to quantifying the direction selectivity in each subfield, we estimated the overall spatial RF profile of each MSTd neuron by quantifying how the strength of directional tuning varied across subfields. Specifically, we plotted the amplitude a of the wrapped Gaussian fit to each direction tuning curve as a function of the center location of each subfield (e.g., Fig. 12A). These amplitude data were then fitted with a two-dimensional Gaussian function

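$$
G(x, y) = A \exp\left[ -\frac{(x - x_0)^2}{2\sigma_x^2} - \frac{(y - y_0)^2}{2\sigma_y^2} \right] + b \tag{3}
$$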

where A is the amplitude; (x0, y0) are the coordinates of the peak of the spatial profile; σx and σy are the tuning widths along the horizontal (x) and vertical (y) dimensions, respectively; and b is the baseline response level. The horizontal and vertical sizes of the RF were computed by measuring the full width at half-maximum (FWHM), which can be written as: FWHM = 2√(2 ln 2)·σ ≈ 2.35σ (Wennekers 2001).

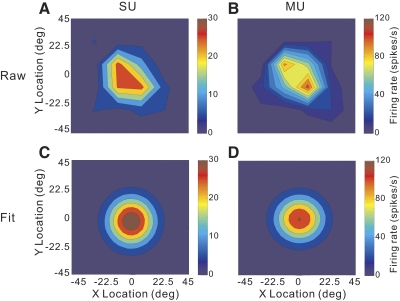

FIG. 12.

Example of spatial RF structure derived from reverse-correlation measurements. Two-dimensional (2D) spatial (X–Y) RFs are shown as contour plots for an example data set. Positive x values indicate the right visual hemifield and positive y values indicate the upper visual field. A: SU receptive field profile. Each point in the RF map represents the amplitude of the direction tuning curve obtained at the peak response latency. B: MU receptive field profile. C and D: 2D Gaussian fits to the spatial RF profiles of the SU and MU responses, respectively. The full width at half-maximum along the x dimension (FWHMx) is 38° for the SU and 41° for the MU; the full width at half-maximum along the y dimension (FWHMy) is 42° for the SU and 38° for the MU. The center of the RF is located at (−1.5°, −5.4°) for the SU response and (2.5°, −0.89°) for the MU response.

To evaluate whether a spatial RF map had significant structure (relative to noise), we performed a permutation test. We first rank-ordered the amplitude values of the wrapped Gaussian fits to the data from each subfield. We then computed the sum of the spatial distances between the pair of subfields having the first and second largest amplitudes and the pair of subfields having the first and third largest amplitudes. If the spatial RF has significant organization, the subfields with the largest amplitudes should be spatially adjacent such that the summed distance is small for these pairs. Next, we randomly permuted the relationships between amplitudes and subfield locations, thus effectively scrambling the spatial RF profile. The summed spatial distances between the largest amplitude pairs were computed for 1,000 such permutations. If the summed spatial distance for the original RF profile was smaller than that obtained for 95% of the random permutations, the spatial RF map was classified as having significant structure. Only those data sets with significant spatial RF profiles for both SU and MU activity were included in the analysis of RF overlap described in the following text.
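The permutation test can be sketched as follows, here on a synthetic 6 × 6 grid with distances measured in subfield units. The function names, the random seed, and the example amplitude maps are hypothetical, and the criterion is implemented as "the observed summed distance is smaller than at least 95% of the permuted values".

```python
import numpy as np

def summed_top_distance(amplitudes, coords):
    """Distance from the largest-amplitude subfield to the second largest,
    plus its distance to the third largest."""
    amps = np.asarray(amplitudes, dtype=float)
    xy = np.asarray(coords, dtype=float)
    first, second, third = np.argsort(amps)[::-1][:3]
    return float(np.linalg.norm(xy[first] - xy[second])
                 + np.linalg.norm(xy[first] - xy[third]))

def rf_structure_test(amplitudes, coords, n_perm=1000, seed=0):
    """Return (observed distance, fraction of permutations with a larger
    distance, significance flag)."""
    rng = np.random.default_rng(seed)
    amps = np.asarray(amplitudes, dtype=float)
    observed = summed_top_distance(amps, coords)
    null = np.array([summed_top_distance(rng.permutation(amps), coords)
                     for _ in range(n_perm)])
    frac_larger = float(np.mean(null > observed))
    return observed, frac_larger, frac_larger >= 0.95

# Compact synthetic RF: a Gaussian bump centered near subfield (2, 2).
xs, ys = np.meshgrid(np.arange(6), np.arange(6))
coords = np.column_stack([xs.ravel(), ys.ravel()])
amps = np.exp(-((coords[:, 0] - 2.2) ** 2 + (coords[:, 1] - 2.4) ** 2) / 2.0)
observed, frac_larger, significant = rf_structure_test(amps, coords)

# Scattered map: the three largest amplitudes sit in different corners.
scattered = np.zeros(36)
scattered[[0, 35, 5]] = [3.0, 2.0, 1.0]
_, _, scattered_significant = rf_structure_test(scattered, coords)
```

The compact map yields the smallest possible summed distance (two adjacent subfields) and passes the test, whereas the scattered map does not.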

To assess the degree of overlap of SU and MU RF profiles, we computed normalized separations between the centers of the SU and MU spatial RFs. The normalized separations between SU and MU RFs were defined as

|

for the horizontal dimension and

|

for the vertical dimension. Here (x0_SU, y0_SU) and (x0_MU, y0_MU) are the coordinates of the centers of the spatial RFs for the SU and MU, respectively. σx_SU and σx_MU reflect the horizontal spread of the RFs for SU and MU, respectively, whereas σy_SU and σy_MU indicate the vertical spread of the RFs. If the spatial separation between the centers of the SU and MU RFs is small relative to the sizes of the RFs, then these normalized separation metrics will be <1.

RESULTS

To assess whether visual and vestibular signals are clustered in area MSTd, we compared the responses of isolated single units (SUs) to the responses of multiunit (MU) activity recorded from the same microelectrode (after removing SU spikes from the MU activity). We analyzed whether neurons in MSTd are clustered in two ways. First, we measured the similarity between the 3D translation and rotation tuning of SU and MU responses. Second, we measured the similarity between the directional and spatial RF structure of SU and MU responses. The data presented here were collected as part of previous studies (Gu et al. 2006; Takahashi et al. 2007) in which SU responses were examined in detail. Due to the length of the experimental protocols in those studies, we generally did not collect data from multiple recording sites along penetrations through MSTd. Hence our analyses are limited to a comparison of SU and MU responses at the same recording site and we do not directly address variations in tuning as a function of distance along electrode penetrations.

Clustering of 3D translation and rotation tuning in MSTd

In the translation protocol (see methods), each MSTd neuron was tested with 26 directions of translation, consisting of all combinations of azimuth and elevation separated by 45° on a sphere (Fig. 1, A and B). Figure 2A shows an example of 3D translation tuning for simultaneously recorded SU and MU activity in MSTd. The data are shown as contour maps in which mean firing rate (represented by color) is plotted as a function of azimuth (abscissa) and elevation (ordinate) (see also Gu et al. 2006). Data in the top row show responses obtained in the vestibular stimulus condition and data in the bottom row are from the visual condition. Note that the MU responses are about twofold larger than the SU responses. In the vestibular condition, the SU shows clear spatial tuning for translation, with a preferred direction at 190° azimuth and −1° elevation. A nearly opposite translation preference was seen in the visual condition for this SU, with the direction preference occurring at 47° azimuth and 9° elevation. This pattern of results is typical of an “opposite” cell, as described previously (Gu et al. 2006). The MU activity recorded simultaneously with this SU (Fig. 2A, right column) shows similar tuning for translational motion with a preferred direction of (181°, 0°) for the vestibular condition and (9°, −33°) for the visual condition. This suggests that nearby neurons in MSTd have similar direction preferences.

The similarity in tuning between MU and SU responses cannot simply be due to the same single unit contributing to both signals. To avoid this confound, we have excluded SU spikes from the MU signal (see methods), so that the MU response reflects the combined activity of several other nearby SUs. This was verified by cross-correlation analysis, as shown in Fig. 2B. Before SU spikes were removed, there was a sharp peak in the cross-correlogram centered around 0 ms. After SU spikes were removed, the cross-correlogram was relatively flat, indicating that spikes from the single cell were effectively excluded from the MU signal. Thus the observed similarity in SU and MU tuning for the example in Fig. 2A is not attributable to a common source of spikes. All MU data reported herein had the corresponding SU spikes removed.

For the translation protocol, this analysis was performed on a total of 285 MSTd neurons for the vestibular condition and 270 MSTd neurons for the visual condition. All SU/MU pairs were recorded simultaneously from a single microelectrode. Table 1 summarizes the proportions of SU and MU responses with significant spatial tuning for translation. In the vestibular condition, 56% (161/285) of SUs and 32% (92/285) of MUs had significant spatial tuning (ANOVA, P < 0.05, Table 1). In contrast, 97% (261/270) of SUs and 82% (222/270) of MUs were significantly tuned in the visual translation condition. When the SU was significantly tuned, the MU was also significantly tuned in 45% of cases for the vestibular condition, compared with 84% of cases in the visual condition. When the MU was significantly tuned, the SU was also significantly tuned in 78% of cases for the vestibular condition, compared with 98% of cases in the visual condition (see Table 1). Both SU and MU selectivities for translation were less prevalent in the vestibular condition.

TABLE 1.

Percentage of SU and MU with significant spatial tuning for translation (ANOVA, P ≤ 0.05)

| SU | MU: P ≤ 0.05 | MU: P > 0.05 |
|---|---|---|
| Vestibular (n = 285) | | |
| P ≤ 0.05 | 72/285 (25%) | 89/285 (31%) |
| P > 0.05 | 20/285 (7%) | 104/285 (36%) |
| Visual (n = 270) | | |
| P ≤ 0.05 | 219/270 (81%) | 42/270 (16%) |
| P > 0.05 | 3/270 (1%) | 6/270 (2%) |

For the rotation protocol, we analyzed data from a total of 81 and 66 SU/MU pairs for the vestibular and visual conditions, respectively. An example data set is shown in Fig. 3. In the vestibular rotation condition, the SU was spatially tuned with a direction preference at 150° azimuth and −73° elevation, whereas the MU preferred 88° azimuth and −69° elevation. In the visual condition, the peak SU response occurred at 350° azimuth and 69° elevation and the MU response peaked at 10° azimuth and 86° elevation. Thus visual and vestibular rotation preferences were similar for SU and MU activity for this example neuron. The corresponding cross-correlograms between SU and MU responses are shown in Fig. 3B. Again, it can be seen that coupling between SU and MU signals was effectively removed by our procedure.

FIG. 3.

Example data from an MSTd neuron tested in the vestibular (top row) and visual (bottom row) rotation conditions. Format as described for Fig. 2.

As shown in Table 2, 93% (75/81) of SUs and 70% (57/81) of MUs were significantly tuned for vestibular rotation, compared with 99% (65/66) of SUs and 88% (58/66) of MUs for visual rotation (ANOVA, P < 0.05). When MU tuning was significant, SU tuning was also significant for 98% of SUs in both the vestibular and visual conditions. When SU tuning was significant, MU tuning was also significant for 75% of cases for the vestibular and 88% of cases for the visual conditions. Thus the incidence of significant tuning in MU activity was higher for vestibular rotation than that for vestibular translation. This may reflect tighter clustering of vestibular rotation responses or, simply, the fact that vestibular rotation tuning is generally stronger than vestibular translation tuning for SUs (Takahashi et al. 2007). Vestibular rotation responses may also reflect a contribution of retinal slip of the faint visual background since these rotation responses were found to be slightly weaker during viewing in total darkness (Takahashi et al. 2007).

TABLE 2.

Percentage of SU and MU with significant spatial tuning for rotation (ANOVA, P ≤ 0.05)

| SU | MU: P ≤ 0.05 | MU: P > 0.05 |
|---|---|---|
| Vestibular (n = 81) | | |
| P ≤ 0.05 | 56/81 (69%) | 19/81 (23%) |
| P > 0.05 | 1/81 (1%) | 5/81 (6%) |
| Visual (n = 66) | | |
| P ≤ 0.05 | 57/66 (86%) | 8/66 (12%) |
| P > 0.05 | 1/66 (2%) | 0/66 (0%) |
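The marginal and conditional percentages quoted in the text can be recovered from the joint counts in Table 2. The following is a small arithmetic sketch; the dictionary keys and the `summarize` helper are illustrative names, not part of the original analysis.

```python
# Joint counts from Table 2 (rows: SU significance, columns: MU significance).
table2 = {
    "vestibular": {"both": 56, "su_only": 19, "mu_only": 1, "neither": 5},
    "visual": {"both": 57, "su_only": 8, "mu_only": 1, "neither": 0},
}

def summarize(counts):
    """Return marginal counts and conditional proportions for one condition."""
    n = sum(counts.values())
    su_sig = counts["both"] + counts["su_only"]   # SU significantly tuned
    mu_sig = counts["both"] + counts["mu_only"]   # MU significantly tuned
    return {
        "n": n,
        "su_sig": su_sig,
        "mu_sig": mu_sig,
        "p_mu_given_su": counts["both"] / su_sig,  # MU tuned, given SU tuned
        "p_su_given_mu": counts["both"] / mu_sig,  # SU tuned, given MU tuned
    }

summary = {cond: summarize(c) for cond, c in table2.items()}
for cond, s in summary.items():
    print(cond, s)
```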

Figure 4 summarizes the results of the cross-correlation analyses between SU and MU responses before and after removing SU spikes from the MU signal. Because we found no significant differences in the cross-correlation results between translation and rotation protocols, we computed average cross-correlograms across all data sets. As shown in Fig. 4, there was a sharp correlation peak at a lag of 0 ms before SUs were removed and the amplitude of this peak greatly exceeded the SD of the baseline correlation (at large time lags). After SUs were removed, the correlogram was relatively flat and the residual peak was within 1 SD of the baseline correlation. Therefore our pruning procedure (see methods) was largely effective in removing the artificial coupling between SU and MU signals, and we are confident that any similarity in tuning between SU and MU responses reflects neural clustering in MSTd. Note, however, that there may also be genuine neural coupling between SU and MU signals that takes the form of a broader, shallower peak around time 0. Since we removed events from the MU signal only within ±1 ms of SU spikes, some of these broad correlation peaks remain.
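The pruning step and the cross-correlogram check can be illustrated with a minimal sketch. It assumes that SU and MU events are available as arrays of event times in milliseconds; the function names, the 20-ms lag range, the 1-ms bin width, and the toy event times are all illustrative assumptions rather than the procedure described in methods.

```python
import numpy as np

def prune_mu_events(mu_times, su_times, window_ms=1.0):
    """Drop MU events that fall within +/- window_ms of any SU spike."""
    mu = np.sort(np.asarray(mu_times, dtype=float))
    su = np.sort(np.asarray(su_times, dtype=float))
    if su.size == 0:
        return mu
    idx = np.searchsorted(su, mu)
    left = su[np.clip(idx - 1, 0, su.size - 1)]    # nearest SU spike before
    right = su[np.clip(idx, 0, su.size - 1)]       # nearest SU spike after
    nearest = np.minimum(np.abs(mu - left), np.abs(mu - right))
    return mu[nearest > window_ms]

def cross_correlogram(su_times, mu_times, max_lag_ms=20.0, bin_ms=1.0):
    """Histogram of (MU time - SU time) lags within +/- max_lag_ms."""
    su = np.asarray(su_times, dtype=float)
    mu = np.asarray(mu_times, dtype=float)
    lags = (mu[None, :] - su[:, None]).ravel()
    lags = lags[np.abs(lags) <= max_lag_ms]
    edges = np.arange(-max_lag_ms - bin_ms / 2, max_lag_ms + bin_ms, bin_ms)
    counts, _ = np.histogram(lags, bins=edges)
    return edges[:-1] + bin_ms / 2, counts

# Toy event times (ms), invented for illustration only.
su = [10.0, 50.0, 90.0]
mu = [10.0, 10.4, 30.0, 50.0, 70.0, 90.2]
pruned = prune_mu_events(mu, su)
lag_centers, before = cross_correlogram(su, mu)
_, after = cross_correlogram(su, pruned)
zero_bin = int(np.argmin(np.abs(lag_centers)))
print(pruned, before[zero_bin], after[zero_bin])
```

In this toy case the zero-lag bin is populated before pruning and empty afterward, which is the qualitative pattern described for Fig. 4. Any broader coupling at lags beyond ±1 ms would be left untouched by this kind of pruning.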

We next summarize the similarity of SU and MU tuning across the population of MSTd neurons. To quantify response strength, we computed the difference between the peak response and spontaneous activity (Rmax − spont). Figure 5A shows this metric for SU and MU responses recorded simultaneously during the translation protocol. The vast majority of data points lie above the diagonal for both the vestibular (red) and visual (cyan) conditions. The average MU/SU peak response ratios were 4.1 and 3.4 for the vestibular and visual conditions, respectively. Figure 5B shows analogous data for the rotation protocol. The average MU/SU peak response ratios were 3.6 and 3.1 for the vestibular and visual rotation conditions, respectively. Thus for both the translation and rotation protocols, the average MU response was significantly stronger than the average SU response (paired t-test, P ≪ 0.001). Consequently, even if we failed to exclude every SU spike from the MU activity, any residual SU activity would account for little of the MU response.

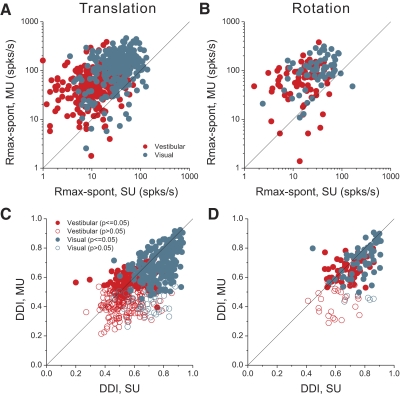

FIG. 5.

Quantitative summary of peak response (Rmax − spont) and tuning strength (direction discrimination index [DDI]) derived from MU and SU responses. In each panel, data from SUs are plotted on the x-axis and data from the corresponding MU responses are plotted on the y-axis. Red and cyan symbols represent data from vestibular and visual conditions, respectively. A: comparison of SU and MU peak responses for the vestibular translation (n = 287) and visual translation (n = 272) conditions. B: comparison of SU and MU peak responses for the vestibular rotation (n = 81) and visual rotation (n = 66) conditions. C: comparison of SU and MU DDI values for the translation conditions. Closed (open) circles: neurons with (without) significant MU tuning (ANOVA, P < 0.05). D: comparison of SU and MU DDIs for the rotation conditions.

To quantify the strength of 3D spatial tuning, we computed a direction discrimination index (DDI; see methods) that quantified the peak to trough modulation of the neuron's tuning profile relative to the noise level (see also Takahashi et al. 2007). Figure 5C compares DDI values from SU and MU responses measured during the translation protocol (red: vestibular; cyan: visual). Filled symbols represent data sets with significant MU tuning (ANOVA, P < 0.05), whereas open symbols indicate nonsignificant MU tuning (ANOVA, P > 0.05). Most data points tend to fall near or slightly below the unity-slope diagonal. As a result, the mean DDI values for SU activity in the vestibular (0.54) and visual (0.76) conditions were significantly larger than the corresponding mean DDI values for MU activity in the vestibular (0.48) and visual (0.66) conditions (Wilcoxon rank-sum tests, P < 0.001). MU and SU DDI values were significantly correlated for both the vestibular (r = 0.32; P < 0.0001) and visual (r = 0.51; P < 0.0001) translation conditions, indicating that SUs tend to exhibit weaker tuning at sites where the MU response is poorly tuned. These findings argue against the possibility that flat MU tuning results solely from a combination of SUs that are individually well tuned, but to different directions. Rather, it appears that weak MU tuning is associated, to some degree, with weaker SU tuning.

Figure 5D shows a similar comparison of SU and MU DDI values for the rotation protocol. Again, mean DDI values for SU activity significantly exceeded those for MU activity in both the vestibular (0.66 vs. 0.58) and visual (0.77 vs. 0.69) conditions (Wilcoxon rank-sum tests, P < 0.001). Despite the smaller sample sizes, MU and SU DDI values were again significantly correlated for both vestibular (r = 0.48; P < 0.0001) and visual (r = 0.41; P < 0.001) conditions. Overall, the extent of clustering appears to be comparable for rotation and translation.
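For readers who want a concrete picture of a peak-to-trough index of this kind, the sketch below implements one form of the DDI used in related MSTd studies: DDI = (Rmax − Rmin) / (Rmax − Rmin + 2√(SSE/(N − M))), where SSE is the sum of squared deviations of single-trial responses from their per-direction means, N is the total number of trials, and M is the number of directions. This formula is an assumption here, since the exact definition is given in methods and is not reproduced in this section; the function name and the toy data are also illustrative.

```python
import numpy as np

def ddi(responses):
    """Direction discrimination index (assumed form, see lead-in).

    responses: array of shape (n_directions, n_trials) of firing rates.
    """
    r = np.asarray(responses, dtype=float)
    n_dirs, n_trials = r.shape
    means = r.mean(axis=1)                        # mean response per direction
    sse = np.sum((r - means[:, None]) ** 2)       # trial-to-trial variability
    noise = np.sqrt(sse / (n_dirs * n_trials - n_dirs))
    modulation = means.max() - means.min()        # peak-to-trough modulation
    return modulation / (modulation + 2.0 * noise)

# Toy data: perfectly reliable tuning vs. no tuning in the mean response.
reliable = ddi([[10.0, 10.0], [0.0, 0.0]])
untuned = ddi([[5.0, 7.0], [7.0, 5.0]])
print(reliable, untuned)
```

Under this form the index ranges from 0 (no modulation of the mean response) to 1 (modulation with no trial-to-trial variability).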

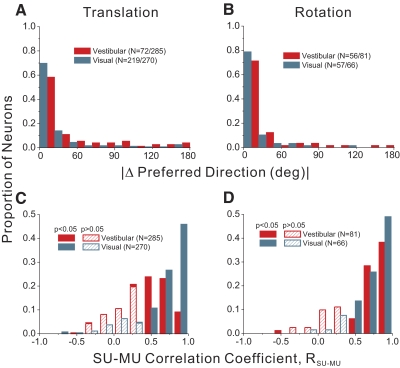

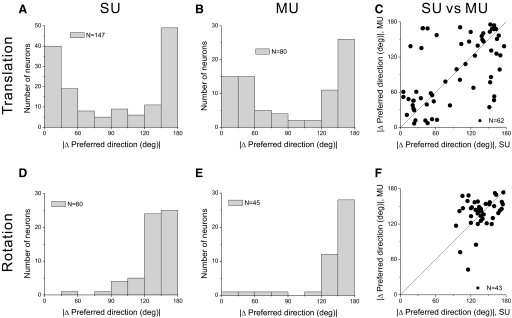

We next consider the matching of 3D direction preferences between SU and MU activity. For the translation protocol, 72 data sets (25%) had significant tuning for both SU and MU responses in the vestibular condition, whereas 219 data sets (81%) showed significant SU and MU tuning in the visual condition. For these data sets, we computed the smallest angle in 3D between the preferred direction vectors for SU and MU activity (|Δ preferred direction|). If SU and MU responses have similar direction preferences, this metric should tend toward zero; if there is no clustering of SU and MU preferences, the distribution of |Δ preferred direction| should be uniform as plotted here. Figure 6A shows the distribution of |Δ preferred direction| for the vestibular (red) and visual (cyan) translation conditions. Both distributions were significantly nonuniform (permutation test, P < 0.001), with peaks close to 0°. For the rotation protocol, analogous data were available from 56 recordings in the vestibular condition and 57 recordings in the visual condition. As shown in Fig. 6B, the distributions of |Δ preferred direction| were again clearly nonuniform (permutation test, P < 0.001), with peaks close to 0°. Thus when both SU and MU activity in MSTd are significantly tuned, the preferred direction vectors tend to be very similar. Occasionally, there are large differences in direction preference between SU and MU responses and these might occur, for example, when the electrode is located near the boundary between two clusters of neurons that have nearly opposite preferences. Such reversals in direction preference have been observed in area MT, for example (Albright et al. 1984; DeAngelis and Newsome 1999; Malonek et al. 1994).
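The |Δ preferred direction| metric can be sketched as the angle between two unit vectors built from azimuth and elevation. The axis convention below (elevation measured from the horizontal plane) and the function names are assumptions made for illustration; the resulting angle does not depend on which horizontal axis is taken as 0° azimuth. The inputs are the preferred directions quoted for the example recording of Fig. 3.

```python
import numpy as np

def unit_vector(azimuth_deg, elevation_deg):
    """Unit vector for a direction given as azimuth and elevation in degrees."""
    az, el = np.radians([azimuth_deg, elevation_deg])
    return np.array([np.cos(el) * np.cos(az),
                     np.cos(el) * np.sin(az),
                     np.sin(el)])

def delta_preferred_direction(dir_a, dir_b):
    """Smallest 3D angle (deg) between two (azimuth, elevation) directions."""
    cos_angle = np.dot(unit_vector(*dir_a), unit_vector(*dir_b))
    return float(np.degrees(np.arccos(np.clip(cos_angle, -1.0, 1.0))))

# Preferred directions (azimuth, elevation) from the Fig. 3 example.
vestibular_delta = delta_preferred_direction((150, -73), (88, -69))
visual_delta = delta_preferred_direction((350, 69), (10, 86))
print(vestibular_delta, visual_delta)
```

Both angles come out under 20°, even though the vestibular azimuths differ by 62°, because azimuth differences span little solid angle at elevations this close to the pole.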

FIG. 6.

Summary of differences in direction tuning between SU and MU responses. In A and B, histograms show the distribution of the difference in 3D preferred directions, |Δ preferred direction|, between corresponding SU and MU responses (|Δ preferred direction| is computed as the smallest angle between the pair of preferred direction vectors in 3D). A: histograms of |Δ preferred direction| between SU and MU for the vestibular translation condition (n = 72/285, red) and the visual translation condition (n = 219/270, cyan). Note that data are shown here only for recordings in which both SU and MU responses showed significant tuning. B: histograms of |Δ preferred direction| for the vestibular rotation (n = 56/81) and visual rotation (n = 57/66) conditions. C and D show distributions of correlation coefficients (RSU–MU) computed by comparing SU and MU tuning profiles. C: distributions of RSU–MU are shown for the vestibular translation (n = 285) and visual translation (n = 270) conditions. Filled bars denote values of RSU–MU that are significantly different from zero. D: distributions of RSU–MU are shown for the vestibular rotation (n = 81) and visual rotation (n = 66) conditions.

To further quantify the overall similarity of SU and MU tuning, we computed correlation coefficients between SU and MU response profiles (e.g., Fig. 2A). Distributions of the correlation coefficient, RSU–MU, are shown in Fig. 6C for the translation conditions. For visual translation, most values of RSU–MU (228/270, 84%) are significantly different from zero (filled cyan bars), with an overall median value of 0.76. For vestibular translation, about half of the correlation coefficients (164/285, 57%) are significantly different from zero (filled red bars), with an overall median value of 0.46. This correlation analysis further supports the finding that clustering of selectivity is stronger for visual translation than vestibular translation. Figure 6D shows analogous data for the rotation conditions. For visual rotation, 58/66 data sets (89%) have RSU–MU values significantly different from zero, with an overall median value of 0.80. For vestibular rotation, the values are similar: 60/81 RSU–MU values are significantly different from zero (74%), with an overall median value of 0.73.

The analyses of Fig. 6 consider the similarity of direction tuning between SU and MU responses separately for the visual and vestibular conditions. We now consider the congruency of visual and vestibular selectivity. Figure 7A shows the distribution of differences in direction preference between visual and vestibular responses for SUs recorded in the translation condition. As reported previously (Fetsch et al. 2007; Gu et al. 2006, 2007), this distribution is clearly bimodal. Roughly half of MSTd neurons have similar visual and vestibular translation preferences (“congruent” cells) and roughly half have widely different preferences (“opposite” cells). A very similar pattern is seen for MU activity from the translation condition, as shown in Fig. 7B, indicating that these two extremes of visual/vestibular congruency are preserved in MU activity. For the subset of recordings with significant translation tuning in both visual and vestibular responses, Fig. 7C compares the congruency of SU and MU tuning. With the exception of a handful of recording sites, the congruency of MU responses generally matches well with the congruency of SU responses (R = 0.57, P < 0.001). Thus both congruent and opposite cells appear to be clustered in area MSTd.

FIG. 7.

Congruency of visual and vestibular tuning in SU and MU responses. A: congruency of SU responses for translation. The distribution of differences in 3D direction preference between visual and vestibular responses (|Δ preferred direction|) is bimodal, indicating roughly equal numbers of “congruent” and “opposite” cells. Data are shown for the subset of SUs with significant direction tuning in both visual and vestibular conditions. B: analogous data are shown for MU activity measured during the translation protocol. C: congruency (|Δ preferred direction|) for MU responses is plotted against that for SU responses. Data are shown for recording sites with significant SU and MU tuning for both visual and vestibular conditions. D: congruency of SU responses for the rotation protocol. E: congruency of MU responses to rotation stimuli. F: comparison of congruency between SU and MU responses obtained during the rotation protocol.

Figure 7, D–F shows the analogous congruency data for the rotation experiment. As reported previously (Takahashi et al. 2007), almost all SUs are opposite cells in response to rotation stimuli (Fig. 7D) and MU responses in area MSTd exhibit a very similar pattern of results (Fig. 7E). The SU and MU measures of congruency again generally agree well (Fig. 7F; R = 0.34, P = 0.024), even though there is relatively little variation in these data across recording sites compared with the translation condition.

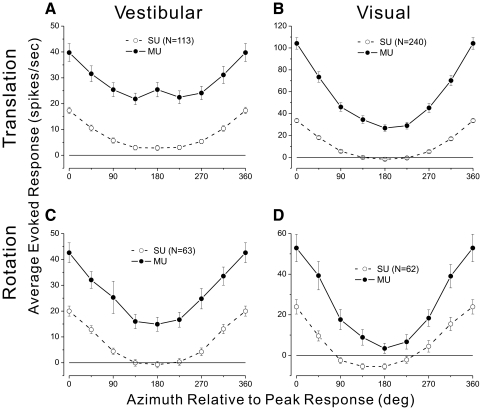

To provide a simple graphical summary of the results quantified in Figs. 5–7, we computed population tuning curves for SU and MU activity. To simplify the presentation, we constructed tuning curves from the subset of stimulus directions that lie in the horizontal plane (azimuth varies with elevation fixed at zero). This yields clear tuning for most neurons (Fetsch et al. 2007; Gu et al. 2006) and allows us to average tuning curves across neurons. Open symbols in Fig. 8A show the average tuning curve for SUs in the vestibular translation condition. For each SU with significant tuning (ANOVA, P < 0.05), the data were shifted such that the maximal response occurs at zero azimuth, spontaneous activity was subtracted, and the resulting curves were averaged across neurons. For comparison, filled symbols in Fig. 8A show the average tuning curve for the corresponding MU responses. In this case, spontaneous activity was again subtracted from each MU curve and the MU data were aligned to the azimuth preferred by each SU. Thus if there was no clustering of tuning in MSTd, the MU population tuning curve should be flat. As seen in Fig. 8A, average MU responses to vestibular translation have tuning consistent with SU responses, although the modulation depth of this MU tuning is modest and the error bars are substantially larger than those of the SU activity. By comparison, in the visual translation condition (Fig. 8B), average MU responses have considerably stronger modulation consistent with the analyses of Fig. 5. Finally, population tuning curves in Fig. 8, C and D show average selectivity for SU and MU responses in the vestibular and visual rotation conditions, respectively. In both rotation conditions, average MU responses show strong tuning that aligns well with the SU responses.

FIG. 8.

Summary tuning curves comparing SU and MU responses to translation and rotation stimuli. For each neuron, an azimuth tuning curve was obtained from the subset of directions lying in the horizontal plane. SU tuning curves (open symbols) show the average response of all SUs with significant tuning in the horizontal plane. The data are aligned to the peak response of each SU and spontaneous activity is subtracted before averaging. MU tuning curves (filled symbols) show the average MU responses (after subtracting spontaneous activity). MU data were aligned to the peak response of each corresponding SU. A: data from the vestibular translation condition. B: data from the visual translation condition. Note that MU tuning is stronger and more reliable compared with vestibular translation. C: average responses from the vestibular rotation condition. D: average responses from the visual rotation condition.
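The alignment and averaging behind these population tuning curves can be sketched as follows. The sketch assumes that each site contributes one horizontal-plane tuning curve sampled at equally spaced azimuths (eight in the toy data) and places the SU peak at the central index rather than at a labeled 0° azimuth; the function name and the toy curves are illustrative, not taken from the original analysis.

```python
import numpy as np

def align_and_average(su_curves, mu_curves, su_spont, mu_spont):
    """Average SU and MU azimuth tuning curves after aligning to the SU peak.

    su_curves, mu_curves: arrays of shape (n_sites, n_azimuths).
    su_spont, mu_spont: spontaneous rates, one value per site.
    """
    su = np.asarray(su_curves, float) - np.asarray(su_spont, float)[:, None]
    mu = np.asarray(mu_curves, float) - np.asarray(mu_spont, float)[:, None]
    n_sites, n_dirs = su.shape
    center = n_dirs // 2
    su_aligned = np.empty_like(su)
    mu_aligned = np.empty_like(mu)
    for i in range(n_sites):
        shift = center - int(np.argmax(su[i]))
        su_aligned[i] = np.roll(su[i], shift)
        mu_aligned[i] = np.roll(mu[i], shift)  # MU is shifted by the SU peak
    return su_aligned.mean(axis=0), mu_aligned.mean(axis=0)

# Toy data: two sites whose MU tuning matches the SU preference.
su_curves = np.array([[1, 5, 1, 0, 0, 0, 0, 0],
                      [0, 0, 0, 0, 0, 1, 5, 1]], dtype=float)
mu_curves = 2.0 * su_curves
su_mean, mu_mean = align_and_average(su_curves, mu_curves, [0, 0], [0, 0])
print(su_mean, mu_mean)
```

Because the MU curves are shifted by the SU preference rather than by their own peak, the averaged MU curve stays peaked only if MU and SU preferences agree across sites. With unrelated preferences, the MU average would flatten.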

Clustering of receptive field properties in MSTd

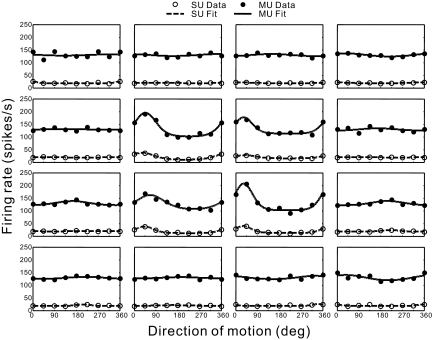

The measurements described earlier strongly suggest that neurons in area MSTd are clustered according to their 3D preferences for translational and rotational movements. Because the optic flow stimuli were large-field (90 × 90°) and the 3D tuning may reflect a complex interaction of visual motion signals across space, we were also interested in whether more basic visual response properties (receptive fields) are clustered in MSTd. Thus for a subset of 70 recordings, we used a reverse-correlation technique (see methods) to quantitatively characterize the directional RF structure of SU and MU responses.

Figure 9A shows the RF map obtained for an example SU. The visual stimulus was a dynamic random-dot display consisting of a 4 × 4 grid of subfields that spanned the 90 × 90° visual display (Fig. 1C). Each subfield contained a random-dot pattern moving coherently in one of eight possible directions (0°: rightward; 90°: upward). Every 100 ms, the direction of motion was chosen randomly for each of the 16 subfields, such that each subregion of the receptive field was probed with all eight directions of motion. The resulting spike train was cross-correlated to the stimulus sequence for each subfield, resulting in a distinct direction–time map for each individual subfield (Fig. 9A). If the portion of the neuron's RF overlying a particular stimulus subfield produces a direction-selective visual response, then that subfield will show structure in the direction–time map. If no directional response is elicited by the stimulus in a particular subfield, the direction–time map will be unstructured. For the example neuron in Fig. 9A, the central four subfields show clear directional structure, with a preference for rightward and slightly upward motion. Note that this MSTd RF is clearly bilateral and includes the fovea. Figure 9B shows the RF map for simultaneously recorded MU activity. The pattern of selectivity for the MU is nearly identical, suggesting that RF properties are locally clustered.

To further quantify the similarity of RF structure between SU and MU responses, we reduced each direction–time map to a single direction tuning curve by taking a cross section through the data at a correlation delay of Tpeak ≈ 80 ms (horizontal lines in Fig. 9). This peak delay (Tpeak) was chosen as the delay that maximized the variance in direction tuning across all subfields of the RF map (estimated separately for SU and MU activities). The resulting direction tuning curves for each subfield are shown in Fig. 10 for the same example recording (MU: filled symbols; SU: open symbols). Solid and dashed curves show the best fits of a wrapped Gaussian function (see methods). Two characteristics were used to quantify each direction tuning curve: 1) the vector sum of normalized responses, which reflects the strength of direction selectivity, and 2) the preferred direction determined by the peak of the Gaussian fit. For the example data set in Fig. 10, the normalized vector sum is significantly greater than zero for both SU and MU responses in the central four subfields (P < 0.05; see methods).

FIG. 10.

Direction tuning curves at the peak response latency (Tpeak = 82 ms) are shown for the same SU and MU data illustrated in Fig. 9. For each location in the stimulus grid, filled (open) circles show the MU (SU) response to 8 different directions of motion, 45° apart. The solid (dashed) curve is the best-fitting wrapped Gaussian function for MU (SU).
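The two reduction steps described above, choosing the peak delay and summarizing direction selectivity, can be sketched as follows. The sketch makes several assumptions: the variance criterion is implemented as the variance across directions summed over subfields, the normalized vector sum is taken as the length of the response-weighted mean direction vector divided by the summed response, and responses are non-negative. The function names and toy arrays are illustrative, and the preferred direction in the original analysis comes from the wrapped Gaussian fit, which is not reproduced here.

```python
import numpy as np

DIRECTIONS_DEG = np.arange(0, 360, 45)  # 8 motion directions, 45 deg apart

def peak_delay_index(maps):
    """Index of the delay with maximal direction-tuning variance.

    maps: array of shape (n_subfields, n_directions, n_delays).
    """
    var_over_dirs = np.var(np.asarray(maps, float), axis=1)  # (subfields, delays)
    return int(np.argmax(var_over_dirs.sum(axis=0)))

def normalized_vector_sum(responses, directions_deg=DIRECTIONS_DEG):
    """Strength of direction selectivity: 0 (untuned) to 1 (one direction only)."""
    r = np.asarray(responses, float)
    theta = np.radians(directions_deg)
    return float(np.abs(np.sum(r * np.exp(1j * theta))) / np.sum(r))

# Toy map: 16 subfields, 8 directions, 5 delays; one subfield tuned at delay 3.
maps = np.zeros((16, 8, 5))
maps[5, :, 3] = [4, 2, 0, 0, 0, 0, 0, 2]
t_index = peak_delay_index(maps)
tuned = normalized_vector_sum(maps[5, :, t_index])
flat = normalized_vector_sum(np.ones(8))
print(t_index, tuned, flat)
```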

Figure 11A summarizes the strength of direction tuning (normalized vector sum) for SU and MU responses obtained from each subfield of all 70 data sets (n = 1,120 total). Filled symbols denote data with significant MU direction tuning (P < 0.05). Most of the data points fall below the diagonal, such that the average vector sum for MU activity (0.24) is significantly lower than the average for SU activity (0.33) (paired t-test, P < 0.05). The vector sum metrics for MU and SU are strongly correlated (r = 0.71; P < 0.001), indicating that subfields with strong directional selectivity in MU activity also have strong SU tuning.

FIG. 11.

Summary of differences in direction tuning between SU and MU responses for each location tested within the receptive fields of a population of neurons. A: the scatterplot shows the relationship between the vector sum of normalized responses for SU and MU activity. Closed (open) circles: locations with (without) significant direction tuning for MU responses (P < 0.05). B: histogram of the differences between SU and MU direction preferences for all RF locations at which both signals showed significant tuning.

To summarize the relationship between direction preferences for SU and MU activity, Fig. 11B shows the distribution of the difference in preferred directions between SU and MU, as determined from the wrapped Gaussian fits. Data are shown for 351 subfields in which both MU and SU responses had significant direction selectivity. This distribution is clearly nonuniform (permutation test, P ≪ 0.001), with a peak close to zero (median value: 21.9°). Notably, 61% of SU/MU pairs have direction preferences within 30° of each other, indicating that local visual direction preferences are strongly clustered in MSTd. There is also a hint of a second peak in the distribution near direction differences of 180°, suggesting that SUs occasionally have direction preferences opposite to the MU preference.

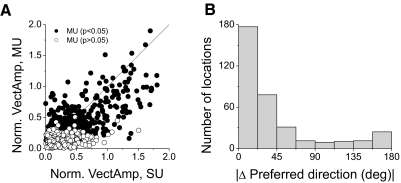

Whereas the preceding analysis focused on the direction tuning of SU/MU activity in each subfield of the mapping grid, the reverse-correlation data can also be used to estimate the overall spatial profile of the receptive field. For this purpose, we plotted the amplitude of the wrapped Gaussian fit as a function of the location of each subfield (e.g., Fig. 12A). Note that this provides a spatial map of the regions of the RF that produce direction-selective responses; nondirectional portions of the receptive field, should they exist, would not be represented. Figure 12B shows the analogous spatial map for the simultaneously recorded MU activity. The SU and MU responses have very similar spatial profiles. To quantify the location and extent of these spatial RFs, the data were fit with a two-dimensional (2D) Gaussian (see methods). For the example SU, the best-fitting Gaussian (Fig. 12C) is centered at (−1.5°, −5.4°) and has dimensions of 38 × 42° (full width at half-maximum [FWHM], horizontal × vertical). For the corresponding MU profile, the center is located at (2.5°, −0.9°) and the dimensions are 41 × 38°.

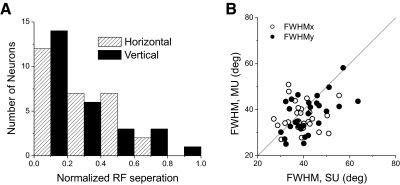

Among the 70 data sets obtained using reverse correlation, spatial RF maps with statistically significant structure (permutation test, P < 0.05; see methods) were obtained for 64% (45/70) of SUs and 66% (46/70) of MUs. Forty-four percent (31/70) had significant spatial structure for both SU and MU responses. Three SU/MU pairs were excluded because of poor fits with the 2D Gaussian function (correlation coefficient, r < 0.8, between measured spatial profile and 2D Gaussian fit). For the 28 data sets that met the above-cited criteria, we quantified the degree of overlap of the SU and MU RFs by taking the distance between the centers of the Gaussian fits and normalizing by the average SD of the Gaussian (see methods). The distribution of this normalized RF separation, along both horizontal and vertical dimensions, is shown in Fig. 13A. If the SU and MU RFs were completely overlapping, these values would all be very close to zero. Mean values are 0.26 ± 0.04 SE for the horizontal separation and 0.27 ± 0.04 SE for vertical. Thus on average the distance between centers of the SU and MU RFs is approximately one fourth of the SD of the Gaussian fit, or about one tenth of the FWHM. This indicates that the spatial profiles of SU and MU responses overlap extensively.

FIG. 13.

Summary of RF overlap between SU and MU responses. A: distribution of the normalized RF separation, which is defined as the distance between the centers of the SU and MU RFs normalized by the average width of the SU and MU RFs. Hatched and filled bars show normalized separations measured along the horizontal and vertical dimensions, respectively. B: comparison of receptive field sizes, FWHMx (open symbols) and FWHMy (filled symbols), between SU and MU responses.
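As a worked example of the normalized RF separation, the sketch below applies the definition (center distance divided by the average SD of the two Gaussian fits) to the example SU and MU fits of Fig. 12, converting FWHM to SD with the standard Gaussian relation FWHM = 2√(2 ln 2)·SD. The function name is illustrative, and treating the first MU dimension as horizontal is an assumption carried over from the SU description.

```python
import math

FWHM_PER_SD = 2.0 * math.sqrt(2.0 * math.log(2.0))  # about 2.355 for a Gaussian

def normalized_separation(center_a, center_b, fwhm_a, fwhm_b):
    """Distance between RF centers in units of the average Gaussian SD."""
    sd_a = fwhm_a / FWHM_PER_SD
    sd_b = fwhm_b / FWHM_PER_SD
    return abs(center_a - center_b) / ((sd_a + sd_b) / 2.0)

# Example SU fit: center (-1.5, -5.4) deg, FWHM 38 x 42 deg.
# Example MU fit: center (2.5, -0.9) deg, FWHM 41 x 38 deg.
horizontal = normalized_separation(-1.5, 2.5, 38.0, 41.0)
vertical = normalized_separation(-5.4, -0.9, 42.0, 38.0)

# A separation of 0.26 SD expressed as a fraction of the FWHM.
fraction_of_fwhm = 0.26 / FWHM_PER_SD
print(horizontal, vertical, fraction_of_fwhm)
```

For this example pair, both normalized separations fall near one fourth of an SD, close to the population means, and 0.26 SD corresponds to roughly one tenth of the FWHM.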

If the RFs of MSTd neurons extend past the boundaries of the 90 × 90° display screen, SU and MU RFs might appear to overlap more extensively than they actually do (thus leading to artificially low values of normalized RF separation). Based on 2D Gaussian fits, we found that 37/70 SUs and 38/70 MUs had RFs that were well contained within the display screen. We computed normalized RF separations for SU/MU pairs with RFs contained within the display screen (n = 25) and compared these to SU/MU pairs in which at least one RF extended off the display screen (n = 8). We found no significant differences between these two groups (t-test, P = 0.29) for horizontal separation. For vertical separation, SU/MU pairs with RFs that extended off the display screen had vertical separations that were significantly greater than SU/MU pairs with RFs contained within the display (P = 0.02). Thus our measures of RF separation were not artificially reduced by RFs extending off the display screen.

Figure 13B compares the size of corresponding SU and MU RFs (FWHM along horizontal and vertical dimensions). There was no significant difference between SU and MU responses in terms of these size metrics (paired t-test, P > 0.05, n = 28). Together with the separation data of Fig. 13A, these results indicate that the spatial RFs of SU and MU responses overlap heavily, although it should be noted that our measurements may miss regions of the RFs that are not directionally selective. The average RF size in our population was 39 × 42° for SUs and 36 × 38° for MUs (FWHM dimensions). These values are largely in agreement with previous reports (Desimone and Ungerleider 1986; Komatsu and Wurtz 1988; Van Essen et al. 1981).

DISCUSSION

By comparing the responses of isolated single units with the undifferentiated activity of neighboring neurons, we have examined the clustering of response properties in area MSTd. Our results confirm previous findings of clustered optic flow selectivity in area MSTd (Britten 1998; Duffy and Wurtz 1991; Lagae et al. 1994; Saito et al. 1986) and also show that basic visual receptive field properties are clustered. Our main new finding is that the vestibular sensitivity of MSTd neurons to both translational and rotational motion is also clustered in MSTd. In general, the selectivity of MU activity for vestibular translation was weak compared with visual translation and rotation, as discussed further in the following text. However, when MU signals showed clear selectivity for direction of translation, the tuning was generally consistent with that of simultaneously recorded single units. These findings suggest that there is a topographic organization of vestibular signals in area MSTd.

It should be noted, however, that our data limited us to a comparison of SU and MU activity obtained from the same recording site. Thus although our results establish clustering of vestibular selectivity in MSTd, we cannot conclude that MSTd contains a columnar organization of vestibular signals. Although clustering is usually associated with columns (Mountcastle 1957, 1997), it may also be possible to have clustering without columns (Liu and Newsome 2003). We also cannot directly infer anything about the topographic organization of vestibular signals across the surface of the cortex because we did not record neural activity at multiple locations along electrode penetrations through MSTd (see Britten 1998). Our results therefore leave open a number of aspects of the organization of vestibular signals in MSTd, but we do clearly establish clustering of vestibular signals and compare this to clustering of optic flow responses.

The remainder of the discussion will consider 1) the strength of visual clustering in our data with respect to previous findings and 2) the difference in strength of clustering between visual and vestibular responses, particularly for translational motion.

Clustering of optic flow selectivity in area MSTd

In his quantitative study of clustering of optic flow properties in MSTd, Britten (1998) measured MU or SU tuning at intervals of 50–100 μm along electrode penetrations through MSTd. He correlated the optic flow tuning observed at a particular recording site with the tuning observed at other sites along the penetration and this revealed a modest, but statistically significant, tendency for optic flow tuning to be clustered. For recording sites separated by <500 μm the optic flow tuning curves were significantly correlated for about 30% of cases. Beyond 500 μm, significant correlations between tuning curves were rare.

The heading data of Britten (1998) (his Fig. 3A) can best be compared with our visual translation condition. In our data, we found significant MU tuning for >80% of recording sites and the heading preferences of SU and MU responses were closely matched in the vast majority of cases (Fig. 6A). Thus it may appear that we have found stronger evidence for clustering of optic flow selectivity than did Britten (1998). However, two methodological differences may account for most of this apparent difference. First, all of our neurons were tested with a range of headings that sampled all possible directions in 3D (Fig. 1A), whereas Britten measured heading tuning curves over a much more restricted range of headings (around straight ahead) in the horizontal plane (typically −40 to +40°). For recording sites tested over this restricted range, a weak correlation between tuning curves may result from either site having weak selectivity over the range of headings tested. Second, intersite distance may be a factor. Britten always compared heading tuning at sites separated by some distance, whereas we compared SU and MU activity from the same location. Given that correlations between tuning curves fall off with distance, as shown by Britten, it is not surprising that the strongest similarities in tuning would be observed among neurons close to the tip of the electrode. Given these methodological differences, our results do not appear to be inconsistent with Britten's.

Differences in clustering between visual and vestibular responses

When MU responses show significant tuning, there is generally good agreement between SU and MU preferences for both visual and vestibular stimuli (Fig. 6). This appears to suggest that visual and vestibular responses are clustered to a similar degree. For vestibular translation, on the other hand, only 32% of MU recordings show significant heading tuning, whereas 82% of MU recordings show significant tuning for the visual translation condition. If SU and MU preferences for vestibular translation are generally well matched (Fig. 6A), then why do MU responses seldom show significant tuning? One possible explanation is simply that SU responses to vestibular translation are less selective than SU responses to visual translation, as can be seen in Fig. 5C. Indeed, only 56% of SUs show significant tuning for vestibular translation, whereas 97% of SUs are significantly tuned for visual translation. However, this cannot be the whole explanation: even when an SU is significantly tuned, the MU activity is also tuned in only 45% of cases for vestibular translation versus 84% for visual translation.

Another possibility is that weaker selectivity in MU responses to vestibular translation may be affected by the visual–vestibular congruency of tuning for SUs. As shown previously and in Fig. 7, A and B, approximately half of MSTd neurons have congruent preferences for visual and vestibular translation, whereas the other half have opposite preferences (Gu et al. 2006, 2007). This creates an interesting issue for functional organization of these neurons because opposite cells could be clustered with congruent cells according to either their visual preference or their vestibular preference (this issue is much less relevant for rotation because there are very few congruent cells for rotation; Takahashi et al. 2007). If, for example, MSTd neurons were predominantly clustered according to their visual preference for translation, then the vestibular preferences of nearby congruent and opposite neurons will not be consistent. This would contribute to weak MU tuning in the vestibular translation condition while preserving strong MU tuning in the visual translation condition. At present, our data do not allow us to resolve this issue. Additional experiments, involving recording from multiple isolated SUs, may be necessary to fully understand how congruent and opposite neurons are organized within MSTd. This issue may have important consequences for understanding how the responses of congruent and opposite neurons are decoded during perceptual tasks.

GRANTS

This work was supported by National Eye Institute Grants EY-017866 to D. E. Angelaki and EY-016178 and an EJLB Foundation (Canada) grant to G. C. DeAngelis.

ACKNOWLEDGMENTS

We thank A. Turner and E. White for excellent monkey care and training.

The costs of publication of this article were defrayed in part by the payment of page charges. The article must therefore be hereby marked “advertisement” in accordance with 18 U.S.C. Section 1734 solely to indicate this fact.

REFERENCES

- Albright TD, Desimone R, Gross CG. Columnar organization of directionally selective cells in visual area MT of the macaque. J Neurophysiol 51: 16–31, 1984.

- Borghuis BG, Perge JA, Vajda I, van Wezel RJ, van de Grind WA, Lankheet MJ. The motion reverse correlation (MRC) method: a linear systems approach in the motion domain. J Neurosci Methods 123: 153–166, 2003.

- Born RT, Bradley DC. Structure and function of visual area MT. Annu Rev Neurosci 28: 157–189, 2005.

- Born RT, Tootell RB. Segregation of global and local motion processing in primate middle temporal visual area. Nature 357: 497–499, 1992.

- Bremmer F, Kubischik M, Pekel M, Lappe M, Hoffmann KP. Linear vestibular self-motion signals in monkey medial superior temporal area. Ann NY Acad Sci 871: 272–281, 1999.

- Britten KH. Clustering of response selectivity in the medial superior temporal area of extrastriate cortex in the macaque monkey. Vis Neurosci 15: 553–558, 1998.

- Churchland AK, Lisberger SG. Relationship between extraretinal component of firing rate and eye speed in area MST of macaque monkeys. J Neurophysiol 94: 2416–2426, 2005.

- DeAngelis GC, Newsome WT. Organization of disparity-selective neurons in macaque area MT. J Neurosci 19: 1398–1415, 1999.

- DeAngelis GC, Ohzawa I, Freeman RD. Spatiotemporal organization of simple-cell receptive fields in the cat's striate cortex. I. General characteristics and postnatal development. J Neurophysiol 69: 1091–1117, 1993.

- DeAngelis GC, Uka T. Coding of horizontal disparity and velocity by MT neurons in the alert macaque. J Neurophysiol 89: 1094–1111, 2003.

- Desimone R, Ungerleider LG. Multiple visual areas in the caudal superior temporal sulcus of the macaque. J Comp Neurol 248: 164–189, 1986.

- Duffy CJ. MST neurons respond to optic flow and translational movement. J Neurophysiol 80: 1816–1827, 1998.

- Duffy CJ, Wurtz RH. Sensitivity of MST neurons to optic flow stimuli. I. A continuum of response selectivity to large-field stimuli. J Neurophysiol 65: 1329–1345, 1991.

- Duffy CJ, Wurtz RH. Response of monkey MST neurons to optic flow stimuli with shifted centers of motion. J Neurosci 15: 5192–5208, 1995.

- Eckhorn R, Krause F, Nelson JI. The RF-cinematogram. A cross-correlation technique for mapping several visual receptive fields at once. Biol Cybern 69: 37–55, 1993.

- Fetsch CR, Wang S, Gu Y, DeAngelis GC, Angelaki DE. Spatial reference frames of visual, vestibular, and multimodal heading signals in the dorsal subdivision of the medial superior temporal area. J Neurosci 27: 700–712, 2007.

- Froehler MT, Duffy CJ. Cortical neurons encoding path and place: where you go is where you are. Science 295: 2462–2465, 2002.

- Gu Y, DeAngelis GC, Angelaki DE. A functional link between area MSTd and heading perception based on vestibular signals. Nat Neurosci 10: 1038–1047, 2007.

- Gu Y, Watkins PV, Angelaki DE, DeAngelis GC. Visual and nonvisual contributions to three-dimensional heading selectivity in the medial superior temporal area. J Neurosci 26: 73–85, 2006.

- Horton JC, Adams DL. The cortical column: a structure without a function. Philos Trans R Soc Lond B Biol Sci 360: 837–862, 2005.

- Jones JP, Palmer LA. The two-dimensional spatial structure of simple receptive fields in cat striate cortex. J Neurophysiol 58: 1187–1211, 1987.

- Komatsu H, Wurtz RH. Relation of cortical areas MT and MST to pursuit eye movements. I. Localization and visual properties of neurons. J Neurophysiol 60: 580–603, 1988.

- Lagae L, Maes H, Raiguel S, Xiao DK, Orban GA. Responses of macaque STS neurons to optic flow components: a comparison of areas MT and MST. J Neurophysiol 71: 1597–1626, 1994.

- Liu J, Newsome WT. Functional organization of speed tuned neurons in visual area MT. J Neurophysiol 89: 246–256, 2003.

- Malonek D, Tootell RB, Grinvald A. Optical imaging reveals the functional architecture of neurons processing shape and motion in owl monkey area MT. Proc Biol Sci 258: 109–119, 1994.

- Mountcastle V. Modality and topographic properties of single neurons of cat's somatic sensory area. J Neurophysiol 20: 408–438, 1957.

- Mountcastle VB. The columnar organization of the neocortex. Brain 120: 701–722, 1997.

- Page WK, Duffy CJ. Heading representation in MST: sensory interactions and population encoding. J Neurophysiol 89: 1994–2013, 2003.

- Prince SJ, Pointon AD, Cumming BG, Parker AJ. Quantitative analysis of the responses of V1 neurons to horizontal disparity in dynamic random-dot stereograms. J Neurophysiol 87: 191–208, 2002.

- Ringach DL, Sapiro G, Shapley R. A subspace reverse-correlation technique for the study of visual neurons. Vision Res 37: 2455–2464, 1997.

- Saito H, Yukie M, Tanaka K, Hikosaka K, Fukada Y, Iwai E. Integration of direction signals of image motion in the superior temporal sulcus of the macaque monkey. J Neurosci 6: 145–157, 1986.

- Snyder JP. Map Projections: A Working Manual. Washington, DC: U.S. Government Printing Office, 1987, p. 182–190.

- Takahashi K, Gu Y, May PJ, Newlands SD, DeAngelis GC, Angelaki DE. Multimodal coding of three-dimensional rotation and translation in area MSTd: comparison of visual and vestibular selectivity. J Neurosci 27: 9742–9756, 2007.

- Van Essen DC, Maunsell JH, Bixby JL. The middle temporal visual area in the macaque: myeloarchitecture, connections, functional properties and topographic organization. J Comp Neurol 199: 293–326, 1981.

- Wennekers T. Orientation tuning properties of simple cells in area V1 derived from an approximate analysis of nonlinear neural field models. Neural Comput 13: 1721–1747, 2001.

- Yang T, Maunsell JH. The effect of perceptual learning on neuronal responses in monkey visual area V4. J Neurosci 24: 1617–1626, 2004.

- Zeki SM. Functional organization of a visual area in the posterior bank of the superior temporal sulcus of the rhesus monkey. J Physiol 236: 549–573, 1974.