Abstract

Objective

To classify events of actual or potential harm to primary care patients using a multilevel taxonomy of cognitive and system factors.

Methods

Observational study of patient safety events obtained via a confidential but not anonymous reporting system. Reports were followed up with interviews where necessary. Events were analysed for their causes and contributing factors using causal trees and were classified using the taxonomy. Five general medical practices in the West Midlands were selected to represent a range of sizes and types of patient population. All practice staff were invited to report patient safety events. Main outcome measures were frequencies of clinical types of events reported, cognitive types of error, types of detection and contributing factors; and relationship between types of error, practice size, patient consequences and detection.

Results

78 reports were relevant to patient safety and analysable. They included 21 (27%) adverse events and 50 (64%) near misses. 16.7% (13/71) had serious patient consequences, including one death. 75.7% (59/78) had the potential for serious patient harm. Most reports referred to administrative errors (25.6%, 20/78). 60% (47/78) of the reports contained sufficient information to characterise cognition: “situation assessment and response selection” was involved in 45% (21/47) of these reports and was often linked to serious potential consequences. The most frequent contributing factor was work organisation, identified in 71 events. This included excessive task demands (47%, 37/71) and fragmentation (28%, 22/71).

Conclusions

Even though most reported events were near misses, events with serious patient consequences were also reported. Failures in situation assessment and response selection, a cognitive activity that occurs in both clinical and administrative tasks, were related to serious potential harm.

Since 2001, interest in patient safety in primary care has been increasing.1,2,3,4,5,6,7,8,9,10 In the late 1990s, research in Australia challenged the prevailing assumption of a safe, error‐tolerant environment, quite different from that of the operating room11 and intensive care.12 Today, UK general practice is changing in ways that may increase the importance of patient safety: patients are discharged from hospital earlier than before; general practitioners (GPs) increasingly prescribe and monitor potentially dangerous drugs; and there are time pressures on consultations,1 increasing fragmentation of services and decreasing continuity of care.13,14

Reporting of patient safety events has become an important data collection tool for research and quality improvement purposes in healthcare, both in the UK15 and abroad.4,16,17 Reporting is usually anonymous to achieve a large number of reports. The disadvantage of anonymous reporting is loss of information, especially information about causes. Furthermore, there is a lack of taxonomies that can be used to classify events and their causes and to maintain the links between them. To fulfil this purpose, taxonomies should be based on a theory of human performance, have mutually exclusive categories and identify the influences of the system on the individual.18,19

A small‐scale, in‐depth study of identifiable but confidential reports was undertaken with the aim of building a taxonomy of patient safety in general practice. A voluntary and confidential event reporting system was introduced to five general medical practices in the West Midlands over 16 months. Practices were asked to report events during the process of care that led to an unintended increase in the risk of harm to patients or to a reduction in the probability of effective care, when compared with generally accepted practice. They were encouraged to report both adverse events and near misses. Reporting was open to all practice staff, clinical and non‐clinical. Reports were made on forms designed for the study and sent directly to the investigator in a stamped, addressed envelope. Two‐thirds of the reports were followed up with interviews to obtain further information and/or check the causal analysis. The taxonomy, methods for data collection and causal analysis, and rules for event classification are described in detail elsewhere.20 The structure and rationale of the taxonomy are briefly described below. This paper focuses on the results from the analysis and classification of the patient safety events collected.

The taxonomy

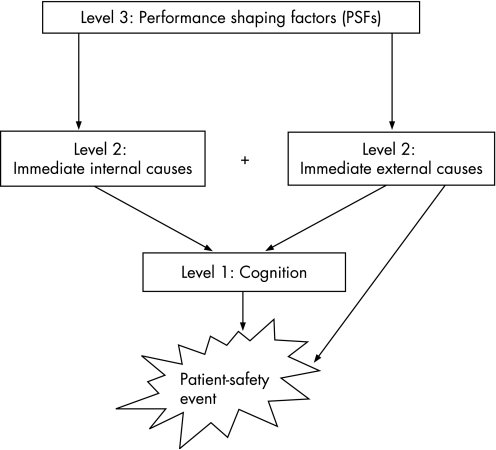

The taxonomy aims to classify either the action or the error that initiated the chain of events. This is carried out to maintain the links between the environment (where the root causes usually lie) and humans, whose actions are shaped by the environment.21 To this end, the taxonomy has three mandatory levels of classification that reflect the line of causation (fig 1), as advocated by current models of accident causation in complex systems: errors are influenced by local workplace conditions, which are in turn determined by organisational factors.22,23,24 A multilevel classification can overcome the problem of what to classify: the task that failed (eg, prescribing, diagnosis, referral), the type of error (eg, omission, repetition, substitution) or the cause (eg, noise, communication, equipment design). Classifying the multiple aspects of an event at different but related levels helps to avoid overlapping categories and maintain the links between levels. This is widely accepted in industry and reflected in the structure of safety taxonomies19,25 and is now being acknowledged in healthcare.26 Maintaining the link between human cognition and the environment that influences it is especially important for the design of countermeasures and system improvement, since errors of different cognitive origin are likely to require different types of management. For example, prescribing incidents may superficially seem similar (wrong drug prescribed) but can be caused by various environmental factors (interface design, workload, rare disease, similar patient or drug names) that affect cognition in different ways (confusion, lack of knowledge, lack of attention). Looking for solutions to reduce these types of incident requires consideration of how they occurred.

Figure 1 The taxonomy structure, showing influences between the three levels of classification. Adapted from Kostopoulou.20

To characterise the cognition of the individuals involved, the taxonomy uses a theoretical model, the “information processing model” of human cognition.27 At level 1, the cognition level, the error/action is classified according to (a) the cognitive domain involved (perception, memory, situation assessment, response execution) and (b) the error mechanism, that is, the psychological mechanism through which the error occurred. At level 2, the immediate causes of the event are classified as internal to the individual (affective, motivational, physiological or cognitive states) or external, arising in the environment. At level 3, the likely contributions to the event (performance‐shaping factors, PSFs) are categorised into work organisation factors and technical factors, each with subcategories. The taxonomy is designed to ensure that analysis of patient safety events includes but also moves away from individual cognition (level 1) to uncover conditions that shaped performance and led to the event (levels 2 and 3; fig 1). The full taxonomy is presented as supplementary material, available at http://qshc.bmj.com/supplemental.
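The three levels described above could be represented as a simple record structure. The following is a minimal sketch only: the class and field names are hypothetical, the category values are taken from the text and table 3, and the example event is invented for illustration rather than drawn from the study data.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import List, Optional


class CognitiveDomain(Enum):
    """Level 1 (a): cognitive domains named in the text."""
    PERCEPTION = "perception"
    MEMORY = "memory"
    SITUATION_ASSESSMENT = "situation assessment and response selection"
    RESPONSE_EXECUTION = "response execution"


class CauseLocus(Enum):
    """Level 2: immediate causes are internal or external to the individual."""
    INTERNAL = "internal"
    EXTERNAL = "external"


class PSFGroup(Enum):
    """Level 3: the two groups of performance-shaping factors."""
    WORK_ORGANISATION = "work organisation"
    TECHNICAL = "technical"


@dataclass
class ImmediateCause:
    locus: CauseLocus
    description: str


@dataclass
class PSF:
    group: PSFGroup
    description: str


@dataclass
class ClassifiedEvent:
    """One event, classified at up to three linked levels (hypothetical layout)."""
    summary: str
    domain: Optional[CognitiveDomain] = None      # level 1 (a)
    error_mechanism: Optional[str] = None         # level 1 (b)
    immediate_causes: List[ImmediateCause] = field(default_factory=list)  # level 2
    psfs: List[PSF] = field(default_factory=list)                         # level 3

    def levels_classified(self) -> List[int]:
        """Return the levels for which at least one classification exists."""
        levels = []
        if self.domain is not None:
            levels.append(1)
        if self.immediate_causes:
            levels.append(2)
        if self.psfs:
            levels.append(3)
        return levels


# Invented example, NOT a report from the study.
event = ClassifiedEvent(
    summary="Wrong drug selected from an on-screen list",
    domain=CognitiveDomain.PERCEPTION,
    error_mechanism="expectancy/selective attention",
    immediate_causes=[ImmediateCause(CauseLocus.EXTERNAL, "cue similarity/proximity")],
    psfs=[PSF(PSFGroup.TECHNICAL, "information salience or presentation")],
)
print(event.levels_classified())
```

Keeping all three levels on one record is what preserves the link between the individual's cognition and the conditions that shaped it. An event with no error can still carry level 2 and level 3 entries, with `domain` left empty.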

Analyses

The reported events were analysed using causal trees20 and classified using the taxonomy. An example of the analysis and classification of an event is given as supplementary material, available at http://qshc.bmj.com/supplemental.

Frequencies for types of events and contributing factors are presented in the Results section. Two‐tailed χ2 tests and Fisher's exact tests were used to test for statistical relationships between categories of the classification. The exact test was employed when expected frequencies were <5. When a test was significant, the standardised residuals (SRs) were inspected to determine which cells departed most from the null hypothesis (|SR| > 2, or |SR| > 3 for larger tables).28
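The procedure described above can be sketched for a generic contingency table. This sketch assumes that the standardised residual is the Pearson residual, (observed − expected)/√expected, which the text does not state explicitly. The function name and the example counts are hypothetical, and the code assumes no row or column total is zero.

```python
from math import sqrt


def chi_square_with_residuals(table):
    """Pearson chi-square, expected counts and standardised residuals
    for an r x c table of observed counts (list of rows)."""
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    n = sum(row_totals)

    # Expected count under independence: row total * column total / n
    expected = [[r * c / n for c in col_totals] for r in row_totals]

    # Assumed definition of the standardised residual: (O - E) / sqrt(E)
    residuals = [
        [(o - e) / sqrt(e) for o, e in zip(obs_row, exp_row)]
        for obs_row, exp_row in zip(table, expected)
    ]

    # The chi-square statistic is the sum of squared residuals
    statistic = sum(res ** 2 for row in residuals for res in row)
    df = (len(row_totals) - 1) * (len(col_totals) - 1)

    # Rule from the text: use an exact test when expected frequencies are < 5
    needs_exact_test = any(e < 5 for row in expected for e in row)
    return statistic, df, residuals, needs_exact_test


# Hypothetical counts, NOT data from the study.
observed = [[20, 10],
            [10, 20]]
statistic, df, residuals, needs_exact_test = chi_square_with_residuals(observed)
print(round(statistic, 2), df, needs_exact_test)
```

When `needs_exact_test` is true, the statistic from this function should not be relied on and an exact test would be run instead.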

Results

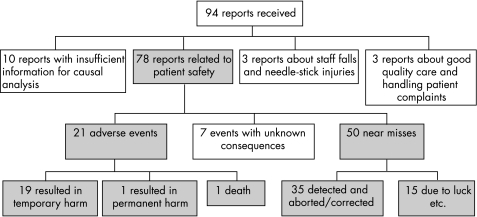

Five practices, two small, two large and one medium sized, took part and submitted 94 reports over 6–16 months (table 1). Altogether 78 reports were related to patient safety and were analysable (fig 2). They included 21 (27%) adverse events and 50 (64%) near misses. The outcome of seven events was unknown at the time of the reporting.

Table 1 Practice demographics and reporting.

| Practice (list size, patients) | Patient population | Study participation (months) | Reports related to patient safety |
|---|---|---|---|
| Small1 (2000) | Suburban, white, elderly | 11 | 14 |
| Small2 (2200) | Inner city, ethnically diverse | 16 | 14 |
| Medium (5800) | Inner city, white | 11 | 15 |
| Large1 (10 200) | Inner city, ethnically diverse | 16 | 30 |
| Large2 (10 602) | Inner city, ethnically diverse | 6 | 5 |

Figure 2 Number and type of reports submitted.

Although 64% (50/71) of the reported events had no consequence for patient health, 16.7% (13/71) had fairly or very serious patient consequences for physical health, including one death from delayed diagnosis of a brain tumour. Moreover, 75.7% of the reported events had the potential for fairly or very serious patient harm (59/78).

GPs submitted 54% of the reports (42/78), administrative staff 26% (20/78) and nurses 19% (15/78). Most adverse events were reported by GPs (14/21), but the study found no relationship between type of event and reporter (p = 0.06).

Table 2 presents a categorisation of the events according to the clinical area of relevance. Even though events have been counted only once, the categorisation is not mutually exclusive. For example, lack of information/communication failures and administrative errors could cause a number of other types of events (delayed referrals, wrong judgment of urgency, prescribing errors and so on). This is not a satisfactory approach, as it lacks a conceptual underpinning and can give a biased picture about the clustering of events. It is presented to enable comparisons with other studies that categorised events in similar ways.

Table 2 Clinical categorisation of the reported events.

| Actual or potential consequence | Immediate cause | Reports, n (%) |
|---|---|---|
| Appropriate care obstructed or delayed/inappropriate care provided | Administrative errors (mainly booking appointments and filing) | 20 (25.6%) |
| | Prescribing or medication review | 11 (14%) |
| | Delays or inappropriate care in hospital | 10 (13%) |
| | Lack of information, communication failures | 7 (9%) |
| | Dealing with test results or hospital correspondence | 5 (6.4%) |
| | Referrals (delayed/forgotten) | 5 (6.4%) |
| | Vaccination/drug administration | 4 (5%) |
| | Judging urgency of patient's condition | 2 (2.6%) |
| | External factors/equipment failures | 3 (4%) |
| | Failing to home visit | 2 (2.6%) |
| | Dispensing errors | 2 (2.6%) |
| Unknown | | 1 |
| No apparent potential for harm to patient | | 3 (4%) |
| Other | | 3 (4%) |
| Total | | 78 |

In eight events, there was no error (fig 3). In 23 cases, practice staff had not initiated the event—they were simply reporting it. This “second‐hand” reporting made it impossible to collect further information for ethical reasons, as the investigator had no access to patients or non‐practice healthcare staff. The rest of the patient safety reports (47) contained information about the “active failures” that led to the events. Further information was obtained at the follow‐up interviews.

Figure 3 Information sufficiency for categorisation purposes.

Cognitive classification of these 47 reports was therefore possible, mostly at level 1. There were 21 (45%) errors of situation assessment, 11 (23%) response execution errors, 8 (17%) memory errors and 7 (15%) perception errors (χ2 = 10.45, df = 3, p<0.05). The error mechanism was identifiable in 39 of the 47 reports (table 3). The study found no significant difference between categories of error mechanism. Immediate internal causes, level 2, proved difficult to identify in most cases owing to insufficient information reported or subsequently recalled and an inability to obtain this information for second‐hand reports. No statistical analyses were performed at this level of classification due to small numbers, and only frequencies are reported (table 3).
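The χ2 value reported for the cognitive domains can be reproduced from the counts above if the null hypothesis is taken to be equal frequencies across the four domains. That null hypothesis is an assumption here, as the text does not state it. The sketch below applies the same calculation to the error mechanism counts from table 3; the function name is hypothetical.

```python
def goodness_of_fit(counts):
    """Chi-square goodness of fit against equal expected frequencies.

    Returns the statistic, the degrees of freedom and the standardised
    residual, (observed - expected) / sqrt(expected), for each category.
    """
    n = sum(counts.values())
    expected = n / len(counts)
    residuals = {
        name: (observed - expected) / expected ** 0.5
        for name, observed in counts.items()
    }
    statistic = sum(r * r for r in residuals.values())
    return statistic, len(counts) - 1, residuals


# Counts as reported in the text and in table 3
cognitive_domain = {
    "situation assessment and response selection": 21,
    "response execution": 11,
    "memory": 8,
    "perception": 7,
}
error_mechanism = {
    "expectancy/selective attention": 7,
    "loss of attention": 9,
    "interference/confusion": 9,
    "false assumption/expectation": 10,
    "cognitive bias": 4,
}

domain_stat, domain_df, domain_res = goodness_of_fit(cognitive_domain)
mech_stat, mech_df, mech_res = goodness_of_fit(error_mechanism)

print(round(domain_stat, 2), domain_df)  # 10.45 3
print(round(mech_stat, 2), mech_df)      # 2.92 4
```

With 3 degrees of freedom the 5% critical value is 7.815, so 10.45 is significant at p<0.05. Under the same assumption, the largest standardised residual belongs to situation assessment and response selection (about 2.7). With 4 degrees of freedom the critical value is 9.488, so 2.92 for the error mechanisms is not significant, in line with the result stated above.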

Table 3 Taxonomy levels 1 and 2—cognition and immediate causes of errors/actions.

| Level | Classification | Category | Reports (n) |
|---|---|---|---|
| Level 1 | (I) Cognitive domain | Perception | 7 |
| | | Situation assessment and response selection | 21 |
| | | Memory | 8 |
| | | Response execution | 11 |
| | | Total | 47 |
| | (II) Error mechanism | Expectancy/selective attention | 7 |
| | | Loss of attention | 9 |
| | | Interference/confusion | 9 |
| | | False assumption/expectation | 10 |
| | | Cognitive bias | 4 |
| | | Total | 39 |
| Level 2 | (I) Immediate internal causes | Alertness, fatigue | 0 |
| | | Cursory/hurried work approach | 6 |
| | | Lack of knowledge | 3 |
| | | Inflexible application of a procedure | 2 |
| | | Stress, preoccupation | 0 |
| | | Total | 11 |
| | (II) Immediate external causes | Lack of information/incorrect, unclear, illegible information | 11 |
| | | Cue similarity/proximity, pattern similarity | 12 |
| | | Task interruption | 4 |
| | | Out‐of‐date (drugs/vaccines), out‐of‐order (equipment) | 3 |
| | | Total | 30 |

PSFs, level 3, were identifiable in most of the 78 reports, with >1 PSF being identifiable in many reports. Categorisation of PSF was not exclusive (both organisational and technical factors were often identified in relation to the same event), hence only frequencies are reported. Work organisation factors contributed to 71 events and technical factors to 30 events. The most frequent work organisation factors were excessive task demands (47%, 37/71), followed by fragmentation (28%, 22/71) and communication (15%, 12/71). Excessive task demands related mostly to time pressures or workload, unfamiliarity with tasks or equipment, and unclear policies. The technical factors most frequently identified were issues of information salience or presentation (15%, 12/30), followed by information availability or delays (12%, 9/30) and absence of retrieval cues or cues to action (12%, 9/30).

There was a significant relationship between cognitive domain and type of event (adverse event or near miss; χ2 = 9.4, df = 3, p<0.05) and between error mechanism and type of event (χ2 = 11.31, df = 4, p<0.01). “Situation assessment and response selection” was involved in most adverse events where the cognitive domain could be identified (8/10), whereas memory failures and response execution failures led only to near misses. The cognitive domain of situation assessment and response selection refers to a broad category of decision making that includes clinical diagnosis and management, as well as administrative decisions. Reason's definition of “mistakes”, as judgment or inference failures involved in goal or strategy selection, is another way of describing errors of situation assessment and response selection. Among the error mechanisms, loss of attention and interference/confusion led only to near misses. However, the SRs were lower than |2|, which suggests that relationships between cognition and event type need to be interpreted with caution.

Most events had no consequences or no serious consequences for the patient. Situation assessment errors were frequently associated with very serious potential consequences (13/18; see Appendix for examples). However, the study failed to detect a significant relationship (p = 0.1).

The study did not find a relationship between practice size and event type (p = 0.31). There was, however, a significant relationship between practice size and cognitive domain (χ2 = 20.72, df = 6, p<0.001), with large practices reporting more events related to situation assessment (16/21).

The most frequent type of detection was incidental (ie, chance detection; 40%, 31/78), followed by outcome‐based detection (23%, 18/78; ie, detection of consequences, eg, worsening symptoms, delay in receiving an appointment). Staff and patients detected only 11.5% (9/78) each of the reported events as a result of active monitoring. Of the reported events, 9% (7/78) were detected through a “limiting function”, that is, some aspect of the environment prevented further action. Only four events were detected by the person who made the error through spontaneously remembering or engaging attention (“action‐based” detection).20,30

The relationship between cognitive domain and PSF was examined, but the numbers were too small for statistical analyses, hence only frequencies are reported. Fragmentation issues contributed mainly to situation assessment (8/15) and memory errors (5/15), task demands contributed to errors in all cognitive domains but mainly situation assessment (9/23), whereas communication problems contributed mainly to situation assessment (5/7). Information that was not sufficiently salient or well‐presented led to perceptual errors (5/10), absence of cues and reminders to memory errors (5/7) and information availability or delays to errors of situation assessment (4/5).

Discussion

The study identified “situation assessment and response selection” as the cognitive domain most frequently involved in patient safety events. It was also linked more frequently than other cognitive domains to very serious potential harm. This is consistent with Wiegmann and Shappell's31 observations that judgment errors were associated more with major aviation accidents than with minor accidents, whereas response execution errors were associated more with minor accidents. Situation assessment was influenced mainly by high task demands, fragmentation and unavailability or non‐salience of information. High task demands included (1) workload and time pressures; (2) unfamiliarity with tasks, equipment or procedures; and (3) unclear/inappropriate policies leading to disagreement and uncertainty. Unclear policies cover situations where, for example, a patient receives initial and/or regular treatment in hospital but neither the hospital nor the practice assumes responsibility for continuing or monitoring the treatment. As a result, patients receiving potentially dangerous medication were not monitored. Unsuitable policies can restrict GPs' access to certain investigations, for example, MRI scans in the case of two patients with delayed diagnosis of a brain tumour.

Fragmentation included cases where different members of staff carried out different steps in a single task, such as record updating or dealing with clinical correspondence. It also included cases where different clinicians were looking after different aspects of a patient's care, often resulting in tasks not being carried out. For example, two GPs were looking after the same patient, one for his diabetes, the other for his renal disease. They both noticed that the patient's creatinine had increased and one of them referred the patient to hospital. Neither GP stopped the metformin (“we both assumed that the other one would”), putting the patient at risk of serious illness (lactic acidosis). The patient had raised creatinine for 4 months until the hospital stopped the metformin.

Finally, fragmentation also included cases where tasks, such as referrals and record updating, were carried out after the consultation had finished, sometimes on a different day and by different staff. This resulted in omissions or delays. Some practices were aware of the error‐inducing potential of this type of fragmentation and had developed systems of support, but things could still be missed. Fragmentation may work well in terms of gaining time and freeing up resources, but it also makes a system more vulnerable to error.32

Most reports that contained information permitting cognitive analysis involved “situation assessment and response selection” failures (45%). This cognitive domain includes the task of diagnosis, which is at the heart of medicine. Only two reports (2.6%) were related to diagnosis in general practice (“judging urgency of patient's condition”; table 2). This is similar to other studies of patient safety in primary care that used reporting as a data collection tool. Dovey et al4 obtained 344 reports (330 errors) from 42 American family physicians. They classified 3.9% of the reported errors as “wrong or missed diagnosis”, arising from lack of clinical knowledge/skills. Makeham et al16 obtained 134 errors from 17 Australian GPs. They included diagnostic errors by pharmacists and hospital doctors in their classification, which resulted in 14% of errors described as “diagnostic”. A lower rate (0.5%) was obtained by Rubin et al6 from 10 practices in the UK. In contrast with these studies, a much higher rate is reported by Bhasale et al.17 They obtained 805 incidents from 324 Australian GPs and classified 34% of them as diagnostic. This probably overestimates the rate of diagnostic error. The classification was not mutually exclusive, and 79 incidents belonged to both “diagnostic” and “non‐pharmacological” categories—that is, 79 incidents were counted more than once. Moreover, diagnostic incidents included “diagnostic procedural complications”, such as incorrect test results, and were therefore not related to clinical knowledge, skill or judgment. In fact, “error in judgment” contributed to 22% of the incidents in the Bhasale et al17 study. It is possible, however, that error in judgment is hiding in other types of contributing factors—for example, “failure to recognise signs and symptoms”, “GP tired/rushed/running late”, “inadequate patient assessment”, “patient's history not adequately reviewed”. 
Similarly, one of the two major error categories in the Dovey et al4 classification, “process errors”—for example, “wrong test/treatment ordered or not ordered when appropriate” or “inappropriate response to an abnormal laboratory result”—may include “errors arising from lack of clinical knowledge or skills”, the other major error category. The need for categories that are precisely defined and mutually exclusive is apparent.

“Problems in communication and sharing information” (a PSF) were identified in 15% of the reports. The same rate of “communication errors” is reported by Makeham et al,16 whereas Dovey et al4 classified 5.8% of the errors as “errors in the process of communication”. Rubin et al6 and Bhasale et al17 report much higher rates of communication errors, 30% and 42%, respectively.

Discrepancies between studies highlight the importance of using both a mutually exclusive cognitive classification at the level of the individual (to classify the error or action) and an associated classification at the level of contributing factors that influenced performance and facilitated error (PSFs). This type of multilevel classification will prevent events or errors being counted more than once and uncertainty as to where to classify them (eg, according to the type of error, the clinical area, or the causes).

In terms of clinical area of relevance (table 2), the most frequently reported type of failure was administrative (25.6%). This is similar to the rates reported by Dovey et al4 (30.9%) and Makeham et al16 (20%). Rubin et al6 also found that most reported errors were administrative and were encountered in reports classified under the broader error categories of “prescription”, “communications” and “appointments”. The high rates of administrative failures may reflect greater staff willingness to report them, greater opportunity for occurrence, and/or greater opportunity for detection than, for example, diagnostic errors.

One limitation of this study is the small number of reports, especially those with detailed information for cognitive analysis. The need for detailed information is a disadvantage of the taxonomy, as it precludes anonymous reporting; small numbers are therefore to be expected. Furthermore, the time‐consuming follow‐up of reports is potentially discouraging. Small numbers did not always allow for statistical analyses, or, when analyses were carried out, may have masked important relationships between categories, for example, between cognitive domain and event consequences. However, as a database of classified events builds up over time, it should allow the links between environment and behaviour to be observed more reliably. Statistical associations between the levels of a taxonomy are an important aspect of internal validity and usefulness: can meaningful patterns be gleaned from the data? Which PSFs tend to affect cognition, in what way and through what psychological mechanism? What type of immediate events, internal or external, precede an error? To be able to determine such relationships, large numbers of reports are needed, containing sufficient information about the event and the cognitive processes of the individuals involved, as well as the environmental influences. Such reports are admittedly hard to obtain in large numbers but are nevertheless an ideal that we should be working towards26 if we are to move from simple descriptions of errors as “prescribing” or “administrative” and start to tackle their underlying causes. It may also be worth considering how an anonymous reporting system and a confidential reporting system could operate side by side.

The information processing model, which forms the basis of the cognitive taxonomy, allows the classification of any human action, erroneous or not. This is very important in the analysis of incidents, because the individual's action may have been entirely appropriate given the circumstances and information available at the time. PSFs can still be identified in situations where no errors occurred, and their links with human performance are thus maintained. The value of the taxonomy lies largely in the mandatory, multilevel classification that forces consideration of immediate external causes and PSFs and points to the environment as shaping human behaviour.

Testing the reliability, comprehensiveness and usability of the cognitive taxonomy is currently being planned. Important questions that we aim to answer include whether users with no background in human factors can be trained to construct causal trees and reliably classify cognition.

Although smaller in scale than analyses of large databases of anonymous reports, in‐depth studies of confidential, identifiable reports followed up with interviews can provide important insights that may otherwise be overlooked. The data obtained from more detailed analyses, supported by a multilevel taxonomy that links human performance with its environment, can improve our attempts to deal with patient safety events by encouraging intervention at a system level without losing sight of the individual. A culture of safety goes far beyond systems alone. Although it is clear that better monitoring of key processes such as investigation results, prescribing and referrals is essential, we also need a better understanding of how to improve decision making, in both clinical and administrative tasks. More research is needed to improve the presentation of information, usually via the electronic health record, and to reduce task demands and fragmentation. Teaching of decision making in medical and staff training curricula may also improve the situation assessment skills of healthcare staff. In‐depth studies using detailed taxonomies such as this can contribute much to our understanding of what affects practitioners' performance and ultimately patient safety in primary care.

Acknowledgements

We thank all the staff in the participating practices for their contribution.

Abbreviations

GP - general practitioner

PSFs - performance‐shaping factors

Appendix

Examples of very serious (actual or potential) consequences of “situation assessment” failures by general practice staff.

A 44‐year‐old patient presented with chest tightness for a few days on exertion, relieved by rest, left‐sided cardiac pain with radiation to left arm and a strong family history. Locum general practitioner (GP) decided to start treatment with nicorandil and glyceryl trinitrate, and booked new appointment for blood tests and ECG. The same evening, the patient went to the accident and emergency department with worsening chest pain. He was kept in for a week for investigations.

A patient taking warfarin and with possible stomach ulcer in the past (information from patient record) was prescribed non‐steroidal anti‐inflammatory drugs by locum GP. The error was detected by the pharmacist.

A patient came to the surgery for renewal of her treatment for blood pressure, which had run out. The receptionist told her that she could not have any more medication until she was seen by a GP, although the practice policy was that patients should have another month issued at this point. The patient, already non‐compliant, did not come back for some time, leading to her blood pressure going out of control (204/123).

A nurse administered polio booster vaccine to a patient with HIV, without knowledge of the patient's HIV status. The nurse simply asked the patient whether she was fit and well, the patient said that she was and the nurse did not check her record to confirm this (busy clinic, appointment scheduled for vaccinating the patient's grand‐daughter).

A young woman who had recently had a baby started developing fever. Despite this, the midwife decided not to visit her at home (owing to workload). The patient eventually saw the GP, who diagnosed septicaemia and admitted her urgently to hospital, where she received intravenous antibiotic treatment.

A 67‐year‐old man with diabetes and high blood pressure was referred by his GP to a dementia open‐access clinic for rapid onset of memory loss. The consultant psychiatrist started treating the patient with medication for vascular disease and depression. He did not perform a CT scan despite the GP's request because he did not think that it was clinically indicated. He did not review the patient again. Meanwhile, the patient's condition was deteriorating. The GP attempted to order a scan directly, but his request was refused (no open access to CT scans). Another urgent request for a scan was finally granted, and showed a brain tumour. A neurologist diagnosed inoperable meningioma owing to its size. The patient subsequently died.

Footnotes

Funding: This research was funded by a National Primary Care Postdoctoral award to OK (Department of Health and the Health Foundation). The work was independent from the funder.

Competing interests: None.

Ethical approval: This study was approved by the South Birmingham and West Birmingham local research ethics committees.

References

- 1. Wilson T, Pringle M, Sheikh A. Promoting patient safety in primary care: research, action, and leadership are required. BMJ 2001;323:583–584.

- 2. Sandars J, Esmail A. The frequency and nature of error in primary care: understanding the diversity across studies. Fam Pract 2003;20:231–236.

- 3. Avery A, Sheikh A, Hurwitz B, et al. Safer medicines management in primary care. Br J Gen Pract 2002;52:S17–S22.

- 4. Dovey S, Meyers D, Phillips R J, et al. A preliminary taxonomy of medical errors in family practice. Qual Saf Health Care 2002;11:233–238.

- 5. Wilson T, Sheikh A. Enhancing public safety in primary care. BMJ 2002;324:584–587.

- 6. Rubin G, George A, Chinn D, et al. Errors in general practice: development of an error classification and pilot study of a method for detecting errors. Qual Saf Health Care 2003;12:443–447.

- 7. Fernando B, Savelyich B, Avery A, et al. Prescribing safety features of general practice computer systems: evaluation using simulated test cases. BMJ 2004;328:1171–1172.

- 8. Elder N, Vonder Meulen M V, Cassedy A. The identification of medical errors by family physicians during outpatient visits. Ann Fam Med 2004;2:125–129.

- 9. Esmail A, Neale G, Elstein M, et al. Case studies in litigation: claims reviews in four specialties. Manchester: Manchester Centre for Healthcare Management, University of Manchester, 2004.

- 10. Jacobson L, Elwyn G, Robling M, et al. Error and safety in primary care: no clear boundaries. Fam Pract 2004;20:237–241.

- 11. Gaba D, Howard S, Fish K. Crisis management in anesthesiology. New York: Churchill‐Livingstone, 1994.

- 12. Donchin Y, Gopher D, Olin M, et al. A look into the nature and causes of human errors in the intensive care unit. Crit Care Med 1995;23:294–300.

- 13. Audit Commission. A focus on general practice in England. UK: Audit Commission for Local Authorities and the NHS in England and Wales, 2002.

- 14. Wanless D. RCGP Summary Paper 2002/01. RCGP 2002.

- 15. Shaw R, Drever F, Hughes H, et al. Adverse events and near miss reporting in the NHS. Qual Saf Health Care 2005;14:279–283.

- 16. Makeham M, Dovey S, County M, et al. An international taxonomy for errors in general practice: a pilot study. Med J Aust 2002;177:68–72.

- 17. Bhasale A, Miller G, Reid S, et al. Analysing potential harm in Australian general practice: an incident‐monitoring study. Med J Aust 1998;169:73–76.

- 18. Isaac A, Shorrock S, Kirwan B. Human error in European air traffic management: the HERA project. Reliability Eng Syst Saf 2002;75:257–272.

- 19. Shorrock S, Kirwan B. Development and application of a human error identification tool for air traffic control. Appl Ergon 2002;33:319–336.

- 20.Kostopoulou O. From cognition to the system: developing a multilevel taxonomy of patient safety in general practice. Ergonomics 200649486–502. [DOI] [PubMed] [Google Scholar]

- 21.Rasmussen J. Afterword. In: Bogner M, ed. Human error in medicine. Hillsdale, NJ: Lawrence Erlbaum Associates, 1994385–393.

- 22.Moray N. Error reduction as a systems problem. In: Bogner M, ed. Human error in medicine. Hillsdale, NJ: Lawrence Erlbaum Associates, 199467–91.

- 23.Reason J.Managing the risks of organizational accidents. Aldershot: Ashgate, 1997

- 24.Bogner M. Safety issues. Minim Invasive Ther Allied Technol 200312121–124. [DOI] [PubMed] [Google Scholar]

- 25.Wiegmann D, Shappell S. Human error analysis of commercial aviation accidents: application of the human factors analysis and classification system (HFACS). Aviat Space Environ Med 2001721006–1016. [PubMed] [Google Scholar]

- 26.Dovey S, Hickner J, Phillips B. Developing and using taxonomies of errors. In: Walshe K, Boaden R, eds. Patient safety: research into practice Maidenhead: Open University Press, 200693–107.

- 27.Wickens C, Hollands J.Engineering psychology and human performance. New Jersey: Prentice‐Hall, 2000

- 28.Woodward M, Francis L.Statistics for health management and research. London: Edward Arnold, 1988

- 29.Reason J.Human error. Cambridge, UK: Cambridge University Press, 1990

- 30.Sellen A. Detection of everyday errors. Appl Psychol Int Rev 199443475–498. [Google Scholar]

- 31.Wiegmann D, Shappell S. Human factors analysis of postaccident data: applying theoretical taxonomies of human error. Int J Aviat Psychol 1997767–81. [Google Scholar]

- 32.Kostopoulou O, Shepherd A. Fragmentation of care and the potential for human error in neonatal intensive care. Top Health Inf Manage 20002078–92. [PubMed] [Google Scholar]