Abstract

The Washington Circle (WC), a group focused on developing and disseminating performance measures for substance abuse services, developed three such measures for private health plans. In this article, we explore whether these measures are appropriate for meeting measurement goals in the public sector and whether they can feasibly be calculated using data collected for administrative purposes by state and local substance abuse and/or mental health agencies. Working collaboratively, twelve states specified revised measures, and six states pilot tested them. Two measures were retained from the original specifications: initiation of treatment and treatment engagement. New measures focused on continuity of care after assessment, detoxification, and residential or inpatient care. These data demonstrate that state agencies can calculate performance measures from routinely available information and that these indicators vary widely. Further research is needed to examine the reasons for these results, which might include lack of patient interest or commitment, need for quality improvement efforts, or financial issues.

Keywords: Performance measures, Substance abuse treatment, Washington Circle, Public sector, Administrative data

1. INTRODUCTION

The Washington Circle (WC) was formed in 1998 with the goals of developing and disseminating performance measures for substance abuse services. These efforts were explicitly endorsed by the Institute of Medicine, which recommended the continued development and use of substance abuse performance measures in its recent report, Improving the Quality of Health Care for Mental and Substance Use Conditions (Horgan & Garnick, 2005; Institute of Medicine, 2006). Initially, the WC proposed a continuum of care for substance abuse services and then specified and tested three performance measures: identification of adults with alcohol or other drug diagnoses, treatment initiation, and treatment engagement (Garnick et al., 2002; McCorry, Garnick, Bartlett, Cotter, & Chalk, 2000). These WC performance measures have been widely used by private health plans, for which they were designed, and also by the Veterans Health Administration and several state agencies (www.washingtoncircle.org).

Working collaboratively with 12 states, the WC more recently turned to exploring whether these core measures fit the measurement goals of state and local substance abuse and/or mental health agencies and whether they could feasibly be adapted for use by those agencies. Focusing on performance measures is particularly important for substance abuse treatment funded by the public sector because this type of funding covers the largest share of specialty treatment for substance use disorders. In 2003, 77 percent of substance abuse treatment was financed by public sources, leaving only 23 percent of expenditures covered by private insurers, philanthropy, or out-of-pocket payments by clients or their families (Mark et al., 2007).

In this paper, we present the results of a two-year process of adapting the three measures from their original application in private health plans for use by state and local behavioral health agencies. We describe the expanded set of measures, summarize key decisions made in expanding the original measures, and present pilot testing results from six states.

1.1 Washington Circle

The Washington Circle (WC), convened by the Substance Abuse and Mental Health Services Administration (SAMHSA), consists of a multidisciplinary group of providers, researchers, health plan representatives and public policymakers. The WC developed performance measures that are focused on the quantity and timing of substance abuse treatment services. First, the WC developed a model of the process of care in four domains representing a continuum of substance abuse services (prevention/education, recognition, treatment and maintenance) based on a chronic disease perspective applied to substance abuse (McCorry et al., 2000). Then the WC selected private health plans for the initial pilot testing because these plans have standardized datasets that make the testing more straightforward (Garnick et al., 2002).

Current users of these performance measures include the National Committee for Quality Assurance (NCQA), an organization whose mission is to improve the quality of health care and whose activities include accrediting health plans and publishing statistics that track the quality of care delivered by the nation’s health plans. NCQA adopted the WC identification, initiation and engagement measures for inclusion in its 2004 Healthcare Effectiveness Data and Information Set (HEDIS) (National Committee for Quality Assurance, 2007; National Committee for Quality Assurance, 2008). The Veterans Health Administration currently uses WC-like measures to monitor delivery of care (Harris, 2006). The three performance measures are:

Identification - percent of adult enrollees with a substance abuse claim, defined as containing a diagnosis of substance abuse or dependence or a specific substance abuse-related service, on an annual basis.

Treatment Initiation - percent of adults with an inpatient substance abuse admission or with an outpatient claim for substance abuse or dependence and any additional substance abuse services within 14 days following identification.

Treatment Engagement - percent of adults diagnosed with substance abuse disorders who receive two additional substance abuse services within 30 days of the initiation of care.

1.2 Placing Washington Circle Measures in a Conceptual Framework

The Washington Circle measures focus on only one segment of treatment for substance use conditions, so it is useful to consider them in the context of broader conceptual frameworks that encompass the dynamic stages of treatment and recovery as well as patient attributes, organizational characteristics of treatment programs, and the larger social context. For example, the Washington Circle measures correspond to the Texas Christian University (TCU) Treatment Model’s stage of “early engagement” (Simpson, 2004). Also, the Substance Abuse and Mental Health Services Administration has developed a model of Recovery Oriented Systems of Care (ROSC), in which treatment for substance use conditions is only one element and multiple systems (e.g., treatment/recovery, family, medical, housing/homeless, child welfare, criminal justice, educational) are integrated to offer a fully coordinated menu of services and supports to maximize outcomes (Addiction Technology Transfer Center Network, 2008).

The Washington Circle measures also can provide system markers for measuring the impact of evidence-based practices (EBPs) in increasing clients’ engagement in treatment for substance use conditions. Starting in the 1980s, documented quality deficiencies in general health care and rising health costs created a movement to promote the use of EBPs. In Crossing the Quality Chasm, a report on health care quality, the Institute of Medicine defines EBPs, in general, as “the integration of best research evidence with clinical expertise and patient values” (Institute of Medicine, 2001). Building on the EBP movement in general health care during the 1990s, many organizations began to focus on EBPs in behavioral health. For example, the National Registry of Evidence-Based Programs and Practices (NREPP) is a repository designed to help both professionals in the field and the public become better consumers by identifying scientifically tested programs and practices (Center for Substance Abuse Treatment). Other organizations focusing on EBPs include the National Institute on Drug Abuse, with a research-based guide outlining principles of effective treatment (National Institute on Drug Abuse, 1999); the National Institute on Alcohol Abuse and Alcoholism, with a range of professional education materials; the American Society of Addiction Medicine (American Society of Addiction Medicine, 2008); SAMHSA’s Treatment Improvement Protocols (TIPs) (Substance Abuse and Mental Health Services Administration, 2008); and the American Psychiatric Association, with its guidelines for treatment of substance use disorders (American Psychiatric Association, 2008).

In addition, in 2007, the National Quality Forum, a private, not-for-profit membership organization created to develop and implement a national strategy for healthcare quality measurement and reporting, developed a set of recommended treatment practices for substance use disorders that are supported by evidence of effectiveness. Among the eleven recommended practices for substance use conditions, the National Quality Forum included initiation and engagement in substance treatment (National Quality Forum, 2007).

1.3 Process and Outcome Measurement

In considering the WC process measures, it is important to recognize the differences between measures of process and measures of outcomes. The strengths and weaknesses of each of these types of performance indicators continue to be debated. A consensus seems to be emerging in the medical sector, however, that process and outcome measures are both important and that they complement each other in monitoring the quality of care delivered (Horgan & Garnick, 2005; Krumholz, Normand, Spertus, Shahian, & Bradley, 2007; Mant, 2001; McLellan, Chalk, & Bartlett, 2007).

1.3.1 Outcome measures

These measures describe client status. In evaluating substance abuse treatment, frequently used outcomes include criminal justice activity, employment, substance use, and reconnection with family and community. In general, outcome measures are focused on goals, make intuitive sense, and reflect cumulative aspects of care, including providers’ special skills and expertise (Mant, 2001). However, there may be a time lag before such outcomes are apparent, and following up with clients to collect outcome data is often expensive and requires much time and effort. Furthermore, improved outcomes often depend on patient compliance and other factors that are not under the provider’s control. Outcome measures may suggest specific areas of care that require quality improvement, but further investigation often is necessary to determine how to influence outcomes.

1.3.2 Process measures

These measures specify the treatment services that patients receive. Such a measure is typically expressed as the percent of suitable candidates for a particular treatment who receive that treatment within the appropriate timeframe. Process measures are used to assess adherence to clinical practices based on evidence or consensus. They offer two advantages: they are more immediately actionable, and they are often useful for identifying specific areas of care that may require improvement. Process measures are also relevant because they may be associated with proximal or in-treatment outcomes.

Some substance abuse research has examined the association between process and outcomes. Treatment completion is a commonly used process measure that has been applied to data on clients in several state-funded treatment systems. This research shows that treatment completion is associated with improved outcomes, such as decreased criminal justice involvement, higher wages, and fewer readmissions (Alterman, Langenbucher, & Morrison, 2001; Arria & TOPPS-II Interstate Cooperative Study Group, 2003; Evans, Longshore, Prendergast, & Urada, 2006; Luchansky, Brown, Longhi, Stark, & Krupski, 2000; Luchansky, He, Krupski, & Stark, 2000; Luchansky, He, & Longhi, 2002; Luchansky, Krupski, & Stark, 2007; Luchansky et al., 2006; Wickizer, Campbell, Krupski, & Stark, 2000).

The exploration of the WC measures’ association with outcomes was built on the foundation of the earlier studies cited above. New projects that are aimed specifically at validating the WC measures are in the planning stages, but several studies already have been focused on the association of the WC process measures with outcomes of substance abuse treatment. Using data from Oklahoma, researchers found that clients who initiated a new episode of outpatient treatment and who engaged in treatment were significantly less likely to be arrested or incarcerated in the following year (Garnick et al., 2007). These results were replicated using data from Washington State (Campbell, forthcoming 2008). Also, in a study focused on adolescents in residential treatment, those who achieved continuity of care after their treatment had a significantly increased likelihood of being abstinent at the 3-month follow-up interview (Garner, Godley, Funk, Lee, & Garnick, 2008).

1.4 The Washington Circle Public Sector Workgroup

In 2004, the Washington Circle Public Sector Workgroup was formed to determine whether the three WC measures (identification, initiation and engagement) could be useful tools for ultimately improving continuity of care in the public sector treatment system. This WC workgroup set four tasks: 1) evaluating the suitability of these three measures for meeting measurement goals for public sector clients, 2) adapting and potentially expanding the set of measures, 3) developing common specifications, and 4) conducting pilot testing. In this paper, we present the revised measure specifications and the findings of this pilot testing.

The Public Sector Workgroup includes members from substance abuse/mental health agencies in twelve states (AZ, CT, DE, KS, MA, NY, NV, NC, OK, TN, VT, and WA), some local jurisdictions, federal officials from the Center for Substance Abuse Treatment, academic researchers, and the Washington Circle Policy Group. Decisions reflected participants’ varied experience in providing treatment to clients, examining service provision patterns, and collecting and maintaining data in state databases. In the fall of 2006, six of the Workgroup’s member states pilot tested the expanded set of performance measures for adults.

2. METHODS

In this section, we specify the data required for pilot testing, outline the set of performance measures, and discuss two key questions that the Workgroup grappled with in defining the performance measures.

2.1 Pilot Test Data

Availability of data is a key consideration in exploring the transferability of the WC measures from entities with an enrolled population (e.g., private health plans) to public sector agencies without enrolled populations. All twelve states participated in the conceptual discussions and the development of measure specifications, but only six states (CT, MA, NY, NC, OK, and WA) pilot tested the measures using their administrative data on adults aged 18 and over. Information for the other states is not reported here for several reasons: they did not collect the required data (VT, DE, NV), did not yet have the data or resources to conduct the analyses (AZ and KS, which are now participating in ongoing measure development), or had information available only for adolescents (TN). One state (AZ) also had a change in leadership during the measure development process that led to decreased involvement in the WC Public Sector Workgroup. Three types of administrative data are required to calculate all three of the original measures.

2.1.1 Client Characteristics

All states typically collect admission and discharge data that include the core data elements required by the Treatment Episode Data Set (TEDS) (SAMHSA, 2006), such as client ID, level of care, demographics, substance use problem and frequency of use, and employment. For the pilot testing, all states used routinely collected administrative data on publicly funded admissions and discharges for episodes in their fiscal year 2005.

2.1.2 Utilization

Private health plans typically collect information on each treatment encounter with a client through claims that are submitted for payment or through encounter-based data sets. Some states also collect encounter-level data that are similar to the claims data maintained by commercial health plans and typically include details on the services provided and the dates of service, linked to a client identification number. Five of the six pilot states, all except New York, routinely collect encounter data, including the date and type of each treatment service, which are needed to calculate the Washington Circle measures. New York collects the number, but not the dates, of treatment services that a client receives during an episode, so for the pilot the process measures for outpatient and intensive outpatient services in New York were adapted and the results are presented separately.

2.1.3 Enrollment

Private health plans and the Veterans Health Administration serve a defined population and keep enrollment files. This information is key for calculating an identification rate, in which the numerator is the number of clients who receive substance abuse services or diagnoses during the year and the denominator is the total number of enrollees for the year.
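As a minimal sketch of this identification formula, assume a hypothetical list of enrollee IDs for the year and a hypothetical list of client IDs with a substance abuse diagnosis or service. Only enrolled clients count toward the numerator, which is exactly the piece a public sector agency without an enrollment file cannot supply.

```python
from typing import Iterable


def identification_rate(enrollee_ids: Iterable[str], sa_client_ids: Iterable[str]) -> float:
    """Percent of the year's enrollees with a substance abuse diagnosis or service.

    Hypothetical inputs: IDs from an enrollment file and IDs from claims.
    Clients absent from the enrollment file are ignored, so the numerator is
    always a subset of the denominator.
    """
    enrolled = set(enrollee_ids)
    if not enrolled:
        raise ValueError("the enrollment file (denominator) is empty")
    identified = enrolled & set(sa_client_ids)
    return 100.0 * len(identified) / len(enrolled)


print(identification_rate(["a", "b", "c", "d"], ["b", "d", "x"]))  # 50.0
```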

Public sector agencies generally do not serve an enrolled population, so determining who should be in the denominator is much more complex. States generally serve individuals who are uninsured, medically underinsured, or low income, as well as those who meet other criteria that vary from state to state. Some state agencies serve only those with incomes below a specified percentage of the poverty level (e.g., below 100 percent of the poverty line), while others serve all who request services. Although we wanted to estimate the population eligible to be served using federal population and income estimates, we could not find a common approach for estimating a denominator across all states to calculate the identification measure. Therefore, only the results of the initiation and engagement measures are presented in this paper, along with results for new measures that were developed for use in the public sector, as described below.

2.2 Performance Measure Definitions

The Workgroup expanded the original WC measures for initiation and engagement into nine measures to better reflect the public sector client population and the reporting needs of state behavioral health agencies. These measures are described here and summarized in Table 1. Two of the three original WC measures for private health plans were retained with minor adaptations (initiation and engagement for outpatient and intensive outpatient services). The other measures were newly developed for public sector populations, building on the original WC continuity of care approach. For these measures, the WC’s original focus on the timing of treatment services was adapted to fit the information obtained from state substance abuse agencies. (More detailed specifications of the measures can be found at http://www.washingtoncircle.org.)

Table 1.

Measure Definitions

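| Measure | Index Service Definition | Measure Formula |
|---|---|---|
| Initiation/Engagement | | |
| Outpatient (OP) Initiation | New episode of OP with a 60-day service-free period. Can have assessment or detoxification during service-free period. | Clients with a second service* within 14 days after the index OP service ÷ clients with an index OP service |
| Outpatient (OP) Engagement | New episode of OP with a 60-day service-free period. Can have assessment or detoxification during service-free period. | Clients who initiated OP treatment and received two additional services* within 30 days after initiation ÷ clients with an index OP service |
| Intensive Outpatient (IOP) Initiation | New episode of IOP with a 60-day service-free period. Can have assessment or detoxification during service-free period. | Clients with a second service* within 14 days after the index IOP service ÷ clients with an index IOP service |
| Intensive Outpatient (IOP) Engagement | New episode of IOP with a 60-day service-free period. Can have assessment or detoxification during service-free period. | Clients who initiated IOP treatment and received two additional services* within 30 days after initiation ÷ clients with an index IOP service |
| Continuity of Care After: | | |
| Assessment | Any assessment, no need for a service-free period. | Assessments followed by another service* within 14 days ÷ all assessments |
| Detoxification | Any detoxification, no need for a service-free period. | Detoxifications followed by another service* within 14 days of discharge ÷ all detoxifications |
| Short-Term Residential (STR) | Discharge from any STR service, no need for a service-free period. | STR discharges followed by another service* within 14 days ÷ all STR discharges |
| Long-Term Residential (LTR) | Discharge from any LTR service, no need for a service-free period. | LTR discharges followed by another service* within 14 days ÷ all LTR discharges |
| Inpatient (INP) | Discharge from any inpatient service, no need for a service-free period. | Inpatient discharges followed by another service* within 14 days ÷ all inpatient discharges |

*Not detoxification or crisis care.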

Table 2 shows detailed examples of client scenarios and explains how each scenario relates to the measure definitions. The first and most straightforward example deals with the outpatient initiation and engagement measures. The remaining examples show combinations of client services that reflect the complexities of actual treatment patterns. These examples show that a client can qualify for multiple measures simultaneously and may meet the criteria for some of them but not for others.

Table 2.

Illustrative Examples of Treatment Scenarios

| Example 1: OP Initiation and Engagement |
|---|
| 60 days without a substance abuse (SA) service → OP (Day 1) → OP (Day 3) → OP (Day 30) → OP (Day 33) |
| A client with a new episode of OP beginning on Day 1 receives a second OP service on Day 3, thus meeting the criteria for initiation. The OP services on Days 30 and 33 are two additional services within 30 days of the Day 3 initiation service, thus meeting the criteria for engagement. |

| Example 2: Continuity After Assessment and Detoxification |
|---|
| 60 days without a substance abuse (SA) service → Assessment (Day 1) → Detox (Day 3) → OP (Day 6) |
| The OP service on Day 6 satisfies continuity of care within 14 days after the assessment on Day 1; the detox service on Day 3 does not qualify for continuity after assessment. The Day 6 OP service also qualifies as continuity of care after the detox on Day 3. Additionally, the OP on Day 6 serves as an index service that starts a new episode, because neither the earlier assessment nor the detox breaks the 60-day service-free period. There is no initiation of care after the Day 6 OP. |

| Example 3: Multiple Measures |
|---|
| 60 days without a substance abuse (SA) service → OP (Day 1) → OP (Day 3) → Residential, length of stay (LOS) > 2 days (Days 29-32) |
| The Day 1 OP is an index service, the Day 3 OP service qualifies for initiation, and the residential stay starting on Day 29 qualifies for engagement, since residential stays of two or more days after OP initiation count as full engagement. The residential stay ending on Day 32 is checked for continuity of care, but no other service occurs within 14 days of discharge, so the continuity of care criterion is not met. |

| Example 4: Multiple Measures |
|---|
| 60 days without a substance abuse (SA) service → Assessment (Day 1) → OP (Day 2) → Residential, LOS 7 days (Days 3-9) → OP (Day 32) → OP (Day 39) |
| The assessment on Day 1 is followed by continuity of care (the OP on Day 2). The Day 2 OP is an index service; it is followed by an initiation service on Day 3, and the 7-day residential stay qualifies for engagement since it lasts more than two days. After discharge from the residential stay, the OP service on Day 32 does not qualify for continuity after residential care because it occurs more than 14 days after discharge. The OP service on Day 32 is not another index service because it is not preceded by a 60-day service-free period. |

| Example 5: Detoxification Continuity |
|---|
| Detox (Days 1-3) → Residential (Days 8-14) → OP (Day 50) |
| The criterion for continuity of care after detox is met by the residential stay starting on Day 8. However, there is no continuity of care after the residential stay, since the OP on Day 50 is not within 14 days of discharge. |

| Example 6: Multiple Measures |
|---|
| 60 days without a substance abuse (SA) service → IOP (Day 1) → Residential, LOS 5 days (Days 3-7) → Residential, LOS 5 days (Days 15-19) |
| The criteria for IOP initiation and engagement are met because the residential stay lasts five days and each day counts as one unit of service. There is continuity after the residential stay ending on Day 7 because fewer than 14 days elapse between discharge from the first residential stay and the start of the next one; a readmission to residential care meets the criterion for continuity of care. |

| Example 7: Detoxification Continuity |
|---|
| Detox (Day 1) → OP (Day 4) → Detox (Day 20) |
| Both detox services are followed up to determine whether there is continuity of care. The first detox shows continuity (the OP on Day 4), but the second does not. The OP on Day 4 is an index service without initiation of care, because the detox that follows cannot count as a subsequent service. |

| Example 8: Multiple Measures |
|---|
| 60 days without a substance abuse (SA) service → Assessment (Day 1) → Detox (Day 3) → OP (Day 12) → Residential, LOS 5 days (Days 15-19) → OP (Day 25) |
| This is the most complicated example, but it shows how a client could qualify for (and satisfy) multiple measures: continuity of care after assessment, continuity of care after detox, outpatient initiation and engagement, and continuity of care after residential care. |

2.2.1 Initiation and Engagement Measures

Initiation and engagement measures are defined for two levels of care: outpatient and intensive outpatient.

Initiation (Outpatient and Intensive Outpatient)

Initiation is defined as the percent of individuals who have an index outpatient (OP) or intensive outpatient (IOP) service with no other substance abuse treatment services in the previous 60 days and who receive a second substance abuse service (other than detoxification or crisis care) within 14 days after the index service.

Engagement (Outpatient and Intensive Outpatient)

Engagement is defined as the percent of individuals who initiated OP or IOP substance abuse treatment and received two additional services within 30 days after initiation.

To be included in the OP and IOP initiation and engagement measures, a client must be starting a new episode of care. The index service should be preceded by a 60-day service-free period during which the client had not received substance abuse treatment, as evidenced by claims or encounters with any diagnoses or services related to alcohol or drug abuse or dependence treatment. Detoxification or assessment can occur within this 60-day “service-free” period because they are not considered to be treatment. For the calculation of initiation and engagement rates, each additional OP or IOP service and each day of residential care counts as one unit of service. Also, two or more OP or IOP services that occur on the same day count as one service.
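The episode rules above can be sketched in Python. This is a simplified illustration, not the Workgroup’s specification code. The service codes (`OP`, `IOP`, `RES`, `ASSESS`, `DETOX`, `CRISIS`), the `Service` record, and the function names are hypothetical. Each index episode is evaluated separately, services on the same day collapse into one unit, and days before the earliest record are treated as service-free, which assumes the data extract includes at least 60 days of look-back.

```python
from dataclasses import dataclass
from typing import List, Optional, Set, Tuple

# Hypothetical service codes for this sketch (not a state or TEDS data standard).
NOT_TREATMENT = {"ASSESS", "DETOX"}   # allowed inside the 60-day service-free period
NOT_FOLLOW_UP = {"DETOX", "CRISIS"}   # never count as a subsequent service


@dataclass(frozen=True)
class Service:
    start: int                 # day number of the (first) service day
    kind: str                  # "OP", "IOP", "RES", "ASSESS", "DETOX", or "CRISIS"
    end: Optional[int] = None  # last day of a multi-day stay; None for one-day services


def service_units(services: List[Service]) -> Set[Tuple[int, str]]:
    """Expand services into (day, kind) units: one unit per residential day;
    same-day services of the same kind collapse into one unit."""
    units = set()
    for s in services:
        last = s.end if s.end is not None else s.start
        for day in range(s.start, last + 1):
            units.add((day, s.kind))
    return units


def initiation_engagement(services: List[Service], level: str = "OP") -> List[Tuple[int, bool, bool]]:
    """Return (index_day, initiated, engaged) for each new OP or IOP episode."""
    units = service_units(services)
    treatment_days = {d for d, k in units if k not in NOT_TREATMENT}
    # Distinct days only: several qualifying services on one day count once.
    follow_up_days = sorted({d for d, k in units if k not in NOT_FOLLOW_UP})
    results = []
    for index_day in sorted({d for d, k in units if k == level}):
        # A new episode needs 60 service-free days (assessment/detox allowed).
        if any(index_day - 60 <= d < index_day for d in treatment_days):
            continue
        # Initiation: a qualifying service on a later day, within 14 days.
        initiation_days = [d for d in follow_up_days if index_day < d <= index_day + 14]
        engaged = False
        if initiation_days:
            first = initiation_days[0]
            # Engagement: two more qualifying service days within 30 days of initiation.
            extra = [d for d in follow_up_days if first < d <= first + 30]
            engaged = len(extra) >= 2
        results.append((index_day, bool(initiation_days), engaged))
    return results


example_1 = [Service(1, "OP"), Service(3, "OP"), Service(30, "OP"), Service(33, "OP")]
print(initiation_engagement(example_1))  # [(1, True, True)]
```

Applied to Example 7 in Table 2 (detox on Day 1, OP on Day 4, detox on Day 20), the same function returns `[(4, False, False)]`: the Day 4 OP is an index service, but the later detox cannot count toward initiation.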

2.2.2 Continuity Measures

Continuity of care is defined for five levels of care.

Continuity of Care After Assessment

This measure is defined as the percent of individuals who have a positive assessment for substance abuse and receive another substance abuse service (other than detoxification or crisis care) within 14 days of the assessment.
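Continuity after assessment reduces to a windowed look-ahead from each assessment. A minimal sketch follows. It assumes hypothetical `(day, kind, positive)` tuples, counts each positive assessment in the denominator, and counts only follow-up services on a later day than the assessment; the text does not say whether a same-day service qualifies.

```python
from typing import List, Tuple

# Hypothetical service codes; detox and crisis care never count as follow-up.
EXCLUDED_FOLLOW_UP = {"DETOX", "CRISIS"}


def continuity_after_assessment(services: List[Tuple[int, str, bool]], window: int = 14) -> float:
    """Percent of positive assessments followed by a qualifying service within `window` days.

    services: (day, kind, positive) tuples; `positive` matters only for "ASSESS" rows.
    """
    assessment_days = [day for day, kind, positive in services if kind == "ASSESS" and positive]
    if not assessment_days:
        raise ValueError("no positive assessments to form a denominator")
    met = 0
    for a_day in assessment_days:
        # Look for any non-excluded service strictly after the assessment day.
        if any(a_day < day <= a_day + window and kind not in EXCLUDED_FOLLOW_UP
               for day, kind, _ in services):
            met += 1
    return 100.0 * met / len(assessment_days)


# Example 2 from Table 2: the Day 3 detox does not count, but the Day 6 OP does.
example_2 = [(1, "ASSESS", True), (3, "DETOX", False), (6, "OP", False)]
print(continuity_after_assessment(example_2))  # 100.0
```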

Continuity of Care After Detoxification

This measure is defined as the percent of individuals who receive a detoxification service and then receive another substance abuse service (other than detoxification or crisis care) within 14 days of discharge from detoxification. Depending on how data are maintained in its database, each state can choose between two methods for calculating the detoxification measure when a client has a string of multiple detoxification services:

Group multiple detoxifications that occur within a short period of a few days as one service. Look for another service within 14 days of the discharge from the last detoxification in this string of multiple detoxifications.

Each detoxification service is viewed as a separate service if there is any gap of days between services. For each detoxification, look for a follow-up service within 14 days that would qualify as continuity of care.
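The two methods differ only in how close two detoxification records must be before they are merged into one service. A minimal sketch follows. It assumes hypothetical `(start_day, end_day)` detox stays and `(day, kind)` follow-up services, counts only follow-ups on a day after discharge, and uses an illustrative grouping gap of 3 days, since the text says only “a few days.”

```python
from typing import List, Tuple

EXCLUDED_FOLLOW_UP = {"DETOX", "CRISIS"}  # hypothetical codes; never count as follow-up


def merge_detox(stays: List[Tuple[int, int]], max_gap: int) -> List[Tuple[int, int]]:
    """Merge (start_day, end_day) detox stays separated by at most `max_gap` empty days."""
    merged: List[Tuple[int, int]] = []
    for start, end in sorted(stays):
        if merged and start - merged[-1][1] - 1 <= max_gap:
            first_start, last_end = merged[-1]
            merged[-1] = (first_start, max(last_end, end))
        else:
            merged.append((start, end))
    return merged


def detox_continuity(stays: List[Tuple[int, int]], follow_ups: List[Tuple[int, str]],
                     method: str = "individual", window: int = 14, grouping_gap: int = 3) -> float:
    """Percent of detox services followed by a qualifying service within `window` days of discharge."""
    if method not in ("individual", "grouped"):
        raise ValueError("method must be 'individual' or 'grouped'")
    # Individual method: only back-to-back days merge; grouped: gaps up to `grouping_gap` days merge.
    max_gap = 0 if method == "individual" else grouping_gap
    episodes = merge_detox(stays, max_gap)
    if not episodes:
        raise ValueError("no detoxification services to form a denominator")
    met = sum(
        any(end < day <= end + window and kind not in EXCLUDED_FOLLOW_UP for day, kind in follow_ups)
        for _, end in episodes
    )
    return 100.0 * met / len(episodes)


stays = [(1, 2), (4, 5)]   # two detox stays with a one-day gap
follow_ups = [(18, "OP")]
print(detox_continuity(stays, follow_ups, "individual"))  # 50.0
print(detox_continuity(stays, follow_ups, "grouped"))     # 100.0
```

Because grouping also shrinks the denominator, rates produced by the two methods may not be strictly comparable across states.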

Continuity of Care in Varying Residential Situations

This measure is defined as the percent of individuals who have a stay that is followed by another service (other than detoxification or crisis care) within 14 days after discharge. In most states, this percentage is calculated separately for Short-Term Residential (STR), Long-Term Residential (LTR), and Inpatient (IP) care. All stays are included in the denominator because a new episode of care is not required at these levels of care.

Residential stays are classified as short- or long-term according to the type of program, not according to how long the client stayed. Substance abuse services (outpatient or intensive outpatient) that occur on the last day of the residential stay should be included in the numerator; the rationale is that the residential facility has made the connection with the next level of care. Also, a step-down to a less intensive level of care, a readmission to the same level of care, or a step-up to a more intensive level of care all qualify for continuity of care, because this measure makes no assumptions about the appropriateness of the continuing care.
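A minimal sketch of these residential rules follows. It assumes hypothetical `(start_day, end_day, kind)` records with program-type codes `STR`, `LTR`, and `INP`. A service on the discharge day counts, any qualifying record (step-down, readmission, or step-up) starting within 14 days counts, and every stay enters the denominator.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

# Hypothetical codes: STR, LTR, and INP are classified by program type, not length of stay.
RESIDENTIAL_TYPES = {"STR", "LTR", "INP"}
EXCLUDED_FOLLOW_UP = {"DETOX", "CRISIS"}


def residential_continuity(records: List[Tuple[int, int, str]], window: int = 14) -> Dict[str, float]:
    """Percent of residential/inpatient stays with continuity, by program type.

    records: (start_day, end_day, kind). Every stay enters the denominator; no
    service-free period is required.
    """
    met: Dict[str, int] = defaultdict(int)
    total: Dict[str, int] = defaultdict(int)
    for i, (_, discharge, kind) in enumerate(records):
        if kind not in RESIDENTIAL_TYPES:
            continue
        total[kind] += 1
        # Any other qualifying record starting on the discharge day or within
        # `window` days after it counts: step-down, readmission, or step-up.
        if any(j != i and discharge <= start <= discharge + window
               and other_kind not in EXCLUDED_FOLLOW_UP
               for j, (start, _, other_kind) in enumerate(records)):
            met[kind] += 1
    return {kind: 100.0 * met[kind] / total[kind] for kind in total}


# Example 6 from Table 2, with both stays coded as STR for illustration:
# the readmission on Day 15 gives the first stay continuity; the second has none.
example_6 = [(1, 1, "IOP"), (3, 7, "STR"), (15, 19, "STR")]
print(residential_continuity(example_6))  # {'STR': 50.0}
```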

2.3 Key Decisions in the Development of Specifications

The Workgroup members reviewed preliminary data and grappled with numerous questions before reaching consensus about how to define the expanded set of measures. Two key decisions concerned, first, the types of services included in the measures and, second, differences in measure specifications across levels of substance abuse treatment.

2.3.1 What types of services should be included in the performance measures?

Not all the services that states offer to substance abuse clients can be viewed as services that initiate or engage the client in treatment. Certainly therapeutic services such as individual counseling or group counseling are always considered to be substance abuse treatment services. Some ancillary services, such as employment counseling and housing assistance, were also included because they involve face-to-face contact between the provider and client that supports rehabilitation. Generally, services provided without the client in attendance, such as clinician consultation and laboratory analysis, did not qualify for initiation or engagement. Some services were determined to be appropriate to start a treatment episode but could not count as an additional service for any of the measures. For example, detoxification services can start treatment episodes, but the Workgroup decided that neither further detoxification services nor other crisis care services could count as a subsequent visit because these services are not considered substance abuse treatment.

2.3.2 Should there be separate measures by level of care?

We developed separate measures by level of care to make the measures most useful to states and providers, who could use more disaggregated information to target quality improvement efforts. We also concluded that the idea of an episode of care needed to be considered differently for each level of care. We reasoned that the measures focused on outpatient (OP) and intensive outpatient (IOP) services should include only clients who are starting new treatment episodes. For these OP and IOP clients, it is important to determine that the index service, the first treatment, is the start of a new episode and not a service in the middle or near the end of treatment, when frequent services may not be clinically appropriate. For all other services (assessment, detoxification, residential, and inpatient services), continuity of care is important for monitoring quality of care regardless of whether a preceding service-free period indicates the start of a new episode.

3. RESULTS

The goal of this project was to develop specifications and test their feasibility; in addition, comparisons across the selected states may be of interest. Table 3 shows the pilot test results for the five states with client encounter data. Formal testing of the statistical significance of differences between states depends on sample size and on the design effect of clustered data. In general terms, however, differences of less than 8 percentage points are not significant at the 0.05 level, assuming a design effect no greater than 10 (a relatively high value).
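The rule of thumb above can be approximated with a conventional two-proportion calculation in which the design effect inflates the variance. This is an illustrative sketch, not necessarily the calculation the authors used. It assumes the worst-case proportion p = 0.5 and a two-sided test, and the function name is hypothetical.

```python
from math import sqrt
from statistics import NormalDist


def min_significant_difference(n1: int, n2: int, p: float = 0.5,
                               deff: float = 10.0, alpha: float = 0.05) -> float:
    """Smallest difference between two proportions that is significant at `alpha`
    (two-sided), with the simple-random-sample variance inflated by the design effect."""
    z = NormalDist().inv_cdf(1 - alpha / 2)             # about 1.96 for alpha = 0.05
    variance = deff * p * (1 - p) * (1 / n1 + 1 / n2)   # largest when p = 0.5
    return z * sqrt(variance)


print(round(min_significant_difference(3000, 3000), 3))  # 0.08
```

Under these assumptions, with 3,000 clients in each state the threshold is about 0.08 (8 percentage points); smaller samples give larger thresholds, and a smaller design effect gives a smaller one.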

Table 3.

Initiation, Engagement, and Continuity of Care Rates by State, 2005

| Level of Care Measures | CT % (N) | MA % (N) | NC % (N) | OK % (N) | WA % (N) |
|---|---|---|---|---|---|
| Initiation/Engagement | | | | | |
| OP Initiation | 72% (15,795) | 42% (22,822) | 42% (33,031) | 61% (10,115) | 73% (4,176) |
| OP Engagement | 67% (15,795) | 27% (22,822) | 24% (33,031) | 53% (10,115) | 64% (4,176) |
| IOP Initiation | -- | 44% (3,163) | 88% (1,171) | -- | 80% (3,262) |
| IOP Engagement | -- | 34% (3,163) | 76% (1,171) | -- | 75% (3,262) |
| Continuity of Care After: | | | | | |
| Detoxification* | 59% (12,130) | 40% (52,321) | 40% (7,864) | 19% (4,828) | 23% (11,882) |
| Assessment | -- | -- | 48% (29,603) | -- | 35% (28,810) |
| Short-term Residential** | -- | 38% (7,565) | -- | 23% (3,626) | 47% (1,363) |
| Long-term Residential** | -- | 17% (6,336) | -- | 15% (622) | 30% (2,655) |
| Any residential** | 60% (8,412) | -- | 37% (2,618) | -- | -- |
| Inpatient** | -- | -- | 27% (6,204) | -- | 47% (8,440) |

For continuity of care after detoxification, Washington used the method of grouping contiguous detoxifications; the other states used the individual detoxification method.

Connecticut does not distinguish between outpatient and intensive outpatient services. Connecticut and North Carolina do not distinguish between short- and long-term residential treatment. North Carolina’s inpatient data include only Medicaid claims; thus, most services in the state psychiatric hospitals or Alcohol and Drug Abuse Treatment Centers are excluded from this report. Massachusetts does not consider inpatient programs separately from short- or long-term residential programs.

3.1 Initiation and Engagement

Initiation and engagement rates varied widely across states. Outpatient initiation rates ranged from 42 to 73 percent. Because engagement requires initiation by definition, engagement rates cannot exceed initiation rates; for outpatient services they ranged from 24 to 67 percent. For intensive outpatient services, initiation rates ranged from 44 to 88 percent and engagement rates from 34 to 76 percent.
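Because engagement is defined on top of initiation, an episode can count as engaged only if it has already initiated. The sketch below makes that dependency explicit for outpatient index services. It takes the 14-day initiation window from the text and assumes, consistent with the "four units of service in up to 45 days" described in Section 4.2.1, that engagement means two further services within 30 days of the initiation service. Counting distinct service days and the function names are also assumptions.

```python
from datetime import date, timedelta
from typing import Iterable, List, Tuple

INITIATION_WINDOW = timedelta(days=14)  # stated in the text
ENGAGEMENT_WINDOW = timedelta(days=30)  # assumption, see lead-in


def _later_days(index: date, services: Iterable[date]) -> List[date]:
    # Assumption: count distinct service days after the index day.
    return sorted({d for d in services if d > index})


def initiated(index: date, services: Iterable[date]) -> bool:
    return any(d <= index + INITIATION_WINDOW for d in _later_days(index, services))


def engaged(index: date, services: Iterable[date]) -> bool:
    later = _later_days(index, services)
    init_day = next((d for d in later if d <= index + INITIATION_WINDOW), None)
    if init_day is None:
        # Engagement requires initiation, so it can never exceed initiation.
        return False
    extra = [d for d in later if init_day < d <= init_day + ENGAGEMENT_WINDOW]
    return len(extra) >= 2


def rates(episodes: List[Tuple[date, List[date]]]) -> Tuple[float, float]:
    """Initiation and engagement rates over (index date, later service dates) pairs."""
    n = len(episodes)
    n_init = sum(initiated(i, s) for i, s in episodes)
    n_eng = sum(engaged(i, s) for i, s in episodes)
    return n_init / n, n_eng / n
```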

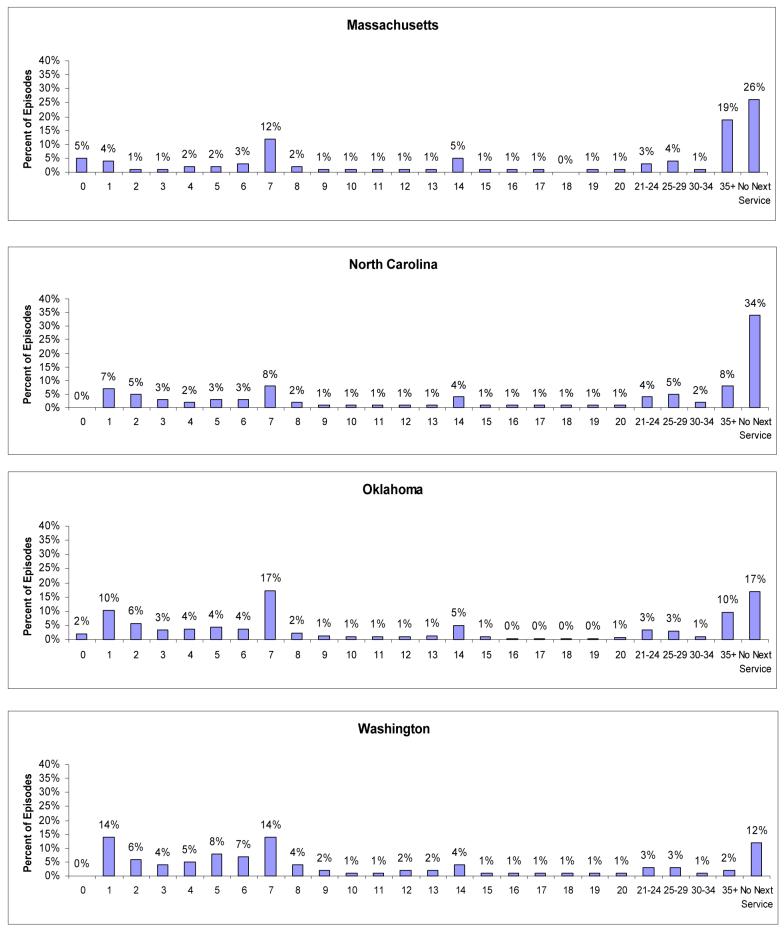

We conducted a further analysis to determine how sensitive the outpatient and intensive outpatient initiation rates are to the 14-day window for a next substance abuse service. To do this, four states calculated the number of days from the index outpatient service to the next service for each outpatient episode of care. Figure 1 shows the distribution of days to next service for episodes with an index outpatient service in each state. As Figure 1 shows, moving the initiation cut-off a few days before or after 14 days would not change initiation rates substantially, because only very small percentages of clients had services on those surrounding days. The results lead to the same conclusion for intensive outpatient index services (results not shown).

Figure 1.

Sensitivity Analysis Using Outpatient Index Services
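The sensitivity analysis reduces to recomputing the initiation rate at several cut-offs from one list of days-to-next-service values per episode. A minimal sketch, with a hypothetical input format in which `None` marks an episode with no subsequent service:

```python
from collections import Counter
from typing import Dict, Iterable, List, Optional


def days_distribution(days_to_next: List[Optional[int]]) -> Counter:
    """Count episodes by days from index service to next service (None = none)."""
    return Counter(days_to_next)


def initiation_rate_by_cutoff(days_to_next: List[Optional[int]],
                              cutoffs: Iterable[int]) -> Dict[int, float]:
    """Share of episodes whose next service falls within each cut-off."""
    n = len(days_to_next)
    return {c: sum(1 for d in days_to_next if d is not None and d <= c) / n
            for c in cutoffs}
```

When few episodes fall just inside or just outside 14 days, the rates for neighboring cut-offs differ little, which is the pattern reported for Figure 1.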

3.2 Continuity of Care

3.2.1 After Detoxification

Continuity of care after detoxification ranged from 19 percent in Oklahoma to 59 percent in Connecticut. Using the different methods (individual detoxification vs. grouping contiguous detoxifications) altered the results only minimally (results not shown).
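The two detoxification methods differ only in what counts as one unit: under the individual method every stay is a unit, while the grouping method first merges contiguous stays. The sketch below shows one way to do the merge. The `max_gap_days` parameter (how close the next admission must be to the previous discharge) is hypothetical, not the Workgroup's definition of contiguity. Continuity of care would then be measured from the discharge date of each resulting unit.

```python
from datetime import date
from typing import List, Tuple

Stay = Tuple[date, date]  # (admission date, discharge date)


def group_contiguous(stays: List[Stay], max_gap_days: int = 1) -> List[Stay]:
    """Merge detoxification stays that start within max_gap_days of the
    previous stay's discharge (overlapping stays are always merged).

    max_gap_days is a hypothetical parameter for what counts as contiguous.
    """
    merged: List[Stay] = []
    for admission, discharge in sorted(stays):
        if merged and (admission - merged[-1][1]).days <= max_gap_days:
            first_admission, last_discharge = merged[-1]
            merged[-1] = (first_admission, max(last_discharge, discharge))
        else:
            merged.append((admission, discharge))
    return merged
```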

3.2.2 After Assessment

Only two states, North Carolina (48 percent) and Washington (35 percent), were able to calculate continuity of care after assessment. The other states either did not routinely collect assessment information in their administrative databases or did not record whether the assessment resulted in a positive substance abuse diagnosis.

3.2.3 After Residential Treatment Services

When calculating continuity of care rates after residential treatment services, some states separated short-term from long-term residential services based on the type of residential treatment program, not on the client’s length of stay.

Two states, Connecticut and North Carolina, do not distinguish between short- and long-term residential programs. Their rates of continuity of care after any residential stay were 60 percent and 37 percent, respectively.

For the three states that distinguished short-term from long-term residential services (Massachusetts, Oklahoma, and Washington), rates of continuity of care after discharge ranged from 23 to 47 percent for short-term residential stays and from 15 to 30 percent for long-term residential stays.

Only two states, North Carolina and Washington, fund inpatient substance abuse treatment services as distinguished from residential treatment. Their continuity of care rates after inpatient stays were 27 and 47 percent, respectively.

3.3 New York Results

As noted above, New York collects admission and discharge dates for all substance abuse treatment services and the number of services received between admission and discharge, but not the actual dates of those services. Therefore we adapted the measures in the following way: outpatient/intensive outpatient initiation was measured as having two or more days of service during the episode, regardless of length of stay; and engagement was measured as a length of stay of 30 days or more and at least 4 treatment services during the episode. These specifications were designed to approximate as closely as possible the specifications using encounter data.
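New York's adapted definitions need only admission and discharge dates and a service count, so they translate directly into two predicates. This is a sketch under two stated assumptions: each recorded service falls on a distinct day (so the count stands in for days of service), and length of stay is the discharge date minus the admission date. The class and function names are hypothetical.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class NYEpisode:
    admission: date
    discharge: date
    services: int  # number of treatment services recorded during the episode


def length_of_stay(ep: NYEpisode) -> int:
    # Assumption: length of stay = discharge date minus admission date, in days.
    return (ep.discharge - ep.admission).days


def ny_initiated(ep: NYEpisode) -> bool:
    # Two or more days of service, regardless of length of stay.
    # Assumption: each recorded service falls on a distinct day.
    return ep.services >= 2


def ny_engaged(ep: NYEpisode) -> bool:
    # Length of stay of 30 days or more AND at least 4 treatment services.
    return length_of_stay(ep) >= 30 and ep.services >= 4
```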

Based on New York’s definitions, the initiation rate for outpatient treatment was 83 percent and the engagement rate was 66 percent (N = 91,875). New York’s initiation rate for intensive outpatient treatment was also 83 percent, and the engagement rate was 57 percent (N = 7,140). Continuity of care rates were 16 percent after assessment (N = 42,444) and 28 percent after detoxification (N = 96,686). New York does not distinguish between short- and long-term residential treatment; its overall continuity of care rate after residential treatment was 26 percent (N = 24,366). The continuity of care rate after inpatient treatment was similar, at 28 percent (N = 39,923).

4. DISCUSSION

As our Washington Circle Public Sector Workgroup pilot project shows, it is feasible for some state substance abuse agencies to use routinely collected administrative data to calculate performance measures. Using a common approach and a shared set of specifications allows comparisons across states. Such comparisons should be made with caution, however, because states vary in the types of services they offer and the data they collect, which can influence the results. It is important to reiterate that the main goal of this study is not state-to-state comparison. Rather, we focus on testing feasibility, explaining methods that can be used across multiple states, and identifying patterns of care that cut across state treatment systems. For example, one consistent pattern is that two measures, continuity of care after detoxification and after a positive assessment, indicate a need for improvement in all the pilot states.

Nevertheless, these measures, used as performance management tools, can serve as simple indicators of areas that require further examination. Initiation, engagement, and continuity rates vary across providers and states for a range of reasons, including client motivation; system barriers (such as lack of transportation support for clients); differences in the resources directed toward treatment of substance use conditions and toward quality improvement initiatives; differences in the completeness of recorded data; the prevalence of referral to self-help rather than formal treatment; and differences in the quality of care provided. We did not examine the extent to which these influences contribute to the rates reported here. These measures might therefore be best used for tracking performance over time as part of quality improvement efforts within providers or states, where variations in data collection, local treatment patterns, and availability of resources may be less problematic.

The results we report here are similar to or higher than those reported for other populations. Using the original WC specifications developed for private health plans, the WC pilot testing found initiation rates of 26 to 46 percent and engagement rates of 14 to 29 percent (Garnick et al., 2002). In the Veterans Health Administration annual report for Fiscal Year 2006, the initiation rate was 27.7 percent and the engagement rate was 8.6 percent (Harris, Bowe, & Humphreys, 2007). For private health plans reporting to NCQA, the initiation rate in 2005 was 44.5 percent and the engagement rate was 14.1 percent (NCQA, 2007). Note, however, that these results are not directly comparable to the pilot results reported here, because NCQA and the Veterans Health Administration report all levels of care together. In addition, the rates reported here for continuity of care after detoxification are in the same range as the 26.9 percent reported for Delaware, Oklahoma, and Washington using earlier data from 1996-1997 (Mark, Vandivort-Warren, & Montejano, 2006).

4.1. Current State Use of Substance Abuse Performance Measures

State and local behavioral health agencies face challenges in implementing substance abuse performance measures. For states with adequate data systems, assessing data quality is critical, as is balancing states’ desire to develop their own methods against the cross-state comparisons that common methods make possible. Faced with resource constraints, states must also weigh the costs of calculating and disseminating performance measures against the benefits they can bring: a basis for starting conversations about quality improvement with policy makers, providers, and client advocates; support for contracting with providers; program-specific reports shared with providers; and comparisons that show programs how they perform relative to others in the state. As the Federal government places more emphasis on performance measurement, indicators that help target quality improvement efforts may become more important to states. Of course, states face common barriers, including a lack of encounter-level data in existing data systems to support calculating measures such as those developed here, leadership instability that might erode ongoing support, and changing resource constraints that might preclude ongoing programming support.

Although these analyses are not reported here, our results also show variation in client engagement across programs, as large-scale field studies have reported (Broome, Simpson, & Joe, 1999; Gerstein et al., 1997; Thune, 2000). This variation is part of the impetus for the reporting efforts, described below, in two states that have already incorporated several or all of the WC measures and the activities noted above into their ongoing work.

4.1.1 Oklahoma

Since 2003, Oklahoma has produced a quarterly report, posted on the web (www.odmhsas.org), that presents the results of performance measures for the eight regions in the state. To track changes over time, measures are compared with the state average for the last eight quarters. The May 2006 quarterly report shows higher rates than in 2005, including initiation after detoxification (24.2 percent), engagement after residential treatment (12.2 percent), initiation after outpatient treatment (80.7 percent), and engagement after outpatient treatment (69.0 percent) (Oklahoma Department of Mental Health and Substance Abuse Services, 2006). Currently, the Washington Circle measures, along with the NOMs and other Oklahoma-specific measures, are provided to contracted treatment facilities in four new formats: a comparison of the current time period with the previous time period; a trend analysis providing 12 quarters of data; a comparison of performance with all other providers for a specific level of care; and a detailed report providing information about specific clients in each measure.

4.1.2 North Carolina

In 2006, the state started to report on performance indicators for mental health and developmental disabilities in addition to substance abuse (North Carolina Department of Health and Human Services, 2006). The impetus for the quarterly report is the belief that human service systems should be held accountable for the provision of services and for progress toward set goals. Regular reporting helps identify areas that are doing well and those that need attention at the local and state levels. One of the performance indicators in North Carolina is timely initiation and engagement in treatment. For the first quarter of SFY 2006-2007, 58 percent of those receiving substance abuse services initiated treatment according to the WC definition, ranging from a low of 31 percent in one local management entity (LME) to a high of 80 percent in another. Forty percent engaged in treatment, with rates among LMEs ranging from a low of 6 percent to a high of 65 percent.

In addition, New York uses substance abuse performance measures in its system for county stakeholders, Connecticut is offering monthly reports to providers, and Massachusetts provides bi-monthly reports to state leadership.

4.2 Relationship to Other Initiatives

Two other initiatives that are directly relevant to the WC Public Sector measures share our focus on improving quality of care.

4.2.1 The Network for the Improvement of Addiction Treatment (NIATx) (www.niatx.org)

This initiative relates to the WC performance measures in two ways. Most importantly, it offers a tool for providers who seek to improve their performance rates. Through a philosophy of effective, efficient, and consumer-oriented care that emphasizes continuous improvement models, the NIATx initiative shows that access to and retention in substance abuse treatment can be improved through changes in the management and business practices of treatment programs (McCarty et al., 2007). NIATx uses a Plan-Do-Study-Act cycle to identify problems, develop solutions, implement the new process, and measure the resulting outcomes (Capoccia et al., 2007; McCarty et al., 2007; Wisdom et al., 2006). Applying process improvement strategies that reduced the number of days between a client’s first contact with the system and the first treatment service led to fewer no-shows and to increased admission and retention. Second, the WC measures reported here and the NIATx core measures (e.g., timely services and engagement or retention) are similar in concept. Both capture clients’ experience in the early part of treatment, and both measure the proportion of clients with an assessment who are then admitted. For clients admitted to treatment, both NIATx and the WC have developed measures of engagement within a specified period after admission. For example, the NIATx continuation measure focuses on four units of service in 30 days, and the WC outpatient initiation and engagement measures focus on four units of service in up to 45 days.

4.2.2 The National Outcome Measures (NOMs) developed by the Substance Abuse and Mental Health Services Administration (SAMHSA) (http://www.nationaloutcomemeasures.samhsa.gov/)

This initiative will provide SAMHSA and the States with information that may be used for quality improvement. The NOMs contain 10 distinct domains, including the following outcomes for substance abuse treatment: abstinence, employment (or school), criminal justice, housing, and retention in treatment. The WC measures do not map directly onto the NOMs, but there are important complementarities. By measuring and reporting on initiation and engagement, states can contribute to improving the NOM for increased retention in treatment, because this information allows them to target providers, populations, or levels of service. Many studies of treatment retention indicate that substance abuse clients who remain in treatment longer have better outcomes (Hubbard, Craddock, Flynn, Anderson, & Etheridge, 1997; Hubbard, Craddock, & Anderson, 2003; McLellan, 1997; Simpson, 1995). Improvements after treatment have been found in employment (Arria & TOPPS-II Interstate Cooperative Study Group, 2003; Koenig et al., 2005; Luchansky, Brown et al., 2000; Wickizer et al., 2000; Zarkin, Dunlap, Bray, & Wechsberg, 2002), criminal justice involvement (Hubbard et al., 2003; Luchansky et al., 2002; Luchansky et al., 2007; Luchansky et al., 2006), level of functioning (Conners, Grant, Crone, & Whiteside-Mansell, 2006; McLellan, 1997), and reduced alcohol and other drug use (McLellan, 1997). States’ measurement and reporting of the WC measures therefore have the potential to help more clients become engaged in the early stages of treatment. Early engagement is a prerequisite for achieving longer lengths of stay or treatment completion, which in turn are associated with improvement in these four key outcomes.

5. CONCLUSION

Developing and pilot testing substance abuse performance measures for the public sector is only a first step toward making them useful tools for performance management and quality improvement. Currently, only about a third of the states collect the detailed encounter data required for optimal calculation of these measures. The concepts we propose are simple, but applying them to data and interpreting the results can be complex. Even for states with complete encounter data, accounting for the nuances of treatment patterns requires some intricate calculations. Furthermore, these efforts require data collection, analytic support, and funding that some states may find difficult to obtain. Nevertheless, between 2004 and 2006 a dozen states participated in developing the measures, and half of them also participated in the empirical testing.

Additional efforts related to the Washington Circle measures are needed in several areas. First, and most immediately, states need effective ways to display results and communicate them to providers. While these measures can be powerful tools for targeting quality improvement efforts, ongoing work is needed to develop approaches for giving providers information on a timely basis and in clear formats. Second, more attention is now being paid to leadership and staff relations in provider organizations, drawing on emerging evidence of how the attributes of treatment systems and counselors can influence service quality and institutional openness to innovation (Broome, Flynn, Knight, & Simpson, 2007; Greener, Joe, Simpson, Rowan-Szal, & Lehman, 2007; Joe, Broome, Simpson, & Rowan-Szal, 2007; Simpson, Joe, & Rowan-Szal, 2007). Considering how the Washington Circle measures fit into providers’ organizational context is therefore also a fruitful area for further investigation. Finally, examining costs in relation to the Washington Circle measures will help reveal the business case for using them. If reporting initiation and engagement results leads to targeted quality improvement efforts and subsequently to improved client outcomes, there is potential for savings not only from reduced readmission to substance abuse treatment but also from reduced criminal justice, unemployment, or other related costs.

In the coming decade, the substance abuse treatment field is expected to continue moving toward understanding the need for a recovery-oriented system of care, recognizing substance use as a chronic condition for some individuals, and introducing new payment approaches and quality improvement methods. The Washington Circle measures described here offer system markers for monitoring the impact of these changes over time as the field works toward improving interventions and service quality for individuals in need.

Acknowledgements

This project was supported by the Substance Abuse and Mental Health Services Administration through a supplement to the Brandeis Harvard National Institute on Drug Abuse (NIDA) Center on Managed Care and Drug Abuse Treatment, Grant # P50 DA 010233, and National Institute on Alcohol Abuse and Alcoholism grant # R21 AA014229.

REFERENCES

- Addiction Technology Transfer Center Network [Accessed June 3, 2008];The Shift to Recovery-Oriented Systems of Care. 2008 from http://www.nattc.org/learn/topics/rosc/

- Alterman AI, Langenbucher J, Morrison RL. State-level treatment outcome studies using administrative databases. Eval Rev. 2001;25(2):162–183. doi: 10.1177/0193841X0102500203.

- American Psychiatric Association [Accessed June 3, 2008];Practice Guideline and Resources for Treatment of Patients With Substance Use Disorders. (Second Edition). 2008 from http://www.psychiatryonline.com/pracGuide/pracGuideTopic_5.aspx.

- American Society of Addiction Medicine [Accessed June 3, 2008];Practice Guidelines. 2008 from http://www.asam.org/PracticeGuidlines.html.

- Arria A, TOPPS-II Interstate Cooperative Study Group Drug treatment completion and post-discharge employment in the TOPPS-II Interstate Cooperative Study. Journal of Substance Abuse Treatment. 2003;25(1):9–18. doi: 10.1016/s0740-5472(03)00050-3.

- Broome KM, Flynn PM, Knight DK, Simpson DD. Program structure, staff perceptions, and client engagement in treatment. Journal of Substance Abuse Treatment. 2007;33(2):149–158. doi: 10.1016/j.jsat.2006.12.030.

- Broome KM, Simpson DD, Joe GW. Patient and program attributes related to treatment process indicators in DATOS. Drug Alcohol Depend. 1999;57(2):127–135. doi: 10.1016/s0376-8716(99)00080-0.

- Campbell K. Impact of Record-Linkage Methodology on Performance Indicators and Multivariate Relationships. Journal of Substance Abuse Treatment. 2008 doi: 10.1016/j.jsat.2008.05.004. forthcoming.

- Capoccia VA, Cotter F, Gustafson DH, Cassidy EF, Ford JH, 2nd, Madden L, et al. Making “stone soup”: improvements in clinic access and retention in addiction treatment. Jt Comm J Qual Patient Saf. 2007;33(2):95–103. doi: 10.1016/s1553-7250(07)33011-0.

- Center for Substance Abuse Treatment [Accessed June 3, 2008];National Registry of Evidence-Based Programs and Practices (NREPP) from http://www.nrepp.samhsa.gov.

- Conners NA, Grant A, Crone CC, Whiteside-Mansell L. Substance abuse treatment for mothers: treatment outcomes and the impact of length of stay. J Subst Abuse Treat. 2006;31(4):447–456. doi: 10.1016/j.jsat.2006.06.001.

- Evans E, Longshore D, Prendergast M, Urada D. Evaluation of the Substance Abuse and Crime Prevention Act: client characteristics, treatment completion and re-offending three years after implementation. J Psychoactive Drugs. 2006;(Suppl 3):357–367. doi: 10.1080/02791072.2006.10400599.

- Garner B, Godley M, Funk R, Lee M, Garnick D. Manuscript in progress. at Chestnut Health Systems and Institute for Behavioral Health, Brandeis University; 2008. A validity study of the Washington Circle continuity of care performance measure.

- Garnick DW, Horgan CM, Lee MT, Panas L, Ritter GA, Davis S, et al. Are Washington Circle performance measures associated with decreased criminal activity following treatment? J Subst Abuse Treat. 2007;33(4):341–352. doi: 10.1016/j.jsat.2007.03.002.

- Garnick DW, Lee MT, Chalk M, Gastfriend D, Horgan CM, McCorry F, et al. Establishing the Feasibility of Performance Measures for Alcohol and Other Drugs. Journal of Substance Abuse Treatment. 2002;23:375–385. doi: 10.1016/s0740-5472(02)00303-3.

- Gerstein DR, Datta AR, Ingels JS, Johson RA, Rasinski KA, Schildaus KT, et al. [Accessed June 3, 2008];National Treatment Improvement Evaluation Survey: Final Report. 1997 from http://www.icpsr.org/cocoon/SAMHDA/STUDY/02884.xml.

- Greener JM, Joe GW, Simpson DD, Rowan-Szal GA, Lehman WEK. Influence of organizational functioning on client engagement in treatment. Journal of Substance Abuse Treatment. 2007;33(2):139–147. doi: 10.1016/j.jsat.2006.12.025.

- Harris AHS. VA Care for Substance Use Disorder Patients: Indicators of Facility and VISN Performance (Fiscal Years 2004 and 2005) Program Evaluation and Resource Center and HSR&D Center for Health Care Evaluation; Palo Alto, CA: 2006.

- Horgan C, Garnick D. Background paper for the Institute of Medicine Institute for Behavioral Health, Schneider Institute for Health Policy, Heller School for Social Policy and Management, Brandeis University. 2005. The quality of care for adults with mental and addictive disorders: Issues in performance measurement.

- Hubbard R, Craddock S, Flynn P, Anderson J, Etheridge R. Overview of 1-year follow-up outcomes in the Drug Abuse Treatment Outcome study (DATOS) Psychology of Addictive Behaviors. 1997;11(4):261–278.

- Hubbard RL, Craddock SG, Anderson J. Overview of 5-year followup outcomes in the drug abuse treatment outcome studies (DATOS) J Subst Abuse Treat. 2003;25(3):125–134. doi: 10.1016/s0740-5472(03)00130-2.

- Institute of Medicine . Crossing the Quality Chasm: A New Health System for the 21st Century. National Academies Press; Washington, D.C.: 2001.

- Institute of Medicine . Improving the Quality of Health Care for Mental and Substance Use Conditions. National Academies Press; Washington, D.C.: 2006.

- Joe GW, Broome KM, Simpson DD, Rowan-Szal GA. Counselor perceptions of organizational factors and innovations training experiences. Journal of Substance Abuse Treatment. 2007;33(2):171–182. doi: 10.1016/j.jsat.2006.12.027.

- Koenig L, Siegel JM, Harwood H, Gilani J, Chen YJ, Leahy P, et al. Economic benefits of substance abuse treatment: findings from Cuyahoga County, Ohio. J Subst Abuse Treat. 2005;28(Suppl 1):S41–50. doi: 10.1016/j.jsat.2004.09.004.

- Krumholz HM, Normand S-L, Spertus JA, Shahlan DM, Bradley EH. Measuring performance for treating heart attacks and heart failure: The case for outcomes measurement. Health Affairs. 2007;26(1):75–85. doi: 10.1377/hlthaff.26.1.75.

- Luchansky B, Brown M, Longhi D, Stark K, Krupski A. Chemical dependency treatment and employment outcomes: results from the ‘ADATSA’ program in Washington State. Drug Alcohol Depend. 2000;60(2):151–159. doi: 10.1016/s0376-8716(99)00153-2.

- Luchansky B, He L, Krupski A, Stark KD. Predicting readmission to substance abuse treatment using state information systems. The impact of client and treatment characteristics. J Subst Abuse. 2000;12(3):255–270. doi: 10.1016/s0899-3289(00)00055-9.

- Luchansky B, He L, Longhi D. Substance Abuse Treatment and Arrests: Analyses from Washington State, DSHS Research and Data Analysis Division Fact Sheet 4.42. Washington State Department of Social and Health Services; Seattle, WA: 2002. [Accessed February 1, 2008]. from http://www1.dshs.wa.gov/pdf/hrsa/dasa/ResearchFactSheets/442SubAbusTxArr.pdf.

- Luchansky B, Krupski A, Stark K. Treatment response by primary drug of abuse: Does methamphetamine make a difference? J Subst Abuse Treat. 2007;32(1):89–96. doi: 10.1016/j.jsat.2006.06.007.

- Luchansky B, Nordlund D, Estee S, Lund P, Krupski A, Stark K. Substance abuse treatment and criminal justice involvement for SSI recipients: results from Washington state. Am J Addict. 2006;15(5):370–379. doi: 10.1080/10550490600860171.

- Mant J. Process versus outcome indicators in the assessment of quality of health care. Int J Qual Health Care. 2001;13(6):475–480. doi: 10.1093/intqhc/13.6.475.

- Mark T, Levit K, Vandivort-Warren R, Coffey R, Buck J, the Substance Abuse and Mental Health Services Administration (SAMHSA) Spending Estimates Team Trends in Spending for Substance Abuse Treatment, 1986-2003. Health Affairs (Millwood) 2007;26(4):1118–1128. doi: 10.1377/hlthaff.26.4.1118.

- Mark TL, Vandivort-Warren R, Montejano LB. Factors affecting detoxification readmission: analysis of public sector data from three states. J Subst Abuse Treat. 2006;31(4):439–445. doi: 10.1016/j.jsat.2006.05.019.

- McCarty D, Gustafson DH, Wisdom JP, Ford J, Choi D, Molfenter T, et al. The Network for the Improvement of Addiction Treatment (NIATx): Enhancing access and retention. Drug Alcohol Depend. 2007:138–145. doi: 10.1016/j.drugalcdep.2006.10.009.

- McCorry F, Garnick DW, Bartlett J, Cotter F, Chalk M. Developing performance measures for alcohol and other drug services in managed care plans. Joint Commission Journal on Quality Improvement. 2000;26(11):633–643. doi: 10.1016/s1070-3241(00)26054-9.

- McLellan A. Evaluating effectiveness of addiction treatments: reasonable expectations, appropriate comparisons. In: Egertson J, Fox D, Leshner A, editors. Treating Drug Abusers Effectively. Blackwell Publishers; Malden MA: 1997.

- McLellan AT, Chalk M, Bartlett J. Outcomes, performance, and quality: what’s the difference? J Subst Abuse Treat. 2007;32(4):331–340. doi: 10.1016/j.jsat.2006.09.004.

- National Committee for Quality Assurance [Accessed April 1, 2008];The State of Health Care Quality 2007. 2007 from http://www.ncqa.org/Portals/0/Publications/Resource%20Library/SOHC/SOHC_07.pdf.

- National Committee on Quality Assurance . HEDIS 2008 Technical Specifications. Washington, D.C.: 2008. [Accessed February 2, 2008]. from www.ncqa.org.

- National Institute on Drug Abuse . Principles of Drug Addiction Treatment: A Research-Based Guide. National Institutes of Health; Bethesda, MD.: 1999. [Accessed November 3, 2007]. NIH Publication No. 99-4180. from http://www.nida.nih.gov/PODAT/PODAT1.html.

- National Quality Forum [Accessed March 1, 2007];Evidence-based practices to treat substance use conditions. 2007 from http://www.qualityforum.org/pdf/projects/sud/lsENTIREDRAFT1-22-07.pdf.

- North Carolina Department of Health and Human Services . MH/DD/SAS Community Systems Progress Indicators: Report for First Quarter SFY 2006-2007. Division of Mental Health, Developmental Disabilities, and Substance Abuse Services; Raleigh, North Carolina: 2006. [Accessed November 11, 2007]. from http://www.ncdhhs.gov/mhddsas/statspublications/reports/cspireport_sfy07q1_11-15-06.pdf.

- Oklahoma Department of Mental Health and Substance Abuse Services . Regional Performance Management Report, Report for Second Quarter of FY 2006. Decision Support Services; Oklahoma City, Oklahoma: 2006. [Accessed November 1, 2007]. from http://www.odmhsas.org/eda/rpm/okrpmfy2006q2.pdf.

- SAMHSA . Treatment Episode Data Set (TEDS): 1994-2004 National Admissions to Substance Abuse Treatment Services. Substance Abuse and Mental Health Services Administration, Office of Applied Studies; Rockville, MD: 2006. DASIS Series: S-33, DHHS Publication No. (SMA) 06-4180.

- Simpson D. Client engagement and change during drug abuse treatment. Journal of Substance Abuse Treatment. 1995;7:117–134. doi: 10.1016/0899-3289(95)90309-7.

- Simpson DD. A conceptual framework for drug treatment process and outcomes. J Subst Abuse Treat. 2004;27(2):99–121. doi: 10.1016/j.jsat.2004.06.001.

- Simpson DD, Joe GW, Rowan-Szal GA. Linking the elements of change: Program and client responses to innovation. Journal of Substance Abuse Treatment. 2007;33(2):201–209. doi: 10.1016/j.jsat.2006.12.022.

- Substance Abuse and Mental Health Services Administration [Accessed June 3, 2008];SAMHSA/CSAT Treatment Improvement Protocols. 2008 from http://www.ncbi.nlm.nih.gov/books/bv.fcgi?rid=hstat5.part.22441.

- Thune E. [Accessed June 3, 2008];The National Treatment Improvement Evaluation Study: Retention Analysis (NEDS Analytic Summary #10) 2000 from http://www.icpsr.org/cocoon/SAMHDA/STUDY/02884.xml.

- Wickizer TM, Campbell K, Krupski A, Stark K. Employment outcomes among AFDC recipients treated for substance abuse in Washington State. Milbank Q. 2000;78(4):585–608. doi: 10.1111/1468-0009.00186.

- Wisdom JP, Ford JH, Hayes RA, Edmundson E, Hoffman K, McCarty D. Addiction treatment agencies’ use of data: a qualitative assessment. The Journal of Behavioral Health Services & Research. 2006;33(4):394–407. doi: 10.1007/s11414-006-9039-x.

- Zarkin GA, Dunlap LJ, Bray JW, Wechsberg WM. The effect of treatment completion and length of stay on employment and crime in outpatient drug-free treatment. J Subst Abuse Treat. 2002;23(4):261–271. doi: 10.1016/s0740-5472(02)00273-8.