Summary

Small hospitals sit at the apex of the pyramid of primary care in many low-income country health systems. If the Millennium Development Goal for child survival is to be achieved, hospital care for severely ill, referred children will need to be improved considerably, in parallel with primary care, in many countries. Yet we know little about how to achieve this. We describe the evolution and final design of an intervention study attempting to improve hospital care for children in Kenyan district hospitals. We believe our experience illustrates many of the difficulties involved in reconciling epidemiological rigour and feasibility in studies at a health system rather than an individual level, and the importance of the depth and breadth of analysis when trying to provide a plausible answer to the question: does it work? While there are increasing calls for more health systems research in low-income countries, the importance of strong, broadly-based local partnerships and of long-term commitment, even to initiate projects, is not always appreciated.

Introduction

Under-5 mortality in most of sub-Saharan Africa remains >100/1000 live births and has remained unchanged for a decade, or has risen, in some countries including Kenya [1]. Improving child survival will require better delivery of health services, and in some cases curative care may be at least as cost effective as preventive interventions [2]. Appropriately, therefore, the delivery of health services at the community level and through primary care units has been the subject of considerable global research and calls to action [3,4]. However, district hospitals that provide referral care, and the complex environments in which they operate, have been largely ignored [2,5,6]. We believe that understanding how to improve the performance of district hospitals in settings such as Kenya is also important for the following reasons.

Firstly, referral care for children is commonly required. In sub-Saharan African countries between 6% and 20% of children assessed at primary care units may require referral [7] although often they do not get it [8,9]. Secondly, it has been estimated that effective hospital care can confer a considerable child survival advantage if access is good[10]. Thirdly, district hospital care can be highly cost effective. In Bangladesh the cost per disability adjusted life year (DALY) averted attributable to a small district hospital was estimated to be $11 [11], while Kenyan data suggest that the cost per child life saved by hospital care may be as low as $105 [6]. Fourthly, in many countries the district hospital has a supervisory and peer leadership role within the formal primary care network. If hospitals fail to provide appropriate leadership the whole primary care network is threatened. Finally, hospitals are an established part of many health systems. Even in African countries the hospital sector consumes a major proportion of health care budgets although the relatively poor quality services they provide may limit their effectiveness and produce a poor return for this investment [12].

How hospitals provide services and maximise health benefits are subjects that are therefore highly relevant to improving health systems in low income settings. Yet the question of how to deliver essential services effectively in small hospitals has scarcely been addressed.

The situation in Kenya

In Kenya there are just over 100 government hospitals providing basic referral care, 70 of which are district hospitals that serve and supervise primary care networks. In common with many countries in sub-Saharan Africa these hospitals face problems with infrastructure, equipment, personnel, supplies of resources [2,12,13] and, sometimes, poor management[14]. However, first line therapeutics for the most common diseases are widely available [13] and clinical staff (predominantly clinical officers with a 3-year medical training) and nursing staff are available.

Strategies for intervention

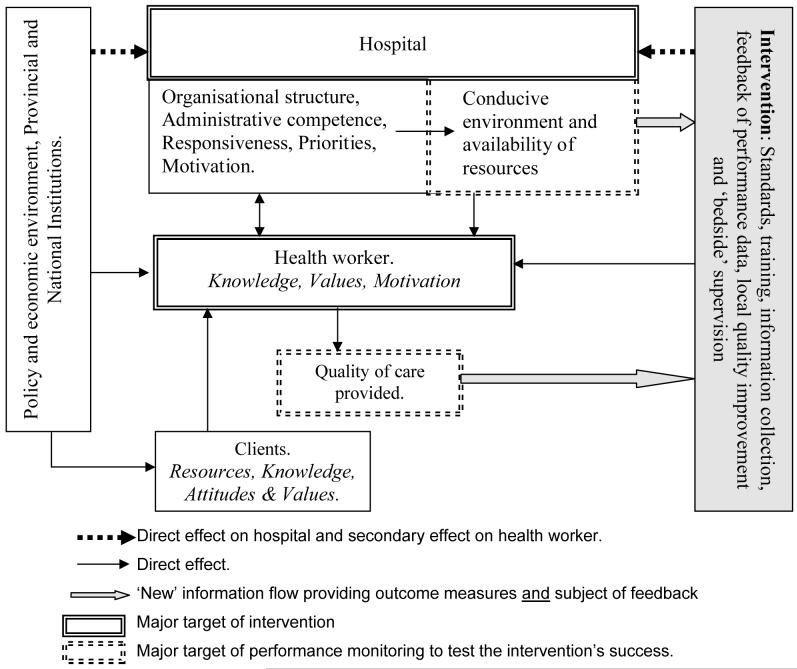

The functioning of district hospitals, as part of complex health systems, is affected by a wide variety of factors (see figure 1). These include effective health policy and regulation and the provision of adequate human, capital and consumable resources[15]. At a local level, resource allocation, individual health worker motivation, organisational structures, institutional and personal values and trust will impact on hospital performance [16,17,18]. The demand for services, reflected by the effectiveness of referral, is also likely to be a key determinant of efficiency, equitable distribution of resources and population health benefits. Given this complexity multiple interventions targeting health system constraints above, within and below the district hospital level are likely to be necessary to optimise performance. Health workers, however, remain central to a health system’s functioning [19].

Figure 1.

A basic framework illustrating the complexity of actors, the centrality of health workers and the major interactions relevant to the provision of improved quality of care.

Health workers and best practice

The desire to ensure that patients are correctly assessed, and receive prompt, safe and effective therapy in an appropriate environment is hardly new. In the most developed countries considerable resources have been invested in evidence based medicine and quality improvement approaches that address these issues. To change health worker behaviour, however, evidence, even in low income settings, suggests that multiple approaches are likely to be required in the form of written, expert guidelines and training combined with job aides, feedback and supervision, or more general quality improvement initiatives [20,21,22,23].

Given the need to improve hospital care for children, and uncertainty about the best means to achieve this, how can health systems research help? Our aim was to examine how paediatric practices could be improved in the setting of typical government hospitals in Kenya without resorting to major resource inputs. We now describe the evolution of a study design, and some of the practical and scientific tensions that helped to shape it, as an illustration of the complexities encountered in undertaking health systems research.

Assessing hospital and clinical performance

The rationale for an intervention to promote evidence-based patient care is that such care is on the causal pathway to better outcomes. Therefore it would be logical to consider reduction in inpatient paediatric mortality as the primary endpoint of any study. However, it soon became apparent that basing an assessment on a reduction in paediatric mortality in hospitals would be problematic, particularly in a setting such as Kenya for reasons listed in Box 1. With these in mind it is clear that the resources required to mount a study demonstrating ‘statistically significant’ reductions in mortality that would not be undermined by worries over bias, residual or unrecognised confounding will be beyond most research teams. If mortality is problematic as the major outcome measure of success then are there appropriate alternatives?

Box 1. Problems in interpreting hospital mortality statistics in low income settings.

Inpatient fatality rates are obviously measured at the hospital level. In a traditional randomised controlled trial each hospital studied therefore represents only 1 observation. The sample size, feasibility and cost implications are obvious.

A focus on mortality may result in other valid outcomes such as efficient resource use, preventing errors, or reducing hospital stay being ignored.

Poor data quality - current health information systems in Kenya function poorly and even at the hospital level deaths may go unrecorded. Paradoxically improving information systems during a study may improve ascertainment and result in an increase in recorded mortality.

Variable case-mix - The pattern of disease (for example prevalence of malaria), and therefore mortality, between hospitals may vary depending on the type of cases seen - the case mix. This could result in considerable residual confounding unless large, random samples of hospitals are studied. While in theory it might be possible to make adjustments for case-mix this demands very high quality data that is very rarely available.

Variable case-severity - There is likely to be variation in the severity of disease at presentation between hospitals. For example, pneumonia mortality rates will vary depending on the proportion of admitted cases with severe or very severe disease. As for case-mix, the lack of high quality case record data limits the ability to account for this.

An intervention may change both case-mix and case severity. For example, a hospital may stop admitting mildly ill children or utilisation could change in response to perceived change in quality of care.

Hospital mortality rates may be confounded by time-dependent factors such as changing hospital funding, natural disasters or epidemics.

The rationale for better case management is that it improves outcomes. If this is true then process indicators that reflect the degree to which best practice care is provided are valid and appropriate endpoints. For example, the proportion of severely dehydrated children receiving fluids of the correct type, volume and rate may be a useful measure. Observations of this type can be made frequently, may be easier to identify and may be less subject to confounding. Critically, substantial changes in measured indicators may occur, also making impact potentially easier to observe. Thus, process measures have many desirable properties: they may permit between health worker and between hospital comparisons; they can target the most desired attributes of service delivery; they are relatively cheap to measure; they can rapidly incorporate new elements; and they provide results that should be intrinsically meaningful to service providers [24,25]. Process measures may, however, be affected by the degree to which inputs (resources) are available, a consideration rarely of concern in developed countries. In addition, there are no defined, accepted process performance measures. To develop these we required a very good understanding of the setting within which care is being delivered. Furthermore, how does one select the important measures from all of the possible measures? In our case key measures were selected on the basis of feasibility and one or more of: a clear, logical link to patient outcomes; a clear and proximate link to the intervention; favourable cost-effectiveness; requirement for minimal resource inputs; or objectivity of the assessment(s) (additional information is available from the authors).
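As a minimal illustration of the dehydration example above, the following sketch computes a hospital-level process indicator from patient-level records. The record layout and field names (`hospital`, `severe_dehydration`, `fluid_type_ok`, `fluid_volume_ok`, `fluid_rate_ok`) are assumptions made for illustration and are not the study's data collection tools; the records are invented.

```python
from collections import defaultdict


def fluid_indicator_by_hospital(records):
    """Return, per hospital, the proportion of severely dehydrated
    children whose fluids were correct in type, volume and rate."""
    # tallies[hospital] = [number treated correctly, number eligible]
    tallies = defaultdict(lambda: [0, 0])
    for rec in records:
        if not rec["severe_dehydration"]:
            continue  # only eligible children enter the denominator
        tally = tallies[rec["hospital"]]
        tally[1] += 1
        if rec["fluid_type_ok"] and rec["fluid_volume_ok"] and rec["fluid_rate_ok"]:
            tally[0] += 1
    return {hosp: ok / eligible for hosp, (ok, eligible) in tallies.items()}


# Invented records for illustration only (hypothetical hospitals H1, H2).
example_records = [
    {"hospital": "H1", "severe_dehydration": True,
     "fluid_type_ok": True, "fluid_volume_ok": True, "fluid_rate_ok": True},
    {"hospital": "H1", "severe_dehydration": True,
     "fluid_type_ok": True, "fluid_volume_ok": True, "fluid_rate_ok": False},
    {"hospital": "H1", "severe_dehydration": False,
     "fluid_type_ok": True, "fluid_volume_ok": True, "fluid_rate_ok": True},
    {"hospital": "H2", "severe_dehydration": True,
     "fluid_type_ok": True, "fluid_volume_ok": True, "fluid_rate_ok": True},
]

indicator = fluid_indicator_by_hospital(example_records)
```

Requiring all three elements to be correct is one possible scoring choice; an indicator could equally be reported separately for type, volume and rate.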

How to intervene?

To change health worker practices and hospital care a suitable intervention is required. In keeping with emerging evidence that multifaceted interventions including training, job aides, feedback, quality improvement and supervision are more likely to be successful we wished to incorporate most of these elements (described briefly in Box 2). However, the lack of available tools or structures in Kenya meant that all of these had to be developed de novo, in collaboration with the Ministry of Health and other stakeholders, keeping in mind what might be sustainable. This process engaged us for three years, with significant effort to ensure what was developed became nationally owned.

Box 2. Package of intervention measures developed by the research team to improve performance in providing paediatric hospital care (see Irimu, G, et al, submitted, for further detail).

Evidence based clinical practice guidelines (CPGs) developed with the Kenyan Ministry of Health and other key stakeholders

Job aides including booklets of CPGs, drug doses, fluid and feed prescription charts, wall charts and structured paediatric admission record forms [2]

A 5.5 day training programme extending WHO’s Emergency Treatment and Triage (ETAT) approach to include management in line with CPGs.

An external supervisory process to mimic a regional supervisory mechanism to be delivered by the research team through visits at least 3 monthly.

Initiation of on-site problem solving or basic quality improvement with direct support for a local, hospital selected, non physician, health worker to act as a facilitator who also provides a telephone link to external, support supervision every 1 to 2 weeks.

Scheme for regular hospital performance assessment and feedback of results on a 6 monthly basis

Study designs that truly inform our understanding of the health system

If our overall aim is to assess the ability of an intervention to achieve practice improvement, assessed with a panel of process measures, the gold standard design remains the randomised controlled trial. When working with hospitals, however, appropriate sample size considerations can pose considerable practical and financial challenges. Additional factors to consider are the types of possible response. Suppose that huge effort and expense results in a trial with strikingly different effect sizes in individual hospitals. Irrespective of whether the overall result is statistically significant would we understand why the intervention worked (or did not work) and would we know how to modify the intervention or the health system to produce more consistent results?

Consider also the nature of the intervention. The size of any effect is likely to be related to the duration and success of support supervision and the capacity to solve problems with implementation. Alternatively time itself, through a changing sociopolitical or general health systems context, might change performance. These issues alone suggest, as has been recently recognised [26], that we need answers to questions that include: What is the pattern of performance improvement over time and how well is the intervention delivered? Is improvement related to the duration and content of supervision? Is performance dependent on sustained supervision? To what degree are hospitals able to solve problems locally? What factors, at national and local levels seem to be important in determining the degree to which hospital performance can be improved? Data collection and analyses also need to acknowledge that while the interventions are delivered at the hospital or health worker level process indicator observations are largely made at the patient level. Thus, data should ideally be of sufficient quality and quantity to allow for health worker and perhaps hospital attributes to be accounted for using statistical models that account for clustering. The demand for large, controlled studies with extremely detailed data collection that all this implies is not easily reconciled with cost-containment - the perennial research dilemma.
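The cost of clustering mentioned above can be sketched with the standard design effect for equal-sized clusters, DEFF = 1 + (m - 1) × ICC, where m is the number of observations per hospital and ICC is the intracluster correlation coefficient. This is a generic textbook approximation, not the analysis planned by the authors, and the ICC value used in the example is purely an assumption.

```python
def design_effect(cluster_size, icc):
    """Design effect for equal-sized clusters: 1 + (m - 1) * ICC."""
    return 1.0 + (cluster_size - 1.0) * icc


def effective_sample_size(n_clusters, cluster_size, icc):
    """Number of independent observations that carry the same information
    as n_clusters * cluster_size clustered observations."""
    total = n_clusters * cluster_size
    return total / design_effect(cluster_size, icc)


# Example: 8 hospitals with 400 records each; ICC = 0.05 is an assumed value.
deff = design_effect(400, 0.05)
ess = effective_sample_size(8, 400, 0.05)

# Limiting case: when outcomes are perfectly correlated within a hospital
# (ICC = 1), each hospital contributes a single effective observation.
ess_fully_clustered = effective_sample_size(8, 400, 1.0)
```

The limiting case mirrors the point made in Box 1 for mortality: an outcome measured only at the hospital level yields one observation per hospital however many children are admitted.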

Our attempt to maximise the information generated by a Kenyan study, while working within a defined resource envelope, comprises a randomised, parallel group, controlled, intervention project with 4 hospitals per group. Also incorporated are: pre-intervention baseline measures in both groups, to provide for comparison of groups at baseline and for within-hospital before and after comparisons; and multiple measures before and after the pre-defined major endpoint in the intervention group, to explore the relationship between intervention delivery and its withdrawal. Further details of the Kenyan study design are included in Box 3.

Box 3. The Kenyan randomised, parallel group, controlled intervention study of an approach to improve paediatric hospital care.

The study is primarily a detailed case study with a before and after, controlled design. Intervention hospitals (n = 4) will receive all of the interventions listed in Box 2, beginning with a single hospital training course, over a period of 1.5 years and will be evaluated six-monthly for 2.5 years. Control hospitals, which for practical and ethical reasons cannot truly receive nothing, will receive job aides identical to those of the intervention group, a 1.5 day lecture-based introductory seminar explaining the guidelines and copies of written survey feedback reports. Control hospital performance will be evaluated at 6 months and at 18 months after the start of the study. All hospitals will be examined at baseline. Research evaluation will include:

Comprehensive ascertainment of paediatric workload and mortality in the preceding 6 months.

Assessment of availability of environmental, capital and consumable resources using a structured checklist, observation and key informant interviews based on tools previously adapted [3] from those of WHO [4].

Assessment of admission case management practices on a random selection of 400 admission episodes from the 6 months period immediately preceding the survey and linked to a unique health worker code for the clinician responsible for the admission.

Prospective evaluation of up to 50 admitted children during the survey periods (2 weeks) allowing all treatment received and the caretaker’s understanding of their child’s illness and any discharge treatment to be documented through observation and interview.

Careful observation or data collection by key informant interview will allow the availability of essential resources and changes in the organisation of care, the evolving nature of the intervention, the role of the facilitator and the role of the research / supervisory team to be collated over time.

These data will describe any temporal association of effect with the duration and nature of the intervention. If changes in the same direction and of the same magnitude are consistently observed across the intervention sites but not observed in control hospitals this will increase the plausibility that the intervention is causing the effect and indicate that the effect is not site specific. Failure to demonstrate improvements or inconsistent patterns of improvement between intervention and control hospitals will weaken the argument that the intervention had a specific effect. Such rich data will be invaluable for informing the design of future attempts to improve hospital care in Kenya and countries with similar health systems.

Which hospitals should (or could) be studied?

Kenya has eight provinces and had, at the time of study design, 70 districts. How does one try to ensure representation of diversity and attempt to limit selection bias with a relatively small sample size? To what degree will demands for research efficiency compromise the value of the results? For example, insisting on a minimum hospital workload to permit time-limited performance assessment, and restricting geographic sampling to limit the number of stakeholders who must be consulted and kept informed, are important practical considerations. But how will they influence the findings or generalisability? In practice selection biases seem almost impossible to overcome, making it imperative that at least the selection process is well documented to aid a study's interpretation (see Box 4 for our compromise).

Box 4. Kenya study hospital selection.

In collaboration with the Kenyan Ministry of Health hospitals in 4 of Kenya’s 8 provinces were initially considered, avoiding areas with existing major hospital management intervention projects. Those with a minimum of 1,000 paediatric admissions and 1,200 deliveries per year were then listed together with important district specific data (see table below).

Present in this basic sampling frame were 8 hospitals included in previous evaluations of hospital care for children undertaken in 2002[1]. Other than a brief feedback visit in early 2003 the investigators had had no subsequent contact with them. As it was felt that even old data on hospital performance would be a clear advantage in understanding how hospitals change these 8 were initially considered for inclusion. However, in an effort to ensure balance if divided into two groups, one of these eight hospitals was replaced with an alternative facility, permitting seven combinations of two relatively balanced groups to be defined, with two hospitals in each of 4 provinces. After obtaining permission from the hospitals one of the seven balanced combinations was selected randomly to define intervention and control groups, described in the table below.
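The selection of one allocation at random from a restricted set of balanced combinations can be sketched as follows. The source does not state how balance was judged, so the criterion used here (a maximum difference in total annual paediatric admissions between arms) is an assumption, as are the function names and the data layout of one pair of hospitals per province.

```python
import itertools
import random


def balanced_allocations(pairs, max_diff):
    """Enumerate allocations that place one hospital from each pair in each
    arm, keeping those whose arms differ by at most max_diff in total
    admissions (a hypothetical balance criterion).

    pairs: list of ((name, admissions), (name, admissions)) tuples.
    Returns a list of (arm_1, arm_2) tuples of hospital lists.
    """
    kept = []
    # Each pair contributes a 0/1 choice of which member goes to arm_1.
    for choice in itertools.product((0, 1), repeat=len(pairs)):
        arm_1 = [pair[c] for pair, c in zip(pairs, choice)]
        arm_2 = [pair[1 - c] for pair, c in zip(pairs, choice)]
        diff = abs(sum(h[1] for h in arm_1) - sum(h[1] for h in arm_2))
        if diff <= max_diff:
            kept.append((arm_1, arm_2))
    return kept


def pick_allocation(allocations, seed=None):
    """Select one acceptable allocation uniformly at random."""
    rng = random.Random(seed)
    return rng.choice(allocations)
```

Because every split appears twice in the enumeration (once with each arm labelled `arm_1`), a uniform draw selects both the split and which arm receives the intervention. Recording the seed and the full list of acceptable allocations is one way to document the selection process.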

Conclusion

Results from this study will be reported in due course together, we are sure, with further lessons learned during its conduct. What we hope to have conveyed here are insights into some of the challenges involved in preparing for and initiating such studies, a process that took almost three years in our case. It should also be clear that in this field each experiment is unique; the conditions under which the study takes place will never be the same again. Interpretation of the results is only possible if we can define as carefully as possible what has been done and in what context. Finally, it should be obvious that strong partnerships with multiple stakeholders are required to undertake health system studies of a significant size. Such partnerships require a considerable investment in time for all parties with no guarantee of success. While there are increasing calls for health systems research in low income settings, little of substance may be delivered unless funding agencies are prepared to invest, for the long term, in partnerships and essential preparatory work, and at substantial scale if epidemiologically rigorous designs are to be employed.

Box 4 Table.

| Hospital | Malaria transmission setting | Antenatal HIV prevalence (High = >10%, Mod = 5 - 10%) | Infant mortality rate, per 1,000 | Catchment population with income below $2 per day | Paediatric admissions per year | Paediatrician & Medical Officer Interns |
|---|---|---|---|---|---|---|
| Intervention | Highland | High | ~ 70 | 50 - 70% | 5,000 | + |
| Control | Highland | High | > 100 | 50 - 70% | 4,500 | + |
| Intervention | Intense | High | > 100 | 50 - 70% | 3,500 | - |
| Control | Intense | High | > 100 | 50 - 70% | 2,500 | - |
| Intervention | Low | Mod | ~ 40 | ~ 35% | 3,300 | - |
| Control | Low | Mod | ~ 40 | ~ 35% | 1,800 | - |
| Intervention | Arid | Mod | ~ 70 | 50 - 70% | 1,700 | - |
| Control | Arid | Mod | ~ 70 | 50 - 70% | 1,100 | - |

Acknowledgements

This manuscript is published with the permission of the Director of KEMRI. The authors would like to thank Prof. Lucy Gilson, Dr. Kara Hanson and Dr. Alex Rowe for specific contributions to the debate on study design, and Prof. Robert Snow, Prof. Kevin Marsh, Dr. Bernhards Ogutu and Prof. Fabian Esamai for contributions to the general study design. The authors would also like to acknowledge the considerable support received in planning this study from the Division of Child Health, the Department of Preventive and Promotive Health, the Department of Standards and Regulatory Services and the Department of Curative and Rehabilitative Services, all in the Ministry of Health in Kenya. Finally, we would like to acknowledge the support of the Wellcome Trust in choosing to fund this work.

Funding.

This work is funded through a Wellcome Trust Senior Research Fellowship awarded to Dr. Mike English (#076827). The funders have played no role in the design of this study.

Footnotes

Conflict of Interest.

The authors have no conflict of interest.

References

- 1. Central Bureau of Statistics, Government of Kenya. Kenya Demographic and Health Survey. Nairobi, Kenya: 2003.

- 2. English M, Lanata C, Ngugi I, Smith P. The District Hospital. In: Jamison D, Alleyne G, Breman J, Claeson M, Evans D, et al., editors. Disease Control Priorities in Developing Countries - 2nd Edition. World Bank; Washington DC: 2006. pp. 1211–1228.

- 3. Bryce J, El Arifeen S, Pariyo G, Lanata C, Gwatkin D, et al. Reducing child mortality: can public health deliver? The Lancet. 2003;362:159–164. doi: 10.1016/s0140-6736(03)13870-6.

- 4. Lee J. Child survival: a global health challenge. The Lancet. 2003;362:262. doi: 10.1016/S0140-6736(03)14006-8.

- 5. Anonymous. Mexico, 2004: Global Health needs a new research agenda. The Lancet. 2004;364:1555–1556. doi: 10.1016/S0140-6736(04)17322-4.

- 6. Lavis J, Posada FB, Haines A, Osei E. Use of research to inform public policymaking. The Lancet. 2004;364:1615–1621. doi: 10.1016/S0140-6736(04)17317-0.

- 7. Simoes E, Peterson S, Gamatie Y, Kisanga F, Mukasa G, et al. Management of severely ill children at first-referral level facilities in sub-Saharan Africa when referral is difficult. Bulletin of the World Health Organisation. 2004;81:522–531.

- 8. Font F, Quinto L, Masanja H, Nathan R, Ascaso C, et al. Paediatric referrals in rural Tanzania: the Kilombero District Study - a case series. BMC International Health and Human Rights. 2002;2:4. doi: 10.1186/1472-698X-2-4.

- 9. Peterson S, Nsungwa-Sabiiti J, Were W, Nsabagasani X, Magumba G, et al. Coping with paediatric referral - Uganda parents’ experience. The Lancet. 2004;363:1955–1956. doi: 10.1016/S0140-6736(04)16411-8.

- 10. Snow R, Mungala V, Forster D, Marsh K. The role of the district hospital in child survival at the Kenyan Coast. Afr J Health Sci. 1994;1:11–15.

- 11. McCord C, Chowdhury Q. A cost effective small hospital in Bangladesh: what it can mean for emergency obstetric care. International Journal of Gynecology and Obstetrics. 2003;81:83–92. doi: 10.1016/s0020-7292(03)00072-9.

- 12. Nolan T, Angos P, Cunha A, Muhe L, Qazi S, et al. Quality of hospital care for seriously ill children in less-developed countries. The Lancet. 2000;357:106–110. doi: 10.1016/S0140-6736(00)03542-X.

- 13. English M, Esamai E, Wasunna A, Were F, Ogutu B, et al. Delivery of paediatric care at the first-referral level in Kenya. The Lancet. 2004;364:1622–1629. doi: 10.1016/S0140-6736(04)17318-2.

- 14. Owino W, Korir J. Public Health Sector Efficiency in Kenya: Estimation and Policy Implications. Institute of Policy Analysis and Research; Nairobi: 1997.

- 15. Oliveira-Cruz V, Hanson K, Mills A. Approaches to overcoming health system constraints at the peripheral level: review of the evidence. Commission on Macroeconomics and Health. 2001.

- 16. Barnum H, Kutzin J. Public Hospitals in Developing Countries. The Johns Hopkins University Press; Baltimore: 1993.

- 17. Blaauw D, Gilson L, Penn-Kekana L, Schneider H. Organisational relationships and the ‘software’ of health sector reform. Washington, DC: 2003. Disease Control Priorities Project Background Paper.

- 18. Franco L, Bennett S, Kanfer R, Stubblebine P. Determinants and consequences of health worker motivation in hospitals in Jordan and Georgia. Social Science & Medicine. 2003;58:343–355. doi: 10.1016/s0277-9536(03)00203-x.

- 19. Hongoro C, McPake B. How to bridge the gap in human resources for health. The Lancet. 2004;364:1451–1456. doi: 10.1016/S0140-6736(04)17229-2.

- 20. Grol R. Implementation of evidence and guidelines in clinical practice: a new field in research. International Journal for Quality in Healthcare. 2000;12:455–456. doi: 10.1093/intqhc/12.6.455.

- 21. Lanier D, Roland M, Burstin H, Knottnerus J. Doctor performance and public accountability. The Lancet. 2003;362:1404–1408. doi: 10.1016/S0140-6736(03)14638-7.

- 22. O’Brien T, Freemantle N, Oxman A, Wolf F, Davis D, et al. Continuing education meetings and workshops: effects on professional practice and health care outcomes. The Cochrane Library. 2002.

- 23. O’Brien T, Oxman A, Davis D, Haynes R, Freemantle N, et al. Audit and feedback: effects on professional practice and health care outcomes. The Cochrane Library. 2002.

- 24. Lilford R, Mohammed M, Spiegelhalter D, Thompson R. Use and misuse of process and outcome data in managing performance of acute medical care: avoiding institutional stigma. The Lancet. 2004;363:1147–1154. doi: 10.1016/S0140-6736(04)15901-1.

- 25. Rubin HR, Pronovost P, Diette GB. The advantages and disadvantages of process-based measures of health care quality. International Journal for Quality in Health Care. 2001;13:469–474. doi: 10.1093/intqhc/13.6.469.

- 26. Victora C, Habicht J, Bryce J. Evidence-based public health: moving beyond randomized trials. American Journal of Public Health. 2004;94:400–405. doi: 10.2105/ajph.94.3.400.