Abstract

Objective

To use an advance in data envelopment analysis (DEA) called congestion analysis to assess the trade-offs between quality and efficiency in U.S. hospitals.

Study Setting

Urban U.S. hospitals in 34 states operating in 2004.

Study Design and Data Collection

Input and output data from 1,377 urban hospitals were taken from the American Hospital Association Annual Survey and the Medicare Cost Reports. Nurse-sensitive measures of quality came from the application of the Patient Safety Indicator (PSI) module of the Agency for Healthcare Research and Quality (AHRQ) Quality Indicator software to State Inpatient Databases (SID) provided by the Healthcare Cost and Utilization Project (HCUP).

Data Analysis

In the first step of the study, hospitals’ relative output-based efficiency was determined in order to obtain a measure of congestion (i.e., the productivity loss due to the occurrence of patient safety events). The outputs were adjusted to account for this productivity loss, and a second DEA was performed to obtain input slack values. Differences in slack values between unadjusted and adjusted outputs were used to measure either relative inefficiency or a need for quality improvement.

Principal Findings

Overall, the hospitals in our sample produced, on average, about 26 percent less output than they could have produced on the best-practice frontier with the same inputs. Congestion accounted for a loss of about 3 percent of total production. Analysis of subsamples showed that teaching hospitals experienced no congestion loss. We found that quality of care could be improved by increasing the number of labor inputs in low-quality hospitals, whereas high-quality hospitals tended to have slack on personnel.

Conclusions

Results suggest that reallocation of resources could increase the relative quality among hospitals in our sample. Further, higher quality in some dimensions of care need not come at the price of higher costs or reduced access to health care.

Keywords: Hospital efficiency, data envelopment analysis, congestion, patient safety, nurse-sensitive outcomes

Much is yet to be learned about the interaction of cost, efficiency, and quality. It is not clear that cost containment and quality improvement are mutually consistent objectives. Quality improvement can result in greater resource use because it may require more or better resources. Yet, proponents of total quality management (TQM) argue that it is possible to reduce costs and increase quality simultaneously through efficiency gains.

In this study, we use an advance in data envelopment analysis (DEA), termed congestion analysis, to ascertain whether some hospitals might be experiencing poor quality that could be corrected by changing the number and mix of inputs. Unlike the basic DEA model or stochastic frontier analysis (SFA), the most prominent frontier methods of estimating hospital efficiency, congestion analysis explicitly considers the possibility that some health care outputs can be undesirable (e.g., patient safety events). An advantage of our approach is that we can measure the percentage by which total outputs could be increased, as well as how poor quality affects productivity. Further, by conducting slack analysis, we can determine which measured inputs are inefficient and do not add to quality, as well as which inputs should be increased (and by how much) to enhance quality. In addition to assessing quality and efficiency simultaneously, we can also categorize hospitals into peer groups based on efficiency. Our results have the potential to be actionable by providers and policy makers.

LITERATURE REVIEW

The literature provides mixed findings about the relationship among costs, efficiency, and quality. On the one hand, it has been consistently demonstrated that increases in the proportion of registered nurses (RNs) in the nursing mix of hospitals are associated with lower rates of certain patient safety events. See Haberfelde, Bedecarre, and Buffum (2005) for a review.

The use of technologically advanced capital and services has also been associated with higher quality care (Dranove and White 1998; Picone et al. 2003). However, increases in RNs and sophisticated technology should also increase costs, ceteris paribus; therefore, higher costs (and perhaps higher inefficiency) may be positively related to higher reservation quality and/or higher levels of quality. Carey and Burgess (1999) and Deily and McKay (2006) tested this relationship empirically, but in both studies higher cost or cost inefficiency was associated with poorer quality, as measured by risk-adjusted mortality rates. However, the authors of both studies suggest that their results may be due to inadequate controls for case-mix severity.

The works of McCloskey (1998), Blegen, Goode, and Reed (1998), and Blegen and Vaughan (1998) offer additional insight into the relationship between quality and inefficiency. They found that increasing the number of RNs enhances quality, but only up to a point, after which it decreases efficiency. Therefore, it may be assumed that excessive labor and capital inputs lead to inefficiency, not to higher quality. Indeed, even though Picone et al. (2003) found that slack resources and capacity are inputs to quality, too much slack or capacity will also lead to inefficiency.

Recognizing the importance of this topic, McKay and Deily (2005) call for more research on the trade-off between quality and efficiency. They specifically focus on the issue of nurse staffing. They write, “Recent studies have highlighted the role of nursing quality as a major determinant of high quality care. An unanswered question, however, is how staffing quality affects performance when defined in terms of both quality and efficiency” (p. 357).

In the next section we describe DEA and congestion analysis. We then proceed to a description of the data and the results. The paper concludes with a discussion of the practical implications of our findings for providers and policy makers.

METHODS

The two major frontier techniques that have been used most frequently to estimate inefficiency at the hospital level are DEA and SFA. DEA is a deterministic approach based on the works by Debreu (1951) and Farrell (1957) and updated in terms of economic efficiency and productivity by Färe, Grosskopf, and Lovell (1994). Coelli et al. (2005) and Aaronson et al. (2006) suggest that contextual circumstances should determine which technique is selected when a choice between DEA and SFA has to be made. As our focus is on patient safety in a multi-input, multi-output framework, we opt for a DEA approach that specifically incorporates undesirable outputs. SFA is incapable of doing this.

DEA derives a best practice frontier by solving linear programming problems, which identify those hospitals maximizing outputs given inputs. Individual hospital performance is a proportional measure gauged relative to the frontier that is defined by hospitals deemed to be the best performers. A score of 1.00 indicates that a hospital is operating on the best practice frontier (i.e., that it is efficient). A score >1.00 indicates inefficiency, with the difference between the actual score and 1.00 measuring the proportion by which all outputs could be increased, holding inputs constant. In some instances, a hospital may be unlike all others in the sample, in which case it would receive an efficiency score of 1.00 because it lies on its own frontier. Therefore, we emphasize that efficiency is a product of the distribution of hospitals and that this performance measure is a relative measure of productivity and not an absolute measure of efficiency in the engineering sense.
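To make the scoring rule concrete, here is a minimal sketch of an output-oriented DEA linear program, assuming NumPy and SciPy (`scipy.optimize.linprog` with the HiGHS solver) are available. It covers only the basic model in which all outputs are desirable (strong disposability), under CRS by default or VRS via a convexity constraint. The function name `output_efficiency` and the toy data are hypothetical; this is an illustration, not the authors' code or the exact specification in Appendix A.

```python
import numpy as np
from scipy.optimize import linprog


def output_efficiency(X, Y, o, vrs=False):
    """Output-oriented DEA score (Farrell measure, >= 1) for hospital `o`.

    X: (J, N) inputs and Y: (J, M) outputs, one row per hospital.
    Solves  max theta
            s.t. sum_j lam_j * y_jm >= theta * y_om   for every output m
                 sum_j lam_j * x_jn <= x_on           for every input n
                 lam_j >= 0, plus sum_j lam_j = 1 under VRS.
    All outputs are treated as desirable (strong disposability).
    """
    X = np.asarray(X, dtype=float)
    Y = np.asarray(Y, dtype=float)
    J, N = X.shape
    M = Y.shape[1]
    # Decision vector z = [theta, lam_1, ..., lam_J]; linprog minimizes, so use -theta.
    c = np.zeros(J + 1)
    c[0] = -1.0
    # Output rows: theta * y_om - sum_j lam_j * y_jm <= 0
    A_out = np.hstack([Y[o].reshape(M, 1), -Y.T])
    # Input rows: sum_j lam_j * x_jn <= x_on
    A_in = np.hstack([np.zeros((N, 1)), X.T])
    A_ub = np.vstack([A_out, A_in])
    b_ub = np.concatenate([np.zeros(M), X[o]])
    A_eq, b_eq = None, None
    if vrs:
        # Convexity constraint sum_j lam_j = 1 turns the CRS model into VRS.
        A_eq = np.concatenate([[0.0], np.ones(J)]).reshape(1, J + 1)
        b_eq = np.array([1.0])
    res = linprog(c, A_ub=A_ub, b_ub=b_ub, A_eq=A_eq, b_eq=b_eq,
                  bounds=[(0, None)] * (J + 1), method="highs")
    if not res.success:
        raise RuntimeError(res.message)
    return res.x[0]


# Hypothetical toy data: one input and one output for three hospitals.
X = [[1.0], [2.0], [4.0]]
Y = [[1.0], [3.0], [4.0]]
crs_scores = [output_efficiency(X, Y, o) for o in range(3)]            # about [1.5, 1.0, 1.5]
vrs_scores = [output_efficiency(X, Y, o, vrs=True) for o in range(3)]  # about [1.0, 1.0, 1.0]
```

In this toy example the second hospital has the highest output/input ratio (1.5), so it alone defines the CRS frontier and the other two receive scores of 1.5. All three lie on the VRS frontier, so their CRS inefficiency is entirely scale inefficiency (CRS/VRS = 1.5).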

Many benefits of DEA have been cited in the literature. Coelli et al. (2005) provide a comprehensive discussion, and we provide a brief summary in Appendix A. Two benefits of DEA that are particularly germane to our research are as follows. First, hospitals located in the interior of the frontier are strictly inefficient. This permits additional analyses exploring factors that separate best practice performers from less efficient producers. Second, we can decompose the DEA total efficiency measure into its various sources of inefficiency as shown in the following equation:

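$$\text{Total efficiency} = \text{Pure technical efficiency} \times \text{Scale efficiency} \times \text{Congestion} \qquad (1)$$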

Total efficiency is measured under the assumptions of constant returns to scale (CRS) (i.e., productivity does not change with size) and strong disposability of outputs (SDO) (i.e., all outputs are considered desirable). Pure technical efficiency, measured under variable returns to scale (VRS), captures only the input–output correspondence, absent any scale or congestion effects. The ratio CRS/VRS measures scale efficiency attributable to hospital size. Congestion is derived by comparing productivity under the assumptions of strong and weak disposability of outputs (WDO) (i.e., some outputs may be undesirable). In the approach taken here, we follow Balk's (1998) assertion that no complete production study can ignore the output of undesirables, particularly from a social point of view. Earlier studies have used congestion analysis to account for pollution (Färe, Grosskopf, and Lovell 1994), hospital uncompensated care (Valdmanis, Kumaranayake, and Lertiendumrong 2004; Ferrier, Rosko, and Valdmanis 2006), and hospital mortality rates (Clement et al. 2008).
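For readers who prefer symbols, equation (1) can be written in the output-oriented notation of Färe, Grosskopf, and Lovell (1994), as we understand it. The shorthand below is our own assumption, not the notation of Appendix A: $F_o(x, y \mid T, D)$ is the Farrell output measure for a hospital with inputs $x$ and outputs $y$, where $T \in \{C, V\}$ denotes constant or variable returns to scale and $D \in \{S, W\}$ denotes strong or weak disposability of outputs.

$$
F_o(x,y \mid C,S) = \underbrace{F_o(x,y \mid V,W)}_{\text{pure technical}} \times \underbrace{\frac{F_o(x,y \mid C,S)}{F_o(x,y \mid V,S)}}_{\text{scale}} \times \underbrace{\frac{F_o(x,y \mid V,S)}{F_o(x,y \mid V,W)}}_{\text{congestion}}
$$

The right-hand side telescopes to the left-hand side, so the decomposition is an identity. Because variable returns to scale and weak disposability each shrink the set of feasible outputs, every factor is at least 1, and a congestion factor of exactly 1 means that the weak-disposability restriction does not bind for that hospital.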

As this derivation is described elsewhere (Valdmanis, Kumaranayake, and Lertiendumrong 2004; Ferrier, Rosko, and Valdmanis 2006; Clement et al. 2008), we describe only the essence of the model here. A mathematical exposition of this approach is provided in Appendix A.

Total efficiency is the product of all sources of inefficiency, as seen in equation (1). (Interested readers should see Färe, Grosskopf, and Lovell [1994] for more information regarding this decomposition and the relevant economic proofs.) Here, we focus on quality congestion as it affects the total production of hospital care. While an input minimization approach has typically been used in health care DEA studies, our quality-congestion analysis requires an output orientation because a high volume of patients served may be associated with the occurrence of more patient safety events. In other words, we need to measure how patient safety may be compromised if too many outputs are being produced, holding inputs fixed.

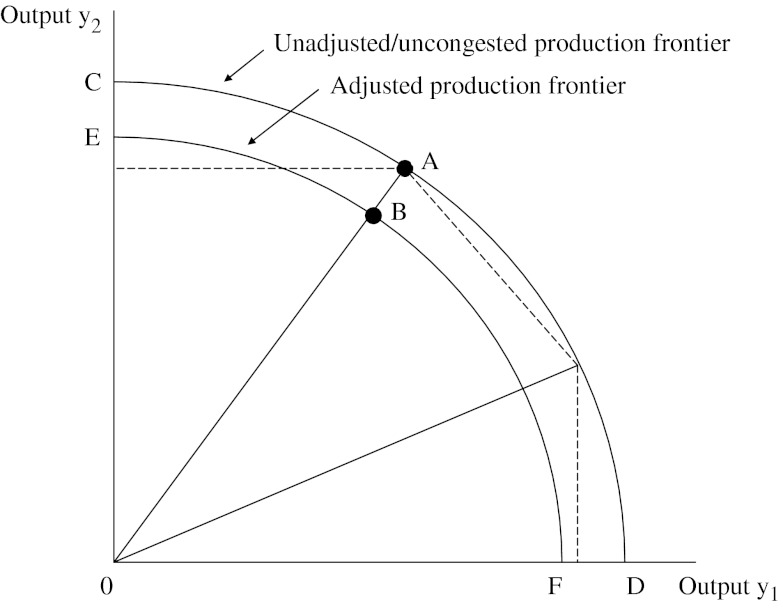

Under the SDO assumption, all outputs are desirable and can be freely disposed of, so reducing one output may allow another output to increase. In contrast, the WDO assumption treats the expansion of some outputs as undesirable. The WDO technology is illustrated in Figure 1. Two production frontiers (i.e., maximum combinations of outputs that can be produced with fixed levels of inputs), represented by curves CD and EF, are shown in the figure. The distance between points B and A on the two output-based production frontiers illustrates congestion: good output cannot be expanded without increasing the “bad” output. Unlike the typical output substitution in productivity studies (i.e., where one good is substituted or traded off for the production of another good), the good and bad outputs move in the same direction, as shown by the line in the figure. If congestion did not occur, adjusted output would lie on the higher production frontier, CD (i.e., the downward adjustment would be 0).

Figure 1.

Comparison of Unadjusted and Adjusted Production Frontiers

Once the quality-congestion measure is derived, we can adjust outputs to account for poor quality. This is accomplished by dividing the outputs by the quality-congestion measure, thereby discounting unadjusted output to form quality-adjusted output. If high-output hospitals show no quality congestion, this would be consistent with a volume–outcome relationship.
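A minimal sketch of this adjustment follows, assuming outputs are stored as a hospitals-by-outputs NumPy array and congestion scores as a vector with one score (at least 1) per hospital. The function name `quality_adjust` is hypothetical. Because the same score divides every output of a given hospital, the adjustment shrinks that hospital's output bundle proportionally.

```python
import numpy as np


def quality_adjust(outputs, congestion_scores):
    """Divide each hospital's outputs by its quality-congestion score.

    outputs: array of shape (J, M), one row per hospital.
    congestion_scores: array of shape (J,), each >= 1 (1.0 = no congestion).
    """
    outputs = np.asarray(outputs, dtype=float)
    cong = np.asarray(congestion_scores, dtype=float)
    if np.any(cong < 1.0):
        raise ValueError("congestion scores are expected to be >= 1")
    # Broadcasting divides every output in row j by the score of hospital j.
    return outputs / cong[:, None]


# Hypothetical example: the uncongested hospital is unchanged,
# while the congested one is scaled down by 1/1.25.
adjusted = quality_adjust([[100.0, 50.0], [200.0, 80.0]], [1.0, 1.25])
```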

High-volume hospitals have been shown to have lower mortality rates than low-volume hospitals for certain technology-intensive, complicated procedures. A considerable body of literature supports the statistical association between high procedure volume and better outcomes. See Dudley et al. (2000) for a review. Staff expertise developed as a result of learning by doing has been hypothesized as an explanation for this relationship, although the reasons for the association are still not entirely understood (Elixhauser, Steiner, and Fraser 2003).

Despite the benefits of DEA identified above, there are some drawbacks. DEA has been criticized for its deterministic nature, which assumes no measurement error (Newhouse 1994). But the stochastic nature of demand may lead hospital decision makers to overestimate resource needs. Depending on one's perspective, this might overstate inefficiency. For example, assuming fixed resources and random patterns of admissions (Grannemann, Brown, and Pauly 1986), unoccupied beds might represent reservation quality (Joskow 1980) rather than slack resources. Conversely, operations that include poor forecasting and inflexible staffing systems could be accurately characterized as inefficient. The reality is probably a mix of the two situations, so the inefficiency estimates might be better viewed as an upper bound as there is no compensation for periods of excessive utilization.

DEA has also been criticized for its inability to capture quality differences (Newhouse 1994). However, with our data we can capture more quality differences than previous DEA studies, and this should partially address the second criticism.

A recent DEA-based study by Clement et al. (2008) empirically assessed how poor quality outcomes detract from overall hospital productivity. This was accomplished by applying the WDO technology to a sample of hospitals to measure the production of undesirable outputs, such as in-hospital mortality. We follow the approach used in Clement et al. (2008) but expand upon it in two ways. First, we define undesirable outcomes using the subset of the Agency for Healthcare Research and Quality (AHRQ) Patient Safety Indicators (PSIs) that are nurse-sensitive, rather than the AHRQ Inpatient Quality Indicators (IQIs) used by Clement et al. (2008), and we adjust outputs accordingly. Second, we compare input slack values (i.e., excess inputs) in the model where all outputs are considered desirable with those in the model where outputs are adjusted to account for relatively poor quality. Below, we discuss the insights we can gain by making these comparisons.

Slack is derived using the input-based Cooper, Seiford, and Zhu (2000) approach.1 Using the results derived from this second-step analysis, we compare the differences in input slack values for high-quality hospitals with those for medium- and low-quality hospitals. For example, we can analyze the over- or under-utilization of nurses by hospital quality status. If the difference between slacks is positive, it would suggest that the hospital is employing excess input that leads to inefficiency. If the difference is negative, it implies that inputs need to be increased to improve quality of care; its magnitude indicates how much the input would need to increase.
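The sign rule above can be sketched as a small helper. It assumes the slack differentials have already been computed as described in the text (their exact construction is given in Appendix A, which is not reproduced here); the function name and dictionary layout are hypothetical. As a usage example, it is applied to the low-quality column of the slack differentials reported in Table 4.

```python
def interpret_slack_differentials(diffs):
    """Translate slack differentials into the reading used in the text.

    diffs: mapping of input name -> slack differential.
    Positive -> excess input (inefficiency): the input could be decreased.
    Negative -> input shortfall: the input would need to increase by |d|
    to improve quality.
    """
    readings = {}
    for name, d in diffs.items():
        if d > 0:
            readings[name] = ("decrease", d)
        elif d < 0:
            readings[name] = ("increase", -d)
        else:
            readings[name] = ("no change", 0.0)
    return readings


# Low-quality hospitals: slack differentials taken from Table 4.
low_quality = {
    "FTE RNs": -2.88,
    "FTE LPNs": -1.35,
    "FTE other personnel": -40.71,
    "Other beds": 0.52,
    "Acute beds": 0.51,
}
for name, (action, amount) in interpret_slack_differentials(low_quality).items():
    print(f"{name}: {action} by {amount:g}")
```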

Unlike congestion, slack does not impede total production, but it may represent either a quality-enhancing input or excess input leading to inefficiency. (Note that while the congestion measure is multiplicative, the slack measure is additive.)

The identification of slack values can also direct management's attention to areas where inefficiency exists, so that managers can adjust inputs accordingly to optimize both production and quality of care (Sherman 1984). Therefore, this analysis can show managers how much their hospital needs to increase an input (to increase quality) or decrease an input (to reduce inefficiency) compared with its peers.

We also assess quality differentials and slack by organizational factors (ownership, teaching status, resource expenditures, payer-mix, and system membership) and market characteristics (health maintenance organization [HMO] penetration and hospital competition). We conclude by analyzing quality and slack in order to develop a direct link between production performance and input slack.

DATA

Data come from the American Hospital Association (AHA) Annual Survey of Hospitals, augmented by variables from the Medicare Hospital Cost Reports (for number of patient days in nonacute care units), AHRQ (for measures of patient safety and hospital competition), and Solucient Inc. (Evanston, IL) (for data on county-level HMO enrollment and number of residents without health insurance). Hospitals included in this study are those defined by the AHA as short-term community hospitals that report complete data. Because quality variables from the application of the PSI module of the AHRQ Quality Indicator (QI) software2 to the Healthcare Cost and Utilization Project (HCUP)3 State Inpatient Databases (SID)4 were central to this analysis, this study was restricted to the 34 states5 supplying HCUP data. This yielded an analytical file of 1,377 urban hospitals in 2004.

As with any model, the selection of inputs and outputs may affect the final results and/or the ranking of hospitals in terms of quality.6 Mindful of this concern, we follow the previous literature in selecting inputs and outputs. Our inputs include bassinets, acute beds (i.e., the number of licensed and staffed beds minus the number of beds in nonacute units, such as long-term care), licensed and staffed “other” beds, FTE (full-time equivalent) RNs, licensed practical nurses (LPNs), medical residents, and other personnel. Outputs include Medicare Case Mix Index (MCMI) adjusted admissions (MCMI × admissions), total surgeries (inpatient + outpatient surgeries), total outpatient visits (ER visits + outpatient visits), total births, and total other patient days (i.e., patient days in nonacute care units).7 This specification is consistent with previous hospital DEA studies that typically use a mix of inpatient and outpatient care variables and specify surgery separately from total admissions.8 (See Hollingsworth [2003] for a complete review.)
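The constructed outputs and the acute-bed input described above can be expressed as a small sketch. The record layout and field names are hypothetical stand-ins for the underlying AHA and Medicare Cost Report variables; only the arithmetic follows the definitions in the text.

```python
from dataclasses import dataclass


@dataclass
class HospitalRecord:
    # Hypothetical field names, not actual AHA or Medicare Cost Report codes.
    mcmi: float                   # Medicare Case Mix Index
    admissions: float
    inpatient_surgeries: float
    outpatient_surgeries: float
    er_visits: float
    outpatient_visits: float
    births: float
    nonacute_patient_days: float  # patient days in nonacute care units
    licensed_staffed_beds: float
    nonacute_beds: float          # beds in nonacute units (e.g., long-term care)


def acute_beds(r: HospitalRecord) -> float:
    # Licensed and staffed beds minus beds in nonacute units.
    return r.licensed_staffed_beds - r.nonacute_beds


def dea_outputs(r: HospitalRecord) -> dict:
    return {
        "adjusted_admissions": r.mcmi * r.admissions,
        "total_surgeries": r.inpatient_surgeries + r.outpatient_surgeries,
        "total_outpatient_visits": r.er_visits + r.outpatient_visits,
        "births": r.births,
        "other_patient_days": r.nonacute_patient_days,
    }


# Hypothetical hospital record, for illustration only.
rec = HospitalRecord(1.5, 1000, 300, 700, 20000, 80000, 500, 4000, 250, 30)
outputs = dea_outputs(rec)
```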

Measures of undesirable events include the following risk-adjusted PSIs that Savitz, Jones, and Bernard (2005) indicate are sensitive to nurse staffing: failure to rescue (RPPS04), infection due to medical care (RPPS07), postoperative respiratory failure (RPPS11), and postoperative sepsis (RPPS13). Decubitus ulcer and postoperative pulmonary embolism (PE) or deep vein thrombosis (DVT) are also nurse-sensitive measures of quality; however, Houchens, Elixhauser, and Romano (2008) found that a high percentage of these events are present on admission (POA). Therefore, they are not valid measures of hospital quality, and we exclude them from our study.

In a secondary analysis, we examine the relationship between DEA-based inefficiency estimates and various correlates of inefficiency. We include an array of internal factors, beginning with ownership, which reflects the role of property rights. Teaching status is regularly included as an organizational feature that may affect a hospital's productive performance. System membership is also included in our study as systems generally have better control of resource use and are better able to exploit bulk purchasing (and other types of discounts) than independent institutions (Lindrooth, Bazzoli, and Clement 2007).

Higher resource use in hospitals has also been associated with higher quality. Therefore, we include the number of high-technology services offered,9 cost per case-mix-adjusted admission (MCMI × admissions) and outpatient volume, the amount of capital expended per bed (depreciation + interest expense), and the ratio of FTE personnel to both adjusted admissions and beds.

We also analyze a variety of variables related to patient and payer mix, including the following percentages: births to total admissions, emergency room (ER) visits to total outpatient visits, outpatient surgeries to total outpatient visits, and Medicaid and Medicare admissions to total admissions, as well as average length of stay.

Historically, hospitals often competed on a nonprice basis, which resulted in the duplication of services. More recent literature on market competition generally finds more efficient, less costly hospitals in more competitive markets. Therefore, we include the county-level Herfindahl–Hirschman index (HHI) to reflect the amount of competition faced by the hospitals in our sample.10 We also use variables compiled by Solucient Inc. to reflect HMO penetration and the percentage of the population without health insurance in the county where the hospital is located as measures of financial pressure. (We used 2002 data for the Solucient variables, as these were the most recent data available to us.)
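For reference, a county-level HHI is the sum of squared market shares of the hospitals in a county. The sketch below assumes shares are expressed as fractions (so the index runs from near 0 to 1, matching the scale in Table 1) and are computed from some positive volume measure. The exact measure used here is specified in note 10, which is not reproduced in this section, so the choice of volume and the function name `county_hhi` are assumptions.

```python
from collections import defaultdict


def county_hhi(hospitals):
    """Herfindahl-Hirschman index by county.

    hospitals: iterable of (county, volume) pairs with positive volumes,
    e.g., hypothetical admission counts. Shares are fractions, so the HHI
    lies in (0, 1]; 1.0 means a single hospital serves the county.
    """
    hospitals = list(hospitals)  # iterated twice below
    totals = defaultdict(float)
    for county, volume in hospitals:
        totals[county] += volume
    hhi = defaultdict(float)
    for county, volume in hospitals:
        share = volume / totals[county]
        hhi[county] += share ** 2
    return dict(hhi)


# Two equal-sized competitors in county A versus a single hospital in county B.
print(county_hhi([("A", 100), ("A", 100), ("B", 250)]))
```

Higher values indicate more concentrated (less competitive) markets, which is why a lower average HHI is read as more competition.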

Descriptive statistics of the inputs, outputs, and environmental factors used in our analysis are presented in Table 1.

Table 1.

Descriptive Statistics—Inputs and Outputs (N=1,377)

| Variable | Mean | SD |
|---|---|---|
| Data envelopment analysis (DEA) inputs | | |
| Bassinets | 21.09 | 19.00 |
| Full-time equivalent registered nurses (FTE RNs) | 390.43 | 382.25 |
| FTE licensed practical nurses (LPNs) | 34.95 | 39.15 |
| FTE other personnel | 1,020.76 | 1,004.67 |
| Other beds | 43.48 | 58.52 |
| Acute beds | 224.54 | 172.05 |
| FTE interns/residents | 32.64 | 111.02 |
| DEA outputs | | |
| Outpatient visits | 190,452.69 | 211,619.08 |
| Total surgeries | 10,047.11 | 8,201.15 |
| Births | 1,636.70 | 1,666.58 |
| Adjusted admissions | 20,341.69 | 17,928.23 |
| Other patient days | 14,649.93 | 15,781.39 |
| “Bad” outcomes | | |
| Failure to rescue (RPPS04) | 0.34 | 0.06 |
| Infection due to medical care (RPPS07) | 0.04 | 0.02 |
| Postoperative respiratory failure (RPPS11) | 0.09 | 0.04 |
| Postoperative sepsis (RPPS13) | 0.09 | 0.06 |
| Environmental and organizational factors | | |
| Total expenditures ($) | 180,959,044 | 186,383,950 |
| Number of high-tech services | 6.70 | 4.12 |
| Births (%) | 12.12 | 7.91 |
| Emergency room (ER) visits (%) | 29.62 | 17.33 |
| Outpatient surgeries (%) | 4.79 | 5.66 |
| Medicaid (%) | 16.70 | 10.72 |
| Medicare (%) | 42.42 | 12.21 |
| Occupancy rate (%) | 65.31 | 14.73 |
| Cost/adjusted admission ($) | 13,118.15 | 5,439.35 |
| Average length of stay (days) | 5.16 | 2.44 |
| Capital/bed ($) | 48,996.28 | 26,414.05 |
| FTE/adjusted admission | 0.11 | 0.04 |
| FTE/beds | 5.32 | 1.97 |
| Percent of population in county without health insurance* | 14.16 | 6.94 |
| Percent of population in county covered by health maintenance organization (HMO)* | 24.63 | 13.41 |
| Herfindahl–Hirschman index (HHI)* | 0.33 | 0.29 |
| Frequency (%) | | |
| COTH | 12 | |
| Minor teaching | 22 | |
| Nonteaching | 66 | |
| FP | 17 | |
| Government | 12 | |
| NFP | 71 | |
| Member of a system | 64 | |

*These are market-level variables.

COTH, Council of Teaching Hospitals; FP, for-profit hospitals; NFP, not-for-profit hospitals.

RESULTS

We begin our results section with a presentation of the output-based measures for the unadjusted sample.11 In Table 2, we present the results for the output-based efficiency measures, as well as statistical analysis of these efficiency measures by organizational factors.

Table 2.

Descriptive Statistics—Efficiency Scores (Output-Based Data Envelopment Analysis [DEA]) (N=1,371)

| Score | Mean | SD | Minimum | Maximum |
|---|---|---|---|---|
| Constant returns to scale (CRS) efficiency | 1.35 | 0.02 | 1.00 | 2.43 |
| Variable returns to scale (VRS) efficiency | 1.09 | 0.12 | 1.00 | 2.28 |
| Scale efficiency | 1.24 | 0.21 | 1.00 | 2.13 |
| Congestion | 1.03 | 0.09 | 1.00 | 2.28 |

On average, under CRS technology, our sample hospitals produced 25.9 percent [(1.35−1)/1.35] less output than the best-practice frontier would allow with the same inputs. Under VRS technology, the corresponding shortfall is 8.3 percent. The share of total production lost due to congestion is 2.9 percent. The average scale inefficiency, 19.4 percent, dominates pure technical inefficiency, implying that hospitals may be either too large or too small.
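The percentages above can be reproduced from the mean scores in Table 2. The sketch uses the conversion (θ − 1)/θ shown in the text, which expresses the gap between observed and frontier output as a share of frontier output; the proportional expansion of observed output implied by the same score would instead be θ − 1. Applying the formula to mean scores, as here, is not the same as averaging hospital-level percentages. The function name is hypothetical.

```python
def shortfall_pct(theta):
    """Share of frontier output not produced, in percent: (theta - 1) / theta."""
    return (theta - 1.0) / theta * 100.0


# Mean output-based scores from Table 2.
table2_means = {"CRS": 1.35, "VRS": 1.09, "Scale": 1.24, "Congestion": 1.03}
for name, theta in table2_means.items():
    print(f"{name}: {shortfall_pct(theta):.1f}%")
# CRS: 25.9%, VRS: 8.3%, Scale: 19.4%, Congestion: 2.9%
```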

Given the wide variation among the efficiency scores, we are interested in whether organizational factors may be relevant in explaining the differences among the sample hospitals. We present efficiency scores by organizational factors in Table 3.

Table 3.

Mean Efficiency Score Values (Output-Based Data Envelopment Analysis [DEA]) and Statistically Significant Wilcoxon Tests by Ownership Status, Teaching Status, and System Membership (N=1,371)

| Characteristic | CRS Efficiency | VRS Efficiency | Scale Efficiency | Congestion† |
|---|---|---|---|---|
| Ownership | | | | |
| Public | 1.38*** | 1.09 | 1.26*** | 1.04 |
| Not-for-profit (NFP) | 1.36 | 1.09 | 1.25 | 1.03 |
| For-profit (FP) | 1.29 | 1.08 | 1.19 | 1.03 |
| Teaching | | | | |
| COTH | 1.43*** | 1.05*** | 1.37*** | 1.00*** |
| Minor teaching | 1.36 | 1.08 | 1.25 | 1.02 |
| Nonteaching | 1.33 | 1.10 | 1.21 | 1.04 |
| System membership | | | | |
| Member | 1.33** | 1.08** | 1.23 | 1.02* |
| Nonmember | 1.39 | 1.10 | 1.26 | 1.04 |

***p<.001.

**p<.01.

*p<.1.

†Although these means appear very similar, the Wilcoxon test is based on rank scores; by this test, public and NFP hospitals are more highly congested than FP hospitals.

COTH, Council of Teaching Hospitals; CRS, constant returns to scale; VRS, variable returns to scale.

We find that public hospitals in our sample were the most inefficient on all four measures of inefficiency (i.e., overall, technical, scale, and quality congestion). Not-for-profit (NFP) hospitals were the next most inefficient category, although we found little difference between public and NFP hospitals. For-profit (FP) hospitals performed best, on average.

Turning next to teaching status, compared with hospitals with either a minor teaching program or no teaching program, COTH member hospitals were significantly less efficient on the overall and scale measures but outperformed the other two types of hospitals in both pure technical efficiency and quality congestion. The latter result is consistent with the observation that major teaching hospitals provide better quality of care (Taylor, Whellan, and Sloan 1999).

Interorganizational arrangements may also affect efficiency. System-member hospitals outperformed nonsystem hospitals on all four measures of inefficiency. Therefore, system membership appears to be associated with better efficiency.

Building on the quality framework in this paper, we also assess the slack values from each of the two production frontiers: one based on all outputs (which we call the unadjusted frontier) and the other based on the adjusted frontier, where we discount outputs by quality congestion to account for poor outcomes. Few of the differences by organizational factors and environmental effects were statistically significant, and those that were showed no consistent pattern. For descriptive statistics for variables with statistically significant slack by organizational factors and environmental effects, see Tables A1 and A2 in Appendix A.

We next assess how environmental factors and slack values are associated with the quality provided by the hospitals in our sample. To do this, we separate hospitals into three subgroups according to their quality congestion scores. Those without congestion (i.e., quality congestion scores of 1.0) are classified as high quality (n=901), while the cut-off score between medium-quality (n=233) and low-quality (n=237) hospitals, based on the median value for congested hospitals, was 1.04. These findings are given in Table 4.
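The grouping rule can be sketched as follows. The tolerance for treating a score as exactly 1.0 and the handling of a score exactly equal to the cut-off (assigned to the low-quality group here) are assumptions, since the text does not specify them; the function names and toy scores are hypothetical.

```python
import statistics


def median_cutoff(scores, tol=1e-9):
    """Median congestion score among congested hospitals (score > 1)."""
    congested = [s for s in scores if s > 1.0 + tol]
    return statistics.median(congested)


def quality_group(score, cutoff, tol=1e-9):
    """Classify a hospital by its quality-congestion score."""
    if score <= 1.0 + tol:
        return "high"    # no congestion
    if score < cutoff:
        return "medium"
    return "low"         # ties at the cut-off go to "low" (an assumption)


# Hypothetical congestion scores for five hospitals.
scores = [1.0, 1.0, 1.02, 1.04, 1.10]
cut = median_cutoff(scores)                       # 1.04 for these toy scores
groups = [quality_group(s, cut) for s in scores]  # high, high, medium, low, low
```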

Table 4.

Quality-Based Results (High Quality: CONG=1; Medium Quality: 1<CONG<1.04; Low Quality: CONG>1.04)†: Environmental Factors and Slack Differentials

| | High Quality (n=901) | Medium Quality (n=233) | Low Quality (n=237) |
|---|---|---|---|
| Overall data envelopment analysis (DEA) efficiency estimate* | 1.30 | 1.43 | 1.44 |
| Organizational and environmental factors | | | |
| Total expenditures ($)* | 198,944,690 | 187,362,856 | 106,287,500 |
| High-tech services* | 7.06 | 6.90 | 5.20 |
| Medicare (%)** | 42.05 | 41.87 | 44.40 |
| Occupancy rate (%)* | 66.68 | 66.08 | 59.37 |
| Average length of stay (days)* | 5.31 | 4.88 | 4.88 |
| Cost/admission ($)* | 13,442 | 13,012 | 11,990 |
| Capital/bed ($)* | 50,511 | 51,005 | 50,511 |
| Full-time equivalent (FTE)/bed** | 5.32 | 5.60 | 5.04 |
| Health maintenance organization (HMO) (%)** | 25.46 | 22.38 | 23.67 |
| Herfindahl–Hirschman index (HHI)* | 0.30 | 0.36 | 0.43 |
| Slack differentials | | | |
| Bassinets*** | 6.26 | 5.99 | 5.99 |
| FTE registered nurses (RNs) | 0.09 | 2.28 | −2.88 |
| FTE licensed practical nurses (LPNs)**** | −0.82 | 3.35 | −1.35 |
| FTE other personnel** | 4.74 | 13.02 | −40.71 |
| FTE interns/residents | 1.12 | 1.06 | −6.26 |
| Other beds**** | −0.22 | −0.0001 | 0.52 |
| Acute beds*** | −0.03 | −0.54 | 0.51 |
| Congestion score* | 1.00 | 1.02 | 1.16 |

*p<.001.

**p<.01.

***p<.05.

****p<.10.

†A congestion score of 1 indicates no congestion (i.e., no crowding out of goods by bads). The cut-off of 1.04 between medium and low quality is the median congestion score among congested hospitals: congested hospitals below the median are classified as medium quality and those above it as low quality.

In general, our results show that higher total expenditures and greater availability of high-technology services were related to high quality at statistically significant levels (p<.05). Associated with this finding is our result that teaching hospitals in our sample experienced no congestion. In addition, high-quality hospitals had higher mean overall efficiency than the lower-quality hospitals. The main differences in patient mix among the three levels of hospital quality were in the Medicare share and HMO enrollment, and definite trends emerged regarding resources expended per patient. Hospitals providing higher quality were also in more competitive markets, as indicated by their lower average HHI.

Turning next to slack values and quality, we find that patterns are most similar between high-quality and medium-quality hospitals. Specifically, efficiency could be improved if high- and medium-quality hospitals used fewer other personnel and bassinets. High-quality hospitals needed to cut fewer other personnel than medium-quality hospitals. The only variable for which the sign of the slack differential diverged was LPNs: high-quality hospitals had too few, while medium-quality hospitals had too many. Low-quality hospitals would need to increase inputs in the LPN and other personnel labor categories and decrease inputs in each of the capital categories.

DISCUSSION

Before discussing our results, it is important to mention some limitations of our analysis. First, DEA assumes that there is no measurement error. While we see little potential for systematic bias, measurement error quite likely exists. Therefore, it is better to focus on broad trends (e.g., in what areas do low-quality hospitals tend to have slack or insufficient inputs?) than on specific point estimates. Second, as in most studies of efficiency or costs, there is always the potential for specification error (i.e., omission of important variables).

We found that certain hospital characteristics are associated with inefficiency and quality congestion. The mean overall inefficiency score was 1.35 (i.e., on average, hospitals produced about 26 percent less output than the frontier would allow). Quality congestion accounted for a loss of about 3 percent of total production. Using this measure, we adjusted hospital outputs to determine whether increases in any of the inputs could improve quality or would merely add to overall inefficiency.

Consistent with property rights theory, FP hospitals exhibited the least inefficiency relative to either public or NFP hospitals. We also found that most hospitals in our sample were operating at diseconomies of scale, which suggests that slack, especially on beds, may be a factor leading to inefficiency. The finding that public hospitals tend to have too many acute care beds was supported by their relatively high scale inefficiency (operating at diseconomies of scale). The analysis of slack on inputs by ownership showed that public hospitals tended to have too many other personnel.

Teaching hospitals were relatively more efficient in terms of pure technical efficiency and were not congested; the main source of their inefficiency was diseconomies of scale. Hospitals that were members of systems performed better than nonmembers, suggesting that this type of organization may lead to better performance.
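
Group comparisons such as teaching versus nonteaching hospitals can be tested with the Wilcoxon rank-sum test named in Table A1. A minimal sketch using SciPy's `mannwhitneyu` (the Mann-Whitney U test is equivalent to the Wilcoxon rank-sum test) follows; the scores, the group flags, and the helper name `compare_by_group` are hypothetical.

```python
import numpy as np
from scipy.stats import mannwhitneyu


def compare_by_group(values, in_group):
    """Two-sided Wilcoxon rank-sum (Mann-Whitney U) test by a binary flag."""
    values = np.asarray(values, dtype=float)
    in_group = np.asarray(in_group, dtype=bool)
    res = mannwhitneyu(values[in_group], values[~in_group],
                       alternative="two-sided")
    return res.statistic, res.pvalue


# Hypothetical output-oriented scores (1.00 = efficient) and teaching flags.
scores = [1.05, 1.30, 1.10, 1.35, 1.12, 1.40, 1.15, 1.45, 1.20, 1.50]
teaching = [True, False] * 5
u, p = compare_by_group(scores, teaching)
print(f"U = {u:.1f}, p = {p:.4f}")
```

A rank-based test suits DEA scores, which are bounded at 1 and typically skewed, so a normality assumption would be hard to defend.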

When comparing environmental variables and slack, we found that higher resource use, in terms of total expenditures and high-technology equipment, was associated with higher quality. Higher-quality hospitals also operated, on average, in markets with a higher percentage of HMO enrollees and more hospital competition. Interestingly, these hospitals also had relatively less labor per bed than medium-quality hospitals.
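
Associations between slack values and environmental factors, as reported in Table A2, can be measured with Spearman rank correlations. A minimal sketch using SciPy's `spearmanr` follows; the slack series, the factor names (`hmo_share`, `hhi`), and the helper `slack_correlations` are hypothetical.

```python
from scipy.stats import spearmanr


def slack_correlations(slack, factors):
    """Spearman rho and p-value between one input's slack and each factor."""
    out = {}
    for name, values in factors.items():
        rho, p = spearmanr(slack, values)
        out[name] = (float(rho), float(p))
    return out


# Hypothetical data for five hospitals: slack on one labor input and two
# market factors.
rn_slack = [5.0, 3.0, 4.0, 2.0, 1.0]
factors = {
    "hmo_share": [0.10, 0.20, 0.30, 0.40, 0.50],
    "hhi": [0.6, 0.5, 0.9, 0.7, 0.8],
}
results = slack_correlations(rn_slack, factors)
print(results)
```

Rank correlation only assumes a monotone relationship, which fits slack values that are often zero for many hospitals and large for a few.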

Organizationally, high-quality hospitals tended to have too many labor inputs, leading to inefficiency. Low-quality hospitals, however, hired too few labor inputs in all categories, especially FTE other personnel. Notably, hospitals producing many poor outcomes appear to have a serious need for more LPNs.

One of the main benefits of the DEA approach adopted here is that hospitals are compared with their peers. This approach gives policy makers and hospital managers a more realistic picture than a theoretical engineering standard that hospitals may or may not be able to achieve. Because we can identify sources of inefficiency as they relate to quality enhancement, decision makers can alter input mixes to realize savings from reducing both inefficiency and poor quality. Our results add to the evidence that cost and quality need not be traded off. Indeed, hospitals characterized as high quality tended to have higher overall efficiency than the other hospitals in our study. Thus, our results indicate that both objectives can be met through more efficient use of the resources at hand.

Acknowledgments

Joint Acknowledgement/Disclosure Statement: Dr. Ryan Mutter is an employee of AHRQ, which provides the HCUP data and the Quality Indicator (QI) software used in this paper.

The authors gratefully acknowledge the data organizations in participating states that contributed data to HCUP and that we used in this study: the Arizona Department of Health Services; California Office of Statewide Health Planning and Development; CHIME Inc. (Connecticut); Florida Agency for Health Care Administration; Georgia Hospital Association; Hawaii Health Information Corporation; Illinois Health Care Cost Containment Council; Indiana Hospital and Health Association; Kansas Hospital Association; Kentucky Cabinet for Health and Family Services; Maryland Health Services Cost Review Commission; Massachusetts Division of Health Care Finance and Policy; Michigan Health and Hospital Association; Minnesota Hospital Association; Missouri Hospital Industry Data Institute; Nebraska Hospital Association; University of Nevada, Las Vegas; New Hampshire Department of Health and Human Services; New Jersey Department of Health and Senior Services; New York State Department of Health; North Carolina Department of Health and Human Services; Ohio Hospital Association; Oregon Association of Hospitals and Health Systems; Rhode Island Department of Health; South Carolina State Budget and Control Board; South Dakota Association of Healthcare Organizations; Tennessee Hospital Association; Texas Department of State Health Services; Utah Department of Health; Vermont Association of Hospitals and Health Systems; Virginia Health Information; Washington State Department of Health; West Virginia Health Care Authority; and Wisconsin Department of Health and Family Services.

Disclosures: None.

Disclaimer: This paper does not represent the policy of either the AHRQ or the U.S. Department of Health and Human Services (DHHS). The views expressed herein are those of the authors and no official endorsement by AHRQ or DHHS is intended or should be inferred.

NOTES

The Cooper, Seiford, and Zhu (2000) model is similar to the Färe and Grosskopf (2001) model, which we use here. The only difference is that Cooper, Seiford, and Zhu add a nonnegative slack variable to the input constraint, and this slack is then minimized. For further discussion of the differences between these approaches, see Cooper, Seiford, and Zhu (2000) and Färe and Grosskopf (2001).

AHRQ makes this software available for free on its website, http://www.qualityindicators.ahrq.gov.

HCUP is a family of health care databases and related software tools developed through a Federal–State–Industry partnership to build a multi-state health data resource for health care research and decision making. For more information, go to http://www.hcup-us.ahrq.gov/home.jsp.

For each participating state, the SID contains the discharge record for every inpatient hospitalization that occurred. For more information, see http://www.hcup-us.ahrq.gov/sidoverview.jsp.

The 34 states are Arizona, California, Connecticut, Florida, Georgia, Hawaii, Illinois, Indiana, Kansas, Kentucky, Maryland, Massachusetts, Michigan, Minnesota, Missouri, Nebraska, New Hampshire, New Jersey, New York, Nevada, North Carolina, Ohio, Oregon, Rhode Island, South Carolina, South Dakota, Tennessee, Texas, Utah, Vermont, Virginia, Washington, West Virginia, and Wisconsin.

Sensitivity analyses had found that sample means and hospital rankings were not affected by any of the 10 different DEA specifications (Valdmanis 1992).

Endogeneity might be a problem for this analysis: an omitted factor could cause both inputs and outputs to be higher, in which case we would be attributing the effect of that third factor to an included input.

At first glance, including both total surgeries and total admissions might appear to double count outputs. However, the former measures the number of procedures and the latter the number of patients. Because surgeries are often considered outputs separate from patient admissions or patient days, we believe there is no double counting in terms of inputs.

Zuckerman, Hadley, and Iezzoni (1994) developed an index based on eight high-technology services. In 2004, the AHA Annual Survey of Hospitals changed the classification system for hospital services. Several services were split into two or more related services (e.g., transplant services were split into seven distinct types of transplants). Counting each separately listed service that was related to the index originally developed by Zuckerman, Hadley, and Iezzoni (1994), we included 17 services.

HCUP provides a variety of public-use hospital competition measures based on the research of Wong, Zhan, and Mutter (2005) at http://www.hcup-us.ahrq.gov/toolssoftware/hms/hms.jsp. This paper uses the 2004 county-level HHI based on discharges.
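
A discharge-based HHI is the sum of squared market shares of the hospitals in a market. A minimal sketch follows, assuming shares are computed from discharge counts within each county and expressed on a 0-1 scale (multiply by 10,000 for the antitrust convention). The record layout and hospital IDs are hypothetical, and this is not HCUP's implementation.

```python
from collections import defaultdict


def county_hhi(records):
    """Discharge-based HHI per county on a 0-1 scale.

    records: iterable of (county, hospital_id, discharges) tuples.
    """
    by_county = defaultdict(lambda: defaultdict(float))
    for county, hospital, discharges in records:
        by_county[county][hospital] += discharges
    hhi = {}
    for county, hospitals in by_county.items():
        total = sum(hospitals.values())
        # Sum of squared discharge shares within the county.
        hhi[county] = sum((d / total) ** 2 for d in hospitals.values())
    return hhi


records = [
    ("County A", "h1", 600), ("County A", "h2", 400),
    ("County B", "h3", 250),
    ("County C", "h4", 100), ("County C", "h5", 100), ("County C", "h6", 100),
]
hhi = county_hhi(records)
print(hhi)
```

A monopoly county scores 1.0 and n equal-sized hospitals score 1/n, so higher values mean less competition.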

Outliers might arise from data or measurement errors. When productivity or efficiency is measured under VRS technology, however, outliers are typically self-referent, meaning that they define their own efficiency score. We checked whether this was the case and found that a minority of hospitals with scores of 1.00 (efficient) were indeed unique. Nevertheless, we retained them in our analysis, particularly when comparing efficiency with environmental factors.
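
One way to run the self-referent check described in this note is to solve the VRS DEA for every hospital and flag efficient hospitals that never appear with positive weight in any other hospital's reference set. A minimal sketch with SciPy's `linprog` follows. The toy data and helper names are hypothetical, this is not necessarily the authors' procedure, and when an LP has alternative optima the peer sets (and hence the flags) can depend on the solver.

```python
import numpy as np
from scipy.optimize import linprog


def vrs_output_lp(X, Y, k):
    """Output-oriented VRS DEA for unit k; returns (theta, peer weights)."""
    J = X.shape[0]
    c = np.zeros(J + 1)
    c[0] = -1.0  # maximize theta
    A_ub = np.vstack((np.hstack((Y[k][:, None], -Y.T)),
                      np.hstack((np.zeros((X.shape[1], 1)), X.T))))
    b_ub = np.concatenate((np.zeros(Y.shape[1]), X[k]))
    A_eq = np.concatenate(([0.0], np.ones(J)))[None, :]  # sum of weights = 1
    res = linprog(c, A_ub=A_ub, b_ub=b_ub, A_eq=A_eq, b_eq=[1.0],
                  bounds=[(0, None)] * (J + 1), method="highs")
    return -res.fun, res.x[1:]


def self_referent_units(X, Y, tol=1e-6):
    """Efficient units (theta = 1) that serve as a peer for no other unit."""
    results = [vrs_output_lp(X, Y, k) for k in range(X.shape[0])]
    flagged = []
    for j, (theta_j, _) in enumerate(results):
        if theta_j > 1.0 + tol:
            continue  # inefficient units cannot be frontier outliers
        used_by_others = any(lam[j] > tol
                             for k, (_, lam) in enumerate(results) if k != j)
        if not used_by_others:
            flagged.append(j)
    return flagged


# Hypothetical toy data: hospital 0 is efficient only because no other
# hospital is as small, so it benchmarks nobody but itself.
X = np.array([[1.0], [2.0], [4.0], [4.0], [3.0]])
Y = np.array([[1.0], [3.0], [4.0], [2.0], [2.0]])
print(self_referent_units(X, Y))
```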

SUPPORTING INFORMATION

Additional supporting information may be found in the online version of this article:

Appendix SA1: Author Matrix.

Appendix A1: Benefits of DEA.

Table A1: Mean Slack Values and Statistically Significant Wilcoxon Tests between Slack Values and Organizational Factors (statistically significant relationships only; N = 1,371).

Table A2: Statistically Significant Spearman Correlations Between Slack Values and Organization and Environmental Factors (N = 1,371).

Please note: Wiley-Blackwell is not responsible for the content or functionality of any supporting materials supplied by the authors. Any queries (other than missing material) should be directed to the corresponding author for the article.

REFERENCES

- Aaronson WE, Bernet PM, Pilyavsky A, Rosko M, Valdmanis V. East–West: Does It Make a Difference to Hospital Efficiencies in Ukraine? Health Economics. 2006;15(11):1175–86. doi: 10.1002/hec.1120.

- Balk B. Industrial Price, Quantity, and Productivity Indices: The Micro-Economic Theory and an Application. Boston: Kluwer Academic Publishers; 1998.

- Blegen M, Goode C, Reed L. Nurse Staffing and Patient Outcomes. Nursing Research. 1998;47:43–50. doi: 10.1097/00006199-199801000-00008.

- Blegen M, Vaughan T. A Multi-Site Study of Nurse Staffing and Patient Occurrences. Nursing Economic$. 1998;16:196–203.

- Carey K, Burgess J. On Measuring the Hospital Cost/Quality Trade-Off. Health Economics. 1999;8:509–20. doi: 10.1002/(sici)1099-1050(199909)8:6<509::aid-hec460>3.0.co;2-0.

- Clement J, Valdmanis V, Bazzoli G, Zhao M, Chukmaitov A. Is More Better? An Analysis of Hospital Outcomes and Efficiency with a DEA Model of Output Congestion. Health Care Management Science. 2008;11:67–77. doi: 10.1007/s10729-007-9025-8.

- Coelli T, Rao D, O'Donnell C, Battese G. An Introduction to Efficiency and Productivity Analysis. 2nd ed. New York: Springer; 2005.

- Cooper W, Seiford L, Zhu J. A Unified Additive Model Approach for Evaluating Efficiency and Congestion. Socio-Economic Planning Sciences. 2000;34:1–36.

- Debreu G. The Coefficient of Resource Utilization. Econometrica. 1951;19(3):273–92.

- Deily M, McKay N. Cost Inefficiency and Mortality Rates in Florida Hospitals. Health Economics. 2006;15:419–31. doi: 10.1002/hec.1078.

- Dranove D, White W. Medicaid-Dependent Hospitals and Their Patients: How Have They Fared? Health Services Research. 1998;33(part 1):163–86.

- Dudley RA, Johansen K, Brand R, Rennie D, Milstein A. Selective Referral to High-Volume Hospitals: Estimating Potentially Avoidable Deaths. Journal of the American Medical Association. 2000;283:1159–66. doi: 10.1001/jama.283.9.1159.

- Elixhauser A, Steiner C, Fraser I. Volume Thresholds and Hospital Characteristics in the United States. Health Affairs. 2003;22:167–77. doi: 10.1377/hlthaff.22.2.167.

- Färe R, Grosskopf S. When Can Slacks Be Used to Identify Congestion? An Answer to W. W. Cooper, L. Seiford, and J. Zhu. Socio-Economic Planning Sciences. 2001;35:217–21.

- Färe R, Grosskopf S, Lovell CK. Production Frontiers. Cambridge: Cambridge University Press; 1994.

- Farrell M. The Measurement of Productive Efficiency. Journal of the Royal Statistical Society, Series A (General). 1957;120(3):253–81.

- Ferrier G, Rosko M, Valdmanis V. Analysis of Uncompensated Hospital Care Using a DEA Model of Output Congestion. Health Care Management Science. 2006;9:181–8. doi: 10.1007/s10729-006-7665-8.

- Grannemann TW, Brown RS, Pauly MV. Estimating Hospital Costs: A Multiple-Output Analysis. Journal of Health Economics. 1986;5:107–27. doi: 10.1016/0167-6296(86)90001-9.

- Haberfelde M, Bedecarre D, Buffum M. Nurse-Sensitive Patient Outcomes: An Annotated Bibliography. Journal of Nursing Administration. 2005;35(6):293–9. doi: 10.1097/00005110-200506000-00005.

- Hollingsworth B. Non-Parametric and Parametric Applications Measuring Efficiency in Health Care. Health Care Management Science. 2003;6(4):203–18. doi: 10.1023/a:1026255523228.

- Houchens R, Elixhauser A, Romano P. How Often Are Potential Patient Safety Events Present on Admission? Joint Commission Journal on Quality and Patient Safety. 2008;34:154–63. doi: 10.1016/s1553-7250(08)34018-5.

- Joskow P. The Effects of Competition and Regulation on Hospital Bed Supply and the Reservation Quality of the Hospital. Bell Journal of Economics. 1980;11:421–47.

- Lindrooth R, Bazzoli G, Clement J. Hospital Reimbursement and Treatment Intensity. Southern Economic Journal. 2007;73:575–87.

- McCloskey J. Nurse Staffing and Patient Outcomes. Nursing Outlook. 1998;46:199–200. doi: 10.1016/s0029-6554(98)90047-1.

- McKay N, Deily M. Comparing High- and Low-Performing Hospitals under Risk-Adjusted Excess Mortality and Cost Inefficiency. Health Care Management Review. 2005;30:347–60. doi: 10.1097/00004010-200510000-00009.

- Newhouse J. Frontier Estimation: How Useful a Tool for Health Economics? Journal of Health Economics. 1994;13(3):317–22. doi: 10.1016/0167-6296(94)90030-2.

- Picone G, Sloan F, Chou S, Taylor D. Does Higher Hospital Cost Imply Higher Quality of Care? Review of Economics and Statistics. 2003;85(1):51–62.

- Savitz L, Jones C, Bernard S. Quality Indicators Sensitive to Nurse Staffing in Acute Care Settings. Advances in Patient Safety: From Research to Implementation. Volume 4. AHRQ Publication No. 050021 (4). Rockville, MD: Agency for Healthcare Research and Quality; 2005.

- Sherman D. Hospital Efficiency Measurement and Evaluation: Empirical Test of a New Technique. Medical Care. 1984;22:922–35. doi: 10.1097/00005650-198410000-00005.

- Taylor D, Whellan D, Sloan F. Effects of Admission to a Teaching Hospital on the Cost and Quality of Care for Medicare Beneficiaries. New England Journal of Medicine. 1999;340(4):293–9. doi: 10.1056/NEJM199901283400408.

- Valdmanis V. Sensitivity Analysis for DEA Models: An Empirical Example Using Public versus NFP Hospitals. Journal of Public Economics. 1992;48:185–205.

- Valdmanis V, Kumaranayake L, Lertiendumrong J. Capacity of Thai Public Hospitals and the Production of Care for Poor and Non-Poor Patients. Health Services Research. 2004;39(6, part 2):2117–34. doi: 10.1111/j.1475-6773.2004.00335.x.

- Wong H, Zhan C, Mutter R. Do Different Measures of Hospital Competition Matter in Empirical Investigations of Hospital Behavior. Review of Industrial Organization. 2005;26:27–60.

- Zuckerman S, Hadley J, Iezzoni L. Measuring Hospital Efficiency with Frontier Cost Functions. Journal of Health Economics. 1994;13:255–80. doi: 10.1016/0167-6296(94)90027-2.
