Abstract

Objective

This study examined rapid word learning in 5- to 14-year-old children with normal and impaired hearing. The effects of age and receptive vocabulary were examined as well as those of high-frequency amplification. Novel words were low-pass filtered at 4 kHz (typical of current amplification devices) and at 9 kHz. It was hypothesized that: 1) the children with normal hearing would learn more words than the children with hearing loss, 2) word learning would increase with age and receptive vocabulary for both groups, and 3) both groups would benefit from a broader frequency bandwidth.

Design

Sixty children with normal hearing and 37 children with moderate sensorineural hearing losses participated in this study. Each child viewed a 4-minute animated slideshow containing 8 nonsense words created using the 24 English consonant phonemes (3 consonants per word). Each word was repeated 3 times. Half of the 8 words were low-pass filtered at 4 kHz and half were filtered at 9 kHz. After viewing the story twice, each child was asked to identify the words from among pictures in the slide show. Prior to testing, a measure of current receptive vocabulary was obtained using the Peabody Picture Vocabulary Test (PPVT-III).

Results

The PPVT-III scores of the hearing-impaired children were consistently poorer than those of the normal-hearing children across the age range tested. A similar pattern of results was observed for word learning: the performance of the hearing-impaired children was significantly poorer than that of the normal-hearing children. Further analysis of the PPVT-III and word-learning scores suggested that although word learning was delayed in the hearing-impaired children, their performance was consistent with their receptive vocabularies. Additionally, no correlation was found between overall performance and the age of identification, age of amplification, or years of amplification in the children with hearing loss. Results also revealed a small increase in performance for both groups in the extended bandwidth condition, but the difference was not significant at the traditional p=0.05 level.

Conclusion

The ability to learn words rapidly appears to be poorer in children with hearing loss over a wide range of ages. These results coincide with the consistently poorer receptive vocabularies of these children. Neither the word-learning nor the receptive-vocabulary measure was related to the amplification histories of these children. Finally, providing an extended high-frequency bandwidth did not significantly improve rapid word-learning for either group with these stimuli.

Of the many developmental processes that take place during childhood, the ability to learn new words is particularly robust and is an essential aspect of speech and language development. Young children (2-3 years old) are believed to learn approximately 2 new words per day, whereas older children (8-12 years old) can learn as many as 12 words per day. By the time a child graduates from high school, it is estimated that he or she will have a vocabulary of approximately 60,000 words [see Bloom (2000) for a review].

In recent years, the focus of research on vocabulary development has shifted away from gross measures of vocabulary size toward an examination of the underlying word-learning process. In rapid word-learning paradigms, children are introduced to unfamiliar words presented via live voice or through videotaped stories. The stimuli are typically nonsense words composed of English phonemes or real English words that are substantially beyond the vocabulary levels of the children. After the new words have been paired with appropriate objects (e.g., novel words with nonsense objects) and presented to the child a fixed number of times, the child is asked to identify the object associated with each word or to produce the word upon presentation of the object. The child’s performance reflects his or her ability to learn new words under the conditions imposed in the experiment. Lederberg et al. (2000) distinguish two types of word learning: rapid word-learning (i.e., fast mapping) and novel mapping (i.e., quick incidental learning). In rapid word-learning, the child is given an explicit reference, whereas in novel mapping the child is expected to make the connection between the new word and the unfamiliar object. A rapid word-learning paradigm was chosen for the present study.

A number of factors associated with rapid word-learning have been identified. These factors may be categorized as internal or external to the child. For example, internal factors include the size of a child’s current receptive vocabulary (Gilbertson & Kamhi, 1995; Mervis & Bertrand, 1994; Mervis & Bertrand, 1995), the child’s age (Bloom, 2000; Oetting, Rice, & Swank, 1995), awareness of phonemic representations (Briscoe, Bishop, & Norbury, 2001), and working memory capacity (Gathercole, Hitch, Service, & Martin, 1997). Variables external to a child include, but are not limited to, the number of exposures to a new word (Dollaghan, 1987; Rice, Oetting, Marquis, Bode, & Pae, 1994), the number of new words presented within a fixed period of time (Childers & Tomasello, 2002), the type of word to be learned (e.g., noun, verb, adjective; Oetting et al., 1995; Stelmachowicz, Pittman, Hoover, & Lewis, 2004), and the phonotactic probability of the new word (Storkel, 2001; Storkel, 2003; Vitevitch, Luce, Charles-Luce, & Kemmerer, 1997; Vitevitch, Luce, Pisoni, & Auer, 1999). Phonotactic probability is the likelihood of certain phoneme sequences occurring in a particular order within a given language. Words having high phonotactic probability contain sequences of phonemes that occur in the same order and position in many other words. Words having low phonotactic probability contain phoneme sequences that occur rarely across words. More rapid word-learning has been reported for words containing common sequences than for words containing uncommon sequences (Storkel, 2001; Storkel & Rogers, 2000).
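Phonotactic probability can be made concrete with a small calculation. The sketch below computes two type-based measures, positional segment probability and biphone probability, over a hypothetical five-word toy lexicon. It is an illustration under simplifying assumptions: published estimates are usually weighted by word frequency and derived from a large dictionary, and the names `TOY_LEXICON`, `positional_segment_prob`, `biphone_prob`, and `mean_phonotactic_prob` are introduced here for illustration only.

```python
from collections import Counter

# Hypothetical toy lexicon: each word is a tuple of phoneme symbols.
TOY_LEXICON = [
    ("k", "æ", "t"),
    ("k", "æ", "p"),
    ("b", "æ", "t"),
    ("s", "ɪ", "t"),
    ("k", "ɪ", "d"),
]


def positional_segment_prob(lexicon):
    """Share of words (by type) having each phoneme in each position."""
    counts, totals = Counter(), Counter()
    for word in lexicon:
        for pos, ph in enumerate(word):
            counts[(pos, ph)] += 1
            totals[pos] += 1
    return {key: n / totals[key[0]] for key, n in counts.items()}


def biphone_prob(lexicon):
    """Share of words (by type) having each adjacent phoneme pair at each position."""
    counts, totals = Counter(), Counter()
    for word in lexicon:
        for pos in range(len(word) - 1):
            counts[(pos, word[pos], word[pos + 1])] += 1
            totals[pos] += 1
    return {key: n / totals[key[0]] for key, n in counts.items()}


def mean_phonotactic_prob(word, seg_probs, bi_probs):
    """Mean segment and biphone probability of a word (at least two phonemes).

    Segments or pairs never seen in the lexicon contribute 0.
    """
    segs = [seg_probs.get((i, ph), 0.0) for i, ph in enumerate(word)]
    pairs = zip(word, word[1:])
    bis = [bi_probs.get((i, a, b), 0.0) for i, (a, b) in enumerate(pairs)]
    return sum(segs) / len(segs), sum(bis) / len(bis)


seg = positional_segment_prob(TOY_LEXICON)
bi = biphone_prob(TOY_LEXICON)
print(mean_phonotactic_prob(("k", "æ", "t"), seg, bi))  # common sequences
print(mean_phonotactic_prob(("z", "ʊ", "g"), seg, bi))  # unattested sequences
```

With this toy lexicon, /kæt/ averages about 0.6 (segments) and 0.4 (biphones), whereas a form built from unattested sequences such as /zʊg/ scores 0 on both, i.e., it has low phonotactic probability relative to the lexicon.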

For children with hearing loss, additional factors may affect word learning. Word learning may be reduced by the degree and configuration of hearing loss, non-optimal hearing aid characteristics, environmental noise, reverberation, and distance from the speaker. Several studies have been conducted to determine the effect of hearing loss on word learning in children (Gilbertson & Kamhi, 1995; Lederberg, Prezbindowski, & Spencer, 2000; Stelmachowicz et al., 2004). Gilbertson and Kamhi (1995), for example, investigated the production and perception of nonsense words in 5- to 9-year-old children with normal hearing and in 7- to 10-year-old children with hearing loss. Their results revealed significantly poorer performance by the children with hearing loss for at least 3 of the 4 nonsense words presented. Additional analyses revealed a significant correlation with receptive vocabulary for the hearing-impaired children. Lederberg et al. (2000) studied both rapid word-learning and novel mapping in 3- to 6-year-old children with moderate to profound hearing losses. Although they hypothesized that rapid word-learning would precede novel mapping in these children, their results suggested that for some children the reverse was true. They also reported a significant relation between performance and receptive vocabulary.

Stelmachowicz et al. (2004) examined rapid word-learning in 6- to 10-year-old children with moderate hearing losses and children with normal hearing. In addition to group differences, significant effects of presentation level and number of exposures were reported. That is, the children with hearing loss performed more poorly overall even though both groups benefited from higher stimulus levels and more exposures. Regression analyses indicated that current vocabulary size was a significant predictor of word learning for both groups. Specifically, the higher the score on the Peabody Picture Vocabulary Test (Dunn & Dunn, 1997), the more words a child was able to learn. These investigators also concluded that a word-learning paradigm may be useful for comparing different types of hearing-aid signal processing.

One type of hearing-aid signal processing that may affect word learning is extended high-frequency amplification. The results of several studies suggest that extended high-frequency amplification may be important to the process of speech and language development. Specifically, the perception of the phoneme /s/¹ was found to improve with bandwidths greater than those typically provided by current amplification devices (Kortekaas & Stelmachowicz, 2000; Stelmachowicz, Pittman, Hoover, & Lewis, 2001; Stelmachowicz, Pittman, Hoover, & Lewis, 2002). The results of other studies with hearing-impaired adults, however, have shown that extending the bandwidth of current amplification devices may have equivocal or detrimental effects on speech perception (Ching, Dillon, & Byrne, 1998; Hogan & Turner, 1998; Turner, 1999; Turner & Cummings, 1999). For example, Hogan and Turner (1998) reported no significant increase in the recognition of nonsense syllables presented at bandwidths ≥ 4 kHz in hearing-impaired adults, and in some cases, recognition decreased. Others, however, have shown some improvements in performance under specific conditions or for individual adults (Hornsby & Ricketts, 2003; Skinner, 1980; Sullivan, Allsman, Nielsen, & Mobley, 1992; Turner & Cummings, 1999).

The purpose of the present study was to compare rapid word-learning in normal-hearing and hearing-impaired children representing a broad range of ages and receptive vocabularies. In addition, the effects of stimulus bandwidth were evaluated. It was hypothesized that: 1) the children with normal hearing would learn more words than the children with hearing loss, 2) word learning would increase with age and receptive vocabulary for both groups, and 3) both groups would benefit from a broader frequency bandwidth.

Methods

Subjects

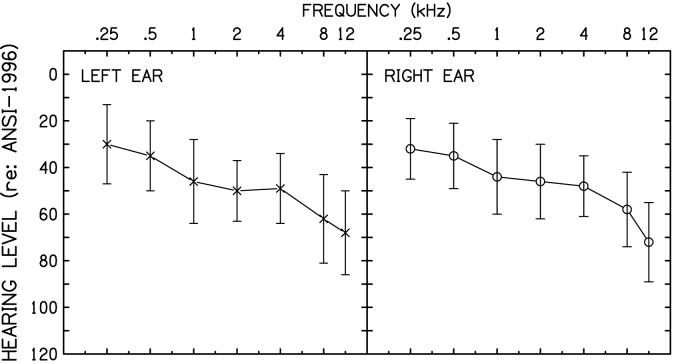

Sixty children with normal hearing and 37 children with hearing loss participated in this study. The children with normal hearing were between the ages of 5 and 13 years (mean: 9 years, 3 months; SD: 2 years, 3 months) and had hearing thresholds less than 20 dB HL at octave frequencies from 0.25 to 12 kHz. The children with hearing loss were between the ages of 5 and 14 years (mean: 9 years, 4 months; SD: 2 years, 4 months). These children had mild to moderately severe sensorineural hearing losses that were sloping in configuration. Figure 1 shows the average audiometric thresholds (±1 SD) for the right and left ears. Prior to testing, normal middle-ear function was confirmed in both groups using acoustic immittance measures.

Figure 1.

Average hearing threshold levels (± 1 SD) for the right and left ears of the children with hearing loss.

Vocabulary

To estimate the receptive vocabulary of each child, raw scores from the Peabody Picture Vocabulary Test (PPVT-III, Form B; Dunn & Dunn, 1997) were obtained. The children with hearing loss wore their personal hearing aids during administration of the test. Although measures of language age and standard scores were calculated from the PPVT-III results, it was decided that the raw scores would provide an independent variable that would better reflect the relation between age and vocabulary for both groups. It is important to note, however, that raw scores indicate the number of words within the test that were familiar to the child; this is not the typical application of the PPVT-III.

Word-Learning Paradigm

Although previous perceptual bandwidth studies with children focused on the phoneme /s/ because of its relative importance in English (e.g., it denotes plurality, possession, and tense), it is only one of many English phonemes. In the current study, the novel words included a wide variety of speech sounds. Eight CVCVC nonsense words were created using the 24 English consonant phonemes (3 per word). Table 1 shows the orthographic representation of each word in the first column and the phonetic transcription in the second column. None of the phonemes occurred in positions contrary to the English language (e.g., /ŋ/ in the initial position, /η/ in the final position). Using each consonant phoneme only once ensured that the acoustic characteristics of each were represented across the stimuli. However, because the acoustic characteristics of a phoneme can change substantially with its position in a word, not all acoustic characteristics could be incorporated. Also, these words did not reflect the frequency with which each phoneme occurs in American English [e.g., five times as many /s/ phonemes as /g/ phonemes (Denes, 1963)]. To achieve such a goal, far too many words would have been required (approximately 387).
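The constraint that each of the 24 consonant phonemes appears exactly once, three per word, can be checked mechanically. The sketch below assumes the consonant segmentation shown in its comments (transcribed by hand from Table 1, with the affricates /tʃ/ and /dʒ/ treated as single phonemes and the final nasal of Vojing read as /ŋ/); the dictionary and function names are illustrative only.

```python
from collections import Counter

# Consonants of each novel word, segmented by hand from Table 1 (assumed
# segmentation: affricates count as one phoneme; Vojing ends in /ŋ/).
WORD_CONSONANTS = {
    "Deewim": ["d", "w", "m"],
    "Vojing": ["v", "dʒ", "ŋ"],
    "Bayrill": ["b", "r", "l"],
    "Hoochiff": ["h", "tʃ", "f"],
    "Zeekin": ["z", "k", "n"],
    "Youzzap": ["j", "ʒ", "p"],
    "Thogish": ["θ", "g", "ʃ"],
    "Tathus": ["t", "ð", "s"],
}

# The 24 consonant phonemes of English.
ENGLISH_CONSONANTS = {
    "p", "b", "t", "d", "k", "g", "f", "v", "θ", "ð", "s", "z",
    "ʃ", "ʒ", "h", "tʃ", "dʒ", "m", "n", "ŋ", "l", "r", "w", "j",
}


def check_inventory(words, inventory):
    """Return (every word has 3 consonants, every phoneme is used exactly once)."""
    counts = Counter(c for cons in words.values() for c in cons)
    three_each = all(len(cons) == 3 for cons in words.values())
    once_each = set(counts) == inventory and all(n == 1 for n in counts.values())
    return three_each, once_each


print(check_inventory(WORD_CONSONANTS, ENGLISH_CONSONANTS))  # (True, True)
```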

Table 1.

Presentation characteristics of the novel words

| Word | Phonetic Transcription | Picture | Order in Both Stories | Story 1: Bandwidth (kHz), Level (dB SPL) | Story 2: Bandwidth (kHz), Level (dB SPL) |
|---|---|---|---|---|---|
| Deewim | diwɪm | | 1 | 4, 67 | 9, 67 |
| Vojing | vodʒɪŋ | | 2 | 4, 66 | 9, 65 |
| Bayrill | beɪrɪl | | 3 | 9, 64 | 4, 64 |
| Hoochiff | hutʃɪf | | 4 | 4, 61 | 9, 62 |
| Zeekin | zikɪn | | 5 | 9, 59 | 4, 58 |
| Youzzap | juʒæp | | 6 | 9, 69 | 4, 68 |
| Thogish | θogɪʃ | | 7 | 9, 68 | 4, 67 |
| Tathus | teðəs | | 8 | 4, 58 | 9, 60 |
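Because the two story versions differ only in which words were filtered, the counterbalancing can be verified directly from Table 1. In this illustrative sketch the values are copied from the table; `is_counterbalanced` checks that each story presents four words per bandwidth and that every word switches bandwidth between stories, and `mean_level_difference` gives a simple descriptive summary of the per-word level change between conditions. Both function names are hypothetical.

```python
# Table 1: word -> ((story 1 kHz, dB SPL), (story 2 kHz, dB SPL))
TABLE_1 = {
    "Deewim":   ((4, 67), (9, 67)),
    "Vojing":   ((4, 66), (9, 65)),
    "Bayrill":  ((9, 64), (4, 64)),
    "Hoochiff": ((4, 61), (9, 62)),
    "Zeekin":   ((9, 59), (4, 58)),
    "Youzzap":  ((9, 69), (4, 68)),
    "Thogish":  ((9, 68), (4, 67)),
    "Tathus":   ((4, 58), (9, 60)),
}


def is_counterbalanced(table):
    """True if each story has half its words at 4 kHz and every word swaps bandwidth."""
    for story in (0, 1):
        n_narrow = sum(1 for conds in table.values() if conds[story][0] == 4)
        if n_narrow != len(table) // 2:
            return False
    return all(conds[0][0] != conds[1][0] for conds in table.values())


def mean_level_difference(table):
    """Mean per-word level (dB) in the 9-kHz version minus the 4-kHz version."""
    diffs = []
    for conds in table.values():
        level = {bandwidth: lvl for bandwidth, lvl in conds}
        diffs.append(level[9] - level[4])
    return sum(diffs) / len(diffs)


print(is_counterbalanced(TABLE_1))     # True
print(mean_level_difference(TABLE_1))  # 0.625
```

For these values, the 9-kHz version of a word averages about 0.6 dB higher than its 4-kHz version (per-word differences range from −1 to +2 dB).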

The 8 novel words were embedded in a 4-minute, animated story (Microsoft, PowerPoint). The story was read by a female talker and recorded at a sampling rate of 22.05 kHz through a microphone with a flat frequency response to 10 kHz (AKG, C535 EB). Stress was placed on the initial syllable of each word during recording. In the story, the words were paired with pictures of uncommon toys (column 3, Table 1) and repeated on three different occasions within the story in the order noted in column 4 of Table 1.

Because new factors affecting word learning continue to be identified, it is safe to assume that not all of them are yet known. If the stimuli presented in each condition of interest are not equivalent, significant effects may be due to factors other than those being investigated. For example, because Stelmachowicz et al. (2004) presented half of their eight novel words at one level and half at a different level, it is not clear whether the effect of level was truly significant or whether the words presented at one level were easier to learn because of a variable not accounted for in the stimuli or paradigm (e.g., phonotactic probability, picture preference).

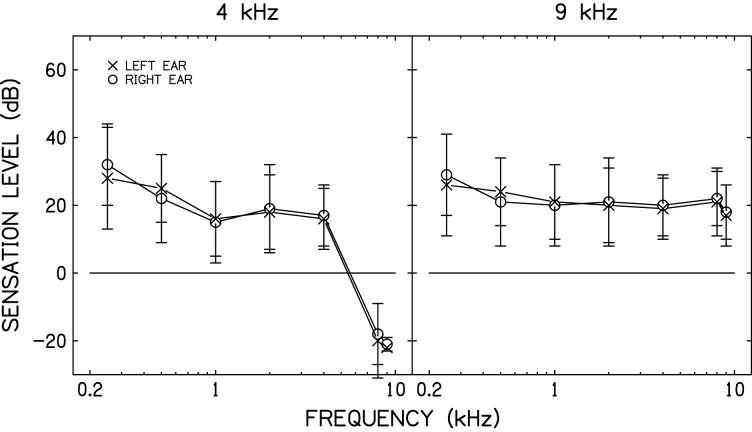

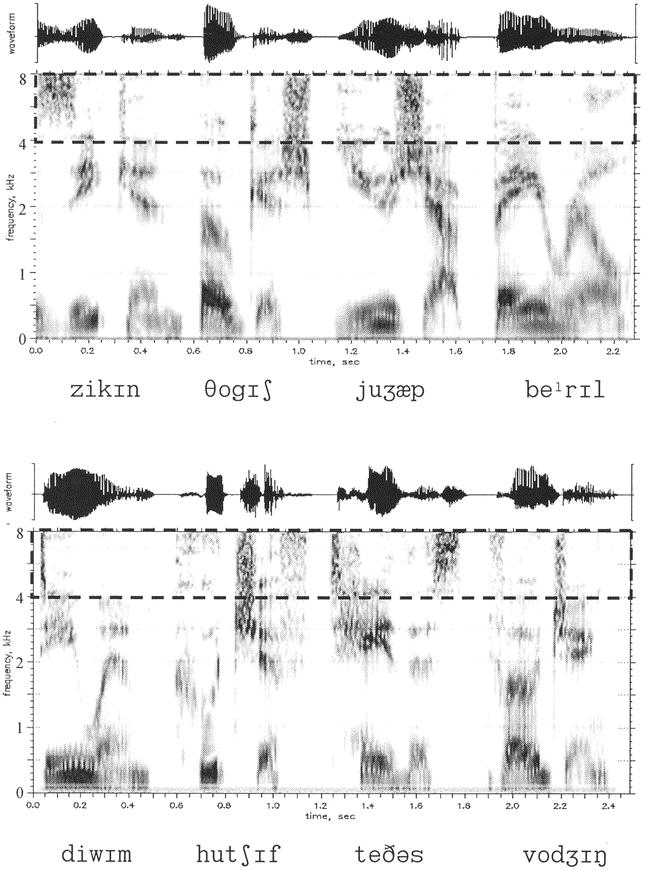

To reduce the effects of unknown variables on word learning in the present study, the stimuli in each condition were counterbalanced and presented to separate groups of children. Specifically, one group of children learned half the words in the 4-kHz condition and half in the 9-kHz condition. Another group of children learned the same words, but with the bandwidth conditions reversed. This was achieved by creating two versions of the same story that were identical except for which words were low-pass filtered. Columns 5 and 6 of Table 1 list the filter conditions and presentation levels (see Audibility section, below) of each word in both stories. The advantage of this approach is that variables that might affect word learning (known and unknown) are distributed across the two groups of children. The disadvantage is that twice as many children are needed to maintain adequate statistical power, and only one or two variables may be manipulated within an experiment. Because hearing loss in children is rather heterogeneous (Pittman & Stelmachowicz, 2003), it can be difficult to recruit large numbers of subjects with similar degrees and configurations of hearing loss. However, the question of interest in this study was considered compelling enough to justify the effort. Therefore, 4 of the 8 words were low-pass filtered at 4 kHz with a rejection rate of approximately 60 dB/octave. The remaining 4 words were low-pass filtered at 10 kHz along with the rest of the story. Because a 10-kHz low-pass filter sharply attenuates frequencies above approximately 9.5 kHz, the effective bandwidth of this condition was estimated to be 9 kHz. Spectrograms of the eight words are provided in Figure 2. The dashed lines in each panel indicate the portion of the stimuli that was removed in the 4-kHz low-pass filter condition. Note that the frequency axis in this figure is not the typical linear scale; it was rescaled to approximate a log scale to better represent the relatively small portion of the signal that was removed in the narrow-bandwidth condition.
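The stated rejection rate of approximately 60 dB/octave can be related to filter order. The filter type is not specified here, so the sketch below assumes an ideal analog Butterworth low-pass response, whose asymptotic roll-off is about 6 dB/octave per order; under that assumption a 10th-order filter at 4 kHz approximates the stated slope. A digital implementation at the 22.05-kHz sampling rate would deviate somewhat from this analog prototype, and the function and constant names are hypothetical.

```python
import math


def butterworth_attenuation_db(f_hz, fc_hz, order):
    """Attenuation (dB) of an ideal analog Butterworth low-pass filter at f_hz."""
    # |H(f)|^2 = 1 / (1 + (f / fc)^(2 * order)); attenuation = -10*log10(|H(f)|^2)
    return 10 * math.log10(1 + (f_hz / fc_hz) ** (2 * order))


ORDER = 10     # assumed: ~6 dB/octave per order gives ~60 dB/octave
CUTOFF = 4000  # Hz, the narrow-bandwidth condition
for f in (2000, 4000, 8000, 9000):
    print(f, round(butterworth_attenuation_db(f, CUTOFF, ORDER), 1))
```

Under these assumptions the loop prints attenuations of about 0.0, 3.0, 60.2, and 70.4 dB at 2, 4, 8, and 9 kHz, respectively, i.e., roughly 60 dB one octave above the cutoff.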

Figure 2.

Spectrograms of the eight novel words. The dashed lines indicate the portion of the stimuli removed in the 4-kHz bandwidth condition.

To reduce the perceptual discontinuities associated with filtering at 4 kHz, the story segments immediately preceding and following the 4-kHz words were also filtered. Although this did not eliminate the discontinuities entirely, those that remained were subtle and were described to the children as a less-than-perfect recording of the story. The instructions given to the children were, “You will hear a story that has words you’ve never heard before that are names of toys you’ve probably never seen before. We want to find out how many of those toy names you can remember after only hearing the story twice.” The children were also told that they did not have to learn to produce the words. Although previous studies have demonstrated children’s ability to learn novel words through incidental exposure, a more direct approach was implemented in this study for two reasons. First, it seemed unlikely that a truly incidental word-learning paradigm could be achieved in a laboratory setting with children old enough to discern that they were participating in a learning study. Second, it was thought that without instruction the children might try to determine the purpose of the study on their own and incorrectly focus their attention on some other aspect of the story.

All testing was completed under earphones (Sennheiser, 25D) via binaural presentation. The story was presented to the children with normal hearing at 60 dB sound pressure level (SPL), which is consistent with average conversational speech. To accommodate the individual hearing levels of the children with hearing loss, frequency shaping was applied to the stimuli using target gain values for average conversational speech provided by the Desired Sensation Level (DSL) fitting algorithm (Seewald et al., 1997). Because DSL provides targets only for frequencies between 0.25 and 6 kHz, the targets for 8 and 12 kHz were estimated by comparing the level of the long-term average spectrum of the stimuli with each listener’s hearing levels. Sufficient frequency shaping was applied to provide at least a 10 dB sensation level at 9 kHz.
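The extended-frequency gain step can be sketched as a level comparison. The procedure is described here only as comparing the long-term average speech spectrum with each listener’s hearing levels, so the function below is a hypothetical simplification: it assumes speech and thresholds are expressed in the same units (dB SPL, same band and coupler) and returns the smallest gain that places the band at a target sensation level (10 dB by default, matching the minimum stated for 9 kHz).

```python
def gain_for_target_sl(speech_level_db_spl, threshold_db_spl, target_sl_db=10.0):
    """Smallest gain (dB) placing a speech band target_sl_db above threshold.

    Hypothetical simplification: both levels are assumed to be in dB SPL for the
    same band and coupler; no gain is added if the band is already loud enough.
    """
    return max(0.0, threshold_db_spl + target_sl_db - speech_level_db_spl)


# Hypothetical 9-kHz band: speech at 40 dB SPL, threshold at 65 dB SPL
print(gain_for_target_sl(40.0, 65.0))  # 35.0
```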

Audibility

The presentation levels of each word in each bandwidth condition (Table 1) reflect the long-term average of the three utterances in each story as presented to the normal-hearing children. Note that the overall levels of each word varied from 59 to 69 dB SPL (typical for conversational speech) and that for some words these levels were slightly higher in the 9-kHz bandwidth due to the added spectral energy. The audibility of each word was then calculated relative to the hearing thresholds of the children with hearing loss. This ensured that the words were presented at levels well above threshold so that the 10 dB variation across words would be unlikely to affect performance significantly.

Estimates of audibility typically are calculated by comparing hearing levels to the long-term average of the stimuli recorded at the tympanic membrane using a probe microphone. Because small variations in probe-tube placement can result in large variations in SPL in the high frequencies (Gilman & Dirks, 1986), audibility of the 4- and 9-kHz stimuli was confirmed using measures of sensation level. Hearing threshold levels at octave frequencies (0.25 to 12 kHz) were obtained through an audiometer (GSI 61) with the same earphones used during the word-learning paradigm (Sennheiser, 25D). The hearing levels at each frequency were then converted to SPL by measuring the levels developed in a 6-cm³ coupler through these earphones. Thresholds at 9 kHz were interpolated from the thresholds at 8 and 12 kHz using the following formula:

where fU and fL are the upper and lower frequencies adjacent to the threshold being interpolated (in Hz) and θ is the hearing threshold in dB SPL at those frequencies. Separate concatenated files of the 4- and 9-kHz stimuli were then recorded in the same 6-cm³ coupler, and the long-term average spectra were measured in third-octave bands.² Sensation level was calculated as the difference between the amplified speech spectrum and the listener’s hearing thresholds.
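Because the interpolation formula itself is not reproduced above, the sketch below assumes one common choice, linear interpolation on a logarithmic frequency axis, using the same quantities (fL, fU, and the thresholds θ at those frequencies); it then computes sensation level as the difference between the amplified speech spectrum and the threshold in each band. The function names and example thresholds are hypothetical.

```python
import math


def interpolate_threshold(f_hz, f_lower_hz, theta_lower, f_upper_hz, theta_upper):
    """Threshold at f_hz (dB SPL), interpolated linearly on a log-frequency axis.

    Assumed form; not necessarily the formula used in the study.
    """
    w = math.log(f_hz / f_lower_hz) / math.log(f_upper_hz / f_lower_hz)
    return theta_lower + w * (theta_upper - theta_lower)


def sensation_levels(speech_db_spl, thresholds_db_spl):
    """Per-band sensation level (dB): amplified speech level minus threshold."""
    return {f: speech_db_spl[f] - thresholds_db_spl[f] for f in thresholds_db_spl}


# Hypothetical thresholds: 60 dB SPL at 8 kHz and 80 dB SPL at 12 kHz
theta_9k = interpolate_threshold(9000, 8000, 60.0, 12000, 80.0)
print(round(theta_9k, 1))  # 65.8
print(sensation_levels({9000: 78.0}, {9000: theta_9k}))
```

With these hypothetical thresholds, the log-frequency estimate at 9 kHz is about 65.8 dB SPL, whereas linear interpolation in Hz would give 65.0 dB SPL, so the choice of frequency axis changes the estimate by roughly 1 dB here.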

Figure 3 shows the average stimulus sensation levels (±1 SD) for the children with hearing loss as a function of frequency. The left and right panels show the 4- and 9-kHz low-pass filter conditions, respectively. The parameter in each panel is ear. These functions show that: 1) on average, the individual frequency shaping characteristics resulted in similar sensation levels for both ears for all children, 2) adequate audibility was provided at frequencies greater than 9 kHz, and 3) sufficient filtering was applied to the narrower bandwidth condition (4 kHz).

Figure 3.

Average stimulus sensation levels (± 1 SD) for the 4- and 9-kHz bandwidth conditions. The parameter in each panel is ear.

Word Identification Task

Immediately following the second presentation of the story, word learning was evaluated using an identification task in which pictures of all eight words were displayed on a touch-screen monitor. Each child was asked to touch the picture that corresponded to the word presented. Each word was presented 10 times, for a total of 80 trials. The total test time for this task was between 8 and 10 minutes. The bandwidth conditions of the story version presented to each child were maintained during testing. Feedback for correct responses was given in an interactive video-game format to help maintain interest in the task. To reduce the possibility of further word learning during the identification task, reinforcement for the 7- to 14-year-olds was delayed until after every fifth response, at which point the game advanced by the number of correct responses. Because many of the youngest children (5- and 6-year-olds) had difficulty understanding the concept of delayed reinforcement, they received trial-by-trial feedback (n=16).
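The trial structure of the identification task (8 words × 10 presentations = 80 trials, with delayed feedback after every fifth response for the older children) can be sketched as follows. The presentation order is not specified here, so random shuffling is an assumption, and the helper names `make_trials` and `delayed_feedback` are hypothetical.

```python
import random

WORDS = ["Deewim", "Vojing", "Bayrill", "Hoochiff",
         "Zeekin", "Youzzap", "Thogish", "Tathus"]


def make_trials(words, reps=10, seed=None):
    """Return each word `reps` times in shuffled order (shuffling is an assumption)."""
    trials = [w for w in words for _ in range(reps)]
    random.Random(seed).shuffle(trials)
    return trials


def delayed_feedback(correct, every=5):
    """Points awarded after each run of `every` trials: the number correct in that run."""
    return [sum(correct[i:i + every]) for i in range(0, len(correct), every)]


trials = make_trials(WORDS, seed=1)
print(len(trials))  # 80
```

For example, `delayed_feedback([True, False, True, True, False])` returns `[3]`: the game would advance three steps after the fifth response, and the child would receive no feedback on the individual trials in between.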

Results

Group Effects

Overall, the children with normal hearing outperformed the children with hearing loss. The average performance on the word-identification task was 56.7% (SD=25.6%) for the children with normal hearing and 41.5% (SD=26.7%) for the children with hearing loss. A one-way ANOVA revealed a significant difference between the performance of the two groups (F(1,95)=7.810, p=0.006). To determine the effects of immediate and delayed feedback on word learning, performance was calculated for each block of 10 trials across the 80-trial series. The average performance for each block of trials is given in Table 2. An increase in performance as the test progressed was not observed for any group. Interestingly, the performance of the 4 youngest hearing-impaired children appeared to decrease throughout the test despite feedback on every trial. A repeated-measures analysis of variance again revealed a significant difference in overall performance between the normal-hearing and hearing-impaired groups (F(1,92) = 4.936, p=0.029). However, there was no significant linear increase or decrease in performance from the first to the last block of trials for either type of reinforcement (F(1,92)=1.491, p=0.225).
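As a check on the reported group comparison, the one-way ANOVA F statistic can be recomputed from the summary statistics alone (group sizes, means, and SDs). This is a sketch, not the analysis software actually used; small discrepancies are expected because the reported means and SDs are rounded. The function name is hypothetical.

```python
def one_way_anova_f(groups):
    """One-way ANOVA F statistic from (n, mean, sd) summaries of each group."""
    n_total = sum(n for n, _, _ in groups)
    grand_mean = sum(n * m for n, m, _ in groups) / n_total
    ss_between = sum(n * (m - grand_mean) ** 2 for n, m, _ in groups)
    ss_within = sum((n - 1) * sd ** 2 for n, _, sd in groups)
    df_between = len(groups) - 1
    df_within = n_total - len(groups)
    f = (ss_between / df_between) / (ss_within / df_within)
    return f, df_between, df_within


# Normal hearing (n=60) vs. hearing loss (n=37), % correct
f, df1, df2 = one_way_anova_f([(60, 56.7, 25.6), (37, 41.5, 26.7)])
print(f"F({df1},{df2}) = {f:.2f}")  # F(1,95) = 7.81
```

With the values reported above, this gives F(1,95) ≈ 7.81, in line with the reported 7.810.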

Table 2.

Average performance (% correct) on the word-learning task for each block of ten trials (total of 80 trials). Data are shown for the 5- to 6-year-olds receiving immediate reinforcement and for the 7- to 14-year-olds receiving delayed reinforcement. NHC = children with normal hearing; HIC = children with hearing loss.

| Group | | Block 1 | Block 2 | Block 3 | Block 4 | Block 5 | Block 6 | Block 7 | Block 8 |
|---|---|---|---|---|---|---|---|---|---|
| 5-6 year olds (immediate reinforcement) | | | | | | | | | |
| NHC (n=12) | Mean | 39 | 26 | 30 | 29 | 25 | 40 | 39 | 35 |
| | (SD) | (16) | (15) | (22) | (16) | (23) | (18) | (24) | (27) |
| HIC (n=4) | Mean | 47 | 37 | 22 | 20 | 15 | 17 | 22 | 15 |
| | (SD) | (17) | (15) | (18) | (14) | (19) | (12) | (12) | (12) |
| 7-14 year olds (delayed reinforcement) | | | | | | | | | |
| NHC (n=48) | Mean | 65 | 62 | 58 | 56 | 57 | 65 | 70 | 60 |
| | (SD) | (27) | (24) | (29) | (27) | (29) | (30) | (25) | (28) |
| HIC (n=33) | Mean | 40 | 44 | 41 | 40 | 37 | 42 | 49 | 45 |
| | (SD) | (30) | (29) | (28) | (31) | (31) | (31) | (30) | (29) |
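A simple descriptive complement to the repeated-measures analysis is the least-squares slope of each group’s block means against block number. The sketch below uses the means from Table 2; it is not a substitute for the repeated-measures test reported above, which is based on individual data, and the dictionary keys and function name are illustrative.

```python
# Block means (% correct) from Table 2
BLOCK_MEANS = {
    "NHC 5-6": [39, 26, 30, 29, 25, 40, 39, 35],
    "HIC 5-6": [47, 37, 22, 20, 15, 17, 22, 15],
    "NHC 7-14": [65, 62, 58, 56, 57, 65, 70, 60],
    "HIC 7-14": [40, 44, 41, 40, 37, 42, 49, 45],
}


def ols_slope(y):
    """Least-squares slope of y against block number 1..len(y) (points per block)."""
    x = range(1, len(y) + 1)
    x_mean = sum(x) / len(y)
    y_mean = sum(y) / len(y)
    sxy = sum((xi - x_mean) * (yi - y_mean) for xi, yi in zip(x, y))
    sxx = sum((xi - x_mean) ** 2 for xi in x)
    return sxy / sxx


for group, means in BLOCK_MEANS.items():
    print(group, round(ols_slope(means), 2))
```

The slopes come out to roughly 0.75, −3.80, 0.32, and 0.71 percentage points per block for the four rows, so only the 4 youngest hearing-impaired children show a clear downward trend in the block means, consistent with the observation above.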

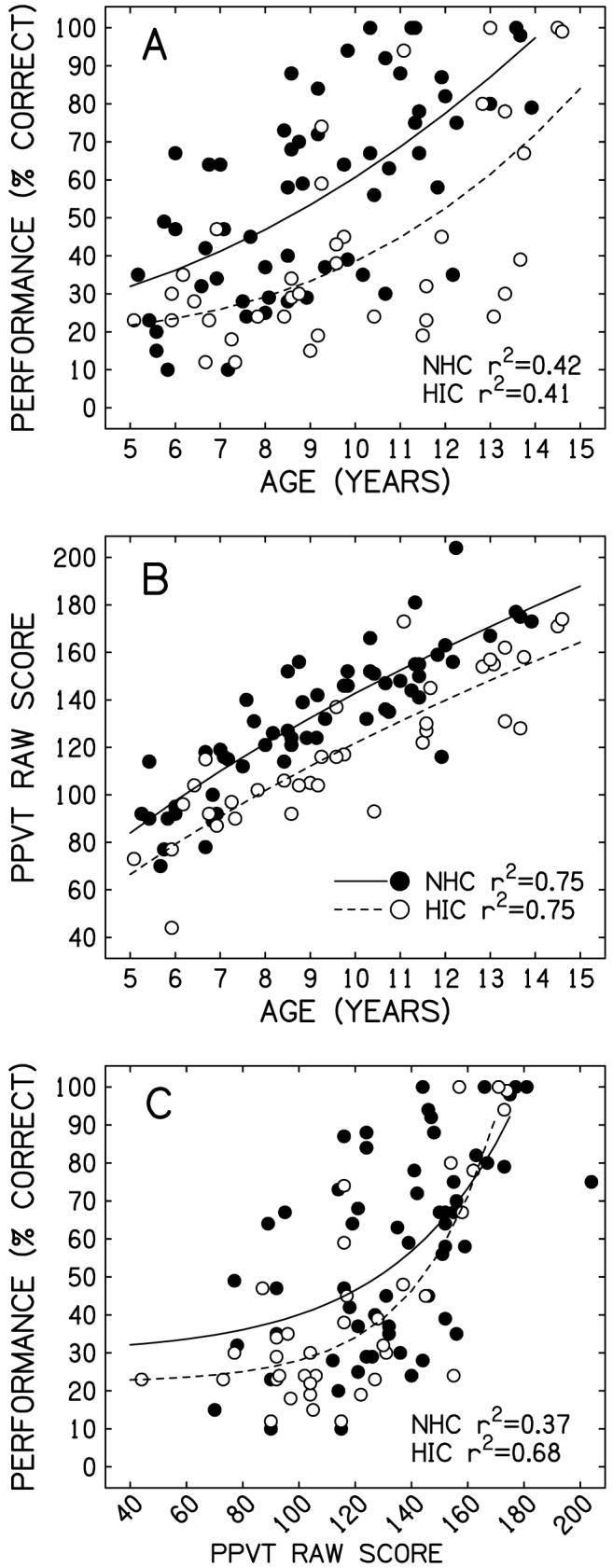

The three panels in Figure 4 show the relation between word learning, PPVT-III, and age for the children with normal hearing (filled circles) and the children with hearing loss (open circles). Nonlinear functions, chosen to maximize goodness of fit, are displayed for each group in each panel. In Panel A, performance on the word-identification task (percent correct for the 80-trial word-learning task) is plotted as a function of age. Although considerable variability in rapid word-learning was apparent for both groups, the performance of the children with hearing loss was consistently poorer than that of the normal-hearing children across the age range tested. In Panel B, the PPVT-III raw scores are plotted as a function of age. Again, the scores of the children with hearing loss were consistently poorer than those of the children with normal hearing. A multivariate ANOVA revealed significant differences between groups for both word learning (F(1,94)=20.046, p<0.0001) and receptive vocabulary (F(1,94)=44.808, p<0.0001) after the effects of age were controlled.

Figure 4.

Panel A: performance on the word-learning task as a function of age. Panel B: PPVT-III raw score as a function of age. Panel C: performance on the word-learning task as a function of PPVT-III raw score. The parameter in each panel is group. R² values indicate the goodness of fit for the nonlinear functions fit to each data set.

Because the hearing-impaired children performed more poorly on both the word learning and PPVT-III tasks, the relation between word learning and receptive vocabulary was examined. Panel C shows the relation between performance on the word identification task and the PPVT-III raw score. Unlike the functions fit to the data in Panels A and B, the functions fit to the data in Panel C are less parallel. These results suggest that word learning in the children with hearing loss was consistent with that of the normal-hearing children having similar PPVT scores. In fact, only two children performed in a manner inconsistent with their PPVT-III scores. One child scored well on the PPVT-III but poorer than expected on the word-learning task (far right data point). The other child performed better on the word-learning task than would be predicted from the PPVT-III score (far left data point). These two children either failed to attend to one of the two tasks or they used strategies to learn new words that could not be predicted by the PPVT-III. Overall, these data suggest a predictable relation between word-learning and receptive vocabulary.

Finally, it was of interest to know if each child’s amplification history was related to his or her ability to learn new words. Table 3 shows the group assignment, current age, age of identification of the hearing loss, age of amplification, years of amplification, and overall performance on the word-identification task for each child with hearing loss at the time of testing. Separate bivariate correlations were calculated between overall performance and age at identification (r=0.20, p=0.242), age at amplification (r=0.25, p=0.167), and years of amplification (r=0.32, p=0.066). In each case the correlations were not significant. These results are consistent with studies showing that simplistic measures of hearing aid history are not always good predictors of performance because other factors such as compliance with amplification, progression of hearing loss, and parental involvement may also contribute substantially (Moeller, 2000).
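The correlations above require converting the yrs:mos entries of Table 3 to numbers and excluding children who were not amplified from the amplification measures. A minimal sketch of both steps and of Pearson’s r follows; the helper names are hypothetical, and the sketch is not claimed to reproduce the exact values reported, which depend on the full data set and the software used.

```python
import math


def yrs_mos_to_years(value):
    """Convert a Table 3 'yrs:mos' entry to decimal years; None if not applicable."""
    value = value.strip().lstrip("~∼")  # a leading tilde marks an approximate age
    if ":" not in value:
        return None  # e.g., 'Not amplified' or 'n/a'
    years, months = value.split(":")
    return int(years) + int(months) / 12


def pearson_r(pairs):
    """Pearson correlation over (x, y) pairs, skipping pairs whose x is missing."""
    xs, ys = zip(*[(x, y) for x, y in pairs if x is not None])
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)
```

For example, `yrs_mos_to_years("4:8")` gives about 4.67 years, and an entry such as "Not amplified" returns `None`, so that child is dropped from the amplification correlations rather than being treated as zero years.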

Table 3.

Test group, performance, and amplification history of each hearing-impaired child

| Subject # | Group Assignment | Age (yrs:mos) | Age of Identification of Hearing Loss (yrs:mos) | Age of Amplification (yrs:mos) | Years of Amplification at Test (yrs:mos) | Word-Learning Performance (%) |
|---|---|---|---|---|---|---|
| 1 | 2 | 5:1 | 0:0 | 0:5 | 4:8 | 23 |
| 2 | 1 | 5:11 | 0:0 | 4:5 | 1:6 | 30 |
| 3 | 2 | 5:11 | 2:0 | 2:3 | 3:8 | 23 |
| 4 | 1 | 6:3 | 1:8 | 1:9 | 5:6 | 35 |
| 5 | 2 | 6:5 | 2:11 | Not amplified | n/a | 22 |
| 6 | 2 | 6:8 | 3:2 | 3:2 | 3:6 | 12 |
| 7 | 1 | 6:9 | 0:2 | 2:6 | 4:7 | 23 |
| 8 | 2 | 6:11 | 5:0 | 5:0 | 1:11 | 47 |
| 9 | 1 | 7:3 | 5:11 | 6:0 | 1:3 | 18 |
| 10 | 1 | 7:4 | 0:0 | 0:6 | 6:10 | 12 |
| 11 | 2 | 7:10 | 1:6 | 1:6 | 6:4 | 24 |
| 12 | 1 | 8:5 | 3:9 | 3:10 | 4:7 | 24 |
| 13 | 2 | 8:6 | 5:8 | 5:10 | 2:8 | 34 |
| 14 | 2 | 8:7 | 0:0 | 2:5 | 6:2 | 29 |
| 15 | 1 | 8:7 | 7:6 | 7:6 | 1:2 | 30 |
| 16 | 2 | 8:9 | 1:0 | 1:2 | 7:7 | 15 |
| 17 | 1 | 9:1 | 3:6 | 3:7 | 5:6 | 19 |
| 18 | 1 | 9:2 | 6:5 | 6:10 | 2:4 | 59 |
| 19 | 1 | 9:4 | 5:10 | 5:11 | 3:5 | 74 |
| 20 | 2 | 9:4 | ~5:0 | 8:11 | 0:5 | 38 |
| 21 | 1 | 9:7 | 1:0 | Not amplified | n/a | 48 |
| 22 | 2 | 9:7 | 3:1 | Not amplified | n/a | 45 |
| 23 | 1 | 9:9 | 4:11 | 5:0 | 4:9 | 24 |
| 24 | 2 | 10:4 | 5:0 | Not amplified | n/a | 94 |
| 25 | 1 | 11:2 | 0:2 | 0:9 | 10:5 | 19 |
| 26 | 1 | 11:6 | 6:5 | 6:10 | 4:8 | 23 |
| 27 | 1 | 11:7 | 4:0 | 4:6 | 7:1 | 32 |
| 28 | 1 | 11:7 | 4:0 | 4:6 | 7:1 | 45 |
| 29 | 2 | 11:8 | 3:4 | 3:5 | 8:3 | 80 |
| 30 | 2 | 12:10 | 1:7 | 1:8 | 11:2 | 100 |
| 31 | 2 | 13:0 | 4:4 | 4:10 | 8:2 | 24 |
| 32 | 1 | 13:2 | 2:4 | 2:5 | 10:9 | 30 |
| 33 | 1 | 13:4 | 4:8 | 4:9 | 8:7 | 78 |
| 34 | 2 | 13:4 | 4:5 | 4:5 | 8:11 | 39 |
| 35 | 1 | 13:8 | 11:9 | 11:9 | 1:11 | 67 |
| 36 | 2 | 13:9 | 1:6 | 2:5 | 11:4 | 100 |
| 37 | 2 | 14:5 | 10:5 | 10:10 | 3:7 | 99 |

Bandwidth Effects

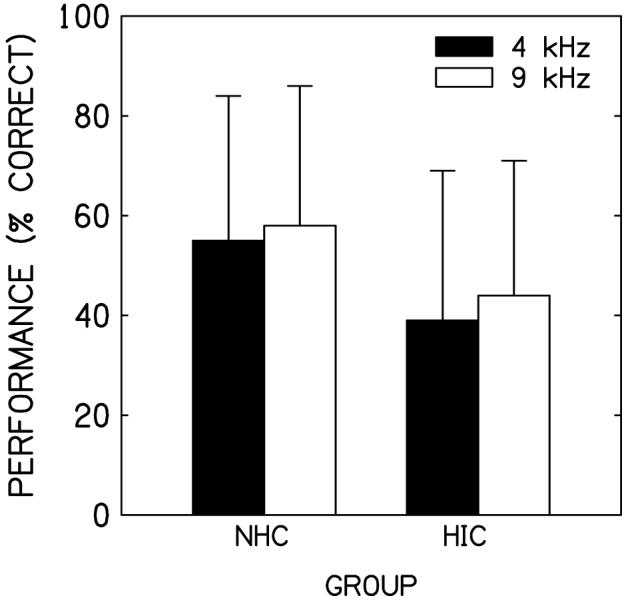

Recall that one of the purposes of this study was to determine the effect of extended high-frequency amplification on word learning. Those results are shown in Figure 5. Mean performance (+1 SD) for the word-identification task is plotted as a function of group. The filled and open bars represent performance for the 4- and 9-kHz conditions, respectively. The large standard deviations reflect the wide age range (5 to 14 years) of the children in this study and their correspondingly wide range of PPVT-III scores. On average, both groups demonstrated small increases in word learning as a function of bandwidth (3.4% for the children with normal hearing and 5.3% for the children with hearing loss). A univariate ANOVA was performed to characterize the effects of group (F(1,776)=17.549, p<0.001) and bandwidth (F(1,776) = 2.729, p=0.099) after the effects of receptive vocabulary (F(1,776) = 14.648, p<0.001), and age (F(1,776) = 15.122, p<0.001) were controlled. These results again confirm the strong relation between word learning and hearing status, receptive vocabulary, and age. The effect of bandwidth, however, was not significant at the traditional p=0.05 level.

Figure 5.

Average performance (±1 SD) on the word-learning task as a function of group for the 4-kHz bandwidth condition (solid bars) and the 9-kHz bandwidth condition (open bars).

Discussion

The purpose of the present study was to extend our knowledge of word learning in children with hearing loss compared to children with normal hearing. Of particular interest was the difference between groups across a wide range of ages and receptive vocabularies. The effect of high-frequency amplification was also examined. The rapid word-learning paradigm used in this study was chosen for several reasons. First, rapid word-learning has been used successfully and extensively with children having specific language impairment. Second, a variable of interest can be evaluated in a single test session rather than waiting several months or years for its effects to become apparent through the typical course of development. Third, factors internal and external to a child may be evaluated more systematically and in an environment free from uncontrolled variables such as compliance with hearing aid use and varying levels of parental involvement. However, it is important to note a limitation of this paradigm. Specifically, each of the 8 words was composed of 3 unique English consonant phonemes. Because of this unique phonemic composition, the children needed to learn only one phoneme per word to perform well. If the children adopted this strategy, the paradigm would be relatively insensitive to changes in bandwidth. In other words, this paradigm may have been a best-case scenario for word learning and a worst-case scenario for detecting bandwidth effects.

On average, the results of this study suggest that the receptive vocabularies of the hearing-impaired children lagged behind those of their age-matched normal-hearing peers, which is consistent with previous research (Blair, Peterson, & Viehweg, 1985; Blamey et al., 2001; Davis, Elfenbein, Schum, & Bentler, 1986; Davis, Shepard, Stelmachowicz, & Gorga, 1981; Gilbertson & Kamhi, 1995). Although clear differences in the PPVT-III raw scores were apparent, none of the children would have been identified as having delayed lexical development on the basis of their standard scores. Specifically, the scores of only 5 of the hearing-impaired children were more than 1 standard deviation below the mean, and none were more than 2 standard deviations below the mean.
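For reference, PPVT-III standard scores (SS) are normed to a mean of 100 and a standard deviation of 15, so the two criteria in the preceding paragraph correspond to the following standard-score cutoffs:

$$
z = \frac{SS - 100}{15}, \qquad z < -1 \iff SS < 85, \qquad z < -2 \iff SS < 70
$$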

The ability of the children with hearing loss to learn new words was also reduced relative to that of the children with normal hearing. Although the children with hearing loss learned fewer words than their age-matched normal-hearing peers, they performed at a level consistent with their receptive vocabularies. These data suggest an overall delay in vocabulary acquisition rather than a disparity between the two groups that widens with age because of the hearing loss. Also, there was no age at which the word-learning rate of the hearing-impaired children accelerated to close the gap between the two groups. Instead, the delay in vocabulary acquisition of the hearing-impaired children was consistent across age. It is important to note, however, that these cross-sectional data may not reflect the pattern that would be observed in a longitudinal study.

Finally, the results of this study revealed small improvements in overall performance in the extended-bandwidth condition. Because the difference was not significant at the traditional p = 0.05 level, the effect does not appear to be robust. It is important to recall, however, that each novel word in the present study contained three phonemes that occurred in no other word. The children could therefore have learned the words from whichever phonemes were unaffected by the bandwidth manipulation. Recent data (Stelmachowicz, Pittman, Hoover, Lewis, & Moeller, 2004) have shown that even hearing-impaired children who are identified and aided early exhibit marked delays in the acquisition of all speech sounds relative to their normal-hearing peers. Interestingly, these delays were shortest for vowels and longest for the fricative class, whose members (notably /s/) carry substantial energy at high frequencies; this pattern supports the idea that hearing-aid bandwidth may influence word learning. Future bandwidth studies might therefore construct words with a greater proportion of fricatives. In addition to the effect of bandwidth on overall word-learning performance (short-term effects), it may also be of interest to examine the rate of word learning over time (long-term effects). During typical vocabulary development, all children are expected eventually to become proficient with most of the words introduced to them, so repeated exposures over time (as occur naturally in childhood) may produce results different from those found in the short term. In summary, the results of the present study suggest that, although word learning in these children did not increase significantly as a function of bandwidth, extended high-frequency amplification was not detrimental to word learning.

Acknowledgements

The authors would like to thank Mary Pat Moeller for her comments on earlier versions of this manuscript, as well as three anonymous reviewers for their helpful comments. This work was supported by grants from the National Institute on Deafness and Other Communication Disorders, National Institutes of Health (R01 DC04300 and P30 DC04662).

Footnotes

The peak energy of /s/ occurs between 4 and 9 kHz depending on the talker (Boothroyd, Erickson, & Medwetsky, 1994; Pittman, Stelmachowicz, Lewis, & Hoover, 2003).

Because much of the upper portion of the 9-kHz third-octave band (frequency range = 8.018 to 10.102 kHz) was attenuated by the 10-kHz low-pass filter, stimulus levels at 9 kHz were calculated up to 9.335 kHz, which represented the 6-dB down point relative to the amplitude at 8.018 kHz.
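The band edges quoted in this footnote follow from the base-2 definition of a one-third-octave band, in which the lower and upper edges lie at the center frequency divided and multiplied by 2^(1/6). A minimal Python sketch reproduces them; the function name is illustrative, and the 9.335-kHz 6-dB down point depends on the measured filter response, so it is not computed here.

```python
def third_octave_edges(center_khz: float) -> tuple[float, float]:
    """Lower and upper edges of a base-2 one-third-octave band.

    The edges sit one-sixth of an octave on either side of the center
    frequency, so upper / lower = 2 ** (1/3).
    """
    half_band = 2 ** (1 / 6)
    return center_khz / half_band, center_khz * half_band


low, high = third_octave_edges(9.0)
print(f"9-kHz third-octave band: {low:.3f} to {high:.3f} kHz")
```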

Reference List

- Blair J, Peterson M, Viehweg S. The effects of mild sensorineural hearing loss on academic performance of young school-age children. Volta Review. 1985;87:87–93.

- Blamey PJ, Sarant JZ, Paatsch LE, Barry JG, Bow CP, Wales RJ, et al. Relationships among speech perception, production, language, hearing loss, and age in children with impaired hearing. J. Speech Lang. Hear. Res. 2001;44:264–285. doi: 10.1044/1092-4388(2001/022).

- Bloom P. How Children Learn the Meaning of Words. MIT Press; Cambridge, MA: 2000. Fast mapping in the course of word learning; pp. 25–53.

- Boothroyd A, Erickson FN, Medwetsky L. The hearing aid input: a phonemic approach to assessing the spectral distribution of speech. Ear Hear. 1994;15:432–442.

- Briscoe J, Bishop DV, Norbury CF. Phonological processing, language, and literacy: a comparison of children with mild-to-moderate sensorineural hearing loss and those with specific language impairment. J. Child Psychol. Psychiatry. 2001;42:329–340.

- Childers JB, Tomasello M. Two-year-olds learn novel nouns, verbs, and conventional actions from massed or distributed exposures. Dev. Psychol. 2002;38:967–978.

- Ching TY, Dillon H, Byrne D. Speech recognition of hearing-impaired listeners: predictions from audibility and the limited role of high-frequency amplification. J. Acoust. Soc. Am. 1998;103:1128–1140. doi: 10.1121/1.421224.

- Davis JM, Elfenbein J, Schum R, Bentler RA. Effects of mild and moderate hearing impairments on language, educational, and psychosocial behavior of children. J. Speech Hear. Disord. 1986;51:53–62. doi: 10.1044/jshd.5101.53.

- Davis JM, Shepard NT, Stelmachowicz PG, Gorga MP. Characteristics of hearing-impaired children in the public schools: part II--psychoeducational data. J. Speech Hear. Disord. 1981;46:130–137. doi: 10.1044/jshd.4602.130.

- Denes PB. On the statistics of spoken English. J. Acoust. Soc. Am. 1963;35:892–905.

- Dollaghan CA. Fast mapping in normal and language-impaired children. J. Speech Hear. Disord. 1987;52:218–222. doi: 10.1044/jshd.5203.218.

- Dunn LM, Dunn LM. Peabody Picture Vocabulary Test. 3rd ed. American Guidance Services, Inc.; Circle Pines, MN: 1997.

- Gathercole SE, Hitch GJ, Service E, Martin AJ. Phonological short-term memory and new word learning in children. Dev. Psychol. 1997;33:966–979. doi: 10.1037//0012-1649.33.6.966.

- Gilbertson M, Kamhi AG. Novel word learning in children with hearing impairment. J. Speech Hear. Res. 1995;38:630–642. doi: 10.1044/jshr.3803.630.

- Gilman S, Dirks DD. Acoustics of ear canal measurement of eardrum SPL in simulators. J. Acoust. Soc. Am. 1986;80:783–793. doi: 10.1121/1.393953.

- Hogan CA, Turner CW. High-frequency audibility: benefits for hearing-impaired listeners. J. Acoust. Soc. Am. 1998;104:432–441. doi: 10.1121/1.423247.

- Hornsby BW, Ricketts TA. The effects of hearing loss on the contribution of high- and low-frequency speech information to speech understanding. J. Acoust. Soc. Am. 2003;113:1706–1717. doi: 10.1121/1.1553458.

- Kortekaas RW, Stelmachowicz PG. Bandwidth effects on children’s perception of the inflectional morpheme /s/: acoustical measurements, auditory detection, and clarity rating. J. Speech Lang. Hear. Res. 2000;43:645–660. doi: 10.1044/jslhr.4303.645.

- Lederberg AR, Prezbindowski AK, Spencer PE. Word-learning skills of deaf preschoolers: the development of novel mapping and rapid word-learning strategies. Child Dev. 2000;71:1571–1585. doi: 10.1111/1467-8624.00249.

- Mervis CB, Bertrand J. Early lexical acquisition and the vocabulary spurt: a response to Goldfield & Reznick. J. Child Lang. 1995;22:461–468. doi: 10.1017/s0305000900009880.

- Mervis CB, Bertrand J. Acquisition of the novel name--nameless category (N3C) principle. Child Dev. 1994;65:1646–1662. doi: 10.1111/j.1467-8624.1994.tb00840.x.

- Moeller MP. Early intervention and language development in children who are deaf and hard of hearing. Pediatrics. 2000;106:E43. doi: 10.1542/peds.106.3.e43.

- Oetting JB, Rice ML, Swank LK. Quick Incidental Learning (QUIL) of words by school-age children with and without SLI. J. Speech Hear. Res. 1995;38:434–445. doi: 10.1044/jshr.3802.434.

- Pittman AL, Stelmachowicz PG. Hearing loss in children and adults: audiometric configuration, asymmetry, and progression. Ear Hear. 2003;24:198–205. doi: 10.1097/01.AUD.0000069226.22983.80.

- Pittman AL, Stelmachowicz PG, Lewis DE, Hoover BM. Spectral characteristics of speech at the ear: implications for amplification in children. J. Speech Lang. Hear. Res. 2003;46:649–657. doi: 10.1044/1092-4388(2003/051).

- Rice ML, Oetting JB, Marquis J, Bode J, Pae S. Frequency of input effects on word comprehension of children with specific language impairment. J. Speech Hear. Res. 1994;37:106–122. doi: 10.1044/jshr.3701.106.

- Seewald RC, Cornelisse LE, Ramji KV, Sinclair ST, Moodie KS, Jamieson DG. DSL v4.1 for Windows: A software implementation of the Desired Sensation Level (DSL[i/o]) method for fitting linear gain and wide-dynamic-range compression hearing instruments. [Computer software]. Hearing Healthcare Research Unit, University of Western Ontario; London, Ontario, Canada: 1997.

- Skinner MW. Speech intelligibility in noise-induced hearing loss: effects of high-frequency compensation. J. Acoust. Soc. Am. 1980;67:306–317. doi: 10.1121/1.384463.

- Stelmachowicz PG, Pittman AL, Hoover BM, Lewis DE. Aided perception of /s/ and /z/ by hearing-impaired children. Ear Hear. 2002;23:316–324. doi: 10.1097/00003446-200208000-00007.

- Stelmachowicz PG, Pittman AL, Hoover BM, Lewis DE. Novel-word learning in children with normal hearing and hearing loss. Ear Hear. 2004;25:47–56. doi: 10.1097/01.AUD.0000111258.98509.DE.

- Stelmachowicz PG, Pittman AL, Hoover BM, Lewis DE. Effect of stimulus bandwidth on the perception of /s/ in normal- and hearing-impaired children and adults. J. Acoust. Soc. Am. 2001;110:2183–2190. doi: 10.1121/1.1400757.

- Stelmachowicz PG, Pittman AL, Hoover BM, Lewis DE, Moeller MP. The importance of high-frequency audibility in the speech and language development of children with hearing loss. Arch. Otolaryngol. Head Neck Surg. 2004;130:556–562. doi: 10.1001/archotol.130.5.556.

- Storkel HL. Learning new words: phonotactic probability in language development. J. Speech Lang. Hear. Res. 2001;44:1321–1337. doi: 10.1044/1092-4388(2001/103).

- Storkel HL. Learning new words II: Phonotactic probability in verb learning. J. Speech Lang. Hear. Res. 2003;46:1312–1323. doi: 10.1044/1092-4388(2003/102).

- Storkel HL, Rogers MA. The effect of probabilistic phonotactics on lexical acquisition. Clinical Linguistics & Phonetics. 2000;14:407–425.

- Sullivan JA, Allsman CS, Nielsen LB, Mobley JP. Amplification for listeners with steeply sloping, high-frequency hearing loss. Ear Hear. 1992;13:35–45. doi: 10.1097/00003446-199202000-00008.

- Turner CW. The limits of high-frequency amplification. Hearing Aid Journal. 1999;52:10–14.

- Turner CW, Cummings KJ. Speech audibility for listeners with high-frequency hearing loss. Am. J. Audiol. 1999;8:47–56. doi: 10.1044/1059-0889(1999/002).

- Vitevitch MS, Luce PA, Charles-Luce J, Kemmerer D. Phonotactics and syllable stress: implications for the processing of spoken nonsense words. Lang. Speech. 1997;40(Pt 1):47–62. doi: 10.1177/002383099704000103.

- Vitevitch MS, Luce PA, Pisoni DB, Auer ET. Phonotactics, neighborhood activation, and lexical access for spoken words. Brain Lang. 1999;68:306–311. doi: 10.1006/brln.1999.2116.