Abstract

Two experiments examined young–old differences in speed of identifying emotion faces and labeling of emotion expressions. In Experiment 1, participants were presented arrays of 9 faces in which all faces were identical (neutral expression) or 1 was different (angry, sad, or happy). Both young and older adults were faster identifying faces as “different” when a discrepant face expressed anger than when it expressed sadness or happiness, and this was true whether the faces were schematics or photographs of real people. In Experiment 2, participants labeled the Experiment 1 schematic and real faces. Older adults were significantly worse than young when labeling angry schematic faces, and angry and sad real faces. Together, this research indicates no age differences in identifying discrepant angry faces from an array, although older adults do have difficulty choosing the correct emotion label for angry faces.

Keywords: Aging, Emotion labeling, Pop-out effect

There are good reasons for thinking that threatening, angry faces might capture attention particularly well compared with nonthreatening faces. In our evolutionary history, the ability to spot a threat quickly and take action might have made all the difference in enabling survival, making it likely that enhanced threat detection would have been selected for in evolution. Indeed, although the precise methodology has an effect on the outcome (Juth, Lundqvist, Karlsson, & Öhman, 2005; Purcell, Stewart, & Skov, 1996), there is evidence that young adults tend to detect an angry, threatening face in an array of other stimuli more quickly than a nonthreatening face (Eastwood, Smilek, & Merikle, 2001; Fox et al., 2000; Gilboa-Schectman, Foa, & Amir, 1999; Hansen & Hansen, 1988; Juth et al., 2005; Öhman, Lundqvist, & Esteves, 2001; Tipples et al., 2002).

Mather and Knight (2006) demonstrated that similar effects are obtained with older adults. They presented arrays of nine schematic faces to young and older adults. Half the time the nine faces were identical (all neutral expressions), and half the time one face was different (with eight faces neutral and the ninth expressing anger, sadness, or happiness). The task was to press a key to identify the faces as the same or as different. Mather and Knight found that although older adults responded more slowly overall compared with young adults, angry faces tended to “pop out” so that both young and older adults identified angry faces as different more quickly than sad or happy faces.

Likewise, Hahn, Carlson, Singer, and Gronlund (2006) demonstrated that both young and older adults are quicker to locate an angry schematic face among an array of neutral schematic faces (by touching a computer screen) than to identify a happy schematic face.

The findings of Mather and Knight (2006) and Hahn and colleagues (2006) are particularly interesting for two reasons. First, when asked to rate the dangerousness of faces, young adults tend to rate unsmiling male faces as more dangerous than smiling female faces, and although older adults do likewise, they do not differentiate the faces as much as young adults. Furthermore, the differences between young and older adults seem to be restricted to differentiating faces. There are no differences in the way that young and older adults differentiate non-face stimuli such as photographs of sporting activities or occupations (Ruffman, Sullivan, & Edge, 2006). Second, older adults have well-established difficulties labeling certain facial expressions, with a recent meta-analysis establishing that facial expressions of anger, sadness, and fear present particular difficulty for older adults (Ruffman, Henry, Livingstone, & Phillips, 2008). These age differences have been obtained in at least two broad cultures (Western cultures and Japan), using a combination of Western models (the Facial Expressions of Emotion: Stimuli and Tests set; Young, Perrett, Calder, Sprengelmeyer, & Ekman, 2002) and a mix of Western and Japanese models (the Matsumoto & Ekman, 1988, set). Although the findings are based on cross-sectional rather than longitudinal studies so that cohort effects have not been examined, they are suggestive of declining emotion labeling with age.

These findings suggest the following possibility: older adults might react quickly to angry faces yet be unable to label faces as angry and they might be less able to differentiate faces for their potential danger value when asked to do so. The ability to label faces as angry or dangerous requires explicit knowledge of the terms “angry” and “dangerous” because older adults are explicitly asked about these properties. However, angry faces may nevertheless pop out and be attended to more quickly when in an array of neutral faces because rapid attention does not require explicit labeling of the faces as angry or dangerous. That is, quicker reaction times would demonstrate that older adults process the angry faces in a meaningful way even when they lack explicit knowledge of the faces as “angry.” Although reaction times slow with age, they typically remain sensitive measures of implicit knowledge. For instance, Bennett, Howard, and Howard (2007) had young and older adults try to predict the location of a stimulus on a computer screen when it appeared in one of three positions. The stimulus location followed a complicated pattern, and the ability to discern the pattern would result in decreases in reaction time as participants predicted the location. Young and old showed comparable decreases in reaction times over trials indicating equivalent implicit learning, although explicit learning was worse in older adults than in young adults.

There is a difficulty in interpreting previous findings at present, however. Mather and Knight (2006) and Hahn and colleagues (2006) used schematic drawings of faces, whereas researchers examining explicit emotion labeling or explicit threat detection use photographs of real faces. It is not clear whether older adults would experience the same difficulties labeling schematic expressions as they do labeling photographs of real faces. If older adults do not experience similar difficulties with schematics, it would mean that using reaction times to measure a pop-out effect would not provide unique insight into older adults’ ability to process emotional expressions. Thus, it seems important to test whether the response times of older adults would also be faster when the discrepant face is a photograph of a real face expressing anger and, also, to test whether older adults would have difficulties recognizing the emotional expressions portrayed in schematics as they do for photographs.

We examined these issues in Experiment 1 by measuring response time to both schematic faces and photographs of real faces, and in Experiment 2, by asking participants to label each of the schematic and real emotion faces used in Experiment 1. Our interest was in whether older adults had difficulties identifying the schematic or real emotion faces yet still responded more quickly to angry faces than to sad or happy faces. In Experiment 1, we presented young and older adults with arrays of nine faces. In one block of trials, the faces were schematic faces, and in another block, they were all photographs of real faces. In each block, the faces were all the same (all neutral) in half the trials, and in half the trials, eight faces were neutral and one was discrepant (angry, sad, or happy). Participants’ task was to push a button on a keyboard to indicate the faces were either all the same or one was different.

EXPERIMENT 1

Methods

Participants.—

Table 1 presents the participant characteristics in Experiments 1 and 2. There were 29 young adults (M = 21 years) and 31 older adults (M = 71 years). In both experiments, young adults were recruited from undergraduate psychology classes for a small portion of course assessment or from a student employment agency for payment. Older adults were recruited by telephone and were selected from a database compiled through newspaper advertisements. Older adults were offered book and petrol vouchers or money as payment, and had their vision tested on Snellen's Eye Chart to ensure that they had adequate visual acuity to complete the task. All older adults had corrected vision in the normal range (i.e., 20/20 to 20/30). As expected, relative to young adults, older adults had equal levels of self-reported positive affect and lower levels of negative affect (see Table 1). These findings replicate those in Mather and Knight (2006) and are consistent with positivity effects with age (Mather & Carstensen, 2005). A typical cognitive profile in aging includes intact or improved verbal ability (Salthouse, 2000), and participants in the present study were typical in this respect (see Table 1). Mather and Knight reported that older adults tended to perform better in the morning and young adults in the afternoon. Therefore, in both experiments equal numbers of young and old were tested throughout the day to avoid any biases for either age group.

Table 1.

Descriptive Statistics for Participants in Experiments 1 and 2

| | Young Adults | Older Adults |
| --- | --- | --- |
| Experiment 1 | | |
| Number | 29 | 31 |
| Mean age, years | 21 | 71 |
| Age range, years | 18–28 | 64–85 |
| Number females | 15 | 20 |
| Vocabulary | 16.79a (3.37) | 19.94b (2.89) |
| Positive affect | 30.76a (6.09) | 32.81a (6.67) |
| Negative affect | 17.48a (3.68) | 14.13b (4.02) |
| Experiment 2 | | |
| Number | 30 | 30 |
| Mean age, years | 19 | 69 |
| Age range, years | 18–22 | 62–85 |
| Number females | 19 | 19 |
| Vocabulary | 16.30a (3.42) | 20.73b (2.32) |
| Years education | 13.93a (1.02) | 13.40a (3.67) |

Note: t tests comparing young and old were conducted for vocabulary, education, and positive affect and negative affect. Different superscripts indicate significant age group differences (p < .05, two tailed).

Materials.—

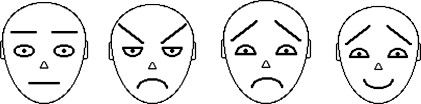

The four schematic faces used (neutral, angry, sad, happy) were identical to those used in Mather and Knight (2006) and are included in Figure 1. The 108 photographs of real faces used (27 each of neutral, angry, sad, and happy) were from the MacBrain Face Stimulus set* and featured 27 different people expressing anger, sadness, or happiness, or having a neutral expression. Figure 2 includes examples of the real faces. The real faces consisted of color photographs of 14 young adult men and 13 young adult women (all approximately in their early to mid-20s). In each trial, nine photographs appeared on the computer monitor in a 3 × 3 array against a white background. Each schematic and real face was standardized at 84 × 98 pixels or 2.96 × 3.46 cm. Eight of the nine faces were neutral expressions of the same individual (either the neutral schematic or a neutral photo of 1 of the 27 people included in the MacBrain set). The ninth face was either identical (the same individual with a neutral expression) or of the same individual expressing anger, sadness, or happiness.

Figure 1.

The schematic stimuli used in Experiments 1 and 2.

The faces are neutral, angry, sad, and happy.

Figure 2.

Examples of the photographs of real faces used in Experiments 1 and 2.

The faces are neutral, angry, sad, and happy.

The Peabody Picture Vocabulary Test III was used as a measure of crystallized abilities (Dunn & Dunn, 1997) and the Positive and Negative Affect Scale as a measure of mood (Watson, Clark, & Tellegen, 1988).

Design.—

The schematic faces were given in one block and the real faces in another, with participants first receiving 12 practice trials (3 schematic same, 3 schematic different, 3 real same, 3 real different), and then receiving the two experimental blocks in a counterbalanced order. Within each block there were 162 trials, 81 of which were same trials (all faces were neutral), and 81 of which were different trials with eight neutral faces and one discrepant face in the 3 × 3 array (27 trials each for angry, sad, and happy). The discrepant face was situated randomly in one of the nine positions in the 3 × 3 array. In the real faces block, each discrepant face appeared three times in each of the nine possible positions in the 3 × 3 array. The order of the 162 trials in each block was randomly determined.
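The trial counts described above can be checked with a short sketch. It is only an illustration of the block structure (81 same trials plus 27 discrepant trials per emotion, shuffled), not the software used in the study. The function name `build_block`, the dictionary keys, and the seed are hypothetical, and the sketch places the discrepant face at a random position without modeling which face identity is shown or the balanced positions described for the real faces block.

```python
import random

EMOTIONS = ("angry", "sad", "happy")


def build_block(n_same=81, n_per_emotion=27, n_positions=9, seed=None):
    """Return a shuffled list of trial descriptions for one block.

    Assumption: positions are indexed 0-8 across the 3 x 3 array and are
    drawn at random for every discrepant trial.
    """
    rng = random.Random(seed)
    # "Same" trials: all nine faces are neutral.
    trials = [{"type": "same", "emotion": None, "position": None}
              for _ in range(n_same)]
    # "Different" trials: eight neutral faces and one discrepant face.
    for emotion in EMOTIONS:
        for _ in range(n_per_emotion):
            trials.append({"type": "different",
                           "emotion": emotion,
                           "position": rng.randrange(n_positions)})
    rng.shuffle(trials)  # random trial order within the block
    return trials


block = build_block(seed=1)
```

Counting the entries of `block` by `type` and `emotion` recovers the 81/27/27/27 split given in the design.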

Procedure.—

In each trial, a fixation cross first appeared for 2 s at the center of a 38-cm computer monitor. The nine-face array then appeared until the participant pressed a color-coded key labeled “DIF” or “SAM” using the left or right index finger to indicate whether there was one discrepant face or all the faces were the same. After two seconds, the next trial was signaled by the reappearance of the fixation cross. Participants were encouraged to respond both quickly and accurately and took breaks at regular intervals to ensure they did not become overtired.

Results

Same–different judgments.—

First, we examined the proportion of errors made when making the “same–different” judgments. As in Mather and Knight (2006), very few errors were made (see Table 2). Total errors across neutral, angry, sad, and happy trials were compared using Mann–Whitney U tests. Older adults made fewer errors than young adults on both schematic faces, Z = −2.10, p < .05, and real faces, Z = −2.29, p < .05.

Table 2.

Proportion of Trials (and SDs) Participants Erred on Same–Different Judgments for Schematic and Real Faces in Experiment 1

| | Younger Adults | Older Adults |
| --- | --- | --- |
| Schematic faces | | |
| Neutral | .006 (.011) | .0004 (.002) |
| Angry | .003 (.010) | .004 (.011) |
| Sad | .005 (.013) | .000 (.000) |
| Happy | .008 (.023) | .002 (.013) |
| Real faces | | |
| Neutral | .012 (.020) | .004 (.006) |
| Angry | .061 (.074) | .031 (.044) |
| Sad | .161 (.147) | .100 (.101) |
| Happy | .066 (.088) | .022 (.039) |

Schematic response times.—

Next, we examined mean response times (see Table 3). The response times for trials in which same–different judgments were incorrect were omitted from the analysis. Outliers were identified separately for each item in each age group in the following manner. For each item, mean response times and standard deviations were calculated for each age group, and any value outside the mean ± 3 SD was transformed to equal the next closest value (i.e., a value within 3 SD) + 1 (Tabachnick & Fidell, 1989). Once reaction times for individual items had been adjusted, mean response times for individuals were calculated for each category of items (e.g., for angry schematic faces).
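The outlier adjustment can be sketched as follows for the response times of one item within one age group. This is a minimal illustration under stated assumptions rather than the authors' analysis script: the function name `adjust_outliers` is hypothetical, the sample standard deviation is used, the unit added is 1 ms, and values below the lower bound are set to the next closest value minus 1 (the text only describes the "+ 1" case).

```python
from statistics import mean, stdev


def adjust_outliers(rts, n_sd=3.0, unit=1.0):
    """Pull values outside mean +/- n_sd * SD in to just beyond the
    nearest value that lies inside those bounds."""
    m, sd = mean(rts), stdev(rts)
    lower, upper = m - n_sd * sd, m + n_sd * sd
    inside = [x for x in rts if lower <= x <= upper]
    if not inside:
        return list(rts)
    ceiling = max(inside) + unit   # replacement for high outliers
    floor = min(inside) - unit     # assumed replacement for low outliers
    return [ceiling if x > upper else floor if x < lower else x
            for x in rts]


# Hypothetical response times (ms): 19 typical values and one extreme value.
example = list(range(691, 710)) + [5000]
adjusted = adjust_outliers(example)
```

In this made-up example the value of 5,000 ms lies more than 3 SD above the mean and is replaced by 710, one unit above the largest remaining value; all other values are unchanged.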

Table 3.

Mean Reaction Times (and SDs) on Same–Different Judgments for Schematic and Real Faces in Experiment 1

| | Younger Adults, ms (SD) | Older Adults, ms (SD) |
| --- | --- | --- |
| Schematic faces | | |
| Neutral | 1,181.52 (510.20) | 2,758.81 (1,533.30) |
| Angry | 713.56a (228.04) | 1,247.46a (484.87) |
| Sad | 746.71b (237.62) | 1,295.29b (459.38) |
| Happy | 755.18b (256.85) | 1,363.45c (544.31) |
| Real faces | | |
| Neutral | 2,591.47 (898.24) | 5,267.05 (2,166.57) |
| Angry | 452.70a (123.08) | 884.53a (291.06) |
| Sad | 520.25b (181.34) | 968.32b (295.41) |
| Happy | 496.58b (138.37) | 933.42b (303.04) |

Note: We compared reaction times for angry, sad, and happy within each age group. Different superscripts indicate significantly different means (p < .05).

Because the emotion trials were of most interest, we conducted a 3 (emotion: angry, sad, happy) × 2 (age group: young, old) analysis of variance with reaction times on the schematic trials as the dependent variable. In all analyses of variance, where assumptions of sphericity were violated, Huynh–Feldt-corrected p values and mean square errors (MSEs) are reported. The effect for emotion was significant, F(2, 116) = 17.56, p < .001, MSE = 93,065.95, ηp² = .23, as was the effect for age group with older adults slower, F(1, 58) = 31.20, p < .001, MSE = 14,277,383.65, ηp² = .35, and the interaction, F(2, 116) = 4.39, p < .05, MSE = 23,247.37, ηp² = .07.
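The partial eta squared values reported here can be recovered from each F statistic and its degrees of freedom, using the standard identity that partial eta squared equals F × df_effect / (F × df_effect + df_error). The sketch below is only a consistency check on the reported statistics; the function name `partial_eta_squared` is hypothetical.

```python
def partial_eta_squared(f_value, df_effect, df_error):
    """Partial eta squared from an F statistic and its degrees of freedom."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)


# Statistics reported for the schematic-face analysis of variance.
emotion_effect = round(partial_eta_squared(17.56, 2, 116), 2)
age_effect = round(partial_eta_squared(31.20, 1, 58), 2)
interaction = round(partial_eta_squared(4.39, 2, 116), 2)
```

Rounded to two decimals, these give .23, .35, and .07, matching the values in the text.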

Table 3 lists planned comparisons in reaction times within age groups and shows that both young and older adults responded more quickly to angry faces than happy or sad faces, and in addition, older adults responded more quickly to sad faces than to happy faces. The interaction indicates a difference in young–old responding and it was explored with three t tests comparing young versus older adult difference scores (anger reaction time (RT) – sadness RT, anger RT – happiness RT, sadness RT – happiness RT). Large difference scores meant that participants were quicker to label (a) the angry faces as different relative to labeling the sad faces as different, (b) the angry faces as different relative to the happy faces, or (c) the sad faces as different relative to the happy faces. After applying Holm's correction to keep the family-wise error rate at p < .05, the absolute value of one difference score was larger for older adults compared with young adults: that between anger and happiness. In other words, both young and older adults were quicker to label the angry schematic faces as different relative to labeling the happy schematic faces as different, but the difference in reaction times was greater for older adults than young adults.
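For readers unfamiliar with the correction applied to the three t tests, the following is a minimal sketch of the Holm step-down procedure. The function name `holm_reject` is hypothetical and the p values in the example are invented for illustration; they are not the p values obtained in this study.

```python
def holm_reject(p_values, alpha=0.05):
    """Return one True/False per test: True if the null hypothesis is
    rejected under the Holm step-down procedure."""
    m = len(p_values)
    # Visit tests from the smallest p value to the largest.
    order = sorted(range(m), key=lambda i: p_values[i])
    reject = [False] * m
    for rank, idx in enumerate(order):
        # The k-th smallest p value is compared with alpha / (m - k + 1).
        if p_values[idx] <= alpha / (m - rank):
            reject[idx] = True
        else:
            break  # once one test fails, all larger p values also fail
    return reject


# Invented p values for three comparisons (illustration only).
decisions = holm_reject([0.012, 0.20, 0.04])
```

With these invented values only the first comparison survives: .012 is below .05/3, but .04 exceeds .05/2, so testing stops there.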

Finally, we used a t test with a correction factor for unequal variances to compare response times on neutral schematic faces. Older adults were slower to respond than young adults, t(59) = 2.12, p < .05.

Real face response times.—

As for schematic faces, response times for incorrect same–different judgments were omitted from the analysis, and outliers were adjusted as previously described. We conducted a 3 (emotion) × 2 (age group) analysis of variance, with reaction times on the real faces as the dependent variable. The effect for emotion was significant, F(2, 116) = 12.17, p < .001, MSE = 87,260.31, ηp² = .17, as was the effect for age group with older adults slower, F(1, 58) = 56.03, p < .001, MSE = 8,659,469.62, ηp² = .49. The interaction was not significant, indicating young and old sped up to an equal extent on angry faces relative to sad and happy faces, F(2, 116) = 0.14, p = .87, MSE = 1,439.11, ηp² = .00. Table 3 also lists comparisons in reaction times within age groups and shows that both young and older adults responded more quickly to angry faces than happy or sad faces.

Finally, we used a t test with a correction factor for unequal variances to compare response times with neutral real faces. Older adults were slower to respond than young adults, t(59) = 2.92, p < .01.

Within–age group analyses.—

Finally, we conducted planned comparisons within each age group, comparing reaction times in the different emotion pairs. The results are shown in Table 3. For both schematic and real faces, young and older adults responded more quickly on angry faces compared with sad and happy faces. In addition, older adults responded more quickly on sad schematic faces relative to happy schematic faces.

Discussion

In Experiment 1, we replicated Mather and Knight's (2006) finding that both young and older adults respond more quickly to angry schematic faces than to sad or happy schematic faces. Furthermore, we extended these findings to real faces. As for schematics, both young and old responded more quickly to photographs of real angry faces than real sad faces or real happy faces. The fact that we obtained similar results for schematic and real faces is important because the real faces clearly have more in the way of ecological validity, and because older adults tend to have difficulty labeling photographs of real angry (and sad) faces (Ruffman et al., 2008). In addition, compared with young adults, older adults made fewer errors when judging whether the real faces were the same or different, although there appeared to be something of a speed–accuracy trade-off because older adults were also slower than younger adults. The slower reaction time was likely due to more careful responding but also generally slower processing speeds in older adults (Salthouse, 2000). Older adults’ generally good performance differentiating the faces as the same or different indicates that they are relatively good when assessing a face's perceptual features, a result also obtained by Sullivan and Ruffman (2004). However, recognizing that faces look the same or different falls short of recognizing that a face looks angry or sad, and it is this latter judgment that typically proves difficult for older adults.

In Experiment 1, the facilitation in response times with an angry schematic face was greater for older adults than for young adults. Although this was not true for real faces, the result for schematics is similar to that of Mather and Knight (2006), who found that older adults had a larger effect size than younger adults when comparing reaction times on threatening schematic faces (angry and sad) with nonthreatening faces (happy). Another finding of Experiment 1 was that older adults were quicker to respond to sad schematic faces than to happy schematics. Although not obtained with real faces, the result for schematics is consistent with the idea that negative emotion faces in general attract attention.

Interestingly, same–different judgments appeared to be quicker for real faces compared with schematic faces. A speed–accuracy trade-off may again have been at work given that more errors were made with real faces. Importantly, although more errors were made by both young and older adults when categorizing the real faces, participants were faster responding to angry faces for both schematics and real faces. The Experiment 1 findings again raise the question as to whether older adults might respond more quickly to angry faces even when they have difficulty labeling such faces as angry. In Experiment 2, we tested this idea.

EXPERIMENT 2

As discussed at the outset, there are now well-established findings that older adults find it more difficult than younger adults to label photographs of angry and sad faces (Ruffman et al., 2008). The findings of Experiment 1, and in particular the faster responding on photographs of angry faces, raise a question. On the one hand, it is possible that older adults’ faster responding to angry faces indicates an ability to differentiate emotion expressions even when they have difficulties explicitly labeling these emotion faces. On the other hand, different faces have been used in emotion labeling research (the Ekman and Friesen, 1976, or Matsumoto and Ekman, 1988, sets) than in Experiment 1 (the MacBrain stimuli), and it is possible that the faces used in Experiment 1 were particularly easy to label and that older adults would not be worse labeling these than younger adults.

In Experiment 2, we tested these possibilities by having a new group of young and older adults label all emotion faces used in Experiment 1. Our interest was in whether there were age group differences in labeling of emotions even though in Experiment 1 there were no age group differences in same–different judgments or reaction times (on angry faces relative to sad and happy faces).

Methods

Participants.—

The participants of Experiment 2 came from the same pool as those of Experiment 1 but none served in both experiments. Participant characteristics are listed in Table 1. There were 30 young adults (M = 19 years, range = 18–22 years) and 30 older adults (M = 69 years, range = 62–85 years). Young and older adults had similar levels of vocabulary to those of Experiment 1. In Experiment 2, we also collected information on years of education, with young and older adults having very similar levels. All participants had vision within the normal range on Snellen’s Eye Chart. The shared participant pool and the similar vocabulary levels suggest that the two samples were a good match for comparing response times (in Experiment 1) to labeling (in Experiment 2). Given that the Experiment 2 participants were from the same pool as Experiment 1, that the measures of positive and negative affect used in Experiment 1 had demonstrated the predicted age group characteristics, and that measures of mood are typically seen as tangential to the main aims of emotion labeling experiments and hence are not typically included, we did not include the affect measures in Experiment 2.

Materials and procedure.—

We gave participants the same materials in the same experimental design with the same blocked random order of stimuli (i.e., a block of 162 schematic trials and a block of 162 trials with photographs of real people), counterbalancing blocks. For each block, there were 27 angry faces, 27 sad faces, and 27 happy faces. The difference compared with Experiment 1 was that for each array presented, we were not interested in same–different reaction times but, instead, in whether participants could identify the emotion expressed.

Participants were asked to say whether all the faces were the same or one was different. If one face was different, they were asked to label that face using the basic emotion labels: angry, sad, fearful, disgusted, surprised, and happy. These labels were attached to computer keys and participants pressed a key to indicate the correct label. If participants thought all the faces were the same, then they indicated this and pressed any button to continue. We examined all responses on the “different” trials and our interest was in whether participants correctly labeled the angry, sad, and happy faces.

Results

Schematic faces.—

Table 4 presents the proportion of correct responses when labeling angry, sad, and happy schematic faces. These data were examined with a 3 (emotion) × 2 (age group) analysis of variance, with proportion of schematic faces labeled correctly as the dependent variable. There was an effect for age group, F(1, 58) = 4.31, p < .05, MSE = 314.69, ηp² = .07, indicating better emotion recognition by the young adults overall, and a significant interaction, F(2, 116) = 5.09, p < .01, MSE = 175.34, ηp² = .08. The effect for emotion was not significant, F(2, 116) = 0.37, p = .37, MSE = 12.82, ηp² = .01. The interaction was explored using three t tests comparing young and old on the angry, sad, and happy trials. After applying Holm's correction, there was one age group difference: older adults were significantly worse labeling angry schematic faces.

Table 4.

Proportion of Schematic and Real Faces Correctly Recognized in Experiment 2

| | Younger Adults | Older Adults |
| --- | --- | --- |
| Schematic faces | | |
| Angry | .87a (.18) | .66b (.31) |
| Sad | .86a (.17) | .73a (.30) |
| Happy | .78a (.27) | .82a (.30) |
| Real faces | | |
| Angry | .79a (.12) | .68b (.17) |
| Sad | .69a (.15) | .53b (.21) |
| Happy | .85a (.11) | .91b (.07) |

Note: t tests were used to compare young versus old for each emotion. Different superscripts indicate different means after applying Holm's correction (p < .05, two tailed).

Real faces.—

Table 4 presents the proportion of correct responses when labeling photographs of angry, sad, and happy real faces. These data were examined with a 3 (emotion) × 2 (age group) analysis of variance, with proportion of real faces labeled correctly as the dependent variable. There was a main effect for emotion, F(2, 116) = 71.77, p < .001, MSE = 959.10, ηp² = .55, a main effect for age group, F(1, 58) = 7.24, p < .01, MSE = 170.14, ηp² = .11, indicating better emotion recognition by the young adults overall, and a significant interaction, F(2, 116) = 12.08, p < .001, MSE = 161.40, ηp² = .17. As for schematic faces, the interaction was explored using three t tests comparing young and old on the angry, sad, and happy trials. After applying Holm's correction, the findings showed that older adults were significantly worse labeling angry and sad real faces but significantly better labeling happy real faces.

Discussion

In Experiment 2, labeling of emotion was well below ceiling for both schematic and real faces. The most compelling finding to come out of Experiment 2 was that older adults were significantly worse than young adults when labeling angry schematic faces and angry and sad real faces. In the General Discussion, we discuss how the results of Experiment 2 might be integrated with the Experiment 1 finding of faster responding to angry faces in both young and older adults.

One thing to note about our findings is how they relate to previous emotion labeling data. Ruffman and colleagues’ (2008) meta-analysis indicates that older adults experience particular difficulties when labeling facial expressions of anger, sadness, and fear. Although the studies examined in that meta-analysis used the Ekman and Friesen (1976) or Matsumoto and Ekman (1988) stimuli, the photographs in the MacBrain stimuli yielded similar results. This helps to confirm both that there is nothing unusual about the response times obtained using the MacBrain stimuli in Experiment 1 and also that previous emotion labeling results are not simply an artifact of the stimuli researchers have used.

GENERAL DISCUSSION

At the outset, we pointed out that the findings of Mather and Knight (2006) and Hahn and colleagues (2006), coupled with findings demonstrating emotion labeling difficulties in older adults, are consistent with the idea that emotion labeling difficulties might exist alongside the ability to differentiate emotion faces in other ways and respond to angry faces more quickly. At the same time, it was not possible to determine whether faster responding to angry faces in Mather and Knight and Hahn and colleagues had occurred due to the use of schematic faces, whereas emotion recognition research has used photographs of real faces. However, the results of the current experiments converge to provide clear evidence that older adults do respond more quickly to angry faces even when they have difficulty labeling them.

In Experiment 1, we replicated Mather and Knight's (2006) finding that both young and older adults are quicker to respond to angry schematic faces than to sad or happy faces in a nine-face array. Indeed, similar to Mather and Knight's finding of a larger effect size for older adults, we found that the amount by which older adults sped up on angry schematic faces relative to happy faces was greater than the amount by which young adults sped up. When we used color photographs of real faces we also found that both age groups were quicker to respond to angry faces than to sad or happy faces. Thus, across both types of stimuli, older adults were similar to young adults in that they were quicker to label angry faces as different compared with sad and happy faces. Despite the fact that angry faces were responded to more quickly in Experiment 1, in Experiment 2 we found that older adults were worse than young adults at labeling angry schematic and real faces as “angry.”

Together, the results of the present study reveal something of a contrast. The angry face apparently facilitates same–different judgments because it “pops out,” although older adults have difficulty labeling such faces as angry. We suspect that such a contrast is not uncommon. For instance, nonhuman primates will typically respond appropriately and quickly to a conspecific’s threat or fear display, and differently to these displays than to other displays such as a mating ritual. Yet, they obviously have no ability to provide verbal labels for these displays. The same is true of human infants in that 3.5-month-olds differentiate between mothers’ looks of sadness versus happiness (Montague & Walker-Andrews, 2002) but clearly cannot label these emotions as such. In general, to survive and flourish in the world, an organism needs to accurately process and respond to different emotion displays. In the present study, the faster responding to angry faces indicates that on some level older adults differentiate these faces in a meaningful way from expressions of sadness or happiness.

Neuroscience might provide some clues as to why angry faces are responded to more quickly. Angry faces activate a number of brain regions, including the amygdala (Adolphs, Russell, & Tranel, 1999; Adolphs, Tranel, Damasio, & Damasio, 1994, 1995; Fischer et al., 2005; Morris, Öhman, & Dolan, 1998; Young, Aggleton, Hellawell, & Johnson, 1995) and cortical regions such as the anterior cingulate cortex and the orbitofrontal cortex (Blair, Morris, Frith, Perrett, & Dolan, 1999; Blair & Cipolotti, 2000; Fine & Blair, 2000; Iidaka et al., 2001; Murphy, Nimmo-Smith, & Lawrence, 2003; Sprengelmeyer, Rausch, Eysel, & Przuntek, 1998). Older adults’ faster responding to angry faces must be reconciled with previous evidence that they have lower levels of amygdala activation compared with young adults when viewing negative emotional expressions (Fischer et al., 2005; Gunning-Dixon et al., 2003; Iidaka et al., 2001; Mather et al., 2004; Tessitore et al., 2005; although see Wright, Wedig, Williams, Rauch, & Albert, 2006).

The question raised by the present study is how older adults can show less amygdala activation than young adults and yet respond equally quickly to angry faces. Although our study does not address this issue directly, it is important to try to understand how age-related reductions in brain activation can coexist with unchanged response times to angry faces. The answer might lie in the fact that amygdala activation can come about by means of either an unconscious route through the superior colliculus and pulvinar nucleus of the thalamus, or a conscious route through the cortex including the visual cortex, the anterior cingulate cortex, and the orbitofrontal cortex (Öhman, 2006). For instance, if angry faces are presented to young adults for only 30 ms and followed by a mask, they result in amygdala activation although they are not consciously perceived (Morris et al., 1998). In this case, amygdala activation takes place by means of the unconscious route. Faces presented for longer durations result in amygdala stimulation by means of the cortical route (Öhman, 2002).

In keeping with the idea that implicit perception is typically spared in aging and that reaction times involve a larger implicit than explicit component (Bennett et al., 2007), we propose that reaction times might be unaffected by aging because they reflect processing through the unconscious, noncortical route. In contrast, the cortical route might be affected because of declines in cell volume and metabolism in the orbitofrontal and cingulate cortices. Because these regions have rich reciprocal connections with the amygdala, reductions in activation in cortical areas would likely result in reductions in activation in the amygdala as well (for a summary of relevant research, see Ruffman et al., 2008).

Consistent with these ideas, Williams and colleagues (2006) found that facial fear labeling in young and middle-aged adults was correlated with gray matter loss in frontal regions. In other words, because imaging studies measure amygdala activation over extended periods of time, they will in large part be measuring activation through the cortical route, and reduced amygdala activation in such studies might stem from reduced cortical activation even if stimulation through the noncortical route is largely unaffected. Also consistent with these ideas, reaction times—the measure on which older adults were similar to young adults—are thought to be more likely to reveal unconscious knowledge (Berry & Dienes, 1993) compared with the direct questions used in emotion labeling research or the threat detection study of Ruffman and colleagues (2006).

This account is speculative in that there are no measures of brain activity in the present experiment, and there is no direct evidence that the reaction times indexed implicit understanding. Nevertheless, we think it is a plausible account and goes some way to explaining the dissociation between reaction times and labeling. If correct, it might also be that the emotion processing of persons with dementias remains spared to some extent. For instance, recent research demonstrates that people in the early stages of Alzheimer's disease show a profile of emotion recognition that is similar to, but more severe than, that of adults undergoing healthy aging: their recognition of facial expressions of disgust is spared (even relative to young adults), whereas their recognition of some other emotions typically experienced as difficult by older adults (including anger) is worse than that of older adult controls (Henry et al., 2008). It is possible that, as in healthy aging, response times to angry faces might also be spared given that some implicit processes are spared in Alzheimer's disease (Meiran & Jelicic, 1995).

Another issue concerns the extent of implicit emotion processing in older adults. Although older adults have difficulty labeling emotion faces, it is unclear how well they can differentiate different emotion stimuli on an automatic level (e.g., a sad face from a fearful face). It also remains unclear how important explicit labeling of emotions is to well-being as opposed to implicit detection. For instance, we have argued that implicit social understanding is more relevant to the severity of autistic symptomatology than explicit social understanding (Ruffman, Garnham, & Rideout, 2001). It is possible that implicit detection of emotion will result in effective social functioning in older adults in many circumstances.

According to socioemotional selectivity theory, older adults are motivated to attend primarily to positive emotional stimuli rather than negative stimuli (e.g., Mather & Carstensen, 2003). Mather and Knight (2006) argued that the bias to attend to positive stimuli occurs at a later stage and is influenced by cognitive control and emotion regulation goals. In contrast, the tendency for rapid attention to threatening stimuli is an automatic response mostly inaccessible to cognitive control. For this reason, the present results are not inconsistent with the idea that older adults attend preferentially to positive emotion stimuli.

Another issue addressed by our research concerns whether the pop-out effect occurs with both schematic faces and real faces. Previously, there has been some question as to whether photographs of real angry faces are responded to more quickly. Sometimes photographs of angry faces are detected more quickly (Gilboa-Schectman et al., 1999; Hansen & Hansen, 1988) but sometimes they are not (Juth et al., 2005; Purcell et al., 1996). Furthermore, in some experiments, Juth and colleagues (2005) found that happy faces are recognized more quickly, leading them to argue that perceptual factors likely play a role in face detection. They suggest that happy faces were sometimes recognized more quickly because they are easier to process, citing as evidence the fact that labeling of individual happy faces was better than labeling of angry faces. Our findings cannot be explained in the same way because schematic and real angry faces were detected more quickly by both young and older adults, but labeling of angry faces tended to be worse than labeling of happy faces (particularly for older adults).

Another way in which perceptual factors might have had an impact is in the nature of the distracters. Whereas Juth and colleagues (2005) included many different persons as distracters, researchers who have found faster reaction times for angry photographs (ourselves, Hansen & Hansen, 1988, and Gilboa-Schectman et al., 1999) have all employed just one person (the same person depicted in the target photograph) for the distracters. Thus, our distracters were perceptually identical (i.e., they depicted the same person with a neutral expression), whereas the distracters of Juth and colleagues were perceptually different (i.e., they all had a neutral expression but included seven different people). It seems obvious that the contrast between an emotional face and the distracters would be clearer when both portray the same person and that any increase in contrast between target item and distracters would provide a more sensitive means of revealing a threat detection advantage. Thus, it is likely that our methodology maximized the likelihood of obtaining a threat detection advantage, but it is possible that a threat detection advantage is relatively fragile and could be disrupted by various perceptual factors such as those found in the study by Juth and colleagues.

In sum, our findings are consistent with those of Mather and Knight (2006) in indicating that some emotion insights are intact even when others, such as the ability to label certain emotions, tend to be compromised in older adults. We have argued that the present findings might indicate that noncortical processing of facial expressions of anger remains intact in older adults, even if cortical processing is reduced.

Footnotes

Development of the MacBrain Face Stimulus Set was overseen by Nim Tottenham and supported by the John D. and Catherine T. MacArthur Foundation Research Network on Early Experience and Brain Development. Please contact Nim Tottenham at tott0006@tc.umn.edu for more information concerning the stimulus set.

References

- Adolphs R, Russell JA, Tranel D. A role for the human amygdala in recognizing emotional arousal from unpleasant stimuli. Psychological Science. 1999;10:167–171.

- Adolphs R, Tranel D, Damasio H, Damasio A. Impaired recognition of emotion in facial expressions following bilateral damage to the human amygdala. Nature. 1994;372:669–672. doi: 10.1038/372669a0.

- Adolphs R, Tranel D, Damasio H, Damasio A. Fear and the human amygdala. Journal of Neuroscience. 1995;15:5879–5891. doi: 10.1523/JNEUROSCI.15-09-05879.1995.

- Bennett IJ, Howard JH, Howard DV. Age-related differences in implicit learning of subtle third-order sequential structure. Journals of Gerontology: Psychological Sciences. 2007;62:P98–P103. doi: 10.1093/geronb/62.2.p98.

- Berry DC, Dienes Z. Implicit learning: Theoretical and empirical issues. Hove, England: Erlbaum; 1993.

- Blair RJR, Morris JS, Frith CC, Perrett DI, Dolan RJ. Dissociable neural responses to facial expressions of sadness and anger. Brain. 1999;122:883–893. doi: 10.1093/brain/122.5.883.

- Blair RJR, Cipolotti L. Impaired social response reversal. A case of ‘acquired sociopathy’. Brain. 2000;123:1122–1141. doi: 10.1093/brain/123.6.1122.

- Dunn LM, Dunn LM. Circle Pines, MN: American Guidance Service; 1997.

- Eastwood JD, Smilek D, Merikle PM. Differential attentional guidance by unattended faces expressing positive and negative emotion. Perception & Psychophysics. 2001;63:1004–1013. doi: 10.3758/bf03194519.

- Ekman P, Friesen WV. Pictures of facial affect. Palo Alto, CA: Consulting Psychologists Press; 1976.

- Fine C, Blair RJR. Mini review: The cognitive and emotional effects of amygdala damage. Neurocase. 2000;6:435–450.

- Fischer H, Sandblom J, Gavazzeni J, Fransson P, Wright CI, Bäckman L. Age-differential patterns of brain activation during perception of angry faces. Neuroscience Letters. 2005;386:99–104. doi: 10.1016/j.neulet.2005.06.002.

- Fox E, Lester V, Russo R, Bowles RJ, Pichler A, Dutton K. Facial expressions of emotion: Are angry faces detected more efficiently? Cognition and Emotion. 2000;14:61–92. doi: 10.1080/026999300378996.

- Gilboa-Schectman E, Foa EB, Amir N. Attentional biases for facial expressions in social phobia: The face-in-the-crowd paradigm. Cognition and Emotion. 1999;13:305–318.

- Gunning-Dixon FM, Gur RC, Perkins AC, Schroeder L, Turner T, Turetsky BI, Chan RM, Loughhead JW, Alsop DC, Maldjian J, et al. Age-related differences in brain activation during emotional face processing. Neurobiology of Aging. 2003;24:285–295. doi: 10.1016/s0197-4580(02)00099-4.

- Hahn S, Carlson C, Singer S, Gronlund SD. Aging and visual search: Automatic and controlled attentional bias to threat faces. Acta Psychologica. 2006;123:312–336. doi: 10.1016/j.actpsy.2006.01.008.

- Hansen CH, Hansen RD. Finding the face in the crowd: An anger superiority effect. Journal of Personality and Social Psychology. 1988;54:917–924. doi: 10.1037//0022-3514.54.6.917.

- Henry JD, Ruffman T, McDonald S, Peek O'Leary M-A, Phillips LH, Brodaty H, Rendell PG. Recognition of disgust is selectively preserved in Alzheimer's disease. Neuropsychologia. 2008;46:1363–1370. doi: 10.1016/j.neuropsychologia.2007.12.012.

- Iidaka T, Omori M, Murata T, Kosaka H, Yonekura Y, Tomohisa O, Sadato N. Neural interaction of the amygdala with the prefrontal and temporal cortices in the processing of facial expressions as revealed by fMRI. Journal of Cognitive Neuroscience. 2001;13:1035–1047. doi: 10.1162/089892901753294338.

- Juth P, Lundqvist D, Karlsson A, Öhman A. Looking for foes and friends: Perceptual and emotional factors when finding a face in the crowd. Emotion. 2005;5:379–395. doi: 10.1037/1528-3542.5.4.379.

- Mather M, Canli T, English T, Whitfield S, Wais P, Ochsner K, Gabrieli J, Carstensen LL. Amygdala responses to emotionally valenced stimuli in older and younger adults. Psychological Science. 2004;15:259–263. doi: 10.1111/j.0956-7976.2004.00662.x.

- Mather M, Carstensen LL. Aging and attentional bias for emotion faces. Psychological Science. 2003;14:409–415. doi: 10.1111/1467-9280.01455.

- Mather M, Carstensen LL. Aging and motivated cognition: The positivity effect in attention and memory. Trends in Cognitive Sciences. 2005;9:496–502. doi: 10.1016/j.tics.2005.08.005.

- Mather M, Knight MR. Angry faces get noticed quickly: Threat detection is not impaired among older adults. Journals of Gerontology: Psychological Sciences. 2006;61:P54–P57. doi: 10.1093/geronb/61.1.p54.

- Matsumoto D, Ekman P. Japanese and Caucasian Facial Expressions of Emotion and Neutral Faces (JACFEE and JACNeuF). San Francisco; 1988.

- Meiran N, Jelicic M. Implicit memory in Alzheimer's disease: A meta-analysis. Neuropsychology. 1995;9:291–303.

- Montague DPF, Walker-Andrews AS. Mothers, fathers, and infants: The role of person familiarity and parental involvement in infants’ perception of emotion expressions. Child Development. 2002;73:1339–1352. doi: 10.1111/1467-8624.00475.

- Morris JS, Öhman A, Dolan RJ. Conscious and unconscious emotional learning in the human amygdala. Nature. 1998;393:467–470. doi: 10.1038/30976.

- Murphy FC, Nimmo-Smith I, Lawrence AD. Functional neuroanatomy of emotion: A meta-analysis. Cognitive, Affective, & Behavioral Neuroscience. 2003;3:207–233. doi: 10.3758/cabn.3.3.207.

- Öhman A. Automaticity and the amygdala: Nonconscious responses to emotional faces. Current Directions in Psychological Science. 2002;11:62–66.

- Öhman A. The role of the amygdala in human fear: Automatic detection of threat. Psychoneuroendocrinology. 2006;30:953–958. doi: 10.1016/j.psyneuen.2005.03.019.

- Öhman A, Lundqvist D, Esteves F. The face in the crowd revisited: A threat advantage with schematic stimuli. Journal of Personality and Social Psychology. 2001;80:381–396. doi: 10.1037/0022-3514.80.3.381.

- Purcell DG, Stewart AL, Skov RB. It takes a confounded face to pop out of a crowd. Perception. 1996;25:1091–1108. doi: 10.1068/p251091.

- Ruffman T, Garnham W, Rideout P. Social understanding in autism: Eye gaze as a measure of core insights. Journal of Child Psychology and Psychiatry. 2001;42:1083–1094. doi: 10.1111/1469-7610.00807.

- Ruffman T, Henry JD, Livingstone V, Phillips LH. A meta-analytic review of emotion recognition and aging: Implications for neuropsychological models of aging. Neuroscience and Biobehavioral Reviews. 2008;32:863–881. doi: 10.1016/j.neubiorev.2008.01.001.

- Ruffman T, Sullivan S, Edge N. Differences in the way older and younger adults rate threat in faces but not situations. Journals of Gerontology: Psychological Sciences. 2006;61:P187–P194. doi: 10.1093/geronb/61.4.p187.

- Salthouse TA. Aging and measures of processing speed. Biological Psychology. 2000;54:35–54. doi: 10.1016/s0301-0511(00)00052-1.

- Sprengelmeyer R, Rausch M, Eysel UT, Przuntek H. Neural structures associated with recognition of facial expressions of basic emotions. Proceedings of the Royal Society of London B: Biological Sciences. 1998;265:1927–1931. doi: 10.1098/rspb.1998.0522.

- Sullivan S, Ruffman T. Emotion recognition deficits in the elderly. International Journal of Neuroscience. 2004;114:94–102. doi: 10.1080/00207450490270901.

- Tabachnick BG, Fidell LS. Using multivariate statistics. New York: Harper & Row; 1989.

- Tessitore A, Hariri AR, Fera F, Smith WG, Das S, Weinberger DR, Mattay VS. Functional changes in the activity of brain regions underlying emotion processing in the elderly. Psychiatry Research: Neuroimaging. 2005;139:9–18. doi: 10.1016/j.pscychresns.2005.02.009.

- Tipples J, Young AW, Quinlan P, Broks P, Ellis AW. Searching for threat. Quarterly Journal of Experimental Psychology. 2002;55A:1007–1026. doi: 10.1080/02724980143000659.

- Watson D, Clark LA, Tellegen A. Development and validation of brief measures of positive and negative affect: The PANAS scales. Journal of Personality and Social Psychology. 1988;54:1063–1070. doi: 10.1037//0022-3514.54.6.1063.

- Williams LM, Brown KJ, Palmer D, Liddell BJ, Kemp AH, Olivieri G, Peduto A, Gordon E. The mellow years?: Neural basis of improving emotional stability over age. Journal of Neuroscience. 2006;26:6422–6430. doi: 10.1523/JNEUROSCI.0022-06.2006.

- Wright CL, Wedig MM, Williams D, Rauch SL, Albert MS. Novel fearful faces activate the amygdala in healthy young and elderly adults. Neurobiology of Aging. 2006;27:361–374. doi: 10.1016/j.neurobiolaging.2005.01.014.

- Young AW, Aggleton JP, Hellawell DJ, Johnson M. Face processing impairments after amygdalotomy. Brain. 1995;118:15–24. doi: 10.1093/brain/118.1.15.

- Young AW, Perrett D, Calder A, Sprengelmeyer R, Ekman P. Facial expressions of emotion: Stimuli and tests. Suffolk, England: Thames Valley Test; 2002.