Abstract

The Liaison Committee on Medical Education requires monitoring of students’ clinical experiences. Student logs, typically used for this purpose, have a number of limitations. We used an electronic system called Patient Tracker to passively generate student encounter data. The data contained in Patient Tracker was compared with the information reported in student logs and with data abstracted from the patients’ charts. Patient Tracker identified 30% more encounters than the student logs. Compared with the student logs, Patient Tracker contained a higher average number of diagnoses per encounter (2.28 vs. 1.03, p<0.01). The diagnostic data contained in Patient Tracker was also more accurate under 4 different definitions of accuracy. None of the diagnoses could be validated in the patients’ charts for only 1.3% (9/677) of Patient Tracker encounters vs. 16.9% (102/601) of student-log encounters (p<0.01). Patient Tracker is a more effective and accurate tool for documenting student clinical encounters than conventional student logs.

Introduction

Medical student education in the 3rd and 4th years of medical school is primarily clinical. The number of patients seen and the variety of diagnoses cared for are important process measures during this time. The Liaison Committee on Medical Education (LCME), the body that accredits medical schools, requires that faculty define the types of patients and clinical conditions students must encounter. Faculty are also required to monitor the students’ experiences and must ensure that all students have the required experiences. (These experiences can also be provided by means other than patient encounters, including simulated experiences such as standardized patients or online or paper cases.)1 Many institutions fulfill this requirement by requiring students to complete logs of patient encounters.

Collecting such information is difficult, and existing methods have significant limitations. A common method is to have students compile a hand-written log.2 Such logs require medical students to recall their patient encounters and accurately characterize their patients’ diagnoses, and they may also require another individual to enter the data into a database for analysis. Studies have shown that such student logs are unreliable.3,4 Different technologies, including personal digital assistants (PDAs)5 and personal computers,6 have been used to try to improve this process. While these systems show improvements over paper logs, they nonetheless have limitations; fundamentally, they still require the students’ active participation. In spite of these shortcomings, a number of medical education studies have relied on unvalidated student logs.5,7–9

The development of Patient Tracker, an electronic system for sign-out and discharge planning, provided an alternative way to collect patient encounter data.10 The Patient Tracker system is used to manage daily patient activities by all members of the medical care team, including medical students. All patients admitted to the hospital are automatically entered into the system and assigned to the relevant teams. The medical staff enters additional data, including diagnosis, medications, a “to do” list, and discharge criteria. We hypothesized that the data collected in this system could be used to generate more accurate patient logs, in part because collection was passive and required no additional effort from the medical students.

Methods and Materials

Setting:

Medical students at the University of Utah perform the inpatient component of their third-year pediatrics clerkship at Primary Children’s Medical Center (PCMC). PCMC is a freestanding, 242-bed, tertiary-care referral center owned and operated by Intermountain HealthCare. All students are required to submit patient logs at the end of their clinical rotations reporting the name, age, sex, and diagnosis of every patient they cared for. Students rotate on three teams: two conventional teams that include pediatric interns, and a nonconventional team composed of four medical students and no interns.11 Students rotating on the nonconventional team, the Glasgow Service, were selected for this study because the team design made it unnecessary to determine whether a patient was cared for by an intern, a student, or both.

Patient Selection Criteria:

Patients admitted to the Glasgow Service between July 2004 and June 2005.

Software:

Patient Tracker is web-based server software (Apache) built on the Java (Sun Microsystems Inc, Santa Clara, CA) architecture and an Oracle 9i (Oracle Corporation, San Jose, CA) database.10 Patient demographics, including name, encounter number, date of birth, age, sex, weight, admission date and time, and room number, are pulled into the Oracle 9i tables from the legacy electronic medical record database. The software also stores additional data entered by a variety of users. The housestaff are responsible for entering the team assignment, attending, intern and/or medical student, diagnosis, medications, and a “to do” list. The intern and/or medical student and the diagnosis are entered into free-text fields. The diagnosis field has minimal formatting requirements and can accommodate lists of any length. Members of the care team update patient information daily to reflect changing or evolving diagnoses and treatments. Patient Tracker is not used to document procedures. Data from this application can be downloaded into a variety of database formats, including Microsoft Access®.
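Because the diagnosis field is free text that can hold a list of any length, counting diagnoses per encounter first requires splitting that field into individual entries. The sketch below uses Python’s built-in sqlite3 module as a stand-in for the Access export; the table and column names, the function names, and especially the delimiter rule are assumptions for illustration, not Patient Tracker’s actual schema or export format.

```python
import re
import sqlite3

# ASSUMPTION: entries in the free-text diagnosis field are separated by
# semicolons, commas, or line breaks. The source only says the field has
# minimal formatting requirements, so this rule is hypothetical.
DX_SEPARATORS = re.compile(r"[;,\n]+")


def load_export(rows: list[tuple[str, str]]) -> sqlite3.Connection:
    """Load (encounter_number, free-text diagnosis field) rows into an in-memory
    database: one row per encounter and one row per individual diagnosis."""
    con = sqlite3.connect(":memory:")
    con.executescript(
        """
        CREATE TABLE encounter (encounter_number TEXT PRIMARY KEY);
        CREATE TABLE diagnosis (
            encounter_number TEXT REFERENCES encounter (encounter_number),
            dx_text TEXT NOT NULL
        );
        """
    )
    for encounter_number, field in rows:
        con.execute("INSERT INTO encounter VALUES (?)", (encounter_number,))
        entries = [s.strip() for s in DX_SEPARATORS.split(field) if s.strip()]
        con.executemany(
            "INSERT INTO diagnosis VALUES (?, ?)",
            [(encounter_number, dx) for dx in entries],
        )
    return con


def mean_diagnoses_per_encounter(con: sqlite3.Connection) -> float:
    """Average number of diagnoses per encounter; an empty field counts as 0."""
    (mean,) = con.execute(
        """
        SELECT AVG(n) FROM (
            SELECT COUNT(d.dx_text) AS n
            FROM encounter AS e
            LEFT JOIN diagnosis AS d ON d.encounter_number = e.encounter_number
            GROUP BY e.encounter_number
        )
        """
    ).fetchone()
    return mean


# Toy rows for illustration only (not study data).
con = load_export([
    ("E1", "bronchiolitis; hypoxia; trisomy 21"),
    ("E2", "asthma"),
    ("E3", ""),
])
print(mean_diagnoses_per_encounter(con))
```

The LEFT JOIN keeps encounters whose diagnosis field is empty, so they lower the average instead of silently dropping out of it.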

Data Sources:

Patients’ names and diagnoses were obtained from three sources: 1) students’ logs, 2) Patient Tracker, and 3) the patients’ charts.

Study Procedures:

Each patient’s name, encounter number, student’s name, and diagnosis(es) were retrieved from Patient Tracker and stored in an Access database. All student names and diagnoses entered during the hospital stay were included, not just the initial student name entered. Patient encounters were verified by reviewing the medical record for the student’s name on an admission history and physical examination, accept note, progress note, or discharge summary. Patients’ diagnoses were also identified from the medical chart. These were extracted from the attending physician’s assessment in the admission history and physical examination or accept note and from the primary and secondary diagnosis fields of the patient’s discharge orders. The discharge orders are typically completed by the medical student but must be reviewed and cosigned by the supervising resident or attending physician.
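The validation rule amounts to a set lookup: a (patient encounter, student) pair from Patient Tracker counts as validated when the student’s name appears on one of the qualifying note types. The authors reviewed charts manually; this is only a minimal sketch of the rule, and the `ChartNote` record, its `signer` field, the note-type labels, and the name normalization are all hypothetical.

```python
from dataclasses import dataclass

# Note types that, per the study procedure, can confirm that a student
# cared for a patient. The string labels themselves are hypothetical.
CONFIRMING_NOTE_TYPES = frozenset(
    {"history_and_physical", "accept_note", "progress_note", "discharge_summary"}
)


@dataclass(frozen=True)
class ChartNote:
    encounter_number: str
    note_type: str
    signer: str  # name appearing on the note (hypothetical field)


def normalize_name(name: str) -> str:
    """Collapse whitespace and ignore case so free-text names can be compared."""
    return " ".join(name.split()).casefold()


def validate_encounters(
    tracker_rows: list[tuple[str, list[str]]], notes: list[ChartNote]
) -> set[tuple[str, str]]:
    """Return the (encounter_number, student) pairs from Patient Tracker that
    are confirmed by a qualifying chart note. Every student name entered during
    the stay is checked, not only the first one."""
    signed = {
        (n.encounter_number, normalize_name(n.signer))
        for n in notes
        if n.note_type in CONFIRMING_NOTE_TYPES
    }
    return {
        (encounter_number, student)
        for encounter_number, students in tracker_rows
        for student in students
        if (encounter_number, normalize_name(student)) in signed
    }


rows = [("E1", ["Ann Lee", "Bo Kim"]), ("E2", ["Ann Lee"])]
notes = [
    ChartNote("E1", "progress_note", "ann  lee"),
    ChartNote("E1", "nursing_note", "Bo Kim"),  # not a confirming note type
    ChartNote("E2", "discharge_summary", "Cy Ng"),
]
print(validate_encounters(rows, notes))  # {('E1', 'Ann Lee')}
```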

All diagnosis data was in free-text format. To permit comparison, the diagnoses had to be translated into a standardized vocabulary. A preliminary list of 36 diagnoses was generated from the table of contents of Pediatric Hospital Medicine. This list was augmented with 4 additional codes for diagnoses commonly listed in the students’ logs and Patient Tracker, for a total of 40 categories. Two independent coders (SP and CM) performed this translation and resolved any initial differences through mutual agreement.
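Since two coders translated the free text independently and then reconciled their differences, a small helper that lists the items needing reconciliation is one way to support that step. In this sketch the category names and free-text examples are hypothetical (only the idea that RSV infection is coded as bronchiolitis comes from the Discussion), and raw percent agreement is computed only as a convenience; the study does not report an agreement statistic.

```python
def compare_coders(
    coder_a: dict[str, str], coder_b: dict[str, str], vocabulary: set[str]
) -> tuple[float, dict[str, tuple[str, str]]]:
    """Compare two coders' mappings from free-text diagnosis to category.

    Returns the raw percent agreement over items both coders coded, and the
    disagreements that still need to be resolved by consensus.
    """
    invalid = {c for c in (*coder_a.values(), *coder_b.values()) if c not in vocabulary}
    if invalid:
        raise ValueError(f"codes outside the vocabulary: {sorted(invalid)}")
    shared = sorted(coder_a.keys() & coder_b.keys())
    if not shared:
        raise ValueError("no items coded by both coders")
    disagreements = {t: (coder_a[t], coder_b[t]) for t in shared if coder_a[t] != coder_b[t]}
    return 1 - len(disagreements) / len(shared), disagreements


# Hypothetical subset of a 40-category vocabulary and hypothetical codings.
vocabulary = {"asthma", "bronchiolitis", "dehydration", "gastroenteritis", "pneumonia"}
coder_a = {
    "RSV infection": "bronchiolitis",
    "bronchiolitis": "bronchiolitis",
    "gastroenteritis with dehydration": "dehydration",
}
coder_b = {
    "RSV infection": "bronchiolitis",
    "bronchiolitis": "bronchiolitis",
    "gastroenteritis with dehydration": "gastroenteritis",
}
agreement, to_resolve = compare_coders(coder_a, coder_b, vocabulary)
print(round(agreement, 3), to_resolve)
```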

Statistical Analysis:

We evaluated the accuracy of the diagnostic information contained in the students’ logs and the Patient Tracker in comparison with the information abstracted from the patients’ charts using four different definitions of accuracy:

Accuracy 1: Percentage of encounters with at least 1 diagnosis from the student logs or Patient Tracker reported in the patients’ chart.

Accuracy 2: Average, across encounters, of the percentage of the diagnoses in the chart that were also reported in the student logs or Patient Tracker.

Complete Match: Percentage of encounters with complete concordance between diagnoses contained in the student logs or Patient Tracker and in the chart.

Complete Mismatch: Percentage of encounters with complete disagreement between the diagnoses listed in the student logs or Patient Tracker and those in the chart.
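Read literally, the four definitions reduce to set operations on the coded categories of each encounter, with the chart as the reference. The sketch below is one such reading, assuming every validated encounter has at least one chart diagnosis; the `CodedEncounter` type, the function name, and the example categories are hypothetical, and the sketch is not claimed to reproduce the exact counting rules behind Table 1.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CodedEncounter:
    """Coded diagnoses for one validated encounter."""
    source: frozenset[str]  # categories from the student log or Patient Tracker
    chart: frozenset[str]   # categories abstracted from the chart (reference)

    def __post_init__(self) -> None:
        if not self.chart:
            raise ValueError("assumes every validated encounter has a chart diagnosis")


def accuracy_measures(encounters: list[CodedEncounter]) -> dict[str, float]:
    """The four encounter-level measures, as proportions between 0 and 1."""
    if not encounters:
        raise ValueError("no encounters")
    n = len(encounters)
    overlap = [e.source & e.chart for e in encounters]
    return {
        # Accuracy 1: at least one source diagnosis also appears in the chart.
        "accuracy_1": sum(1 for o in overlap if o) / n,
        # Accuracy 2: mean share of each encounter's chart diagnoses found in the source.
        "accuracy_2": sum(len(o) / len(e.chart) for o, e in zip(overlap, encounters)) / n,
        # Complete match: identical sets of categories.
        "complete_match": sum(1 for e in encounters if e.source == e.chart) / n,
        # Complete mismatch: no category in common.
        "complete_mismatch": sum(1 for o in overlap if not o) / n,
    }


E = CodedEncounter
demo = [
    E(frozenset({"bronchiolitis"}), frozenset({"bronchiolitis"})),
    E(frozenset({"pneumonia", "asthma"}), frozenset({"asthma", "dehydration"})),
    E(frozenset({"fever"}), frozenset({"sepsis"})),
    E(frozenset({"bronchiolitis", "hypoxia", "trisomy 21"}),
      frozenset({"bronchiolitis", "hypoxia", "trisomy 21"})),
]
print(accuracy_measures(demo))
# {'accuracy_1': 0.75, 'accuracy_2': 0.625, 'complete_match': 0.5, 'complete_mismatch': 0.25}
```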

Fisher’s exact test was used to compare proportions, and the Wilcoxon rank-sum test was used for non-normally distributed data.
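The two-sided Fisher’s exact test for a 2×2 table can be computed directly from the hypergeometric distribution, which makes the proportion comparisons easy to check. This is a self-contained sketch; in practice a library routine such as SciPy’s `scipy.stats.fisher_exact` would normally be used. The usage line applies it to the Complete Mismatch counts reported in Table 1.

```python
from math import comb


def fisher_exact_two_sided(a: int, b: int, c: int, d: int) -> float:
    """Two-sided Fisher's exact test for the 2x2 table [[a, b], [c, d]].

    With the margins fixed, the top-left cell follows a hypergeometric
    distribution. The p-value sums the probabilities of every table that is
    no more likely than the observed one. Integer arithmetic keeps the
    "no more likely" comparison exact.
    """
    row1, row2, col1 = a + b, c + d, a + c

    def weight(x: int) -> int:
        # Unnormalized probability of the table whose top-left cell is x.
        return comb(row1, x) * comb(row2, col1 - x)

    observed = weight(a)
    support = range(max(0, col1 - row2), min(col1, row1) + 1)
    tail = sum(w for w in map(weight, support) if w <= observed)
    # The weights over the full support sum to comb(row1 + row2, col1).
    return tail / comb(row1 + row2, col1)


# Complete mismatch (Table 1): 9 of 677 Patient Tracker encounters
# vs. 102 of 601 student-log encounters.
p = fisher_exact_two_sided(9, 677 - 9, 102, 601 - 102)
print(f"p = {p:.1e}")
```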

The study was approved by the University of Utah’s Institutional Review Board.

Results

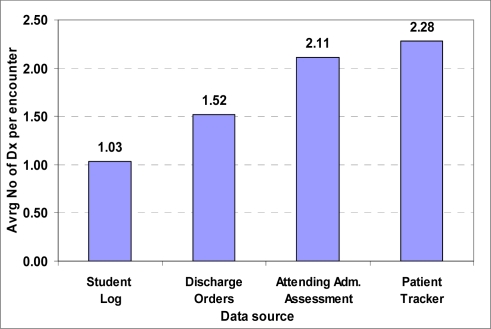

Sixty-four students rotated on the Glasgow Service between July 2004 and June 2005. All students submitted patient logs. Collectively, the student logs contained 601 encounters, 11 of which could not be validated in the patient’s chart. Before validation, Patient Tracker contained 783 encounters, 30% more than the student logs (783 vs. 601). Data from the patients’ charts was collected for a subset of 677 of these Patient Tracker encounters, all of which were validated. Only data from validated encounters was used in the subsequent analysis. The student logs had the lowest average number of diagnoses per encounter of the 4 data sources, while Patient Tracker had the highest (Figure 1). Compared with the student logs, the average number of diagnoses per encounter generated from Patient Tracker was significantly higher (2.28 vs. 1.03, p< 0.01).

Figure 1.

Average Number of Diagnoses per Encounter
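Diagnoses per encounter are counts, which is presumably where the Wilcoxon rank-sum test named in the Methods applies. The sketch below implements the usual large-sample form of the test (normal approximation with a tie correction and no continuity correction); the function name is hypothetical, and the per-encounter counts in the usage lines are made up for illustration, since the study’s encounter-level data are not reproduced here.

```python
import math


def rank_sum_test(x: list[float], y: list[float]) -> tuple[float, float, float]:
    """Wilcoxon rank-sum test (equivalent to the Mann-Whitney U test) using the
    normal approximation with a tie correction and no continuity correction.

    Returns (W, z, two-sided p), where W is the sum of the ranks of x.
    """
    if not x or not y:
        raise ValueError("both samples must be non-empty")
    n1, n2 = len(x), len(y)
    n = n1 + n2
    pooled = sorted([*x, *y])
    midrank: dict[float, float] = {}
    tie_sum = 0
    i = 0
    while i < n:
        j = i
        while j < n and pooled[j] == pooled[i]:
            j += 1
        # Tied values share the average of 1-based positions i+1 .. j.
        midrank[pooled[i]] = (i + 1 + j) / 2
        t = j - i
        tie_sum += t**3 - t
        i = j
    w = sum(midrank[v] for v in x)
    mean_w = n1 * (n + 1) / 2
    var_w = n1 * n2 / 12 * ((n + 1) - tie_sum / (n * (n - 1)))
    if var_w <= 0:
        raise ValueError("all observations are tied; the test is undefined")
    z = (w - mean_w) / math.sqrt(var_w)
    # Two-sided p-value from the standard normal: 2 * (1 - Phi(|z|)).
    return w, z, math.erfc(abs(z) / math.sqrt(2))


# Made-up diagnosis counts per encounter, for illustration only.
log_counts = [1, 1, 1, 2, 1, 0, 1, 1]
tracker_counts = [2, 3, 2, 1, 2, 3, 2, 4]
w, z, p = rank_sum_test(tracker_counts, log_counts)
print(f"W = {w}, z = {z:.2f}, p = {p:.3f}")
```

Diagnosis counts produce many ties (most encounters have one or two diagnoses), which is why the tie correction matters here; for very small samples an exact permutation test would be preferable to the normal approximation.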

When the diagnostic information in the student logs and Patient Tracker was assessed against the diagnoses in the patients’ charts, the diagnostic information from Patient Tracker was significantly more accurate than that from the student logs under every definition of accuracy. The diagnoses in the student logs and the chart were completely mismatched in 16.9% of encounters (102/601), compared with 1.3% of encounters (9/677) for Patient Tracker (p < 0.01). See Table 1.

Table 1.

Accuracy of Diagnoses Reported in the Student Logs and Patient Tracker as Compared to Data Extracted from the Patients’ Charts

| Measure | Patient Tracker vs. Charts | Student Logs vs. Charts | p-value |
|---|---|---|---|
| Accuracy 1 | 99.8% (676/677) | 95.7% (575/601) | < 0.01 |
| Accuracy 2 | 74.7% | 51.3% | < 0.01 |
| Complete Match | 49.8% (337/677) | 29.6% (178/601) | < 0.01 |
| Complete Mismatch | 1.3% (9/677) | 16.9% (102/601) | < 0.01 |

Discussion

The purpose of this study was to evaluate Patient Tracker as a tool for reporting medical students’ clinical encounters that relies neither on the students’ ability to recall and accurately report this information nor on their active participation.

Patient Tracker contained a larger number of encounters and, on average, generated more than twice as many diagnoses per encounter as the student logs. In addition, the diagnostic information generated from Patient Tracker was more accurate than the information reported by the students under multiple definitions of accuracy.

Patient Tracker is a real-time electronic system used by all members of the medical team, including medical students, in the daily management of patients. This software can also be used to report medical students’ clinical encounters during their clinical rotations.

The quality of medical students’ clinical rotations depends in large part on the number and types of patients students care for. The LCME, the accrediting body for undergraduate medical education, requires that faculty define the types of patients and clinical conditions students must encounter, monitor the students’ experiences, and ensure that all students have the required experiences.1 Dolmans et al.12 demonstrated that students’ logs can be used to improve the structure of clinical education.

Unfortunately, students’ self-reported logs have significant limitations. For example, in their comparison of students’ logs and dictated reports, Patricoski et al13 found that only 82.7% of dictated patients were included in students’ logs. Some authors have proposed incorporating information technology to improve these results. Lee et al3 used demographic data to match students’ logs recorded on handheld computers to patient charts and found that students recorded approximately 60% of their patients and the patients’ diagnoses. Although information technology may make it easier to transfer and analyze data, it is not clear that it eases data entry. Both paper and electronic logs rely on the students’ willingness to record encounters, ability to recognize diagnoses accurately, and/or ability to recall this information. Students may fail to report all encounters, may recall information carelessly or erroneously, and may even falsify data.

We hypothesized that using Patient Tracker to generate student logs would overcome these limitations because its use was already built into the students’ workflow rather than being an additional obligation. Students use Patient Tracker for sign-out, which gives them a direct benefit. In addition, the information in Patient Tracker was used by other members of the team, including the supervising second-year resident and attending physician, which provided some oversight of its accuracy. Similar passive systems reported in the literature include Sequist et al.’s extraction of information from the electronic medical record14 and Johnson et al.’s use of a computerized medical records system to track medical student activity.15

The complexity of diagnosis makes it difficult to have a single unitary definition of accuracy. Patients may have multiple diagnoses, including diagnoses describing the cause of their admission as well as diagnoses describing chronic conditions. For example, a patient could be diagnosed with hypoxia, bronchiolitis, and trisomy 21. Patients’ diagnoses may also evolve during the course of their hospitalization. Coding reduces some of this variability; for example, both respiratory syncytial virus infection and bronchiolitis would be coded as bronchiolitis. The residual, legitimate variability makes comparison difficult, so multiple measures of accuracy were generated to provide different ways of matching. Because the data in Patient Tracker was more accurate than the data in the student logs under every definition, the conclusions are more robust.

Student logs generated from Patient Tracker can be used both to improve the clinical rotation and to study educational interventions. A list of each student’s encounters could be generated midway through the rotation, and future patients or simulated learning exercises could then be assigned to increase the student’s likelihood of being exposed to core experiences. In addition, a number of studies, including our own study of the Glasgow Service,11 have made recommendations based on unvalidated student log data. Future educational research could incorporate this more reliable data.
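The midpoint review described above amounts to a set difference between a list of core experiences and the categories a student has already encountered. In this sketch the core list, the student identifiers, and the function name are hypothetical, and encounters are assumed to be coded in the same standardized vocabulary used for the comparison.

```python
def missing_core_experiences(
    encounters_by_student: dict[str, list[set[str]]], core: set[str]
) -> dict[str, list[str]]:
    """For each student, the core categories not yet seen in any encounter."""
    return {
        # set().union() with no arguments is empty, so students without
        # encounters are reported as missing every core category.
        student: sorted(core - set().union(*encounters))
        for student, encounters in encounters_by_student.items()
    }


core = {"asthma", "bronchiolitis", "dehydration", "pneumonia"}  # hypothetical core list
midpoint = {
    "student_a": [{"asthma"}, {"bronchiolitis", "hypoxia"}],
    "student_b": [],
}
print(missing_core_experiences(midpoint, core))
# {'student_a': ['dehydration', 'pneumonia'], 'student_b': ['asthma', 'bronchiolitis', 'dehydration', 'pneumonia']}
```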

Limitations: This study has a number of limitations including the following:

Not all encounters documented in Patient Tracker were validated and analyzed, because of time constraints. These, however, represent only 12% of the total encounters, and there is no reason to believe that the diagnostic data in these encounters is substantially less accurate.

While the number of encounters reported in the students’ logs and Patient Tracker was validated using the patients’ charts, the charts were not surveyed for encounters not reported in these other sources. The equivalent of a “false negative” rate, therefore, cannot be reported.

Diagnostic data in the students’ logs, Patient Tracker, and the patients’ charts are entered as free text and had to be translated into a standardized vocabulary to permit comparison. Restricting the vocabulary to 40 categories, and the particular categories chosen, may have limited discrimination among diagnoses.

Patient Tracker relies on local information technology infrastructure, and may not be exportable to other sites. The software could, however, be readily modified to accommodate other clerkship structures. For example, the intern and/or medical student field could be divided into two separate fields.

Future software enhancement:

Patient Tracker could be further improved by having diagnoses entered in, or translated into, a standardized vocabulary. Existing standardized vocabularies of diagnoses include the International Classification of Diseases, Ninth Revision, Clinical Modification (ICD-9-CM) codes16 and SNOMED Clinical Terms.17,18 Diagnoses could be entered directly in these vocabularies using structured data entry such as pull-down lists or auto-fill data elements. Alternatively, diagnoses entered as free text could be converted using natural language processing. Modifications such as these would provide greater uniformity and allow real-time monitoring of students’ experiences.
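An auto-fill data element of the kind mentioned can be as simple as a case-insensitive prefix search over a sorted list of standardized terms. The class name and the terms below are hypothetical display labels, not an excerpt of ICD-9-CM or SNOMED CT, and a production picker would also need to store the underlying code for each term.

```python
import bisect


class TermAutocomplete:
    """Case-insensitive prefix lookup over a list of standardized diagnosis terms."""

    def __init__(self, terms: list[str]) -> None:
        entries = sorted((t.casefold(), t) for t in terms)
        self._keys = [key for key, _ in entries]
        self._terms = [term for _, term in entries]

    def suggest(self, typed: str, limit: int = 5) -> list[str]:
        prefix = typed.casefold()
        # Terms sharing the prefix form one contiguous run in sorted order,
        # so a binary search finds where that run starts.
        start = bisect.bisect_left(self._keys, prefix)
        out: list[str] = []
        for i in range(start, len(self._keys)):
            if len(out) == limit or not self._keys[i].startswith(prefix):
                break
            out.append(self._terms[i])
        return out


# Hypothetical display terms for illustration only.
picker = TermAutocomplete([
    "Asthma",
    "Acute bronchiolitis",
    "Bronchiolitis due to RSV",
    "Bronchopulmonary dysplasia",
    "Pneumonia",
])
print(picker.suggest("bron"))  # ['Bronchiolitis due to RSV', 'Bronchopulmonary dysplasia']
```

Matching only at the start of a term keeps each lookup to a binary search plus a short scan, but it misses entries such as “Acute bronchiolitis” when the user types “bron”; indexing the start of every word in a term would be a natural extension.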

Conclusion

Patient Tracker captures a larger number of encounters compared to student logs and also contains more accurate diagnostic information under multiple definitions of accuracy.

References

- 1. Change to Standard on the Criteria for the Types of Patients and Clinical Conditions Encountered by Students. Education Liaison Committee on Medical Education. Current LCME Accreditation Standards 2007. Available at http://www.lcme.org/standard.htm

- 2. Withy K. An inexpensive patient-encounter log. Acad Med. 2001;76(8):860–862. doi: 10.1097/00001888-200108000-00024.

- 3. Lee J, Sineff S, Sumner W. Validation of electronic student encounter logs in an emergency medicine clerkship. Proc AMIA Symp. 2002:425–429.

- 4. Rattner S, Louis D, Rabinowitz C, Gottlieb J, Nasca T, Markham F, Gottlieb R, Caruso J, Lane J, Veloski J, Hojat M, Gonnella J. Documenting and comparing medical students' clinical experiences. JAMA. 2001;286(9):1035–40. doi: 10.1001/jama.286.9.1035.

- 5. Sumner W. Student documentation of multiple diagnoses in family practice patients using a handheld student encounter log. Proc AMIA Symp. 2001:687–90.

- 6. Shaw G, Clark J, Morewitz S. Implementation of computerized student-patient logs in podiatric medical education. J Am Podiatr Med Assoc. 2003;93(2):150–6. doi: 10.7547/87507315-93-2-150.

- 7. Butterfield P, Libertin A. Learning outcomes of an ambulatory care rotation in internal medicine for junior medical students. J Gen Intern Med. 1993;8(4):189–92. doi: 10.1007/BF02599265.

- 8. Carney P, Pipas C, Eliassen M, Donahue D, Kollisch D, Gephart D, Dietrich A. An encounter-based analysis of the nature of teaching and learning in a 3rd-year medical school clerkship. Teach Learn Med. 2000;12(1):21–7. doi: 10.1207/S15328015TLM1201_4.

- 9. Worley P, Prideaux D, Strasser R, March R, Worley E. What do medical students actually do on clinical rotations. Med Teach. 2004;26(7):594–8. doi: 10.1080/01421590412331285397.

- 10. Maloney C, Wolfe D, Gesteland P, Hales J, Nkoy F. A Tool for Improving Patient Discharge Process and Hospital Communication Practices: the Patient Tracker. Proc AMIA Symp. 2007:493–97.

- 11. Antommaria A, Firth S, Maloney C. Evaluation of an innovative pediatric clerkship structure using multiple outcome variables including career choice. J Hosp Med. 2007;2(6):401–8. doi: 10.1002/jhm.250.

- 12. Dolmans D, Schmidt A, Van Der Beek J, Beintema M, Gerver W. Does a student log provide a means to better structure clinical education. Med Educ. 1999;33(2):89–94. doi: 10.1046/j.1365-2923.1999.00285.x.

- 13. Patricoski C, Shannon K, Doyle G. The accuracy of patient encounter logbooks used by family medicine clerkship students. Fam Med. 1998;30(7):487–9.

- 14. Sequist T, Singh S, Pereira A, Rusinak D, Pearson S. Use of an electronic medical record to profile the continuity clinic experiences of primary care residents. Acad Med. 2005;80(4):390–4. doi: 10.1097/00001888-200504000-00017.

- 15. Johnson V, Michener J. Tracking medical students' clinical experiences with a computerized medical records system. Fam Med. 1994;26(7):425–7.

- 16. International Classification of Diseases, 9th revision, Clinical Modification (ICD-9-CM). CDC, NCHS; 2007. Available at http://www.cdc.gov/nchs/about/otheract/icd9/abticd9.htm

- 17. Donnelly K. SNOMED-CT: The advanced terminology and coding system for eHealth. Stud Health Technol Inform. 2006;121:279–90.

- 18. Wasserman H, Wang J. An applied evaluation of SNOMED CT as a clinical vocabulary for the computerized diagnosis and problem list. AMIA Annu Symp Proc. 2003:699–703.