Abstract

Does feeling an emotion require changes in autonomic responses, as William James proposed? Can feelings and autonomic responses be dissociated? Findings from cognitive neuroscience have identified brain structures that subserve feelings and autonomic responses, including those induced by emotional music. In the study reported here, we explored whether feelings and autonomic responses can be dissociated by using music, a stimulus that has a strong capacity to induce emotional experiences. We tested two brain regions predicted to be differentially involved in autonomic responsivity (the ventromedial prefrontal cortex) and feeling (the right somatosensory cortex). Patients with damage to the ventromedial prefrontal cortex were impaired in their ability to generate skin conductance responses to music, but generated normal judgments of their subjective feelings in response to music. Conversely, patients with damage to the right somatosensory cortex were impaired in their self-rated feelings in response to music, but generated normal skin conductance responses to music. Control tasks suggested that neither impairment was due to basic defects in hearing the music or in cognitively recognizing the intended emotion of the music. The findings provide evidence for a double dissociation between feeling emotions and autonomic responses to emotional music.

The mechanisms whereby sensory experience can result in changes in emotion and mood have been a topic of intense recent interest in cognitive neuroscience (Allen & Coan, 2007; J. Borod, 2000; Cacioppo, Tassinary, & Berntson, 2007; Dalgleish, 2004; Davidson, Goldsmith, & Scherer, 2003; Feldman-Barrett, Niedenthal, & Winkielman, 2005). Much of this interest derives from the goal of obtaining a more detailed account of the different components of emotion and their implementation in the brain. At the outset, it is important to acknowledge that there is no unanimous agreement on what the “components” of emotion are, and that many different aspects of emotion can be distinguished. Here we follow the framework that is perhaps most common within cognitive neuroscience, and one we have used in our prior work (Tsuchiya & Adolphs, 2007), which makes a primary distinction between the conscious experience of an emotion (feeling), its expression (response), and semantic knowledge about it (recognition). The current view in cognitive neuroscience regarding the substrates of the first two is essentially a modern update and correction of William James' earlier views (James, 1884): feeling (emotion experience) draws upon representations of changes in one's own body (Damasio, 1994; Craig, 2002), whereas emotional responses (somatic, physiological, and behavioral reactions) can occur in the absence of conscious experience of the emotion and can in turn form the basis for feelings. Studies of emotion recognition, the aspect most commonly investigated in experimental psychology and neuroscience, simply ask subjects to decode the meaning of an emotional signal, such as a facial expression or a piece of music, and there are typically multiple strategies available for doing so. Here we focus on the first two of these components: feeling emotions (assessed through verbal report) and emotional response (assessed through psychophysiology).

Another source of interest lies in explaining how these different components interact causally. One of the most widely known theories of emotion dates back to the psychologist William James, who argued that seeing a stimulus, such as a bear charging at us, would first trigger emotional responses (increases in sympathetic autonomic activity, increased somatomotor activity, etc. associated with sprinting away from the bear), and that the perception of these changes in internal body states would subsequently form the basis for our conscious experience of the emotion (James, 1884).

James' theory has been the source of considerable debate that continues to the present day. A notable resurrection of James' theory is based on the finding that a failure to trigger autonomic responses to emotional contexts is correlated with impaired decision-making and social behavior (A. R. Damasio, 1994), and on the finding that there are dedicated afferent channels for conveying somatic visceral information to the brain (Craig, 2002). Damasio's “somatic marker hypothesis” (A. R. Damasio, 1996), and his broader theoretical framework for thinking about emotion (A. R. Damasio, 1999), argue that interoception of changes in body state forms the substrate of feelings. In other words, our perception of changes in our own body contributes to the content of conscious experiences of emotion: feeling sad is feeling a constriction in one's chest and throat, feeling afraid is feeling tenseness in one's body, and so on. These theoretical considerations are underpinned by neuroanatomical details provided by functional neuroimaging and lesion studies. Several regions have been highlighted as important to particular components of emotion: the hypothalamus and brainstem nuclei for efferent autonomic control, the amygdala and ventromedial prefrontal cortex for higher-order autonomic control, and the insula and right somatosensory-related cortices for the conscious experience of the emotion (see Craig, 2002; Tsuchiya & Adolphs, 2007).

The ventromedial prefrontal cortex is arguably one of the most celebrated of these structures. It is the region on which Damasio's somatic marker hypothesis is focused, and the region damaged in the famous historical case of Phineas Gage (H. Damasio, Grabowski, Frank, Galaburda, & Damasio, 1994). Gage's case first suggested, and a large number of modern lesion and neuroimaging studies have confirmed, that this region of the prefrontal cortex is important for triggering emotional responses of various kinds to varied stimuli. A particularly clear example comes from skin conductance responses to emotionally salient stimuli: these can be triggered by direct electrical stimulation of this brain region, are impaired by lesions to it (A. R. Damasio, Tranel, & Damasio, 1990; Tranel & H. Damasio, 1994), and correlate with its activation in fMRI studies (Critchley, Elliott, Mathias, & Dolan, 2000). This region has also been implicated in other aspects of autonomic and behavioral emotional response, such as changes in heart rate, freezing, and blood pressure (Kaada et al., 1949; Hall et al., 1977; Al Maskati et al., 1989; Critchley et al., 2000). Indeed, so profound are these effects that electrical stimulation of the ventromedial prefrontal cortex can cause damage to the heart (Hall et al., 1977). In humans, direct electrical stimulation of this region causes changes in respiration, heart rate, and pupil dilation, all indices of autonomic arousal (Livingston, 1948). There is thus very substantial evidence linking the ventromedial prefrontal cortex to autonomic components of emotional responses.

The neural substrates of emotional experience (feeling) have been difficult to pin down. Regions of right parietal cortex have long been thought to play a role in emotion, although the details of this role have been debated (J. Borod, 1992; J. C. Borod, 1993). Lesion studies have found that damage to the right hemisphere can impair verbal reports of emotional experience but leave explicit judgments of the emotional meaning of stimuli relatively intact (J. C. Borod et al., 1996), suggesting a role in the social communication of emotion (Blonder, Bowers, & Heilman, 1991). Impaired processing of emotional sensory stimuli following right hemisphere damage is found across sensory modalities and across different kinds of emotions (J. C. Borod et al., 1998).

We have previously shown that lesions encompassing somatosensory-related cortices in the right hemisphere impair the recognition of emotion from facial expressions. That study involved a large number of lesion subjects and suggested that the right insula, somatosensory cortex (SI and SII), and adjacent polymodal regions played a role in emotion recognition (Adolphs, Damasio, Tranel, Cooper, & Damasio, 2000). More recently, these findings have been confirmed with fMRI (Winston, O'Doherty, & Dolan, 2003), and a large literature now implicates the insula in emotion experience (Carr, Iacoboni, Dubeau, Mazziotta, & Lenzi, 2003; Critchley, Wiens, Rotshtein, Öhman, & Dolan, 2004; Singer et al., 2004). Of particular interest is a framework proposed by Craig, which argues for dedicated afferent interoceptive somatosensory channels that convey signals about the state of the body ultimately to the right insula (Craig, 2002). Craig suggests that the right insula is a key substrate for the conscious awareness of emotional feelings, and that this role may be unique to primates.

The above empirical findings fit well with theoretical accounts of emotion (Adolphs, 2002; A. R. Damasio, 1999) and are consistent with William James' original idea. In this framework, emotionally salient sensory stimuli are processed by primary sensory and association cortices with respect to their meaning, and are then associated specifically with their rewarding or punishing value in the ventromedial prefrontal cortex, which serves as a high-level trigger for autonomic emotional responses to such stimuli. The right somatosensory-related cortex, on the other hand, represents changes in body state, including those triggered by the ventromedial prefrontal cortex, and as such generates emotional experience. It is important to note that each of these regions encompasses a tightly connected network of structures, and that lesions, which usually include subjacent white matter, typically compromise the entire network. Thus, there is a limbic-somatosensory network that connects primary and secondary somatosensory cortices with the insula and amygdala (Friedman, Murray, O'Neill, & Mishkin, 1986), and a medial prefrontal network that interconnects ventromedial prefrontal cortices, anterior cingulate cortex (Öngür & Price, 2000), and subcortical structures involved in emotional response (Öngür, An, & Price, 1998).

While we have emphasized the role of the ventromedial prefrontal cortex in autonomic components of emotional response, and right somatosensory-related cortices in emotional experience, many studies have found both these regions to be involved in both aspects of emotion (Adolphs, 2002). This has left William James' theory of uncertain status: it remains debated, and there is still no unambiguously conclusive evidence to support or refute it. Nonetheless, there have been hints that emotional response and experience can be dissociated. For instance, Keillor, Barrett, Crucian, Kortenkamp, & Heilman (2002) reported a patient who appeared to experience normal emotional feelings despite impaired ability to express emotions due to facial paralysis. Also, there are well documented cases of pathological emotional reactions in the absence of emotional experience (e.g., Parvizi, Anderson, Martin, Damasio, & Damasio, 2001). Given the evidence to date, we hypothesized that ventromedial prefrontal cortex and right somatosensory-related cortex would be most critical for autonomic responses (an aspect of emotional response) and experience of emotions (feelings), respectively. We examined the role of each brain region for autonomic responses and experience of emotions using music as the emotional stimulus.

Music is one of the most potent and universal stimuli for mood induction, an observation that has not been lost on clinicians who wish to use music therapy with neurologically or psychiatrically ill patients. Yet little is known about the neural substrates of experiencing emotion from music. Most studies of the neural mechanisms of music processing have focused on the perception of specific musical features (such as consonance and dissonance; see reviews in Avanzini, Faienza, Minciacchi, Lopez, & Majno, 2003; Peretz & Zatorre, 2005), whereas fewer studies have examined the experience of emotion from music (e.g., Blood & Zatorre, 2001; see Peretz, 2001, for review and discussion).

The present study extends previous research by using the lesion method to investigate how the brain processes emotional experiences from music, including both autonomic responses and subjective feelings. We asked neurological patients with focal brain lesions, as well as healthy comparison participants, to listen to music clips designed to evoke strong emotions. We first validated the efficacy of our stimuli in healthy participants, and then tested the emotion processing ability of the neurological groups in an experiment that probed perception (hearing), recognition of emotion, autonomic responses to emotionally laden music stimuli (skin conductance responses), and subjective feelings from music. The specific predictions from the overall hypothesis were as follows: (1) patients with ventromedial prefrontal cortex lesions would have impaired (i.e., reduced) autonomic responses to emotional music, but normal subjective reports of emotional experience induced by music; (2) patients with right somatosensory cortex lesions would have impaired (i.e., reduced) subjective reports of emotional experience induced by music, but normal autonomic responses to emotional music. In summary, we predicted a double dissociation between autonomic responses to emotional music and subjective experience of emotion from music.

Method

Participants

We tested two groups of neurological patients with focal brain lesions, selected from the Cognitive Neuroscience Patient Registry at the University of Iowa to test the predictions outlined in the Introduction: 10 patients with damage to the ventromedial prefrontal cortex (VMPFC) and 15 patients with damage to the right somatosensory cortex (RSS). The patient groups were compared with a neurologically and psychiatrically healthy normal comparison group (NC, N = 20). The lesions had the following causes: VMPFC, surgical treatment of a ruptured anterior communicating artery aneurysm (5) or benign tumor resection (5); RSS, cerebrovascular accident (11) or benign tumor resection (4). For inclusion in the study, lesion participants had to have a focal, stable, acquired lesion, neuroanatomical data available to confirm lesion location, and no other history of neurological or psychiatric disease. Participants were excluded if they had previous professional musical experience or significant musical training (e.g., involvement in music lessons or organizations since childhood and formal music courses in college), a score above 20 (moderate depressive symptoms) on the Beck Depression Inventory-II (Beck, Steer, & Brown, 1996), or an inability to hear the stimuli, based either on self-report or on a measured hearing loss greater than 55 decibels averaged across frequencies. Hearing was assessed with a Grason-Stadler, Inc. (GSI) 17 audiometer, which presented pure tones at frequencies between 250 and 8000 Hz separately to each ear through headphones. The lowest sound pressure level at which participants could detect each frequency was recorded, and the mean hearing threshold was calculated by averaging these thresholds across all frequencies and both ears.
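The hearing and mood screening described above reduces to an average and a few threshold checks. The following Python sketch assumes a hypothetical set of six audiometric test frequencies (the text specifies only the 250 to 8000 Hz range) and uses hypothetical function names; it illustrates the stated criteria and is not the study's own scoring procedure.

```python
from statistics import mean

# Hypothetical test frequencies: the study states only that pure tones between
# 250 and 8000 Hz were presented separately to each ear.
FREQUENCIES_HZ = (250, 500, 1000, 2000, 4000, 8000)
MAX_MEAN_HEARING_LOSS_DB = 55.0  # exclude if mean hearing loss exceeds 55 dB
MAX_BDI_II_SCORE = 20            # exclude if BDI-II score is above 20


def mean_hearing_threshold(left_db, right_db):
    """Average the lowest detected level (dB) over all frequencies and both ears."""
    return mean(list(left_db.values()) + list(right_db.values()))


def passes_screening(left_db, right_db, bdi_ii, significant_musical_training,
                     reports_hearing_problem=False):
    """Return True if none of the stated exclusion criteria applies."""
    if significant_musical_training or reports_hearing_problem:
        return False
    if bdi_ii > MAX_BDI_II_SCORE:
        return False
    return mean_hearing_threshold(left_db, right_db) <= MAX_MEAN_HEARING_LOSS_DB
```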

Seven participants did not show skin conductance responses to the control stimuli (they failed to show the conventional minimum change of 0.05 microsiemens; Dawson et al., 2000) and were omitted from skin conductance analyses (3 NC, 2 VMPFC, 1 RSS). Three participants were consistent outliers on skin conductance (values more than 2 standard deviations above the group mean during the baseline condition or during more than half of the music clips) and were excluded from further analyses (1 VMPFC, 2 RSS). These outlying values appeared to be caused by movement artifact.
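The non-responder and outlier rules can be sketched in a few lines of Python. This is a minimal illustration under two stated assumptions: the group mean and standard deviation are computed over all participants in the group, including the one being tested, and "more than half of the music clips" means strictly more than half of the clips supplied. The function names are hypothetical.

```python
from statistics import mean, stdev

MIN_SCR_AMPLITUDE_US = 0.05  # conventional minimum response, in microsiemens


def is_nonresponder(control_amplitudes_us):
    """True if no control stimulus (deep breath, tones) evoked a response >= 0.05 uS."""
    return all(a < MIN_SCR_AMPLITUDE_US for a in control_amplitudes_us)


def outlier_flags(values):
    """Flag values more than 2 SD above the mean of the same group (assumption:
    the tested participant is included in the mean and SD)."""
    m, sd = mean(values), stdev(values)
    return [v > m + 2 * sd for v in values]


def consistent_outliers(baseline, clips):
    """baseline: one value per participant; clips: one list per music clip,
    each holding one value per participant. Returns indices of participants
    who are outliers at baseline or on more than half of the clips."""
    base_flags = outlier_flags(baseline)
    clip_flags = [outlier_flags(clip) for clip in clips]
    result = []
    for i in range(len(baseline)):
        n_clip_outliers = sum(flags[i] for flags in clip_flags)
        if base_flags[i] or n_clip_outliers > len(clips) / 2:
            result.append(i)
    return result
```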

Both healthy and neurological participants were recruited from existing registries maintained in the Department of Neurology at the University of Iowa: the Patient Registry noted above for the neurological participants, and a similar registry of healthy comparison subjects for the healthy participants. All participants gave informed consent for participation in the research in accordance with institutional and federal guidelines.

Measures and Procedure

Music stimuli

Emotional music clips were selected from film soundtracks because this music is often composed or selected to produce emotions and uses musical conventions that reliably and rapidly elicit specific emotional responses (A. J. Cohen, 2001; Gorbman, 1987). An initial set of 40 music clips was selected that had no lyrics and had musical features consistent with specific emotions (e.g., major mode and fast tempo for happy music). The original emotion categories selected for the music clips were happiness, sadness, anger, and fear, because these are widely considered basic emotions (e.g., Ekman, 1999) and have been used as emotion categories in a previous study that used music as an emotional stimulus in our laboratory (Gottselig, 2001). Anger was later excluded as an emotion category due to the nonspecific responses participants provided (the original “angry” clips were frequently rated as both eliciting anger and fear).

A sample of 131 undergraduate participants (75% female) rated these initial 40 clips, and we selected the 12 clips (4 happy, 4 sad, and 4 fearful) with the highest mean intensity and least variability in the ratings (further details in doctoral dissertation, Johnsen, 2004; see Table 1). For the present study, these music clips were recorded from compact discs and digitized on a Macintosh computer at a 44,100 Hz sampling rate using SoundEdit16.
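The selection criterion ("highest mean intensity and least variability") does not specify how the two criteria were combined. The Python sketch below assumes one possible rule: within each emotion, clips are ranked by highest mean intensity rating, with ties broken by lowest standard deviation. The data layout and function name are hypothetical.

```python
from statistics import mean, stdev


def select_clips(ratings_by_clip, clip_emotion, per_emotion=4):
    """ratings_by_clip: clip id -> intensity ratings for its intended emotion.
    clip_emotion: clip id -> intended emotion category.
    Keeps the top `per_emotion` clips per emotion, ranked by highest mean
    rating and then by lowest SD (an assumed way of combining the criteria)."""
    selected = {}
    for emotion in sorted(set(clip_emotion.values())):
        clips = [c for c, e in clip_emotion.items() if e == emotion]
        clips.sort(key=lambda c: (-mean(ratings_by_clip[c]), stdev(ratings_by_clip[c])))
        selected[emotion] = clips[:per_emotion]
    return selected
```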

Table 1.

Music Clips

| Emotion of music | Soundtrack | Title of clip | Length of clip (seconds) | Dominant tempo (beats/minute) | Presentation order (1-12) |
|---|---|---|---|---|---|
| Happy (H1) | Charlie! | Cancan à Paris Boulevard | 138 | 115 | 1 |
| Happy (H2) | A Midsummer Night's Sex Comedy | Vivace non troppo | 122 | 124.5 | 3 |
| Happy (H3) | Gone with the Wind | Mammy | 140 | 85.3 | 6 |
| Happy (H4) | Dances with Wolves | The Buffalo Hunt | 162 | 105 | 10 |
| Sad (S1) | Vertigo | Madeleine and Carlotta's Portrait | 99 | 65 | 5 |
| Sad (S2) | Backdraft | Brothers | 140 | 44 | 7 |
| Sad (S3) | Out of Africa | Alone on the Farm | 149 | 68 | 8 |
| Sad (S4) | Spartacus | Blue Shadows and Purple Hills | 74 | 58 | 11 |
| Fear (F1) | Dangerous Liaisons | Tourvel's Flight | 101 | 61 | 2 |
| Fear (F2) | Crimson Tide | Alabama | 130 | 42.5 | 4 |
| Fear (F3) | Vertigo | Vertigo Prelude and Rooftop | 100 | 60 | 9 |
| Fear (F4) | Henry V | The Battle of Agincourt | 124 | 115.3 | 12 |

Skin conductance

Skin conductance was chosen as a primary psychophysiology dependent variable, given previous evidence of reliable skin conductance changes in response to music (Gomez & Danuser, 2004; Khalfa, Peretz, Blondin, & Robert, 2002; Krumhansl, 1997). The specific dependent measure used in this study was skin conductance area under the curve. Ag-AgCl electrodes were attached to the thenar and hypothenar areas on the palm of the non-dominant hand as described previously (Tranel & Damasio, 1994). A multichannel polygraph was used to collect skin conductance data at 1 kHz and data were subsequently analyzed using the AcqKnowledge MP100WS system (BIOPAC Systems, Inc.). After sitting quietly for a few minutes, baseline resting skin conductance measurements were recorded. Evoked skin-conductance responses were then measured to two control stimuli: orienting responses (elicited by the subject taking a deep breath) and responses evoked by emotionally neutral auditory stimuli (an ascending and descending tone sequence that lasted one minute and was presented through headphones). The auditory tones were used as the baseline for later group comparisons.

Musical emotion ratings

Music clips were presented through Bose noise-canceling headphones using a Macintosh computer. Three sample music clips were played to familiarize participants with the procedure. Participants were given the opportunity to ask questions, and then the music task began. The 12 target music clips were presented in a fixed randomized order (see Table 1) at a fixed volume level that pilot participants (similar in age to the patient sample) had indicated was comfortable. All participants found this level comfortable and comparable to that at which they would normally listen to music. Participants were seated in a comfortable chair facing away from the investigator, who monitored the psychophysiology equipment throughout the session. Participants were given the following information about the study: “This is a research study that looks at how the brain processes emotion from music. In this study you will first complete some questionnaires and then we'll be doing a hearing test. After this, I'll attach some sensors to you that measure physical responses and you'll spend most of the session listening to music clips and providing ratings about your emotional responses.”

Participants completed a questionnaire immediately following each music clip that assessed feelings of happiness, sadness, and fear in response to the music on a scale from 0 “not at all (happy, sad, fearful)” to 9 “very (happy, sad, fearful).” Participants were specifically instructed to rate how they themselves felt from listening to the music. The derived measures from these data included mean experienced emotional intensity for the intended emotion, and specificity of experienced emotion (mean ratings of the 2 non-intended emotions subtracted from the rating for the intended emotion). As a measurement of the cognitive ability to recognize emotion signaled by music, participants were asked to categorically select the one word (happy, sad, fear, or neutral) that best reflected what emotion they believed the music was intended to express, regardless of how they felt during the music. The questionnaire took approximately one minute to complete and the next clip was presented immediately thereafter.
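The derived measures can be computed per clip and then averaged within each emotion category. In this Python sketch the data layout (a dictionary of 0 to 9 ratings per clip) and the function names are assumptions; the specificity formula follows the definition given above, and the recognition score counts matches between the chosen label and the intended emotion.

```python
from statistics import mean

EMOTIONS = ("happy", "sad", "fear")


def clip_scores(ratings, intended):
    """ratings: emotion -> 0-9 rating of how the listener felt during one clip.
    Returns (intensity, specificity) for the clip's intended emotion."""
    intensity = ratings[intended]
    non_intended = [ratings[e] for e in EMOTIONS if e != intended]
    return intensity, intensity - mean(non_intended)


def category_means(trials):
    """trials: list of (intended_emotion, ratings) pairs for one participant.
    Averages intensity and specificity over the clips in each emotion category."""
    out = {}
    for emotion in EMOTIONS:
        scores = [clip_scores(r, e) for e, r in trials if e == emotion]
        out[emotion] = (mean(s[0] for s in scores), mean(s[1] for s in scores))
    return out


def recognition_score(chosen, intended):
    """Number of clips whose chosen label matches the intended emotion."""
    return sum(c == i for c, i in zip(chosen, intended))
```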

Background information

The Beck Depression Inventory-II was administered and participants with significant current depression were excluded as noted above. Musical background was assessed through questions about any professional musical experience, music education background, and participation in musical organizations in high school, college, and in the community. A background questionnaire was given to the normal comparison group to gather demographic information, including age, sex, race, handedness, and education. This information was already available for participants in the lesion groups due to their previous participation in other studies within the same laboratory.

Statistical Analyses

Background variables

Group differences in demographic variables, mood, and musical background were assessed using one-way ANOVAs for continuous variables (e.g., age, education) and chi-square tests for categorical variables (e.g., sex). To verify the efficacy of our selected stimuli, paired t-tests assessed whether the normal comparison group provided ratings in the expected direction for music with different intended emotions.
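For reference, the paired-sample t statistic is the mean of the within-participant differences divided by its standard error, with n - 1 degrees of freedom. The Python sketch below computes only the statistic; p values come from the t distribution (for example, `scipy.stats.ttest_rel` performs the full test). The sign depends only on argument order, so entering the lower-valued condition first yields a negative t.

```python
from math import sqrt
from statistics import mean, stdev


def paired_t(x, y):
    """Paired-sample t statistic for the differences x[i] - y[i], with df = n - 1."""
    if len(x) != len(y):
        raise ValueError("paired samples must have equal length")
    diffs = [a - b for a, b in zip(x, y)]
    n = len(diffs)
    t = mean(diffs) / (stdev(diffs) / sqrt(n))
    return t, n - 1
```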

Psychophysiology

AcqKnowledge 3.7 software was used for post-acquisition mathematical transformations. Data were detrended (corrected for direct current drift), smoothed with a median filter to eliminate high-frequency noise, and area under the curve (AUC) was calculated as the positive time integral of the response amplitude, per minute. Paired-sample t-tests analyzed whether skin conductance area under the curve was higher during music in each emotion category (happy, sad, and fear) in comparison to skin conductance AUC during the neutral baseline tones condition.
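The post-acquisition steps can be approximated in plain Python. This sketch is not the AcqKnowledge implementation: it assumes that drift correction means subtracting a least-squares straight line, uses a hypothetical 5-sample median-filter window, integrates the positive part of the cleaned signal with the trapezoid rule, and divides by the recording duration in minutes. The 1 kHz default sampling rate matches the acquisition rate reported for the skin conductance recordings.

```python
from statistics import median


def linear_detrend(signal):
    """Subtract the least-squares straight line (assumed model of DC drift)."""
    n = len(signal)
    x_mean = (n - 1) / 2
    y_mean = sum(signal) / n
    sxx = sum((x - x_mean) ** 2 for x in range(n))
    sxy = sum((x - x_mean) * (y - y_mean) for x, y in enumerate(signal))
    slope = sxy / sxx
    intercept = y_mean - slope * x_mean
    return [y - (intercept + slope * x) for x, y in enumerate(signal)]


def median_filter(signal, window=5):
    """Sliding median over an odd-length window, truncated at the edges."""
    half = window // 2
    return [median(signal[max(0, i - half):i + half + 1]) for i in range(len(signal))]


def auc_per_minute(signal_us, fs_hz=1000.0, window=5):
    """Positive time integral (uS x s) of the cleaned signal per minute of recording."""
    if len(signal_us) < 2:
        raise ValueError("need at least two samples")
    cleaned = median_filter(linear_detrend(signal_us), window)
    positive = [max(v, 0.0) for v in cleaned]  # keep only the positive part
    dt = 1.0 / fs_hz
    area = sum((a + b) / 2 * dt for a, b in zip(positive, positive[1:]))  # trapezoid rule
    minutes = (len(signal_us) - 1) * dt / 60.0
    return area / minutes
```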

Emotion recognition

The cognitive task that asked participants to categorize the intended emotion in the music was analyzed by using non-parametric Kruskal-Wallis tests to assess group differences in number of “correct” responses per musical emotion (ranging from 0 to 4 correct responses per emotion category). Non-parametric tests were used because the responses were not normally distributed.
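A two-group Kruskal-Wallis test, as used for the NC versus lesion-group comparisons, yields an H statistic referred to a chi-square distribution with 1 degree of freedom. The following self-contained Python sketch implements the standard tie-corrected statistic, with a series expansion of the regularized incomplete gamma function for the chi-square upper tail; in practice `scipy.stats.kruskal` provides the same test. Function names here are illustrative.

```python
from collections import Counter
from math import exp, lgamma, log


def chi2_sf(x, df):
    """Upper-tail chi-square probability via the series for the regularized
    lower incomplete gamma function P(df/2, x/2)."""
    if x <= 0:
        return 1.0
    s, z = df / 2.0, x / 2.0
    term = total = 1.0 / s
    k = 1
    while term > 1e-15 * total:
        term *= z / (s + k)
        total += term
        k += 1
    lower = total * exp(s * log(z) - z - lgamma(s))
    return max(0.0, 1.0 - lower)


def average_ranks(values):
    """1-based ranks; tied values share the mean of the positions they occupy."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1
        i = j + 1
    return ranks


def kruskal_wallis(*groups):
    """Return (H, df, p) with the standard correction for ties."""
    pooled = [v for g in groups for v in g]
    n = len(pooled)
    ranks = average_ranks(pooled)
    rank_term, start = 0.0, 0
    for g in groups:
        rank_sum = sum(ranks[start:start + len(g)])
        rank_term += rank_sum ** 2 / len(g)
        start += len(g)
    h = 12.0 / (n * (n + 1)) * rank_term - 3 * (n + 1)
    tie_correction = 1 - sum(t ** 3 - t for t in Counter(pooled).values()) / (n ** 3 - n)
    df = len(groups) - 1
    if tie_correction == 0:  # every value identical: no evidence of a difference
        return 0.0, df, 1.0
    h /= tie_correction
    return h, df, chi2_sf(h, df)
```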

Intensity and specificity of experienced emotion ratings

Repeated-measures ANOVAs tested differences between the normal comparison group and the brain lesion groups for (1) ratings of experienced emotional intensity for the intended emotion and (2) specificity of the ratings (mean of the 2 non-intended emotions subtracted from the intended emotion). Group (NC, VMPFC, RSS) was a between-subjects variable, and emotion of music (happy, sad, fear) was a within-subjects variable. Follow-up simple-effects comparisons were used to characterize differences across groups and emotion categories (p values are reported without Bonferroni adjustment). Because the NC and VMPFC groups showed a similar pattern of responses, they were later combined and compared with the RSS group using the same repeated-measures ANOVA.

Effect sizes and confidence intervals were calculated for the difference between the combined NC/VMPFC group and the RSS group on happy, sad, and fearful music, as well as on ratings combined across all three emotion categories. The effect size statistic was Cohen's d, computed as the difference between the two group means divided by the pooled standard deviation. Following Cohen (J. Cohen, 1988), effect sizes of d = .20, .50, and .80 were considered small, medium, and large, respectively. The confidence intervals were inspected visually, and a group difference was considered supported when the confidence interval for the difference between groups did not include zero. Presenting effect sizes and confidence intervals also helped address concerns arising from the relatively small group sample sizes, which can contribute to low statistical power.
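The effect-size and interval computations can be written out as follows. This Python sketch assumes a confidence interval for the raw mean difference based on the pooled standard deviation, with the caller supplying the critical t value for n1 + n2 - 2 degrees of freedom (Python's standard library has no t quantile function). The label for values below d = .20 is a hypothetical addition, since Cohen's benchmarks start at "small".

```python
from math import sqrt
from statistics import mean, variance


def pooled_sd(a, b):
    """Pooled standard deviation of two independent samples."""
    na, nb = len(a), len(b)
    return sqrt(((na - 1) * variance(a) + (nb - 1) * variance(b)) / (na + nb - 2))


def cohens_d(a, b):
    """Difference between group means divided by the pooled SD."""
    return (mean(a) - mean(b)) / pooled_sd(a, b)


def magnitude(d):
    """Cohen's (1988) benchmarks: .20 small, .50 medium, .80 large."""
    ad = abs(d)
    if ad >= 0.80:
        return "large"
    if ad >= 0.50:
        return "medium"
    if ad >= 0.20:
        return "small"
    return "below small"


def mean_difference_ci(a, b, t_crit):
    """CI for mean(a) - mean(b); t_crit is supplied for df = n1 + n2 - 2."""
    diff = mean(a) - mean(b)
    se = pooled_sd(a, b) * sqrt(1 / len(a) + 1 / len(b))
    return diff - t_crit * se, diff + t_crit * se
```

A group difference would be read as supported in the sense used above when the returned interval excludes zero.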

Skin conductance area under the curve (SC AUC)

A univariate ANOVA compared skin conductance AUC during the neutral tones condition across the NC, VMPFC, and RSS groups to confirm that the groups did not differ significantly in baseline skin conductance. A repeated-measures ANOVA compared skin conductance AUC across groups during the music, with group (NC, VMPFC, RSS) as a between-subjects variable and emotion of music (happy, sad, fear) as a within-subjects variable. Within-subjects effects (emotion) should be interpreted with caution because the clips were presented in a fixed order, although these effects were not a primary focus of this study. Follow-up simple-effects comparisons were used to characterize differences across groups and emotion categories (p values are reported without Bonferroni adjustment). Because the NC and RSS groups showed a similar pattern of responses, they were combined and compared with the VMPFC group using the same repeated-measures ANOVA.

Because there were large differences between the standard deviations of the NC/RSS group and VMPFC group and the homogeneity of variance assumption for ANOVA was violated, non-parametric Kruskal-Wallis analyses were also used to test for differences between the NC/RSS group and VMPFC group. Effect sizes and confidence intervals were calculated to assist in understanding patterns of differences between the NC/RSS and VMPFC groups for SC AUC responses to happy, sad, and fearful music, as well as SC AUC responses to the music combined across all three emotion categories.

Results

Baseline variables

Table 2 presents demographic information for the NC and lesion groups. There were no significant differences across groups in age, sex, race, handedness, time since lesion onset for the two lesion groups, hearing threshold, or musical experience. A significant group difference was found for education (F (2, 42) = 3.71, p = .03), with a higher level of education in the NC group relative to both the VMPFC and the RSS groups (p's < .05). A significant group difference was also found for depression (F (2, 42) = 3.53, p = .04), with the RSS group showing a higher score on the Beck Depression Inventory-II compared to the NC group (p = .01). No significant correlations were found between the study dependent variables (emotion ratings and psychophysiology) and education or between the dependent variables and depressive symptoms, so these variables were not included as covariates in the primary study analyses.

Table 2.

Demographic Variables: Means (and Standard Deviations) or Percentages by Lesion Group

| Variable | NC (n=20) | VMPFC (n=10) | RSS (n=15) |
|---|---|---|---|
| Age | 49.9 (11.5) | 55.3 (9.6) | 54.3 (16.1) |
| Sex | 45% M, 55% F | 50% M, 50% F | 46.7% M, 53.3% F |
| Race | 90% W, 10% AA | 100% W | 100% W |
| Handedness | 100% RH | 88.9% RH | 93.3% RH |
| Education (years) | 15.7 (3.0) | 13.2 (2.5) | 13.7 (2.3) |
| Years since lesion onset | N/A | 11.2 (8.7) | 6.3 (7.3) |
| Hearing threshold (decibels) | 23.8 (11.3) | 26.7 (7.2) | 28.5 (10.6) |
| BDI-II score | 4.4 (3.6) | 5.4 (6.1) | 8.6 (5.1) |
| % who participated in formal musical organizations: HS | 40% | 30% | 60% |
| % who participated in formal musical organizations: CL | 0% | 0% | 7% |
| % who participated in formal musical organizations: CM | 25% | 30% | 47% |

Note. NC: Normal comparison, VMPFC: ventromedial prefrontal cortex, RSS: right somatosensory cortex. M: Male, F: Female, W: White, AA: African American, RH: Right handed, LH: left handed, BDI-II: Beck Depression Inventory-II, HS: during high school, CL: during college, CM: in the community.

Efficacy and specificity of the stimuli

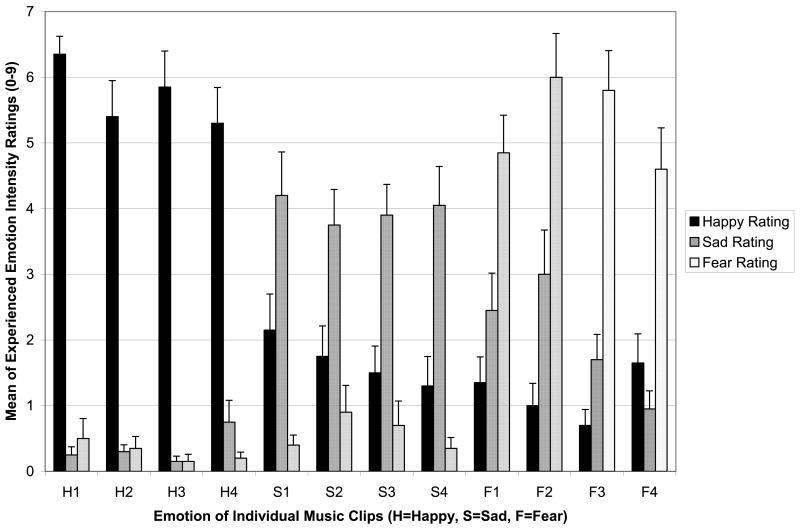

Paired sample t-tests explored whether the NC participants provided emotional experience ratings that were consistent with the intended emotion of the music. For clips selected to elicit happiness, happy ratings were significantly higher than both sad (t(19)=13.19, p<.001) and fearful ratings (t(19)=14.59, p<.001). Sad ratings for music intended to elicit sadness were significantly higher than happy (t(19)=3.50, p=.002) and fear (t(19)=6.87, p<.001) ratings. For fearful clips, ratings of fear were significantly higher than intensity ratings of happiness (t(19)= 6.07, p<.001) and sadness (t(19)=8.02, p<.001). Therefore, the clips selected were specific to the intended emotion. Figure 1 shows the ratings of experienced emotion intensity for NC participants.

Figure 1. Validation of the Stimuli.

Mean ratings (+SE) from the normal comparison group are shown for experienced happiness (black), sadness (dark gray), and fear (light gray), for each clip intended to convey these emotions (as indicated on the x-axis). For all clips, the ratings for the intended emotion were much higher than ratings of the other (non-intended) emotions.

Normal comparison participants showed higher SC AUC during emotional music than during the baseline tones. Paired sample t-tests found significant increases in skin conductance AUC compared to baseline during happy (t(16)=-5.75, p<.001), sad (t(16)=-4.84, p<.001), and fearful music (t(16)=-4.66, p<.001) in the NC group. The amount of increase from baseline did not differ between happy, sad, and fearful music (p's>.13), indicating that there were similar levels of psychophysiological arousal on this measure across musical emotion categories. Overall, the rating and skin conductance results indicate that the music stimuli were effective both in eliciting the intended emotion in healthy listeners and in eliciting emotional arousal.

Recognizing the intended emotion

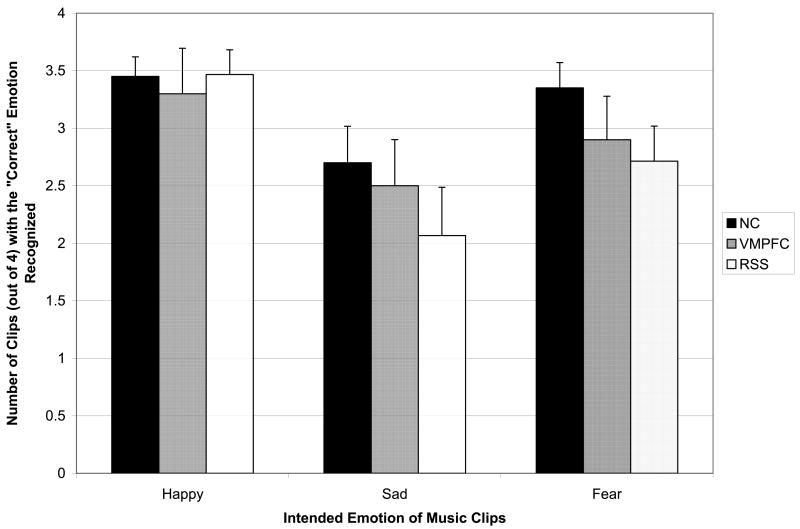

Figure 2 shows the number of music clips (out of 4 per emotion category) for which the NC, VMPFC, and RSS groups correctly recognized the intended emotion. No significant differences were found between the NC and VMPFC groups in ability to recognize the intended emotion in the music (Happy: χ²(1)=.00, p=.98, Sad: χ²(1)=.23, p=.63, Fear: χ²(1)=1.69, p=.19, All Clips: χ²(1)=.34, p=.56). There were also no significant differences between the NC and RSS group in the ability to recognize the intended emotion for the music clips (Happy: χ²(1)=.02, p=.89, Sad: χ²(1)=1.18, p=.28, Fear: χ²(1)=3.10, p=.08, All Clips: χ²(1)=2.93, p=.09). Therefore, lesions to the ventromedial prefrontal cortex and right somatosensory cortex did not appear to substantially interfere with the ability to recognize emotion in this set of music clips, at a cognitive level.

Figure 2. Recognizing the Intended Emotion.

Mean number of clips (out of a maximum of 4; +SE) correctly recognized, plotted for the three subject groups, NC (black), VMPFC (dark gray), and RSS (light gray). The three groups were comparable for all three emotions.

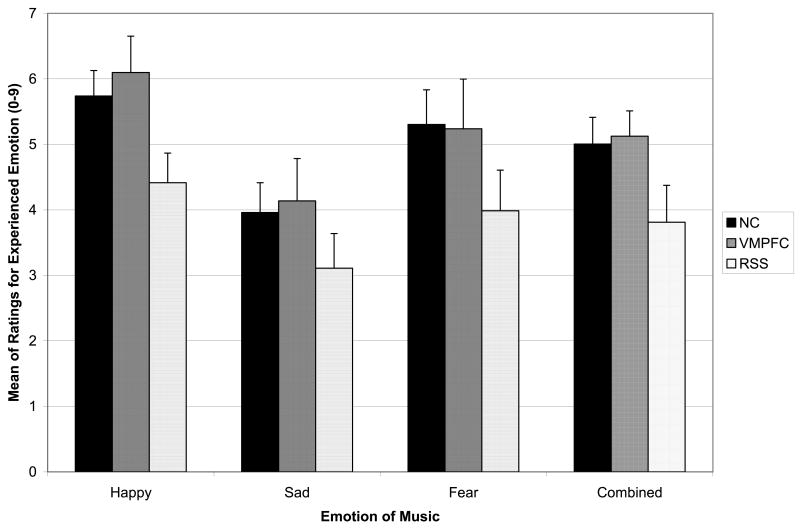

Intensity of emotional experience

Figure 3 depicts ratings of the intensity of emotional experience, as a function of group and emotion category. A repeated measures ANOVA was conducted on this measure with group as a between-subjects variable and emotion as a within-subjects variable. A significant emotion main effect was found (F (2, 41) = 22.95, p < .001), with follow-up comparisons showing that emotion intensity ratings were higher for happy clips than for both sad (p<.001) and fearful (p=.008) clips. Emotion intensity ratings were higher for fearful clips than sad clips (p<.001). The group main effect was not statistically significant (F (2, 42) = 2.23, p = .12), indicating that the ratings across all emotion categories combined did not differ substantially among the three groups. Planned follow-up pairwise comparisons found that the RSS group provided lower ratings than the NC and VMPFC groups at marginal significance levels (p = .07, p = .09, respectively) and the NC and VMPFC groups did not differ in emotion intensity ratings (p = .87). The group by emotion interaction was not significant (F (4, 82) = .43, p = .78), indicating that groups did not differ in patterns of ratings across emotion categories.

Figure 3. Experience of Emotion.

Mean ratings of the intensity of experienced emotion (+SE), plotted for happy, sad, fearful, and combined music selections as a function of subject group (NC, black; VMPFC, dark gray; RSS, light gray). The NC and VMPFC groups were very comparable, while the RSS group consistently reported lower experience of emotion, for all three emotion types.

Due to the similarity of ratings between the NC and VMPFC groups, these groups were collapsed and then compared together with the RSS group using the ANOVA previously described. This group main effect was statistically significant (F (1, 43) = 4.53, p = .04), with the RSS group showing significantly lower ratings of experienced emotion intensity for the emotional music across the combined emotion categories compared to the NC/VMPFC group. The group by emotion interaction was not significant (F (2, 42) = .30, p = .74), indicating no difference in patterns of ratings based on emotion category (happy, sad, fear).

Table 3 provides effect sizes and confidence intervals of the difference between the NC/VMPFC group and RSS group on emotional intensity ratings. The effect sizes for the ratings combined across all emotion categories and for happy clips were medium to large, and the effect sizes for sad and fearful music were medium. The confidence intervals for the differences in ratings did not contain zero for happy music or the music combined across emotions. Therefore, an inspection of effect sizes is consistent with a pattern of lower emotional experience ratings across emotion categories in the RSS group and lower ratings for happy music, relative to the NC and VMPFC groups combined.

Table 3.

Effect Sizes and Confidence Intervals for Differences in Experienced Emotional Intensity Ratings Between the NC/VMPFC combined group and the RSS group.

| Clip emotion | Sample sizes | Mean difference (95% CI) | Effect size, d (95% CI) |
|---|---|---|---|
| Happy | 30, 15 | -1.38 (-2.46 to -.29) | -.80 (-1.43 to -.15) |
| Sad | 30, 15 | -.98 (-2.29 to .33) | -.48 (-1.10 to .16) |
| Fear | 30, 15 | -1.34 (-2.88 to .19) | -.56 (-1.18 to .08) |
| Combined Emotions | 30, 15 | -1.23 (-2.40 to -.06) | -.67 (-1.30 to -.03) |

Note: Negative effect size values are in the hypothesized direction (RSS group has a lower score than the NC/VMPFC group). CI = Confidence interval. Sample sizes are given for combined NC + VMPFC groups, and then for the RSS group.
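Table 3 reports Cohen's d with 95% confidence intervals, but the section does not state how the intervals were computed. One common large-sample approximation takes SE(d) = sqrt((n1 + n2)/(n1·n2) + d²/(2(n1 + n2))) and forms d ± 1.96·SE(d). Applied to the tabled d values with n = 30 and 15, it reproduces the tabled intervals to within about .01; it is offered here as an assumption, not as the authors' stated method. The function names are hypothetical.

```python
import math
from statistics import mean, stdev


def cohens_d(x, y):
    """Cohen's d for two independent groups, standardized by the pooled SD.

    Negative when mean(x) < mean(y), matching the tables' sign convention
    if x is the lesion group and y the comparison group.
    """
    nx, ny = len(x), len(y)
    pooled_var = ((nx - 1) * stdev(x) ** 2 + (ny - 1) * stdev(y) ** 2) / (nx + ny - 2)
    return (mean(x) - mean(y)) / math.sqrt(pooled_var)


def d_confidence_interval(d, n1, n2, z=1.96):
    """Approximate 95% CI for d from its large-sample standard error."""
    se = math.sqrt((n1 + n2) / (n1 * n2) + d ** 2 / (2 * (n1 + n2)))
    return d - z * se, d + z * se


# Combined-emotions row of Table 3: d = -.67 with n = 30 (NC/VMPFC) and 15 (RSS).
lo, hi = d_confidence_interval(-0.67, 30, 15)
print(f"{lo:.2f} to {hi:.2f}")  # close to the tabled (-1.30 to -.03)
```

The interval excludes zero exactly when |d| exceeds 1.96·SE(d), which is why the happy and combined rows, but not the sad and fear rows, support a group difference on this criterion.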

Specificity of emotion ratings

Figure 4 depicts specificity of emotion ratings as a function of group and emotion category. A repeated measures ANOVA with emotion as a within-subjects variable and group as a between-subjects variable found a significant emotion main effect (F (2, 41) = 34.77, p < .001). Follow-up pairwise comparisons found that participants gave higher ratings on the intended emotion and lower ratings on the non-intended emotions for happy music than for both sad and fearful music (p's < .001). Participants also rated fearful clips more specifically (higher on the intended emotion, lower on the two non-intended emotions) than sad clips (p = .04); sad music was the least specifically rated musical emotion. The group main effect was not statistically significant (F (2, 42) = 1.93, p = .16), indicating that the ratings across all emotion categories combined did not differ substantially among the three groups. Planned follow-up pairwise comparisons found that the RSS group provided marginally less specific ratings of the musical emotion relative to the NC group (p = .06). No significant differences were found in the specificity of emotion ratings between the NC and VMPFC groups (p = .77) or between the RSS and VMPFC groups (p = .19).

Figure 4. Specificity of Emotion Ratings.

Mean specificity (+SE), calculated as the intensity on the intended emotion minus the mean intensity on the two unintended emotions for a given clip, plotted as a function of emotion type and subject group (NC, black; VMPFC, dark gray; RSS, light gray). As for the experience of emotion shown in Figure 3, the NC and VMPFC groups were comparable in their specificity ratings, whereas the RSS group was consistently lower.
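The specificity score defined in the caption above is simple arithmetic: the rating on the intended emotion minus the mean rating on the two unintended emotions. The sketch below computes it for a single clip; the function name `specificity`, the dictionary keys, and the rating values are hypothetical, and no particular rating scale is assumed.

```python
from statistics import mean


def specificity(ratings, intended):
    """Rating on the intended emotion minus the mean rating on the other emotions."""
    others = [value for emotion, value in ratings.items() if emotion != intended]
    return ratings[intended] - mean(others)


# Hypothetical ratings for one clip intended to sound sad (not study data).
clip = {"happy": 1.0, "sad": 7.0, "fear": 3.0}
print(specificity(clip, "sad"))  # 7 - mean(1, 3) = 5.0
```

A listener who reports strong feelings of every kind obtains a high intensity rating but a specificity near zero, which is why the two measures are analyzed separately.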

In parallel to the intensity of emotional experience rating analyses above, the NC/VMPFC groups were collapsed to investigate differences from the RSS group in specificity of emotion ratings. A marginally significant group main effect (F (1, 43) = 3.85, p = .06) suggested a trend for the RSS group to provide less specific ratings of emotion across all emotion categories relative to the NC/VMPFC group. The group by emotion interaction was not significant (F (2, 42) = .37, p = .69), indicating no difference in patterns of ratings across emotion categories between groups.

Table 4 provides effect sizes and confidence intervals of the difference between the NC/VMPFC group and RSS group on specificity of emotion ratings. The effect size for specificity of emotion ratings following happy music was large and the effect size for specificity of emotion ratings for the clips combined across emotion categories was medium, which was consistent with less specific emotion ratings by the RSS group relative to the NC/VMPFC group. The effect sizes for sad and fearful music were small to medium, and in the same direction, with the RSS group showing less specific ratings relative to the NC/VMPFC group. The confidence interval for the differences between groups on happy music did not include zero, which supported less specific ratings on happy music by the RSS group relative to the other groups combined.

Table 4.

Effect Sizes and Confidence Intervals for Differences in Specificity of Emotion Ratings Between the NC/VMPFC combined group and the RSS group.

| Clip emotion | Sample sizes | Mean difference (95% CI) | Effect size, d (95% CI) |
|---|---|---|---|
| Happy | 30, 15 | -1.40 (-2.51 to -.30) | -.81 (-1.44 to -.16) |
| Sad | 30, 15 | -.83 (-2.25 to .60) | -.37 (-.99 to .26) |
| Fear | 30, 15 | -1.03 (-2.49 to .43) | -.45 (-1.07 to .19) |
| Combined Emotions | 30, 15 | -1.09 (-2.20 to .03) | -.62 (-1.24 to .02) |

Note: Negative effect size values are in the hypothesized direction (RSS group has a lower score than the NC/VMPFC group). CI = Confidence interval.

Psychophysiological responses to emotional music

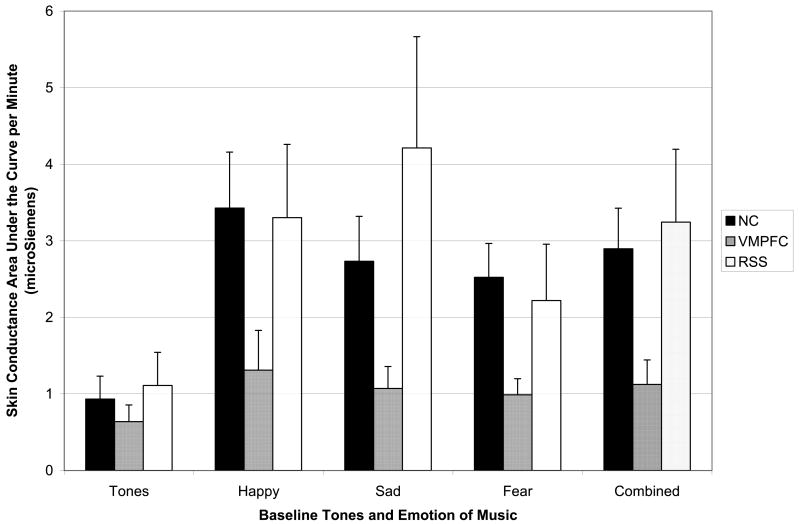

Figure 5 depicts skin conductance area under the curve (SC AUC), presented as a function of group and condition (tones, happy, sad, fearful, and all musical emotions combined). For the neutral tones condition, there was no significant group main effect (F (2, 33) = .32, p = .73), indicating that the lesion groups and NC group showed similar baseline levels of psychophysiological response to a neutral, orienting stimulus.

Figure 5. Skin Conductance Response to Music.

Skin conductance area under the curve per minute (+SE) for happy, sad, fearful, and combined music selections, as a function of subject group (NC, black; VMPFC, dark gray; RSS, light gray). The pattern was the reverse of the experience of emotion findings reported in Figure 3: the NC and RSS groups were very comparable, while the VMPFC group consistently produced lower skin conductance responses to the emotional music clips, for all three emotion types. The groups were comparable for the baseline neutral tones.
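This section does not specify how SC AUC per minute was derived from the raw skin conductance trace. One plausible computation, sketched below under that assumption, integrates the trace with the trapezoidal rule and divides by the recording duration in minutes, which puts clips of different lengths on a common scale. The function name, the sampling-rate parameter, and the absence of any baseline subtraction are all assumptions made for illustration.

```python
def sc_auc_per_minute(samples_us, fs_hz):
    """Trapezoidal area under a skin conductance trace, per minute of recording.

    samples_us: conductance samples in microsiemens, equally spaced in time.
    fs_hz: sampling rate in Hz (an assumed parameter).
    Returns area (microsiemens x seconds) divided by duration in minutes.
    """
    if len(samples_us) < 2 or fs_hz <= 0:
        raise ValueError("need at least two samples and a positive sampling rate")
    dt = 1.0 / fs_hz
    area = sum((a + b) / 2 * dt for a, b in zip(samples_us, samples_us[1:]))
    duration_min = (len(samples_us) - 1) * dt / 60.0
    return area / duration_min


# A flat 2 microsiemens trace sampled at 1 Hz for one minute:
print(sc_auc_per_minute([2.0] * 61, fs_hz=1.0))  # 120.0
```

Normalizing by duration means a longer clip does not earn a larger area merely by lasting longer; only the average conductance level during the clip matters.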

A repeated measures ANOVA, with emotion as a within-subjects variable and group as a between-subjects variable, found a significant main effect for emotion (F (2, 32) = 3.22, p = .05), and follow-up comparisons found that SC AUC was lower for fearful than happy music (p=.05) and marginally lower for fearful than for sad music (p=.06). No significant difference was found between SC for happy vs. sad music (p=.99). The group main effect for SC AUC was not statistically significant (F (2, 33) = 1.78, p = .19), indicating that the autonomic response across all emotion categories combined did not differ substantially between the three groups. Planned pairwise comparisons showed that the VMPFC group had marginally lower SC AUC than the RSS group across musical emotion categories combined (p = .08). Although visual inspection showed lower SC AUC in the VMPFC group relative to the NC group, the difference was not statistically significant (p = .12). No significant difference was found in SC AUC between the NC and RSS group (p = .71). The group by emotion interaction was not significant (F (4, 64) = 1.44, p = .23), indicating no pattern of differences between groups in psychophysiological arousal across specific musical emotion categories.

Because SC AUC did not differ significantly between the NC and RSS groups, these two groups were collapsed into a single group and then compared with the VMPFC group. The previous ANOVA was repeated with these revised groups. A marginally significant main effect was found for group (F (1, 34) = 3.50, p = .07), which was consistent with lower SC AUC in the VMPFC group relative to the NC/RSS group. The group by emotion interaction was not significant (F (2, 33) = .55, p = .58), indicating no differences in pattern of psychophysiological arousal across musical emotion categories.

Because the homogeneity-of-variance assumption of the ANOVA was partly violated, non-parametric Kruskal-Wallis analyses were also conducted to compare the NC/RSS group with the VMPFC group. The VMPFC group had significantly lower SC AUC than the NC/RSS group during happy (χ²(1)=4.24, p=.04), sad (χ²(1)=4.57, p=.03), and fearful music (χ²(1)=3.92, p=.05), and across the musical emotion categories combined (χ²(1)=5.66, p=.02).
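With only two groups, the Kruskal-Wallis H statistic is compared against a chi-square distribution with 1 degree of freedom, which is why the results above are reported as χ²(1). The sketch below ranks the pooled observations (ties share their average rank), computes H with the usual tie correction, and converts it to a p value using the df = 1 identity `p = erfc(sqrt(H / 2))`. Whether the original analysis applied the tie correction is not stated; the function names and data are hypothetical.

```python
import math


def average_ranks(values):
    """1-based ranks, with tied values sharing their average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1  # sorted positions i..j hold ranks i+1..j+1
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks


def kruskal_wallis_two_groups(x, y):
    """Tie-corrected Kruskal-Wallis H for two groups, with p from chi-square (df = 1)."""
    pooled = list(x) + list(y)
    n = len(pooled)
    ranks = average_ranks(pooled)
    rank_sums = (sum(ranks[:len(x)]), sum(ranks[len(x):]))
    sizes = (len(x), len(y))
    h = 12.0 / (n * (n + 1)) * sum(r ** 2 / m for r, m in zip(rank_sums, sizes)) - 3 * (n + 1)
    # Tie correction: divide H by 1 - sum(t^3 - t) / (n^3 - n) over groups of tied values.
    counts = {}
    for v in pooled:
        counts[v] = counts.get(v, 0) + 1
    correction = 1 - sum(t ** 3 - t for t in counts.values()) / (n ** 3 - n)
    if correction > 0:
        h /= correction
    p = math.erfc(math.sqrt(h / 2))  # upper tail of chi-square with df = 1
    return h, p


# Hypothetical SC AUC values (not study data).
h, p = kruskal_wallis_two_groups([1.2, 0.4, 0.9], [2.5, 3.1, 1.8, 2.2])
print(f"H = {h:.2f}, p = {p:.3f}")
```

Because the test uses only ranks, a few participants with very large skin conductance responses cannot dominate the comparison, which is the motivation for running it alongside the ANOVA.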

Table 5 provides effect sizes and confidence intervals of differences between the NC/RSS group and VMPFC group in SC AUC. The effect sizes for differences between the groups were at least medium for happy, sad, and fearful music and for the music clips combined across emotions. All the confidence intervals for the differences in SC AUC between the groups included zero, which is likely due to the high amount of variability in this measure. Overall, the effect sizes suggest lower skin conductance in the VMPFC group relative to the NC and RSS groups.

Table 5.

Effect Sizes and Confidence Intervals for Differences in Skin Conductance Area Under the Curve Between the NC/RSS combined group and the VMPFC group.

| Clip emotion | Sample sizes | Mean difference (95% CI) | Effect size, d (95% CI) |
|---|---|---|---|
| Happy | 29, 7 | -2.07 (-4.51 to .38) | -.72 (-1.55 to .13) |
| Sad | 29, 7 | -2.27 (-5.18 to .63) | -.67 (-1.49 to .18) |
| Fear | 29, 7 | -1.41 (-3.07 to .25) | -.73 (-1.55 to .13) |
| Combined Emotions | 29, 7 | -1.92 (-4.00 to .16) | -.79 (-1.62 to .07) |

Note: Negative effect size values are in the hypothesized direction (VMPFC group has a lower score than the NC/RSS group). CI = Confidence interval.

Discussion

The present study provides further insight into the emotion components subserved by distinct cortical sites, and adds a valuable data point to the literature on the neuroscience of processing emotion from music. Our two main emotion components of interest were autonomic response and emotional experience, and our two neuroanatomical sites of interest were the ventromedial prefrontal cortex and the right somatosensory cortices. We had hypothesized that the two emotion components would map onto the two brain regions, respectively, in a dissociable fashion.

Our basic hypothesis was supported by the data, albeit with some caveats that we discuss further below. To summarize:

- Our stimuli were effective in eliciting both reliable autonomic responses and emotional experience in healthy individuals.
- Lesions to the VMPFC disproportionately impaired autonomic responses, while leaving emotional experience relatively unaffected.
- Lesions to the RSS disproportionately impaired emotional experience, while leaving autonomic response relatively unaffected.

Taken together, the pattern of findings provides support for the idea that autonomic response and emotional experience can be dissociated, and that each can occur despite impairment of the other. Moreover, the mapping of emotion component to brain region is what one would expect, and what we hypothesized, given what is known about the function of the two brain structures that we investigated. These data show, at a minimum, that disruption (but not abolition) of autonomic responses does not necessarily disrupt emotional experience. Thus, the data argue against at least the strongest version of William James' original idea, that bodily emotional reactions are required for emotional experience. It is important to note that the data do not, and could not, argue against weaker and broader versions of James' theory (e.g., A.R. Damasio, 1999), since we did not assess all components of emotional response (we only measured skin conductance response, a specific aspect of sympathetic autonomic arousal), and since we did not measure all aspects of emotional experience (for instance, it is possible that simply asking people to rate their experience is an insufficiently sensitive measure of feelings). These limitations are well known, present in most studies to date, and remain a key reason why James' theory (broadly construed) is difficult to refute or confirm decisively.

The statistical analysis of our study was constrained in several respects, largely because of the small sample sizes that are common in human lesion studies. In addition to significance tests, we provided effect sizes and confidence intervals in the tables; these convey the magnitude and precision of the group differences rather than only their statistical significance, and allow readers to evaluate the effects for themselves. Our basic statistical tests followed a two-step approach. We first asked whether either lesion group was normal on a given measure (i.e., did not differ, even at trend level, from the normal comparison group); if so, we collapsed that lesion group and the normal comparison group into a single, larger group. We then contrasted this pooled group against the remaining lesion group. In each case, the remaining lesion group had initially shown a difference from both the normal comparison group and the other (normal-performing) lesion group, but only at a trend level. The contrast of the impaired lesion group against the pooled group provided greater statistical power and yielded lower p values. We believe this more exploratory approach characterizes the patterns that we found without unduly penalizing the small samples and large variances typical of lesion studies. The tables and figures summarize the actual data and provide material that can be used in future studies for comparison or meta-analysis. Future studies could improve statistical power simply by increasing sample sizes, both for lesion patients (where available) and for healthy comparison participants.
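The power gain from pooling can be made concrete. For a fixed, equal within-group SD (an assumption the text notes was only partly met), the standard error of a difference between two group means scales as sqrt(1/n1 + 1/n2). The sketch below uses sample sizes implied by the skin conductance analyses: 17 NC participants (from the t(16) tests in the NC group) and, after pooling, 29 NC/RSS versus 7 VMPFC participants (Table 5). The function name `se_factor` is hypothetical.

```python
import math


def se_factor(n1, n2):
    """Standard error of a difference in means, in units of the common SD."""
    return math.sqrt(1 / n1 + 1 / n2)


# VMPFC (n = 7) against the NC group alone, then against the pooled NC/RSS group.
print(round(se_factor(7, 17), 3))  # 0.449
print(round(se_factor(7, 29), 3))  # 0.421
```

Because the smaller group dominates the sum, pooling lowers the factor only modestly here (about 6%), and no amount of pooling can push it below sqrt(1/7), roughly 0.378; adding lesion patients is what would tighten the comparison most.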

In addition to its implications for understanding the neural basis of emotion processing, and in particular the causal relationships among its components, our study is of interest for its use of music, given increased empirical attention to the biological foundations of music (e.g., Zatorre & Peretz, 2001; Avanzini et al., 2003, 2006). Although most previous studies have focused on music perception abilities (e.g., detection of pitch), there has been increased interest in how the brain processes musical emotion, as music appears to be a “language of emotion” across cultures (A. Damasio & Damasio, 1977, p. 142; Storr, 1992). While we believe that music is a particularly well-suited stimulus for investigating the two components of emotion we examined (psychophysiological response and feeling), we would expect other classes of emotional stimuli, such as films or non-musical emotional sounds, to produce a similar pattern with respect to our target neural regions.

Many lesion studies of emotion from music have focused on the ability to detect what emotion a piece of music is intended to convey, rather than on actual emotional experiences in the listener (Gosselin et al., 2005, 2007; Gottselig, 2001; Kinsella, Prior, & Jones, 1990; Peretz & Gagnon, 1999; Peretz, Gagnon, & Bouchard, 1998). Several of these studies have found relatively intact judgment of emotion in music. However, there is evidence of a more selective impairment in detecting fear following focal unilateral (Gosselin et al., 2005) or bilateral (Gosselin et al., 2007) amygdala lesions in participants with normal general music perception abilities. These findings are consistent with existing studies on the role of the amygdala in recognizing fear in faces (see review in Adolphs, 2002).

An increasing body of neuroimaging research has begun to investigate how the normal brain responds to emotional experiences from music. For example, Blood & Zatorre (2001) used participant-selected music that consistently produced “shivers down the spine” or “chills” and found cerebral blood flow changes in brain regions thought to be associated with reward/motivation (ventral striatum, midbrain, amygdala, orbitofrontal cortex, and ventral medial prefrontal cortex) as intensity of reported chills increased. Music has been used to enhance emotional feelings in response to other stimuli such as affective pictures, and the addition of music has been associated with increased activation in structures involved in emotion processing such as limbic and prefrontal regions (Eldar et al., 2007; Baumgartner et al., 2006).

A final point of interest from our study is that the ability to recognize the intended emotion from music was preserved in all subject groups. Previous lesion studies have found relatively normal ability to detect emotion in music (Gottselig, 2001), including preserved emotion recognition following right hemisphere damage (Kinsella et al., 1990), with the possible exception of the amygdala's role in fear recognition noted above. Moreover, the ability to recognize the emotion conveyed by music can remain intact even when other aspects of music perception are severely impaired (Peretz et al., 1998). These findings are consistent with those of the present study. Together, they provide suggestive evidence for a dissociation involving yet a third component of emotion processing: not only can autonomic response and emotional experience be dissociated from each other, but each also appears to be dissociable from cognitive recognition of the intended emotion. These dissociations are also of value to clinicians interested in music as therapy. They suggest that patients who do not show normal responses or arousal to music may still experience emotions from music, and that even when arousal or experience is compromised, music can be used for the social communication of mood and emotion.

Acknowledgments

This study was completed by the first author under the supervision of the other authors in partial fulfilment of the requirements for the PhD degree at the University of Iowa. The study was supported by NIDA R01 DA022549 and NINDS P01 NS19632.

References

- Adolphs R. Recognizing emotion from facial expressions: psychological and neurological mechanisms. Behavioral and Cognitive Neuroscience Reviews. 2002;1:21–61. doi: 10.1177/1534582302001001003.

- Adolphs R, Damasio H, Tranel D, Cooper G, Damasio AR. A role for somatosensory cortices in the visual recognition of emotion as revealed by 3-D lesion mapping. The Journal of Neuroscience. 2000;20:2683–2690. doi: 10.1523/JNEUROSCI.20-07-02683.2000.

- Al Maskati HA, Zbrozyna AW. Stimulation in prefrontal cortex area inhibits cardiovascular and motor components of the defence reaction in rats. Journal of the Autonomic Nervous System. 1989;28:117–126. doi: 10.1016/0165-1838(89)90084-2.

- Allen J, Coan J. Handbook of Emotion Elicitation and Assessment. New York: Oxford University Press; 2007.

- Avanzini G, Faienza C, Minciacchi D, Lopez L, Majno M. The Neurosciences and Music. Annals of the New York Academy of Sciences. 2003;999.

- Avanzini G, Lopez L, Koelsch S. The Neurosciences and Music II: From Perception to Performance. Annals of the New York Academy of Sciences. 2006;1060.

- Baumgartner T, Lutz K, Schmidt CF, Jäncke L. The emotional power of music: How music enhances the feeling of affective pictures. Brain Research. 2006;1075:151–164. doi: 10.1016/j.brainres.2005.12.065.

- Beck AT, Steer RA, Brown GK. Manual for the Beck Depression Inventory-II (BDI-II). San Antonio, TX: Psychological Corporation; 1996.

- Blonder LX, Bowers D, Heilman KM. The role of the right hemisphere in emotional communication. Brain. 1991;114:1115–1127. doi: 10.1093/brain/114.3.1115.

- Blood AJ, Zatorre RJ. Intensely pleasurable responses to music correlate with activity in brain regions implicated in reward and emotion. PNAS. 2001;98:11818–11823. doi: 10.1073/pnas.191355898.

- Borod J. Interhemispheric and intrahemispheric control of emotion: a focus on unilateral brain damage. Journal of Consulting and Clinical Psychology. 1992;60:339–348. doi: 10.1037//0022-006x.60.3.339.

- Borod J. Neuropsychology of Emotion. New York: Oxford University Press; 2000.

- Borod JC. Cerebral mechanisms underlying facial, prosodic, and lexical emotional expression: a review of neuropsychological studies and methodological issues. Neuropsychology. 1993;7:445–463.

- Borod JC, Kashemi DR, Haywood CS, Andelman F, Obler LK, Welkowitz J, et al. Hemispheric specialization for discourse reports of emotional experiences: relationships to demographic, neurological, and perceptual variables. Neuropsychologia. 1996;34:351–359. doi: 10.1016/0028-3932(95)00131-x.

- Borod JC, Obler LK, Erhan HM, Grunwald IS, Cicero BA, Welkowitz J, et al. Right hemisphere emotional perception: evidence across multiple channels. Neuropsychology. 1998;12:446–458. doi: 10.1037//0894-4105.12.3.446.

- Cacioppo JT, Tassinary LG, Berntson GG. Handbook of Psychophysiology. 3rd ed. Cambridge, England: Cambridge University Press; 2007.

- Carr L, Iacoboni M, Dubeau MC, Mazziotta JC, Lenzi GL. Neural mechanisms of empathy in humans: a relay from neural systems for imitation to limbic areas. Proc Natl Acad Sci U S A. 2003;100(9):5497–5502. doi: 10.1073/pnas.0935845100.

- Cohen AJ. Music as a source of emotion in film. In: Juslin PN, Sloboda JA, editors. Music and Emotion. Oxford: Oxford University Press; 2001. pp. 249–272.

- Cohen J. Statistical Power Analysis for the Behavioral Sciences. 2nd ed. Hillsdale, NJ: Lawrence Erlbaum Associates; 1988.

- Craig AD. How do you feel? Interoception: the sense of the physiological condition of the body. Nature Reviews Neuroscience. 2002;3:655–666. doi: 10.1038/nrn894.

- Critchley HD, Elliott R, Mathias CJ, Dolan RJ. Neural activity relating to generation and representation of galvanic skin conductance responses: a functional magnetic resonance imaging study. The Journal of Neuroscience. 2000;20:3033–3040. doi: 10.1523/JNEUROSCI.20-08-03033.2000.

- Critchley HD, Wiens S, Rotshtein P, Öhman A, Dolan RJ. Neural systems supporting interoceptive awareness. Nature Neuroscience. 2004;7:189–195. doi: 10.1038/nn1176.

- Dalgleish T. The emotional brain. Nature Reviews Neuroscience. 2004;5:582–589. doi: 10.1038/nrn1432.

- Damasio A, Damasio H. Musical faculty and cerebral dominance. In: Critchley M, Henson RA, editors. Music and the Brain. 1977. pp. 141–155.

- Damasio AR. Descartes' Error: Emotion, Reason, and the Human Brain. New York: Grosset/Putnam; 1994.

- Damasio AR. The somatic marker hypothesis and the possible functions of the prefrontal cortex. Philosophical Transactions of the Royal Society of London B. 1996;351:1413–1420. doi: 10.1098/rstb.1996.0125.

- Damasio AR. The Feeling of What Happens: Body and Emotion in the Making of Consciousness. New York: Harcourt Brace; 1999.

- Damasio AR, Tranel D, Damasio H. Individuals with sociopathic behavior caused by frontal damage fail to respond autonomically to social stimuli. Behav Brain Res. 1990;41:81–94. doi: 10.1016/0166-4328(90)90144-4.

- Damasio H, Grabowski T, Frank R, Galaburda AM, Damasio AR. The return of Phineas Gage: Clues about the brain from the skull of a famous patient. Science. 1994;264:1102–1104. doi: 10.1126/science.8178168.

- Davidson RJ, Goldsmith HH, Scherer K. Handbook of Affective Science. New York: Oxford University Press; 2003.

- Dawson ME, Schell AM, Filion DL. The electrodermal system. In: Cacioppo JT, Tassinary LG, Berntson GG, editors. Handbook of Psychophysiology. Cambridge: Cambridge University Press; 2000. pp. 200–223.

- Ekman P. Basic emotions. In: Dalgleish T, Power MJ, editors. Handbook of Cognition and Emotion. Chichester, England: John Wiley & Sons; 1999. pp. 45–60.

- Eldar E, Ganor O, Bleich A, Hendler T. Feeling the real world: Limbic response to music depends on related content. Cerebral Cortex. 2007;17:2828–2840. doi: 10.1093/cercor/bhm011.

- Feldman-Barrett L, Niedenthal PM, Winkielman P. Emotion and Consciousness. New York: Guilford Press; 2005.

- Friedman DP, Murray EA, O'Neill JB, Mishkin M. Cortical connections of the somatosensory fields of the lateral sulcus of macaques: evidence for a corticolimbic pathway for touch. J Comp Neurol. 1986;252:323–347. doi: 10.1002/cne.902520304.

- Gomez P, Danuser B. Affective and physiological responses to environmental noises and music. International Journal of Psychophysiology. 2004;53:91–103. doi: 10.1016/j.ijpsycho.2004.02.002.

- Gorbman C. Unheard Melodies: Narrative Film Music. London: BFI Publications; 1987.

- Gosselin N, Peretz I, Noulhiane M, Hasboun D, Beckett C, Baulac M, et al. Impaired recognition of scary music following unilateral temporal lobe excision. Brain. 2005;128:628–640. doi: 10.1093/brain/awh420.

- Gottselig JM. Human neuroanatomical systems for perceiving emotion in music [doctoral dissertation]. University of Iowa; 2001.

- Hall RE, Livingston RB, Bloor CM. Orbital cortical influences on cardiovascular dynamics and myocardial structure in conscious monkeys. J Neurosurg. 1977;46:638–647.

- James W. What is an emotion? Mind. 1884;9:188–205.

- Johnsen EL. Neuroanatomical correlates of emotional experiences from music [doctoral dissertation]. University of Iowa; 2004.

- Kaada BR, Pribram KH, Epstein JA. Respiratory and vascular responses in monkeys from temporal pole, insula, orbital surface, and cingulate gyrus. J Neurophysiol. 1949;12:347–356. doi: 10.1152/jn.1949.12.5.347.

- Keillor JM, Barrett AM, Crucian GP, Kortenkamp S, Heilman KM. Emotional experience and perception in the absence of facial feedback. Journal of the International Neuropsychological Society. 2002;8:130–135.

- Khalfa S, Peretz I, Blondin J, Robert M. Event-related skin conductance responses to musical emotions in humans. Neuroscience Letters. 2002;328:145–149. doi: 10.1016/s0304-3940(02)00462-7.

- Kinsella G, Prior M, Jones V. Judgment of mood in music following right hemisphere damage. Archives of Clinical Neuropsychology. 1990;5:359–371.

- Krumhansl CL. An exploratory study of musical emotions and psychophysiology. Canadian Journal of Experimental Psychology. 1997;51:336–352. doi: 10.1037/1196-1961.51.4.336.

- Livingston RB, Chapman WP, Livingston KE. Stimulation of orbital surface of man prior to frontal lobotomy. The Frontal Lobes. Res Publ Assoc Res Nerv Ment Dis. 1948;8(4):21–432.

- Öngür D, An X, Price JL. Prefrontal cortical projections to the hypothalamus in macaque monkeys. J Comp Neurol. 1998;401:480–505.

- Öngür D, Price JL. The organization of networks within the orbital and medial prefrontal cortex of rats, monkeys, and humans. Cerebral Cortex. 2000;10:206–219. doi: 10.1093/cercor/10.3.206.

- Parvizi J, Anderson SW, Martin CO, Damasio H, Damasio AR. Pathological laughter and crying: a link to the cerebellum. Brain. 2001;124:1708–1719. doi: 10.1093/brain/124.9.1708.

- Peretz I. Listen to the brain: a biological perspective on musical emotions. In: Juslin PN, Sloboda JA, editors. Music and Emotion. Oxford: Oxford University Press; 2001. pp. 105–134.

- Peretz I, Gagnon L. Dissociation between recognition and emotional judgements for melodies. Neurocase. 1999;5:21–30.

- Peretz I, Gagnon L, Bouchard B. Music and emotion: perceptual determinants, immediacy, and isolation after brain damage. Cognition. 1998;68:111–141. doi: 10.1016/s0010-0277(98)00043-2.

- Peretz I, Zatorre RJ. Brain organization for music processing. Annual Review of Psychology. 2005;56:89–114. doi: 10.1146/annurev.psych.56.091103.070225.

- Singer T, Seymour B, O'Doherty J, Kaube H, Dolan RJ, Frith CD. Empathy for pain involves the affective but not sensory components of pain. Science. 2004;303:1157–1162. doi: 10.1126/science.1093535.

- Storr A. Music and the Mind. New York: Ballantine Books; 1992.

- Tranel D, Damasio H. Neuroanatomical correlates of electrodermal skin conductance responses. Psychophysiology. 1994;31:427–438. doi: 10.1111/j.1469-8986.1994.tb01046.x.

- Tsuchiya N, Adolphs R. Emotion and consciousness. Trends in Cognitive Sciences. 2007;11:158–167. doi: 10.1016/j.tics.2007.01.005.

- Winston JS, O'Doherty J, Dolan RJ. Common and distinct neural responses during direct and incidental processing of multiple facial expressions. Neuroimage. 2003;20:84–97. doi: 10.1016/s1053-8119(03)00303-3.

- Zatorre RJ, Peretz I, editors. The Biological Foundations of Music. Annals of the New York Academy of Sciences. Vol. 930. 2001.