Abstract

During motor adaptation the nervous system constantly uses error information to improve future movements. Today's mainstream models simply assume that the nervous system adapts linearly and proportionally to errors. However, not all movement errors are relevant to our own action. The environment may transiently disturb movement production; for example, a gust of wind may blow a tennis ball away from its intended trajectory. Intuitively, the nervous system should not adapt its motor plan for subsequent tennis strokes on the basis of this irrelevant movement error. We hypothesize that the nervous system estimates the relevance of each observed error and adapts strongly only to relevant errors. Here we present a Bayesian treatment of this problem. The model calculates how likely it is that an error is relevant to the motor plant and derives an ideal adaptation strategy that leads to the most precise movements. This model predicts that adaptation should be a nonlinear function of the size of an error. In reaching experiments we found strong evidence for the predicted nonlinear strategy. The model also explains published data on saccadic gain adaptation, adaptation to visuomotor rotations, and adaptation to force perturbations. Our study suggests that the nervous system constantly and effortlessly estimates the relevance of observed movement errors for successful motor adaptation.

INTRODUCTION

Our movements are affected by the ever-changing environment and by changes in our body. To move precisely, we need to adjust motor commands to counteract these changes using perceived error information. Many experimental studies have addressed how people may solve this computational problem by imposing two distinct classes of perturbations on subjects. The first class alters the visual feedback. Manipulations include wearing prism glasses that shift the view of the external world (e.g., Harris 1965; van Beers et al. 1996), moving the visual target during saccades (e.g., McLaughlin 1967), and shifting or rotating the visual display of the hand in a virtual reality environment (e.g., Baddeley et al. 2003; Cheng and Sabes 2007; Elliott 1981). The second class mechanically disturbs the movement. This includes changing the inertial properties of the moving limb (e.g., Bock 1990) or introducing a novel force field with a research robot (e.g., Scheidt et al. 2001; Shadmehr and Mussa-Ivaldi 1994). Both visual and mechanical disturbances induce movement errors to which subjects readily adapt.

The role of error feedback in motor adaptation has been emphasized in many theoretical approaches. Most studies that address how the amplitude of errors influences adaptation assume that the size of adaptation is a linear function of past errors (Kawato et al. 1987; Scheidt et al. 2001; Thoroughman and Shadmehr 2000; Wolpert and Kawato 1998). However, a simple example illustrates an important problem with this strategy. When we return a tennis ball to a corner of the court (which challenges our opponent), we perceive how precisely the ball lands (the movement error) on every stroke and adjust our motor command accordingly. However, if the ball is deflected by the opponent before landing, we should not adapt our motor command according to this error. This example illustrates that people need to take the relevance of perceived errors into account during motor adaptation, i.e., to disregard movement errors that are induced by irrelevant factors. We ask how the nervous system achieves this feat and how relevance estimation influences the relationship between error and motor adaptation.

Relevance of sensory information has previously been considered in research on perception. Cue combination studies investigate how sensory inputs from different modalities are integrated into a coherent percept. If we know that two cues, for example a moving mouth and a speech signal, have a common cause (i.e., both come from one person), then we can combine these two signals and use our visual perception to improve speech recognition (McGurk and MacDonald 1976; Munhall et al. 1996). If we see a mouth moving without coherence to ongoing speech, we will not combine the signals. It has also been found that with increasing spatial or temporal disparities, multimodal cues have diminishing influence on one another (Gepshtein and Banks 2003; Gepshtein et al. 2005; Hillis et al. 2002; Roach et al. 2006). These findings have been explained by the idea of causal inference (Ernst 2006; Knill 2003; Körding et al. 2007a), a term used extensively in cognitive science (Cheng and Novick 1992). It is believed that the nervous system interprets cues in terms of their causes (see also Treisman 1996; von Helmholtz 1954). The important implication of these studies is that when cues are very different from one another in space and time, the nervous system will infer that they are not related and should thus be processed separately. Thus the estimation of the relevance of sensory cues determines whether and how the nervous system combines cues.

Here we hypothesize that motor adaptation involves an analogous process that estimates the relevance of the error information. For example, whenever the visual system detects an error, the nervous system needs to consider two hypotheses. The error may be induced by extrinsic factors unrelated to the motor plant, such as the deflected tennis ball mentioned earlier. In this case, the nervous system should largely disregard the visual error and exhibit only a small adaptive change, mostly driven by proprioceptive feedback. Alternatively, the error may be induced by intrinsic factors within the motor plant, such as muscle fatigue. In this case, the visual error is relevant and the motor system should make adaptive changes large enough to overcome the error on future movements. Because our perception is inherently noisy, inferring the relevance of an error always happens in the presence of uncertainty, so the nervous system can never be completely certain about the relevance of visual errors. Rather, it has to estimate the probability of each of the two hypotheses using the multimodal sensory information and the disparities between the cues, and assign weight or credit to each hypothesis accordingly. Because the CNS needs to solve this relevance estimation problem under uncertainty, the appropriate approach is Bayesian statistics, which is the basic modeling tool in the present study.

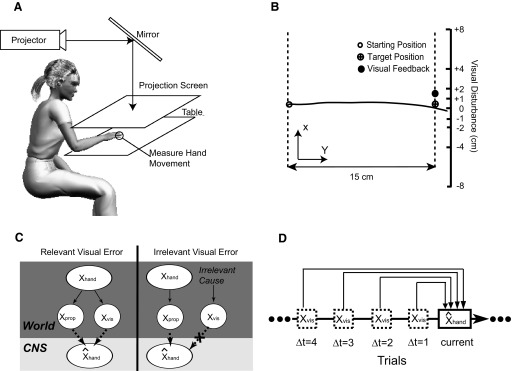

To test our hypothesis we examine how people adapt to visual perturbations while they perform reaching movements in virtual reality. The visual error feedback is perturbed with different magnitudes and we measure the adaptation as a function of the size of the perturbation (Fig. 1, A and B). We develop a Bayesian model that estimates whether visual feedback is relevant and should thus be used for motor adaptation or whether it is perturbed and should thus be ignored (Fig. 1C). The model fits the obtained data well and also explains previously published data on adaptation of saccades (Robinson et al. 2003), adaptation to visuomotor rotation (Wei et al. 2005), and to force perturbations (Fine and Thoroughman 2007). Our results show that motor adaptation is a nonlinear function of the size of the error and that this nonlinearity can be predicted by a model of relevance estimation.

FIG. 1.

Experimental setup and an exemplary movement trajectory. A: sketch of the experimental setup. The hand movement is measured by the robot and displayed as a cursor. The projector displays the cursor and the starting and target positions. Vision of the arm is occluded. B: a typical movement trajectory (solid black line) in a projection from above, along with the visual error feedback received by the subject (black dot). C: graphical model of relevance estimation. When the error is inferred to be relevant, both proprioceptive and visual cues are used to estimate the hand position. When visual cues are inferred to be irrelevant to movement production, they are disregarded and only proprioceptive cues are used. The final estimate of the hand position is a weighted average of the position estimates from both cases, where the weights are the probabilities of each case given the 2 types of sensory cues. D: illustration of the time-varying effect of visual feedback in previous trials. The current estimate of hand position is influenced by visual feedback from 4 previous trials, marked as Δt = 1, 2, 3, and 4, respectively.

METHODS

Experimental setup

Seven naïve subjects participated in the study after providing informed consent. All procedures were approved by the institutional review board of Northwestern University. All subjects were right-handed with normal or corrected-to-normal vision. The subjects were seated in front of a table and used their right hand to hold a robotic manipulandum (Phantom Haptics Premium 1.5). The movement of the hand was measured by the manipulandum and visual feedback was given in real time through a mirror-projection system (projector: NEC LT170) with a display frequency of 75 Hz (Fig. 1A). Vision of the arm and hand was occluded because the movement was performed underneath a mirror. A black cloth was draped over the shoulder of the subject to minimize residual visual information about hand movement. Each subject performed a total of 900 trials, resulting in approximately 1 h of participation.

Experimental task

The visual feedback of the hand position, the starting position, and the target were presented on the projection screen (Fig. 1B). The starting position was represented as a hollow circle at the middle of the workspace, aligned with the midline of the seated subject. The position of the hand was indicated by a cursor. Each trial started when the subject placed the cursor at the starting position. After the hand remained stationary at that position for 100 ms, a target, represented as a circle of 0.5-cm diameter, was displayed 15 cm to the right of the starting position. At the same time, the circle representing the starting position disappeared and this triggered the subject to make an accurate horizontal movement to the target.

Disturbance of the visual feedback

As soon as the subject started moving the hand toward the target, the cursor was extinguished. Feedback of the cursor's position was given only when the cursor crossed the target in the horizontal direction (y direction in Fig. 1B). This feedback was perturbed in the depth direction (x direction in Fig. 1B) by one of nine possible values (0, ±1, ±2, ±4, and ±8 cm), drawn randomly, independently, and identically on each trial. Throughout this study we use the word disturbance to denote these perturbations in depth and the word deviation to denote deviations of the actual hand in the same direction. After the movement, the feedback remained visible for 100 ms at the same position. Subsequently, the subject returned the hand to the starting position for the next trial. This kind of feedback is similar to the terminal feedback used in other visuomotor adaptation studies (Baddeley et al. 2003; Bock 1992; Cheng and Sabes 2007; Ingram et al. 2000). Subjects were instructed to “hit” the target as accurately as possible in a single reach, without corrective movements close to the target. Before starting the experiment, subjects familiarized themselves with the setup by practicing without visual disturbances for 3 min. By applying visual disturbances of nine different sizes to the actual hand position at the end of each reach, we manipulated the size of the visual error.
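The trial-by-trial disturbance schedule described above can be sketched in a few lines. This is a minimal illustration, not the software used in the study. It assumes that all nine values are equally likely on every trial, because the text says only that they were drawn randomly, independently, and identically. The function names are hypothetical.

```python
import random

# The nine possible depth disturbances (cm) applied to the terminal feedback.
DISTURBANCES_CM = (0, 1, -1, 2, -2, 4, -4, 8, -8)


def sample_disturbances(n_trials, seed=None):
    """Draw one disturbance per trial, i.i.d. across trials.

    Assumption (not stated in the text): uniform probability over the nine values.
    """
    rng = random.Random(seed)
    return [rng.choice(DISTURBANCES_CM) for _ in range(n_trials)]


def displayed_depth(hand_depth_cm, disturbance_cm):
    """Depth (x) coordinate of the feedback dot shown when the hand crosses the target."""
    return hand_depth_cm + disturbance_cm


# 900 trials per subject, as in the experiment.
schedule = sample_disturbances(900, seed=1)
print(schedule[:10])
```

Passing a seed to `random.Random` makes a schedule reproducible. Because each trial is drawn independently, a disturbance carries no information about the next one, so any compensation a subject shows must come from adaptation rather than anticipation.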

Second experiment with smaller movement amplitude

We were interested in factors that affect relevance estimation of movement errors during adaptation. One important factor is the inherent motor variance of the subject. Our model (see following text) predicts that it is easier to estimate the relevance of the visual error when motor variance is lower, because the subject can then be more certain that the movement error is not self-produced but is instead caused by irrelevant external factors. To test this hypothesis, we manipulated the motor variance in the same reaching task by asking subjects to move a smaller distance in a second experiment. We assumed that variability in the final position decreases when the movement amplitude becomes smaller (analogous to Körding et al. 2007a). All subjects who participated in the first experiment subsequently participated in the second experiment, which used the same experimental setup and protocol in terms of conditions, number of trials, and visual disturbances. The only difference was that the horizontal distance between the starting position and the target was 5 cm, compared with 15 cm in the first experiment. As a result, the visual disturbances were large relative to these smaller movements.

Ideal observer model

We developed an ideal observer model derived from the specification of a probabilistic generative model. This ideal observer estimates the probability that the visual cues are relevant for movement production, i.e., that the visual cue and the proprioceptive cue both reflect the true movement error. The result of this calculation was used to derive an estimate of the position of the hand. This estimate, in turn, was used to predict how subjects adapt. In other words, we assumed that subjects adapt in response to their estimate of the hand position rather than to the visually perceived hand position.

Generative model

We assumed that vision and proprioception provide noisy sensory cues for estimating the hand position (Fig. 1C). The proprioceptively perceived position xprop was assumed to be drawn from a Gaussian distribution N(xhand, σprop²), whereas the visually perceived position xvis was drawn from a similar distribution N(xhand, σvis²) in the case of unperturbed visual feedback. xhand is the true hand position; σprop and σvis are the SDs of proprioception and vision, respectively. Note that xvis and xprop are conditionally independent given xhand when no visual perturbation is applied. In the case of perturbed feedback, xvis was assumed to be independent of the true hand position and randomly drawn from a flat distribution. Last, we assumed that subjects do not use prior knowledge about the hand position xhand; that is, the prior over xhand is unbiased and flat.

An ideal observer will estimate the probability that visual and proprioceptive cues both reflect the hand position. The prior probabilities of relevance and irrelevance are defined as p(relevant) and p(irrelevant), respectively. Using Bayes' rule, we obtain

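$$p(\mathrm{relevant} \mid x_{\mathrm{vis}}, x_{\mathrm{prop}}) = \frac{p(x_{\mathrm{vis}}, x_{\mathrm{prop}} \mid \mathrm{relevant})\, p(\mathrm{relevant})}{p(x_{\mathrm{vis}}, x_{\mathrm{prop}})} \tag{1}$$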

Given that p(relevant) + p(irrelevant) = 1, the denominator, which is also called the partition function in Bayesian statistics and is crucial for the derivation, can be rewritten as follows

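$$p(x_{\mathrm{vis}}, x_{\mathrm{prop}}) = p(x_{\mathrm{vis}}, x_{\mathrm{prop}} \mid \mathrm{relevant})\, p(\mathrm{relevant}) + p(x_{\mathrm{vis}}, x_{\mathrm{prop}} \mid \mathrm{irrelevant})\, p(\mathrm{irrelevant}) \tag{2}$$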

Equation 2 does not imply that the CNS tries to choose between the relevant case and the irrelevant case. Given the ambiguity of the available perceptual information and inherent motor noise, the nervous system instead estimates the probability that the perceptual information is relevant. For the mathematical derivation we need to calculate the probability of the stimuli assuming hypothetical knowledge of whether the stimulus is relevant or irrelevant [represented as the conditional probability terms p(xvis, xprop|relevant) and p(xvis, xprop|irrelevant), respectively]. The optimal estimate is then a weighted combination of the results obtained under each of these two assumptions (see Eq. 2). In the relevant case the visual feedback is indicative of the position of the hand, and we calculated the joint probability of visual and proprioceptive perception using the flat prior over the hand position xhand:

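$$p(x_{\mathrm{vis}}, x_{\mathrm{prop}} \mid \mathrm{relevant}) = \int p(x_{\mathrm{vis}} \mid x_{\mathrm{hand}})\, p(x_{\mathrm{prop}} \mid x_{\mathrm{hand}})\, p(x_{\mathrm{hand}})\, dx_{\mathrm{hand}} \tag{3}$$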

Because both likelihoods are Gaussian and the prior is flat, this integral can be solved analytically. The result is a Gaussian in the cue disparity, N(xvis − xprop, σ²) [denoted as N(xvis, σ²) in the following text], where

$$\sigma^2 = \sigma_{\mathrm{vis}}^2 + \sigma_{\mathrm{prop}}^2 \tag{4}$$

depends only on the variances of the two likelihoods, because the flat prior over xhand contributes no variance term.
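The closed form can be checked numerically. Integrating the product of the two Gaussian likelihoods over xhand (Eq. 3) should give a zero-mean Gaussian in the disparity xvis − xprop with variance σvis² + σprop² (Eq. 4). The sketch below is a minimal check under stated assumptions. It represents the flat prior as an unnormalized constant density of 1 on a wide but finite grid, and the grid width, resolution, and sensory SDs are hypothetical values chosen for illustration.

```python
import math


def normal_pdf(x, mean, sd):
    """Gaussian density N(x; mean, sd^2)."""
    return math.exp(-0.5 * ((x - mean) / sd) ** 2) / (sd * math.sqrt(2.0 * math.pi))


def joint_likelihood_numeric(x_vis, x_prop, sd_vis, sd_prop, half_width=50.0, n=20001):
    """Riemann-sum approximation of Eq. 3 with an unnormalized flat prior p(x_hand) = 1."""
    step = 2.0 * half_width / (n - 1)
    total = 0.0
    for i in range(n):
        x_hand = -half_width + i * step
        total += normal_pdf(x_vis, x_hand, sd_vis) * normal_pdf(x_prop, x_hand, sd_prop)
    return total * step


def joint_likelihood_closed_form(x_vis, x_prop, sd_vis, sd_prop):
    """Gaussian in the cue disparity with variance sigma^2 = sd_vis^2 + sd_prop^2 (Eq. 4)."""
    sigma = math.sqrt(sd_vis ** 2 + sd_prop ** 2)
    return normal_pdf(x_vis - x_prop, 0.0, sigma)


# Hypothetical cue values (cm) and sensory SDs (cm).
print(joint_likelihood_numeric(2.0, 0.5, 0.5, 1.0))
print(joint_likelihood_closed_form(2.0, 0.5, 0.5, 1.0))
```

The two printed values should agree to many digits. The result depends on xvis and xprop only through their difference, which is why the text can write it as a function of the disparity.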

In the irrelevant case the visual feedback is not indicative of the position of the hand, and the probability of the sensory information conditional on the visual stimulus being irrelevant, p(xvis, xprop|irrelevant), does not depend on xvis. This is because xvis and xprop are conditionally independent in the irrelevant case, so p(xvis, xprop|irrelevant) = p(xvis|irrelevant)p(xprop|irrelevant). p(xvis|irrelevant) does not depend on xvis because we assumed a flat distribution over the irrelevant visual feedback, and p(xprop|irrelevant) does not depend on xvis either because xvis carries no information in the irrelevant case. Thus the term p(xvis, xprop|irrelevant) is constant in xvis. By substituting Eqs. 2 and 3 into Eq. 1 and dividing the numerator and the denominator by p(relevant), we can thus rewrite Eq. 1 as

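$$p(\mathrm{relevant} \mid x_{\mathrm{vis}}, x_{\mathrm{prop}}) = \frac{\mathcal{N}(x_{\mathrm{vis}}, \sigma^2)}{\mathcal{N}(x_{\mathrm{vis}}, \sigma^2) + c} \tag{5}$$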

where c = p(xvis, xprop|irrelevant)p(irrelevant)/p(relevant) is a constant in xvis and serves as one of the free parameters in the model.
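Equation 5 can be written as a short function. The sketch below also computes the same posterior directly from Eqs. 1 and 2, using an explicit prior and a constant likelihood for the irrelevant case, to show that folding these into the single constant c changes nothing. The prior, the irrelevant-case likelihood, and σ are hypothetical illustration values, not fitted parameters.

```python
import math


def gaussian(x, var):
    """Zero-mean Gaussian density with variance var, evaluated at x."""
    return math.exp(-0.5 * x * x / var) / math.sqrt(2.0 * math.pi * var)


def p_relevant_eq5(x_vis, x_prop, sigma, c):
    """Eq. 5: posterior probability that the visual cue is relevant."""
    n = gaussian(x_vis - x_prop, sigma ** 2)
    return n / (n + c)


def p_relevant_bayes(x_vis, x_prop, sigma, prior_relevant, lik_irrelevant):
    """Eqs. 1 and 2 with an explicit prior and a constant irrelevant-case likelihood."""
    num = gaussian(x_vis - x_prop, sigma ** 2) * prior_relevant
    den = num + lik_irrelevant * (1.0 - prior_relevant)
    return num / den


# Hypothetical values for illustration only.
prior, k = 0.9, 0.01
c = k * (1.0 - prior) / prior  # c = p(x_vis, x_prop | irrelevant) p(irrelevant) / p(relevant)
for disparity in (0.0, 2.0, 8.0):
    print(disparity,
          p_relevant_eq5(disparity, 0.0, 1.5, c),
          p_relevant_bayes(disparity, 0.0, 1.5, prior, k))
```

Both columns should match. The probability of relevance stays close to 1 for small disparities and falls toward 0 once the Gaussian term becomes small compared with c.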

The subjects were instructed to minimize the distance to the target and we thus assumed that the cost function they used is the squared error between the estimated position and the target position (the distance in depth direction), as shown by

$$\mathrm{Cost} = \left(\hat{x}_{\mathrm{hand}} - x_{\mathrm{tar}}\right)^2 \tag{6}$$

where xtar is the target position; in our experiment xtar = 0. Because the cost function is quadratic in the estimated position, the estimate that minimizes it is the weighted mean of the position estimates from the cases of relevant and irrelevant visual feedback (Fig. 1C)

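$$\hat{x}_{\mathrm{hand}} = p(\mathrm{relevant} \mid x_{\mathrm{vis}}, x_{\mathrm{prop}})\, \hat{x}_{\mathrm{relevant}} + p(\mathrm{irrelevant} \mid x_{\mathrm{vis}}, x_{\mathrm{prop}})\, \hat{x}_{\mathrm{irrelevant}} \tag{7}$$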

where x̂relevant and x̂irrelevant are the optimal estimates of hand position when the nervous system is certain that the visual feedback is relevant and irrelevant, respectively. The statistically optimal way to integrate multimodal sensory cues, under the assumption that all the cues are relevant, is to weight the cues in proportion to their inverse variances (e.g., Ernst and Banks 2002). When the visual cue is irrelevant, only the proprioceptive cue remains. Thus we obtain

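$$\hat{x}_{\mathrm{relevant}} = \frac{\sigma_{\mathrm{prop}}^2\, x_{\mathrm{vis}} + \sigma_{\mathrm{vis}}^2\, x_{\mathrm{prop}}}{\sigma_{\mathrm{prop}}^2 + \sigma_{\mathrm{vis}}^2}, \qquad \hat{x}_{\mathrm{irrelevant}} = x_{\mathrm{prop}} \tag{8}$$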

If we are interested only in the expected value of the estimate of the hand position, we can drop the term that is proportional to the proprioceptive position xprop, because proprioception is unbiased and the hand position is on average very close to zero (see Fig. 2A)

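$$\langle \hat{x}_{\mathrm{relevant}} \rangle = \frac{\sigma_{\mathrm{prop}}^2}{\sigma_{\mathrm{prop}}^2 + \sigma_{\mathrm{vis}}^2}\, \langle x_{\mathrm{vis}} \rangle \tag{9}$$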

where 〈·〉 denotes the average over all trials.

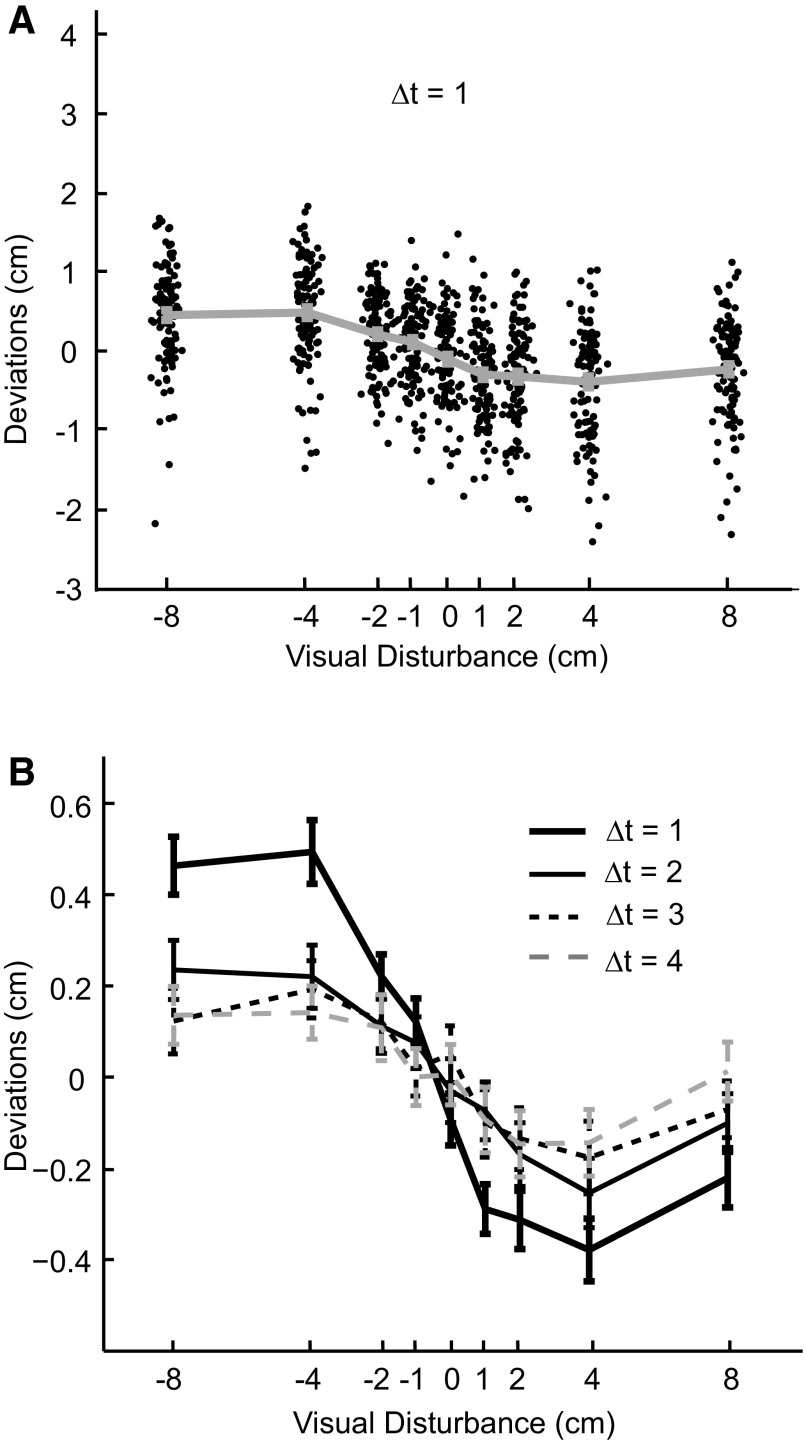

FIG. 2.

The data from a typical subject. A: deviations from all trials plotted as a function of the visual disturbance on the previous trial (Δt = 1). Each dot stands for a single reach, and the gray error bars show the mean and SE (which is small) for each visual disturbance type. Data points are spread in the x direction for better visibility. B: mean deviations plotted as a function of the size of visual disturbances for the same typical subject. Each line shows data from trials of a different lag.

In psychophysical experiments it is never possible to measure the subjects' percepts (i.e., xvis and xprop) directly. However, if we are interested only in the average estimate as a function of visual error size, Eq. 7 can be rewritten by dropping the second term, since x̂irrelevant is on average zero. We can also simplify Eq. 8 by recognizing that, on average, it is a multiplication of xvis by a scaling factor σprop²/(σprop² + σvis²) (Eq. 9). Then, by combining Eqs. 5, 7, and 9, and acknowledging the fact that xprop is on average unbiased, we obtain

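$$\langle \hat{x}_{\mathrm{hand}} \rangle = s\, x_{\mathrm{vis}}\, \frac{\mathcal{N}(x_{\mathrm{vis}}, \sigma^2)}{\mathcal{N}(x_{\mathrm{vis}}, \sigma^2) + c} \tag{10}$$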

where the free parameter s characterizes the magnitude of the influence of a visual disturbance on future trials and absorbs the scaling factor σprop²/(σprop² + σvis²) from Eq. 9. The variable σ characterizes the typical distance up to which a disturbance can still be explained by the assumption of relevant visual feedback, and c is a free parameter, constant in xvis, that shapes p(relevant|xvis, xprop) (see Eq. 5).
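To see why Eq. 10 predicts linear adaptation for small errors and sublinear adaptation for large ones, the function can be evaluated at the nine disturbance sizes used in the experiment. The parameter values below are hypothetical and were chosen only to show the shape of the curve. They are not the fitted values from this study.

```python
import math


def gaussian(x, var):
    """Zero-mean Gaussian density with variance var, evaluated at x."""
    return math.exp(-0.5 * x * x / var) / math.sqrt(2.0 * math.pi * var)


def eq10_mean_estimate(x_vis, s, sigma, c):
    """Eq. 10: s * x_vis * N(x_vis, sigma^2) / (N(x_vis, sigma^2) + c)."""
    n = gaussian(x_vis, sigma ** 2)
    return s * x_vis * n / (n + c)


# Hypothetical parameters (s is negative so that the predicted effect opposes the disturbance).
S, SIGMA, C = -0.05, 3.0, 0.02
for d in (-8, -4, -2, -1, 0, 1, 2, 4, 8):
    print(f"disturbance {d:+d} cm -> predicted effect {eq10_mean_estimate(d, S, SIGMA, C):+.4f}")
```

With these illustrative values the effect grows almost in proportion to the disturbance up to about 2 cm. It grows less than proportionally at 4 cm and actually shrinks at 8 cm, where the disturbance is mostly attributed to an irrelevant cause.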

Estimation of the hand position is affected not only by the visual error on the current trial but also by those from previous trials (Fig. 1D). To capture this time-varying effect, we assessed visual disturbances up to four trials (trial lags Δt = 1, 2, 3, 4) before the current trial. We chose four trials because, in our initial data analysis, visual disturbances did not strongly influence performance beyond four trials. We redefine s as s(Δt), a function of lag Δt that captures how the influence of a visual error changes over time. σ and c are constrained to be the same across lags because they are not assumed to be time-varying. Taken together, the estimate of hand position on the current trial is determined by the combined effect of the four previous trials, and Eq. 10 is rewritten as

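$$\langle \hat{x}_{\mathrm{hand}}(t) \rangle = \sum_{\Delta t = 1}^{4} s(\Delta t)\, x_{\mathrm{vis}}(t - \Delta t)\, \frac{\mathcal{N}\big(x_{\mathrm{vis}}(t - \Delta t), \sigma^2\big)}{\mathcal{N}\big(x_{\mathrm{vis}}(t - \Delta t), \sigma^2\big) + c} \tag{11}$$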

All subsequent analysis was done with this reduced model. We assume subjects used this estimation of hand position to guide the hand to the target in the subsequent trials. We maximized the probability of the data (the deviation of the hand), given the model, to infer the parameters for each subject separately.
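The reduced model of Eq. 11 and a likelihood for fitting it can be sketched as follows. Two assumptions are not stated in the text and are labeled here: lags that fall before the first trial are skipped, and the residuals between observed deviations and predictions are treated as i.i.d. Gaussian. Under that Gaussian assumption with a fixed noise SD, maximizing the likelihood over s(Δt), σ, and c amounts to least squares. The optimizer itself is not shown, and all names and values are hypothetical.

```python
import math


def gaussian(x, var):
    """Zero-mean Gaussian density with variance var, evaluated at x."""
    return math.exp(-0.5 * x * x / var) / math.sqrt(2.0 * math.pi * var)


def relevance_weighted(x_vis, sigma, c):
    """x_vis times the probability of relevance from Eq. 5."""
    n = gaussian(x_vis, sigma ** 2)
    return x_vis * n / (n + c)


def predict_eq11(disturbances, s_by_lag, sigma, c):
    """Eq. 11 for every trial; s_by_lag[k] holds s(Δt = k + 1).

    Assumption: lags that fall before the first trial are skipped.
    """
    preds = []
    for t in range(len(disturbances)):
        total = 0.0
        for k, s in enumerate(s_by_lag):
            lag = k + 1
            if t - lag >= 0:
                total += s * relevance_weighted(disturbances[t - lag], sigma, c)
        preds.append(total)
    return preds


def gaussian_log_likelihood(deviations, preds, noise_sd):
    """Log probability of observed deviations, assuming i.i.d. Gaussian residuals."""
    var = noise_sd ** 2
    return sum(-0.5 * (d - p) ** 2 / var - 0.5 * math.log(2.0 * math.pi * var)
               for d, p in zip(deviations, preds))


# A single 4-cm disturbance followed by undisturbed trials (hypothetical parameters).
print(predict_eq11([4.0, 0, 0, 0, 0, 0], [-0.05, -0.03, -0.02, -0.01], 3.0, 0.02))
```

The example shows the impulse response of the model: one disturbance affects the next four trials with weights s(1) to s(4) and has no effect after that.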

RESULTS

In our experiment subjects moved their hands horizontally to a fixed target. The visual feedback of the hand position was displayed only at the end of the trial and this feedback was randomly disturbed in the depth direction by varying distances for different trials. This manipulation created a discrepancy between the visual cue and the proprioceptive cue about the actual hand position. Adaptation to a visual disturbance on one trial should lead to a compensatory deviation of the hand into the opposite direction in the following trials. The deviation of the hand position is the measure of adaptation in our task. We sought to test how adaptation to visual errors is related to the size of the error and whether relevance estimation of the error can account for their relationship.

Adaptation following a visual disturbance

Deviations of the hand position are shown in Fig. 2A as a function of the preceding visual perturbations for a typical subject. The deviations were affected both by the preceding disturbances and by considerable trial-to-trial noise. However, we found that positive disturbances on average led to negative deviations on the subsequent trial, and negative disturbances on average led to positive deviations (Fig. 2, A and B), as expected by virtually all models of adaptation. This deviation in the direction opposite to the preceding disturbance indicates adaptation that compensates for errors. Importantly, we found that the average size of the deviation depended nonlinearly on the size of the preceding disturbance. Additionally, each deviation was affected not only by the single preceding disturbance but also by the history of disturbances. A disturbance had a strong effect on the immediately subsequent trial (Δt = 1) and progressively weaker effects on later trials (Fig. 2B).

Of particular interest for the current study is the relationship between the size of the disturbance and adaptation on subsequent trials. A zero disturbance had no detectable effect on subsequent trials: the deviation was not significantly different from zero (P = 0.287, t-test). For small disturbances between −2 and +2 cm, the influence on subsequent trials was approximately linear in the size of the disturbance. For larger disturbances, however, this influence became sublinear.

We proceeded to analyze data from all seven subjects (Fig. 3). Similar patterns were found across subjects: the size of the visual disturbance had a nonlinear influence on subsequent trials, and the influence of previous disturbances decreased over time. Visual inspection suggested that adaptation was linear for disturbances from −2 to 2 cm and became sublinear beyond this range. To quantify this effect we fit a linear function to the range −2 to 2 cm and found that the average slope for lag 1 (Δt = 1) trials was −0.049 ± 0.006 (mean ± SE over subjects). A linear fit to the full range gave an average slope of −0.030 ± 0.005 (mean ± SE). The difference between these two slopes was significant (P < 0.05, paired t-test). Thus adaptation was sublinear with respect to error size over the full range of the data. It also appeared that the nonlinear shapes for different lags were scaled versions of each other. Overall, these findings demonstrate that the classical assumption of linear adaptation (Kawato 2002; Scheidt et al. 2001; Thoroughman and Shadmehr 2000) is well justified for small errors but needs to be revised for large errors.
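The slope comparison above can be sketched as two ordinary least-squares fits, one restricted to disturbances within ±2 cm and one over all nine values. Because no per-subject data are given here, the sketch uses a synthetic curve generated from Eq. 10 with hypothetical parameters as a stand-in for one subject's mean deviations. In the study, one such pair of slopes per subject would then enter a paired t-test, which is not shown.

```python
import math


def ols_slope(xs, ys):
    """Ordinary least-squares slope of ys on xs (with an intercept)."""
    mx = sum(xs) / len(xs)
    my = sum(ys) / len(ys)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    return sxy / sxx


def gaussian(x, var):
    """Zero-mean Gaussian density with variance var, evaluated at x."""
    return math.exp(-0.5 * x * x / var) / math.sqrt(2.0 * math.pi * var)


def synthetic_mean_deviation(d, s=-0.05, sigma=3.0, c=0.02):
    """Stand-in for one subject's mean lag-1 deviation (Eq. 10, hypothetical parameters)."""
    n = gaussian(d, sigma ** 2)
    return s * d * n / (n + c)


disturbances = [-8, -4, -2, -1, 0, 1, 2, 4, 8]
means = [synthetic_mean_deviation(d) for d in disturbances]
central = [(d, m) for d, m in zip(disturbances, means) if abs(d) <= 2]
slope_central = ols_slope([d for d, _ in central], [m for _, m in central])
slope_full = ols_slope(disturbances, means)
print(slope_central, slope_full)
```

For a sublinear curve, the full-range slope is smaller in magnitude than the central slope, which is the same pattern as the two averaged slopes reported above.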

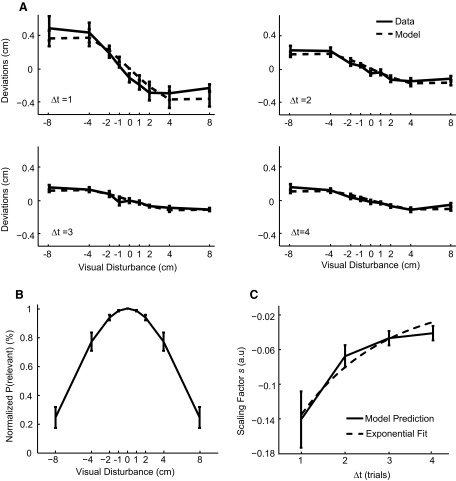

FIG. 3.

Data and model predictions for the first experiment with 15-cm movement amplitude from all subjects. Error bars denote the SEs over subjects. A: mean deviations and the corresponding model predictions are plotted as a function of the size of visual disturbances for all subjects. Different subplots are for trials from different trial lags Δt. B: the normalized probability of relevant error as a function of the size of visual disturbances. C: the estimated scaling factor is plotted as a function of trial lags. An exponential function is fitted and shown as a dashed line.

Fitting with the relevance estimation model

To understand how the disturbance size affects adaptation, we examined the relevance estimation model explained in the introduction (details in methods). The model proposes that the nervous system estimates how likely it is that an error has been caused by the motor system rather than by the virtual reality system and uses this estimate to adapt optimally. Across subjects, the model closely fit the behavior (Fig. 3A) and explained 90.8 ± 1.5% of the variance. A linear model (one two-parameter linear fit for the data of each lag) explained only 68.7 ± 4.9% of the total variance. Note that the linear models for all lags together have eight free parameters, compared with six parameters in our relevance estimation model. In a direct comparison, the log-likelihood ratio favored the relevance estimation model: the relative log likelihood of the linear model was −58.8 ± 13.4 when the log likelihood of the relevance estimation model is set to 0. To take model complexity into account, we used the Bayesian information criterion (BIC; see Burnham and Anderson 2002) and found that the relevance estimation model had a BIC that was lower than that of the linear model by 66.0 ± 13.4. The model thus provided a good fit to our data and was preferable on statistical grounds.
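The two model-comparison measures used above, variance explained and BIC, can be sketched as small helper functions. BIC is written here in its standard form, k ln n − 2 ln L, where lower values are better. Some texts scale it differently, and the text does not state which convention produced the reported difference, so this is illustrative only. The log likelihoods and the number of observations in the usage example are made-up numbers. Only the parameter counts (6 and 8) come from the text.

```python
import math


def variance_explained(observed, predicted):
    """Fraction of variance explained: 1 - SS_residual / SS_total."""
    mean = sum(observed) / len(observed)
    ss_tot = sum((y - mean) ** 2 for y in observed)
    ss_res = sum((y - p) ** 2 for y, p in zip(observed, predicted))
    return 1.0 - ss_res / ss_tot


def bic(log_likelihood, n_params, n_obs):
    """Bayesian information criterion in the form k ln(n) - 2 ln L; lower is better."""
    return n_params * math.log(n_obs) - 2.0 * log_likelihood


# Made-up log likelihoods and sample size; parameter counts follow the text
# (6 for the relevance estimation model, 8 for the four two-parameter linear fits).
ll_relevance, ll_linear, n_obs = -500.0, -540.0, 896
delta = bic(ll_linear, 8, n_obs) - bic(ll_relevance, 6, n_obs)
print(delta)  # positive values favour the relevance estimation model
```

The extra parameters of the linear model add a penalty on top of its lower likelihood. When the simpler model also fits better, both terms favour the simpler model.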

We proceeded to analyze which properties of the model were involved in obtaining these fits. The model parameters were inferred for each subject separately. Two factors, N(xvis, σ²) and s(Δt), are central to the way our model predicts adaptation. The first factor, N(xvis, σ²), specifies that large errors should lead to a low probability of relevance. The second factor, s(Δt), specifies the way information is integrated over time. N(xvis, σ²)/[N(xvis, σ²) + c] is the probability of relevance p(relevant|xvis, xprop) estimated by the subject (Eq. 5). We normalized this term so that its value is 1 for a 0-cm visual disturbance, which made it easy to compare among subjects for plotting and statistical analysis. The normalized probability of relevance varied systematically with the size of the error (Fig. 3B): as expected by the model, it decreased with increasingly large disturbances. The second factor is the way the influence of a disturbance evolves over time. A full normative model of motor adaptation would specify how properties of the body (such as the fatigue level of the muscles) change over time (Baddeley et al. 2003; Körding et al. 2007b; Korenberg and Ghahramani 2002). Such models approximately weight past trials in an exponential fashion, so that more recent trials have more weight. Our model allows a time-varying scaling factor s(Δt) to capture this effect. The scaling factor indeed decreased roughly exponentially with increasing trial lag Δt (Fig. 3C; fitted exponential curve with R² = 0.948 and 2 degrees of freedom). Taken together, we found that adaptation was affected both by the error size and by the time elapsed since the error appeared.
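An exponential decay of s(Δt) over the four lags can be fit with a few lines of code. The text does not say how its exponential was fit, so this sketch makes an assumption: it uses a log-linear least-squares fit, which requires strictly positive values, and therefore fits magnitudes when the scaling factors are negative. It then reports R² on the original scale. The example magnitudes are hypothetical.

```python
import math


def fit_exponential(lags, values):
    """Fit values ≈ a * exp(-lag / tau) by least squares on log(values).

    Assumption: a log-linear fit; values must be strictly positive and not all equal.
    """
    xs = list(lags)
    ys = [math.log(v) for v in values]
    mx = sum(xs) / len(xs)
    my = sum(ys) / len(ys)
    slope = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / sum((x - mx) ** 2 for x in xs)
    intercept = my - slope * mx
    return math.exp(intercept), -1.0 / slope


def r_squared(observed, predicted):
    """Coefficient of determination on the original (not log) scale."""
    mean = sum(observed) / len(observed)
    ss_tot = sum((y - mean) ** 2 for y in observed)
    ss_res = sum((y - p) ** 2 for y, p in zip(observed, predicted))
    return 1.0 - ss_res / ss_tot


# Hypothetical magnitudes |s(Δt)| for lags 1-4.
lags = [1, 2, 3, 4]
magnitudes = [0.050, 0.031, 0.017, 0.011]
a, tau = fit_exponential(lags, magnitudes)
fitted = [a * math.exp(-k / tau) for k in lags]
print(a, tau, r_squared(magnitudes, fitted))
```

With four lags and two parameters (a and τ), the fit has 2 degrees of freedom. A nonlinear least-squares fit on the original scale would weight the lags differently and could give slightly different parameters.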

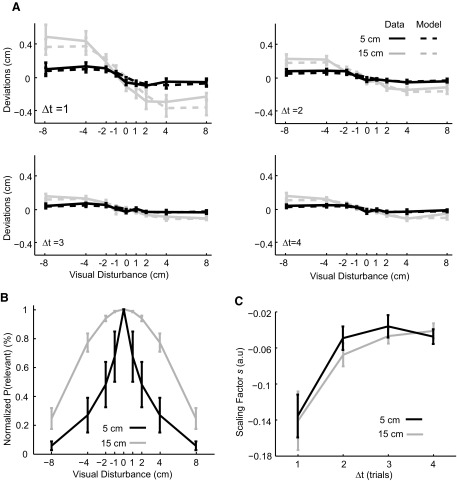

Second experiment

The relevance estimation model predicts that the size of an error relative to motor variance determines relevance estimation and thus the nonlinearity of motor adaptation. To test this prediction we introduced a second experiment in which subjects produced hand movements that were smaller (5 cm) than those in the first experiment (15 cm). Motor noise and variability in the final position decrease when the movement amplitude becomes smaller (Fitts 1954; Harris and Wolpert 1998). We analyzed all trials immediately following trials without a visual disturbance and found that the SDs of the deviations were 0.55 ± 0.07 cm for 15-cm movements and 0.29 ± 0.045 cm for 5-cm movements. We used this measure to approximate motor variance. The variance of the endpoint deviation was thus significantly smaller for 5-cm movements than for 15-cm movements (paired t-test, P < 0.01). Because the disturbances were larger relative to ongoing motor errors, our model predicted that subjects should be more likely to infer that a visual error had been caused by a visual disturbance, especially for large disturbances. Only for small disturbances should subjects assume that visual errors are relevant for movement production.

In this second experiment adaptation was again a nonlinear function of the disturbance (Fig. 4A, black). However, the shape of the nonlinear adaptation curve was markedly different, and the range of linear adaptation was much smaller (compare with Fig. 4A, gray). The model fits indicated that the probability of inferring a common cause was generally lower than in the first experiment (Fig. 4B). The probability dropped with increasingly large visual disturbances, and this drop was faster than in the first experiment, which led to a sharper curve. To compare this probability between the two experiments, we performed a 2 (experiment) × 9 (visual disturbance) two-way ANOVA on the normalized probability of relevance. The interaction effect was not significant (P = 0.11), and the main effect of visual disturbance was significant [F(8,108) = 19.92, P < 0.0001]. Importantly, the main effect of experiment was also significant [F(1,108) = 53.52, P < 0.0001], which implies that on average subjects had lower estimates of relevance when their movements were shorter. To quantify the range of error sizes within which subjects infer that errors are relevant, we calculated the error size at which the normalized probability drops to 50% of its maximal value. This spatial scale for relevance detection was 6.0 ± 1.4 cm for the 15-cm experiment and 2.6 ± 2.1 cm for the 5-cm experiment, and a paired t-test confirmed that the difference between the two experiments was significant (P < 0.01). As in the first experiment, the time-varying scaling factor s(Δt) decreased roughly exponentially with increasing lag Δt. Interestingly, this scaling factor was remarkably similar to that from the first experiment (Fig. 4C), as predicted by our model.
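The 50% spatial scale can be computed from fitted values of σ and c. The sketch below finds it by bisection. This works because the normalized probability of relevance decreases monotonically with |xvis|: the Gaussian term shrinks as the disturbance grows. Under the form of Eq. 5, the crossing also has a closed form, d = σ·sqrt(2 ln(N₀/c + 2)), where N₀ = N(0, σ²). This follows from setting the normalized value to 0.5, and the test below uses it as a cross-check. The σ and c values are hypothetical.

```python
import math


def gaussian(x, var):
    """Zero-mean Gaussian density with variance var, evaluated at x."""
    return math.exp(-0.5 * x * x / var) / math.sqrt(2.0 * math.pi * var)


def p_relevant(d, sigma, c):
    """Eq. 5 as a function of the visual disturbance d."""
    n = gaussian(d, sigma ** 2)
    return n / (n + c)


def normalized_relevance(d, sigma, c):
    """Probability of relevance scaled so that it equals 1 for a 0-cm disturbance."""
    return p_relevant(d, sigma, c) / p_relevant(0.0, sigma, c)


def half_relevance_scale(sigma, c, tol=1e-9):
    """Disturbance size (>= 0) at which the normalized relevance falls to 0.5, by bisection."""
    lo, hi = 0.0, sigma
    while normalized_relevance(hi, sigma, c) > 0.5:  # widen until the crossing is bracketed
        hi *= 2.0
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if normalized_relevance(mid, sigma, c) > 0.5:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


# Hypothetical parameters: a narrower Gaussian term gives a smaller spatial scale.
for sigma in (1.5, 3.0):
    print(sigma, half_relevance_scale(sigma, 0.02))
```

In this parameterization the spatial scale depends on both σ and c. Comparing the scale between the two experiments therefore summarizes both fitted parameters in a single, interpretable number in centimeters.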

FIG. 4.

Data and model predictions for the second experiment with 5-cm movement amplitudes from all subjects. Error bars denote the SEs over subjects. Data and model predictions from the first experiment are plotted in gray lines for direct comparison. A: mean deviations from the data and corresponding model predictions are plotted as a function of the size of visual disturbances for all subjects. Different subplots are for trials from different trial lags Δt. B: the normalized probability of relevant error is plotted as a function of the size of visual disturbances. C: the estimated scaling factor is plotted as a function of trial lags.

DISCUSSION

In this study we have introduced a relevance estimation model that estimates whether an error is caused by factors within the body or by a disturbance in the extrinsic world. The model assumes that sensory signals and movement production are noisy, so estimating whether an error is relevant is an inference problem under uncertainty. Its specific assumptions are Gaussian noise in vision and proprioception, a cost function that minimizes the squared error to the target, and independent generative processes for visual and proprioceptive feedback when error information is irrelevant. The first two assumptions have been widely used in sensorimotor studies. The third is intuitive for our experimental setup: when the visual error is artificially perturbed in virtual reality, it does indeed arise from a process separate from the body. The model estimates hand position from the history of error information and uses this estimate to guide subsequent movements, although it does not address the details of motor execution. It predicts that adaptation is linear for small errors but sublinear for large errors. By systematically perturbing visual feedback and measuring the subsequent adaptation, we have demonstrated that there is indeed a nonlinear relationship between error size and the magnitude of adaptation. The model also predicts that adaptation to errors of a given magnitude should be smaller when movement variability is reduced. This prediction was confirmed by our second experiment, which differed from the first only in its smaller movement amplitude.
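A minimal sketch of the two qualitative predictions restated above, under assumptions that are ours rather than the paper's: the correction on the next trial is taken to be the error weighted by its posterior probability of relevance and by a hypothetical scaling factor `s`, and relevance follows an illustrative Gaussian (relevant) versus uniform (irrelevant) mixture with made-up parameters `sigma`, `prior`, and `width`. It omits the model's estimation of hand position from the error history and the lag dependence s(Δt).

```python
import math


def p_relevant(error, sigma, prior=0.5, width=30.0):
    """Posterior probability of relevance under a hypothetical Gaussian (relevant)
    versus uniform (irrelevant) mixture; parameters are illustrative, not fitted."""
    like_rel = prior * math.exp(-0.5 * (error / sigma) ** 2) / (sigma * math.sqrt(2.0 * math.pi))
    like_irr = (1.0 - prior) / width
    return like_rel / (like_rel + like_irr)


def adaptation(error, sigma, s=0.3):
    """Trial-by-trial correction: relevance-weighted error times a hypothetical scaling factor s."""
    return s * p_relevant(error, sigma) * error


# Higher variability (longer movements) vs. lower variability (shorter movements); values are made up.
for sigma in (2.0, 1.0):
    curve = [round(adaptation(e, sigma), 3) for e in (0.5, 1, 2, 3, 4, 6, 8)]
    print(f"sigma = {sigma}: {curve}")
```

Reading across each printed row, the correction grows roughly in proportion to small errors, peaks, and then shrinks for large errors; at a fixed 3-cm error the low-variability curve adapts less.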

How the nervous system uses error information has been studied extensively (e.g., Wolpert and Ghahramani 2004). Most models of motor adaptation have assumed a linear dependence between adaptation and error size (Kawato 2002; Scheidt et al. 2001; Thoroughman and Shadmehr 2000). Several recent experimental studies, although they did not offer a theoretical model, have found evidence for a nonlinear relationship between the size or the rate of adaptation and the size of the error (Fine and Thoroughman 2007; Robinson et al. 2003; Wei et al. 2005). Fine and Thoroughman (2007) investigated how people adapted to a velocity-dependent force field that perturbed a straight reaching movement. The gain of the force field, which determined the strength of the perturbing force, changed randomly from trial to trial. In one of the experimental conditions, the random field perturbed the movement trajectory either to the left or to the right, and subjects counteracted this perturbation by moving in the opposite direction on the next trial. This adaptation scaled nonlinearly with the perturbation amplitude, a pattern similar to our results (Fig. 5C).

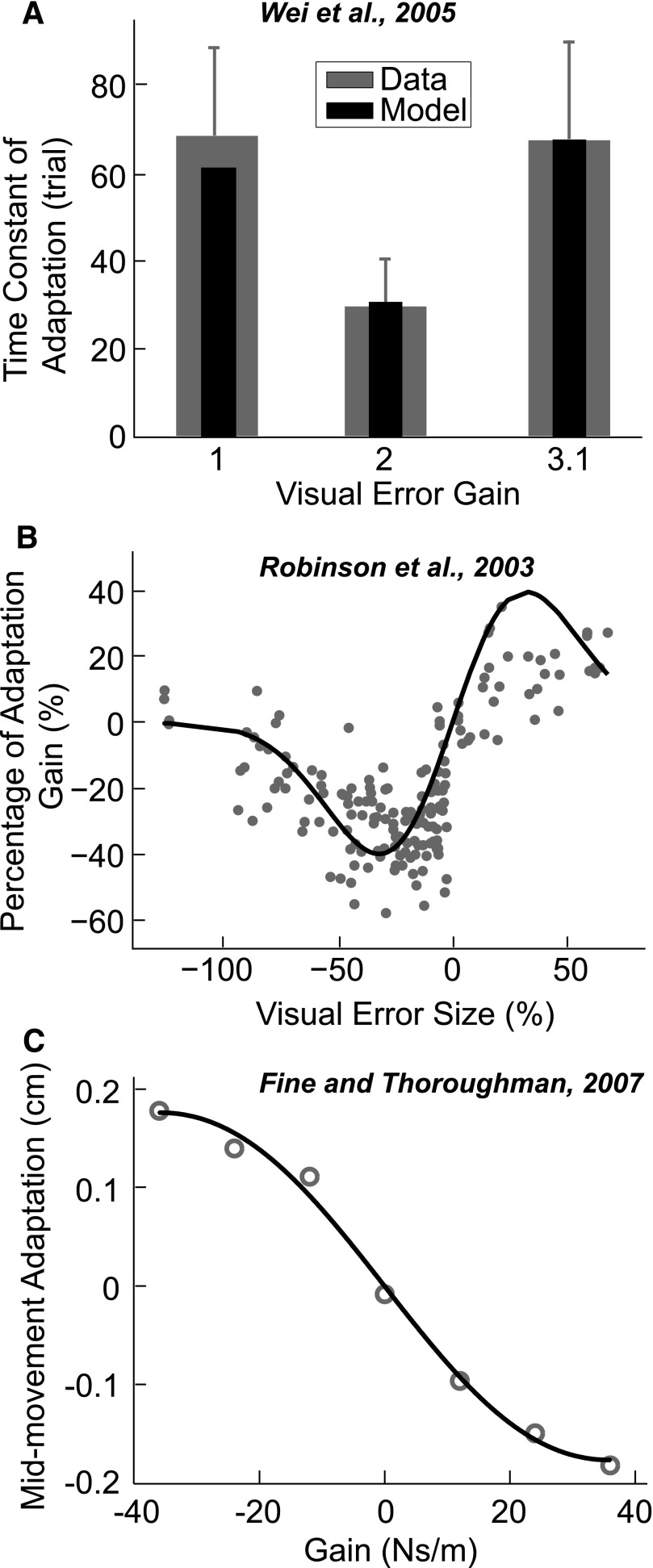

FIG. 5.

Empirical data from three other adaptation studies and the corresponding predictions of the relevance estimation model. A: the study by Wei et al. (2005): the adaptation rate in a visuomotor adaptation task is plotted as a function of visual error gain (gray bars). The black bars denote predictions from the relevance estimation model. B: the study by Robinson et al. (2003): the adaptation gain in saccades is plotted as a function of the visual error size. The line is the prediction from the relevance estimation model. C: the study by Fine and Thoroughman (2007): the amount of adaptation in reaching movements is plotted as a function of the gain of the viscous perturbation. The gray circles are the data from the paper and the black line is the prediction from the relevance estimation model.

Wei and colleagues (2005) investigated adaptation in a virtual-reality reaching task in which the visual representation of the hand was rotated by 30°. Reaching movements were curved on initial exposure to this visuomotor rotation and gradually became straight over subsequent training sessions. The movement error was quantified as the deviation from a straight line. In some conditions, the visual error feedback was scaled by a gain factor of 1, 2, or 3.1. Subjects adapted to the visuomotor rotation and, with training, achieved straight-line reaching trajectories. However, the rate of adaptation increased when the gain was raised from 1 to 2 but decreased when it was raised further from 2 to 3.1. This finding suggests that the effect of visual error on adaptation rate is a nonlinear function of error size (Fig. 5A).

Robinson et al. (2003) studied saccadic adaptation in monkeys by systematically varying the size of the visual errors introduced after saccade initiation. They shifted the visual target once each saccade had started and kept the distance (error) between the eye position and the initial target position constant within each training session. This visual error elicited a change in saccade amplitude that compensated for the error. When the error was expressed as a percentage of the initially intended saccade size, it had a nonlinear relationship with the adaptation gain (Fig. 5B).

Although these studies used different experimental paradigms, with different tasks (arm movements and saccades) and different types of perturbations (visual and mechanical disturbances), the nonlinear relationship between adaptation and error size persisted across all of them. These nonlinear relationships can be explained by our relevance estimation model. We kept the basic form of the model, with σ capturing the nonlinear relationship between error size and adaptation. The scaling factor s was reduced to a single scalar (instead of a vector of four values for different trial lags Δt) because these studies did not analyze the time-varying effect of errors and dealt with adaptation on only a single timescale. The model explains 99.4 and 65% of the total variance in the data reported by Fine and Thoroughman (2007) and Robinson et al. (2003), respectively (see gray symbols in Fig. 5). No variance-explained value can be reported for the study by Wei et al. (2005) because only average data were available.
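The reduced fit described above, with σ and a single scalar s, can be sketched as follows. The procedure is an assumption for illustration and not necessarily how the fits were done: σ is grid-searched, s is solved in closed form by least squares for each candidate σ (the prediction is linear in s), and the percentage of variance explained is 100 × (1 − SS_res/SS_tot). The relevance term again uses a hypothetical Gaussian-versus-uniform mixture (`prior`, `width` are made up), and no data from the cited studies are included.

```python
import math


def relevance_adaptation(error, sigma, prior=0.5, width=30.0):
    """Unscaled prediction P(relevant | e) * e under an assumed Gaussian-vs-uniform
    mixture (hypothetical parameters)."""
    like_rel = prior * math.exp(-0.5 * (error / sigma) ** 2) / (sigma * math.sqrt(2.0 * math.pi))
    like_irr = (1.0 - prior) / width
    return like_rel / (like_rel + like_irr) * error


def fit_scalar_s(errors, observed, sigma):
    """Least-squares s for observed ~ s * f(e); closed form because the model is linear in s."""
    f = [relevance_adaptation(e, sigma) for e in errors]
    return sum(y * fi for y, fi in zip(observed, f)) / sum(fi * fi for fi in f)


def variance_explained(observed, predicted):
    """Percentage of total variance explained: 100 * (1 - SS_res / SS_tot)."""
    mean = sum(observed) / len(observed)
    ss_tot = sum((y - mean) ** 2 for y in observed)
    ss_res = sum((y - p) ** 2 for y, p in zip(observed, predicted))
    return 100.0 * (1.0 - ss_res / ss_tot)


def fit_model(errors, observed, sigmas):
    """Grid search over sigma; s is solved in closed form for each candidate.

    Returns (best_sigma, best_s, percent_variance_explained).
    """
    best = None
    for sigma in sigmas:
        s = fit_scalar_s(errors, observed, sigma)
        pred = [s * relevance_adaptation(e, sigma) for e in errors]
        r2 = variance_explained(observed, pred)
        if best is None or r2 > best[2]:
            best = (sigma, s, r2)
    return best
```

Solving s analytically leaves only a one-dimensional search over σ, which keeps the sketch short; a general-purpose optimizer over both parameters would serve equally well.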

The nonlinear relationship between error and adaptation might be taken as an indication that human subjects are insensitive to outliers (such as very large movement errors) in sensorimotor tasks. A previous study found that the loss function of sensorimotor learning is insensitive to outliers (Körding and Wolpert 2004). In that task, subjects indicated where they judged the “center” of a distribution to be. By comparing candidate loss functions, the authors showed that the widely used quadratic loss was appropriate only for small errors and that subjects penalized large errors much less than a quadratic loss would imply. The system thus appeared robust to outliers. We could have fit our data with a nonquadratic loss function and obtained similar results. However, following this logic, we would need to assume a different loss function (albeit of the same form) for the second experiment, even though the only change from the first experiment was the shorter movement amplitude. Instead of inferring the loss function post hoc, our relevance estimation model simply assumes that movement variability is smaller for shorter movements. It thus predicts that the nervous system treats outliers as less likely to be causally related to the variable being estimated, and this relevance estimation naturally leads to the adaptation pattern we observed.

Another study (Cohen et al. 2008) found that visual perception also follows the predictions of robust statistics. Subjects judged the overall orientation of two adjacent dot clusters, each with its own orientation. They weighted the small dot cluster less when it was farther from the large dot cluster, and a model based on robust principal component analysis accounted for this finding. However, the authors also found a phenomenon that the model could not account for: when the dot density of both clusters increased, the small cluster was weighted less, whereas the simple robust statistical model predicted unchanged weighting. Recognizing the limitations of a robust estimator that relies on numeric residuals, the authors suggested that a psychologically valid generative model is needed. Our relevance estimation model can naturally explain their findings. Increasing the distance between the clusters and/or increasing the dot density of the small cluster allows the small cluster to be better segmented from the principal cluster. This lowers the likelihood that the small cluster belongs to the overall dot cluster and thus reduces its influence on the perceived overall orientation. This is exactly what Cohen et al. found, and it is left unexplained by their robust statistical model.
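The segmentation argument can be illustrated with a toy calculation. This is our own hypothetical one-dimensional simplification, not Cohen et al.'s stimuli or analysis: the weight of the small cluster in the orientation judgment is taken to be the posterior probability that it shares a cause with the main cluster, and that probability depends on the centroid separation `distance` and on the number of dots `n_dots` through the standard error of the centroid. The parameters `dot_sd`, `prior`, and `width` are made up.

```python
import math


def p_same_cluster(distance, n_dots, dot_sd=3.0, prior=0.5, width=20.0):
    """Posterior probability that a small dot cluster belongs to the main cluster.

    Hypothetical 1-D simplification: if the clusters share a cause, the small cluster's
    centroid offset is Gaussian with standard error dot_sd / sqrt(n_dots); otherwise the
    offset is uniform over `width`. More dots -> tighter centroid -> sharper segmentation.
    """
    se = dot_sd / math.sqrt(n_dots)
    like_same = prior * math.exp(-0.5 * (distance / se) ** 2) / (se * math.sqrt(2.0 * math.pi))
    like_sep = (1.0 - prior) / width
    return like_same / (like_same + like_sep)


for n in (5, 20):
    weights = [round(p_same_cluster(d, n), 3) for d in (0.5, 1.0, 2.0, 4.0)]
    print(f"n = {n} dots, distances 0.5/1/2/4: {weights}")
```

In this toy version the weight falls with distance, and at separations larger than about the centroid's standard error it also falls when dots are added; for clusters that nearly overlap, more dots slightly increase the weight, so the density effect depends on the separation tested.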

Our model is based on the notion of relevance estimation, a concept closely related to causal inference in cognitive studies. From early infancy, people infer the causes of observed events (Gopnik et al. 2004; Holland 1986; Saxe et al. 2005; Tenenbaum and Griffiths 2003), and interpreting an event in terms of its causes is typically automatic and effortless. Within the Bayesian framework, such phenomena have been studied in high-level cognition (e.g., Tenenbaum et al. 2006) and in sensory cue combination (Körding et al. 2007a). Here we demonstrate that when the nervous system continuously adapts its motor commands in sensorimotor tasks, it solves a similar inference problem: estimating how likely it is that the error feedback is relevant to movement production. We speculate that cognition, perception, and motor adaptation might share a similar mechanism for estimating the relevance of feedback.

The present study may have important implications for robotic rehabilitation, in which robots help patients relearn impaired movement abilities (Burgar et al. 2000; Hogan and Krebs 2004). These rehabilitation programs are usually performed in a virtual reality environment. Our study suggests that feedback should be designed so that the nervous system treats both visual and proprioceptive cues as relevant to movement production rather than as artificially manipulated by the virtual reality setting. Under this circumstance, the nervous system should attribute observed errors to the body, which may facilitate learning by enabling faster and stronger adaptation. The nonlinear relationship between the size of the error feedback and adaptation also suggests that there is a “sweet spot” in the magnitude of augmented feedback at which subjects may learn fastest.
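The “sweet spot” argument can be sketched numerically under explicit assumptions that go beyond the paper: displayed error = gain × true error, the elicited correction is the relevance-weighted displayed error times a hypothetical scaling factor `s`, and relevance follows an illustrative Gaussian-versus-uniform mixture with made-up parameters. Under these assumptions, amplifying the error first increases the correction and then decreases it, so the best gain lies strictly inside the tested range. This is qualitatively the pattern reported by Wei et al. (2005), but the numbers are not fits to their data.

```python
import math


def p_relevant(error, sigma=1.0, prior=0.5, width=30.0):
    """Posterior probability of relevance (hypothetical Gaussian-vs-uniform mixture)."""
    like_rel = prior * math.exp(-0.5 * (error / sigma) ** 2) / (sigma * math.sqrt(2.0 * math.pi))
    like_irr = (1.0 - prior) / width
    return like_rel / (like_rel + like_irr)


def augmented_adaptation(true_error, gain, s=0.3):
    """Correction elicited when the displayed error is the true error multiplied by `gain`."""
    shown = gain * true_error
    return s * p_relevant(shown) * shown


def sweet_spot(true_error, gains):
    """Return the candidate gain that elicits the largest correction."""
    return max(gains, key=lambda g: augmented_adaptation(true_error, g))


gains = [1.0 + 0.1 * i for i in range(31)]  # candidate gains from 1.0 to 4.0
best = sweet_spot(1.0, gains)
print(f"best gain for a 1-cm error: {best:.1f}")
```

Where the optimum falls depends entirely on the assumed `sigma` and on the size of the true error, so the sketch shows only that an interior optimum can exist, not where it lies in practice.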

GRANTS

This work was supported by the Falk Trust and National Institute of Neurological Disorders and Stroke Grant R01 NS-057814-01.

Acknowledgments

We thank J. Trommershäuser, S. Serwe, and J. Patton for feedback on the manuscript.

The costs of publication of this article were defrayed in part by the payment of page charges. The article must therefore be hereby marked “advertisement” in accordance with 18 U.S.C. Section 1734 solely to indicate this fact.

REFERENCES

- Baddeley RJ, Ingram HA, Miall RC. System identification applied to a visuomotor task: near-optimal human performance in a noisy changing task. J Neurosci 23: 3066–3075, 2003.

- Bock O. Load compensation in human goal-directed arm movement. Behav Brain Res 41: 167–177, 1990.

- Bock O. Adaptation of aimed arm movements to sensorimotor discordance: evidence for direction-independent gain control. Behav Brain Res 51: 41–50, 1992.

- Burgar CG, Lum PS, Shor PC, Machiel Van der Loos HF. Development of robots for rehabilitation therapy: the Palo Alto VA/Stanford experience. J Rehabil Res Dev 37: 663–673, 2000.

- Burnham KP, Anderson DR. Model Selection and Multimodel Inference: A Practical Information-Theoretic Approach. New York: Springer, 2002.

- Cheng PW, Novick LR. Covariation in natural causal induction. Psychol Rev 99: 365–382, 1992.

- Cheng S, Sabes PN. Calibration of visually guided reaching is driven by error-corrective learning and internal dynamics. J Neurophysiol 97: 3057–3069, 2007.

- Cohen EH, Singh M, Maloney LT. Perceptual segmentation and the perceived orientation of dot clusters: the role of robust statistics. J Vis 8: 1–13, 2008.

- Elliott D, Roy EA. Interlimb transfer after adaptation to visual displacement: patterns predicted from the functional closeness of limb neural control centres. Perception 10: 383–389, 1981.

- Ernst MO. A Bayesian view on multimodal cue integration. In: Human Body Perception From the Inside Out, edited by Knoblich G, Thornton IM, Grosjean M, Shiffrar M. New York: Oxford Univ. Press, 2006, p. 105–131.

- Ernst MO, Banks MS. Humans integrate visual and haptic information in a statistically optimal fashion. Nature 415: 429–433, 2002.

- Fine MS, Thoroughman KA. Trial-by-trial transformation of error into sensorimotor adaptation changes with environmental dynamics. J Neurophysiol 98: 1392–1404, 2007.

- Fitts PM. The information capacity of the human motor system in controlling the amplitude of movements. J Exp Psychol 47: 381–394, 1954.

- Gepshtein S, Banks MS. Viewing geometry determines how vision and haptics combine in size perception. Curr Biol 13: 483–488, 2003.

- Gepshtein S, Burge J, Ernst MO, Banks MS. The combination of vision and touch depends on spatial proximity. J Vis 5: 1013–1023, 2005.

- Gopnik A, Glymour C, Sobel DM, Schulz LE, Kushnir T, Danks D. A theory of causal learning in children: causal maps and Bayes nets. Psychol Rev 111: 3–32, 2004.

- Harris C. Perceptual adaptation to inverted, reversed, and displaced vision. Psychol Rev 72: 419–444, 1965.

- Harris CM, Wolpert DM. Signal-dependent noise determines motor planning. Nature 394: 780–784, 1998.

- Hillis JM, Ernst MO, Banks MS, Landy MS. Combining sensory information: mandatory fusion within, but not between, senses. Science 298: 1627–1630, 2002.

- Hogan N, Krebs HI. Interactive robots for neuro-rehabilitation. Restor Neurol Neurosci 22: 349–358, 2004.

- Holland P. Statistics and causal inference. J Am Stat Assoc 81: 945–960, 1986.

- Ingram HA, van Donkelaar P, Cole J. The role of proprioception and attention in a visuomotor adaptation task. Exp Brain Res 132: 114–126, 2000.

- Kawato M. Cerebellum and motor control. In: Handbook of Brain Theory and Neural Networks (2nd ed.), edited by Arbib MA. Cambridge, MA: MIT Press, 2002, p. 190–195.

- Kawato M, Furukawa K, Suzuki R. A hierarchical neural-network model for control and learning of voluntary movement. Biol Cybern 57: 169–185, 1987.

- Knill DC. Mixture models and the probabilistic structure of depth cues. Vision Res 43: 831–854, 2003.

- Körding KP, Beierholm U, Ma WJ, Quartz S, Tenenbaum JB, Shams L. Causal inference in multisensory perception. PLoS ONE 2: e943, 2007a.

- Körding KP, Tenenbaum JB, Shadmehr R. The dynamics of memory as a consequence of optimal adaptation to a changing body. Nat Neurosci 10: 779–786, 2007b.

- Körding KP, Wolpert DM. The loss function of sensorimotor learning. Proc Natl Acad Sci USA 101: 9839–9842, 2004.

- Korenberg AT, Ghahramani Z. A Bayesian view of motor adaptation. Curr Psychol Cogn 21: 537–564, 2002.

- McGurk H, MacDonald J. Hearing lips and seeing voices. Nature 264: 746–748, 1976.

- McLaughlin S. Parametric adjustment in saccadic eye movement. Percept Psychophys 2: 359–362, 1967.

- Munhall KG, Gribble P, Sacco L, Ward M. Temporal constraints on the McGurk effect. Percept Psychophys 58: 351–362, 1996.

- Roach NW, Heron J, McGraw PV. Resolving multisensory conflict: a strategy for balancing the costs and benefits of audio-visual integration. Proc Biol Sci 273: 2159–2168, 2006.

- Robinson FR, Noto CT, Bevans SE. Effect of visual error size on saccade adaptation in monkey. J Neurophysiol 90: 1235–1244, 2003.

- Saxe R, Tenenbaum JB, Carey S. Secret agents: inferences about hidden causes by 10- and 12-month-old infants. Psychol Sci 16: 995–1001, 2005.

- Scheidt RA, Dingwell JB, Mussa-Ivaldi FA. Learning to move amid uncertainty. J Neurophysiol 86: 971–985, 2001.

- Shadmehr R, Mussa-Ivaldi F. Adaptive representation of dynamics during learning of a motor task. J Neurosci 14: 3208–3224, 1994.

- Tenenbaum JB, Griffiths TL. Theory-based causal inference. In: Advances in Neural Information Processing Systems, edited by Becker S. Cambridge, MA: MIT Press, 2003, vol. 15, p. 43–50.

- Tenenbaum JB, Griffiths TL, Kemp C. Theory-based Bayesian models of inductive learning and reasoning. Trends Cogn Sci 10: 309–318, 2006.

- Thoroughman KA, Shadmehr R. Learning of action through adaptive combination of motor primitives. Nature 407: 742–747, 2000.

- Treisman A. The binding problem. Curr Opin Neurobiol 6: 171–178, 1996.

- van Beers RJ, Sittig AC, Denier van der Gon JJ. How humans combine simultaneous proprioceptive and visual position information. Exp Brain Res 111: 253–261, 1996.

- von Helmholtz HF. On the Sensations of Tone as a Physiological Basis for the Theory of Music. New York: Dover, 1954 (original work published in 1863).

- Wei Y, Bajaj P, Scheidt RA, Patton JL. A real-time haptic/graphic demonstration of how error augmentation can enhance learning. In: Proceedings of the 2005 IEEE International Conference on Robotics and Automation, Barcelona, Spain, April 18–22. Piscataway, NJ: IEEE, 2005, p. 4406–4411.

- Wolpert DM, Ghahramani Z. Computational motor control. In: The Cognitive Neurosciences (3rd ed.), edited by Gazzaniga MS. Cambridge, MA: MIT Press, 2004, p. 485–494.

- Wolpert D, Kawato M. Multiple paired forward and inverse models for motor control. Neural Networks 11: 1317–1329, 1998.