Abstract

Humans commonly use their hands to move and to interact with their environment by processing visual and proprioceptive information to determine the location of a goal-object and the initial hand position. It remains elusive, however, how the human brain fully uses this sensory information to generate accurate movements. In monkeys, frontal and parietal areas appear to use and combine gaze and hand signals to generate movements, whereas in humans, prior work has assessed separately how the brain uses these two signals. Here we investigated whether and how the human brain integrates gaze orientation and hand position during simple visually triggered finger tapping. We hypothesized that parietal, frontal, and subcortical regions involved in movement production would also exhibit modulation of movement-related activation as a function of gaze and hand positions. We used functional MRI to measure brain activation in healthy young adults who performed a visually cued finger movement while fixing gaze at each of three locations and holding the arm in each of two configurations. We found several areas with activation related to a combination of hand and gaze positions; these included the sensory-motor cortex, supramarginal gyrus, superior parietal lobule, superior frontal gyrus, anterior cingulate, and left cerebellum. We also found regions within the left insula, left cuneus, left midcingulate gyrus, left putamen, and right temporo-occipital junction with activation driven only by gaze orientation. Finally, clusters with hand position effects were found in the cerebellum bilaterally. Our results indicate that these areas integrate at least two signals to perform visual-motor actions and that these signals could be used to subserve sensory-motor transformations.

INTRODUCTION

Every day we interact with our environment by moving our hands to grasp objects, type, and perform other goal-directed upper-limb movements. To generate accurate and efficient visually guided hand movements, one determines the position of a visible target and the initial hand position using vision, proprioception, and an internal model. The importance of computing gaze and hand positions for successful arm movements is well established because misreaching can occur when one or both of these sources of information are absent or altered (Bédard and Proteau 2001, 2005; Bock 1986; Desmurget et al. 1998; Enright 1995; Henriques et al. 1998; McIntyre et al. 1997; Prablanc et al. 1979; Rossetti et al. 1994; Rothwell et al. 1982; Sanes et al. 1984). Many neurophysiological studies in monkeys and neuroimaging studies in humans have revealed that gaze orientation and hand position are prominent parameters computed by the brain when generating hand movements.

Gaze orientation modulates neuronal spike counts in parietal cortical areas (Andersen and Mountcastle 1983; Andersen et al. 1985; Batista et al. 1999; Battaglia-Mayer et al. 2000, 2003; Buneo et al. 2002; Cisek and Kalaska 2002; Pesaran et al. 2006) and in the ventral and dorsal parts of the premotor cortex (PMv and PMd) (Boussaoud et al. 1998; Cisek and Kalaska 2002; Jouffrais and Boussaoud 1999; Mushiake et al. 1997; Pesaran et al. 2006) when monkeys reach voluntarily; the same effect has not yet been observed in primary motor cortex (M1) (Mushiake et al. 1997). Neurons in the visual cortex also exhibit gaze-related modulation of spiking when processing visual information (Rosenbluth and Allman 2002; Trotter and Celebrini 1999). In humans, neuroimaging studies have shown that gaze orientation modulates visual processing in visual areas (Andersson et al. 2007; Bédard et al. 2008; DeSouza et al. 2002) and hand-movement-related activation in parietal (Baker et al. 1999; Bédard et al. 2008; DeSouza et al. 2000; Medendorp et al. 2003) and frontal motor-related areas (Baker et al. 1999; Bédard et al. 2008). Importantly, these gaze effects on visual processing and arm movements have a structured spatial organization. Neuroimaging studies have revealed that the functional MRI signal increases in a gradient-like fashion as gaze deviates from the left toward the right of the body midline for movements made with the right hand (Baker et al. 1999; Bédard et al. 2008; DeSouza et al. 2000), and the converse holds true for left-hand movements (DeSouza et al. 2000).

Prior results have also demonstrated that the brain codes the geometric configuration of the arm: neural spiking in monkey Brodmann area 5 seems to represent updates of the initial hand position (Graziano 2001; Graziano et al. 2000; Lacquaniti et al. 1995), and arm configuration in the workspace modifies the activity in parietal cortex, PM, and M1 during reaching and isometric force exertion (Caminiti et al. 1990, 1991; Kakei et al. 2001; Pesaran et al. 2006; Scott and Kalaska 1997; Scott et al. 1997; Sergio and Kalaska 2003). In humans, a frontal-parietal network appears to code arm configuration: parietal cortical damage impairs the internal representation of the body's state (Wolpert et al. 1998), and neuroimaging studies have revealed frontal-parietal activation when the body's configuration is updated to plan arm movements or to localize visual stimuli (Lloyd et al. 2003; Makin et al. 2007; Pellijeff et al. 2006; Rushworth et al. 1997).

Studies in monkeys have also revealed that some areas in a parietal-frontal network, such as PM and areas within the intraparietal sulcus, code movements with respect to both gaze and hand positions (Battaglia-Mayer et al. 2000, 2001, 2003; Buneo et al. 2002; Pesaran et al. 2006). In humans, however, prior studies required participants to point or saccade to a set of targets while their eyes or hand, respectively, remained stationary (e.g., Astafiev et al. 2003; Bédard et al. 2008; Beurze et al. 2007; Connolly et al. 2003; Gorbet et al. 2004; Medendorp et al. 2003; Simon et al. 2002), or the data were analyzed only across gaze or hand positions (DeSouza et al. 2000). Still others assessed how the brain integrates target location and choice of effector (eyes, right or left hand) (Beurze et al. 2007; Connolly et al. 2003; Medendorp et al. 2005). These human studies have not systematically varied both gaze and hand positions as done in monkey studies. It therefore remains unknown whether gaze and hand signals are combined in these parietal-frontal areas as in the monkey brain or whether each area uses a single code.

As is evident from the preceding text, prior investigations of eye-hand interactions have typically used goal-directed reaching movements intended to mimic plausible real-life actions. By design and necessity, these tasks have a level of complexity that leaves open whether eye-hand interactions also occur during eye fixation and movements without a spatial goal. Our prior work (Baker et al. 1999; Bédard et al. 2008) demonstrated that these interactions do occur, even for simple, repetitive finger movements with gaze fixed at different locations, suggesting that the brain systems controlling skeletal and ocular movements share a fundamental substrate for interactions. However, our prior observations (Baker et al. 1999; Bédard et al. 2008) did not address whether representations for low-level skeletal-ocular interactions exhibit specificity for one or another coordinate reference frame, such as eye- or hand-centered. By analogy with neural recordings in non-human primates, we predicted the existence of both eye- and hand-centered representations in humans for these postural and nongoal-directed movements.

To address these issues, we designed an event-related fMRI experiment to determine how the human brain combines gaze orientation and hand position when generating arm muscle commands. Building on previous work (e.g., Baker et al. 1999; Bédard et al. 2008; Buneo et al. 2002; DeSouza et al. 2000), we hypothesized that the spatial organization of gaze effects would be altered by changing hand position in the workspace. This manipulation also tests a conclusion underlying prior work in our lab on gaze effects on finger-movement-related activation (Baker et al. 1999; Bédard et al. 2008), namely that gaze has its maximal effect on movement-related activation when gaze and hand are aligned in the same spatial sector. We further reasoned that these hand-gaze interaction effects should appear in brain areas along a frontal-parietal network and possibly in subcortical structures such as the cerebellum and putamen.

METHODS

Participants, tasks, and apparatus

We recruited 15 healthy adults from the Brown University community (aged 19–34 yr; 8 females, 7 males; all right-handed as assessed by a modified handedness scale; Oldfield 1971). They had no history of neurological, sensory, or motor disorder. All participants provided written informed consent according to established Institutional Review Board guidelines for human participation in experimental procedures at Brown University and Memorial Hospital of Rhode Island (the site of the MR imaging). We adhered to the principles of the Declaration of Helsinki. Participants received modest monetary compensation for their participation.

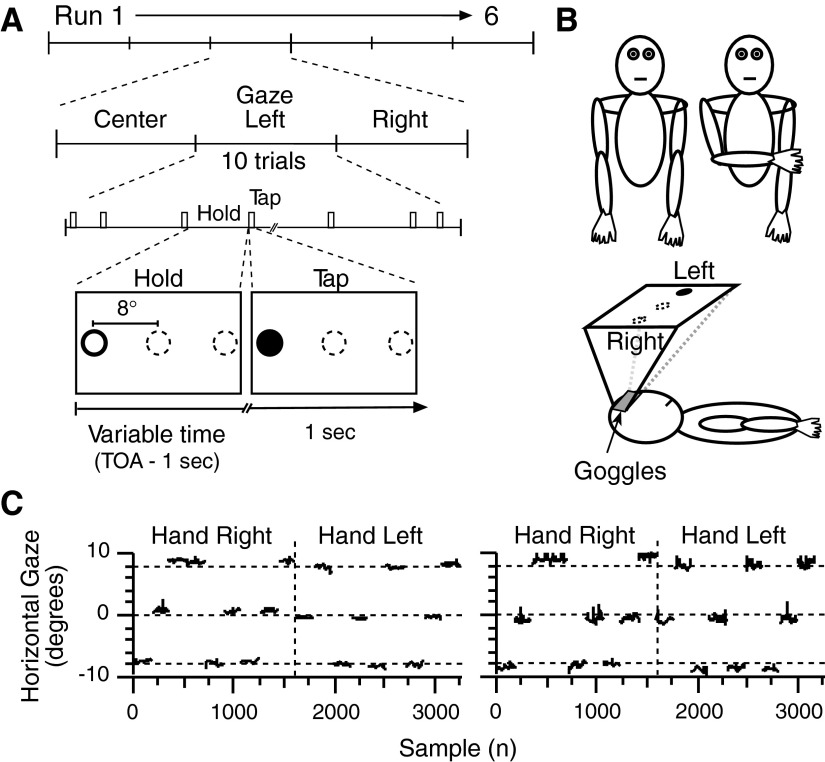

The experiment was divided into six runs of scanning (6.18 min/run; Fig. 1A). During each run, participants were instructed to fix gaze at one of three visible targets (black annulus): left, center, or right of their body midline; only one annulus was visible at any given time. Each target was used once per run, and the order of target presentation was randomized for each run. Thus gaze was maintained at a target location for one-third of each run (∼2 min). At the end of that interval, the target disappeared and a new target appeared. For each gaze position, participants tapped three times (at 3 Hz) with their right thumb when the center of the annulus turned from white to black (Fig. 1A, tap). These movement cues occurred 10 times per gaze position. We also included 10 null trials, in which no cue to tap appeared, randomly interspersed among the 10 movement trials. We added null trials to better disentangle the tapping-related functional MRI signals by stretching out the time between events, and we considered these data points as a baseline control. The order of presentation of both types of trials was randomized. Both types of trials occurred with varying trial-onset asynchronies (3.86, 4.825, 5.79, 6.755, or 7.72 s); each asynchrony occurred randomly and, on average, once every five trials to facilitate the subsequent event-related functional MRI data analysis. The design did not include a traditional preparatory period, that is, one with a warning stimulus. Although participants might have elected to prepare upcoming movements during the interval between trials, they could not accurately predict the onset of any one trial.
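The trial structure described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the authors' PsychToolbox script: the function names are hypothetical, and we assume each of the five asynchronies is used equally often (4 times per 20-trial gaze block), which is one reading of "once every five trials". Each asynchrony is a multiple of the 3.86-s TR in quarter-TR steps (1, 1.25, 1.5, 1.75, and 2 × TR), so cue onsets fall at different phases of the acquisition cycle.

```python
import random

TR_S = 3.86  # functional repetition time (s), from MRI procedures
TOAS_S = [3.86, 4.825, 5.79, 6.755, 7.72]  # trial-onset asynchronies (s)


def make_gaze_block(rng, n_tap=10, n_null=10):
    """Hypothetical sketch: shuffle tap and null trials for one gaze target
    and pair each trial with an asynchrony. Assumption: every asynchrony is
    used equally often (the text only says 'on average once every five trials')."""
    trials = ["tap"] * n_tap + ["null"] * n_null
    rng.shuffle(trials)
    toas = TOAS_S * (len(trials) // len(TOAS_S))  # 20 trials -> 4 of each TOA
    rng.shuffle(toas)
    return list(zip(trials, toas))


def make_run(seed, targets=("left", "center", "right")):
    """One run: each gaze target is used once, in a random order."""
    rng = random.Random(seed)
    order = list(targets)
    rng.shuffle(order)
    return {target: make_gaze_block(rng) for target in order}


if __name__ == "__main__":
    # Each asynchrony in units of TR: [1.0, 1.25, 1.5, 1.75, 2.0]
    print([round(toa / TR_S, 2) for toa in TOAS_S])
    run = make_run(seed=1)
    for target, block in run.items():
        block_s = sum(toa for _, toa in block)
        print(target, len(block), "trials,", round(block_s, 1), "s")
```

Under the equal-use assumption, the asynchronies in one gaze block sum to about 116 s, consistent with the ∼2 min spent at each gaze target.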

FIG. 1.

Experimental design and eye position record. A: task schematic. During each of 6 runs, participants gazed at 1 of 3 targets (black outlined ring, lower section, left box) for 10 consecutive tapping trials. During each trial, the color of the ring center changed from white to black to cue participants to tap 3 times with the right thumb; trial-onset asynchronies were 3.86, 4.825, 5.79, 6.755, or 7.72 s. B: experimental set-up. Participants positioned their right hand on their right side for one-half of the experiment and across their body midline, aligned with the left shoulder, for the other half. They wore a pair of magnetic resonance imaging (MRI)-compatible goggles that displayed visual stimuli and recorded eye positions. C: eye positions of 2 participants across the experiment for the first 600 ms after the cue to tap appeared.

Participants performed the task with their right arm in one of two configurations (Fig. 1B). In one configuration, the right arm was fully extended and half-pronated beside the participant's right side. In the other configuration, the arm crossed the body midline in midflexion so that the right hand was aligned with the left shoulder. Half of the participants performed the first half of the experiment with the right hand on the right side of the body and the second half with the right hand on the left side of the body; the other half of the participants did the experiment in the reverse order. The experimenter moved each participant's arm between the third and fourth runs while the participant remained motionless. Figure 1B (lower schematic) illustrates how participants lay in the scanner.

After receiving task instructions, participants practiced the procedures for a few minutes before entering the MRI system and being positioned in the standard supine position. Participants wore a set of headphones for ear protection and communication with the experimenter, and they held an optically coupled, MRI-compatible push-button (Bull Engineering, Rehoboth, MA) in their right hand that sensed right thumb movements. They wore a pair of MRI-compatible LCD-based goggles for delivery of visual stimuli and for eye movement monitoring with an embedded infrared camera (Resonance Technology; 800 × 600 pixel resolution; accuracy of ±1°; sampling rate 30 Hz; Viewpoint software, Arrington Research, Scottsdale, AZ). We used PsychToolbox for Matlab 5.2 [http://www.psychtoolbox.org/ (Brainard 1997; Pelli 1997); Mathworks, Natick, MA] running on a Macintosh G3 Powerbook to present visual stimuli and to store the occurrences of thumb movements, and a Windows-operated Dell computer to run software to measure eye position (Viewpoint, Arrington Research). To ensure accurate timing between the recordings of gaze positions and events in the experiment, the Macintosh G3 Powerbook triggered the acquisition of gaze position with a TTL pulse. We did not record vertical eye position.

MRI procedures

We used a 1.5-T Magnetom Symphony MR system equipped with Quantum gradients (Siemens Medical Solutions, Erlangen, Germany) to acquire anatomical and functional MR images. Participants lay supine inside the magnet bore with the head resting inside a circularly polarized, receive-only head coil used for radio frequency reception; the body coil transmitted radio frequency signals. Head movements were reduced by cushioning and mild restraint. After shimming the static magnetic field, we acquired a high-resolution three-dimensional anatomical image consisting of 160 1-mm parasagittal slices [magnetization prepared rapid acquisition gradient echo sequence, MPRAGE; repetition time (TR) = 1,900 ms, echo time (TE) = 4.15 ms, inversion time = 20 ms, 1-mm isotropic voxels, 256-mm field of view]. We then acquired T2*-weighted gradient echo images sensitive to the blood-oxygenation-level-dependent (BOLD) contrast (Kwong et al. 1992; Ogawa et al. 1992). For each of the six runs, the acquisition sequence contained 96 volumes. We acquired functional MR images across the entire brain using 3-mm isotropic voxels and 48 axial slices (TE = 38 ms, TR = 3.86 s, field of view = 192 mm, image matrix = 64 × 64). Slices were acquired in an interleaved order. The MRI system did not collect data for the first volume of each run because of T1 saturation effects, leaving 95 volumes of data per run.
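The acquisition parameters above are mutually consistent, which a short worked calculation makes explicit. This sketch only does arithmetic on values stated in the text; the slab-coverage figure additionally assumes contiguous slices with no gap, which the text does not state.

```python
FOV_MM = 192          # functional field of view (mm)
MATRIX = 64           # in-plane image matrix (64 x 64)
N_SLICES = 48         # axial slices
TR_S = 3.86           # repetition time (s)
VOLUMES_PER_RUN = 96  # volumes in the acquisition sequence
SKIPPED = 1           # first volume not collected (T1 saturation)
N_RUNS = 6

voxel_mm = FOV_MM / MATRIX                 # 3.0 mm in-plane, matching the 3-mm voxels
voxel_ul = voxel_mm ** 3                   # 27 mm^3 = 27 μl per voxel
coverage_mm = N_SLICES * voxel_mm          # 144 mm, assuming no slice gap
run_min = VOLUMES_PER_RUN * TR_S / 60      # about 6.18 min per run
kept_per_run = VOLUMES_PER_RUN - SKIPPED   # 95 usable volumes per run
kept_total = kept_per_run * N_RUNS         # 570 volumes per participant

print(voxel_mm, voxel_ul, coverage_mm, round(run_min, 2), kept_per_run, kept_total)
```

The 27-μl voxel volume is the same conversion factor used for cluster volumes in Table 1, and 96 × 3.86 s reproduces the 6.18-min run length given in the task description.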

MRI SIGNAL PROCESSING.

We used AFNI (Analysis of Functional NeuroImages; Medical College of Wisconsin; National Institutes of Health: http://afni.nimh.nih.gov/afni) (Cox 1996; Cox and Hyde 1997) and FSL (FMRIB Software Library, http://www.fmrib.ox.ac.uk/fsl/) to process, analyze, and visualize MRI images. For each run separately, we removed the linear trend in the time series and then scaled the time series by its mean to yield percentage signal change; we then concatenated the time series acquired from all runs. The BOLD data set for each participant was then motion corrected to the third image acquired, using six-parameter rigid-body registration with cubic polynomial interpolation (3dvolreg tool in AFNI). We then co-registered and normalized the anatomical and functional data sets to the MNI152 template using FSL and finally spatially smoothed the functional data set with a 6-mm full-width half-maximum Gaussian kernel.
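The per-run detrending, scaling, and concatenation steps can be illustrated with NumPy. This is a minimal sketch, not the AFNI commands the authors ran: the function names are hypothetical, and we assume percentage signal change is computed relative to the run mean (the intercept of a linear fit with a centered time axis). The last function converts the 6-mm FWHM smoothing kernel to the Gaussian standard deviation that many smoothing routines expect.

```python
import numpy as np


def detrend_and_scale(run):
    """Remove a linear trend from one run and express it as percent signal change.

    run: array of shape (n_timepoints,) or (n_timepoints, n_voxels).
    Assumption: percent change is relative to the run mean, i.e., the fitted
    intercept when the time axis is centered.
    """
    run = np.asarray(run, dtype=float)
    t = np.arange(run.shape[0], dtype=float)
    design = np.column_stack([np.ones_like(t), t - t.mean()])  # intercept + centered slope
    beta, *_ = np.linalg.lstsq(design, run, rcond=None)
    run_mean = beta[0]                  # equals the run mean because t is centered
    residual = run - design @ beta      # mean and linear trend removed
    return 100.0 * residual / run_mean


def preprocess_runs(runs):
    """Detrend and scale each run separately, then concatenate along time."""
    return np.concatenate([detrend_and_scale(r) for r in runs], axis=0)


def fwhm_to_sigma(fwhm_mm):
    """Standard deviation of a Gaussian kernel with the given FWHM."""
    return fwhm_mm / (2.0 * np.sqrt(2.0 * np.log(2.0)))


print(round(fwhm_to_sigma(6.0), 3))  # 6-mm FWHM corresponds to sigma of about 2.548 mm
```

Detrending each run before concatenation keeps slow drifts and run-to-run baseline differences from leaking into the concatenated time series.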

MRI STATISTICAL ANALYSIS.

The occurrence of each tapping cue in each of the six conditions (2 hand positions × 3 gaze positions) was convolved with a gamma variate function (waver tool in AFNI; Cohen 1997) to yield a reference function, that is, the expected hemodynamic response for that condition. We then used these six reference functions and the six motion correction parameters as regressors in a multiple regression analysis (3dDeconvolve tool in AFNI) to estimate the weight of each condition on a voxel-wise basis. Reported results come from group-wise, random-effects analyses.
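The regression model described above can be sketched in plain NumPy: event onsets are convolved with a gamma variate to form one regressor per condition, and the regressors plus six motion parameters enter an ordinary least-squares fit. This is an illustrative re-implementation, not AFNI's waver or 3dDeconvolve; the function names are hypothetical, and the gamma-variate parameters b = 8.6 and c = 0.547 s (peak near b·c ≈ 4.7 s) are assumed values commonly attributed to Cohen (1997). The toy fit uses a single run with only an intercept as baseline.

```python
import numpy as np


def gamma_variate(t, b=8.6, c=0.547):
    """Gamma variate t**b * exp(-t/c), normalized to a peak of 1.
    b and c are assumed parameter values (peak near b*c = 4.7 s)."""
    t = np.clip(np.asarray(t, dtype=float), 0.0, None)
    h = t ** b * np.exp(-t / c)
    return h / h.max()


def event_regressor(onsets_s, n_vols, tr_s, dt=0.1, kernel_s=15.0):
    """Convolve event onsets with the gamma variate on a fine time grid,
    then sample the result at the start of each volume."""
    n_fine = int(round(n_vols * tr_s / dt))
    sticks = np.zeros(n_fine)
    for onset in onsets_s:
        idx = int(round(onset / dt))
        if idx < n_fine:
            sticks[idx] += 1.0
    kernel = gamma_variate(np.arange(0.0, kernel_s, dt))
    fine = np.convolve(sticks, kernel)[:n_fine]
    vol_idx = np.round(np.arange(n_vols) * tr_s / dt).astype(int)
    return fine[vol_idx]


def fit_glm(y, condition_regressors, motion_params):
    """Ordinary least squares: intercept, one column per condition,
    and the six motion parameters as nuisance regressors."""
    X = np.column_stack([np.ones(len(y)), *condition_regressors, motion_params])
    beta, *_ = np.linalg.lstsq(X, y, rcond=None)
    return beta
```

In the full design there would be six condition regressors (2 hand × 3 gaze) rather than the two used in a quick check, and the condition weights (betas) are what enter the group-level analysis.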

To assess condition-dependent activation, we first identified voxels with significant finger-movement-related activation by testing the null hypothesis that finger movement did not elicit activation, using a t-test for each condition separately and retaining voxels that passed a threshold of P ≤ 0.001 for at least one of the six conditions. We then corrected the resulting voxel-level analysis for multiple comparisons by setting a cluster threshold of P ≤ 0.05, corresponding to 12 adjacently clustered voxels. The cluster-level analysis used the Monte Carlo sampling procedure implemented in AFNI (AlphaSim tool) and yielded 19 clusters deemed to have finger-movement-related activation (Fig. 2; Table 1; see RESULTS). We then submitted the functional MRI data (expressed in percentage signal relative to a global mean) to a two-way ANOVA contrasting the two hand positions (left and right) by two gaze positions (left and right), with repeated measures on each factor, at P ≤ 0.05. For these analyses, we excluded the functional MRI signals obtained when participants fixed gaze centrally because the main experimental hypothesis bore directly on the alignment of hand and eye position, which involves only the left and right positions of eye and hand. Although we excluded data related to central gaze from this analysis, we note that when the hand was positioned to the right, finger-movement-related activation when gaze was directed centrally or rightward uniformly exceeded that for leftward gaze; however, for some participants and some regions, we found nonlinear effects as gaze shifted from left to center to right. To evaluate the time course of physiological responses, we used a deconvolution procedure (3dDeconvolve tool in AFNI), estimated the hemodynamic response at each TR from 0 to 12 s following finger tapping, and resampled it to half-TR for illustration.
To localize activation to brain areas, we used the brain atlas of Duvernoy (1991), the cerebellum atlas of Schmahmann et al. (1999), and a web-based human brain atlas (www.msu.edu/~brains/brains/human/index.html).
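For a 2 × 2 within-subject design such as the hand × gaze ANOVA described above, the interaction F(1, n − 1) equals the square of a paired t statistic computed on the difference-of-differences contrast. The sketch below (hypothetical function names, plain NumPy, simulated data; not the software the authors used) computes the interaction both ways so the equivalence can be checked. With 13 participants the degrees of freedom are (1, 12), the same as in the behavioral RT analysis reported in RESULTS.

```python
import numpy as np


def rm_anova_2x2_interaction(data):
    """Hand x gaze interaction for a 2 x 2 repeated-measures design.

    data: array of shape (n_subjects, 2, 2), indexed [subject, hand, gaze].
    Returns (F, df1, df2) from the sums-of-squares decomposition.
    """
    y = np.asarray(data, dtype=float)
    n = y.shape[0]
    grand = y.mean()
    m_ab = y.mean(axis=0)          # cell means, shape (2, 2)
    m_a = y.mean(axis=(0, 2))      # hand means, shape (2,)
    m_b = y.mean(axis=(0, 1))      # gaze means, shape (2,)
    s_a = y.mean(axis=2)           # subject x hand means, shape (n, 2)
    s_b = y.mean(axis=1)           # subject x gaze means, shape (n, 2)
    s = y.mean(axis=(1, 2))        # subject means, shape (n,)

    ss_ab = n * np.sum((m_ab - m_a[:, None] - m_b[None, :] + grand) ** 2)
    resid = (y - s_a[:, :, None] - s_b[:, None, :] - m_ab[None]
             + s[:, None, None] + m_a[None, :, None] + m_b[None, None, :] - grand)
    ss_err = np.sum(resid ** 2)    # hand x gaze x subject error term
    df1, df2 = 1, n - 1
    return (ss_ab / df1) / (ss_err / df2), df1, df2


def interaction_paired_t(data):
    """Paired t on the difference-of-differences contrast."""
    y = np.asarray(data, dtype=float)
    d = (y[:, 0, 0] - y[:, 0, 1]) - (y[:, 1, 0] - y[:, 1, 1])
    return d.mean() / (d.std(ddof=1) / np.sqrt(len(d)))


rng = np.random.default_rng(0)
demo = rng.normal(size=(13, 2, 2))  # 13 simulated participants (made-up data)
F, df1, df2 = rm_anova_2x2_interaction(demo)
t = interaction_paired_t(demo)
print(f"F({df1},{df2}) = {F:.3f}, t^2 = {t ** 2:.3f}")
```

The paired-t form is a convenient way to run the post hoc comparisons as well: each simple effect (e.g., left vs. right gaze with the hand on the right) is a paired t on one row of the 2 × 2 table.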

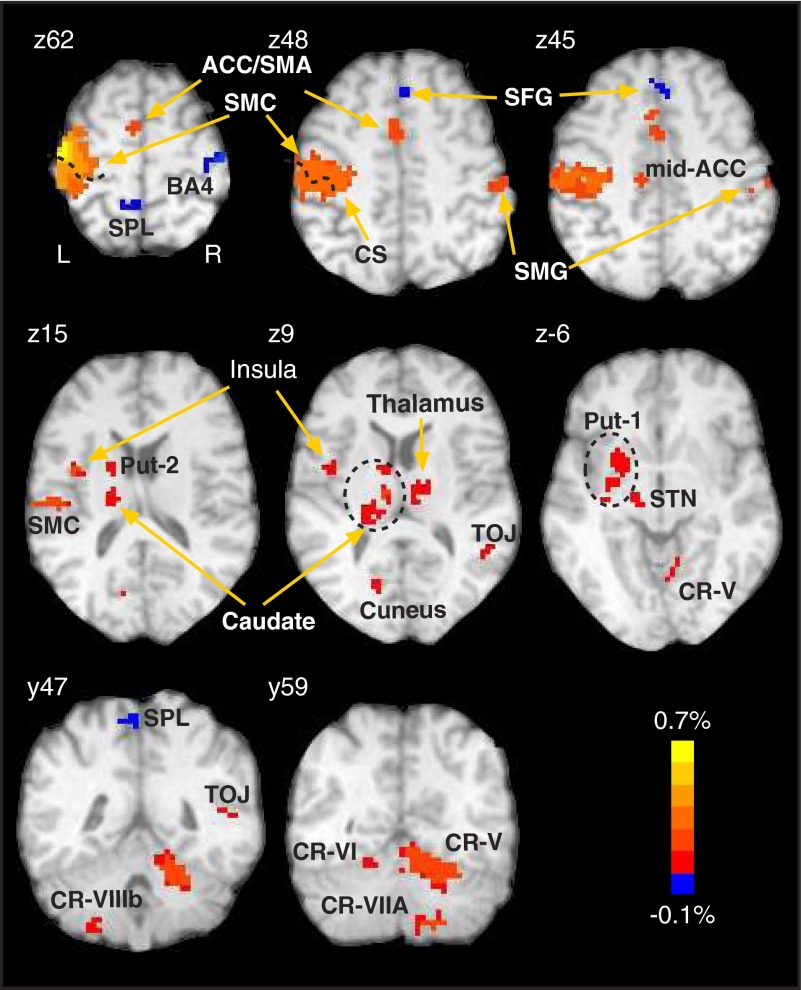

FIG. 2.

Brain activation (% MRI signal) related to finger movement. Activation presented here results from data pooled across all 6 conditions. Note strong activation in classically defined motor areas, such as contralateral primary motor cortex (M1), supplementary motor area (SMA), cerebellum (CR = Crus), anterior cingulate gyrus and right supramarginal gyrus (SMG). Table 1 lists all activated areas. CS, central sulcus.

TABLE 1.

Cluster report for the finger-related activation

| Brain Regions (BA) | Volume (μl) | Mean Intensity | Maximum Intensity | x | y | z |
|---|---|---|---|---|---|---|
| Cortical areas | | | | | | |
| L Sensorimotor cortex (1-4) | 21168 | 0.24 | 0.7 | 42 | 21 | 57 |
| L ACC/SMA (6, 24, 32) | 1917 | 0.1 | 0.16 | 5 | −5 | 48 |
| L Cuneus (31) | 972 | 0.08 | 0.11 | 16 | 71 | 10 |
| L Superior frontal gyrus (8) | 648 | −0.05 | −0.08 | 1 | −32 | 45 |
| R Supramarginal gyrus (40) | 513 | 0.1 | 0.17 | −56 | 25 | 49 |
| R Pre-central gyrus (4) | 459 | −0.12 | −0.17 | −42 | 20 | 63 |
| L Mid-cingulate gyrus (24) | 432 | 0.08 | 0.1 | 12 | 22 | 41 |
| L Pre-cuneus (5, 7) | 405 | −0.05 | −0.07 | 7 | 46 | 65 |
| R Temporo-occipital junction (39, 22) | 324 | 0.06 | 0.09 | −49 | 51 | 8 |
| Sub-cortical areas | | | | | | |
| R Cerebellum (CR-V) | 9018 | 0.15 | 0.24 | −16 | 57 | −20 |
| R Cerebellum (CR-VIIa) | 2241 | 0.11 | 0.17 | −15 | 64 | −50 |
| L Cerebellum (CR-VIIIb) | 540 | 0.08 | 0.1 | 28 | 51 | −56 |
| L Cerebellum (CR-VI) | 432 | 0.08 | 0.11 | 17 | 64 | −17 |
| L Putamen | 2997 | 0.08 | 0.13 | 26 | 2 | −5 |
| L Putamen | 351 | 0.08 | 0.1 | 19 | 1 | 14 |
| L Caudate nucleus/thalamus | 2835 | 0.09 | 0.16 | 15 | 18 | 9 |
| R Thalamus | 1026 | 0.08 | 0.11 | −12 | 14 | 7 |
| L Subthalamic nucleus/thalamus | 405 | 0.08 | 0.1 | 14 | 18 | −7 |
| L Insula | 1215 | 0.09 | 0.13 | 43 | 1 | 10 |

Cluster volume in μl, with one voxel = 27 μl. Mean intensity represents the average activation across all conditions, and maximum intensity represents the maximal value within a cluster. Coordinates (x, y, z) represent the center of mass of an activation cluster, with +x indicating the left hemisphere. ACC, anterior cingulate cortex; SMA, supplementary motor area.
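Because cluster volumes are reported in μl with 27-μl (3 × 3 × 3 mm) voxels, dividing by 27 recovers the voxel count of each cluster, and every cluster should span at least the 12-voxel cluster-size threshold given in the statistical analysis. The short check below uses volumes copied from Table 1; the region labels are abbreviated and the helper name is hypothetical.

```python
VOXEL_UL = 27            # one 3 x 3 x 3 mm voxel = 27 μl (table footnote)
MIN_CLUSTER_VOXELS = 12  # cluster-size threshold from the statistical analysis

# (region, volume in μl) copied from Table 1, in table order
CLUSTERS = [
    ("L Sensorimotor cortex", 21168), ("L ACC/SMA", 1917),
    ("L Cuneus", 972), ("L Superior frontal gyrus", 648),
    ("R Supramarginal gyrus", 513), ("R Pre-central gyrus", 459),
    ("L Mid-cingulate gyrus", 432), ("L Pre-cuneus", 405),
    ("R Temporo-occipital junction", 324), ("R Cerebellum (CR-V)", 9018),
    ("R Cerebellum (CR-VIIa)", 2241), ("L Cerebellum (CR-VIIIb)", 540),
    ("L Cerebellum (CR-VI)", 432), ("L Putamen", 2997), ("L Putamen", 351),
    ("L Caudate nucleus/thalamus", 2835), ("R Thalamus", 1026),
    ("L Subthalamic nucleus/thalamus", 405), ("L Insula", 1215),
]


def to_voxels(volume_ul, voxel_ul=VOXEL_UL):
    """Convert a cluster volume to a voxel count, insisting on whole voxels."""
    n_voxels, remainder = divmod(volume_ul, voxel_ul)
    if remainder:
        raise ValueError(f"{volume_ul} μl is not a whole number of voxels")
    return n_voxels


for region, volume in CLUSTERS:
    n = to_voxels(volume)
    flag = "" if n >= MIN_CLUSTER_VOXELS else "  (below threshold)"
    print(f"{region:32s} {n:4d} voxels{flag}")
```

All 19 volumes divide evenly into voxels; the smallest cluster (right temporo-occipital junction, 324 μl) is exactly 12 voxels, so it sits at the cluster-size threshold.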

RESULTS

Behavior

We first determined whether participants maintained their gaze on the target as they tapped their thumb by inspecting the eye position samples for the first 600 ms after the target turned black. During this interval, we identified gaze position samples >3° from the median gaze position for a particular target and classified these samples as eye movements only if four or more consecutive samples fell outside that range. Using this criterion, <2% of the trials across the group contained eye movements. Figure 1C illustrates gaze positions in the horizontal dimension for two representative participants. Note that gaze remained anchored on the target during finger movements for both hand positions (separated by the vertical dashed line). Because participants maintained fixation on the required target on nearly all trials, we did not reject any trials from the functional MRI analysis.
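The fixation criterion above can be written as a small run-length check on the horizontal eye trace. This is a sketch with hypothetical function names; it assumes each trial is a 1-D array of horizontal gaze samples (in degrees) covering the 600-ms window and that the target's median gaze position has already been computed (e.g., with `np.median` over that target's samples). At the 30-Hz sampling rate, the window holds 18 samples, and four consecutive samples span about 133 ms.

```python
import numpy as np

SAMPLE_RATE_HZ = 30  # eye-tracker sampling rate
WINDOW_S = 0.6       # analysis window after the tapping cue

n_samples = int(round(SAMPLE_RATE_HZ * WINDOW_S))  # 18 samples per trial window


def is_eye_movement(x_deg, target_median_deg, tol_deg=3.0, min_consecutive=4):
    """Flag a trial if at least `min_consecutive` consecutive horizontal samples
    lie more than `tol_deg` from the median gaze position for that target."""
    outside = np.abs(np.asarray(x_deg, dtype=float) - target_median_deg) > tol_deg
    run = 0
    for flag in outside:
        run = run + 1 if flag else 0
        if run >= min_consecutive:
            return True
    return False


def fraction_flagged(trials, target_median_deg):
    """Fraction of trials (each a 1-D array of samples) flagged as eye movements."""
    flags = [is_eye_movement(trial, target_median_deg) for trial in trials]
    return sum(flags) / len(flags)
```

Requiring several consecutive out-of-range samples, rather than any single one, keeps isolated tracker glitches (blinks, lost pupil frames) from being counted as eye movements.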

Next we tested whether manual reaction time (RT, defined as the elapsed time between the onset of the tapping cue and the occurrence of the first tap) differed as a function of hand and/or gaze position. We excluded two behavioral data sets because of hardware malfunctions. We tested the null hypothesis of no difference in RT across conditions using a two-way ANOVA (2 hand positions × 2 gaze positions) with repeated measures. This analysis revealed a significant interaction, F(1,12) = 23.18, P ≤ 0.001. Post hoc t-tests showed that, with the hand on the right, RT did not differ significantly between gaze positions (404 ± 13 and 410 ± 13 ms, means ± SE, for left and right gaze; P = 0.09), but with the hand on the left, RTs were significantly longer when gazing right (407 ± 13 and 429 ± 14 ms; P ≤ 0.001).

Movement-related activation

We found 19 clusters that exhibited finger-movement-related activation (Table 1, Fig. 2). We found activation in several regions traditionally considered movement related, including the left sensorimotor cortex (labeled SMC in Fig. 2), which spanned left M1 (BA4) and primary somatic sensory cortex (BA1–3); a contiguous region that encompassed the motor area of the left cingulate gyrus (Picard and Strick 2001) and the left SMA (hereafter called ACC/SMA; BA32, BA24, BA6; note P = 0.06); the left midcingulate gyrus (BA24; labeled mid-ACC); a region corresponding to the anterior superior parietal lobule (BA5) and part of the left precuneus (BA7; labeled SPL); and the right supramarginal gyrus (SMG, BA40). We also found activation in the left cuneus (BA31), left superior frontal gyrus (SFG, BA8), right precentral gyrus (BA4), and right temporo-occipital junction (BA39, BA22; labeled TOJ). In subcortical areas, we found two activation clusters in the left cerebellum (CR-VIIIb and CR-VI) and two clusters in the right cerebellum (CR-V and CR-VIIa). We also found two clusters in the left putamen (labeled put-1 and put-2) and clusters in the left insula, left subthalamic nucleus (STN), right thalamus, and left caudate nucleus.

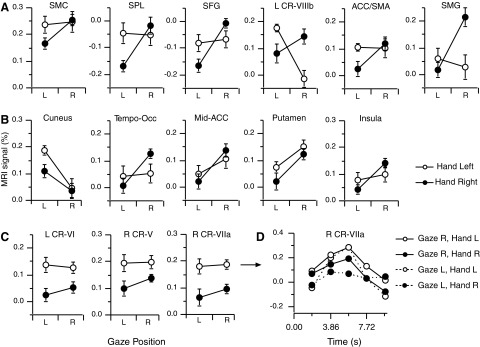

Next we assessed how gaze and hand positions modulated brain activation in these clusters with a two-way repeated-measures ANOVA (2 hand positions × 2 gaze positions). We found four clusters with a significant hand × gaze interaction: the left SFG (BA 8), left cerebellum (CR-VIIIb), right SMG (BA 40), and left SPL (BA 5–7). We also found two clusters with a marginal interaction, in the left ACC/SMA (BA 6, 24, 32; P = 0.088) and in the SMC (P = 0.109); all other clusters had nonsignificant interactions (P > 0.13). Figure 3A illustrates the location of significant activation for the clusters that exhibited a gaze × hand interaction. We then explored the interaction using post hoc t-tests. When the hand was on the right, the activation of these clusters was higher (P ≤ 0.05) when participants fixed gaze rightward than when they fixed gaze leftward, although the difference did not reach statistical significance for the left cerebellum (P = 0.23). However, when the hand was positioned to the left, only the cerebellum showed gaze effects, with more activation while gazing left (P ≤ 0.001); otherwise we did not reject the null hypothesis of no difference in finger-movement-related activation between rightward and leftward gaze. These results demonstrate that the effect of gaze orientation on movement-related activation shown in our previous work and that of others (Baker et al. 1999; Bédard et al. 2008; DeSouza et al. 2000) changed when the hand was moved to a different location.

FIG. 3.

A: brain areas showing finger-movement-related activation with hand-gaze interaction effects. Values reflect the average derived from the beta-weights across the response period. Legend applies to A–C. B: brain areas with gaze-only effects. Note that all clusters except for the 1 in the cuneus showed more activation with the hand positioned on the right. C: brain areas with hand-only effects. D: evoked hemodynamic response for the cluster found in the right cerebellum (CR-VIIa) for a single participant. Each point represents the functional MRI signal interpolated to twice the temporal resolution for illustration purposes. Note the different legend from A–C.

The ANOVA also revealed a significant main effect of gaze position in five other clusters exhibiting finger-movement-related activation (Fig. 3B): the left insula, left cuneus (BA31), left midcingulate gyrus (BA24, labeled mid-ACC), left putamen (labeled put-2), and right temporo-occipital junction (BA39-22, labeled TOJ). All these clusters except the left cuneus showed more activation when participants gazed right than left; the converse occurred for the left cuneus. Finally, the ANOVA revealed a significant main effect of hand position in the right cerebellum (CR-V and CR-VIIa) and left cerebellum (CR-VI), with all these regions showing more activation when the hand was positioned to the left of the body (Fig. 3C). Figure 3D illustrates the evoked hemodynamic response from one participant in a region of the right cerebellum (CR-VIIa) identified at the group level as exhibiting a difference in activation related to eye and hand position. Clusters in which no effects were found included the left putamen (put-1), caudate nucleus/thalamus, right thalamus, right precentral gyrus, and left STN/thalamus (data not shown).

DISCUSSION

To generate appropriate arm motor commands, the brain identifies the initial conditions for movement and computes target location. We investigated how the brain combines gaze orientation and initial hand position to generate movements because previous work in humans studied how the brain codes these movements in relation to gaze or hand position (e.g., Baker et al. 1999; Bédard et al. 2008; DeSouza et al. 2000; Wolpert et al. 1998) or how the brain integrates target location with effector choice (saccade, right or left arm) (Beurze et al. 2006; Connolly et al. 2003; DeSouza et al. 2000; Medendorp et al. 2005). We hypothesized that changing hand position would interact with gaze orientation to modulate finger-movement-related activation. Restricting our analysis to areas showing hand-movement-related activation, we found regions that exhibited combinatorial effects of gaze orientation and hand position. Activation sensitive to both gaze and hand position occurred in several cortical and subcortical regions, such as SMC, SPL, and ACC/SMA, primarily those previously identified as involved in generating hand movements, as has been shown in monkeys (Battaglia-Mayer et al. 2000, 2001; Buneo et al. 2002; Caminiti et al. 1990, 1991). We also found areas, such as the right and left cerebellum, that only used hand signals to code hand movements, and others, such as the cuneus, mid-ACC, and putamen, that only used gaze signals.

Our results replicate previous studies showing that gaze orientation modulates movement-related activation in humans (Baker et al. 1999; Bédard et al. 2008; DeSouza et al. 2000; Medendorp et al. 2003) and in monkeys (Batista et al. 1999; Buneo et al. 2002; Pesaran et al. 2006). We also found that these gaze effects were organized spatially in the workspace, as shown in our previous studies, with a strong preference for increased activation as gaze deviated rightward (Baker et al. 1999; Bédard et al. 2008; see also DeSouza et al. 2000; for monkeys, see Andersen and Mountcastle 1983; Boussaoud et al. 1998; Bremmer et al. 1998). However, a new finding of the current study regarding human brain organization concerns the interaction between gaze orientation and hand position, which echoes findings in non-human primates of combined representations for eye and hand movements (Battaglia-Mayer et al. 2001; Ferraina et al. 2001).

Previously, we found gaze effects in M1, ACC, and SMA (Baker et al. 1999; Bédard et al. 2008) that had not been observed before (DeSouza et al. 2000; Mushiake et al. 1997). In our earlier work, we also described augmented finger-movement-related activation (using the right hand) as gaze deviated toward the right sector of space. The current interaction, found in several regions, adds two features to the interactions between hand movements and gaze position. First, alignment of gaze and the right hand to the left of the body does not necessarily yield augmented activation; we observed this in only one cluster. Second, there appears instead to be a mixture of gaze and hand signals in the ACC and SMA components of a premotor system that projects both to M1 and to the spinal cord (Dum and Strick 1991, 1996, 2002; He et al. 1993, 1995). In M1, neuronal spiking relates to various kinematic and kinetic parameters of movement (Georgopoulos et al. 1982; Kakei et al. 2001; Paninski et al. 2004; Sergio et al. 2005), but no current evidence links gaze with output from M1 (Mushiake et al. 1997). Frontal and parietal regions, such as PMA and posterior parietal cortex, carry combined gaze and hand signals, and these areas project to M1. Although it has not been definitively established that individual PMA and parietal neurons exhibit combined eye-hand signals, the overwhelming evidence points to a convergence of gaze and hand position signals in M1 (Marconi et al. 2001); these signals could then be used in the process that generates hand movements, although further processing clearly occurs in spinal interneurons downstream of M1 (Yanai et al. 2008).

The current results extend prior findings of a movement-related role for the midcingulate gyrus. The activation cluster located in the ACC/SMA most likely corresponds to the ACC motor areas or rostral cingulate zone (Picard and Strick 2001), whereas the mid-ACC cluster likely corresponds to the caudal cingulate zone, a separate cingulate motor area (Picard and Strick 2001). In monkey ACC and SMA, neurons code hand movements in relation to target and hand positions (Crutcher et al. 2004). Note also that the ACC and SMA have reciprocal projections with M1 (Picard and Strick 1996) and the precuneus (Cavanna and Trimble 2006; Leichnetz 2001), both of which exhibited hand-gaze interaction in the current work. Our findings therefore support a role for the human cingulate cortex in generating even simple hand movements: gaze and hand signals converge here, suggesting that cingulate cortex participates in some way in transforming sensory information into movements.

Prior work has consistently suggested that the parietal cortex participates in generating hand movements using a variety of signals such as gaze and hand position (e.g., Battaglia-Mayer et al. 2000; Buneo et al. 2002), although in humans the convergence of these two signals had not yet been shown. We found that the left precuneus/BA5 processed both gaze and hand signals. Note that we also found deactivation, which could relate to our use of a finger-tapping task rather than goal-directed movements. This region appears to be substantially involved in sensory-motor actions, because many neuroimaging studies have shown activation there during hand movements (Astafiev et al. 2003; Beurze et al. 2007; Diedrichsen et al. 2005; Simon et al. 2002; Wenderoth et al. 2005). Furthermore, damage to this region impairs reaching (Battaglia-Mayer et al. 2006; Rondot et al. 1977). Neurons in monkey BA5 process visual, gaze, and hand position signals (Battaglia-Mayer et al. 2000, 2003; Buneo et al. 2002; Graziano et al. 2000; Lacquaniti et al. 1995; Scott et al. 1997) and project to frontal motor areas and subcortical areas (Johnson et al. 1996; Marconi et al. 2001; Petrides and Pandya 1984; Strick and Kim 1978). Thus this region seems well positioned to participate in the sensory-motor transformations necessary to generate hand movements, transforming sensory information about target location and hand position by means of gaze and hand signals.
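As a hedged illustration of why a region carrying both gaze and hand signals is well placed for such a transformation, consider one simple vector formulation, in the spirit of the direct visuomotor transformation scheme of Buneo et al. (2002) but not a model tested in the present study. The symbols are assumptions introduced here: $\mathbf{t}$, $\mathbf{h}$, and $\mathbf{g}$ denote target, hand, and gaze positions in a common body-centered frame, treated as plain vectors (ignoring the rotational geometry of the eye).

```latex
\underbrace{\mathbf{t}-\mathbf{h}}_{\text{required hand displacement}}
  \;=\;
\underbrace{(\mathbf{t}-\mathbf{g})}_{\text{target relative to gaze}}
  \;-\;
\underbrace{(\mathbf{h}-\mathbf{g})}_{\text{hand relative to gaze}}
```

The first term on the right is available from retinal input, while the second requires combining gaze and proprioceptive hand signals; the identity shows only that the two signals together suffice in principle, not how any brain region implements the subtraction.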

Other structures with key involvement in movement control, such as the inferior portion of the parietal cortex (SMG) and the cerebellum, showed movement-related activation. More importantly, our results further show that gaze and hand signals reach these regions. Baker et al. (1999) also reported gaze effects in this parietal area, although that study focused mostly on the contralateral hemisphere. The cerebellum receives inputs from the parietal cortex (Glickstein 2003; Middleton and Strick 1998) and participates in eye-hand coordination and in the regulation of ongoing movements (Desmurget et al. 2001; Miall and Reckess 2002; Robinson and Fuchs 2001). One might have expected the left SMG and right cerebellum to show these effects, because participants used their right hand. However, it is well recognized that portions of the ipsilateral hemisphere are involved in hand movements. Previous work demonstrated that the left cerebellum (CR-VIIIB) shows activation related to right-hand movements (Diedrichsen et al. 2005; Kawashima et al. 1998), and right IPL damage also leads to impairments in reaching (Karnath and Perenin 2005). Additionally, perturbing the right M1 with TMS alters the timing of muscle recruitment during right-hand movements (Davare et al. 2007), and patients with right-hemisphere stroke display motor impairments when using their right hand (Schaefer et al. 2007). Therefore our results support a role for the right SMG and left cerebellum even in simple hand movements, with gaze and hand signals modulating their activity.

The current results show that, even for simple hand movements with low spatial requirements, both gaze and hand positions exert substantial effects on brain activation. The findings may reflect a fundamental aspect of brain organization for eye-hand control that operates even in the absence of goal-directed movements. In particular, the ocular and manual systems may be organized to act synergistically when the eyes and hand are spatially aligned. This conjecture may find support in behavioral evidence of coordination between the eyes and the hand during goal-directed reaching (e.g., Johansson et al. 2001) and in reaction-time deficits that occur when visual inputs signaling manual responses cross the brain midline (e.g., Iacoboni and Zaidel 2004). Thus we suggest that the current results might represent baseline conditions for eye-hand coordination, on which the brain then superimposes processing specific to goal-directed movements.

Somewhat unexpectedly, we did not find finger-movement-related activation in premotor cortex or in regions of the medial intraparietal cortex that have previously been implicated in visual-motor transformations (Battaglia-Mayer et al. 2003; Cisek and Kalaska 2002; Pesaran et al. 2006). These negative results might relate to task differences: we used tapping, whereas most of the studies describing involvement of these areas in sensory-motor transformations used pointing. These differences in experimental design might suggest that these regions have specific spatial requirements for exhibiting movement-related activation. For example, our task entailed static gaze and arm positions for long periods of time, both of which could modify the way brain circuits respond to movements and thus alter the functional MRI signal (e.g., Cisek and Kalaska 2002; Pesaran et al. 2006).

We showed here that in humans the brain combines gaze orientation with hand position to generate simple, non-goal-directed hand movements. These results suggest that the brain uses multiple frames of reference for movements (Battaglia-Mayer et al. 2003; Carrozzo et al. 2002; Ghez et al. 2007; Lemay and Stelmach 2005), as opposed to a purely gaze-centered (Henriques et al. 1998; McIntyre et al. 1997) or hand-centered reference frame (Gordon et al. 1994; Soechting and Flanders 1989; Vindras and Viviani 1998). Much remains to be discovered about how different brain areas select a reference frame and under which conditions. Future studies that combine fields such as robotics and neuroscience may yield further insight into how the brain mediates sensory-motor transformations (Souères et al. 2007). The regions that we identified probably also integrate other signals about body configuration and the external world, such as visual, proprioceptive, and auditory information and efference copy, to yield the body representations that mediate effective and accurate movements.

GRANTS

This work was supported by National Eye Institute Grant R01-EY-01541 and by funds from the Ittleson Foundation.

The costs of publication of this article were defrayed in part by the payment of page charges. The article must therefore be hereby marked “advertisement” in accordance with 18 U.S.C. Section 1734 solely to indicate this fact.

REFERENCES

- Andersen RA, Essick GK, Siegel RM. Encoding of spatial location by posterior parietal neurons. Science 230: 456–458, 1985.

- Andersen RA, Mountcastle VB. The influence of the angle of gaze upon the excitability of the light-sensitive neurons of the posterior parietal cortex. J Neurosci 3: 532–548, 1983.

- Andersson F, Joliot M, Perchey G, Petit L. Eye position-dependent activity in the primary visual area as revealed by fMRI. Hum Brain Mapp 28: 673–680, 2007.

- Astafiev SV, Shulman GL, Stanley CM, Snyder AZ, Van Essen DC, Corbetta M. Functional organization of human intraparietal and frontal cortex for attending, looking, and pointing. J Neurosci 23: 4689–4699, 2003.

- Baker JT, Donoghue JP, Sanes JN. Gaze direction modulates finger movement activation patterns in human cerebral cortex. J Neurosci 19: 10044–10052, 1999.

- Batista AP, Buneo CA, Snyder LH, Andersen RA. Reach plans in eye-centered coordinates. Science 285: 257–260, 1999.

- Battaglia-Mayer A, Archambault PS, Caminiti R. The cortical network for eye-hand coordination and its relevance to understanding motor disorders of parietal patients. Neuropsychologia 44: 2607–2620, 2006.

- Battaglia-Mayer A, Caminiti R, Lacquaniti F, Zago M. Multiple levels of representation of reaching in the parieto-frontal network. Cereb Cortex 13: 1009–1022, 2003.

- Battaglia-Mayer A, Ferraina S, Genovesio A, Marconi B, Squatrito S, Molinari M, Lacquaniti F, Caminiti R. Eye-hand coordination during reaching. II. An analysis of the relationships between visuomanual signals in parietal cortex and parieto-frontal association projections. Cereb Cortex 11: 528–544, 2001.

- Battaglia-Mayer A, Ferraina S, Mitsuda T, Marconi B, Genovesio A, Onorati P, Lacquaniti F, Caminiti R. Early coding of reaching in the parietooccipital cortex. J Neurophysiol 83: 2374–2391, 2000.

- Bédard P, Proteau L. On the role of static and dynamic visual afferent information in goal-directed aiming movements. Exp Brain Res 138: 419–431, 2001.

- Bédard P, Proteau L. Movement planning of video and of manual aiming movements. Spat Vis 18: 275–296, 2005.

- Bédard P, Thangavel A, Sanes JN. Gaze influences finger movement-related and visual-related activation across the human brain. Exp Brain Res, 2008. doi: 10.1007/s00221-008-1339-3.

- Beurze SM, de Lange FP, Toni I, Medendorp WP. Integration of target and effector information in the human brain during reach planning. J Neurophysiol 97: 188–199, 2007.

- Beurze SM, Van Pelt S, Medendorp WP. Behavioral reference frames for planning human reaching movements. J Neurophysiol 96: 352–362, 2006.

- Bock O. Contribution of retinal versus extraretinal signals towards visual localization in goal-directed movements. Exp Brain Res 64: 476–482, 1986.

- Boussaoud D, Jouffrais C, Bremmer F. Eye position effects on the neuronal activity of dorsal premotor cortex in the macaque monkey. J Neurophysiol 80: 1132–1150, 1998.

- Brainard DH. The psychophysics toolbox. Spat Vis 10: 433–436, 1997.

- Bremmer F, Pouget A, Hoffmann KP. Eye position encoding in the macaque posterior parietal cortex. Eur J Neurosci 10: 153–160, 1998.

- Buneo CA, Jarvis MR, Batista AP, Andersen RA. Direct visuomotor transformations for reaching. Nature 416: 632–636, 2002.

- Caminiti R, Johnson PB, Galli C, Ferraina S, Burnod Y. Making arm movements within different parts of space: the premotor and motor cortical representation of a coordinate system for reaching to visual targets. J Neurosci 11: 1182–1197, 1991.

- Caminiti R, Johnson PB, Urbano A. Making arm movements within different parts of space: dynamic aspects in the primate motor cortex. J Neurosci 10: 2039–2058, 1990.

- Carrozzo M, Stratta F, McIntyre J, Lacquaniti F. Cognitive allocentric representations of visual space shape pointing errors. Exp Brain Res 147: 426–436, 2002.

- Cavanna AE, Trimble MR. The precuneus: a review of its functional anatomy and behavioural correlates. Brain 129: 564–583, 2006.

- Cisek P, Kalaska JF. Modest gaze-related discharge modulation in monkey dorsal premotor cortex during a reaching task performed with free fixation. J Neurophysiol 88: 1064–1072, 2002.

- Cohen MS. Parametric analysis of fMRI data using linear systems methods. Neuroimage 6: 93–103, 1997.

- Connolly JD, Andersen RA, Goodale MA. FMRI evidence for a “parietal reach region” in the human brain. Exp Brain Res 153: 140–145, 2003.

- Cox RW. AFNI: software for analysis and visualization of functional magnetic resonance neuroimages. Comput Biomed Res 29: 162–173, 1996.

- Cox RW, Hyde JS. Software tools for analysis and visualization of fMRI data. NMR Biomed 10: 171–178, 1997.

- Crutcher MD, Russo GS, Ye S, Backus DA. Target-, limb-, and context-dependent neural activity in the cingulate and supplementary motor areas of the monkey. Exp Brain Res 158: 278–288, 2004.

- Davare M, Duque J, Vandermeeren Y, Thonnard JL, Olivier E. Role of the ipsilateral primary motor cortex in controlling the timing of hand muscle recruitment. Cereb Cortex 17: 353–362, 2007.

- Desmurget M, Grea H, Grethe JS, Prablanc C, Alexander GE, Grafton ST. Functional anatomy of nonvisual feedback loops during reaching: a positron emission tomography study. J Neurosci 21: 2919–2928, 2001.

- Desmurget M, Grea H, Prablanc C. Final posture of the upper limb depends on the initial position of the hand during prehension movements. Exp Brain Res 119: 511–516, 1998.

- DeSouza JF, Dukelow SP, Gati JS, Menon RS, Andersen RA, Vilis T. Eye position signal modulates a human parietal pointing region during memory-guided movements. J Neurosci 20: 5835–5840, 2000.

- DeSouza JF, Dukelow SP, Vilis T. Eye position signals modulate early dorsal and ventral visual areas. Cereb Cortex 12: 991–997, 2002.

- Diedrichsen J, Hashambhoy Y, Rane T, Shadmehr R. Neural correlates of reach errors. J Neurosci 25: 9919–9931, 2005.

- Dum RP, Strick PL. The origin of corticospinal projections from the premotor areas in the frontal lobe. J Neurosci 11: 667–689, 1991.

- Dum RP, Strick PL. Spinal cord terminations of the medial wall motor areas in macaque monkeys. J Neurosci 16: 6513–6525, 1996.

- Dum RP, Strick PL. Motor areas in the frontal lobe of the primate. Physiol Behav 77: 677–682, 2002.

- Duvernoy HM. The Human Brain: Surface, Three-Dimensional Sectional Anatomy and MRI. New York: Springer-Verlag, 1991.

- Enright JT. The non-visual impact of eye orientation on eye-hand coordination. Vision Res 35: 1611–1618, 1995.

- Ferraina S, Battaglia-Mayer A, Genovesio A, Marconi B, Onorati P, Caminiti R. Early coding of visuomanual coordination during reaching in parietal area PEc. J Neurophysiol 85: 462–467, 2001.

- Georgopoulos AP, Kalaska JF, Caminiti R, Massey JT. On the relations between the direction of two-dimensional arm movements and cell discharge in primate motor cortex. J Neurosci 2: 1527–1537, 1982.

- Ghez C, Scheidt R, Heijink H. Different learned coordinate frames for planning trajectories and final positions in reaching. J Neurophysiol 98: 3614–3626, 2007.

- Glickstein M. Subcortical projections of the parietal lobes. Adv Neurol 93: 43–55, 2003.

- Gorbet DJ, Staines WR, Sergio LE. Brain mechanisms for preparing increasingly complex sensory to motor transformations. Neuroimage 23: 1100–1111, 2004.

- Gordon J, Ghilardi MF, Ghez C. Accuracy of planar reaching movements. I. Independence of direction and extent variability. Exp Brain Res 99: 97–111, 1994.

- Graziano MS. Is reaching eye-centered, body-centered, hand-centered, or a combination? Rev Neurosci 29: 175–185, 2001.

- Graziano MS, Cooke DF, Taylor CS. Coding the location of the arm by sight. Science 290: 1782–1786, 2000.

- He SQ, Dum RP, Strick PL. Topographic organization of corticospinal projections from the frontal lobe: motor areas on the lateral surface of the hemisphere. J Neurosci 13: 952–980, 1993.

- He SQ, Dum RP, Strick PL. Topographic organization of corticospinal projections from the frontal lobe: motor areas on the medial surface of the hemisphere. J Neurosci 15: 3284–3306, 1995.

- Henriques DY, Klier EM, Smith MA, Lowy D, Crawford JD. Gaze-centered remapping of remembered visual space in an open-loop pointing task. J Neurosci 18: 1583–1594, 1998.

- Iacoboni M, Zaidel E. Interhemispheric visuo-motor integration in humans: the role of the superior parietal cortex. Neuropsychologia 42: 419–425, 2004.

- Johansson RS, Westling G, Backstrom A, Flanagan JR. Eye-hand coordination in object manipulation. J Neurosci 21: 6917–6932, 2001.

- Johnson PB, Ferraina S, Bianchi L, Caminiti R. Cortical networks for visual reaching: physiological and anatomical organization of frontal and parietal lobe arm regions. Cereb Cortex 6: 102–119, 1996.

- Jouffrais C, Boussaoud D. Neuronal activity related to eye-hand coordination in the primate premotor cortex. Exp Brain Res 128: 205–209, 1999.

- Kakei S, Hoffman DS, Strick PL. Direction of action is represented in the ventral premotor cortex. Nat Neurosci 4: 1020–1025, 2001.

- Karnath HO, Perenin MT. Cortical control of visually guided reaching: evidence from patients with optic ataxia. Cereb Cortex 15: 1561–1569, 2005.

- Kawashima R, Matsumura M, Sadato N, Naito E, Waki A, Nakamura S, Matsunami K, Fukuda H, Yonekura Y. Regional cerebral blood flow changes in human brain related to ipsilateral and contralateral complex hand movements: a PET study. Eur J Neurosci 10: 2254–2260, 1998.

- Kwong KK, Belliveau JW, Chesler DA, Goldberg IE, Weisskoff RM, Poncelet BP, Kennedy DN, Hoppel BE, Cohen MS, Turner R. Dynamic magnetic resonance imaging of human brain activity during primary sensory stimulation. Proc Natl Acad Sci USA 89: 5675–5679, 1992.

- Lacquaniti F, Guigon E, Bianchi L, Ferraina S, Caminiti R. Representing spatial information for limb movement: role of area 5 in the monkey. Cereb Cortex 5: 391–409, 1995.

- Leichnetz GR. Connections of the medial posterior parietal cortex (area 7m) in the monkey. Anat Rec 263: 215–236, 2001.

- Lemay M, Stelmach GE. Multiple frames of reference for pointing to a remembered target. Exp Brain Res 164: 301–310, 2005.

- Lloyd DM, Shore DI, Spence C, Calvert GA. Multisensory representation of limb position in human premotor cortex. Nat Neurosci 6: 17–18, 2003.

- Makin TR, Holmes NP, Zohary E. Is that near my hand? Multisensory representation of peripersonal space in human intraparietal sulcus. J Neurosci 27: 731–740, 2007.

- Marconi B, Genovesio A, Battaglia-Mayer A, Ferraina S, Squatrito S, Molinari M, Lacquaniti F, Caminiti R. Eye-hand coordination during reaching. I. Anatomical relationships between parietal and frontal cortex. Cereb Cortex 11: 513–527, 2001.

- McIntyre J, Stratta F, Lacquaniti F. Viewer-centered frame of reference for pointing to memorized targets in three-dimensional space. J Neurophysiol 78: 1601–1618, 1997.

- Medendorp WP, Goltz HC, Vilis T. Remapping the remembered target location for anti-saccades in human posterior parietal cortex. J Neurophysiol 94: 734–740, 2005.

- Medendorp WP, Goltz HC, Vilis T, Crawford JD. Gaze-centered updating of visual space in human parietal cortex. J Neurosci 23: 6209–6214, 2003.

- Miall RC, Reckess GZ. The cerebellum and the timing of coordinated eye and hand tracking. Brain Cogn 48: 212–226, 2002.

- Middleton FA, Strick PL. The cerebellum: an overview. Trends Neurosci 21: 367–369, 1998.

- Mushiake H, Tanatsugu Y, Tanji J. Neuronal activity in the ventral part of premotor cortex during target-reach movement is modulated by direction of gaze. J Neurophysiol 78: 567–571, 1997.

- Ogawa S, Tank DW, Menon R, Ellermann JM, Kim SG, Merkle H, Ugurbil K. Intrinsic signal changes accompanying sensory stimulation: functional brain mapping with magnetic resonance imaging. Proc Natl Acad Sci USA 89: 5951–5955, 1992.

- Oldfield RC. The assessment and analysis of handedness: the Edinburgh inventory. Neuropsychologia 9: 97–113, 1971.

- Paninski L, Shoham S, Fellows MR, Hatsopoulos NG, Donoghue JP. Superlinear population encoding of dynamic hand trajectory in primary motor cortex. J Neurosci 24: 8551–8561, 2004.

- Pelli DG. The VideoToolbox software for visual psychophysics: transforming numbers into movies. Spat Vis 10: 437–442, 1997.

- Pellijeff A, Bonilha L, Morgan PS, McKenzie K, Jackson SR. Parietal updating of limb posture: an event-related fMRI study. Neuropsychologia 44: 2685–2690, 2006.

- Pesaran B, Nelson MJ, Andersen RA. Dorsal premotor neurons encode the relative position of the hand, eye, and goal during reach planning. Neuron 51: 125–134, 2006.

- Petrides M, Pandya DN. Projections to the frontal cortex from the posterior parietal region in the rhesus monkey. J Comp Neurol 228: 105–116, 1984.

- Picard N, Strick PL. Motor areas of the medial wall: a review of their location and functional activation. Cereb Cortex 6: 342–353, 1996.

- Picard N, Strick PL. Imaging the premotor areas. Curr Opin Neurobiol 11: 663–672, 2001.

- Prablanc C, Echallier JE, Jeannerod M, Komilis E. Optimal response of eye and hand motor systems in pointing at a visual target. II. Static and dynamic visual cues in the control of hand movement. Biol Cybern 35: 183–187, 1979.

- Robinson FR, Fuchs AF. The role of the cerebellum in voluntary eye movements. Annu Rev Neurosci 24: 981–1004, 2001.

- Rondot P, de Recondo J, Dumas JL. Visuomotor ataxia. Brain 100: 355–376, 1977.

- Rosenbluth D, Allman JM. The effect of gaze angle and fixation distance on the responses of neurons in V1, V2, and V4. Neuron 33: 143–149, 2002.

- Rossetti Y, Stelmach G, Desmurget M, Prablanc C, Jeannerod M. The effect of viewing the static hand prior to movement onset on pointing kinematics and variability. Exp Brain Res 101: 323–330, 1994.

- Rothwell JC, Traub MM, Day BL, Obeso JA, Thomas PK, Marsden CD. Manual motor performance in a deafferented man. Brain 105: 515–542, 1982.

- Rushworth MF, Nixon PD, Renowden S, Wade DT, Passingham RE. The left parietal cortex and motor attention. Neuropsychologia 35: 1261–1273, 1997.

- Sanes JN, Mauritz KH, Evarts EV, Dalakas MC, Chu A. Motor deficits in patients with large-fiber sensory neuropathy. Proc Natl Acad Sci USA 81: 979–982, 1984.

- Schaefer SY, Haaland KY, Sainburg RL. Ipsilesional motor deficits following stroke reflect hemispheric specializations for movement control. Brain 130: 2146–2158, 2007.

- Schmahmann JD, Doyon J, McDonald D, Holmes C, Lavoie K, Hurwitz AS, Kabani N, Toga A, Evans A, Petrides M. Three-dimensional MRI atlas of the human cerebellum in proportional stereotaxic space. Neuroimage 10: 233–260, 1999.

- Scott SH, Kalaska JF. Reaching movements with similar hand paths but different arm orientations. I. Activity of individual cells in motor cortex. J Neurophysiol 77: 826–852, 1997.

- Scott SH, Sergio LE, Kalaska JF. Reaching movements with similar hand paths but different arm orientations. II. Activity of individual cells in dorsal premotor cortex and parietal area 5. J Neurophysiol 78: 2413–2426, 1997.

- Sergio LE, Hamel-Paquet C, Kalaska JF. Motor cortex neural correlates of output kinematics and kinetics during isometric-force and arm-reaching tasks. J Neurophysiol 94: 2353–2378, 2005.

- Sergio LE, Kalaska JF. Systematic changes in motor cortex cell activity with arm posture during directional isometric force generation. J Neurophysiol 89: 212–228, 2003.

- Simon O, Mangin JF, Cohen L, Le Bihan D, Dehaene S. Topographical layout of hand, eye, calculation, and language-related areas in the human parietal lobe. Neuron 33: 475–487, 2002.

- Soechting JF, Flanders M. Errors in pointing are due to approximations in sensorimotor transformations. J Neurophysiol 62: 595–608, 1989.

- Souères P, Jouffrais C, Celebrini S, Trotter Y. Robotics insight for the modeling of visually guided movements in primates. In: Biology and Control Theory: Current Challenges, edited by Queinnec et al. LNCIS 357. Berlin: Springer-Verlag, 2007, p. 53–75.

- Strick PL, Kim CC. Input to primate motor cortex from posterior parietal cortex (area 5). I. Demonstration by retrograde transport. Brain Res 157: 325–330, 1978.

- Trotter Y, Celebrini S. Gaze direction controls response gain in primary visual-cortex neurons. Nature 398: 239–242, 1999.

- Vindras P, Viviani P. Frames of reference and control parameters in visuomanual pointing. J Exp Psychol Hum Percept Perform 24: 569–591, 1998.

- Wenderoth N, Debaere F, Sunaert S, Swinnen SP. The role of anterior cingulate cortex and precuneus in the coordination of motor behavior. Eur J Neurosci 22: 235–246, 2005.

- Wolpert DM, Goodbody SJ, Husain M. Maintaining internal representations: the role of the human superior parietal lobe. Nat Neurosci 1: 529–533, 1998.

- Yanai Y, Adamit N, Israel Z, Harel R, Prut Y. Coordinate transformation is first completed downstream of primary motor cortex. J Neurosci 28: 1728–1732, 2008.