Abstract

Purpose: To determine the quality of reporting of diagnostic accuracy studies before and after the Standards for Reporting of Diagnostic Accuracy (STARD) statement publication and to determine whether there is a difference in the quality of reporting by comparing STARD (endorsing) and non-STARD (nonendorsing) journals.

Materials and Methods: Diagnostic accuracy studies were identified by hand searching six STARD and six non-STARD journals for 2001, 2002, 2004, and 2005. Diagnostic accuracy studies (n = 240) were assessed by using a checklist of 13 of 25 STARD items. The change in the mean total score on the modified STARD checklist was evaluated with analysis of covariance. The change in proportion of times that each individual STARD item was reported before and after STARD statement publication was evaluated (χ2 tests for linear trend).

Results: With mean total score as the dependent variable, analysis of covariance showed that the interaction between the two independent factors (STARD or non-STARD journal and year of publication) was not significant (F = 0.664, df = 3, partial η2 = 0.009, P = .58). Additionally, the frequency with which individual items on the STARD checklist were reported remained relatively constant before and after STARD statement publication, with little difference between STARD and non-STARD journals.

Conclusion: After publication of the STARD statement in 2003, the quality of reporting of diagnostic accuracy studies remained similar to pre–STARD statement publication levels, and there was no meaningful difference (ie, one additional item on the checklist of 13 of 25 STARD items being reported) in the quality of reporting between those journals that published the STARD statement and those that did not.

© RSNA, 2008

Before incorporating evidence into practice or including the results of a study in a systematic review, the clinician or researcher must assess the validity of the study results, as flaws in research design can lead to biased results. Previous research has shown that diagnostic test accuracy may be overestimated in studies with methodological shortcomings (1–3). To avoid basing decisions on biased information, the clinician or researcher must critically appraise the evidence. This appraisal can be difficult because many clinicians and researchers lack critical appraisal skills (4) and, even for those with adequate skills, the substandard reporting of many published research reports hampers critical appraisal. A survey of diagnostic accuracy studies published in four major medical journals between 1978 and 1993 showed that the methodological quality of the studies was mediocre at best (5). The authors of the survey also reported that their quality assessments were hampered because many reports lacked key information about important design elements (5).

Awareness of the need to improve the quality of reporting of diagnostic accuracy studies was increased with the publication of the Standards for Reporting of Diagnostic Accuracy (STARD) statement (6,7). The STARD statement provides guidelines for improving the quality of reporting of studies of diagnostic accuracy and draws on 33 previously published checklists (8). It is hypothesized that complete and informative reporting will lead to better decisions in health care.

Similar initiatives have been launched previously for the reporting of therapy articles (Consolidated Standards of Reporting Trials [CONSORT]) (9) and systematic reviews (Quality of Reporting of Meta-analyses, known as QUOROM [10]). In addition, studies (11,12) in which the effect of these initiatives was evaluated have been published. For example, Moher et al (11) found that the use of the CONSORT statement was associated with improvements in the quality of reports of randomized controlled trials.

To date, several studies have used the STARD checklist to summarize the quality of reporting of diagnostic accuracy studies (13–15). In only two studies (16,17) did the researchers investigate whether the quality of reporting had improved since the STARD statement was published. In one study (16), researchers found a slight improvement in the quality of reporting for only a few items on the STARD checklist, whereas in the other study (17), researchers found no improvement. Both studies, however, conducted their post–STARD statement publication evaluation in 2004, which may have been too soon to detect the full impact of the STARD statement, because the first journals to publish the statement did so in 2003.

In view of the mounting interest in evidence-based care, including diagnostic test information, and the rise of systematic reviews of diagnostic accuracy studies, it is important to evaluate the quality of reporting of diagnostic studies and the potential impact of the STARD initiative. The purpose of this study was to determine the quality of reporting of diagnostic accuracy studies before and after STARD statement publication and to determine whether there is a difference in the quality of reporting by comparing STARD (endorsing) and non-STARD (nonendorsing) journals.

MATERIALS AND METHODS

This research was funded by the U.S. National Library of Medicine and Canadian Institutes of Health. The funding source had no involvement in the collection, analysis, or interpretation of data or in the writing of the report.

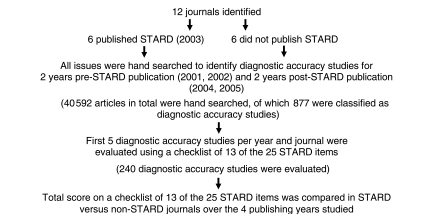

Figure 1 outlines the study design. Briefly, diagnostic accuracy studies were identified by hand searching six journals that published the STARD statement in 2003 and six journals that did not publish the STARD statement. The 12 hand-searched journals were selected by using data from the Clinical Hedges Study (18,19). Of the 170 journals included in the Clinical Hedges database, six published the STARD statement in 2003 (herein referred to as the STARD journals). These six journals were American Journal of Roentgenology and Radiology (two journals about diagnostic radiology); Annals of Internal Medicine (one journal about internal medicine); and BMJ, JAMA, and Lancet (three journals about general medicine). Five journals with a similar content area did not publish the STARD statement. These five journals were Pediatric Radiology (one journal about diagnostic radiology); Archives of Internal Medicine (one journal about internal medicine); and American Journal of Medicine, British Journal of General Practice, and New England Journal of Medicine (three journals about general medicine).

Figure 1.

Study design.

To locate an additional comparator journal in the diagnostic area, as one was not available in the Clinical Hedges database, we used a method similar to that used by Moher et al (11) to choose a journal subset for evaluating the effect of the CONSORT statement for reporting clinical trials. Criteria for choosing the sixth comparator journal were as follows: (a) Journals were identified by the subject category “Radiology, Nuclear Medicine and Medical Imaging” on the Institute for Scientific Information Journal Citation Reports Web site at http://apps.isiknowledge.com/. (b) The journals in this subject category were sorted in descending order of journal impact factor. (c) The first journal that was available through the Health Sciences Library at McMaster University, Hamilton, Ontario, Canada, that published at least one diagnostic accuracy study (after review of the contents of two 2001 issues of the journal), and that was indexed in both MEDLINE and EMBASE was selected. European Radiology was chosen by using these criteria and completes the set of six comparator journals, herein referred to as the non-STARD journals.

Four years of publication were studied independently: The years 2001 and 2002 were studied to obtain a pre–STARD statement publication assessment; 2004 and 2005 were studied to obtain a post–STARD statement publication assessment. Diagnostic accuracy studies were defined as those in which the outcomes from one or more tests under evaluation were compared with outcomes from the reference standard, both measured in the same study population (7). Three trained research assistants, who all had master's degree–level training in critical appraisal, participated in three training sessions held over 1 month. In the training sessions, the research assistants discussed and honed their application of the definition of diagnostic accuracy studies. Crude agreement for identifying those studies was also calculated. The research assistants independently reviewed all issues of the 12 journals for the 4 years of publication to identify all diagnostic accuracy studies.

Each identified diagnostic accuracy study was evaluated against the STARD checklist by assigning a yes or no response to each item, depending on whether the authors had reported it. The STARD checklist comprises 25 items. Items 13 (“Methods for calculating test reproducibility, if done”), 23 (“Estimates of variability of diagnostic accuracy between subgroups of participants, readers or centers, if done”), and 24 (“Estimates of test reproducibility, if done”) were removed from the checklist because all carry the qualifier “if done”: if one of these items was not reported in a paper, it would be impossible to determine whether the item was not done or was done but not reported.

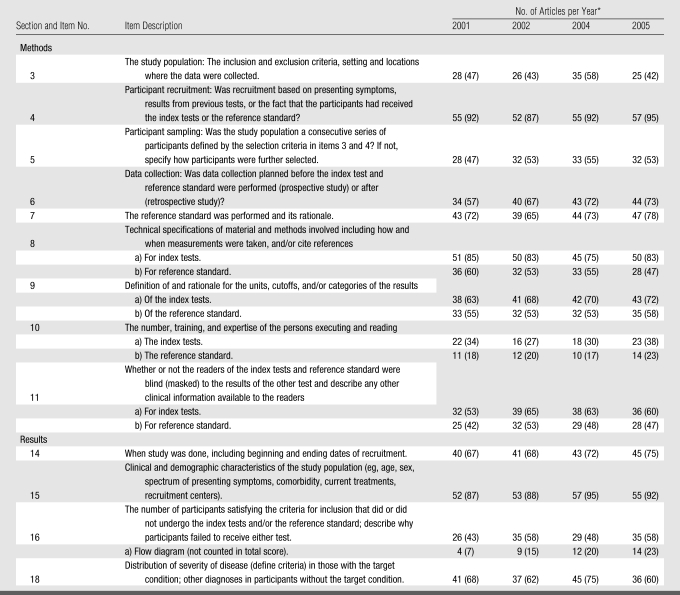

In addition, only those STARD items that have been empirically shown to have a potentially biasing effect on the results of diagnostic accuracy studies or that appear to account for variation between studies were evaluated. On the basis of the findings in three studies (1–3), the following features, with the corresponding STARD checklist item numbers, appear to have a biasing effect or to account for variation between studies and should therefore be reported: (a) a description of the distribution of disease severity in the study population (item 18), (b) a description of the reference standard and the test (items 7–10), (c) whether the readers of the test and of the reference standard were blinded to the results of the other (item 11), (d) a description of the study population (items 3–5 and 14–16), and (e) a description of the data collection process (item 6). Thus, only these 13 items were assessed in this study; the resulting checklist of 13 of the 25 STARD items is shown in Table 1.

Table 1.

Reporting of Items on a Checklist of STARD Items for Evaluation of Studies of Diagnostic Accuracy according to Year of Publication

Note.—The checklist for this study included 13 of 25 items in the STARD checklist.

Numbers in parentheses are percentages, which were calculated on the basis of 60 articles per year of publication.

Items 8–11 on the checklist concern the index tests as well as the reference standard, so each of these four items was split into two questions, one for the index tests and one for the reference standard. A total STARD score for each article was calculated by summing the reported items (0–13 points available). Each item received a unit weight, except items 8–11, for which each half (index tests and reference standard) received a weight of 0.5.
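The item selection and weighting rule can be made concrete with a short sketch. The grouping of items follows the five features listed above; the dictionary layout, the function names, and the way an article's yes/no answers are passed in are hypothetical choices made for illustration, not the authors' data format.

```python
# Hypothetical encoding of the 13-item checklist and its weighting rule.
# Item numbers follow the STARD checklist; the data layout is an assumption.

# Retained items, grouped by the feature they describe.
SELECTED_ITEMS = {
    "study population": [3, 4, 5, 14, 15, 16],
    "data collection": [6],
    "reference standard and test": [7, 8, 9, 10],
    "blinding": [11],
    "disease severity": [18],
}

# Items split into an index-test half and a reference-standard half.
SPLIT_ITEMS = {8, 9, 10, 11}


def all_items() -> list[int]:
    """Return the 13 retained STARD item numbers in ascending order."""
    return sorted(i for group in SELECTED_ITEMS.values() for i in group)


def stard_score(unsplit: dict[int, bool], split: dict[int, tuple[bool, bool]]) -> float:
    """Total score: 1 point per reported unsplit item, 0.5 per reported half of a split item.

    unsplit maps item number -> reported?; split maps item number ->
    (reported for index tests?, reported for reference standard?).
    Missing entries count as not reported.
    """
    score = 0.0
    for item in all_items():
        if item in SPLIT_ITEMS:
            index_reported, reference_reported = split.get(item, (False, False))
            score += 0.5 * index_reported + 0.5 * reference_reported
        else:
            score += 1.0 if unsplit.get(item, False) else 0.0
    return score
```

For example, an article that reports everything except item 18 and describes the technical specifications of the index test (item 8) but not those of the reference standard would score 11.5 of 13 under this sketch.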

Four trained research assistants who all had master's degree–level training in critical appraisal (three of whom were involved in identifying diagnostic accuracy studies) participated in three training sessions held to review in detail the application of the STARD checklist to 21 articles. These research assistants independently evaluated the diagnostic accuracy articles (assigned randomly) in duplicate (ie, two research assistants per article). Disagreements on checklist items were resolved by consensus with a third trained research assistant, one of the four who had not been involved in the duplicate assessment of the article in question. Consensus evaluations were used in the analysis. Crude agreement levels were calculated for each of the 13 items after a series of 42 consecutive articles was assessed. Once crude agreement for all 13 items had reached more than 95% for at least two of the four readers, these two readers independently evaluated the remaining articles. Thus, 118 articles were assessed in duplicate (2124 individual items assessed with 55 disagreements), with the consensus evaluations used in the analysis, and 122 articles were assessed by one or the other of two readers after they attained more than 95% crude agreement.
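Crude agreement is simply the share of paired judgments on which two readers gave the same answer. The sketch below, with hypothetical function names, computes it from counts or from item-level ratings and applies the "more than 95% on every item" rule described above; applied to the duplicate assessments (2124 items, 55 disagreements) it gives an overall crude agreement of about 97.4%.

```python
from collections.abc import Sequence


def crude_agreement(n_judgments: int, n_disagreements: int) -> float:
    """Proportion of paired yes/no judgments on which two readers agreed."""
    if n_judgments <= 0:
        raise ValueError("n_judgments must be positive")
    if not 0 <= n_disagreements <= n_judgments:
        raise ValueError("n_disagreements must lie between 0 and n_judgments")
    return (n_judgments - n_disagreements) / n_judgments


def agreement_from_ratings(reader_a: Sequence[bool], reader_b: Sequence[bool]) -> float:
    """Crude agreement computed from two readers' item-level yes/no ratings."""
    if len(reader_a) != len(reader_b):
        raise ValueError("both readers must rate the same items")
    disagreements = sum(a != b for a, b in zip(reader_a, reader_b))
    return crude_agreement(len(reader_a), disagreements)


def readers_qualify(per_item_agreement: Sequence[float], threshold: float = 0.95) -> bool:
    """True when crude agreement exceeds the threshold on every checklist item."""
    return all(value > threshold for value in per_item_agreement)


overall = crude_agreement(2124, 55)  # duplicate assessments reported above
print(f"{overall:.1%}")  # 97.4%
```

Crude agreement does not correct for chance agreement (as kappa does), which is worth keeping in mind for items that are almost always or almost never reported.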

The total score on the STARD checklist was analyzed with software (SPSS, version 13; SPSS, Chicago, Ill) as the dependent variable in an analysis of covariance with two independent factors, journal type (STARD vs non-STARD) and year of publication (2001, 2002, 2004, and 2005), and one covariate, content area of the journal (ie, diagnostic radiology vs other [general and internal medicine]). A critical level of P < .05 was used to define significance. Effect sizes, which estimate the proportion of variance in the dependent variable (ie, total score on the STARD checklist) explained by the independent variables (ie, journal type and year of publication), are expressed as partial η2. A second analysis of covariance was conducted with year of publication as a binary variable (pre–STARD [2001 and 2002] vs post–STARD [2004 and 2005] statement publication) to increase the statistical power to detect a difference in total scores.

Norman and Streiner (20) provide two methods, based on the work of Kleinbaum and colleagues (21), for calculating sample size for an analysis of covariance. The second method is the more relevant for this study: it uses the formula for a t test and bases the sample size on the comparison of most interest, which in this study is the difference in total score between STARD and non-STARD journals. Research tool software (Arcus QuickStat Biomedical; Research Solutions, Cambridge, England) was used with a mean difference score of 2 and a standard deviation of 5.1 (α = .05, β = .20). A difference of 2 points was considered meaningful because all 13 items on the selected checklist of STARD criteria have been empirically shown to have a biasing effect on diagnostic accuracy studies or to account for variation between studies; the standard deviation of 5.1 is that of the total score on a 40-item CONSORT scale in 1994 in the CONSORT-adopting journals (11). On the basis of this calculation, 102 articles are required in each of the STARD and non-STARD journal sets.
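The calculation can be approximated with the usual normal-approximation formula for comparing two means, n per group = 2(z₁₋α/₂ + z₁₋β)²σ²/Δ². This is a sketch under that assumption: the exact method used by the Arcus QuickStat software is not stated, and a t-based calculation or different rounding can shift the result by an article or so.

```python
from statistics import NormalDist


def n_per_group(delta: float, sd: float, alpha: float = 0.05, beta: float = 0.20) -> float:
    """Approximate articles per group for a two-sided comparison of two means.

    Normal approximation: n = 2 * ((z_{1-alpha/2} + z_{1-beta}) * sd / delta) ** 2.
    """
    z = NormalDist()  # standard normal distribution
    z_alpha = z.inv_cdf(1 - alpha / 2)  # about 1.96 for alpha = .05
    z_beta = z.inv_cdf(1 - beta)  # about 0.84 for beta = .20 (80% power)
    return 2 * ((z_alpha + z_beta) * sd / delta) ** 2


n = n_per_group(delta=2, sd=5.1)
print(round(n, 2))  # 102.07
```

The unrounded result, about 102.07 articles per group, is consistent with the 102 reported above; conventionally rounding up would give 103, whereas rounded z values of 1.96 and 0.84 give just under 102.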

To provide equal samples for each journal title across the 4 years of sampling, five diagnostic accuracy articles per journal per year of publication are required (5 articles × 6 journals × 4 years = 120 articles per journal set, which exceeds the 102 required), leading to a total of 240 articles. The first five diagnostic accuracy articles in each journal for each year of publication were chosen for evaluation with the checklist we developed on the basis of the STARD criteria. When fewer than five diagnostic accuracy articles were published in a given journal in a given year, additional diagnostic accuracy articles were included from the other journals in the same journal subset (ie, STARD journals or non-STARD journals) for that year.
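The allocation arithmetic and the replacement rule can be sketched as follows. The paragraph above does not say in what order the additional articles were drawn from the other journals, so the fill order here (remaining articles taken journal by journal, in the order the journals are listed) is an assumption, and the function and variable names are hypothetical.

```python
from math import ceil

REQUIRED_PER_SET = 102  # from the sample size calculation
JOURNALS_PER_SET = 6
YEARS = (2001, 2002, 2004, 2005)

# 102 / (6 journals x 4 years) = 4.25 articles per journal-year, so 5 are needed.
PER_JOURNAL_YEAR = ceil(REQUIRED_PER_SET / (JOURNALS_PER_SET * len(YEARS)))
TOTAL_ARTICLES = PER_JOURNAL_YEAR * JOURNALS_PER_SET * len(YEARS) * 2  # two journal sets


def select_articles(candidates: dict[str, list[str]], per_journal: int = PER_JOURNAL_YEAR) -> list[str]:
    """Select articles for one journal set in one year of publication.

    candidates maps each journal to its diagnostic accuracy articles in
    publication order. The first per_journal articles of each journal are
    taken; any shortfall is filled (assumption) with the next unused articles
    from the other journals, in the order the journals are listed. If the set
    as a whole has too few articles, fewer than the target are returned.
    """
    target = per_journal * len(candidates)
    chosen: list[str] = []
    leftovers: list[str] = []
    for articles in candidates.values():
        chosen.extend(articles[:per_journal])
        leftovers.extend(articles[per_journal:])
    shortfall = target - len(chosen)
    chosen.extend(leftovers[:shortfall])
    return chosen


print(PER_JOURNAL_YEAR, TOTAL_ARTICLES)  # 5 240
```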

The proportion of articles reporting each of the 13 STARD items across the 4 years of publication was also analyzed with the research tool software by using the Armitage χ2 test for linear trend on a 2 × 4 table (item reported or not reported × 4 years of publication). A critical level of P < .05 was used to define significance.
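For readers who want to reproduce this kind of analysis, the sketch below implements the Armitage (Cochran–Armitage) χ2 test for linear trend in proportions on a 2 × k table, with 1 degree of freedom. Two assumptions should be flagged: the years are given equally spaced scores 1–4 (the text does not say which scores were used; using the calendar years 2001, 2002, 2004, and 2005 would weight the gap differently), and some implementations multiply the statistic by (N − 1)/N, so results from the research tool software may differ slightly.

```python
from math import erfc, sqrt
from typing import Optional, Sequence


def armitage_trend_test(
    reported: Sequence[int],
    totals: Sequence[int],
    scores: Optional[Sequence[float]] = None,
) -> tuple[float, float]:
    """Armitage chi-square test for a linear trend in proportions (1 df).

    reported[i] is the number of articles reporting the item in period i and
    totals[i] the number assessed in that period. Scores default to 1, 2, 3, ...
    """
    if len(reported) != len(totals):
        raise ValueError("reported and totals must have the same length")
    if scores is None:
        scores = list(range(1, len(totals) + 1))
    n = sum(totals)
    p_bar = sum(reported) / n  # pooled proportion across all periods
    if not 0 < p_bar < 1:
        raise ValueError("the pooled proportion must be strictly between 0 and 1")
    # Score-weighted deviation of observed counts from those expected under no trend.
    deviation = sum(t * (x - m * p_bar) for t, x, m in zip(scores, reported, totals))
    sum_mt = sum(m * t for m, t in zip(totals, scores))
    sum_mt2 = sum(m * t * t for m, t in zip(totals, scores))
    variance = p_bar * (1 - p_bar) * (sum_mt2 - sum_mt**2 / n)
    chi2 = deviation**2 / variance
    p_value = erfc(sqrt(chi2 / 2))  # upper tail of a chi-square with 1 df
    return chi2, p_value
```

With 60 articles per year, as in this study, a call such as `armitage_trend_test([12, 15, 20, 24], [60, 60, 60, 60])` (hypothetical counts, not data from Table 1) returns the trend statistic and its two-sided P value.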

RESULTS

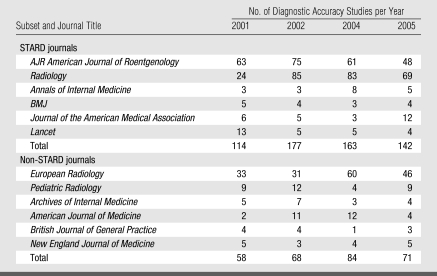

Of the 40 592 articles reviewed, 877 were classified as diagnostic accuracy studies. The three research assistants attained a crude agreement of 96% in classifying 428 articles as diagnostic accuracy studies. Table 2 shows the number of diagnostic accuracy studies in each of the 12 hand-searched journals according to year of publication. Most of the diagnostic accuracy studies were published in three of the diagnostic radiology journals (American Journal of Roentgenology, Radiology, and European Radiology).

Table 2.

Number of Diagnostic Accuracy Studies according to Journal Title and Year

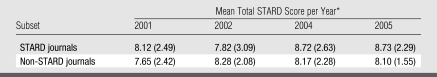

In total, 240 diagnostic accuracy studies were evaluated by using a checklist of 13 of the 25 STARD items; there were 30 articles per year (2001, 2002, 2004, and 2005) per journal subset (STARD and non-STARD journals). The mean total scores on the checklist of 13 of 25 STARD items, according to journal subset and year of publication, are shown in Table 3.

Table 3.

Mean Total STARD Score according to Journal Subset and Year of Publication

Score was based on a checklist of 13 of the 25 STARD items and a maximum total score of 13. Numbers in parentheses are the standard deviations.

With the mean total score as the dependent variable, an analysis of covariance showed that the interaction between the two independent factors (STARD or non-STARD journal and year of publication [a categoric factor with four levels]) was not significant (F = 0.664, df = 3, P = .58). Partial η2 for the interaction was 0.009, which means that 0.9% of the variability in the dependent variable not explained by the other terms in the model can be attributed to the interaction. This nonsignificant result means that the change in the mean total score in STARD journals did not differ significantly from the change in the mean total score in non-STARD journals during the 4 years of publication. The main effects of journal type (STARD vs non-STARD) (F = 0.897, df = 1, partial η2 = 0.004, P = .35) and year of publication (F = 0.789, df = 3, partial η2 = 0.010, P = .50) were also not significant. All effects (interaction and main effects) were also nonsignificant in the analysis in which year of publication was treated as a binary variable.
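Partial η2 is SS_effect / (SS_effect + SS_error), which can be recovered from an F statistic as F·df_effect / (F·df_effect + df_error). The sketch below checks the reported effect sizes this way. The error df of 231 is an assumption inferred from the design (240 articles minus 1 df each for the intercept, journal type, and covariate and 3 df each for year and the interaction); the text does not report it.

```python
def partial_eta_squared(f: float, df_effect: int, df_error: int) -> float:
    """Partial eta squared from an F statistic: F*df1 / (F*df1 + df2)."""
    return f * df_effect / (f * df_effect + df_error)


# Assumed error df: 240 articles minus intercept (1), journal type (1),
# year (3), journal type x year interaction (3), and content-area covariate (1).
DF_ERROR = 240 - 1 - 1 - 3 - 3 - 1  # 231

REPORTED = {
    "journal type x year": (0.664, 3, 0.009),
    "journal type": (0.897, 1, 0.004),
    "year of publication": (0.789, 3, 0.010),
}

for effect, (f, df, eta_reported) in REPORTED.items():
    eta = partial_eta_squared(f, df, DF_ERROR)
    print(f"{effect}: {eta:.3f} (reported {eta_reported:.3f})")
```

Under this assumption, all three recomputed values round to the partial η2 values reported above (0.009, 0.004, and 0.010), which suggests the model was fitted as a full factorial with the covariate.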

Because there was no significant difference between STARD and non-STARD journals, the two journal sets were combined, and Table 1 shows the reporting of individual items according to year of publication. For the proportion of articles reporting each item across the 4 years of publication, the Armitage χ2 test for linear trend showed no significant overall differences or linear trends for any item except the inclusion of a flow diagram (associated with item 16 on the STARD checklist), a diagram showing the exact number of patients at each stage of the study and the number who did not undergo the index test or the reference standard (χ2 for linear trend, P = .01).

DISCUSSION

After publication of the STARD statement, the quality of reporting remained similar to pre–STARD statement publication levels. In addition, there was no meaningful difference (ie, one additional item on the checklist of 13 of the 25 STARD items being reported) in the quality of reporting between those journals that published the STARD statement and those that did not. One possible explanation for the lack of improvement after STARD statement publication is that the evaluation was conducted too soon for an improvement to be evident. Evaluating the impact of the STARD statement 2 years after publication seemed reasonable, however, because an improvement in reporting was evident 2 years after publication of the CONSORT statement (11). Perhaps, as some authors suggest (22), the design aspects of therapeutic trials were better known and easier to apply when the CONSORT statement was published than diagnostic accuracy study principles were when the STARD statement was published. Evaluation of the impact of the STARD statement several years after publication may be needed, particularly because the time from manuscript submission to article publication can be on the order of 12–15 months and, in some cases, longer.

Another possible explanation for the lack of improvement after STARD statement publication is that the editors and peer reviewers of the journals that published the STARD statement are not enforcing its guidelines. The online instructions to authors of the STARD journals make it difficult to determine the extent to which the guidelines are enforced, and impossible to determine whether enforcement changed during the 2 years after STARD statement publication.

A further possible explanation for the lack of a difference before and after STARD statement publication, and between STARD and non-STARD journals, is that there was already a general awareness of diagnostic accuracy design principles. Such awareness is plausible because the STARD checklist drew on 33 previously published checklists, so reporting guidelines were widely available to all authors both before and after STARD statement publication. However, we found low rates of adherence to the STARD checklist items, so any such awareness did not translate into adherence.

Smidt et al (16) also did not find a difference in reporting between STARD and non-STARD journals. However, they did find a slight improvement in the reporting of seven STARD items, four of which were evaluated in this study. Our study and that of Smidt et al (16) also differ markedly in the frequency with which some items were reported. For example, Smidt et al found that item 9a (“Definition of and rationale for the units, cutoffs and/or categories of the results of the index tests”) was reported 83%–94% of the time, whereas the corresponding figures in our study were 63%–71%. In conducting this study, we found that many of the STARD checklist items were open to interpretation and that many of them were multifaceted. Thus, the evaluation of each item could vary between studies, leading to differences in the apparent frequency of item reporting.

This study had several limitations. First, it is possible that the journals classified as non-STARD journals did apply the STARD criteria even though they did not publish the STARD statement, which would make it difficult to find a difference in the quality of reporting between STARD and non-STARD journals. Second, the inclusion of some journals without a diagnostic focus in the study sample may have made it more difficult to discern whether the STARD statement has had an impact on the quality of reporting of diagnostic accuracy studies. Third, diagnostic accuracy studies were assessed by using only 13 of the 25 STARD criteria. This may have led to underestimation of the impact of STARD statement publication and may make it difficult to compare our results with those of other studies in which the quality of reporting was evaluated. In addition, some may not agree with the decision to assess only those items that were empirically shown to have a biasing effect or to account for variation between studies.

Overall, our study results show that the quality of reporting on many of the items that have been shown to have a biasing effect on the results of diagnostic accuracy studies or appear to account for the variation between studies is substandard, making it difficult for clinicians and researchers to judge study validity. Authors, editors, and peer reviewers are encouraged to adhere to and enforce STARD statement guidelines because there is clearly room for improvement in the reporting of diagnostic accuracy studies. Research is needed to determine the factors that influence journal editor and author uptake of reporting guidelines.

ADVANCES IN KNOWLEDGE

After publication of the STARD statement in 2003, the quality of reporting of diagnostic accuracy studies remained similar to levels before STARD statement publication, and there was no meaningful difference (ie, one additional item on the checklist of 13 of 25 STARD items being reported) in the quality of reporting between those journals that published the STARD statement and those that did not in the first 2 years after publication.

Quality of reporting of diagnostic accuracy studies is substandard, with a great deal of room for improvement.

Acknowledgments

Brian Haynes, MD, PhD, Stephen Walter, PhD, and Ruta Valaitis, PhD, were involved in the development of the research protocol. Cynthia Lokker, PhD, Monika Kastner, MSc, and Leslie Baier, BA, assisted with data collection.

Abbreviations

CONSORT = Consolidated Standards of Reporting Trials

STARD = Standards for Reporting of Diagnostic Accuracy

See also the editorial by Bossuyt in this issue.

Author contribution: Guarantor of integrity of entire study, N.L.W.

Author stated no financial relationship to disclose.

Funding: This research was supported by the National Library of Medicine.

References

1. Lijmer JG, Mol BW, Heisterkamp S, et al. Empirical evidence of design-related bias in studies of diagnostic tests. JAMA 1999;282:1061–1066.

2. Whiting P, Rutjes AW, Reitsma JB, Glas AS, Bossuyt PM, Kleijnen J. Sources of variation and bias in studies of diagnostic accuracy: a systematic review. Ann Intern Med 2004;140:189–202.

3. Rutjes AW, Reitsma JB, Di Nisio M, Smidt N, van Rijn JC, Bossuyt PM. Evidence of bias and variation in diagnostic accuracy studies. CMAJ 2006;174:469–476.

4. Putnam W, Twohig PL, Burge FI, Jackson LA, Cox JL. A qualitative study of evidence in primary care: what the practitioners are saying. CMAJ 2002;166:1525–1530.

5. Reid MC, Lachs MS, Feinstein AR. Use of methodological standards in diagnostic test research: getting better but still not good. JAMA 1995;274:645–651.

6. Bossuyt PM, Reitsma JB; Standards for Reporting of Diagnostic Accuracy. The STARD initiative [letter]. Lancet 2003;361:71.

7. Bossuyt PM, Reitsma JB, Bruns DE, et al. The STARD statement for reporting studies of diagnostic accuracy: explanation and elaboration. Clin Chem 2003;49:7–18.

8. Bossuyt PM, Reitsma JB, Bruns DE, et al. Toward complete and accurate reporting of studies of diagnostic accuracy: the STARD initiative. Fam Pract 2004;21:4–10.

9. Begg C, Cho M, Eastwood S, et al. Improving the quality of reporting of randomized controlled trials. The CONSORT statement. JAMA 1996;276:637–639.

10. Moher D, Cook DJ, Eastwood S, Olkin I, Rennie D, Stroup DF. Improving the quality of reports of meta-analyses of randomised controlled trials: the QUOROM statement—quality of reporting of meta-analyses. Lancet 1999;354:1896–1900.

11. Moher D, Jones A, Lepage L; CONSORT Group (Consolidated Standards for Reporting of Trials). Use of the CONSORT statement and quality of reports of randomized trials: a comparative before-and-after evaluation. JAMA 2001;285:1992–1995.

12. Devereaux PJ, Mannes BJ, Ghali WA, Quan H, Guyatt GH. The reporting of methodological factors in randomized controlled trials and the association with a journal policy to promote adherence to the Consolidated Standards of Reporting Trials (CONSORT) checklist. Control Clin Trials 2002;23:380–388.

13. Smidt N, Rutjes AW, van der Windt DA, et al. Quality of reporting of diagnostic accuracy studies. Radiology 2005;235:347–353.

14. Selman TJ, Khan KS, Mann CH. An evidence-based approach to test accuracy studies in gynecologic oncology: the “STARD” checklist. Gynecol Oncol 2005;96:575–578.

15. Siddiqui MA, Azuara-Blanco A, Burr J. The quality of reporting of diagnostic accuracy studies published in ophthalmic journals. Br J Ophthalmol 2005;89:261–265.

16. Smidt N, Rutjes AW, van der Windt DA, et al. The quality of diagnostic accuracy studies since the STARD statement: has it improved? Neurology 2006;67:792–797.

17. Coppus SF, van der Veen F, Bossuyt PM, Mol BW. Quality of reporting of test accuracy studies in reproductive medicine: impact of the Standards for Reporting of Diagnostic Accuracy (STARD) initiative. Fertil Steril 2006;86:1321–1329.

18. Haynes RB, Wilczynski NL. Optimal search strategies for retrieving scientifically strong studies of diagnosis from Medline: analytical survey. BMJ 2004;328:1040–1042.

19. Wilczynski NL, Haynes RB; Hedges Team. EMBASE search strategies for identifying methodologically sound diagnostic studies for use by clinicians and researchers. BMC Med 2005;3:7.

20. Norman GR, Streiner DL. Biostatistics: the bare essentials. 2nd ed. Hamilton, Ontario, Canada: BC Decker, 2000.

21. Kleinbaum DG, Kupper LL, Muller KE. Applied regression analysis and other multivariable methods. 2nd ed. Boston, Mass: PWS-Kent, 1988.

22. Reeves BC. Evidence about evidence. Br J Ophthalmol 2005;89:253–254.