Abstract

Linguistic processing, especially syntactic processing, is often considered a hallmark of human cognition; thus, the domain-specificity or domain-generality of syntactic processing has attracted considerable debate. These experiments address this issue by simultaneously manipulating syntactic processing demands in language and music. Participants performed self-paced reading of garden-path sentences in which structurally unexpected words cause temporary syntactic processing difficulty. A musical chord accompanied each sentence segment, with the resulting sequence forming a coherent chord progression. When structurally unexpected words were paired with harmonically unexpected chords, participants showed substantially enhanced garden-path effects. No such interaction was observed when the critical words violated semantic expectancy, nor when the critical chords violated timbral expectancy. These results support a prediction of the shared syntactic integration resource hypothesis (SSIRH; Patel, 2003), which suggests that music and language draw on a common pool of limited processing resources for integrating incoming elements into syntactic structures.

The extent to which syntactic processing of language relies on special-purpose cognitive modules is controversial. Some theories claim that syntactic processing relies on domain-specific processes (e.g., Caplan & Waters, 1999) while others implicate cognitive mechanisms not unique to language (e.g., Lewis, Vasishth, & Van Dyke, 2006). One interesting way to approach this debate is to compare syntactic processing in language and music. Like language, music has a rich syntactic structure in which discrete elements are hierarchically organized into rule-governed sequences (Patel, 2008). And, like language, the extent to which the processing of this musical syntax relies on specialized neural mechanisms is debated. Dissociations between disorders of language and music processing (aphasia and amusia) suggest that syntactic processing in language and in music relies on distinct neural mechanisms (Peretz & Coltheart, 2003). In contrast, neuroimaging studies reveal overlapping neural correlates of musical and linguistic syntactic processing (e.g., Maess, Koelsch, Gunter, & Friederici, 2001; Patel, Gibson, Ratner, Besson, & Holcomb, 1998).

A possible reconciliation of these findings distinguishes between syntactic representations and the processes that act on those representations. While the representations involved in language and music syntax are likely quite different, both types of representations must be integrated into hierarchical structures as sequences unfold. This shared syntactic integration resource hypothesis (SSIRH) claims that music and language rely on shared, limited processing resources that activate separable syntactic representations (Patel, 2003). The SSIRH thereby accounts for discrepant findings from neuropsychology and neuroimaging by assuming that dissociations between aphasia and amusia result from damage to domain-specific representations, whereas the overlapping activations found in neuroimaging studies reflect shared neural resources involved in integration processes.

A key prediction of the SSIRH is that syntactic integration in language should be more difficult when these limited integration resources are taxed by the concurrent processing of musical syntax (and vice versa). In contrast, if separate processes underlie linguistic and musical syntax, then syntactic integration in language and music should not interact. Koelsch and colleagues (Koelsch, Gunter, Wittfoth, & Sammler, 2005; Steinbeis & Koelsch, 2008) provided electrophysiological evidence supporting the SSIRH by showing that the left anterior negativity component elicited by syntactic violations in language was reduced when paired with a simultaneous violation of musical syntax. Crucially, this interaction did not occur between non-syntactic linguistic and musical manipulations.

The current experiments tested the SSIRH’s prediction of interference by relying on the psycholinguistic phenomenon of garden-path effects and on musical key structure. Garden-path effects refer to comprehenders’ difficulty on encountering a phrase that disambiguates a local syntactic ambiguity to a less preferred structure (for a review, see Pickering & van Gompel, 2006). For example, when reading a reduced sentence complement (SC) structure like “The attorney advised the defendant was guilty,” a reader is likely to initially (or preferentially) analyze “the defendant” as the direct object of “advised” rather than as the subject of an embedded sentence. This syntactic misanalysis leads to slower reading times on “was” when compared to a full-SC structure that includes the optional function word “that” and thus has no such structural ambiguity (“The attorney advised that the defendant was guilty”). Difficulty at the disambiguating region might either reflect a need to abandon the initial analysis and reanalyze (e.g., Frazier & Rayner, 1982) or a need to raise the activation of a less-preferred analysis (e.g., MacDonald, Pearlmutter, & Seidenberg, 1994). However, under both accounts, comprehension is taxed because of the need to integrate syntactically unexpected information. Therefore, the current experiments used garden-path sentences to manipulate linguistic syntactic integration demands while simultaneously manipulating musical syntactic integration demands via expectancies for musical key.

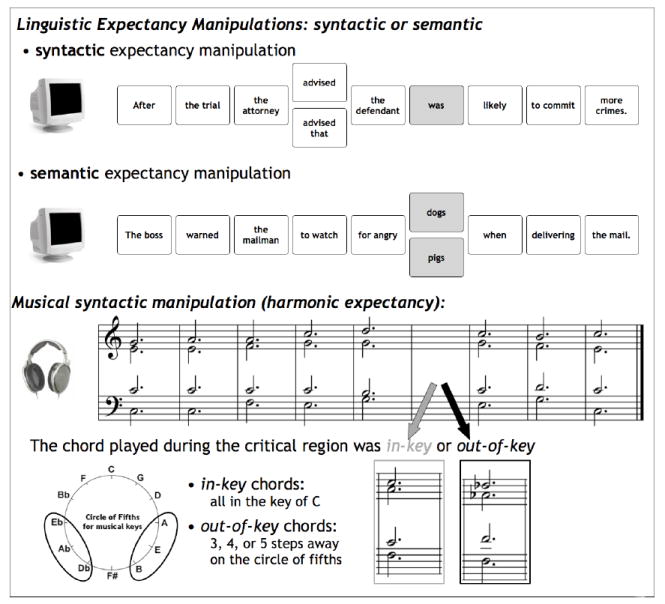

A musical key (within Western tonal music) consists of a set of pitches that vary in stability. Pitches from a key combine to form chords, which combine into sequences that follow structural norms to which even musically untrained listeners are sensitive (Smith & Melara, 1990). Musical keys sharing many pitches and chords are considered closely related, as represented in the circle of fifths (Figure 1, bottom). Increasing distance between keys along the circle corresponds to a decrease in the perceived relatedness between these keys (Thompson & Cuddy, 1992). Thus, a chord is syntactically unexpected when it comes from a key that is harmonically distant from the key of the preceding chords (see Patel, 2008, for a review).

Figure 1.

Schematic of the experimental self-paced reading task. Participants pressed a button for each segment of text (between one and four words long), which was accompanied by a chord. The critical region of the experimental sentences (shaded in grey) manipulated either syntactic or semantic expectancy, and the chord accompanying the critical region manipulated harmonic expectancy. Harmonically expected chords came from the key of the musical phrase (C major, the key at the top of the circle of fifths) while harmonically unexpected chords were the tonic chords of distant keys (indicated by ovals on the circle of fifths). In this example, the harmonically expected chord is an F-major chord and the unexpected chord is a D-flat major chord.
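The notion of key distance used here can be made concrete with a small sketch. The code below is only an illustration, not part of the original materials: the list `CIRCLE_OF_FIFTHS` and the helpers `key_distance` and `distant_keys` are hypothetical names, and enharmonic spellings are simplified to one label per key. It counts steps along the circle of fifths and lists the keys that lie three, four, or five steps from C major, the range of distances described for the unexpected chords.

```python
# Major keys in circle-of-fifths order, starting from C.
# Enharmonic spellings are simplified (an assumption made for illustration).
CIRCLE_OF_FIFTHS = ["C", "G", "D", "A", "E", "B", "F#", "Db", "Ab", "Eb", "Bb", "F"]


def key_distance(key_a: str, key_b: str) -> int:
    """Return the number of steps between two major keys on the circle of fifths."""
    i = CIRCLE_OF_FIFTHS.index(key_a)
    j = CIRCLE_OF_FIFTHS.index(key_b)
    clockwise = (j - i) % len(CIRCLE_OF_FIFTHS)
    # Take the shorter way around the circle.
    return min(clockwise, len(CIRCLE_OF_FIFTHS) - clockwise)


def distant_keys(home: str, steps=(3, 4, 5)) -> list:
    """List the keys whose distance from `home` is one of `steps`."""
    return [key for key in CIRCLE_OF_FIFTHS if key_distance(home, key) in steps]


print(key_distance("C", "Db"))
print(distant_keys("C"))
```

Under this simplified representation, D-flat major (the unexpected chord in the Figure 1 example) lies five steps from C major, whereas neighboring keys such as G or F lie one step away.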

If syntactic processing resources are shared between language and music, then disruption due to local sentence ambiguities (garden-paths) should be especially severe when paired with harmonically unexpected chords. In contrast, if musical and linguistic syntactic processing rely on separable resources, then disruptions due to garden-path structures should not be influenced by harmonically unexpected chords. The SSIRH thus predicts interactions between syntactic difficulty in language and music.

The SSIRH does not make any claim regarding the relationship of musical syntactic processing to other types of linguistic processing such as semantics. Evidence regarding this relationship is mixed: some studies suggest independent processing of linguistic semantics and musical syntax (Besson, Faïta, Peretz, Bonnel, & Requin, 1998; Bonnel, Faïta, Peretz, & Besson, 2001; Koelsch et al., 2005), while others suggest shared components (Poulin-Charronnat, Bigand, Madurell, & Peereman, 2005; Steinbeis & Koelsch, 2008). The current experiments address this issue by also crossing semantic expectancy in language with harmonic expectancy in music. Semantic expectancy was manipulated by using words with either high or low cloze probability, which refers to the likelihood that a particular word follows a given sentence fragment. For example, “dogs” is a relatively likely continuation of the fragment “The mailman was attacked by angry…” whereas “pigs” is an unlikely continuation and so is semantically unexpected. This unexpectancy is not syntactic in nature (both “dogs” and “pigs” play the expected syntactic role), so if language and music share resources that are specific to syntactic processing, then this manipulation of semantic expectancy should produce effects independent of musical syntactic expectancy. However, if language and music share resources for a more general type of processing (e.g., for a process of integrating new information into any type of evolving representation), then both syntactic and semantic manipulations in language should interact with musical syntax.
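As an illustration of how cloze probability is typically estimated, the sketch below computes the proportion of respondents who complete a sentence fragment with a given word. The response counts are invented for the example and are not norming data from this study; the function name `cloze_probabilities` is likewise hypothetical.

```python
from collections import Counter


def cloze_probabilities(completions):
    """Map each completion to the proportion of respondents who produced it."""
    counts = Counter(word.strip().lower() for word in completions)
    total = sum(counts.values())
    return {word: n / total for word, n in counts.items()}


# Hypothetical responses to "The mailman was attacked by angry ..."
# (illustrative counts only, not data from the experiments).
responses = ["dogs"] * 17 + ["bees"] * 2 + ["customers"]
probs = cloze_probabilities(responses)

print(probs["dogs"])           # high-cloze continuation
print(probs.get("pigs", 0.0))  # a word nobody produced gets probability 0
```

A word such as “pigs” that no respondent produces would be treated as a low-cloze (semantically unexpected) continuation, even though it fills the same syntactic role as “dogs”.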

To control for attentional factors (cf. Escoffier & Tillmann, 2008), Experiment 2 crossed both syntactic and semantic expectancy in language with a non-syntactic musical manipulation of timbre.

Experiment 1

Participants read sentences while hearing tonal chord progressions. Demands on linguistic syntactic integration were manipulated by using garden-path sentences, and demands on musical syntactic integration were manipulated by relying on musical key structure. Additionally, semantic expectancy in language was manipulated to determine whether any effect of harmonic expectancy on language processing is specific to syntax.

Method

Participants

Ninety-six UCSD undergraduates participated in Experiment 1 in exchange for course credit. Nearly half of the participants (49.4%) reported no formal musical training, and the other half averaged 7 years of training (SD=4.3 years).

Materials

Twelve of the twenty-four critical sentences manipulated syntactic expectancy by including either a full or reduced sentence complement, thereby making the syntactic interpretation expected or unexpected at the critical word (underlined in example (1) below; note that most of these sentences were adapted from Trueswell, Tanenhaus, & Kello, 1993). Twelve other sentences manipulated semantic expectancy by including a word with either high or low cloze probability, underlined in example (2) below, thereby making the semantic interpretation expected or unexpected at the critical word. An additional 24 filler sentences were included that contained neither syntactically nor semantically unexpected elements (e.g., After watching the movie, the critic wrote a negative review). Thus only 25% of the sentences read by any one participant contained an unusually unexpected element (six garden-path sentences and six sentences with low-cloze-probability words), making it unlikely that participants would notice the linguistic manipulations.

(1) After the trial, the attorney advised (that) the defendant was likely to commit more crimes.

(2) The boss warned the mailman to watch for angry (dogs / pigs) when delivering the mail.

A separate chord sequence was composed for each sentence. These were four-voiced chorales in C-major that were loosely modeled on Bach-style harmony and voice leading, ended with a perfect authentic cadence, and were recorded with a piano timbre. The length of chorales paired with critical stimuli ranged from 8 to 11 chords (mean=9.5, SD=0.93) with at least 5 chords preceding the critical region to establish the key. Two versions of the 24 chorales paired with the critical linguistic items were created: one version with all chords in the key of C, and one version identical except for one chord in the position corresponding to the critical region of the sentence, which was replaced with the tonic chord from a distant key (equally often three, four, or five steps away on the circle of fifths). Additionally, one-sixth of the chorales paired with filler sentences contained an out-of-key chord; thus, two-thirds of the chorales heard by any one participant contained no key violations.
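The proportions reported for the materials can be checked with simple arithmetic. The sketch below assumes, as the counterbalanced design implies, that each participant hears half of the 24 critical chorales in their out-of-key version; the variable names are illustrative.

```python
from fractions import Fraction

n_critical = 24  # critical sentences (12 syntactic + 12 semantic)
n_filler = 24    # filler sentences
total = n_critical + n_filler

# Assumption: musical expectancy is counterbalanced, so each participant
# hears half of the critical chorales with an out-of-key chord.
out_of_key_critical = n_critical * Fraction(1, 2)
# One-sixth of the filler chorales contain an out-of-key chord.
out_of_key_filler = n_filler * Fraction(1, 6)

share_out_of_key = (out_of_key_critical + out_of_key_filler) / total
share_in_key = 1 - share_out_of_key

# Six garden-path sentences plus six low-cloze sentences per participant.
share_unexpected_language = Fraction(6 + 6, total)

print(share_in_key, share_unexpected_language)
```

Under that assumption, 16 of the 48 chorales contain a key violation, which leaves two-thirds without one, and 12 of the 48 sentences (25%) contain a linguistically unexpected element.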

Procedure

Participants read sentences by pressing a button to display consecutive segments of text in the center of the screen. Each segment was accompanied by a chord (presented over headphones), which began on text onset and decayed over 1.5 seconds or was cut off when the participant advanced to the next segment (see Figure 1 for a schematic of the task). After each sentence, a yes/no comprehension question was presented to encourage careful reading. For example, participants were asked “Did the attorney think the defendant was innocent?” following example (1) and “Did the neighbor warn the mailman?” following example (2). Correct responses initiated the next trial, and incorrect responses caused a 2.5 second delay while “Incorrect!” was displayed.

Participants were instructed to read the sentences quickly but carefully enough to accurately answer the comprehension questions. Participants were told that they would hear a chord accompanying each segment of text but were instructed that the chords were not task-relevant and to concentrate on the sentences. Response latencies were collected for each segment.

Design and Analysis

The experimental design included three within-participant factors, each with two levels: linguistic expectancy, musical expectancy, and linguistic manipulation. Four lists rotated each critical stimulus through the within-item manipulations (linguistic expectancy and musical expectancy), so each participant saw a given item only once, but each item occurred in all four conditions equally across the experiment. Items were presented in a fixed pseudo-random order, constrained such that critical and filler items were presented on alternate trials and no more than two consecutive trials contained out-of-key chords.
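The rotation of items through conditions across four lists can be sketched as a simple Latin-square assignment. This is a minimal illustration of the counterbalancing logic, not the authors' actual list-construction procedure; `CONDITIONS` and `build_lists` are hypothetical names, and the sketch assumes the number of lists equals the number of conditions.

```python
from itertools import product

# The four within-item conditions: linguistic expectancy x musical expectancy.
CONDITIONS = list(product(["expected", "unexpected"], ["in-key", "out-of-key"]))


def build_lists(n_items, n_lists=4):
    """Assign each item one condition per list, rotating conditions across lists."""
    lists = []
    for list_idx in range(n_lists):
        assignment = {}
        for item in range(n_items):
            # Shift the condition by one position for each successive list.
            assignment[item] = CONDITIONS[(item + list_idx) % len(CONDITIONS)]
        lists.append(assignment)
    return lists


# For example, the 12 syntactically manipulated items.
lists = build_lists(n_items=12)
print(lists[0][0], lists[1][0])
```

With 12 items and four lists, each list contains three items per condition, and every item appears in each of the four conditions exactly once across lists.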

Reading times (RTs) below 50 ms or above 2500 ms per segment were discarded, as were RTs above or below 2.5 standard deviations from each participant’s mean reading time. These criteria led to the exclusion of 1.9% and 0.62% of critical observations in Experiments 1 and 2 respectively.¹ RTs were logarithmically transformed and analyzed using orthogonal contrast coding in generalized linear mixed effects models as implemented in the lme4 package (Bates, Maechler, & Dai, 2008) in the statistical software R (version 2.7.1; R Development Core Team, 2008). Linguistic expectancy, musical expectancy, and linguistic manipulation were entered as fixed effects, with participants and items as crossed random effects. Significance was assessed with Markov chain Monte Carlo sampling as implemented in the languageR package (Baayen, 2008). Separate analyses were conducted for the critical sentence region and for the immediately preceding (pre-critical) and following (post-critical) regions.
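The trimming and transformation steps can be sketched as follows. The original analysis was carried out in R; this Python sketch covers only the preprocessing and rests on assumptions the text does not settle: that the absolute cutoffs are applied before the standard-deviation cutoff, that the participant mean and SD are computed over the RTs remaining after the first step, and that the transform is the natural logarithm. The function name `trim_and_log` and the example data are hypothetical.

```python
import math
from statistics import mean, stdev


def trim_and_log(rts_by_participant, lo=50, hi=2500, n_sd=2.5):
    """Apply absolute cutoffs, then a per-participant SD cutoff, then log-transform."""
    cleaned = {}
    for pid, rts in rts_by_participant.items():
        # Step 1: drop RTs below `lo` or above `hi` (in ms).
        kept = [rt for rt in rts if lo <= rt <= hi]
        # Step 2: drop RTs more than n_sd SDs from this participant's mean
        # (assumption: mean and SD are computed after step 1).
        if len(kept) > 1:
            m, sd = mean(kept), stdev(kept)
            kept = [rt for rt in kept if abs(rt - m) <= n_sd * sd]
        # Step 3: natural-log transform (assumption: base e).
        cleaned[pid] = [math.log(rt) for rt in kept]
    return cleaned


# Hypothetical reading times in ms for two participants.
example = {"p1": [20, 600, 650, 700, 3000], "p2": [500] * 9 + [2000]}
log_rts = trim_and_log(example)
print({pid: len(values) for pid, values in log_rts.items()})
```

The log transform reduces the positive skew typical of reading times, which is why the model coefficients reported in the Results are on a log scale rather than in milliseconds.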

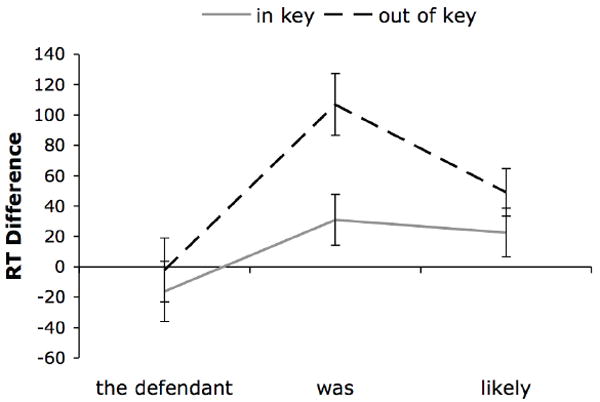

Results

Table 1 lists mean RTs by condition and sentence region, Table 2 lists comprehension question accuracies by condition, and Figure 2 plots the difference between RTs in the syntactically unexpected and expected conditions as a function of musical expectancy and position in the sentence. This difference score shows how much slower participants read phrases in reduced-SC sentences (without “that”) than in full-SC sentences (with “that”). Thus, the positive difference score for the embedded verb “was” reflects a standard garden-path effect. Crucially, this garden-path effect was considerably larger when the chord accompanying the embedded verb was foreign to the key established by the preceding chords in the sequence.

Table 1.

Mean reading times (in milliseconds, with standard errors in parentheses) for Experiment 1 by sentence region (relative to the critical region) and by condition.

| Region | Chord | Syntactic: expected | Syntactic: unexpected | Syntactic: difference | Semantic: expected | Semantic: unexpected | Semantic: difference |
|---|---|---|---|---|---|---|---|
| Preceding | in-key | 726 (26) | 710 (27) | -16 | 640 (24) | 636 (23) | -4 |
| Preceding | out-of-key | 723 (25) | 721 (27) | -2 | 640 (23) | 601 (20) | -39 |
| Critical | in-key | 639 (24) | 670 (24) | 31 | 648 (26) | 719 (29) | 71 |
| Critical | out-of-key | 606 (22) | 713 (28) | 107 | 652 (25) | 690 (29) | 38 |
| Following | in-key | 630 (23) | 652 (27) | 22 | 651 (25) | 710 (23) | 59 |
| Following | out-of-key | 642 (22) | 691 (26) | 49 | 635 (22) | 691 (22) | 56 |

Table 2.

Mean accuracies (with standard errors in parentheses) on the post-sentence comprehension questions in Experiments 1 and 2 by condition. Participants were more accurate in the semantic than syntactic cases, probably because questions were not matched in difficulty across conditions.

| Experiment | Chord | Syntactic: expected | Syntactic: unexpected | Semantic: expected | Semantic: unexpected |
|---|---|---|---|---|---|
| Experiment 1 | in-key | 83.3% (2.3%) | 81.6% (2.4%) | 89.2% (1.7%) | 87.5% (2.0%) |
| Experiment 1 | out-of-key | 81.3% (2.5%) | 81.3% (2.3%) | 90.6% (1.7%) | 86.1% (2.1%) |
| Experiment 2 | expected timbre | 78.5% (2.5%) | 80.9% (2.2%) | 92.0% (1.5%) | 85.1% (2.0%) |
| Experiment 2 | unexpected timbre | 78.5% (2.4%) | 77.1% (2.7%) | 86.5% (2.1%) | 85.8% (2.1%) |

Figure 2.

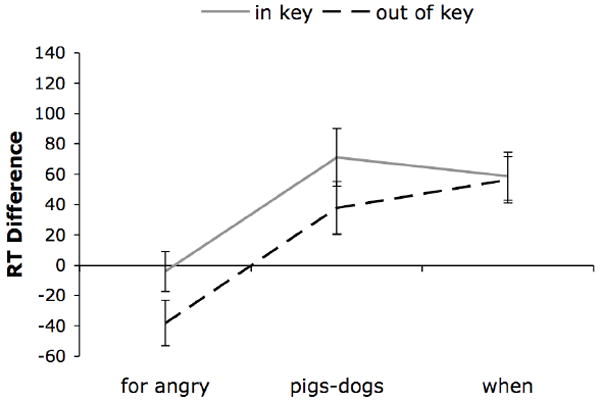

The difference between reading times (RTs; in milliseconds) in the unexpected and expected language syntax conditions (Figure 2, left) and semantic conditions (Figure 3, right) of Experiment 1 as a function of harmonic expectancy in the concurrent musical chorale and of sentence region (the x-axis labels come from the example given in the method section). Error bars indicate standard errors. Positive difference scores over the critical region (“was” in Figure 2, and “pigs”/“dogs” in Figure 3) reflect standard garden-path and semantic unexpectancy effects.

Figure 3 plots the same information for the semantically unexpected and expected conditions. Here, the positive difference score for the semantically manipulated region reflects slower reading of semantically unexpected items (e.g., pigs) than of semantically expected items (e.g., dogs). This effect of semantic expectancy did not differ as a function of musical expectancy.

Figure 3.

The difference between RTs (in milliseconds) in the semantically unexpected and expected conditions of Experiment 1 as a function of harmonic expectancy in the concurrent musical chorale and of sentence region; conventions are as in Figure 2. The positive difference score over the critical region (“pigs”/“dogs”) reflects a standard semantic unexpectancy effect.

These observations were supported by statistical analysis. In the pre-critical region, RTs were longer in the syntactically-manipulated than in the semantically-manipulated sentences (a main effect of linguistic manipulation: b=0.13, SE=0.031, t=4.12, p<.001). This is unsurprising because different items were used in these conditions, and should have no important consequences for the questions of interest. Surprisingly, RTs were also longer in the linguistically expected than unexpected condition (a main effect of linguistic expectancy: b=0.026, SE=0.012, t=2.25, p<.05), which may be due to earlier differences in the sentences (e.g., the presence or absence of that). Because this effect was small (16 ms) and in the opposite direction of a garden-path effect, it seems unlikely to have led to the pattern in the critical region.

In the critical region, RTs were slowed by both syntactic and semantic unexpectancy (a main effect of linguistic expectancy: b=-0.082, SE=0.012, t=-6.83, p<.0001). No other effects reached significance except a three-way interaction between linguistic manipulation, linguistic expectancy, and musical expectancy (b=0.032, SE=0.012, t=2.62, p<.01). Planned contrasts showed that this interaction reflects a simple interaction between linguistic and musical expectancy for the syntactically manipulated sentences (b=0.042, SE=0.017, t=2.46, p<.05), but no such interaction for the semantically manipulated sentences (b=-0.021, SE=0.017, t=-1.25, n.s.). The simple interaction between musical expectancy and garden path effects did not correlate with years of musical training (r=-0.10, n.s.).²
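The shape of this three-way interaction can be seen descriptively in the raw cell means. The sketch below uses the critical-region means from Table 1 and computes difference-of-differences scores in milliseconds. It is only a descriptive illustration: the reported coefficients come from mixed-effects models on log-transformed RTs, so these raw-millisecond contrasts are not the model estimates. The names `critical_rts`, `expectancy_effect`, and `two_way` are illustrative.

```python
# Critical-region mean RTs (ms) from Table 1, as (expected, unexpected) pairs.
critical_rts = {
    "syntactic": {"in-key": (639, 670), "out-of-key": (606, 713)},
    "semantic": {"in-key": (648, 719), "out-of-key": (652, 690)},
}


def expectancy_effect(cell):
    """Slowdown for linguistically unexpected relative to expected material."""
    expected, unexpected = cell
    return unexpected - expected


def two_way(manipulation):
    """Difference of differences: expectancy effect out-of-key minus in-key."""
    cells = critical_rts[manipulation]
    return expectancy_effect(cells["out-of-key"]) - expectancy_effect(cells["in-key"])


syntactic_interaction = two_way("syntactic")  # (713 - 606) - (670 - 639)
semantic_interaction = two_way("semantic")    # (690 - 652) - (719 - 648)
three_way = syntactic_interaction - semantic_interaction

print(syntactic_interaction, semantic_interaction, three_way)
```

In raw means, the garden-path effect grows by 76 ms with an out-of-key chord, whereas the semantic effect shrinks by 33 ms, which matches the direction of the planned contrasts reported above.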

In the post-critical region, RTs were longer in the linguistically unexpected than in the expected conditions (a main effect of linguistic expectancy: b=-0.074, SE=0.011, t=-6.73, p<.0001), especially for the semantically manipulated sentences (an interaction between linguistic manipulation and linguistic expectancy: b=-0.027, SE=0.011, t=-2.42, p<.05). Additionally, linguistic manipulation and musical expectancy interacted (b=-0.031, SE=0.011, t=-2.84, p<.01), reflecting slower responses after an out-of-key chord on the syntactically manipulated sentences (b=-0.041, SE=0.016, t=-2.65, p<.01), but not on the semantically manipulated sentences (b=0.021, SE=0.016, t=1.36, n.s.). No other effects reached significance.

Discussion

Participants showed both garden-path effects and slowing for semantically anomalous phrases. However, only garden-path effects interacted with harmonic expectancy, suggesting that processes of syntactic integration in language and of harmonic integration in music draw upon shared cognitive resources, whereas semantic integration in language and harmonic integration in music rely on distinct mechanisms (at least in the present task; see below). Given that harmonically unexpected chords typically lead to slowed responses even on non-musical tasks (e.g., Poulin-Charronnat et al., 2005), it is surprising that participants in this experiment were not, overall, slower to respond when the concurrent chord was from an unexpected key. It is unclear why there was no such main effect of harmonic expectancy, though it may be because the task was unspeeded (unlike in Poulin-Charronnat et al., 2005) or because of the relatively high attentional demands of the sentence-processing task (cf. Loui & Wessel, 2007).

These results support the hypothesis that processing resources for linguistic and musical syntax are shared (Patel, 2003). However, while Experiment 1 showed a clear dissociation between the effects of musical syntactic demands on linguistic syntax and semantics, it is important to show that these results are not simply due to the unexpected nature of the musical stimulus (i.e., perhaps the unexpected chord simply distracted attention away from the primary task of sentence parsing). It is not obvious why the cost of this distraction would occur only in the garden-path sentences and not in the semantically unexpected sentences; however, it is possible that the garden-path sentences were more difficult, and thus more susceptible to distraction. To address this concern, Experiment 2 repeated Experiment 1, but with a non-syntactic, but easily noticeable (thus potentially distracting) manipulation of the target chord.

Experiment 2

Experiment 1 revealed an interaction between the processing of musical and linguistic syntax, but not between musical syntax and linguistic semantics, suggesting shared processes underlie the processing of syntax in music and language. This assumes that the rule-based processing of harmonic relationships leads to this interaction; if so, other types of musical unexpectancy that are non-syntactic should not interfere with syntactic processing in language. To test this claim, Experiment 2 manipulated the timbre of the critical chord, which either had the expected piano timbre, or a pipe-organ timbre. This difference does not depend on any type of hierarchical organization, but is perceptually salient and represents a significant psychoacoustic deviation from the preceding sequence, thus should be at least as distracting as a change in key.

Method

Participants

Ninety-six UCSD undergraduates participated in Experiment 2 in exchange for course credit. Information on musical training was not collected because of a programming error.

Materials, Design, and Procedure

The materials, design, and procedure were identical to Experiment 1 except that musical expectancy was manipulated as timbral expectancy. Specifically, musically expected and unexpected chords were the same in-key chords, but unexpected chords were played with a pipe organ timbre.

Results

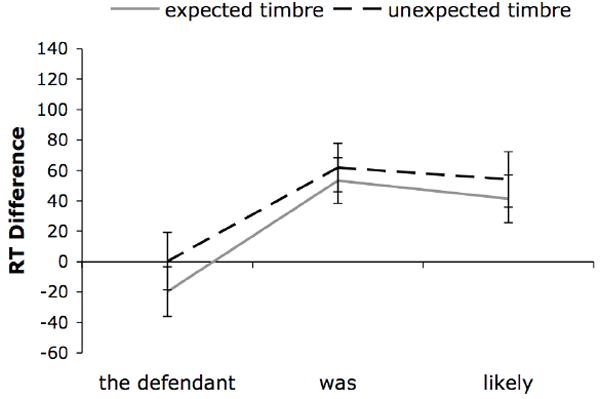

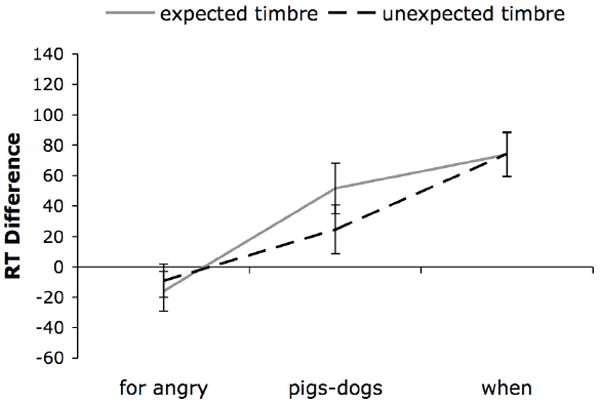

Table 3 lists mean RTs by condition and sentence region, Table 2 lists comprehension question accuracies, and Figure 4 plots the difference between RTs in the unexpected and expected linguistic syntax conditions as a function of timbral expectancy and sentence region. The positive difference score over the embedded verb reflects a garden-path effect, which was no larger when the chord accompanying the embedded verb was of an unexpected musical timbre. Figure 5 plots the same information for the semantically unexpected and expected conditions. Semantically unexpected items were read more slowly than semantically expected items; however, this effect of semantic expectancy did not differ as a function of timbral expectancy.

Table 3.

Mean reading times (RTs; in milliseconds, with standard errors in parentheses) for Experiment 2 by sentence region (relative to the critical region) and by condition.

| Region | Chord | Syntactic: expected | Syntactic: unexpected | Syntactic: difference | Semantic: expected | Semantic: unexpected | Semantic: difference |
|---|---|---|---|---|---|---|---|
| Preceding | expected timbre | 618 (23) | 599 (21) | -19 | 533 (18) | 517 (19) | -16 |
| Preceding | unexpected timbre | 633 (22) | 633 (25) | 0 | 532 (18) | 523 (18) | -9 |
| Critical | expected timbre | 518 (19) | 571 (21) | 53 | 532 (19) | 583 (24) | 51 |
| Critical | unexpected timbre | 550 (17) | 612 (23) | 62 | 571 (23) | 595 (25) | 24 |
| Following | expected timbre | 524 (20) | 566 (23) | 42 | 522 (17) | 596 (23) | 74 |
| Following | unexpected timbre | 576 (24) | 630 (24) | 54 | 538 (18) | 612 (24) | 74 |

Figure 4.

The difference between reading times (RTs; in milliseconds) in the unexpected and expected language syntax conditions (Figure 4, left) and semantic conditions (Figure 5, right) of Experiment 2 as a function of timbral expectancy in the concurrent musical chorale and of sentence region (the x-axis labels come from the example given in the method section). Error bars indicate standard errors. Positive difference scores over the critical region (was in Figure 4, and pigs/dogs in Figure 5) reflect standard garden-path and semantic unexpectancy effects.

Figure 5.

The difference between RTs (in milliseconds) in the semantically unexpected and expected conditions of Experiment 2 as a function of timbral expectancy in the concurrent musical chorale and of sentence region; conventions are as in Figure 4. The positive difference score over the critical region (“pigs”/“dogs”) reflects a standard semantic unexpectancy effect.

Statistical analyses supported these patterns. In the pre-critical region, RTs were longer in syntactically manipulated sentences than semantically manipulated sentences (b=0.15, SE=0.033, t=4.54, p<.001), which likely reflects differences between the materials used in these manipulations and should not have important consequences for the questions of interest. In the critical region, RTs were longer in garden-path and semantically anomalous sentences (a main effect of linguistic expectancy; b=-0.069, SE=0.012, t=-5.88, p<.0001) and were longer in phrases accompanied by a chord of unexpected timbre (a main effect of musical expectancy; b=-0.054, SE=0.012, t=-4.61, p<.0001). No interactions reached significance, including the 3-way interaction corresponding to the significant effect in Experiment 1 (t=0.92, n.s.).

In the post-critical region, RTs in linguistically unexpected sentences were longer than in expected sentences (b=-0.095, SE=0.012, t=-8.21, p<.0001) and were longer following a timbrally unexpected chord (b=-0.055, SE=0.012, t=-4.78, p<.0001), especially in the syntactic condition (an interaction between linguistic condition and musical expectancy; b=-0.038, SE=0.012, t=-3.33, p<.001). No other effects reached significance.

Discussion

Participants in Experiment 2 showed standard garden-path and semantic unexpectancy effects, but neither effect interacted with the manipulation of musical timbre. Participants were slowed overall when hearing a chord of an unexpected timbre, suggesting that this manipulation did draw attention from the primary task of sentence parsing. A comparable main effect of musical expectancy was not observed in Experiment 1, suggesting that hearing a chord with an unexpected timbre may actually be more attention-capturing than hearing a chord from an unexpected key. These results show that the interaction between the processing of linguistic syntax and harmonic key relationships found in Experiment 1 did not result from the attention-capturing nature of unexpected sounds, but instead reflects overlap in structural processing resources for language and music.

General Discussion

The experiments reported here tested a key prediction of the SSIRH (Patel, 2003) that concurrent difficult syntactic integrations in language and in music should lead to interference. In Experiment 1, resolution of temporarily ambiguous garden-path sentences was especially slowed when hearing an out-of-key chord, suggesting that the processing of these harmonically unexpected chords drew upon the same limited resources that are involved in the syntactic reanalysis of garden-path sentences. Participants were not especially slow to process semantically improbable words when accompanied by an out-of-key chord, and Experiment 2 showed that manipulations of musical timbre did not interact with syntactic or semantic expectancy in language.

It is somewhat surprising that the extent to which musical harmonic unexpectancy interacted with garden-path reanalysis in Experiment 1 did not vary with musical experience. However, self-reported “years of musical training” may be a relatively imprecise measure of musical expertise. This, plus evidence that out-of-key chords elicit larger amplitude electrophysiological responses in musicians than in nonmusicians (e.g., Koelsch, Schmidt, & Kansok, 2002), suggests that this issue deserves further investigation.

That semantic expectancy in language did not interact with harmonic expectancy in music fits with some previous findings (Besson et al., 1998; Bonnel et al., 2001; Koelsch et al., 2005), but contrasts with other work showing interactions between semantic and harmonic processing. For example, semantic priming effects are reduced for target words sung on harmonically unexpected chords (Poulin-Charronnat et al., 2005). Note, however, that these results were not interpreted as evidence for shared processing of harmony and semantics but were argued to reflect modulations of attentional processes by harmonically unexpected chords (cf. Escoffier & Tillmann, 2008). Another example of semantic/harmonic interactions is that the N400 component elicited by semantically unexpected words leads to reduced amplitude of the N500 component elicited by harmonically unexpected chords (Steinbeis & Koelsch, 2008). The discrepancy between that study and this one may reflect task differences. In particular, Steinbeis and Koelsch required participants to monitor sentences and chord sequences whereas the present experiments included no musical task.

The current experiments indicate that syntactic processing is not just a hallmark of human language but is a hallmark of human music as well. Of course, not all aspects of linguistic and musical syntax are shared, but these data suggest that common processes are involved in both domains. This overlap between language and music provides two viewpoints of our impressive syntactic processing abilities and thus should provide an opportunity to develop a better understanding of the mechanisms underlying our ability to process hierarchical syntactic relationships in general.

Acknowledgments

We thank Evelina Fedorenko, Victor Ferreira, Florian Jaeger, Stefan Koelsch, Roger Levy, and two anonymous reviewers for helpful comments, and Serina Chang, Rodolphe Courtier, Katie Doyle, Matt Hall, and Yanny Siu for assistance with data collection. This work was supported by NIH grants R01 MH-64733 and F32 DC-008723 and by Neurosciences Research Foundation as part of its program on music and the brain at The Neurosciences Institute, where ADP is the Esther J. Burnham Senior Fellow.

Footnotes

Analyses were also conducted on untrimmed log-transformed RTs, which yielded the same pattern of results.

Musical training also did not predict participants’ contribution to the statistical model (i.e., participants’ random intercepts were not correlated with musical training; r = 0.09, n.s.), and allowing random slopes for musical expectancy did not provide a better fitting model (χ² = 0.17, n.s.), suggesting that the effect of musical expectancy did not differ across subjects.
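As a rough check on the model comparison reported in this footnote, the sketch below converts a likelihood-ratio χ² statistic into an upper-tail p-value. The degrees of freedom are not reported in the text, so the values 1 and 2 used here are assumptions (one added variance term, with or without an added covariance term), and the helper name `chi2_sf` is hypothetical. It is not the authors' analysis code, which was run in R.

```python
import math


def chi2_sf(stat: float, df: int) -> float:
    """Upper-tail probability of a chi-square statistic (closed forms for df = 1 or 2)."""
    if stat < 0:
        raise ValueError("chi-square statistic must be non-negative")
    if df == 1:
        # A chi-square(1) variable is a squared standard normal.
        return math.erfc(math.sqrt(stat / 2.0))
    if df == 2:
        # A chi-square(2) variable is exponential with mean 2.
        return math.exp(-stat / 2.0)
    raise ValueError("this sketch only supports df = 1 or df = 2")


stat = 0.17  # statistic reported in the footnote
for df in (1, 2):  # assumed degrees of freedom; not given in the source
    print(f"df = {df}: p = {chi2_sf(stat, df):.3f}")
```

Under either assumed value, the p-value is well above .05, which is consistent with the footnote's report that adding random slopes did not improve model fit.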

Portions of this work were presented at the CUNY Sentence Processing conference in March, 2007, the Conference on Language and Music as Cognitive Systems in May, 2007, and the 10th International Conference on Music Perception and Cognition (ICMPC10) in August, 2008.

Archived Materials

The following materials associated with this article may be accessed through the Psychonomic Society’s Norms, Stimuli, and Data archive, www.psychonomic.org/archive. To access these files, search the archive for this article using the journal name (Psychonomic Bulletin & Review), the first author’s name (Slevc), and the publication year (2009).

References

- Baayen RH. languageR: Data sets and functions with “Analyzing Linguistic Data: A practical introduction to statistics”. R package version 0.95. 2008.

- Bates DM, Maechler M, Dai B. lme4: Linear mixed-effects models using S4 classes. R package version 0.999375-24. 2008.

- Besson M, Faïta F, Peretz I, Bonnel A-M, Requin J. Singing in the brain: Independence of lyrics and tunes. Psychological Science. 1998;9:494–498.

- Bonnel A-M, Faïta F, Peretz I, Besson M. Divided attention between lyrics and tunes of operatic songs: Evidence for independent processing. Perception and Psychophysics. 2001;63:1201–1213. doi: 10.3758/bf03194534.

- Caplan D, Waters GS. Verbal working memory and sentence comprehension. Behavioral and Brain Sciences. 1999;22:77–126. doi: 10.1017/s0140525x99001788.

- Escoffier N, Tillmann B. The tonal function of a task-irrelevant chord modulates speed of visual processing. Cognition. 2008;107:1070–1083. doi: 10.1016/j.cognition.2007.10.007.

- Frazier L, Rayner K. Making and correcting errors during sentence comprehension: Eye movements in the analysis of structurally ambiguous sentences. Cognitive Psychology. 1982;14:178–210.

- Koelsch S, Gunter TC, Wittfoth M, Sammler D. Interaction between syntax processing in language and in music: An ERP study. Journal of Cognitive Neuroscience. 2005;17:1565–1577. doi: 10.1162/089892905774597290.

- Koelsch S, Schmidt B-H, Kansok J. Effects of musical expertise on the early right anterior negativity: An event-related brain potential study. Psychophysiology. 2002;39:657–663. doi: 10.1017/S0048577202010508.

- Lewis RL, Vasishth S, Van Dyke JA. Computational principles of working memory in sentence comprehension. Trends in Cognitive Sciences. 2006;10:447–454. doi: 10.1016/j.tics.2006.08.007.

- Loui P, Wessel DL. Harmonic expectation and affect in Western music: Effects of attention and training. Perception and Psychophysics. 2007;69:1084–1092. doi: 10.3758/bf03193946.

- MacDonald MC, Pearlmutter NJ, Seidenberg MS. The lexical nature of syntactic ambiguity resolution. Psychological Review. 1994;101:676–703. doi: 10.1037/0033-295x.101.4.676.

- Maess B, Koelsch S, Gunter TC, Friederici AD. Musical syntax is processed in Broca’s area: An MEG study. Nature Neuroscience. 2001;4:540–545. doi: 10.1038/87502.

- Patel AD. Language, music, syntax and the brain. Nature Neuroscience. 2003;6:674–681. doi: 10.1038/nn1082.

- Patel AD. Music, Language, and the Brain. New York: Oxford University Press; 2008.

- Patel AD, Gibson E, Ratner J, Besson M, Holcomb PJ. Processing syntactic relations in language and music: An event-related potential study. Journal of Cognitive Neuroscience. 1998;10:717–733. doi: 10.1162/089892998563121.

- Peretz I, Coltheart M. Modularity of music processing. Nature Neuroscience. 2003;6:688–691. doi: 10.1038/nn1083.

- Pickering MJ, van Gompel RPG. Syntactic Parsing. In: Traxler MJ, Gernsbacher M, editors. Handbook of Psycholinguistics. New York: Academic Press; 2006.

- Poulin-Charronnat B, Bigand E, Madurell F, Peereman R. Musical structure modulates semantic priming in vocal music. Cognition. 2005;94:B67–78. doi: 10.1016/j.cognition.2004.05.003.

- R Development Core Team. 2008. R: A language and environment for statistical computing (Version 2.7.1): R Foundation for Statistical Computing, Vienna, Austria. URL: http://www.R-project.org.

- Smith JD, Melara RJ. Aesthetic preference and syntactic prototypicality in music: ’Tis the gift to be simple. Cognition. 1990;34:279–298. doi: 10.1016/0010-0277(90)90007-7.

- Steinbeis N, Koelsch S. Shared neural resources between music and language indicate semantic processing of musical tension-resolution patterns. Cerebral Cortex. 2008;18:1169–1178. doi: 10.1093/cercor/bhm149.

- Thompson WF, Cuddy LL. Perceived key movement in four-voice harmony and single voices. Music Perception. 1992;9:427–438.

- Trueswell JC, Tanenhaus MK, Kello C. Verb-specific constraints in sentence processing: Separating effects of lexical preference from garden-paths. Journal of Experimental Psychology: Learning, Memory, and Cognition. 1993;19:528–553. doi: 10.1037//0278-7393.19.3.528.