Abstract

Several lines of research have documented early-latency non-linear response interactions between audition and touch in humans and non-human primates. That these effects have been obtained under anesthesia, passive stimulation, as well as speeded reaction time tasks would suggest that some multisensory effects are not directly influencing behavioral outcome. We investigated whether the initial non-linear neural response interactions have a direct bearing on the speed of reaction times. Electrical neuroimaging analyses were applied to event-related potentials in response to auditory, somatosensory, or simultaneous auditory–somatosensory multisensory stimulation that were in turn averaged according to trials leading to fast and slow reaction times (using a median split of individual subject data for each experimental condition). Responses to multisensory stimulus pairs were contrasted with each unisensory response as well as summed responses from the constituent unisensory conditions. Behavioral analyses indicated that neural response interactions were only implicated in the case of trials producing fast reaction times, as evidenced by facilitation in excess of probability summation. In agreement, supra-additive non-linear neural response interactions between multisensory and the sum of the constituent unisensory stimuli were evident over the 40–84 ms post-stimulus period only when reaction times were fast, whereas subsequent effects (86–128 ms) were observed independently of reaction time speed. Distributed source estimations further revealed that these earlier effects followed from supra-additive modulation of activity within posterior superior temporal cortices. These results indicate the behavioral relevance of early multisensory phenomena.

Keywords: multisensory, crossmodal, event-related potential, auditory, somatosensory, tactile, reaction time, redundant signals effect

Introduction

Multisensory interactions have been documented across the animal kingdom as well as across varying pairs of sensory modalities and can serve to enhance perceptual abilities and response speed (Calvert et al., 2004; Driver and Spence, 2004; Stein and Meredith, 1993; Stein and Stanford, 2008; Welch and Warren, 1980) as well as learning (Lehmann and Murray, 2005; Murray et al., 2004, 2005a; Shams and Seitz, 2008). With increasing neuroscientific research in this domain, there is now abundant anatomic and functional evidence that multisensory convergence and non-linear interactions can occur during the initial post-stimulus processing stages as well as within regions hitherto considered solely unisensory in their function, including even primary cortices (Calvert et al., 1997; Cappe and Barone, 2005; Falchier et al., 2002; Foxe et al., 2000; Giard and Peronnet, 1999; Hall and Lomber, 2008; Kayser and Logothetis, 2007; Rockland and Ojima, 2003; Wang et al., 2008). It is worth noting that these kinds of effects do not preclude additional effects at later stages and within higher-order brain regions (Driver and Noesselt, 2008).

Despite this shift in how sensory processing is considered to be organized (Foxe and Schroeder, 2005; Ghazanfar and Schroeder, 2006; Wallace et al., 2004), a challenge remains regarding whether there are direct links between early, low-level multisensory interactions and behavioral indices of multisensory processing. The case of auditory–somatosensory (AS) multisensory interactions is promising with regard to addressing this issue because it has been thoroughly investigated using relatively rudimentary stimuli (i.e., meaningless sounds and vibrotactile or electrocutaneous stimulation) in both humans and non-human primates with anatomic (Cappe and Barone, 2005; Cappe et al., 2009; Hackett et al., 2007a,b; Smiley et al., 2007), electrophysiologic (Brett-Green et al., 2008; Foxe et al., 2000; Fu et al., 2003; Gonzalez Andino et al., 2005; Lakatos et al., 2007; Murray et al., 2005b; Schroeder et al., 2001, 2003), and hemodynamic methods (Beauchamp et al., 2008; Foxe et al., 2002; Kayser et al., 2005). One consistency is that convergence and non-linear interactions occur within the initial temporal stages of cortical processing (i.e., within 50–100 ms post-stimulus) and involve belt regions of auditory cortex adjacent to primary cortices. These effects are seen despite paradigmatic variations in terms of passive stimulus presentation vs. performance of a simple stimulus detection task (in the case of studies in humans), or even the use of anesthetics (in the case of studies in non-human primates). In part because of the large consistency in the observed effects, AS interactions represent a situation wherein one might reasonably hypothesize that early effects within low-level cortices are relatively automatic and unaffected by cognitive factors and have no direct bearing on subjects' subsequent behavior (e.g., reaction time).

The present study sought to provide empirical evidence that would either support or refute this proposition. To this end, a subset of behavioral and EEG data from our previously published study (Murray et al., 2005b) were re-considered by using a median split according to reaction times to sort and average EEG trials into those leading to fast and slow reaction times. This was done separately for each unisensory (auditory and somatosensory) condition as well as for the multisensory condition. From these averages, and for each participant, we calculated event-related potential (ERP) indices of non-linear interactions based on an additive model, separately for trials leading to fast and to slow reaction times. We reasoned that if AS multisensory integration was independent of behavior, then no differences would be observed between these indices. Alternatively, these indices should differ if non-linear neural response interactions impact subsequent behavior.

Materials and Methods

Participants

The present analyses are based on the data from eight paid volunteers, who took part in our previously published study (Murray et al., 2005b). Their mean age was 27.5 years, and they included three women. Seven of the eight participants were right-handed (Oldfield, 1971). All reported normal hearing and touch, and none reported a history of neurological or psychiatric illness. They all provided written, informed consent to the experimental procedures, which were approved by the Institutional Review Board of The Nathan Kline Institute.

Stimuli and task

Full details of the paradigm are reported elsewhere (Murray et al., 2005b). We supply only the details pertinent to the present analyses. Participants' arms were comfortably outstretched in front of them on armrests and were separated by ∼100° in azimuth. Each hand was located next to a loudspeaker (JBL, model no. CM42). Auditory stimuli were 30 ms white noise bursts (70 dB; 2.5 ms rise/fall time) delivered through a stereo receiver (Kenwood, model no. VR205). In their hands an Oticon bone conduction vibrator (Oticon-A 100 Ω, 1.6 × 2.4 cm surfaces; Oticon Inc., Somerset, NJ, USA) was held between the thumb and index finger (and away from the knuckles to avoid bone conduction of sound). Somatosensory stimuli were driven by DC pulses (±5 V; ∼685 Hz square waves of 15 ms duration). To further prevent the somatosensory stimuli from being heard, the hands were either wrapped in sound-attenuating foam (N = 7) or earplugs (N = 1) were worn (though in the latter case sounds remained audible and localizable). Participants were presented with auditory, somatosensory, or synchronous AS stimuli. Each of eight stimulus configurations (i.e., four unisensory and four multisensory combinations) was randomly presented with equal frequency in blocks comprising 96 trials. Participants were instructed to make simple reaction time responses to detection of any stimulus through a pedal located under the right foot, while maintaining central fixation. They were asked to emphasize speed, but to refrain from anticipating. The inter-stimulus interval varied randomly from 1.5 to 4 s.

EEG acquisition and pre-processing

Continuous EEG was acquired at 500 Hz through a 128-channel Neuroscan Synamps system referenced to the nose (Neurosoft Inc.; inter-electrode distance ∼2.4 cm, 0.05–100 Hz band-pass filter, impedances <5 kΩ) that included HEOG and VEOG recordings. Here, only data from the following three conditions were analyzed: auditory stimulation of the right loudspeaker, somatosensory stimulation at the right hand, and AS multisensory stimulation of the right loudspeaker and right hand. Peri-stimulus EEG epochs (−100 ms pre-stimulus to 600 ms post-stimulus onset) from these conditions were first sorted according to whether the reaction time (RT) on that trial was faster or slower than the participant's median value for that condition. In addition to the application of an automated artifact criterion of ±80 μV, epochs with blinks, eye movements, or other sources of transient noise were rejected. Data from each epoch were also band-pass filtered (0.51–40 Hz) and baseline corrected using the entire peri-stimulus period to eliminate DC shifts. This procedure was used to generate ERPs for each experimental condition and subject. Summed ERPs from the unisensory auditory and somatosensory conditions were also generated in this manner¹. Prior to group-averaging, data were recalculated to the average reference, down-sampled to a standard 111-channel electrode array (3D spline interpolation; Perrin et al., 1987), normalized by their mean global field power across time, and baseline corrected using the −100 to 0 ms pre-stimulus interval². In this way, eight ERPs were generated per participant (i.e., 4 stimulus conditions × 2 RT speeds). On average, 138 epochs (range 135–140) were included in the auditory, somatosensory, or multisensory ERP from a given participant. This value did not significantly differ across experimental conditions (p > 0.11) or as a function of RT speed (p > 0.4).
Moreover, trials producing fast and slow RTs were distributed evenly across the duration of the experiment (i.e., each set of five blocks of trials). Repeated measures analysis of variance (ANOVA) using speed of RT and block of trials as within-subjects factors revealed no main effect or interaction (all p-values >0.44). This argues against an explanation of our results in terms of fatigue or procedural learning.
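
The median-split averaging described above can be illustrated with a minimal sketch. It assumes that artifact-free epochs for one participant and one condition are already available as a NumPy array; the function name `median_split_erps`, the array layout, and the handling of trials falling exactly on the median are illustrative assumptions and are not taken from the original analysis pipeline.

```python
import numpy as np

def median_split_erps(epochs, rts):
    """Average epochs separately for trials faster and slower than the median RT.

    epochs: array (n_trials, n_channels, n_samples), one condition, one participant
    rts:    array (n_trials,), reaction time of each trial in ms
    """
    epochs = np.asarray(epochs, dtype=float)
    rts = np.asarray(rts, dtype=float)
    median_rt = np.median(rts)
    fast = rts < median_rt
    # Assumption: trials exactly at the median are left out of both halves.
    slow = rts > median_rt
    return epochs[fast].mean(axis=0), epochs[slow].mean(axis=0)

# Toy data: four trials whose "EEG" amplitude simply equals the trial's RT.
rts = np.array([250.0, 310.0, 280.0, 400.0])
epochs = rts[:, None, None] * np.ones((4, 2, 3))
erp_fast, erp_slow = median_split_erps(epochs, rts)
```

In this toy example the fast-RT average contains the 250 and 280 ms trials and the slow-RT average contains the 310 and 400 ms trials. The same function would be applied once per condition so that the split always uses that condition's own median.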

EEG analyses

Because the focus of this study was on whether indices of non-linear multisensory interactions vary as a function of RT speed, we compared ERPs to multisensory stimulus pairs with not only the ERPs to each unisensory condition, but also the summed ERPs to the constituent unisensory stimuli for trials leading to fast and slow RTs. This resulted in eight ERPs per participant, which we hereafter refer to as Pair_fast and Pair_slow for the multisensory conditions, Aud_fast and Aud_slow for the auditory conditions, Soma_fast and Soma_slow for the somatosensory conditions, and Sum_fast and Sum_slow for the summed responses from the constituent unisensory conditions. This generated a 2 (speed) × 4 (condition) within-subjects design that was subjected to repeated measures ANOVA. The Greenhouse–Geisser correction was applied when sphericity was significantly violated. Our analyses are based on the comparison of these ERPs examining global measures of the electric field at the scalp. These so-called electrical neuroimaging analyses allow for the differentiation of effects following from modulations in the strength of responses of statistically indistinguishable brain generators from alterations in the configuration of these generators (viz. the topography of the electric field at the scalp). As these methods have been extensively detailed elsewhere (Michel et al., 2004; Murray et al., 2004, 2006, 2008; Pourtois et al., 2008), we provide only the essential details here.
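
The additive-model comparison can be sketched as follows, under the assumption that each ERP is stored as a channels × samples array. The function name `additive_model_interaction` and the toy values are hypothetical; the sketch only shows how a summed response and a pair-minus-sum difference would be formed for one RT speed.

```python
import numpy as np

def additive_model_interaction(pair, aud, soma):
    """Return the summed unisensory ERP and the non-linear interaction term.

    All inputs are arrays of shape (n_channels, n_samples) from the same RT half.
    A non-zero pair-minus-sum difference indicates a departure from additivity.
    """
    summed = np.asarray(aud, dtype=float) + np.asarray(soma, dtype=float)
    interaction = np.asarray(pair, dtype=float) - summed
    return summed, interaction

# Toy single-channel example with three time points.
aud = np.array([[0.0, 1.0, 2.0]])
soma = np.array([[0.0, 0.5, 1.0]])
pair = np.array([[0.0, 2.0, 3.0]])
summed, interaction = additive_model_interaction(pair, aud, soma)
```

Here the pair response exceeds the sum only at the second time point. In the study itself the comparison is made on reference-independent measures (topography and GFP) rather than on single-channel voltages.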

Modulations in the topography of the electric field at the scalp were assessed by submitting the collective post-stimulus periods of the ERPs from all conditions to a topographic pattern (i.e., map) analysis based on a hierarchical clustering algorithm (Murray et al., 2008; Spierer et al., 2007, 2008; Toepel et al., 2009). The neurophysiologic utility of this cluster analysis is that topographic changes indicate differences in the brain's underlying active generators (Lehmann, 1987). This method is independent of the reference electrode and is insensitive to pure amplitude modulations across conditions (topographies of normalized maps are compared). The optimal number of maps (i.e., the minimal number of maps that accounts for the greatest variance of the dataset) was determined using a modified Krzanowski–Lai criterion (Murray et al., 2008). It is worth mentioning that this method imposes no assumption that template maps be orthogonal. The pattern of maps observed in the group-averaged data was statistically tested by comparing each of these maps with the moment-by-moment scalp topography of an individual participant's ERPs from each condition – a procedure that we here refer to as ‘fitting’. For this fitting procedure, each time point of each ERP from each participant was labeled according to the map with which it best correlated spatially (Murray et al., 2008). Because this analysis did not reveal any significant modulations between conditions, we do not discuss them further here. Rather, this analysis served to identify time windows for analyses of global field power (GFP) and for source estimations.
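
As a rough illustration of the fitting step, the sketch below labels each time point of a single ERP with the template map it correlates with best spatially. It is not the clustering software used in the study; the function name `fit_templates`, the array shapes, and the toy maps are assumptions made for the example.

```python
import numpy as np

def fit_templates(erp, templates):
    """Label each time point with the index of the best-correlating template map.

    erp:       array (n_channels, n_samples)
    templates: array (n_maps, n_channels)
    Maps are average-referenced and scaled to unit norm, so that only the
    topography (not the strength) of the field drives the label.
    Assumes no map or time point is exactly flat (zero norm).
    """
    erp_c = erp - erp.mean(axis=0, keepdims=True)
    tmpl_c = templates - templates.mean(axis=1, keepdims=True)
    erp_n = erp_c / np.linalg.norm(erp_c, axis=0, keepdims=True)
    tmpl_n = tmpl_c / np.linalg.norm(tmpl_c, axis=1, keepdims=True)
    corr = tmpl_n @ erp_n  # spatial correlation, shape (n_maps, n_samples)
    return corr.argmax(axis=0)

# Two toy template maps over four electrodes.
templates = np.array([[1.0, -1.0, 1.0, -1.0],
                      [1.0, 1.0, -1.0, -1.0]])
# ERP: map 0 at two different strengths, then map 1 at two different strengths.
erp = np.column_stack([2.0 * templates[0], 3.0 * templates[0],
                       0.5 * templates[1], 1.0 * templates[1]])
labels = fit_templates(erp, templates)
```

The labels depend only on map shape: scaling a map by 2 or by 0.5 does not change which template it is assigned to, which mirrors the insensitivity to pure amplitude modulations noted above.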

Modulations in the strength of the electric field at the scalp were assessed using GFP (Lehmann and Skrandies, 1980) for each participant and stimulus condition. GFP is calculated as the square root of the mean of the squared value recorded at each electrode (vs. the average reference) and represents the spatial standard deviation of the electric field at the scalp. It yields larger values for stronger electric fields. We would emphasize that analyses of GFP are independent and orthogonal to those of topography, such that contemporaneous or asynchronous changes in the electric field topography can co-occur. However, the observation of a GFP modulation in the absence of a topographic modulation would most parsimoniously be interpreted as the modulation of statistically indistinguishable generators across experimental conditions.
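
The GFP definition above translates directly into a few lines of code. The sketch below also includes an area measure over a time window; treating "GFP area" as a trapezoidal integral in units of amplitude × ms is an assumption for illustration, as are the function names.

```python
import numpy as np

def global_field_power(erp):
    """GFP per time point for an ERP of shape (n_channels, n_samples).

    The data are re-expressed against the average reference, then the root
    mean square across electrodes is taken (the spatial standard deviation).
    """
    erp = np.asarray(erp, dtype=float)
    avg_ref = erp - erp.mean(axis=0, keepdims=True)
    return np.sqrt((avg_ref ** 2).mean(axis=0))

def gfp_area(gfp, times_ms, t_start, t_end):
    """Area under the GFP curve between t_start and t_end (inclusive), in ms units.

    Assumption: the area is computed with the trapezoidal rule.
    """
    mask = (times_ms >= t_start) & (times_ms <= t_end)
    g, t = gfp[mask], times_ms[mask]
    return float(((g[:-1] + g[1:]) / 2.0 * np.diff(t)).sum())

# Toy data sampled every 2 ms (500 Hz) from -100 to 598 ms, two electrodes.
times_ms = np.arange(-100, 600, 2)
erp = np.vstack([np.ones(times_ms.size), -np.ones(times_ms.size)])
gfp = global_field_power(erp)
area = gfp_area(gfp, times_ms, 40, 84)
```

With two electrodes at +1 and −1 the GFP is 1 at every time point, so the area over the 40–84 ms window is 44. The result of `global_field_power` matches `erp.std(axis=0)` with NumPy's default population standard deviation.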

Source estimations

We estimated the sources in the brain underlying the ERPs from each condition and RT speed using a distributed linear inverse solution applying the local autoregressive average (LAURA) regularization approach (Grave de Peralta et al., 2001, 2004; see also Michel et al., 2004 for a comparison of inverse solution methods). LAURA selects the source configuration that best mimics the biophysical behavior of electric vector fields (i.e., activity at one point depends on the activity at neighboring points according to electromagnetic laws). In our study, homogeneous regression coefficients in all directions and within the whole solution space were used. LAURA uses a realistic head model, and the solution space included 4024 nodes, selected from a 6 mm × 6 mm × 6 mm grid equally distributed within the gray matter of the Montreal Neurological Institute's average brain (courtesy of R. Grave de Peralta Menendez and S. Gonzalez Andino³). Source estimations were calculated over the time windows determined through the above topographic pattern analysis. For a given time window, data were first averaged as a function of time to generate a single data point for each subject and condition. This procedure increases the signal-to-noise ratio of single-subject data. Statistical analyses were then performed on the mean scalar values of the source estimations within identified clusters using a 4 × 2 repeated measures ANOVA. For the present analyses, the spatial extent of the cluster was restricted to those solution points with responses of at least 60% that of the maximal value. Additionally, clusters were required to include at least 15 contiguous solution points to minimize the likelihood of erroneous activity. For analyses we then selected the cluster with the largest spatial extent across conditions.

Results

Behavioral results

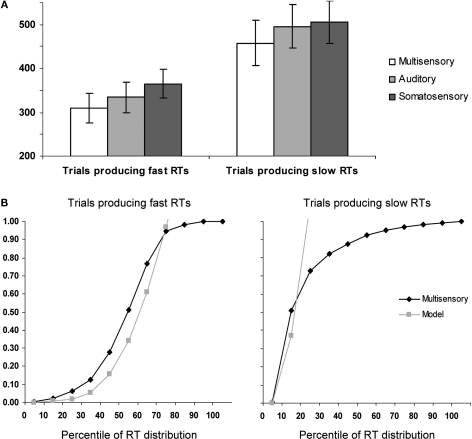

On average, subjects detected 99.8 ± 0.4% of auditory right stimuli, 99.9 ± 1.3% of somatosensory right stimuli, and 99.7 ± 0.5% of multisensory right stimulus pairs. Detection rates did not differ significantly between conditions. Mean RTs were faster for AS multisensory stimulus pairs than for either of the corresponding unisensory stimuli for both the faster and the slower halves of trials (Figure 1A). A repeated measures ANOVA using experimental condition (AS, A, and S) and portion of the RT distribution (fast and slow) as within-subject factors revealed significant main effects of experimental condition [F(2,6) = 89.09; p < 0.0001] as well as portion of the RT distribution [F(1,7) = 55.93; p < 0.0001], the latter of which was, of course, built into the analysis. The interaction between these factors showed a non-significant trend [F(2,6) = 4.81; p = 0.057]. Post hoc contrasts indicated that AS multisensory stimuli from both fast and slow portions of the distribution resulted in significantly faster RTs than either unisensory condition from the corresponding portion of the RT distribution (all p-values <0.002).

Figure 1.

Behavioral results. (A) Mean reaction times (SEM shown) for auditory–somatosensory multisensory pairs, auditory, and somatosensory stimuli (white, light gray, and dark gray bars, respectively). The left and right panels show data from trials producing faster and slower RTs, respectively. (B) Results of applying Miller's (1982) race model inequality to the cumulative probability distributions of the reaction time data. The model is the arithmetic sum of the cumulative probabilities from auditory and somatosensory trials. The x-axis indicates the percentile of the reaction time distribution after median split of the data. As above, the left and right panels show data from trials producing faster and slower RTs, respectively.

For each half of the RT distribution, separately, we assessed whether the redundant signals effect (RSE) could be fully explained by probability summation (also referred to as a race model account; Raab, 1962). To do this, we applied Miller's (1982) inequality, which has been widely used as a benchmark of integrative processes contributing to RT facilitation (Cappe et al., 2008; Martuzzi et al., 2007; Molholm et al., 2002; Murray et al., 2001, 2005a; Romei et al., 2007; Schroger and Widmann, 1998; Tajadura-Jimenez et al., 2008; Zampini et al., 2007). Briefly, this procedure involved the following steps. First, cumulative probability distributions were calculated after normalizing RTs in terms of the percentile of the range of RTs for each participant across conditions (bin widths of 10% were used in the present study). The values predicted by the race model were then calculated for each bin (i.e., equal to the summed values from the unisensory conditions). Finally, the actual probability of the multisensory condition was statistically compared with the modeled values (2-tailed t-test). In those cases where the probability predicted by the model is exceeded, it can be concluded that the race model cannot account for the facilitation in the redundant signals condition, thus supporting a neural co-activation model.
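
The steps of the race model test can be sketched for a single participant as follows. This is a minimal illustration under stated assumptions: the function name `race_model_bins`, the use of the upper edge of each 10% bin as the evaluation point, and the toy RTs are all hypothetical, and the model values are left as the plain arithmetic sum of the unisensory probabilities, as described above.

```python
import numpy as np

def race_model_bins(rt_as, rt_a, rt_s, n_bins=10):
    """Cumulative probabilities for the multisensory condition and the race model.

    RTs are binned in steps of 1/n_bins of the RT range pooled across the three
    conditions. The model value in each bin is P(A) + P(S).
    Returns (p_as, p_model), each of length n_bins.
    """
    rt_as, rt_a, rt_s = (np.asarray(r, dtype=float) for r in (rt_as, rt_a, rt_s))
    pooled = np.concatenate([rt_as, rt_a, rt_s])
    lo, hi = pooled.min(), pooled.max()
    # Upper edge of each bin, expressed in ms.
    edges = lo + (hi - lo) * np.arange(1, n_bins + 1) / n_bins

    def cdf(rts):
        return np.array([(rts <= e).mean() for e in edges])

    return cdf(rt_as), cdf(rt_a) + cdf(rt_s)

# Toy RTs (ms) for one participant and one half of the RT distribution.
rt_as = [200, 210, 220, 230]
rt_a = [300, 320, 340, 360]
rt_s = [310, 330, 350, 370]
p_as, p_model = race_model_bins(rt_as, rt_a, rt_s)
violations = p_as > p_model  # bins where the multisensory CDF exceeds the model
```

In this toy case the first two bins violate the inequality because multisensory responses occur before any unisensory response. For group-level inference, the per-bin values from all participants would then be compared with a paired t-test (for example with `scipy.stats.ttest_rel`), which is omitted here to keep the sketch dependency-free.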

The results of applying this inequality to the cumulative probability of RTs to the multisensory stimulus and its unisensory counterparts for the aligned right condition, separately for fast and slow trials, are presented in Figure 1B. For the slow trials, violation of the race model was observed for the 10th percentile (p = 0.04; 2-tailed t-test). By contrast, for the fast trials, the race model was significantly violated over the 10th to 60th percentiles of the RT distribution (p = 0.04, 0.004, 0.002, 0.004, 0.002, and 0.02, respectively). These results thus suggest that neural response interactions must necessarily be invoked over a wide portion of trials producing faster RTs, but not for trials producing slower RTs. These behavioral analyses thus indicate that the likelihood of a fast RT with multisensory trials exceeded the likelihood of obtaining a similarly fast RT with either unisensory condition. Thus, any facilitative neural response interactions occurring for these multisensory trials are unlikely to be the consequence of fast processing in one sensory modality and slow processing in the other. We return to this point below in the Discussion.

Electrophysiologic results

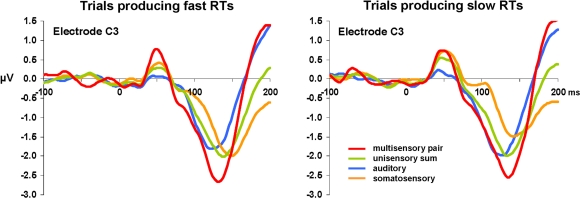

Visual inspection of the group-averaged ERPs across conditions at an exemplar left-lateralized central electrode site (C3 according to the 10–10 nomenclature; American Electroencephalographic Society, 1994) suggests that over the ∼40–80 ms post-stimulus period responses to multisensory stimuli were of higher amplitude than those to all other conditions when RTs were subsequently fast (see Figure 2). By contrast, no such modulation was evident upon inspection of responses from trials ultimately producing slow RTs. However, we would remind the reader that analyses of voltage waveforms are entirely dependent on the choice of the reference electrode(s), thereby severely limiting their neurophysiologic interpretability (detailed in Murray et al., 2008). Consequently, our analyses were based on reference-independent metrics.

Figure 2.

Group-averaged voltage waveforms. Data are displayed at an exemplar left-lateralized central electrode site (C3) from each condition. Separate graphs depict ERPs from trials producing fast and slow RTs. Non-linear neural response interactions appear to start over the ∼40–80 ms post-stimulus onset for trials leading to fast but not to slow RTs.

The first of these examined if and when the topography of the electric field at the scalp differed across conditions and/or between trials leading to fast vs. slow RTs. Such effects would be indicative of changes in the configuration of the underlying intracranial generators – i.e., differences in the active brain network. This was assessed with a topographic pattern analysis (see Section ‘Materials and Methods’ for details). However, there was no evidence of topographic variation across conditions during the initial 200 ms post-stimulus onset, in keeping with our prior findings (Murray et al., 2005b). Nonetheless, this analysis does provide information regarding time periods of stable electric field topography as a function of time – i.e., ERP components. In particular, stable topographies were observed across conditions over the 40–84 and 86–128 ms post-stimulus intervals, which will be used below for defining the time windows for analyses of GFP area and for source estimations.

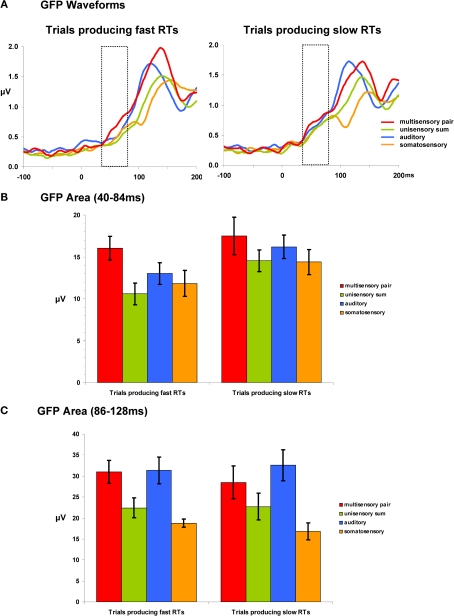

The second reference-independent measure examined if and when the strength of the electric field differed across conditions and/or between trials leading to fast vs. slow RTs. This measure also provides a means of identifying non-linear interactions (either supra- or sub-additive) between multisensory pairs and summed unisensory conditions. Group-averaged GFP waveforms are shown separately for trials producing fast and slow RTs (Figure 3A). For trials producing fast RTs, responses to multisensory pairs appeared to be stronger than all other conditions over the 40–84 ms period (see hashed box in Figure 3A). This was not evident in the case of trials producing slow RTs. To quantify and statistically assess this observation, GFP area measures were submitted to a repeated measures ANOVA. Over the 40–84 ms post-stimulus interval there were main effects of condition [F(3,21) = 7.280; p = 0.002] and of RT speed [F(1,7) = 11.581; p = 0.011]. The interaction between these factors was not reliable (p = 0.477). Given the main effect of RT speed, additional focused ANOVAs were separately performed for trials producing fast and slow RTs. In the case of trials producing fast RTs, there was a main effect of condition [F(3,21) = 11.169; p < 0.001]. Post hoc paired contrasts indicated that this was due to a stronger GFP in response to multisensory pairs than to any other condition (all t-values >2.440 and p-values <0.05; see Figure 3B). Likewise, the GFP area from none of the other conditions significantly differed from each other (all p-values >0.15). In the case of trials producing slow RTs, there was no evidence of a statistically reliable difference across conditions [F(3,21) = 1.916; p > 0.15].
This pattern of results for responses over the 40–84 ms post-stimulus interval suggests that modulations across conditions are evident only for trials producing fast RTs and that these trials furthermore result in supra-additive non-linear interactions between multisensory and summed unisensory responses. It is also worthwhile to mention that there was no evidence for robust differences over this time period between multisensory trials producing fast and slow RTs (p > 0.35). The non-linear interactions observed for trials producing fast RTs would instead appear to follow from modulated responses to unisensory conditions, rather than specifically from enhanced responses to multisensory stimuli.

Figure 3.

Global field power (GFP) waveforms and analyses. (A) Group-averaged GFP waveforms are displayed for each condition and are also separated according to later RT speed. The dotted insets indicate the 40–84 ms post-stimulus interval over which area measures were calculated. (B) Mean (SEM shown) GFP area measurements over the 40–84 ms post-stimulus interval. (C) Mean (SEM shown) GFP area measurements over the 86–128 ms post-stimulus interval.

Analyses of GFP measures over the 86–128 ms post-stimulus interval (Figure 3C) revealed a main effect of condition [F(3,21) = 11.725; p = 0.005]. Neither the main effect of RT speed nor the interaction between these factors was statistically reliable (p-values >0.40). Consequently, we collapsed the data across RT speeds and conducted post hoc paired contrasts accordingly. These comparisons revealed that GFP area was stronger for multisensory pairs than for either the unisensory summed responses [t(7) = 6.522; p < 0.001] or the somatosensory responses [t(7) = 3.178; p = 0.016]. There was no statistically reliable difference between multisensory and auditory responses (p > 0.168). This pattern of results would thus indicate a general supra-additive interaction irrespective of later RT speed.

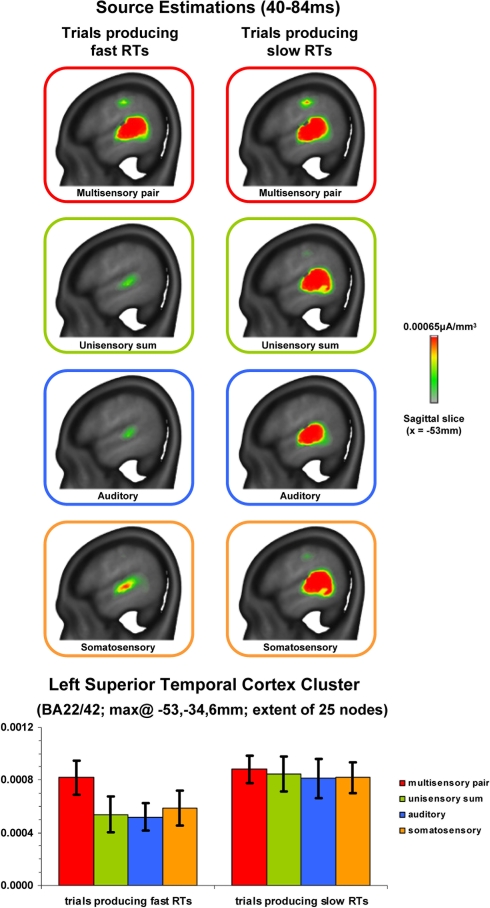

Results to this point would indicate that supra-additive non-linear interactions occur earlier when RTs were ultimately faster, with no evidence of subsequent effects varying with RT speed. We therefore next sought to estimate those brain regions likely contributing to this earlier effect. Source estimations were performed over the 40–84 ms interval, the results of which are shown in Figure 4 for each condition and RT speed. In all cases, sources were identified within the posterior superior temporal cortex of the left hemisphere (Brodmann's Areas 22 and 42). A cluster of 25 contiguous nodes was identified based on the multisensory responses. The mean scalar values across these nodes were then submitted to a 4 condition × 2 RT speed within-subjects ANOVA (bar graph in Figure 4). There was a main effect of RT speed [F(1,7) = 8.593; p = 0.022]. Neither the main effect of condition nor the interaction was statistically reliable (p-values >0.19). Separate analyses were therefore conducted for each RT speed using a repeated measures ANOVA with the within-subject factor of condition. For trials producing fast RTs there was a main effect of condition [F(3,21) = 3.599; p = 0.031] that was due to stronger source strength for multisensory responses. For trials producing slow RTs there was no statistically reliable main effect of condition [F(3,21) = 0.116; p = 0.949]. This pattern of results is highly consistent with that observed for the above analysis of GFP area over the same time period. As above, no reliable differences were observed between mean scalar values from multisensory trials producing fast and slow RTs (p > 0.45). Instead, the main effect of condition for trials producing fast RTs follows from weaker sources to unisensory conditions.

Figure 4.

Source estimations and statistical contrasts. The upper panels depict the mean source estimations over the 40–84 ms post-stimulus interval for each condition and subsequent RT speed at the sagittal slice of maximal amplitude (x = −53 mm using the Talairach and Tournoux (1988) coordinate system). The lower panels depict the mean (SEM shown) scalar values across nodes within the superior temporal cluster identified across conditions.

Discussion

This study investigated whether early AS neural response interactions impact subsequent behavior during a simple detection task. Previous observations of such neural response interactions in non-human primates and humans have consistently pointed to effects early in time (i.e., within the initial 100 ms post-stimulus onset) and/or within primary auditory and caudal belt regions, despite wide variation in both the recording methods (i.e., microelectrodes, EEG, fMRI) and the circumstances (i.e., anesthetized preparation, passive stimulus presentation, simple detection task) under which non-linear effects were elicited. Those studies examining the temporal dynamics of interactive effects have hitherto employed passive paradigms, making it impossible to directly associate neurophysiologic and behavioral effects. Our behavioral and electrophysiologic results provide evidence that early-latency low-level AS interactions vary according to the later speed of RTs.

Our behavioral results indicate that only trials producing faster RTs required the invocation of neural response interactions. That is, only these trials led to a facilitation of RTs in excess of predictions based on simple probability summation. On the other hand, trials producing slower RTs exhibited no such violation even though a significant facilitation of mean RTs was observed with respect to either unisensory condition. These behavioral results replicate what was already highlighted by Miller in his seminal work (Miller, 1982); namely that analyses targeted at identifying integrative phenomena should incorporate information about the distribution of RTs. More germane, this pattern of results provides one level of support for a difference in the underlying brain mechanisms at work prior to faster vs. slower RTs, though we would emphasize that neural response interactions can be observed even when the probability summation assumption is not violated (see Figure 3C; also Murray et al., 2001).

At an electrophysiologic level, supra-additive non-linear neural response interactions between multisensory stimulus pairs and summed unisensory responses were evident vis-à-vis strength modulations of statistically indistinguishable brain generators during two successive time periods. Two features are worth mentioning. The first is that our analyses provide no evidence that trials producing faster vs. slower RTs engage distinct networks of brain regions. Rather, effects were limited to modulations in the strength of statistically indistinguishable brain networks over similar time periods. This is consistent with our prior findings applying topographic analyses with the data collapsed across RT speeds (Murray et al., 2005b) and suggests that non-linear multisensory effects are occurring within areas already active under unisensory conditions. The second is that only trials producing faster RTs displayed non-linear effects over the earlier period (40–84 ms), whereas both types of trials (i.e., those producing faster or slower RTs) exhibited non-linear effects over the later period (86–128 ms). In other words, supra-additive interactions over the 40–84 ms period appear to be linked to faster performance of a simple detection task. Moreover, source estimations localized these non-linear effects to the posterior superior temporal cortex extending into the posterior insula. Statistical analyses of our source estimations furthermore indicated that non-linear effects in these regions over the 40–84 ms period were limited to trials producing faster RTs, though all conditions elicited reliable non-zero responses (see bar graphs in Figure 4). 

Regions of the posterior superior temporal cortex have been repeatedly documented as subserving AS non-linear interactions in prior hemodynamic imaging studies (humans: Foxe et al., 2002 and non-human primates: Kayser et al., 2005) and intracranial electrophysiologic studies (Lakatos et al., 2007; see also Fu et al., 2003; Schroeder and Foxe, 2002; Schroeder et al., 2001 for evidence of multisensory convergence). For example, caudo-medial belt regions of the auditory cortex and even primary auditory cortex have been demonstrated in non-human primates to exhibit AS convergence (Kayser et al., 2005; Lakatos et al., 2007; Schroeder et al., 2001). Additional anatomic data suggest that such effects could follow from cortico-cortical pathways, cortico-thalamo-cortical pathways, or both (Cappe and Barone, 2005; Cappe et al., 2009; Hackett et al., 2007a,b; Smiley et al., 2007). While most data in humans also emphasize effects within caudo-medial auditory cortices (Foxe et al., 2002), additional data also highlight the role of the superior temporal sulcus (Beauchamp et al., 2008). The source estimations of the present study would suggest that effects can extend throughout these regions contiguously, though the spatial precision of source estimations is currently inferior to that of fMRI. Future intracranial studies in humans will undoubtedly help resolve the specific contributions of each of these regions to AS interactions and perhaps also address unresolved mechanistic issues, including (but not limited to) the likely involvement of sub-threshold and sub-additive interactions (e.g., Meredith et al., 2006).

Several potential mechanisms could be proposed to account for this pattern of results. For example, it might be proposed that the present variations in multisensory interactions simply follow from variations in participants' level of attention, such that fast trials resulted from high levels of attention and slow trials from low levels. Indeed, prior work has shown how spatial attention (Talsma and Woldorff, 2005) and selective attention (Talsma et al., 2007) can modulate auditory–visual multisensory integration. In these studies, attention resulted in larger and/or supra-additive effects within the initial 200 ms post-stimulus presentation. In the present study, however, subjects were instructed to attend to both sensory modalities (i.e., audition and touch) and were instructed to ignore spatial variation in the stimuli (i.e., they performed a simple detection task irrespective of the spatial position of the stimuli). It is therefore unlikely that our participants were modulating their spatial or selective attention in a systematic manner here, though we cannot unequivocally rule this out. Nonetheless, the RSE shown by our behavioral results would not be predicted if the participants had systematically attended to one or the other sensory modality. In terms of spatial attention, all eight stimulus conditions (i.e., four unisensory and four multisensory; see Murray et al., 2005b for details) were equally probable within a block of trials, and the fact that all spatial combinations resulted in multisensory facilitation of RTs would suggest that participants indeed attended to both left and right hemispaces simultaneously. Finally, examination of the distribution of trials producing fast and slow RTs showed there to be an even distribution throughout the duration of the experiment. This would argue against a systematic effect of attention, arousal, or fatigue.

Another possibility is based on the recent observation that somatosensory inputs into supragranular layers of primary auditory cortices may serve to reset the phase of ongoing oscillatory activity that in turn modulates the responsiveness to auditory stimuli across the cortical layers (Lakatos et al., 2007). As a consequence, the phase of the reset oscillations was predictive of whether the auditory (and by extension multisensory) response was enhanced or suppressed. While this model is undoubtedly provocative, it is not straightforward how one might extrapolate it to the present study. For one, their maximal enhancement effects were observed when the auditory and somatosensory stimuli were synchronous, which was always the case here. Second, their measurements (which have much higher spatial precision than ours) were limited to primary auditory cortices, thus leaving unresolved the kinds of mechanisms at work in other brain regions, e.g., caudo-medial fields, known to contribute to AS interactions. Such notwithstanding, in the case of the present study, trials producing fast RTs may be those where ongoing oscillations (in primary auditory cortex or elsewhere) are reset such that their phase is optimal for response enhancement. Substantiating this speculation will require additional experimentation. However, related evidence from humans already supports a role for oscillatory activity in the beta frequency range (13–30 Hz) in contributing to subsequent RT speed (Senkowski et al., 2006; see also Senkowski et al., 2008 for review). Specifically, Senkowski and colleagues observed a negative correlation between the power of early (50–170 ms) evoked beta oscillations and RTs collapsed across unisensory auditory/visual and multisensory conditions. That is, increased power was linked with faster RTs. In the present study, there was no evidence for significant correlations between GFP and RT, though this may be influenced by our relatively small sample size.

A related possibility concerns the impact of pre-stimulus brain activity on subsequent stimulus-related responses. For example, Romei and colleagues have shown that pre-stimulus oscillations in the alpha frequency range (8–14 Hz) are indicative of the excitability of visual cortices (Romei et al., 2008b) and can be used as a predictor of visual perception (Romei et al., 2008a). No pre-stimulus effects were observed in the present analyses, even when pre-stimulus baseline correction was not performed. However, we cannot entirely exclude the possibility of pre-stimulus effects that are not phase-locked to stimulus onset. It may be possible to resolve the contributions of specific frequencies within specific brain regions to multisensory interactions and behavior by performing single-trial time-frequency analyses subsequent to source estimations (e.g., Gonzalez Andino et al., 2005).

In addition to the non-linear effects, it was also the case that responses to unisensory conditions were significantly weaker (both in terms of GFP and mean scalar values of estimated sources) than those to multisensory stimulation when RTs were fast, but not when RTs were slow. At present, there is no immediate explanation for this pattern of results. One speculative possibility is that RTs are fast when a response threshold is more readily met. If this is the case, responses to unisensory conditions would be expected to be weaker when preceding fast RTs. A related type of process is often invoked to account for stimulus repetition effects where stimulus repetition often leads to suppressed neural responses as well as facilitated RTs (e.g., Grill-Spector et al., 2006). One model to account for such effects, colloquially referred to as a ‘fatigue’ model, proposes that there is a proportionally equivalent reduction in neural response across initial and repeated presentation without any concomitant modulation in their pattern or temporal profile. Rather, all neurons responsive to a given stimulus, including those most selective, exhibit repetition suppression. Another model, colloquially referred to as a ‘sharpening’ model (e.g., Desimone, 1996), proposes that repetition leads to a reduction in the number of neurons responsive to a stimulus, with effects predominantly impacting those neurons least selective for a given stimulus. Finally, facilitation models propose there to be a latency shift in response profiles following repeated exposure. The extent to which any of these varieties of models can account for the present observations awaits continued investigation. Such notwithstanding, our data indicate that subsequent RT is linked to both the occurrence of non-linear interactions and the strength of responses to unisensory conditions.
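
For reference, GFP at a given time point is the standard deviation of the potential across all electrodes (Lehmann and Skrandies, 1980), so that stronger fields yield larger values irrespective of their topography. The following minimal sketch computes it from an ERP array; the array layout (electrodes × samples), the function name, and the example values are assumptions made for illustration.

```python
import numpy as np

def global_field_power(erp):
    """GFP per time point for an ERP of shape (electrodes, samples).

    Each time point is re-referenced to the average across electrodes,
    and GFP is the root mean square of the result, i.e. the spatial
    standard deviation of the field.
    """
    erp = np.asarray(erp, dtype=float)
    avg_ref = erp - erp.mean(axis=0, keepdims=True)
    return np.sqrt((avg_ref ** 2).mean(axis=0))

# Hypothetical two-electrode, two-sample ERP (microvolts).
erp_pair = np.array([[1.0, 2.0],
                     [-1.0, -2.0]])
gfp_pair = global_field_power(erp_pair)

# Adding a constant to every electrode changes the reference but not GFP.
gfp_shifted = global_field_power(erp_pair + 5.0)
```

Comparing `global_field_power` of a multisensory ERP with that of a unisensory ERP, or of summed unisensory ERPs, gives a reference-independent comparison of response strength.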

A final consideration is the possibility that (partially) distinct anatomic pathways are involved in trials producing fast and slow RTs. Although the present analyses provide no statistical evidence for topographic differences across conditions (instead only strength modulations), we cannot exclude the possibility of undetected effects. For example, recent research has shown that the thalamus could, in principle, also play an important role in multisensory and motor integration. In particular, the medial pulvinar receives projections from somatosensory and auditory areas and projects to premotor cortex (Cappe et al., 2007, 2009). Whether such pathways are implicated in the present effects will require additional investigations likely involving microelectrode recordings.

Irrespective of the mechanisms ultimately identified as mediating the variation in multisensory integration and subsequent RT speed, the present results add to the growing body of evidence highlighting the behavioral relevance of early and low-level multisensory phenomena.

Conflict of Interest Statement

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgments

The Swiss National Science Foundation provided financial support (grant #3100A0-118419 to Micah M. Murray). Partial support was also provided by the US National Institute of Mental Health (NIMH RO1 MH65350 to John J. Foxe).

Footnotes

1 Given that our prior research applying single-trial analysis methods identified supra-additive interactions (Gonzalez Andino et al., 2005), we considered it warranted to first perform a median-split of the unisensory responses and then to sum together auditory and somatosensory ERPs producing fast and slow RTs, separately. In this prior work, the response to multisensory stimuli was compared with the pooled data (i.e., union) from the corresponding unisensory conditions. Thus, supra-additive effects do not appear to be the simple result of summing.

2 We also assessed whether pre-stimulus differences could account for any of the hypothetical differences in brain responses associated with fast and slow RTs by analyzing the data without the application of the pre-stimulus baseline correction. No statistically reliable pre-stimulus effects were observed, though the post-stimulus effects were qualitatively similar to what we observed based on data that were pre-stimulus baseline-corrected. Thus, there was no indication that pre-stimulus responses (at least the 100 ms period before the stimulus) differed as a function of the ensuing RT.

References

- American Electroencephalographic Society (1994). Guideline thirteen: guidelines for standard electrode position nomenclature. J. Clin. Neurophysiol. 11, 111–113

- Beauchamp M. S., Yasar N. E., Frye R. E., Ro T. (2008). Touch, sound and vision in human superior temporal sulcus. Neuroimage 41, 1011–1020 10.1016/j.neuroimage.2008.03.015

- Brett-Green B. A., Miller L. J., Gavin W. J., Davies P. L. (2008). Multisensory integration in children: a preliminary ERP study. Brain Res. 1242, 283–290 10.1016/j.brainres.2008.03.090

- Calvert G. A., Bullmore E. T., Brammer M. J., Campbell R., Williams S. C., McGuire P. K., Woodruff P. W., Iversen S. D., David A. S. (1997). Activation of auditory cortex during silent lipreading. Science 276, 593–596 10.1126/science.276.5312.593

- Calvert G. A., Spence C., Stein B. E. (2004). The Handbook of Multisensory Processing. Cambridge, MA, MIT Press

- Cappe C., Barone P. (2005). Heteromodal connections supporting multisensory integration at low levels of cortical processing in the monkey. Eur. J. Neurosci. 22, 2886–2902 10.1111/j.1460-9568.2005.04462.x

- Cappe C., Morel A., Barone P., Rouiller E. M. (2009). The thalamocortical projection systems in primate: an anatomical support for multisensory and sensorimotor integrations. Cereb. Cortex.

- Cappe C., Morel A., Rouiller E. M. (2007). Thalamocortical and the dual pattern of corticothalamic projections of the posterior parietal cortex in macaque monkeys. Neuroscience 146, 1371–1387 10.1016/j.neuroscience.2007.02.033

- Cappe C., Thut G., Romei V., Murray M. M. (2008). Selective integration of auditory–visual looming cues by humans. Neuropsychologia 47, 1045–1052 10.1016/j.neuropsychologia.2008.11.003

- Desimone R. (1996). Neural mechanisms for visual memory and their role in attention. Proc. Natl. Acad. Sci. USA 93, 13494–13499 10.1073/pnas.93.24.13494

- Driver J., Noesselt T. (2008). Multisensory interplay reveals crossmodal influences on ‘sensory-specific’ brain regions, neural responses, and judgments. Neuron 57, 11–23 10.1016/j.neuron.2007.12.013

- Driver J., Spence C. (2004). Crossmodal Space and Crossmodal Attention. Oxford, Oxford University Press

- Falchier A., Clavagnier S., Barone P., Kennedy H. (2002). Anatomical evidence of multimodal integration in primate striate cortex. J. Neurosci. 22, 5749–5759

- Foxe J. J., Morocz I. A., Murray M. M., Higgins B. A., Javitt D. C., Schroeder C. E. (2000). Multisensory auditory–somatosensory interactions in early cortical processing revealed by high-density electrical mapping. Brain Res. Cogn. Brain Res. 10, 77–83 10.1016/S0926-6410(00)00024-0

- Foxe J. J., Schroeder C. E. (2005). The case for feedforward multisensory convergence during early cortical processing. Neuroreport 16, 419–423 10.1097/00001756-200504040-00001

- Foxe J. J., Wylie G. R., Martinez A., Schroeder C. E., Javitt D. C., Guilfoyle D., Ritter W., Murray M. M. (2002). Auditory–somatosensory multisensory processing in auditory association cortex: an fMRI study. J. Neurophysiol. 88, 540–543

- Fu K. M., Johnston T. A., Shah A. S., Arnold L., Smiley J., Hackett T. A., Garraghty P. E., Schroeder C. E. (2003). Auditory cortical neurons respond to somatosensory stimulation. J. Neurosci. 23, 7510–7515

- Ghazanfar A. A., Schroeder C. E. (2006). Is neocortex essentially multisensory? Trends Cogn. Sci. 10, 278–285 10.1016/j.tics.2006.04.008

- Giard M. H., Peronnet F. (1999). Auditory–visual integration during multimodal object recognition in humans: a behavioral and electrophysiological study. J. Cogn. Neurosci. 11, 473–490 10.1162/089892999563544

- Gonzalez Andino S. L., Murray M. M., Foxe J. J., de Peralta Menendez R. G. (2005). How single-trial electrical neuroimaging contributes to multisensory research. Exp. Brain Res. 166, 298–304 10.1007/s00221-005-2371-1

- Grave de Peralta R., Gonzalez Andino S., Lantz G., Michel C., Landis T. (2001). Noninvasive localization of electromagnetic epileptic activity. I. Method descriptions and simulations. Brain Topogr. 14, 131–137 10.1023/A:1012944913650

- Grave de Peralta R., Murray M. M., Michel C. M., Martuzzi R., Gonzalez Andino S. L. (2004). Electrical neuroimaging based on biophysical constraints. Neuroimage 21, 527–539 10.1016/j.neuroimage.2003.09.051

- Grill-Spector K., Henson R. N., Martin A. (2006). Repetition and the brain, neural models of stimulus-specific effects. Trends Cogn. Sci. 10, 14–23 10.1016/j.tics.2005.11.006

- Hackett T. A., De La Mothe L. A., Ulbert I., Karmos G., Smiley J., Schroeder C. E. (2007a). Multisensory convergence in auditory cortex, II. Thalamocortical connections of the caudal superior temporal plane. J. Comp. Neurol. 502, 924–952 10.1002/cne.21326

- Hackett T. A., Smiley J. F., Ulbert I., Karmos G., Lakatos P., de la Mothe L. A., Schroeder C. E. (2007b). Sources of somatosensory input to the caudal belt areas of auditory cortex. Perception 36, 1419–1430 10.1068/p5841

- Hall A. J., Lomber S. G. (2008). Auditory cortex projections target the peripheral field representation of primary visual cortex. Exp. Brain Res. 190, 413–430 10.1007/s00221-008-1485-7

- Kayser C., Logothetis N. K. (2007). Do early sensory cortices integrate cross-modal information? Brain Struct. Funct. 212, 121–132 10.1007/s00429-007-0154-0

- Kayser C., Petkov C. I., Augath M., Logothetis N. K. (2005). Integration of touch and sound in auditory cortex. Neuron 48, 373–384 10.1016/j.neuron.2005.09.018

- Lakatos P., Chen C. M., O'Connell M. N., Mills A., Schroeder C. E. (2007). Neuronal oscillations and multisensory interaction in primary auditory cortex. Neuron 53, 279–292 10.1016/j.neuron.2006.12.011

- Lehmann D. (1987). Principles of spatial analysis. In Handbook of Electroencephalography and Clinical Neurophysiology, Vol. 1: Methods of Analysis of Brain Electrical and Magnetic Signals, Gevins A. S., Remond A., eds (Amsterdam, Elsevier), pp. 309–405

- Lehmann D., Skrandies W. (1980). Reference-free identification of components of checkerboard-evoked multichannel potential fields. Electroencephalogr. Clin. Neurophysiol. 48, 609–621 10.1016/0013-4694(80)90419-8

- Lehmann S., Murray M. M. (2005). The role of multisensory memories in unisensory object discrimination. Brain Res. Cogn. Brain Res. 24, 326–334 10.1016/j.cogbrainres.2005.02.005

- Martuzzi R., Murray M. M., Michel C. M., Thiran J. P., Maeder P. P., Clarke S., Meuli R. A. (2007). Multisensory interactions within human primary cortices revealed by BOLD dynamics. Cereb. Cortex 17, 1672–1679 10.1093/cercor/bhl077

- Meredith M. A., Keniston L. R., Dehner L. R., Clemo H. R. (2006). Crossmodal projections from somatosensory area SIV to the auditory field of the anterior ectosylvian sulcus (FAES) in Cat: further evidence for subthreshold forms of multisensory processing. Exp. Brain Res. 172, 472–484 10.1007/s00221-006-0356-3

- Michel C. M., Murray M. M., Lantz G., Gonzalez S., Spinelli L., Grave de Peralta R. (2004). EEG source imaging. Clin. Neurophysiol. 115, 2195–2222 10.1016/j.clinph.2004.06.001

- Miller J. (1982). Divided attention: evidence for coactivation with redundant signals. Cogn. Psychol. 14, 247–279 10.1016/0010-0285(82)90010-X

- Molholm S., Ritter W., Murray M. M., Javitt D. C., Schroeder C. E., Foxe J. J. (2002). Multisensory auditory–visual interactions during early sensory processing in humans: a high-density electrical mapping study. Brain Res. Cogn. Brain Res. 14, 115–128 10.1016/S0926-6410(02)00066-6

- Murray M. M., Brunet D., Michel C. M. (2008). Topographic ERP analyses: a step-by-step tutorial review. Brain Topogr. 20, 249–264 10.1007/s10548-008-0054-5

- Murray M. M., Foxe J. J., Higgins B. A., Javitt D. C., Schroeder C. E. (2001). Visuo-spatial neural response interactions in early cortical processing during a simple reaction time task: a high-density electrical mapping study. Neuropsychologia 39, 828–844 10.1016/S0028-3932(01)00004-5

- Murray M. M., Foxe J. J., Wylie G. R. (2005a). The brain uses single-trial multisensory memories to discriminate without awareness. Neuroimage 27, 473–478 10.1016/j.neuroimage.2005.04.016

- Murray M. M., Molholm S., Michel C. M., Heslenfeld D. J., Ritter W., Javitt D. C., Schroeder C. E., Foxe J. J. (2005b). Grabbing your ear: rapid auditory–somatosensory multisensory interactions in low-level sensory cortices are not constrained by stimulus alignment. Cereb. Cortex 15, 963–974 10.1093/cercor/bhh197

- Murray M. M., Imber M. L., Javitt D. C., Foxe J. J. (2006). Boundary completion is automatic and dissociable from shape discrimination. J. Neurosci. 26, 12043–12054 10.1523/JNEUROSCI.3225-06.2006

- Murray M. M., Michel C. M., Grave de Peralta R., Ortigue S., Brunet D., Gonzalez Andino S., Schnider A. (2004). Rapid discrimination of visual and multisensory memories revealed by electrical neuroimaging. Neuroimage 21, 125–135 10.1016/j.neuroimage.2003.09.035

- Oldfield R. C. (1971). The assessment and analysis of handedness: the Edinburgh inventory. Neuropsychologia 9, 97–113 10.1016/0028-3932(71)90067-4

- Perrin F., Pernier J., Bertrand O., Giard M. H., Echallier J. F. (1987). Mapping of scalp potentials by surface spline interpolation. Electroencephalogr. Clin. Neurophysiol. 66, 75–81 10.1016/0013-4694(87)90141-6

- Pourtois G., Delplanque S., Michel C., Vuilleumier P. (2008). Beyond conventional event-related brain potential (ERP): exploring the time-course of visual emotion processing using topographic and principal component analyses. Brain Topogr. 20, 265–277 10.1007/s10548-008-0053-6

- Raab D. H. (1962). Statistical facilitation of simple reaction times. Trans. NY Acad. Sci. 24, 574–590

- Rockland K. S., Ojima H. (2003). Multisensory convergence in calcarine visual areas in macaque monkey. Int. J. Psychophysiol. 50, 19–26 10.1016/S0167-8760(03)00121-1

- Romei V., Brodbeck V., Michel C., Amedi A., Pascual-Leone A., Thut G. (2008a). Spontaneous fluctuations in posterior alpha-band EEG activity reflect variability in excitability of human visual areas. Cereb. Cortex 18, 2010–2018 10.1093/cercor/bhm229

- Romei V., Rihs T., Brodbeck V., Thut G. (2008b). Resting electroencephalogram alpha-power over posterior sites indexes baseline visual cortex excitability. Neuroreport 19, 203–208 10.1097/WNR.0b013e3282f454c4

- Romei V., Murray M. M., Merabet L. B., Thut G. (2007). Occipital transcranial magnetic stimulation has opposing effects on visual and auditory stimulus detection: implications for multisensory interactions. J. Neurosci. 27, 11465–11472 10.1523/JNEUROSCI.2827-07.2007

- Schroeder C. E., Foxe J. J. (2002). The timing and laminar profile of converging inputs to multisensory areas of the macaque neocortex. Brain Res. Cogn. Brain Res. 14, 187–198 10.1016/S0926-6410(02)00073-3

- Schroeder C. E., Lindsley R. W., Specht C., Marcovici A., Smiley J. F., Javitt D. C. (2001). Somatosensory input to auditory association cortex in the macaque monkey. J. Neurophysiol. 85, 1322–1327

- Schroeder C. E., Smiley J., Fu K. G., McGinnis T., O'Connell M. N., Hackett T. A. (2003). Anatomical mechanisms and functional implications of multisensory convergence in early cortical processing. Int. J. Psychophysiol. 50, 5–17 10.1016/S0167-8760(03)00120-X

- Schroger E., Widmann A. (1998). Speeded responses to audiovisual signal changes result from bimodal integration. Psychophysiology 35, 755–759 10.1017/S0048577298980714

- Senkowski D., Molholm S., Gomez-Ramirez M., Foxe J. J. (2006). Oscillatory beta activity predicts response speed during a multisensory audiovisual reaction time task: a high-density electrical mapping study. Cereb. Cortex 16, 1556–1565 10.1093/cercor/bhj091

- Senkowski D., Schneider T. R., Foxe J. J., Engel A. K. (2008). Crossmodal binding through neural coherence: implications for multisensory processing. Trends Neurosci. 31, 401–409 10.1016/j.tins.2008.05.002

- Shams L., Seitz A. R. (2008). Benefits of multisensory learning. Trends Cogn. Sci. 12, 411–417 10.1016/j.tics.2008.07.006

- Smiley J. F., Hackett T. A., Ulbert I., Karmos G., Lakatos P., Javitt D. C., Schroeder C. E. (2007). Multisensory convergence in auditory cortex, I. Cortical connections of the caudal superior temporal plane in macaque monkeys. J. Comp. Neurol. 502, 894–923 10.1002/cne.21325

- Spierer L., Murray M. M., Tardif E., Clarke S. (2008). The path to success in auditory spatial discrimination: electrical neuroimaging responses within the supratemporal plane predict performance outcome. Neuroimage 41, 493–503 10.1016/j.neuroimage.2008.02.038

- Spierer L., Tardif E., Sperdin H., Murray M. M., Clarke S. (2007). Learning-induced plasticity in auditory spatial representations revealed by electrical neuroimaging. J. Neurosci. 27, 5474–5483 10.1523/JNEUROSCI.0764-07.2007

- Stein B. E., Meredith M. A. (1993). The Merging of the Senses. Cambridge, MA, MIT Press

- Stein B. E., Stanford T. R. (2008). Multisensory integration: current issues from the perspective of the single neuron. Nat. Rev. Neurosci. 9, 255–266 10.1038/nrn2331

- Tajadura-Jimenez A., Kitagawa N., Valjamae A., Zampini M., Murray M. M., Spence C. (2008). Auditory–somatosensory multisensory interactions are spatially modulated by stimulated body surface and acoustic spectra. Neuropsychologia 47, 195–203 10.1016/j.neuropsychologia.2008.07.025

- Talairach J., Tournoux P. (1988). Co-Planar Stereotaxic Atlas of the Human Brain. New York, Thieme

- Talsma D., Doty T. J., Woldorff M. G. (2007). Selective attention and audiovisual integration: is attending to both modalities a prerequisite for early integration? Cereb. Cortex 17, 679–690 10.1093/cercor/bhk016

- Talsma D., Woldorff M. G. (2005). Selective attention and multisensory integration: multiple phases of effects on the evoked brain activity. J. Cogn. Neurosci. 17, 1098–1114 10.1162/0898929054475172

- Toepel U., Knebel J. F., Hudry J., le Coutre J., Murray M. M. (2009). The brain tracks the energetic value in food images. Neuroimage 44, 967–974 10.1016/j.neuroimage.2008.10.005

- Wallace M. T., Ramachandran R., Stein B. E. (2004). A revised view of sensory cortical parcellation. Proc. Natl. Acad. Sci. USA 101, 2167–2172 10.1073/pnas.0305697101

- Wang Y., Celebrini S., Trotter Y., Barone P. (2008). Visuo-auditory interactions in the primary visual cortex of the behaving monkey: electrophysiological evidence. BMC Neurosci. 9, 79. 10.1186/1471-2202-9-79

- Welch R. B., Warren D. H. (1980). Immediate perceptual response to intersensory discrepancy. Psychol. Bull. 88, 638–667 10.1037/0033-2909.88.3.638

- Zampini M., Torresan D., Spence C., Murray M. M. (2007). Auditory–somatosensory multisensory interactions in front and rear space. Neuropsychologia 45, 1869–1877 10.1016/j.neuropsychologia.2006.12.004