Abstract

Most studies investigating speeded orientation towards threat have used manual responses. Measuring orienting behaviour with eye movements provides a more direct and ecologically valid measure of attention. Here, we used a forced-choice saccadic and manual localization task to investigate the speed of discrimination for fearful and neutral body and face images. Fearful/neutral body or face pairs were bilaterally presented for either 20 or 500 ms. Saccadic orienting was faster towards fearful than towards neutral bodies and faces, but only at the shortest presentation time (20 ms). For manual responses, faster discrimination of fearful bodies and faces was observed only at the longest duration (500 ms). More errors were made when localizing neutral targets, suggesting that fearful bodies and faces may have captured attention automatically. The results were not attributable to low-level image properties, as no threat bias, in terms of reaction time or accuracy, was observed for inverted presentation. Taken together, the results suggest faster localization of threat conveyed both by the face and by the body within the oculomotor system. In addition, enhanced detection of fearful body postures suggests that we can readily recognize threat-related information conveyed by body postures in the absence of any face cues.

Keywords: saccades, emotion, facial expressions, body postures, attention

1. Introduction

As responding rapidly to danger is crucial for survival in threatening situations, it has been argued that threat-related information is processed in a highly efficient manner. This is characterized by reflexive orienting of attention towards threat and the prioritization of threat over other stimuli (Öhman & Mineka 2001). Behaviourally, visual search, dot-probe and attentional blink tasks have shown that threat-related stimuli capture attention. For instance, participants are faster to detect fearful or angry faces among distracters than neutral or happy faces (Fox et al. 2000; Öhman et al. 2001; Lundqvist & Öhman 2005), faster to respond to probes that replace threatening faces (Bradley et al. 1998, 2000; Mogg & Bradley 1999) and more likely to perceive threat-related faces (Fox et al. 2005; Milders et al. 2006; Maratos et al. 2008). Neuropsychological studies suggest that this attentional modulation is associated with increased activation of limbic structures, including the amygdala, and of the visual cortices (LeDoux 1996), even when threat is presented very briefly (Morris et al. 1998). Moreover, electrophysiological examination has shown that viewing briefly presented threat-related stimuli, compared with neutral stimuli, produces an enhanced N2pc component, but only in individuals reporting high levels of trait anxiety (Fox et al. 2008).

Attention in visual search and dot-probe tasks is typically measured using manual reaction times. This measure is quite limited in that orienting behaviour can only be inferred indirectly, as responses are made via key presses. By contrast, when encountering danger in the environment, the first natural response is to move one's eyes towards the location of the threat rather than to point one's hand towards it. Therefore, recent studies have begun to measure eye movements, because they provide a more biologically relevant measure in which the orienting of visual attention is assessed directly. Studies of oculomotor capture (Theeuwes et al. 1998) suggest that salient events can ‘capture’ eye movements even when they are task-irrelevant. As threatening events may be highly salient, researchers have investigated whether threat captures eye movements more than neutral events do. These studies have shown that threat-related stimuli are more likely to be fixated earlier and gazed at longer than neutral stimuli (Hermans et al. 1999; Rohner 2002; Calvo & Lang 2004; Miltner et al. 2004; Nummenmaa et al. 2006).

Further illustrating the value of eye movements, Hunt et al. (2007b) examined the time course of eye movements and manual localization responses towards targets in the presence of distracters. They showed that, for both eye movements and manual responses, the proportion of trials on which responses were misdirected towards the distracter reflected the quality of information about the visual display at a given time. They hypothesized that the quality of visual information changes over time and that different response systems access this information at different moments. Eye movements tend to be initiated early, while information about the target is still being acquired. Manual responses, however, tend to be initiated once there is sufficient information about the target location for the response to be reasonably accurate. As a result, eye movements are initiated considerably faster, but also less accurately, than manual responses. For example, visual categorization tasks using meaningful stimuli, such as animal scenes, have revealed that eye movements can be initiated in as little as 120 ms (Kirchner & Thorpe 2006), compared with average manual response times of approximately 450 ms (Thorpe et al. 1996). Eye movements are therefore able to sample visual information at a time period that is not available to manual responses.

Despite the benefits of measuring eye movements, few studies (Hunt et al. 2007a; Kissler & Keil 2008) have investigated whether threat-related stimuli can influence parameters of eye movements, such as saccadic reaction times. In a previous study (Bannerman et al. in press), we showed that faster discrimination of fearful/neutral face stimuli can be carried out within the oculomotor system. Moreover, saccadic biases towards threat emerged at very brief (20 ms) stimulus durations, whereas manual threat-related bias emerged only at longer (500 ms) stimulus durations, consistent with the proposal that the eyes and hands sample visual information at different time periods (Hunt et al. 2007b).

While many important insights have been obtained from the study of facial expressions, emotion is conveyed not only by the face but also by the whole body. For instance, when people are afraid, they may not only show fear in their face but also run away from the potential threat. This has led to research into the perception of emotional body language, that is, emotion expressed by the whole body through coordinated movements and meaningful actions (de Gelder 2006). Research shows that emotional body stimuli can be easily recognized even when no verbal labels are provided (Van den Stock et al. 2007).

Moreover, similarities in the way we process faces and bodies have been documented. Both faces and bodies are processed configurationally, as indicated by the inversion effect (see Tanaka & Farah (1993) for the facial inversion effect; see Reed et al. (2003) and Stekelenburg & de Gelder (2004) for the body inversion effect). In addition to behavioural evidence, single-cell recordings in monkeys have revealed specialization for either faces (Perrett et al. 1992) or neutral body images (Gallese et al. 1996) in the superior temporal sulcus. Also, fMRI results (Hadjikhani & de Gelder 2003) have shown that viewing fearful bodily expressions activates two well-established face areas (inferior occipital gyrus and middle fusiform gyrus). Given these similarities in how faces and bodies are processed, and given that a threat-related bias has been documented extensively for faces, preferential processing of fearful compared with neutral body images may also exist. Such a bias would be adaptive in environments where the proximity needed to perceive threat conveyed by facial expressions is a luxury that a threat appraisal system cannot rely upon. When we see an emotional body expression, however, we can readily identify the specific action associated with a particular emotion, leaving little need for the interpretation of the signal that facial expressions require (de Gelder 2006). Rapidly recognizing such actions would enable us to respond quickly and could therefore be crucial to survival.

In summary, most previous investigations of preferential attention towards threat have used threat-related facial expressions, while research on threat biases towards emotional body postures lags considerably behind. Therefore, in the present study, we extend the examination of such attentional effects by investigating saccadic and manual responses towards threatening and neutral body postures and facial expressions. Facilitated processing of threat-related body postures would hold adaptive benefits, especially when the facial expression of the person being observed is not visible. Moreover, with eye movements, initial orienting behaviour towards threat is measured directly, and threat information may be sampled at a time period that is not available to manual responses.

2. Material and methods

(a) Participants

Ten participants (seven females, three males; mean age=24.5 years; range 22–27) took part. All had normal visual acuity and normal state (M=35.5; s.d.=8) and trait (M=27.8; s.d.=6) anxiety levels as measured by the State-Trait Anxiety Inventory (STAI; Spielberger et al. 1983). The experiment was approved by the University of Aberdeen ethics committee and performed in accordance with the ethical standards laid down in the 1964 Declaration of Helsinki. All participants gave informed consent.

(b) Materials

The stimuli consisted of body images of 10 individuals (with the face blurred), 5 male and 5 female, taken from a standard set of body expression pictures (see Van den Stock et al. 2007) and face pictures of 10 individuals, 5 male and 5 female, taken from a standard set of facial expression pictures (Ekman & Friesen 1976). In the body pictures, each individual performed meaningful actions that expressed fear or were emotionally neutral (e.g. combing their hair, speaking on the phone, pouring juice into a glass). These neutral body actions provide a suitable control because, like emotional body movements, they contain the illustration of biological movements, have semantic properties and are familiar (de Gelder et al. 2004). Similarly, in the face pictures each individual showed two expressions, fearful and neutral. Fearful expressions were chosen as threat-related stimuli because, unlike angry expressions, which represent a direct threat, the relationship between fearful faces and threat is more ambiguous in that fearful faces can signal the presence of danger, but not its source. Such ambiguity may result in a threat/vigilance system favouring fearful faces, which require additional information to be understood (Whalen et al. 1998).

Using these pictures, a series of body and face image pairs was generated. Each pair consisted of two pictures of the same individual: in one picture, the individual portrayed a fearful body or facial expression, and in the other a neutral body or facial expression. The positions of the fearful and neutral bodies and faces were counterbalanced. In half of the body and face pairs the stimuli were upright, and in the other half they were inverted. Inverted versions were created by rotating the image through 180°. Inverted stimuli were presented because it has been suggested that certain features of a threat-related stimulus are more salient (e.g. wide eye whites in fearful faces) and that these features, not valence, are responsible for the attentional effects. Inversion makes it possible to distinguish meaning from features: it disrupts face (Tanaka & Farah 1993) and body (Reed et al. 2003; Stekelenburg & de Gelder 2004) processing and the recognition of facial emotion (Searcy & Bartlett 1996; de Gelder et al. 1997), while maintaining feature differences. If threat-bias effects are reduced by inversion, negative valence, rather than low-level features, is likely to be crucial. As a manipulation check, all 10 participants rated both the upright and inverted body and face stimuli for emotional intensity at the end of the experiment. Ratings showed that inverting the stimuli significantly reduced the expressed intensity for both body (p<0.05) and face (p<0.01) images.
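
The logic of the inversion control can be illustrated with a minimal sketch, assuming each picture is stored as a 2-D greyscale NumPy array (an assumption; the stimulus software is not described beyond the rotation). Rotating through 180° moves every pixel without changing its value, so mean luminance and the grey-level histogram are identical, while the spatial configuration (e.g. eyes above mouth) is reversed. The function name `invert` and the random test image are hypothetical.

```python
import numpy as np

def invert(image: np.ndarray) -> np.ndarray:
    """Picture-plane inversion: rotate a 2-D greyscale image through 180 degrees."""
    return np.rot90(image, k=2)  # two 90-degree rotations = one 180-degree rotation

# Hypothetical 4 x 3 greyscale image (0-255) standing in for a body or face picture.
rng = np.random.default_rng(seed=0)
image = rng.integers(0, 256, size=(4, 3)).astype(float)
inverted = invert(image)

# Every pixel value survives, so low-level statistics are unchanged;
# only the spatial arrangement of the pixels is reversed.
print(np.isclose(image.mean(), inverted.mean()))
print(np.array_equal(np.sort(image, axis=None), np.sort(inverted, axis=None)))
print(np.array_equal(inverted, image[::-1, ::-1]))
```

Each check prints `True`, which is the sense in which inversion "maintains feature differences" while disrupting configural processing.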

Stimuli were presented centrally on a 21″ CRT monitor with 100 Hz refresh rate using a SVGA graphics card (Cambridge Research Systems, UK) in a dimly lit room (10 lux). All body and face pairs were in greyscale and were presented against a uniform white background (80 cd m−2). Images portraying different emotions did not vary significantly in mean luminance. Each body of the pair subtended on average 6.8×17.0°. Each face of the pair subtended on average 7.5×11.2°. Body and face pairs were presented to the left and right of a fixation cross, centred at 9.2° eccentricity at a viewing distance of 37 cm. A forehead-and-chin rest stabilized head position.
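
The display geometry and timing above can be checked with standard trigonometry and frame arithmetic. The sketch below is our own illustration, not the authors' code: it converts visual angles to centimetres at the 37 cm viewing distance and converts the 20 and 500 ms durations into whole frames of the 100 Hz display. The full-extent formula 2·d·tan(θ/2) strictly applies to an object centred on the line of sight, so for the eccentric images it is only an approximation; all function names are hypothetical.

```python
import math

VIEWING_DISTANCE_CM = 37.0  # viewing distance from the Methods
REFRESH_HZ = 100.0          # CRT refresh rate from the Methods

def extent_cm(angle_deg: float, distance_cm: float = VIEWING_DISTANCE_CM) -> float:
    """On-screen size (cm) of an object centred on the line of sight subtending angle_deg."""
    return 2.0 * distance_cm * math.tan(math.radians(angle_deg) / 2.0)

def offset_cm(eccentricity_deg: float, distance_cm: float = VIEWING_DISTANCE_CM) -> float:
    """Horizontal distance (cm) from fixation of a point at the given eccentricity."""
    return distance_cm * math.tan(math.radians(eccentricity_deg))

def duration_frames(duration_ms: float, refresh_hz: float = REFRESH_HZ) -> int:
    """Number of refresh frames needed for a duration; it must be a whole number of frames."""
    frame_ms = 1000.0 / refresh_hz
    frames = duration_ms / frame_ms
    if not math.isclose(frames, round(frames)):
        raise ValueError(f"{duration_ms} ms is not a whole number of {frame_ms} ms frames")
    return round(frames)

for ms in (20, 500):
    print(ms, "ms =", duration_frames(ms), "frames")
print(f"9.2 deg eccentricity is about {offset_cm(9.2):.1f} cm from fixation")
print(f"a 17.0 deg body height is about {extent_cm(17.0):.1f} cm on screen")
```

At 100 Hz each frame lasts 10 ms, so the 20 ms condition corresponds to two frames and the 500 ms condition to 50 frames.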

(c) Procedure

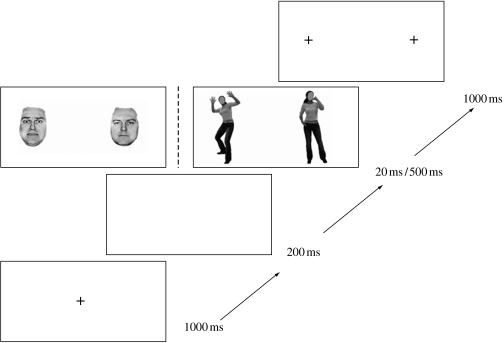

For the saccade mode, a fixation point appeared in the centre of the screen for 1000 ms, followed by a 200 ms gap period (blank screen) before stimulus presentation; such a gap is thought to speed up saccade initiation (Saslow 1967; Fischer & Weber 1993). Stimulus pairs (fearful body + neutral body or fearful face + neutral face) were then presented for 20 ms on half of the trials and for 500 ms on the other half. Participants were instructed to make a saccade, as fast as possible, to the side where the fearful body (fearful body target condition), fearful face (fearful face target condition), neutral body (neutral body target condition) or neutral face (neutral face target condition) appeared. Two fixation points, presented for 1000 ms, indicated the landing positions for the eye movements (figure 1). In the saccade mode, each participant performed 960 trials in total. The 20 and 500 ms presentation-time trials were blocked. For each presentation time, the 480 trials were divided into 16 blocks of 30 trials, with four blocks per target condition (fearful body, neutral body, fearful face and neutral face). Upright and inverted trials were randomly interleaved within blocks. Block order was counterbalanced between participants.
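
The block structure described above (2 presentation times × 16 blocks × 30 trials = 960 trials per response mode) can be sketched as follows. This is a hypothetical reconstruction, not the authors' experiment script: within each block it assumes that orientation (15 upright, 15 inverted) and the side of the fearful image (15 left, 15 right) are each balanced, since 30 trials cannot fully cross both factors, and it uses a seeded random block order as a stand-in for the counterbalancing across participants.

```python
import random
from dataclasses import dataclass

# Design parameters taken from the Methods.
TARGETS = ("fearful body", "neutral body", "fearful face", "neutral face")
DURATIONS_MS = (20, 500)
BLOCKS_PER_TARGET = 4
TRIALS_PER_BLOCK = 30

@dataclass(frozen=True)
class Trial:
    duration_ms: int
    target: str         # expression the participant must localize
    upright: bool       # False = pair rotated through 180 degrees
    fear_on_left: bool  # side of the fearful member of the pair

def make_block(duration_ms, target, rng):
    """One 30-trial block for a single target condition (hypothetical balancing scheme)."""
    trials = [Trial(duration_ms, target,
                    upright=(i % 2 == 0),
                    fear_on_left=(i < TRIALS_PER_BLOCK // 2))
              for i in range(TRIALS_PER_BLOCK)]
    rng.shuffle(trials)  # upright and inverted trials randomly interleaved
    return trials

def make_session(seed):
    """All trials for one response mode, blocked by presentation time."""
    rng = random.Random(seed)
    session = {}
    for duration in DURATIONS_MS:
        blocks = [make_block(duration, target, rng)
                  for target in TARGETS for _ in range(BLOCKS_PER_TARGET)]
        rng.shuffle(blocks)  # assumption: random block order within a presentation time
        session[duration] = blocks
    return session

session = make_session(seed=1)
print(sum(len(block) for blocks in session.values() for block in blocks))  # 960
```

The same schedule would be generated a second time for the manual mode, whose protocol was identical.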

Figure 1.

Schematic representation of presentation sequence. After a 1000 ms fixation episode, a time gap (blank screen) for 200 ms preceded the simultaneous presentation of two stimuli (one fearful and one neutral) in the left and right visual fields for 20 or 500 ms. This was followed by the appearance of two fixation points for 1000 ms.

The stimuli and experimental protocol for the manual mode were exactly the same as those for the saccade mode, except that participants indicated the position of the target by pressing the left or right button of a response box. The order of the response modes (saccade and manual) was counterbalanced between participants. Prior to the experiment, participants viewed the body and face pictures one by one and labelled the expression on each. Recognition was near ceiling (99.8% correct for bodies and 100% correct for faces), and participants then completed 40 practice trials: 20 manual localization trials (10 fearful and 10 neutral targets) and 20 saccadic localization trials (10 fearful and 10 neutral targets).

(d) Response recording

Eye movements were monitored and recorded using horizontal EOG electrodes (1 kHz, low pass at 90 Hz, notch at 50 Hz; AcqKnowledge v. 3.59: Biopac Systems). Only saccades on correct trials that exceeded an amplitude threshold of 30 μV (saccade detection criterion) were analysed. Saccadic reaction time was defined as the interval between the onset of the images (time 0) and the start of the saccade. Saccade onset was taken as the point of a 10 per cent change in amplitude between steady fixation and movement. Trials with saccadic latencies below 80 ms were discarded because such saccades may be anticipatory and result in chance performance (Kalesnykas & Hallett 1987). In both the saccade and manual modes, latencies more than 3 s.d. above the mean were also discarded.
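
The detection and exclusion rules can be made concrete with a simplified sketch. It is not the AcqKnowledge procedure the authors used; it assumes that each trial's EOG trace (in μV, sampled at 1 kHz) starts at image onset, that the first and last 50 ms are steady fixation before and after a single saccade, and that the 3 s.d. cut-off is computed after removing anticipatory saccades, within whatever set of trials (e.g. one participant and condition) is passed in. The window length and function names are hypothetical.

```python
import numpy as np

MIN_AMPLITUDE_UV = 30.0  # saccade detection criterion from the Methods
ONSET_FRACTION = 0.10    # onset = 10% change between fixation and movement
MIN_LATENCY_MS = 80.0    # faster saccades treated as anticipatory
SD_CUTOFF = 3.0          # discard latencies more than 3 s.d. above the mean

def saccade_latency_ms(eog_uv, fs_hz=1000.0, window_ms=50.0):
    """Latency (ms) of a single saccade in one horizontal EOG trace, or None."""
    eog = np.asarray(eog_uv, dtype=float)
    n = int(round(window_ms * fs_hz / 1000.0))
    start_level = eog[:n].mean()   # steady fixation before the saccade (assumed)
    end_level = eog[-n:].mean()    # fixation after the saccade (assumed)
    amplitude = end_level - start_level
    if abs(amplitude) <= MIN_AMPLITUDE_UV:
        return None  # below the detection criterion
    threshold = start_level + ONSET_FRACTION * amplitude
    # Multiplying by the sign handles leftward (negative) and rightward deflections.
    crossed = np.flatnonzero((eog - threshold) * np.sign(amplitude) >= 0)
    if crossed.size == 0:
        return None
    return crossed[0] * 1000.0 / fs_hz

def clean_latencies(latencies_ms):
    """Drop anticipatory saccades, then latencies above mean + 3 s.d."""
    lat = np.asarray(latencies_ms, dtype=float)
    lat = lat[lat >= MIN_LATENCY_MS]
    cutoff = lat.mean() + SD_CUTOFF * lat.std(ddof=1)
    return lat[lat <= cutoff]

# Synthetic trace: fixation, a 20 ms ramp of 100 uV starting at 150 ms, then fixation.
trace = np.concatenate([np.zeros(150), np.linspace(0, 100, 21), np.full(300, 100.0)])
print(saccade_latency_ms(trace))
```

Real EOG traces are noisy and drift, so a practical pipeline would smooth the signal and locate fixation periods more carefully than these fixed windows do.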

3. Results

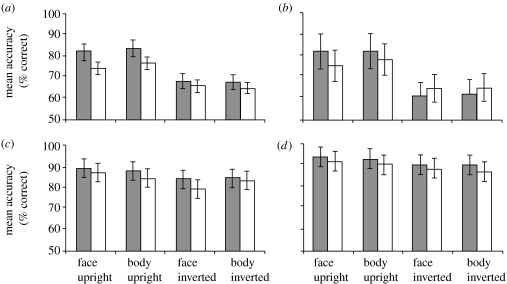

Mean accuracy levels (percentage of correct responses) for the whole sample are displayed in figure 2a,b for the saccade mode and figure 2c,d for the manual mode. Comparison of these accuracy levels by means of a 2 (mode: saccadic versus manual response) ×2 (duration: 20 versus 500 ms) ×2 (stimulus type: body versus face) ×2 (orientation: upright versus inverted) ×2 (target type: fearful versus neutral) analysis of variance (ANOVA) revealed significant main effects of mode (F(1,9)=33.76, MSE=2, p<0.001, ηp²=0.79), orientation (F(1,9)=25.45, MSE=0.64, p<0.01, ηp²=0.74) and target type (F(1,9)=10.10, MSE=0.07, p<0.05, ηp²=0.53). Overall, participants were more accurate in the manual than in the saccade mode, when the target was presented upright rather than inverted, and when the target was fearful rather than neutral. There was a significant mode×orientation interaction (F(1,9)=15.34, MSE=0.22, p<0.01, ηp²=0.63): participants were more accurate in localizing upright than inverted targets in the saccade mode (p<0.01), but showed no difference between upright and inverted accuracy when responding manually (p>0.1). No other main effects or interactions were significant (all p>0.05). In particular, there were no significant main effects of, or interactions involving, stimulus type (body versus face), implying that body and face stimuli produced similar patterns of results.
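
Because F = (SS_effect/df_effect)/(SS_error/df_error), partial eta squared, SS_effect/(SS_effect + SS_error), can be recovered from each reported F ratio as F·df_effect/(F·df_effect + df_error). The short check below is our own consistency test, not part of the original analysis; it applies the identity to the accuracy ANOVA with df = (1, 9), and each recomputed value rounds to the reported one.

```python
def partial_eta_squared(f: float, df_effect: int, df_error: int) -> float:
    """Partial eta squared recovered from an F ratio and its degrees of freedom."""
    return (f * df_effect) / (f * df_effect + df_error)

# F values and reported partial eta squared from the accuracy ANOVA, df = (1, 9).
REPORTED = {
    "mode": (33.76, 0.79),
    "orientation": (25.45, 0.74),
    "target type": (10.10, 0.53),
    "mode x orientation": (15.34, 0.63),
}

for effect, (f, eta) in REPORTED.items():
    recomputed = partial_eta_squared(f, df_effect=1, df_error=9)
    print(f"{effect}: recomputed {recomputed:.2f}, reported {eta:.2f}")
```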

Figure 2.

Mean accuracy levels (percentage of correct responses) in the saccadic ((a) 20 ms and (b) 500 ms) and manual ((c) 20 ms and (d) 500 ms) modes (grey bars, fear target; white bars, neutral target). Error bars represent standard errors of the mean (s.e.m.).

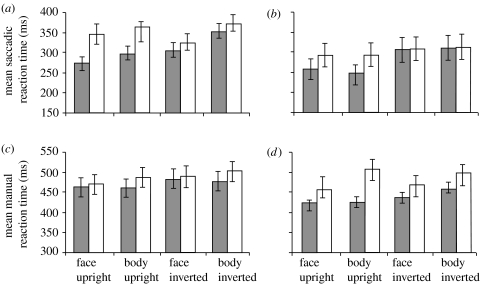

Mean reaction times (RTs) for the whole sample are displayed in figure 3a,b for the saccade mode and figure 3c,d for the manual mode. Mean correct RTs were analysed by means of a 2 (mode: saccadic versus manual response) ×2 (duration: 20 versus 500 ms) ×2 (stimulus type: body versus face) ×2 (orientation: upright versus inverted) ×2 (target type: fearful versus neutral) ANOVA. There were main effects of mode (F(1,9)=99.20, MSE=2 039 688, p<0.001, ηp²=0.92), duration (F(1,9)=7.67, MSE=65 380, p<0.05, ηp²=0.46), orientation (F(1,9)=16.77, MSE=42 136, p<0.01, ηp²=0.65) and target type (F(1,9)=36.14, MSE=79 758, p<0.001, ηp²=0.80): overall, participants were faster in the saccade mode than in the manual mode, at 500 ms than at 20 ms, when the target was presented upright rather than inverted, and when the target was fearful rather than neutral. There was a significant three-way mode×duration×target type interaction (F(1,9)=14.57, MSE=12 726, p<0.01, ηp²=0.62). As is clear from figure 3, and confirmed by pairwise Bonferroni comparisons, this was due to different patterns of responding to the target at the two durations (20 and 500 ms) in the saccade and manual response modes. In the saccade mode, saccades towards fearful faces and bodies were initiated faster than those towards their neutral counterparts at 20 ms (p<0.05 for faces; p<0.01 for bodies) but not at 500 ms (p=0.503 for faces; p=0.210 for bodies). The opposite pattern was observed in the manual mode: fearful faces and bodies were localized faster than neutral faces and bodies at 500 ms (p<0.05 for both faces and bodies) but not at 20 ms (p=1.000 for faces; p=0.598 for bodies).
Finally, demonstrating the effect of inversion and ruling out low-level image differences as a plausible explanation for faster fearful localization, there was a significant orientation×target type interaction (F(1,9)=20.42, MSE=14 526, p<0.01, ηp²=0.69), which was subsumed under a significant three-way mode×orientation×target type interaction (F(1,9)=9.80, MSE=5314, p<0.05, ηp²=0.52). As illustrated by figure 3, and confirmed by pairwise Bonferroni comparisons, the three-way interaction reflected the fact that saccadic and manual RTs towards fearful faces and bodies were faster than those towards their neutral counterparts when presented upright, but not when inverted, in either the saccade (p>0.3) or the manual (p>0.1) mode. Moreover, saccadic RTs were faster in the upright than in the inverted conditions, whereas this pattern was not observed for manual RTs. No other main effects or interactions reached significance (all p>0.05). Importantly, there were no significant main effects of, or interactions involving, stimulus type (body versus face), suggesting that body and face stimuli produced similar patterns of results.
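
The pairwise comparisons above used the Bonferroni correction, in which each p-value is multiplied by the number of comparisons in the family and capped at 1; the cap is why an adjusted value can be reported as p=1.000. The sketch below shows the standard adjustment only. The size of the comparison family used in the paper is not stated, and the raw p-values in the example are hypothetical.

```python
def bonferroni(p_values):
    """Bonferroni adjustment: multiply each p-value by the number of comparisons, cap at 1."""
    m = len(p_values)
    return [min(1.0, p * m) for p in p_values]

# Hypothetical uncorrected p-values for a family of four pairwise comparisons.
raw = [0.004, 0.03, 0.2, 0.6]
for p, p_adj in zip(raw, bonferroni(raw)):
    print(f"raw p = {p:.3f} -> adjusted p = {p_adj:.3f}")
```

Comparing an adjusted p-value with 0.05 is equivalent to comparing the raw p-value with 0.05/m, which keeps the family-wise error rate at or below 0.05.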

Figure 3.

Mean saccadic ((a) 20 ms and (b) 500 ms) and manual ((c) 20 ms and (d) 500 ms) reaction times (grey bars, fear target; white bars, neutral target). Error bars represent s.e.m.

In summary, the results showed that bodies and faces were processed in a similar fashion. Participants were more accurate when responding manually, when targets were presented upright and when targets were fearful. This was consistent for both bodies and faces. Regarding reaction time, saccadic responses towards fearful bodies and faces were faster than those towards neutral ones, but only at the shortest presentation time (20 ms). By contrast, manual responses towards fearful bodies and faces were initiated faster than those towards neutral ones, but only at the longest presentation time (500 ms). No fearful advantage was observed for inverted presentation.

4. Discussion

The present study extended the examination of threat-related attentional effects beyond facial expressions by investigating whether threat-related body postures would be discriminated faster than neutral ones, using saccadic and manual reaction times. Enhanced detection of fearful body postures would suggest that fear conveyed through body language can act as a salient signal of imminent danger. The results showed faster saccadic discrimination of both fearful body and face stimuli at the shortest presentation time (20 ms), and faster manual discrimination of fearful bodies and faces at the longer duration (500 ms). This is consistent with the proposal that eye movements and manual responses sample visual information at different time periods (Hunt et al. 2007b). Moreover, accuracy was lower when localizing neutral bodies or faces than when localizing fearful bodies or faces. This suggests that fearful stimuli captured attention even when participants were instructed to localize a neutral target. These results extend our initial findings on faster saccadic and manual localization of emotional facial expressions (Bannerman et al. in press) by showing that exactly the same pattern of responses applies to fearful faces and fearful body postures, thereby suggesting that fear expressed in faces and bodies is processed in a similar fashion. To rule out the possibility that low-level image differences between fear and neutral stimuli were responsible for the observed effect, we inverted the stimuli, a procedure that interferes with face (Tanaka & Farah 1993) and body (Reed et al. 2003; Stekelenburg & de Gelder 2004) processing and the recognition of facial emotion (Searcy & Bartlett 1996; de Gelder et al. 1997), but maintains feature differences.
No significant differences between fear and neutral, in terms of reaction time or accuracy, were observed for any of the inverted stimulus conditions, suggesting that emotional valence, rather than features, is the critical factor in influencing the speed of saccadic and manual localization.

Taken together, our results suggest that threat-related body postures, like threat-related facial expressions, may capture attention more effectively than their neutral counterparts, resulting in faster localization. Until recently, it was thought that saccadic eye movements were influenced only by low-level characteristics of a scene (Parkhurst & Niebur 2003). However, there is now increasing evidence suggesting that overt attention is also rapidly allocated towards emotionally meaningful stimuli, such as fearful facial expressions (Hunt et al. 2007a; Kissler & Keil 2008; Bannerman et al. in press) and now fearful body postures. This may imply that the processing of threat-related information is dependent on extracting expressive signals not just from the face but from the body as well.

The remarkable similarity in the pattern of results obtained with body and face stimuli may suggest that the perception of faces and bodies is subserved by the same neural mechanisms, with a particularly rapid mechanism attuned to the perceptual processing of fear signals. This idea is corroborated by electrophysiological evidence showing that viewing fearful compared with neutral bodily expressions produces an early emotion effect on the P1 peak latency, approximately 112 ms after stimulus onset (van Heijnsbergen et al. 2007), comparable to that reported for fearful faces (Batty & Taylor 2003; Eger et al. 2003; Pourtois et al. 2005; Righart & de Gelder 2006). Moreover, functional brain imaging studies have shown that the fusiform cortex and the amygdala are central to the processing of fearful body expressions (Hadjikhani & de Gelder 2003; de Gelder et al. 2004, 2006), as well as of fearful facial expressions (Morris et al. 1998; Dolan et al. 2001; Rotshtein et al. 2001). Neuropsychological findings in a patient with bilateral amygdala damage, who showed an equivalent deficit in recognizing fear from facial expressions and body postures (Sprengelmeyer et al. 1999), further support the proposal of similar underlying neural systems for faces and bodies.

In summary, from an evolutionary perspective, being able to rapidly detect fear conveyed through body postures has many important adaptive benefits, especially when the facial expression of the person being observed is not visible (e.g. owing to the viewer's perspective). Here, we provided evidence that, like threat-related faces, threat-related body postures are localized more rapidly than their neutral counterparts, using both saccadic and manual reaction times. This suggests that we can readily detect threat-related information conveyed through body postures in the absence of any face cues.

Acknowledgments

We would like to thank Mariska Kret for constructing the body stimuli, James Urquhart for providing technical support and Dr Ben Jones for comments on an earlier version of the manuscript. R.L.B. is supported by postgraduate scholarships from Aberdeen Endowments Trust and Robert Nicol Trust.

References

- Bannerman R.L., Milders M., Sahraie A. Processing emotion: comparison of saccadic and manual choice-reaction times. Cogn. Emot. In press. doi:10.1080/02699930802243303

- Batty M., Taylor M.J. Early processing of the six basic facial emotional expressions. Brain Res. Cogn. Brain Res. 2003;17:613–620. doi:10.1016/S0926-6410(03)00174-5

- Bradley B.P., Mogg K., Falla S., Hamilton L.R. Attentional bias for threatening facial expressions in anxiety: manipulations of stimulus duration. Cogn. Emot. 1998;12:737–753. doi:10.1080/026999398379411

- Bradley B.P., Mogg K., Millar N.H. Covert and overt orientation of attention to emotional faces in anxiety. Cogn. Emot. 2000;14:789–808. doi:10.1080/02699930050156636

- Calvo M.G., Lang P.J. Gaze patterns when looking at emotional pictures: motivationally biased attention. Motiv. Emot. 2004;28:221–243. doi:10.1023/B:MOEM.0000040153.26156.ed

- de Gelder B. Towards the neurobiology of emotional body language. Nat. Rev. Neurosci. 2006;7:242–249. doi:10.1038/nrn1872

- de Gelder B., Teunisse J.P., Benson P.J. Categorical perception of facial expressions: categories and their internal structure. Cogn. Emot. 1997;11:1–23. doi:10.1080/026999397380005

- de Gelder B., Snyder J., Greve D., Gerard G., Hadjikhani N. Fear fosters flight: a mechanism for fear contagion when perceiving emotion expressed by a whole body. Proc. Natl Acad. Sci. USA. 2004;101:16 701–16 706. doi:10.1073/pnas.0407042101

- de Gelder B., Meeren H.K., Righart R., van den Stock J., van de Riet W.A., Tamietto M. Beyond the face: exploring rapid influences of context on face processing. Prog. Brain Res. 2006;155:37–48. doi:10.1016/S0079-6123(06)55003-4

- Dolan R.J., Morris J.S., de Gelder B. Crossmodal binding of fear in voice and face. Proc. Natl Acad. Sci. USA. 2001;98:10 006–10 010. doi:10.1073/pnas.171288598

- Eger E., Jedynak A., Iwaki T., Skrandies W. Rapid extraction of emotional expression: evidence from evoked potential fields during brief presentation of face stimuli. Neuropsychologia. 2003;41:808–817. doi:10.1016/S0028-3932(02)00287-7

- Ekman P., Friesen W. Pictures of facial affect. Palo Alto, CA: Consulting Psychologists Press; 1976.

- Fischer B., Weber H. Express saccades and visual attention. Behav. Brain Sci. 1993;16:533–610.

- Fox E., Lester V., Russo R., Bowles R.J., Pichler A., Dutton K. Facial expressions of emotion: are angry faces detected more efficiently? Cogn. Emot. 2000;14:61–92. doi:10.1080/026999300378996

- Fox E., Russo R., Georgiou G. Anxiety modulates the degree of attentive resources required to process emotional faces. Cogn. Affect. Behav. Neurosci. 2005;5:396–404. doi:10.3758/CABN.5.4.396

- Fox E., Derakshan N., Shoker L. Trait anxiety modulates the electrophysiological indices of rapid spatial orienting towards angry faces. Neuroreport. 2008;19:259–263. doi:10.1097/WNR.0b013e3282f53d2a

- Gallese V., Fadiga L., Fogassi L., Rizzolatti G. Action recognition in the premotor cortex. Brain. 1996;119:593–609. doi:10.1093/brain/119.2.593

- Hadjikhani N., de Gelder B. Seeing fearful body expressions activates the fusiform cortex and amygdala. Curr. Biol. 2003;13:2201–2205. doi:10.1016/j.cub.2003.11.049

- Hermans D., Vansteenwegen D., Eelen P. Eye movement registration as a continuous index of attention deployment: data from a group of spider anxious students. Cogn. Emot. 1999;13:419–434. doi:10.1080/026999399379249

- Hunt A.R., Cooper R., Hungr C., Kingstone A. The effect of emotional faces on eye movements and attention. Vis. Cogn. 2007a;15:513–531. doi:10.1080/13506280600843346

- Hunt A.R., von Mühlenen A., Kingstone A. The time course of attentional and oculomotor capture reveals a common cause. J. Exp. Psychol. Hum. Percept. Perform. 2007b;33:271–284. doi:10.1037/0096-1523.33.2.271

- Kalesnykas R.P., Hallett P.E. The differentiation of visually guided and anticipatory saccades in gap and overlap paradigms. Exp. Brain Res. 1987;68:115–121. doi:10.1007/BF00255238

- Kirchner H., Thorpe S.J. Ultra-rapid object detection with saccadic eye movements: visual processing speed revisited. Vis. Res. 2006;46:1762–1776. doi:10.1016/j.visres.2005.10.002

- Kissler J., Keil A. Look-don't look! How emotional pictures affect pro- and anti-saccades. Exp. Brain Res. 2008;188:215–222. doi:10.1007/s00221-008-1358-0

- LeDoux J.E. The emotional brain: the mysterious underpinnings of emotional life. Simon & Schuster; New York, NY: 1996.

- Lundqvist D., Öhman A. Emotion regulates attention: the relation between facial configurations, facial emotion and visual attention. Vis. Cogn. 2005;12:51–84. doi:10.1080/13506280444000085

- Maratos F.A., Mogg K., Bradley B.P. Identification of angry faces in the attentional blink. Cogn. Emot. 2008;22:1340–1352. doi:10.1080/02699930701774218

- Milders M., Sahraie A., Logan S., Donnellon N. Awareness of faces is modulated by their emotional meaning. Emotion. 2006;6:10–17. doi:10.1037/1528-3542.6.1.10

- Miltner W.H.R., Krieschel S., Hecht H., Trippe R., Weiss T. Eye movements and behavioural responses to threatening and non-threatening stimuli during visual search in phobic and non-phobic subjects. Emotion. 2004;4:323–339. doi:10.1037/1528-3542.4.4.323

- Mogg K., Bradley B.P. Orienting of attention to threatening facial expressions presented under conditions of restricted awareness. Cogn. Emot. 1999;13:713–740. doi:10.1080/026999399379050

- Morris J.S., Öhman A., Dolan R.J. Conscious and unconscious emotional learning in the human amygdala. Nature. 1998;393:467–470. doi:10.1038/30976

- Nummenmaa L., Hyönä J., Calvo M.G. Eye movement assessment of selective attentional capture by emotional pictures. Emotion. 2006;6:257–268. doi:10.1037/1528-3542.6.2.257

- Öhman A., Mineka S. Fears, phobias, and preparedness: toward an evolved module of fear and fear learning. Psychol. Rev. 2001;108:483–522. doi:10.1037/0033-295X.108.3.483

- Öhman A., Lundqvist D., Esteves F. The face in the crowd revisited: a threat advantage with schematic stimuli. J. Pers. Soc. Psychol. 2001;80:381–396. doi:10.1037/0022-3514.80.3.381

- Parkhurst D., Niebur E. Scene content selected by active vision. Spat. Vis. 2003;16:125–154. doi:10.1163/15685680360511645

- Perrett D.I., Hietanen J.K., Oram M.W., Benson P.J., Rolls E.T. Organization and functions of cells responsive to faces in the temporal cortex [and discussion]. Phil. Trans. R. Soc. Lond. B. 1992;335:23–30. doi:10.1098/rstb.1992.0003

- Pourtois G., Dan E.S., Grandjean D., Sander D., Vuilleumier P. Enhanced extrastriate visual response to bandpass spatial frequency filtered fearful faces: time course and topographic evoked-potentials mapping. Hum. Brain Mapp. 2005;26:65–79. doi:10.1002/hbm.20130

- Reed C.L., Stone V.E., Bozova S., Tanaka J. The body inversion effect. Psychol. Sci. 2003;14:302–308. doi:10.1111/1467-9280.14431

- Righart R., de Gelder B. Context influences early perceptual analysis of faces: an electrophysiological study. Cereb. Cortex. 2006;16:1249–1257. doi:10.1093/cercor/bhj066

- Rohner J.C. The time course of visual threat processing: high trait anxious individuals eventually avert their gaze from angry faces. Cogn. Emot. 2002;16:837–844. doi:10.1080/02699930143000572

- Rotshtein P., Malach R., Hadar U., Graif M., Hendler T. Feeling or features: different sensitivity to emotion in high-order visual cortex and amygdala. Neuron. 2001;32:747–757. doi:10.1016/S0896-6273(01)00513-X

- Saslow M.G. Latency for saccadic eye movement. J. Opt. Soc. Am. 1967;57:1030–1033. doi:10.1364/JOSA.57.001030

- Searcy J.H., Bartlett J.C. Inversion and processing of component and spatial-relational information in faces. J. Exp. Psychol. Hum. Percept. Perform. 1996;22:904–915. doi:10.1037/0096-1523.22.4.904

- Spielberger C.D., Gorsuch R.L., Lushene R., Vagg P.R., Jacobs G.A. State-trait anxiety inventory. Consulting Psychologists Press; Palo Alto, CA: 1983.

- Sprengelmeyer R., Young A.W., Schroeder U., Grossenbacher P.G., Federlein J., Büttner T., Przuntek H. Knowing no fear. Proc. R. Soc. Lond. B. 1999;266:2451–2456. doi:10.1098/rspb.1999.0945

- Stekelenburg J.J., de Gelder B. The neural correlates of perceiving human bodies: an ERP study on the body-inversion effect. Neuroreport. 2004;15:777–780. doi:10.1097/00001756-200404090-00007

- Tanaka J.W., Farah M.J. Parts and wholes in face recognition. Q. J. Exp. Psychol. A. 1993;46:225–245. doi:10.1080/14640749308401045

- Theeuwes J., Kramer A.F., Hahn S., Irwin D.E. Our eyes do not always go where we want them to go: capture of the eyes by new objects. Psychol. Sci. 1998;9:379–385. doi:10.1111/1467-9280.00071

- Thorpe S.J., Fize D., Marlot C. Speed of processing in the human visual system. Nature. 1996;381:520–522. doi:10.1038/381520a0

- Van den Stock J., Righart R., de Gelder B. Body expressions influence recognition of emotions in the face and voice. Emotion. 2007;7:487–494. doi:10.1037/1528-3542.7.3.487

- van Heijnsbergen C.C.R.J., Meeren H.K.M., Grèzes J., de Gelder B. Rapid detection of fear in body expressions, an ERP study. Brain Res. 2007;1186:233–241. doi:10.1016/j.brainres.2007.09.093

- Whalen P.J., Rauch S.L., Etcoff N.L., McInerney S.C., Lee M.B., Jenike M.A. Masked presentations of emotional facial expressions modulate amygdala activity without explicit knowledge. J. Neurosci. 1998;18:411–418. doi:10.1523/JNEUROSCI.18-01-00411.1998