Abstract

Objectives

To assess whether audio taping simulated patient interactions can improve the reliability of manually documented data and result in more accurate assessments.

Methods

Over a 3-month period, 1340 simulated patient visits were made to community pharmacies. Following the encounters, data gathered by the simulated patient were relayed to a coordinator who completed a rating form. Data recorded on the forms were later compared to an audiotape of the interaction. Corrections were tallied and reasons for making them were coded.

Results

Approximately 10% of cases required corrections; each correction raised the pharmacy's total score by 1 or 2 points out of 10 (a 10%-20% change). The difference between postcorrection and precorrection scores was significant (p<0.001).

Conclusions

Audio taping simulated patient visits enhances data integrity. Most corrections were required because of the simulated patients' poor recall abilities.

Keywords: community pharmacy, nonprescription medications, simulated patients, assessment

INTRODUCTION

The use of simulated patients in research has become increasingly widespread over the past 3 decades.1 Simulated patients, commonly referred to as pseudo customers/patrons/patients, simulated/standardized patients, covert participants, or mystery shoppers,2,3 originally gained popularity in the business/marketing sector, with clients ranging from small businesses to industry leaders such as McDonald's, Disney, and the Hard Rock Café.4-6 The technique has also been adapted to assess quality practice in the health sector,7-10 and, for at least 2 decades, to assess practice behavior in pharmacy.11

The technique has been defined as diversely as it has been named.2,7-9,12-14 For the purposes of this study, simulated patient research was defined as the use of an experimental confederate who engages one or more staff members in the natural setting of their workplace in order to observe and report on the behaviors elicited.15 The simulated patient adopts the role of a typical customer/patient without revealing his or her status as a confederate.

Simulated patient methodology has a number of benefits compared with competing techniques. The use of simulated patients is said to be more cost-effective than either direct observation or customer surveys.4,9 Finn and Kayane claim that because simulated patients are paid and trained to be observant, their feedback will be more reliable than that solicited from regular customers, provided the simulated patients are typical of regular customers/patients.4 Indeed, as long as the simulated patient is assumed to be genuine, the technique retains face validity.14 A degree of experimental deception is an essential element of the technique and must be dealt with in an ethically appropriate manner.

In spite of its increasing application in community pharmacy research worldwide (in countries including Australia,16 the United States,17 the United Kingdom,14 Germany,2 and New Zealand13), a potential weakness of the simulated patient technique is its reliance on human cognitive processes. Several factors related to memory may affect the accuracy of the data collected. Omissions from and distortions of simulated patient memory can occur at each stage of memory processing: encoding, storage, and retrieval.1 These can lead to discrepancies between real (ie, observed) and reported behavior. Although the technique is still relatively new to pharmacy research, a number of studies have used concealed audio recorders in an attempt to overcome these problems while still maintaining a covert methodology.13,15,16 Additionally, studies that have not used audio taping have suggested it as an improvement for future research.14 Limited research has been conducted into the nature and frequency of errors inherent to the technique, and the extent to which audio taping overcomes them.9

That simulated patient methodology is a comparatively new technique in pharmacy practice, and indeed in health research in general, may explain the relative lack of quantitative research into its benefits. While an objective checking procedure to enhance the reliability of simulated patient data seems intuitively useful, quantifying both the direction and the extent of such an effect is an important step towards a sounder understanding of the research method. The current study attempted to fill this gap in the literature.

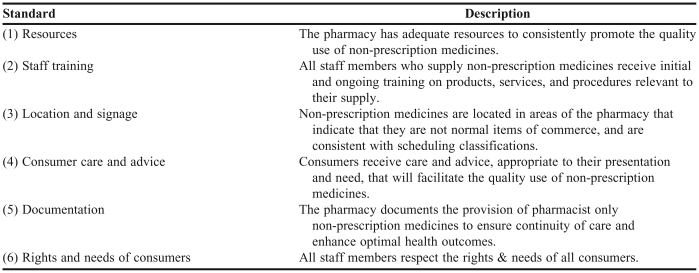

As self-medication with nonprescription medications becomes increasingly popular,18 the pharmacy profession has developed standards of practice for the provision of these drugs, based on a consumer-focused, risk-management approach.19 At the broadest level, these standards aim to optimize pharmacy practice in the provision of medicines and to ensure that all community pharmacies provide appropriate and consistent professional advice. The standards apply to pharmacists, pharmacy assistants, and the pharmacy environment, and cover 6 areas (Table 1).20

Table 1.

Summary of the Professional Practice Standards (Version 3)20

Since 2002, the application of these standards in Australia has been monitored by the Quality Care Pharmacy Support Centre (QCPSC).16,21 The primary objectives of the QCPSC are to assess, benchmark, and support pharmacies accredited under the Quality Care Pharmacy Program.16,21 This monitoring is achieved through Standard Maintenance Assessment (SMA) visits, which use simulated patient methodology. All SMA visits are audio taped in an attempt to derive a valid and reliable assessment of the effect of intervention strategies on practice behavior.16 The present study aimed to compare the accuracy of data from assessment forms completed by a trained assessor immediately after a simulated patient visit with a subsequent assessment of the audiotape by an independent pharmacist at the QCPSC. It was hypothesized that there would be no significant difference between the accuracy of data based on the simulated patient process alone and that verified by audiotape checking.

METHODS

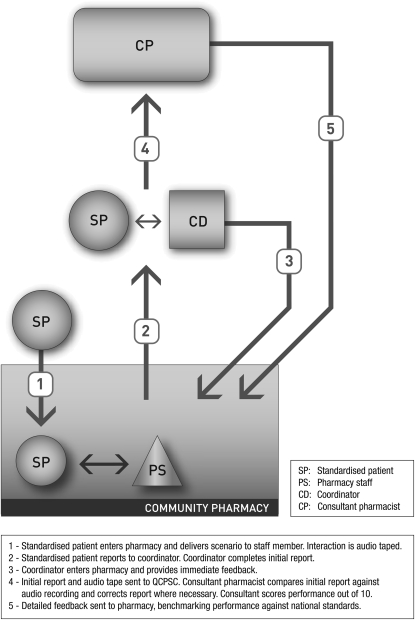

Simulated patient methodology was implemented according to the SMA visit protocol (Figure 1). The process of conducting SMA visits has been outlined in detail elsewhere.16,21 SMA visits use simulated patient methodology to assess quality practice in pharmacy as outlined in the standards and protocols accepted by the profession. Assessment of a pharmacy's performance is based on an analysis of a behavioral interaction and is not intended to measure clinical skill.

Figure 1.

Simulated patient methodology implemented for Standard Maintenance Assessment visit.

The QCPSC operates a network of 14 state-based coordinators, who manage the SMA visits and the team of simulated patients. The simulated patients receive scenario-specific training. Two new scenarios are developed for use each month, and as many as 70 different scenarios have been used in visits over a 5-year period, representing a broad range of requests for information about nonprescription medications that simulate common patient presentations. Each SMA visit involves a simulated patient (carrying a concealed tape recorder) entering the pharmacy and either asking to purchase a nonprescription medicine (direct product request [DPR]) or asking for advice on symptoms (symptom-based request [SBR]). The QCPSC schedules 3 DPR scenarios for every SBR scenario. Typically, 300-400 SMA visits are conducted per month, and an individual pharmacy is eligible for at most 1 visit every 6 months. Pharmacists are informed by letter that they are eligible for a visit 2 weeks before a visit might occur.

The study was conducted over a 3-month period from July 2005 to October 2005 in community pharmacies throughout Australia. The simulated patients were recruited from the general population and trained by the QCPSC.

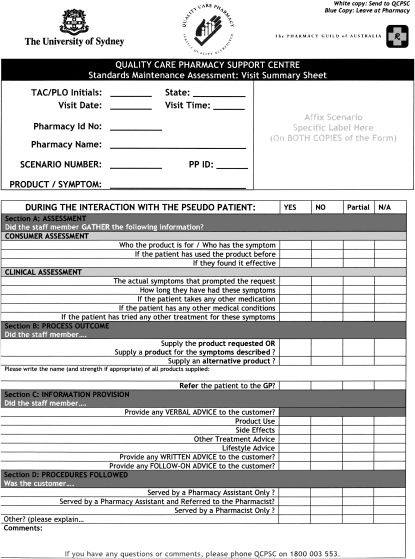

Following the interaction between the staff member and the simulated patient, the simulated patient left the pharmacy and reported to the coordinator. The coordinator completed a standardized form (Appendix 1) based on the simulated patient's verbal report, then entered the pharmacy to inform the pharmacist that an SMA visit had been conducted. At this point, immediate verbal feedback was provided.

The standardized form offered 4 responses for each behavior: yes, no, part (partial), or N/A (not applicable). A response of yes indicated that the simulated patient was certain the behavior was performed; no indicated that the simulated patient was sure it was not performed; part indicated that the simulated patient believed the behavior may have been performed but was not completely sure of the accuracy of his or her recall; and N/A indicated that the behavioral category was not relevant to the scenario at hand. The coordinator forwarded the completed form and corresponding audiotape to the QCPSC for analysis. The pharmacy was also given a copy of the form.

At the QCPSC, a consultant pharmacist compared the hard copy of the standardized form with an audio recording of the interaction. Pharmacies were given a score from 0 to 10 according to their performance, and each score fell into 1 of 3 categories: scores of 0-3 were classed as unsatisfactory, scores of 4-6 as satisfactory, and scores of 7-10 as excellent. Detailed feedback was then sent to the pharmacy, benchmarking its performance against national standards. Pharmacies that received an unsatisfactory score were offered training.
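The score bands described above amount to a simple lookup. The sketch below is illustrative only: `sma_category` is a hypothetical name and is not part of any QCPSC software; it simply encodes the 0-3, 4-6, and 7-10 bands stated in the text.

```python
def sma_category(score: int) -> str:
    """Map an SMA total score (0-10) to its performance category.

    Bands follow the text: 0-3 unsatisfactory, 4-6 satisfactory,
    7-10 excellent. The function name is hypothetical.
    """
    if not isinstance(score, int) or not 0 <= score <= 10:
        raise ValueError(f"SMA scores are integers from 0 to 10, got {score!r}")
    if score <= 3:
        return "unsatisfactory"
    if score <= 6:
        return "satisfactory"
    return "excellent"


if __name__ == "__main__":
    # Print the category at each band boundary.
    for s in (0, 3, 4, 6, 7, 10):
        print(s, sma_category(s))
```

Keeping the bands in one function makes it straightforward to recompute a pharmacy's category before and after a correction.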

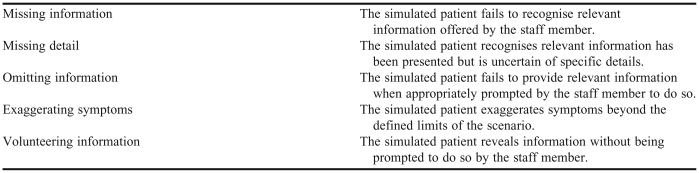

The consultant pharmacist to the QCPSC checked data from all SMA visits. Any corrections made to hard copy SMA summary sheets were tallied and reason(s) for the correction being required were coded. QCPSC research had identified 5 mutually exclusive reasons to explain the need for score correction (Table 2).

Table 2.

Reasons for SMA Score Correction

Corrections were made by comparing the behavioral response as outlined on the standardized form with the audiotape of the interaction. Under QCPSC standard operating procedure, tape recordings used to verify the accuracy of the simulated patient's report of the interaction were erased 3 months after the visit in accordance with ethics committee requirements.

The research methodology was developed in accordance with the requirements of, and approved by, the Human Research Ethics Committee at the University of Sydney. Community pharmacies agree to terms of participation when they become accredited by the Pharmacy Guild of Australia. All pharmacies have the right to withdraw their consent to participate if any staff member objects to being audio taped. Historically, fewer than 5% of pharmacies have withdrawn consent.

The results from each visit were entered into a Microsoft Excel database and exported to SPSS version 14.0 (SPSS, Chicago, Ill) for analysis. Summary statistics were used to describe the frequency of corrections required, the change in score resulting from correction, and the reason(s) for corrections being made.

A paired-samples t test compared precorrection scores, derived from the simulated patient process alone, with postcorrection scores, checked against the audio-taped encounter, to determine whether correcting SMA visits on the basis of the audiotape had a significant effect. All assumptions for conducting t tests were met. Only scores for which a correction had been made were analyzed.

RESULTS

During the 3-month study period, 1340 SMA visits were reviewed by the consultant pharmacist at the QCPSC. These visits used 12 different scenarios; 86% of the visits were direct product requests and 14% were symptom-based requests. Of these visits, 135 were corrected because of inconsistencies between the written data and the audio-taped interaction, representing 10.1% of all SMA visits conducted during this period.

For each of the 135 corrected SMA forms, the total score (0-10) was calculated before and after correction to index the impact of data checking. In every case, the postcorrection score was greater than the precorrection score. Most corrected scores (88%; n=119) improved by 1 mark out of 10 (equivalent to 10% of the overall score); the remaining 12% (n=16) improved by 2 marks (equivalent to 20% of the overall score). No correction resulted in a change of more than 2 points. Mean score therefore rose as a result of the corrections made.
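As a check on these figures, weighting each improvement by its frequency gives the mean change per corrected form. This is simple arithmetic on the reported counts, not an additional analysis; the result agrees with the difference between the rounded mean scores reported in the next paragraph (5.1 - 4.0 = 1.1).

```latex
\begin{aligned}
\frac{119}{135} &\approx 0.881 \;(88\%), \qquad \frac{16}{135} \approx 0.119 \;(12\%) \\
\bar{d} &= \frac{119 \times 1 + 16 \times 2}{135} = \frac{151}{135} \approx 1.12 \text{ points}
\end{aligned}
```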

The difference between scores based on simulated patient recall (mean = 4.0; SD = 1.9) and scores corrected on the basis of audiotape checking (mean = 5.1; SD = 1.9) was significant (t(134) = -40.06; p<0.001).
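Because a paired-samples t test depends only on the within-pair differences, the reported statistic can be checked from the tallies in the previous paragraph (119 differences of 1 point and 16 of 2 points). The sketch below is an illustrative reconstruction using only the Python standard library, not the SPSS procedure the authors ran; `paired_t` is a hypothetical helper name.

```python
import math
import statistics


def paired_t(differences):
    """Paired-samples t statistic: mean difference divided by its standard error.

    Returns (t, degrees_of_freedom). Uses the sample standard deviation (n - 1).
    """
    n = len(differences)
    if n < 2:
        raise ValueError("need at least two paired observations")
    mean_d = statistics.mean(differences)
    sd_d = statistics.stdev(differences)
    t = mean_d / (sd_d / math.sqrt(n))
    return t, n - 1


# Differences (precorrection minus postcorrection) rebuilt from the reported
# tallies: 119 forms rose by 1 point and 16 forms rose by 2 points.
diffs = [-1] * 119 + [-2] * 16

t, df = paired_t(diffs)
print(f"t({df}) = {t:.2f}")
```

Run as written, it reproduces the reported t = -40.06 with 134 degrees of freedom. A p-value additionally requires the t distribution; with SciPy available, `scipy.stats.ttest_rel` takes the two paired samples and returns the same statistic together with a p-value.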

Of the SMA reports corrected as a result of audiotape checking, 45% (n=61) involved a shift in category between precorrection and postcorrection scores. Because every correction raised the score, and by no more than 2 points, only 2 types of category shift were possible: from unsatisfactory to satisfactory, or from satisfactory to excellent (an unsatisfactory score of at most 3 could rise to no more than 5). Most of these shifts (70%; n=43) were from unsatisfactory to satisfactory.
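The restriction to two kinds of category shift follows from two reported facts: every correction raised the score, and no correction exceeded 2 points. A minimal sketch, with hypothetical function names, enumerates every precorrection score and every allowed increase using the score bands from the Methods.

```python
def category(score):
    # Bands from the Methods: 0-3 unsatisfactory, 4-6 satisfactory, 7-10 excellent.
    if score <= 3:
        return "unsatisfactory"
    if score <= 6:
        return "satisfactory"
    return "excellent"


def possible_shifts(max_increase=2):
    """Return every (before, after) category pair reachable by a correction
    that raises a 0-10 score by between 1 and max_increase points."""
    shifts = set()
    for pre in range(0, 11):
        for inc in range(1, max_increase + 1):
            post = pre + inc
            if post > 10:
                continue  # postcorrection scores cannot exceed 10
            before, after = category(pre), category(post)
            if before != after:
                shifts.add((before, after))
    return shifts


print(sorted(possible_shifts()))
```

Raising `max_increase` to 4 would admit an unsatisfactory-to-excellent jump (3 to 7), which is why the 2-point ceiling matters. By the same counts, the 61 shifts were 45% of the 135 corrected forms, and 43 of 61 (about 70%) were unsatisfactory to satisfactory, leaving 18 from satisfactory to excellent.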

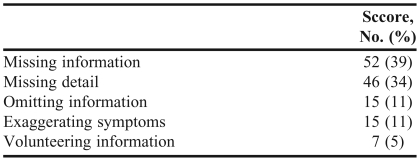

Of the 5 reasons for score correction, 2 (missing information and missing detail) accounted for 73% of all corrections made (Table 3).

Table 3.

Reasons for Correcting SMA Score (N = 135)

DISCUSSION

The results of this study provide evidence on the extent to which audio taping of simulated patient visits maintains data integrity. In 10% of cases, failings in the SMA process led to an inaccurate record of the staff-simulated patient interaction and hence a misrepresentation of performance. These findings closely replicate those of Luck and Peabody, who found 91% agreement between audio recordings and simulated patient checklists.9 The hypothesis that there would be no significant difference between the accuracy of data based on the simulated patient process alone and that verified by audiotape checking was therefore rejected.

Audiotape checking showed that, in corrected cases, the reports given by simulated patients immediately after the SMA visit underestimated real behavior by 10% to 20% of the total score. In the current study, every correction made was based on an underestimation of performance. This suggests that discrepancies between real and reported behavior were the result of omissions from memory (ie, forgetting information), not distortions of memory (ie, fabricating information): simulated patients appeared more likely to acknowledge having forgotten information than to fabricate data when transferring information to the coordinator after the visit. Acknowledging forgotten information results in a behavior not being given a score (a part response) until checking of the audiotape reveals that it should have been (a yes response). Fabricating data would result in a false positive score (a yes response that should have been no) and would therefore reduce the overall score during checking; this did not occur at any point in the current study.
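The argument above infers the type of memory error from the direction of each correction. A minimal sketch, assuming (hypothetically) that each scored behavior contributes 1 point and that, as the paragraph describes, a yes response earns credit while a part or no response earns none; `CREDIT` and `classify_correction` are illustrative names, not part of the QCPSC procedure.

```python
# Hypothetical credit per behavior, following the Discussion: a "yes" earns
# credit, whereas a "part" (acknowledged uncertainty) or "no" earns none.
# N/A behaviors are not scored and are therefore not handled here.
CREDIT = {"yes": 1, "part": 0, "no": 0}


def classify_correction(reported, verified):
    """Label a single-item correction and return the resulting score change."""
    if reported not in CREDIT or verified not in CREDIT:
        raise ValueError("responses must be 'yes', 'part' or 'no'")
    change = CREDIT[verified] - CREDIT[reported]
    if reported == "part" and verified == "yes":
        kind = "omission"      # forgotten, but acknowledged as uncertain
    elif reported == "yes" and verified == "no":
        kind = "fabrication"   # false positive removed on checking
    else:
        kind = "other"
    return kind, change


print(classify_correction("part", "yes"))
print(classify_correction("yes", "no"))
```

Under these assumptions an omission can only raise a score and a fabrication can only lower it, so a set of corrections that were all upward, as in this study, contains no fabrications of this kind.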

Furthermore, the results of the current study suggest that without data checking, about 1 in 10 pharmacies in the study would have had their SMA performance (ie, practice behavior) underestimated. Forty-five percent of all corrected visits changed category after correction, either from unsatisfactory (0-3) to satisfactory (4-6) or from satisfactory to excellent (7-10). Indeed, 70% of these pharmacies would have received an unsatisfactory result when they actually deserved a satisfactory rating. As the QCPSC monitors pharmacy practice standards nationwide, ensuring the accuracy of SMA visits is important in maintaining both research rigor and pharmacy staff morale. Moreover, because pharmacies rated unsatisfactory are offered additional training, audiotape checking may also save costs: it can prevent pharmacies whose performance is genuinely satisfactory (but incorrectly rated as unsatisfactory) from being offered unnecessary further education.

The most common reasons for correcting an SMA score were “missing information” and “missing detail.” Together, these explained 73% of all required corrections, reinforcing the notion that errors in simulated patient reporting were largely attributable to human memory factors.

“Missing information” refers to a situation in which the simulated patient did not detect relevant information presented by a staff member during the SMA interaction. This could indicate a failure of memory at any stage of processing: encoding, storage, or retrieval. Future research should attempt to elucidate the role played by memory processes in simulated patient research.

“Missing detail” is an index of self-monitoring behavior. It demonstrates that the simulated patient is aware of potentially relevant information presented by the staff member but also recognizes that he or she may not have successfully encoded and/or retained the data.

Both of these reasons relate to fallible human cognition and reinforce audio taping as an essential component of simulated patient research. The remaining 3 reasons, which explained 27% of the corrections, may reflect weaknesses in the protocol for training the simulated patients.

Omitting information, volunteering information, and exaggerating symptoms are all problems that stem from inadequate standardization of simulated patient training protocols. Whether the simulated patient fails to provide relevant information when prompted, reveals relevant information without being prompted, or exaggerates information beyond the scope of the scenario, these issues arise from not adhering to scenario-specific details. There may be a memory component, in that simulated patients may not retain the scenario information well enough to present it in an appropriately standardized manner. However, for the purposes of the current study, this is treated as a training issue rather than a memory issue, because it is not addressed by, and cannot be overcome by, the audio taping of SMA visits.

The current study was designed to evaluate the impact of audio taping on the validity and reliability of simulated patient research. However, there are a number of areas that future research could address. Although the current study was able to differentiate between memory and training factors to explain failings in simulated patients' reporting of an SMA visit, it did not address which aspects of each system were failing. With regard to memory processes in particular, future research could attempt to identify whether encoding, storage, or retrieval processes were affecting simulated patient recollection, and include training in relevant memory techniques to enhance performance.

While much focus has been placed on the role of simulated patient recall in the accuracy (or otherwise) of the SMA process, another potential source of error must be noted. Immediately after the SMA visit, the simulated patient leaves the pharmacy and reports details of the interaction to a coordinator (Figure 1, step 2). The coordinator completes a standardized initial report based on the information provided. Although information loss is more likely to result from failings in simulated patient memory, the current study design does not account for potential error in this information transfer. Future research could investigate the accuracy of the transfer from the perspective of the coordinator.

A major limitation for future research to address is that audio taping does not capture nonverbal communication in the staff-simulated patient interaction, which would be important to measure in both staff and simulated patients. Should video recording technology become affordable and easily concealable, it could substantially improve the validity and reliability of simulated patient research.

CONCLUSION

Audio taping patient-pharmacist interactions significantly enhances the reliability of simulated patient research. Relying on the simulated patient process alone can lead to inaccuracies in the data collected. Audio taping interactions allows patient recall to be verified and data to be adjusted accordingly. Future research could focus on what specific aspects of simulated patient memory are responsible for inaccurate recollection and develop training in memory techniques to help enhance recall.

ACKNOWLEDGEMENTS

The authors would like to acknowledge the Pharmacy Guild of Australia for providing funding to the Quality Care Pharmacy Support Centre, Ruth-Anne Khouri for her assistance in the data collection process, Honey Rogue Design for the “SMA Process” figure, and Alison Roberts, Jackie Knox, and Katie Vines for casting eyes over early drafts of this paper.

Appendix 1.

REFERENCES

- 1. Morrison LJ, Colman AM, Preston CC. Mystery customer research: cognitive processes affecting accuracy. J Market Res Soc. 1997;39:349.

- 2. Berger K, Eickhoff C, Schulz M. Counselling quality in community pharmacies: implementation of the pseudo customer methodology in Germany. J Clin Pharm Ther. 2005;30:45–57. doi: 10.1111/j.1365-2710.2004.00611.x.

- 3. Watson MC, Walker A, Grimshaw J, Bond C. Why educational interventions are not always effective: a theory-based process evaluation of a randomised controlled trial to improve non-prescription medicine supply from community pharmacies. Int J Pharm Pract. 2006;14:249–54.

- 4. Finn A, Kayane U. Unmasking a phantom: a psychometric assessment of mystery shopping. J Retailing. 1999;75:195–217.

- 5. Leeds B. Mystery shopping: from novelty to necessity. Bank Marketing. 1995;27:17.

- 6. Meister JC. Disney approach typifies quality service. Marketing News. 1990;24:38.

- 7. Beullens J, Rethans JJ, Goedhuys J, Buntinx F. The use of standardized patients in research in general practice. Fam Pract. 1997;14:58–62. doi: 10.1093/fampra/14.1.58.

- 8. Kopelow ML, Schnabl GK, Hassard TH. Assessing practicing physicians in two settings using standardized patients. Acad Med. 1992;67:S19–21. doi: 10.1097/00001888-199210000-00026.

- 9. Luck J, Peabody JW. Using standardised patients to measure physicians' practice: validation study using audio recordings. BMJ. 2002;325:679. doi: 10.1136/bmj.325.7366.679.

- 10. Woodward CA, McConvey GA, Neufeld V, Norman GR, Walsh A. Measurement of physician performance by standardized patients: refining techniques for undetected entry in physicians' offices. Med Care. 1985;23:1019–27. doi: 10.1097/00005650-198508000-00009.

- 11. Wertheimer AI, Shefter E, Cooper RM. More on the pharmacist as a drug consultant: three case studies. Drug Intell Clin Pharm. 1973;7:58–61.

- 12. de Almeida Neto AC, Benrimoj SI, Kavanagh DJ, Boakes RA. A pharmacy-based protocol and training program for non-prescription analgesics. J Soc Admin Pharm. 2000;17:183–92.

- 13. Norris PT. Purchasing restricted medicines in New Zealand pharmacies: results from a “mystery shopper” study. Pharm World Sci. 2002;24:149–53. doi: 10.1023/a:1019506120713.

- 14. Watson MC, Skelton JR, Bond CM, et al. Simulated patients in the community pharmacy setting. Using simulated patients to measure practice in the community pharmacy setting. Pharm World Sci. 2004;26:32–7. doi: 10.1023/b:phar.0000013467.61875.ce.

- 15. Neto ACD, Benrimoj SI, Kavanagh DJ, Boakes RA. Novel educational training program for community pharmacists. Am J Pharm Educ. 2000;64:302.

- 16. Benrimoj SI, Werner JB, Raffaele C, Roberts AS, Costa FA. Monitoring quality standards in the provision of non-prescription medicines from Australian Community Pharmacies: results of a national programme. Qual Saf Health Care. 2007;16:354–8. doi: 10.1136/qshc.2006.019463.

- 17. Austin Z, Gregory P, Tabak D. Simulated patients vs. standardized patients in objective structured clinical examinations. Am J Pharm Educ. 2006;70(5):Article 119. doi: 10.5688/aj7005119.

- 18. Roberts AS, Hopp T, Sorensen EW, et al. Understanding practice change in community pharmacy: a qualitative research instrument based on organisational theory. Pharm World Sci. 2003;25:227–34. doi: 10.1023/a:1025880012757.

- 19. Benrimoj SI, Frommer MS. Community pharmacy in Australia. Aust Health Rev. 2004;28:238–46. doi: 10.1071/ah040238.

- 20. Pharmaceutical Society of Australia. Professional Practice Standards, Version 3. Pharmaceutical Society of Australia; 2006:151–211. ISBN 0-908185-83-9.

- 21. Benrimoj SI, Werner JB, Raffaele C, Roberts AS. A system for monitoring quality standards in the provision of non-prescription medicines from Australian community pharmacies. Pharm World Sci. 2008;30:7. doi: 10.1007/s11096-007-9162-7.