Educational Objectives

The reader will 1) understand the broad range of deficits in phonological perception and processing that accompany deficits in musical pitch recognition, and 2) recognize the possible utility of musical evaluation measures and music-based therapies in the treatment of phonological and other speech disorders.

Introduction

Some individuals with difficulties understanding language also have problems perceiving music (Anvari et al., 2002). These problems may be related because musical pitch perception and speech perception share many of the same processing requirements. Both rely on elements of sound (notes in music and phonemes in speech) organized into highly structured acoustic sequences composed of small perceptual units (Patel & Daniele, 2003). These sequences are formed by rule-based permutations of a limited number of discrete elements (phonemes in speech or tones in music) to yield meaningful structures (words or musical phrases). These structures are subject to further hierarchical organization, resulting in more complex entities such as sentences or melodies (Foxton et al., 2004; Zatorre et al., 2002).

Music and speech are common to all human societies (Peretz et al., 2002). Both develop over time and require sustained attention, memory, exposure, and training (Foxton et al., 2004). Furthermore, music perception and speech perception are both the result of sound processing by the listener (Ayotte et al., 2002; de Cheveigné, 2004; Shamma, 2004; Zatorre et al., 2002), and pitch information is an important component of both melodies and speech sounds.

In view of these concepts, it was surprising when studies of individuals with focal brain lesions demonstrated instances in which perception of these two sound classes could be separated, and isolated deficits in the perception of one or the other class of sounds were clearly documented (Peretz, 2002). The separation of these functions was also supported by brain imaging studies. PET and fMRI scans showed that while areas of the left temporal lobe, in particular Broca’s and Wernicke’s areas, are most highly activated in speech, musical listening activates other more caudal regions of the brain, such as the superior temporal sulcus, Heschl’s gyrus, and the plana polare and temporale (Levitin & Menon, 2003).

However, more recent brain activity and functional imaging studies have supported the view that auditory processing of speech and of music is localized in similar regions of the brain. Koelsch et al. (2002) noted that there is considerable overlap of the cortical networks involved in the processing of speech and music, in inferior fronto-lateral, anterior, and posterior temporal lobe structures in both hemispheres. These structures had been assumed to be specific to language and auditory processing (Koelsch et al., 2000; Levitin & Menon, 2003; Patterson et al., 2002). Recent studies by Wong et al. (2007) have supported similar localization, and suggest that in addition to cortical processes, the brainstem functions to encode both musical and linguistic pitch.

In a study of music and speech, Anvari et al. (2002) investigated language and musical skills in children 4 to 5 years of age. They theorized that some of the auditory skills used in the processing of speech, such as blending and segmenting sounds, are similar to the skills necessary for music perception, such as rhythmic, melodic, and harmonic discrimination. Their results indicated that music perception appears to make use of auditory mechanisms that partially overlap with those related to phonological awareness (Anvari et al., 2002).

In another study, Overy (2003) investigated musical timing skills in children with deficits in phonological awareness. The results indicated that children with phonological awareness deficits also demonstrated deficits in some areas of musical processing, including those required for rapid timing, rhythm, and tempo. However, these children demonstrated strong pitch discrimination skills. Thus, despite a large amount of data from studies using a number of different experimental approaches, the degree of overlap in the structures and mechanisms required to process speech sounds versus musical sounds has remained uncertain.

Another way to examine pitch and speech sound perception is to study naturally occurring disorders of these functions. Although deficits in musical pitch perception and speech perception are well documented in individual adults (Peretz et al., 2002), we focused on a large group of tune deaf individuals to obtain a broader view of this population. Several previous studies of music and speech perception focused on young children in whom rapid brain development occurs. We limited our study to adults to reduce the impact of developmental factors on the results.

Deficits in musical pitch perception have also been referred to as congenital amusia, note-deafness, dysmelodia, tune deafness, or music agnosia (Allen, 1878; Ayotte et al., 2002; Hyde & Peretz, 2003; Kalmus & Fry, 1980; Drayna et al., 2001; Foxton et al., 2004). We have operationally defined tune deaf individuals as those with reproducibly low scores on the Distorted Tunes Test (DTT), a widely used test of musical pitch recognition (Kalmus & Fry, 1980; Drayna et al., 2001). To understand the relationship between musical pitch perception and speech sound perception, we evaluated a number of phonologic and phonemic functions in tune deaf individuals.

We used the Comprehensive Test of Phonological Processing (CTOPP) to assess phonological awareness (the ability to manipulate and discriminate sounds in syllables and words) and phonemic awareness (the ability to segment and blend phonemes, the individual sounds in words). The CTOPP is a well-validated instrument that measures a number of aspects of phonological processing and memory (Lennon & Slesinski, 2001). We hypothesized that tune deafness, initially ascertained as a deficit in musical pitch recognition, may be related to specific components of auditory processing used in speech, including phonological and phonemic awareness.

1. Method

1.1. Ascertainment and Screening

Eight hundred and sixty-four individuals ranging in age from 15 to 60 years were enrolled in the screening phase of this study via public appeal (Jones et al., 2008). All participants volunteered for this research, which was conducted with informed consent under IRB-approved protocol 00-DC-0176 (NINDS/NIDCD/NIH).

All participants completed the Distorted Tunes Test (DTT), a screening test of the ability to recognize wrong notes in popular melodies, which was used to identify individuals with deficits in musical pitch recognition (Kalmus & Fry, 1980). The DTT has been widely used as a screening tool and to quantitate musical pitch perception (Drayna et al., 2001). The test consists of 26 short, unaccompanied melodic fragments played in a synthesized piano timbre. For each melody, participants indicated in a pencil-and-paper format whether the tune was played correctly or incorrectly, and whether the melody was familiar or unfamiliar. Scores range from perfect (26, 100%) to chance (~13, 50%). Normative population data for this test, along with data demonstrating good validity and reliability, have been reported previously (Drayna et al., 2001; Jones et al., 2008). Individuals with DTT scores in the bottom 10th percentile (raw scores ≤ 18) were defined as tune deaf. The DTT was played on an Audiophase portable CD player (Model CD-315) and delivered through Sony over-the-ear headphones (MDR-Q25LP). Ambient noise levels were reduced through the use of hearing protectors (Husqvarna; Model 192766; Noise-Reduction-Rating 25 dB).

Participants also completed the Five-Minute Hearing Test, a written questionnaire previously correlated with pure tone hearing thresholds (Koike et al., 1994), to rule out individuals with probable hearing loss. Participants were invited to participate in Phase II of this study if: a) they scored below the 10th percentile on the DTT (≤18, the tune deaf group) or above the 60th percentile (≥24, the normal group), b) they scored ≤15 on the Five-Minute Hearing Test (likely to have normal hearing), and c) they had no reported cognitive or neurological impairments.
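The Phase II invitation criteria above can be expressed as a simple decision rule. The following is a minimal sketch for illustration only, not the procedure actually used in the study; the function name `phase_two_group` and the constant names are hypothetical, and the cutoffs are taken directly from the text.

```python
from typing import Optional

# Cutoffs taken from the screening criteria described in the text.
TUNE_DEAF_MAX_DTT = 18   # raw DTT score at or below this: tune deaf group
NORMAL_MIN_DTT = 24      # raw DTT score at or above this: normal group
HEARING_TEST_MAX = 15    # Five-Minute Hearing Test score at or below this


def phase_two_group(dtt_score: int, hearing_score: int,
                    has_impairment: bool) -> Optional[str]:
    """Return the Phase II group label, or None if not invited.

    has_impairment is True when a cognitive or neurological
    impairment was reported.
    """
    if not 0 <= dtt_score <= 26:
        raise ValueError("DTT raw scores range from 0 to 26")
    # Criteria b) and c): probable normal hearing, no reported impairment.
    if hearing_score > HEARING_TEST_MAX or has_impairment:
        return None
    # Criterion a): only the two ends of the DTT distribution are invited.
    if dtt_score <= TUNE_DEAF_MAX_DTT:
        return "tune deaf"
    if dtt_score >= NORMAL_MIN_DTT:
        return "normal"
    return None
```

Under this rule, a participant with an intermediate DTT score (19 to 23) is not invited to Phase II regardless of the hearing questionnaire result.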

1.2. Subject Selection

Seventy-six (76) individuals were admitted into Phase II of the study. Each of the participants underwent a comprehensive audiological evaluation to rule out hearing loss or indications of middle ear disease. Participants were queried regarding a history of tinnitus, noise exposure and middle ear infection, aural fullness and otalgia, and then given a comprehensive hearing evaluation. The hearing tests were carried out in a double-walled sound-attenuating booth using a GSI-61 clinical audiometer, and stimuli were presented via ER3A insert earphones. Participants were required to have normal hearing as measured by pure tone thresholds by air conduction no greater than 20 dB HL from 250 to 8000 Hz, and word recognition of 90% or better bilaterally. Following the hearing evaluation, participants who met the acceptance criteria were included in the study.

Seven (7) of the 76 participants were disqualified because they demonstrated a hearing loss greater than 20 dB in at least one ear. The remaining sixty-nine (69) qualified individuals continued the study. The experimental group consisted of 35 native English-speaking participants (21 females and 14 males) who scored 18 or below on the DTT. The normal control group consisted of 34 native English-speaking individuals (21 females and 13 males) who scored 24 or above on the DTT. Participants were matched for age, gender, handedness, and education (Table 1).

Table 1. Demographics of Participants.

| Participants | DTT Mean score (% correct) | Age, Mean | Right Handed | Left Handed | Female | Male | Education, years |
|---|---|---|---|---|---|---|---|
| Normal Controls | 25 (96) | 26.7 | 30 | 4 | 17 | 17 | 15 |
| Tune Deaf | 16 (61) | 25 | 30 | 5 | 21 | 14 | 14 |

1.3. Test Protocols

Tests were administered to each subject individually at the NIH Clinical Center in Bethesda, MD in a binaural listening condition in the same facility where the hearing tests were performed. The following standardized tests were used to assess phonological and phonemic awareness.

Auditory Word Discrimination: a subtest of The Test of Auditory Processing Skills - TAPS (adapted and modified from Gardner, 1994). This test examines an individual’s ability to differentiate among phonemes and measures the individual’s ability to discriminate between stimuli that are acoustically similar, but phonemically distinct. Participants were asked to detect subtle similarities or differences between speech sounds in a given word pair, and identify whether the two words were the same or different (e.g., pat/bat). It consists of 33 items that were audio-recorded on a CD.

Syllable Segmentation: a subtest of the Fullerton Language Test for Adolescents - 2nd Edition (adapted and modified from Thorum, 1986). Syllable segmentation (syllabication) examines an individual’s ability to divide words and sentences into syllables. The test items were recorded on a CD and presented to each participant through the equipment described above. On each of the 20 trials, the subject was required to listen to a word or sentence and report the number of syllables he or she heard.

Phonological Processing: The Comprehensive Test of Phonological Processing (CTOPP) developed by Torgensen et al. (1994). This test was designed to evaluate an individual’s phonological abilities in three general areas: phonological and phonemic awareness, phonological short-term memory, and rapid naming. Six out of 8 subtests of the CTOPP were pre-recorded on an audiocassette tape and digitally transferred to a Sony Vaio Computer using Audition software 1.0 (Adobe Audition, 2001). The subtests not originally pre-recorded on the standardized version of the CTOPP (i.e., Elision and Segmenting Words) were digitally recorded using Audition and placed on the CD in the order that each subtest appeared on the original test protocol. The following subtests of the CTOPP were administered.

Elision is a 20-item subtest that measures the participant’s ability to identify what word would remain if part of a word (syllable or phoneme) were omitted from the original word. Three of the 20 items are compound words for which participants had to say the remaining word after dropping one of the syllables (e.g., “Say notebook; now say notebook without saying note.”). The remainder of the items required the participant to listen to and repeat a monosyllabic word, and then say the word without a specific sound (phoneme) that was removed from either initial, medial, or final position of the word (e.g., “Say cat; now say cat without saying /k/”).

Phoneme Reversal is an 18-item subtest measuring a subject’s ability to identify what word would be produced by reversing the phonemes of a nonsense test word. Participants were presented with real words spoken in reversed phoneme order (e.g., “What word would you get if you said teb backwards?”; answer = bet).

Segmenting Words is a 20-item subtest that measures the participant’s ability to verbally separate the individual phonemes that make up a word (e.g., “Say beet one sound at a time.”; answer = /b/-/i/-/t/).

Segmenting Nonwords is a 20-item subtest that measures the participant’s ability to verbally separate the individual phonemes that make up a nonsense word (e.g., “Say reen; now say reen one sound at a time.”; answer = /r/-/i/-/n/).

Blending Words is a 20-item subtest measuring an individual’s ability to combine or synthesize individual speech sounds (i.e., phonemes) to form real words (e.g., “What word do you get if you blend these sounds: /b/-/i/-/t/?”; answer = beet).

Blending Nonwords is an 18-item subtest that measures an individual’s ability to combine or synthesize individual speech sounds (phonemes) to form a nonsense word (e.g., “What word do you get if you blend these sounds: /r/-/i/-/n/?”; answer = reen).

1.4. Statistical Analyses

To determine whether the observed differences between the two groups were significant, a two-tailed t-test was performed when the distribution of test scores did not differ significantly from normal. In cases where scores were not normally distributed, the non-parametric Wilcoxon test implemented in SPSS (Nie et al., 2006) was used.
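For readers unfamiliar with the non-parametric alternative, the following is a minimal sketch of a two-sided Wilcoxon rank-sum test. It is an illustration only and not the SPSS procedure used in the study: it assumes the rank-sum form of the Wilcoxon test (the form appropriate for two independent groups), uses the normal approximation with a correction for ties, and the function name `rank_sum_test` is hypothetical.

```python
from math import sqrt
from statistics import NormalDist


def rank_sum_test(x, y):
    """Two-sided Wilcoxon rank-sum test (normal approximation).

    Returns (w, z, p), where w is the sum of the ranks of the
    observations in x within the pooled sample.
    """
    # Pool the observations, remembering which group each came from.
    pooled = sorted([(v, 0) for v in x] + [(v, 1) for v in y])
    ranks = [0.0] * len(pooled)
    tie_term = 0
    i = 0
    while i < len(pooled):
        j = i
        while j < len(pooled) and pooled[j][0] == pooled[i][0]:
            j += 1
        # Tied values share the average of ranks i+1 .. j (midrank).
        midrank = (i + 1 + j) / 2
        for k in range(i, j):
            ranks[k] = midrank
        t = j - i
        tie_term += t ** 3 - t
        i = j

    n1, n2 = len(x), len(y)
    n = n1 + n2
    w = sum(r for r, (_, group) in zip(ranks, pooled) if group == 0)
    mean_w = n1 * (n + 1) / 2
    # Variance of w under the null hypothesis, corrected for ties.
    var_w = n1 * n2 / 12 * ((n + 1) - tie_term / (n * (n - 1)))
    z = (w - mean_w) / sqrt(var_w)
    p = 2 * (1 - NormalDist().cdf(abs(z)))
    return w, z, p
```

The normal approximation is reasonable for group sizes such as the 34 and 35 participants in this study; for very small samples an exact test would be preferable, and the sketch does not handle the degenerate case in which every observation has the same value.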

3. Results

3.1. Phonological and Phonemic Awareness

Tables 2 and 3 show the results and analyses of the phonological and phonemic awareness tests for the tune deaf and control groups, together with the group mean scores for each test.

Table 2. Descriptive Statistics for Phonological Awareness.

Scores are presented as mean ± SD for tune deaf and control groups.

| Test | Group | N | Mean | Std. Deviation | Std. Error of Mean | p-value |
|---|---|---|---|---|---|---|
| Auditory Word Discrimination | Controls | 34 | 32.35 | .98 | .16 | |
| | Tune Deaf | 35 | 30.80 | 2.63 | .44 | <0.0001* |
| Elision | Controls | 34 | 18.50 | 2.31 | .39 | |
| | Tune Deaf | 35 | 16.20 | 3.31 | .56 | <0.0001* |
| Phoneme Reversal | Controls | 34 | 12.82 | 3.50 | .61 | |
| | Tune Deaf | 35 | 8.86 | 4.22 | .71 | <0.0001 |
| Blending Words | Controls | 34 | 16.21 | 3.14 | .53 | |
| | Tune Deaf | 35 | 12.37 | 4.30 | .72 | <0.0001 |
| Blending Nonwords | Controls | 34 | 12.18 | 2.88 | .49 | |
| | Tune Deaf | 35 | 8.57 | 2.67 | .45 | <0.0001 |

p-values derived from t-tests; * denotes p-values from the non-parametric Wilcoxon test when scores were not normally distributed.
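As a rough arithmetic illustration of how the summary statistics in Table 2 relate to a t-test, the sketch below computes a standard error of the mean and a pooled-variance (Student) t statistic from means, standard deviations, and group sizes. It is not the analysis performed in SPSS: the choice of the pooled-variance form is an assumption, the function names are hypothetical, and the inputs are the Phoneme Reversal rows of Table 2.

```python
from math import sqrt


def standard_error(sd, n):
    """Standard error of the mean from a sample SD and sample size."""
    return sd / sqrt(n)


def pooled_t(mean1, sd1, n1, mean2, sd2, n2):
    """Student's t statistic and degrees of freedom from summary data.

    Assumes equal population variances (pooled-variance form).
    """
    df = n1 + n2 - 2
    pooled_var = ((n1 - 1) * sd1 ** 2 + (n2 - 1) * sd2 ** 2) / df
    se_diff = sqrt(pooled_var * (1 / n1 + 1 / n2))
    return (mean1 - mean2) / se_diff, df


# Phoneme Reversal: controls vs. tune deaf (values from Table 2).
t_stat, df = pooled_t(12.82, 3.50, 34, 8.86, 4.22, 35)
print(f"t = {t_stat:.2f}, df = {df}")
print(f"SE (tune deaf) = {standard_error(4.22, 35):.2f}")
```

Because the calculation starts from rounded table values, small discrepancies from the statistics computed on the raw scores are to be expected.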

Table 3. Descriptive Statistics for Phonemic Awareness.

Scores are presented as mean ± SD for tune deaf and control groups.

| Test | Group | N | Mean | Std. Deviation | Std. Error of Mean | p-value |
|---|---|---|---|---|---|---|
| Segmenting Words | Controls | 34 | 14.00 | 3.97 | .68 | |
| | Tune Deaf | 35 | 7.54 | 4.09 | .69 | <0.0001 |
| Segmenting Nonwords | Controls | 34 | 14.47 | 4.22 | .72 | |
| | Tune Deaf | 35 | 9.11 | 4.23 | .71 | <0.0001 |
| Syllable Segmentation | Controls | 34 | 18.91 | 2.02 | .34 | |
| | Tune Deaf | 35 | 15.31 | 5.32 | .90 | <0.0001* |

p-values derived from t-tests; * denotes p-values from the non-parametric Wilcoxon test when scores were not normally distributed.

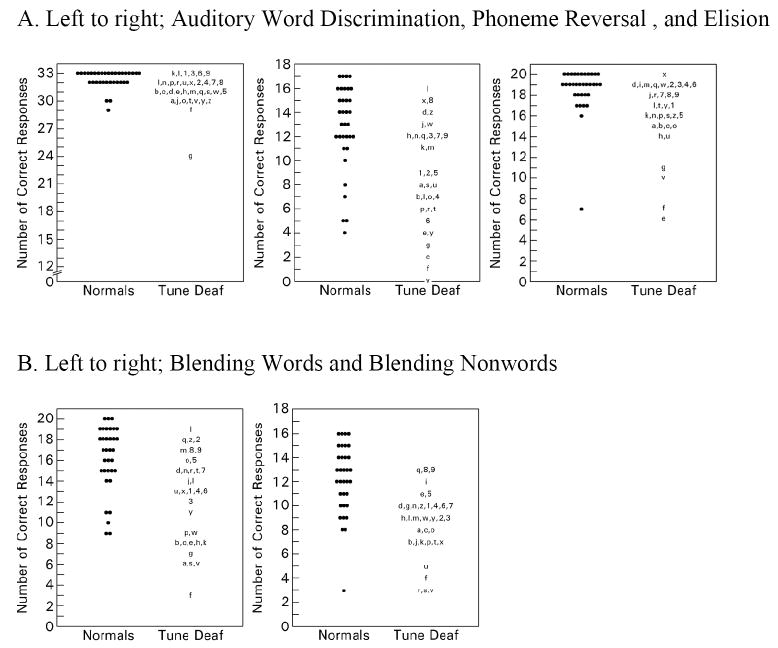

Five measures of phonological awareness were administered to each participant in each group (Figures 1A and 1B): auditory word discrimination, elision, phoneme reversal, blending words, and blending nonwords. All five tests revealed a highly significant difference (p<.0001) between the tune deaf group and normal controls. On the test for auditory word discrimination, the score was abnormal (defined as below the 7th percentile) for only one of 35 tune deaf participants, and normal for all other tune deaf individuals as well as for all members of the control group (30.8 ± 2.6 vs. 32.3 ± 0.98 for the tune deaf vs. control groups, respectively). On the test of elision, nine of 35 individuals with tune deafness, but only one of the 34 controls, scored below the normal range (16.2 ± 3.3 vs. 18.5 ± 2.3 for the tune deaf vs. control groups, respectively). Phoneme reversal scores were below normal for 14 of 35 participants with tune deafness and for four of 34 controls (8.86 ± 4.2 vs. 12.8 ± 3.5 for the tune deaf vs. control groups, respectively). The scores on the blending words and blending nonwords subtests are shown in Figure 1B. Fourteen of 35 tune deaf participants scored below the 7th percentile in the blending words subtest, while five of 34 controls scored below normal (12.4 ± 4.3 vs. 16.2 ± 3.1 for the tune deaf vs. control groups, respectively). The blending nonwords subtest scores were below normal for 14 of 35 tune deaf participants, but for only three of 34 controls (8.57 ± 2.7 vs. 12.2 ± 2.9 for the tune deaf vs. control groups, respectively).

Figure 1.

Comparison of normal and tune deaf subjects on tests of phonological awareness. The scores of each normal subject are denoted by black circles. The scores of each tune deaf subject are denoted by an individual identifier, either a letter A-Z or number 1-9. A given individual identifier denotes the same individual in all test results.

Despite substantial overlap in the distributions of scores of the tune deaf and control groups on these five tests, the mean scores of the two groups differed at a significance level of p<0.0001.

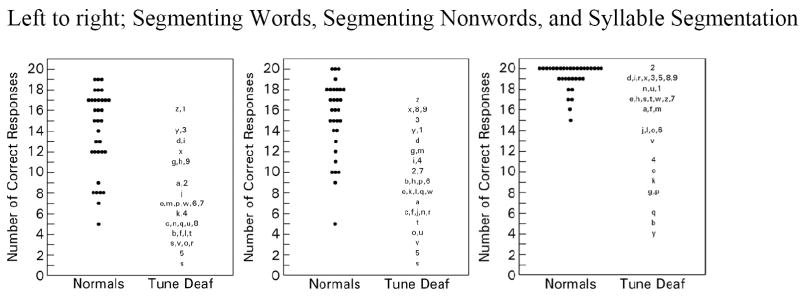

Three measures of phonemic awareness were administered to each participant in each group (Figure 2): segmenting words, segmenting nonwords, and syllable segmentation. Scores for segmenting words were below normal for 25 of 35 tune deaf participants and for seven of 34 controls (7.5 ± 4.1 vs. 14.0 ± 4.0 for the tune deaf vs. control groups, respectively). Twenty-eight of 35 tune deaf participants and seven of 34 normal controls scored below normal on segmenting nonwords (9.1 ± 4.2 vs. 14.5 ± 4.2 for the tune deaf vs. control groups, respectively). Nine of 35 tune deaf participants and one of 34 normal controls scored below the 7th percentile on the syllable segmentation task (15.3 ± 5.3 vs. 18.9 ± 2.0 for the tune deaf vs. control groups, respectively).

Figure 2.

Comparison of normal and tune deaf subjects on tests of phonemic awareness

Thus, significant differences between the tune deaf and control groups were found in all five measures of phonological awareness and all three measures of phonemic awareness, with the tune deaf group performing significantly worse than the normal control group on all measures.

4. Discussion

The tune deaf subjects in this study demonstrated significant deficits in phonological awareness, i.e., the awareness of, and access to, the sound structures in one’s repertoire (Lennon & Slesinski, 2001; Torgensen et al., 1994). Musical pitch discrimination and auditory discrimination of speech and non-speech sounds involve distinguishing subtle differences in the sound of musical notes and speech sounds, respectively. These sounds may be located at the beginning, in the middle, or at the end of melodies or words. Auditory discrimination of speech and non-speech sounds is used to manipulate phonemes within spoken words and to differentiate among phonemes. Deficits in the discrimination of speech and non-speech sounds may affect how a person understands speech, i.e., spoken words, as well as notes and melodies.

We found that tune deaf subjects have significant deficits in phonemic awareness. Composite scores of phonemic awareness showed abnormalities in 80% of the tune deaf subjects. By contrast, only 29% of control subjects demonstrated abnormalities in segmenting words, segmenting nonwords, or syllable segmentation. These findings complement the results of other studies that suggest that phonological awareness and music perception share some of the same auditory mechanisms (Anvari et al., 2002; Shastri et al., 1999).

It was previously reported that auditory short-term memory, as measured by Memory for Digits and Nonword Repetition, and visual long-term memory as measured by Rapid Naming Tasks were normal in tune deaf subjects (Jones et al., submitted). Thus, auditory short-term memory that is relatively independent of phonological information (for example, digit span) and visual long-term memory do not appear to be contributing factors to tune deafness.

One area of interest has been the degree to which speech and musical sound comprehension and processing overlap the functions required for reading (Anvari et al., 2002). The tune deaf group displayed significant deficits on all measures of phonological awareness and phonemic awareness, and had poorer scores than the control group on tasks of discrimination, manipulation, segregation, and amalgamation of speech sounds in words and syllables. These abilities are the building blocks for reading development (Catts, 1989), and given the association of speech and reading skills, it will be important to better understand the possible contribution of pitch perception deficits to reading difficulties. Although the present study did not assess reading skills in tune deaf subjects, the data collected support the view that music perception is correlated with phonological awareness. The tasks used in this study measured basic abilities used during early reading development, not reading skills or fluency. Although the relationship between deficits in musical pitch perception and reading skills remains unclear, our data are consistent with the view that music perception skills are related to the auditory processing skills and cognitive mechanisms utilized in phonological awareness.

Our results provide strong evidence that deficits in musical pitch perception impact functions necessary for the processing and production of speech sounds. We believe our findings will be important for future research on deficits in musical pitch perception that focuses on their relationship to speech and language disorders in children and adults. It is important to determine which other processing components (e.g., reading and spelling) are uniquely involved in deficits in musical pitch perception and which are not. Studies of literacy in young adults with deficits in pitch perception are especially warranted, to understand the effects of tune deafness on this function in adults before the onset of age effects on hearing. Conversely, it will be important to investigate musical pitch perception and auditory and phonological processing in subjects with dyslexia. This may provide clues as to the roots of brain specialization for music and speech processing, together with the impact this specialization may have on reading and language perception, comprehension, and development. It will also be important to investigate speech and language disorders in relation to musical pitch ability. Previous research has demonstrated that melodic intonation therapy (MIT) can be effective in facilitating speech recovery in patients with aphasia and in children with apraxia of speech. However, no research suggests that MIT is effective in individuals who demonstrate auditory disorders or who are tune deaf with auditory processing disorders. Our results support the need for further studies to learn how therapies such as MIT affect musical pitch abilities and auditory and phonological abilities in tune deaf individuals pre- and post-treatment.

Conclusions

Deficits in musical pitch perception are accompanied by deficits in the perception and processing of many other types of sounds, including speech sounds. Thus tune deafness appears to be highly syndromic, and may best be labeled as such.

Acknowledgments

We thank A. Griffith and A. Madeo for helpful comments on the manuscript, and the volunteers who served as subjects in this study.

References

- Adobe Audition for Windows. Audition 1.0. Adobe System Incorporated; 345 Park Avenue, San Jose California 95110: 2001.

- Allen G. Note-deafness. Mind. 1878;3:157–67.

- Anvari SH, Trainor LJ, Woodside J, Levy BA. Relations among musical skills, phonological processing, and early reading ability in preschool children. Journal of Experimental Child Psychology. 2002;83:111–30. doi: 10.1016/s0022-0965(02)00124-8.

- Ayotte J, Peretz I, Hyde K. Congenital amusia: A group study of adults afflicted with a music-specific disorder. Brain. 2002;125:238–51. doi: 10.1093/brain/awf028.

- Catts H. Phonological processing deficits and reading disabilities. In: Kahmi AG, Catts HW, editors. Reading disabilities: A developmental language perspective. Boston: Little-Brown; 1989. pp. 101–31.

- de Cheveigné A. Pitch Perception Models. In: Plack C, Oxenham A, editors. Pitch. Chapter 6. New York: Springer Verlag; 2004.

- Drayna D, Manichaikul A, de Lange M, Sneider H, Spector T. Genetic correlates of musical pitch recognition in humans. Science. 2001;291:1969–72. doi: 10.1126/science.291.5510.1969.

- Foxton JM, Dean JL, Gee R, Peretz I, Griffiths TD. Characterization of deficits in pitch perception underlying ‘tone deafness’. Brain. 2004;127:801–10. doi: 10.1093/brain/awh105.

- Foxton JM, Brown A, Chambers S, Griffiths T. Training improves acoustic pattern perception. Current Biology. 2004;14:322–325. doi: 10.1016/j.cub.2004.02.001.

- Freyman RL, Nelson DA. Frequency discrimination as a function of signal frequency and level in normal-hearing and hearing-impaired listeners. Journal of Speech and Hearing Research. 1991;34:1371–1386. doi: 10.1044/jshr.3406.1371.

- Gardner MF. Test of Auditory-Perceptual Skills. Revised edition. Austin, Texas: Pro-Ed; 1997.

- Hyde KL, Peretz I. “Out-of-Pitch” but still “In-Time”: An Auditory Psychophysical Study in Congenital Amusic Adults. Annals of the New York Academy of Science. 2003;999:173–176. doi: 10.1196/annals.1284.023.

- Jones J, Zalewski C, Brewer C, Lucker J, Drayna D. Audiological and auditory processing characterization of individuals with deficits in musical pitch recognition. Ear & Hearing. 2008 in press.

- Kalmus H, Fry DB. On tune deafness (dysmelodia): frequency, development, genetics and musical background. Annals of Human Genetics. 1980;43:369–382. doi: 10.1111/j.1469-1809.1980.tb01571.x.

- Koike K, Hurst M, Wetmore S. Correlation between the American Academy of Otolaryngology Head and Neck Surgery five-minute hearing test and standard audiologic data. Otolaryngology-Head and Neck Surgery. 1994;111:625–632. doi: 10.1177/019459989411100514.

- Koelsch S, Gunter TC, Cramon DY, Zysset S, Lohmann G, Friederici AD. Bach speaks: A cortical “language-network” serves the processing of music. NeuroImage. 2002;17:956–966.

- Koelsch S, Gunter T, Friederici AD, Schroger E. Brain indices of music processing: “nonmusicians” are musical. Journal of Cognitive Neuroscience. 2000;12:520–541. doi: 10.1162/089892900562183.

- Lennon JE, Slesinski C. Comprehensive Test of Phonological Processing (CTOPP) cognitive-linguistic assessment of severe reading problems. Dumont/Willis; 2001.

- Levitin DJ, Menon V. Musical structure is processed in “language” areas of the brain: a possible role for Brodmann Area 47 in temporal coherence. NeuroImage. 2003;20:2142–2152. doi: 10.1016/j.neuroimage.2003.08.016.

- Nie NH, Hull H, Bent DH. Statistical Package for the Social Sciences (SPSS). SPSS Inc; Headquarters Chicago, Illinois 60606: 2006.

- Overy K. Dyslexia and music: From timing deficits to musical intervention. Annals of the New York Academy of Science. 2003;999:497–505. doi: 10.1196/annals.1284.060.

- Patel AD, Daniele JR. An empirical comparison of rhythm in language and music. Cognition. 2003;87:835–845. doi: 10.1016/s0010-0277(02)00187-7.

- Patterson RD, Uppenkamp S, Johnrude IS, Griffiths TD. The processing of temporal pitch and melody information in auditory cortex. Neuron. 2002;36:767–776. doi: 10.1016/s0896-6273(02)01060-7.

- Peretz I, Ayotte J, Zatorre RJ, Mehler J, Ahad P, Penhune V. Congenital amusia: a disorder of fine-grained pitch discrimination. Neuron. 2002;33:185–191. doi: 10.1016/s0896-6273(01)00580-3.

- Peretz I. Brain specialization for music. [Review] Neuroscientist. 2002;8:372–380. doi: 10.1177/107385840200800412.

- Shamma SA. Topographic Organization is essential for pitch perception. Proceedings of the National Academy of Science, USA. 2004;101:1114–1115. doi: 10.1073/pnas.0307334101.

- Shastri LC, Shuangyu C, Greenberg S. Syllable Detection and Segmentation Using Temporal Flow Neural Networks. International Congress of Phonetic Sciences. 1999:1721–1724.

- Thorum AR. Fullerton Language Test for Adolescents, FLTA-2. Second Edition. Palo Alto, CA: Consulting Psychologist Press; 1986.

- Torgensen JK, Wagner RK, Rashott CA. Longitudinal studies of phonological processing and reading. Journal of Learning Disabilities. 1994;27:276–286. doi: 10.1177/002221949402700503.

- Wong PCM, Skoe E, Russo NM, Dees T, Kraus N. Musical experience shapes human brainstem encoding of linguistic pitch patterns. Nature Neuroscience. 2007;10:420–422. doi: 10.1038/nn1872.

- Zatorre RJ, Belin P, Penhune VB. Structure and function of auditory cortex: music and speech. TRENDS in Cognitive Sciences. 2002;6:37–46. doi: 10.1016/s1364-6613(00)01816-7.

- Zatorre RJ, Bouffard M, Ahad P, Belin P. Where is ‘where’ in the human auditory cortex. Nature Neuroscience. 2002;5:905–909. doi: 10.1038/nn904.

- Zatorre RJ. Neural specializations for tonal processing. Annals of the New York Academy of Science. 2001;930:193–210. doi: 10.1111/j.1749-6632.2001.tb05734.x.