Abstract

The auditory cortex is critical for perceiving a sound's location. However, there is no topographic representation of acoustic space, and individual auditory cortical neurons are often broadly tuned to stimulus location. It thus remains unclear how acoustic space is represented in the mammalian cerebral cortex and how it could contribute to sound localization. This report tests whether the firing rates of populations of neurons in different auditory cortical fields in the macaque monkey carry sufficient information to account for horizontal sound localization ability. We applied an optimal neural decoding technique, based on maximum likelihood estimation, to populations of neurons from 6 different cortical fields encompassing core and belt areas. We found that the firing rates of neurons in the caudolateral area contain enough information to account for sound localization ability, but those of neurons in other tested core and belt cortical areas do not. These results provide a detailed and plausible population model of how acoustic space could be represented in the primate cerebral cortex and support a dual stream processing model of auditory cortical processing.

Keywords: auditory cortex, macaque, population model, sound localization

Sound localization is a fundamental function of the auditory system in terrestrial vertebrates. Unilateral lesions of the auditory cortex have been shown to produce deficits in contralesional space in a variety of species (1–4), indicating a critical role for auditory cortex. However, despite considerable effort to determine how the cerebral cortex processes acoustic space, our understanding remains rudimentary (e.g., 5–17). From these studies and others, we know that (i) acoustic space is not topographically organized in the mammalian cerebral cortex and (ii) single neuron spatial receptive fields are very broad and, in themselves, unlikely to account for localization ability. Thus, some form of population code is likely used to represent acoustic space in the auditory cortex. Several models have been proposed (e.g., 13, 17), yet they do not illustrate how such a coding scheme could actually work, and it remains unclear which aspects of the neuronal response carry the information. Recent studies in extrastriate visual cortex have shown that a logarithmic maximum-likelihood estimator could account for direction selectivity of visual motion based on the firing rate of populations of neurons (18). The perception of azimuthal acoustic space can be similarly modeled as direction over 360°, and such an estimator may be a common cortical process for encoding secondary stimulus properties.

A recent study examining single neuron recordings in the macaque auditory cortex (22) was consistent with the hypothesis put forth by Rauschecker and others (19–21) that acoustic space could be represented in a hierarchical fashion, starting in the core field(s) of auditory cortex and progressing to the belt and para-belt fields. That study showed that spatial tuning of single neurons was sharpest in the caudolateral (CL) and caudomedial (CM) fields compared with core and more rostral (R) belt areas, but that study did not address how acoustic space could be represented.

In the present study, the same neuronal responses described earlier (22) are used in a population coding model similar to that shown previously in extrastriate middle temporal cortex (MT) (18). Acoustic space was tested at 4 different stimulus intensities in a plane through 0° elevation and 360° in azimuth. Correlates between the population responses in 6 different cortical areas were compared to psychophysical studies from normal human subjects localizing the same acoustic stimuli. Specifically, we tested whether the cortical population code could accurately predict the stimulus location consistent with 2 basic aspects related to the sound localization performance of normal and lesioned primates: (i) contralateral space is better represented than ipsilateral space, giving rise to contralesional deficits (1–4); and (ii) higher-intensity stimuli are better localized than lower-intensity stimuli (23–27).

Results

Human Psychophysics.

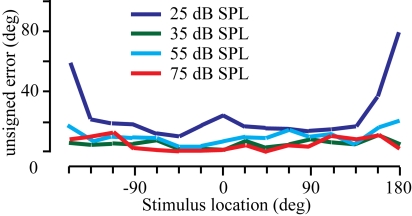

Psychophysical data were collected from 7 normal human subjects using 16 spatial locations and 4 absolute stimulus intensities. These data were consistent with previous reports of the effects of intensity on sound localization performance in both humans (23–26) and monkeys (27). The localization results are summarized across subjects in Fig. 1. The stimulus location is shown on the x axis, and the mean unsigned error across subjects is plotted on the y axis. Three main observations can be made. First, localization performance improved as the intensity increased, as evidenced by the means nearing zero (compare red to dark blue lines). Second, localization was best along the midline and decreased in the far periphery in the back quadrant. Third, although there were considerable individual differences (not shown), this variability decreased with increasing stimulus intensity. These observations were substantiated by statistical analysis, in which there was a main effect of stimulus intensity (ANOVA; F = 62.7; df = 3; P < 0.01) and stimulus location (ANOVA; F = 7.2; df = 15; P < 0.01). Post-hoc Tukey analysis showed that there was no difference in performance between 75 and 55 dB stimuli, but that performance was significantly worse as the intensity decreased (all P values < 0.01). Further analysis also showed that there was no significant difference between left and right locations (−157.5° to −22.5° vs. +22.5° to +157.5°; P > 0.05), but performance directly behind the subject was significantly worse than directly in front for all intensities except 75 dB (t test, P < 0.01 with Bonferroni correction).

Fig. 1.

Sound localization performance. Mean unsigned error is shown for the 7 subjects localizing broadband noise at 4 different stimulus intensities (Inset).

Physiological Results.

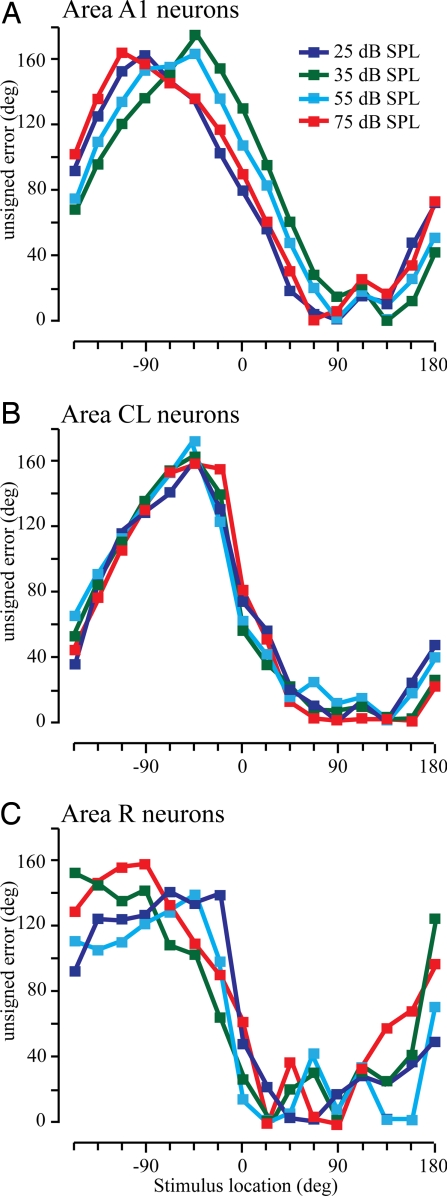

These results are based on the recordings of 970 neurons taken from 6 functionally and histologically defined cortical areas in the left hemisphere of 3 monkeys (see Methods and ref. 22). These data were restricted to neurons whose greatest response was statistically significantly different from the spontaneous activity (t test, P < 0.01). Additionally, neurons had to have a non-zero spontaneous rate as the analysis incorporated some of this activity to prevent taking the logarithm of zero, which is negative infinity (see Methods). The maximum likelihood estimator was used for each cortical area separately, but the neurons within a cortical area and the single trials were randomly selected for a total of 128 neurons comprising the input. This estimator was then repeated 1,000 times to generate mean unsigned errors as a function of stimulus location, shown in Fig. 2. In each panel, the mean unsigned error is shown as a function of the actual stimulus location, with each color representing a different stimulus intensity. Fig. 2A shows the results using the activity of primary auditory cortex (A1) neurons. It is clear from this and each of the other 2 panels that the model accuracy is much worse in ipsilateral space (Fig. 2 Left) compared with contralateral space, consistent with previous lesion data [see SI Text for a more complete description of the ipsilateral errors]. The model performance in contralateral space using CL neurons (Fig. 2B) is much better than when using A1 neurons (Fig. 2A), and much worse when using activity from R neurons (Fig. 2C).

Fig. 2.

Mean unsigned errors from the maximum likelihood estimator across stimulus locations. Colored lines correspond to different stimulus intensities: A1 neurons (A), CL neurons (B), and R neurons (C).

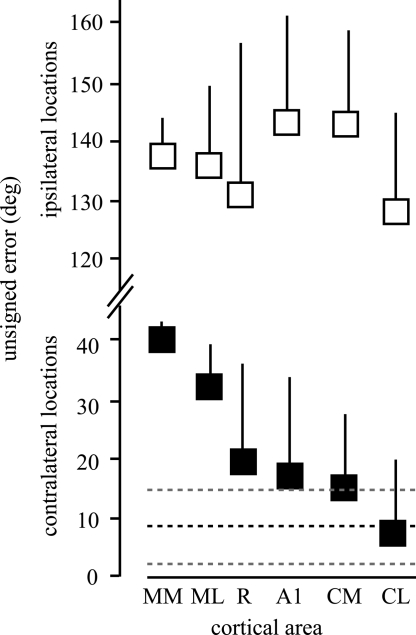

To determine if the information in certain cortical areas is consistent with sound localization performance, we compared the mean unsigned error of the model predictions for each cortical area to the unsigned error taken from the human subjects. Given the differences in the model performance between contralateral and ipsilateral locations, which were not seen psychophysically, the estimates were pooled from the 5 most ipsilateral locations (−45° to −135°) and the 5 most contralateral locations (+45° to +135°). The results are shown in Fig. 3. The ipsilateral (open squares) and contralateral (closed squares) data are shown on the same scale (note the y axis). Ipsilateral locations were poorly discriminated by the model regardless of the population of neurons that contributed to the computation. In contrast, the model did much better for all cortical areas when contralateral locations were estimated. The model had intermediate performance when A1 neurons were used, with the mean slightly greater than the mean plus 1 SD from the human observers. The model performed less accurately in R, ML (mediolateral), and MM (mediomedial) areas and more accurately in caudal cortex in the CM and CL fields. The model estimate based on the population of CL neurons was nearly identical to the psychophysical results. Paired t tests showed that the estimates were not significantly different from the human observers (P > 0.05) only when the population of CL neurons was used. This shows that the spike rates of populations of neurons in CL contain enough information to account for sound localization performance in azimuth across a broad range of stimulus intensities using a relatively simple spike rate code.

Fig. 3.

Mean unsigned errors from the maximum likelihood estimator using neuronal populations from different cortical areas. Means and SDs from human subjects are shown as heavy and light dashed lines, respectively. Ipsilateral (open squares) and contralateral (filled squares) locations are shown. Note the break in the y axis. Paired t tests between the model (contralateral) and human results resulted in P values of 0.0002, 0.0035, 0.0159, 0.0425, 0.0395, and 0.5233 for MM, ML, R, A1, CM, and CL, respectively.

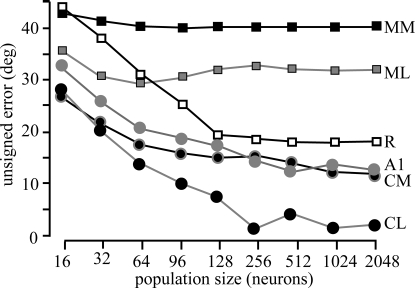

A final consideration is how many cortical neurons are necessary for this performance. One possibility is that simply having a large number of neurons would ultimately result in similar model performance. To investigate this issue, we calculated the model error based on the responses of 16 to 2,048 randomly selected neurons (Fig. 4). For all cortical areas, asymptotic performance was reached by 128 to 256 neurons, as minimal gains were observed when this number was increased by nearly 10- to 20-fold. It is also clear that increasing the number of neurons does not bring all cortical areas to the same level of performance, as the accuracy of the model predictions based on MM, ML, and R neurons was consistently worse than that based on CL, CM, and even A1 neurons. This provides further evidence that the spatial processing of neurons in CL is significantly different from that of neurons in core and more rostral or medial belt fields.

Fig. 4.

Effect of population size on estimates. Each line corresponds to populations of neurons from different cortical areas. Population size has little effect over 100 neurons.

Discussion

The potential for a spike-based population representation of acoustic space in the alert primate was tested across 6 cortical areas using broadband noise stimuli at 4 different intensities in 3 monkeys. These electrophysiological results were compared with psychophysical results in human subjects localizing the same acoustic stimuli. It was found that the model showed the most accurate performance based on the population responses of neurons in CL, but not the core and other tested belt fields. These data present a plausible model of how the mammalian cerebral cortex could represent acoustic space.

Population Modeling.

Population modeling used here is similar to that used in extrastriate cortex (18). It is a relatively straightforward procedure in which weight is given to each neuron based on the spatial tuning function of that neuron and the direction of the stimulus, and uses single trial estimates similar to the information available to the monkey on a single trial. Visual motion direction discrimination is in principle similar to horizontal sound localization, given that both must decode a stimulus attribute in 2D space. There are clear differences between the 2 sensory systems in the parameters used as well as the neural elements involved in making these computations. Nonetheless, the same population model can account for perceptual abilities based on the neuronal responses in both MT of extrastriate cortex and CL of auditory cortex, and with roughly the same population size, 100 to 200 neurons (32). This indicates that such 2D representations may be a general neocortical mechanism to represent secondary stimulus features. One important feature of this model is that it does not require a topographic representation, which is clearly not present for acoustic space in the mammalian cerebral cortex, and thus can likely be applied in a wide number of sensory cortices.

The performance of the population code in the present context was tested against 2 known lesion and psychophysical characteristics of sound localization. First, unilateral lesions of auditory cortex result in a deficit in sound localization ability in contralesional space, but not ipsilesional space (1–4). The model was extremely poor at predicting the location of stimuli in ipsilateral space but could accurately encode contralateral space, consistent with the lesion results. This is in contrast to models using spike rate as well as spike timing and pattern, wherein ipsilateral locations are discriminated as well as contralateral locations (e.g., 14, 16–17). Second, sound localization ability is degraded at low stimulus intensities (23–27). The results from this study also demonstrate an influence of stimulus intensity on sound localization ability that was consistent across all studied subjects.

The population model presented here is a quantitative demonstration that the spike rate alone of cortical neurons contains sufficient information to account for sound localization across locations and stimulus intensities (see SI Text for additional discussion). Previous studies in cats have suggested that spike timing and pattern could carry more location information than firing rate alone (e.g., 7–9, 14–17). The present study shows that spike rate alone is sufficient to describe localization ability under these circumstances, but does not eliminate the potential for spike pattern contributing to localization ability, particularly in other contexts. However, it is unlikely that spike latency is a strong contributor to this encoding, as spatial receptive fields based on spike latency are much broader than those based on spike rate (22). It may be that these differences are caused by species (and anesthesia) differences, although there are commonalities between the 2 classes of studies. For example, Stecker et al. (17) found that neurons in different cortical fields of the cat, particularly the posterior auditory field, carried more spatial information than A1, similar to the results here with the differences between the caudal and rostral fields.

Other population models could potentially yield similar results. We had also attempted to predict stimulus location based on a population vector model similar to that done in the motor cortex (28, 29) or using a linear pattern discriminator model (e.g., 30) (Figs. S1, S2, and S3). One difficulty with these approaches is that the model accurately predicted the location across all stimulus locations if one used a winner-take-all criterion (see SI Text). However, to achieve results similar to the psychophysical experiments, one has to select an arbitrary threshold, which differed between the 2 techniques. An advantage of the technique used here is that the model uses a winner-take-all criterion, which has precedent in neuronal correlates of behavior (e.g., 31). A second advantage is that it uses the real distributions of neurons with respect to their best direction, firing rate, and spatial tuning, which varies considerably across cortical areas (22). These differences between neural populations in distinct cortical areas underlie the observed differences in model performance.

Hierarchical Processing in Auditory Cortex.

Previous anatomical and physiological data suggest that acoustic information is processed in parallel and hierarchical “streams,” in which information is processed from the core areas to the belt areas to the parabelt areas and ultimately to parietal and frontal lobe regions (see 19–22, 33, 34). This predicts that neurons in CL would better represent acoustic space than neurons in A1, as well as other rostral cortical fields, which is exactly the result that we observed. This suggests that CL receives the output of A1 neurons, further refines the spatial representation, and then relays this information to the next cortical area(s), such as the caudal parabelt. Our study does not demonstrate the mechanism of spatial refinement between A1 and CL, but it does show that spatial representations in CL have been made more accessible to a biologically plausible population decoder of firing rate alone. Finally, it is likely that area CL does not contain the only representation of acoustic space, and although we predict that it is necessary for contralateral sound localization, it is probably not sufficient (although see ref. 2).

Although the results of this study are consistent with the idea that spatial information is processed along a caudal pathway, it is also clear that the rostral and medial areas also have some representation of acoustic space (see SI Text). It is likely that some spatial information is retained in these non-caudal fields, and it is similarly likely that some non-spatial information is carried along in the caudal fields. For example, in environments with multiple acoustic objects, attention may operate in these non-caudal regions to later re-integrate this information at higher levels in the nervous system.

Conclusions

This study shows that the population of neurons in CL contains enough information in the spike rate to account for sound localization in contralateral space. This finding leads to several testable predictions. First, the model should remain robust across localization tasks that vary in difficulty, such as those using narrow-band stimuli rather than the broadband stimuli used here. Second, lesions restricted to the caudal belt areas should result in much more profound sound localization deficits compared with lesions of more rostral areas. Third, the model predicts that restricted lesions, or local interventions such as micro-stimulation, will likely not cause any perceptual effects, as the encoding occurs over a large cortical area, such as area CL.

Methods

Apparatus and Stimuli.

All experiments were performed in a double-walled acoustic chamber (IAC) measuring 2.4 × 3.0 × 2.0 m (length × width × height; inner dimensions) and lined with echo-attenuating foam. Speakers with a flat frequency profile from 500 to 12,000 Hz and a 6 dB/octave roll-off at higher and lower frequencies were located 1 m from the center of the subject's head every 22.5° at the elevation of the interaural axis. Acoustic stimuli (200 ms duration unfrozen Gaussian noise with 5-ms linear on/off ramps) were generated using TDT hardware and software (TDT) controlled by a PC. Stimulus intensities were 25 dB, 35 dB, 55 dB, and 75 dB sound pressure level (average intensity; A-weighted) measured at the center of the apparatus in the absence of the monkey, and were randomly varied ±2 dB in 0.5-dB steps across presentations.

Human Psychophysics.

Seven subjects (3 males) ranging in age from 23 to 39 years participated. All subjects provided informed consent and all procedures were approved by the institutional review board and followed Public Health Service and Society for Neuroscience guidelines. All subjects had normal or corrected-to-normal vision and no known auditory deficits. Subjects sat in the same sound booth and were presented with the same stimuli as in the physiological experiments described later. A blinking LED oriented the subject to a consistent initial head location that was monitored via closed circuit video by the experimenter. The subject depressed a button to initiate a trial and cause the LED to remain on continuously. After a variable delay (300–500 ms), a single 200-ms noise burst was randomly presented from one of the 16 speakers at one of 4 different intensities. The subjects were provided with a sheet representing the 256 different trials (4 trials per stimulus) and a small figurine that showed an overhead view of the apparatus and numbered the speakers from 1 to 16 with 8 being directly in front of the subject and 4 and 12 being directly left and right, respectively. Subjects were asked to write down the number of the speaker that corresponded to the location of the previous stimulus. Subjects were told that they could turn to look at the speakers behind them if they wished, but to return to looking forward before the next trial. Each subject was tested on 5 different sessions for a total of 20 trials per stimulus.

Psychophysical Data Analysis.

The unsigned error was calculated for each stimulus and location by multiplying the probability that the subject selected a particular location by the distance in absolute degrees from the actual stimulus location. Trials when the subject reported that they did not hear a sound were not included in the analysis. This occurred no more than 3 times in a single subject and never for the same location on 2 trials.
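The calculation described above can be sketched in a few lines of Python. This is only an illustration: the function names and the response tally are hypothetical, and it assumes that angular distance is taken the short way around the circle (so that −157.5° and 180° are 22.5° apart), which the text does not state explicitly.

```python
def circular_distance_deg(a, b):
    """Smallest absolute angular separation between two azimuths, in degrees."""
    d = abs(a - b) % 360.0
    return min(d, 360.0 - d)


def mean_unsigned_error(actual_deg, response_counts):
    """Probability-weighted unsigned error for one stimulus.

    response_counts maps each reported azimuth (degrees) to the number of
    trials on which it was chosen. Trials with no report are simply absent.
    """
    total = sum(response_counts.values())
    if total == 0:
        raise ValueError("no valid responses for this stimulus")
    return sum(
        (count / total) * circular_distance_deg(actual_deg, reported)
        for reported, count in response_counts.items()
    )


# Hypothetical tally: 20 trials at +45 deg, 15 correct and 5 one speaker off.
example = {45.0: 15, 67.5: 3, 22.5: 2}
error = mean_unsigned_error(45.0, example)
```

With this made-up tally, a quarter of the responses are off by one 22.5° speaker position, so the weighted error is one quarter of 22.5°.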

Animals and Tasks.

The neural data from 3 monkeys presented here have been described in detail previously (22). Two monkeys were trained to depress a lever to initiate a trial, then after a 300- to 500-ms delay, 3 to 7 stimuli of different intensities and locations were presented (i.e., S1 stimuli) with an inter-stimulus interval of 800 ms before the same stimulus intensity and location was presented a second time (i.e., S2 stimuli). Immediately following the S2 stimulus offset, the solenoid providing fluid reinforcement would open briefly and audibly. If the monkey released the lever within 800 ms, the solenoid would open again for a longer time to provide the fluid reinforcement. Failure to do so, or responses during the S1 stimulus, resulted in a brief time-out. Monkeys were thus attending to the acoustic environment but were not required to discriminate the location of the stimulus. We were unable to train a third monkey to perform this task, and this animal received a fluid reward after 3 to 7 stimuli. Continuous monitoring via closed-circuit video and the fact that the monkey was receiving fluids every several seconds assured that it remained alert throughout the session. All animal procedures were approved by the institutional Animal Care and Use Committee and followed Public Health Service and Society for Neuroscience guidelines.

Data Recording.

Each monkey was implanted with a recording cylinder over the left hemisphere oriented in the vertical plane to allow the electrode to penetrate the superior temporal gyrus from a roughly orthogonal direction (35, 36). Neuronal signals were recorded from tungsten microelectrodes (FHC), filtered, amplified, and displayed on an oscilloscope and audio monitor. Search stimuli consisted of tone and/or noise bursts, band-passed noise, clicks, and vocalizations. Single neuron waveforms were isolated using a time-amplitude window discriminator (BAK). Action potentials were time stamped on the computer at 1 ms resolution from stimulus onset and neural data were recorded for 350 ms following stimulus onset. All data in this report are from neurons in which the unit isolation was stable and data were collected for at least 8 randomly interleaved S1 stimuli of each of the 16 locations and 4 intensities. In addition, one trial type in which no stimulus was presented was included to measure the spontaneous activity. The total number of neurons analyzed in each cortical field was as follows: A1, 325; R, 96; ML, 113; MM, 42; CL, 185; and CM, 209.

Following all experiments, monkeys were given an overdose of sodium pentobarbital and perfused through the heart with normal saline solution followed by 4% paraformaldehyde in 0.1 M phosphate buffer, pH 7.2. The brains were histologically processed to reconstruct electrode tracts.

Optimally Decoding the Population Response.

We used an optimal decoding technique to quantify how well a neural population could identify stimulus direction on a single trial (18). This technique relies on maximum likelihood estimation (MLE), wherein log-likelihood functions reflect the probability that a certain stimulus elicited the observed neural response.

We defined neural responses as action potentials occurring 0 to 300 ms following stimulus onset, which would include any offset responses (37). Tuning curves were then identified for each stimulus attenuation as the mean number of action potentials per trial as a function of direction. Notice that tuning curves with values equal to zero present a numerical difficulty for log-likelihood approaches, as the log of zero is negative infinity. We thus added to each neuron's tuning curve a small offset, equal to the neuron's spontaneous rate scaled by its Poisson probability for an observation of zero spikes. For example, if a neuron had spontaneous rate 4.75 spikes (the empirical mean across all neurons), this added 0.04 spikes to all directions of its tuning curve. We also excluded neurons with zero spontaneous rate (A1, n = 2; R, n = 1; ML, n = 1; MM, n = 0; CL, n = 1; CM, n = 3 neurons).
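The offset can be checked numerically. The sketch below assumes that "scaled by its Poisson probability for an observation of zero spikes" means multiplying the spontaneous rate by exp(−rate), which is the Poisson probability of zero spikes at that mean; this reading reproduces the 0.04-spike figure quoted above. The function names are illustrative only.

```python
import math


def zero_spike_offset(spontaneous_rate):
    """Spontaneous rate times the Poisson probability of observing 0 spikes."""
    return spontaneous_rate * math.exp(-spontaneous_rate)


def add_offset(tuning_curve, spontaneous_rate):
    """Return a copy of the tuning curve with the offset added at every direction."""
    offset = zero_spike_offset(spontaneous_rate)
    return [rate + offset for rate in tuning_curve]


# Worked example from the text: the mean spontaneous rate of 4.75 spikes.
offset = zero_spike_offset(4.75)

# A hypothetical tuning curve containing a zero, which is now safe to log.
shifted = add_offset([0.0, 2.0, 5.0], 4.75)
```

Because the offset is strictly positive for any non-zero spontaneous rate, every entry of the shifted curve has a finite logarithm, which is also why neurons with zero spontaneous rate had to be excluded.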

We performed the MLE calculation on each stimulus direction by using “single-trial” population responses. As the neurons were not recorded simultaneously, population responses ignore interneuronal correlations as in the work of Jazayeri and Movshon (18). We analyzed cortical fields and the 4 attenuations independently. A population response consisted of one random trial from each neuron in a randomly sampled population. Population size was set to 128 neurons for most analyses, although other numbers were tested as described for Fig. 4. When a cortical field had fewer neurons than the desired population size, its data were replicated until the number of neurons equaled or exceeded the population size. Every MLE calculation was repeated 1,000 times with a new, randomly selected population response. Our results are thus based on the mean and variance of the MLE estimate across 1,000 iterations.
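One way to assemble such a "single-trial" population response is sketched below. The data layout (one list of single-trial spike counts per neuron, for a fixed direction and attenuation) and the choice to draw neurons without replacement from the replicated pool are assumptions made for illustration; the text specifies only that neurons and trials were randomly selected and that small fields were replicated.

```python
import random


def sample_population_response(field_trials, population_size, rng):
    """Draw one single-trial population response.

    field_trials: one entry per neuron, each a list of single-trial spike
    counts for a fixed direction and attenuation (hypothetical layout).
    Returns a list of (neuron_index, spike_count) pairs.
    """
    n_neurons = len(field_trials)
    if n_neurons == 0:
        raise ValueError("field has no neurons")
    # Replicate the field until the pool equals or exceeds the population size.
    pool = list(range(n_neurons))
    while len(pool) < population_size:
        pool.extend(range(n_neurons))
    chosen = rng.sample(pool, population_size)
    # One random trial from each selected neuron.
    return [(i, rng.choice(field_trials[i])) for i in chosen]


rng = random.Random(0)
# Made-up spike counts for a field of 42 neurons (the size of MM), 8 trials each.
mm_trials = [[rng.randint(0, 10) for _ in range(8)] for _ in range(42)]
response = sample_population_response(mm_trials, 128, rng)
```

For a 42-neuron field and a population of 128, the pool is replicated to 168 entries, so any one neuron can contribute at most 4 times to a single population response under this scheme.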

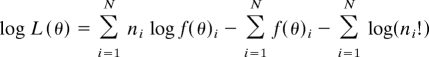

All tuning curves were scaled to have a peak equal to one. These scaled tuning curves are denoted $f_i(\theta)$, where $\theta$ is stimulus direction and the subscript $i$ indexes neuron. For each single-trial population response, each of $N$ neurons in the population responds with $n_i$ spikes to a stimulus of direction $\theta$. The log-likelihood function for any stimulus $\theta$, from equation 2 of Jazayeri and Movshon (18), is then:

$$\log L(\theta) = \sum_{i=1}^{N} n_i \log f_i(\theta) - \sum_{i=1}^{N} f_i(\theta)$$

The first right-hand term is a weighted sum of tuning curves. The second term is essential for populations with nonuniform distribution of direction preference, as when contralateral space is more strongly represented than ipsilateral space. For every one of the 1,000 iterations in each direction, the maximum likelihood estimate was defined as the peak of the log-likelihood function. Mean unsigned error is the mean difference in degrees between actual and MLE-estimated stimulus direction.
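The decoding step can be illustrated with a toy population. The sketch assumes the Poisson form of the log-likelihood, in which each neuron's spike count weights the log of its tuning curve and the summed tuning curves are subtracted, with terms that do not depend on direction dropped. The three tuning curves, the four directions, and the spike counts are invented for illustration and are not data from this study.

```python
import math


def log_likelihood(spike_counts, tuning_curves, direction_index):
    """Sum over neurons of n_i * log f_i(theta) - f_i(theta)."""
    total = 0.0
    for n_i, curve in zip(spike_counts, tuning_curves):
        f = curve[direction_index]  # must be > 0, hence the offset on tuning curves
        total += n_i * math.log(f) - f
    return total


def mle_direction(spike_counts, tuning_curves, directions):
    """Direction at the peak of the log-likelihood function."""
    scores = [
        log_likelihood(spike_counts, tuning_curves, k)
        for k in range(len(directions))
    ]
    best = max(range(len(directions)), key=scores.__getitem__)
    return directions[best]


def unsigned_error_deg(actual, estimate):
    """Absolute angular difference, taken the short way around the circle."""
    d = abs(actual - estimate) % 360.0
    return min(d, 360.0 - d)


# Toy population: 3 neurons, 4 directions, tuning curves scaled to a peak of 1.
directions = [-90.0, 0.0, 90.0, 180.0]
tuning_curves = [
    [1.0, 0.3, 0.1, 0.2],  # prefers -90 deg
    [0.2, 1.0, 0.3, 0.1],  # prefers 0 deg
    [0.1, 0.3, 1.0, 0.2],  # prefers +90 deg
]
spike_counts = [0, 1, 6]  # one hypothetical single-trial response

estimate = mle_direction(spike_counts, tuning_curves, directions)
error = unsigned_error_deg(90.0, estimate)
```

In the analysis described above, this estimate would be recomputed for each of the 1,000 randomly drawn population responses per direction, and the unsigned errors averaged.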

Acknowledgments.

The authors thank T. M. Woods, S. E. Lopez, J. H. Long and J. E. Rahman for their participation in these studies, and J. Engle for comment on previous versions of this manuscript. This work was funded by National Institutes of Health grants DC-02371, DC-00442, AG-024372 (to G.H.R.), and DC-008171 (to L.M.M.).

Footnotes

The authors declare no conflict of interest.

This article contains supporting information online at www.pnas.org/cgi/content/full/0901023106/DCSupplemental.

References

- 1.Thompson GC, Cortez AM. The inability of squirrel monkeys to localize sound after unilateral ablation of auditory cortex. Beh Brain Res. 1983;8:211–216. doi: 10.1016/0166-4328(83)90055-4. [DOI] [PubMed] [Google Scholar]

- 2.Jenkins WM, Merzenich MM. Role of cat primary auditory cortex for sound-localization behavior. J Neurophysiol. 1984;52:819–847. doi: 10.1152/jn.1984.52.5.819. [DOI] [PubMed] [Google Scholar]

- 3.Heffner HE, Heffner RS. Effect of bilateral auditory cortex lesions on sound localization in Japanese macaques. J Neurophysiol. 1990;64:915–931. doi: 10.1152/jn.1990.64.3.915. [DOI] [PubMed] [Google Scholar]

- 4.Smith AL, et al. An investigation of the role of auditory cortex in sound localization using muscimol-releasing Elvax. Eur J Neurosci. 2004;19:3059–3072. doi: 10.1111/j.0953-816X.2004.03379.x. [DOI] [PubMed] [Google Scholar]

- 5.Eisenman LM. Neural encoding of sound location: An electrophysiological study in auditory cortex (AI) of the cat using free field stimuli. Brain Res. 1974;75:203–214. doi: 10.1016/0006-8993(74)90742-2. [DOI] [PubMed] [Google Scholar]

- 6.Rajan R, Aitkin LM, Irvine DR. Azimuthal sensitivity of neurons in primary auditory cortex of cats. II. Organization along frequency-band strips. J Neurophysiol. 1990;64:888–902. doi: 10.1152/jn.1990.64.3.888. [DOI] [PubMed] [Google Scholar]

- 7.Middlebrooks JC, Clock AE, Xu L, Green DM. A panoramic code for sound location by cortical neurons. Science. 1994;264:842–844. doi: 10.1126/science.8171339. [DOI] [PubMed] [Google Scholar]

- 8.Brugge JF, Reale RA, Hind JE. The structure of spatial receptive fields of neurons in primary auditory cortex of the cat. J Neurosci. 1996;16:4420–4437. doi: 10.1523/JNEUROSCI.16-14-04420.1996. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 9.Middlebrooks JC, Xu L, Eddins AC, Greeen DM. Codes for sound-source location in nontonotopic auditory cortex. J Neurophysiol. 1998;80:863–881. doi: 10.1152/jn.1998.80.2.863. [DOI] [PubMed] [Google Scholar]

- 10.Schnupp JWH, Mrsic-Flogel TD, King AJ. Linear processing of spatial cues in primary auditory cortex. Nature. 2001;414:200–204. doi: 10.1038/35102568. [DOI] [PubMed] [Google Scholar]

- 11.Jenison RL, Reale RA, Hind JE, Brugge JF. Modeling of auditory spatial receptive fields with spherical approximation functions. J Neurophysiol. 1998;80:2645–2656. doi: 10.1152/jn.1998.80.5.2645. [DOI] [PubMed] [Google Scholar]

- 12.Skottun BC. Sound localization and neurons. Nature. 1998;393:531. doi: 10.1038/31134. [DOI] [PubMed] [Google Scholar]

- 13.Recanzone GH, Guard DC, Phan ML, Su TK. Correlation between the activity of single auditory cortical neurons and sound localization behavior in the macaque monkey. J Neurophysiol. 2000;83:2723–2739. doi: 10.1152/jn.2000.83.5.2723. [DOI] [PubMed] [Google Scholar]

- 14.Furukawa S, Xu L, Middlebrooks JC. Coding of sound-source location by ensembles of cortical neurons. J Neurosci. 2000;20:1216–1228. doi: 10.1523/JNEUROSCI.20-03-01216.2000. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 15.Reale RA, Jenison RL, Brugge JF. Directional sensitivity of neurons in the primary auditory AI cortex: Effects of sound-source intensity level. J Neurophysiol. 2002;89:1024–1038. doi: 10.1152/jn.00563.2002. [DOI] [PubMed] [Google Scholar]

- 16.Mickey BJ, Middlebrooks JC. Representation of auditory space by cortical neurons in awake cats. J Neurosci. 2003;23:8649–8643. doi: 10.1523/JNEUROSCI.23-25-08649.2003. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 17.Stecker GC, Harrington IA, Middlebrooks JC. Location coding by opponent neural populations in the auditory cortex. PLoS Biol. 2005;3:520–528. doi: 10.1371/journal.pbio.0030078. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 18. Jazayeri M, Movshon JA. Optimal representation of sensory information by neuronal populations. Nat Neurosci. 2006;9:690–696. doi: 10.1038/nn1691.

- 19. Rauschecker JP. Parallel processing in the auditory cortex of primates. Aud Neuro-Otol. 1998;3:86–103. doi: 10.1159/000013784.

- 20. Rauschecker JP, Tian B. Mechanisms and streams for processing of “what” and “where” in auditory cortex. Proc Natl Acad Sci. 2000;97:11800–11806. doi: 10.1073/pnas.97.22.11800.

- 21. Kaas JH, Hackett TA. Subdivisions of auditory cortex and processing streams in primates. Proc Natl Acad Sci. 2000;97:11793–11799. doi: 10.1073/pnas.97.22.11793.

- 22. Woods TM, Lopez SE, Long JH, Rahman JE, Recanzone GH. Effects of stimulus azimuth and intensity on the single-neuron activity in the auditory cortex of the alert macaque monkey. J Neurophysiol. 2006;96:3323–3337. doi: 10.1152/jn.00392.2006.

- 23. Altshuler MW, Comalli PE. Effect of stimulus intensity and frequency on median horizontal plane sound localization. J Auditory Res. 1975;15:262–265.

- 24. Comalli PE, Altshuler MW. Effect of stimulus intensity, frequency, and unilateral hearing loss on sound localization. J Auditory Res. 1976;16:275–279.

- 25. Su TK, Recanzone GH. Differential effect of near-threshold stimulus intensities on sound localization performance in azimuth and elevation in normal human subjects. J Assoc Res Otolaryngol. 2001;2:246–256. doi: 10.1007/s101620010073.

- 26. Sabin AT, Macpherson EA, Middlebrooks JC. Human sound localization at near-threshold levels. Hear Res. 2005;199:124–134. doi: 10.1016/j.heares.2004.08.001.

- 27. Recanzone GH, Beckerman NS. Effects of intensity and location on sound location discrimination in macaque monkeys. Hear Res. 2004;198:116–124. doi: 10.1016/j.heares.2004.07.017.

- 28. Georgopoulos AP, Schwartz AB, Kettner RE. Neuronal population coding of movement direction. Science. 1986;233:1416–1419. doi: 10.1126/science.3749885.

- 29. Georgopoulos AP, Kettner RE, Schwartz AB. Primate motor cortex and free arm movements to visual targets in three-dimensional space. II. Coding of the direction of movement by a neuronal population. J Neurosci. 1988;8:2928–2937. doi: 10.1523/JNEUROSCI.08-08-02928.1988.

- 30. Russ BE, Ackelson AL, Baker AE, Cohen YE. Coding of auditory-stimulus identity in the auditory non-spatial processing stream. J Neurophysiol. 2008;99:87–95. doi: 10.1152/jn.01069.2007.

- 31. Recanzone GH, Wurtz RH. Effects of attention on MT and MST neuronal activity during pursuit initiation. J Neurophysiol. 2000;83:777–790. doi: 10.1152/jn.2000.83.2.777.

- 32. Shadlen MN, Britten KH, Newsome WT, Movshon JA. A computational analysis of the relationship between neuronal and behavioral responses to visual motion. J Neurosci. 1996;16:1486–1510. doi: 10.1523/JNEUROSCI.16-04-01486.1996.

- 33. Romanski LM, et al. Dual streams of auditory afferents target multiple domains in the primate prefrontal cortex. Nat Neurosci. 1999;2:1131–1136. doi: 10.1038/16056.

- 34. Rauschecker JP, Tian B, Pons T, Mishkin M. Serial and parallel processing in rhesus monkey auditory cortex. J Comp Neurol. 1997;382:89–103.

- 35. Pfingst BE, O'Connor TA, Miller JM. A vertical stereotaxic approach to auditory cortex in the unanesthetized monkey. J Neurosci Methods. 1980;2:33–45. doi: 10.1016/0165-0270(80)90043-6.

- 36. Recanzone GH, Guard DC, Phan ML. Frequency and intensity response properties of single neurons in the auditory cortex of the behaving macaque monkey. J Neurophysiol. 2000;83:2315–2331. doi: 10.1152/jn.2000.83.4.2315.

- 37. Recanzone GH. Response profiles of auditory cortical neurons to tone and noise stimuli in the behaving macaque monkey. Hear Res. 2000;150:104–118. doi: 10.1016/s0378-5955(00)00194-5.
