Abstract

Objective

Cochlear implants (CI) have provided tremendous benefit for speech recognition in quiet for patients with severe and profound hearing impairment, but implant users still have great difficulty perceiving music. The purpose of this study was to develop a test to quantify music perception by CI listeners in a clinically practical manner that could be standardized for administration at any implant center.

Study Design

Prospective convenience sample.

Setting

Hearing research center at an academic hospital.

Patients

Eight CI listeners, including 5 men and 3 women with implant experience ranging from 0.5 to 6 years, participated in this study. They represented a variety of implant devices and strategies.

Intervention

Administration of the Clinical Assessment of Music Perception test in a standardized sound field.

Main Outcome Measures

Music perception was assessed using a computerized test comprising pitch direction discrimination, melody identification, and timbre identification. The pitch subtest used a 2-alternative forced-choice adaptive procedure to determine a threshold interval for discrimination of complex pitch direction change. The melody and timbre subtests assessed recognition of 12 isochronous melodies and 8 musical instruments, respectively.

Results

Testing demonstrated a broad range of perceptual accuracy on all 3 subtests. Test duration averaged less than 45 minutes.

Conclusion

Clinical Assessment of Music Perception is an efficient computerized test that may be used to measure 3 different aspects of music perception in CI users in a standardized and clinically practical manner.

Keywords: Cochlear implants, Music perception, Music test

The cochlear implant (CI) restores substantial hearing in profoundly deafened adults and children. Cochlear implant signal processing strategies have been optimized for speech understanding in quiet, such that most postlingually deafened adults with implants can now recognize 70% to 80% of sentences presented in quiet (1). However, music perception and appraisal, although highly variable, remain generally poor for CI listeners (2–5). Still, many CI recipients have indicated that music is an important part of their lives and auditory experience and have expressed a desire to enjoy music again (6).

The definition of music differs among various cultures and social milieus. In addition, there are numerous subjective factors influencing enjoyment, including personal preferences for musical genre and situational context, such as the listening environment and the listener’s mood. These subjective factors can all greatly affect music appraisal and thereby render appraisal difficult to measure. Thus, many studies focus on the objective characteristics of sound, which can be described in terms of physical parameters of the acoustic signals (7). Several structural features of music that have been examined with regard to music perception include rhythm, pitch, melody, and timbre. Timbre is the attribute of sound that enables one to differentiate between sounds having the same pitch, loudness, and duration, such as when distinguishing the same musical note played on different instruments.

Previous studies have shown that CI recipients have perceptual accuracy similar to that of normal-hearing adults for simple rhythms presented at a moderate tempo. However, CI recipients are significantly less accurate than normal-hearing adults on perception of pitch, pitch patterns, melodies, and timbre (5–10). In one study of 49 CI listeners, Gfeller et al. (8) found complex pitch direction discrimination thresholds ranging from 1 semitone to 2 octaves (24 semitones), with a mean of 7.6 semitones. This suggests that, on average, CI users require complex tones to be more than 7 notes apart on the Western musical scale to correctly identify which one is higher in pitch. In comparison, normal-hearing listeners demonstrated a mean threshold of 1.1 semitones.
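For reference, these semitone thresholds can be expressed as frequency ratios using the standard equal-temperament relation, in which an interval of n semitones corresponds to a ratio of 2^(n/12). This is a worked conversion added for illustration and is not a calculation reported by the cited study.

```latex
\[
\frac{f_2}{f_1} = 2^{n/12}, \qquad
2^{7.6/12} \approx 1.55, \qquad
2^{1.1/12} \approx 1.07
\]
```

Under this relation, the mean CI threshold of 7.6 semitones corresponds to an F0 difference of roughly 55%, whereas the normal-hearing mean of 1.1 semitones corresponds to roughly 7%.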

Melody and timbre identification are similarly poor: in open-set recognition tasks, CI users scored an average of 12% correct in melodies and 47% correct in instrument recognition, compared with 55% and 91%, respectively, for normal-hearing listeners (8,10). Melody recognition was better for melodies with distinctive rhythms. Gfeller et al. (8) suggest that advanced age and greater length of profound deafness have a negative impact on melody recognition of implant recipients. Timbre recognition has a weak negative correlation with age, length of implant use, speech processing, and cognitive measures of sequential processing (10,11). Appraisal tests have shown that CI listeners give higher ratings to the lower-frequency instruments in each family; however, in identification tasks, CI listeners often mistake instruments from different families for the target item (10,11).

No commercial strategy or device has been objectively demonstrated to be superior for music perception. Until recently, CI development has focused on improving speech recognition in quiet. Accordingly, implant technology has implemented a vocoder approach, which preserves the temporal envelope of frequency-specific bands but greatly limits the delivery of temporal fine-structure information important for perceiving music (12). Such information is also important for the understanding of tonal languages (13), speech perception in noise (14), and the perception of interaural time differences for sound localization (15). The delivery of spectral information is also limited to about 6 to 8 functional frequency channels (1). Future technologies that improve the delivery of temporal or spectral information could enhance music perception, and a practical, valid, and reliable test is a necessary tool for evaluation.

Because of the importance of music and these related tasks, some tests of music perception have been developed. Gfeller et al. (2,9,16) began by adapting the Primary Measures of Music Audiation test and also developed the Musical Excerpt Recognition Test. These are lengthy tests of open-set recognition and music appraisal, which can take many hours and require trained musical personnel to code the responses. Many other groups have also assembled in-house tests to evaluate novel CI strategies and designs developed by their laboratories (14,17). The instruments used in these studies were designed to address specific research questions regarding perception of different structural features of music. The methodologies used are often similar, but the tests were not intended to be standardized, and it is not possible to directly compare the results across laboratories. Thus, we have developed a short, computerized test, the University of Washington Clinical Assessment of Music Perception (CAMP), comprising pitch direction discrimination, melody identification, and timbre identification. In this study, we describe the test stimuli and protocol, discuss considerations in developing a test that is clinically practical, and report preliminary results.

METHODS

Listeners

Eight CI listeners took part in this study. This convenience sample was drawn from patients returning for surveillance visits for their CIs at the University of Washington Medical Center. There were 5 men and 3 women, with ages ranging from 27 to 76 years. Table 1 lists additional background information for the CI listeners. All CI listeners were native speakers of American English. Listener L5 received an implant soon after having sudden traumatic hearing loss and was notable for demonstrating speech recognition scores of 90% correct on Hearing in Noise Test sentences as soon as 2 days after implant mapping. Listeners L5 and L6 each had less than 1 year of implant experience. Listeners L7 and L8 had congenital hearing impairment and became deaf as adults. Listener L7 was tested twice using different signal processing strategies, HiRes and HiRes 120, on his Clarion Harmony processor. Experiments were conducted in compliance with procedures approved by the University of Washington institutional review board, and all listeners were informed of the testing protocol in compliance with human subjects procedures for informed consent. The listeners were reimbursed for transportation costs.

TABLE 1.

Biographical information for the CI users who participated in the studies

| L | Age (y) | Dur deaf (y) | CI exp (y) | Cause | Device | Processor | Strategy |
|---|---|---|---|---|---|---|---|
| L1 | 27 | 13 | 6 | Unknown | C1 | Platinum BTE | CIS |
| L2 | 61 | 1.5 | 1 | Unknown | CI24R(CS) | ESPrit 3G | ACE |
| L3 | 69 | 22 | 6 | Idiopathic | CI24M | ESPrit 3G | ACE |
| L4 | 61 | 2 | 2 | Infection | CI24R(CS) | Sprint | ACE |
| L5 | 41 | 0.5 | 0.5 | Trauma | HiRes 90K | Auria | HiRes-P |
| L6 | 76 | 40 | 0.5 | Otosclerosis | HiRes 90K | Auria | HiRes-P |
| L7 | 41 | 41 | 5 | Congenital | CII | Harmony | HiRes/HiRes 120 |
| L8 | 49 | Unknown | 2 | Congenital | Combi40+ | Tempo+ | CIS |

L, listener; y, years; Dur deaf, duration of deafness; CI exp, duration of CI experience; C1, Clarion C1; CI24R, Nucleus CI24R; CI24M, Nucleus CI24M; CI24R(CS), Nucleus CI24R(CS); HiRes 90K, Advanced Bionics HiRes 90K; CII, Clarion CII; Combi40+, Med-El Combi40+; BTE, behind the ear; CIS, continuous interleaved sampling; ACE, advanced combinational encoder.

Stimuli Creation and Test Procedure

Discrimination of Complex Pitch Direction Change

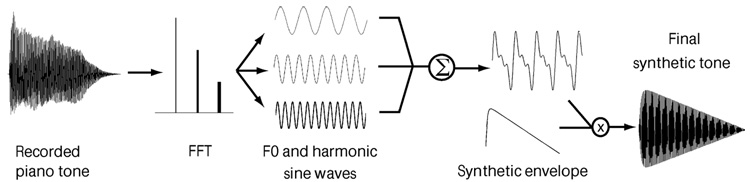

Digitally synthesized complex tones were chosen for study because they are representative of real-world acoustic tones in which fundamental frequency and overtone information are relevant cues for pitch discrimination. The synthetic tones had identical spectral envelopes derived from a recorded piano note at middle C (262 Hz) and uniform synthetic temporal envelopes to eliminate any temporal envelope cues that might be present. A custom peak-detection algorithm was used to select the fast Fourier transform components of the recorded piano note that corresponded to the fundamental frequency (F0) and harmonic frequencies. Harmonic peaks were defined as peaks greater than 15% of the maximum peak, representing F0, and the corresponding harmonic frequency was determined within a window of 50 Hz around each peak. For each tone, the spectral envelope and phase relationships derived from the fast Fourier transform peaks were applied to sets of sinusoidal waves corresponding to the target frequency and its harmonics. The sine waves were summed up, and the resulting waveform multiplied by a uniform temporal envelope with exponential onset and linear decay to create the final synthetic complex pitch tones of 760-millisecond duration. A graphical depiction of the synthesis procedure is shown in Figure 1. This synthesis method generated a musically pleasing but well-controlled tone for study.

FIG. 1.

Diagram showing synthetic complex pitch tones constructed by summation of sinusoidal waves with spectral amplitude and phase values derived from a recorded piano tone. The resulting waveform was then multiplied with a uniform temporal envelope to create the final tone.
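The synthesis steps described above can be illustrated with a minimal Python sketch. It is not the authors' implementation: the amplitude and phase values, the sample rate, the onset time constant, and the exact envelope shape (an exponential rise multiplied by a linear decay) are assumptions chosen for illustration, whereas the study derived its spectral values from the fast Fourier transform of a recorded piano note.

```python
import math

def synthesize_complex_tone(f0_hz, amplitudes, phases,
                            duration_s=0.760, sample_rate=44100,
                            onset_tau_s=0.005):
    """Sum sinusoids at f0 and its harmonics, then apply one shared temporal envelope."""
    n_samples = int(round(duration_s * sample_rate))
    tone = []
    for i in range(n_samples):
        t = i / sample_rate
        # Harmonic number h = 1 is the fundamental; each partial keeps the
        # amplitude and phase taken from the reference spectrum.
        partials = sum(a * math.sin(2 * math.pi * h * f0_hz * t + p)
                       for h, (a, p) in enumerate(zip(amplitudes, phases), start=1))
        # Assumed envelope shape: exponential rise multiplied by a linear decay to zero.
        envelope = (1.0 - math.exp(-t / onset_tau_s)) * (1.0 - t / duration_s)
        tone.append(partials * envelope)
    return tone

# Hypothetical spectral values (not the piano-derived values used in the study).
reference_amps = [1.0, 0.5, 0.25, 0.125]
reference_phases = [0.0, 0.0, 0.0, 0.0]
tone_c4 = synthesize_complex_tone(262.0, reference_amps, reference_phases)
tone_g4 = synthesize_complex_tone(391.0, reference_amps, reference_phases)
```

Because both tones reuse the same amplitude, phase, and envelope values, they differ only in F0 and harmonic spacing, which is the property the pitch subtest relies on.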

The pitch direction test was implemented using a 2-alternative, forced-choice test with 1-up, 1-down adaptive tracking. On each presentation, a tone at the reference F0 and a higher-pitched tone determined by the adaptive interval size were played in random order. The users were asked to identify which note was higher in pitch. The minimum tested interval was 1 semitone (approximately 6% F0 difference), and the maximum was 12 semitones or 1 octave (100% increase in F0). To create an accurate psychometric function, a reversal at zero was automatically added by the test algorithm when the user answered correctly at 1 semitone. Adaptive tracking was performed simultaneously with samples from 4 base frequencies (185 Hz [F#3], 262 Hz [C4, or middle C], 330 Hz [E4], and 391 Hz [G4]) interleaved in random order, until 3 trials of 8 reversals for each base frequency were completed. The threshold values for each base frequency were calculated separately by using the mean of the last 6 reversals for each trial and averaging the results from the 3 trials into a final threshold for discrimination of synthetic complex pitch direction change.
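One possible reading of the adaptive procedure is sketched below in Python for a single track. The starting interval, the 1-semitone step size, the trial cap, and the handling of the floor (logging a reversal at zero and then reporting no threshold better than 1 semitone) are assumptions made for illustration; the text does not specify them in this detail. The `respond` callback is a hypothetical stand-in for the listener's answer on each trial.

```python
def run_staircase(respond, start=12, step=1, floor=1, ceiling=12,
                  n_reversals=8, n_averaged=6, max_trials=500):
    """Return a threshold (semitones) from one 1-up, 1-down track, or None if it never converges."""
    interval = start
    last_direction = 0          # -1: interval shrinking, +1: interval growing
    reversals = []
    for _ in range(max_trials):
        if len(reversals) >= n_reversals:
            break
        correct = respond(interval)
        if correct and interval == floor:
            # Floor rule described in the text: a correct answer at the
            # smallest interval is logged as a reversal at zero.
            reversals.append(0)
            last_direction = -1
            continue
        direction = -1 if correct else 1
        if last_direction != 0 and direction != last_direction:
            reversals.append(interval)   # the track changed direction here
        last_direction = direction
        interval = max(floor, min(ceiling, interval + direction * step))
    if len(reversals) < n_reversals:
        return None
    mean_reversal = sum(reversals[-n_averaged:]) / n_averaged
    # The smallest tested interval is treated as the best achievable score.
    return max(floor, mean_reversal)

# Idealized listener who is correct only for intervals of 4 semitones or more.
threshold = run_staircase(lambda semitones: semitones >= 4)
```

For this idealized listener the track oscillates between 3 and 4 semitones, so the estimate falls midway between them. In the procedure described above, three such runs would be completed for each base frequency, with the four base frequencies interleaved, and the three estimates averaged.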

The frequencies were distributed within the octave surrounding middle C. Western musical instruments and the human voice are capable of a much wider range, but the octave surrounding middle C is the most common octave among the prototypical frequency ranges for Western musical instruments, sung voice, and nursery songs such as those used in the melody test. Musical instruments are also capable of producing sounds in increments smaller than a semitone, but the semitone is the smallest interval size in the scale for traditional Western music. Thus, to keep the test short and practical for a clinical setting, the octave around middle C and the semitone were chosen to be a representative range and interval size that would be important for music perception. Because studies have suggested F0-dependent differences in pitch discrimination ability (17,18), the pitch subtest uses 4 different base frequencies: F#3 is the lower limit of the octave surrounding middle C; C4, E4, and G4 are the 3 most common notes in the melodies of the melody test. A short training period allowed the listeners to practice with a range of intervals at each base frequency.
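As a point of reference, the nominal frequencies of the four base notes can be computed from the equal-temperament formula. The sketch below assumes a tuning reference of A4 = 440 Hz, which the text does not state; the function name and note table are illustrative.

```python
SEMITONE_OFFSETS = {"C": 0, "C#": 1, "D": 2, "D#": 3, "E": 4, "F": 5,
                    "F#": 6, "G": 7, "G#": 8, "A": 9, "A#": 10, "B": 11}

def note_frequency(name, octave, a4_hz=440.0):
    """Equal-tempered frequency of a note, assuming A4 = a4_hz."""
    # MIDI-style numbering: C4 is 60 and A4 is 69.
    midi_number = 12 * (octave + 1) + SEMITONE_OFFSETS[name]
    return a4_hz * 2 ** ((midi_number - 69) / 12)

base_notes = [("F#", 3), ("C", 4), ("E", 4), ("G", 4)]
base_frequencies = [round(note_frequency(n, o)) for n, o in base_notes]
```

Under this assumption the rounded values are 185, 262, 330, and 392 Hz, which agree with the frequencies quoted in the text to within a few hertz.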

Melody Identification

In this subtest, the listeners were asked to identify the recordings of 12 common melodies from a closed set. The melodies were selected for their general familiarity through discussions among hearing and music professionals, and from earlier studies in which recognition tests demonstrated that the melodies were familiar for normal-hearing and CI listeners (14,19). To maximize cross-cultural recognition, input was also solicited from individuals with international backgrounds, particularly in industrialized European and Asian countries where cochlear implantation is prevalent. Table 2 lists the final 12 melodies chosen and the frequency components of each melody.

TABLE 2.

The 12 common melodies selected for the test and their frequency ranges

| Melody | Range, Hz (notes) | Largest interval (a) | Interval extent (b) | Longest repeated note (c) |
|---|---|---|---|---|
| Frère Jacques | 262–392 (C4–G4) | 3rd | 7 | 4 |
| Happy Birthday | 262–523 (C4–C5) | Octave | 12 | 4 |
| Here Comes the Bride | 262–587 (C4–D5) | 5th | 7 | 6 |
| Jingle Bells | 262–392 (C4–G4) | 5th | 7 | 9 |
| London Bridge | 294–440 (D4–A4) | 4th | 7 | 3 |
| Mary Little Lamb | 262–392 (C4–G4) | m3rd | 7 | 4 |
| Old MacDonald | 294–494 (D4–B4) | 6th | 9 | 3 |
| Rock-a-Bye Baby | 247–494 (B3–B4) | 6th | 12 | 6 |
| Row Row Row | 262–523 (C4–C5) | 5th | 12 | 5 |
| Silent Night | 294–523 (D4–C5) | d7th | 10 | 6 |
| Three Blind Mice | 262–392 (C4–G4) | 5th | 7 | 4 |
| Twinkle Twinkle | 262–440 (C4–A4) | 5th | 9 | 2 |

(a) Largest interval in the melody (m, minor; d, diminished).

(b) Interval extent (range [in semitones] between the highest and the lowest notes in the melody).

(c) Longest string of the same note.

All melodies were created using digitally synthesized musical tones, similar to those used in the pitch test. The tones had a duration of 500 milliseconds and were presented at a tempo of 60 beats per minute. The amplitude of each note in the melody was randomly roved by ±4 dB, and 5 different versions of each melody were prerecorded. One version was randomly chosen each time the melody was presented, and all melodies were truncated at 8 seconds to eliminate song length as a potential cue. To eliminate rhythm cues for melody recognition, the melodies were created by repeating all longer notes in an eighth-note pattern, yielding isochronous melodies.
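The construction of an isochronous, level-roved melody can be sketched as follows. This is an illustration rather than the authors' stimulus code: the note-list representation is hypothetical, and drawing each level offset from a uniform distribution over ±4 dB is an assumption, because the text does not state the distribution used.

```python
import random

def make_isochronous(melody, note_s=0.5, max_s=8.0, rove_db=4.0, rng=None):
    """Expand (pitch, length_in_eighth_notes) pairs into equal-length notes with roved levels."""
    rng = rng or random.Random()
    max_notes = int(max_s / note_s)          # 8 s of 500-ms notes gives 16 notes
    pitches = []
    for pitch, n_eighths in melody:
        # A longer note becomes several repeated eighth notes, removing rhythm cues.
        pitches.extend([pitch] * n_eighths)
    pitches = pitches[:max_notes]            # truncate so length is not a cue
    # Pair each note with a random level offset in dB (assumed uniform).
    return [(p, rng.uniform(-rove_db, rove_db)) for p in pitches]

# Opening phrase of "Frère Jacques" in quarter notes, repeated (illustrative input).
phrase = [("C4", 2), ("D4", 2), ("E4", 2), ("C4", 2)] * 3
notes = make_isochronous(phrase, rng=random.Random(0))
```

Each returned pair holds a pitch label and a level offset in decibels, so a separate synthesis step would still be needed to turn the list into audio.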

During testing, the listeners were presented with the names of the 12 melodies on a questionnaire and were asked to indicate their familiarity with each song. Unfamiliar songs were included in the test but were removed from the final analysis. The listener was permitted to listen to all of the test melodies during the practice section; then, each melody was presented 3 times in random order for identification from a closed set. The final score was reported as the percentage of correct responses on the melodies with which the listener was familiar.
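The scoring rule, in which unfamiliar melodies are presented but excluded from the final percentage, can be written as a short Python sketch. The function name, the data layout, and the example responses are hypothetical and are not study data.

```python
def melody_score(responses, familiar):
    """Percent correct over presentations of melodies the listener marked as familiar.

    responses: list of (presented_melody, chosen_melody) pairs
    familiar:  set of melody names from the familiarity questionnaire
    """
    scored = [(p, c) for p, c in responses if p in familiar]
    if not scored:
        return None                      # no familiar melodies, so no score
    n_correct = sum(1 for p, c in scored if p == c)
    return 100.0 * n_correct / len(scored)

# Illustrative responses only.
familiar = {"Jingle Bells", "Silent Night"}
responses = [("Jingle Bells", "Jingle Bells"),
             ("Jingle Bells", "Silent Night"),
             ("Silent Night", "Silent Night"),
             ("Old MacDonald", "Old MacDonald")]   # unfamiliar: ignored
score = melody_score(responses, familiar)
```

In this example the unfamiliar melody is dropped, leaving 2 correct responses out of 3 scored presentations.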

Timbre Identification

The timbre section tested closed-set recognition of 8 commonly recognizable musical instruments. Some studies of timbre have used synthesized or highly controlled samples, which have the advantage of greater experimental control. However, it is difficult to extrapolate the findings from such isolated stimuli to contextualized experiences of real-life music listening, and even trained musicians can have difficulty recognizing instruments on the basis of synthetic representations (10). Thus, we used recordings of real instruments playing a standardized, connected melodic sequence, which preserves transients that are important cues for recognition. The instruments were selected to represent a variety of harmonic spectra and for general familiarity, established through surveys of hearing and music experts and from earlier studies in which many of the same instruments have been used successfully (2,11). These instruments represented the 4 major instrument families: pitched percussion was represented by the piano; string by the violin, cello, and acoustic guitar; brass by the trumpet; and woodwind by the flute, clarinet, and saxophone.

The selected instruments were recorded playing an identical melodic sequence composed specifically for this test. This concise melodic pattern consisted of 5 equal-duration notes and included bidirectional intervallic changes, both stepwise movements and skips, within the octave above middle C. Experienced musicians were instructed to play using a uniform tempo indicated by a blinking metronome at 82 beats per minute, the same intensity of mezzo forte, and the same articulation and phrasing. The recordings were performed at a professional recording studio using a Royer R-121 ribbon microphone and an AKG C-414 B-ULS TLII large diaphragm condenser microphone. They were amplified by a Focusrite ISA 428 preamplifier, recorded at 48 kHz and 24-bit resolution into a Soundscape R.Ed 32 hard disk using Soundscape I-Box XLR 24 converters. The microphone placement and mix varied depending on the instrument. The amplitude of each sample was normalized using root mean square followed by peak normalization by means of Adobe Audition software.
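The level-matching step was performed in Adobe Audition, and the exact settings are not given. As a conceptual illustration only, the Python sketch below shows one way that root mean square equalization followed by peak scaling can be combined without undoing the equal levels: each recording is first scaled to a common root mean square value, and a single shared gain is then applied so that the largest peak in the set reaches a ceiling. The target values and the use of one shared gain are assumptions.

```python
import math

def rms(samples):
    """Root mean square level of a list of samples."""
    return math.sqrt(sum(v * v for v in samples) / len(samples))

def normalize_set(recordings, target_rms=0.1, peak_ceiling=0.99):
    """Equalize RMS across recordings, then apply one common gain set by the largest peak."""
    # Step 1: bring every (non-silent) recording to the same RMS level.
    leveled = [[v * target_rms / rms(x) for v in x] for x in recordings]
    # Step 2: a single shared gain keeps the levels equal while fixing the peak.
    largest_peak = max(abs(v) for x in leveled for v in x)
    gain = peak_ceiling / largest_peak
    return [[v * gain for v in x] for x in leveled]

# Two toy "recordings" with different levels and crest factors.
recordings = [[0.5, -0.5, 0.5, -0.5], [0.1, 0.0, -0.1, 0.0]]
normalized = normalize_set(recordings)
```

Applying peak normalization to each recording separately would instead give every recording the same peak but different root mean square levels, so the choice between the two depends on which property is meant to be held constant.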

Similar to the melody test format, the listeners were permitted to listen to all instruments during the practice section. Each instrument sample was then presented 3 times in random order for identification from a closed set. The final score was reported as the percentage of correct responses.

Test Administration

For all listeners, the CAMP test was presented on a computerized interface developed in MATLAB 7.0 and run on an Apple PowerBook G4 with OS X. The graphical interface consisted of large buttons that the listeners navigated using a mouse. The test was administered in a sound field in a sound-attenuating booth, with stimuli presented through B&W DM303 external speakers at 65 dBA. The CI listeners were allowed to adjust their processor to the most comfortable level of loudness. A test administrator monitored the test progress outside the booth and was available for questions. Feedback on performance was given at the end of the test.

RESULTS

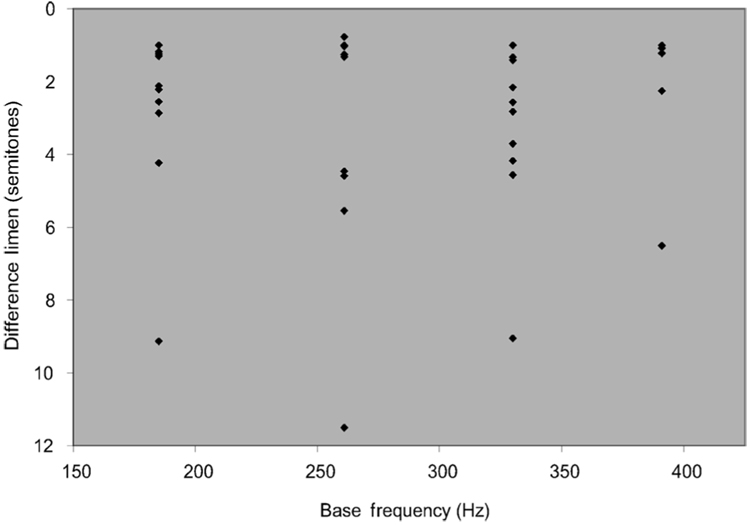

In the pitch discrimination subtest, performance at each base frequency ranged from a minimum difference limen (DL) of 1 semitone to a maximum of 9.1 semitones at 185 Hz, 11.5 semitones at 262 Hz, 9.0 semitones at 330 Hz, and 6.5 semitones at 394 Hz. Figure 2 shows the distribution of scores among listeners at the 4 base frequencies. For some listeners, the raw pitch score and the psychometric curve suggest that the true DL was less than 1 semitone, but because 1 semitone was the smallest tested interval, it was considered the best achievable score.

FIG. 2.

Graph showing a wide range in performance in complex pitch direction change results. Difference limen refers to the size of the interval between notes necessary for a listener to distinguish that they are different notes. Each data point corresponds to 1 listener. Note the y-axis reversal such that smaller DLs, which are better scores, are at the top.

Performance on the synthetic melody identification test ranged from 6% to 81% correct, with a mean of 23% and a standard deviation (SD) of 23%. Many CI listeners found this task very difficult. Some reported a lack of familiarity with some melodies, and Listener L7 reported having never been able to hear well enough to know them. The range of performance was positively skewed, suggesting that this test was particularly difficult.

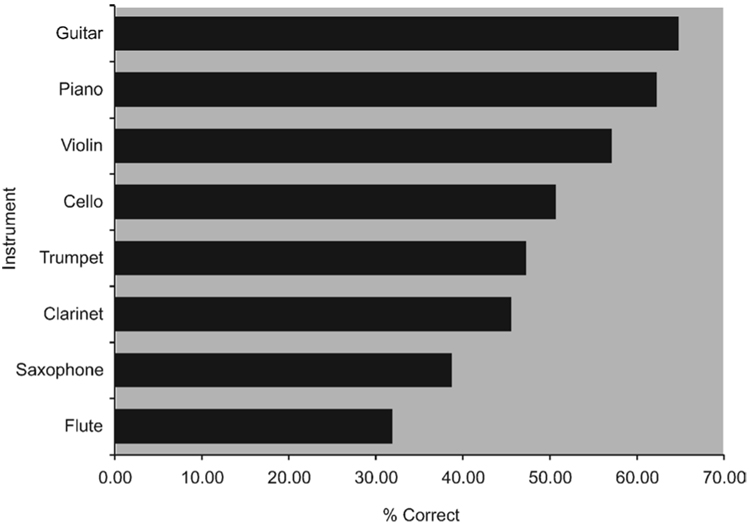

Timbre scores ranged from 21% to 54% correct for the CI listeners, with a group mean of 49% and an SD of 11%. The CI listeners correctly identified the guitar most often (64% correct), and the flute least often (31% correct) (Fig. 3). Confusion matrices showed that the flute was most often confused with the cello. Interfamily confusions were common for all of the woodwinds: when presented with a woodwind instrument, the CI listeners were more likely to select a brass or string instrument than to select another woodwind. Interfamily confusions by CI listeners, particularly mistaking the flute for a cello, were also reported by Gfeller et al. (10).

FIG. 3.

Graph showing the total percentage of correct responses scored by all listeners for each instrument, demonstrating relative difficulty of each instrument on the timbre test.
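The distinction between confusions within and across instrument families can be tallied from the raw responses as sketched below. The family assignments follow the grouping given in the Methods; the function name and the example responses are hypothetical and do not reproduce the study data.

```python
from collections import Counter

FAMILY = {"piano": "percussion", "violin": "string", "cello": "string",
          "guitar": "string", "trumpet": "brass", "flute": "woodwind",
          "clarinet": "woodwind", "saxophone": "woodwind"}

def family_confusions(responses, family):
    """Count errors for one family as (within-family, across-family)."""
    errors = [(p, c) for p, c in responses if p != c and FAMILY[p] == family]
    within = sum(1 for _, c in errors if FAMILY[c] == family)
    return within, len(errors) - within

# Illustrative (presented, chosen) pairs only.
responses = [("flute", "cello"), ("flute", "flute"),
             ("clarinet", "trumpet"), ("saxophone", "clarinet"),
             ("violin", "cello")]
confusion_matrix = Counter(responses)    # cell counts keyed by (presented, chosen)
within, across = family_confusions(responses, "woodwind")
```

With these made-up responses, the woodwinds yield 1 within-family and 2 across-family errors, the same kind of comparison that underlies the interfamily confusions reported above.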

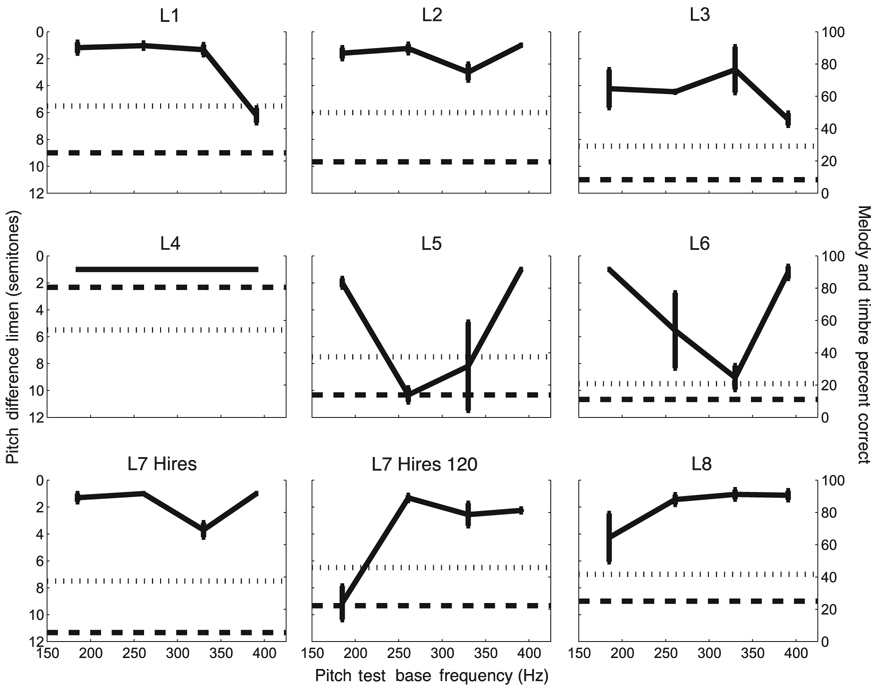

Figure 4 shows composite graphs of pitch, melody, and timbre scores for each listener. Analysis of the extremes of performance suggests that a listener with a pitch threshold greater than 1 semitone at any base frequency will exhibit poor melody recognition. Listener L4, who was the only listener with a DL of 1 semitone at all of the tested pitch base frequencies, demonstrated the highest melody score (81%). Except for L4, listeners generally scored better on the timbre test than on the melody test. Listener L5 had the poorest single-pitch discrimination score (10.9 semitones at 262 Hz) and the poorest mean score (5.3 semitones) for 262, 330, and 394 Hz, the 3 base frequencies included in the melodies. Thus, not surprisingly, L5 had 1 of the lowest melody recognition scores (11%) and the lowest timbre recognition score (21%).

FIG. 4.

Graphs showing pitch scores (solid line with standard error bars) for each listener, overlaid with their melody (long hash line) and timbre test (short hash line) results. Note that the pitch score axis is inverted such that a smaller DL, which corresponds to a better score, is located higher on the graph.

The average duration of all tests, including the pitch, melody, and timbre subsections, was 42.1 minutes (SD, 10.9 min). Listeners reported that the melody test was particularly difficult, but all were able to complete the 3 tests with minimal intervention from the test administrator.

DISCUSSION

A good test of music perception in CI listeners should reliably differentiate many levels of ability in musically relevant tasks. Although music is a broadly defined, subjective experience, there are physical properties of music that can be controlled and objectively assessed. Pitch discrimination, melody recognition, and timbre recognition have been used in other studies of music perception in CI users. Consistent with those studies and the objectives of test development, initial clinical trials with the CAMP test demonstrated a broad range of perceptual accuracy in CI listeners on all 3 subtests. The sample of CI listeners exhibited a range of demographic and CI usage characteristics. Test administration conditions were standardized by maintaining uniform sound booth parameters and using the computerized test format. In addition, minimal oversight by the test administrator was necessary.

The melody test was the most difficult subtest for our listeners. The rationale for testing familiar melody recognition is that it efficiently tests not only whether listeners are able to hear distinguishing features of the melody but also whether they hear them correctly. For example, misperceiving any part of the melody (the component pitches, the pitch interval changes, or the overall melodic contour) can completely change the melody. Recognition is a high-level task that is expected to be difficult, and yet, fundamental properties in the music and cultural exposure together enable English-speaking, normal-hearing adults to identify melodies with high levels of accuracy (3). Some CI listeners are also able to score high, which demonstrates that the test is not excessively difficult. As CI technology continues to advance, improved electronic hearing should facilitate improved melody recognition. Because the melody test is able to separate a broad spectrum of ability, it should be useful for tracking these improvements.

A limitation of melody recognition, though, is that the test assumes previous knowledge of the songs. The test, then, might not be valid for prelingually deafened individuals. However, because the test includes a practice portion, it is possible that a listener with adequate music perception ability could learn the melody de novo. If high performance can be achieved through practice alone, it would imply that melody recognition is limited by the implant’s transmission of information and not by musical experience. Nonetheless, testing those with little or no music experience is suboptimal. The familiarity factor is one that is difficult to control. To limit this effect, we have chosen extremely common melodies.

The term melody test is also arguably a misnomer because real melodies have varying note durations and may have accompanying lyrics. These are important cues for song recognition that were intentionally omitted because it is known that implants already transmit this information reasonably well. We focused on pitch and pitch patterns because they are poorly represented and are implicated in other important skills, such as perception of speech in noise, understanding of tonal languages, and sound localization (12–14).

CONCLUSION

Music perception is a complex, multifactorial task that is highly variable but generally difficult for CI users. A variety of new signal processing strategies and implant designs are in development to address deficiencies in the transmission of pitch information, but no standardized, clinically practical test of music perception currently exists to enable uniform comparisons. We have developed a test of music perception for CI listeners, which comprises complex pitch direction change discrimination, melody recognition, and timbre recognition. Preliminary studies suggest that the test demonstrates a broad spectrum of ability and provides a method to measure music perception in CI listeners that is efficient and self-administrable. If, in subsequent studies, the CAMP test can be shown to be a reliable and valid measure of music perception with minimal patient burden, it may have application as a widely distributed, standardized means of measuring music perception in a clinically practical manner.

Acknowledgments

The authors thank their listeners for dedicated efforts.

This work was supported by the National Institutes of Health grant R01-DC007525 and subcontracts of National Institutes of Health grants P50-DC00242 and P30-DC004661.

REFERENCES

- 1. Friesen LM, Shannon RV, Baskent D, Wang X. Speech recognition in noise as a function of the number of spectral channels: comparison of acoustic hearing and cochlear implants. J Acoust Soc Am. 2001;110:1150–1163. doi: 10.1121/1.1381538.

- 2. Gfeller K, Lansing CR. Melodic, rhythmic, and timbral perception of adult cochlear implant users. J Speech Hear Res. 1991;34:916–920. doi: 10.1044/jshr.3404.916.

- 3. Kong YY, Cruz R, Jones JA, Zeng FG. Music perception with temporal cues in acoustic and electric hearing. Ear Hear. 2004;25:173–185. doi: 10.1097/01.aud.0000120365.97792.2f.

- 4. Leal MC, Shin YJ, Laborde MI, et al. Music perception in adult cochlear implant recipients. Acta Otolaryngol. 2003;123:826–835. doi: 10.1080/00016480310000386.

- 5. Looi V, McDermott HJ, McKay CM, Hickson L. Proceedings of the VIII International Cochlear Implant Conference. Indianapolis, IN: Elsevier; 2004. Pitch discrimination and melody recognition by cochlear implant users.

- 6. Gfeller K, Christ A, Knutson JF, Witt S, Murray KT, Tyler RS. Musical backgrounds, listening habits, and aesthetic enjoyment of adult cochlear implant recipients. J Am Acad Audiol. 2000;11:390–406.

- 7. McDermott HJ. Music perception with cochlear implants: a review. Trends Amplif. 2004;8:49–82. doi: 10.1177/108471380400800203.

- 8. Gfeller K, Turner C, Mehr M, et al. Recognition of familiar melodies by adult cochlear implant recipients and normal-hearing adults. Cochlear Implants Int. 2002;3:29–53. doi: 10.1179/cim.2002.3.1.29.

- 9. Gfeller K, Woodworth G, Robin DA, Witt S, Knutson JF. Perception of rhythmic and sequential pitch patterns by normally hearing adults and adult cochlear implant users. Ear Hear. 1997;18:252–260. doi: 10.1097/00003446-199706000-00008.

- 10. Gfeller K, Witt S, Woodworth G, Mehr MA, Knutson J. Effects of frequency, instrumental family, and cochlear implant type on timbre recognition and appraisal. Ann Otol Rhinol Laryngol. 2002;111:349–356. doi: 10.1177/000348940211100412.

- 11. Gfeller K, Knutson JF, Woodworth G, Witt S, DeBus B. Timbral recognition and appraisal by adult cochlear implant users and normal-hearing adults. J Am Acad Audiol. 1998;9:1–19.

- 12. Smith ZM, Delgutte B, Oxenham AJ. Chimaeric sounds reveal dichotomies in auditory perception. Nature. 2002;416:87–90. doi: 10.1038/416087a.

- 13. Xu L, Pfingst BE. Relative importance of temporal envelope and fine structure in lexical-tone perception. J Acoust Soc Am. 2003;114:3024–3027. doi: 10.1121/1.1623786.

- 14. Kong YY, Stickney GS, Zeng FG. Speech and melody recognition in binaurally combined acoustic and electric hearing. J Acoust Soc Am. 2005;117:1351–1361. doi: 10.1121/1.1857526.

- 15. Drennan WR, Won JH, Dasika VK, Rubinstein JT. Effects of temporal fine structure on the lateralization of speech and on speech understanding in noise. J Assoc Res Otolaryngol. 2007 Jun 21; doi: 10.1007/s10162-007-0074-y. Epub ahead of print.

- 16. Gfeller K, Olszewski C, Rychener M, et al. Recognition of “real-world” musical excerpts by cochlear implant recipients and normal-hearing adults. Ear Hear. 2005;26:237–250. doi: 10.1097/00003446-200506000-00001.

- 17. Laneau J, Wouters J, Moonen M. Improved music perception with explicit pitch coding in cochlear implants. Audiol Neurootol. 2006;11:38–52. doi: 10.1159/000088853.

- 18. Vandali AE, Sucher C, Tsang DJ, McKay CM, Chew JWD, McDermott HJ. Pitch ranking ability of cochlear implant recipients: a comparison of sound-processing strategies. J Acoust Soc Am. 2005;117:3126–3138. doi: 10.1121/1.1874632.

- 19. Looi V, Sucher C, McDermott HJ. Melodies familiar to the Australian population across a range of hearing abilities. Austr NZ J Audiol. 2003;25:75–83.