Abstract

Background

Current interventions to enhance patient self-efficacy, a key mediator of health behavior, have limited primary care application.

Objective

To explore the effectiveness of an office-based intervention for training resident physicians to use self-efficacy-enhancing interviewing techniques (SEE IT).

Design

Randomized controlled trial.

Participants

Family medicine and internal medicine resident physicians (N = 64) at an academic medical center.

Measurements

Resident use of SEE IT (a count of ten possible behaviors) was coded from audio recordings of the physician-patient portion of two standardized patient (SP) instructor training visits and two unannounced post-training SP visits, all involving common physical and mental health conditions and behavior change issues. One post-training SP visit involved health conditions similar to those experienced in training, while the other involved new conditions.

Results

Experimental group residents demonstrated significantly greater use of SEE IT than controls, starting after the first training visit and sustained through the final post-training visit. The mean effect of the intervention was significant [adjusted incidence rate ratio for increased use of SEE IT = 1.94 (95% confidence interval = 1.34, 2.79; p < 0.001)]. There were no significant effects of resident gender, race/ethnicity, specialty, training level, or SP health conditions.

Conclusions

SP instructors can teach resident physicians to apply SEE IT during SP office visits, and the effects extend to health conditions beyond those used for training. Future studies should explore the effects of the intervention on practicing physicians, physician use of SEE IT during actual patient visits, and its influence on patient health behaviors and outcomes.

KEY WORDS: education, medical; patient simulation; physician-patient relations; randomized controlled trials; self-efficacy

Self-efficacy, or confidence in one’s ability to take steps to attain personal goals,1 is a key mediator of patient health behavior.2–13 Self-efficacy is mutable in response to interventions, and self-efficacy enhancement improves health outcomes.14–23 Interventions to enhance self-efficacy are typically delivered to patients by specially trained non-physician personnel,14–18,20–23 often in settings separate from where care is delivered.14,17,18,20–23 Such interventions avoid over-crowding visit agendas in primary care.24 However, disadvantages include the inability to harness the power of therapeutic physician-patient relationships, the condition-specific nature of most interventions,14–18,19,21,22 and limited dissemination potential.

If primary care physicians could be taught effective, time-efficient interviewing techniques that bolster patient self-efficacy and can be applied generally to facilitate health behavior change, the potential for wide dissemination and improved outcomes would be considerable. Indeed, recognizing the futility of expecting primary care providers to apply an ever-increasing number of condition-specific interventions,25 recent blueprints for health system redesign have called for the development of such interventions.26,27

We conducted the Self-Efficacy Enhancing Interviewing Techniques (SEE IT) study, a randomized controlled trial (RCT) comparing the effects of an office-based, standardized patient (SP) instructor intervention on resident physician use of generic SEE IT with the effects of an attention control SP instructor condition. The intervention was grounded in self-efficacy theory1 and informed by prior successful interventions to enhance self-efficacy and improve outcomes.20,23 The SP instructor approach was chosen because it allows training to occur during usual office hours, a feature we anticipated busy residents, and ultimately practicing physicians, would appreciate. The training setting also enhances the salience of the techniques and allows learners to immediately practice and begin assimilating newly presented techniques. SP instructor interventions have been effective in improving physicians’ skills in breaking bad news,28 obtaining informed consent,29 and assessing human immunodeficiency virus risk.30 By contrast, didactic educational approaches may improve knowledge, but have less effect on physician behavior.31

We hypothesized that residents receiving the experimental intervention would use SEE IT more during two unannounced post-intervention (non-training) SP visits than would controls. We also explored whether greater resident use of SEE IT during post-intervention visits would generalize beyond the specific clinical contexts encountered during training visits.

METHODS

Study activities described were conducted from March 2006 through March 2008. The University of California Davis Institutional Review Board approved the study protocol.

Study Setting, Sample Recruitment, and Randomization

Resident physicians were recruited from the family medicine and internal medicine residency training programs. An a priori power calculation, based on a simple before/after analysis, determined that a sample size of 64 residents would provide 80% power to detect an intervention effect size of 0.5 or greater on resident use of SEE IT, with alpha = 0.05, two-tailed, and equal size experimental and control groups. The power analysis did not account for the repeated measures, which were expected to increase power. However, that gain in power would likely be partially offset by the correlation among repeated observations. Because the correlation was unknown in this new intervention, the power analysis conservatively ignored the repeated measures.
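
The power calculation is described only in outline, and the exact formula used is not reported. As a hedged illustration, the sketch below applies the textbook normal-approximation formula for a two-tailed paired (before/after) comparison, which is one plausible reading of "a simple before/after analysis". The function names are ours, and the small-sample t-distribution correction (which would add a few subjects) is ignored.

```python
from math import ceil, sqrt
from statistics import NormalDist

STD_NORMAL = NormalDist()  # standard normal: mean 0, SD 1


def n_before_after(effect_size: float, alpha: float = 0.05, power: float = 0.80) -> int:
    """Subjects needed for a two-tailed paired (before/after) comparison.

    Normal approximation: n = ((z_{1-alpha/2} + z_{power}) / d) ** 2,
    where d is the standardized effect size (mean change / SD of change).
    """
    z_alpha = STD_NORMAL.inv_cdf(1 - alpha / 2)
    z_power = STD_NORMAL.inv_cdf(power)
    return ceil(((z_alpha + z_power) / effect_size) ** 2)


def power_before_after(effect_size: float, n: int, alpha: float = 0.05) -> float:
    """Approximate power for a given n (the negligible opposite tail is ignored)."""
    z_alpha = STD_NORMAL.inv_cdf(1 - alpha / 2)
    return STD_NORMAL.cdf(effect_size * sqrt(n) - z_alpha)


print(n_before_after(0.5))  # 32
print(f"{power_before_after(0.5, 32):.3f}")
```

Under this reading, 32 residents per group, or 64 in total, would correspond to the stated target of 80% power for an effect size of 0.5.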

Residents were solicited for participation via flyers, e-mails, and presentations at house staff meetings. A research assistant obtained interested residents’ informed consent for participation, using a standard consent form as a guide, and implemented a randomized allocation scheme in blocks of 32 residents via sealed opaque envelopes containing slips of paper printed with group assignments. Throughout the study, the SPs, SP trainer, and research assistant were aware of residents’ random group allocation, while the study investigators, audio recording coders, and biostatistician were blinded to resident group allocation. All residents received two $20 gift cards for participating in the study, one upon enrollment and one upon completion.
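
A minimal sketch of how one block of sealed-envelope assignments might be prepared. It assumes, because the text does not say so, that each block of 32 contained equal numbers of experimental and control slips (1:1 allocation) and that a seeded pseudorandom shuffle was used; the function name and seed are hypothetical.

```python
import random
from typing import List, Optional


def make_envelope_block(block_size: int = 32, seed: Optional[int] = None) -> List[str]:
    """Return one block of shuffled group labels, one label per sealed envelope.

    Assumes (hypothetically) 1:1 allocation within each block, so a block of
    32 holds 16 "Experimental" and 16 "Control" slips in random order.
    """
    if block_size % 2 != 0:
        raise ValueError("block_size must be even for 1:1 allocation")
    slips = ["Experimental"] * (block_size // 2) + ["Control"] * (block_size // 2)
    random.Random(seed).shuffle(slips)  # seeded so the sequence can be audited
    return slips


# Envelopes would be numbered in this order and opened one per consenting resident.
envelopes = make_envelope_block(32, seed=2006)
for envelope_number, group in enumerate(envelopes[:4], start=1):
    print(envelope_number, group)
```

Fixing the block size guarantees equal group sizes once a block is exhausted, which matches the equal 32/32 split reported in Table 3; the research assistant, not the investigators, would hold the sequence.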

Procedures

Study Interventions

Residents received their randomly assigned intervention during two pre-announced, 30-min SP instructor visits, scheduled 2 weeks apart. We employed pre-announced SP instructor visits because they are effective and physicians tend to prefer them to unannounced SP visits.30 All experimental and control intervention visits were audio recorded using pocket digital recorders carried by the SPs.

During the first 15 min of each training visit, the SP instructors remained in their patient roles (Table 1), both of which involved coexisting chronic medical and mental health conditions, reflecting their frequent co-occurrence in primary care.32 To further enhance realism, most behavior-change issues in the scenarios were of the “hidden agenda” variety, requiring elicitation by the residents. The order in which patients A and B were encountered varied among residents within each group. At approximately 15 min into each training visit, the SP instructors came out of their patient roles, introduced themselves, briefly reviewed the purpose of the remaining portion of the visit, addressed any questions, and then delivered the resident’s randomly assigned study intervention, using standard scripts implemented on laminated flip cards. At each intervention visit, the SP instructors also provided residents with a one-page printed summary of the material being presented and referred to it throughout the session for orientation and clarification.

Table 1.

Characteristics of Study Standardized Patient Cases

| Case | Age | Gender | Chronic medical problems | Mental health issues | Health behavior change issues |
|---|---|---|---|---|---|
| Intervention* | | | | | |
| A | 48 | Male | Type 2 diabetes, hyperlipidemia | Depression | Poor adherence to all medications |
| B | 42 | Female | Asthma | Generalized anxiety | Poor antidepressant adherence; desire to increase exercise, with prior attempts limited by dyspnea and resulting anxiety |
| Evaluation* | | | | | |
| C | 42 | Female | Type 2 diabetes, hyperlipidemia | Depression | Desire to improve diet to better control diabetes and lipids; sporadic home glucose monitoring |
| D | 66 | Female | Osteoarthritis | Post-traumatic stress disorder, alcoholism | Relapse of heavy drinking to try and cope with post-traumatic stress disorder-related symptoms |

*Note: the order in which residents encountered patients A and B during the training phase and patients C and D during the evaluation phase of the study varied among residents within each study group.

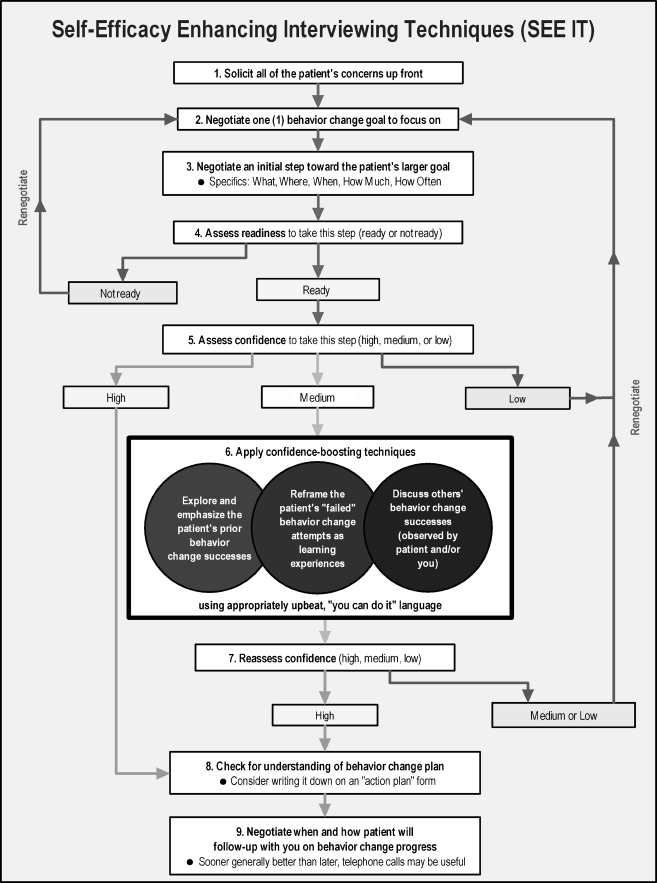

Experimental Intervention

The SP instructors presented nine discrete SEE IT components to residents in a logical sequence (Fig. 1). However, they emphasized that the techniques need not always be applied in that sequence and might not be useful in every visit. The nine techniques were drawn from self-efficacy and stage of change theory,1 direct observation of primary care office visits,33 and research-proven approaches to enhancing self-efficacy.20,23 SPs employed the structure outlined in Table 2 to teach each technique.

Figure 1.

Study self-efficacy-enhancing interviewing techniques and their presentation sequence.

Table 2.

Process Employed by Study-standardized Patient Instructors in Presenting SEE IT

| Step | Example statements from intervention script |
|---|---|
| 1. Briefly state the technique | “The first technique is asking the patient about all of their concerns at the start of the visit” |
| 2. Tie discussion of the technique to what happened in patient role part of visit | |
| a. If the resident used the technique–briefly reinforce, move to next technique | “You asked me about my concerns right at the start of the visit. That’s great!” |
| b. If the resident did not use the technique, or did not use it appropriately–gently point it out, then go on to step 3 | “As you’ll recall, my concern about missing my medications didn’t come out until near the end of the visit. Time was nearly up then, so we weren’t able to effectively deal with this issue” |
| 3. Provide an example of how technique might be used, or used more optimally | “You might have said near the start of the visit, ‘What things would you like to talk about today?’ or, after a patient has told you some concerns, ‘Anything else you want to talk about?’” |
| 4. Allow the resident to briefly practice using the technique | “Of course you might want to use your own words to best fit your style. What words might you use to ask for this information?” Pause to listen to resident’s response, and if reasonable: “If you’d said that, then I would have said ‘I want to talk about how tired I’ve been and about checking my blood sugars’” |
| 5. Ask for questions, clarify as needed | na |
| 6. Go to next technique | na |

Attention Control Intervention

Residents randomly allocated to control received no training in the use of SEE IT. During the second 15 min of their training visits, SP instructors presented scripted education aimed at raising resident awareness of the frequent co-occurrence of chronic medical and mental health problems in primary care (Table 1).34–37 However, there was no mention of self-efficacy or self-efficacy enhancement in the control intervention, and interviewing skills were not discussed, taught, or practiced. Residents were provided opportunities to ask clarifying questions about any of the material presented.

Post-Intervention (Evaluation) SP Visits

Within 1 month of completing training, all residents received two unannounced 30-min evaluation (non-training) SP visits. The electronic medical record system used by both participating residency programs did not support creation of complete fictional charts. Instead, the SP charts were “placeholders” containing a name and demographics, but no clinical data. Thus, while the post-intervention SP visits were unannounced, all residents were able to discern they were seeing an SP upon opening the SP electronic charts.

The patient characteristics and concerns in the post-intervention visits (Table 1) were selected to allow exploration of whether resident use of SEE IT would be context-specific—i.e., only with evaluation patient C, whose health conditions were similar to those of training patient A—or more general, occurring in both evaluation visits. The order in which patients C and D were encountered varied among residents in each study group. SPs remained in patient role throughout evaluation visits, which were again audio recorded using concealed pocket digital recorders.

Standardized Patient Training and Fidelity

Two SP instructors (one man, one woman) were each trained to deliver half of the experimental and control interventions. The other four SPs, all women, were trained to conduct evaluation visits. The training process was developed and implemented by a physician assistant with over 25 years of SP training experience. The SP trainer and principal investigator conducted quarterly fidelity audits of SP visit audio recordings, using a standardized checklist, and provided corrective feedback as indicated. Further information regarding the intervention is available from the authors.

Measures

Resident Use of SEE IT

The ten-item Doctors’ Observable Use of Self-Efficacy Enhancing Interviewing Techniques (DO U SEE IT) measure was developed for this study (Appendix). Six of the measure items corresponded to SEE IT Techniques 1–4, 8 and 9, respectively; three additional items corresponded to the three sub-techniques of Technique 6; and a final item was included to assess residents’ use of both Techniques 5 (assess self-efficacy) and 7 (re-assess self-efficacy). All ten items employed a yes/no response scale, with 1 point assigned for each “yes” response (resident used the technique). Individual item scores were added to yield a summary score (range 0–10).

Three coders first independently applied the DO U SEE IT measure to audio recordings of the patient role portion of all study SP visits, noting the digital counter number(s) of any segments they felt justified “yes” codes. To maintain coder blinding to the resident study group, physician-patient introductions were removed from the beginning of all recordings, and training visit recordings were truncated just before the SP instructors came out of role. Cronbach’s alpha derived from the inter-correlations among the three coders’ total DO U SEE IT scores was 0.88. Subsequently, one master coder reconciled discrepancies via re-review of pertinent recording sections and discussion with the other coder(s) until consensus was reached. The consensus DO U SEE IT scores were employed in our study analyses.
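
The DO U SEE IT scoring rule is simple enough to express directly. The sketch below assumes the ten items are coded in Appendix order and pairs each item with the technique it reflects according to the description above; the item-by-item pairing is our inference from that order, and all names are hypothetical.

```python
from typing import Sequence

# Items in Appendix order, each paired with the SEE IT technique it is taken
# to reflect (pairing inferred from the Measures text; hypothetical labels).
DO_U_SEE_IT_ITEMS = (
    ("solicited all concerns", "Technique 1"),
    ("negotiated a single behavior change", "Technique 2"),
    ("negotiated incremental steps", "Technique 3"),
    ("explored readiness for change", "Technique 4"),
    ("explored self-efficacy", "Techniques 5 and 7"),
    ("explored prior successes", "Technique 6"),
    ("reframed prior 'failures'", "Technique 6"),
    ("discussed others' successes", "Technique 6"),
    ("checked understanding of action plan", "Technique 8"),
    ("negotiated follow-up", "Technique 9"),
)


def do_u_see_it_score(codes: Sequence[int]) -> int:
    """Sum ten yes (1) / no (0) consensus codes into a 0-10 summary score."""
    if len(codes) != len(DO_U_SEE_IT_ITEMS):
        raise ValueError(f"expected {len(DO_U_SEE_IT_ITEMS)} item codes")
    if any(code not in (0, 1) for code in codes):
        raise ValueError("each item code must be 0 (no) or 1 (yes)")
    return sum(codes)


def score_proportion(codes: Sequence[int]) -> float:
    """Summary score as a proportion of the 10 possible points."""
    return do_u_see_it_score(codes) / len(DO_U_SEE_IT_ITEMS)


visit_codes = [1, 0, 1, 1, 0, 0, 0, 0, 0, 0]  # hypothetical consensus codes
print(do_u_see_it_score(visit_codes))  # 3
print(score_proportion(visit_codes))   # 0.3
```

The proportion form corresponds to the "score proportion" used as the outcome in some of the alternative models described under Analyses.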

Other Measures

Also assessed at baseline were resident age, gender, race/ethnicity, specialty (family medicine or internal medicine), and year of training. Following the final study visit, residents were also asked to complete a single item assessing the overall quality of the training they had received (5-point Likert scale, 1 = excellent to 5 = poor). Four additional items assessed whether the training was useful and/or easy to understand, whether the resident intended to apply the training in actual patient encounters, and whether they would be interested in receiving further training (5-point Likert scales, 1 = strongly agree to 5 = strongly disagree). Two final open-ended items asked residents to say what they liked least and best about their training.

Analyses

Analyses were conducted with SAS (version 9.1, SAS Institute, Inc., Cary, NC) and Stata (version 10.1, StataCorp, College Station, TX). The effects of the intervention on residents’ use of SEE IT were examined using generalized linear models (GLMs) fitted with a generalized estimating equations (GEE) approach to accommodate the repeated measures on each resident.38 Depending on the model, the dependent variable was either the summary DO U SEE IT score at each time point or that score expressed as a proportion of the maximum possible score (10 points).

Because the score is a count with a limited range and is not normally distributed, we examined four alternative GLMs: Gaussian, Gaussian using an arcsine transformation of the score proportion, Poisson, and logistic using the score proportion. Results were consistent among these approaches, and we report the adjusted incidence rate ratio (IRR) for the mean intervention effect from the Poisson model. In this study, the adjusted IRR is the adjusted average number of SEE IT behaviors per visit observed in experimental group residents divided by the corresponding adjusted average in control group residents. The key independent variables were group (intervention vs. control), visit (2, 3, or 4), the visit × group interaction (to assess learning consolidation or attenuation), and SP conditions (same as training conditions vs. different). Analyses adjusted for baseline SEE IT score, resident gender, race/ethnicity (white vs. other), specialty (family or internal medicine), and year of training (1st, 2nd, 3rd, or 4th or higher). SP teaching times in training visits were longer in the experimental than in the control group (see Results), but because teaching time was collinear with study group, it was excluded from the reported analysis.
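
The IRR reported here is the exponentiated group coefficient from a Poisson model with a log link. The minimal sketch below illustrates why that quantity is a ratio of mean counts: with only a 0/1 group indicator, the Poisson maximum-likelihood fit (computed here by Newton-Raphson using only the standard library) reproduces the two group means, so exp(b1) equals their ratio. It deliberately omits the covariates and the GEE working correlation used in the actual analyses, and it assumes the arcsine transformation took the common arcsin(√p) form, which the text does not specify. The data and function names are hypothetical.

```python
import math
from typing import Sequence, Tuple


def arcsine_sqrt(score: int, max_score: int = 10) -> float:
    """Arcsine square-root transform of a score proportion (assumed form)."""
    return math.asin(math.sqrt(score / max_score))


def fit_poisson_binary(y: Sequence[float], x: Sequence[int], iters: int = 50) -> Tuple[float, float]:
    """Fit log E[y] = b0 + b1 * x by Newton-Raphson; x is a 0/1 group indicator.

    Both groups must have a positive mean count. No covariates, no GEE.
    """
    b0, b1 = math.log(sum(y) / len(y)), 0.0  # start at the pooled mean
    for _ in range(iters):
        mu = [math.exp(b0 + b1 * xi) for xi in x]
        # Score vector X'(y - mu)
        g0 = sum(yi - mi for yi, mi in zip(y, mu))
        g1 = sum(xi * (yi - mi) for xi, yi, mi in zip(x, y, mu))
        # Fisher information X'WX with W = diag(mu)
        h00 = sum(mu)
        h01 = sum(xi * mi for xi, mi in zip(x, mu))
        h11 = sum(xi * xi * mi for xi, mi in zip(x, mu))
        det = h00 * h11 - h01 * h01
        b0 += (h11 * g0 - h01 * g1) / det
        b1 += (h00 * g1 - h01 * g0) / det
    return b0, b1


# Hypothetical scores: four experimental (x = 1) and four control (x = 0) visits.
scores = [3, 2, 4, 3, 1, 2, 1, 2]
group = [1, 1, 1, 1, 0, 0, 0, 0]
b0, b1 = fit_poisson_binary(scores, group)
print(round(math.exp(b1), 3))  # 2.0: mean 3.0 / mean 1.5
print(round(arcsine_sqrt(3), 4))
```

Once covariates such as baseline score are added, exp(b1) becomes a ratio of covariate-adjusted means, which is what the adjusted IRR expresses.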

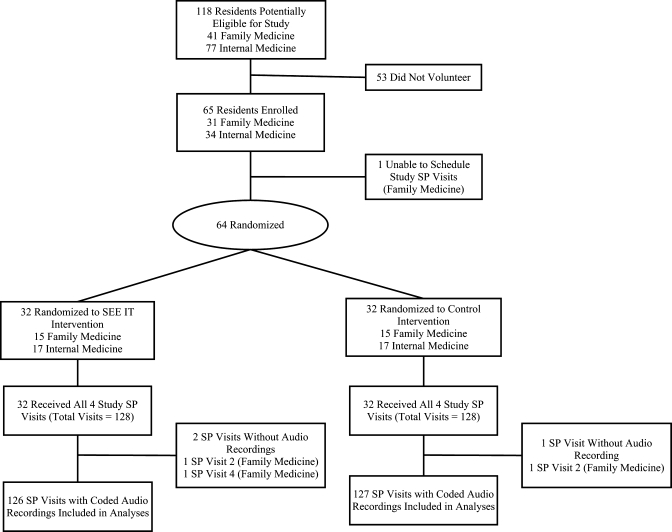

RESULTS

Figure 2 diagrams the flow of participating residents through the trial. Of 118 eligible residents, 64 (54%) were randomized: 30 (73%) of 41 family medicine residents and 34 (44%) of 77 internal medicine residents. Resident characteristics are summarized in Table 3.

Figure 2.

Flow of participants through the trial. SP = standardized patient.

Table 3.

Characteristics of Participating Resident Physicians by Group

| Characteristic, no. (%) | Experimental group (N = 32) | Control group (N = 32) |
|---|---|---|
| Gender | | |
| Female | 16 (50) | 17 (53) |
| Race/ethnicity | | |
| White | 14 (44) | 18 (56) |
| Asian | 18 (56) | 12 (38) |
| Other | 0 (0) | 2 (6) |
| Specialty | | |
| Family medicine | 15 (47) | 15 (47) |
| Internal medicine | 17 (53) | 17 (53) |
| Year of training | | |
| 1st | 2 (6) | 4 (12) |
| 2nd | 15 (47) | 19 (59) |
| 3rd | 14 (44) | 8 (25) |
| 4th or higher | 1 (3) | 1 (3) |

All 64 residents received four study visits (total visits = 256). However, equipment problems led to missing audio recordings for three visits (2 experimental, 1 control). The mean [standard deviation (SD)] length of the training portions of the two intervention visits was longer in the experimental group [visit 1, 15.64 (2.15) min; visit 2, 17.42 (3.33) min] than in the control group [visit 1, 8.50 (1.12) min; visit 2, 7.80 (0.98) min] (p < 0.001 for both comparisons). The mean (SD) number of days between study visits 2 and 3 was also significantly greater for the experimental group [25.25 (14.05)] than for the control group [18.66 (10.88)] (p = 0.03).

Table 4 shows unadjusted DO U SEE IT scores by study group and visit. At baseline, experimental group residents used SEE IT about 40% more than control group residents (p = 0.05). From visit 2 onward, however, experimental group use of SEE IT was roughly double that of the control group, a difference sustained through visits 3 and 4. Cronbach’s alpha derived from the inter-correlations among the ten binary DO U SEE IT items was 0.61.

Table 4.

Unadjusted Use of Self-efficacy Enhancing Interviewing Techniques (SEE IT) by Study Group and Visit

| Study visit | Experimental group (N = 32; 126 visits) | Control group (N = 32; 127 visits) | P value |
|---|---|---|---|
| Visit 1 | 1.66 (1.07, 1, 1–2, 0–6) | 1.16 (0.92, 1, 0.5–2, 0–3) | 0.05 |
| Visit 2 | 2.87 (1.52, 3, 2–4, 1–8) | 1.48 (1.00, 1, 1–2, 0–4) | 0.001 |
| Visit 3 | 3.16 (1.30, 3, 2.5–4, 1–6) | 1.69 (1.00, 1, 1–2, 1–5) | < 0.001 |
| Visit 4 | 3.23 (1.82, 3, 2–4, 1–7) | 1.16 (0.72, 1, 1–2, 0–3) | < 0.001 |

Values are unadjusted mean (SD, median, interquartile range, range) DO U SEE IT* scores.

*DO U SEE IT = Doctors’ Observable Use of Self-Efficacy Enhancing Interviewing Techniques
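
The per-visit contrasts described in the text can be checked directly against Table 4. The short sketch below computes crude (unadjusted) ratios of the experimental to control group mean scores. It is arithmetic on the published means only; it is not the adjusted IRR, which came from the Poisson GEE model over visits 2 to 4 with baseline score and resident characteristics as covariates. The names are ours.

```python
# Unadjusted mean DO U SEE IT scores from Table 4: visit -> (experimental, control)
TABLE4_MEANS = {1: (1.66, 1.16), 2: (2.87, 1.48), 3: (3.16, 1.69), 4: (3.23, 1.16)}


def unadjusted_ratios(means=TABLE4_MEANS):
    """Crude experimental/control ratio of mean scores at each visit (2 decimals)."""
    return {visit: round(exp_mean / ctl_mean, 2) for visit, (exp_mean, ctl_mean) in means.items()}


for visit, ratio in unadjusted_ratios().items():
    print(f"Visit {visit}: {ratio}")
```

This yields 1.43 at visit 1 (the "about 40% more" at baseline), 1.94 and 1.87 at visits 2 and 3, and 2.78 at visit 4. The visit 2 value happens to coincide numerically with the adjusted IRR of 1.94, but the two quantities are computed differently.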

The Poisson GLM adjusting for baseline SEE IT use and other covariates revealed a significant mean effect of the intervention [adjusted incidence rate ratio = 1.94 (95% confidence interval = 1.34, 2.79; p < 0.001)]. There were no significant resident gender, race/ethnicity, specialty, training level, SP health condition, or growth/attenuation effects.

The mean (SD) rating for overall quality of the training received was in the “good” to “excellent” range [1.94 (0.93) and 1.97 (0.80) in the experimental and control groups, respectively; p = 0.88]. Ratings for perceived usefulness and understandability of the training, intention to apply the training in future patient encounters, and interest in receiving similar additional training were in the “agree” to “strongly agree” range for both study groups. Residents cited the delivery format, practicality, concise handouts, and preparedness and skills of the SP instructors as favorable aspects of the interventions. They generally disliked the visit scheduling difficulties, the limited SP instructor teaching time, and the artificiality of SP encounters in general.

DISCUSSION

Though effective, prior interventions for enhancing patient self-efficacy have been focused directly on patients, have often been condition-specific,14–18,19,21,22 and have been removed from physician-patient office visits.14,17,18,20–23 We found a brief, office-based, SP instructor-delivered intervention led to significant improvements in family medicine and internal medicine residents’ use of SEE IT during SP encounters. Of note, the difference became apparent after one training visit, and persisted without attenuation through two post-intervention visits conducted within a month of completing the intervention, only one of which involved patient conditions encountered by residents during a training case. These observations suggest that resident use of SEE IT may generalize to patient health behavior concerns beyond those encountered during training visits.

While our case scenarios reflected common presentations of prevalent physical and mental health conditions in primary care, studies more optimally designed to assess physicians’ use of SEE IT across patient concerns are required to further explore this issue. Prior studies indicate that physician interviewing behaviors demonstrated during SP encounters correlate with their use during real patient encounters,40 and with patient ratings of physician interviewing behaviors.41 Future studies should evaluate physician use of SEE IT during actual patient visits over longer periods of time and explore the impact of SEE IT training on patient self-efficacy, health behaviors, and outcomes.

Self-efficacy is a key mediator of patient behaviors across a wide array of health conditions and settings,42 and self-efficacy enhancement can lead to improved health outcomes.14–23 If SEE IT training is shown to facilitate patient health behavior change and improve patient outcomes, its potential to improve health care could be considerable. First, however, it would need to be disseminated from research into practice. To do so, training centers could be strategically placed to supply SP instructors to practices in various regions.

Self-efficacy also tends to be lowest in those most vulnerable to adverse health outcomes, such as minorities44 and people with depression,45 yet preliminary evidence suggests such individuals may derive the greatest benefit from well-conceived self-efficacy enhancing interventions.23,46 Thus, training physicians to employ SEE IT also holds promise as a “downstream” approach to reducing health-care disparities.47

Encouragingly, participating residents were not highly selected; 73% of eligible family medicine residents and 44% of eligible internal medicine residents enrolled. However, the Cronbach’s alpha among DO U SEE IT items was moderate (0.61), suggesting that individual residents were consistent in their use of SEE IT (i.e., substantial, moderate, or minimal use). Additional work is needed to optimize resident response to SEE IT training.

The SP instructors presented SEE IT sequentially. Because the teaching lasted only 15 min and residents had little familiarity with the material, techniques toward the bottom of Figure 1 received less attention. Exploratory analyses confirmed that most changes in experimental group resident use of SEE IT relative to controls occurred for Techniques 1 through 6 (details available upon request). Providing the SEE IT training over three to four visits might remedy this problem. However, studies will be required to examine how increasing the number of SEE IT training visits may affect resource requirements, clinical effectiveness, and dissemination potential.

Our RCT had additional limitations. The experimental group had more senior residents and greater baseline use of SEE IT than controls; however, the analyses adjusted for these differences. The teaching portion of both training visits was roughly twice as long in the experimental as in the control group, so observed differences in post-intervention SEE IT use may reflect attention differences. Also, while the post-intervention SP visits were not pre-announced, participating residents discovered they were seeing an SP after logging into the electronic medical record. Thus, we have shown residents were able to apply SEE IT under “prompted” circumstances (unannounced but detected SPs). However, prior SP intervention studies suggest little impact on outcomes whether SPs are detected or not.48 Finally, studies will be needed to address whether the intervention will work in practicing physicians. A similar SP instructor intervention has been shown to improve practicing physicians’ human immunodeficiency virus risk assessment interviewing skills.30

We conclude that SP instructors can train residents to apply SEE IT during office visits with SPs portraying individuals with common primary care problems and health behavior change concerns. The effect was apparent after a single SP instructor training visit and persisted without attenuation through two additional evaluation SP visits. Studies are warranted to determine the effects of SEE IT on practicing physicians, and on actual patients, including their health behaviors and outcomes. However, our findings suggest that training primary care physicians to use SEE IT—generic techniques that can be applied to facilitate essentially any health behavior change—has potential for wide dissemination and clinical impact.

Acknowledgements

This research was funded in part by a National Institute of Mental Health Midcareer Investigator Award in Patient-Oriented Research (5K24MH072756) to Dr. Kravitz, and a University of California Davis Department of Family and Community Medicine research award to Dr. Jerant. The authors wish to express their deepest appreciation to Betsy Reifsnider and Stephen Savage, for their outstanding contributions to the study intervention scripts and as standardized patient instructors, and to the four study evaluation standardized patients.

Conflicts of Interest: None disclosed.

APPENDIX. Doctors’ Observable Use of Self-efficacy-enhancing Interviewing Techniques (DO U SEE IT) Measure

1. Doctor solicited all of the patient’s concerns.
2. Doctor negotiated with the patient about discussing just one (1) behavior change during the encounter.
3. Doctor negotiated with the patient about making initial incremental behavior change step(s) to move the patient closer to their larger goal(s).
4. Doctor explored patient readiness for making any behavior change(s).
5. Doctor explored patient self-efficacy or confidence in their ability to take steps toward behavior change(s).
6. Doctor explored prior behavior change successes achieved by the patient.
7. Doctor reframed prior “failed” behavior change attempts by patient as learning experiences.
8. Doctor discussed other people’s successes with behavior change AND/OR asked patient to talk about successful behavior change attempts they had witnessed.
9. Doctor checked for patient understanding of the action plan(s) for behavior change(s).
10. Doctor negotiated when and how patient was to follow up with them regarding the progress of their health behavior change(s).

Coder response options for all items were “Yes–doctor used the technique (1 point)” or “No–doctor did not use the technique (0 points).” When relevant, coders also recorded the digital recording counter point(s) at which the techniques were used.

References

- 1.Bandura A. Self-efficacy: the exercise of control. New York: Freeman; 1997.

- 2.Aljasem LI, Peyrot M, Wissow L, Rubin RR. The impact of barriers and self-efficacy on self-care behaviors in type 2 diabetes. Diabetes Educ. 2001;27:393–404. [DOI] [PubMed]

- 3.DiClemente CC, Prochaska JO, Gibertini M. Self-efficacy and the stages of self-change of smoking. Cogn Ther Res. 1985;9:181–200. [DOI]

- 4.McDonald-Miszczak L, Wister AV, Gutman GM. Self-care among older adults: An analysis of the objective and subjective illness contexts. J Aging Health. 2001;13:120–45. [DOI] [PubMed]

- 5.Roelands M, Van Oost P, Depoorter A, Buysse A. A social-cognitive model to predict the use of assistive devices for mobility and self-care in elderly people. Gerontologist. 2002;42:39–50. [DOI] [PubMed]

- 6.Zimmerman BW, Brown ST, Bowman JM. A self-management program for chronic obstructive pulmonary disease: relationship to dyspnea and self-efficacy. Rehabil Nurs. 1996;21:253–7. [DOI] [PubMed]

- 7.Friedman LC, Nelson DV, Webb JA, Hoffman LP, Baer PE. Dispositional optimism, self-efficacy, and health beliefs as predictors of breast self-examination. Am J Prev Med. 1994;10:130–5. [PubMed]

- 8.Kloeblen AS, Batish SS. Understanding the intention to permanently follow a high folate diet among a sample of low-income pregnant women according to the Health Belief Model. Health Educ Res. 1999;14:327–38. [DOI] [PubMed]

- 9.Mahoney CA, Thombs DL, Ford OJ. Health belief and self-efficacy models: their utility in explaining college student condom use. AIDS Educ Prev. 1995;7:32–49. [PubMed]

- 10.Kobau R, DiIorio C. Epilepsy self-management: a comparison of self-efficacy and outcome expectancy for medication adherence and lifestyle behaviors among people with epilepsy. Epilepsy Behav. 2003;4:217–25. [DOI] [PubMed]

- 11.Kremers SP, Mesters I, Pladdet IE, van den Borne B, Stockbrugger RW. Participation in a sigmoidoscopic colorectal cancer screening program: a pilot study. Cancer Epidemiol Biomark Prev. 2000;9:1127–30. [PubMed]

- 12.Ammassari A, Trotta MP, Murri R, et al. Correlates and predictors of adherence to highly active antiretroviral therapy: overview of published literature. J Acquir Immune Defic Syndr. 2002;31(Suppl 3):S123–7. [DOI] [PubMed]

- 13.Brus H, van de Laar M, Taal E, Rasker J, Wiegman O. Determinants of compliance with medication in patients with rheumatoid arthritis: the importance of self-efficacy expectations. Patient Educ Couns. 1999;36:57–64. [DOI] [PubMed]

- 14.Barnason S, Zimmerman L, Nieveen J, Schmaderer M, Carranza B, Reilly S. Impact of a home communication intervention for coronary artery bypass graft patients with ischemic heart failure on self-efficacy, coronary disease risk factor modification, and functioning. Heart Lung. 2003;32:147–58. [DOI] [PubMed]

- 15.Lin EH, Von Korff M, Ludman EJ, et al. Enhancing adherence to prevent depression relapse in primary care. Gen Hosp Psych. 2003;25:303–10. [DOI] [PubMed]

- 16.Rost K, Nutting P, Smith JL, Elliott CE, Dickinson M. Managing depression as a chronic disease: a randomised trial of ongoing treatment in primary care. BMJ. 2002;325:934. [DOI] [PMC free article] [PubMed]

- 17.Brody BL, Roch-Levecq AC, Gamst AC, Maclean K, Kaplan RM, Brown SI. Self-management of age-related macular degeneration and quality of life: a randomized controlled trial. Arch Ophthalmol. 2002;120:1477–83.

- 18.Dallow CB, Anderson J. Using self-efficacy and a transtheoretical model to develop a physical activity intervention for obese women. Am J Health Promot. 2003;17:373–81.

- 19.Kukafka R, Lussier YA, Eng P, Patel VL, Cimino JJ. Web-based tailoring and its effect on self-efficacy: results from the MI-HEART randomized controlled trial. Proc AMIA Symp. 2002;410–4.

- 20.Lorig KR, Sobel DS, Stewart AL, et al. Evidence suggesting that a chronic disease self-management program can improve health status while reducing hospitalization: a randomized trial. Med Care. 1999;37:5–14.

- 21.Salbach NM, Mayo NE, Robichaud-Ekstrand S, Hanley JA, Richards CL, Wood-Dauphinee S. The effect of a task-oriented walking intervention on improving balance self-efficacy poststroke: a randomized, controlled trial. J Am Geriatr Soc. 2005;53:576–82.

- 22.Tsay SL, Dallow CB, Anderson J, et al. Self-efficacy training for patients with end-stage renal disease. J Adv Nurs. 2003;43:370–5.

- 23.Jerant AF, Kravitz RL, Moore-Hill MM, Franks P. Depressive symptoms moderated the effect of chronic illness self-management training on self-efficacy. Med Care. 2008;46:523–31.

- 24.Chernof BA, Sherman SE, Lanto AB, Lee ML, Yano EM, Rubenstein LV. Health habit counseling amidst competing demands: effects of patient health habits and visit characteristics. Med Care. 1999;37:738–47.

- 25.Nutting PA, Baier M, Werner JJ, Cutter G, Conry C, Stewart L. Competing demands in the office visit: what influences mammography recommendations? J Am Board Fam Pract. 2001;14:352–61.

- 26.The Chronic Care Model. Available at: http://www.improvingchroniccare.org/index.php?p=The_Chronic_Care_Model&s=2. Accessed June 16, 2008.

- 27.Committee on Quality of Health Care in America. Institute of Medicine. Crossing the quality chasm: a new health system for the 21st century. Washington, D.C.: National Academy Press; 2001.

- 28.Colletti L, Gruppen L, Barclay M, Stern D. Teaching students to break bad news. Am J Surg. 2001;182:20–3.

- 29.Leeper-Majors K, Veale JR, Westbrook TS, Reed K. The effect of standardized patient feedback in teaching surgical residents informed consent: results of a pilot study. Curr Surg. 2003;60:615–22.

- 30.Epstein RM, Levenkron JC, Frarey L, Thompson J, Anderson K, Franks P. Improving physicians’ HIV risk-assessment skills using announced and unannounced standardized patients. J Gen Intern Med. 2001;16:176–80.

- 31.Mansouri M, Lockyer J. A meta-analysis of continuing medical education effectiveness. J Contin Educ Health Prof. 2007;27:6–15.

- 32.Katon WJ, Unutzer J, Simon G. Treatment of depression in primary care: where we are, where we can go. Med Care. 2004;42:1153–7.

- 33.Flocke SA, Stange KC. Direct observation and patient recall of health behavior advice. Prev Med. 2004;38:343–9.

- 34.Egede LE. Major depression in individuals with chronic medical disorders: prevalence, correlates and association with health resource utilization, lost productivity and functional disability. Gen Hosp Psychiatry. 2007;29:409–16.

- 35.Scott KM, Von Korff M, Ormel J, et al. Mental disorders among adults with asthma: results from the World Mental Health Survey. Gen Hosp Psychiatry. 2007;29:123–33.

- 36.Whooley MA, Avins AL, Miranda J, Browner WS. Case-finding instruments for depression. Two questions are as good as many. J Gen Intern Med. 1997;12:439–45.

- 37.Spitzer RL, Kroenke K, Williams JB, Löwe B. A brief measure for assessing generalized anxiety disorder: the GAD-7. Arch Intern Med. 2006;166:1092–7.

- 38.Burton P, Gurrin L, Sly P. Extending the simple linear regression model to account for correlated responses: an introduction to generalized estimating equations and multi-level mixed modeling. Stat Med. 1998;17:1261–91.

- 39.Cohen J. A power primer. Psychol Bull. 1992;112:155–9.

- 40.Peabody JW, Luck J, Glassman P, Dresselhaus TR, Lee M. Comparison of vignettes, standardized patients, and chart abstraction: a prospective validation study of 3 methods for measuring quality. JAMA. 2000;283:1715–22.

- 41.Fiscella K, Franks P, Srinivasan M, Kravitz RL, Epstein R. Ratings of physician communication by real and standardized patients. Ann Fam Med. 2007;5:151–8.

- 42.Marks R, Allegrante JP, Lorig K. A review and synthesis of research evidence for self-efficacy-enhancing interventions for reducing chronic disability: implications for health education practice (part I). Health Promot Pract. 2005;6:37–43.

- 43.Chronic Disease Self-Management Program. Available at: http://patienteducation.stanford.edu/programs/cdsmp.html. Accessed June 19, 2008.

- 44.Iren UT, Walker MS, Hochman E, Brasington R. A pilot study to determine whether disability and disease activity are different in African-American and Caucasian patients with rheumatoid arthritis in St. Louis, Missouri, USA. J Rheumatol. 2005;32:602–8.

- 45.Vickers KS, Nies MA, Patten CA, Dierkhising R, Smith SA. Patients with diabetes and depression may need additional support for exercise. Am J Health Behav. 2006;30:353–62.

- 46.Kalauokalani D, Franks P, Oliver JW, Meyers FJ, Kravitz RL. Can patient coaching reduce racial/ethnic disparities in cancer pain control? Secondary analysis of a randomized controlled trial. Pain Med. 2007;8:17–24.

- 47.Franks P, Fiscella K. Reducing disparities downstream: prospects and challenges. J Gen Intern Med. 2008;23:672–7.

- 48.Franz CE, Epstein R, Miller KN, et al. Caught in the act? Prevalence, predictors, and consequences of physician detection of unannounced standardized patients. Health Serv Res. 2006;41:2290–302.