Nowadays few would argue against the need to base clinical decisions on the best available evidence. In practice, however, clinicians face serious challenges when they seek such evidence.

Research-based evidence is generated at an exponential rate, yet it is not readily available to clinicians. When it is available, it is applied infrequently. A systematic review1 of studies examining the information-seeking behaviour of physicians found that the information resource most often consulted by physicians is textbooks, followed by advice from colleagues. The textbooks we consult are frequently out of date,2 and the advice we receive from colleagues is often inaccurate.3 Also, nurses and other health care professionals refer only infrequently to evidence from systematic reviews in clinical decision-making.4,5

The sheer volume of research-based evidence is one of the main barriers to better use of knowledge. About 10 years ago, if general internists wanted to keep abreast of the primary clinical literature, they would have needed to read 17 articles daily.6 Today, with more than 1000 articles indexed daily by MEDLINE, that figure is likely double. The problem is compounded by the inability of clinicians to afford more than a few seconds at a time in their practices for finding and assimilating evidence.7 These challenges highlight the need for better infrastructure in the management of evidence-based knowledge.

Systematic reviews and primary studies

Some experts suggest that clinicians should seek systematic reviews first when trying to find answers to clinical questions.8 Research that is synthesized in this way provides a base of evidence for clinical practice guidelines. But there are many barriers to the direct use by clinicians of systematic reviews and primary studies. Clinical practitioners lack ready access to current research-based evidence,9,10 lack the time needed to search for it and lack the skills needed to identify it, appraise it and apply it in clinical decision-making.11,12 Until recently, training in the appraisal of evidence has not been a component of most educational curricula.11,12 In one study of the use of evidence, clinicians took more than 2 minutes to identify a Cochrane review and its clinical bottom line. This resource was therefore frequently abandoned in “real-time” clinical searches.7 In another study, Sekimoto and colleagues13 found that physicians in their survey believed a lack of evidence for the effectiveness of a treatment was equivalent to the treatment being ineffective.

Often, the content of systematic reviews and primary studies is not sufficient to meet the needs of clinicians. Although criteria have been developed to improve the reporting of systematic reviews,14 their focus has been on the validity of evidence rather than on its applicability. Glenton and colleagues15 described several factors hindering the effective use of systematic reviews for clinical decision-making. They found that reviews often lacked details about interventions and did not provide adequate information on the risks of adverse events, the availability of interventions and the context in which the interventions may or may not work. Glasziou and colleagues16 observed that, of 80 studies (55 single randomized trials and 25 systematic reviews) of therapies published over 1 year in Evidence-Based Medicine (a journal of secondary publication), elements of the intervention were missing in 41. Of the 25 systematic reviews, only 3 contained a description of the intervention that was sufficient for clinical decision-making and implementation.

Potential solutions

Better knowledge tools and products

Those who publish and edit research-based evidence should focus on the “3 Rs” of evidence-based communication: reliability, relevance and readability. Evidence is reliable if it can be shown to be highly valid. The methods used to generate it must be explicit and rigorous, or at least the best available. To be clinically relevant, material should be distilled and indexed from the medical literature so that it consists of content that is specific to the distinct needs of well-defined groups of clinicians (e.g., primary care physicians, hospital practitioners or cardiologists). The tighter the fit between information and the needs of users, the better. To be readable, evidence must be presented by authors and editors in a format that is user-friendly and that goes into sufficient detail to allow implementation at the clinic or bedside.

When the 3 Rs must be balanced against one another, reliability should trump relevance, and both should trump readability.
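One way to read this priority rule is as a lexicographic ordering: compare resources on reliability first, use relevance only to break ties, and use readability only after that. The sketch below illustrates that reading. The `Resource` class, the integer scores and the resource names are hypothetical and are not drawn from any published rating scheme.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Resource:
    """A hypothetical information resource scored on the 3 Rs (higher is better)."""
    name: str
    reliability: int
    relevance: int
    readability: int


def rank_by_3rs(resources):
    """Order resources lexicographically: reliability, then relevance, then readability."""
    return sorted(
        resources,
        key=lambda r: (r.reliability, r.relevance, r.readability),
        reverse=True,
    )


# Illustrative scores only (assumed, not measured).
candidates = [
    Resource("Resource A", reliability=3, relevance=1, readability=1),
    Resource("Resource B", reliability=2, relevance=5, readability=5),
    Resource("Resource C", reliability=3, relevance=1, readability=4),
]

ranked = rank_by_3rs(candidates)
print([r.name for r in ranked])
```

Under this reading, Resource B ranks last even though it is the most relevant and readable, because it is the least reliable. Resources A and C tie on reliability and relevance, so readability decides between them.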

More efficient search strategies

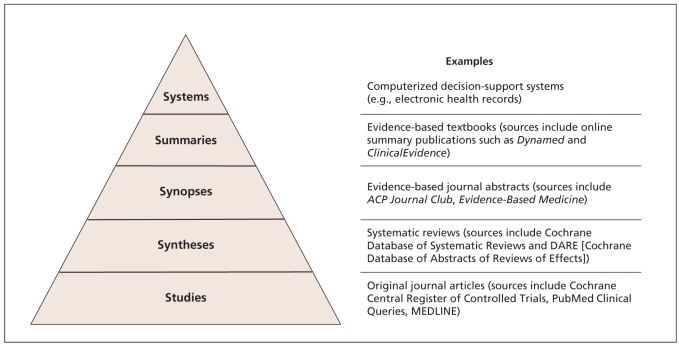

One method for finding useful evidence is the “5S approach”17 (Figure 1). This framework provides a model for the organization of evidence-based information services.

Figure 1.

The “5S” approach to finding useful evidence. This framework provides a model for the organization of evidence-based information services. Ideally, resources become more reliable, relevant and readable as one moves up the pyramid. To optimize search efficiency, it is best to start at the top of the pyramid and work down when trying to answer a clinical question.

Ideally resources become more reliable, relevant and readable as we move up the 5S pyramid. At the bottom of the pyramid are all of the primary studies, such as those indexed in MEDLINE. At the next level are syntheses, which are systematic reviews of the evidence relevant to a particular clinical question. This level is followed by synopses, which provide brief critical appraisals of original articles and reviews. Examples of synopses appear in evidence-based journals such as ACP Journal Club (www.acpjc.org). Summaries provide comprehensive overviews of evidence related to a clinical problem (e.g., gout or asthma) by aggregating evidence from the lower levels of relevant synopses, syntheses and studies.

Given the challenges of doing a good MEDLINE search, it is best to start at the top of the pyramid and work down when trying to answer a clinical question. At the top of the pyramid are systems such as electronic health records. At this level, clinical data are linked electronically with relevant evidence to support evidence-based decision-making. Computerized decision-support systems such as these are still rare, so usually we start at the second level from the top of the pyramid when searching for evidence. Examples at the second level include online summary publications, such as DynaMed (www.ebscohost.com/dynamed) and ClinicalEvidence (http://clinicalevidence.bmj.com/ceweb/index.jsp), which are evidence-based, frequently updated and available for a widening range of clinical topics. Online services such as EvidenceUpdates (http://plus.mcmaster.ca/evidenceupdates), which include studies and syntheses rated for quality and relevance with links to synopses and summaries, have recently become available with open access.
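The top-down strategy can be sketched as a loop that tries each level of the pyramid in turn and stops at the first one that yields an answer. This is a minimal illustration, not a description of any real search service: the `search_top_down` function and the stub searchers are hypothetical, and a real searcher would query an actual resource at that level.

```python
from typing import Callable, Dict, Optional, Tuple

# The 5S levels, ordered from the top of the pyramid to the bottom.
LEVELS_TOP_DOWN = ["systems", "summaries", "synopses", "syntheses", "studies"]

# A searcher takes a clinical question and returns an answer, or None if it finds nothing.
Searcher = Callable[[str], Optional[str]]


def search_top_down(
    question: str, searchers: Dict[str, Searcher]
) -> Optional[Tuple[str, str]]:
    """Return (level, answer) from the highest level that yields an answer."""
    for level in LEVELS_TOP_DOWN:
        searcher = searchers.get(level)
        if searcher is None:
            continue  # level not available locally, e.g. no decision-support system
        answer = searcher(question)
        if answer is not None:
            return level, answer
    return None  # nothing found at any available level


# Hypothetical stand-ins for real resources; no system-level resource is configured.
searchers = {
    "summaries": lambda q: None,  # no summary covers this question
    "synopses": lambda q: "synopsis of a relevant trial",
    "studies": lambda q: "primary study from a bibliographic database",
}

result = search_top_down("hypothetical clinical question", searchers)
print(result)
```

In this example the search skips the missing systems level, finds nothing among summaries and stops at synopses, so the primary studies are never searched.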

Evidence-based information resources are not created equal. Users at any of the levels just described must ensure that evidence is reliable by being aware of the methods used to generate, synthesize and summarize it. They should know that just because a resource has references does not mean that it is evidence-based. And just because a resource uses “evidence-based” in its title does not mean that it is so. One publisher stated that sales can be enhanced by placing the term “evidence-based” in the title of a book (Mary Banks, Senior Publisher, BMJ Books, London, UK: personal communication, 2009). Rating scales that we find useful for evidence summaries and research articles are provided in Box 1 and Table 1.

Box 1.

Guide for appraising resources for evidence-based information

| Methods and quality of information | Clinical usefulness |
|---|---|
| Rating scale for methods and quality of information | Rating scale for clinical usefulness |

Details on specific resources

Evidence-based medical texts

The following points could be used as a minimum checklist:

Does the resource provide an explicit statement about the type of evidence on which any statements or recommendations are based? Did the authors adhere to these criteria? For example, claims about effectiveness of an intervention might be accompanied by a statement about either the “level” of evidence (which would need to be defined somewhere in the text) or a statement about the exact type of evidence (e.g., “there have been 3 randomized controlled trials”).

Was there an explicit and adequate search for this evidence? For example, a search for evidence about an intervention might have started with a look for adequate systematic reviews. If this was done, it might be followed by a search of the Cochrane Central Register of Controlled Trials.

Is there quantification of the results? For example, statements about diagnostic accuracy should contain measures of accuracy such as sensitivity and specificity.

The minimum criterion for an evidence-based resource would be adherence to the first point. Better resources should also address the other 2 points.

Meta-resources (e.g., listings or search engines for other resources)

These resources should provide an explicit statement about the selection criteria for inclusion in the listing. Better resources should also include a descriptive review such as that described in the 3 points for evidence-based medical texts.
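The 3-point checklist for evidence-based medical texts can be expressed as a small decision rule: the first point is the minimum, and a resource that also satisfies the other 2 points counts as better. The sketch below is one possible encoding. The `TextAppraisal` class and the category labels are hypothetical names chosen for illustration, and each yes/no answer still depends on the appraiser's judgment.

```python
from dataclasses import dataclass


@dataclass
class TextAppraisal:
    """Answers to the 3 checklist points for one evidence-based medical text."""
    states_type_of_evidence: bool   # point 1: explicit statement, and authors adhere to it
    explicit_adequate_search: bool  # point 2: explicit and adequate search for evidence
    results_quantified: bool        # point 3: results are quantified


def appraise_text(a: TextAppraisal) -> str:
    """Classify a text; the category labels are hypothetical, not from the source."""
    if not a.states_type_of_evidence:
        return "below minimum"
    if a.explicit_adequate_search and a.results_quantified:
        return "better"
    return "minimum met"


# A text that states its evidence type and searched explicitly but reports no numbers.
example = TextAppraisal(
    states_type_of_evidence=True,
    explicit_adequate_search=True,
    results_quantified=False,
)
print(appraise_text(example))
```

A text that fails the first point is classed as below the minimum regardless of how it fares on the other 2 points.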

Table 1.

Scale for rating individual studies

| Relevance | Newsworthiness |
|---|---|
| 7 Directly and highly relevant | 7 Useful information; most practitioners in my specialty definitely don’t know this |
| 6 Definitely relevant | 6 Useful information; most practitioners in my specialty probably don’t know this |
| 5 Probably relevant | 5 Useful information; most practitioners in my specialty possibly don’t know this |
| 4 Possibly relevant; likely of indirect or peripheral relevance at best | 4 Useful information; most practitioners in my specialty possibly already know this |
| 3 Possibly not relevant | 3 Useful information; most practitioners in my specialty probably already know this |
| 2 Probably not relevant: content only remotely related | 2 It probably doesn’t matter whether they know this or not |
| 1 Definitely not relevant: completely unrelated content area | 1 Not of direct clinical interest |

Source: McMaster Online Rating of Evidence (MORE) system, Health Information Research Unit, McMaster University (http://hiru.mcmaster.ca/more/AboutMORE.htm).

Promoting specialized search methods and making high-quality resources for evidence-based information available may lead to more correct answers being found by clinicians. In a small study of information retrieval by primary care physicians who were observed using their usual sources for clinical answers (most commonly Google and UpToDate), McKibbon and Fridsma18 found just a 1.9% increase in correct answers following searching. By contrast, others who have supplied information resources to clinicians have found that searching increased the rate of correct answers from 29% to 50%.19 Schaafsma and colleagues20 found that when clinicians asked peers for answers to clinical questions, the answers they received were correct only 47% of the time; if the colleague provided supportive evidence, the correct answers increased to 83%.
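To compare the figures above on a common footing, it can help to separate the change in percentage points from the change relative to the starting rate. The short calculation below does this for the two pairs of rates quoted in the text. The helper functions are illustrative. The 1.9% figure is left out because the text does not say whether it is an absolute or a relative change.

```python
def absolute_gain(before_pct: float, after_pct: float) -> float:
    """Change in the rate of correct answers, in percentage points."""
    return after_pct - before_pct


def relative_gain(before_pct: float, after_pct: float) -> float:
    """Change relative to the starting rate, in percent, rounded to 1 decimal place."""
    return round((after_pct - before_pct) / before_pct * 100, 1)


# Rates of correct answers quoted in the text (percent).
comparisons = {
    "supplied information resources": (29, 50),
    "colleague advice, without vs. with supporting evidence": (47, 83),
}

for label, (before, after) in comparisons.items():
    print(
        f"{label}: +{absolute_gain(before, after)} points, "
        f"+{relative_gain(before, after)}% relative"
    )
```

Both comparisons show large gains by either measure, but the two are not directly comparable, because they come from different studies with different clinicians and questions.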

Question-answering services by librarians may also enhance the search process. When tested in primary care settings, such a service was found to save time for clinicians, although its impact on decision-making and clinical care was not clear.21,22

What can journals do?

Journals must provide enough detail to allow clinicians to implement the intervention in practice. Glasziou and colleagues16 found that most study authors, when contacted for additional information, were willing to provide it. In some cases, this led to the provision of booklets or videoclips that could be made available on a journal’s website. This level of information is helpful regardless of the complexity of the intervention. For example, the need to titrate the dose of angiotensin-converting-enzyme inhibitors and confusion about monitoring the use of these drugs are considered barriers to their use by primary care physicians, and yet such information is frequently lacking in primary studies and systematic reviews.23

Finally, journal editors and researchers should work together to format research in ways that make it more readable for clinicians. There is some evidence that the use of more informative, structured abstracts has a positive impact on the ability of clinicians to apply evidence24 and that the way in which trial results are presented has an impact on the management decisions of clinicians.25 By contrast, there are no data showing that information presented in a systematic review has a positive impact on clinicians’ understanding of the evidence or on their ability to apply it to individual patients.

Conclusion

Evidence, whether strong or weak, is never sufficient to make clinical decisions. It must be balanced with the values and preferences of patients for optimal shared decision-making. To support evidence-based decision-making by clinicians, we must call for information resources that are reliable, relevant and readable. Hopefully those who publish or fund research will find new and better ways to meet this demand.

Key points

Sources of information for the practice of evidence-based health care should be reliable, relevant and readable, in that order.

The “5S” approach provides a model for seeking evidence-based information from systems, summaries, synopses, syntheses and studies.

Journal editors should work with authors to present evidence in ways that promote its use by clinicians.

Journals should provide enough detail to enable clinicians to appraise research-based evidence and apply it in practice.

Footnotes

This article has been peer reviewed.

Sharon Straus is the Section Editor of Reviews at CMAJ and was not involved in the editorial decision-making process for this article.

Competing interests: Sharon Straus is an associate editor for ACP Journal Club and Evidence-Based Medicine and is on the advisory board of BMJ Group. Brian Haynes is editor of ACP Journal Club and EvidenceUpdates, coeditor of Evidence-Based Medicine and contributes research-based evidence to ClinicalEvidence.

Contributors: Both of the authors contributed to the development of the concepts in the manuscript, and both drafted, revised and approved the final version submitted for publication.

REFERENCES

- 1. Haynes RB. Where’s the meat in clinical journals? [editorial] ACP J Club. 1993;119:A22–3.
- 2. McKibbon A, Eady A, Marks S. PDQ evidence-based principles and practice. New York (NY): BC Decker; 2000.
- 3. Bero L, Rennie D. The Cochrane Collaboration. Preparing, maintaining and disseminating systematic reviews of the effects of health care. JAMA. 1995;274:1935–8. doi: 10.1001/jama.274.24.1935.
- 4. Kiesler DJ, Auerbach SM. Optimal matches of patient preferences for information, decision-making and interpersonal behavior: evidence, models and interventions. Patient Educ Couns. 2006;61:319–41. doi: 10.1016/j.pec.2005.08.002.
- 5. Dawes M, Sampson U. Knowledge management in clinical practice: a systematic review of information seeking behaviour in physicians. Int J Med Inform. 2003;71:9–15. doi: 10.1016/s1386-5056(03)00023-6.
- 6. Antman EM, Lau J, Kupelnick B, et al. A comparison of results of meta-analyses of randomised control trials and recommendations of clinical experts. JAMA. 1992;268:240–8.
- 7. Oxman AD, Guyatt GH. The science of reviewing research. Ann N Y Acad Sci. 1993;703:125–34. doi: 10.1111/j.1749-6632.1993.tb26342.x.
- 8. Olade RA. Evidence-based practice and research utilisation activities among rural nurses. J Nurs Scholarsh. 2004;36:220–5. doi: 10.1111/j.1547-5069.2004.04041.x.
- 9. Kajermo KN, Nordstrom G, Krusebrant A, et al. Nurses’ experiences of research utilization within the framework of an educational programme. J Clin Nurs. 2001;10:671–81. doi: 10.1046/j.1365-2702.2001.00526.x.
- 10. Milner M, Estabrooks CA, Myrick F. Research utilisation and clinical nurse educators: a systematic review. J Eval Clin Pract. 2006;12:639–55. doi: 10.1111/j.1365-2753.2006.00632.x.
- 11. Lavis JN. Research, public policymaking, and knowledge-translation processes: Canadian efforts to build bridges. J Contin Educ Health Prof. 2006;26:37–45. doi: 10.1002/chp.49.
- 12. Straus SE, Sackett DL. Bringing evidence to the point of care. JAMA. 1999;281:1171–2.
- 13. Sekimoto M, Imanaka Y, Kitano N, et al. Why are physicians not persuaded by scientific evidence? BMC Health Serv Res. 2006;6:92. doi: 10.1186/1472-6963-6-92.
- 14. Moher D, Cook DJ, Eastwood S, et al. Improving the quality of reports of meta-analyses of randomized controlled trials: the QUOROM statement. Lancet. 1999;354:1896–900. doi: 10.1016/s0140-6736(99)04149-5.
- 15. Glenton C, Underland V, Kho M, et al. Summaries of findings, descriptions of interventions, and information about adverse effects would make reviews more informative. J Clin Epidemiol. 2006;59:770–8. doi: 10.1016/j.jclinepi.2005.12.011. Epub 2006 May 30.
- 16. Glasziou P, Meats E, Heneghan C, et al. What is missing from descriptions of treatment in trials and reviews? BMJ. 2008;336:1472–4. doi: 10.1136/bmj.39590.732037.47.
- 17. Haynes RB. Of studies, syntheses, synopses, summaries and systems: the ‘5S’ evolution of information services for evidence-based health care decisions. ACP J Club. 2006;145:A8–9.
- 18. McKibbon KA, Fridsma DB. Effectiveness of clinician-selected electronic information resources for answering primary care physicians’ information needs. J Am Med Inform Assoc. 2006;13:653–9. doi: 10.1197/jamia.M2087. Epub 2006 Aug 23.
- 19. Westbrook JI, Coiera EW, Gosling AS. Do online information retrieval systems help experienced clinicians answer clinical questions? J Am Med Inform Assoc. 2005;12:315–32. doi: 10.1197/jamia.M1717.
- 20. Schaafsma F, Verbeek J, Hulshof C, et al. Caution required when relying on a colleague’s advice; a comparison between professional advice and evidence from the literature. BMC Health Serv Res. 2005;5:59. doi: 10.1186/1472-6963-5-59.
- 21. McGowan J, Hogg W, Campbell C, et al. Just-in-time information improved decision-making in primary care: a randomized controlled trial. PLoS ONE. 2008;3:e3785. doi: 10.1371/journal.pone.0003785. Epub 2008 Nov 21.
- 22. Brettle A, Hulme C, Ormandy P. The costs and effectiveness of information-skills training and mediated searching: quantitative results from the EMPIRIC project. Health Info Libr J. 2006;23:239–47. doi: 10.1111/j.1471-1842.2006.00670.x.
- 23. Kasje WN, Denig P, de Graeff PA, et al. Perceived barriers for treatment of chronic heart failure in general practice: Are they affecting performance? BMC Fam Pract. 2005;6:19. doi: 10.1186/1471-2296-6-19.
- 24. Hartley J. Clarifying the abstracts of systematic literature reviews. Bull Med Libr Assoc. 2000;88:332–7.
- 25. McGettigan P, Sly K, O’Connell D, et al. The effects of information framing on the practices of physicians. J Gen Intern Med. 1999;14:633–42. doi: 10.1046/j.1525-1497.1999.09038.x.